Abstract

In this paper, we introduce a new automatic image segmentation algorithm based on the pulse coupled neural network (PCNN) and fuzzy mutual information (FMI). The image is first segmented by the PCNN, and the FMI is then used as the optimization criterion to stop the segmentation automatically at the optimal result. The experimental results demonstrate that CT and ultrasound images are segmented well by the proposed algorithm, with strong robustness against noise. The results suggest that the proposed algorithm can be used for medical image segmentation.

1 Introduction

Image segmentation is important in the field of medical imaging, and accurate segmentation has long been a hot topic. Semi-automatic and fully automatic algorithms can be divided into five types, depending mainly on the strategy used to delineate the region of interest (ROI): edge detection, texture and feature analysis, deformable and active models, hybrid methods, and multi-scale methods [1, 2]. Model-based methods either use prior knowledge, as in active contour models and statistical models, or use deformable models without any prior information about the ROI. Active contour models, including the snake model and the level set method, are very popular and are usually applied to semi-automatic segmentation. Hybrid methods combine different algorithms to optimize the result [2, 3].

2 Method

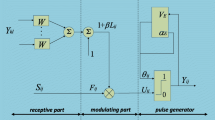

2.1 The Principle of PCNN Image Segmentation

Figure 68.1 shows the mathematical model of a PCNN neuron. It consists of three parts: the receptive field, the modulation field, and the pulse generator. The receptive field receives input from other neurons and from outside through two channels, the feeding channel F and the linking channel L. The feeding input F_ij receives the external stimulus I_ij and the pulses Y from the neighboring neurons. The linking input L_ij receives the output pulses of the neighboring neurons. In the modulation field, F_ij and L_ij are combined by modulation. The modulation result U_ij is then sent to the pulse generator, where a comparator compares it with the dynamic threshold θ_ij to decide whether the neuron fires. If U_ij is greater than the threshold θ_ij, the pulse generator outputs one and the dynamic threshold is raised accordingly. When θ_ij exceeds U_ij, the pulse generator outputs zero. In this way a pulse train is generated. The corresponding mathematical model is expressed as follows:
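The standard PCNN formulation that uses exactly the symbols defined in the next paragraph can be sketched in LaTeX as follows. The time indexing of Y and θ differs between publications, so the n − 1 convention used here is an assumption and not necessarily the authors' exact form.

```latex
\begin{align}
F_{ij}[n] &= e^{-\alpha_F} F_{ij}[n-1] + V_F \sum_{k,l} M_{ijkl}\, Y_{kl}[n-1] + I_{ij} \\
L_{ij}[n] &= e^{-\alpha_L} L_{ij}[n-1] + V_L \sum_{k,l} W_{ijkl}\, Y_{kl}[n-1] \\
U_{ij}[n] &= F_{ij}[n]\,\bigl(1 + \beta L_{ij}[n]\bigr) \\
Y_{ij}[n] &= \begin{cases} 1, & U_{ij}[n] > \theta_{ij}[n-1] \\ 0, & \text{otherwise} \end{cases} \\
\theta_{ij}[n] &= e^{-\alpha_\theta}\, \theta_{ij}[n-1] + V_T\, Y_{ij}[n]
\end{align}
```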

where i and j refer to the pixel position in the image, k and l are the offsets within a symmetric neighborhood around the pixel, and n denotes the current iteration (discrete time step). M_ijkl and W_ijkl are the constant synaptic weights, and V_F, V_L and V_T are the magnitude scaling terms. α_F, α_L and α_θ are the time decay constants of the PCNN neuron, and β is the linking strength.

From the point of view of image processing, the model still has some limitations in practical applications. Many parameters have to be adjusted, which is difficult and time-consuming. In order to reduce the computational complexity further, the improved PCNN model [4] is used, as follows:

When a neuron produces a pulse, the linking between neurons causes nearby neurons with similar input to fire as well. A pulse wave therefore spreads outward from the active region. The firing of any neuron in a group causes the whole group to fire. Image segmentation can therefore be realized quickly by exploiting this synchronization characteristic.
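The pulse-spreading behaviour described above can be illustrated with a minimal NumPy sketch of a simplified PCNN. It is an illustration under stated assumptions, not the improved model of [4]: the feeding input is taken to be the stimulus itself, the linking weights form a uniform 8-neighbourhood, and the names and default values (`simplified_pcnn`, `neighbor_sum`, `theta0`, `alpha`, `v_t`) are hypothetical.

```python
import numpy as np


def neighbor_sum(y):
    """Sum of the 8 neighbours of every pixel (zero padding at the border)."""
    h, w = y.shape
    padded = np.pad(y, 1)  # constant zero padding by default
    total = np.zeros_like(y)
    for di in (0, 1, 2):
        for dj in (0, 1, 2):
            if di == 1 and dj == 1:
                continue  # skip the pixel itself
            total += padded[di:di + h, dj:dj + w]
    return total


def simplified_pcnn(image, n_iter=20, beta=0.1, v_l=0.5,
                    theta0=1.0, alpha=0.1, v_t=1e3):
    """Return one cumulative firing map (boolean array) per iteration.

    Assumptions: `image` is normalized to [0, 1]; the threshold starts at
    `theta0` and decays by exp(-alpha) per step; `v_t` is large enough that
    a neuron fires only once during a short run.
    """
    stim = np.asarray(image, dtype=float)
    y = np.zeros_like(stim)                 # pulses of the previous step
    theta = np.full_like(stim, theta0)      # dynamic threshold
    fired = np.zeros(stim.shape, dtype=bool)
    history = []
    for _ in range(n_iter):
        link = v_l * neighbor_sum(y)        # linking input L
        u = stim * (1.0 + beta * link)      # modulation U = F (1 + beta L)
        y = (u > theta).astype(float)       # comparator output Y
        fired |= y.astype(bool)
        theta = np.exp(-alpha) * theta + v_t * y  # decay, then raise if fired
        history.append(fired.copy())
    return history
```

Each entry of the returned list is a candidate binary segmentation; brighter regions fire first and darker regions follow as the threshold decays.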

2.2 Fuzzy Mutual Information

Mutual information (MI) is a similarity measure that, because of its proven versatility, has been widely used in image processing [5]. This information-theoretic measure does not depend on any assumptions about the data and does not assume a particular relationship between the intensities of the two images. In image processing, it is assumed that the dependence between the gray levels of two images is greatest when they are correctly aligned. The maximum-MI criterion has been used for image segmentation. However, it does not always give the best segmentation, because it is affected by changes in the overlapping area of the images. Applying fuzzy theory to image segmentation leads to the fuzzy mutual information (FMI). This paper introduces an MI based on the correlation coefficient, as follows:

Given images A and B, the MI is defined as
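In its standard information-theoretic form, sketched here in LaTeX (the base of the logarithm is an assumption), this reads:

```latex
\begin{equation}
MI(A, B) = \sum_{a}\sum_{b} p_{AB}(a, b)\,
           \log \frac{p_{AB}(a, b)}{p_{A}(a)\, p_{B}(b)}
\end{equation}
```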

where p_AB(a, b) is the joint probability distribution of the two images, and p_A(a) and p_B(b) are the marginal distributions of images A and B, respectively. Then the FMI is given by

where α is an adjustable factor which is greater than 0, and ρ(a, b) is the correlation coefficient of images A and B. FMI has the following properties:

1. Symmetry: FMI(A, B) = FMI(B, A).

2. Conversion: If the adjustable factor α = 0, then the FMI is the same as MI.
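As an illustration of the plain MI term only, the following NumPy sketch estimates MI between two equally sized images from their joint histogram. The correlation-based FMI weighting is not reproduced here; the function name `mutual_information` and the bin count are assumptions made for this example.

```python
import numpy as np


def mutual_information(a, b, bins=32):
    """Estimate MI (in bits) between two equally sized images.

    The joint distribution p_AB is approximated by a normalized 2-D
    histogram of the paired gray levels; `bins` is an illustrative choice.
    """
    joint, _, _ = np.histogram2d(np.ravel(a), np.ravel(b), bins=bins)
    p_ab = joint / joint.sum()                 # joint distribution
    p_a = p_ab.sum(axis=1, keepdims=True)      # marginal of A, shape (bins, 1)
    p_b = p_ab.sum(axis=0, keepdims=True)      # marginal of B, shape (1, bins)
    nz = p_ab > 0                              # skip empty cells: 0 * log 0 = 0
    return float(np.sum(p_ab[nz] * np.log2(p_ab[nz] / (p_a @ p_b)[nz])))
```

Swapping the arguments transposes the joint histogram, so the estimate is symmetric, in line with the symmetry property listed above.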

2.3 Auto-Segmentation Algorithm

The algorithm can be implemented with the following steps:

1. Set the parameters: V_L = 0.5, α = 10, θ_0 = 255, β = 0.1, and iteration number n = 1.

2. Input the normalized gray image to the PCNN as the external stimulus signal I_ij.

3. Iterate n = n + 1.

4. Segment the image by PCNN.

5. Compute the value of FMI. If FMI < FMI_max, go to Step 3; otherwise stop the segmentation and take the current result as the final result.
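One way to read the stopping rule in Step 5 is to stop as soon as the criterion no longer increases and to keep the last result before the drop. The sketch below implements that reading in a generic form; it is an assumption about the intended rule, and `select_by_max_criterion`, `candidates`, and `score` are hypothetical names.

```python
from typing import Callable, Iterable, Tuple, TypeVar

S = TypeVar("S")


def select_by_max_criterion(
    candidates: Iterable[S], score: Callable[[S], float]
) -> Tuple[int, S, float]:
    """Return (iteration, result, value) at the first maximum of the criterion.

    `candidates` yields one segmentation per iteration (for example the
    output of a PCNN step) and `score` evaluates the criterion (for example
    FMI against the original image). Iteration stops at the first decrease,
    so later candidates are never computed when `candidates` is lazy.
    """
    best = None
    for n, seg in enumerate(candidates, start=1):
        value = score(seg)
        if best is not None and value < best[2]:
            break  # the criterion has passed its maximum
        best = (n, seg, value)
    if best is None:
        raise ValueError("no candidate segmentations were provided")
    return best
```

For example, `select_by_max_criterion([0.1, 0.4, 0.7, 0.6, 0.9], lambda s: s)` stops at the fourth candidate and returns the third, so a later, higher value is not reached under this rule.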

3 Results and Discussions

Figure 68.2 shows the segmentation results of tire images by different algorithms. The images from left to right in the first row are the original tire image, the image with Gaussian noise, the image with salt and pepper noise, and the image with multiplicative noise, respectively.

Figure 68.3 shows the segmentation results of a medical cerebral CT image. Figure 68.3a is the original CT image, and Figs. 68.3b–d are the images segmented by Otsu, PCNN with max-entropy, and PCNN with max-FMI, respectively. Figure 68.4 shows the segmentation results of a breast tumor ultrasound image in the same sequence as Fig. 68.3. The PCNN with max-FMI algorithm again produced a better segmentation than the other two algorithms, especially at the boundaries of the region of interest. Despite the volume effect in CT, the CT image is clear enough without pre-processing. The intracranial regions in the cerebral CT image, such as the cerebrospinal fluid and the brain matter, are well segmented by PCNN with max-FMI, while there is too much noise in the images segmented by Otsu and by PCNN with max-entropy. For the ultrasound image, there are too many small spots in Fig. 68.4b, which means that the Otsu algorithm suffered from the speckle noise. The boundary in Fig. 68.4c is obviously smoothed, and the details are lost with the PCNN with max-entropy algorithm. In contrast, the boundary of the breast tumor could be accurately segmented by PCNN with max-FMI, although speckle noise is inherent in ultrasound images. Furthermore, our algorithm can segment the ultrasound image without denoising or enhancement as pre-processing, which reduces the running time. The proposed algorithm therefore has strong robustness against noise and high efficiency (Fig. 68.4).

4 Conclusion

In conclusion, we introduced a new automatic image segmentation algorithm based on PCNN and FMI. The experiments confirmed that the proposed algorithm is able to segment CT and ultrasound images effectively. The results suggest that the proposed algorithm has potential applications in medical image segmentation.

References

1. Noble JA, Boukerroui D (2006) Ultrasound image segmentation: a survey. IEEE Trans Med Imaging 25:987–1010

2. Tsantis S, Dimitropoulos N, Cavouras D et al (2006) A hybrid multi-scale model for thyroid nodule boundary detection on ultrasound images. Comput Methods Programs Biomed 84:86–98

3. Archip N, Rohling R, Cooperberg P (2005) Ultrasound image segmentation using spectral clustering. Ultrasound Med Biol 31:1485–1497

4. Eckhorn R, Reitboeck HJ, Arndt M (1990) Feature linking via synchronization among distributed assemblies: simulation of results from cat cortex. Neural Comput 2(3):293–307

5. Madabhushi A, Metaxas DN (2003) Combining low-, high-level and empirical domain knowledge for automated segmentation of ultrasonic breast lesions. IEEE Trans Med Imaging 22:255–269

© 2013 Springer-Verlag London

Cite this paper

Lin, Y., Xu, X. (2013). An Improved Image Segmentation Approach Based on Pulse Coupled Neural Network. In: Zhong, Z. (eds) Proceedings of the International Conference on Information Engineering and Applications (IEA) 2012. Lecture Notes in Electrical Engineering, vol 220. Springer, London. https://doi.org/10.1007/978-1-4471-4844-9_68

Publisher Name: Springer, London

Print ISBN: 978-1-4471-4843-2

Online ISBN: 978-1-4471-4844-9
