Abstract

In this paper, we propose a novel method that fits linear complementarity problems arising in interactive rigid-body simulations, based on the accelerated modulus-based Gauss–Seidel (AMGS) method. We give a new sufficient condition for the convergence of the generated sequence under a milder condition on the matrix splitting than the special case of the AMGS method. This gives a flexibility in the choice of the matrix splitting, and an appropriate matrix splitting can lead to a better convergence rate in practice. Numerical experiments show that the proposed method is more efficient than the simple application of the AMGS method, and that the accuracy in each step of the proposed method is superior to that of the projected Gauss–Seidel method.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In rigid-body simulations, interactions (for example, normal forces) between rigid bodies are often mathematically modeled as constraints. For computing these constraint forces, we usually need to solve certain equations. Two representative constraint formulations for rigid-body simulations are acceleration-based formulations [3] and velocity-based formulations [1]. In the acceleration-based formulations, the constraints are described with forces and accelerations of rigid bodies; we first compute forces and accelerations, then integrate them to obtain velocity changes. On the other hand, in the velocity-based formulations, the variables in the constraints are impulses and velocities of the rigid bodies. In this paper, we focus on the velocity-based formulations, since the velocity-based formulations are widely used and are known to be superior to the acceleration-based formulations in many aspects (for example, see [9, 14]). In recent years, position-based formulations [8] have also been developed for rigid-body simulations. The position-based method was originally proposed for cloth simulations [7], but it is widely used for various simulations especially in the context of computer graphics [6].

There are two main categories for solving constraints, iterative approaches and direct approaches. Our interest in this paper is the iterative approaches rather than the direct approaches, since the direct approaches such as pivoting methods often suffer from time complexity and numerical instability as pointed in [13]. In the impulse-based iterative approaches [9], impulses are applied to the rigid bodies sequentially, until certain convergence conditions are satisfied. Tang et al. [16] proposed an impulse-based energy tracking method that computes accurate velocities by applying impulses iteratively. This method implicitly computes relative velocities after collisions, since an explicit computation of the velocities may lead to physically inaccurate results when multiple contacts occur simultaneously.

Contact constraints are frequently modeled in a form of complementarity problems [4]; in particular, linear complementarity problems (LCPs) give mathematical formulations for frictionless contacts. For frictional contacts, we can use nonlinear complementarity problems (NCPs) to formulate accurate Coulomb friction [18], or, as we will discuss later in Sect. 4.1, we can use boxed LCPs for approximated contact friction which employs friction pyramids instead of accurate friction cones. Among various iterative methods for solving LCPs, the projected Gauss–Seidel (PGS) method [5] has many extensions for solving contact constraints [9, 10, 14]. Another iterative approach for solving LCPs is the use of modulus-based methods. Bai [2] established modulus-based matrix splitting iteration (MMSI) methods, which include modulus-based Jacobi (MJ), modulus-based Gauss–Seidel (MGS), modulus-based successive over relaxation (MSOR), and modulus-based accelerated overrelaxation (MAOR) iteration methods as special cases. Mezzadri and Galligani [12] proposed an extension of the MMSI methods so that they can be used to solve horizontal linear complementarity problems (HLCPs). Zheng and Vong [19] examined the convergence of the MMSI methods for HLCPs, and they proposed a more general convergence result. Zheng and Yin [20] proposed accelerated modulus-based matrix splitting iteration (AMMSI) methods as an improvement of Bai [2]. In a similar way to the MMSI methods, the AMMSI methods include accelerated modulus-based Jacobi (AMJ), accelerated modulus-based SOR (AMSOR), and accelerated modulus-based accelerated overrelaxation (AMAOR) iteration methods. Furthemore, the AMMSI methods also devised the accelerated modulus-based Gauss–Seidel (AMGS) method.

In this paper, we give a theoretical proof on the convergence of the accelerated modulus-based Gauss–Seidel (AMGS) method. Zheng and Yin [20] already discussed the convergence in a general case, but their assumption for the case of positive-definite coefficient matrices is too restrictive to apply the same discussion to rigid-body simulations. We propose another sufficient condition of the convergence of the AMGS method when the coefficient matrix of the LCP is positive definite, so that we can choose a parameter which leads to a faster convergence. We also show a simple example that is covered by the condition we propose, but is not covered by the condition of a general case proposed in [20].

We also improve the efficency of the proposed method. In many applications of real-time simulations, interactive computer graphics and operations are considered most important, since, if the computation in each simulation step is considerably slower than real time, the quality of the users’ experience would be seriously degraded. Since the AMGS method proposed in [20] is not designed for interactive rigid-body simulations, a simple application of the AMGS method causes inefficiency and it is a serious disadvantage for real-time simulations. The proposed method focuses the update formula in the AMGS method and exploits the structures related to the generalized velocity vector of rigid bodies.

Through numerical experiments, we observed that the proposed AMGS method attained shorter computation time than the original AMGS method. Furthermore, its convergence rate in each iteration was better than that of the PGS method. These results indicate that the proposed method is useful for practical real-time simulations. Mezzadri [11] showed that under a specific condition, the PGS method and the AMGS method are equivalent in that AMGS iterations can be written as PGS iterations. Mezzadri also pointed out that the AMGS method also performs like the projected successive over-relaxation (PSOR) method under a specific parameter choice, and this is consistent with our numerical results.

The outline of this paper is as follows. In Sect. 2, we briefly introduce a formulation of velocity-based constraints as an LCP. We also discuss the PGS method and the AMGS method to solve LCPs. We prove convergence theorems of the AMGS method in Sect. 3, and the application of the AMGS method to rigid-body simulations is developed in Sect. 4. In Sect. 5, we will show numerical results to verify the efficiency of the AMGS method. Finally, we will give a conclusion in Sect. 6.

2 Preliminaries

2.1 Linear complementarity problem with velocity-based constraints

For the latter discussions, we briefly introduce an LCP that arises from velocity-based constraints. For more details, the readers can refer to [4, 15, 17].

During a rigid-body simulation, we keep tracking movements of the rigid bodies in multiple time periods, therefore, an entire simulation is divided into a sequence of simulation steps, and each simulation step corresponds to a small time step. Since the constraints on the rigid bodies should be satisfied at each time, we solve the following LCP in each simulation step:

In this paper, we use the superscript T to denote the transpose of a vector or a matrix.

The decision variable in this LCP is \(\varvec{\lambda }\in \mathbb {R}^m\), which is the impulse vector applied to the rigid bodies in the constraint space. The first constraint in (1) requires \(\varvec{\lambda }\) to be nonnegative to ensure that the constraint impulse must be repulsive.

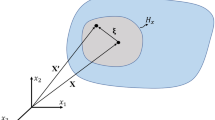

The second constraint in (1) corresponds to the velocity constraints in the rigid-body simulation. The vectors \(\varvec{b}\in \mathbb {R}^m\) and \(\varvec{v}\in \mathbb {R}^n\) are the bias vector in a constraint space and the generalized velocity vector of rigid bodies, respectively. More precisely, when we have N rigid bodies, \(\varvec{v}\) is a vector that consists of N linear and angular velocities, i.e.,

where \(\varvec{v}_i\in \mathbb {R}^3\) and \(\varvec{\omega }_i\in \mathbb {R}^3\) are the linear velocity and the angular velocity of the ith rigid body, respectively, for \(i=1,\ldots ,N\); thus the length of \(\varvec{v}\) is \(n = 6 N\). The matrix \(\varvec{J}\in \mathbb {R}^{m \times n}\) is the Jacobian matrix corresponding to the velocity constraints. The generalized mass matrix of the rigid bodies \(\varvec{M}\in \mathbb {R}^{n\times n}\) consists of masses and inertia tensor matrices in the diagonal positions:

where \(m_i\in \mathbb {R}\) and \(\varvec{I}_i\in \mathbb {R}^{3\times 3}\) are the mass and the inertia tensor matrix of the ith rigid body, respectively. We also use \(\varvec{E}_r \in \mathbb {R}^{r\times r}\) to denote the identity matrix of order r. The inertia tensor matricies \(\varvec{I}_1, \ldots , \varvec{I}_N\) are symmetric, so is \(\varvec{M}\).

The third constraint in (1) is a complementarity condition. We can understand this complementarity condition as follows. If \((\varvec{J}\varvec{M}^{-1}\varvec{J}^T\varvec{\lambda }+ \varvec{J}\varvec{v}+\varvec{b})_i>0\) holds for some i, then the rigid bodies are moving away from each other in the direction of the ith constraint, therefore, the ith constraint should be “inactive”. However, \(\varvec{\lambda }\) is the impulse vector, thus \(\lambda _i>0\) implies that the ith constraint must be “active”. Hence, \(\lambda _i>0\) and \((\varvec{J}\varvec{M}^{-1}\varvec{J}^T\varvec{\lambda }+ \varvec{J}\varvec{v}+\varvec{b})_i>0\) should not hold simultaneously, and this requirement is implemented in the complementarity condition.

By denoting \(\varvec{q}= \varvec{J}\varvec{v}+\varvec{b}\) and \(\varvec{A}= \varvec{J}\varvec{M}^{-1}\varvec{J}^T\) and introducing an auxiliary variable \(\varvec{w}\in \mathbb {R}^m\), the LCP (1) can be expressed in a general LCP as follows:

It is known that if the constraints are non-degenerate, the Jacobian matrix \(\varvec{J}\) is full row rank and the matrix \(\varvec{A}=\varvec{J}\varvec{M}^{-1}\varvec{J}^T\) is positive definite (see [5], for example). Throughout this paper, we assume that \(\varvec{A}\) is positive definite. Since any positive definite matrix is a P-matrix, \(\varvec{A}\) is also a P-matrix and therefore the LCP (3) has a unique solution for an arbitrary vector \(\varvec{q}\).

At the end of this subsection, we should note that the input data \(\varvec{b}, \varvec{v}, \varvec{J}\) and \(\varvec{M}\) vary in accordance with simulation steps. If we express the time dependence explicitly, they should be \(\varvec{b}^{(t)}, \varvec{v}^{(t)}, \varvec{J}^{(t)}\) and \(\varvec{M}^{(t)}\) where t is the simulation step. However, in this paper, we mainly focus on solving (1) in each simulation step, therefore, we usually drop the simulation step (t) from \(\varvec{b}^{(t)}, \varvec{v}^{(t)}, \varvec{J}^{(t)}\) and \(\varvec{M}^{(t)}\).

2.2 Projected Gauss–Seidel method

In the LCP (3) from the rigid-body simulation, the matrix \(\varvec{A}\) has a structure such that \(\varvec{A}= \varvec{J}\varvec{M}^{-1}\varvec{J}^T\). The projected Gauss–Seidel (PGS) method [9] is designed to solve more general LCPs (3) in the sense that the assumption for \(\varvec{A}\) is only positive definiteness. The PGS method is an iterative method and generates a sequence \(\left\{ \varvec{\lambda }^k\right\} _{k=0}^{\infty } \subset \mathbb {R}^m\).

A key property in the PGS method is to decompose \(\varvec{A}\) into \(\varvec{A}=\varvec{D}-\varvec{L}-\varvec{U}\) such that \(\varvec{D}\) is a diagonal matrix, \(\varvec{L}\) a strictly lower triangular matrix, and \(\varvec{U}\) a strictly upper triangular matrix. Since we assume \(\varvec{A}\) is a positive definite matrix, \(\varvec{D}\) is invertible. Due to this decomposition, \(\varvec{A}\varvec{\lambda }+ \varvec{q}= \varvec{0}\) is equivalent to \(\varvec{\lambda }= \varvec{D}^{-1} (\varvec{L}\varvec{\lambda }+ \varvec{U}\varvec{\lambda }- \varvec{q}).\)

Taking this formula and the complementarity condition into consideration, the PGS method computes the next iteration \(\varvec{\lambda }^{k+1}\) by the following update formula:

Throughout this paper, we use \(\max \left\{ \varvec{a}, \varvec{b}\right\}\) (\(\min \left\{ \varvec{a}, \varvec{b}\right\}\)) to denote the element-wise maximum (minimum, respectively) of two vectors \(\varvec{a}\) and \(\varvec{b}\). The PGS method continues the update by (4) until the sequence \(\left\{ \varvec{\lambda }^k\right\} _{k=0}^\infty\) converges enough, or the number of the iterations reaches a certain limit.

In the rigid-body simulation, the initial vector \(\varvec{\lambda }^0\) is usually set as a zero vector \(\varvec{0}\) or the impulse vector obtained in the previous simulation step. Since the initial vector often affects the performance of iterative approaches, the use of the solution from the previous simulation step makes the convergence faster [9]. Such a technique is called warm start.

2.3 Accelerated modulus-based Gauss–Seidel method

For solving the general LCP (3), Bai [2] devised the following implicit fixed-point equation that is essential for the modulus-based matrix splitting iteration (MMSI) methods:

Here, the matrices \(\varvec{M}_0 \in \mathbb {R}^{m \times m}\) and \(\varvec{N}_0 \in \mathbb {R}^{m \times m}\) are a splitting pair of \(\varvec{A}\) such that \(\varvec{A}= \varvec{M}_0 - \varvec{N}_0\). \(\varvec{\varOmega }\in \mathbb {R}^{m \times m}\) and \(\varvec{\varGamma }\in \mathbb {R}^{m \times m}\) are two diagonal matrices whose diagonal entries are positive. \(\varvec{\varOmega }_1 \in \mathbb {R}^{m\times m}\) and \(\varvec{\varOmega }_2 \in \mathbb {R}^{m\times m}\) are nonnegative diagonal matrices such that \(\varvec{\varOmega }=\varvec{\varOmega }_1+\varvec{\varOmega }_2\). We should emphasize that the variable in (5) is \(\varvec{x}\), and we use \(\left|\varvec{x}\right|\) to denote the element-wise absolute values of \(\varvec{x}\). The relation between \(\varvec{x}\) and the pair of \(\varvec{\lambda }\) and \(\varvec{w}\) in (3) will be discussed in Theorem 1.

By setting \(\varvec{\varOmega }_1=\varvec{\varOmega }\), \(\varvec{\varOmega }_2=\varvec{O}\), and \(\varvec{\varGamma }=\frac{1}{\gamma }\varvec{E}_m\) with a parameter \(\gamma >0\) in (5), we can obtain a simplified implicit fixed-point equation:

Based on (6), the iteration of the MMSI method can be derived as follows:

We decompose \(\varvec{A}\) into \(\varvec{A}=\varvec{D}-\varvec{L}-\varvec{U}\) in the same way as the PGS method. Set \(\gamma =2\), and let \(\alpha >0\) and \(\beta > 0\) be two parameters. Then, we can derive the update formula of four methods from (7); the modulus-based Jacobi (MJ) by setting \(\varvec{M}_0=\varvec{D}\) in (7), the modulus-based Gauss–Seidel (MGS) by \(\varvec{M}_0=\varvec{D}-\varvec{L}\), the modulus-based successive over relaxation (MSOR) by \(\varvec{M}_0=\frac{1}{\alpha }\varvec{D}-\varvec{L}\), and the modulus-based accelerated overrelaxation (MAOR) iteration method by \(\varvec{M}_0=\frac{1}{\alpha }(\varvec{D}-\beta \varvec{L})\), respectively.

Zheng and Yin [20] utilized two splitting pairs of the matrix \(\varvec{A}\) such that \(\varvec{A}=\varvec{M}_1-\varvec{N}_1=\varvec{M}_2-\varvec{N}_2\), and devised a new equation based on (5):

Zheng and Yin [20] established the following theorem to show an equivalence between (8) and \(\text {LCP}(\varvec{q},\varvec{A})\) in (3). Since a detailed proof is not given in [20], we give the proof here.

Theorem 1

[20] The following statements hold between (8) and \(\text {LCP}(\varvec{q},\varvec{A})\):

-

(i)

if \((\varvec{\lambda },\varvec{w})\) is a solution of \(\text {LCP}(\varvec{q},\varvec{A})\), then \(\varvec{x}=\frac{1}{2}(\varvec{\varGamma }^{-1}\varvec{\lambda }-\varvec{\varOmega }^{-1}\varvec{w})\) satisfies (8).

-

(ii)

if \(\varvec{x}\) satisfies (8), then the pair of \(\varvec{\lambda }=\varvec{\varGamma }(\left|\varvec{x}\right|+\varvec{x})\) and \(\varvec{w}=\varvec{\varOmega }(\left|\varvec{x}\right|-\varvec{x})\) is a solution of \(\text {LCP}(\varvec{q},\varvec{A})\).

Proof

We first prove (i). Since \((\varvec{\lambda },\varvec{w})\) is a solution of \(\text {LCP}(\varvec{q},\varvec{A})\), \((\varvec{\lambda },\varvec{w})\) satisfies the four constraints, \(\varvec{A}\varvec{\lambda }+ \varvec{q}= \varvec{w}\), \(\varvec{w}^T \varvec{\lambda }= 0\), \(\varvec{\lambda }\ge \varvec{0}\) and \(\varvec{w}\ge \varvec{0}\). The first constraint \(\varvec{A}\varvec{\lambda }+ \varvec{q}= \varvec{w}\) is equivalent to

From the rest three constraints and the fact that \(\varvec{\varGamma }\) and \(\varvec{\varOmega }\) are diagonal matrices whose diagonal entries are positive, if \(\varvec{x}=\frac{1}{2}(\varvec{\varGamma }^{-1}\varvec{\lambda }-\varvec{\varOmega }^{-1}\varvec{w})\), it holds that \(\left|\varvec{x}\right| =\frac{1}{2}(\varvec{\varGamma }^{-1}\varvec{\lambda }+\varvec{\varOmega }^{-1}\varvec{w})\). Therefore, \(\varvec{x}\) satisfies

and this is equivalent to (8).

To prove (ii), from (9), it holds that \(\varvec{A}\varvec{\varGamma }(\left|\varvec{x}\right|+\varvec{x})+\varvec{q}= \varvec{\varOmega }(\left|\varvec{x}\right|-\varvec{x})\). By the relations \(\varvec{\lambda }=\varvec{\varGamma }(\left|\varvec{x}\right|+\varvec{x})\) and \(\varvec{w}=\varvec{\varOmega }(\left|\varvec{x}\right|-\varvec{x})\), we obtain \(\varvec{A}\varvec{\lambda }+\varvec{q}=\varvec{w}\). Since \(\varvec{\varGamma }\) and \(\varvec{\varOmega }\) are positive diagonal matrices, it is easy to check that \(\varvec{\lambda }\) and \(\varvec{w}\) are nonnegative vectors. Finally, it is also easy to show the element-wise complementarity between \(\varvec{\lambda }\) and \(\varvec{w}\). \(\square\)

We may use Theorem 1 to establish some iterative methods for solving \(\text {LCP}(\varvec{q},\varvec{A})\), but we need to set appropriate matrices for the implicit fixed-point equation (8) in actual computations. In particular, the splitting pair of \(\varvec{\varOmega }\) is not unique. By fixing \(\varvec{\varOmega }_1= \varvec{\varOmega }\), \(\varvec{\varOmega }_2=\varvec{O}\) and \(\varvec{\varGamma }=\frac{1}{\gamma } \varvec{E}_m\), we derive a simplified update equation of (8) as follows:

As mentioned in Sect. 2.1, we use \(\varvec{E}_r\in \mathbb {R}^{r\times r}\) to denote the identity matrix of order r. Based on this equation, Zheng and Yin [20] provided an update formula of the AMMSI methods:

When the sequence \(\left\{ \varvec{x}^k\right\} _{k=0}^\infty\) converges enough, the AMMSI methods output the impulse vector by using the relation \(\varvec{\lambda }= \varvec{\varGamma }(\left|\varvec{x}\right| + \varvec{x}) = \frac{\left|\varvec{x}\right| + \varvec{x}}{\gamma }\). As Mezzadri [11] points out, every AMMSI method can be written in a projection form, and the value of \(\gamma\) does not play an important role in convergence rates as it just changes the first iteration of the method in its projection form.

By changing the splitting pairs of \(\varvec{A}\), the update formula (11) above yields variant methods; MMSIM (\(\varvec{M}_2=\varvec{A}\) and \(\varvec{N}_2=\varvec{O}\)), the accelerated modulus-based Jacobi (AMJ) iteration method (\(\varvec{M}_1=\varvec{D}\), \(\varvec{N}_1=\varvec{L}+\varvec{U}\), \(\varvec{M}_2=\varvec{D}-\varvec{U}\) and \(\varvec{N}_2=\varvec{L}\)), the accelerated modulus-based SOR (AMSOR) iteration method (\(\varvec{M}_1=\frac{1}{\alpha }\varvec{D}-\varvec{L}\), \(\varvec{N}_1=\left( \frac{1}{\alpha }-1\right) \varvec{D}+\varvec{U}\), \(\varvec{M}_2=\varvec{D}-\varvec{U}\) and \(\varvec{N}_2=\varvec{L}\)), and the accelerated modulus-based accelerated overrelaxation (AMAOR) iteration method (\(\varvec{M}_1=\frac{1}{\alpha }(\varvec{D}-\beta \varvec{L})\), \(\varvec{N}_1=\frac{1}{\alpha }((\alpha -1)\varvec{D}+(\alpha -\beta )\varvec{L}+\alpha \varvec{U})\), \(\varvec{M}_2=\varvec{D}-\varvec{U}\) and \(\varvec{N}_2=\varvec{L}\)).

In particular, the update formula of the accelerated modulus-based Gauss–Seidel (AMGS) method in [20] is derived with \(\varvec{M}_1=\varvec{D}-\varvec{L}\), \(\varvec{N}_1=\varvec{U}\), \(\varvec{M}_2=\varvec{D}-\varvec{U}\) and \(\varvec{N}_2=\varvec{L}\) as follows:

Let \(\varDelta \varvec{x}^k = \varvec{x}^{k+1} - \varvec{x}^k\) be the difference between \(\varvec{x}^k\) and \(\varvec{x}^{k+1}\). Then, (12) is equivalent to

By Theorem 1 and \(\varvec{\varGamma }= \frac{1}{\gamma } \varvec{E}_m\), the sequence \(\{\varvec{\lambda }^k\}_{k=0}^\infty\) for the LCP (3) can be associated with the sequence \(\{\varvec{x}^k\}_{k=0}^\infty\) generated by (12) by the relation \(\varvec{\lambda }^k=\frac{\left|\varvec{x}^k\right|+\varvec{x}^k}{\gamma }=\frac{2}{\gamma }\max \{\varvec{0},\varvec{x}^k\}\), thus \(\varvec{\lambda }^k\) is a multiple of the positive part of \(\varvec{x}^k\). This motivates us to split \(\varvec{x}^k\) into the positive and negative parts such that \(\varvec{x}^k=\varvec{x}_+^k - \varvec{x}_-^k\), where \(\varvec{x}_+^k = \max \{\varvec{0},\varvec{x}^k\} = \frac{1}{2}(\left|\varvec{x}^k\right| + \varvec{x}^k)\) and \(\varvec{x}_-^k=-\min \{\varvec{0},\varvec{x}^k\} = \frac{1}{2}(\left|\varvec{x}^k\right|-\varvec{x}^k)\). From the relations \(\varvec{x}^k = \frac{\gamma }{2} \varvec{\lambda }^k - \varvec{x}_-^k\) and \(\left|\varvec{x}^k\right| = \frac{\gamma }{2} \varvec{\lambda }^k + \varvec{x}_-^k\), (14) is equivalent to

Therefore, for computing \(\varDelta x_i^k\), we only need the ith component of \(\varvec{x}_-^k\), which will be denoted as \((\varvec{x}_-^k)_i\), since \(\varvec{D}\) and \(\varvec{\varOmega }\) are diagonal matrices. This simplifies the computation of \(\varDelta \varvec{x}^k\). Recalling the decomposition of \(\varvec{A}= \varvec{D}- \varvec{L}- \varvec{U}\), we compute \(\varDelta x^k_i\) for each \(i=1,\ldots ,m\) by

We can summarize a framework of the AMGS method as follows.

Method 1

(the AMGS method for ( 3 ))

Choose a nonnegative vector \(\varvec{\lambda }^0\in \mathbb {R}^m\) as an initial vector. Generate the iteration sequence \(\{\varvec{\lambda }^k\}_{k=0}^\infty\) by the following procedure:

3 Convergence theorem

In this section, we focus on the convergence of the accelerated modulus-based Gauss–Seidel (AMGS) method.

Although Zheng and and Yin [20] gave a generic form of a sufficient condition of the convergence, a more detailed discussion of the case that \(\varvec{A}\) is a positive definite matrix is somewhat limited: \(\varvec{\varOmega }\) is assumed to be a multiple of the identity matrix (\(\varvec{\varOmega }={{\bar{\omega }}}\varvec{E}_m\) for some \({{\bar{\omega }}}>0\)), so we cannot take \(\varvec{\varOmega }=\alpha \varvec{D}\) that is actually used in the numerical experiments in [20].

Since the matrix \(\varvec{A}\) is always positive definite in the rigid-body simulation, it is worthwhile to give a sufficient condition of the convergence when \(\varvec{A}\) is positive definite while allowing a more generic form of \(\varvec{\varOmega }\). For example, if we can take \(\varvec{\varOmega }= \alpha \varvec{D}\), we may be able to improve the convergence, as we will show in Sect. 5. Here, \(\alpha \in \mathbb {R}\) is a positive constant, and \(\varvec{D}\) is the diagonal matrix whose diagonal elements are those of \(\varvec{A}\). We also give an example in Example 1 that is covered by the theorem we will show, but is not covered by theorems in [20].

We use \(\left\Vert \varvec{x}\right\Vert = \sqrt{\varvec{x}^T \varvec{x}}\) to denote the Euclidean norm of \(\varvec{x}\in \mathbb {R}^m\). We also use \(\left\Vert \varvec{L}\right\Vert\) to denote an arbitrary norm of a matrix \(\varvec{L}\in \mathbb {R}^{m\times m}\) that is consistent with the Euclidean vector norm, so that we can employ \(\left\Vert \varvec{L}\varvec{x}\right\Vert \le \left\Vert \varvec{L}\right\Vert \left\Vert \varvec{x}\right\Vert\). Especially, \(\left\Vert \varvec{L}\right\Vert _2\) represents the spectral norm of a matrix L.

The following theorem covers the case that \(\varvec{\varOmega }=\alpha \varvec{D}\), which will be actually used in numerical experiments of Sect. 5.

For the subsequent discussion, we assume that \(\varvec{A}\) is a symmetric positive definite matrix and hence \(\varvec{U}=\varvec{L}^T\). Let \({{\bar{\varvec{\varOmega }}}}=\gamma \varvec{\varOmega }\). The matrix \({{\bar{\varvec{\varOmega }}}}\) is also a positive diagonal matrix.

Theorem 2

Let \(\varvec{A}\) be a symmetric positive definite matrix, \({{\bar{\varvec{\varOmega }}}}\) be a positive diagonal matrix, and \(\varvec{A}=\varvec{D}-\varvec{L}-\varvec{L}^T\) be a splitting of the matrix \(\varvec{A}\) such that \(\varvec{D}\) is a positive diagonal matrix and \(\varvec{L}\) is a strictly lower triangular matrix. Also, let \(\left\Vert \cdot \right\Vert\) be an arbitrary submultiplicative matrix norm consistent with the Euclidean vector norm. Then, if the inequality

holds, the iteration sequence \(\{\varvec{\lambda }^k\}_{k=0}^\infty \subset \mathbb {R}^m\) generated by Method 1 with an arbitrary nonnegative initial vector \(\varvec{\lambda }^0\in \mathbb {R}^m\) converges to the unique solution \(\varvec{\lambda }^*\in \mathbb {R}^m\) of \(\text {LCP}(\varvec{q}, \varvec{A})\).

Proof

In the AMMSI methods [20] from which the AMGS was derived, we chose \(\varvec{\varOmega }_1 = \varvec{\varOmega }, \varvec{\varOmega }_2 = \varvec{O}\) and \(\varvec{\varGamma }= \frac{1}{\gamma } \varvec{E}_m\) for the simplified fixed-point equation (10). Let \((\varvec{\lambda }^*,\varvec{w}^*)\in \mathbb {R}^m \times \mathbb {R}^m\) be a solution of \(\text {LCP}(\varvec{q},\varvec{A})\), and let \(\varvec{x}^*=\frac{1}{2} (\gamma \varvec{\lambda }^* - \varvec{\varOmega }^{-1} \varvec{w}^*)\). From Theorem 1, \(\varvec{x}^*\) satisfies (10), and the convergence of \(\left\{ \varvec{\lambda }^k \right\} _{k=0}^\infty\) to \(\varvec{\lambda }^*\) can be guaranteed by that of \(\left\{ \varvec{x}^k \right\} _{k=0}^\infty\) to \(\varvec{x}^*\), therefore, we discuss the convergence of the sequence \(\left\{ \varvec{x}^k \right\} _{k=0}^\infty\). Subtracting (10) with \(\varvec{x}^*\) from the update formula (11), we obtain

In the setting of the AMGS method (\(\varvec{M}_1 = \varvec{D}- \varvec{L}, \varvec{N}_1 = \varvec{L}^T, \varvec{M}_2 = \varvec{D}- \varvec{L}^T\) and \(\varvec{N}_2 = \varvec{L}\)), we can further evaluate this inequality as follows:

Using the triangular inequality \(\left\Vert \left|\varvec{a}\right| - \left|\varvec{b}\right|\right\Vert \le \left\Vert \varvec{a}- \varvec{b}\right\Vert\) for any two vectors \(\varvec{a}\) and \(\varvec{b}\) of the same dimension, we have

and it holds that

under the assumption of \(\left\Vert (\varvec{D}- \varvec{L}+ {{\bar{\varvec{\varOmega }}}})^{-1}\right\Vert \left\Vert \varvec{L}\right\Vert < 1\). Therefore, if

or equivalently

holds, we obtain the convergence of \(\{\varvec{x}^k\}_{k = 0}^{\infty }\) by the fixed-point theorem and we know that \(\varvec{x}^*\) is the unique point to which the sequence \(\{\varvec{x}^k\}_{k = 0}^{\infty }\) converges. Note that (17) implies \(\left\Vert (\varvec{D}- \varvec{L}+ {{\bar{\varvec{\varOmega }}}})^{-1}\right\Vert \left\Vert \varvec{L}\right\Vert < 1\). \(\square\)

Based on Theorem 2, we obtain the following corollary.

Corollary 1

Let \(\varvec{A}\) be a symmetric positive definite matrix, \({{\bar{\varvec{\varOmega }}}}\) be a positive diagonal matrix, and \(\varvec{A}=\varvec{D}-\varvec{L}-\varvec{L}^T\) be a splitting of the matrix \(\varvec{A}\) such that \(\varvec{D}\) is a positive diagonal matrix and \(\varvec{L}\) is a strictly lower triangular matrix. Also, let \(\left\Vert \cdot \right\Vert _2\) be the spectral matrix norm, \(\sigma _{\min }\) be the minimum singular value of the matrix \(\varvec{D}- \varvec{L}+ {{\bar{\varvec{\varOmega }}}}\), and \(\sigma _{\max }\) be the maximum singular value of the matrix \(\varvec{D}- \varvec{L}- {{\bar{\varvec{\varOmega }}}}\). Then, if the inequality

holds, the iteration sequence \(\{\varvec{\lambda }^k\}_{k=0}^\infty \subset \mathbb {R}^m\) generated by Method 1 with an arbitrary nonnegative initial vector \(\varvec{\lambda }^0\in \mathbb {R}^m\) converges to the unique solution \(\varvec{\lambda }^*\in \mathbb {R}^m\) of \(\text {LCP}(\varvec{q}, \varvec{A})\).

Proof

By applying the spectral norm to (17), we get

From the fact that

holds for any invertible matrix \(\varvec{P}\) and its singular values \(\sigma _i\), we have

Thus,

follows from the assumption and this completes the proof. \(\square\)

Example 1

The following example gives a case that is applicable to Corollary 1, but is not applicable to Theorem 4.1 in [20]:

Indeed, \(\left\Vert \varvec{L}\right\Vert _2=1\), \(\sigma _{\min }=\frac{1}{2}\left( \sqrt{65}-1\right) =3.5311\ldots\), \(\sigma _{\max }=1\) and \(2\left\Vert \varvec{L}\right\Vert _2<\sigma _{\min }-\sigma _{\max }\) holds. However, it holds that

so \(\mu ({{\bar{\varvec{\varOmega }}}}) + 2\xi ({{\bar{\varvec{\varOmega }}}}) + 2\eta ({{\bar{\varvec{\varOmega }}}}) = 1.2653\ldots >1\) and therefore the example does not fulfill the assumption of Theorem 1.4 in [20].

4 Accelerated modulus-based matrix splitting iteration methods for interactive rigid-body simulation

Since the AMGS method is a general method for LCPs, it is possible to simply apply the AMGS method to (3). However, explicit evaluation of \(\varvec{A}=\varvec{J}\varvec{M}^{-1}\varvec{J}^T\) is inefficient even though the matrices \(\varvec{J}\) and \(\varvec{M}^{-1}\) are sparse. Thus, such a simple application of the AMGS method is not practical. To overcome this inefficiency, we modify the AMGS method so that it does not require the explicit evaluation of the matrix \(\varvec{A}\). In a similar way to the AMGS method, we can also modify the AMSOR method for solving LCPs in the rigid-body simulations.

As already pointed out at above, the direct computation of \(\varvec{A}\) is unfavorable for real-time simulations. Thus, we should avoid the computations of \(\sum _{j=1}^{i-1}A_{ij}\lambda ^{k+1}_j\) and \(\sum _{j=i}^mA_{ij}\lambda ^k_j\) in (15), which involve all the off-diagonal elements of \(\varvec{A}\).

To improve the computational efficiency, we introduce an intermediate variables \(\varvec{\lambda }^{k+1,i} \in \mathbb {R}^m\) and \(\varvec{v}^{k+1,i} \in \mathbb {R}^{n}\). In particular, \(\varvec{v}^{k+1,i}\) stores information of applied impulse [9, 17]. For \(i=1,\ldots ,m\), let

where \({\widehat{\varvec{M}}} = \varvec{M}^{-1}\varvec{J}^T.\) By the definitions of \(\varvec{A}\) and \(\varvec{q}\), it holds

and this leads to

Due to the relation \(\varvec{\lambda }^{k} = \frac{2}{\gamma } \varvec{x}_+^k\), we can compute \(\varvec{\lambda }^{k+1, i+1}\) by updating only the ith position of \(\varvec{\lambda }^{k+1, i}\),

Thus, from (19), we obtain

where \({\widehat{\varvec{M}}}_{*i}\) is the ith column of \({\widehat{\varvec{M}}}\).

The following method summarizes the proposed AMGS method for rigid-body simulations.

Method 2

(the proposed AMGS method for rigid-body simulations)

Choose a nonnegative vector \(\varvec{\lambda }^0\in \mathbb {R}^m\) as an initial vector. Generate the iteration sequence \(\{\varvec{\lambda }^k\}_{k=0}^\infty\) by the following procedure:

In Sect. 5, we compare the computational costs of Method 1 and Method 2, which is an optimized version of Method 1 for interactive rigid-body simulations. As the experiments will show, Method 2 achieves considerably lower computational costs than that of Method 1 in all cases, and is suitable for interactive rigid-body simulations.

4.1 Linear complementarity problems with lower and upper bounds

In this section, we discuss a method for solving LCPs with lower and upper bounds on \(\varvec{\lambda }\), which often occur in the formulations of contact constraints with friction [14, 17]. We call such LCPs with lower and upper bounds “Boxed LCPs (BLCPs)”. Consider the following BLCP:

Here, \(\varvec{l}\in \mathbb {R}^m\) and \(\varvec{u}\in \mathbb {R}^m\) are the lower and the upper bounds, respectively. Without loss of generality, we assume \(0 \le l_i < u_i\) for each \(i=1,\ldots ,m\).

We define a projection function of \(\varvec{\lambda }\) to the interval \(\varvec{l}\) and \(\varvec{u}\) by

Since \(\varvec{l}\) is a nonnegative vector, so is \(p_\text {BLCP}(\varvec{\lambda })\). With this projection, we can give an AMGS method for Boxed LCPs as follows.

Method 3

(the AMGS method for Boxed LCPS in rigid-body simulations)

Choose a nonnegative vector \(\varvec{\lambda }^0\in \mathbb {R}^m\) as an initial vector. Generate the iteration sequence \(\{\varvec{\lambda }^k\}_{k=0}^\infty\) by the following procedure:

Note that \(\text {BLCP}(\varvec{q},\varvec{l},\varvec{u},\varvec{A})\) with \(\varvec{l}= \varvec{0}\) and \(\varvec{u}\) being a very large vector represents the same problem as \(\text {LCP}(\varvec{q},\varvec{A})\), thus Method 3 can be considered as a generalization of Method 1.

5 Numerical experiments

In this section, we show numerical results of several 2-dimensional examples to verify the numerical performance of the proposed method. All tests were performed on an Windows 8 computer with Intel Core i7-5500U (2.4 GHz CPU) and 8 GB memory space.

In the numerical experiments, we utilized the warm-start strategy [9], that is, the final solution obtained in a simulation step will be used as the first guess of the solution in the next simulation step. More precisely, let \(\left\{ \varvec{\lambda }^{(t),k}\right\} _{k=0}^\infty\) denote the sequence generated for solving \(\text {LCP}(\varvec{q}^{(t)}, \varvec{A}^{(t)})\) at a simulation step t. We set the number of iterations in a single simulation step to 10, that is, \(\varvec{\lambda }^{(t),10}\) is used as the first guess \(\varvec{\lambda }^{(t+1),0}\). The initial point of the entire simulation is set as the zero vector \((\varvec{\lambda }^{(0),0} = \varvec{0})\). To evaluate the accuracy of \(\varvec{\lambda }^{(t),k}\), we use a residual function in [2]:

For the AMGS methods, we set \(\gamma = 2\), and \({\bar{\omega }}\) is chosen as \(\frac{\sum _{i=1}^m D_{ii}}{m \gamma }\) (the average of \(D_{11}, \ldots , D_{mm}\) divided by \(\gamma\)) based on preliminary experiments.

For the numerical experiments, we use examples “Pool 1”, “Pool 2” and “Stacking”; in the first two examples, rigid circles are stuffed into a small space, while, in the last case, rigid circles are vertically stacked.

Pool 1

Figure 1 displays an example with 221 circles in an area of 6 meters wide. All the circles have the same mass (2 kilograms) and the same radius (21 centimeters). The coefficients of friction are set to 0.1, and the coefficients of restitution are set to 0.2. When the coefficient of restitution is 1, collisions are perfectly elastic (i.e., no energy loss), and when the coefficient of restitution is 0, collisions are perfectly inelastic. The gravitational acceleration is set to 9.80665 \({\text{m/s}}^2\) in the downward direction in the figure.

In Pool 1, the size n in the matrices \(\varvec{J}\in \mathbb {R}^{m\times n}\) and \(\varvec{M}\in \mathbb {R}^{n\times n}\) is 672, while the size m depend on the simulation step t, since the number of contact points between the circles changes as the simulation proceeds. During the entire simulation, the average of m is 516.

Pool 2

The most part of this example is same as Pool 1, but the masses of the circles increases linearly from 1.0 kilograms to 3.0 kilograms in accordance with their initial heights from the ground; circles in higher positions have larger masses than those in lower positions, and the mass of the heaviest circles (the top circles) are three times of that of the lightest circles (the bottom circles). This is expected that the convergence will be slower, since it is hard for the lower (lighter) circles to support higher (heavier) circles. The sizes n and m of the matrices are the same as Pool 1.

Stacking

Figure 2 displays a simple example with 30 vertically stacked circles of 18-centimeter radius. All circles have the same masses (1.0 kilograms), and the coefficients of restitution are set to 0.2. The coefficients of friction are set to 0.1, but no frictional forces are produced because of the arrangement of the circles. The sizes of n and m (average) are 48 and 14, respectively.

5.1 Convergence in each simulation step

In each simulation step t, we execute only 10 iterations and we move to the next simulation step \(t+1\) with the first guess \(\varvec{\lambda }^{(t+1),0} = \varvec{\lambda }^{(t),10}\). In this subsection, we execute more iterations to compare the convergence of the PGS method and the proposed AMGS methods in each simulation step. The average values of \({\bar{\omega }}\) in the numerical experiments are 1.928, 1.086, and 3.872 in Pool 1, Pool 2 and Stacking, respectively.

For Pool 1, Fig. 3 plots \(RES(\varvec{\lambda }^{(100),k})\) for \(k=1,\ldots , 200\) of the PGS method, Method 2 with \(\varvec{\varOmega }= {\bar{\omega }} \varvec{E}_m\) (shortly, Method 2[\({\bar{\omega }} \varvec{E}_m\)]) and Method 2 with \(\varvec{\varOmega }= \frac{1}{\gamma } \varvec{D}\) (shortly, Method 2[\(\frac{1}{\gamma } \varvec{D}\)]). The horizontal axis is the iteration number k of \(RES(\varvec{\lambda }^{(100),k})\). In a similar way, Figs. 4 and 5 show \(RES(\varvec{\lambda }^{(100),k})\) of Pool 2 and Stacking, respectively. We chose the 100th simulation step, since the early steps contained a lot of noise and the warm-start strategy did not work effectively there.

From Figs. 3, 4, and 5 , we observe that Method 2 attains better convergence than the PGS method in most cases.

Table 1 reports the iteration number k and the computation time in seconds of each method to reach \(RES(\varvec{\lambda }^{(100),k}) < 10^{-4}\). Since the standard AMGS method cannot directly handle contacts with frictions, coefficients of friction are set to 0 only for this experiment. Also, the computation time for the 30 circles in Stacking (Fig. 2) was too short to measure (shorter than 0.001 s), therefore we used 150 circles instead of 30 circles. Method 1 computes the coefficient matrix \(\varvec{A}\) explicitly, and we observe that Method 1 is the slowest in Table 1. This computation time is insufficient for real-time simulations.

In the comparison between Method 2[\({\bar{\omega }} \varvec{E}_m\)] and Method 2[\(\frac{1}{\gamma } \varvec{D}\)], we can see that Method 2[\(\frac{1}{\gamma } \varvec{D}\)] achieves better convergences than Method 2[\({\bar{\omega }} \varvec{E}_m\)]. Furthermore, Method 2[\(\frac{1}{\gamma } \varvec{D}\)] achieves the smallest number of iterations and computation times in all three cases.

5.2 Convergence in entire simulation

In this subsection, we report the computational errors \(RES(\varvec{\lambda }^{(t), 10})\) along with the progress of simulation steps \(t \ge 60\). We removed the first 60 steps from the figure, since the early steps contained much noise. In the previous subsection, we observed that Method 2[\(\frac{1}{\gamma } \varvec{D}\)] is superior to Method 1 and Method 2[\({\bar{\omega }} \varvec{E}_m\)] in each simulation step. Thus, we use only Method 2[\(\alpha \varvec{D}\)] in this subsection, changing the value of \(\alpha\).

In Fig. 6, the horizontal axis is the simulation step t, and the vertical axis is the residual \(RES(\varvec{\lambda }^{(t), 10})\). From Fig. 6, we observe that Method 2 converges faster than the PGS method for \(t \ge 300\). Among different values of \(\alpha\), \(\alpha =0.2\) shows the fastest convergence in the figure.

The result of Pool 2 illustrated in Fig. 7 indicates that there are no clear differences of the convergence speed between the PGS method and the AMGS method with various values of \(\alpha\), but when \(\alpha =0.1\), the simulation is unstable during about the first 100 simulation steps.

From Fig. 8 for Stacking, in a similar way to Pool 1, we can again observe that the AMGS method with the smaller \(\alpha\) gives the faster convergence, and the AMGS method with \(\alpha =0.6\) still converges faster than the PGS method.

Finally, Table 2 shows the entire computation time for 1,000 simulation steps, with 200 iterations for each step, that is, we computed \(\varvec{\lambda }^{(1000),200}\). The entire computation time is shorter in the proposed method than in the PGS method. As mentioned in Table 1, the convergence rate is better in the proposed method (Method 2[\(\alpha \varvec{D}\)]) than in the PGS method, in other words, the proposed method simulates the circles more accurately than the PGS method. Therefore, the proposed method has the advantages for interactive rigid-body simulations.

6 Conclusion

We presented a numerical method based on the AMGS method for interactive rigid-body simulations. We established the convergence theorem of the AMGS method for the case the matrix \(\varvec{A}\) is positive definite and \(\varvec{\varOmega }=\alpha \varvec{D}\) with \(\alpha >0\). This case was examined in the numerical experiments, and we observed that the proposed method attained the better accuracy than the PGS method and the computation time of the proposed method was shorter than that of a simple application of the AMGS method.

In practical cases, however, determining a proper value of \(\alpha\) is not simple. The numerical results showed that a smaller value of \(\alpha\) gave a better convergence, but it is also shown that a too small value of \(\alpha\) results in an unstable behavior. An approach that adaptively determines the value of \(\alpha\) may resolve this problem, and we leave a discussion on such an approach as a future task of this paper. Further numerical experiments in 3-dimensional spaces that take frictions into consideration will be another topic of our future studies. Related to computing frictions correctly, applying the proposed method to more general form of LCPs, such as NCPs and HLCPs, will also be an interesting extension of this paper.

References

Anitescu M, Potra FA (1997) Formulating dynamic multi-rigid-body contact problems with friction as solvable linear complementarity problems. Nonlinear Dyn 14(3):231–247

Bai ZZ (2010) Modulus-based matrix splitting iteration methods for linear complementarity problems. Numer Linear Algebra Appl 17(6):917–933

Baraff D (1993) Non-penetrating rigid body simulation. In: Eurographics ’93 State of the art reports

Baraff D (1994) Fast contact force computation for nonpenetrating rigid bodies. In: Proceedings of the 21st annual conference on computer graphics and interactive techniques. ACM, pp 23–34

Bender J, Erleben K, Trinkle J (2014) Interactive simulation of rigid body dynamics in computer graphics. In: Computer Graphics Forum, vol 33. Wiley Online Library, pp 246–270

Bender J, Müller M, Macklin M (2015) Position-based simulation methods in computer graphics. In: Eurographics (tutorials), p 8

Bender J, Müller M, Otaduy M.A, Teschner M, Macklin M (2014) A survey on position-based simulation methods in computer graphics. In: Computer graphics forum, vol 33. Wiley Online Library, pp 228–251

Deul C, Charrier P, Bender J (2016) Position-based rigid-body dynamics. Comput Anim Virtual Worlds 27(2):103–112

Erleben K (2004) Stable, robust, and versatile multibody dynamics animation. Unpublished Ph. D. Thesis, University of Copenhagen, Copenhagen

Erleben K, Sporring J, Henriksen K, Dohlmann H (2005) Physics-based animation. Charles River Media Hingham

Mezzadri F (2019) On the equivalence between some projected and modulus-based splitting methods for linear complementarity problems. Calcolo 56(4):41

Mezzadri F, Galligani E (2020) Modulus-based matrix splitting methods for horizontal linear complementarity problems. Numer Algor 83(1):201–219

Nakaoka S, Hattori S, Kanehiro F, Kajita S, Hirukawa H (2007) Constraint-based dynamics simulator for humanoid robots with shock absorbing mechanisms. In: IROS 2007 (IEEE/RSJ international conference on intelligent robots and systems, 2007). IEEE, pp 3641–3647

Poulsen M, Abel S.M.N, Erleben K (2010) Heuristic convergence rate improvements of the projected gauss-seidel method for frictional contact problems. In: 18th international conference in Central Europe on computer graphics, visualization and computer vision. Václav Skala-Union Agency, pp 135–142

Stewart DE, Trinkle JC (1996) An implicit time-stepping scheme for rigid body dynamics with inelastic collisions and coulomb friction. Int J Numer Meth Eng 39(15):2673–2691

Tang X, Paluszny A, Zimmerman RW (2014) An impulse-based energy tracking method for collision resolution. Comput Methods Appl Mech Eng 278:160–185

Tonge R, Benevolenski F, Voroshilov A (2012) Mass splitting for jitter-free parallel rigid body simulation. ACM Trans Graph (TOG) 31(4):105

Xie J, Chakraborty N (2016) Rigid body dynamic simulation with line and surface contact. In: 2016 IEEE international conference on simulation, modeling, and programming for autonomous robots (SIMPAR). IEEE, pp 9–15

Zheng H, Vong S (2020) On convergence of the modulus-based matrix splitting iteration method for horizontal linear complementarity problems of h+-matrices. Appl Math Comput 369:124890

Zheng N, Yin JF (2013) Accelerated modulus-based matrix splitting iteration methods for linear complementarity problem. Numer Algor 64(2):245–262

Funding

This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Availability of data and material

All data generated or analysed during this study are included in the article.

Code Availability

Not applicable

Disclosure of potential conflicts of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Research involving Human Participants and/or Animals

Not applicable

Informed consent

Not applicable

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Miyamoto, S., Yamashita, M. An improved convergence based on accelerated modulus-based Gauss–Seidel method for interactive rigid body simulations. SN Appl. Sci. 3, 266 (2021). https://doi.org/10.1007/s42452-021-04238-8

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s42452-021-04238-8