Abstract

A semi-martingale reflecting Brownian motion is a popular process for diffusion approximations of queueing models, including their networks. In this paper, we are concerned with the case where it lives on the nonnegative half-line, but the drift and variance of its Brownian component discontinuously change at finitely many states. This reflecting diffusion process naturally arises from a state-dependent single server queue studied by Miyazawa (Diffusion approximation of the stationary distribution of a two-level single server queue, 2024. https://arxiv.org/abs/2312.11284). Our main interest is in its stationary distribution, which is important for applications. We define this reflecting diffusion process as the solution of a stochastic integral equation, and show that it uniquely exists in the weak sense. This result was also proved, in a different way, by Atar et al. (Parallel server systems under an extended heavy traffic condition: A lower bound, 2022. https://arxiv.org/pdf/2201.07855). In this paper, we consider its Harris irreducibility and stability, that is, positive recurrence, and derive its stationary distribution under this stability condition. The stationary distribution has a simple analytic expression, which is likely extendable to a more general state-dependent SRBM. Our proofs rely on the generalized Ito formula for a convex function and local time.

1 Introduction

We are concerned with a semi-martingale reflecting Brownian motion (SRBM for short) on the nonnegative half-line in which the drift and variance of its Brownian component discontinuously change at finitely many states. The subintervals of the state space separated by these states are called levels. This reflecting process is denoted by \(Z(\cdot ) \equiv \{Z(t); t \ge 0\}\), and will be called a one-dimensional multi-level SRBM (see Definition 2.1). In particular, if the number of levels is k, then it is called a one-dimensional k-level SRBM. Note that the one-dimensional 1-level SRBM is just the standard SRBM on the half-line.

Let \(Z(\cdot )\) be a one-dimensional k-level SRBM. For \(k=2\), this reflecting process arises in the recent study of Miyazawa (2024) on the heavy-traffic asymptotic analysis of a state-dependent single server queue, called the 2-level GI/G/1 queue. This queueing model was motivated by an energy saving problem for internet servers.

In Miyazawa (2024), it is conjectured that the reflecting process \(Z(\cdot )\) for \(k=2\) is the weak solution of a stochastic integral equation (see (2.1) in Sect. 2) and that, if its stationary distribution exists, this distribution agrees with the limit of the scaled stationary distribution of the 2-level GI/G/1 queue in heavy traffic, which is obtained under some extra conditions in Theorem 3.1 of Miyazawa (2024). While writing this paper, we learned that the weak existence of the solution was shown by Atar et al. (2022) for a more general model than the one studied here, and that its uniqueness was proved in Lemma 4.1 of Atar et al. (2023) under one of the conditions of this paper.

We refer to these results of Atar et al. (2022, 2023) as Lemma 2.1. However, we prove here a slightly different lemma, Lemma 2.2, which is more restrictive for existence but less restrictive for uniqueness. Lemma 2.2 also includes some further results that will be used later. Furthermore, its proof is sample-path based and differs from that of Lemma 2.1. We then show in Lemma 2.3 that \(Z(\cdot )\) is Harris irreducible, and give in Lemma 2.4 a necessary and sufficient condition for it to be positive recurrent. These three lemmas form the basis of our stochastic analysis.

The main results of this paper are Theorem 3.2 and Corollary 3.1 for the k-level SRBM, which derive the stationary distribution of \(Z(\cdot )\) under the stability condition obtained in Lemma 2.4, without any extra condition. However, we first focus on the case \(k=2\) in Theorem 3.1, and then consider general k in Theorem 3.2. This is because the presentation and proof for general k are notationally complicated, while the proof for \(k=2\) carries over to general k with minor modifications. The stationary distribution for \(k=2\) is rather simple: it is composed of two mutually singular measures, one of which is truncated exponential or uniform on the interval \([0,\ell _{1}]\), while the other is exponential on \([\ell _{1},\infty )\), where \(\ell _{1}\) is the right endpoint of the first level, at which the variance of the Brownian component and the drift of \(Z(\cdot )\) discontinuously change. One may easily guess these measures, but it is not easy to compute their weights, by which the stationary distribution is uniquely determined (see (Miyazawa 2024)). We resolve this computation problem using the local time of the semi-martingale \(Z(\cdot )\) at \(\ell _{1}\).

The key tools for the proofs of the lemmas and theorems are the generalized Ito formula for a convex function and local time due to Tanaka (1963). We also use the notion of a weak solution of a stochastic integral equation. These formulas and notions are standard in stochastic analysis nowadays (e.g., see (Chung and Williams 1990; Cohen and Elliott 2015; Harrison 2013; Kallenberg 2001; Karatzas and Shreve 1998)), but we briefly review them in the appendix because they play major roles in our proofs.

This paper consists of five sections. In Sect. 2, we formally introduce a one-dimensional reflecting SRBM with a state-dependent Brownian component, which includes the one-dimensional multi-level SRBM as a special case, and present preliminary results including Lemmas 2.2, 2.3 and 2.4, which are proved in Sect. 4. Theorems 3.1 and 3.2 are presented and proved in Sect. 3. Finally, related problems and a generalization of Theorem 3.2 are discussed in Sect. 5. In the appendix, the definitions of a weak solution of a stochastic integral equation and of the local time of a semi-martingale are briefly discussed in Sects. A.1 and A.2, respectively.

2 Problem and Preliminary Lemmas

Let \(\sigma (x)\) and b(x) be measurable positive and real-valued functions, respectively, of \(x \in \mathbb {R}\), where \(\mathbb {R}\) is the set of all real numbers. We are interested in the solution \(Z(\cdot ) \equiv \{Z(t); t \ge 0\}\) of the following stochastic integral equation (SIE for short):

$$Z(t) = Z(0) + \int _{0}^{t} \sigma (Z(u)) dW(u) + \int _{0}^{t} b(Z(u)) du + Y(t), \qquad t \ge 0, \qquad (2.1)$$

where \(W(\cdot )\) is a standard Brownian motion, and \(Y(\cdot ) \equiv \{Y(t); t \ge 0\}\) is a nondecreasing process satisfying \(\int _{0}^{t} 1(Z(u) > 0) dY(u) = 0\) for \(t \ge 0\). We refer to this \(Y(\cdot )\) as a regulator. The state space of \(Z(\cdot )\) is denoted by \(S \equiv \mathbb {R}_{+}\), where \(\mathbb {R}_{+} = \{x \in \mathbb {R}; x \ge 0\}\).
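For a given free path \(X(\cdot )\) with \(X(0) \ge 0\), the regulator has the explicit form \(Y(t) = \sup _{u \in [0,t]} (-X(u))^{+}\) (the one-dimensional Skorokhod map, also used in Sect. 4.1). The Python sketch below, which assumes a path sampled on a time grid, computes Y and \(Z = X + Y\) and shows that Y increases only while Z is at 0, as the condition \(\int _{0}^{t} 1(Z(u) > 0) dY(u) = 0\) requires. With state-dependent \(\sigma\) and b the free part itself depends on Z, so this map alone does not solve (2.1); it only illustrates the regulator. The function name is hypothetical.

```python
import numpy as np


def skorokhod_reflection(x):
    """One-dimensional Skorokhod map on [0, inf) for a sampled path x with x[0] >= 0.

    Returns (z, y) with y[n] = max_{m <= n} max(-x[m], 0) and z = x + y.
    """
    x = np.asarray(x, dtype=float)
    if x[0] < 0:
        raise ValueError("this sketch assumes X(0) >= 0")
    y = np.maximum.accumulate(np.maximum(-x, 0.0))  # running sup of (-X)^+
    return x + y, y


# Hypothetical free path; Y jumps exactly at the steps where Z is pushed back to 0.
z_demo, y_demo = skorokhod_reflection([0.5, -0.2, 0.1, -0.6, 0.3])
```

Here `y_demo` grows at the second and fourth samples, which are exactly the samples where `z_demo` equals 0.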

As usual, we assume that all continuous-time processes are defined on a stochastic basis \((\Omega ,\mathcal {F}, \mathbb {F}, \mathbb {P})\), and are right-continuous with left limits and \(\mathbb {F}\)-adapted, where \(\mathbb {F} \equiv \{\mathcal {F}_{t}; t \ge 0\}\) is a right-continuous filtration. Note that there are two kinds of solutions, strong and weak, for the SIE (2.1); see Appendix A.1 for their definitions. In this paper, we simply call a weak solution a solution unless stated otherwise.

If the functions \(\sigma (x)\) and b(x) are Lipschitz continuous and their squares are bounded by \(K(1+x^{2})\) for some constant \(K > 0\), then the SIE (2.1) has a unique solution, even for a multidimensional SRBM living on a convex region (see Theorem 4.1 of Tanaka (1979)). However, we are interested in the case where \(\sigma (x)\) and b(x) change discontinuously. In this case, the solution \(Z(\cdot )\) may not exist in general, so some condition is needed. As discussed in Sect. 1, we are particularly interested in the case where they satisfy the following condition. Let \(\overline{\mathbb {R}} = \mathbb {R} \cup \{-\infty ,+\infty \}\).

Condition 2.1

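There exist an integer \(k \ge 2\) and a strictly increasing sequence \(\{\ell _{j} \in \overline{\mathbb {R}}; j = 0,1,\ldots ,k\}\) satisfying \(\ell _{0} = - \infty\), \(\ell _{j} > 0\) for \(j=1,2,\ldots ,k-1\) and \(\ell _{k} = \infty\) such that the functions \(\sigma (x) > 0\) and \(b(x) \in \mathbb {R}\) for \(x \in \mathbb {R}\) are given by

$$\sigma (x) = \sum _{j=1}^{k} \sigma _{j} 1\left( \ell _{j-1} \le x < \ell _{j}\right) , \qquad b(x) = \sum _{j=1}^{k} b_{j} 1\left( \ell _{j-1} \le x < \ell _{j}\right) , \qquad (2.2)$$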

where \(1(\cdot )\) is the indicator function of proposition “\(\cdot\)”, and \(\sigma _{j} > 0\), \(b_{j} \in \mathbb {R}\) for \(j = 1,2,\ldots ,k\) are constants.

Since Z(t) of Eq. (2.1) is nonnegative, \(\sigma (x)\) and b(x) are used only for \(x \ge 0\) in Eq. (2.1). Taking this into account, we partition the state space \(S \equiv \mathbb {R}_{+}\) of \(Z(\cdot )\) by \(\ell _{1}, \ell _{2}, \ldots , \ell _{k-1}\) under Condition 2.1 as follows:

$$S_{1} = [0, \ell _{1}), \qquad S_{j} = [\ell _{j-1}, \ell _{j}), \quad j = 2,3,\ldots ,k. \qquad (2.3)$$

We call these \(S_{j}\)’s levels. Note that \(\sigma (x)\) and b(x) are constants in x on each level, and they may discontinuously change at state \(\ell _{j}\) for \(j=1,2,\ldots , k-1\) under Condition 2.1.
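Under Condition 2.1, evaluating \(\sigma (x)\) and b(x) amounts to finding the level that contains x. A minimal Python sketch, assuming the thresholds are passed as a list `ell` \(= [\ell _{1}, \ldots , \ell _{k-1}]\) and the per-level constants as lists; the helper names `level_index` and `make_coefficients` are hypothetical.

```python
from bisect import bisect_right


def level_index(x, ell):
    """Level j (1-based) of state x for strictly increasing ell = [ell_1, ..., ell_{k-1}].

    Level j is [ell_{j-1}, ell_j) with ell_0 = -inf and ell_k = +inf, as in Condition 2.1;
    on x >= 0 this gives S_1 = [0, ell_1) and S_j = [ell_{j-1}, ell_j).
    """
    return bisect_right(ell, x) + 1


def make_coefficients(ell, sigmas, bs):
    """Piecewise-constant sigma(x) and b(x) built from per-level constants."""
    k = len(ell) + 1
    if len(sigmas) != k or len(bs) != k:
        raise ValueError("need exactly one (sigma_j, b_j) pair per level")
    if any(s <= 0 for s in sigmas):
        raise ValueError("sigma_j must be positive")
    if ell and (ell[0] <= 0 or any(a >= c for a, c in zip(ell, ell[1:]))):
        raise ValueError("ell_1 < ... < ell_{k-1} must be positive and strictly increasing")

    def sigma(x):
        return sigmas[level_index(x, ell) - 1]

    def b(x):
        return bs[level_index(x, ell) - 1]

    return sigma, b


# Hypothetical 3-level example: thresholds 1.0 and 2.5.
sigma, b = make_coefficients([1.0, 2.5], [1.0, 0.5, 2.0], [0.4, 0.0, -1.0])
```

Using `bisect_right` places a threshold \(\ell _{j}\) itself in the level above it, which matches the half-open intervals \([\ell _{j-1}, \ell _{j})\).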

We start with the existence of the solution \(Z(\cdot )\) of Eq. (2.1), which will be implied by the existence of the solution \(X(\cdot ) \equiv \{X(t); t \ge 0\}\) of the following stochastic integral equation in the weak sense (see Remark 4.1).

Taking this into account, we will also consider the condition below, which is sufficient for the existence of the weak solution \((X(\cdot ), W(\cdot ))\).

Condition 2.2

The functions \(\sigma (x)\) and b(x) are measurable functions satisfying

For the unique existence of the weak solution \((X(\cdot ), W(\cdot ))\), Condition 2.2 can be further weakened to Condition 5.1 by Theorem 5.15 of Karatzas and Shreve (1998) (see Sect. 5.2 for details). However, the latter condition is quite complicated, so we adopt the simpler condition (2.5), which is sufficient for our arguments.

Definition 2.1

A solution \(Z(\cdot )\) of Eq. (2.1) under Condition 2.1 is called a one-dimensional multi-level SRBM; in particular, it is called a one-dimensional k-level SRBM if it has k levels, namely, if the total number of partitions in Eq. (2.3) is k. A solution of Eq. (2.1) under Condition 2.2 is called a one-dimensional state-dependent SRBM with bounded drifts.
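To make Definition 2.1 concrete, the following Python sketch generates an approximate sample path of a one-dimensional 2-level SRBM with a projected Euler-Maruyama scheme: an unconstrained Euler step is taken and, if it leaves \([0,\infty )\), it is pushed back to 0, with the accumulated pushes playing the role of the regulator Y. This discretization is our own illustrative choice, not the construction used in this paper, and its convergence for discontinuous \(\sigma\) and b is assumed here rather than proved. The coefficient values (with \(\ell _{1} = 1\)) and all names are hypothetical.

```python
import numpy as np


def simulate_reflected_sde(z0, sigma, b, t_end, n_steps, rng):
    """Projected Euler-Maruyama sketch for Z(t) = Z(0) + int sigma(Z) dW + int b(Z) du + Y(t)."""
    dt = t_end / n_steps
    z = np.empty(n_steps + 1)
    y = np.empty(n_steps + 1)
    z[0], y[0] = z0, 0.0
    for i in range(n_steps):
        dw = rng.normal(0.0, np.sqrt(dt))
        candidate = z[i] + b(z[i]) * dt + sigma(z[i]) * dw
        push = max(0.0, -candidate)  # minimal push keeping the path in [0, inf)
        z[i + 1] = candidate + push  # equals 0.0 whenever push > 0
        y[i + 1] = y[i] + push       # regulator grows only at the boundary
    return z, y


# Hypothetical 2-level coefficients with ell_1 = 1.0 (not taken from the paper).
def sigma_example(x):
    return 1.0 if x < 1.0 else 0.5


def b_example(x):
    return 0.2 if x < 1.0 else -0.5


rng = np.random.default_rng(seed=1)
z_path, y_path = simulate_reflected_sde(0.5, sigma_example, b_example, 10.0, 10_000, rng)
```

Since \(b_{2} = -0.5 < 0\) in this example, Lemma 2.4 indicates that the process is positive recurrent; long simulated paths can then be compared with the stationary distribution derived in Sect. 3.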

Using the weak solution \((X(\cdot ), W(\cdot ))\) of Eq. (2.4), Atar et al. (2022, 2023) prove:

Lemma 2.1

(Lemma 4.3 of Atar et al. (2022) and Lemma 4.1 of Atar et al. (2023)) (i) Under Condition 2.2, the stochastic integral equation (2.1) has a weak solution such that Y(t) is continuous in \(t \ge 0\). (ii) Under Condition 2.1, the solution is weakly unique.

The proof of (i) is easy (see Remark 4.1) while the proof of (ii) is quite technical. Instead of this lemma, we will use the following lemma, in which (i) and (ii) of Lemma 2.1 are proved under more restrictive and less restrictive conditions, respectively.

Lemma 2.2

Under Condition 2.2, if there are constants \(\sigma _{1}, \ell _{1} > 0\) and \(b_{1} \in \mathbb {R}\) such that

then the stochastic integral equation (2.1) has a unique weak solution such that Y(t) is continuous in \(t \ge 0\) and \(Z(\cdot )\) is a strong Markov process.

We prove this lemma in Sect. 4.1; our proof is different from that of Lemma 2.1 by Atar et al. (2022, 2023).

The main interest of this paper is to derive the stationary distribution of \(Z(\cdot )\) for the one-dimensional multi-level SRBM under an appropriate stability condition. Since this reflecting diffusion process satisfies the conditions of Lemma 2.2, \(Z(\cdot )\) is a strong Markov process. Hence, our first task in deriving its stationary distribution is to consider its irreducibility and positive recurrence. To this end, we introduce Harris irreducibility and recurrence following (Meyn and Tweedie 1993). Let \(\mathcal {B}(\mathbb {R}_{+})\) be the Borel field, that is, the minimal \(\sigma\)-algebra on \(\mathbb {R}_{+}\) that contains all open sets of \(\mathbb {R}_{+}\). Then, a real-valued process \(X(\cdot )\) that is right-continuous with left limits is called Harris irreducible if there is a non-trivial \(\sigma\)-finite measure \(\psi\) on \((\mathbb {R}_{+}, \mathcal {B}(\mathbb {R}_{+}))\) such that, for \(B \in \mathcal {B}(\mathbb {R}_{+})\), \(\psi (B) > 0\) implies

while it is called Harris recurrent if Eq. (2.7) can be replaced by

where \(\mathbb {P}_{x}(A) = \mathbb {P}(A|X(0)=x)\) for \(A \in \mathcal {F}\), and \(\mathbb {E}_{x}[H] = \mathbb {E}[H|X(0)=x]\) for a random variable H.

Harris conditions (2.7) and (2.8) are related to hitting times. Define the hitting time of a subset B of the state space S as

where \(\tau _{B} = \infty\) if \(X(t) \not \in B\) for all \(t \ge 0\). We denote \(\tau _{B}\) simply by \(\tau _{a}\) for \(B = \{a\}\). Then, it is known that the Harris recurrence condition (2.8) is equivalent to

See Theorem 1 of Kaspi and Mandelbaum (1994) (see also (Meyn and Tweedie 1993)) for the proof of this equivalence. However, the Harris irreducibility condition (2.7) may not be equivalent to \(\mathbb {P}_{x}[\tau _{B} < \infty ]>0\). In what follows, \(\tau _{B}\) is defined for the process under discussion unless stated otherwise.
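Since Lemma 2.3 and the recurrence criterion are phrased through \(\tau _{B}\) and \(\tau _{a}\), the Python sketch below approximates a hitting time on a path sampled every dt (for instance, a simulated path). This is an illustration under our own assumptions rather than part of the paper: on a grid, the first hit is detected only up to the mesh dt, and for a continuous path started at \(x < a\), \(\tau _{a}\) is read off as the first time the path reaches \([a,\infty )\). The function name is hypothetical.

```python
import numpy as np


def first_hitting_time(path, dt, in_set):
    """Grid approximation of tau_B = inf{t >= 0; X(t) in B} for a path sampled every dt.

    `in_set` maps an array of states to a boolean array (membership in B).
    Returns np.inf when no sampled state lies in B, matching the convention tau_B = inf.
    """
    hits = np.flatnonzero(in_set(np.asarray(path, dtype=float)))
    return float(hits[0] * dt) if hits.size > 0 else np.inf


# For a continuous path started below a, tau_a is the first time the path reaches [a, inf).
path = np.array([0.0, 0.3, 0.8, 1.2, 0.9])
tau_a = first_hitting_time(path, 0.5, lambda v: v >= 1.0)
```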

Using those notions and notations, we present the following basic facts for the one-dimensional state-dependent SRBM \(Z(\cdot )\) with bounded drifts, where \(Z(\cdot )\) is strong Markov by Lemma 2.2.

Lemma 2.3

For the one-dimensional state-dependent SRBM with bounded drifts, if the condition (2.6) of Lemma 2.2 is satisfied, (i) it is Harris irreducible, and (ii) \(\mathbb {E}_{x}[\tau _{a}] < \infty\) for \(0 \le x < a\).

Remark 2.1

(ii) is not surprising because, intuitively, the reflection at the origin and the positive variances push the process upward, while its drifts are bounded.

Lemma 2.4

For the one-dimensional state-dependent SRBM with bounded drifts, if the condition (2.6) of Lemma 2.2 is satisfied and if there are constants \(\ell _{*}, b_{*}\) and \(\sigma _{*}\) such that

then \(Z(\cdot )\) has a stationary distribution if and only if \(b_{*} < 0\). In particular, the one-dimensional k-level SRBM has a stationary distribution if and only if \(b_{k} < 0\).

These lemmas may be intuitively clear, but their proofs may be of independent interest because they are not immediate and show how nicely the Ito formula works. So we prove Lemmas 2.3 and 2.4 in Sects. 4.2 and 4.3, respectively. We are now ready to study the stationary distribution of \(Z(\cdot )\).

3 Stationary Distribution of Multi-level SRBM

We are concerned with the multi-level SRBM. Denote the number of its levels by k. We first introduce basic notation. Let \(\mathcal {N}_{k} = \{1,2,\ldots ,k\}\), and define

In this section, we derive the stationary distribution of the one-dimensional k-level SRBM for arbitrary \(k \ge 2\). We first focus on the case \(k=2\) because it is the simplest case, yet its proof contains all the ideas that will be used for general k.

3.1 Stationary Distribution for \(k=2\)

Throughout Sect. 3.1, we assume that \(k=2\).

Theorem 3.1

(The case for \(k=2\)) The process \(Z(\cdot )\) of the one-dimensional 2-level SRBM has a stationary distribution if and only if \(b_{2} < 0\), equivalently, \(\beta _{2} < 0\). Assume that \(b_{2} < 0\), and let \(\nu\) be the stationary distribution of Z(t). Then \(\nu\) is unique and has a probability density function h, which is given below. (i) If \(b_{1} \not = 0\), then

where \(h_{11}\) and \(h_{2}\) are probability density functions defined as

and \(d_{1j}\) for \(j=1,2\) are positive constants defined by

(ii) If \(b_{1} = 0\), then

where \(h_{2}\) is defined in Eq. (3.2), and

Remark 3.1

(a) Equations (3.5) and (3.6) are obtained from Eqs. (3.2) and (3.3) by letting \(b_{1} \rightarrow 0\).

(b) Assume that \(Z(\cdot )\) is a stationary process, and define the moment generating functions (mgf for short):

$$\begin{aligned}&\varphi (\theta ) = \mathbb {E}\left[ e^{\theta Z(1)}\right] , \\&\varphi _{1}(\theta ) = \mathbb {E}\left[ e^{\theta Z(1)}1\left( 0 \le Z(1) < \ell _{1}\right) \right] , \qquad \varphi _{2}(\theta ) = \mathbb {E}\left[ e^{\theta Z(1)}1\left( Z(1) \ge \ell _{1}\right) \right] . \end{aligned}$$

Here, \(\varphi (\theta )\) and \(\varphi _{2}(\theta )\) are finite for \(\theta \le 0\), and \(\varphi _{1}(\theta )\) is finite for all \(\theta \in \mathbb {R}\). Moreover, since they are Laplace transforms, the measures they represent are uniquely determined by their values for \(\theta \le 0\). So, in what follows, we always assume that \(\theta \le 0\) unless stated otherwise.

For \(i=0,1\), let \(\widehat{h}_{i1}\) and \(\widehat{h}_{2}\) be the moment generating functions of \(h_{i1}\) and \(h_{2}\), respectively, then

where the singular points \(\theta = - \beta _{1}\) and \(\theta = 0\) in Eq. (3.7) are removable and do not affect the determination of \(h_{i1}\), so we adopt the convention that \(\widehat{h}_{i1}(\theta )\) is defined at these \(\theta\) by continuous extension.

Hence, Eq. (3.1) for \(b_{1} \not = 0\) and Eq. (3.4) for \(b_{1} = 0\) are equivalent to

Thus, Theorem 3.1 is proved by showing these equalities.
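The mgf convention in (b) can be checked numerically. Since the displays (3.2), (3.5), (3.7) and (3.8) are not reproduced in this text, the Python sketch below assumes the densities described in Sect. 1 and used in Sect. 3.2: the truncated exponential \(h_{11}(x) = \beta _{1} e^{\beta _{1} x}/(e^{\beta _{1} \ell _{1}} - 1)\) on \([0,\ell _{1})\) with \(\beta _{1} \not = 0\), the uniform \(h_{01}(x) = 1/\ell _{1}\) on \([0,\ell _{1})\), and the exponential \(h_{2}(x) = -\beta _{2} e^{\beta _{2}(x - \ell _{1})}\) on \([\ell _{1},\infty )\) with \(\beta _{2} < 0\). The removable singularities at \(\theta = -\beta _{1}\) and \(\theta = 0\) are handled by continuous extension, and each closed form is compared with a trapezoidal quadrature. Parameter values and function names are hypothetical.

```python
import numpy as np


def _expm1_ratio(u, ell):
    """(e^{u ell} - 1) / u, extended continuously by ell at u = 0."""
    return ell if u == 0.0 else np.expm1(u * ell) / u


def mgf_truncated_exp(theta, beta1, ell1):
    """mgf of beta1 e^{beta1 x} / (e^{beta1 ell1} - 1) on [0, ell1), beta1 != 0."""
    return beta1 / np.expm1(beta1 * ell1) * _expm1_ratio(beta1 + theta, ell1)


def mgf_uniform(theta, ell1):
    """mgf of the uniform density 1/ell1 on [0, ell1)."""
    return _expm1_ratio(theta, ell1) / ell1


def mgf_upper_exp(theta, beta2, ell1):
    """mgf of -beta2 e^{beta2 (x - ell1)} on [ell1, inf), beta2 < 0, theta <= 0."""
    return beta2 * np.exp(theta * ell1) / (beta2 + theta)


def trapezoid(fx, x):
    return float(np.sum((fx[1:] + fx[:-1]) * np.diff(x)) / 2.0)


beta1, beta2, ell1, theta = 0.8, -0.7, 1.5, -0.3
x1 = np.linspace(0.0, ell1, 200_001)
num_11 = trapezoid(np.exp(theta * x1) * beta1 * np.exp(beta1 * x1) / np.expm1(beta1 * ell1), x1)
x2 = np.linspace(ell1, ell1 + 60.0, 600_001)  # tail beyond this is negligible here
num_2 = trapezoid(np.exp(theta * x2) * (-beta2) * np.exp(beta2 * (x2 - ell1)), x2)
```

The continuous extension at \(\theta = -\beta _{1}\) gives \(\beta _{1} \ell _{1}/(e^{\beta _{1} \ell _{1}} - 1)\), and at \(\theta = 0\) the uniform mgf equals 1, so the convention changes no value of the transforms.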

Remark 3.2

Miyazawa (2024) conjectures that the diffusion-scaled process limit of the queue length of the 2-level GI/G/1 queue in heavy traffic is the solution of the stochastic integral equation (5.2) in Miyazawa (2024). This stochastic equation corresponds to Eq. (2.1), but \(\ell _{1}\), \(b_{i}\) and \(\sigma _{i}\) of the present paper need to be replaced by \(\ell _{0}\), \(-b_{i}\) and \(\sqrt{c_{i}} \sigma _{i}\) for \(i=1,2\), respectively. Under these replacements, \(\beta _{i}\) also needs to be replaced by \(- 2b_{i}/(c_{i}\sigma _{i}^{2})\). Then, it follows from Eqs. (3.3), (3.6), (3.10) and (3.11) that, under the setting of Miyazawa (2024), for \(b_{1} \not = 0\),

and, for \(b_{1} = 0\),

Hence, the limiting distributions in (ii) of Theorem 3.1 of Miyazawa (2024) are identical to the stationary distributions in Theorem 3.1 here. Note that the limiting distributions in Miyazawa (2024) are obtained under some extra conditions, which are not needed for Theorem 3.1.

3.2 Proof of Theorem 3.1

By Remark 3.1, to prove Theorem 3.1 it is sufficient to show Eqs. (3.9), (3.10) and (3.11). We do this in three steps.

3.2.1 1st Step of the Proof

In this subsection, we derive two stochastic equations from Eq. (2.1). For this, we use the generalized Ito formula for a continuous semi-martingale \(X(\cdot )\) with finite quadratic variation \([X]_{t}\) for all \(t \ge 0\). For a convex test function f, this Ito formula is given by

where \(L_{x}(t)\) is the local time of \(X(\cdot )\) at x, which is right-continuous in \(x \in \mathbb {R}\), and \(\mu _{f}\) is the measure on \((\mathbb {R},\mathcal {B}(\mathbb {R}))\) defined by

where \(f'(x-)\) is the left derivative of f at x. See Appendix A.2 for the definition of local time and more about its connection to the generalized Ito formula (3.12).

Furthermore, if f(x) is twice differentiable, then Eq. (3.12) can be written as

which is the well-known Ito formula.
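As a numerical illustration of the local time in (3.12), and not part of the paper's argument, the Python sketch below applies the simplest case of the generalized Ito formula, Tanaka's formula for the convex function \(f(x) = |x - a|\), to a simulated standard Brownian path, and compares it with an occupation-time estimate. We assume the symmetric normalization \(L_{a}(t) = \lim _{\varepsilon \downarrow 0} \frac{1}{2\varepsilon } \int _{0}^{t} 1(|X(u) - a| < \varepsilon ) d[X]_{u}\); the right-continuous local time characterized by (A.1) may differ from it by a constant factor, since conventions with and without a factor 1/2 are both common. Each increment of the discrete Tanaka estimate is nonnegative by convexity of \(|\cdot |\). Function names and parameters are hypothetical.

```python
import numpy as np


def tanaka_local_time(path, a):
    """Discrete Tanaka estimate of L_a at each grid time:

    |X(t_n) - a| - |X(0) - a| - sum_{i<n} sgn(X(t_i) - a) (X(t_{i+1}) - X(t_i)).
    """
    increments = (np.abs(path[1:] - a) - np.abs(path[:-1] - a)
                  - np.sign(path[:-1] - a) * np.diff(path))
    return np.concatenate(([0.0], np.cumsum(increments)))


def occupation_local_time(path, dt, a, eps):
    """(1 / (2 eps)) * time spent in (a - eps, a + eps); d[X]_t = dt for standard BM."""
    near = np.abs(path[:-1] - a) < eps
    return np.concatenate(([0.0], np.cumsum(near) * dt / (2.0 * eps)))


rng = np.random.default_rng(seed=7)
dt, n = 1e-5, 100_000
bm = np.concatenate(([0.0], np.cumsum(rng.normal(0.0, np.sqrt(dt), n))))
L_tanaka = tanaka_local_time(bm, a=0.0)
L_occ = occupation_local_time(bm, dt, a=0.0, eps=0.01)
```

For small dt and \(\varepsilon\), `L_tanaka[-1]` and `L_occ[-1]` should be roughly comparable; the Tanaka form is the discrete analogue of using the convex function \(|x-a|\), whose measure \(\mu _{f}\) is a point mass of size 2 at a.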

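In our application of the generalized Ito formula, we first take the following convex function f with parameter \(\theta \le 0\) as a test function:

$$f(x) = e^{\theta (x \wedge \ell _{1})} = \begin{cases} e^{\theta x}, & x < \ell _{1}, \\ e^{\theta \ell _{1}}, & x \ge \ell _{1}. \end{cases} \qquad (3.15)$$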

Since \(f'(\ell _{1}+) = 0\) and \(f'(\ell _{1}-) = \theta e^{\theta \ell _{1}}\), it follows from Eq. (3.13) that

On the other hand, \(f''(x) = \theta ^{2} e^{\theta x}\) for \(x < \ell _{1}\). Hence,

Then, applying the local time characterization (A.1) to this formula, we have

We next compute the quadratic variation \(\big [ Z \big ]_{t}\) of \(Z(\cdot )\). Define \(M(\cdot ) \equiv \{M(t); t \ge 0\}\) by

then \(M(\cdot )\) is a martingale. Denote the quadratic variations of \(Z(\cdot )\) and \(M(\cdot )\), respectively, by \(\big [ Z \big ]_{t}\) and \(\big [ M \big ]_{t}\). Since Z(t) and Y(t) are continuous in t, it follows from Eq. (2.1) that

Hence, from \(f'(x) = \theta e^{\theta x} 1(x < \ell _{1})\) for \(x \not = \ell _{1}\), Eqs. (3.16) and (3.17), the generalized Ito formula (3.12) becomes

We next apply the Ito formula with the test function \(f(x) = e^{\theta x}\) to Eq. (2.1). In this case, we use Ito formula (3.14) because f(x) is twice continuously differentiable. Then, we have, for \(\theta \le 0\),

3.2.2 2nd Step of the Proof

The first statement of Theorem 3.1 is immediate from Lemma 2.4. Hence, under \(b_{2} < 0\), we can assume that \(Z(\cdot )\) is a stationary process by taking its stationary distribution for the distribution of Z(0). In what follows, this is always assumed.

Recall the moment generating functions \(\varphi , \varphi _{1}\) and \(\varphi _{2}\), which are defined in Remark 3.1. We first consider the stochastic integral Eq. (3.19) to compute \(\varphi _{1}\). Since \(\mathbb {E}[L_{\ell _{1}}(1)]\) is finite by Lemma A.1, taking the expectation of Eq. (3.19) for \(t = 1\) and \(\theta \le 0\) yields

because \(\beta _{1} \sigma _{1}^{2} = 2 b_{1}\). Note that this equation implies that \(\mathbb {E}[Y(1)]\) is also finite.

Using Eq. (3.21), we consider \(\varphi _{1}(\theta )\) separately for \(b_{1} \not = 0\) and \(b_{1} = 0\). First, assume that \(b_{1} \not = 0\). Then, from Eq. (3.21) and \(\beta _{1} \not = 0\), we have

This equation can be written as

Observe that the first term on the right-hand side of Eq. (3.23) is proportional to the moment generating function (mgf) of a signed measure on \([0,\infty )\) with an exponential density, while its second term is the mgf of a measure on \([0,\ell _{1}]\); however, the left-hand side of Eq. (3.23) is the mgf of a finite measure on \([0,\ell _{1})\). Hence, we must have

and therefore Eq. (3.23) yields

where \(\widehat{h}_{11}(\theta )\) is defined in Eq. (3.7), and is also defined at \(\theta = - \beta _{1}\) by our convention.

We next assume that \(b_{1} = 0\). In this case, \(\beta _{1} = 0\), and it follows from Eq. (3.22) that

Since \(\frac{e^{\theta \ell _{1}} - 1}{\ell _{1} \theta }\) is the mgf of the uniform distribution on \([0,\ell _{1})\), by the same reason as in the case for \(b_{1} \not = 0\), we must have

Note that this equation is identical to Eq. (3.24) with \(b_{1} = 0\). Furthermore, \(\lim _{\theta \uparrow 0} \frac{e^{\theta \ell _{1}} - 1}{\ell _{1} \theta } = 1\) and \(\lim _{\theta \uparrow 0} \varphi _{1}(\theta ) = \varphi _{1}(0)\). Hence, Eq. (3.26) implies that

by our convention for \(\widehat{h}_{01}(0)\) similar to \(\widehat{h}_{11}(-\beta _{1})\). Thus, we have the following lemma.

Lemma 3.1

The mgf \(\varphi _{1}\) is obtained as

We next consider the stochastic integral Eq. (3.20) to derive \(\varphi _{2}(\theta )\). Note that \(\varphi _{1}(\theta )\) and \(\varphi _{2}(\theta )\) are finite for \(\theta \le 0\). Hence, taking the expectations of both sides of Eq. (3.20) for \(t=1\) and \(\theta \le 0\) yields

Substituting \(\frac{1}{2} \sigma _{1}^{2} \left( \beta _{1} + \theta \right)\) of Eq. (3.21) and \(\mathbb {E}[Y(1)]\) of Eq. (3.24) into this equation, we have

The following lemma is immediate from this equation since \(\beta _{2} < 0\).

Lemma 3.2

The mgf \(\varphi _{2}\) is obtained as

where \(\widehat{h}_{2}\) is defined by Eq. (3.8).

3.2.3 3rd Step of the Proof

We now prove (3.9), (3.10) and (3.11). Since \(\widehat{h}_{11}(0) = \widehat{h}_{01}(0) = \widehat{h}_{2}(0) = 1\), Eq. (3.9) is immediate from Lemmas 3.1 and 3.2. To prove (3.10), assume that \(b_{1} \not = 0\). In this case, from Eqs. (3.25) and (3.31), we have

Taking the ratios of both sides, we have

Since \(\varphi _{1}(0) + \varphi _{2}(0) = 1\), this and \(\beta _{i} \sigma _{i}^{2} = 2 b_{i}\) yield

This proves (3.10). We next assume that \(b_{1} = 0\), then it follows from Eqs. (3.27) and (3.31) that

Similarly to the case for \(b_{1} \not = 0\), this yields

This proves (3.11). Thus, the proof of Theorem 3.1 is completed.
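To see how the two weights behave, the Python sketch below computes the masses of \([0,\ell _{1})\) and \([\ell _{1},\infty )\) for \(k=2\). Since the displays (3.3), (3.6), (3.10) and (3.11) are not reproduced in this text, it assumes that the stationary density is proportional to \((2/\sigma ^{2}(x)) \exp (\int _{0}^{x} \beta (u) du)\) with \(\beta (u) = 2b(u)/\sigma ^{2}(u)\), which is consistent with the constant C in the proof of Corollary 3.1; integrating this over the two levels gives the masses below. The \(b_{1} = 0\) branch is the limit \(b_{1} \rightarrow 0\), as in (a) of Remark 3.1. The function name and parameter values are hypothetical.

```python
import math


def two_level_weights(ell1, sigma1, b1, sigma2, b2):
    """Masses (d_1, d_2) of [0, ell1) and [ell1, inf) under the density proportional to
    (2 / sigma(x)^2) * exp(int_0^x 2 b(u) / sigma(u)^2 du)."""
    if b2 >= 0:
        raise ValueError("no stationary distribution unless b2 < 0 (Lemma 2.4)")
    beta1 = 2.0 * b1 / sigma1 ** 2
    if b1 == 0.0:
        mass1 = 2.0 * ell1 / sigma1 ** 2         # uniform part, limit b1 -> 0
    else:
        mass1 = math.expm1(beta1 * ell1) / b1    # truncated-exponential part
    mass2 = -math.exp(beta1 * ell1) / b2         # exponential tail, uses beta2 < 0
    total = mass1 + mass2
    return mass1 / total, mass2 / total


d1, d2 = two_level_weights(ell1=1.0, sigma1=1.0, b1=0.5, sigma2=2.0, b2=-1.0)
d1_zero, _ = two_level_weights(1.0, 1.0, 0.0, 2.0, -1.0)
d1_small, _ = two_level_weights(1.0, 1.0, 1e-9, 2.0, -1.0)
```

Under this assumption, the ratio of the weights is \(d_{1}/d_{2} = -b_{2}(e^{\beta _{1}\ell _{1}} - 1)/(b_{1} e^{\beta _{1}\ell _{1}})\), and `d1_small` approaches `d1_zero` as \(b_{1} \rightarrow 0\).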

3.3 Stationary Distribution for General k

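We now derive the stationary distribution of the one-dimensional k-level SRBM for a general integer \(k \ge 2\). Recall the definition of \(\beta _{j}\), and define \(\eta _{j}\) as

$$\eta _{0} = 1, \qquad \eta _{j} = \prod _{i=1}^{j} e^{\beta _{i}\left( \ell _{i} - \ell _{i-1}^{+}\right) }, \quad j = 1,2,\ldots ,k,$$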

where \(x^{+} = 0 \vee x \equiv \max (0,x)\) for \(x \in \mathbb {R}\). Also recall that the state space S is partitioned into the levels \(S_{j}\) defined in Eq. (2.3) for \(j \in \mathcal {N}_{k}\).

Theorem 3.2

(The case for general \(k \ge 2\)) The process \(Z(\cdot )\) of the one-dimensional k-level SRBM has a stationary distribution if and only if \(b_{k} < 0\), equivalently, \(\beta _{k} < 0\). Let \(J = \{i \in \mathcal {N}_{k}; b_{i} = 0\}\), assume that \(b_{k} < 0\), and denote the stationary distribution of Z(t) by \(\nu\). Then \(\nu\) is unique and has a probability density function \(h^{J}\), which is given below.

(i) If \(J = \emptyset\), that is, \(b_{j} \not = 0\) for all \(j \in \mathcal {N}_{k}\), then

where \(h_{j}\) for \(j \in \mathcal {N}_{k}\) are probability density functions defined as

and \(d_{j}\) for \(j \in \mathcal {N}_{k}\) are positive constants defined as

(ii) If \(J \not = \emptyset\), that is, \(b_{i} = 0\) for some \(i \in \mathcal {N}_{k}\), then

where \(\displaystyle h^{J}_{j}(x) = \lim _{b_{i} \rightarrow 0, i \in J} h_{j}(x)\) and \(\displaystyle d^{J}_{j} = \lim _{b_{i} \rightarrow 0, i \in J} d_{j}\) for \(j \in \mathcal {N}_{k}\).
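The weights of Theorem 3.2 can be computed level by level. Since the display (3.36) is not reproduced in this text, the Python sketch below assumes the level masses implied by the constant C in the proof of Corollary 3.1: level \(j < k\) contributes \((\eta _{j} - \eta _{j-1})/b_{j}\), level k contributes \(-\eta _{k-1}/b_{k}\), and the weights are these masses divided by their sum C. When \(b_{j} = 0\), the limit \(\eta _{j-1} \cdot 2(\ell _{j} - \ell _{j-1}^{+})/\sigma _{j}^{2}\) is used, in the spirit of (ii). The function name and parameter values are hypothetical.

```python
import math


def level_weights(ell, sigmas, bs):
    """Weights d_1, ..., d_k of the levels S_j, assuming level masses (eta_j - eta_{j-1}) / b_j
    for j < k (limit when b_j = 0) and -eta_{k-1} / b_k for the unbounded last level."""
    k = len(bs)
    if len(sigmas) != k or len(ell) != k - 1:
        raise ValueError("need k constants per coefficient and k - 1 thresholds")
    if bs[-1] >= 0:
        raise ValueError("Lemma 2.4: a stationary distribution requires b_k < 0")
    left = [0.0] + list(ell)          # ell_{j-1}^+ for j = 1, ..., k
    masses, eta_prev = [], 1.0        # eta_0 = 1
    for j in range(k - 1):
        width = ell[j] - left[j]
        beta = 2.0 * bs[j] / sigmas[j] ** 2
        if bs[j] == 0.0:
            masses.append(eta_prev * 2.0 * width / sigmas[j] ** 2)
        else:
            masses.append(eta_prev * math.expm1(beta * width) / bs[j])  # (eta_j - eta_{j-1}) / b_j
        eta_prev *= math.exp(beta * width)  # now eta_{j+1} in the paper's 1-based indexing
    masses.append(-eta_prev / bs[-1])       # last level; eta_k = 0 because beta_k < 0
    total = sum(masses)                     # the constant C in the proof of Corollary 3.1
    return [m / total for m in masses]


weights = level_weights([1.0, 2.5], [1.0, 0.5, 2.0], [0.4, 0.0, -1.0])
```

Each mass is positive: \(\eta _{j} > \eta _{j-1}\) exactly when \(b_{j} > 0\), so \((\eta _{j} - \eta _{j-1})/b_{j} > 0\) for every \(b_{j} \not = 0\).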

Before proving this theorem in Sect. 3.4, we note that the density \(h^{\emptyset }\) has a simple expression, which is further discussed in Sect. 5.2.

Corollary 3.1

Under the assumptions of Theorem 3.2, the density function \(h^{\emptyset }\) of the stationary distribution of the k-level SRBM when \(b(x) \not = 0\) for all \(x \ge 0\) is given by

where

Proof

Let \(C = \sum _{i=1}^{k-1} b_{i}^{-1}\left( \eta _{i} - \eta _{i-1}\right) - b_{k}^{-1} \eta _{k-1}\), and write \(\eta _{j}\) for \(j \in \mathcal {N}_{k-1}\) as

Then, from (i) of Theorem 3.2, we have, for \(x \in [\ell _{j-1}^{+}, \ell _{j})\) with \(j \le k-1\),

because \(\beta _{j}/b_{j} = 2/\sigma _{j}^{2} = 2/\sigma ^{2}(x)\) for \(x \in \left[ \ell _{j-1}, \ell _{j}\right)\). Similarly, for \(x \ge \ell _{k-1}\),

Hence, putting \(C_{k} = C/2\), we have Eq. (3.38). \(\square\)
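The density of Corollary 3.1 can also be evaluated directly. Since display (3.38) is not reproduced in this text, the Python sketch below assumes the form \(h^{\emptyset }(x) = \frac{2}{C \sigma ^{2}(x)} \exp (\int _{0}^{x} \beta (u) du)\), which we read off from the proof above (\(\beta _{j}/b_{j} = 2/\sigma _{j}^{2}\) and \(C_{k} = C/2\)); it also accepts \(b_{j} = 0\) for \(j < k\), in line with (ii) of Theorem 3.2. The total mass is checked with a midpoint rule on each level separately, so that no quadrature node falls on a discontinuity at \(\ell _{j}\). Names and parameter values are hypothetical.

```python
import math

import numpy as np


def stationary_density(ell, sigmas, bs):
    """Return (density, C) with density(x) = 2 / (C sigma(x)^2) * exp(int_0^x beta(u) du)."""
    k = len(bs)
    if len(sigmas) != k or len(ell) != k - 1:
        raise ValueError("need k constants per coefficient and k - 1 thresholds")
    if bs[-1] >= 0:
        raise ValueError("Lemma 2.4: a stationary distribution requires b_k < 0")
    sig = np.asarray(sigmas, dtype=float)
    beta = 2.0 * np.asarray(bs, dtype=float) / sig ** 2
    ell_arr = np.asarray(ell, dtype=float)
    lower = np.concatenate(([0.0], ell_arr))               # ell_{j-1}^+ for each level
    widths = ell_arr - lower[:-1]                          # widths of the bounded levels
    log_eta = np.concatenate(([0.0], np.cumsum(beta[:-1] * widths)))  # log eta_0 .. eta_{k-1}
    C = 0.0
    for j in range(k - 1):
        eta_prev = math.exp(log_eta[j])
        if bs[j] == 0.0:
            C += eta_prev * 2.0 * widths[j] / sigmas[j] ** 2
        else:
            C += eta_prev * math.expm1(beta[j] * widths[j]) / bs[j]
    C += -math.exp(log_eta[k - 1]) / bs[-1]

    def density(x):
        x = np.asarray(x, dtype=float)
        j = np.searchsorted(ell_arr, x, side="right")      # 0-based level index
        return 2.0 / (C * sig[j] ** 2) * np.exp(log_eta[j] + beta[j] * (x - lower[j]))

    return density, C


def midpoint_mass(f, lo, hi, n=200_000):
    h = (hi - lo) / n
    return float(np.sum(f(lo + (np.arange(n) + 0.5) * h)) * h)


density, C = stationary_density([1.0, 2.5], [1.0, 0.5, 2.0], [0.4, 0.0, -1.0])
total_mass = (midpoint_mass(density, 0.0, 1.0) + midpoint_mass(density, 1.0, 2.5)
              + midpoint_mass(density, 2.5, 2.5 + 80.0))  # tail beyond 82.5 is negligible
```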

Remark 3.3

The constants \(d_{j}\) defined by Eq. (3.36) must be positive, which is easily checked. Nevertheless, it is interesting that their positivity is also visible through Eqs. (3.40) and (3.41) of this corollary.

3.4 Proof of Theorem 3.2

Similar to the proof of Theorem 3.1, the first statement is immediate from Lemma 2.4, and we can assume that \(Z(\cdot )\) is a stationary process since \(b_{k} < 0\). We also always assume that \(\theta \le 0\). Define moment generating functions (mgf):

which are obviously finite because \(\theta \le 0\). Then, the mgf \(\varphi (\theta )\) of Z(0) is expressed as

and \(d_{j} = \varphi _{j}(0)\) for \(j \in \mathcal {N}_{k}\).

We first prove (i). In this case, let \(\widehat{h}_{j}\) be the mgf of \(h_{j}\) for \(j \in \mathcal {N}_{k}\), then

Hence, Eq. (3.34) is obtained if we show that, for \(j \in \mathcal {N}_{k}\),

To prove (3.43) and (3.44), we use the following convex function \(f_{j}\) with parameter \(\theta \le 0\) as a test function for the generalized Ito formula similar to Eq. (3.15).

Since \(f_{j}'\left( \ell _{j}-\right) = \theta e^{\theta \ell _{j}}\) and \(f_{j}'\left( \ell _{j}+\right) = 0\), it follows from Eq. (3.13) that

and \(f_{j}''(x) = \theta ^{2} e^{\theta x}\) for \(x < \ell _{j}\). Hence, similarly to Eq. (3.19), the generalized Ito formula (3.12) for \(f = f_{j}\) becomes

Similarly to the proof of Theorem 3.1, we next apply the Ito formula with the test function \(f(x) = e^{\theta x}\) to Eq. (2.1), which gives Eq. (3.20) for b(x) and \(\sigma (x)\) defined by Eq. (2.2). From Eqs. (3.46) and (3.20), we will compute the stationary distribution of Z(t).

We first consider Eq. (3.46) for \(j=1\). In this case, Eq. (3.46) becomes

Then, by the same arguments as in the proof of Theorem 3.1, we have Eqs. (3.21) and (3.24), which imply

Hence, we have

Thus, Eq. (3.43) is proved for \(j=1\). We prove (3.44) after (3.43) is proved for all \(j \in \mathcal {N}_{k}\).

We next prove (3.43) for \(j \in \{2,3,\ldots ,k-1\}\). In this case, we use \(f_{j}(Z(1))\) of Eq. (3.46). Take the difference \(f_{j}(Z(1)) - f_{j-1}(Z(1))\) for each fixed j, and take the expectation under which \(Z(\cdot )\) is stationary; then we have

because \(\beta _{j} = 2b_{j}/\sigma _{j}^{2}\). This yields

Since \(\varphi _{j}\) is the mgf of a measure on \([\ell _{j-1}, \ell _{j})\), we must have

Hence, Eq. (3.50) becomes, for \(j \in \{2,3,\ldots ,k-1\}\),

Hence, we have (3.43) for \(j=2,3,\ldots ,k-1\).

We finally prove (3.43) for \(j=k\). Similarly to the case for \(k=2\) in the proof of Theorem 3.1, it follows from Eq. (3.20) that

Similarly, from Eq. (3.46) for \(j=k-1\), we have

Taking the difference of Eqs. (3.54) and (3.55), we have

which yields

Hence, we have (3.43) for \(j=k\). Namely,

It remains to prove (3.44) for \(j \in \mathcal {N}_{k}\). For this, we note that Eq. (3.24) is still valid, which is

Hence, recalling that \(\eta _{j} = \prod _{i=1}^{j} e^{\beta _{i}\left( \ell _{i} - \ell _{i-1}^{+}\right) }\), Eq. (3.51) yields

From Eqs. (3.53), (3.56), (3.57) and the fact that \(\left( e^{\beta _{j}\left( \ell _{j} - \ell _{j-1}^{+}\right) } - 1\right) \eta _{j-1} = \eta _{j} - \eta _{j-1}\), we have

Since \(\sum _{j=1}^{k} \varphi _{j}(0) = 1\), it follows from Eq. (3.58) that

because \(\eta _{k} = 0\), which holds since \(\beta _{k} < 0\) and \(\ell _{k} = \infty\). Substituting this into Eq. (3.58) and using \(\sigma _{i}^{2} \beta _{i} = 2 b_{i}\), we obtain Eq. (3.44) for \(j \in \mathcal {N}_{k}\) because \(d_{j}\) is defined by Eq. (3.36).

(ii) follows for \(k=2\) from (i) and (a) of Remark 3.1. It is not hard to see that the limiting argument in (a) is also valid for each \(b_{j}\) with \(j \in \mathcal {N}_{k}\). Hence, (ii) can also be proved for general \(k \ge 2\) from (i).

4 Proofs of Preliminary Lemmas

4.1 Proof of Lemma 2.2

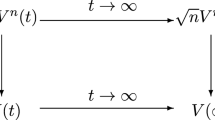

Recall that Lemma 2.2 assumes the condition (2.6) and the conditions of the one-dimensional state-dependent SRBM with bounded drifts. Since \(\ell _{1} > 0\), we can choose constants c, d such that \(0< c< d < \ell _{1}\). Using these constants, we construct a weak solution \((Z(\cdot ),W(\cdot ))\) of Eq. (2.1). The basic idea is to construct the sample path of \(Z(\cdot )\) separately on disjoint time intervals. The first interval lasts, if \(Z(0) < d\), until \(Z(\cdot )\) hits d, and, if \(Z(0) \ge d\), until it hits c. Each subsequent interval is either an up-crossing period, in which \(Z(\cdot )\) starts at or below c and runs until it hits \(d > c\), or a down-crossing period, in which \(Z(\cdot )\) starts at or above d and runs until it hits \(c < d\). Namely, except for the first interval, an up-crossing period always starts at c, and a down-crossing period always starts at d (see Fig. 1). In this construction, we also construct the filtration to which \((Z(\cdot ),W(\cdot ))\) is adapted.

Define \(X_{1}(\cdot ) \equiv \{X_{1}(t); t \ge 0\}\) as

and let \(X_{2}(\cdot ) \equiv \{X_{2}(t); t \ge 0\}\) be the solution of the following stochastic integral equation:

Note that the SIE (4.14) is stochastically identical to the SIE (4.2). Hence, as discussed below (4.14), the SIE (4.2) has a unique weak solution under Condition 2.2. Thus, the solution \(X_{2}(\cdot )\) weakly exists because the assumptions of Lemma 2.2 imply Condition 2.2. For this weak solution, we use the same notation \(X_{2}(\cdot )\), \(W_{2}(\cdot )\) and stochastic basis \((\Omega ,\mathcal {F},\mathbb {F},\mathbb {P})\) for convenience, where \(\mathbb {F} = \{\mathcal {F}_{t}; t \ge 0\}\). Without loss of generality, we enlarge this stochastic basis so that it accommodates \(X_{1}(\cdot )\) and countably many independent copies of \(W_{i}(\cdot )\) and \(X_{i}(\cdot )\) for \(i=1,2\), which are denoted by \(W_{n,i}(\cdot ) \equiv \{W_{n,i}(t); t \ge 0\}\) and \(X_{n,i}(\cdot ) \equiv \{X_{n,i}(t); t \ge 0\}\) for \(n=1,2,\ldots\).

We first construct the weak solution \(Z(\cdot )\) of Eq. (2.1) when \(Z(0) = x < d\), using \(W_{n,i}(\cdot )\) and \(X_{n,i}(\cdot )\). For this construction, we introduce up- and down-crossing times for a given real-valued semi-martingale \(V(\cdot ) \equiv \{V(t); t \ge 0\}\). Denote the n-th up-crossing time at d from below by \(\tau ^{(+)}_{d,n}(V)\), and denote the n-th down-crossing time at \(c \; (< d)\) from above by \(\tau ^{(-)}_{c,n}(V)\). Namely, for \(n \ge 1\) and \(0< c< d < \ell _{1}\),

where \(\tau ^{(-)}_{c,0}(V) = 0\). Note that \(\tau ^{(+)}_{d,n}(Z)\) and \(\tau ^{(-)}_{c,n}(Z)\) may be infinite with positive probability. In this case, no further splitting is needed, and this causes no problem in constructing the sample path of \(Z(\cdot )\) because that sample path is then already defined for all \(t \ge 0\). Once the weak solution is obtained, we will see from Lemma 2.3 that \(\mathbb {P}_{y}\left[ \tau ^{(+)}_{d,n}(Z) < \infty \right] = 1\) for \(y \in [0,d)\), whereas \(\tau ^{(-)}_{c,n}(Z)\) may be infinite with positive probability.
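The alternation of up- and down-crossing times can be made concrete on a sampled path. Since the displayed definitions are not reproduced here, the sketch below assumes they take the usual form \(\tau ^{(+)}_{d,n}(V) = \inf \{t \ge \tau ^{(-)}_{c,n-1}(V); V(t) \ge d\}\) and \(\tau ^{(-)}_{c,n}(V) = \inf \{t \ge \tau ^{(+)}_{d,n}(V); V(t) \le c\}\). The helper name `crossing_times` is hypothetical, and on a time grid the first grid point at or beyond the level stands in for the exact crossing time.

```python
def crossing_times(times, path, c, d):
    """Alternating up-crossing times at d and down-crossing times at c.

    Hypothetical helper: on a sampled path, ups[n-1] approximates
    tau^{(+)}_{d,n}(V) and downs[n-1] approximates tau^{(-)}_{c,n}(V).
    A crossing that never occurs on the grid is simply absent, which
    mirrors the case of an infinite crossing time in the text.
    """
    if not 0 < c < d:
        raise ValueError("need 0 < c < d")
    ups, downs = [], []
    waiting_for_d = True  # tau^{(-)}_{c,0} = 0, so we first wait for a hit of d
    for t, v in zip(times, path):
        if waiting_for_d and v >= d:
            ups.append(t)          # n-th up-crossing of d from below
            waiting_for_d = False
        elif not waiting_for_d and v <= c:
            downs.append(t)        # n-th down-crossing of c from above
            waiting_for_d = True
    return ups, downs


# A toy path with c = 1 and d = 2: up at t = 2, down at t = 4, up again at t = 5.
print(crossing_times([0, 1, 2, 3, 4, 5, 6], [0.5, 1.2, 2.1, 1.5, 0.9, 2.5, 2.0], 1, 2))
# ([2, 5], [4])
```

For a path that starts at or above d, the first recorded up-crossing time is 0, so the first interval is a down-crossing period, as in the case \(x \ge d\) treated below.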

We now inductively construct \(Z_{n}(\cdot )\equiv \{Z_{n}(t); t \ge 0\}\) for \(n=1,2,\ldots \), where the construction below is stopped as soon as \(\tau ^{(-)}_{c,n}(Z_{n}) = \infty \). For \(n=1\), we denote by \(X_{11}(\cdot ) \equiv \{X_{11}(t); t \ge 0\}\) the independent copy of \(X_{1}(\cdot )\) with \(X_{1}(0)=x < d\), and define \(Z_{11}(t)\) as

then it is well known that \(Z_{11}(\cdot )\) is the unique solution of the stochastic integral equation:

where \(Y_{11}(t)\) is nondecreasing and \(\int _{0}^{t} 1(Z_{11}(u) > 0) dY_{11}(u) = 0\) for \(t \ge 0\). Furthermore, for \(X_{11}(0) = Z_{11}(0)\), \(Y_{11}(t) = \sup _{u \in [0,t]} (-X_{11}(u))^{+}\) (e.g., see (Kruk et al. 2007)). Since \(Z_{11}(0) = X_{11}(0) = x < d\) and \(X_{11}(t) \le Z_{11}(t) \le d < \ell _{1}\) for \(t \in [0,\tau ^{(+)}_{d,1}(Z_{11})]\), Eq. (4.4) can be written as

We next denote the independent copy of \(X_{2}(\cdot )\) with \(X_{2}(0)=d\) by \(X_{12}(\cdot ) \equiv \{X_{12}(t); t \ge 0\}\), and define

then we have, for \(t \in \left[ \tau ^{(+)}_{d,1}(Z_{11}), \tau ^{(-)}_{c,1}(Z_{12})\right) \),

where we recall that \(X_{12}(0) = d\). Define

then \(Z_{11}(t)\) is stochastically identical with \(Z_{12}(t)\) for \(t \in \left[ \tau ^{(+)}_{d,1}(Z_{11}), \tau ^{(+)}_{\ell _{1},1}(Z_{11}) \wedge \tau ^{(+)}_{\ell _{1},1}(Z_{12})\right) \). Hence, it follows from Eqs. (4.5) and (4.7) that, for \(t \in \left[ 0, \tau ^{(-)}_{c,1}(Z_{1})\right) \),

where \(Y_{1}(t) = Y_{11}\left( \tau ^{(+)}_{d,1}(Z_{11}) \wedge t\right) \) and

We repeat the same procedure to inductively define \(X_{n,i}(t)\) with \(X_{n,i}(0) = c \, 1(i=1) + d \, 1(i=2)\) and \(Z_{n,i}(t)\) for \(i=1,2\) together with \(\tau ^{(+)}_{d,n}(Z_{n1})\) and \(\tau ^{(-)}_{c,n}(Z_{n2})\) for \(n \ge 2\) by

as long as \(\tau ^{(-)}_{c,n-1}(Z_{n-1}) < \infty \), and define

then we have, for \(t \in \left[ 0, \tau ^{(-)}_{c,n}(Z_{n2})\right) \),

where

From Eq. (4.10), we can see that \(Z_{n}(\cdot ) \equiv \{Z_{n}(t); 0 \le t < \tau ^{(-)}_{c,n}(Z_{n2})\}\) is the solution of Eq. (2.1) for \(t < \tau ^{(-)}_{c,n}(Z_{n2})\). Furthermore, \(Z_{n}(t) = Z_{n+1}(t)\) for \(0 \le t < \tau ^{(-)}_{c,n}(Z_{n2})\). From this observation, we define \(Z(\cdot )\) by \(Z(0) = x\) and

where \(\tau ^{(-)}_{c,0}(Z_{0}) = 0\). Then \(Z(\cdot )\) is a solution of Eq. (2.1) for \(t < \tau ^{(-)}_{c,n}(Z_{n2})\) if \(\tau ^{(-)}_{c,n-1}(Z_{n-1}) < \infty \). Otherwise, if \(\tau ^{(-)}_{c,n-1}(Z_{n-1}) = \infty \) and \(\tau ^{(-)}_{c,m}(Z_{n-1}) < \infty \) for \(m = 1,2, \ldots , n-2\), then we stop the procedure at the \((n-1)\)-th step.

Up to now, we have assumed that \(Z(0) = Z_{1}(0) = Z_{11}(0) = x < d\). If x is not less than d, then we start with \(Z_{12}(\cdot )\) of Eq. (4.6) with \(Z_{12}(0) = X_{12}(0) = x \ge d\), and replace \(Z_{11}(\cdot )\) of Eq. (4.3) by

Then, define \(Z_{1}(\cdot )\) as

where \(\tau ^{(-)}_{c,1}(Z_{12}) < \tau ^{(+)}_{d,1}(Z_{11})\) because the order of \(Z_{11}(\cdot )\) and \(Z_{12}(\cdot )\) is swapped. As in the case \(x < d\), we repeat this procedure to inductively define \(Z_{n}(\cdot )\) for \(n \ge 2\), and then define \(Z(\cdot )\) and \(Y(\cdot )\) as in Eqs. (4.11) and (4.12).
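In the first up-crossing period, \(Z_{11}(\cdot )\) is obtained from \(X_{11}(\cdot )\) through \(Y_{11}(t) = \sup _{u \in [0,t]} (-X_{11}(u))^{+}\), that is, through the one-sided Skorokhod map on \([0,\infty )\). A minimal discrete sketch of this map on a sampled path follows. The function name and the illustrative values \(x = 0.3\), \(b_{1} = -0.5\), \(\sigma _{1} = 1\) are assumptions, and the map is exact only at the sampled points, not for the continuous path between them.

```python
import numpy as np

def skorokhod_reflect(x):
    """One-sided Skorokhod map on [0, inf) for a sampled path x with x[0] >= 0.

    Returns (z, y), where y[k] = max_{j <= k} max(-x[j], 0) is nondecreasing
    and z = x + y >= 0; y increases only at indices where z == 0.
    """
    x = np.asarray(x, dtype=float)
    if x[0] < 0:
        raise ValueError("the free path must start in [0, inf)")
    y = np.maximum.accumulate(np.maximum(-x, 0.0))
    return x + y, y


# Illustrative free path X_11(t) = x + b_1 t + sigma_1 W(t) on a grid (assumed values).
rng = np.random.default_rng(0)
dt, n = 1e-3, 5000
x0, b1, sigma1 = 0.3, -0.5, 1.0
t = dt * np.arange(n + 1)
w = np.concatenate([[0.0], np.cumsum(np.sqrt(dt) * rng.standard_normal(n))])
z, y = skorokhod_reflect(x0 + b1 * t + sigma1 * w)
print(z.min() >= 0.0, bool(np.all(np.diff(y) >= 0)))  # True True
```

The two printed checks are the discrete counterparts of \(Z_{11}(t) \ge 0\) and of \(Y_{11}(\cdot )\) being nondecreasing; whenever \(y\) increases, it equals \(-x\) at that index, so \(z = 0\) there, which mirrors \(\int _{0}^{t} 1(Z_{11}(u) > 0) dY_{11}(u) = 0\).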

Hence, \(Z(\cdot )\) of Eq. (4.11) is a solution of Eq. (2.1) once we show that, for each \(t > 0\), there is some \(n \ge 1\) such that \(t < \tau ^{(-)}_{c,n}(Z)\). This condition is equivalent to \(\sup _{n \ge 1} \tau ^{(-)}_{c,n}(Z) = \infty\) almost surely. To verify it, first assume that \(\tau ^{(-)}_{c,n}(Z) < \infty\) for all \(n \ge 1\), and let \(J_{n} = \tau ^{(-)}_{c,n}(Z) - \tau ^{(-)}_{c,n-1}(Z)\) for \(n \ge 1\). For \(n \ge 2\), \(J_{n}\) is the length of an up-crossing period started at c followed by a down-crossing period started at d, built from the independent copies \(X_{n,1}(\cdot )\) and \(X_{n,2}(\cdot )\), so \(\{J_{n}; n \ge 2\}\) is a sequence of i.i.d. positive-valued random variables. Hence, by the strong law of large numbers, we have

and therefore Z(t) is well defined for all \(t \ge 0\). Otherwise, if \(\tau ^{(-)}_{c,n}(Z) = \infty\) for some \(n \ge 1\), then we stop the procedure at the n-th step, and Z(t) is again defined for all \(t \ge 0\).

Thus, we have constructed a solution \(Z(\cdot )\) of Eq. (2.1). Note that the probability distribution of this solution does not depend on the choice of c, d as long as \(0< c< d < \ell _{1}\), because of the independent increment property of Brownian motion. Furthermore, \(Z(\cdot )\) is a strong Markov process because \(Z_{n,1}(\cdot )\) and \(Z_{n,2}(\cdot )\) are strong Markov processes (e.g., see (8.12) of Chung and Williams (1990), Theorem 21.11 of Kallenberg (2001), Theorem 17.23 and Remark 17.2.4 of Cohen and Elliott (2015)) and \(Z(\cdot )\) is obtained by continuously connecting their sample paths at stopping times. Thus, \(Z(\cdot )\) is a weak solution of Eq. (2.1) and is strong Markov.

It remains to prove the weak uniqueness of the solution \(Z(\cdot )\). This is immediate from the construction of \(Z(\cdot )\). Namely, suppose that \(\widetilde{Z}(\cdot )\) is a solution of Eq. (2.1) with \(\widetilde{Z}(0) = x\) for a given \(x \ge 0\). If \(x < d\), then the process \(\{\widetilde{Z}(t); 0 \le t < \tau ^{(+)}_{d,1}(\widetilde{Z})\}\) with \(\widetilde{Z}(0)=x< d < \ell _{1}\) is stochastically identical to \(\{Z_{11}(t); 0 \le t < \tau ^{(+)}_{d,1}(Z)\}\) with \(Z_{11}(0)=x\), which is the unique solution of Eq. (4.4), while the process \(\{\widetilde{Z}(t); \tau ^{(+)}_{d,1}(\widetilde{Z}) < t \le \tau ^{(-)}_{c,1}(\widetilde{Z})\}\) must be stochastically identical to \(\{X_{12}(t); 0 \le t < \tau ^{(-)}_{c,1}(Z)\}\) with \(X_{12}(0)=d\), which is the unique weak solution of Eq. (4.2). Similarly, we obtain such stochastic equivalences in the subsequent periods for \(\widetilde{Z}(0)=x < d\). If \(\widetilde{Z}(0) = x \ge d\), similar equivalences are obtained. Hence, \(\widetilde{Z}(\cdot )\) and \(Z(\cdot )\) have the same distribution for each fixed initial state \(x \ge 0\). Thus, \(Z(\cdot )\) is the unique weak solution, and the proof of Lemma 2.2 is complete.

Remark 4.1

By analogy with reflecting Brownian motion on the half line \([0,\infty )\), one may ask whether the solution \(Z(\cdot )\) of Eq. (2.1) can be obtained directly from the weak solution \(X(\cdot )\) of Eq. (4.14) by taking its absolute value, that is, as \(|X|(\cdot ) \equiv \{|X(t)|; t \ge 0\}\). Atar et al. (2022) answered this question affirmatively under Condition 2.2. Since it is instructive to see how they prove (i) of Lemma 2.1, we explain their argument below.

Recall that the solution \(X(\cdot )\) of the SIE (4.14) weakly exists under Condition 2.2. If \(|X|(\cdot )\) is the solution \(Z(\cdot )\) of the stochastic integral Eq. (2.1), then we must have

On the other hand, from the Tanaka formula (A.6) for \(a = 0\), we have

Hence, letting \(Y(\cdot ) = L_{0}(\cdot )\), Eq. (4.14) is stochastically identical with Eq. (4.15) if

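and if \(W(\cdot )\) is replaced by \(\widetilde{W}(\cdot ) \equiv \{\int _{0}^{t} \textrm{sgn}(X(u)) dW(u); t \ge 0\}\), which is again a standard Brownian motion by Lévy's characterization because it is a continuous local martingale with quadratic variation t. Since Eq. (2.1) involves \(\sigma (x)\) and b(x) only for \(x \ge 0\), imposing Eq. (4.16) on their values for \(x < 0\) does not affect Eq. (2.1).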
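If Eq. (4.14) reads \(dX(t) = b(X(t)) dt + \sigma (X(t)) dW(t)\) on the whole real line, the Tanaka formula gives \(d|X(t)| = \textrm{sgn}(X(t)) b(X(t)) dt + \textrm{sgn}(X(t)) \sigma (X(t)) dW(t) + dL_{0}(t)\). Away from 0, the coefficients of \(|X|(\cdot )\) then agree with those of Eq. (2.1) when \(\textrm{sgn}(x) b(x) = b(|x|)\) and \(\sigma ^{2}(x) = \sigma ^{2}(|x|)\), that is, when b is extended oddly and \(\sigma\) evenly to \(x < 0\). Reading (4.16) this way is our assumption, since the display is not reproduced here. The sketch below builds these extensions for piecewise-constant multi-level coefficients; the helper names, the parameter values, and the convention that level j is \([\ell _{j-1}, \ell _{j})\) are illustrative assumptions.

```python
import bisect

def multilevel_coefficients(ells, bs, sigmas):
    """Piecewise-constant drift b(x) and standard deviation sigma(x) on [0, inf).

    ells = [l_1, ..., l_{k-1}] are increasing level boundaries; level j
    (1-based) is assumed to be [l_{j-1}, l_j) with l_0 = 0 and l_k = inf.
    """
    if not (len(bs) == len(sigmas) == len(ells) + 1):
        raise ValueError("need one drift and one sigma per level")

    def b(x):
        return bs[bisect.bisect_right(ells, x)]

    def sigma(x):
        return sigmas[bisect.bisect_right(ells, x)]

    return b, sigma


def symmetric_extension(b, sigma):
    """Extend b oddly and sigma evenly from [0, inf) to the whole real line."""
    def b_ext(x):
        return b(x) if x >= 0 else -b(-x)

    def sigma_ext(x):
        return sigma(abs(x))

    return b_ext, sigma_ext


def sgn(x):
    """sgn(x) = 1(x > 0) - 1(x <= 0), the convention of the Appendix."""
    return 1.0 if x > 0 else -1.0


b, sigma = multilevel_coefficients([1.0], [0.5, -1.0], [1.0, 2.0])
b_ext, sigma_ext = symmetric_extension(b, sigma)
for x in (-3.0, -0.4, 0.4, 3.0):
    # coefficients of |X| at x agree with those of Eq. (2.1) at |x| (x != 0)
    assert sgn(x) * b_ext(x) == b(abs(x)) and sigma_ext(x) == sigma(abs(x))
```

The point \(x = 0\) is excluded from the check: there the Tanaka formula contributes the local time term instead, and \(X(\cdot )\) spends zero Lebesgue time at 0 because \(\sigma\) is bounded away from 0.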

4.2 Proof of Lemma 2.3

Recall the definition of \(\tau _{a} = \tau _{B}\) for \(B = \{a\}\) (see (2.9)). We first prove that

Since \(Z\left( \tau _{a} \wedge t\right) \le a\), \(\mathbb {E}_{x}\left[ e^{\theta Z(\tau _{a} \wedge t)}\right] < \infty\) for \(\theta \in \mathbb {R}\). Hence, substituting the stopping time \(\tau _{a} \wedge t\) for t in the generalized Ito formula (3.20) with the test function \(f(x) = e^{\theta x}\) and taking the expectation under \(\mathbb {P}_{x}\), we have, for \(x < a\) and \(\theta \in \mathbb {R}\),

where \(\gamma (x,\theta ) = b(x) \theta + \frac{1}{2} \sigma ^{2}(x) \theta ^{2}\). Note that, for each \(\varepsilon > 0\), \(\gamma (x,\theta ) \ge \varepsilon\) if

Recall that \(\beta _{i} = 2b_{i}/\sigma ^{2}_{i}\), and introduce the following notations.

Then, \(|\beta |_{\max } < \infty\), \(\sigma ^{2}_{\max } < \infty\) and \(\sigma ^{2}_{\min } > 0\) by Condition 2.2, which is assumed in Lemma 2.3. Hence, for each \(\varepsilon > 0\), \(\gamma (x,\theta ) \ge \varepsilon\) for \(\theta \ge \frac{1}{2}\left( |\beta |_{\max } + \sqrt{|\beta |^{2}_{\max } + 8\varepsilon /\sigma ^{2}_{\min }}\right)\) and \(x \ge 0\). For this \(\theta\), it follows from Eq. (4.19) that

because \(\theta > 0\) and \(e^{\theta Z(u)} \ge 1\) for \(u \in [0,\tau _{a} \wedge t]\). This proves (4.17) because we have

We next consider the case \(x> a > 0\). As in the previous case, but now with \(\theta < 0\), it follows from Eq. (4.20) that \(\gamma (x,\theta ) > \varepsilon\) for \(x > a\) and \(\varepsilon > 0\) if \(\theta\) satisfies

Since \(Y(t) = 0\) for \(t \le \tau _{a}\) because \(Z(0)=x > a\), we have, from Eq. (4.19), for \(\theta\) satisfying (4.22),

Suppose, to the contrary, that \(\mathbb {P}_{x}(\tau _{a} = \infty ) = 1\). Then \(\mathbb {P}_{x}[t > \tau _{a}] = 0\) for all \(t \ge 0\), so we have, from Eq. (4.23),

Denote \(\mathbb {E}_{x}\left[ e^{\theta Z(u)}1(u \le \tau _{a})\right]\) by g(u). Then, after elementary manipulation, this yields

and therefore, by integrating both sides of this inequality, we have

because \(g(u) = \mathbb {E}_{x}\left[ e^{\theta Z(u)}1(u \le \tau _{a})\right] \le e^{\theta a}\) for \(\theta < 0\). Letting \(t \rightarrow \infty\) in this inequality gives a contradiction because its right-hand side diverges. Hence, we have Eq. (4.18). We finally consider the case \(0 = a < x\). If \(\mathbb {P}_{x}\left[ Y(\tau _{0}) = 0\right] = 1\), then (4.23) holds, and the argument following it works, which proves (4.18). Otherwise, if \(\mathbb {P}_{x}(Y(\tau _{0}) = 0) < 1\), that is, \(\mathbb {P}_{x}(Y(\tau _{0})> 0) > 0\), then \(\mathbb {P}_{x}[\tau _{0} < \infty ] > 0\) by the definition of \(Y(\cdot )\), which can increase only when \(Z(\cdot )\) is at 0. Hence, we again have Eq. (4.18) for \(a=0\).
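In the proof of (4.17), \(\theta\) is chosen so that \(\gamma (x,\theta ) \ge \varepsilon\) for all \(x \ge 0\). Writing \(\gamma (x,\theta ) = \frac{1}{2}\sigma ^{2}(x)(\theta ^{2} + \beta (x)\theta )\) with \(\beta (x) = 2b(x)/\sigma ^{2}(x)\), any \(\theta \ge |\beta |_{\max }\) gives \(\gamma (x,\theta ) \ge \frac{1}{2}\sigma ^{2}_{\min }(\theta ^{2} - |\beta |_{\max }\theta )\), and this lower bound is at least \(\varepsilon\) exactly when \(\theta\) is at least the positive root stated in the proof. A minimal numerical check for piecewise-constant levels follows; the function names and the three-level parameter values are illustrative assumptions.

```python
import math

def theta_threshold(bs, sigmas, eps):
    """(|beta|_max + sqrt(|beta|_max^2 + 8 eps / sigma_min^2)) / 2 with beta_i = 2 b_i / sigma_i^2."""
    beta_max = max(abs(2.0 * b / s**2) for b, s in zip(bs, sigmas))
    s2_min = min(s**2 for s in sigmas)
    return 0.5 * (beta_max + math.sqrt(beta_max**2 + 8.0 * eps / s2_min))


def gamma(b, sigma, theta):
    """gamma(x, theta) = b theta + sigma^2 theta^2 / 2 on a level with drift b and sd sigma."""
    return b * theta + 0.5 * sigma**2 * theta**2


bs, sigmas, eps = [0.5, -1.0, -0.3], [1.0, 2.0, 0.5], 0.1   # illustrative 3-level values
theta = theta_threshold(bs, sigmas, eps)
for b, s in zip(bs, sigmas):
    # a tiny tolerance absorbs rounding: the bound is attained on the third level
    assert gamma(b, s, theta) >= eps - 1e-12
print(theta)
```

In this example the third level has both the largest \(|\beta _{i}|\) (with \(\beta _{3} = -2.4 < 0\)) and the smallest \(\sigma ^{2}_{i}\), so it attains \(\gamma = \varepsilon\) exactly; hence the bound cannot be lowered in general.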

We finally check the Harris irreducibility condition (see (2.7)). For this, let \(\tau = \tau _{0} \wedge \tau _{\ell _{1}}\); then \(\{Z(t); t \in (0,\tau )\}\) is stochastically identical to \(\{X(t); t \in (0,\tau )\}\), where \(X(t) \equiv X(0) + b_{1} t + \sigma _{1} W(t)\). Then, from Tanaka’s formula (A.4) for \(Z(\cdot )\), if \(Z(0) = y \in (x, \ell _{1})\),

Hence, if \(b_{1} \ge 0\), then

Similarly, from Eq. (A.4) for \(X(\cdot ) = Z(\cdot )\), if \(b_{1} < 0\), then, for \(y \in (0,x) \subset (0,\ell _{1})\),

Assume that \(b_{1} \ge 0\). We then choose \(y \in (x, \ell _{1})\) and take the Lebesgue measure on [0, y] as \(\psi\). Then it follows from Eqs. (4.24) and (A.1) with \(g = 1_{B}\) that \(\psi (B) > 0\) for \(B \in \mathcal {B}(\mathbb {R}_{+})\) implies

Since \(Z(\cdot )\) hits state \(y \in (0,\ell _{1})\) from any state in S with positive probability, this inequality implies the Harris irreducibility condition (2.7). Similarly, this condition is proved for \(b_{1} < 0\) using Eq. (4.25) and the Lebesgue measure on \([y, \ell _{1}]\) for \(\psi\). Thus, the proof of Lemma 2.3 is completed.

4.3 Proof of Lemma 2.4

Clearly, \(b_{*} < 0\) is necessary for \(Z(\cdot )\) to have a stationary distribution: Z(t) diverges a.s. if \(b_{*} > 0\) by the strong law of large numbers and Lemma 2.3, while \(Z(\cdot )\) is null recurrent if \(b_{*} = 0\).

Conversely, assume that \(b_{*} < 0\). We note the following fact, which is partly a counterpart of Eq. (4.17).

Lemma 4.1

If \(b_{*} < 0\), then

Proof

Assume that \(\ell _{*} \le a < x\), and let \(X(t) \equiv x + b_{*}t + \sigma _{*} W(t)\) for \(t \ge 0\). Since \(\ell _{*} < x\), \(\{Z(t); 0 \le t \le \tau ^{X}_{\ell _{*}}\}\) under \(\mathbb {P}_{x}\) has the same distribution as \(\{X(t); 0 \le t \le \tau ^{X}_{\ell _{*}}\}\), where \(\tau ^{X}_{y} = \inf \{u \ge 0; X(u) = y\}\) for \(y \ge \ell _{*}\). Hence, applying the optional sampling theorem to the martingale \(X(t) - x - b_{*} t\) at the bounded stopping time \(\tau ^{X}_{a} \wedge t\), we have

Since \(X(t) \rightarrow - \infty\) as \(t \rightarrow \infty\) w.p.1 by the strong law of large numbers, letting \(t \rightarrow \infty\) in this equation yields \(b_{*} \mathbb {E}_{x}\left[ \tau ^{X}_{a}\right] = a - x\). Hence, we have

This proves (4.26). \(\square\)
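The identity \(b_{*} \mathbb {E}_{x}\left[ \tau ^{X}_{a}\right] = a - x\), that is, \(\mathbb {E}_{x}\left[ \tau ^{X}_{a}\right] = (x-a)/|b_{*}|\), can be checked with a crude Monte Carlo experiment. The sketch below simulates \(X(t) = x + b_{*}t + \sigma _{*}W(t)\) on an Euler grid; the step size, sample size, seed, and parameter values are illustrative assumptions, and the grid hitting time slightly overestimates the continuous one because of the overshoot at the last step.

```python
import numpy as np

def mean_hitting_time(x, a, b, sigma, dt=1e-3, n_paths=2000, t_max=50.0, seed=0):
    """Monte Carlo estimate of E_x[tau_a] for X(t) = x + b t + sigma W(t) with b < 0 and a < x.

    Returns the sample mean over paths that hit a before t_max, and the number
    of paths that did not (these would bias the estimate if nonzero).
    """
    rng = np.random.default_rng(seed)
    pos = np.full(n_paths, float(x))
    hit = np.full(n_paths, np.nan)        # grid hitting time of level a, NaN if not yet hit
    for k in range(1, int(round(t_max / dt)) + 1):
        alive = np.isnan(hit)
        if not alive.any():
            break
        pos[alive] += b * dt + sigma * np.sqrt(dt) * rng.standard_normal(alive.sum())
        hit[alive & (pos <= a)] = k * dt
    return np.nanmean(hit), int(np.isnan(hit).sum())


est, missed = mean_hitting_time(x=2.0, a=1.0, b=-1.0, sigma=1.0)
print(est, (2.0 - 1.0) / 1.0, missed)   # estimate vs (x - a)/|b_*|, paths not hit
```

With these values the exact mean is 1, and the estimate differs from it only by sampling error of order \(n^{-1/2}\) and a small positive discretization bias.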

We return to the proof of Lemma 2.4. For \(n \ge 1\) and \(x, y > \ell _{*}\) such that \(x < y\), inductively define \(S_{n}, T_{n}\) as

where \(T_{0} = 0\). Because \(\ell _{*}< x < y\), we have, from Eqs. (4.17) and (4.26),

Hence, \(Z(\cdot )\) is a regenerative process with regeneration times \(\{T_{n}; n \ge 1\}\), because the cycles \(\{Z(t); T_{n-1} \le t < T_{n}\}\) for \(n \ge 1\) are i.i.d. by the strong Markov property. Since the cycle length has finite mean, \(Z(\cdot )\) has the stationary probability measure \(\pi\) given by

Thus, \(Z(\cdot )\) is positive recurrent.
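The regenerative structure suggests a simple simulation check: run the process, cut the path at the return times \(T_{n}\), and estimate \(\pi (B)\) by the fraction of time spent in B over complete cycles. The sketch below uses a projected Euler scheme \(Z_{k+1} = \max (Z_{k} + b(Z_{k})\Delta t + \sigma (Z_{k})\sqrt{\Delta t}\,\xi _{k}, 0)\) as a stand-in for Eq. (2.1). This discretization, the grid-based cycle detection (\(S_{n}\) taken as the first grid time after \(T_{n-1}\) with \(Z \ge y\), and \(T_{n}\) as the first grid time after \(S_{n}\) with \(Z \le x\)), the level convention \([\ell _{j-1},\ell _{j})\), and the two-level parameters are our assumptions, not the construction used in the paper.

```python
import bisect
import math
import random

def simulate_projected_euler(ells, bs, sigmas, z0, dt, n_steps, seed=0):
    """Projected Euler path of a multi-level SRBM; level j is [l_{j-1}, l_j) (assumed)."""
    rng = random.Random(seed)
    sq = math.sqrt(dt)
    z = [z0]
    for _ in range(n_steps):
        j = bisect.bisect_right(ells, z[-1])      # current level index
        z.append(max(z[-1] + bs[j] * dt + sigmas[j] * sq * rng.gauss(0.0, 1.0), 0.0))
    return z


def regeneration_indices(z, x, y):
    """Grid indices T_0 < T_1 < ...: each cycle visits y and then returns to x (x < y)."""
    idx, seek_y = [], False
    for k, v in enumerate(z):
        if not seek_y and v <= x:
            idx.append(k)          # T_n: first return to x after S_n (T_0: first visit)
            seek_y = True
        elif seek_y and v >= y:
            seek_y = False         # S_n: first hit of y after T_{n-1}
    return idx


def regenerative_histogram(z, idx, edges):
    """Fraction of grid time in each bin over the complete cycles [T_0, T_last)."""
    if len(idx) < 2:
        raise ValueError("need at least one complete cycle")
    counts = [0] * (len(edges) - 1)
    for v in z[idx[0]:idx[-1]]:
        j = bisect.bisect_right(edges, v) - 1
        if 0 <= j < len(counts):
            counts[j] += 1
    total = idx[-1] - idx[0]
    return [c / total for c in counts]


# Two levels split at l_1 = 1 with b_* = -1 < 0 (illustrative values); x, y > l_* = 1.
z = simulate_projected_euler([1.0], [0.5, -1.0], [1.0, 1.5], z0=1.2, dt=1e-3, n_steps=200_000)
idx = regeneration_indices(z, x=1.2, y=1.8)
pi_hat = regenerative_histogram(z, idx, edges=[0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 100.0])
print(len(idx) - 1, [round(p, 3) for p in pi_hat])   # number of cycles, binned estimate
```

The binned estimate can be compared with the stationary distribution obtained earlier in the paper; since only complete cycles are used, the estimator is the sample analogue of the regenerative ratio formula.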

5 Concluding Remarks

We discuss two topics here.

5.1 Process limit

It is conjectured in Miyazawa (2024) that a process limit of the diffusion scaled queue length in the 2-level GI/G/1 queue in heavy traffic is the solution \(Z(\cdot )\) of stochastic integral Eq. (2.1) for the 2-level SRBM. As we discussed in Remark 3.2, the stationary distribution of \(Z(\cdot )\) is identical with the limit of the stationary distribution of the scaled queue length in the 2-level GI/G/1 queue in heavy traffic, obtained in Miyazawa (2024). This strongly supports this conjecture on the process limit.

We believe that the conjecture is true. However, the standard proof of diffusion approximations based on the functional central limit theorem may not work because of the state-dependent arrivals and service speed in the 2-level GI/G/1 queue. We are currently working on this problem by formulating the queue length process of the 2-level GI/G/1 queue as a semi-martingale. However, we have not yet completed the proof, so this remains an open problem.

5.2 Stationary Distribution Under Weaker Conditions

In this paper, we derived the stationary distribution of a one-dimensional multi-level SRBM under the stability condition. In view of Corollary 3.1, it is natural to ask whether a similar stationary distribution is obtained under more general conditions than Condition 2.1.

To consider this problem, we first need the existence of the solution \(Z(\cdot )\) of Eq. (2.1), for which the existence of the solution \(X(\cdot )\) of Eq. (4.14) is sufficient, as discussed in Remark 4.1. For the existence of \(X(\cdot )\), Condition 2.2 is weaker than Condition 2.1, and Theorem 5.15 of Karatzas and Shreve (1998) and Theorem 23.1 of Kallenberg (2001) show that it can be further weakened to

Condition 5.1

It is easy to see that Condition 5.1 is indeed implied by Condition 2.2. Note that the local integrability condition (5.2) implies that

which is equivalent to \(S_{\sigma } = \emptyset\), where

This condition \(S_{\sigma } = \emptyset\) is needed for X(t) to exist for all \(t \ge 0\) in the weak sense as shown by Theorem 23.1 of Kallenberg (2001) and its subsequent discussions.

Assume Condition 5.1 for general \(\sigma (x)\) and b(x). If these functions are well approximated by simple functions (e.g., \(\sigma (x)\) and b(x) have finitely many discontinuity points in each finite interval) and if \(b(x) \not = 0\) for all \(x \ge 0\), then Corollary 3.1 suggests that the stationary density is given by Eq. (3.38) under the condition that

To justify this suggestion, the approximation must be examined carefully, which we have not yet done. So, we leave it as a conjecture.

References

Miyazawa M (2024) Diffusion approximation of the stationary distribution of a two-level single server queue. Technical report (February 2024), submitted for publication. https://arxiv.org/abs/2312.11284

Atar R, Castiel E, Reiman M (2022) Parallel server systems under an extended heavy traffic condition: a lower bound. Technical report. https://arxiv.org/pdf/2201.07855

Atar R, Castiel E, Reiman M (2023) Asymptotic optimality of switched control policies in a simple parallel server system under an extended heavy traffic condition. Stochastic Systems, to appear

Tanaka H (1963) Note on continuous additive functionals of the 1-dimensional Brownian path. Z Wahrscheinlichkeitstheorie verw Gebiete 1:251–257

Chung KL, Williams RJ (1990) Introduction to stochastic integration, 2nd edn. Birkhäuser, Boston

Cohen SN, Elliott RJ (2015) Stochastic calculus and applications, 2nd edn. Springer, New York

Harrison JM (2013) Brownian models of performance and control. Cambridge University Press, New York

Kallenberg O (2001) Foundations of modern probability, 2nd edn. Springer Series in Statistics, Probability and its applications. Springer, New York

Karatzas I, Shreve SE (1998) Brownian motion and stochastic calculus, 2nd edn. Graduate Texts in Mathematics, vol. 113. Springer, New York

Tanaka H (1979) Stochastic differential equations with reflecting boundary condition in convex regions. Hiroshima Math J 9:163–177

Meyn SP, Tweedie RL (1993) Stability of Markovian processes II: continuous time processes and sampled chains. Adv Appl Probab 25:487–517

Kaspi H, Mandelbaum A (1994) On Harris recurrence in continuous time. Math Oper Res 19:211–222

Kruk L, Lehoczky J, Ramanan K (2007) An explicit formula for the Skorokhod map on [0, a]. Ann Probab 35(5):1740–1768

Braverman A, Dai JG, Miyazawa M (2024) The BAR-approach for multiclass queueing networks with SBP service policies. Stochastic Systems.

Acknowledgements

This study was originally motivated by the BAR approach, coined by Jim Dai (e.g., see Braverman et al. (2024)). I am grateful to him for his continuous support of my work. I am also grateful to Rami Atar for his helpful comments on the solution of the stochastic integral equation (2.1). I have also benefited from personal communications with Rajeev Bhaskaran. Last but not least, I sincerely thank Krishnamoorthy for encouraging me to give a talk at the International Conference on Advances in Applied Probability and Stochastic Processes, held in Thrissur, India, in January 2024. This paper follows up on this talk and my paper (Miyazawa 2024).

Funding

No funding was provided for the completion of this study.

Ethics declarations

Conflict of interest

This paper has no conflict of interest.

Appendix

1.1 Weak Solution of a Stochastic Integral Equation

There are two kinds of solutions of a stochastic integral equation such as Eq. (2.1); we consider them here only for the SIE (2.1). Recall that this equation is defined on the stochastic basis \((\Omega ,\mathcal {F},\mathbb {F},\mathbb {P})\). If Eq. (2.1) holds almost surely on this given stochastic basis, with the standard Brownian motion \(W(\cdot )\) defined on \((\Omega ,\mathcal {F},\mathbb {F},\mathbb {P})\), then the SIE (2.1) is said to have a strong solution. On the other hand, the SIE (2.1) is said to have a weak solution if there exist some stochastic basis \((\widetilde{\Omega },\widetilde{\mathcal {F}},\widetilde{\mathbb {F}},\widetilde{\mathbb {P}})\) and some \(\widetilde{\mathbb {F}}\)-adapted process \((Z(\cdot ),W(\cdot ),Y(\cdot ))\) on it such that Eq. (2.1) holds almost surely and \(W(\cdot )\) is a standard Brownian motion under \(\widetilde{\mathbb {P}}_{x}\) for each \(x \ge 0\), where \(\widetilde{\mathbb {P}}_{x}\) is the conditional distribution of \(\widetilde{\mathbb {P}}\) given \(Z(0) = x\) (e.g., see Section 5.3 of Karatzas and Shreve (1998)).

It may be better to use a different notation for the weak solution, e.g., \((\widetilde{Z}(\cdot ),\widetilde{W}(\cdot ),\widetilde{Y}(\cdot ))\). However, for notational convenience, we use the same notation not only for this process but also for the stochastic basis. Thus, when we discuss the weak solution, the stochastic basis \((\Omega ,\mathcal {F},\mathbb {F},\mathbb {P})\) should be understood as appropriately replaced.

1.2 Local Time and Generalized Ito Formula

We briefly discuss local time in connection with the generalized Ito formula (3.12). This Ito formula is also called the Ito–Meyer–Tanaka formula (e.g., see Theorem 6.22 of Karatzas and Shreve (1998) and Theorem 22.5 of Kallenberg (2001)). Let \(X(\cdot )\) be a continuous semi-martingale whose quadratic variation \([X]_{t}\) is finite for all \(t \ge 0\). For this \(X(\cdot )\), the local time \(L_{x}(t)\) for \(x \in \mathbb {R}\) and \(t \ge 0\) is defined through

See Theorem 7.1 of Karatzas and Shreve (1998) for details about the definition of local time. Note that the local time of Karatzas and Shreve (1998) is half of the local time in this paper. Applying \(g(y) = 1_{(x-\varepsilon ,x+\varepsilon )}(y)\) for \(\varepsilon > 0\) to Eq. (A.1), we can see that

This can be used as the definition of the local time.

There are two natural versions of the local time (right-continuous and left-continuous in x), because \(L_{x}(t)\) is continuous in t but may not be continuous in x. Usually, the local time \(L_{x}(t)\) is taken to be right-continuous in x for the generalized Ito formula (3.12). However, if the finite variation component of \(X(\cdot )\) is not atomic, then \(L_{x}(t)\) is continuous in x (see Theorem 22.4 of Kallenberg (2001)). In particular, the finite variation component of \(Z(\cdot )\) is continuous by Lemma 2.2, so we have the following lemma.

Lemma A.1

For a one-dimensional state-dependent SRBM \(Z(\cdot )\) with bounded drifts, the local time \(L_{x}(t)\) is continuous in x for each \(t \ge 0\). Furthermore, \(\mathbb {E}[L_{x}(t)]\) is finite by Eq. (A.1) for \(X(\cdot ) = Z(\cdot )\).

If f is a concave test function from \(\mathbb {R}\) to \(\mathbb {R}\), then \(-f\), defined by \((-f)(x) = - f(x)\), is convex, so applying the generalized Ito formula (3.12) to \(-f\) gives

For constant \(a \in \mathbb {R}\), let \(f(x) = (x-a)^{+} \equiv \max (0,x-a)\) for Eq. (3.12), then \(f'(x-) = 1(x > a)\) and \(\mu _{f}(B) = 1(a \in B)\). Hence, it follows from Eq. (3.12) that

Similarly, applying \(f(x) = (x-a)^{-} \equiv \max (0, -(x-a))\) and \(f(x) = |x-a|\), we have, by Eqs. (A.3) and (3.12),

where \(\textrm{sgn}(x) = 1(x>0) - 1(x \le 0)\). Note that any one of these three formulas can be used to define the local time \(L_{a}(t)\). In particular, Eq. (A.6) is called a Tanaka formula because it was originally studied for Brownian motion by Tanaka (1963).
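For a standard Brownian motion \(W(\cdot )\), for which \([W]_{t} = t\), the Tanaka formula (A.6) with \(a = 0\) and the occupation-time characterization of local time can be compared numerically. The sketch below computes, on a grid, \(L_{0}(t) \approx |W(t)| - \sum _{k} \textrm{sgn}(W(t_{k}))(W(t_{k+1}) - W(t_{k}))\) and the occupation estimate \((2\varepsilon )^{-1}\int _{0}^{t} 1(|W(u)| < \varepsilon ) du\). With the normalization of this paper (twice that of Karatzas and Shreve (1998)), taking expectations in (A.6) shows that both should have mean \(\mathbb {E}|W(1)| = \sqrt{2/\pi }\) at \(t = 1\). The grid size, \(\varepsilon\), sample size, and seed are illustrative assumptions.

```python
import numpy as np

def tanaka_local_time(n_paths=400, n_steps=2000, t=1.0, eps=0.05, seed=1):
    """Two grid estimates of the local time L_0(t) of standard Brownian motion."""
    rng = np.random.default_rng(seed)
    dt = t / n_steps
    dw = np.sqrt(dt) * rng.standard_normal((n_paths, n_steps))
    w = np.concatenate([np.zeros((n_paths, 1)), np.cumsum(dw, axis=1)], axis=1)
    left = w[:, :-1]                                   # left endpoints give the Ito sum
    sgn = np.where(left > 0, 1.0, -1.0)                # sgn(x) = 1(x > 0) - 1(x <= 0)
    ito_sum = np.sum(sgn * dw, axis=1)
    l_tanaka = np.abs(w[:, -1]) - ito_sum              # (A.6) with a = 0 and W(0) = 0
    l_occ = np.sum(np.abs(left) < eps, axis=1) * dt / (2.0 * eps)  # d[W]_u = du
    return l_tanaka, l_occ


l_tanaka, l_occ = tanaka_local_time()
print(l_tanaka.mean(), l_occ.mean(), np.sqrt(2.0 / np.pi))
```

Each one-step increment \(|W(t_{k+1})| - |W(t_{k})| - \textrm{sgn}(W(t_{k}))(W(t_{k+1}) - W(t_{k}))\) is nonnegative, so the grid version of \(L_{0}(\cdot )\) is nondecreasing in t, as a local time must be; it is positive only on steps where the path crosses 0.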

About this article

Cite this article

Miyazawa, M. Multi-level Reflecting Brownian Motion on the Half Line and Its Stationary Distribution. J Indian Soc Probab Stat (2024). https://doi.org/10.1007/s41096-024-00205-9

DOI: https://doi.org/10.1007/s41096-024-00205-9

Keywords

- Reflecting Brownian motion

- State-dependent variance and drift

- Discontinuous changes of variance and drift

- Stationary distribution

- Stochastic integral equation

- Generalized Ito formula

- Tanaka formula

- Harris irreducibility