Abstract

We study the large deviation behaviors of a stochastic fluid queue with an input being a generalized Riemann–Liouville (R–L) fractional Brownian motion (FBM), referred to as GFBM. The GFBM is a continuous mean-zero Gaussian process with non-stationary increments, extending the standard FBM with stationary increments. We first derive the large deviation principle for the GFBM by using the weak convergence approach. We then obtain the large deviation principle for the stochastic fluid queue with the GFBM as the input process as well as for an associated running maximum process. Finally, we study the long-time behavior of these two processes; in particular, we show that a steady-state distribution exists and derive the exact tail asymptotics using the aforementioned large deviation principle together with a maximal inequality and modulus of continuity estimates for the GFBM.


1 Introduction

Stochastic fluid queues have been used to model communication networks, in particular, the flow of data through the network as a “fluid” continuously over time. The input of such fluid queues is assumed to be an exogenous random process while the output is a constant rate. The fluid queue, which is often viewed as the fluid workload process, is then modeled via the one-dimensional reflection. See, for example, an overview of the stochastic fluid queues in [33, Chapter 5] (and an overview of scheduling of stochastic fluid networks in [7, Chapter 12]). Such models are also used to model the dynamics in storage or dams [30].

Although the input can be of any general continuous-time stochastic process, in the telecommunication literature, Gaussian processes with self-similarity and long-range dependence, such as fractional Brownian motion (FBM), are often used to model the traffic flow into the system [22, 23, 25, 26, 28, 34]. However, the existing studies using FBM only model stationary inputs that have these self-similarity and long-range dependent properties. Many Internet and communication input flows exhibit non-stationarity (see, e.g., [5, 19, 31]). Therefore, it is desirable to use a process to capture all these characteristics.

Recently, Pang and Taqqu [27] have introduced a generalized fractional Brownian motion (GFBM) as the scaling limit of power-law shot noise processes extending [29, Chapter 3.4] and [21]. The GFBM loses the stationary increments property of the standard FBM, while exhibiting self-similarity and long-range dependence. In this paper, we use a special case of GFBM, which is the generalized Riemann–Liouville (R–L) FBM (see Eq. (2.1)), as the input process for fluid queues.

We particularly focus on the large deviation principles (LDPs) of the fluid queues with the GFBM input. Large deviations of fluid queues have been well studied (see an overview in [13]). Our paper is similar in flavor to Chang et al. [6], which studies the large deviations and moderate deviations properties of fluid queues with an input process that can be regarded as an extension of the R–L FBM. Specifically, the Brownian motion in the R–L FBM is replaced by a process of stationary increments that satisfies a large deviations or a moderate deviations principle. That construction differs from the GFBM. One distinction is that the mapping in that construction is continuous from the process of stationary increments to the input process, and thus, the contraction principle can be applied to establish the LDP for the input process. However, that is not the case for the GFBM. We explain in detail why the contraction principle cannot be directly used to establish the LDP for the GFBM from that of the driving BM in Sect. 2.2.2.

Therefore, we establish an LDP for the GFBM (\(\{X^\varepsilon \}_{\varepsilon >0}\) as defined in (2.8)) using a different approach, namely the weak convergence approach (see Sect. 2.2.3 for a brief description). This approach is commonly used in proving LDPs of processes that can be expressed as a measurable map of a Brownian motion (\(X^\varepsilon \) is clearly an example). We establish the LDP for \(\{X^\varepsilon \}_{\varepsilon >0}\) by proving Lemmas 3.1, 3.2 and 3.3 (following the procedure for LDPs according to Theorem 2.1). The advantage of this approach lies in the fact that the LDP for \(\{X^\varepsilon \}_{\varepsilon >0}\) is simply equivalent to tightness of processes \(\{X^{\varepsilon ,v^\varepsilon }\}_{\varepsilon >0}\) (defined in (3.2)), for an appropriate precompact family of processes \(\{v^\varepsilon \}_{\varepsilon >0}\) and uniqueness of solutions to Eq. (3.2), for an appropriately specified process v. The aforementioned tightness (which is required to prove Lemma 3.1) is derived under the assumption that the set of parameters \((\alpha ,\gamma )\) for the GFBM in (2.1) satisfies (2.6) (noting that the Hurst parameter H can take values in (0, 1) in this range unlike the standard FBM \(B^H\) with \(H\in (1/2,1)\) when \(\gamma =0\)). On the other hand, the rate function obtained using the weak convergence approach is given in the form of an optimization problem (see (3.1)). In fact, even for standard FBM, the rate function using the contraction principle in [6] is also implicitly given via the integral mapping. Here we present an expression of the rate function for the GFBM using Laplace transform in Lemma 3.4.

We then move on to prove the LDP for the workload process \(V(\cdot )\) of a stochastic fluid queue with the GFBM as input and with a constant service rate and the corresponding running maximum process \(M(\cdot )\). See (4.1) and (4.2). It is clear that the sample path LDP for \(V(\cdot )\) and \(M(\cdot )\) can be easily obtained by applying the contraction principle by the continuity of the reflection mapping in the Skorohod topology. However, by adapting the method in [6, Section 4 & 5], using the LDP result for the GFBM, we obtain the LDP for \(V(\cdot )\) and \(M(\cdot )\) at a fixed time, in which the rate function is explicitly provided (see Theorem 2.2 and Lemma 4.1).

Finally, we analyze the long-time behavior of these processes in Sect. 5. As is well known, if the input process has stationary increments, the studies of V(t) and M(t) are equivalent (see (4.3)). Since the GFBM has non-stationary increments, the usual approach with stationary input to derive the steady state of the queueing process does not apply (see, e.g., tail asymptotics of fluid queues with the R–L FBM in [9, 10, 12, 14, 15] and the references therein).

To study the long-time behavior, we first establish that the laws of V(t) and M(t) have a weak limit point as \(t\rightarrow \infty \) (in fact, we show that M(t) converges almost surely as \(t\rightarrow \infty \)). We first derive an alternative representation of the GFBM in Lemma 5.1 by using the Itô product formula, for which we have to use an approximation approach to avoid an ill-defined issue around time zero. We then derive a new maximal inequality for the scaled GFBM (see Lemma 5.3), in particular, the tail asymptotics for \(\max _{\delta _0\le s\le t} \big \{s^{-H}X(s)\big \},\) for some \(\delta _0>0\), and modulus-of-continuity-type estimates for X(t) when t is around 0. Moreover, by using this new maximal inequality, we can show that the tail of the laws of V(t) and M(t) at fixed t is sub-exponential (Theorems 5.1 and 5.3), from which we conclude that the laws of V(t) have a weak limit point as \(t\rightarrow \infty \). In addition, this sub-exponential tail behavior also implies that the expectation of M(t) is uniformly bounded in time, and we thus conclude that M(t) converges almost surely.

Now that the existence of a steady-state distribution is proved, we next study the tail asymptotics of these steady-state distributions. Due to the non-stationarity of the processes, the steady-state distribution of this process is not necessarily equal to the steady state of the queuing process mentioned above. We derive tail asymptotics for the steady states in Theorems 5.2 and 5.4. For this purpose, we derive a maximal inequality (see Lemma 5.3) and a modulus of continuity estimates (see Lemmas 5.2 and 5.4) for the GFBM.

We also provide alternative proofs for certain results in Sects. 4 and 5 using well-known results on the extremes of Gaussian processes. Specifically, we give proofs for Theorem 4.1 and Lemma 4.1 in Sect. 4.1 using Landau–Marcus–Shepp asymptotics [24, Equation (1.1)], and discuss how it is used to prove Lemma 5.4 in Remark 5.5. We also give an alternative proof for Theorem 5.2 in Sect. 5.1 using results on the tail asymptotics for locally stationary self-similar Gaussian processes by Hüsler and Piterbarg [16]. For this, we show that the GFBM is locally stationary, despite its non-stationary increments (see Lemma A.2).

1.1 Notation

Let \((\Omega , \mathcal {F}, \{\mathcal {F}_t\}_{t\ge 0}, \mathbb {P})\) be the filtered probability space with \(\mathcal {F}_t\) satisfying the usual conditions. \(\mathbb {E}\) denotes the expectation with respect to \(\mathbb {P}\). For \(T>0\), let \(\mathcal {C}_T\) be the space of continuous real-valued functions f on [0, T] such that \(f(0)=0\) and equipped with the uniform topology (\(\Vert \cdot \Vert _\infty \) denotes the corresponding norm). When there is no ambiguity, we write \(\mathcal {C}_T\) as \(\mathcal {C}\). \(L^2([0,T])\) denotes the space of square integrable Lebesgue measurable functions on [0, T]. \(\mathcal {P}_Z\) denotes the law of the random variable Z.

1.2 Organization of the paper

In Sect. 2, we introduce the GFBM process and give its basic properties. In Sect. 2.2, we give the definitions and necessary results from the general theory of large deviations. Since we use the weak convergence approach in this work, we introduce it and compare it with other well-known approaches to proving a large deviation principle. We also state important results used in this approach. In Sect. 3, we prove that the GFBM process defined in (2.8) satisfies a large deviation principle. In Sect. 4, we establish a large deviation principle for the workload process and the running maximum process of a stochastic fluid queue with constant service rate and scaled GFBM as the arrival process. Finally, in Sect. 5 we study the long-time behavior of the running maximum process and the queueing process.

2 Preliminaries

2.1 Generalized Riemann–Liouville FBM

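The generalized Riemann–Liouville (R–L) FBM \(\{X(t): t\ge 0\}\) is introduced in [27, Remark 5.1] and further studied in [17, Section 2.2]. The process X(t) is defined by

$$\begin{aligned} X(t) = c\int _0^t (t-s)^{\alpha }s^{-\frac{\gamma }{2}}\,dB(s), \quad t\ge 0, \end{aligned} \tag{2.1}$$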

where B(t) is a standard Brownian motion and \(c \in \mathbb {R}\),

The normalization constant c is such that \(E[X(t)^2] = t^{2H}\) (it can be explicitly given as in Lemma 2.1 of [17]). The process X(t) is a continuous self-similar Gaussian process with Hurst parameter

$$\begin{aligned} H = \alpha -\frac{\gamma }{2}+\frac{1}{2}. \end{aligned}$$

It has non-stationary increments; in particular, the second moment for its increments is

for any \(0\le s<t\). It has mean zero and covariance function

for \(0\le s \le t\). For simplicity, we refer to this process as GFBM.
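The normalization \(E[X(t)^2]=t^{2H}\) can be checked numerically. The following is a minimal Python sketch, not taken from [17] or [27]. It assumes that the variance is \(c^2\int _0^t (t-u)^{2\alpha }u^{-\gamma }du\), which is what the Itô isometry gives if X is the Wiener integral of the kernel \(c(t-s)^{\alpha }s^{-\gamma /2}\), and it restricts to \(\alpha \ge 0\) so that the integrand is bounded away from \(u=0\). The closed form \(c^2 = 1/\textrm{B}(1-\gamma ,2\alpha +1)\) is derived here from the Beta integral rather than quoted; the function names are hypothetical.

```python
import math

def hurst(alpha, gamma):
    """Hurst parameter H = alpha - gamma/2 + 1/2, as stated in the text."""
    return alpha - gamma / 2.0 + 0.5

def normalizing_constant(alpha, gamma):
    """Constant c with c^2 * B(1 - gamma, 2*alpha + 1) = 1.

    Derived from the Beta integral (an assumption of this sketch, not a
    formula quoted from the references).
    """
    beta = (math.gamma(1.0 - gamma) * math.gamma(2.0 * alpha + 1.0)
            / math.gamma(2.0 * alpha + 2.0 - gamma))
    return beta ** -0.5

def variance_by_quadrature(t, alpha, gamma, n=20000):
    """Midpoint-rule value of c^2 * int_0^t (t-u)^(2 alpha) u^(-gamma) du.

    The substitution w = u^(1-gamma) removes the singularity at u = 0.
    Assumes alpha >= 0 and 0 <= gamma < 1.
    """
    c = normalizing_constant(alpha, gamma)
    p = 1.0 / (1.0 - gamma)          # u = w^p, and u^(-gamma) du = p dw
    upper = t ** (1.0 - gamma)
    h = upper / n
    total = 0.0
    for i in range(n):
        u = ((i + 0.5) * h) ** p
        total += max(t - u, 0.0) ** (2.0 * alpha)
    return c * c * p * h * total

if __name__ == "__main__":
    alpha, gamma, t = 0.3, 0.4, 2.0
    print(variance_by_quadrature(t, alpha, gamma), t ** (2 * hurst(alpha, gamma)))
```

The two printed numbers should agree up to quadrature error, which illustrates the self-similar scaling of the variance with exponent 2H.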

When \(\gamma =0\), the process X(t) becomes the standard R–L FBM

which has

and the covariance function

It is clear that the GFBM X loses the stationary increment property that the standard FBM \(B^H\) possesses.

Some sample path properties of the GFBM X have been studied. It is shown in [27, Proposition 5.1] and [17, Theorems 3.1 and 4.1] that X has continuous sample paths almost surely, and moreover, is Hölder continuous with parameter \(H-\epsilon \) for \(\epsilon >0\); and the paths of X are non-differentiable if \(\gamma \in (0,1)\) and \((\gamma -1)/2 <\alpha \le 1/2\), and differentiable if \(\gamma \in (0,1)\) and \(1/2<\alpha \le (1+\gamma )/2\), almost surely. In [32], the additional properties of the exact uniform modulus of continuity are studied.

For standard FBM, the Hurst parameter H not only indicates the self-similarity property, but also dictates the short and long-range dependences, that is, \(H \in (0,1/2)\) and \(H\in (1/2,1)\) for short and long-range dependences, respectively. The usual definition of long-range dependence is through the autocovariance functions, namely, letting \(\gamma _s=Cov(Z(t), Z(t+s))\) be the covariance function of a stationary process Z(t) (noting that \(\gamma _s\) is independent of t due to stationarity), one says the process has long-range dependence if \(\sum _{s=-\infty }^\infty \gamma _s =\infty \). However, for processes with non-stationary increments this definition does not apply. In [18], a concept of long-range dependence for self-similar processes (not necessarily stationary) is introduced via the associated Lamperti transform (which turns the non-stationary process into a stationary one). Specifically, for a self-similar process Z(t) with Hurst parameter H and \(Z(0)=0\), the Lamperti transform \(\widetilde{Z}\) is defined by \(\widetilde{Z}(t) = e^{-Ht} Z(e^t)\) for \(t \in \mathbb {R}\), which is strictly stationary with covariance function \(\widetilde{\gamma }_s = \mathbb {E}[\widetilde{Z}(t) \widetilde{Z}(t+s) ] \) for any \(t, s \in \mathbb {R}\). We then say that the process Z has long-range dependence if \(\lim _{t\rightarrow \infty } \frac{1}{t} \log |\widetilde{\gamma }_t| + H >0\). For standard FBM, it can be checked that this condition is equivalent to \(2H-1>0\), that is, \(H>1/2\). It is shown in [18, Proposition 6] that the GFBM has long-range dependence in that sense if and only if \(\alpha >0\). As a special case, when \(\gamma =0\), the FBM \(B^H\) is long-range dependent if \(H=\alpha + 1/2>1/2\). Observe that, for the GFBM, when

the value of the Hurst parameter \(H = \alpha -\gamma /2+1/2\) can take any value in (0, 1). Specifically, for \(0<\alpha < \gamma /2\), \(H\in (0,1/2)\) while for \(\gamma /2<\alpha < (1+\gamma )/2\), \(H \in (1/2,1)\). Our results below on the large deviations of the GFBM and of the fluid queue with the GFBM input assume the parameter range in (2.6).
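The equivalence with \(H>1/2\) claimed above for standard FBM can be verified directly. The following sketch assumes the usual covariance \(\mathbb {E}[B^H(s)B^H(t)]=\frac{1}{2}(s^{2H}+t^{2H}-|t-s|^{2H})\), which is not restated in this section. Taking \(t=0\) in the definition of \(\widetilde{\gamma }\),

$$\begin{aligned} \widetilde{\gamma }_t&= e^{-Ht}\,\mathbb {E}\big [B^H(1)B^H(e^t)\big ] = \frac{1}{2}e^{-Ht}\Big (1+e^{2Ht}-(e^t-1)^{2H}\Big )\\&= \frac{1}{2}e^{-Ht}\Big (1+2He^{(2H-1)t}+O\big (e^{(2H-2)t}\big )\Big ), \quad t\rightarrow \infty . \end{aligned}$$

For \(H>1/2\), the dominant term is \(He^{(H-1)t}\), so \(\frac{1}{t}\log |\widetilde{\gamma }_t|+H\rightarrow 2H-1>0\). For \(H\le 1/2\), \(\widetilde{\gamma }_t\) is of order \(e^{-Ht}\), so the limit equals 0 and the condition fails.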

2.2 Large deviation principle for functionals of BM

Suppose \((S,\mathcal {B}(S))\) is a Polish space with \(\mathcal {B}(S)\) being the Borel \(\sigma \)-algebra of S. Consider a family of S-valued random variables \(\{X^\varepsilon \}_{\varepsilon >0}\), whose corresponding family of probability measures is denoted by \(\mu ^\varepsilon \).

Definition 2.1

The family of S-valued random variables \(\{X^\varepsilon \}_{\varepsilon >0}\) (or the family of probability measures \(\{\mu ^\varepsilon \}_{\varepsilon >0}\)) is said to satisfy a large deviation principle (LDP), if there is a lower semicontinuous function \(I:S\rightarrow [0,\infty ]\) and the following is satisfied:

-

(1)

For every \(A\in \mathcal {B}(S)\),

$$\begin{aligned} -\inf _{x\in A^\circ }I(x)\le \liminf _{\varepsilon \rightarrow 0}\varepsilon \log \mu ^\varepsilon (A)\le \limsup _{\varepsilon \rightarrow 0}\varepsilon \log \mu ^\varepsilon (A)\le -\inf _{x\in {\bar{A}}}I(x), \end{aligned}$$where \(A^\circ \) and \({\bar{A}}\) denote the interior and closure of the measurable set A.

-

(2)

For \(l\ge 0\), \(\{x:I(x)\le l\}\) is a compact set in S.

We refer to I as the rate function and \(\varepsilon \) as the rate.

It is well known that an equivalent way of defining the LDP is given by the result below (see [4, Theorem 1.5 and 1.8]).

Theorem 2.1

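A family of probability measures \(\{\mu ^\varepsilon \}_{\varepsilon >0}\) satisfies an LDP with rate function I and rate \(\varepsilon \) if and only if for every bounded continuous function \(\Phi :S\rightarrow \mathbb {R}\),

$$\begin{aligned} \lim _{\varepsilon \rightarrow 0}-\varepsilon \log \int _S \exp \Big (-\frac{\Phi (x)}{\varepsilon }\Big )\mu ^\varepsilon (dx)=\inf _{x\in S}\left[ I(x)+\Phi (x)\right] , \end{aligned} \tag{2.7}$$

and for every \(l\ge 0\), \(\{x\in S: I(x)\le l\}\) is a compact subset of S.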

The following result is used often in the sections that follow [4, Theorem 1.16].

Theorem 2.2

(Contraction principle) Suppose \((S',\mathcal {B}(S'))\) is another Polish space and \(F:(S,\mathcal {B}(S))\rightarrow (S',\mathcal {B}(S'))\) is a continuous map. If the family \(\{\mu ^\varepsilon \}_{\varepsilon >0}\) satisfies an LDP with rate function I and rate \(\varepsilon \), then the family \(\{\nu ^\varepsilon \doteq \mu ^\varepsilon \circ F^{-1}\}_{\varepsilon >0}\) also satisfies an LDP on \(S'\) with the rate \(\varepsilon \) and the rate function \(I'\) given by

$$\begin{aligned} I'(y)=\inf \big \{I(x): x\in S,\ F(x)=y\big \}, \quad y\in S'. \end{aligned}$$

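One of the main goals of this work is to prove that the family

$$\begin{aligned} \{X^\varepsilon \doteq \varepsilon X\}_{\varepsilon >0} \end{aligned} \tag{2.8}$$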

satisfies the LDP with appropriate rate and rate function for the GFBM X in (2.1). From the existing literature, three common approaches can be used to arrive at the desired result. We briefly describe these approaches and point out the difficulties or lack thereof in adopting these approaches to our case.

2.2.1 Using Gärtner–Ellis theorem [11, Section 4.5.3]

In this approach, we study the logarithm of the moment generating function of the finite-dimensional distributions of \(X^\varepsilon \) and its limiting behavior as \(\varepsilon \rightarrow 0\). It is also required to prove the exponential tightness (see [11, Page 8]) of the process. In contrast, using the weak convergence approach described briefly below, we are only required to show tightness of some appropriate family of processes.

2.2.2 Using LDP of \(\{\varepsilon B\}_{\varepsilon >0}\) and Theorem 2.2

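It is well known that the family of \(\mathcal {C}\)-valued random variables \(\{\varepsilon B\}_{\varepsilon >0}\) satisfies an LDP [11, Theorem 5.2.3] with rate \(\varepsilon ^2\) and rate function \(I_B:\mathcal {C}\rightarrow [0,\infty ]\) given by

$$\begin{aligned} I_B(\xi )={\left\{ \begin{array}{ll} \frac{1}{2}\int _0^T |\dot{\xi }(s)|^2ds, &{} \text {if } \xi \text { is absolutely continuous with } \dot{\xi }\in L^2([0,T]),\\ \infty , &{} \text {otherwise.} \end{array}\right. } \end{aligned}$$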

Remark 2.1

Fix \(b(\varepsilon )\) such that

Suppose an S-valued process A on [0, T] such that \(\{\varepsilon A(\varepsilon ^{-1} \cdot )\}_{\varepsilon >0}\) satisfies an LDP with rate function I and rate \(\varepsilon \) and \(\{\sqrt{\varepsilon } A(\varepsilon ^{-1}\cdot )\}_{\varepsilon >0}\) is weakly convergent to a non-trivial distribution. Then it is of interest to study the asymptotic behavior \(\{b(\varepsilon )\sqrt{\varepsilon } A(\varepsilon ^{-1}\cdot )\}_{\varepsilon >0}\) which is in some sense, in-between the above behaviors. The process \(\{b(\varepsilon )\sqrt{\varepsilon } A(\varepsilon ^{-1}\cdot )\}_{\varepsilon >0}\) is said to satisfy a moderate deviations principle if it satisfies an LDP with some rate function \({\bar{I}}\) and rate \(b(\varepsilon )^2\).

Clearly, both the families \(\{\sqrt{\varepsilon }B\}_{\varepsilon >0}\) and \(\{b(\varepsilon ) B\}_{\varepsilon >0}\) satisfy LDP with same rate function \(I_B\) and rates \(\varepsilon \) and \(b(\varepsilon )^2\), respectively. But the LDP of \(\{b(\varepsilon )B\}\) can be framed as the MDP by noting that the laws of \(\{b(\varepsilon )\sqrt{\varepsilon }B(\cdot \varepsilon ^{-1})\}\) and \(\{b(\varepsilon ) B\}\) are equal. In other words, the rate functions corresponding to LDP and MDP are the same. It is just the rates that change accordingly. Since GFBM X as defined in (2.1) is a linear function of Brownian motion B, similar comments can be made for X. Hence, without loss of generality, we just consider the large deviation behavior as the driving noise in our case is a Brownian motion.
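As a concrete instance of how Theorem 2.2 combines with the rate function of Brownian motion, consider the evaluation map \(F(\xi )=\xi (T)\), which is continuous from \(\mathcal {C}\) to \(\mathbb {R}\). The sketch below assumes the standard forms \(I_B(\xi )=\frac{1}{2}\int _0^T|\dot{\xi }(s)|^2ds\) for absolutely continuous \(\xi \) and \(I'(y)=\inf \{I(x):F(x)=y\}\); it is an illustration and is not used later.

$$\begin{aligned} I'(y)=\inf \Big \{\frac{1}{2}\int _0^T|\dot{\xi }(s)|^2ds:\ \xi (0)=0,\ \xi (T)=y\Big \}=\frac{y^2}{2T}. \end{aligned}$$

Indeed, by the Cauchy–Schwarz inequality, \(y^2=\big (\int _0^T\dot{\xi }(s)ds\big )^2\le T\int _0^T|\dot{\xi }(s)|^2ds\), with equality for the straight line \(\xi (t)=yt/T\). This agrees with the Gaussian tail of \(\sqrt{\varepsilon }B(T)\), whose variance is \(\varepsilon T\).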

Consider a \(\mathcal {C}\)-valued process defined by \(Y^\varepsilon \doteq F(\varepsilon B)\), for a continuous function \(F:\mathcal {C}\rightarrow \mathcal {C}\). Using Theorem 2.2, we can conclude that \(\{Y^\varepsilon \}_{\varepsilon >0}\) satisfies an LDP with rate \(\varepsilon ^2\) and rate function \(I_Y:\mathcal {C}\rightarrow [0,\infty ]\) given by

$$\begin{aligned} I_Y(y)=\inf \big \{I_B(\xi ): \xi \in \mathcal {C},\ F(\xi )=y\big \}. \end{aligned}$$

This approach was used in [6, Theorem 3.1] to prove the LDP of the standard FBM:

It can be checked that F as defined above is a continuous map from \(\mathcal {C}\) to \(\mathcal {C}\). (In fact, a more general class of processes are considered in [6] where the Brownian motion B is replaced by any process with stationary increments satisfying an LDP.) Unfortunately, we cannot adopt this method to our case as the map defined by

fails to be continuous from \(\mathcal {C}\) to \(\mathcal {C}\). This is mainly due to the presence of the term \(s^{-\frac{\gamma }{2}}\) in the integral and without having strong decaying behavior of \(\xi (s)\) as \(s\rightarrow 0\), the above integral may not be well-defined. Indeed, we consider the following: Fix \(\gamma \in (0,1)\) and choose \(\xi \in \mathcal {C}\) such that \(\xi (s)= s^{\beta }\) on \([0,\delta _1]\), with \(0<\delta _1<t\) and \(0<\beta <\frac{\gamma }{2}\). This choice is sufficient to illustrate the effect of \(s^{-\frac{\gamma }{2}}\), although \(\xi \) with a more general form can also be considered. With the above choice of \(\xi \), we have for any \(0<\delta<\delta _1<t\),

It is easy to see that the set of all functions \(\xi \in \mathcal {C}\) satisfying the above property form an open set in \(\mathcal {C}\). Therefore, we can conclude that the map G is not well defined on at least an open set of \(\mathcal {C}\). In other words, we cannot use Theorem 2.2 on the map G. However, we note that the rate function corresponding to \(Y^\varepsilon \) is obtained from the rate function corresponding to \(X^\varepsilon \) by directly evaluating it as \(\gamma =0\). Compare [6, Theorem 3.1] and Theorem 3.1.

2.2.3 Using weak convergence approach [4, Section 3.2]

This approach can be used to study the large deviation behavior of any \(\mathcal {C}\)-valued family of random variables defined as \(\{Z^\varepsilon \doteq R(\varepsilon B) \}\), where \(R:\mathcal {C}\rightarrow \mathcal {C}\) is Borel measurable. The key tool used in this approach is the following variational representation of exponential functionals of Brownian motion B.

Theorem 2.3

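[3, Theorem 3.1] For a bounded Borel measurable functional \(\Psi :\mathcal {C}\rightarrow \mathbb {R}\),

$$\begin{aligned} -\log \mathbb {E}\left[ \exp \big (-\Psi (B)\big )\right] =\inf _{v\in \mathcal {A}}\mathbb {E}\left[ \frac{1}{2}\int _0^T v(s)^2ds+\Psi \Big (B+\int _0^\cdot v(s)ds\Big )\right] . \end{aligned} \tag{2.10}$$

Here, \(\mathcal {A}\) is the set of \(\mathcal {F}_t\)-progressively measurable processes \(v(\cdot )\) such that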

In what follows, we sometimes refer to elements of \(\mathcal {A}\) as controls. Using the above result, we are set to prove the LDP of \(Z^\varepsilon =R(\varepsilon B)\) in the following way.

For \(\varepsilon >0\) and any bounded continuous function \(\Phi :\mathcal {C}\rightarrow \mathbb {R}\), we first rewrite (2.10) by choosing \(\Psi (B)= \varepsilon ^{-2}\Phi \circ R(\varepsilon B)= \varepsilon ^{-2}\Phi (Z^\varepsilon )\) and defining \(Z^{\varepsilon ,v}\doteq R(\varepsilon B +\int _0^\cdot v(s)ds)\):

To get the final equality, we re-defined \(\varepsilon v\) as v. Note that this does not change the right-hand side. To prove the LDP for \(\{Z^\varepsilon \}_{\varepsilon >0}\), we now work with the expression on the left hand side above. Note that this resembles the left-hand side of (2.7) without the limit.
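For the reader's convenience, the substitution just described can be sketched as follows. This assumes that the variational representation in Theorem 2.3 takes the standard Boué–Dupuis form \(-\log \mathbb {E}[e^{-\Psi (B)}]=\inf _{v\in \mathcal {A}}\mathbb {E}[\frac{1}{2}\int _0^Tv(s)^2ds+\Psi (B+\int _0^\cdot v(s)ds)]\). Applying it with \(\Psi (B)=\varepsilon ^{-2}\Phi (R(\varepsilon B))\) and multiplying by \(\varepsilon ^2\) gives

$$\begin{aligned} -\varepsilon ^2\log \mathbb {E}\left[ \exp \big (-\varepsilon ^{-2}\Phi (Z^\varepsilon )\big )\right]&=\inf _{v\in \mathcal {A}}\mathbb {E}\left[ \frac{\varepsilon ^2}{2}\int _0^Tv(s)^2ds+\Phi \Big (R\Big (\varepsilon B+\varepsilon \int _0^\cdot v(s)ds\Big )\Big )\right] \\&=\inf _{v\in \mathcal {A}}\mathbb {E}\left[ \frac{1}{2}\int _0^Tv(s)^2ds+\Phi \big (Z^{\varepsilon ,v}\big )\right] , \end{aligned}$$

where the last line renames \(\varepsilon v\) as v. This is legitimate because \(\mathcal {A}\) is closed under multiplication by positive constants.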

Using Theorem 2.1, to conclude that \(\{Z^\varepsilon \}_{\varepsilon >0}\) satisfies LDP, it remains to show that

-

(1)

the expression in (2.11) has a limit;

-

(2)

this limit is equal to

$$\begin{aligned} \inf _{x\in \mathcal {C}}\left[ I(x)+ \Phi (x)\right] , \end{aligned}$$for some lower semi-continuous function \(I:\mathcal {C}\rightarrow [0,\infty ]\) with compact level sets.

To this end, we require the following lemma [4, Page 62] which states that there are nearly optimal controls of the right-hand side in (2.11) which are almost surely finite in \(L^2([0,T])\) norm.

Lemma 2.1

For every \(\delta >0\), there is \(M<\infty \) such that

for every \(\delta >0\). Here, \(\mathcal {A}_{b,M}\) is a subset of \(\mathcal {A}\) that contains \(v\in \mathcal {A}\) such that \(\int _0^Tv(s)^2ds\le M,\quad \mathbb {P}-\) a.s.

In the above, the maps F and R are chosen to be \(\mathcal {C}\)-valued for simplicity. They are allowed to take values in any Polish space.

3 LDP for the generalized R–L FBM

In this section, we prove the LDP result of the process \(\{X^\varepsilon \}_{\varepsilon >0}\) in (2.8).

Theorem 3.1

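Assuming that \((\alpha ,\gamma )\) satisfy (2.6), \(\{X^\varepsilon \}_{\varepsilon >0}\) satisfies an LDP with rate \(\varepsilon ^2\) and rate function \(I_X:\mathcal {C}\rightarrow [0,\infty ]\) given by

$$\begin{aligned} I_X(\xi )\doteq \inf _{v\in \mathcal {S}_\xi }\frac{1}{2}\int _0^T v(s)^2ds. \end{aligned} \tag{3.1}$$

Here \(\mathcal {S}_\xi \), for \(\xi \in \mathcal {C}\), is the collection of all \(v\in L^2([0,T])\) such that

$$\begin{aligned} \xi (t)=c\int _0^t (t-s)^{\alpha }s^{-\frac{\gamma }{2}}v(s)ds, \quad t\in [0,T]. \end{aligned}$$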

Remark 3.1

This result for the case where \(\gamma =0\) can be obtained as a special case of [6, Theorem 3.1]. In the above theorem, we get the rate function in an implicit form. This is not a consequence of the \(s^{-\frac{\gamma }{2}}\) term in the definition of \(X(\cdot )\), but because of the \((t-s)^\alpha \) term. To see this, one can take \(\alpha =0\) and proceed with the same proof. The rate function in this case turns out to be

whenever \(\xi \) is absolutely continuous on [0, T] and \(\infty \), otherwise. Note that the hypothesis of the above theorem assumes \(\alpha >0\), but this will not be an issue in adopting the same proof.
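The computation behind the case \(\alpha =0\) can be sketched as follows, assuming that the constraint set \(\mathcal {S}_\xi \) consists of those \(v\in L^2([0,T])\) with \(\xi (t)=c\int _0^t(t-s)^{\alpha }s^{-\gamma /2}v(s)ds\). With \(\alpha =0\) the constraint can be differentiated, so the control is determined uniquely by \(\xi \):

$$\begin{aligned} \dot{\xi }(s)=c\,s^{-\frac{\gamma }{2}}v(s)\quad \Longrightarrow \quad v(s)=\frac{s^{\frac{\gamma }{2}}\dot{\xi }(s)}{c},\qquad \frac{1}{2}\int _0^Tv(s)^2ds=\frac{1}{2c^2}\int _0^T s^{\gamma }\,\dot{\xi }(s)^2ds. \end{aligned}$$

For \(\alpha >0\), the kernel \((t-s)^{\alpha }\) prevents this pointwise inversion, which is why the rate function is left as an infimum.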

Remark 3.2

This result is used repeatedly in the sections that follow. The techniques of the proof break down as \(\gamma \rightarrow 1\). This is mainly because the process

is not well defined, \(\mathbb {P}-\) a.s.

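Define, for \(v\in \mathcal {A}\),

$$\begin{aligned} X^{\varepsilon ,v}(t)\doteq \varepsilon X(t)+c\int _0^t (t-s)^{\alpha }s^{-\frac{\gamma }{2}}v(s)ds, \quad t\in [0,T]. \end{aligned} \tag{3.2}$$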

This process will be used in the following two lemmas.

Lemma 3.1

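For any bounded continuous function \(\Phi :\mathcal {C}\rightarrow \mathbb {R}\),

$$\begin{aligned} \liminf _{\varepsilon \rightarrow 0}-\varepsilon ^2\log \mathbb {E}\left[ \exp \big (-\varepsilon ^{-2}\Phi (X^\varepsilon )\big )\right] \ge \inf _{\xi \in \mathcal {C}}\left[ I_X(\xi )+\Phi (\xi )\right] , \end{aligned}$$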

with \(I_X\) as defined in the statement of Theorem 3.1.

Proof

Fix \(\delta >0\). From Lemma 2.1, we have

for every \(\delta >0\). Recall that \(\mathcal {A}_{b,M}\) is a subset of \(\mathcal {A}\) that contains \(v\in \mathcal {A}\) such that \(\int _0^Tv(s)^2ds\le M,\; \mathbb {P}-\text {a.s.}\)

Now consider a \(\delta \)-optimal control \(v^\varepsilon \in \mathcal {A}_{b,M}\) to the above infimum, that is, \(v^\varepsilon \) satisfies

Since \(\int _0^T v^\varepsilon (s)^2ds \le M\), \(\{v^\varepsilon \}_{\varepsilon >0}\) is weakly compact in \(L^2([0,T])\), i.e., there exists a subsequence \(\varepsilon _n\) and a \(v\in L^2([0,T])\) such that \(\int _0^T v^{\varepsilon _n}(s)u(s)ds\rightarrow \int _0^T v(s) u(s)ds \), for every \(u\in L^2([0,T])\).
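The convergence obtained here is only weak, not in norm, which is why the later steps pair \(v^{\varepsilon _n}\) against fixed square-integrable kernels. As a hedged illustration that is not part of the proof, the Python sketch below uses the hypothetical bounded family \(v_k(s)=\sqrt{2}\sin (k\pi s)\) on [0, 1]: its pairings with a fixed \(u\in L^2([0,1])\) tend to zero while its \(L^2\) norm stays equal to one.

```python
import math

def inner_product(f, g, T=1.0, n=20000):
    """Midpoint-rule approximation of int_0^T f(s) g(s) ds."""
    h = T / n
    return h * sum(f((i + 0.5) * h) * g((i + 0.5) * h) for i in range(n))

def v(k):
    """Hypothetical control v_k(s) = sqrt(2) sin(k pi s), with unit L^2([0,1]) norm."""
    return lambda s: math.sqrt(2.0) * math.sin(k * math.pi * s)

def u(s):
    """A fixed test function in L^2([0,1])."""
    return s

frequencies = (1, 10, 100)
pairings = [inner_product(v(k), u) for k in frequencies]      # tend to 0
squared_norms = [inner_product(v(k), v(k)) for k in frequencies]  # stay at 1

if __name__ == "__main__":
    for k, p, q in zip(frequencies, pairings, squared_norms):
        print(k, p, q)
```

The pairings decay like \(\sqrt{2}/(k\pi )\) in absolute value, so \(v_k\) converges weakly to 0 although it does not converge in norm.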

For now, let us assume that the family of \(\mathcal {C}\times L^2([0,T])\) - valued random variables \(\{(X^{\varepsilon ,v^\varepsilon },v^\varepsilon )\}_\varepsilon \) is tight. Let \(\varepsilon _n\) be the converging subsequence with \(({\bar{X}}^v, v)\) as the corresponding weak limit and write \((X^{\varepsilon _n,v^{\varepsilon _n}},v^{\varepsilon _n})\) as \((X^n,v^n)\) when there is no ambiguity. From the Skorohod representation theorem, we have a probability space \(\left( \Omega ^*,\mathcal {F}^*,\mathbb {P}^*\right) \) in which

and the distributions of B, \(\{X^n\}\), \(\{v^n\}\), \({\bar{X}}^v\) and v remain the same under \(\mathbb {P}^*\) and \(\mathbb {P}\). We have

Here the second inequality follows from Fatou’s lemma. From the arbitrariness of \(\delta \), we have the result. The construction of \((\Omega ^*,\mathcal {F}^*,\mathbb {P}^*)\) is necessary to characterize the limit points \(({\bar{X}}^v, v)\).

It now remains to show that \(\{(X^{\varepsilon ,v^\varepsilon },v^\varepsilon )\}_{\varepsilon >0}\) is in fact tight in \(\mathcal {C}\times L^2([0,T])\). To that end, note that \(\{v^{\varepsilon }\}_{\varepsilon >0}\) is precompact in \(L^2([0,T])\) under the weak\(^*\) topology, since any closed ball is compact in \(L^2([0,T])\) under the weak\(^*\) topology and \(\int _0^Tv^{\varepsilon }(s)^2ds\le M\). Let \(\varepsilon _n\) (denoted simply by n) be the converging subsequence and v be the corresponding limit. Note that we have only concluded that the laws of \(v^n\) converge weakly to the law of v. From the Skorohod representation theorem, we can infer that

Finally, we show that \(X^{\varepsilon _n,v^{\varepsilon _n}}\) (written as \(X^n\)) converges almost surely in \(\mathcal {C}\) and also characterize the limit. Note that

Recall that

and from the \(\mathbb {P}^*-\) a.s. convergence of \(\{v^n\}\), we know that for any \(u\in L^2([0,T])\),

And since \( (t-s)^{\alpha }s^{-\frac{\gamma }{2}}\in L^2([0,T])\), for every \(t\in [0,T]\), we have

Consider the following: for \(1>h>0\), \(\mathbb {P}^*-\) a.s., we have

where

and the last inequality follows since \(\alpha >0\) and \(0\le \gamma <1\). In the above, we have used the fact that

are uniformly bounded in n. To summarize, we have proved that \(X^n_2\) is \(\alpha \)-Hölder continuous, \(\mathbb {P}^*-\) a.s. \(X^n_2\) is clearly uniformly bounded in n. Indeed, from (3.3) (note that this is valid for every \(0\le h\le T\)) with \(t=0\),

Since \(\{X^n_2\}\) is uniformly bounded and equicontinuous in \(\mathcal {C}\), \(\mathbb {P}^*-\) a.s., the Arzelà-Ascoli theorem gives us the precompactness of \(\{X^n_2\}\), \(\mathbb {P}^*-\) a.s.

We now show that any limit point of \(\{X^n_2\}\) is given by

In other words, \(\{X^n_2\}\) is convergent in \(\mathcal {C}\), \(\mathbb {P}^*-\) a.s. To show this, for \(t\in [0,T]\), we have

since \(v^n\rightarrow v\), \(\mathbb {P}^*-\) a.s.

We now shift our focus on to \(X^n_1\). Note that from [2, Theorem 1.6], for every \(\delta >0\), there is a compact set \(\textsf{K}_\delta \subset \mathcal {C}\) such that \(\mathbb {P}(X\in \textsf{K}_\delta )>1-\delta \). For every n,

To understand the second inequality, note that for every compact set \(\textsf{K}\subset \mathcal {C}\), from the Arzelà-Ascoli theorem, there are two parameters that correspond to \(\textsf{K}\): C, the uniform bound in n of the \(\Vert .\Vert _\infty \) norm and \(\rho (\cdot )\), the modulus of continuity of the elements in \(\textsf{K}\). Checking the following parameters for \(\{\varepsilon _n X\}\), we can clearly see that C and \(\rho (\cdot )\) can be used to conclude the uniform boundedness and equicontinuity of \(\{\varepsilon _n X\}\). Hence, \(\{\varepsilon _n X\in \varepsilon _n \textsf{K}\}\subset \{\varepsilon _n X\in \textsf{K}\}\). Therefore, \(\{\varepsilon _n X\}\) is tight in \(\mathcal {C}\). This completes the proof of the lemma. \(\square \)

Lemma 3.2

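For any bounded continuous function \(\Phi :\mathcal {C}\rightarrow \mathbb {R}\),

$$\begin{aligned} \limsup _{\varepsilon \rightarrow 0}-\varepsilon ^2\log \mathbb {E}\left[ \exp \big (-\varepsilon ^{-2}\Phi (X^\varepsilon )\big )\right] \le \inf _{\xi \in \mathcal {C}}\left[ I_X(\xi )+\Phi (\xi )\right] , \end{aligned} \tag{3.4}$$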

where \(I_X\) is as defined in the statement of Theorem 3.1.

Proof

Choose a \(\delta \)-optimal \(x^*\in \mathcal {C}\) of the right-hand side of (3.4), i.e.,

and also choose a \(\delta \)-optimal \(v^*\in \mathcal {S}_{x^*}\), i.e.,

We note here that \(v^*\) is non-random, from the definition of \(\mathcal {S}_{x^*}\), as \(x^*\) is non-random. Now by (2.12), we obtain

To proceed further, recall the fact from the proof of Lemma 3.1 that \(X^{\varepsilon ,v^*}(\cdot )\) converges weakly to

which is non-random. Since \(v^*\in \mathcal {S}_{x^*}\),

Thus we obtain

Here the first inequality follows from the last display in (3.5) by applying the continuous mapping theorem and the weak convergence of \(X^{\varepsilon ,v^*}(\cdot )\) to \(X^{0,v^*}(\cdot )\), and the second inequality follows since \(X^{0,v^*}\) is non-random. From the arbitrariness of \(\delta \), we have the result. \(\square \)

Lemma 3.3

For every \(l\ge 0\), \(\{x\in \mathcal {C}:I_X(x)\le l\}\) is compact in \(\mathcal {C}\).

Proof

Fix \(l\ge 0\) and consider a sequence \(\{\xi _n\}_{n\in \mathbb {N}}\subset \{\xi :I_X(\xi )\le l\}\). Now, for every \(n\in \mathbb {N}\), there exists \(v_n\in \mathcal {S}_{\xi _n}\) such that

Therefore, \(\{v_n\}_{n\in \mathbb {N}}\) is precompact in \(L^2([0,T])\) under weak\(^*\) topology. Denote the converging subsequence again by n and the limit by \({\bar{v}}\).

Consider

From the proof of Lemma 3.1, it is clear that \(\{\xi _n\}_{n\in \mathbb {N}}\) is precompact in \(\mathcal {C}\). Let \({\bar{\xi }}\) be a sequential limit of \(\{\xi _n\}\) along a subsequence, which we again denote by n. Also, we have

Clearly, \({\bar{v}}\in \mathcal {S}_{{\bar{\xi }}}\) and \(I_X({\bar{\xi }})\le \frac{1}{2}\int _0^T {\bar{v}}(s)^2ds\le l\). Hence, \({\bar{\xi }}\in \{\xi :I_X(\xi )\le l\}\). This proves the desired result. \(\square \)

Proof of Theorem 3.1

Combining Lemmas 3.1, 3.2 and 3.3, it is clear that from Theorem 2.1, we have the LDP of \(\{X^\varepsilon \}_{\varepsilon >0}\). \(\square \)
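To illustrate how the variational form of the rate function can be used, the following Python sketch evaluates the path driven by a given deterministic control and the cost of that control. It assumes that \(I_X(\xi )\) is the infimum of \(\frac{1}{2}\int _0^Tv(s)^2ds\) over controls v with \(\xi (t)=c\int _0^t(t-s)^{\alpha }s^{-\gamma /2}v(s)ds\), so the cost of any admissible control is an upper bound for \(I_X(\xi )\). For a constant control \(v\equiv a\), the Beta integral gives \(\xi (t)=c\,a\,\textrm{B}(1-\gamma /2,\alpha +1)\,t^{H+1/2}\). All function names are hypothetical, and the quadrature assumes \(\alpha \ge 0\).

```python
import math

def beta_function(a, b):
    """Euler Beta function B(a, b) via the Gamma function."""
    return math.gamma(a) * math.gamma(b) / math.gamma(a + b)

def path_closed_form(t, a, c, alpha, gamma):
    """Path driven by the constant control v = a (closed form via the Beta integral)."""
    return c * a * beta_function(1.0 - gamma / 2.0, alpha + 1.0) * t ** (alpha + 1.0 - gamma / 2.0)

def path_by_quadrature(t, v, c, alpha, gamma, n=20000):
    """Midpoint rule for c * int_0^t (t-s)^alpha s^(-gamma/2) v(s) ds.

    The substitution w = s^(1 - gamma/2) removes the singularity at s = 0.
    Assumes alpha >= 0 and 0 <= gamma < 1.
    """
    q = 1.0 - gamma / 2.0
    p = 1.0 / q                      # s = w^p, and s^(-gamma/2) ds = p dw
    h = (t ** q) / n
    total = 0.0
    for i in range(n):
        s = ((i + 0.5) * h) ** p
        total += max(t - s, 0.0) ** alpha * v(s)
    return c * p * h * total

def control_cost(v, T, n=20000):
    """Midpoint rule for (1/2) int_0^T v(s)^2 ds, an upper bound for the rate function."""
    h = T / n
    return 0.5 * h * sum(v((i + 0.5) * h) ** 2 for i in range(n))

if __name__ == "__main__":
    a, c, alpha, gamma, T = 1.5, 1.0, 0.3, 0.4, 2.0
    print(path_closed_form(T, a, c, alpha, gamma),
          path_by_quadrature(T, lambda s: a, c, alpha, gamma),
          control_cost(lambda s: a, T))
```

In this example the path \(\xi (t)\) proportional to \(t^{H+1/2}\) has rate at most \(a^2T/2\); whether the constant control is optimal for that path is not claimed.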

The following result gives the expression for the rate function \(I_X\) at \(\xi \) explicitly in terms of \(\xi \), rather than as an optimal value to an optimization problem.

Lemma 3.4

Suppose \(\mathcal {L}[f]\) denotes the Laplace transform of f, whenever it is defined. Then,

whenever \(\xi \) is absolutely continuous on [0, T].

Proof

To begin with, we consider \({\bar{u}}\in L^2([0,\infty ))\) such that \(s^{-\frac{\gamma }{2}}{\bar{u}}\in L^2([0,\infty ))\). Now define a continuous function \({\bar{\xi }}\) on \([0,\infty )\) in the following way:

Recall that the Laplace transform of a function f on \([0,\infty )\) is defined as

whenever the integral is finite. Since \(|{\bar{\xi }}(t)|\le Ct^{1+\alpha }\), for some \(C>0\), then \(\mathcal {L}[{\bar{\xi }}]\) is well defined. We are now in a position to consider the Laplace transform of \({\bar{\xi }}\). We have

Therefore,

Now, suppose the inverse Laplace transform \(\mathcal {L}^{-1}\) of the right hand side above exists. Then,

where \(\mathcal {L}^{-1}[F(p)](t)\) is defined (see [8, Page 42]) as

whenever F(p) is analytic for \(\Re (p)>\eta \). Since \(|{\bar{\xi }}(t)|<Ct^{1+\alpha }\), we have the following:

From [8, Section 2.1], \(\mathcal {L}[{\bar{\xi }}](p)\) is analytic for \(\Re (p)>0\). Therefore, \(|p^{\alpha +1}\mathcal {L}[{\bar{\xi }}](p)|\le C_1|p|^\alpha \) and \(p^{\alpha +1}\mathcal {L}[{\bar{\xi }}](p)\) is analytic for \(\Re (p)>0\). Hence, taking \(c>0\) in (3.7) gives a convergent integral. In other words, the definition in (3.7) is well defined for \(c>0\) and the inverse Laplace transform of \(p^{\alpha +1 } \mathcal {L}[{\bar{\xi }}](p)\) exists. To summarize, we have our desired result in (3.6). \(\square \)
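As a quick illustration of why the growth bound \(|{\bar{\xi }}(t)|\le Ct^{1+\alpha }\) makes \(\mathcal {L}[{\bar{\xi }}]\) well defined, the following sketch combines it with the Gamma integral. It uses only the standard identity \(\int _0^\infty e^{-at}t^{b}dt=\Gamma (b+1)/a^{b+1}\) for \(a>0\), \(b>-1\), and is meant as a sanity check rather than as part of the proof.

```latex
\[
\big|\mathcal{L}[\bar{\xi}](p)\big|
\le \int_0^\infty e^{-\Re(p)\,t}\,|\bar{\xi}(t)|\,dt
\le C\int_0^\infty e^{-\Re(p)\,t}\,t^{1+\alpha}\,dt
= \frac{C\,\Gamma(2+\alpha)}{\Re(p)^{2+\alpha}}
< \infty,
\qquad \Re(p)>0 .
\]
```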

4 LDP for fluid queues with GFBM input

The main content of this section is the study of LDPs in the context of a stochastic fluid queue with GFBM input. In particular, we focus our attention on two processes, the workload process and the running maximum process, both of which are defined below.

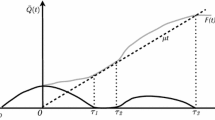

We consider a stochastic fluid queue with the GFBM X in (2.1) as the arrival process, and a deterministic service rate \(k>0\). In particular, the workload process V(t) (assuming that \(V(0)=0\)) is given by

We also define another process that is closely related to V(t), namely the running maximum process

Recall that for a stationary input process X (stationary increments), the workload process V(t) in (4.1) has the same distribution as the following:

This equivalent-in-distribution expression is often used to derive the stationary distribution of V(t) as \(t\rightarrow \infty \) (we defer the analysis of steady state of V(t) to Sect. 5). It can be shown that for an input process with stationary increments, it is also equivalent in distribution to the running maximum process M(t). However, this approach does not apply to the queueing process with GFBM input, since it has non-stationary increments.
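For intuition, both processes can be computed on a discretized path. The following is a minimal Python sketch, assuming the standard reflection-map forms \(V(t)=\sup _{0\le s\le t}\{(X(t)-X(s))-k(t-s)\}\) and \(M(t)=\sup _{0\le s\le t}\{X(s)-ks\}\) with \(X(0)=0\). The function name and the sample path are hypothetical and serve only as an illustration.

```python
def workload_and_running_max(x, k, dt):
    """Discrete workload V and running maximum M for an input path x.

    x  : samples of the input path at times 0, dt, 2*dt, ... (x[0] == 0 assumed)
    k  : constant service rate
    dt : grid spacing
    """
    # Net input ("free") process X(t) - k t on the grid.
    free = [xi - k * i * dt for i, xi in enumerate(x)]
    v, m = [], []
    run_min = float("inf")
    run_max = float("-inf")
    for f in free:
        run_min = min(run_min, f)
        run_max = max(run_max, f)
        # Reflection map: free(t) - min_{s<=t} free(s)
        #   = sup_{s<=t} [(X(t) - X(s)) - k (t - s)].
        v.append(f - run_min)
        # Running maximum of the free process.
        m.append(run_max)
    return v, m


# Illustrative (hypothetical) path; the numbers carry no meaning.
path = [0.0, 1.0, 0.5, 2.0]
V, M = workload_and_running_max(path, k=1.0, dt=0.5)
print(V, M)
```

The two outputs generally differ path by path, which is consistent with the remark above that their laws need not coincide when the increments are non-stationary.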

In [6], as a special case of [6, Theorem 4.1], the authors have studied the LDP for the workload process V(t) with the FBM process Y in (2.9) as the input, and proved that \( F(\varepsilon Y)(T)\) satisfies an LDP with rate \(\varepsilon ^2\) and an appropriate rate function. (In fact, their result applies to a more general process for Y in (2.9) with the Brownian motion B being replaced by a stationary process satisfying an LDP.) It is well known that the map \(F:\mathcal {C}\rightarrow \mathcal {C}\) (reflection mapping) is continuous (see, e.g., [7, Chapter 6], [33, Chapter 13.5]). Therefore, we can apply the contraction principle and obtain the sample path LDP for the process \(\{ F(\varepsilon X)(t): t\ge 0\}\). In the following, we study the LDP of \( F(\varepsilon X)(T)\) at a fixed time T, for which the rate function can be characterized explicitly.

Let

Theorem 4.1

Assume that \((\alpha ,\gamma )\) satisfy (2.6). \(\{ V^\varepsilon \}\) satisfies an LDP with rate \(\varepsilon ^2\) and rate function \(I_V:\mathbb {R}_+\rightarrow [0,\infty ]\),

Moreover, for \(\lambda \ge 0\), we have

where

and

Proof

From the continuity of the map \(F:\mathcal {C}\rightarrow \mathcal {C}\) and Theorem 2.2, we know that \( V^\varepsilon \) satisfies the LDP with rate \(\varepsilon ^2\) and rate function \(I_V:\mathbb {R}_+\rightarrow [0,\infty ]\) given in (4.4).

The proof for the result in (4.5) follows exactly along the lines of the proof of [6, Theorem 4.1]. We adapt that proof for our process. From the LDP of \(\{ V^\varepsilon \}\) and Theorem 2.1, we know that for any Borel set \(A\subset \mathbb {R}_+\),

For \(\lambda \ge 0\), taking \(A=[\lambda ,\infty )\), we have

To prove (4.5), it suffices to show that

Since

is continuous in \(\lambda \), proving that

automatically implies that

Therefore, we only show (4.8).

The left-hand side of (4.8) can be rewritten as

where,

Clearly,

with

Then,

The infimum inside can be solved explicitly using [6, Lemma 3.3 (ii)]. We then get

and the minimizer is given as follows:

This proves the result. \(\square \)

We now prove an LDP for the running maximum process \(M(\cdot )\). Define \(M^\varepsilon \) by

Lemma 4.1

Assume that \((\alpha ,\gamma )\) satisfy (2.6). \(\{M^\varepsilon \}\) satisfies an LDP with rate \(\varepsilon ^2\) and rate function \(I_M:\mathbb {R}_+\rightarrow [0,\infty ]\) given by

Moreover, we have

where

Proof

The proof for the result in (4.9) follows exactly along the lines of the proof of [6, Corollary 3.4]. We adapt that proof for our process. From the LDP of \(\{ M^\varepsilon \}\) and Theorem 2.1, we know that for any Borel set \(A\subset \mathbb {R}_+\),

For \(\lambda \ge 0\), taking \(A=[\lambda ,\infty )\), we have

To prove (4.9), it suffices to show that

Since

is continuous in \(\lambda \), proving that

automatically implies that

Therefore, we only show (4.11).

The left-hand side of (4.11) can be rewritten as

where

Clearly,

with

Then,

The infimum inside on the right-hand side can be solved explicitly using [6, Lemma 3.3 (i)]. We then get

and the minimizer is given as follows:

Therefore,

This proves the result. \(\square \)

4.1 Alternative proofs of Theorem 4.1 and Lemma 4.1 using Landau–Marcus–Shepp Asymptotics

In the proofs of Theorem 4.1 and Lemma 4.1, we have used the large deviation asymptotics of \(X^\varepsilon =\varepsilon X\) in Theorem 3.1. Alternatively, these proofs can also be given by using a straightforward application of the well-known Landau–Marcus–Shepp asymptotics [24, Equation (1.1)] which is given as follows. For \(T>0\), suppose \(\{G_t:0\le t\le T\}\) is a centered Gaussian process. Then we have

where \(\sigma ^2\doteq \sup _{0\le s\le T} \mathbb {E}[G_s^2]\).
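As a sanity check, the asymptotics can be verified in closed form for standard Brownian motion on [0, T]. The sketch below assumes that (4.12) takes the usual logarithmic form \(\lim _{\lambda \rightarrow \infty }\lambda ^{-2}\log \mathbb {P}(\sup _{0\le t\le T}G_t>\lambda )=-\frac{1}{2\sigma ^2}\), and uses the reflection principle \(\mathbb {P}(\sup _{0\le s\le T}B(s)>\lambda )=\mathrm {erfc}(\lambda /\sqrt{2T})\), for which \(\sigma ^2=T\). The function names are hypothetical.

```python
import math


def log_tail_sup_bm(lam, T):
    """log P(sup_{0<=s<=T} B(s) > lam), exact via the reflection principle."""
    return math.log(math.erfc(lam / math.sqrt(2.0 * T)))


def lms_ratio(lam, T):
    """lam^{-2} * log P(sup B > lam); should approach -1/(2T) as lam grows."""
    return log_tail_sup_bm(lam, T) / lam ** 2


T = 2.0
limit = -1.0 / (2.0 * T)  # sigma^2 = sup_s E[B(s)^2] = T
for lam in (5.0, 10.0, 20.0, 40.0):
    print(lam, lms_ratio(lam, T), limit)
```

The ratio approaches the limit slowly (the correction is of order \(\log \lambda /\lambda ^2\)), which is typical of logarithmic asymptotics of this kind.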

To apply (4.12) in Theorem 4.1 and Lemma 4.1 (below, we only illustrate how to prove (4.5) using (4.12), as the other case follows along exactly the same lines), we make the following observation:

Since \(\frac{X(T)-X(s)}{\lambda + k(T-s)}\) is a centered Gaussian process, from (4.12), we have

where

In the last equality, we have used (2.2), and \(v_1\) and \(v_2\) are defined in (4.6), (4.7). This gives (4.5).

Even though using (4.12) gives us a shorter proof of Theorem 4.1 and Lemma 4.1, we believe that using the LDP (Theorem 3.1) of \(\{\varepsilon X\}_{\varepsilon >0}\) is a more general and robust approach. To see this, we note that (4.12) can be obtained as a consequence of the LDP for a general Gaussian process (see [1, Pages 53 and 57]).

5 Long-time behavior of \(M(\cdot )\) and \(V(\cdot )\)

In this section, we first study the existence of the steady state for the processes M and V. Upon showing the existence, we derive the tail asymptotics of the steady state \(M^*\) and \(V^*\) of M(t) and V(t), respectively.

We now briefly describe the method that is adopted.

(1) We first derive a certain type of maximal inequalities and modulus of continuity estimates for the GFBM process X(t) in Lemmas 5.3 and 5.4. Using these and exploiting the self-similarity of X (Lemma 5.5), we establish (uniform in time) sub-exponential tail bounds of M(t) and V(t) for each fixed \(t>0\).

(2) We next prove the existence of a weak limit of the laws of V(t) as \(t\rightarrow \infty \), and the almost sure convergence of M(t) as \(t\rightarrow \infty \). Then using the LDPs of \(\{M^\varepsilon \}_{\varepsilon >0}\) and \(\{V^\varepsilon \}_{\varepsilon >0}\), we derive the tail asymptotics of \(M^*\) and \(V^*\) (a weak limit point of \(\{V(t)\}_{t\in \mathbb {R}_+}\)).

Remark 5.1

In this section, for any analysis related to M(t), we assume that \((\alpha ,\gamma )\) satisfy (2.6) and for any analysis related to V(t) we assume a stronger assumption: \(\alpha >\frac{\gamma }{2}\), in which case, the Hurst parameter \(H \in (1/2,1)\).

Remark 5.2

Throughout the section, \(\delta _0\) is always the positive constant in Corollary A.1. We still occasionally remind the reader of this.

We first give an alternate expression for X that is easily amenable for analysis.

Lemma 5.1

Assume that \((\alpha ,\gamma )\) satisfy (2.6). Then, for \(t\ge 0\), \(\mathbb {P}-\) a.s., the GFBM X in (2.1) can be equivalently represented as

Proof

We begin by recalling Itô’s product rule: for semi-martingales \(Z^1\) and \(Z^2\), for \(0 \le s \le t\),

where \([Z^1,Z^2](\cdot )\) is the cross-quadratic variation of the corresponding martingale component.

Let

Define

Even though \(Z^1(u)\) depends on t, we drop this dependence, because t is fixed throughout. Also observe that \(Z^1(t)=0\). Moreover, \([Z^1,Z^2](\cdot )\equiv 0\). Note that we cannot apply Itô’s product rule with \(s=0\) since \(Z^1(0)\) is ill-defined for \(\gamma >0\). To overcome this issue, we set \(s=\rho \) and then take \(\rho \rightarrow 0\), which is why the process \(X_\rho (t)\) is defined above. Applying Itô’s product rule, we obtain

Now, we take \(\rho \rightarrow 0\) (or along a subsequence). First of all, we have

This follows from the law of the iterated logarithm for Brownian motion: \(\limsup _{\rho \rightarrow 0}\frac{B(\rho )}{ \sqrt{\rho \log \log (\rho ^{-1})}}=\sqrt{2}\), \(\mathbb {P}-\) a.s.; see, e.g., [20, Theorem 2.9.23]. We then have

Indeed,

where the second inequality follows from Tonelli’s theorem. Similarly, one can show that

Using Itô’s isometry along with an analysis similar to the above, we can also show that

Therefore, we can find a subsequence \(\rho _n \rightarrow 0\) along which we will have

From these and (5.2), we obtain the expression of X(t) in (5.1). \(\square \)

As mentioned earlier, we require a maximal inequality for X, which is the content of Lemma 5.3 below. For our purposes, we only estimate the maximal inequality over \(0\le s\le 1\). In the following, without loss of generality, we assume that \(\delta _0\) in Corollary A.1 is less than one, and \(\textbf{B}(x_1,x_2)\) denotes the Beta function with parameters \(x_1,x_2>0\). We also frequently use the following inequality: for \(0<x<1\),

for some \(K_\eta \), depending on \(\eta >0\).

In the next two lemmas, we study the behavior of X(t) on two subintervals, \(0 \le t\le \delta _0\) and \(\delta _0<t \le 1\). These results are used in Theorem 5.1 to ensure that, conditioned on the maximum of \(X(t)-kt\) over [0, T] being appropriately large, the maximizer is almost surely attained in the complement of \([0,\delta _0]\).

Lemma 5.2

Assume that \((\alpha ,\gamma )\) satisfy (2.6) and \(\frac{1-\gamma }{2}>\eta >0\). Then,

for \(0\le t\le \delta _0\) and

Here, \(\delta _0\) is as in Corollary A.1.

Proof

Fix \(\rho >0\) and choose \(\delta _0\) from the Corollary A.1 corresponding to \(\rho >0\). Then, from Corollary A.1,

Using this, for \(t\le \delta _0\), we have

In the above, we chose \(\frac{1-\gamma }{2}>\eta >0\) and used the fact in (5.3). This completes the proof. \(\square \)

Lemma 5.3

Assume that \((\alpha ,\gamma )\) satisfy (2.6) and \(\frac{1-\gamma }{2}>\eta >0\). For \(\delta _0<t\le 1\) and \(K>0\),

where

and

Here, \(\delta _0\) is as in Corollary A.1.

Remark 5.3

Since

we have

Proof

Fix \(t>\delta _0\). From Lemma 5.1, using the expression of X(t) in (5.1), we have \(\mathbb {P}-\) a.s.

where we have used change of variables from u to vt in the integral terms to obtain the second inequality.

We now focus on the integral in (5.7). We observe that we cannot directly pull \(\max _{0\le s\le t} B(s)\) out of the integral as \(\int _0^1 (1-v)^\alpha v^{-\frac{\gamma }{2}-1}dv \) is not well-defined. So fixing some \(\frac{1-\gamma }{2}>\eta >0\), we obtain

Here the first inequality follows from Corollary A.1 and the second inequality uses (5.3).

Thus, by (5.7) and (5.8), we obtain for \(t>\delta _0\),

Consider the following event:

On this event,

In the above, we used the fact that

The quantities \(\Lambda \) and \(\Delta \) are as given in (5.5) and (5.6), and in the last inequality, we bound the terms involving t (inside the parenthesis) by 1 to make the quantity inside, uniform in t.

From the above inequality, we have

Therefore,

where the second inequality uses (5.9) and the last uses the maximal inequality of Brownian motion, i.e., \( \mathbb {P}\left( \sup _{0\le s\le t} B(s)>\lambda \right) \le \exp \left( -\frac{\lambda ^2}{2t}\right) . \) Therefore, the inequality in (5.4) holds. \(\square \)
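For completeness, the maximal inequality of Brownian motion used in the last step can be recovered in one line. The sketch below uses two standard facts that are not restated in this section: the reflection principle, and the Gaussian tail bound \(\Psi (x)\le \frac{1}{2}e^{-x^2/2}\) for \(x\ge 0\), where \(\Psi \) denotes the tail distribution function of a standard normal random variable.

```latex
\[
\mathbb{P}\Big(\sup_{0\le s\le t} B(s) > \lambda\Big)
= 2\,\mathbb{P}\big(B(t) > \lambda\big)
= 2\,\Psi\Big(\frac{\lambda}{\sqrt{t}}\Big)
\le \exp\Big(-\frac{\lambda^2}{2t}\Big),
\qquad \lambda \ge 0,\ t>0 .
\]
```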

Remark 5.4

In Lemma 5.2 and Eq. (5.10) in the proof of Lemma 5.3, the exponents \(H-\eta \) and \(H+\eta \) are a consequence of the behavior of Brownian motion near \(t=0\) (see Theorem A.1) and away from zero (the maximal inequality of Brownian motion).

The following modulus of continuity type estimate is used in establishing the uniform in t sub-exponential tail bounds of V(t).

Lemma 5.4

Assume that \(\alpha >\frac{\gamma }{2}\). Then, we have the following:

for some \(C=C(K)>0\) such that \(C(K)\uparrow \infty \) as \(K\rightarrow \infty \).

Proof

Consider the event:

We consider the following on A(K). For \(1-\delta _0\le s\le 1\), by (5.1),

We estimate the first integral using Theorem A.1 and Corollary A.1. Choose \(\eta <\frac{\gamma }{2}+\frac{1}{2}-\alpha = 1-H\). We have

for some \(C_1, C_2>0\). To get (5.12), we applied Remark A.2 to bound \(|B(v)-B(vs)|\) uniformly. Finally, we estimate the second term in a similar way:

In the above, to arrive at the final inequality, we bounded the first integral using (5.8), noting that \(\sup _{0\le v\le 1} B(v)(\omega )\le K\) on the event A(K) and used the fact that \(\eta <\frac{\gamma }{2}+\frac{1}{2}-\alpha \). \(C_3\) and \(C_4\) are appropriate constants that are independent of K and s. Defining \(C(K)\doteq \max \{\alpha c (KC_1+C_2), \frac{\gamma c}{2} (KC_3+C_4)\}\) gives the result. \(\square \)

Remark 5.5

In the following, we observe that

is a centered Gaussian process and hence, symmetric (i.e., \({\widetilde{X}}\) and \(-\widetilde{X} \) have the same distribution). Therefore,

Here,

and the ratio of probabilities is simply the conditional probability of the event \(\big \{\inf _{1-\delta _0\le s\le 1}{\widetilde{X}}(s)\le -K\big \}\) conditioned on the occurrence of the event \(\big \{\sup _{1-\delta _0\le s\le 1}{\widetilde{X}}(s)\ge K\big \}\). Since the paths of \({\widetilde{X}}\) are almost surely continuous, this probability approaches 0 as \(K\rightarrow \infty \). Therefore, \(R_K\rightarrow 2\) as \(K\rightarrow \infty .\)

Now, by an argument similar to that in Sect. 4.1, we obtain the following:

where

Again in the last equality, we have used (2.2), with the definitions of \(v_1\) and \(v_2\) in (4.6) and (4.7). From the above, we can conclude that for every \(\delta >0\), there is \(K_0>0\) such that

We remark that Lemma 5.4 is only used in the proof of Theorem 5.3. Even though the alternative estimate in (5.13) is different from that in (5.11), it is still sufficient for the proof of Theorem 5.3. Note that (5.11) is used in Eqs. (5.22) and (5.23) in the proof of Theorem 5.3. Following the same arguments with (5.13) in place of (5.11) gives a result similar to (5.21) with appropriately different constants. We do not give the exact modified version of (5.21), as this estimate is only used to prove tightness of \(\{V(t)\}_{t\ge 0}\) in Corollary 5.1, for which it is sufficient.

In the following, we exploit the self-similarity of X and show that the random variables M(t) and V(t), at a fixed time \(t>0\), are equal in law to random variables involving \(C([0,1],\mathbb {R})\)-valued processes \({\bar{Z}}\) and Z, each of which has the same law as X restricted to [0, 1]. That is, for \(T=1\), \({\bar{Z}}\) and Z are \(C([0,1],\mathbb {R})\)-valued processes such that

Lemma 5.5

For any fixed \(t>0\), we have

Proof

Fix \(t>0\). Using self-similarity of X and Lemma A.1, the following holds:

where \(\mathcal {P}_X\) is the law of X and \(J_t\) is as defined in (A.1). Then the equal in law relationships in (5.14) and (5.15) follow directly. \(\square \)
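The self-similarity used above can be checked numerically at the level of variances. The following Python sketch assumes that (2.1) has the Riemann–Liouville form \(X(t)=c\int _0^t (t-u)^{\alpha }u^{-\gamma /2}dB(u)\) with \(H=\alpha -\frac{\gamma }{2}+\frac{1}{2}\), so that Itô’s isometry gives \(\mathbb {E}[X(t)^2]=c^2t^{2H}\textbf{B}(1-\gamma ,2\alpha +1)\). This form, the parameter values, and all function names are assumptions made for illustration, and the quadrature is restricted to \(\alpha \ge 0\) for simplicity.

```python
import math


def beta_fn(a, b):
    """Beta function B(a, b) via the Gamma function."""
    return math.gamma(a) * math.gamma(b) / math.gamma(a + b)


def hurst(alpha, gamma):
    """Hurst parameter H = alpha - gamma/2 + 1/2 (assumed relation)."""
    return alpha - gamma / 2.0 + 0.5


def variance_closed_form(t, alpha, gamma, c=1.0):
    """c^2 * t^{2H} * B(1 - gamma, 2*alpha + 1)."""
    return c * c * t ** (2.0 * hurst(alpha, gamma)) * beta_fn(1.0 - gamma, 2.0 * alpha + 1.0)


def variance_numeric(t, alpha, gamma, c=1.0, n=100000):
    """Midpoint rule for c^2 * int_0^t (t-u)^{2 alpha} u^{-gamma} du.

    The substitution s = u^{1-gamma} / (1-gamma) absorbs the u^{-gamma}
    singularity at 0; the remaining integrand is bounded when alpha >= 0.
    """
    s_max = t ** (1.0 - gamma) / (1.0 - gamma)
    h = s_max / n
    total = 0.0
    for i in range(n):
        s = (i + 0.5) * h
        u = ((1.0 - gamma) * s) ** (1.0 / (1.0 - gamma))
        total += max(t - u, 0.0) ** (2.0 * alpha)
    return c * c * total * h


alpha, gamma = 0.3, 0.4          # illustrative values with alpha > gamma/2
H = hurst(alpha, gamma)
c_unit = beta_fn(1.0 - gamma, 2.0 * alpha + 1.0) ** -0.5  # makes Var X(1) = 1
print(H, variance_numeric(1.0, alpha, gamma, c_unit))
print(variance_numeric(2.0, alpha, gamma) / variance_numeric(1.0, alpha, gamma), 2.0 ** (2.0 * H))
```

Under these assumptions, the printed ratio should agree with \(2^{2H}\), which is the variance-level counterpart of the scaling \(X(at){\mathop {=}\limits ^{\textrm{d}}}a^{H}X(t)\).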

Remark 5.6

We stress that the statement of the above lemma only states that for every given \(t>0\), the laws of M(t) and V(t) are expressed as above. To study the sample path of M and V, more detailed analysis is needed, which we do not pursue in this paper.

Theorem 5.1

Assume that \((\alpha ,\gamma )\) satisfy (2.6). There exist \(t_0\doteq t_0(\delta _0, k,H, \eta ,C )\) and \(Q\doteq Q(\delta _0,H,C,\eta ,k)\) such that the following holds:

for \(t>t_0\) and \(\rho >Q\). Here,

Proof

We fix t throughout the proof after choosing it large enough. In the rest of the proof, we suppress the dependence on \(\omega \) for all the random processes that follow. The method of the proof goes as follows: We prove that the maximum

is almost surely less than a positive constant Q (uniformly in large t). This implies that the maximizers for

conditioned on the event

are greater than \(\delta _0\), \(\mathbb {P}-\) a.s. Indeed, if the maximum satisfies

then from the hypothesis,

This implies that the maximizers on [0, 1] are strictly greater than \(\delta _0\).

To that end, we recall from Lemma 5.2 that \(\mathbb {P}-\) a.s.,

for \(0 \le v\le \delta _0\) with \(0<\eta <\frac{1-\gamma }{2}\). Thus, \(\mathbb {P}-\) a.s.,

The maximum of the right-hand side is attained at \(v=\min \{\delta _0, \left( \frac{kt}{Ct^H}\right) ^{\frac{1}{1+\eta -H}} \} \). For

(this ensures that maximum is attained at \(\delta _0\)), we have

It is thus clear that for

the maximizer cannot be in \([0,\delta _0]\). Therefore, for \(\rho >Q\) and \(t>t_0\),

where the last inequality follows from Remark 5.3 and \(\widehat{\Delta }\) is as in the hypothesis. In the first inequality, we used the following: For \(\rho >Q\), \(t>t_0\) and \(v\in [0,1]\), from the inequality in (5.16) and the definition of Q,

\(\square \)

Lemma 5.6

Assume that \((\alpha ,\gamma )\) satisfy (2.6). Then,

Proof

Since M(t) is nondecreasing and is a submartingale with respect to its own filtration, if

then from the submartingale convergence theorem ([20, Theorem 1.3.15]), we know that \( M(\infty )\doteq \lim _{t\rightarrow \infty } M(t)\) exists \(\mathbb {P}-\) a.s. From Theorem 5.1, it is easy to see that sub-exponential tail behavior of M(t) ensures that (5.17) holds. \(\square \)
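The last step can be made explicit as follows. This is a sketch under the assumption that (5.17) is the uniform moment bound \(\sup _{t\ge 0}\mathbb {E}[M(t)]<\infty \) and that the bound of Theorem 5.1 is dominated by an integrable function of the form \(c_1e^{-c_2\rho ^{\beta }}\) for \(\rho >Q\) and \(t>t_0\); the constants \(c_1,c_2,\beta >0\) are hypothetical placeholders. It also uses \(M(t)\ge 0\), which holds since \(X(0)=0\).

```latex
\[
\mathbb{E}[M(t)]
= \int_0^\infty \mathbb{P}\big(M(t)>\rho\big)\,d\rho
\le Q + c_1\int_Q^\infty e^{-c_2\rho^{\beta}}\,d\rho
< \infty
\qquad \text{for all } t>t_0 ,
\]
\[
\text{and, since } M(\cdot) \text{ is nondecreasing,}\quad
\sup_{t\ge 0}\mathbb{E}[M(t)] = \sup_{t>t_0}\mathbb{E}[M(t)] < \infty .
\]
```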

We will next study the tail behavior of \( M^* \doteq M(\infty )\).

Theorem 5.2

Assume that \((\alpha ,\gamma )\) satisfy (2.6). Then,

where

Proof

We first prove the lower bound. For \(\lambda >0\),

In the above, we used the fact that \(X(\varepsilon ^{-1}){\mathop {=}\limits ^{\textrm{d}}}\varepsilon ^{-H}X(1)\). Since X(1) is a Gaussian random variable with zero mean and unit variance (recall that the choice of c ensures this), we have

A simple computation gives us that the above infimum is \(\theta ^*\) and attained at \(\lambda = \frac{1-H}{H}k\).

To prove the upper bound, we again write for \(\lambda >0\),

Choose \(T_0> \frac{H}{k(1-H)}\). Clearly, for any \(\varepsilon >0\),

We now compute the above terms individually,

and as earlier in Lemma 4.1,

In the above, we applied Lemma 4.1, for \(T=T_0\) and \(\lambda =1\).

We now estimate

In the fourth line above, we partitioned \((\lfloor T_0\varepsilon ^{-1}\rfloor ,\infty )\) into sets of the form \((n-1,n]\), for integer \(n>\lfloor T_0\varepsilon ^{-1}\rfloor \).

In the following, we bound the individual terms. To that end, define

We have

where \(0<\delta <1\). In the first equality, we used the following:

where we have changed s to \(\varepsilon ^{-1}s\) and applied Corollary A.1. The inequality (5.20) is obtained in the same way as in the proof of Lemma 4.1. Taking \(\delta \downarrow 0\), we have

Therefore, for \(\delta >0\), there exists \(\varepsilon _0>0\) such that for every \(\varepsilon <\varepsilon _0\),

for some constant \(C>0\). This gives us

Putting all the terms together, we have

Now, we take \(T_0\uparrow \infty \) (the second term goes to \(-\infty \)), to get the result. \(\square \)
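The minimization in the lower-bound step can be checked numerically. The sketch below assumes that the objective there is \(g(\lambda )=\frac{(\lambda +k)^2}{2\lambda ^{2-2H}}\), a form inferred from the scaling \(X(\varepsilon ^{-1}){\mathop {=}\limits ^{\textrm{d}}}\varepsilon ^{-H}X(1)\) and from the stated minimizer \(\lambda =\frac{1-H}{H}k\). Under that assumption the minimum value is \(\frac{1}{2}\left( \frac{k}{H}\right) ^{2H}(1-H)^{-(2-2H)}\). The function names and the parameter values are hypothetical.

```python
def objective(lam, k, H):
    """Assumed objective g(lam) = (lam + k)^2 / (2 * lam^(2 - 2H))."""
    return (lam + k) ** 2 / (2.0 * lam ** (2.0 - 2.0 * H))


def closed_form(k, H):
    """Minimizer and minimum of g obtained from the first-order condition."""
    lam_star = (1.0 - H) * k / H
    theta_star = (k / H) ** (2.0 * H) / (2.0 * (1.0 - H) ** (2.0 - 2.0 * H))
    return lam_star, theta_star


def grid_minimize(k, H, lo=1e-3, hi=20.0, n=200001):
    """Brute-force minimization of g on a uniform grid over [lo, hi]."""
    step = (hi - lo) / (n - 1)
    best_lam, best_val = lo, objective(lo, k, H)
    for i in range(1, n):
        lam = lo + i * step
        val = objective(lam, k, H)
        if val < best_val:
            best_lam, best_val = lam, val
    return best_lam, best_val


k, H = 1.0, 0.75  # illustrative values only
print(grid_minimize(k, H))
print(closed_form(k, H))
```

The same critical point reappears in the upper bound through the choice \(T_0>\frac{H}{k(1-H)}\), which is the reciprocal of the minimizer above.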

We now study the tail asymptotics and long-time behavior of \(\{V(t)\}_{t\in \mathbb {R}_+}\).

Theorem 5.3

Assume that \(\alpha >\frac{\gamma }{2}\). For every \(K>0\), there exist \(t_0=t_0(K,\delta _0,k,\alpha ,\gamma )\) and \(Q=Q(K,\delta _0,k,\alpha ,\gamma )\) such that the following holds.

for \(t>t_0\) and \(\rho >Q\). Here, \({\widehat{\Delta }}\) is as given in Theorem 5.1.

Proof

Consider the following set:

Here, C(K) is the same constant that appears in Lemma 5.4. On the event S, we consider the following: We follow a similar argument as in the proof of Theorem 5.1. We fix t throughout the proof after choosing it large enough. In the rest of the proof, we suppress the dependence on \(\omega \) for all the random processes that follow. We now show that on S,

is less than a positive constant Q (uniformly in large t). This implies that the maximizers for

conditioned on the event

are less than \(1-\delta _0\). Indeed, if

then from the hypothesis,

This implies that the maximizers are strictly less than \(1-\delta _0\). To that end, we recall that on the event S,

for \({1-\delta _0\le v\le 1}\). Hence, on S,

The maximum of the right-hand side is attained at

For

(this ensures that maximum is attained at \(1-\delta _0\)), we have

It is thus clear that for

the maximizer cannot be in \([1-\delta _0,1]\).

Therefore, for \(\rho >Q\) and \(t>t_0\),

Above, \(\widehat{\Delta }\) is as in hypothesis of Theorem 5.1. To get (5.24), we used the following: For \(t>t_0\) and \(\rho >Q\), from the above analysis

To get (5.25), we used

Finally, to get (5.26), we applied Lemmas 5.3 and 5.4. \(\square \)

Corollary 5.1

The laws of \(\mathbb {R}_+\) valued random variables \(\{V(t)\}\) have a weak limit point as \(t\rightarrow \infty \).

Proof

From Theorem 5.3, it is clear that for any \(\epsilon >0\), there exist \(\rho _0\) and \(t_0\) such that, for \(\rho >\rho _0\) and \(t>t_0\), the upper bound in (5.21) is less than \(\epsilon \). From this and Prohorov’s theorem, we have the existence of a weak limit point of the law of V(t) as \(t\rightarrow \infty \). \(\square \)

In the following, without loss of generality, we assume that V(t) converges almost surely, along every convergent subsequence, to the respective limit point.

Theorem 5.4

Let \(V^*\) be a weak limit point of \(\{V(t)\}_{t\in \mathbb {R}_+}\) as \(t\rightarrow \infty \). Then,

Proof

We have already seen from Lemma 5.5 that for \(t>0\),

Now we consider a sequence \(t_n\uparrow \infty \) such that \(V(t_n)\) converges weakly to \(V^*\). From the above equality of laws, \({\bar{V}}(t_n)\) also converges weakly to \(V^*\). By the Skorohod representation theorem, we can assume without loss of generality that

Therefore, we have

Now we replace t in \({\bar{V}}(t)\) by \(\varepsilon {^{-1}}\) and treat \(t\rightarrow \infty \) as \(\varepsilon \rightarrow 0\). In other words, we have

From Theorem 4.1, we know that \(\varepsilon {\bar{V}}(\varepsilon ^{-1})\) satisfies an LDP. From (5.27), we also know that

where f is a deterministic positive function such that \(f(x)\rightarrow 0\), as \(x \rightarrow 0\), \( \mathbb {P}-\) a.s. Then, we have \( |\varepsilon _n V^*- \varepsilon _n{\bar{V}}(\varepsilon _n^{-1})|=\varepsilon _n f(\varepsilon _n).\)

Now we are in a position to derive the tail behavior of \(V^*\):

Similarly,

From Theorem 4.1, we have

Since the right-hand side of the above equation is independent of the sequence \(\varepsilon _n\rightarrow 0\), we can replace \(\varepsilon _n\) in the above equation with \(\varepsilon \). This gives us

This completes the proof. \(\square \)

5.1 Alternative proof of Theorem 5.2 using the results of Hüsler and Piterbarg [16]

The proof of Theorem 5.2 uses the large deviation asymptotics of the processes \(\{M^\varepsilon \}_{\varepsilon >0}\) (Lemma 4.1) and \(\{V^\varepsilon \}_{\varepsilon >0}\) (Theorem 4.1). But to use these results, it was necessary in the proofs to establish the existence of the limit points of \(\{M(t)\}_{t>0} \) and \(\{V(t)\}_{t>0}\) which was the content of Theorems 5.1 and 5.3, respectively. Alternatively, the proof can be given as a direct application of a result by Hüsler and Piterbarg [16]. Before we state the result in [16], we recall the following definition: A centered self-similar Gaussian process (with Hurst parameter \(0<H<1\)) with continuous sample paths \(\{Z(t)\}_{t>0}\) is called locally stationary self-similar if for some positive K and \(0<\eta \le 2\),

Theorem 5.5

[16, Theorem 1] Suppose that \(\{Z(t)\}_{t>0}\) is a locally stationary self-similar Gaussian process. Then, as \( \lambda \rightarrow \infty ,\)

Here, \(C_\eta \) is a positive constant (its explicit form is given in [16]) and

and \(\Psi \) is the tail distribution function of standard normal random variable.

In Lemma A.2, we show that the GFBM \(X(\cdot )\) is locally stationary. Hence, from Theorem 5.5, we have the following: In this case, with \(A= \sqrt{2\theta ^*}\),

In the last equality, we used the tail behavior of a standard normal random variable (\(\Psi \) is its tail distribution function). This shows that [16, Theorem 1] can be applied to prove Theorem 5.2.

References

Azencott, R., Guivarc’h, Y., Gundy, R.: Grandes déviations et applications. In: Ecole d’été de probabilités de Saint-Flour VIII-1978, pp. 1–176. Springer (1980)

Billingsley, P.: Convergence of Probability Measures. Wiley, New York (1968)

Boué, M., Dupuis, P.: A variational representation for certain functionals of Brownian motion. Ann. Probab. 26(4), 1641–1659 (1998)

Budhiraja, A., Dupuis, P.: Analysis and Approximation of Rare Events: Representations and Weak Convergence Methods. Probability Theory and Stochastic Modelling, vol. 94. Springer (2019)

Cao, J., Cleveland, W.S., Lin, D., Sun, D.X.: On the nonstationarity of Internet traffic. In: Proceedings of the 2001 ACM SIGMETRICS international conference on Measurement and modeling of computer systems, pp. 102–112 (2001)

Chang, C.-S., Yao, D.D., Zajic, T.: Large deviations, moderate deviations, and queues with long-range dependent input. Adv. Appl. Probab. 31(1), 254–278 (1999)

Chen, H., Yao, D.D.: Fundamentals of Queueing Networks: Performance, Asymptotics, and Optimization. Stochastic Modelling and Applied Probability (SMAP, volume 46). Springer (2001)

Davies, B.: Integral Transforms and Their Applications. Texts in Applied Mathematics (TAM, volume 41). Springer (2002)

Dȩbicki, K., Hashorva, E., Liu, P.: Extremes of \(\gamma \)-reflected Gaussian processes with stationary increments. ESAIM Probab. Stat 21, 495–535 (2017)

Dȩbicki, K., Mandjes, M.: Open problems in Gaussian fluid queueing theory. Queueing Syst. 68(3), 267–273 (2011)

Dembo, A., Zeitouni, O.: Large Deviations Techniques and Applications. Stochastic Modelling and Applied Probability (SMAP, volume 38). Springer (2009)

Duncan, T.E., Jin, Y.: Maximum queue length of a fluid model with an aggregated fractional Brownian input. Markov Processes and Related Topics: A Festschrift for Thomas G. Kurtz 4, 235–251 (2008)

Ganesh, A.J.: Big Queues. Springer, Berlin (2004)

Hashorva, E., Ji, L.: Approximation of passage times of \(\gamma \)-reflected processes with FBM input. J. Appl. Probab. 51(3), 713–726 (2014)

Hashorva, E., Ji, L., Piterbarg, V.I.: On the supremum of \(\gamma \)-reflected processes with fractional Brownian motion as input. Stoch. Process. Appl. 123(11), 4111–4127 (2013)

Hüsler, J., Piterbarg, V.: Extremes of a certain class of Gaussian processes. Stoch. Process. Appl. 83(2), 257–271 (1999)

Ichiba, T., Pang, G., Taqqu, M.S.: Path properties of a generalized fractional Brownian motion. J. Theor. Probab. 35(1), 550–574 (2022)

Ichiba, T., Pang, G., Taqqu, M.S.: Semimartingale properties of a generalized fractional Brownian motion and its mixtures with applications in asset pricing. Working paper (2022). arXiv:2012.00975

Karagiannis, T., Molle, M., Faloutsos, M., Broido, A.: A nonstationary Poisson view of Internet traffic. In: IEEE INFOCOM 2004, vol. 3, pp. 1558–1569. IEEE (2004)

Karatzas, I., Shreve, S.: Brownian motion and stochastic calculus. Graduate Texts in Mathematics (GTM, volume 113). Springer (1991)

Klüppelberg, C., Kühn, C.: Fractional Brownian motion as a weak limit of Poisson shot noise processes-with applications to finance. Stoch. Process. Appl. 113(2), 333–351 (2004)

Konstantopoulos, T., Lin, S.-J.: Macroscopic models for long-range dependent network traffic. Queueing Syst. 28(1), 215–243 (1998)

Leland, W.E., Taqqu, M.S., Willinger, W., Wilson, D.V.: On the self-similar nature of ethernet traffic. In: Conference Proceedings on Communications Architectures, Protocols and Applications, pp. 183–193 (1993)

Marcus, M., Shepp, L.: Sample behavior of Gaussian processes. In: Proceedings of the Sixth Berkeley Symposium on Mathematical Statistics and Probability, p. 423. Univ of California Press (1972)

Mikosch, T., Resnick, S., Rootzén, H., Stegeman, A.: Is network traffic approximated by stable Lévy motion or fractional Brownian motion? Ann. Appl. Probab. 12(1), 23–68 (2002)

Norros, I.: On the use of fractional Brownian motion in the theory of connectionless networks. IEEE J. Sel. Areas Commun. 13(6), 953–962 (1995)

Pang, G., Taqqu, M.S.: Nonstationary self-similar Gaussian processes as scaling limits of power-law shot noise processes and generalizations of fractional Brownian motion. High Freq. 2(2), 95–112 (2019)

Park, K., Willinger, W.: Self-similar network traffic: an overview. Self-Similar Network Traffic and Performance Evaluation, pp. 1–38 (2000)

Pipiras, V., Taqqu, M.S.: Long-Range Dependence and Self-Similarity. Cambridge University Press, Cambridge (2017)

Prabhu, N.U.: Stochastic Storage Processes: Queues, Insurance Risk, and Dams, and Data Communication. Springer, Berlin (1998)

Uhlig, S.: Non-stationarity and high-order scaling in TCP flow arrivals: a methodological analysis. ACM SIGCOMM Comput. Commun. Rev. 34(2), 9–24 (2004)

Wang, R., Xiao, Y.: Exact uniform modulus of continuity and Chung’s LIL for the generalized fractional Brownian motion. J. Theor. Probab. 35, 2442–2479 (2022)

Whitt, W.: Stochastic-Process Limits: An Introduction to Stochastic-Process Limits and Their Application to Queues. Springer, Berlin (2002)

Willinger, W.: Traffic modeling for high-speed networks: theory versus practice. Inst. Math. Appl. 71, 395 (1995)

Appendix A: Auxiliary results

In the following, we present a few results that are used in Sect. 5. Define the following continuous map from \(\mathcal {C}_T\) to \(\mathcal {C}_1\):

Lemma A.1

For the GFBM X in (2.1), and for any \(T>0\),

where \(\mathcal {P}_X\) is the law of X.

Proof

For a given \(n\in \mathbb {N}\), consider \(0<u_1<u_2<u_3<\cdots<u_n<1\). Now from the self-similarity of X [27, Proposition 5.1], it is clear that for \(n=1\),

In other words,

For \(n>1\), assume that

for any \(A\in \mathcal {B}(\mathbb {R}^{n-1})\). Now consider a Borel set \(B\in \mathcal {B}(\mathbb {R})\). Then, we have

Since the sets of the form \(A\times B\) generate the Borel \(\sigma \)-algebra of \(\mathbb {R}^{n}\), the self-similarity property (A.2) holds for n and therefore, by induction, for all finite-dimensional distributions. It is straightforward to see that the finite-dimensional distributions of \(\mathcal {P}_X\circ J_T^{-1}\) and \(\mathcal {P}_X \) are consistent families of measures. Therefore, using the Kolmogorov consistency theorem, we get the desired result. \(\square \)
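As an informal numerical sanity check of the scaling behind Lemma A.1, the following Python sketch verifies the second-order self-similarity \(\textrm{Cov}(X(Ts),X(Tt))=T^{2H}\,\textrm{Cov}(X(s),X(t))\), which for a mean-zero Gaussian process is equivalent to self-similarity of the finite-dimensional distributions. Since (2.1) is not restated in this appendix, the sketch assumes the Riemann–Liouville form \(X(t)=c\int_0^t (t-u)^{\alpha }u^{-\gamma /2}\,B(du)\) with \(c=1\) and \(H=\alpha -\frac{\gamma }{2}+\frac{1}{2}\). The function name `gfbm_cov`, the parameter values, and the restriction \(\alpha >0\) (which keeps the integrand bounded after the change of variables) are illustrative choices and not part of the paper.

```python
import math


def gfbm_cov(s, t, alpha, gamma, n=20000):
    """Approximate int_0^{min(s,t)} (t-u)^alpha (s-u)^alpha u^(-gamma) du.

    Assumed covariance of the R-L type GFBM with c = 1 (hypothetical helper).
    The substitution w = u^(1-gamma) removes the singularity at u = 0;
    a plain midpoint rule is then applied in w. Requires alpha > 0, 0 <= gamma < 1.
    """
    s, t = min(s, t), max(s, t)
    p = 1.0 / (1.0 - gamma)      # u = w^p, and u^(-gamma) du = p dw
    w_max = s ** (1.0 - gamma)   # upper limit of integration in w
    h = w_max / n
    total = 0.0
    for k in range(n):
        u = ((k + 0.5) * h) ** p  # midpoint node mapped back to u
        total += ((t - u) * (s - u)) ** alpha
    return total * h * p


if __name__ == "__main__":
    alpha, gamma = 0.3, 0.4          # illustrative values with alpha < (1 + gamma) / 2
    H = alpha - gamma / 2 + 0.5      # assumed Hurst-type index
    T = 3.0
    ratio = gfbm_cov(T * 0.5, T * 1.0, alpha, gamma) / gfbm_cov(0.5, 1.0, alpha, gamma)
    print("covariance ratio:", ratio, "T^(2H):", T ** (2 * H))
```

Under the same assumptions, the variance has the closed form \(\textrm{Var}(X(t))=t^{2H}\,\mathbf {B}(1-\gamma ,2\alpha +1)\), so `gfbm_cov(1.0, 1.0, alpha, gamma)` can also be compared against the Beta function as a check on the quadrature.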

Remark A.1

The above statement and proof can be generalized to processes with RCLL (right-continuous with left limits) paths. Indeed, we construct a similar map \(J_T\) from \(\mathcal {D}_T\) to \(\mathcal {D}_1\) (here, \(\mathcal {D}_T\) is the space of functions that are right-continuous with left limits, equipped with the Skorohod topology). We can then proceed exactly as above.

Theorem A.1

[20, Theorem 9.25] For a standard Brownian motion B on [0, 1],

Remark A.2

Clearly, for every \(\rho >0\), there is \(1>\delta _0>0\) such that for every \(\delta <\delta _0\), we have

Corollary A.1

For \(\rho >0\), there is \(1>\delta _0=\delta _0(\rho )>0\) such that whenever \(t>\delta _0\),

Otherwise,

Proof

From Theorem A.1, as already noted in Remark A.2, for every \(\rho >0\) there is \(\delta _0\in (0,1)\) such that for every \(\delta <\delta _0\), we have

In particular,

This implies that we have

Assuming that \(t>\delta _0\), we have

It is easy to see that for \(t\le \delta _0\),

Hence, the proof is complete. \(\square \)

Using a technique similar to that of Theorem 5.4, we have the following.

Alternate proof of Theorem 5.2

We follow the argument of Theorem 5.4 almost exactly. We have already seen from Lemma 5.5 that for \(t>0\),

From Lemma 5.6, we also know that \(M(t)\) converges \(\mathbb {P}\)-a.s. to \(M^*\) as \(t\rightarrow \infty \).

Therefore, we have

Now we replace t in \({\bar{M}}(t)\) by \(\varepsilon ^{-1}\) and treat \(t\rightarrow \infty \) as \(\varepsilon \rightarrow 0\). In other words, we have

From Lemma 4.1, we know that \(\varepsilon {\bar{M}}( \varepsilon ^{-1})\) satisfies an LDP. From (A.3), we also know that

where g is a deterministic positive function such that \(g(x)\rightarrow 0\) as \(x \rightarrow 0\), \(\mathbb {P}\)-a.s. Then, we have \(|\varepsilon M^*- \varepsilon {\bar{M}}( \varepsilon ^{-1})|=\varepsilon g(\varepsilon ).\)

Now we are in a position to derive the tail behavior of \(M^*\):

Similarly,

From Lemma 4.1 with \(T=1\), we have

We now notice that

by changing \(\varepsilon \) to \(\lambda ^{-1}{\bar{\varepsilon }} \). With the same argument as above, for \(\lambda >0\), we have the following

Therefore, choosing \(\lambda > \frac{k(1-H)}{H}\), from (A.4), we have

This completes the proof. \(\square \)

Remark A.3

The intuition for the choice \(\lambda >\frac{k(1-H)}{H}\) at the end of the proof is that (A.4) suggests a scale invariance of the tail of \(M^*\). Therefore, the decay rate of the tail asymptotics must be the one of the two cases in (4.10) that scales in \(\lambda \) as \(\lambda ^{2(1-H)}\). This case occurs when \(\lambda >\frac{k(1-H)}{H}\).

The next lemma concerns the local stationarity of the GFBM and is used in the proof in Sect. 5.1. Recall the definition of local stationarity for a self-similar Gaussian process in (5.28).

Lemma A.2

The GFBM \(X(\cdot )\) defined in (2.1) is locally stationary.

Proof

For \(0\le s\le t\),

To check that

it suffices to prove that the corresponding limits exist for the three terms on the right-hand side of (A.5). Before doing so, using (2.2) and (2.3), we rewrite \(\mathbb {E}[|X(t)-X(s)|^2]\) and \(\mathbb {E}[(X(t)-X(s))X(s)]\) by making the following changes of variables: \(u=s-(t-s)v\) and \(v=xw\) with \(x=\frac{s}{t-s}\) for integrals over [0, s], and \(u=s+ (t-s)v\) for integrals over [s, t]. We have

It is clear that

Recall that \(\mathbf{{B}}(a,b)\) is the Beta function for \(a,b>0\). Since \(-\frac{1}{2}+\frac{\gamma }{2}<\alpha <\frac{1+\gamma }{2}\), we have

Indeed, we have

Here,

It remains to show that \(\sup _{y>0} g(y)<\infty \). To that end, we show that \(\lim _{y\rightarrow 0}g(y)\) and \(\lim _{y\rightarrow \infty }g(y)\) both exist and are finite. Since \(g(\cdot )\) is continuous on \((0,\infty )\) and has finite limits at both endpoints, it is bounded on \((0,\infty )\), which gives \(\sup _{y>0} g(y)<\infty \). Consider

where we used \(\frac{1}{2}-\frac{\gamma }{2}>0\) and \(\alpha > -\frac{1}{2}+\frac{\gamma }{2}\). Now consider

In the above, \({\mathop {=}\limits ^{H}}\) denotes that we used L’Hôpital’s rule, as we have a \(\frac{0}{0}\) form (recall that \(\alpha +\frac{1}{2}-\frac{\gamma }{2}>0\)). To get the final equality, we used the fact that \(\alpha <\frac{1}{2}+\frac{\gamma }{2}.\)

Now consider (observe that it is \((t-s)^H\), instead of \((t-s)^{2H}\)),

The finiteness of

can be proved in the same way as for g(y). From (A.9) and (A.10) (respectively, (A.11)), we conclude that the quantity in parentheses in (A.7) (respectively, (A.8)) is continuous in (s, t) when \(\delta <s\le t\), for every \(\delta >0\).

We are finally in a position to prove the local stationarity of \(X(\cdot )\). For the first term in (A.5), from (A.7) and the continuity of the term in parentheses, we know that

exists uniformly for \(t_0>\delta \), for any \(\delta >0\). To see that the corresponding limit of the second term in (A.5) exists uniformly for \(t_0>\delta \), for any \(\delta >0\), we write

and from the H-Hölder continuity of the function \(f(t)= t^H\), we can conclude the existence of the above limit.
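The Hölder bound invoked here is elementary. As a reminder, a short derivation for generic \(0\le s\le t\) and \(H\in (0,1)\) (the symbols are used generically and are not tied to a specific display in the proof) reads

\[
t^H=\bigl (s+(t-s)\bigr )^H\le s^H+(t-s)^H \quad \Longrightarrow \quad 0\le t^H-s^H\le (t-s)^H,
\]

where the inequality is the subadditivity of \(x\mapsto x^H\) on \([0,\infty )\), which follows from its concavity together with the value 0 at \(x=0\).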

Now, to see that the corresponding limit of the third term in (A.5) exists uniformly for \(t_0>\delta \), for any \(\delta >0\), we write

From the H-Hölder continuity of the function \(f(t)=t^H\), we obtain

uniformly for \(t_0>\delta \), for any \(\delta >0\). Moreover, from (A.8) and the continuity of the quantity inside the parentheses, we know that

Thus, we have proved that (A.6) holds. This proves the result. \(\square \)

About this article

Cite this article

Anugu, S.R., Pang, G. Large deviations and long-time behavior of stochastic fluid queues with generalized fractional Brownian motion input. Queueing Syst 105, 47–98 (2023). https://doi.org/10.1007/s11134-023-09889-5

DOI: https://doi.org/10.1007/s11134-023-09889-5