Abstract

In order to have more flexibility, several double distributions have been studied in the literature. In this paper, we propose a double inverse Gaussian distribution using random sign mixture transform and study its associated inferences. The maximum likelihood estimation is performed to estimate the parameters. Extensive simulation studies are carried out to examine the performance of the estimators and the corresponding confidence intervals of the parameters. A real data set example is presented to illustrate the procedure.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In the last 40 years, there has been a considerable literature on the double continuous distributions on the real line, some of which explain the word double and some do not. Several of them use the word double as the distribution of the absolute value and some of them use the word reflection. Balakrishnan and Kocherlakota (1985) and Rao and Narasimham (1989) presented the double Weibull distribution and studied its order statistics and linear estimation. Bindu and Sangita (2015) studied the double Lomax distribution as the distribution of the absolute value of the ratio of two independent Laplace distributed variables. Govindarajulu (1966) studied the reflected version of the exponential distribution. The reflected version of the generalized Gamma was studied by Plucinska (1965, 1966, 1967) and the reflected version of the gamma distribution was studied by Kantam and Narasimham (1991). Kumar and Jose (2019) called the distribution of the absolute value of the Lindley variable as the double Lindley distribution, see also Ibrahim et al. (2020). Nadarajah et al. (2013) presented a double generalized Pareto distribution and Halvarsson (2020) studied double Pareto type II distribution. Armagan et al. (2013) presented a generalized double Pareto shrinkage distribution and used it as a prior for Bayesian shrinkage estimation and inferences in linear models.

Most of the above cited double continuous distributions have some limitations such as (i) non-existence of moments for some values of the parameters, see for example Nadarajah et al. (2013) and, (ii) non-existence of some MLEs of the parameters, see for example de Zea Bermudez and Kotz (2010a) and de Zea Bermudez and Kotz (2010b).

Recently, Aly (2018) presented a unified approach for developing double continuous/discrete distributions using two well known transforms (representations), namely

(i) random sign transform (RST):

where Y is a Bernoulli r.v. with parameter \(\beta \) and X is a non-negative r.v. independent of Y. The probability density function (p.d.f.) of \(Z_1\) is given by

where \(f_{X}(\cdot ;\, {\varvec{\theta }})\) is the p.d.f. of a non-negative r.v. X with (vector) parameter \({\varvec{\theta }}\) and \({\overline{\beta }}=1-\beta .\)

(ii) random sign mixture transform (RSMT):

where Y is a Bernoulli r.v. with parameter \(\beta \) while \(X_1, X_2\) are independent non-negative r.v.’s independent of Y.

The p.d.f. of \(Z_2\) is given by

where \(f_{X_j}(\cdot ; \,{\varvec{\theta }}_j), \ j=1,2, \) are the p.d.f.’s of a non-negative r.v.’s \(X_1, X_2\) with (vector) parameters \({\varvec{\theta }}_1, \ j=1,2.\)

If \(X_1\) and \(X_2\) are from the same family of distributions \({{\mathcal {F}}}\), we say that \(Z_2\) has a double \({{\mathcal {F}}}\) distribution.

Note that RST is a special case of RSMT when \(X_1, X_2\) are independent and identically distributed (i.i.d.), i.e. \(X_1 \overset{d}{=}X_2 \overset{d}{=}X.\) Moreover, all the above cited double distributions considered only the case \(\beta ={1\over 2}.\)

The inverse Gaussian distribution, denoted by \(IG (\mu , \lambda ),\) has a p.d.f.

where \(\mu \) is the mean and \(\lambda \) is the shape parameter. This distribution is a very versatile life distribution and its various modifications and transformations have been extensively studied in the literature. We refer the reader to Gupta and Akman (1995, 1996, 1997, 1998), Gupta and Kundu (2011) and the references therein. The inverse Gaussian distribution has also been studied under the umbrella of Birnbaum Saunders distribution. For a survey article on Birnbaum Saunders distribution, we refer to Balakrishnan and Kundu (2019).

We follow the procedure presented by Aly (2018) and study the double inverse Gaussian distribution. Specifically, we consider four double inverse-Gaussian distributions:

-

1.

Double inverse Gaussian 1, DIG-1\((\beta , \mu _1, \lambda _1, \mu _2, \lambda _2),\)

-

2.

Double inverse Gaussian 2, DIG-2\((\beta , \mu _1, \mu _2, \lambda ) \equiv \) DIG-1\((\beta , \mu _1, \lambda , \mu _2, \lambda ),\)

-

3.

Double inverse Gaussian 3, DIG-3\((\beta , \mu , \lambda _1, \lambda _2)\equiv \) DIG-1\((\beta , \mu , \lambda _1, \mu , \lambda _2),\)

-

4.

Double inverse Gaussian 4, DIG-4\((\beta , \mu , \lambda )\equiv \) DIG-1\((\beta , \mu , \lambda , \mu , \lambda ).\)

These distributions are bimodal with one mode on each side of the origin.

The contents of this paper are organized as follows. In Sect. 2, we present the statistical properties of the double inverse Gaussian distributions, including the probability density function, cumulative distribution function (c.d.f.), modes, moment generating function (m.g.f.), raw moments, variance, skewness, kurtosis, Tsallis entropy, Shannon entropy and extropy. The maximum likelihood estimation of the parameters and their asymptotic distributions are studied in Sect. 3. Extensive simulation studies are carried out in Sect. 4 to study the performance of the estimators. In Sect. 5, a real data set application is presented to illustrate the procedure. Finally, some conclusion and comments are presented in Sect. 6.

2 Statistical Properties

In this section, we present a comprehensive summary of the basic properties of the DIG-1 \((\beta , \mu _1, \lambda _1, \mu _2, \lambda _2)\) distribution. These properties include, the p.d.f., c.d.f., modes, m.g.f., raw moments and associated measures, Tsallis entropy, Shannon entropy and extropy. Corresponding properties for the nested distributions DIG-2, DIG-3 and DIG-4 are obtained as special cases when \((\lambda _1= \lambda _2=\lambda ),\) \((\mu _1= \mu _2=\mu )\) and \((\mu _1= \mu _2=\mu , \lambda _1= \lambda _2=\lambda )\), respectively.

2.1 Probability Density Function

The p.d.f of DIG-1 distribution is given by

where

are the p.d.f.’s of inverse Gaussian distributions.

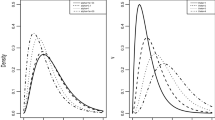

Figure 1 shows the bimodality of the p.d.f. of DIG distributions as a function in \(\beta \). Also, this figure shows that the left (right) peak gets smaller (larger) as \(\beta \) increases.

The DIG-1 distribution has two modes given by

where

are the modes of inverse Gaussian distributions.

2.2 Cumulative Distribution Function

The c.d.f of DIG-1 distribution is given by

where

are the c.d.f.’s of inverse Gaussian distributions and

is the c.d.f. of the standard normal distribution.

Figure 2 shows the c.d.f. of the DIG-1 distribution as a function in \(\beta \). Also, this figure shows that \(F_{Z_2}(0)={\overline{\beta }}\) and hence \(F_{Z_2}(0)\) decreases as \(\beta \) increases.

2.3 Moment Generating Function

The m.g.f. of DIG-1 distribution is given by

where

are the m.g.f.’s of inverse Gaussian distributions.

2.4 Moments and Associated Measures

The rth moment of DIG-1 distribution is given by

where, using the result of Sato and Inoue (1994),

are the rth moments of inverse Gaussian distributions.

Using the last expressions of \(E(Z_2^r)\), the mean, variance, skewness, and kurtosis of DIG-1 distribution are easily obtained. Note that \(E(Z_2)\) does not depend on \(\lambda _1\) and \(\lambda _2\).

Figure 3 shows the mean, variance, skewness, and kurtosis of the DIG-1 distribution as a function in \(\beta \). Also, this figure shows that the skewness can be negative/positive, i.e. the DIG-1 distribution can be skewed to the left/right.

2.5 Tsallis Entropy

Entropies are measures of a system’s variation, instability, or unpredictability. The Tsallis entropy, Tsallis (1988), is an important measure in statistics as index of diversity. It has many applications in areas such as physics, chemistry, biology and economics.

For a continuous r.v. V with p.d.f. \(f_V(v)\), the Tsallis entropy of V is defined as

where S is the support of V.

First, we derive the Tsallis entropy of RSMT \(Z_2\).

where

Note that Tsallis entropy of RSMT \(\mathcal {T}(Z_2) \) is a non-linear function in \(\beta \).

Second, we find the Tsallis entropy of \(X\sim IG(\mu , \lambda )\).

where

is the modified Bessel function of the second kind.

Therefore, Tsallis entropy of DIG-1 distribution is explicitly given by

where \(\mathcal {T}_\alpha (Y)\) is given by (15) and

2.6 Shannon Entropy

Using L’Hospital rule, we have

which is the Shannon entropy of V, Shannon (1948).

As \(\alpha \rightarrow 1\), (14) and (15), are simplified to

where

which agrees with the result obtained by Aly (2018). Note that Shannon entropy of RSMT \(\mathcal {H}(Z_2) \) is a non-linear function in \(\beta \).

Using (16), the Shannon entropy of \(X\sim \textrm{IG}(\mu , \lambda )\) is given by

where \(K_\nu (s), s>0,\) is given by (17). The proof follows by using L’HÔpital’s rule.

The last expression can be calculated using the Mathematica function \(\textrm{BesselK}^{(1,0)}[1/2, \lambda /\mu ]= {\partial \over \partial \nu } K_\nu (\lambda /\mu ) {|}_{\nu =1/2}.\)

Using (20), the Shannon entropy of DIG-1 distribution is given by

where \(\mathcal {H} (Y)\) is given by (21) and

2.7 Extropy

The following relation

is known as the extropy of V (Lad et al. 2015).

Using (14) and (15), with \(\alpha =2\), the extropy of RSMT is given by

Note that extropy of RSMT \(\mathcal {H}(Z_2) \) is a quadratic function in \(\beta \).

Using (16), with \(\alpha =2\), the extropy of \(X\sim \textrm{IG}(\mu , \lambda )\) is given by

where \(K_\nu (z)\) is given by (17).

The extropy of DIG-1 distribution is given by

where

where \(K_\nu (z)\) is given by (17).

Figure 4 shows Tsallis entropy, Shannon entropy and extropy of DIG-1 distribution as a function in \(\beta \) for selected values of the parameters. This figure also shows that Tsallis entropy of DIG-1 distribution decreases as \(\alpha \) increases.

3 Maximum Likelihood Estimation

In this section, we derive the MLEs of the parameters of DIG distributions and their asymptotic distributions. These asymptotic distributions turned out to be multivariate normal which can be used to make statistical inference (confidence intervals and hypothesis testing) about the parameters of DIG distributions.

3.1 DIG-1: Maximum Likelihood Estimation

Let \(z_{2,1}, z_{2,2}, \ldots , z_{2,n}\) be a r.s. from DIG-1\((\beta ,\mu _1, \lambda _1,\mu _2, \lambda _2)\) distribution. The log-likelihood function is given by

where \(\textbf{1}_{A}= 1 (0)\) if A is true (false) is the indicator function.

The MLEs of \((\beta ,\mu _1, \lambda _1,\mu _2, \lambda _2)\) are:

where

Moreover, the asymptotic distribution of the MLEs is given by:

as \(n\rightarrow \infty \),

where \( \overset{d}{\longrightarrow }\) denotes convergence in distribution and MVN stands for multivariate normal distribution.

3.2 DIG-2: Maximum Likelihood Estimation

Let \(z_{2,1}, z_{2,2}, \ldots , z_{2,n}\) be a r.s. from DIG-2\((\beta ,\mu _1, \mu _2, \lambda )\) distribution. The log-likelihood function is given by

The MLE of \(\beta \) is

and the MLEs of \((\mu _1,\mu _2, \lambda )\) are the solutions of the normal equations:

It follows that

Moreover, the asymptotic distribution of the MLEs is given by: as \(n\rightarrow \infty \),

3.3 DIG-3: Maximum Likelihood Estimation

Let \(z_{2,1}, z_{2,2}, \ldots , z_{2,n}\) be a r.s. from DIG-3\((\beta , \mu , \lambda _1, \lambda _2)\) distribution. The log-likelihood function is given by

The MLE of \(\beta \) is

and the MLEs of \((\mu , \lambda _1, \lambda _2)\) are the solutions of the normal equations:

It follows that

where \({\widehat{\mu }}\) is the solution in \(\mu \) of the cubic equation:

where

The discriminant of the above cubic equation is given by

and it is well known that if this is negative, the cubic equation has a unique real root. This will imply that the MLEs \( {\widehat{\lambda }}_1 \) and \( {\widehat{\lambda }}_2\) are also unique.

Moreover, the asymptotic distribution of the MLEs is given by:

as \(n\rightarrow \infty \),

3.4 DIG-4: Maximum Likelihood Estimation

Let \(z_{1,1}, z_{1,2}, \ldots , z_{1,n}\) be a r.s. from DIG-4\((\beta ,\mu , \lambda )\) distribution. The log-likelihood function is given by

The MLEs of \((\beta ,\mu , \lambda )\) are:

Moreover, the asymptotic distribution of the MLEs is given by:

as \(n\rightarrow \infty \),

4 Simulations

The purpose of this section is to perform simulation studies to evaluate the behaviour of the MLEs of the parameters of the proposed four DIG distributions. Such behaviour will be evaluated in terms of the bias, mean-square error of the MLEs and the coverage probability of the 95% confidence intervals of the parameters. All computations in the simulation studies were done using the R language Version 4.0.5 for Windows.

To generate a random sample of size n, \(Z_{2,1}, Z_{2,2}, \ldots , Z_{2,n}\), from DIG-1, DIG-2 and DIG-3 distributions, we use the following algorithms:

-

1.

Generate \(Y_i \sim Bernoulli (\beta ), \ i=1, 2,\ldots ,n;\)

-

2.

Generate \(X_{1,i} \sim IG (\mu _1, \lambda _1), \ i=1, 2,\ldots ,n;\)

-

3.

Generate \(X_{2,i} \sim IG (\mu _2, \lambda _2), \ i=1, 2,\ldots ,n;\)

-

4.

Set \(Z_{2,i}=Y_i\; X_{1,i}- (1-Y_i) \;X_{2,i}, \ i=1, 2,\ldots ,n.\)

To generate a random sample of size n, \(Z_{1,1}, Z_{1,2}, \ldots , Z_{1,n}\), from DIG-4 distribution, we use the following algorithm:

-

1.

Generate \(Y_i \sim Bernoulli (\beta ), \ i=1, 2,\ldots ,n;\)

-

2.

Generate \(X_{i} \sim IG (\mu , \lambda ), \ i=1, 2,\ldots ,n;\)

-

3.

Set \(Z_{1,i}= (2 Y_i-1) \; X_{i}, \ i=1, 2,\ldots ,n.\)

The sample sizes considered in the simulation studies are \(n=50, 100, \ldots , 500.\)

The above process of generating random data from DIG distributions is repeated \(M=10,000\) times. In each of the M repetitions, the MLEs of the parameters and their standard errors (S.E.) were calculated using the expressions given in Subsections 3.1 to 3.4.

Measures examined in these simulation studies are:

-

(1)

Bias of the MLE \({\widehat{\nu }}\) of the parameter \(\nu =\beta , \mu _1, \lambda _1, \mu _2, \lambda _2\):

$$\begin{aligned} \textrm{Bias} ({\widehat{\nu }})= {1\over M} \sum _{i=1}^M ({\widehat{\nu }}_i-\nu ), \end{aligned}$$where \({\widehat{\nu }}_i\) is the MLE of the parameter \(\nu \) in the ith simulation repetition.

-

(2)

Mean square error (MSE) of the MLE \({\widehat{\nu }}\) of the parameter \(\nu \):

$$\begin{aligned} \textrm{MSE} ({\widehat{\nu }})= {1\over M} \sum _{i=1}^M ({\widehat{\nu }}_i-\nu )^2. \end{aligned}$$ -

(3)

Coverage probability (CP) of 95% confidence intervals of the parameter \(\nu \):

$$\begin{aligned} \textrm{CP}(\nu )= {1\over M} \sum _{i=1}^M \textbf{1}_{\{\nu \in (L_i, U_i)\}}, \end{aligned}$$where \(L_i= {\widehat{\nu }}_i - 1.96 \; S.E.({\widehat{\nu }}_i), \qquad U_i= {\widehat{\nu }}_i + 1.96 \; S.E.({\widehat{\nu }}_i), \qquad i=1,2, \ldots , M.\)

The reported figures of the simulation studies support the following conclusions:

-

1.

Figures 5, 6, 7, 8 show that the absolute biases of the MLEs of the parameters are small and tend to zero for large n.

-

2.

Figures 9, 10, 11, 12 show that the MSE of the MLEs of the parameters are small and decrease as n increases.

-

3.

Figures 13, 14, 15, 16 show that the coverage probability of 95% confidence intervals of the parameters is close to the nominal level of 95%.

The above conclusions show that the MLEs of the parameters of the DIG distributions are well behaved for point estimation and confidence intervals.

5 Application

In this section, we apply the proposed DIG models to a real data set for illustration. The description of the data is as follows.

In an online final exam at Kuwait university during Covid-19 shut down, students are requested to write down their solutions on paper sheets, scan these sheets as a “pdf” file and send such file to the instructor via Teams Chat. The time of submitting the solution file of each student is recorded automatically on the Teams system. Here, we are interested in modelling the difference between the time (in minutes) spent to submitting the solution file \(t_i\) and the two hours exam period of 38 students, i.e. \(z_i= t_i -120, \ i=1,2, \ldots , 38.\)

The data set is given below.

− 18.06, −17.45, −9.90, −8.62, −6.14, −3.47, −2.57, −2.43, − 1.56, 0.84, 1.14, 1.26, 1.34, 1.58, 1.81, 1.82, 1.89, 2.11, 2.23, 2.26, 2.33, 2.36, 2.40, 2.43, 2.52, 2.89, 2.92, 3.25, 3.30, 3.30, 3.47, 3.71, 3.77, 4.02, 4.41, 4.85, 5.20, 7.94 where negative (positive) value means the student submitted the solution file earlier (later) than the two hours exam time.

Table 1 shows the MLE’s of the parameters, their standard errors (S.E.’s) and the maximized log-likelihood of the DIG models. Note that for DIG-3, the discriminant \(\Delta = -1.08345\times 10^{17}\), showing that the MLE \({\widehat{\mu }}\) is unique.

Table 2 shows two goodness-of-fit tests, Anderson-Darling (AD) and Cramer von-Misses (CvM) tests. Clearly, this table shows that all DIG models pass the two tests, i.e., we accept the null hypothesis that the data are drawn from each of the DIG models. However, the test statistics (p-value) for DIG-1 and DIG-2 are much smaller (larger) than those for DIG-3 and DIG-4.

Since DIG-2, DIG-3 and DIG-4 models are nested in DIG-1 model, we can use the likelihood ratio test (LRT) to test each of the following hypotheses:

-

(i)

\(H_0: \lambda _1=\lambda _2\) (DIG-2 model) versus \(H_1: \lambda _1\ne \lambda _2\) (DIG-1 model)

-

(ii)

\(H_0: \mu _1=\mu _2\) (DIG-3 model) versus \(H_1: \mu _1\ne \mu _2\) (DIG-1 model)

-

(iii)

\(H_0: \mu _1=\mu _2, \lambda _1=\lambda _2\) (DIG-4 model) versus \(H_1: \mu _1\ne \mu _2, \lambda _1\ne \lambda _2\) (DIG-1 model)

Table 3 shows that DIG-2 model cannot be rejected for the given data.

We have seen above that LR test favour the DIG-2 model to be suitable for the given data. This conclusion is also supported by the Probability-Probability (P-P) plots presented in Fig. 17 and the Quantile-Quantile (Q-Q) plots presented in Fig. 18.

6 Conclusion and Comments

Double inverse Gaussian distribution, presented here, has been formed by a procedure proposed by Aly (2018). This procedure is completely different from the procedures adopted in the literature. The unified approach, adopted here, is quite general and can be used to formulate double distributions for various classes of distributions. A natural extension of such double distributions is to include possible covariates to allow more flexibility for modelling purposes. We hope that the model presented here will be found useful for data analysts.

References

Aly E (2018) A unified approach for developing Laplace-type distributions. J Indian Soc Probab Stat 19:245–269

Armagan A, Dunson D, Lee J (2013) Generalized double Pareto shrinkage. Statistica Sinica 83:119–143

Balakrishnan N, Kocherlakota S (1985) On the double Weibull distribution: order statistics and estimation. Sankhya Indian J Stat Series B 47:161–178

Balakrishnan N, Kundu D (2019) Birnbaum-saunders distribution: a review of models, analysis, and applications (with discussion). Appl Stoch Models Bus Ind 35:4–125

Bindu P, Sangita K (2015) Double Lomax distribution and its applications. Statistica 75:331–342

de Zea Bermudez P, Kotz S (2010a) Parameter estimation of the generalized Pareto distribution-Part I. J Stat Plan Inference 140:1353–1373

de Zea Bermudez P, Kotz S (2010b) Parameter estimation of the generalized Pareto distribution-Part II. J Stat Plan Inference 140:1374–1388

Govindarajulu Z (1966) Best linear estimates under symmetric censoring of the parameters of a double exponential population. J Am Stat Assoc 61:248–258

Gupta RC, Akman O (1995) On the reliability studies of a weighted inverse gaussian model. J Stat Plan Inference 48:69–83

Gupta RC, Akman O (1996) Estimation of coefficient of variation in a weighted inverse gaussian model. Appl Stoch Models Bus Industry 12:255–263

Gupta RC, Akman O (1997) Estimation of critical points in the mixture inverse gaussian model. Stat Papers 38:445–452

Gupta RC, Akman O (1998) Statistical inference based on the length-biased data for the inverse-gaussian distribution. Statistics 31:325–337

Gupta RC, Kundu D (2011) Weighted inverse gaussian - a versatile lifetime model. J Appl Stat 38:2695–2708

Halvarsson D (2020) Maximum likelihood estimation of asymmetric double type II Pareto distributions. J Stat Theor Pract 22(14):1–34

Ibrahim M, Mohammed W, Yousof HM (2020) Bayesian and classical estimation for the one parameter double Lindley model. Pakistan J of Stat Oper Res 16:409-420

Kantam RRL, Narasimham VL (1991) Linear estimation in reflected gamma distribution. Sankhya Indian J Stat Series B 53:25–47

Kumar SC, Jose R (2019) On double Lindley distribution and some of its properties. Am J Math Manag Sci 38:23–43

Lad F, Sanfilippo G, Agrò G (2015) Extropy: complementary dual of entropy. Stat Sci 30:40–58

Nadarajah S, Afuecheta E, Chan S (2013) A double generalized Pareto distribution. Stat Probab Lett 83:2656–2663

Plucinska A (1965) On certain problems connected with a division of a normal population into parts. Zastosow Mat 8:117–125

Plucinska A (1966) On a general form of the probability density function and its application to the investigation of the distribution of rheostat resistence. Zastosow Mat 9:9–19

Plucinska A (1967) The reliability of a compound system under consideration of the system elements prices. Zastosow Mat 9:123–134

Rao AVD, Narasimham VL (1989) Linear estimation in double Weibull distribution. Sankhya Indian J Stat Series B 51:24–64

Sato S, Inoue J (1994) Inverse Gaussian distribution and its application. Electron Commun Japan Part III Fundam Electron Sci 77:32–42

Shannon CE (1948) A mathematical theory of communication. Bell Syst Tech J 27:379–423

Tsallis C (1988) Possible generalization of Boltzmann-Gibbs statistics. J Stat Phys 52:479–487

Acknowledgements

We are grateful to the Editor-in-Chief and anonymous referees for their valuable comments which greatly improved the presentation of the paper.

Funding

No funding for this research.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflicts of interest

The authors declare no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Almutairi, A., Ghitany, M.E., Alothman, A. et al. Double Inverse-Gaussian Distributions and Associated Inference. J Indian Soc Probab Stat 24, 151–182 (2023). https://doi.org/10.1007/s41096-023-00150-z

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s41096-023-00150-z

Keywords

- Random sign transform

- Random sign mixture transform

- Inverse-Gaussian distribution

- Maximum likelihood estimation

- Monte Carlo simulations