Abstract

We present a unified approach for the development and the study of discrete and continuous Laplace-type distributions. As illustrations, we use the proposed approach to develop and study Laplace-type versions of the generalized Pareto, the Geometric, the Poisson and the Negative Binomial distributions.


1 Introduction and Preliminaries

The Laplace distribution (LD) has been studied extensively in the literature. A number of important representations of the LD are given and studied in Kotz et al. [11]. In this paper we present and study in detail two of these representations (transformations) and use them to present a unified approach for the development and the study of discrete and continuous Laplace-type distributions. These transformations are defined next.

Definition 1

The Random Sign Transformation (RST). Assume X and Y are independent rv and Y is \(Bernoulli(\beta ).\) The RST of X is given by \(Z_{1}=\left( 2Y-1\right) X.\)

Definition 2

The Random Sign Mixture Transformation (RSMT). Assume \(X_{1},X_{2},Y\) are independent rv and Y is \(Bernoulli(\beta ).\) The RSMT of \(X_{1}\) and \(X_{2}\) is given by \(Z_{2}=YX_{1}-\left( 1-Y\right) X_{2}.\)

Note that the RST is a special case of the RSMT.
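The three transformations are easy to simulate. The following is a minimal sketch using only Python's standard library; the function names `rst`, `rsmt`, `dt` and `sample_rsmt` are illustrative and not taken from the paper. The final check makes concrete the remark that the RST is the RSMT with \(X_{1}\overset{d}{=}X_{2}\).

```python
import random
from typing import Callable


def rst(y: int, x: float) -> float:
    """Random Sign Transformation: Z1 = (2Y - 1) X for realized y in {0, 1} and x."""
    return (2 * y - 1) * x


def rsmt(y: int, x1: float, x2: float) -> float:
    """Random Sign Mixture Transformation: Z2 = Y X1 - (1 - Y) X2."""
    return y * x1 - (1 - y) * x2


def dt(x1: float, x2: float) -> float:
    """Difference Transformation: Z3 = X1 - X2."""
    return x1 - x2


def sample_rsmt(beta: float,
                draw_x1: Callable[[random.Random], float],
                draw_x2: Callable[[random.Random], float],
                n: int, seed: int = 0) -> list:
    """Draw n independent copies of Z2; draw_x1 / draw_x2 return one draw of X1 / X2."""
    rng = random.Random(seed)
    out = []
    for _ in range(n):
        y = 1 if rng.random() < beta else 0  # Y ~ Bernoulli(beta)
        out.append(rsmt(y, draw_x1(rng), draw_x2(rng)))
    return out


# With X1 and X2 equal (here even pointwise) the RSMT reduces to the RST.
assert all(rst(y, 2.5) == rsmt(y, 2.5, 2.5) for y in (0, 1))
```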

The cumulative distribution function (CDF), the quantile function (QF), the probability density function (pdf) and the moment generating function (MGF) of the random variable V will be denoted, respectively, by \(F_{V}(\cdot ),F_{V}^{-1}(\cdot ),f_{V}(\cdot )\) and \(M_{V}(\cdot ).\) The entropy (Shannon [19]) of V is defined as \(H(V)=-E\left\{ \ln f_{V}(V)\right\} .\) The reliability of V relative to the random variable U is defined as \(R(V,U)=P(U<V)\). For any \(0\le \alpha \le 1,\) we write \(\overline{\alpha }=1-\alpha .\)

Lemma 1

The following results hold for \(Z_{1}\) of the RST:

and

Proof

By

we get (1). Similarly we get (2). For (3) we note that \(Z_{1}^{+}\) equals \(X^{+}\) if \(Y=1\) and \((-X)^{+}=X^{-}\) if \(Y=0.\) Similarly, \(Z_{1}^{-}\) equals \(X^{-}\) if \(Y=1\) and \((-X)^{-}=X^{+}\) if \(Y=0.\) The proofs of (4) and (5) are straightforward. By (4) and (5) we obtain

and

Hence (6)–(8) are obtained by straightforward computation.

Lemma 2

The following results hold for \(Z_{2}\) of the RSMT:

and

Proof

By

we get (9). Similarly we get (10). For (11) we note that \(Z_{2}^{+}\) equals \(X_{1}^{+}\) if \(Y=1\) and \((-X_{2})^{+}=X_{2}^{-}\) if \(Y=0.\) Similarly, \(Z_{2}^{-}\) equals \(X_{1}^{-}\) if \(Y=1\) and \((-X_{2})^{-}=X_{2}^{+}\) if \(Y=0.\) The proof of (12) is straightforward. By (12) we obtain (13) using

Definition 3

The difference transformation (DT). Assume \(X_{1},X_{2}\) are independent rv. The DT of \(X_{1}\) and \(X_{2}\) is given by \(Z_{3}=X_{1}-X_{2}.\)

Different versions of the LD are obtained using the DT, the RST and the RSMT, as explained next (see, for example, Sections 2.2 and 3.2 of Kotz et al. [11]). The RST of \(X\sim EXP(\lambda )\), the exponential distribution with mean \(\lambda \), results in the LD,

The RSMT, \(Z_{2}=YX_{1}-\left( 1-Y\right) X_{2}\), with \(X_{i}\sim EXP(\lambda _{i}),i=1,2,\) and \(X_{1},X_{2}\) and Y independent, results in the LD,

The DT, \(Z_{3}=X_{1}-X_{2},\) with \(X_{i}\sim EXP(\lambda _{i}),i=1,2,\) and \(X_{1}\) and \(X_{2}\) independent, results in the LD,

Note that taking \(\beta =\frac{\lambda _{1}}{\lambda _{1}+\lambda _{2}}\) in (14) results in \(Z_{2}\overset{d}{=}Z_{3}\).
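This identity can be checked by simulation. By the memorylessness of the exponential distribution, conditional on \(Z_{3}>0\) the value \(Z_{3}\) is again \(EXP(\lambda _{1})\), and conditional on \(Z_{3}<0\) the value \(-Z_{3}\) is \(EXP(\lambda _{2})\). The sketch below (standard library only; the seed, sample size and parameter values are arbitrary) estimates \(P(Z_{3}>0)\) and both conditional means.

```python
import random


def dt_exponential_check(lam1: float, lam2: float, n: int = 200_000, seed: int = 1):
    """Simulate Z3 = X1 - X2 with X_i ~ EXP(mean lam_i) and return Monte Carlo
    estimates of (P(Z3 > 0), E(Z3 | Z3 > 0), E(-Z3 | Z3 < 0))."""
    rng = random.Random(seed)
    pos, neg = [], []
    for _ in range(n):
        # random.expovariate expects the rate 1 / mean, not the mean
        z = rng.expovariate(1 / lam1) - rng.expovariate(1 / lam2)
        (pos if z > 0 else neg).append(z)
    return len(pos) / n, sum(pos) / len(pos), -sum(neg) / len(neg)


# Under Z3 =d Z2 with beta = lam1 / (lam1 + lam2) we expect roughly (2/3, 2.0, 1.0).
p, m_pos, m_neg = dt_exponential_check(2.0, 1.0)
```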

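In general, the RSMT and the DT result in two different distributions. For example, when \(X_{1}\) and \(X_{2}\) are iid uniform rv on (0, 1), the DT results in the triangular density

$$\begin{aligned} f_{Z_{3}}(x)=1-\left| x\right| ,\quad -1<x<1, \end{aligned}$$

while the RSMT results in

$$\begin{aligned} f_{Z_{2}}(x)=\left\{ \begin{array} [c]{cc} \overline{\beta }, &{}\quad -1<x<0\\ \beta , &{}\quad 0\le x<1 \end{array} \right. \end{aligned}$$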

Another more general example is as follows. Let \(X_{1}\) and \(X_{2}\) be independent rv with \(E(X_{i})=\mu _{i}\) and \(Var(X_{i})=\sigma _{i} ^{2},i=1,2.\) In this case the DT results in \(E(Z_{3})=\mu _{1}-\mu _{2}\) and \(Var(Z_{3})=\sigma _{1}^{2}+\sigma _{2}^{2},\) whereas the RSMT with \(\beta =\frac{\mu _{1}}{\mu _{1}+\mu _{2}}\) results in \(E(Z_{2})=\mu _{1}-\mu _{2}\) and \(Var(Z_{2})=\frac{1}{\mu _{1}+\mu _{2}}\left\{ \mu _{1}\sigma _{1}^{2}+\mu _{2}\sigma _{2}^{2}+\mu _{1}\mu _{2}\left( \mu _{1}+\mu _{2}\right) \right\} .\)
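The moment calculation above can be checked with a short helper, a sketch assuming only that the first two moments of \(X_{1}\) and \(X_{2}\) exist. Using \(E(Z_{2}^{2})=\beta E(X_{1}^{2})+\overline{\beta }E(X_{2}^{2})\) gives the general form \(Var(Z_{2})=\beta \sigma _{1}^{2}+\overline{\beta }\sigma _{2}^{2}+\beta \overline{\beta }(\mu _{1}+\mu _{2})^{2}\), which reduces to the expression above when \(\beta =\mu _{1}/(\mu _{1}+\mu _{2})\). The numbers in the example are arbitrary.

```python
def rsmt_moments(beta, mu1, var1, mu2, var2):
    """Mean and variance of Z2 = Y X1 - (1 - Y) X2 from the moments of X1 and X2."""
    mean = beta * mu1 - (1 - beta) * mu2
    var = beta * var1 + (1 - beta) * var2 + beta * (1 - beta) * (mu1 + mu2) ** 2
    return mean, var


def dt_moments(mu1, var1, mu2, var2):
    """Mean and variance of Z3 = X1 - X2 for independent X1, X2."""
    return mu1 - mu2, var1 + var2


mu1, var1, mu2, var2 = 3.0, 2.0, 1.0, 5.0
beta = mu1 / (mu1 + mu2)                      # the choice used in the text
m2, v2 = rsmt_moments(beta, mu1, var1, mu2, var2)
closed_form = (mu1 * var1 + mu2 * var2 + mu1 * mu2 * (mu1 + mu2)) / (mu1 + mu2)
```

With these values both transformations have mean 2, but the RSMT variance is 5.75 while the DT variance is 7.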

The RST, RSMT and DT are most useful when applied to nonnegative rv to create new rv with negative and positive values. In this paper we will focus our attention on the RST and RSMT when applied to nonnegative rv.

In Sects. 2 and 3 we study in detail the RST and the RSMT of nonnegative rv. In Sect. 4 we introduce and study the Double Generalized Pareto distributions. In Sect. 5 we introduce and study new double discrete distributions based on the discrete Generalized Pareto, the Geometric, the Poisson, the Binomial and the Negative Binomial distributions. In Sect. 6 we consider the distributions of sums of independent rv obtained using the RST and the RSMT of nonnegative rv. In Sect. 7 we apply the Double Poisson distribution to two real data sets.

2 The RST of a Nonnegative rv

We assume in this section that \(X\ge 0\) in the RST.

Lemma 3

In addition to Lemma 1, the following results hold:

-

1.

\(Z_{1}^{+}=YX,Z_{1}^{-}=\left( 1-Y\right) X\) and \(\left| Z_{1}\right| \overset{d}{=}X\)

-

2.

\(E\left( \left| Z_{1}\right| ^{r}\right) =E(X^{r}),\) for \(r=1,2,3,\ldots \)

-

3.

$$\begin{aligned} F_{Z_{1}}(x)=\left\{ \begin{array} [c]{cc} \overline{\beta }(1-F_{X}(\left| x\right| )), &{}\quad x<0\\ \overline{\beta }+\beta F_{X}(x), &{}\quad x\ge 0 \end{array} \right. , \end{aligned}$$(15)

$$\begin{aligned} f_{Z_{1}}(x)=\left\{ \begin{array} [c]{cc} \overline{\beta }f_{X}(\left| x\right| ), &{}\quad x<0\\ \beta f_{X}(x), &{}\quad x\ge 0 \end{array} \right. \end{aligned}$$(16)

and

$$\begin{aligned} F_{Z_{1}}^{-1}(t)=\left\{ \begin{array} [c]{cc} -F_{X}^{-1}(1-\frac{t}{\overline{\beta }}), &{} \quad 0<t\le \overline{\beta }\\ F_{X}^{-1}\left( \frac{t-\overline{\beta }}{\beta }\right) , &{} \quad \overline{\beta }\le t<1 \end{array} \right. . \end{aligned}$$
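Lemma 3 makes inverse-transform sampling of \(Z_{1}\) straightforward once \(F_{X}\) and \(F_{X}^{-1}\) are available. The sketch below codes (15) and the quantile function for a continuous \(X\ge 0\) and checks that they invert each other, using \(X\sim EXP(\lambda )\) as an illustrative choice (parameter values arbitrary). The helper assumes continuous X: for discrete X the case \(x<0\) requires \(P(X\ge \left| x\right| )\), which differs from \(1-F_{X}(\left| x\right| )\) at the support points.

```python
import math
from typing import Callable


def z1_cdf(x: float, beta: float, cdf_x: Callable[[float], float]) -> float:
    """CDF (15) of Z1 = (2Y - 1) X for a continuous X >= 0 with CDF cdf_x."""
    if x < 0:
        return (1 - beta) * (1 - cdf_x(abs(x)))
    return (1 - beta) + beta * cdf_x(x)


def z1_quantile(t: float, beta: float, qf_x: Callable[[float], float]) -> float:
    """Quantile function of Z1 from Lemma 3, for 0 < t < 1."""
    if t <= 1 - beta:
        return -qf_x(1 - t / (1 - beta))
    return qf_x((t - (1 - beta)) / beta)


# Illustration: X ~ EXP(lam) with mean lam, i.e. the Laplace case of the RST.
lam, beta = 2.0, 0.3
cdf_exp = lambda x: 1 - math.exp(-x / lam)
qf_exp = lambda u: -lam * math.log(1 - u)
```

Inverse-transform sampling then amounts to evaluating `z1_quantile(u, beta, qf_exp)` at uniform draws u in (0, 1).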

Lemma 4

The entropy of \(Z_{1}\) is given by

if X is continuous and

if X is discrete, where

We will not give a proof of Lemma 4 because it follows from Lemma 7 as a special case.

Lemma 5

For \(i=1,2\), assume that \(Y_{i}\sim Bernoulli(\beta _{i}),X_{i}>0\) is continuous and \(Z_{1,i}=(2Y_{i}-1)X_{i},\) where \(Y_{1} ,Y_{2},X_{1}\) and \(X_{2}\) are independent. Then,

Proof

Note that (18) follows from (15), (16) and

Theorem 1

Assume that \(\underline{T}(\underline{X})\) is the MLE of \(\underline{\theta }\) based on a random sample from \(f_{X}(x;\underline{\theta })\) and let \(I_{X}(\underline{\theta })\) be the corresponding Fisher information matrix. Let \(Z_{1,1},Z_{1,2},\ldots ,Z_{1,n}\) be a random sample from

Assume that \(0<n_{1}=\sum I(z_{1,i}\ge 0)<n.\) Let \(\widehat{\beta }\) and \(\widehat{\underline{\theta }}\) be the MLEs of \(\beta \) and \(\underline{\theta }\). Then,

-

1.

$$\begin{aligned} \widehat{\underline{\theta }}=\underline{T}(\left| Z_{1,1}\right| ,\left| Z_{1,2}\right| ,\ldots ,\left| Z_{1,n}\right| ) \end{aligned}$$(19)

-

2.

If X is continuous

$$\begin{aligned} \widehat{\beta }=\frac{n_{1}}{n}. \end{aligned}$$(20)

-

3.

If X is discrete with \(f_{X}(0;\underline{\theta })>0\)

$$\begin{aligned} \widehat{\beta }=\frac{\sum I(z_{1,i}>0)}{\sum I(z_{1,i}>0)+\sum I(z_{1,i}<0)}. \end{aligned}$$(21)

-

4.

$$\begin{aligned} \sqrt{n}\left( \begin{array} [c]{c} \widehat{\beta }-\beta \\ \widehat{\underline{\theta }}-\underline{\theta } \end{array} \right) \overset{d}{\longrightarrow }N\left( \underline{0},\left[ \begin{array} [c]{cc} \beta \left( 1-\beta \right) &{}\quad 0\\ 0 &{}\quad I_{X}^{-1}(\underline{\theta }) \end{array} \right] \right) . \end{aligned}$$(22)

Proof

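For simplicity, we will prove the results when X is continuous and \(\underline{\theta }\) has dimension 1. By (16), the likelihood function (LF) of the sample is given by \(L(\beta ,\theta )=\beta ^{n_{1}}\overline{\beta }^{\,n-n_{1}}\prod _{i=1}^{n}f_{X}(\left| z_{1,i}\right| ;\theta ).\)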

Hence we obtain (19) and (20). Note that

and

Hence

and

This proves (22).
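As an illustration of Theorem 1, the following sketch computes the MLEs in two simple cases: the RST of \(X\sim EXP(\lambda )\) with mean \(\lambda \) (continuous, so (20) applies and \(\widehat{\lambda }\) is the sample mean of the \(\left| z_{1,i}\right| \)), and the RST of a Geometric X with pdf \(\overline{\theta }\theta ^{x},x=0,1,\ldots \) (discrete with \(f_{X}(0)>0\), so (21) applies and zeros are ignored when estimating \(\beta \)). The Geometric MLE \(\widehat{\theta }=\overline{x}/(1+\overline{x})\) solves its score equation; the function names are illustrative.

```python
def mle_rst_exponential(z):
    """MLEs for the RST of X ~ EXP(lam) (mean lam): (20) gives beta_hat = n1 / n,
    and (19) applies the exponential MLE (the sample mean) to |z|."""
    n = len(z)
    n1 = sum(1 for v in z if v >= 0)
    return n1 / n, sum(abs(v) for v in z) / n


def mle_dg2(z):
    """MLEs for the RST of X with pmf (1 - theta) theta^x, x = 0, 1, ...
    f_X(0) > 0, so (21) uses only the signs of the nonzero observations;
    theta_hat = xbar / (1 + xbar) is the Geometric MLE applied to |z| as in (19)."""
    pos = sum(1 for v in z if v > 0)
    neg = sum(1 for v in z if v < 0)
    xbar = sum(abs(v) for v in z) / len(z)
    return pos / (pos + neg), xbar / (1 + xbar)
```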

3 The RSMT of Nonnegative rv

We assume in this section that \(X_{1}\ge 0\) and \(X_{2}\ge 0\) in the RSMT.

Lemma 6

In addition to the results of Lemma 2 we have

-

1.

\(\left| Z_{2}\right| \overset{d}{=}YX_{1}+\left( 1-Y\right) X_{2}\)

-

2.

\(\left| Z_{2}\right| ^{r}\overset{d}{=}YX_{1}^{r}+\left( 1-Y\right) X_{2}^{r}\) and \(E\left( \left| Z_{2}\right| ^{r}\right) =\beta E\left( X_{1}^{r}\right) +\overline{\beta }E\left( X_{2}^{r}\right) \)

-

3.

$$\begin{aligned} F_{Z_{2}}(x)=\left\{ \begin{array} [c]{cc} \overline{\beta }(1-F_{X_{2}}(\left| x\right| )), &{}\quad x<0\\ \overline{\beta }+\beta F_{X_{1}}(x), &{}\quad x\ge 0 \end{array} \right. , \\ f_{Z_{2}}(x)=\left\{ \begin{array} [c]{cc} \overline{\beta }f_{X_{2}}(\left| x\right| ), &{}\quad x<0\\ \beta f_{X_{1}}(x), &{}\quad x\ge 0 \end{array} \right. \end{aligned}$$

and

$$\begin{aligned} F_{Z_{2}}^{-1}(t)=\left\{ \begin{array} [c]{cc} -F_{X_{2}}^{-1}(1-\frac{t}{\overline{\beta }}), &{}\quad 0<t\le \overline{\beta }\\ F_{X_{1}}^{-1}\left( \frac{t-\overline{\beta }}{\beta }\right) , &{} \quad \overline{\beta }\le t<1 \end{array} \right. . \end{aligned}$$

Lemma 7

Let H(Y) be as in (17). For the entropy of \(Z_{2}\) we have

-

1.

If \(X_{1}\) and \(X_{2}\) are continuous, or \(X_{1}\) and \(X_{2}\) are discrete with \(f_{X_{1}}(0)\cdot f_{X_{2}}(0)=0,\) then

$$\begin{aligned} H(Z_{2})=H(Y)+\beta H(X_{1})+\overline{\beta }H(X_{2}). \end{aligned}$$(23)

-

2.

If \(X_{1}\) and \(X_{2}\) are discrete with \(f_{X_{1}}(0)\cdot f_{X_{2}}(0)>0,\) then

$$\begin{aligned} H(Z_{2})&= \beta H(X_{1})+\overline{\beta }H(X_{2})+H(Y)+\beta f_{X_{1} }(0)\ln \left( \frac{\beta f_{X_{1}}(0)}{\beta f_{X_{1}}(0)+\overline{\beta }f_{X_{2}}(0)}\right) \nonumber \\&\quad +\,\overline{\beta }f_{X_{2}}(0)\ln \left( \frac{\overline{\beta }f_{X_{2}} (0)}{\beta f_{X_{1}}(0)+\overline{\beta }f_{X_{2}}(0)}\right) . \end{aligned}$$(24)

Proof

We will prove only (24). Assume that \(X_{1}\) and \(X_{2}\) are discrete with \(f_{X_{1}}(0)\cdot f_{X_{2}}(0)>0.\) Then,

Note that (24) follows from

and

Remarks

-

1.

If \(f_{X_{1}}(0)=f_{X_{2}}(0)=p\) in (24), then

$$\begin{aligned} H(Z_{2})=\beta H(X_{1})+\overline{\beta }H(X_{2})+\left( 1-p\right) H(Y). \end{aligned}$$

-

2.

Lemma 4 is the special case of Lemma 7 when \(X_{1}\overset{d}{=}X_{2}.\)
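Formula (24) can be checked numerically by building the pmf of \(Z_{2}\) directly from finite-support pmfs for \(X_{1}\) and \(X_{2}\). The sketch below uses two Binomial distributions (an arbitrary choice with \(f_{X_{1}}(0)f_{X_{2}}(0)>0\)), compares the directly computed entropy with (24), and then checks Remark 1 for a pair with \(f_{X_{1}}(0)=f_{X_{2}}(0)=\frac{1}{2}\). Entropies use natural logarithms.

```python
import math


def binom_pmf(n, p):
    """Binomial(n, p) pmf as a list indexed by k = 0..n."""
    return [math.comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]


def entropy(probs):
    """Shannon entropy (natural log) of an iterable of probabilities."""
    return -sum(q * math.log(q) for q in probs if q > 0)


def z2_pmf(beta, f1, f2):
    """pmf of Z2 = Y X1 - (1 - Y) X2 for pmfs f1, f2 given as lists on 0, 1, 2, ..."""
    pmf = {}
    for k, q in enumerate(f1):
        pmf[k] = pmf.get(k, 0.0) + beta * q
    for k, q in enumerate(f2):
        pmf[-k] = pmf.get(-k, 0.0) + (1 - beta) * q  # k = 0 merges with X1's zero
    return pmf


def entropy_formula_24(beta, f1, f2):
    """Right-hand side of (24); requires f1[0] * f2[0] > 0."""
    bb = 1 - beta
    c = beta * f1[0] + bb * f2[0]  # P(Z2 = 0)
    return (beta * entropy(f1) + bb * entropy(f2) + entropy([beta, bb])
            + beta * f1[0] * math.log(beta * f1[0] / c)
            + bb * f2[0] * math.log(bb * f2[0] / c))


beta, f1, f2 = 0.35, binom_pmf(3, 0.4), binom_pmf(2, 0.7)
direct = entropy(z2_pmf(beta, f1, f2).values())
```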

Lemma 8

For \(i=1,2\), assume that \(Y_{i}\sim Bernoulli(\beta _{i}),X_{i,j}>0,j=1,2\) are continuous and \(Z_{2,i}=Y_{i}X_{i,1}-\left( 1-Y_{i}\right) X_{i,2},\) where \(Y_{1},Y_{2}\) and the \(X^{\prime }s\) are independent. Then,

The proof of Lemma 8 is parallel to that of Lemma 5.

Remark

In the rest of this paper when the \(X^{\prime }s\) of the RSMT are discrete we will assume that \(f_{X_{2}}(0;\underline{\theta }_{2})=0.\)

Theorem 2

Assume, for \(j=1,2,\) that \(\underline{T}_{j,m} (\underline{X}_{j})\) is the MLE of \(\underline{\theta }_{j}\) based on a random sample of size m from \(f_{X_{j}}(x;\underline{\theta }_{j})\) and let \(I_{X_{j}}(\underline{\theta }_{j})\) be the corresponding Fisher information matrix. Let \(Z_{2,1},Z_{2,2},\ldots ,Z_{2,n}\) be a random sample from

Assume, without loss of generality, that \(z_{2,i}\ge 0,i=1,2,\ldots ,n_{1},0<n_{1}<n\) and \(z_{2,i}<0,i=n_{1}+1,\ldots ,n\). Let \(\widehat{\beta },\) \(\widehat{\underline{\theta }}_{1}\) and \(\widehat{\underline{\theta }}_{2}\) be the MLEs of \(\beta ,\underline{\theta } _{1}\) and \(\underline{\theta }_{2}\). Then,

and

Proof

For simplicity, we will prove the results when both \(\underline{\theta }_{1}\) and \(\underline{\theta }_{2}\) have dimension 1. It is clear that (25)–(27) follow from the result that the LF of the sample is given by

Note that

and

This completes the proof of (28).
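Because the likelihood factorizes into a Bernoulli part and separate parts for the nonnegative and the negative observations, the MLEs in Theorem 2 can be computed piecewise. The sketch below assumes \(X_{j}\sim EXP(\lambda _{j})\) with mean \(\lambda _{j}\), so that each \(\underline{T}_{j}\) is a sample mean; it illustrates that structure rather than reproducing (25)–(27), and the function name is hypothetical.

```python
def mle_rsmt_exponential(z):
    """MLEs for the RSMT with X1 ~ EXP(lam1), X2 ~ EXP(lam2) (means lam1, lam2).
    beta_hat = n1 / n; lam1_hat uses only the z >= 0; lam2_hat uses |z| for z < 0."""
    pos = [v for v in z if v >= 0]
    neg = [-v for v in z if v < 0]
    if not pos or not neg:
        raise ValueError("the theorem assumes 0 < n1 < n")
    return len(pos) / len(z), sum(pos) / len(pos), sum(neg) / len(neg)
```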

Next we consider the special case when \(X_{1}\) and \(X_{2}\) are from the same family but with some common parameters. In this case

Theorem 3

Let \(\widehat{\beta },\underline{\widehat{\delta } },\widehat{\underline{\theta }}_{1}\) and \(\widehat{\underline{\theta }}_{2}\) be the MLE of \(\beta ,\underline{\delta },\underline{\theta }_{1}\) and \(\underline{\theta }_{2}\) based on a random sample \(Z_{2,1},Z_{2,2},\ldots ,Z_{2,n}\) from

Assume that the Fisher information matrix associated with \(f(x;\underline{\delta },\underline{\theta })\) is given by

and \(0<\sum _{i=1}^{n}I(Z_{2,i}>0)<n.\) Then,

and \(\underline{\widehat{\delta }},\widehat{\underline{\theta }}_{1}\) and \(\widehat{\underline{\theta }}_{2}\) are obtained by solving the normal equations

and

In addition, we have

where

4 The Double Generalized Pareto distributions

Following the notation of de Zea Bermudez and Kotz [6], the rv X has the two-parameter Generalized Pareto distribution \(GP(\kappa ,\sigma )\) if its CDF and pdf are given by

and

with \(0\le x\le \frac{\sigma }{\kappa }\) if \(\kappa >0\) and \(0\le x<\infty \) if \(\kappa <0.\) Smith [22] proved the asymptotic normality of the MLEs of \(\kappa \) and \(\sigma \) for \(\kappa <0.5.\)

The first Double Generalized Pareto distribution, denoted by \(DGP(\beta ,\kappa ,\sigma ),\) is obtained using the RST when X has the \(GP(\kappa ,\sigma )\). By Lemma 1, the CDF, QF and pdf of the \(DGP(\beta ,\kappa ,\sigma )\) are given by

and

The MLE of the parameters of \(DGP(\beta ,\kappa ,\sigma )\) and the corresponding asymptotic theory are obtained using Theorem 1 and the results of Smith [22].

Note that \(DGP(\frac{1}{2},\kappa ,\sigma )\) appeared in the work of Armagan et al. [2] and Wang [24]. Nadarajah et al. [13] studied the distribution \(DGP(\frac{1}{2} ,\kappa ,\sigma )\) in detail and obtained the maximum likelihood estimators of its parameters.
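The CDF and quantile function of \(DGP(\beta ,\kappa ,\sigma )\) follow mechanically from Lemma 3 once the GP CDF is fixed. The sketch below assumes the common parametrization \(F_{X}(x)=1-(1-\kappa x/\sigma )^{1/\kappa }\), \(\kappa \ne 0\), which has the support stated above (upper endpoint \(\sigma /\kappa \) when \(\kappa >0\)); because the displayed GP formulas are not reproduced in this text, treat that form as an assumption. The helper names are hypothetical.

```python
def gp_cdf(x, kappa, sigma):
    """Assumed GP(kappa, sigma) CDF 1 - (1 - kappa x / sigma)^(1/kappa), kappa != 0."""
    if x <= 0:
        return 0.0
    if kappa > 0 and x >= sigma / kappa:  # at or beyond the upper endpoint
        return 1.0
    return 1 - (1 - kappa * x / sigma) ** (1 / kappa)


def gp_quantile(u, kappa, sigma):
    """Inverse of gp_cdf for 0 <= u < 1."""
    return sigma * (1 - (1 - u) ** kappa) / kappa


def dgp_cdf(x, beta, kappa, sigma):
    """CDF of DGP(beta, kappa, sigma): (15) with X ~ GP(kappa, sigma)."""
    if x < 0:
        return (1 - beta) * (1 - gp_cdf(-x, kappa, sigma))
    return (1 - beta) + beta * gp_cdf(x, kappa, sigma)


def dgp_quantile(t, beta, kappa, sigma):
    """Quantile function of DGP(beta, kappa, sigma) from Lemma 3, 0 < t < 1."""
    if t <= 1 - beta:
        return -gp_quantile(1 - t / (1 - beta), kappa, sigma)
    return gp_quantile((t - (1 - beta)) / beta, kappa, sigma)
```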

The second distribution, denoted by \(DGP(\beta ,\kappa _{1},\sigma _{1} ;\kappa _{2},\sigma _{2}),\) is obtained by assuming in the RSMT that \(X_{i}\) has the \(GP(\kappa _{i},\sigma _{i}),i=1,2.\) For this distribution, by Lemma 2, we have

and

Other important special cases of \(DGP(\beta ,\kappa _{1},\sigma _{1};\kappa _{2},\sigma _{2})\) are \(DGP(\beta ,\kappa ,\sigma _{1};\kappa ,\sigma _{2} ),\) \(DGP(\frac{1}{2},\kappa _{1},\sigma _{1};\kappa _{2},\sigma _{2}),DGP(\frac{1}{2},\kappa ,\sigma _{1};\kappa ,\sigma _{2}),DGP(\beta ,\kappa _{1},\sigma ;\kappa _{2},\sigma )\) and \(DGP(\frac{1}{2},\kappa _{1},\sigma ;\kappa _{2},\sigma ).\) In any of these special cases the MLEs of the parameters and the corresponding asymptotic theory are obtained using Theorem 2 or 3 and the results of Smith [22].

The rv X has the Generalized Pareto(IV) distribution GP-\(IV(\kappa ,\sigma ,\gamma )\) if its CDF and pdf are given by

and

where \(\gamma >0\) and \(0\le x\le \frac{\sigma }{\kappa ^{\gamma }}\) if \(\kappa >0\) and \(0\le x<\infty \) if \(\kappa <0.\) The information matrix for the parameters of GP-\(IV(\kappa ,\sigma ,\gamma )\) is given in Barazauskas [4].

Similar to \(DGP(\beta ,\kappa ,\sigma )\), we can use the RST to obtain the first Double Generalized Pareto (IV) distribution, denoted by DGP-\(IV(\beta ,\kappa ,\sigma ,\gamma )\). In addition, similar to \(DGP(\beta ,\kappa _{1} ,\sigma _{1};\kappa _{2},\sigma _{2})\), we can use the RSMT to obtain the second Double Generalized Pareto (IV) distribution, denoted by DGP-\(IV(\beta ,\kappa _{1},\sigma _{1},\gamma _{1};\kappa _{2},\sigma _{2},\gamma _{2})\).

5 Double Discrete Distributions

The development of integer-valued rv with negative and positive support has received increased attention in the past decade; see, for example, Skellam [21], Kozubowski and Inusah [12], Alzaid and Omair [1], Barbiero [5], Seetha Lekshmi and Sebastian [18] and Bakouch et al. [3]. Integer-valued rv with negative and positive support have recently been used in the development of stationary integer-valued time series with negative and positive support. Some examples of these models are given in Freeland [8] and Nastić et al. [14].

In this section we introduce and study a number of double discrete distributions using the RST and the RSMT. To avoid the identifiability problem at zero when using the RSMT for discrete rv, we only use distributions for \(X_{1}\) and \(X_{2}\) such that \(f_{X_{1}}(0)\cdot f_{X_{2}}(0)=0.\) In the following, without loss of generality, we assume that \(f_{X_{2}}(0)=0.\)

5.1 The Double Discrete Generalized Pareto Distributions

The rv X has the two-parameter Discrete Generalized Pareto distribution \(DGP(\kappa ,\sigma )\) (see Buddana and Kozubowski [7] for an alternative definition) if its CDF and pdf are given by

and

where \(\left\lfloor \cdot \right\rfloor \) is the floor function and \(x=0,1,\ldots ,\left\lfloor \frac{\sigma }{\kappa }\right\rfloor -1\) if \(\kappa >0\) and \(x=0,1,2,\ldots \) if \(\kappa <0.\)

The first Double Discrete Generalized Pareto distribution, denoted by DDGP-\(I(\beta ,\kappa ,\sigma ),\) is obtained using the RST when X has the \(DGP(\kappa ,\sigma )\). By Lemma 1, the CDF and pdf of the DDGP-\(I(\beta ,\kappa ,\sigma )\) are given by

and

The second distribution, denoted by DDGP-\(II(\beta ,\kappa _{1},\sigma _{1},\kappa _{2},\sigma _{2}),\) is obtained by assuming in the RSMT that \(X_{1}\sim DGP(\kappa _{1},\sigma _{1})\) and \(X_{2}\sim \left( DGP(\kappa _{2},\sigma _{2})+1\right) .\) For this distribution, by Lemma 2, we have

and

Important special cases of DDGP-\(II(\beta ,\kappa _{1},\sigma _{1},\kappa _{2},\sigma _{2})\) are DDGP-\(II(\beta ,\kappa ,\sigma _{1},\sigma _{2})\overset{d}{=}DDGP\)-\(II(\beta ,\kappa ,\sigma _{1},\kappa ,\sigma _{2})\) and DDGP-\(II(\beta ,\kappa _{1},\kappa _{2},\sigma )\overset{d}{=}DDGP\)-\(II(\beta ,\kappa _{1},\sigma ,\kappa _{2},\sigma ).\)

5.2 The Double Geometric Distributions Using the RST

The rv U is said to have the Geometric distribution \(Geo_{1}(\theta )\) (resp. \(Geo_{0}(\theta )\)) if its pdf is given by \(f_{U}(x)=\overline{\theta } \theta ^{x-1},x=1,2,\ldots \) (resp. \(f_{U}(x)=\overline{\theta } \theta ^{x},x=0,1,2,\ldots \)). Note that for \(U\sim Geo_{1}(\theta )\) we have \(F_{U}(x)=1-\theta ^{\left\lfloor x\right\rfloor },x\ge 0,E(U)=\frac{1}{\overline{\theta }},Var(U)=\frac{\theta }{\overline{\theta }^{2}}\) and

For both \(Geo_{1}(\theta )\) and \(Geo_{0}(\theta ),I(\theta )=\frac{1}{\theta \overline{\theta }^{2}}\).
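The Fisher information \(I(\theta )=1/(\theta \overline{\theta }^{2})\) can be checked by summing \(E\{-\partial ^{2}\ln f_{U}(U;\theta )/\partial \theta ^{2}\}\) over the support. The sketch does this for \(Geo_{0}(\theta )\); for \(Geo_{1}(\theta )\) the log-pdf is the same function of \(\theta \) evaluated at \(U-1\), so the value is identical. The truncation at 2000 terms is an arbitrary choice that is ample for \(\theta \le 0.9\).

```python
def fisher_info_geo0(theta: float, terms: int = 2000) -> float:
    """Truncated E[-d^2/dtheta^2 log f(U; theta)] for U ~ Geo_0(theta),
    f(u) = (1 - theta) theta^u; the target value is 1 / (theta (1 - theta)^2)."""
    total = 0.0
    for u in range(terms):
        prob = (1 - theta) * theta**u
        # log f = log(1 - theta) + u log(theta); minus its second derivative in theta:
        total += prob * (1 / (1 - theta) ** 2 + u / theta**2)
    return total
```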

Consider the RST \(Z_{1}=\left( 2Y-1\right) X,\) where \(Y\sim Bernoulli(\beta )\), X is a Geometric rv, and X and Y are independent. Depending on the distribution of X we obtain two Double Geometric distributions. The MLEs of the parameters and the corresponding asymptotic theory are obtained using Theorem 1 and the well-known results for the MLE of the Geometric distribution.

5.2.1 \(DG-I(\beta ,\theta )\)

Using \(X\sim Geo_{1}(\theta )\) in the RST we obtain \(DG-I(\beta ,\theta )\). For this distribution we have

-

1.

\(f_{Z_{1}}(x)=\left\{ \begin{array} [c]{cc} \overline{\beta }\overline{\theta }\theta ^{-x-1} &{} ,x=-1,-2,\ldots \\ 0 &{} ,x=0\\ \beta \overline{\theta }\theta ^{x-1} &{} ,x=1,2,\ldots \end{array} \right. \)

-

2.

\(F_{Z_{1}}(x)=\left\{ \begin{array} [c]{cc} \overline{\beta }\theta ^{-\left\lfloor x\right\rfloor -1} &{} ,x<-1\\ \overline{\beta } &{} ,-1\le x<1\\ 1-\beta \theta ^{\left\lfloor x\right\rfloor } &{} ,x\ge 1 \end{array} \right. \)

-

3.

\(M_{Z_{1}}(t)=\frac{\overline{\theta }^{2}+\beta \overline{\theta } \xi (t)+\overline{\beta }\overline{\theta }\xi (-t)}{\overline{\theta }^{2} -\theta \xi (t)-\theta \xi (-t)},\xi (t)=e^{t}-1\)

-

4.

\(\mu _{Z_{1}}=\left( 2\beta -1\right) \frac{1}{\overline{\theta }}\)

-

5.

\(\sigma _{Z_{1}}^{2}=\frac{\theta }{\overline{\theta }^{2}}+4\beta \overline{\beta }\left( \frac{1}{\overline{\theta }}\right) ^{2}.\)

Note that this distribution can be useful in modelling data with no zeros. Note also that \(DG-I(\frac{1}{2},\theta )\) is symmetric about zero.

5.2.2 \(DG-II(\beta ,\theta )\)

Using \(X\sim Geo_{0}(\theta )\) in the RST we obtain \(DG-II(\beta ,\theta )\). For this distribution we have

-

1.

\(f_{Z_{1}}(x)=\left\{ \begin{array} [c]{cc} \overline{\beta }\overline{\theta }\theta ^{-x}, &{}\quad x=-1,-2,\ldots \\ \overline{\theta } &{} ,x=0\\ \beta \overline{\theta }\theta ^{x}, &{}\quad x=1,2,\ldots \end{array} \right. \)

-

2.

\(F_{Z_{1}}(x)=\left\{ \begin{array} [c]{cc} \overline{\beta }\theta ^{-\left\lfloor x\right\rfloor }, &{}\quad x<0\\ \overline{\beta }+\beta \overline{\theta }, &{}\quad 0\le x<1\\ 1-\beta \theta ^{\left\lfloor x\right\rfloor +1}, &{}\quad x\ge 1 \end{array} \right. \)

-

3.

\(M_{Z_{1}}(t)=\frac{\overline{\theta }^{2}-\beta \theta \overline{\theta }\xi (-t)-\overline{\beta }\theta \overline{\theta }\xi (t)}{\overline{\theta } ^{2}-\theta \xi (t)-\theta \xi (-t)}\)

-

4.

\(\mu _{Z_{1}}=\left( 2\beta -1\right) \frac{\theta }{\overline{\theta }}\)

-

5.

\(\sigma _{Z_{1}}^{2}=\frac{\theta }{\overline{\theta }^{2}}+4\beta \overline{\beta }\left( \frac{\theta }{\overline{\theta }}\right) ^{2}.\)

Note that \(DG-II(\frac{1}{2},\theta )\) is symmetric about zero.
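Items 1 and 3 of Sects. 5.2.1 and 5.2.2 can be cross-checked by comparing each closed-form MGF with a truncated series \(\sum _{x}e^{tx}f_{Z_{1}}(x)\). Writing \(M_{Z_{1}}(t)=\beta M_{X}(t)+\overline{\beta }M_{X}(-t)\) with \(M_{X}(t)=\overline{\theta }/(1-\theta e^{t})\) for \(Geo_{0}(\theta )\) and clearing the denominator \((1-\theta e^{t})(1-\theta e^{-t})\) pairs \(\beta \) with \(\xi (-t)\) and \(\overline{\beta }\) with \(\xi (t)\) in the DG-II numerator; the sketch codes that form. Parameter values are arbitrary, and t must satisfy \(\theta e^{\left| t\right| }<1\).

```python
import math


def xi(t):
    return math.exp(t) - 1


def dg1_pmf(x, beta, theta):
    """DG-I(beta, theta): RST of X ~ Geo_1(theta); no mass at zero."""
    if x == 0:
        return 0.0
    w = beta if x > 0 else 1 - beta
    return w * (1 - theta) * theta ** (abs(x) - 1)


def dg2_pmf(x, beta, theta):
    """DG-II(beta, theta): RST of X ~ Geo_0(theta); mass 1 - theta at zero."""
    w = 1.0 if x == 0 else (beta if x > 0 else 1 - beta)
    return w * (1 - theta) * theta ** abs(x)


def dg1_mgf(t, beta, theta):
    tb, b = 1 - theta, 1 - beta
    num = tb**2 + beta * tb * xi(t) + b * tb * xi(-t)
    return num / (tb**2 - theta * xi(t) - theta * xi(-t))


def dg2_mgf(t, beta, theta):
    # beta pairs with xi(-t) and 1 - beta with xi(t) after clearing denominators
    tb, b = 1 - theta, 1 - beta
    num = tb**2 - beta * theta * tb * xi(-t) - b * theta * tb * xi(t)
    return num / (tb**2 - theta * xi(t) - theta * xi(-t))


def mgf_series(pmf, t, support):
    """Truncated sum of e^{tx} pmf(x) over the given support."""
    return sum(pmf(x) * math.exp(t * x) for x in support)
```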

5.3 The Double Geometric Distributions Using the RSMT

In this case \(Z_{2}=YX_{1}-\left( 1-Y\right) X_{2},\) where \(Y\sim Bernoulli(\beta ),X_{1}\) and \(X_{2}\) are Geometric rv and \(X_{1},X_{2}\) and Y are independent. We will consider the following three Double Geometric distributions.

| | \(X_{2}\sim Geo_{0}(\theta _{2})\) | \(X_{2}\sim Geo_{1}(\theta _{2})\) |
|---|---|---|
| \(X_{1}\sim Geo_{0}(\theta _{1})\) | | \(DG-III(\beta ,\theta _{1},\theta _{2})\) |
| \(X_{1}\sim Geo_{1}(\theta _{1})\) | \(DG-IV(\beta ,\theta _{1},\theta _{2})\) | \(DG-V(\beta ,\theta _{1},\theta _{2})\) |

The MLE of the parameters and the corresponding asymptotic theory are obtained using Theorem 2 and the well known results for the MLE of the Geometric distribution.

5.3.1 \(DG-III(\beta ,\theta _{1},\theta _{2})\)

In this case \(X_{1}\sim Geo_{0}(\theta _{1})\) and \(X_{2}\sim Geo_{1}(\theta _{2}).\) The following results hold:

-

1.

\(f_{Z_{2}}(x)=\left\{ \begin{array} [c]{cc} \overline{\beta }\overline{\theta }_{2}\theta _{2}^{-x-1}, &{}\quad x=-1,-2,\ldots \\ \beta \overline{\theta }_{1}, &{}\quad x=0\\ \beta \overline{\theta }_{1}\theta _{1}^{x}, &{} \quad x=1,2,\ldots \end{array} \right. \)

-

2.

\(F_{Z_{2}}(x)=\left\{ \begin{array} [c]{cc} \overline{\beta }\theta _{2}^{-\left\lfloor x\right\rfloor -1}, &{}\quad x<0\\ \overline{\beta }+\beta \overline{\theta }_{1}, &{}\quad 0\le x<1\\ 1-\beta \theta _{1}^{\left\lfloor x\right\rfloor +1}, &{}\quad x\ge 1 \end{array} \right. \)

-

3.

\(M_{Z_{2}}(t)=\frac{\overline{\theta }_{1}\overline{\theta }_{2}+\left( \overline{\beta }\overline{\theta }_{2}-\beta \overline{\theta }_{1}\theta _{2}\right) \xi (-t)}{\overline{\theta }_{1}\overline{\theta }_{2}-\theta _{1} \xi (t)-\theta _{2}\xi (-t)}\)

-

4.

\(\mu _{Z_{2}}=\beta \frac{\theta _{1}}{\overline{\theta }_{1}} -\overline{\beta }\frac{1}{\overline{\theta }_{2}}\)

-

5.

\(\sigma _{Z_{2}}^{2}=\beta \frac{\theta _{1}}{\overline{\theta }_{1}^{2} }+\overline{\beta }\frac{\theta _{2}}{\overline{\theta }_{2}^{2}}+\beta \overline{\beta }\left( \frac{\theta _{1}}{\overline{\theta }_{1}}+\frac{1}{\overline{\theta }_{2}}\right) ^{2}.\)

Remarks

-

1.

Kozubowski and Inusah [12] introduced and studied the Skew Discrete Laplace distribution (\(SDL(\theta _{1},\theta _{2})\)) with parameters \(0<\theta _{1},\theta _{2}<1\) as \(Z_{3}=X_{1}-X_{2}\), where \(X_{1}\) and \(X_{2}\) are independent rv such that \(X_{1}\sim Geo_{0}(\theta _{1})\) and \(X_{2}\sim Geo_{0}(\theta _{2})\) (or \(X_{1}\sim Geo_{1}(\theta _{1})\) and \(X_{2}\sim Geo_{1}(\theta _{2})\)). Inusah and Kozubowski [9] introduced and studied the Discrete Laplace distribution (\(DL(\theta )\)) which is the special case of \(SDL(\theta _{1},\theta _{2})\) when \(\theta _{1}=\theta _{2}=\theta .\)

-

2.

By Proposition 3.3 of Kozubowski and Inusah [12], \(DG-III(\frac{\overline{\theta }_{2}}{1-\theta _{1}\theta _{2}},\theta _{1},\theta _{2})\overset{d}{=}SDL(\theta _{1},\theta _{2})\).

-

3.

\(DG-III(\frac{\ln \theta _{2}}{\ln \left( \theta _{1}\theta _{2}\right) },\theta _{1},\theta _{2})\) is the same as the Skew Discrete Laplace distribution of Barbiero [5], who derived it as a discretization of a certain parametrization of the LD.
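Remark 2 can be verified numerically: the pmf of \(SDL(\theta _{1},\theta _{2})\), computed by convolving two \(Geo_{0}\) pmfs, should coincide with the \(DG-III\) pmf of item 1 when \(\beta =\overline{\theta }_{2}/(1-\theta _{1}\theta _{2})\). A minimal sketch with arbitrary parameter values:

```python
import math


def geo0_pmf(k, theta):
    return (1 - theta) * theta**k if k >= 0 else 0.0


def sdl_pmf(x, t1, t2, terms=500):
    """P(X1 - X2 = x) for independent X1 ~ Geo_0(t1), X2 ~ Geo_0(t2), summing over X2 = k."""
    start = max(0, -x)
    return sum(geo0_pmf(k + x, t1) * geo0_pmf(k, t2) for k in range(start, start + terms))


def dg3_pmf(x, beta, t1, t2):
    """DG-III: X1 ~ Geo_0(t1) on x >= 0, X2 ~ Geo_1(t2) on x <= -1."""
    if x >= 0:
        return beta * (1 - t1) * t1**x
    return (1 - beta) * (1 - t2) * t2 ** (-x - 1)


t1, t2 = 0.4, 0.7
beta = (1 - t2) / (1 - t1 * t2)  # the value of beta in Remark 2
```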

5.3.2 \(DG-IV(\beta ,\theta _{1},\theta _{2})\)

In this case \(X_{1}\sim Geo_{1}(\theta _{1})\) and \(X_{2}\sim Geo_{0}(\theta _{2}).\) Note that \(DG-IV(\beta ,\theta _{1},\theta _{2})=-DG-III(\overline{\beta },\theta _{2},\theta _{1}).\)

5.3.3 \(DG-V(\beta ,\theta _{1},\theta _{2})\)

In this case \(X_{1}\sim Geo_{1}(\theta _{1})\) and \(X_{2}\sim Geo_{1}(\theta _{2}).\) The following results hold:

-

1.

\(f_{Z_{2}}(x)=\left\{ \begin{array} [c]{cc} \overline{\beta }\overline{\theta }_{2}\theta _{2}^{-x-1}, &{} \quad x=-1,-2,\ldots \\ 0, &{}\quad x=0\\ \beta \overline{\theta }_{1}\theta _{1}^{x-1}, &{}\quad x=1,2,\ldots \end{array} \right. \)

-

2.

\(F_{Z_{2}}(x)=\left\{ \begin{array} [c]{cc} \overline{\beta }\theta _{2}^{-\left\lfloor x\right\rfloor -1}, &{}\quad x<0\\ \overline{\beta }, &{} \quad 0\le x<1\\ 1-\beta \theta _{1}^{\left\lfloor x\right\rfloor }, &{} \quad x\ge 1 \end{array} \right. \)

-

3.

\(M_{Z_{2}}(t)=\frac{\overline{\theta }_{1}\overline{\theta }_{2} +\beta \overline{\theta }_{1}\xi (t)+\overline{\beta }\overline{\theta }_{2}\xi (-t)}{\overline{\theta }_{1}\overline{\theta } _{2}-\theta _{1}\xi (t)-\theta _{2}\xi (-t)}\)

-

4.

\(\mu _{Z_{2}}=\beta \frac{1}{\overline{\theta }_{1}}-\overline{\beta } \frac{1}{\overline{\theta }_{2}}\)

-

5.

\(\sigma _{Z_{2}}^{2}=\beta \frac{\theta _{1}}{\overline{\theta }_{1}^{2} }+\overline{\beta }\frac{\theta _{2}}{\overline{\theta }_{2}^{2}}+\beta \overline{\beta }\left( \frac{1}{\overline{\theta }_{1}}+\frac{1}{\overline{\theta }_{2}}\right) ^{2}.\)

Note that this distribution is useful in modelling data with no zeros. Note also that \(DG-V(\beta ,\theta ,\theta )=DG-I(\beta ,\theta ).\)
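The DG-V MGF follows from \(M_{Z_{2}}(t)=\beta M_{X_{1}}(t)+\overline{\beta }M_{X_{2}}(-t)\) with \(M_{X_{i}}(t)=\overline{\theta }_{i}e^{t}/(1-\theta _{i}e^{t})\) for \(Geo_{1}(\theta _{i})\); over the common denominator the numerator is \(\overline{\theta }_{1}\overline{\theta }_{2}+\beta \overline{\theta }_{1}\xi (t)+\overline{\beta }\overline{\theta }_{2}\xi (-t)\). The sketch checks that form against a truncated series and confirms that it reduces to the DG-I expression when \(\theta _{1}=\theta _{2}\), consistent with \(DG-V(\beta ,\theta ,\theta )=DG-I(\beta ,\theta )\). Parameter values are arbitrary.

```python
import math


def xi(t):
    return math.exp(t) - 1


def dg5_pmf(x, beta, t1, t2):
    """DG-V: X1 ~ Geo_1(t1) on x >= 1, X2 ~ Geo_1(t2) on x <= -1, no mass at zero."""
    if x > 0:
        return beta * (1 - t1) * t1 ** (x - 1)
    if x < 0:
        return (1 - beta) * (1 - t2) * t2 ** (-x - 1)
    return 0.0


def dg5_mgf(t, beta, t1, t2):
    """beta E[e^{tX1}] + (1 - beta) E[e^{-tX2}] over (1 - t1 e^t)(1 - t2 e^{-t})."""
    a, b = 1 - t1, 1 - t2
    num = a * b + beta * a * xi(t) + (1 - beta) * b * xi(-t)
    return num / (a * b - t1 * xi(t) - t2 * xi(-t))
```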

5.4 The Double Poisson Distributions

The first distribution, \(DP-I(\beta ,\theta ),\) is obtained using \(X\sim P(\theta )\) in the RST. This distribution is the same as the Extended Poisson distribution of Bakouch et al. [3].

The second distribution, \(DP-II(\beta ,\theta _{1},\theta _{2}),\) is obtained using \(X_{1}\sim P(\theta _{1})\) and \(X_{2}\sim (P(\theta _{2})+1)\) in the RSMT. For this distribution we have

-

1.

$$\begin{aligned} f_{Z_{2}}(x)=\left\{ \begin{array} [c]{cc} \overline{\beta }\frac{\theta _{2}^{-x-1}e^{-\theta _{2}}}{\left( \left| x\right| -1\right) !}, &{}\quad x=-1,-2,\ldots \\ \beta \frac{\theta _{1}^{x}e^{-\theta _{1}}}{x!}, &{} \quad x=0,1,2,\ldots \end{array} \right. \end{aligned}$$

-

2.

\(M_{Z_{2}}(t)=\beta e^{\theta _{1}\left( e^{t}-1\right) } +\overline{\beta }e^{\theta _{2}\left( e^{-t}-1\right) -t}\)

-

3.

\(\mu _{Z_{2}}=\beta \theta _{1}-\overline{\beta }\left( \theta _{2}+1\right) \)

-

4.

\(\sigma _{Z_{2}}^{2}=\beta \theta _{1}+\overline{\beta }\theta _{2} +\beta \overline{\beta }\left( \theta _{1}+\theta _{2}+1\right) ^{2}.\)
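Since \(X_{2}=W+1\) with \(W\sim P(\theta _{2})\), the negative branch contributes \(\overline{\beta }E(e^{-tX_{2}})=\overline{\beta }e^{-t}e^{\theta _{2}(e^{-t}-1)}\) to the MGF. The sketch below builds the DP-II pmf of item 1 on a truncated support and checks its total mass, its mean and variance against items 3 and 4, and that MGF; the parameter values and the truncation are arbitrary.

```python
import math


def dp2_pmf(x, beta, th1, th2):
    """DP-II: X1 ~ P(th1) on x >= 0 and X2 ~ P(th2) + 1 on x <= -1."""
    if x >= 0:
        return beta * math.exp(-th1) * th1**x / math.factorial(x)
    k = -x - 1  # value of the underlying Poisson(th2) variable
    return (1 - beta) * math.exp(-th2) * th2**k / math.factorial(k)


def dp2_mgf(t, beta, th1, th2):
    """beta E[e^{tX1}] + (1 - beta) E[e^{-tX2}], with E[e^{-tX2}] = e^{-t} exp(th2 (e^{-t} - 1))."""
    return (beta * math.exp(th1 * (math.exp(t) - 1))
            + (1 - beta) * math.exp(th2 * (math.exp(-t) - 1) - t))


beta, th1, th2 = 0.4, 2.5, 1.5
support = range(-80, 81)  # Poisson tails beyond |x| = 80 are negligible here
probs = [dp2_pmf(x, beta, th1, th2) for x in support]
mean = sum(x * p for x, p in zip(support, probs))
var = sum(x * x * p for x, p in zip(support, probs)) - mean**2
```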

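The third distribution is the well-known Skellam distribution \(SK(\theta _{1},\theta _{2}),\) developed by Skellam [21] using the DT \(Z_{3}=X_{1}-X_{2},\) where \(X_{i}\sim P(\theta _{i}),i=1,2,\) are independent. This distribution was studied in detail by Alzaid and Omair [1]. For this distribution

$$\begin{aligned} f_{Z_{3}}(x)=e^{-(\theta _{1}+\theta _{2})}\left( \frac{\theta _{1}}{\theta _{2}}\right) ^{x/2}I_{\left| x\right| }\left( 2\sqrt{\theta _{1}\theta _{2}}\right) ,\quad x=0,\pm 1,\pm 2,\ldots , \end{aligned}$$

where

$$\begin{aligned} I_{k}(y)=\sum _{m=0}^{\infty }\frac{1}{m!\,(m+k)!}\left( \frac{y}{2}\right) ^{2m+k} \end{aligned}$$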

is the modified Bessel function of the first kind.
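The Skellam pmf \(e^{-(\theta _{1}+\theta _{2})}(\theta _{1}/\theta _{2})^{x/2}I_{\left| x\right| }(2\sqrt{\theta _{1}\theta _{2}})\) can be evaluated with the power series of \(I_{k}\) using only the standard library, and cross-checked against a direct convolution of two Poisson pmfs. This is a sketch; the truncation lengths are arbitrary choices adequate for moderate \(\theta _{i}\).

```python
import math


def bessel_i(k: int, y: float, terms: int = 60) -> float:
    """Modified Bessel function of the first kind I_k(y), integer k >= 0, via its power series."""
    return sum((y / 2) ** (2 * m + k) / (math.factorial(m) * math.factorial(m + k))
               for m in range(terms))


def skellam_pmf(x: int, th1: float, th2: float) -> float:
    """e^{-(th1 + th2)} (th1 / th2)^{x/2} I_{|x|}(2 sqrt(th1 th2))."""
    return (math.exp(-(th1 + th2)) * (th1 / th2) ** (x / 2)
            * bessel_i(abs(x), 2 * math.sqrt(th1 * th2)))


def poisson_pmf(k: int, th: float) -> float:
    return math.exp(-th) * th**k / math.factorial(k) if k >= 0 else 0.0


def skellam_by_convolution(x: int, th1: float, th2: float, terms: int = 80) -> float:
    """P(X1 - X2 = x) for independent X_i ~ P(th_i), summing over X2 = k."""
    start = max(0, -x)
    return sum(poisson_pmf(k + x, th1) * poisson_pmf(k, th2) for k in range(start, start + terms))
```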

5.5 The Double Negative Binomial Distributions

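The first Double Negative Binomial distribution (DNBD), denoted by \(DNBD-I(\beta ,\nu ,\theta ),\) is developed using the RST when \(X\sim NB(\nu ,\theta )\) with \(f_{X}(x)=\left( {\begin{array}{c}x+\nu -1\\ x\end{array}}\right) \overline{\theta }^{\nu }\theta ^{x},x=0,1,2,\ldots .\)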

For this distribution we obtain

-

1.

\(f_{Z_{1}}(x)=\left\{ \begin{array} [c]{cc} \overline{\beta }\left( {\begin{array}{c}\left| x\right| +\nu -1\\ \left| x\right| \end{array}}\right) \overline{\theta }^{\nu }\theta ^{\left| x\right| }, &{}\quad x=-1,-2,\ldots \\ \overline{\theta }^{\nu }, &{}\quad x=0\\ \beta \left( {\begin{array}{c}x+\nu -1\\ x\end{array}}\right) \overline{\theta }^{\nu }\theta ^{x},&\quad x=1,2,\ldots \end{array} \right. \)

-

2.

\(M_{Z_{1}}(t)=\beta \left( \frac{\overline{\theta }}{1-\theta e^{t} }\right) ^{\nu }+\overline{\beta }\left( \frac{\overline{\theta }}{1-\theta e^{-t}}\right) ^{\nu }\)

-

3.

\(\mu _{Z_{1}}=\left( 2\beta -1\right) \frac{\nu \theta }{\overline{\theta }}\)

-

4.

\(\sigma _{Z_{1}}^{2}=\frac{\nu \theta }{\overline{\theta }^{2}} +4\beta \overline{\beta }\left( \frac{\nu \theta }{\overline{\theta }}\right) ^{2}.\)

The second DNBD, denoted by \(DNBD-II(\beta ,\nu _{1},\nu _{2},\theta _{1} ,\theta _{2}),\) is developed using \(X_{1}\sim NB(\nu _{1},\theta _{1})\) and \(X_{2}\sim \left( NB(\nu _{2},\theta _{2})+1\right) \) in the RSMT. For this distribution we obtain

-

1.

\(f_{Z_{2}}(x)=\left\{ \begin{array}[c]{cc} \overline{\beta }\left( {\begin{array}{c}\left| x\right| +\nu _{2}-2\\ \left| x\right| -1\end{array}}\right) \overline{\theta }_{2}^{\nu _{2}}\theta _{2}^{\left| x\right| -1}, &{}\quad x=-1,-2,\ldots \\ \beta \left( {\begin{array}{c}x+\nu _{1}-1\\ x\end{array}}\right) \overline{\theta }_{1}^{\nu _{1}}\theta _{1}^{x},&\quad x=0,1,2,\ldots \end{array} \right. \)

-

2.

\(M_{Z_{2}}(t)=\beta \left( \frac{\overline{\theta }_{1}}{1-\theta _{1}e^{t}}\right) ^{\nu _{1}}+\overline{\beta }e^{-t}\left( \frac{\overline{\theta }_{2}}{1-\theta _{2}e^{-t}}\right) ^{\nu _{2}}\)

-

3.

\(\mu _{Z_{2}}=\beta \frac{\nu _{1}\theta _{1}}{\overline{\theta }_{1} }-\overline{\beta }\left( \frac{\nu _{2}\theta _{2}}{\overline{\theta }_{2} }+1\right) \)

-

4.

\(\sigma _{Z_{2}}^{2}=\beta \frac{\nu _{1}\theta _{1}}{\overline{\theta } _{1}^{2}}+\overline{\beta }\frac{\nu _{2}\theta _{2}}{\overline{\theta }_{2}^{2}}+\beta \overline{\beta }\left( \frac{\nu _{1}\theta _{1}}{\overline{\theta }_{1} }+\frac{\nu _{2}\theta _{2}}{\overline{\theta }_{2}}+1\right) ^{2}.\)
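Because \(X_{2}-1\sim NB(\nu _{2},\theta _{2})\) has variance \(\nu _{2}\theta _{2}/\overline{\theta }_{2}^{2}\), the identity \(Var(Z_{2})=\beta Var(X_{1})+\overline{\beta }Var(X_{2})+\beta \overline{\beta }\{E(X_{1})+E(X_{2})\}^{2}\) gives \(\beta \nu _{1}\theta _{1}/\overline{\theta }_{1}^{2}+\overline{\beta }\nu _{2}\theta _{2}/\overline{\theta }_{2}^{2}+\beta \overline{\beta }(\nu _{1}\theta _{1}/\overline{\theta }_{1}+\nu _{2}\theta _{2}/\overline{\theta }_{2}+1)^{2}\). The sketch computes the mean and variance of the DNBD-II pmf of item 1 on a truncated support so they can be compared with these expressions; integer \(\nu _{i}\) are assumed so that `math.comb` applies, and the parameter values are arbitrary.

```python
import math


def nb_pmf(k: int, nu: int, theta: float) -> float:
    """NB(nu, theta) pmf C(k + nu - 1, k) (1 - theta)^nu theta^k, k >= 0, integer nu."""
    return math.comb(k + nu - 1, k) * (1 - theta) ** nu * theta**k


def dnbd2_pmf(x, beta, nu1, nu2, th1, th2):
    """DNBD-II: X1 ~ NB(nu1, th1) on x >= 0 and X2 ~ NB(nu2, th2) + 1 on x <= -1."""
    if x >= 0:
        return beta * nb_pmf(x, nu1, th1)
    return (1 - beta) * nb_pmf(-x - 1, nu2, th2)


beta, nu1, nu2, th1, th2 = 0.55, 3, 2, 0.4, 0.3
support = range(-400, 401)  # truncation; the tails are negligible for these values
probs = {x: dnbd2_pmf(x, beta, nu1, nu2, th1, th2) for x in support}
mean = sum(x * p for x, p in probs.items())
var = sum(x * x * p for x, p in probs.items()) - mean**2
```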

Remark

Note that \(DNBD-II(\beta ,\nu _{1},\nu _{2},\theta _{1} ,\theta _{2})\) has the following important special cases

-

1.

\(DNBD-II(\beta ,\nu ,\theta _{1},\theta _{2})\overset{d}{=}DNBD-II(\beta ,\nu ,\nu ,\theta _{1},\theta _{2})\)

-

2.

\(DNBD-II(\beta ,\nu ,\theta )\overset{d}{=}DNBD-II(\beta ,\nu ,\nu ,\theta ,\theta )\)

-

3.

\(DNBD-II(\beta ,1,\nu ,\theta _{1},\theta _{2})\)

-

4.

\(DNBD-II(\beta ,\nu ,1,\theta _{1},\theta _{2})\)

-

5.

\(DNBD-II(\beta ,1,\nu ,\theta )\overset{d}{=}DNBD-II(\beta ,1,\nu ,\theta ,\theta )\)

Using the DT, Seetha Lekshmi and Sebastian [18] introduced and studied the DNBD \(Z_{3}=X_{1}-X_{2},\) where \(X_{i}\sim NB(\nu ,\theta _{i}),i=1,2,\) are independent. Let \(DNBD-III(\nu _{1},\nu _{2},\theta _{1},\theta _{2})\) denote the distribution of \(Z_{3}\) when \(X_{i}\sim NB(\nu _{i},\theta _{i}),i=1,2,\) are independent. For this distribution we have

-

1.

$$\begin{aligned} f_{Z_{3}}(x)=\left\{ \begin{array} [c]{cc} \overline{\theta }_{2}^{\nu _{2}}\overline{\theta }_{1}^{\nu _{1}}\sum \limits _{k=-x}^{\infty }\left( {\begin{array}{c}\nu _{2}+k-1\\ k\end{array}}\right) \left( {\begin{array}{c}\nu _{1}+k+x-1\\ k+x\end{array}}\right) \theta _{2}^{k}\theta _{1}^{k+x}, &{}\quad x=-1,-2,\ldots \\ \overline{\theta }_{2}^{\nu _{2}}\overline{\theta }_{1}^{\nu _{1}}\sum \limits _{k=x}^{\infty }\left( {\begin{array}{c}\nu _{1}+k-1\\ k\end{array}}\right) \left( {\begin{array}{c}\nu _{2}+k-x-1\\ k-x\end{array}}\right) \theta _{1}^{k}\theta _{2}^{k-x},&\quad x=0,1,2,\ldots \end{array} \right. \end{aligned}$$(30)

-

2.

\(M_{Z_{3}}(t)=\left( \frac{\overline{\theta }_{1}}{1-\theta _{1}e^{t} }\right) ^{\nu _{1}}\left( \frac{\overline{\theta }_{2}}{1-\theta _{2}e^{-t} }\right) ^{\nu _{2}}\)

-

3.

\(\mu _{Z_{3}}=\frac{\nu _{1}\theta _{1}}{\overline{\theta }_{1}}-\frac{\nu _{2}\theta _{2}}{\overline{\theta }_{2}}\)

-

4.

\(\sigma _{Z_{3}}^{2}=\frac{\nu _{1}\theta _{1}}{\overline{\theta }_{1}^{2} }+\frac{\nu _{2}\theta _{2}}{\overline{\theta }_{2}^{2}}.\)
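The two-branch series for \(f_{Z_{3}}\) can be checked against a direct convolution \(P(Z_{3}=x)=\sum _{k}P(X_{2}=k)P(X_{1}=k+x)\). For \(x\ge 0\), indexing by \(X_{1}=k\ge x\) forces \(X_{2}=k-x\), so \(k-x\) appears both in the binomial coefficient and in the power of \(\theta _{2}\); for \(x<0\), indexing by \(X_{2}=k\ge -x\) forces \(X_{1}=k+x\). The sketch assumes integer \(\nu _{i}\) (for `math.comb`), truncates both series at an arbitrary 300 terms, and also compares the mean and variance with \(\nu _{1}\theta _{1}/\overline{\theta }_{1}-\nu _{2}\theta _{2}/\overline{\theta }_{2}\) and \(\nu _{1}\theta _{1}/\overline{\theta }_{1}^{2}+\nu _{2}\theta _{2}/\overline{\theta }_{2}^{2}\).

```python
import math


def nb_pmf(k, nu, theta):
    """NB(nu, theta) pmf for integer nu; zero for k < 0."""
    return math.comb(k + nu - 1, k) * (1 - theta) ** nu * theta**k if k >= 0 else 0.0


def dnbd3_by_convolution(x, nu1, nu2, th1, th2, terms=300):
    """P(X1 - X2 = x) by summing P(X2 = k) P(X1 = k + x) over k."""
    start = max(0, -x)
    return sum(nb_pmf(k + x, nu1, th1) * nb_pmf(k, nu2, th2)
               for k in range(start, start + terms))


def dnbd3_series(x, nu1, nu2, th1, th2, terms=300):
    """Two-branch series: X2 = k >= -x when x < 0; X1 = k >= x (so X2 = k - x) when x >= 0."""
    c = (1 - th1) ** nu1 * (1 - th2) ** nu2
    if x >= 0:
        return c * sum(math.comb(nu1 + k - 1, k) * math.comb(nu2 + k - x - 1, k - x)
                       * th1**k * th2 ** (k - x) for k in range(x, x + terms))
    return c * sum(math.comb(nu2 + k - 1, k) * math.comb(nu1 + k + x - 1, k + x)
                   * th2**k * th1 ** (k + x) for k in range(-x, -x + terms))


nu1, nu2, th1, th2 = 2, 3, 0.5, 0.4
probs = {x: dnbd3_series(x, nu1, nu2, th1, th2) for x in range(-150, 151)}
```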

Remarks

-

1.

Note that \(DNBD-III(\nu _{1},\nu _{2},\theta _{1},\theta _{2})\) has the following important special cases

-

(a)

\(DNBD-III(\nu ,\theta _{1},\theta _{2})\overset{d}{=}DNBD-III(\nu ,\nu ,\theta _{1},\theta _{2})\) which has been introduced and studied in Seetha Lekshmi and Sebastian [18]

-

(b)

\(DNBD-III(1,\nu ,\theta _{1},\theta _{2})\)

-

(c)

\(DNBD-III(1,\nu ,\theta ,\theta )\)

-

2.

Ong et al. [16] proved recurrence relations and gave some distributional properties of the rv resulting from the DT when \(X_{1}\) and \(X_{2}\) are discrete rv belonging to Panjer’s [17] family of discrete distributions. Sundt and Jewell [23] proved that the only non-degenerate members of this family are the Binomial, Poisson and Negative Binomial distributions.

5.6 The RSMT of a Binomial and a Poisson Distribution

The RSMT of a Binomial and a Poisson rv, \(DPB(\theta _{1},\theta _{2},\beta ),\) is developed using \(X_{1}\sim Binomial(n,\theta _{1})\) and \(X_{2}\sim \left( P(\theta _{2})+1\right) \) in the RSMT. For this distribution we obtain

-

1.

\(f_{Z_{2}}(x)=\left\{ \begin{array} [c]{cc} \overline{\beta }\frac{\theta _{2}^{\left| x\right| -1}e^{-\theta _{2}} }{\left( \left| x\right| -1\right) !}, &{}\quad x=-1,-2,\ldots \\ \beta \left( {\begin{array}{c}n\\ x\end{array}}\right) \theta _{1}^{x}\left( 1-\theta _{1}\right) ^{n-x},&{}\quad x=0,1,\ldots ,n \end{array} \right. \)

-

2.

\(M_{Z_{2}}(t)=\beta \left( 1-\theta _{1}+\theta _{1}e^{t}\right) ^{n}+\overline{\beta }e^{\theta _{2}\left( e^{-t}-1\right) -t}\)

-

3.

\(\mu _{Z_{2}}=n\beta \theta _{1}-\overline{\beta }\left( \theta _{2}+1\right) \)

-

4.

\(\sigma _{Z_{2}}^{2}=n\beta \theta _{1}\left( 1-\theta _{1}\right) +\overline{\beta }\theta _{2}+\beta \overline{\beta }\left( n\theta _{1} +\theta _{2}+1\right) ^{2}.\)
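A numerical check of items 1 to 4 for DPB, assuming the RSMT has the form \(Z_{2}=YX_{1}-(1-Y)X_{2}\) written out in Section 7 and taking \(M_{Z_{2}}(t)=E(e^{tZ_{2}})\), the convention of the MGF given for DNBD-III above. The helper `dpb_pmf` and the parameter values are hypothetical; the pmf is evaluated on a window wide enough that the Poisson tail outside it is negligible.

```python
import math


def dpb_pmf(x, n, theta1, theta2, beta):
    """pmf of Z2 = Y*X1 - (1-Y)*X2 with X1 ~ Binomial(n, theta1), X2 ~ P(theta2) + 1."""
    if x < 0:
        m = -x - 1  # X2 = |x| means its Poisson part equals |x| - 1
        return (1 - beta) * math.exp(-theta2 + m * math.log(theta2) - math.lgamma(m + 1))
    if x <= n:
        return beta * math.comb(n, x) * theta1 ** x * (1 - theta1) ** (n - x)
    return 0.0  # the binomial part cannot exceed n


n, theta1, theta2, beta = 10, 0.3, 1.7, 0.6
pmf = {x: dpb_pmf(x, n, theta1, theta2, beta) for x in range(-80, n + 1)}

mean = sum(x * p for x, p in pmf.items())
var = sum(x * x * p for x, p in pmf.items()) - mean ** 2
t = 0.2
mgf = sum(math.exp(t * x) * p for x, p in pmf.items())  # E(e^{tZ2}) computed directly

mean_formula = n * beta * theta1 - (1 - beta) * (theta2 + 1)
var_formula = (n * beta * theta1 * (1 - theta1) + (1 - beta) * theta2
               + beta * (1 - beta) * (n * theta1 + theta2 + 1) ** 2)
mgf_formula = (beta * (1 - theta1 + theta1 * math.exp(t)) ** n
               + (1 - beta) * math.exp(theta2 * (math.exp(-t) - 1) - t))
```

The direct sums and the closed forms should agree to rounding error; a sign slip in \(t\) would show up immediately in the `mgf` comparison.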

5.7 The RSMT of a NB and a Poisson Distribution

The \(DPNBD-(\beta ,\nu ,\theta _{1},\theta _{2})\) is obtained using \(X_{1}\sim NB(\nu ,\theta _{1})\) and \(X_{2}\sim \left( P(\theta _{2})+1\right) \) in the RSMT. For this distribution we obtain

-

1.

\(f_{Z_{2}}(x)=\left\{ \begin{array} [c]{cc} \overline{\beta }\frac{\theta _{2}^{\left| x\right| -1}e^{-\theta _{2}} }{\left( \left| x\right| -1\right) !}, &{}\quad x=-1,-2,\ldots \\ \beta \left( {\begin{array}{c}x+\nu -1\\ x\end{array}}\right) \overline{\theta }_{1}^{\nu }\theta _{1}^{x},&\quad x=0,1,2,\ldots \end{array} \right. \)

-

2.

\(M_{Z_{2}}(t)=\beta \left( \frac{\overline{\theta }_{1}}{1-\theta _{1}e^{t}}\right) ^{\nu }+\overline{\beta }e^{\theta _{2}\left( e^{-t} -1\right) -t}\)

-

3.

\(\mu _{Z_{2}}=\beta \frac{\nu \theta _{1}}{\overline{\theta }_{1}} -\overline{\beta }\left( \theta _{2}+1\right) \)

-

4.

\(\sigma _{Z_{2}}^{2}=\beta \frac{\nu \theta _{1}}{\overline{\theta }_{1}^{2} }+\overline{\beta }\theta _{2}+\beta \overline{\beta }\left( \theta _{2} +1+\frac{\nu \theta _{1}}{\overline{\theta }_{1}}\right) ^{2}.\)
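All the RSMT variances in this section share one structure. Writing \(\mu _{j}\) and \(\sigma _{j}^{2}\) for the mean and variance of \(X_{j}\), and using \(Y(1-Y)=0\) for \(Z_{2}=YX_{1}-(1-Y)X_{2}\) (the form written out in Section 7), a short derivation gives

$$\begin{aligned} E(Z_{2})&=\beta \mu _{1}-\overline{\beta }\mu _{2},\qquad E(Z_{2}^{2})=\beta \left( \sigma _{1}^{2}+\mu _{1}^{2}\right) +\overline{\beta }\left( \sigma _{2}^{2}+\mu _{2}^{2}\right) ,\\ \sigma _{Z_{2}}^{2}&=E(Z_{2}^{2})-\left( E(Z_{2})\right) ^{2}=\beta \sigma _{1}^{2}+\overline{\beta }\sigma _{2}^{2}+\beta \overline{\beta }\mu _{1}^{2}+\beta \overline{\beta }\mu _{2}^{2}+2\beta \overline{\beta }\mu _{1}\mu _{2}\\ &=\beta \sigma _{1}^{2}+\overline{\beta }\sigma _{2}^{2}+\beta \overline{\beta }\left( \mu _{1}+\mu _{2}\right) ^{2}, \end{aligned}$$

where the middle step uses \(\beta -\beta ^{2}=\overline{\beta }-\overline{\beta }^{2}=\beta \overline{\beta }\). For DPNBD, \(\mu _{1}=\nu \theta _{1}/\overline{\theta }_{1},\) \(\sigma _{1}^{2}=\nu \theta _{1}/\overline{\theta }_{1}^{2},\) \(\mu _{2}=\theta _{2}+1\) and \(\sigma _{2}^{2}=\theta _{2}\), so

$$\sigma _{Z_{2}}^{2}=\beta \frac{\nu \theta _{1}}{\overline{\theta }_{1}^{2}}+\overline{\beta }\theta _{2}+\beta \overline{\beta }\left( \theta _{2}+1+\frac{\nu \theta _{1}}{\overline{\theta }_{1}}\right) ^{2}.$$

The same identity yields the variances of DPB and DPG-II.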

Note that \(DPNBD-(\beta ,1,\theta _{1},\theta _{2})\) is the RSMT of \(X_{1}\sim Geo_{0}(\theta _{1})\) and \(X_{2}\sim \left( P(\theta _{2})+1\right) \) and will be denoted by \(DPG-I(\theta _{1},\theta _{2},\beta ).\) The RSMT of \(X_{1}\sim P(\theta _{1})\) and \(X_{2}\sim Geo_{1}(\theta _{2})\) will be denoted by \(DPG-II(\theta _{1},\theta _{2},\beta ).\) For this distribution we obtain

-

1.

\(f_{Z_{2}}(x)=\left\{ \begin{array} [c]{cc} \overline{\beta }\overline{\theta }_{2}\theta _{2}^{-x-1}, &{} \quad x=-1,-2,\ldots \\ \beta e^{-\theta _{1}}, &{}\quad x=0\\ \beta \frac{\theta _{1}^{x}e^{-\theta _{1}}}{x!}, &{}\quad x=1,2,\ldots \end{array} \right. \)

-

2.

\(M_{Z_{2}}(t)=\beta e^{\theta _{1}\left( e^{t}-1\right) } +\overline{\beta }\frac{\overline{\theta }_{2}e^{-t}}{1-\theta _{2}e^{-t}}\)

-

3.

\(\mu _{Z_{2}}=\beta \theta _{1}-\overline{\beta }\frac{1}{\overline{\theta }_{2}}\)

-

4.

\(\sigma _{Z_{2}}^{2}=\beta \theta _{1}+\overline{\beta }\frac{\theta _{2} }{\overline{\theta }_{2}^{2}}+\beta \overline{\beta }\left( \theta _{1}+\frac{1}{\overline{\theta }_{2}}\right) ^{2}.\)
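A Monte Carlo sanity check of items 1, 3 and 4 for DPG-II, assuming \(Z_{2}=YX_{1}-(1-Y)X_{2}\) as in Section 7, \(X_{1}\sim P(\theta _{1})\) and \(X_{2}\sim Geo_{1}(\theta _{2})\) with \(P(X_{2}=k)=\overline{\theta }_{2}\theta _{2}^{k-1},k\ge 1\) (the parametrization implied by item 1 and the mean \(1/\overline{\theta }_{2}\)). The samplers are hand-written with the standard library only; names, seed and parameter values are hypothetical.

```python
import math
import random


def rpois(lam, rng):
    """Poisson draw by Knuth's product-of-uniforms method (fine for small lam)."""
    limit, k, prod = math.exp(-lam), 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k


def rgeo1(theta, rng):
    """Geo_1(theta) draw: P(K = k) = (1 - theta) * theta**(k - 1), k = 1, 2, ..."""
    k = 1
    while rng.random() < theta:
        k += 1
    return k


def simulate_dpg2(size, beta, theta1, theta2, seed=0):
    """Draws of Z2 = Y*X1 - (1-Y)*X2 with X1 ~ P(theta1), X2 ~ Geo_1(theta2)."""
    rng = random.Random(seed)
    return [rpois(theta1, rng) if rng.random() < beta else -rgeo1(theta2, rng)
            for _ in range(size)]


beta, theta1, theta2 = 0.7, 2.0, 0.5
z = simulate_dpg2(200_000, beta, theta1, theta2)
mean_hat = sum(z) / len(z)
var_hat = sum((v - mean_hat) ** 2 for v in z) / (len(z) - 1)

mean_formula = beta * theta1 - (1 - beta) / (1 - theta2)
var_formula = (beta * theta1 + (1 - beta) * theta2 / (1 - theta2) ** 2
               + beta * (1 - beta) * (theta1 + 1 / (1 - theta2)) ** 2)
p_minus1_formula = (1 - beta) * (1 - theta2)  # item 1 evaluated at x = -1
p_minus1_hat = z.count(-1) / len(z)
```

With 200,000 draws the sampling error of `mean_hat` is about 0.005, so agreement to a couple of hundredths is what one should expect, not exact equality.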

6 The Distribution of Sums

In this section we use the notation \(\emptyset \ne J\varsubsetneq \left\{ 1,2,\ldots ,n\right\} ,\) with complement \(J^{c}\), and write \(n_{J}\) for the number of elements of J.

Lemma 9

Assume that \(Y_{i}\) and \(X_{i},i=1,2,\ldots ,n\) are such that \(Y_{i}\sim Bernoulli(\beta _{i}),X_{i}\sim F_{i}(\cdot )\) and are all independent. Define

Then,

For discrete rv

The proof follows from the result that

Example 1

Assume \(X_{i}\sim P(\theta _{i}),i=1,2,\ldots ,n.\) Define \(\theta _{J}=\sum _{i\in J}\theta _{i}.\) Then,

where \(P\left\{ SK(\theta _{1},\theta _{2})=x\right\} \) is as given in (29). In particular, when \(\beta _{i}=\beta \) and \(\theta _{i} =\theta ,i=1,2,\ldots ,n\) we have

The special case of (31) when \(n=2\) is given in (15) - (17) of Bakouch et al. [3].
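Example 1 rests on conditioning on which \(Y_{i}\) equal 1. A minimal sketch, assuming (as in the proof of Lemma 1) that the RST gives \(X_{i}\) when \(Y_{i}=1\) and \(-X_{i}\) when \(Y_{i}=0\), and that the sum in Lemma 9 is the sum of these RSTs: given \(J=\{i:Y_{i}=1\}\), the sum is \(\sum _{i\in J}X_{i}-\sum _{i\in J^{c}}X_{i}\sim SK(\theta _{J},\theta _{J^{c}})\). The code weights the conditional Skellam pmf (computed here by truncated convolution rather than by (29)) over all \(2^{n}\) subsets, including \(J=\emptyset \) and \(J=\{1,\ldots ,n\}\), which the displayed formula may treat separately, and compares the result with a direct convolution of the n RST pmfs. Helper names and parameters are hypothetical.

```python
import math
from itertools import combinations


def poisson_pmf(k, lam):
    """P(X = k) for X ~ P(lam); P(0) is the point mass at 0."""
    if k < 0:
        return 0.0
    if lam == 0:
        return 1.0 if k == 0 else 0.0
    return math.exp(-lam + k * math.log(lam) - math.lgamma(k + 1))


def skellam_pmf(x, lam1, lam2, terms=200):
    """P(A - B = x) for independent A ~ P(lam1), B ~ P(lam2), by truncated convolution."""
    start = max(0, -x)
    return sum(poisson_pmf(k + x, lam1) * poisson_pmf(k, lam2)
               for k in range(start, start + terms))


def sum_rst_pmf(x, betas, thetas):
    """P(S_n = x) by conditioning on J = {i : Y_i = 1}."""
    n = len(betas)
    total = 0.0
    for r in range(n + 1):
        for J in combinations(range(n), r):
            weight = math.prod(betas[i] if i in J else 1 - betas[i] for i in range(n))
            theta_J = sum(thetas[i] for i in J)
            theta_Jc = sum(thetas[i] for i in range(n) if i not in J)
            total += weight * skellam_pmf(x, theta_J, theta_Jc)
    return total


def rst_pmf(z, beta, theta):
    """Z = X if Y = 1 and Z = -X if Y = 0, so P(Z = 0) = P(X = 0)."""
    return beta * poisson_pmf(z, theta) + (1 - beta) * poisson_pmf(-z, theta)


def convolve(p, q):
    """pmf of the sum of two independent variables given as {value: prob} dicts."""
    out = {}
    for a, pa in p.items():
        for b, qb in q.items():
            out[a + b] = out.get(a + b, 0.0) + pa * qb
    return out


betas, thetas = [0.3, 0.6, 0.8], [1.0, 2.0, 0.5]
direct = {0: 1.0}
for beta, theta in zip(betas, thetas):
    direct = convolve(direct, {z: rst_pmf(z, beta, theta) for z in range(-40, 41)})
```

The two routes should agree to rounding error; the subset sum costs \(2^{n}\) Skellam evaluations, which is why the equal-parameter case (\(\beta _{i}=\beta ,\theta _{i}=\theta \)) collapses to a sum over \(n_{J}\) alone.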

Example 2

Assume \(X_{i}\sim Geo_{0}(\theta ),i=1,2,\ldots ,n.\) Then,

where \(P\left\{ NB(\nu _{1},\theta _{1})-NB(\nu _{2},\theta _{2})=x\right\} \) can be computed using (30). When \(\beta _{i}=\beta ,\)

Lemma 10

Assume that \(Y_{i},X_{i,1}\) and \(X_{i,2},i=1,2,\ldots ,n\) are such that \(Y_{i}\sim Bernoulli(\beta _{i}),X_{i,j}\sim F_{i,j}(\cdot ),j=1,2\) and are all independent. Define

Then,

For discrete rv

Example 3

For \(i=1,2,\ldots ,n,\) assume \(X_{i,1}\sim P(\theta _{i,1})\) and \(X_{i,2}\sim \left( P(\theta _{i,2})+1\right) .\) Then,

In particular, when \(\beta _{i}=\beta ,\theta _{i,1}=\theta _{1}\) and \(\theta _{i,2}=\theta _{2},i=1,2,\ldots ,n\) we have

Example 4

Assume \(X_{i,j}\sim EXP(\theta _{j}),i=1,2,\ldots ,n,j=1,2.\) Then,

where \(J\underset{\ne }{\subset }\left\{ 1,2,\ldots ,n\right\} .\) When \(\beta _{i}=\beta ,\)

For the computation of \(P\left\{ G(n_{J},\theta _{1})-G(n_{J^{c}},\theta _{2})\le x\right\} \) we refer to Klar [10], Omura and Kailath ([15], p. 25) and Simon ([20], p. 28).
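When \(\beta _{i}=\beta \) and the \(X_{i,j}\) are identically distributed, conditioning on the \(Y_{i}\) reduces, as we read Example 4, to conditioning on \(K=\#\{i:Y_{i}=1\}\sim Binomial(n,\beta )\); given \(K=k\) the sum is \(G(k,\theta _{1})-G(n-k,\theta _{2})\). The Monte Carlo sketch below checks this reduction against a direct simulation of the sum. It assumes \(EXP(\theta )\) has rate \(\theta \) (mean \(1/\theta \)), that \(G(m,\theta )\) is a gamma variable with shape m and rate \(\theta \) (with \(G(0,\theta )=0\)), and the RSMT form \(YX_{1}-(1-Y)X_{2}\) of Section 7. It is a check, not a replacement for the series methods cited above; names, seed and parameters are hypothetical.

```python
import math
import random


def gamma_diff_cdf_mc(k, m, rate1, rate2, x, rng, reps=20_000):
    """Monte Carlo estimate of P{G(k, rate1) - G(m, rate2) <= x}; shape 0 means the value 0."""
    hits = 0
    for _ in range(reps):
        g1 = rng.gammavariate(k, 1 / rate1) if k > 0 else 0.0  # gammavariate takes a scale
        g2 = rng.gammavariate(m, 1 / rate2) if m > 0 else 0.0
        hits += (g1 - g2 <= x)
    return hits / reps


def sum_rsmt_cdf(x, n, beta, rate1, rate2, rng):
    """Condition on K = #{i : Y_i = 1} ~ Binomial(n, beta)."""
    return sum(math.comb(n, k) * beta ** k * (1 - beta) ** (n - k)
               * gamma_diff_cdf_mc(k, n - k, rate1, rate2, x, rng)
               for k in range(n + 1))


def direct_cdf_mc(x, n, beta, rate1, rate2, rng, reps=20_000):
    """Simulate S = sum_i [Y_i X_{i,1} - (1 - Y_i) X_{i,2}] directly."""
    hits = 0
    for _ in range(reps):
        s = sum(rng.expovariate(rate1) if rng.random() < beta else -rng.expovariate(rate2)
                for _ in range(n))
        hits += (s <= x)
    return hits / reps


rng = random.Random(2018)
n, beta, rate1, rate2, x = 4, 0.6, 1.0, 2.0, 0.5
via_conditioning = sum_rsmt_cdf(x, n, beta, rate1, rate2, rng)
direct = direct_cdf_mc(x, n, beta, rate1, rate2, rng)
```

Both numbers are simulation estimates with standard errors of a few thousandths, so they should agree to about two decimal places.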

Example 5

For \(i=1,2,\ldots ,n,\) assume \(X_{i,1}\sim Geo_{0}(\theta _{1})\) and \(X_{i,2}\sim Geo_{1}(\theta _{2}).\) Then,

where \(P\left\{ NB(\nu _{1},\theta _{1})-NB(\nu _{2},\theta _{2})=x\right\} \) can be computed using (30). When \(\beta _{i}=\beta ,\)

7 Applications

We consider the \(DP-II(\beta ,\theta _{1},\theta _{2})\), for which \(Z_{2}=YX_{1}-(1-Y)X_{2}\) with \(X_{1}\sim P(\theta _{1})\) and \(X_{2}\sim \left( P(\theta _{2})+1\right) \), as a competitor to the Skellam (Poisson difference) distribution.

Let \(Z_{2,i},i=1,2,\ldots ,n,\) be a random sample from \(DP-II(\beta ,\theta _{1},\theta _{2}).\) Let \(n_{1}=\sum I(Z_{2,i}\ge 0),\) \(Sum_{+}=\sum Z_{2,i}I(Z_{2,i}\ge 0)\) and \(Sum_{-}=-\sum Z_{2,i}I(Z_{2,i}<0),\) and assume that \(0<n_{1}<n\). By Theorem 2, the MLEs of \(\beta ,\) \(\theta _{1}\) and \(\theta _{2}\) are given by

In addition,

Alzaid and Omair [1] considered two real data sets from the Saudi Stock Exchange (TASI): the trading of Saudi Basic Industry (SABIC) and of Arabian Shield on TASI, recorded every minute of June 30, 2007. The price moves up and down in multiples of SAR 0.25, so each data set consists of the integers \(4\times (\text{close price}-\text{open price})\) for every minute. They applied the runs test to each sample to support treating the samples as random, and fitted the Skellam distribution to each of the two data sets. We fitted the \(DP-II(\beta ,\theta _{1},\theta _{2})\) to each of the two data sets. Their results together with ours are summarized in Table 1. The p-values in Table 1 are obtained from Pearson's chi-square goodness-of-fit test.
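The DP-II likelihood factorizes into a Bernoulli part in \(n_{1}\), a Poisson part in the nonnegative observations and a Poisson part in \(\left| Z_{2,i}\right| -1\) over the negative ones. Maximizing each factor gives \(\hat{\beta }=n_{1}/n,\) \(\hat{\theta }_{1}=Sum_{+}/n_{1}\) and \(\hat{\theta }_{2}=Sum_{-}/(n-n_{1})-1\). The sketch below derives these directly from the pmf rather than transcribing the display above; it simulates from the SABIC fit quoted in the text (no real observations are reproduced) and re-estimates. Function names and the seed are hypothetical.

```python
import math
import random


def rpois(lam, rng):
    """Poisson draw by Knuth's product-of-uniforms method (fine for small lam)."""
    limit, k, prod = math.exp(-lam), 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k


def simulate_dp2(size, beta, theta1, theta2, rng):
    """Draws of Z2 = Y*X1 - (1-Y)*X2 with X1 ~ P(theta1), X2 ~ P(theta2) + 1."""
    return [rpois(theta1, rng) if rng.random() < beta else -(rpois(theta2, rng) + 1)
            for _ in range(size)]


def fit_dp2(z):
    """Closed-form MLEs (beta, theta1, theta2) of DP-II; requires 0 < n1 < n."""
    n = len(z)
    n1 = sum(1 for v in z if v >= 0)
    if not 0 < n1 < n:
        raise ValueError("need both nonnegative and negative observations")
    sum_plus = sum(v for v in z if v >= 0)
    sum_minus = -sum(v for v in z if v < 0)
    # Each negative observation is -(W + 1) with W ~ P(theta2), hence the "- 1".
    return n1 / n, sum_plus / n1, sum_minus / (n - n1) - 1


# Simulate from DP-II(0.8125, 0.1641, 0.1556) and re-estimate the parameters.
rng = random.Random(7)
z = simulate_dp2(50_000, 0.8125, 0.1641, 0.1556, rng)
beta_hat, theta1_hat, theta2_hat = fit_dp2(z)
```

Because every negative observation is at most \(-1\), \(\hat{\theta }_{2}\) is never negative, and no numerical optimizer is needed.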

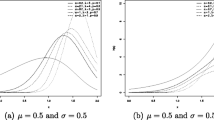

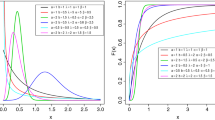

Figure 1 plots the empirical distribution and the fitted \(DP-II(0.8125,0.1641,0.1556)\) distribution for the SABIC data. Figure 2 plots the empirical distribution and the fitted \(DP-II(0.721,0.399,0.403)\) distribution for the Arabian Shield data.
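A minimal sketch of the Pearson chi-square computation behind the p-values, using the fitted SABIC parameters \(DP-II(0.8125,0.1641,0.1556)\) quoted above. The cell grouping \((-\infty ,-2],-1,0,1,[2,\infty )\) is a hypothetical choice; we do not know which cells were used for Table 1, and no observed counts are reproduced here. With five cells and three estimated parameters there is 1 degree of freedom, whose upper tail is \(\mathrm{erfc}(\sqrt{s/2})\); for other degrees of freedom one could use `scipy.stats.chi2.sf`.

```python
import math


def dp2_pmf(x, beta, theta1, theta2):
    """pmf of DP-II: Z2 = Y*X1 - (1-Y)*X2, X1 ~ P(theta1), X2 ~ P(theta2) + 1."""
    if x >= 0:
        return beta * math.exp(-theta1 + x * math.log(theta1) - math.lgamma(x + 1))
    m = -x - 1
    return (1 - beta) * math.exp(-theta2 + m * math.log(theta2) - math.lgamma(m + 1))


def cell_probs(beta, theta1, theta2, lo=-2, hi=2):
    """Probabilities of the cells (-inf, lo], lo+1, ..., hi-1, [hi, inf)."""
    inner = [dp2_pmf(x, beta, theta1, theta2) for x in range(lo + 1, hi)]
    left = sum(dp2_pmf(x, beta, theta1, theta2) for x in range(lo, lo - 200, -1))
    return [left, *inner, 1.0 - left - sum(inner)]


def pearson_chi2(observed, probs):
    """Pearson statistic: sum of (O - E)^2 / E with E = n * p."""
    n = sum(observed)
    return sum((o - n * p) ** 2 / (n * p) for o, p in zip(observed, probs))


def chi2_sf_df1(stat):
    """Upper tail of the chi-square distribution with 1 df: P(N(0,1)^2 > stat)."""
    return math.erfc(math.sqrt(stat / 2))


# Expected cell probabilities under the SABIC fit quoted in the text.
probs = cell_probs(0.8125, 0.1641, 0.1556)
```

Given observed cell counts `obs` for the same cells, the p-value would be `chi2_sf_df1(pearson_chi2(obs, probs))`.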

Bakouch et al. [3] used the \(DP-I(\beta ,\theta )\) to fit a data set of the number of students from the Bachelor program at the IDRAC International Management School (Lyon, France) in 60 consecutive sessions of courses in Marketing.

References

Alzaid AA, Omair M (2010) On the Poisson difference distribution inference and applications. Bull Malays Math Sci Soc 33:17–45

Armagan A, Dunson D, Lee J (2013) Generalized double Pareto shrinkage. Stat Sin 23:119–143

Bakouch HS, Kachour M, Nadarajah S (2016) An extended Poisson distribution. Commun Stat Theory Methods 45:6746–6764

Brazauskas V (2003) Information matrix for Pareto (IV), Burr, and related distributions. Commun Stat Theory Methods 32:315–325

Barbiero A (2014) An alternative discrete skew Laplace distribution. Stat Methodol 16:47–67

de Zea Bermudez P, Kotz S (2010) Parameter estimation of the generalized Pareto distribution-part I. J Stat Plan Inference 140:1353–1373

Buddana A, Kozubowski TJ (2014) Discrete Pareto distributions. Econ Qual Control 29:143–156

Freeland RK (2010) True integer value time series. Adv Stat Anal 94:217–229

Inusah S, Kozubowski TJ (2006) A discrete analogue of the Laplace distribution. J Stat Plan Inference 136:1090–1102

Klar B (2015) A note on gamma difference distributions. J Stat Comput Simul 85:3708–3715

Kotz S, Kozubowski TJ, Podgórski K (2001) The Laplace distribution and generalizations: a revisit with applications to communications, economics, engineering and finance. Birkhäuser, Boston

Kozubowski TJ, Inusah S (2006) A skew Laplace distribution on integers. Ann Inst Stat Math 58:555–571

Nadarajah S, Afuecheta E, Chan S (2013) A double generalized Pareto distribution. Stat Probab Lett 83:2656–2663

Nastić AS, Ristić MM, Djordjević MS (2016) An INAR model with discrete Laplace marginal distributions. Braz J Probab Stat 30:107–126

Omura JK, Kailath T (1965) Some useful probability functions. Technical Report 7050-6, Stanford University

Ong SH, Shimizu K, Ng CM (2008) A class of discrete distributions arising from difference of two random variables. Comput Stat Data Anal 52:1490–1499

Panjer HH (1981) Recursive evaluation of compound distributions. ASTIN Bull 12:22–26

Seetha Lekshmi V, Sebastian S (2014) A skewed generalized discrete Laplace distribution. Int J Math Stat Invent 2:95–102

Shannon CE (1951) Prediction and entropy of printed English. Bell Syst Tech J 30:50–64

Simon MK (2002) Probability distributions involving Gaussian random variables: a handbook for engineers and scientists. Springer, Berlin

Skellam JG (1946) The frequency distribution of the difference between two Poisson variates belonging to different populations. J R Stat Soc Ser A 109:296

Smith RL (1984) Threshold methods for sample extremes. In: de Oliveira JT (ed) Statistical extremes and applications. Reidel, Dordrecht, pp 621–638

Sundt B, Jewell WS (1981) Further results on recursive evaluation of compound distributions. ASTIN Bull 12:27–39

Wang H (2012) Bayesian graphical lasso models and efficient posterior computation. Bayesian Anal 7:867–886

Acknowledgements

We wish to thank the referee for his/her comments, which have improved the presentation.

Cite this article

Aly, EE. A Unified Approach for Developing Laplace-Type Distributions. J Indian Soc Probab Stat 19, 245–269 (2018). https://doi.org/10.1007/s41096-018-0042-3
