Abstract

The paper deals with the asymptotic joint posterior distribution of \((\theta , \phi )\) in a GI / G / 1 queueing system observed over a continuous time interval (0, T], where \(\theta \) and \(\phi \) are the unknown parameters of the arrival and departure processes, respectively, and T is a suitable stopping time.

1 Introduction

Although statistical inference plays a major role in any application of queueing models, the study of asymptotic inference problems for queueing systems has received little attention and can be traced back to the works of Basawa and Prabhu (1981, 1988), who discussed the maximum likelihood (ML) estimators of the parameters in single server queues. Basawa et al. (1996) studied the consistency and asymptotic normality of the estimators of the parameters in a GI / G / 1 queue based on information on waiting times. Acharya (1999) studied the rate of convergence of the distribution of the maximum likelihood estimators of the arrival and service rates in a single server queue. Acharya and Mishra (2007) proved the Bernstein–von Mises theorem for the arrival process in an M / M / 1 queue.

From a Bayesian outlook, inferences about a parameter are based on its posterior distribution. The study of asymptotic posterior normality can be traced back to the time of Laplace and has attracted the attention of many authors. A conventional approach to such problems starts from a Taylor series expansion of the log-likelihood function around the maximum likelihood estimator (MLE) and proceeds from there to develop expansions that have the standard normal as a leading term and hold in probability or almost surely, given the data. This type of study has not been carried out for queueing systems. In the general setting, earlier work includes Walker (1969) and Johnston (1970) for i.i.d. observations, and Hyde and Johnston (1979), Basawa and Prakasa Rao (1980), Chen (1985) and Sweeting and Adekola (1987) for stochastic processes. More recently, Kim (1998) provided a set of conditions under which asymptotic normality holds in quite general, possibly non-stationary, time series models, and Weng and Tsai (2008) studied asymptotic normality for multiparameter problems.

In this paper, our aim is to prove that the joint posterior distribution of \((\theta , \phi )\) is asymptotically normal for the GI / G / 1 queueing model in the context of exponential families. In Sect. 2 we introduce the model of interest and explain some elements of the maximum likelihood estimator (MLE) as well as the Bayesian procedure. In Sect. 3 we prove our main result. For illustration, we provide an example in Sect. 4. Section 5 deals with the simulation study, and concluding remarks are given in Sect. 6.

2 GI / G / 1 Queueing Model

Consider a single server queueing system in which the interarrival times \(\{u_{k}, k\ge 1\}\) and the service times \(\{v_{k}, k\ge 1\}\) are two independent sequences of independent and identically distributed nonnegative random variables with densities \(f(u; \theta )\) and \(g(v; \phi )\), respectively, where \(\theta \) and \(\phi \) are unknown parameters. Let us assume that f and g belong to the continuous exponential families given by

and

where \(\Theta _1=\{\theta >0:~ k_1(\theta )< \infty \}\) and \(\Theta _2=\{\phi >0:~ k_2(\phi )< \infty \}\) are open subsets of \(\mathbb {R}\). It is easy to see that \(E_{\theta }(h_1(u)) = k_1^{\prime }(\theta )\), \(var_{\theta }(h_1(u))=k_1^{\prime \prime }(\theta )\), \(E_{\phi }(h_2(v)) = k_2^{\prime }(\phi )\) and \(var_{\phi }(h_2(v)) = k_2^{\prime \prime }(\phi )\), all of which are assumed to be finite.
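As a sketch of why these moment identities hold, assume the interarrival density has the natural exponential family form written below. This parametrisation is an assumption chosen to be consistent with the identities above, and \(a_1\) is a hypothetical name for the base factor. The same argument applies to \(g(v; \phi )\) with \(h_2\) and \(k_2\).

```latex
\[
f(u;\theta) = a_1(u)\exp\{\theta h_1(u) - k_1(\theta)\}, \qquad
k_1(\theta) = \log \int a_1(u)\, e^{\theta h_1(u)}\, du .
\]
% Differentiating k_1 under the integral sign:
\[
k_1'(\theta)
  = \frac{\int h_1(u)\, a_1(u)\, e^{\theta h_1(u)}\, du}{\int a_1(u)\, e^{\theta h_1(u)}\, du}
  = E_\theta\big(h_1(u)\big),
\qquad
k_1''(\theta)
  = E_\theta\big(h_1(u)^2\big) - \big\{E_\theta\big(h_1(u)\big)\big\}^2
  = \mathrm{var}_\theta\big(h_1(u)\big).
\]
```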

For simplicity we assume that the initial customer arrives at time \(t=0\). Our sampling scheme is to observe the system over a continuous time interval (0, T], where T is a suitable stopping time. The sample data consist of

where A(T) is the number of arrivals and D(T) is the number of departures during (0, T]. Obviously no arrivals occur during \([\sum _{i=1}^{A(T)} u_{i}, T]\) and no departures during \([\gamma (T)+\sum _{i=1}^{D(T)}v_{i}, T]\), where \(\gamma (T)\) is the total idle period in (0, T].
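The sampling scheme can be illustrated with a small simulation. The sketch below is not the authors' procedure: it assumes exponential interarrival and service times (rates `theta` and `phi`), a first-come-first-served discipline, and a fixed horizon `T` instead of a general stopping time. The function name `observe_queue` and the returned keys are hypothetical.

```python
import random

def observe_queue(theta, phi, T, seed=0):
    """Simulate one FIFO single-server queue on (0, T] and return the sample data.

    Assumptions (not from the paper): exponential interarrival times with
    rate theta, exponential service times with rate phi, fixed horizon T.
    """
    rng = random.Random(seed)

    # The initial customer arrives at t = 0; later arrivals are spaced by u_k.
    arrivals, u = [0.0], []
    while True:
        gap = rng.expovariate(theta)
        if arrivals[-1] + gap > T:
            break  # the next arrival would fall after T
        u.append(gap)
        arrivals.append(arrivals[-1] + gap)

    v, idle, free_at = [], 0.0, 0.0
    in_service_at_T = False
    for a in arrivals:
        if a > free_at:
            idle += a - free_at  # server was idle until this customer arrived
        start = max(a, free_at)
        s = rng.expovariate(phi)
        if start + s > T:
            in_service_at_T = True  # service unfinished at T: not a departure
            break
        v.append(s)
        free_at = start + s
    if not in_service_at_T:
        idle += T - free_at  # system empty from the last departure until T

    # A = number of completed interarrival times, D = number of departures,
    # idle = total idle period gamma(T)
    return {"A": len(u), "D": len(v), "u": u, "v": v, "idle": idle}
```

The returned values satisfy \(\sum u_i \le T\) and \(\gamma (T)+\sum v_i \le T\), which matches the remark that no arrivals or departures occur in the final incomplete intervals.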

The likelihood function based on data (2.3) is given by

where F and G are distribution functions corresponding to the densities f and g respectively.

The approximate likelihood \(L_{T}^{(a)}(\theta ,\phi )\) is defined as

where

and

The maximum likelihood estimates obtained from (2.5) are asymptotically equivalent to those obtained from (2.4) provided that the following two conditions are satisfied for \(T \rightarrow \infty \):

and

The implications of these conditions have been explained by Basawa and Prabhu (1988).

Basawa and Prabhu (1988) have shown that the maximum likelihood estimators of \(\theta \) and \(\phi \) are given by

where \(\eta _i^{-1}(.)\) denotes the inverse functions of \(\eta _i(.)\) for \(i=1, 2\) and

and

The Fisher information matrix is given by

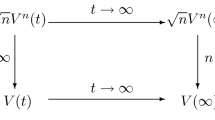

Under suitable stability conditions on the stopping times, Basawa and Prabhu (1988) have proved that the estimators \(\hat{\theta }_T\) and \(\hat{\phi }_T\) are consistent, i.e.,

and

where \(\theta _0\) and \(\phi _0\) denote the true values of \(\theta \) and \(\phi \) respectively, and the symbol \(\Rightarrow \) denotes convergence in distribution.

From Eq. (2.5) we have the loglikelihood function

where

and

Let

Similarly \(\ell _T^{'}(\hat{\theta }_T)\), \(\ell _T^{'}(\hat{\phi }_T)\), \(\ell _T^{'}(\phi _0)\), \(\ell _T^{''}(\phi _0)\), \(\ell _T^{''}(\hat{\theta }_T)\) and \(\ell _T^{''}(\hat{\phi }_T)\) are defined.

Let \(\pi _1(\theta )\) and \(\pi _2(\phi )\) be the prior distributions of \(\theta \) and \(\phi \) respectively, and let \(\pi (\theta , \phi )\) be the joint prior distribution of \(\theta \) and \(\phi \). Since the interarrival time and service time distributions are independent, we have \(\pi (\theta , \phi )=\pi _1(\theta ) \pi _2(\phi )\). Then the joint posterior density of \((\theta , \phi )\) is

with

and

the marginal posterior densities of \(\theta \) and \(\phi \), respectively. Let \(\tilde{\theta }_T\) and \(\tilde{\phi }_T\) be the Bayes estimators of \(\theta \) and \(\phi \) respectively.
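A marginal posterior density of this kind is proportional to likelihood times prior, so it can be approximated numerically on a grid. The following is a minimal sketch under stated assumptions: the helper `grid_posterior`, the toy data `u`, the exponential likelihood and the Gamma(a, b) prior are all illustrative choices, not part of the paper.

```python
import math

def grid_posterior(log_lik, prior_pdf, grid):
    """Posterior density values on an equally spaced grid (Riemann normalisation)."""
    log_w = [log_lik(t) + math.log(prior_pdf(t)) for t in grid]
    m = max(log_w)                        # subtract the max to avoid overflow
    w = [math.exp(x - m) for x in log_w]
    step = grid[1] - grid[0]
    z = sum(w) * step                     # approximates the normalising integral
    return [x / z for x in w]

# Hypothetical illustration: exponential interarrival times with rate theta
# and a Gamma(a, b) prior (shape a, rate b).
u = [0.4, 0.7, 0.2, 0.9, 0.3]
a, b = 2.0, 1.0
log_lik = lambda t: len(u) * math.log(t) - t * sum(u)
prior = lambda t: b**a / math.gamma(a) * t**(a - 1) * math.exp(-b * t)

grid = [0.001 * k for k in range(1, 15001)]   # theta in (0, 15]
post = grid_posterior(log_lik, prior, grid)

# Posterior mean, i.e. the Bayes estimator under squared-error loss (an assumption).
post_mean = sum(t * p for t, p in zip(grid, post)) * 0.001
```

Working with log-weights keeps the computation stable when the number of observations is large and the likelihood itself would underflow.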

In the next section we will state and prove our main result.

3 Main Result

Theorem 3.1

Let \((\theta _0, \phi _0)\in \Theta _1 \times \Theta _2\). If the prior densities \(\pi _1(\theta )\) and \(\pi _2(\phi )\) are continuous and positive at \(\theta _0\) and \(\phi _0\) respectively then, for any \(\alpha _i\), \(\beta _i\) such that \(-\infty \le \alpha _i \le \beta _i \le \infty \), \(i=1, 2\), the posterior probability that \((\hat{\theta }_T + \alpha _1 \sigma _T \le \theta \le \hat{\theta }_T + \beta _1 \sigma _T, \hat{\phi }_T + \alpha _2 \tau _T \le \phi \le \hat{\phi }_T + \beta _2 \tau _T)\), namely

tends in \([P_{(\theta _0, \phi _0)}]\) probability to

as \(T\rightarrow \infty \), where \(\sigma _T\) and \(\tau _T\) are the positive square roots of \([-\ell _T^{''}(\hat{\theta }_T)]^{-1}\) and \([-\ell _T^{''}(\hat{\phi }_T)]^{-1}\) respectively.
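The statement can be checked numerically for one marginal in a simple special case. The sketch below assumes exponential interarrival times with rate \(\theta \) and a gamma prior, so that the posterior is a gamma density. The function names are hypothetical, `n` plays the role of \(A(T)\) and `s` the role of \(\sum u_i\).

```python
import math

def gamma_logpdf(x, shape, rate):
    """Log density of a Gamma(shape, rate) distribution at x > 0."""
    return (shape * math.log(rate) - math.lgamma(shape)
            + (shape - 1) * math.log(x) - rate * x)

def posterior_interval_prob(n, s, a, b, alpha, beta, steps=4000):
    """Posterior mass of [theta_hat + alpha*sigma, theta_hat + beta*sigma].

    Assumes exponential data (n observations with sum s) and a Gamma(a, b)
    prior, so the posterior is Gamma(n + a, s + b).
    """
    theta_hat = n / s
    sigma = math.sqrt(n) / s               # [-l''(theta_hat)]^(-1/2)
    lo = max(theta_hat + alpha * sigma, 1e-12)   # keep the range inside theta > 0
    hi = theta_hat + beta * sigma
    h = (hi - lo) / steps
    # midpoint rule applied to the posterior density
    return h * sum(math.exp(gamma_logpdf(lo + (k + 0.5) * h, n + a, s + b))
                   for k in range(steps))

def normal_limit(alpha, beta):
    """Phi(beta) - Phi(alpha) for the standard normal distribution function."""
    Phi = lambda z: 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    return Phi(beta) - Phi(alpha)

if __name__ == "__main__":
    for n in (25, 100, 400, 1600):
        p = posterior_interval_prob(n, float(n), 1.5, 2.5, -1.0, 1.0)
        print(n, round(p, 4), round(normal_limit(-1.0, 1.0), 4))
```

Here the data are idealised so that \(\hat{\theta }_T = 1\) for every `n`. With simulated data the posterior probability would fluctuate around the normal limit, in line with convergence in probability.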

Proof of Theorem 3.1

The first integral in the above theorem can be written as the product of the integrals of the marginal posterior densities, i.e.,

and the convergence of both can be established separately.

For any \(\delta >0\), let us write \(\mathcal {N}(\varrho , \delta )=(\varrho -\delta , \varrho +\delta ) \) with \(\varrho \in \Theta _1\) and \(\mathcal {J}_B=\int _{B}L_T^{(a)}(\theta ) \pi _1(\theta ) d\theta \) where \( B \subseteq \Theta _1\). Hence,

with \(\delta _T=\frac{\sigma _T(\beta _1-\alpha _1)}{2}\) and \(\theta _T=\hat{\theta }_T +\frac{\sigma _T(\alpha _1+\beta _1)}{2}\). Then, we want to prove that

in probability \([P_{\theta _0}]\), where \(\Phi (z)=\frac{1}{\sqrt{2\pi }} \int _{-\infty }^{z}e^{-\frac{s^2}{2}}ds\).

Let us split \(\mathcal {J}_{\Theta _1}\) into \(\mathcal {J}_{\Theta _1 \setminus \mathcal {N}(\theta _0, \delta )}\) and \(\mathcal {J}_{\mathcal {N}(\theta _0, \delta )}\). Then, to obtain the above result, it is sufficient to prove that the following statements hold in probability \([P_{\theta _0}]\): for some \(\delta >0\),

(a) \( \lim \limits _{ T \rightarrow \infty } [L_T^{(a)}(\hat{\theta }_T) \sigma _T]^{-1} \mathcal {J}_{\Theta _1 \setminus \mathcal {N}(\theta _0, \delta )} = 0 \)

(b) \( \lim \limits _{ T \rightarrow \infty } [L_T^{(a)}(\hat{\theta }_T) \sigma _T]^{-1} \mathcal {J}_{\mathcal {N}(\theta _0, \delta )} = (2\pi )^\frac{1}{2} \pi _1(\theta _0) \)

(c) \( \lim \limits _{ T \rightarrow \infty } [L_T^{(a)}(\hat{\theta }_T) \sigma _T]^{-1} \mathcal {J}_{\mathcal {N}(\theta _T, \delta _T)} = (2\pi )^\frac{1}{2} \pi _1(\theta _0) (\Phi (\beta _1)-\Phi (\alpha _1)) \)

Define

If \(\theta \) belongs to \(\mathcal {N}(\theta _0,\delta )\) for some \(\delta >0\), \(\ell _T^{\prime \prime }(\theta )/ \ell _T^{\prime \prime }(\theta _0)\) is close enough to 1 and, since \(\hat{\theta }_T \rightarrow \theta _0\) almost surely, \( \ell _T^{\prime \prime }(\hat{\theta }_T)/ \ell _T^{\prime \prime }(\theta _0)\) is almost surely close to 1 for T sufficiently large. Therefore we can deduce that for given \(\varepsilon >0\), we can take \(\delta \) such that, if T is large enough,

Consider also

Since \(\ell _T(.)\) has a strict maximum at \(\hat{\theta }_T\), it is obvious that \(q_T(.)\) is negative on \(\Theta _1 \setminus \mathcal {N}(\theta _0,\delta )\) for T large enough. Moreover, since \(\hat{\theta }_T \rightarrow \theta _0\) almost surely, it can be shown that there exists a positive constant \(\kappa (\delta )\) such that

Now,

We have \(-\ell _T^{\prime \prime }(\theta _0)= A(T) \sigma ^2(\theta _0)\), which diverges to \(\infty \) almost surely as \(T \rightarrow \infty \). So, in the above expression

in probability and, using Eq. (3.5), for some constant M and T large enough

in probability and, consequently (a) holds.

Let us prove (b). Write

Using Taylor expansion around \(\hat{\theta }_T\),

for \(\bar{\theta }_T=\theta +\xi (\hat{\theta }_T-\theta )\) with \(0<\xi <1\). Thus letting

we have

Using Eqs. (3.8) and (3.9) in Eq. (3.7) and, for some \(\delta >0\) and T large enough such that \(\hat{\theta }_T \in \mathcal {N}(\theta _0,\delta )\), we have, for every \(\theta \in \mathcal {N}(\theta _0,\delta )\)

and consequently,

Since \(\pi _1(\theta )\) is continuous and positive at \(\theta =\theta _0\), then for given \(0<\varepsilon <1\), we can choose \(\delta \) small enough so that

Denote

Then from Eq. (3.12) we get that

If \(\sup _{\theta \in \mathcal {N}(\theta _0,\delta )} |R_T|< \varepsilon <1\), then

and for \(\eta =+\varepsilon \) or \(-\varepsilon \), making a change of variable,

Since \(\sigma _T^{-1} \rightarrow \infty \) and \(\hat{\theta }_T \rightarrow \theta _0\) almost surely, it follows that the limits of integration \((\theta _0-\delta -\hat{\theta }_T)(1+\eta )^\frac{1}{2}\sigma _T^{-1}\) and \((\theta _0+\delta -\hat{\theta }_T)(1+\eta )^\frac{1}{2}\sigma _T^{-1}\) in the above equation converge to \(-\infty \) and \(\infty \) respectively. Therefore, the term in square brackets in Eq. (3.14) converges to 1. Thus, using an appropriate bound on \(R_T\), it follows that

in probability as \(T \rightarrow \infty \) and, using the above expression with the Eq. (3.13) we have the following bounds for \(\mathcal {J}_{\mathcal {N}(\theta _0,\delta )}\):

Hence (b) holds.
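Statement (b) is a Laplace-type approximation and can be checked numerically in a special case. The sketch below assumes exponential data with rate \(\theta \), idealised so that \(\hat{\theta }_T=\theta _0\), and a gamma prior. The name `laplace_ratio` and all numerical settings are hypothetical.

```python
import math

def laplace_ratio(n, theta0, prior_pdf, delta=0.5, steps=20000):
    """[L(theta_hat) * sigma]^(-1) times the integral of L * prior over N(theta0, delta).

    Assumes exponential data with n observations whose sum is s = n / theta0,
    so that the MLE equals theta0 exactly. Requires theta0 - delta > 0.
    """
    s = n / theta0
    theta_hat, sigma = n / s, math.sqrt(n) / s
    log_l = lambda t: n * math.log(t) - s * t      # log-likelihood up to a constant
    lo = theta0 - delta
    h = 2 * delta / steps
    total = 0.0
    for k in range(steps):                          # midpoint rule
        t = lo + (k + 0.5) * h
        total += math.exp(log_l(t) - log_l(theta_hat)) * prior_pdf(t)
    return total * h / sigma

# Gamma(a, b) prior with shape a and rate b (illustrative hyper-parameters).
a, b = 1.5, 2.5
prior = lambda t: b**a / math.gamma(a) * t**(a - 1) * math.exp(-b * t)

ratio = laplace_ratio(400, 1.0, prior)
target = math.sqrt(2 * math.pi) * prior(1.0)        # right-hand side of (b)
```

Dividing by the likelihood at the MLE inside the exponent avoids overflow, and comparing `ratio` with `target` for increasing `n` shows how quickly the limit in (b) is approached in this example.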

Finally, let us show (c). Using the same arguments and notations above, given \(\varepsilon >0\), there exists \(\delta \) such that if \(\mathcal {N}(\theta _T,\delta _T) \subseteq \mathcal {N}(\theta _0,\delta )\) for T large enough then

While the last term in Eq. (3.14) becomes

Therefore, we obtain that

and now (3.3) is established.

Similarly, using the same arguments as in the above, it can be shown that

in probability \([P_{\phi _0}]\) and the proof is completed. \(\square \)

4 Example

Let us consider an M / M / 1 queueing system. Under the Markovian set-up we have

So, the loglikelihood function is written as

and the MLEs are given by

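Here \(\ell _T^{''}(\theta )=-A(T)/\theta ^2\) and \(\ell _T^{''}(\phi )=-D(T)/\phi ^2\), so that \(\sigma _T=\left[ -\ell _T^{''}(\hat{\theta }_T)\right] ^{-\frac{1}{2}}=\frac{\hat{\theta }_T}{\sqrt{A(T)}}=\frac{\sqrt{A(T)}}{\sum _{i=1}^{A(T)}u_i}\) and \(\tau _T=\left[ -\ell _T^{''}(\hat{\phi }_T)\right] ^{-\frac{1}{2}} =\frac{\hat{\phi }_T}{\sqrt{D(T)}}=\frac{\sqrt{D(T)}}{\sum _{i=1}^{D(T)}v_i}\).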

Let us assume that the conjugate prior distributions of \(\theta \) and \(\phi \) are gamma distributions with hyper-parameters \((a_1, b_1)\) and \((a_2, b_2)\), that is

where \(a_i, b_i > 0\) for \(i=1,2\).

Then, the posterior distribution of \(\theta \) can be computed as:

Similarly,

It is easy to see that

Here, the posterior distributions of \(\theta \) and \(\phi \) are gamma distributions, namely \(\text {Gamma}(A(T)+a_1, \sum _{i=1}^{A(T)}u_i+b_1)\) and \(\text {Gamma}(D(T)+a_2, \sum _{i=1}^{D(T)}v_i+b_2)\). Since a gamma distribution with a large shape parameter is approximately normal by the central limit theorem (CLT), the joint posterior distribution converges to a normal distribution as \(T \rightarrow \infty \).
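The conjugate update above is easy to express in code. The sketch below uses hypothetical function names, takes the gamma distribution in its shape and rate parametrisation, and takes the Bayes estimator to be the posterior mean, which assumes squared-error loss. It also compares the posterior standard deviation with \(\left[ -\ell _T^{''}(\hat{\theta }_T)\right] ^{-\frac{1}{2}}\).

```python
import math

def gamma_posterior(a, b, count, total):
    """Gamma(a, b) prior (shape, rate) with exponential data -> posterior (shape, rate)."""
    return a + count, b + total

def summarize(a, b, count, total):
    """MLE, posterior mean and two scale measures for one parameter."""
    shape, rate = gamma_posterior(a, b, count, total)
    return {
        "mle": count / total,
        "posterior_mean": shape / rate,         # Bayes estimator under squared-error loss
        "posterior_sd": math.sqrt(shape) / rate,
        "sigma_T": math.sqrt(count) / total,    # [-l''(mle)]^(-1/2) for exponential data
    }

if __name__ == "__main__":
    # count stands for A(T) and total for the sum of the interarrival times
    print(summarize(1.5, 2.5, count=1000, total=1000.0))
```

For large counts the prior hyper-parameters become negligible relative to the data, so the posterior mean approaches the MLE and the posterior standard deviation approaches `sigma_T`.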

5 Simulation

To assess the feasibility of the main result discussed in Sect. 3, a simulation study was conducted for the M / M / 1 queueing system. For given true parameter values \(\theta _0\) and \(\phi _0\), the MLEs (\(\hat{\theta }_T\) and \(\hat{\phi }_T\)) are computed over different time intervals (0, T]. The Bayes estimators (\(\tilde{\theta }_T\) and \(\tilde{\phi }_T\)) of \(\theta \) and \(\phi \) are also computed for different choices of the hyper-parameters of the gamma priors. We consider two pairs of true parameter values \((\theta _0, \phi _0)\), namely (1, 2) and (2, 3), and two sets of hyper-parameters: \((a_1, b_1)=(1.5, 2.5)\), \((a_2, b_2)=(3, 3.5)\) and \((a_1, b_1)=(3, 5)\), \((a_2, b_2)=(4, 5.5)\). The simulation procedure is repeated 10000 times to estimate the parameters. The computed values of the estimators and their respective standard errors are presented in Tables 1, 2 and 3; the values in parentheses are the standard errors.
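A study of this kind can be sketched as follows. This is not the authors' code. For brevity it assumes that each replication consists of `n` completed exponential times rather than an observation of the queue over (0, T], it takes the Bayes estimator to be the posterior mean, and it reports the standard deviation across replications as the standard error. The function name `replicate` is hypothetical.

```python
import random
import statistics

def replicate(rate0, a, b, n, reps=10000, seed=0):
    """Monte Carlo mean and standard deviation of the MLE and the Bayes estimator.

    rate0 : true rate (theta_0 or phi_0)
    a, b  : shape and rate of the gamma prior
    n     : number of completed exponential times per replication (an assumption)
    """
    rng = random.Random(seed)
    mle, bayes = [], []
    for _ in range(reps):
        total = sum(rng.expovariate(rate0) for _ in range(n))
        mle.append(n / total)                   # maximum likelihood estimate
        bayes.append((n + a) / (total + b))     # posterior mean under the gamma prior
    return {
        "mle": (statistics.mean(mle), statistics.stdev(mle)),
        "bayes": (statistics.mean(bayes), statistics.stdev(bayes)),
    }

if __name__ == "__main__":
    # One of the settings described in the text: theta_0 = 1, (a_1, b_1) = (1.5, 2.5)
    for n in (50, 200, 800):
        print(n, replicate(1.0, 1.5, 2.5, n, reps=2000))
```

Replacing the fixed sample size by a simulated queue on (0, T] would bring the sketch closer to the sampling scheme of Sect. 2, at the cost of a longer program.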

6 Concluding Remarks

In the simulation study we presented the estimates obtained by the proposed methods. The estimators are close to the true parameter values and their standard errors are small.

References

Acharya SK (1999) On normal approximation for maximum likelihood estimation from single server queues. Queueing Syst 31:207–216

Acharya SK, Mishra MN (2007) Bernstein–von Mises theorem for \(M|M|1\) queue. Commun Stat Theory Methods 36:207–209

Basawa IV, Prabhu NU (1981) Estimation in single server queues. Naval Res Logist Q 28:475–487

Basawa IV, Prabhu NU (1988) Large sample inference from single server queues. Queueing Syst 3(4):289–304

Basawa IV, Prakasa Rao BLS (1980) Statistical inference for stochastic processes. Academic, London

Basawa IV, Prabhu NU, Lund R (1996) Maximum likelihood estimation for single server queues from waiting time data. Queueing Syst 24:155–167

Chen CF (1985) On asymptotic normality of limiting density functions with Bayesian implications. J R Stat Soc Ser B 47:540–546

Hyde CC, Johnston IM (1979) On asymptotic posterior normality for stochastic process. J R Stat Soc Ser B 41:184–189

Johnston R (1970) Asymptotic expansion associated with posterior distributions. Ann Math Stat 41:851–864

Kim JY (1998) Large sample properties of posterior densities, Bayesian information criterion and the likelihood principle in nonstationary time series models. Econometrica 66(2):359–380

Sweeting TJ, Adekola AO (1987) Asymptotic posterior normality for stochastic process revisited. J R Stat Soc Ser B 49:215–222

Walker AM (1969) On the asymptotic behaviour of posterior distributions. J R Stat Soc Ser B 31:80–88

Weng RC, Tsai WC (2008) Asymptotic posterior normality for multiparameter problems. J Stat Plan Inference 138:4068–4080

Acknowledgements

The authors are thankful to the referees for their comments and useful suggestions.

Cite this article

Singh, S.K., Acharya, S.K. Normal Approximation of Posterior Distribution in GI / G / 1 Queue. J Indian Soc Probab Stat 20, 51–64 (2019). https://doi.org/10.1007/s41096-018-0055-y

Keywords

- GI / G / 1 queue

- Exponential families

- Maximum likelihood estimator

- Posterior distribution

- Asymptotic normality