Abstract

A super-structure system for probability densities, covering both the usual types and their fractional counterparts, is developed using time scale theory. From a mathematical point of view, we discover duals of the Poisson process on the time scale \(\mathbb {T}=\mathbb {R}\) for the time scales \(\mathbb {T}=\mathbb {Z}\) and \( \mathbb {T}=q^{\mathbb {Z}},\) using both \(\nabla -\)calculus and \(\Delta -\)calculus. We also study the fractional extensions of the Poisson process on these time scales and identify their duals. A simulation study compares the nabla and delta types of the resulting distributions, both ordinary and fractional. As an application, we propose new substitution boxes (S-boxes) based on the proposed stochastic models and compare their performance. Given that the S-box is the core source of confusion in the Advanced Encryption Standard (AES), the construction of these new S-boxes represents an interesting application of these stochastic models.

1 Introduction

Time scale theory ([1,2,3]) provides a unified formalism for continuous and discrete calculus and extends the study of dynamic systems to any closed subset \(\mathbb {T}\) of the real line, called a “time scale”. The case \(\mathbb {T}=\mathbb {R}\) corresponds to real analysis and the case \(\mathbb {T}=\mathbb {Z}\) to discrete-time analysis, so differential and difference equations are treated within a single framework. This formalism also enables fractional Riemann–Liouville calculus on any time scale [4]. Fractional calculus deals with derivatives and integrals of arbitrary order ([48, 49]): it extends the Newtonian calculus to allow for differentiation and integration of any real or complex order, not just integer values. The combination of time scale theory and fractional calculus has been used to model complex systems that exhibit both continuous and discrete behaviors, providing a more comprehensive understanding of their dynamics. In particular, the Cauchy formula for repeated integration, which compresses m successive integrations of a function into a single integral, is extended on time scales from integer order m to fractional orders. Recent studies employing the combination of time scales and fractional calculus in probability theory demonstrate the potential of time scale theory; see, for example, [6,7,8,9,10] and [11].

The Poisson process is used to model random events that occur in a given time interval in telecommunications, biology, physics and so on. The key assumption of the Poisson process is that the numbers of events occurring in non-overlapping time intervals are independent of each other. Some discrete distributions, such as the binomial, geometric and negative binomial, are defined on the stochastic model of a sequence of independent and identically distributed Bernoulli trials. The Poisson distribution is defined as an approximation of the binomial or negative binomial distributions. Recently, it was observed that the binomial distribution on \(\mathbb {Z}\) is a dual of the Poisson distribution on the real time scale [5]. The authors of [5] used delta calculus to generalize the Poisson process to an arbitrary time scale. This is just one of the surprises that time scale theory has brought to probability theory. To explore the usefulness of this theory in distribution theory, two essential questions arise:

a) Is it possible to generate a Poisson process on an arbitrary time scale by applying nabla calculus?, and

b) Is it possible to generate a fractional version of the Poisson process on an arbitrary time scale by applying both nabla and delta calculus? We address both questions in this paper.

This work introduces ordinary and fractional extensions of the differential equation that describes a Poisson process on an ordinary time scale. For both the ordinary and the fractional case, the nabla and delta types of calculus on a time scale are considered: (ordinary or fractional) nabla Poisson processes, where the differential equations are formulated with nabla calculus; and (ordinary or fractional) delta Poisson processes, where they are formulated with delta calculus. The distributions obtained from the ordinary differential equations (delta or nabla types) include some power series distributions such as the Poisson, binomial and negative binomial, as well as gamma (continuous and discrete types) and Euler distributions. The distributions obtained from the fractional differential equations (delta or nabla types) include only the fractional Poisson distribution as a known distribution; that is, most of the obtained distributions are new. A simulation study is carried out to compare the distributions obtained by nabla and delta calculus. For the simulated data sets, the parameter estimates are obtained using the maximum likelihood method.

Fractional distributions are employed as a powerful tool to model complex data and complex systems. They are characterized by their probability density functions (PDFs). The main property of fractional distributions is that these models can take different values on the real line as their parameters and mass values change. This property makes them more flexible than the ordinary models. The rationale behind this flexibility lies in the ability of the PDFs to accommodate fractional support, which is the very reason they are labeled as fractional models. The flexibility gives more freedom to obtain different random values, which is desirable in the construction of S-boxes: it increases the randomness rate and hence the security of the networks. In this paper, new S-boxes are proposed for the obtained distributions (ordinary and fractional types), and the performance of the S-boxes created in this way is compared.

Some advantages of this paper are listed below:

a) Finding a versatile differential equation that generates most of the distributions;

b) Finding the relationship between ordinary and fractional types of distributions;

c) Finding the relationship between ordinary distributions and quantum distributions;

d) Finding the relationship between continuous and discrete types of distributions (ordinary and fractional);

e) Introducing delta and nabla versions of distributions; as an example, this paper establishes a new relationship between the binomial and negative binomial distributions by applying time scale calculus;

f) Introducing fractional stochastic models as perfect models to create S-boxes in cryptography;

g) Comparing the observed distributions with nabla and delta calculus by using a simulation study.

The paper is organized as follows. In the next section, we briefly describe the tools from time scale theory needed to define a versatile differential equation, and give insight into how this equation results from its ordinary counterpart and time scale calculus. Section 3 presents the steps for solving the equation on an arbitrary time scale; we also obtain the related distributions, such as the Erlang and exponential. We present a fractional version of the versatile differential equation along with its solutions in Section 4. Some S-boxes designed from the obtained distributions are given in Section 5.

2 Preliminaries

Every closed subset of the real line can be viewed as a time scale \( \mathbb {T} \). In this work, we consider nabla calculus on a time scale \(\mathbb {T},\) where \( t \ge 0,\,t \in \mathbb {T}.\) The backward jump operator and the graininess are defined as \( \rho (t)=\sup \lbrace s\in \mathbb {T}; s<t\rbrace \) and \( \nu (t)=t-\rho (t),\) respectively. The nabla derivative of a function f(t) on \( \mathbb {T} \) is defined as

The nabla integral is the antiderivative in the sense that, if \( F^{\nabla }(t) =f(t), \) then for \( s,t\in \mathbb {T},\)

The nabla Taylor monomials are defined as

The differential equation

has the solution \( f(t)=e_{z} (t,0),\) where

and z belongs to the set of regressive and right dense (rd)-continuous functions \( f : \mathbb {T} \rightarrow \mathbb {R}.\) For a regulated function \( f : \mathbb {T} \rightarrow \mathbb {R}, \) the nabla Laplace transform is defined by

where \( \Theta s :=-s/(1-\nu (t)s). \) The nabla fractional Taylor monomials are defined as

to those regressive functions \( s \in \mathbb {C} \setminus \lbrace 0 \rbrace ,\, t \ge t_{0};\) and for \( t < t_{0},\, h_{\alpha } (t,t_{0})= 0.\) Also, \(h_{-\alpha } (t,0):=h_{-\alpha } (t)\) is the nabla Dirac delta function. The Caputo nabla fractional derivative of order \( \alpha \ge 0\) is defined as

where \( \mathcal {D}_{s}^{\alpha }f(t) =\mathcal {D} ^{n} \mathcal {I}_{s}^{n-\alpha }f(t)\) and \( \mathcal {I}_{s}^{ \alpha }f(t)=\int _{s}^{t}h_{\alpha -1}(t,\rho (\tau ))f (\tau )\nabla \tau \) are the Riemann-Liouville nabla fractional derivative and integral, respectively. The solution to the differential equation

is \( f(t)= \texttt{E} _{\alpha }(z ; t, s ):= \texttt{E}_{\alpha ,1}(z; t, s)\), where

is the Mittag-Leffler function, defined provided the right-hand series is convergent, where \(\alpha ,\,\beta >0\) and \( \lambda \in \mathbb {R}.\) The nabla Laplace transform of this function is \( \frac{s^{\alpha -\beta }}{s^{\alpha }-z}\), where \(\vert z\vert <\vert s\vert ^{\alpha }\). By differentiating k times with respect to \(\lambda \) on both sides of this formula, we get the following result:

Some important time scales form the basis of the theory in this study. Choosing the time scale \(\mathbb {T}=\mathbb {R}\) gives the continuous calculus, where every point is dense. Hence, we have \( \rho (t) = t, \nu (t) =0 \) and \( f ^{\nabla } = f ^{\prime }. \) The Lebesgue \(\nabla \)-integral is the same as the standard Lebesgue integral. The Taylor monomials can be written as

and the fractional Taylor monomial is defined as

while \(h_{-\alpha } (t)=\frac{t^{-\alpha }}{\Gamma (1-\alpha )}\) is the Dirac delta function on \(\mathbb {T}=\mathbb {R}.\) Further, \( e_{\lambda }(t, a)=e^{\lambda (t-a)}, \) where “e” is the ordinary exponential function.

Discrete calculus is obtained by choosing \(\mathbb {T}=\mathbb {Z}.\) We have \( \rho (t) = t-1, \nu (t) = 1,\) so every point is isolated. The nabla derivative corresponds to the backward (left) difference operator, \( f^{\nabla }(t) = f (t)- f (t -1) = \nabla f(t). \) Finally, the \(\nabla \)-integrals correspond to a finite summation

The Taylor monomials can be written as

where \(t^{\overline{n}}=\prod _{j=0}^{n-1}(t+j)\) and the fractional Taylor monomial is defined as

where \( t^{\overline{\alpha }}=\frac{\Gamma (t+\alpha )}{\Gamma (t)}, \) while \(h_{-\alpha } (t)=\frac{t^{\overline{-\alpha }}}{\Gamma (1-\alpha )}\) is the nabla Dirac delta function on \(\mathbb {T}=\mathbb {Z}.\) Also, the nabla exponential function is \( e_{\lambda }(t, a)=(1-\lambda )^{a-t}. \)
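The discrete case can be checked numerically. The following is a minimal sketch, assuming that the nabla Taylor monomial on \(\mathbb {Z}\) with base point 0 is \(h_{n}(t,0)=t^{\overline{n}}/n!\) (consistent with the binomial form of \(h_{k}(t,0)\) used in Section 3) and that the nabla exponential solves \(f^{\nabla }=\lambda f\). The function names are hypothetical and chosen only for illustration.

```python
from math import comb, factorial


def rising(t, n):
    """Rising factorial t^{overline n} = t (t + 1) ... (t + n - 1)."""
    result = 1
    for j in range(n):
        result *= t + j
    return result


def h(n, t):
    """Nabla Taylor monomial on Z with base point 0 (assumed form t^{overline n} / n!)."""
    return rising(t, n) // factorial(n)


def nabla_exp(lam, t, a=0):
    """Nabla exponential on Z as stated in the text: (1 - lam)^(a - t)."""
    return (1.0 - lam) ** (a - t)


def nabla_diff(f, t):
    """Nabla (backward) difference f(t) - f(t - 1)."""
    return f(t) - f(t - 1)


def check_defining_properties(max_t=10, max_n=6, lam=0.4):
    """Return True if the three identities below hold on a small grid."""
    for t in range(1, max_t):
        for n in range(1, max_n):
            # Binomial form: t^{overline n} / n! = C(t + n - 1, n)
            if h(n, t) != comb(t + n - 1, n):
                return False
            # Defining property of Taylor monomials: nabla h_n = h_{n-1}
            if nabla_diff(lambda s: h(n, s), t) != h(n - 1, t):
                return False
        # The nabla exponential satisfies nabla f = lam * f
        lhs = nabla_diff(lambda s: nabla_exp(lam, s), t)
        if abs(lhs - lam * nabla_exp(lam, t)) > 1e-9:
            return False
    return True
```

Calling `check_defining_properties()` exercises Pascal's rule in disguise: the backward difference of \(\binom{t+n-1}{n}\) is \(\binom{t+n-2}{n-1}\), which is exactly \(h_{n-1}(t,0)\).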

Quantum calculus is the result of choosing the time scale

where we have fixed \(q \in (0, 1) \cup (1,\infty ).\) The choice of taking \(q > 1\) or \(0<q < 1\) is an arbitrary matter. One can convert one to another using the transformation \(q \rightarrow q^{-1}.\) Let \( 0<q < 1,\) then for all \(t \in \mathbb {T}, \rho (t) = qt. \) We have \(\nu (0)=0 \) and for \( t > 0,\, \nu (t) = (1-q )t. \) The nabla \(q-\)derivative of a function \( f:\mathbb {T}_{q}\rightarrow \mathbb {R}\) is given by

The nabla q-integral is given by the formula

The q-Taylor monomials can be written as

where \((t-s)^{n}_{q}=\prod _{i=0}^{n-1} (t-q^{i}s),\, [n]_{q }!=[1]_{q } [2]_{q }\cdots [n]_{q }, \) and \( [n]_{q} =\frac{1-q^{n}}{1-q} \) is called a q-real number. When \(\alpha \) is a non-positive integer, the q-Taylor monomials are defined by

where the q-gamma function is defined by

with \( E_{q}(x)=\Pi _{i=1}^{\infty }(1+x(1-q)q^{i-1}), \) where \( E_{q^{-1}}(x)=e_{q}(x)=\Pi _{i=1}^{\infty }(1-x(1-q)q^{i-1})^{-1}.\) Also, \(h_{-\alpha } (t)=\frac{t^{-\alpha }}{\Gamma _{q}(1-\alpha )}\) is the nabla Dirac delta function on \(\mathbb {T}=q ^{\mathbb {Z}}.\) In this work, we introduce a general form of q-exponential function as \( e_{\Theta \lambda }(t,s)=\Pi _{i=0}^{\infty }(1+\Theta \lambda (s)\nu (t)q^{i }). \)

3 Duals of Poisson Process on Time Scales

3.1 Derivation

We aim to create a system that illustrates the discovery of a stochastic process on a time scale using nabla calculus, reflecting a scenario for the Poisson process on \( \mathbb {T}=\mathbb {R}.\) We assume the probability of occurrence of one event in the time interval \( [t, \rho (s )]_{\mathbb {T}} \) is

where \( \Theta \lambda :=-\lambda /(1-\nu (t)\lambda ) \) and \(\lambda > 0.\) Therefore, the probability of no event occurring in the interval can be expressed as:

where \(o(s - t)\) is such that \(\lim _{s \rightarrow t}\dfrac{o(s - t)}{s-t}=0.\) We also assume no events have occurred at \(t = 0\). Let \(X : \mathbb {T} \rightarrow \mathbb {N}_{0}\) be a counting process, where \( \mathbb {N}_{0} \) is the set of non-negative integers and \(p_{k}(t) =\mathbb {P}[X(t) = k]\) is the probability that k events, \(k \in \mathbb {N}_{0},\) have occurred by time \( t \in \mathbb {T}.\) We also suppose \( t, s \in \mathbb {T}\) with \( \rho (t)<s. \) When considering the successive intervals \( [0, \rho (t))_{\mathbb {T}}\) and \( [\rho (t),s)_{\mathbb {T}},\) the following system of equations is established:

with initial conditions \( p_{0}(0) = 1 \) and \( p_{k}(0) = 0\) for \( k \ne 0. \) Therefore, we let \( p_{k}(0) = \delta _{k,0},\) where \(\delta _{k,a}\) is the Kronecker delta function, equal to 1 when \(k= a\) and 0 when \(k\ne a.\) Also, we let s go to t and first consider the \( p_{0} \) equation. Rearranging the terms and applying the definition of the nabla derivative, we get

Applying the initial value \( p_{0}(0)=1\), we get

Now we consider the equation \( p_{1} \). Replacing the solution of the \( p_{0} \) equation yields

which, using the nabla derivative of a function on \( \mathbb {T}, \) yields

Using the variation of constants formula on time scales, Theorem 3.42 from [3], we obtain the solution

Note that here we applied Theorem 3.15 (ii), (v), and (iv) from [3]. Now we consider the \( p_{2} \) equation. Substituting the solution of the \( p_{1} \) equation yields

and applying the nabla derivative of a function on \(\mathbb {T}\) yields

Again, using the variation of constants formula on time scales, we get the solution

In general, for the following general equation

Equations (3.1), (3.4), (3.5), and (3.6) can be summarized by

where \(t\in \mathbb {T}, p_{-1}(t)=0\) and \(\delta (t)\) is the Dirac delta function \(\delta (t):=\frac{t^{-1}}{\Gamma (0)}, t\ge 0\). The general solution by induction is as follows:

As can be seen from the preceding discussion, the positivity of the above expression follows from the factors that appear in the product term and the \((-1)^k\) term. Equation (3.8) can be seen as a product of \((-1)^{2k}\) and factors all of which are positive.

Further, we can verify that (3.8) is a solution of (3.6). We note that, using the nabla product rule, Theorem 3.3 (ii), and Theorem 3.15 (ii), (v), and (iv) from [3]:

which for time scales \( \mathbb {R},\mathbb {Z} \) and \( q^{\mathbb {Z}}\) gives:

This derivation motivates a general definition of Poisson process on time scales as follows:

Definition 3.1

Let \( \mathbb {T} \) be a time scale. We say \( S : \mathbb {T} \rightarrow \mathbb {N}_{0} \) is a \( \mathbb {T}-\)Poisson process with rate \( \lambda > 0 \) if for \( t \in \mathbb {T}\) and \( k \in \mathbb {N}_{0},\)

For each fixed \(t \in \mathbb {T}, \) this generates a distribution for the random variable representing the number of arrivals by time t. Three kinds of time scales are considered: \( \mathbb {R}, \mathbb {Z} \) and \( q^{\mathbb {Z}}. \)

\( S:\mathbb {R} \rightarrow \mathbb {N}_{0} \) is an \( \mathbb {R}-\)Poisson process. Under this assumption, \( \rho ^{i}(0) = 0,\,\forall i \in \mathbb {N},\,\,(\Theta \lambda )(t) =-\lambda ,\, \forall t \in \mathbb {R},\, h_{k}(t,0) = \frac{t^{k}}{k!},\) and \(e_{\Theta \lambda }(t, \rho ^{k}(0))= e^{-\lambda t}. \) These lead to the probability mass function of the Poisson distribution:

\( S:\mathbb {Z} \rightarrow \mathbb {N}_{0}\) is a \(\mathbb {Z}\)-Poisson process. It follows that \( \rho ^{i}(0) = -i,\,\forall i\in \mathbb {N},\,\,\,(\Theta \lambda )(t)=\frac{-\lambda }{1-\lambda },\, h_{k} (t,0)=\binom{t+k-1}{k}, \) and \(e_{\Theta \lambda }(t, \rho ^{k}(0))= (1+\frac{\lambda }{1-\lambda })^{-t-k}. \) Thus we have

which we recognize as the negative binomial distribution.

If we let \( S:q^{\mathbb {Z}} \rightarrow \mathbb {N}_{0} \) be a \(q^{\mathbb {Z}}\)-Poisson process, then we get \( \rho ^{i}(0) =0\) for all \( i\in \mathbb {N},\,(\Theta \lambda )(t)=\frac{-\lambda }{1-(1-q)t\lambda },\, h_{k} (t,0)=\frac{t^{k}}{[k]_{q}!} \) and \(e_{\Theta \lambda }(t, \rho ^{k}(0))=\prod _{i=1} ^{\infty }(1-\lambda t(1-q)q^{i-1}).\) Thus, we have

which we recognize as the q-Poisson distribution or Euler distribution. For further details about the Euler distribution, see [15].
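As a sanity check on the three cases above, the sketch below assembles each probability mass function from the ingredients listed in the text and verifies numerically that it sums to one. It rests on assumptions: the \(\mathbb {Z}\) case is taken in the standard negative binomial form \(\binom{t+k-1}{k}\lambda ^{k}(1-\lambda )^{t}\), and the \(q^{\mathbb {Z}}\) case in the standard Euler form \(\frac{(\lambda t)^{k}}{[k]_{q}!}\prod _{i\ge 1}(1-\lambda t(1-q)q^{i-1})\), which is what the stated factors multiply out to. The function names, truncation levels and parameter values are hypothetical.

```python
from math import comb, exp, factorial


def pmf_real(k, t, lam):
    """R-Poisson: classical Poisson PMF with mean lam * t."""
    return (lam * t) ** k / factorial(k) * exp(-lam * t)


def pmf_integer(k, t, lam):
    """Z-Poisson (nabla), assumed negative binomial form; needs 0 < lam < 1."""
    # h_k(t, 0) = C(t + k - 1, k) and e_{Theta lam}(t, rho^k(0)) = (1 - lam)^(t + k)
    return comb(t + k - 1, k) * (lam / (1.0 - lam)) ** k * (1.0 - lam) ** (t + k)


def q_factorial(k, q):
    """[k]_q! = [1]_q [2]_q ... [k]_q with [n]_q = (1 - q^n) / (1 - q)."""
    out = 1.0
    for n in range(1, k + 1):
        out *= (1.0 - q ** n) / (1.0 - q)
    return out


def pmf_quantum(k, t, lam, q, terms=2000):
    """q^Z-Poisson (nabla), assumed Euler form; needs lam * t * (1 - q) < 1."""
    # Truncated version of the infinite product prod_{i>=1} (1 - lam t (1-q) q^(i-1))
    product = 1.0
    for i in range(1, terms + 1):
        product *= 1.0 - lam * t * (1.0 - q) * q ** (i - 1)
    return (lam * t) ** k / q_factorial(k, q) * product


def total_mass(pmf, upper, *args):
    """Sum pmf(k, *args) over k = 0, ..., upper - 1."""
    return sum(pmf(k, *args) for k in range(upper))
```

For example, `total_mass(pmf_integer, 400, 3, 0.4)` and `total_mass(pmf_quantum, 200, 1.0, 0.4, 0.5)` should both be close to 1; the second relies on Euler's identity \(\sum _{k}x^{k}/[k]_{q}!=\prod _{i\ge 1}(1-x(1-q)q^{i-1})^{-1}\).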

The Erlang distribution has been generated on an arbitrary time scale equipped with delta calculus in [5], where the waiting times between any number of events in the \( \mathbb {T}\)-Poisson process were examined. Similarly, it can be generated on an arbitrary time scale equipped with nabla calculus. Let \( S : \mathbb {T}\rightarrow \mathbb {N} _{0}\) be a \( \mathbb {T}\)-Poisson process with rate \( \lambda . \) Let \( T_{n} \) be a random variable that denotes the time until the nth event. We have

which implies

and that motivates the following definition.

Definition 3.2

Let \( \mathbb {T} \) be a time scale, \( S:\mathbb {T}\rightarrow \mathbb {N} _{0}\) be a \( \mathbb {T}\)-Poisson Process with rate \( \lambda >0. \) We say \( F (t; n, \lambda ) \) is the \( \mathbb {T}-\)Erlang cumulative distribution function with parameters \( (n, \lambda ) \) provided

From the derivation, it is clear that the \( \mathbb {T}-\)Erlang distribution models the time until the nth event in the \( \mathbb {T}-\)Poisson process. We would like to know the probability that the nth event is in any subset of \( \mathbb {T}.\) To this end, we introduce the \( \mathbb {T}-\)Erlang PDF in the next definition.

Definition 3.3

Let \( \mathbb {T} \) be a time scale, \( S: \mathbb {T}\rightarrow \mathbb {N} _{0}\) be a \( \mathbb {T}-\)Poisson Process with rate \( \lambda > 0. \) We say \( f (t; n, \lambda )\) is the \( \mathbb {T}-\)Erlang PDF with parameters \( (n, \lambda ). \) We can derive it by applying Theorem 3.3 (ii) and Theorem 3.15 (iv), (viii) from [3] as:

Let \( \mathbb {T} \) be a time scale and let T be a \( \mathbb {T}-\)Erlang random variable with parameter \((n,\lambda ).\) By choosing \(\mathbb {T}= \mathbb {R},\) we have

which is recognized as the gamma distribution. If \(\mathbb {T}= \mathbb {Z},\) then we have

which is recognized as the nabla discrete gamma distribution. For further details about the nabla discrete gamma distribution, see [7].

If we choose the time scale \(\mathbb {T}=q^{\mathbb {Z}}\) then we have

which is recognized as q-gamma distribution or q-Erlang distribution of the second kind (see [15]).
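The link between the counting process and the waiting time can be illustrated on \(\mathbb {T}=\mathbb {R}\). The sketch below assumes the standard identity \(P(T_{n}\le t)=1-\sum _{k=0}^{n-1}p_{k}(t)\) for the classical Poisson process and checks, by a central finite difference, that differentiating this CDF recovers the gamma (Erlang) density \(\lambda ^{n}t^{n-1}e^{-\lambda t}/(n-1)!\). All function names and the step size are hypothetical choices for illustration.

```python
from math import exp, factorial


def poisson_pmf(k, t, lam):
    """Classical Poisson PMF p_k(t) on the time scale R."""
    return (lam * t) ** k / factorial(k) * exp(-lam * t)


def erlang_cdf(t, n, lam):
    """P(T_n <= t): the n-th event has occurred by time t iff at least n events occurred."""
    return 1.0 - sum(poisson_pmf(k, t, lam) for k in range(n))


def gamma_pdf(t, n, lam):
    """Erlang (gamma with integer shape n) density."""
    return lam ** n * t ** (n - 1) * exp(-lam * t) / factorial(n - 1)


def central_difference(f, t, step=1e-5):
    """Second-order numerical derivative of f at t."""
    return (f(t + step) - f(t - step)) / (2.0 * step)


def max_pdf_error(n, lam, grid):
    """Largest gap between d/dt erlang_cdf and gamma_pdf over the grid."""
    return max(
        abs(central_difference(lambda s: erlang_cdf(s, n, lam), t) - gamma_pdf(t, n, lam))
        for t in grid
    )
```

With the simulation settings used later in the text (\(n=5\), \(\lambda =0.4\)), `max_pdf_error(5, 0.4, [0.5, 2.0, 10.0])` should be at the level of finite-difference error only.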

Let us consider the \( \mathbb {T}-\)Erlang distribution with parameter \(n=1\). By the above discussion and Eq. (3.2), the PDF of this distribution is given by

Let \( \mathbb {T} \) be a time scale and let T be a \( \mathbb {T}-\)Erlang random variable with parameter \(n=1\) and rate \( \lambda . \) Then we say T is a \( \mathbb {T}- \)exponential random variable with rate \( \lambda . \) By choosing \(\mathbb {T}= \mathbb {R},\) we have

which is recognized as the exponential distribution. Note that in this case \( \rho (t)=t.\) If \(\mathbb {T}= \mathbb {Z},\) then we have

which is recognized as the geometric distribution. If we choose the time scale \(\mathbb {T}=q^{\mathbb {Z}},\) then we have

which is recognized as q-exponential distribution of the second kind (see [15]). We can easily extend the definition of the \( \mathbb {T}\)-gamma distribution with parameters \(( n , \lambda \)) to parameters \( (r, \lambda ) ,\) where \( r>0 \) is not necessarily a positive integer. This can be done by substituting the gamma function as a natural generalization of factorial function in the PDFs.

Remark

There are also delta versions of the equations in Subsection 3.1 that are analogous to the nabla duals of the Poisson process on time scales. The nabla and delta duals of Poisson process on time scales are contained in Table 1.

An alternative way to find \(p_{k}(t)\) uses the probability generating function (PGF), defined as \(\varphi (u,t)=\sum _{k=0}^{\infty }u^{k}p_{k}(t)\). A similar approach will be used in the following section to solve the fractional generalized type of Eq. (3.8). Let us consider this scenario. Taking the derivative of the PGF gives

By solving this differential equation, the following result is obtained:

Using the property of the PGF, we have

Similarly, one can obtain the solution (3.8) by induction. Note that on the quantum time scale, the latter formula takes the form

3.2 Simulation study

In this subsection we conduct a simulation study to compare the nabla and delta versions of the observed distributions. We simulate 100 samples of size 500. For the simulated data sets, we estimate the parameters of the distributions from Table 1 and calculate their log-likelihood functions. The parameter estimates are obtained using the maximum likelihood method. Thus, for an observed sample \(\{x_1,\ldots , x_k\}\), the following log-likelihood function is maximized:

where \(\varvec{\theta }\) is the vector of distribution parameters, and \(f(x;\varvec{\theta })\) is the considered probability function. The maximization is carried out numerically, using the programming language R.
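The estimation procedure can be sketched as follows. This is an illustration only and not the authors' R code: it is written in Python, fits just the classical Poisson model, draws samples with Knuth's multiplication method, and maximizes the log-likelihood \(\sum _{i}\log f(x_{i};\mu )\) with a golden-section search. The function names, the seed and the search interval are hypothetical choices.

```python
import random
from math import exp, lgamma, log


def sample_poisson(mu, rng):
    """Draw one Poisson(mu) variate with Knuth's multiplication method."""
    threshold = exp(-mu)
    count, product = 0, rng.random()
    while product > threshold:
        count += 1
        product *= rng.random()
    return count


def poisson_loglik(mu, data):
    """Log-likelihood of an i.i.d. Poisson(mu) sample."""
    return sum(x * log(mu) - mu - lgamma(x + 1) for x in data)


def golden_section_max(f, lo, hi, tol=1e-7):
    """Maximize a unimodal function f on [lo, hi]."""
    ratio = (5 ** 0.5 - 1) / 2
    a, b = lo, hi
    while b - a > tol:
        c = b - ratio * (b - a)
        d = a + ratio * (b - a)
        if f(c) > f(d):
            b = d  # the maximum lies in [a, d]
        else:
            a = c  # the maximum lies in [c, b]
    return (a + b) / 2


def run_study(replicates=100, size=500, mu=0.4, seed=1):
    """Return (numerical MLE, sample mean) for each simulated sample."""
    rng = random.Random(seed)
    results = []
    for _ in range(replicates):
        data = [sample_poisson(mu, rng) for _ in range(size)]
        mu_hat = golden_section_max(lambda m: poisson_loglik(m, data), 1e-6, 5.0)
        results.append((mu_hat, sum(data) / size))
    return results
```

For the Poisson model the maximizer is known in closed form (the sample mean), which makes it a convenient check of the numerical optimizer before it is applied to the time scale distributions, where no closed form is assumed.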

For the group of Poisson probability functions, we simulated the data set using the Poisson distribution \(\mathcal {P}(\mu )\), where we set \(\mu =0.4\). The results for the \({\mathbb {Z}}\)-Poisson and \(q^{\mathbb {Z}}\)-Poisson distributions are given in Table 2.

Further, for the case of the Erlang distributions, we simulated the data set from the Erlang distribution with shape parameter \(n=5\) and rate parameter \(\lambda =0.4\). Table 3 contains the results for the \({\mathbb {Z}}\)-Erlang and \(q^{\mathbb {Z}}\)-Erlang distributions, where the estimated parameters and log-likelihood functions are presented.

Finally, the data set for the family of exponential distributions is generated from \(exp(\lambda )\), where the parameter is set to \(\lambda =0.4\). The estimated parameters and values of the log-likelihood function for \({\mathbb {Z}}\)-exponential and \(q^{\mathbb {Z}}\)-exponential are given in Table 4.

From Table 2, we can see that the \({\mathbb {Z}}\)-Poisson distributions derived from nabla and delta calculus provide very similar results. It seems as if there is some offset in the estimates. But if we take into consideration that the initial derivation starts with rate \(\mu \), where \(\mu =\lambda t\), then we can conclude that the estimated parameters provide estimates for the parameter \(\mu \) equally close to its true value 0.4. Regarding the \({\mathbb {Z}}\)-Poisson distribution, there are no obvious differences between the obtained results. A slightly better log-likelihood value is achieved with the nabla calculus, while the standard errors of the estimates are quite similar.

According to the values of the log-likelihood functions, Table 3 implies that the fit of the Erlang distributions is slightly better with the nabla calculus for \({\mathbb {Z}}\)-Erlang, and with the delta calculus for \(q^{\mathbb {Z}}\)-Erlang. Nevertheless, the estimated parameters are close to the true values in all cases.

Results from Table 4 suggest that the nabla calculus for \({\mathbb {Z}}\)-exponential gives a slightly better fit to the simulated data, while there are no differences for the \(q^{\mathbb {Z}}\)-exponential distribution functions. The estimated parameters are quite close to the true values, with very small standard errors of the estimates.

Finally, we can conclude that the fit looks very similar and equally adequate with both the nabla and delta versions of the observed probability functions.

4 Fractional Duals of Poisson Process on Time Scales

A Poisson process is a stochastic process that represents the number of events occurring in a fixed interval of time or space. It has the property of memorylessness, meaning that the numbers of events in non-overlapping intervals are independent. A fractional Poisson process is a generalization of the Poisson process that allows events to exhibit long-range dependence. The exponential density of the inter-arrival times of the Poisson process is replaced by the corresponding density of the fractional Poisson process, which depends on the (two-parameter) Mittag-Leffler function. For earlier developments on the fractional extension of the Poisson process, see [45,46,47]. This section is devoted to the derivation of fractional Poisson, Erlang and exponential distribution functions, where both \(\nabla \) and \(\Delta \)-calculus are considered.

4.1 Derivation

Substituting the Riemann-Liouville fractional derivative (see Section 2 of this paper) in Equation (3.7), we obtain the fractional generalization of this equation:

where \(\delta (t)\) is the Dirac delta function on the time scale and \( p_{-1}^{\alpha }(t) = 0.\) Note that for \(\alpha =1\), this equation coincides with Equation (3.7). Considering

and applying the definition of the Riemann-Liouville fractional derivative, the Dirac delta function on the time scale can be obtained as

Substituting this Riemann-Liouville representation of \(\delta (t)\) in Eq. (4.1), we have:

To obtain \(p_{k}^{\alpha }(t),\) we use the PGF as follows:

This leads us to the following fractional differential equation

To solve this equation, we take the Laplace transform of both sides and obtain

By considering Lemma 22 and Definition 16 from [13],

hence,

On the other hand, the Laplace transform of the Mittag-Leffler function is obtained as

where the uniqueness of the Laplace transform leads to

Using the property of the PGF, we have

where \(\texttt{E}_{\alpha }^{(k)}((\Theta \lambda )(t))\) is the kth derivative of the Mittag-Leffler function,

Thus, we obtain the probability mass function (PMF) of the fractional Poisson process as:

This derivation motivates a general definition of the fractional Poisson process on time scales as follows:

Definition 4.1

Let \( \mathbb {T} \) be a time scale. We say \( S^{\alpha } : \mathbb {T} \rightarrow \mathbb {N}_{0} \) is a fractional \( \mathbb {T}-\)Poisson process with rate \( \lambda > 0 \) if for \( t \in \mathbb {T}\) and \( k \in \mathbb {N}_{0},\)

By fixing \( t \in \mathbb {T}, \) a distribution of the number of arrivals at t is generated. Three kinds of time scales are considered: \( \mathbb {R}, \mathbb {Z} \) and \( q^{\mathbb {Z}}\).

Let \( S^{\alpha }:\mathbb {R} \rightarrow \mathbb {N}_{0} \) be a fractional \( \mathbb {R}-\)Poisson process. Considering \((\Theta \lambda )(t) =-\lambda \) for all \( t \in \mathbb {R},\) and \( h_{k}(t) = \frac{t^{k}}{k!}\), we have

which is recognized as the fractional Poisson distribution, where \(E^{(k)}_{\alpha }(.)\) is the kth derivative of the Mittag-Leffler function on \(\mathbb {T}=\mathbb {R}.\) Note that \(E_{\alpha }(z)=E_{\alpha ,1}(z)\) is a special case of the ordinary Mittag-Leffler function defined by \(E_{\alpha ,\beta }(z)=\sum _{n=0}^{\infty }\frac{z^{n\alpha }}{\Gamma (n \alpha +\beta )}.\)
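To make the real-line case concrete, here is a minimal sketch that evaluates a fractional Poisson PMF by direct series summation. It assumes that the \(\mathbb {R}\) case coincides with the classical fractional Poisson form \(p_{k}(t)=\frac{z^{k}}{k!}\sum _{j\ge 0}\frac{(j+k)!}{j!}\frac{(-z)^{j}}{\Gamma (\alpha (j+k)+1)}\) with \(z=\lambda t^{\alpha }\), where the sum is the kth derivative of the standard one-parameter Mittag-Leffler series evaluated at \(-z\). The function name and truncation level are hypothetical, and the alternating series is only numerically reliable for moderate z.

```python
from math import exp, factorial, lgamma, log


def fractional_poisson_pmf(k, t, lam, alpha, terms=300):
    """Assumed classical fractional Poisson PMF on R, for 0 < alpha <= 1."""
    z = lam * t ** alpha
    inner = 0.0
    for j in range(terms):
        # log of (j + k)! / j! * z^j / Gamma(alpha (j + k) + 1); the sign is (-1)^j
        log_mag = (
            lgamma(j + k + 1) - lgamma(j + 1)
            + j * log(z)
            - lgamma(alpha * (j + k) + 1)
        )
        inner += (-1) ** j * exp(log_mag)
    return z ** k / factorial(k) * inner


def total_fractional_mass(t, lam, alpha, upper=80):
    """Sum of the PMF over k = 0, ..., upper - 1."""
    return sum(fractional_poisson_pmf(k, t, lam, alpha) for k in range(upper))
```

Two checks follow from the series itself: for \(\alpha =1\) the inner sum collapses to \(e^{-z}\), recovering the ordinary Poisson PMF, and for any \(0<\alpha \le 1\) the total mass is 1 because the double series telescopes to its zeroth term. With the parameter values used in the simulation study (\(\lambda =0.4\), \(t=0.9\), \(\alpha =0.3\)), `total_fractional_mass(0.9, 0.4, 0.3)` should be close to 1.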

Now, let \( S^{\alpha }:\mathbb {Z} \rightarrow \mathbb {N}_{0}\) be a fractional \(\mathbb {Z}\)-Poisson process. In this case \((\Theta \lambda )(t)=\frac{-\lambda }{1-\lambda }:=-\mu ,\, h_{k} (t)=\frac{t^{\overline{k}}}{\Gamma (k+1)}, \) and thus we have

which is recognized as the fractional nabla Poisson distribution and \(E^{(k)}_{\overline{\alpha }}(. , .)\) is the kth derivative of the nabla Mittag-Leffler function [8, 14]. Based on Eq. (2.1), one can easily see that \(E_{\overline{\alpha }}(z)= E_{\overline{\alpha ,1}}(z)\) is a special case of the nabla Mittag-Leffler function defined by \( E_{\overline{\alpha ,\beta }}(\lambda ,z)=\sum _{n=0}^{\infty }\frac{\lambda ^{n} z^{\overline{n\alpha +\beta -1}}}{\Gamma (\alpha n+\beta )}.\) Also, \( E_{\underline{\alpha ,\beta }} (\lambda ,z) \) is the delta Mittag-Leffler function [8].
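The nabla Mittag-Leffler series can be evaluated term by term. The sketch below takes the series as stated in the text, reading the gamma-function argument with the summation index n, and uses \(z^{\overline{\gamma }}=\Gamma (z+\gamma )/\Gamma (z)\) for the generalized rising power. The function name and truncation level are hypothetical, and the sketch assumes \(\vert \lambda \vert <1\) and \(z>0\) so that the series converges and all gamma arguments are positive.

```python
from math import exp, lgamma


def nabla_mittag_leffler(lam, z, alpha, beta=1.0, terms=400):
    """Truncated nabla Mittag-Leffler series on Z (assumes |lam| < 1, z > 0)."""
    total = 0.0
    for n in range(terms):
        # z^{overline{n alpha + beta - 1}} / Gamma(alpha n + beta), computed in logs
        log_ratio = (
            lgamma(z + n * alpha + beta - 1.0)
            - lgamma(z)
            - lgamma(alpha * n + beta)
        )
        total += lam ** n * exp(log_ratio)
    return total
```

A useful consistency check: for \(\alpha =\beta =1\) the series becomes \(\sum _{n}\lambda ^{n}z^{\overline{n}}/n!=(1-\lambda )^{-z}\), which is the nabla exponential \(e_{\lambda }(z,0)\) on \(\mathbb {Z}\) given in Section 2. For instance, `nabla_mittag_leffler(0.4, 3, 1.0)` should agree with `0.6 ** -3`.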

If \( S^{\alpha }:q^{\mathbb {Z}} \rightarrow \mathbb {N}_{0} \) is a fractional \(q^{\mathbb {Z}}\)-Poisson process, then we get \((\Theta \lambda )(t)=\frac{-\lambda }{1-(1-q)t\lambda }:=-\nu (t),\, h_{k} (t,0)=\frac{t^{k}}{[k]_{q}!}\) and thus we have

which is recognized as the fractional nabla \(q-\)Poisson distribution and \(_{q}E^{(k)}_{\alpha }(. )\) is the kth derivative of the nabla quantum Mittag-Leffler function [12]. Note that based on Equation (2.1), we can easily find that \(_{q}E_{\alpha }(\lambda , z)={}_{q}E_{\alpha ,1}(\lambda , z)\) is a special case of the quantum Mittag-Leffler function defined by \(_{q}E_{\alpha ,\beta }(\lambda , z)=\sum _{n=0}^{\infty }\frac{\lambda ^{n}z^{n\alpha }}{\Gamma _{q}(\alpha n+\beta )}.\) Also, \( _{q^{-1}}E_{\alpha ,\beta } (\lambda , z)= {}_{q}e_{\alpha ,\beta } (\lambda , z)\).

Remark

There are delta forms of the equations in Subsection 4.1 that are analogous to the nabla duals of fractional Poisson processes on time scales with nabla calculus. The nabla and delta duals of the fractional Poisson process on time scales are contained in Table 5.

Similarly, we can define a fractional type of \( \mathbb {T}-\)Erlang distribution. Let \( \mathbb {T} \) be a time scale, \( S^{\alpha }:\mathbb {T}\rightarrow \mathbb {N} _{0}\) be a fractional \( \mathbb {T}\)-Poisson Process with rate \( \lambda >0. \) We say \( F^{\alpha } (t; n, \lambda ) \) is the fractional \( \mathbb {T}-\)Erlang cumulative distribution function with shape parameter n and rate \(\lambda \) provided

with \( f (t; n, \lambda )\) as the fractional \( \mathbb {T}-\)Erlang PDF with shape parameter n and rate \(\lambda \). Hence,

When \(\mathbb {T}= \mathbb {R}\) or \(\mathbb {T}= \mathbb {Z}, \) the term \((\Theta \lambda )(t)\) is constant and the expression simplifies. Then, by choosing \(\mathbb {T}= \mathbb {R}\), we have

or

which is recognized as the fractional gamma distribution. In the same way, if \(\mathbb {T}= \mathbb {Z}\), we have

which is recognized as the nabla fractional gamma distribution. If we choose the time scale \(\mathbb {T}=q^{\mathbb {Z}}\), we have

which is recognized as the fractional q-gamma distribution or fractional q-Erlang distribution of the second kind.

Similarly, if we consider the fractional \( \mathbb {T}-\)Erlang distribution with shape parameter \( n=1,\) we get a fractional \( \mathbb {T}- \)exponential random variable with rate \( \lambda . \) The PDFs of the fractional \( \mathbb {T}- \)exponential random variable are given in Table 5.

Remark

There are alternative delta kinds of fractional Poisson processes on time scales, which are comparable to their nabla duals. Both the nabla and delta duals of the fractional Poisson process on time scales are contained in Table 5.

4.2 Simulation study

As in Subsection 3.2, we compare the behaviour of the discussed distribution functions on simulated data sets. The distribution functions that we observe here are summarized in Table 5. The data sets contain 100 samples of size 500, generated from the appropriate distributions. As before, we employ the maximum likelihood method for the parameter estimation, and the maximization is carried out numerically.

For the group of F. Poisson distribution functions, the simulated data set is obtained with the \({\mathbb {R}}\)-F. Poisson PMF, where the parameter values are set to \(\lambda =0.4\), \(t=0.9\) and \(\alpha =0.3\). The estimated parameters, together with their standard deviations and the log-likelihood values, for the \({\mathbb {Z}}\)-F. Poisson and \(q^{\mathbb {Z}}\)-F. Poisson distributions are given in Table 6. From the presented results we can conclude that the \(\nabla \)- and \(\Delta \)-calculus versions of the \(\mathbb {Z}\)-F. Poisson provide quite similar results in modeling the observed data set. The values of the log-likelihood function are almost the same and the deviations of the estimates from the true values are very similar. A slightly different conclusion can be drawn for the \(q^{\mathbb {Z}}\)-F. Poisson, where the log-likelihood of the \(\nabla \) function is larger.

Further, the data set for the F. Erlang group is simulated using the \({\mathbb {R}}\)-F. Erlang distribution function from Table 5, where the parameters take the values \(\lambda =0.4\), \(n=2\) and \(\alpha =0.3\). The results are summarized in Table 7 for the \({\mathbb {Z}}\)-F. Erlang and \(q^{\mathbb {Z}}\)-F. Erlang distributions. From Table 7 we can draw a similar conclusion for both \(\nabla \)- and \(\Delta \)-calculus. While the \(\mathbb {Z}\)-F. Erlang provides slightly better results with \(\Delta \)-calculus, the \(q^\mathbb {Z}\)-F. Erlang appears to be better with \(\nabla \)-calculus.

Finally, the \({\mathbb {R}}\)-F. exponential distribution was used to simulate the data set, where the parameter values are \(\lambda =0.4\) and \(\alpha =0.3\). Table 8 contains the results for the \({\mathbb {Z}}\)-F. exponential and \(q^{\mathbb {Z}}\)-F. exponential distributions. The presented results suggest that the \(\nabla \)-calculus provides the higher values of the log-likelihood function. While the estimated values are close to the true ones in both cases, the estimates obtained with \(\nabla \)-calculus are closer.
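The estimation workflow used in this subsection (simulate 100 samples of size 500, then maximize the log-likelihood numerically for each sample) can be sketched as follows. This is a minimal illustration only: it assumes the ordinary Poisson PMF with mean \(\lambda t\) as a stand-in for the fractional PMFs of Table 5, reuses \(\lambda =0.4\) and \(t=0.9\) from the F. Poisson group, and uses a golden-section search as one possible numerical maximizer. All function names are hypothetical.

```python
import math
import random
import statistics


def sample_poisson(mean, rng):
    """Knuth's multiplication method for one Poisson variate (small means)."""
    limit = math.exp(-mean)
    count, product = 0, rng.random()
    while product > limit:
        count += 1
        product *= rng.random()
    return count


def log_likelihood(mean, data):
    """Log-likelihood of an i.i.d. Poisson sample (stand-in model)."""
    const = sum(math.lgamma(k + 1) for k in data)
    return math.log(mean) * sum(data) - len(data) * mean - const


def fit_mean(data, lo=1e-6, hi=20.0, tol=1e-7):
    """Golden-section search for the maximizer of the log-likelihood."""
    inv_phi = (math.sqrt(5) - 1) / 2
    a, b = lo, hi
    while b - a > tol:
        c = b - inv_phi * (b - a)
        d = a + inv_phi * (b - a)
        if log_likelihood(c, data) > log_likelihood(d, data):
            b = d  # the maximum lies in [a, d]
        else:
            a = c  # the maximum lies in [c, b]
    return (a + b) / 2


def simulate(lam=0.4, t=0.9, n_samples=100, size=500, seed=1):
    """Return the mean and standard deviation of the rate estimates."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(n_samples):
        data = [sample_poisson(lam * t, rng) for _ in range(size)]
        estimates.append(fit_mean(data) / t)  # recover the rate from the mean
    return statistics.mean(estimates), statistics.stdev(estimates)


if __name__ == "__main__":
    print(simulate())
```

For the distributions in Table 5, only `sample_poisson` and `log_likelihood` would need to be replaced. A multi-parameter model would also need a multivariate optimizer instead of the one-dimensional search used here.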

5 The S-box design

Diffusion and confusion are fundamental concepts in cryptography [19]. The Data Encryption Standard (DES) [20] and the Advanced Encryption Standard (AES) [21] are traditional cryptographic standards that use S-boxes for the confusion process. Making the S-box formation process more complex, with newer and more diverse methods, makes it harder to reverse-engineer. In simpler terms, the S-box incorporates random numbers, which can be generated in two primary ways. True Random Number Generators (TRNGs) generate numbers from physical noise, so that they are statistically random and entirely unpredictable. Pseudo Random Number Generators (PRNGs), on the other hand, produce numbers that seem statistically random but are actually predictable. For PRNGs, recent advancements have focused on increasing the key space of chaotic maps and enhancing their dynamic complexity [22,23,24,25,26,27,28,29,30,31,32]. While this aspect is crucial for combating side-channel attacks [33], challenges arise in implementing chaos-based encryption [34]. Some approaches involve solving the chaotic cyclic problem using stochastic models [35]. Combining both methods appears to enhance performance in certain scenarios.

Chaotic S-boxes exhibit a high maximum differential approximation probability, as assessed through the Difference Distribution Table (DDT) for differential cryptanalysis. Reference [36] describes a systematic approach for designing chaotic S-boxes, leveraging the DDT, suitable for integration into multimedia encryption algorithms. The design process incorporates the DDT to enhance the differential approximation probability. This reference reports a nonlinearity average of 104, while our method for producing S-boxes achieves a higher average nonlinearity. Reference [37] introduces an innovative approach for constructing an S-box or Boolean function for block ciphers. This method uses a Gaussian distribution and a linear fractional transformation. This reference reports an average Strict Avalanche Criterion (SAC) value of 0.503662, while our S-box production method achieves an average SAC value closer to 0.5. Reference [31] introduces a novel approach to constructing a robust initial S-box based on the chaotic Rabinovich–Fabrikant fractional order (FO) system. The method uses numerical results from the FO chaotic Rabinovich–Fabrikant system with specific parameters, computed using the four-step Runge–Kutta method or the Adams–Bashforth–Moulton method. Additionally, a new key-based permutation technique is proposed to improve the initial S-box's functionality and create the final S-box. This reference evaluates the performance of both the initial and final S-boxes. While it enhances the nonlinearity of the final S-box, it worsens the results for SAC, BIC-nonlinearity, DP, and LP. In comparison, the S-box production method proposed in this paper appears to offer better results for non-linear SAC and BIC-nonlinearity. Reference [32] generates S-boxes with high nonlinearity using cellular automata logic and a chaotic tent map initialization method. In comparison, the proposed S-box has better SAC and BIC-nonlinearity.

Because of the randomly distributed noise, the main attention here is on TRNGs that use an innovative approach to enhance randomness and reduce area utilization. This approach entails generating random values from the PDFs of fractional models, which are more adaptable stochastic models. This method enables the creation of diverse types of S-boxes. The dependence of the supports of fractional distributions on their parameters renders them highly flexible and valuable for various applications, including S-box creation, where modeling plays a crucial role. The authors of [38] applied this fact to introduce a powerful approach for constructing S-boxes using fractional and non-fractional stochastic models, and the resulting S-box then served as a foundation for highly secure image encryption. Basically, a PDF offers greater flexibility than a pure linear transformation or a random variable, as it encompasses its associated random variable and parameters. Therefore, we generate random values from the PDF. Originally, this task was completed using Mathematica 11.0.1, and all random values were newly generated with this software. The proposed algorithm was implemented on Windows 10 Pro, 22H2 version, with 2.20 GHz Gen Intel core and 2.00 GB RAM. In reference [38], normal and fractional stochastic models were used to construct S-boxes. In comparison, the models introduced in this paper exhibited superior performance, likely attributable to the generation of higher-quality random numbers. As the creation of S-boxes remains an ongoing challenge [39], this paper represents a new endeavor to explore additional avenues for producing enhanced S-boxes. Indeed, generating better random numbers to enhance cryptographic performance has long been a challenge for both attackers and security experts. The performance of the S-boxes has been tested with the common criteria: nonlinearity, strict avalanche criterion, bit independence criterion, linear approximation probability, and differential approximation probability.

In this section, we use the stochastic numbers generated with the different PDFs from Table 1 and Table 5 to form new S-boxes. Table 9 provides a list of suggested S-boxes generated from these PDFs. Then, we analyze and compare the obtained values with each other and with the results from other relevant papers. The proposed algorithm is implemented on the Ubuntu 22.04.2 LTS platform with a 12th Gen Intel Core i9-12900K and 125 GB RAM using MATLAB R2021b.

5.1 The stochastic S-box

The substitution box is defined mathematically as:

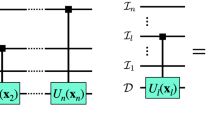

All steps of the stochastic S-box algorithm are:

- Step 1: Enter the parameters of the stochastic model.

- Step 2: Create the probability distribution for the stochastic model.

- Step 3: Generate 1500 random numbers RN(i) from the probability distribution.

- Step 4: Define the numbers A(i) as follows:

$$\begin{aligned} A(i)= \left| RN(i)\times 10^{6} \bmod 256\right| \end{aligned}$$

- Step 5: Create an empty \(16 \times 16\) box.

- Step 6: Select a random number from A(i).

- Step 7: Take this number as an S-box entry:

$$\begin{aligned} S(e)= A(i) \end{aligned}$$

- Step 8: If S(e) already exists in the S-box, return to Step 6.

- Step 9: Put S(e) in the S-box table and continue until the entire box is full.

- Step 10: Calculate the nonlinearity.

- Step 11: Save the nonlinearity and the S-box.
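Steps 3 to 9 above can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the implementation used for the reported results: the callback `draw`, the truncation of \(RN(i)\times 10^{6}\) to an integer before the modulo, and the redrawing of a fresh batch when the 1500 values do not cover every byte are all assumptions, and an ordinary exponential distribution stands in for the PDFs of Tables 1 and 5.

```python
import random


def candidate_values(samples):
    """Step 4: map each real sample RN(i) to A(i) in 0..255.

    Truncating RN(i) * 10^6 to an integer first is an assumption.
    """
    return [abs(int(rn * 10**6)) % 256 for rn in samples]


def build_sbox(draw, rng, n_samples=1500):
    """Steps 3-9: fill a 16 x 16 box with distinct values chosen from A.

    `draw(rng)` is a hypothetical callback returning one sample from the
    chosen PDF.
    """
    pool = candidate_values([draw(rng) for _ in range(n_samples)])
    available = set(pool)
    sbox, used = [], set()
    while len(sbox) < 256:
        if not available:
            # Assumption: the pool has no unused byte left, so draw a new batch.
            pool = candidate_values([draw(rng) for _ in range(n_samples)])
            available = set(pool) - used
            continue
        value = rng.choice(pool)       # Step 6: select a random A(i)
        if value in used:              # Step 8: already present, select again
            continue
        used.add(value)                # Steps 7 and 9: store S(e) = A(i)
        available.discard(value)
        sbox.append(value)
    return [sbox[row * 16:(row + 1) * 16] for row in range(16)]


if __name__ == "__main__":
    rng = random.Random(2024)
    box = build_sbox(lambda r: r.expovariate(0.4), rng)
    for row in box:
        print(" ".join(f"{v:3d}" for v in row))
```

Steps 10 and 11 would wrap this function in a loop that scores each generated box and keeps the best one. The sketch also assumes the chosen PDF can eventually produce every byte value, since otherwise the loop would not terminate.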

The S-box flowchart is drawn in Figure 1. Tables 10 and 11 show the best S-boxes produced from the stochastic models from Tables 1 and 5, respectively.

5.2 The analysis of S-box

In this subsection, the S-boxes of Table 10 and Table 11 are evaluated using the following criteria:

- Nonlinearity:

The most important property of an S-box is high nonlinearity. Since affine functions are cryptographically weak, the nonlinearity measures how far each Boolean function of the S-box is from the set of affine functions. The nonlinearity can be calculated as

$$\begin{aligned} N = {2^{n - 1}} - \frac{1}{2}\mathop {\max }\limits _{a \in {B^n}} \left| {\sum \limits _{x \in {B^n}} {{{( - 1)}^{f(x) + a.x}}} } \right| , \end{aligned}$$

where \(B=\{0,1\}\), \(f:B^{n} \rightarrow B\), \(a\in {B^n}\) and a.x is the dot product between a and x [40]. The nonlinearities of the eight Boolean functions of the suggested S-box are 107, 108, 105, 102, 105, 105, 105, and 108.

- Strict avalanche criterion (SAC):

The SAC was defined in [41]. It requires that a change in one bit of the Boolean function's input changes half of the output bits. The test is passed when the SAC value is 0.5. The dependence matrix of the suggested S-box is calculated based on this definition and is shown in Table 12.

- Bit independence criterion (BIC):

The output bits independence criterion (BIC) is a desirable feature of any encryption transformation in S-box analysis [41]. In other words, BIC measures the independence of the sets of avalanche vectors [42]. We calculated the BIC-nonlinearity and BIC-SAC based on [41]. These results are given in Tables 13 and 14, respectively.

- Linear approximation probability (LP):

Let a and b be the input and output masks, let x range over all possible inputs, and let \(2^n\) be the number of inputs. The linear approximation probability (LP) [43] can then be defined as:

$$\begin{aligned} LP = \mathop {\max }\limits _{a,b \ne 0} \left| {\frac{{\# \{ x|x.a = f(x).b\} }}{{{2^{n}}}} - 0.5} \right| . \end{aligned}$$

In other words, the LP is the maximum imbalance of the event between input and output bits. If this value is low, the S-box resists linear attacks.

- Differential approximation probability (DP):

To resist differential attacks, an S-box needs a nearly uniform distribution between input differences and output differences. The XOR distribution between the input and output bits of the S-box is calculated based on the method of Biham and Shamir [44]. In other words, DP is:

$$\begin{aligned} DP = \mathop {\max }\limits _{{\Delta _x} \ne 0,{\Delta _y}} \left( \frac{\# \{ x \in X \mid f(x) \oplus f(x \oplus {\Delta _x}) = {\Delta _y}\} }{{2^{n}}} \right) , \end{aligned}$$

where X is the set of all possible input values, and \(2^n\) is the number of its elements.
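The criteria above can be computed directly from an S-box lookup table. The following sketch is one straightforward way to do so and is not taken from the authors' code: the function names are hypothetical, the nonlinearity is evaluated per output bit through a fast Walsh–Hadamard transform, and the LP uses the identity \(\# \{x \mid x.a = f(x).b\}/2^{n} - 0.5 = W(a)/2^{n+1}\), where W is the Walsh spectrum of the Boolean function \(x \mapsto f(x).b\).

```python
def parity(value):
    """Parity of the bits of value, i.e. a dot product over GF(2)."""
    return bin(value).count("1") & 1


def walsh_spectrum(truth):
    """Fast Walsh-Hadamard transform of (-1)^f(x) for a 0/1 truth table."""
    w = [1 - 2 * bit for bit in truth]
    h = 1
    while h < len(w):
        for i in range(0, len(w), 2 * h):
            for j in range(i, i + h):
                w[j], w[j + h] = w[j] + w[j + h], w[j] - w[j + h]
        h *= 2
    return w


def nonlinearities(sbox, n):
    """Nonlinearity N of each of the n output-bit Boolean functions."""
    result = []
    for bit in range(n):
        truth = [(y >> bit) & 1 for y in sbox]
        peak = max(abs(v) for v in walsh_spectrum(truth))
        result.append(2 ** (n - 1) - peak // 2)
    return result


def sac_matrix(sbox, n):
    """Entry (i, j): fraction of inputs where flipping input bit i flips output bit j."""
    size = 1 << n
    return [[sum(((sbox[x] ^ sbox[x ^ (1 << i)]) >> j) & 1 for x in range(size)) / size
             for j in range(n)] for i in range(n)]


def linear_probability(sbox, n):
    """LP: maximum over nonzero masks a, b of |#{x.a = f(x).b} / 2^n - 0.5|."""
    size = 1 << n
    best = 0.0
    for b in range(1, size):
        spectrum = walsh_spectrum([parity(y & b) for y in sbox])
        best = max(best, max(abs(v) for v in spectrum[1:]) / (2 * size))
    return best


def differential_probability(sbox, n):
    """DP: largest entry of the XOR difference table (dx != 0) divided by 2^n."""
    size = 1 << n
    best = 0
    for dx in range(1, size):
        counts = [0] * size
        for x in range(size):
            counts[sbox[x] ^ sbox[x ^ dx]] += 1
        best = max(best, max(counts))
    return best / size
```

A quick sanity check is the identity map `list(range(256))`, which is linear and therefore gives the worst possible values: nonlinearity 0 for every output bit, LP equal to 0.5, and DP equal to 1.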

Table 15 exhibits a differential approach table for the suggested S-box. The maximum of this table represents the DP. Table 16 presents the results of nonlinearity, SAC, BIC, LP and DP for the suggested S-box, together with a comparison with the results from other relevant papers. The stochastic models for each S-box, whose results are summarized in these tables, are given below:

As can be seen in Table 16, the average nonlinearity of the suggested S-box of Table 5 is better than that of the other models, and it is also better than the values stated in [22, 23, 27, 38](case 1), [38](case 2), [31, 36](case 1), and [24]. According to Table 16, the SAC values of the suggested S-box2 of Table 1 and S-box3 of Table 5 are better than those of the other models. They are also better than those of all previous references except [38](case 3) and [36]. The BIC-SAC values of the suggested S-box7, S-box9 and S-box14 of Table 5 are better than those of the other models and of the previously mentioned references. Regarding BIC-nonlinearity, the value of the suggested S-box4 of Table 5 is better than that of the other models and, compared to previous works, better than all the references in the table except [27, 37] and AES. Considering Table 16, the LP values of the suggested S-box3 and S-box4 of Table 1 are better than those of the other models. In addition, they are better than all the references in the table except [36], [37], and [24]. According to the DP values in Table 16, the DP values of the suggested S-box2, S-box5, S-box7, S-box11, S-box12 of Table 1 and the suggested S-box4, S-box5, S-box6, S-box9, S-box11, S-box12 of Table 5 are better than those of all the other models. This value is also better than those in references [22, 23, 27, 31](case 2), [32], and [24]. Additionally, it is equal to the values of the other references except [36, 37] and AES.

6 Conclusion

This work introduces fractional and ordinary extensions of the differential equation that describes the Poisson process on an ordinary time scale. For both the fractional and the ordinary case, the nabla and delta types of calculus on a time scale are considered: (ordinary or fractional) nabla Poisson processes, where the fractional and ordinary differential equations are described using nabla calculus; and (ordinary or fractional) delta Poisson processes, where the fractional and ordinary differential equations are described using delta calculus. The distributions obtained from the ordinary differential equations (delta or nabla types) include some cases of power series distributions such as the Poisson, binomial and negative binomial distributions, as well as gamma (continuous and discrete types) and Euler distributions. The distributions obtained from the fractional differential equations (delta or nabla types) include only the fractional Poisson distribution as a known distribution; that is, most of the obtained distributions are new. For these distributions (ordinary and fractional types), new S-boxes are proposed, and the performance of the S-boxes created in this way is compared.

References

Hilger, S.: Analysis on measure chains-a unified approach to continuous and discrete calculus. Results in Mathematics 18(1–2), 18–56 (1990)

Bohner, M., Peterson, A.: Advances in Dynamic Equations on Time Scales. Birkhauser, Boston (2003)

Bohner, M., Peterson, A.: Dynamic Equations on Time Scales. Birkhauser, Boston (2001)

Williams, P. A.: Unifying fractional calculus with time scales. [Ph.D. thesis]. The University of Melbourne (2012)

Poulsen, D. R., Spivey, M. Z., Marks, R. J.: The Poisson Process and Associated Probability Distributions on Time Scales. System Theory (SSST) 2011 IEEE 43rd Southeastern Symposium, pp. 49-54, 14–16 March 2011 (2011)

Ganji, M., Gharari, F.: Bayesian estimation in delta and nabla discrete fractional Weibull distributions. Journal of Probability and Statistics. Advanced online publication, (2016). https://doi.org/10.1155/2016/1969701

Ganji, M., Gharari, F.: A new method for generating discrete analogues of continuous distributions. Journal of Statistics Theory and Applications 17(1), 39–58 (2018)

Ganji, M., Gharari, F.: The discrete delta and nabla Mittag-Leffler distributions. Communications in Statistics-Theory and Methods 47(18), 4568–4589 (2018)

Ganji, M., Gharari, F.: A new stochastic order based on discrete Laplace transform and some ordering results of order statistics. Communications in Statistics-Theory and Methods 52(6), 1963–1980 (2021)

Gharari, F., Bakouch, H., Karakaya, K.: A pliant model to count data: Nabla Poisson-Lindley distribution with a practical data example. Bulletin of the Iranian Mathematical Society 49, 32 (2023). https://doi.org/10.1007/s41980-023-00773-9

Bakouch, H.S., Gharari, F., Karakaya, K., Akdogan, Y.: Fractional Lindley distribution generated by time scale theory, with application to discrete-time lifetime data. Mathematical Population Studies (2024). https://doi.org/10.1080/08898480.2024.2301865

Abdeljawad, T., Alzabut, J.O.: The \( q-\)fractional analogue of Gronwall-type inequality. Journal of Function Spaces and Applications, Volume 2013, Article ID 543839, 7 pages, (2013). https://doi.org/10.1155/2013/543839

Zhu, J., Wu, L.: Fractional Cauchy problem with caputo Nabla derivative on time scales. Hindawi Publishing Corporation Abstract and Applied Analysis Volume 2015, Article ID 486054, 23 pages (2015). https://doi.org/10.1155/2015/486054

Abdeljawad, T.: On delta and nabla caputo fractional differences and dual identities. Discrete Dynamics in Nature and Society, 2013:12. Article ID 406910 (2013)

Charalambides, C.A.: Discrete q-distributions. John Wiley & Sons, Hoboken, New Jersey (2016)

Mainardi, F., Gorenflo, R., Scalas, E.: A fractional generalization of the Poisson processes. Vietnam J. Math. 32, 53–64 (2004). MR2120631

Mainardi, F., Gorenflo, R., Vivoli, A.: Renewal processes of Mittag-Leffler and Wright type. Fract. Calc. Appl. Anal. 8, 7–38 (2005). MR2179226

Meerschaert, M.M., Nane, E., Vellaisamy, P.: The fractional Poisson process and the inverse stable subordinator. Electron. J. Probab. 16, 1600–1620 (2011). MR2835248 (2012k:60252)

Shannon, C.E.: Communication theory of secrecy systems. The Bell system technical journal 28(4), 656–715 (1949)

National Institute of Standards and Technology: FIPS PUB 46-3: Data Encryption Standard (DES), supersedes FIPS PUB 46-2 (1999)

National Institute of Standards and Technology: Advanced Encryption Standard (AES). Federal Information Processing Standards Publication 197

Khan, M., Shah, T., Mahmood, H., Asif, M., Iqtadar, G.: A novel technique for the construction of strong S-boxes based on chaotic lorenz systems. Nonlinear Dyn. 70, 2303–2311 (2012)

Ozkaynak, F., Yavuz, S.: Designing chaotic S-boxes based on time-delay chaotic system. Nonlinear Dyn. 74, 551–557 (2013)

Hussain, I., Tariq, S., Muhammad, A.: A novel approach for designing substitution-boxes based on nonlinear chaotic algorithm. Nonlinear Dyn. 70, 1791–1794 (2012)

Cavusoglu, U., Kaçar, S., Zengin, A., Pehlivan, I.: A novel hybrid encryption algorithm based on chaos and S-AES algorithm. Nonlinear Dyn. 91, 939–956 (2018)

Lambic, D.: A new discrete-space chaotic map based on the multiplication of integer numbers and its application in S-box design. Nonlinear Dyn. pp. 1-13 (2020)

Hematpour, N., Ahadpour, S., Behnia, S.: Presence of dynamics of quantum dots in the digital signature using DNA alphabet and chaotic S-box. Multimedia Tools and Applications 80(7), 10509–10531 (2021)

Hematpour, N., Ahadpour, S., Sourkhani, I. G., Sani, R. H.: A new steganographic algorithm based on coupled chaotic maps and a new chaotic S-box. Multimedia Tools and Applications, pp. 1-32 (2022)

Hematpour, N., Ahadpour, S.: Execution examination of chaotic S-box dependent on improved PSO algorithm. Neural Computing and Applications 33(10), 5111–5133 (2021)

Hematpour, N., Ahadpour, S., Behnia, S.: A Quantum Dynamical Map in the Creation of Optimized Chaotic S-Boxes. In Chaotic Modeling and Simulation International Conference (pp. 213-227). Springer, Cham (2022)

Ullah, S., Liu, X., Waheed, A., Zhang, S.: An efficient construction of S-box based on the fractional-order Rabinovich-Fabrikant chaotic system. Integration 94, 102099 (2024)

Artuger, F.: A new S-box generator algorithm based on chaos and cellular automata. Signal, Image and Video Processing, pp. 1–10 (2024)

Acikkapi, M.S., Ozkaynak, F., Ozer, A.B.: Side-channel analysis of chaos-based substitution box structures. IEEE Access 7, 79030–79043 (2019)

Akhavan, A., Samsudin, A., Akhshani, A.: Cryptanalysis of “an improvement over an image encryption method based on total shuffling’’. Optics Communications 350, 77–82 (2015)

Fan, C., Ding, Q.: Counteracting the dynamic degradation of high-dimensional digital chaotic systems via a stochastic jump mechanism. Digital Signal Processing 129, 103651 (2022)

Khan, M.A., Ali, A., Jeoti, V., Manzoor, S.: A chaos-based substitution box (S-Box) design with improved differential approximation probability (DP). Iranian Journal of Science and Technology, Transactions of Electrical Engineering 42, 219–238 (2018)

Khan, M.F., Ahmed, A., Saleem, K.: A novel cryptographic substitution box design using Gaussian distribution. IEEE Access 7, 15999–16007 (2019)

Hematpour, N., Gharari, F., Ors, B., Yalcin, M. E.: A novel S-box design based on quantum tent maps and fractional stochastic models with an application in image encryption. Soft Computing, pp. 1-34 (2023)

Waheed, A., Subhan, F., Suud, M.M., Alam, M., Ahmad, S.: An analytical review of current S-box design methodologies, performance evaluation criteria, and major challenges. Multimedia Tools and Applications 82(19), 29689–29712 (2023)

Cusick, T., Stanica, P.: Cryptographic boolean functions and applications. Elsevier, Amsterdam (2017)

Webster, A., Tavares, S.: On the design of S-boxes. Conference on the theory and application of cryptographic techniques, Springer, pp. 523-34 (1985)

Zhang, H., Ma, T., Huang, G., Wang, Z.: Robust global exponential synchronization of uncertain chaotic delayed neural networks via dual-stage impulsive control. IEEE Trans. Syst. Man. Part B Cybern 40, 831–844 (2009)

Matsui, M.: Linear cryptanalysis method for des cipher, Workshop on the theory and application of cryptographic techniques, Springer, Berlin, Heidelberg pp. 386–397. (1993)

Biham, E., Shamir, A.: Differential cryptanalysis of des like cryptosystems. J. Cryptol. 4, 3–72 (1991)

Saichev, A.I., Zaslavsky, G.M.: Fractional kinetic equations: solutions and applications. Chaos. 7, 753–764 (1997)

Repin, O.N., Saichev, A.I.: Fractional Poisson law. Radiophysics and Quantum Electronics 43, 738–741 (2000)

Laskin, N.: Fractional Poisson process. Communications in Nonlinear Science and Numerical Simulation. 8, 201–213 (2003)

Miller, K.S., Ross, B.: An Introduction to the Fractional Calculus and Fractional Differential Equations. John Wiley, New York (1993)

Butzer, P.L., Westphal, U.: An introduction to fractional calculus. In: Applications of Fractional Calculus in Physics. World Scientific, Singapore (2000)

Ethics declarations

Conflict of Interest

The authors declare that they have no conflict of interest.

Additional information

Communicated by Anton Abdulbasah Kamil.

About this article

Cite this article

Gharari, F., Hematpour, N., Bakouch, H.S. et al. Fractional Duals of the Poisson Process on Time Scales with Applications in Cryptography. Bull. Malays. Math. Sci. Soc. 47, 145 (2024). https://doi.org/10.1007/s40840-024-01737-w

DOI: https://doi.org/10.1007/s40840-024-01737-w