Abstract

This paper proposes a novel generalized exponential intuitionistic fuzzy entropy (GIFE) and a novel generalized exponential interval valued intuitionistic fuzzy entropy (GIVIFE) based on the interval area. First, we propose the GIFE and compare it with existing intuitionistic fuzzy entropy (IFE) measures. Second, we define the interval area and new axioms for the interval valued intuitionistic fuzzy entropy (IVIFE). Third, following the newly defined axioms, we use the interval area to construct the GIVIFE. Finally, several examples compare the new generalized entropy measures with existing IFE and IVIFE measures. The two generalized exponential entropy measures distinguish the special cases well. We conclude that they are reasonable and more flexible than the existing entropy measures.


1 Introduction

Entropy is an important concept in the theory of fuzzy sets (FSs), which were proposed by Zadeh [1]. It measures the degree of fuzziness of an FS and is also called an entropy measure. Burillo and Bustince [2] extended entropy from FSs to intuitionistic fuzzy sets (IFSs), giving the intuitionistic fuzzy entropy (IFE). Liu et al. [3] defined the interval valued intuitionistic fuzzy entropy (IVIFE), so that the uncertainty or fuzziness of interval valued intuitionistic fuzzy sets (IVIFSs) can also be measured. Both the IFE and the IVIFE are characterized by axiomatic conditions. We call the axioms for the IFE introduced by Burillo and Bustince [2] the B-B axioms, and those given by Szmidt and Kacprzyk [4] the S-K axioms. Other axiomatic conditions were proposed in [5,6,7,8].

Many studies [9,10,11,12] have addressed fuzzy multi-attribute decision making, in which determining the attribute weights is an important research problem. Although there are many ways to determine weights [13], the entropy weighting method [14] is one of the most commonly used. Entropy has therefore become a hot topic in FS theory, and many researchers have studied the IFE and proposed formulas for it. Liu and Ren [15] gave an IFE based on the cosine function, and about a year later they presented another cosine entropy [16]. Xiong et al. [17] proposed a generalized IFE based on the logarithmic function, in which IFE coefficients appear. Mishra [18] also gave an IFE based on the logarithmic function, but the two measures differ. Joshi and Kumar [19] proposed a generalized entropy measure with parameters; as the parameters change, the measure gains flexibility while remaining consistent. Motivated by the reliability and amount of knowledge introduced by Szmidt and Kacprzyk [20], several IFE measures were constructed [5,6,7,8, 17]. These IFE measures were proved to be well defined, and symmetry is one of their characteristics. An entropy must be constructed according to certain rules, i.e., it must satisfy specific axiomatic conditions. For example, [15,16,17,18] proposed entropy measures according to the S-K axioms, whereas [5,6,7,8, 21] defined entropy measures according to other axioms.

The IVIFE is also a hot topic in FS theory, and many scholars have studied it. Wei et al. [22] extended the existing IFE to an IVIFE based on the averages of the membership, non-membership and hesitancy intervals. Wei and Zhang [23] presented a new IFE measure and a new IVIFE measure based on the cosine function. Building on the IVIFE of Wei et al. [22], Meng and Chen [24] proposed a revised IVIFE measure without the hesitancy degree. Based on the membership, non-membership and hesitancy degrees, Gao et al. [25] gave another IVIFE measure. Zhao and Mao [26] used the logarithmic function to define an IVIFE measure. Chen et al. [27] presented an IVIFE measure based on the cotangent function, exploiting the properties of that function. The exponential function is also commonly used to construct IVIFE measures; for example, Yin et al. [28] introduced an improved IVIFE measure based on it. Zhang et al. [29], by contrast, suggested an IVIFE measure based on the distance between an IVIFS and the fuzziest IVIFS.

Generalized entropy has its own advantages. When the parameters change, the entropy value changes; moreover, for particular parameter values a generalized entropy reduces to another entropy. Because the parameters have practical significance, how they affect the entropy is worth studying. Bhandari and Pal [30] were among the first to propose a generalized entropy. Most of the entropy measures mentioned above are non-generalized, except those in [8, 17, 19]. Joshi and Kumar [19] built their measure on the entropy of a probability distribution, with the parameters entering as exponents. The generalized IFE measure in [8] is based on the logarithmic function. Joshi and Kumar [31, 32] proposed generalized IFE measures using the exponential function, and Mishra and Rani [33, 34] proposed generalized IVIFE measures using the exponential function.

Although many generalized IFE and IVIFE measures have been proposed, some problems remain unsolved. (1) The works [31,32,33,34] did not prove how the parameters affect the entropy. The generalized entropy measures in [8, 17, 31,32,33,34] consider only the effects of fuzziness and intuitionism, not their weights. (2) When the means of the membership and non-membership intervals of one IVIFS equal those of another IVIFS, respectively, some existing IVIFE measures cannot distinguish the two IVIFSs. (3) Some existing entropy measures cannot distinguish IFSs located on the line between \( \langle 0,0 \rangle \) and \( \langle 0.5,0.5 \rangle \). (4) The existing axioms for the IVIFE were simply extended from the axioms for the IFE and do not consider the interval area inherent in IVIFSs. The aim of this paper is to solve these problems.

2 Preliminaries

In this section we review some basic concepts of IFSs and IVIFSs.

An IVIFS [21] \( \widetilde{A} \) in a finite set \( X \) is an object of the form

$$ \widetilde{A} = \{ < x,\mu_{\widetilde{A}} (x),\upsilon_{\widetilde{A}} (x) > \mid x \in X\} , $$

where \( \mu_{\widetilde{A}} (x) \subseteq [0,1] \) and \( \upsilon_{\widetilde{A}} (x) \subseteq [0,1] \) denote the membership and non-membership degrees of \( x \in X \), respectively, subject to \( \sup \mu_{\widetilde{A}} (x) + \sup \upsilon_{\widetilde{A}} (x) \le 1 \). The interval hesitation margin \( \pi_{\widetilde{A}} (x) \) satisfies \( \inf \pi_{\widetilde{A}} (x) = 1 - \sup \mu_{\widetilde{A}} (x) - \sup \upsilon_{\widetilde{A}} (x) \) and \( \sup \pi_{\widetilde{A}} (x) = 1 - \inf \mu_{\widetilde{A}} (x) - \inf \upsilon_{\widetilde{A}} (x) \). If \( \inf \mu_{\widetilde{A}} (x) = \sup \mu_{\widetilde{A}} (x) \) and \( \inf \upsilon_{\widetilde{A}} (x) = \sup \upsilon_{\widetilde{A}} (x) \), the IVIFS \( \widetilde{A} \) reduces to an IFS. For convenience, we write \( \mu_{\widetilde{A}} (x) = [\mu_{\widetilde{A}}^{L} (x),\mu_{\widetilde{A}}^{U} (x)] \), \( \upsilon_{\widetilde{A}} (x) = [\upsilon_{\widetilde{A}}^{L} (x),\upsilon_{\widetilde{A}}^{U} (x)] \) and \( \pi_{\widetilde{A}} (x) = [\pi_{\widetilde{A}}^{L} (x),\pi_{\widetilde{A}}^{U} (x)] \), with \( \mu_{\widetilde{A}}^{U} (x) + \upsilon_{\widetilde{A}}^{U} (x) \le 1 \) for any \( x \in X \). An IVIFS \( \widetilde{A} \) on \( X \) can then be expressed as

$$ \widetilde{A} = \{ < x,[\mu_{\widetilde{A}}^{L} (x),\mu_{\widetilde{A}}^{U} (x)],[\upsilon_{\widetilde{A}}^{L} (x),\upsilon_{\widetilde{A}}^{U} (x)] > \mid x \in X\} . $$

We denote all the IFSs in \( X \) by \( {\text{IFS }}(X) \) and all the IVIFSs in \( X \) by \( {\text{IVIFS }}(X) \).
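To make the representation concrete, here is a minimal Python sketch of a single interval valued intuitionistic fuzzy value. The class name `IVIFV` and its field names are hypothetical, chosen only for illustration. The checks encode the conditions stated above (each interval ordered inside \( [0,1] \), and \( \mu^{U} + \upsilon^{U} \le 1 \)), and the hesitancy interval follows the formulas for \( \inf \pi \) and \( \sup \pi \).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IVIFV:
    """Hypothetical container for one value <[mu_l, mu_u], [nu_l, nu_u]>."""
    mu_l: float
    mu_u: float
    nu_l: float
    nu_u: float

    def __post_init__(self):
        # Each interval must be ordered and lie inside [0, 1].
        for lo, hi in ((self.mu_l, self.mu_u), (self.nu_l, self.nu_u)):
            if not 0.0 <= lo <= hi <= 1.0:
                raise ValueError("need 0 <= lower <= upper <= 1")
        # sup mu + sup nu <= 1 (the tolerance absorbs floating-point rounding).
        if self.mu_u + self.nu_u > 1.0 + 1e-12:
            raise ValueError("need mu_u + nu_u <= 1")

    def hesitancy(self) -> tuple:
        """Hesitancy interval [1 - mu_u - nu_u, 1 - mu_l - nu_l]."""
        return (1.0 - self.mu_u - self.nu_u, 1.0 - self.mu_l - self.nu_l)

    def is_ifs(self) -> bool:
        """True when both intervals are single points, i.e. the value is an IFS value."""
        return self.mu_l == self.mu_u and self.nu_l == self.nu_u


a = IVIFV(0.4, 0.6, 0.1, 0.3)
print(a.hesitancy(), a.is_ifs())
```

Making the class frozen keeps a value immutable once validated, which matches how the sets are used in the examples.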

For any two IVIFSs \( \widetilde{A} \) and \( \widetilde{B} \) over the same finite set \( X \), the relations and the operations of \( \widetilde{A} \) and \( \widetilde{B} \) are given as follows:

1. \( \widetilde{A} \subseteq \widetilde{B} \) if and only if \( \mu_{\widetilde{A}}^{L} (x) \le \mu_{\widetilde{B}}^{L} (x) \), \( \mu_{\widetilde{A}}^{U} (x) \le \mu_{\widetilde{B}}^{U} (x) \) and \( \upsilon_{\widetilde{A}}^{L} (x) \ge \upsilon_{\widetilde{B}}^{L} (x) \), \( \upsilon_{\widetilde{A}}^{U} (x) \ge \upsilon_{\widetilde{B}}^{U} (x) \);

2. \( \widetilde{A} = \widetilde{B} \) if and only if \( \widetilde{A} \subseteq \widetilde{B} \) and \( \widetilde{A} \supseteq \widetilde{B} \);

3. The complement of \( \widetilde{A} \) is \( \widetilde{A}^{C} = \{ < x,[\upsilon_{\widetilde{A}}^{L} (x),\upsilon_{\widetilde{A}}^{U} (x)],[\mu_{\widetilde{A}}^{L} (x),\mu_{\widetilde{A}}^{U} (x)] > \mid x \in X\} \);

4. The triplet \( <\mu_{\widetilde{A}} (x),\upsilon_{\widetilde{A}} (x),\pi_{\widetilde{A}} (x) > \) is called an interval valued intuitionistic fuzzy value.
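The relations and operations in items 1 to 3 can be sketched in Python as follows. The `IVIFV` tuple and the function names are illustrative assumptions, not notation from the paper; each function mirrors one numbered item.

```python
from typing import NamedTuple


class IVIFV(NamedTuple):
    """Hypothetical container for <[mu_l, mu_u], [nu_l, nu_u]>."""
    mu_l: float
    mu_u: float
    nu_l: float
    nu_u: float


def is_subset(a: IVIFV, b: IVIFV) -> bool:
    """Item 1: A is contained in B iff A's membership bounds are <= B's
    and A's non-membership bounds are >= B's."""
    return (a.mu_l <= b.mu_l and a.mu_u <= b.mu_u
            and a.nu_l >= b.nu_l and a.nu_u >= b.nu_u)


def is_equal(a: IVIFV, b: IVIFV) -> bool:
    """Item 2: A = B iff each contains the other."""
    return is_subset(a, b) and is_subset(b, a)


def complement(a: IVIFV) -> IVIFV:
    """Item 3: swap the membership and non-membership intervals."""
    return IVIFV(a.nu_l, a.nu_u, a.mu_l, a.mu_u)


a = IVIFV(0.4, 0.6, 0.1, 0.3)
b = IVIFV(0.45, 0.65, 0.05, 0.25)
print(is_subset(a, b), is_subset(b, a), complement(a))
```

Because the complement only swaps the two intervals, applying it twice returns the original value.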

The normalized Hamming distance between two IVIFSs \( \widetilde{A} = \{ < x,[\mu_{\widetilde{A}}^{L} (x),\mu_{\widetilde{A}}^{U} (x)],[\upsilon_{\widetilde{A}}^{L} (x),\upsilon_{\widetilde{A}}^{U} (x)] > \mid x \in X\} \) and \( \widetilde{B} = \{ < x,[\mu_{\widetilde{B}}^{L} (x),\mu_{\widetilde{B}}^{U} (x)],[\upsilon_{\widetilde{B}}^{L} (x),\upsilon_{\widetilde{B}}^{U} (x)] > \mid x \in X\} \) that each contain only one element is defined as follows [21]:

3 The New Generalized Exponential IFE

3.1 Some Existing IFE Measures and Disadvantages

To measure the fuzziness of IFSs, many entropy measures have been defined. Gao et al. [5] proposed \( E_{\text{GMM}} (A) \), Zhu and Li [6] gave \( E_{\text{ZLI}} (A) \), and Guo and Song [21] defined \( E_{\text{GKH}} (A) \). However, some of them have disadvantages, as the following examples show.

Example 1

\( A_{1} = \langle 0.6,0.1 \rangle \), \( A_{2} = \langle 0.6,0.2 \rangle \) and \( A_{3} = \langle 0.6,0.3 \rangle\) are three IFSs.

Using Eq. (2), we have

Using Eq. (3), we have

Using Eq. (4), we have

Although \( E_{\text{GMM}} (A) \), \( E_{\text{GKH}} (A) \) and \( E_{\text{ZLI}} (A) \) are all defined from the perspective of reliability and amount of knowledge, they give different entropy values and orderings. These measures are well defined, but some key factors are not taken into account.

Liu and Ren [16] constructed \( E_{\text{LMF}} (A) \). Xiong et al. [17] proposed \( E_{\text{XSH}} (A) \).

Example 2

\( A_{4} = \langle 0.2,0.2 \rangle \) and \( A_{5} = \langle 0.4,0.4 \rangle \) are two IFSs.

Using Eq. (5), we have

Using Eq. (6), we have

\( E_{\text{LMF}} (A) \) and \( E_{\text{XSH}} (A) \) cannot distinguish \( A_{4} \) and \( A_{5} \).

3.2 The New Generalized Exponential IFE

Szmidt and Kacprzyk [20] defined the amount of knowledge as \( \mu_{A} (x) + \upsilon_{A} (x) \) and reliability as \( \mu_{A} (x) - \upsilon_{A} (x) \). Mao and Yao [8] call \( \mu_{A} (x) + \upsilon_{A} (x) \) the intuitionistic factor and \( \mu_{A} (x) - \upsilon_{A} (x) \) the fuzzy factor. Motivated by [8, 20], we construct a new generalized IFE from reliability and amount of knowledge to overcome the shortcomings of the IFE measures above.

Definition 1

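The new generalized exponential entropy for an IFS \( A = \{ <x,\mu_{A} (x),\upsilon_{A} (x)> \mid x \in X\} \) with a single element is given by

$$ E(A) = \alpha (1 - |\mu_{A} (x) - \upsilon_{A} (x)|)^{p} + \beta (1 - \mu_{A} (x) - \upsilon_{A} (x))^{q} e^{1 - \alpha (1 - |\mu_{A} (x) - \upsilon_{A} (x)|)^{p} - \beta (1 - \mu_{A} (x) - \upsilon_{A} (x))^{q}} \qquad (7) $$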

where \( \alpha , \beta \) are weight coefficients with \( 0 < \alpha ,\beta < 1 \) and \( \alpha + \beta = 1 \), and \( p, q > 0 \) are IFE coefficients.

\( p \) and \( q \) also represent the effects of lack of reliability and lack of knowledge on the IFE, respectively: \( 1 - |\mu_{A} (x) - \upsilon_{A} (x)| \) is the lack of reliability and \( \pi_{A} (x) = 1 - \mu_{A} (x) - \upsilon_{A} (x) \) is the lack of knowledge.
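A minimal Python sketch of Eq. (7) for a single-element IFS, written from the formula used in the proofs of (P1) and (P2). The function name `gife` is hypothetical. β is derived as \( 1 - \alpha \) to enforce \( \alpha + \beta = 1 \), and the lack of knowledge is clamped at 0 only to absorb floating-point rounding.

```python
import math


def gife(mu: float, nu: float, alpha: float = 0.5, p: float = 1.0, q: float = 1.0) -> float:
    """Eq. (7) for a single-element IFS <mu, nu>; beta is taken as 1 - alpha."""
    if not (0.0 < alpha < 1.0 and p > 0 and q > 0):
        raise ValueError("require 0 < alpha < 1 and p, q > 0")
    if mu < 0 or nu < 0 or mu + nu > 1.0 + 1e-12:
        raise ValueError("require mu, nu >= 0 and mu + nu <= 1")
    beta = 1.0 - alpha
    lack_of_reliability = 1.0 - abs(mu - nu)      # 1 - |mu - nu|
    lack_of_knowledge = max(0.0, 1.0 - mu - nu)   # pi_A = 1 - mu - nu, clamped against rounding
    u = alpha * lack_of_reliability ** p
    v = beta * lack_of_knowledge ** q
    return u + v * math.exp(1.0 - u - v)


print(gife(1.0, 0.0))  # crisp set, (P1): 0.0
print(gife(0.0, 0.0))  # fuzziest IFS, (P2): 1.0
```

Passing only α keeps the weight constraint implicit; a caller cannot accidentally supply weights that do not sum to 1.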

Theorem 1

Let \( A \in IFS(X) \). The real function \( E(A) \in [0,1] \) defined by Eq. (7) is a generalized exponential entropy for IFSs.

We prove that Eq. (7) satisfies the following axioms for the IFE introduced in [8], which take both fuzziness and intuitionism into account.

Definition 2

For any \( A \in IFS(X) \), a real function \( E(A) = f(\pi_{A} ,\Delta_{A} ):IFS(X) \to [0,1] \) is called an entropy for IFSs if it satisfies the following axioms:

(P1) \( E(A) = 0 \Leftrightarrow A \) is a crisp set, i.e., \( \mu_{A} (x) = 0 \), \( \upsilon_{A} (x) = 1 \) or \( \mu_{A} (x) = 1 \), \( \upsilon_{A} (x) = 0 \);

(P2) \( E(A) = 1 \Leftrightarrow A = \{ < x,0,0 > \mid x \in X\} \);

(P3) \( E(A) = E(A^{C} ) \);

(P4) \( E(A) = f(\pi_{A} ,\Delta_{A} ) \) is a real continuous function that increases with the first variable \( \pi_{A} = 1 - \mu_{A} (x) - \upsilon_{A} (x) \) and decreases with the second variable \( \Delta_{A} = |\mu_{A} (x) - \upsilon_{A} (x)| \).

Proof

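(P1) For any \( x \in X \), let \( A \) be a crisp set. When \( \mu_{A} (x) = 1 \), \( \upsilon_{A} (x) = 0 \), we have \( E(A) = \alpha (1 - |1 - 0|)^{p} + \beta (1 - 1 - 0)^{q} e^{1 - \alpha (1 - |1 - 0|)^{p} - \beta (1 - 1 - 0)^{q}} = 0 \).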

When \( \mu_{A} (x) = 0 \), \( \upsilon_{A} (x) = 1 \), we have \( E(A) = \alpha (1 - |0 - 1|)^{p} + \beta (1 - 0 - 1)^{q} e^{1 - \alpha (1 - |0 - 1|)^{p} - \beta (1 - 0 - 1)^{q}} = 0 \).

For any \( x \in X \), suppose now that \( E(A) = 0 \). Since \( \alpha \ne 0 \) and \( \beta \ne 0 \), we have \( \alpha (1 - |\mu_{A} (x) - \upsilon_{A} (x)|)^{p} \ge 0 \), \( \beta (1 - \mu_{A} (x) - \upsilon_{A} (x))^{q} \ge 0 \) and \( e^{1 - \alpha (1 - |\mu_{A} (x) - \upsilon_{A} (x)|)^{p} - \beta (1 - \mu_{A} (x) - \upsilon_{A} (x))^{q}} > 0 \). Because Eq. (7) is then a sum of two nonnegative terms, it can only vanish if \( \alpha (1 - |\mu_{A} (x) - \upsilon_{A} (x)|)^{p} = 0 \) and \( \beta (1 - \mu_{A} (x) - \upsilon_{A} (x))^{q} = 0 \), i.e., \( |\mu_{A} (x) - \upsilon_{A} (x)| = 1 \) and \( \mu_{A} (x) + \upsilon_{A} (x) = 1 \). This means \( \mu_{A} (x) = 1 \), \( \upsilon_{A} (x) = 0 \) or \( \mu_{A} (x) = 0 \), \( \upsilon_{A} (x) = 1 \); therefore \( A \) is a crisp set.

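(P2) For any \( x \in X \), let \( \mu_{A} (x) = 0 \) and \( \upsilon_{A} (x) = 0 \); then \( E(A) = \alpha (1 - |0 - 0|)^{p} + \beta (1 - 0 - 0)^{q} e^{1 - \alpha - \beta} = \alpha + \beta = 1 \).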

For any \( x \in X \), suppose now that \( E(A) = 1 \). Since \( 0 < \alpha ,\beta < 1 \) and \( \alpha + \beta = 1 \), we have \( 1 \ge \alpha (1 - |\mu_{A} (x) - \upsilon_{A} (x)|)^{p} \ge 0 \) and \( 1 \ge \beta (1 - \mu_{A} (x) - \upsilon_{A} (x))^{q} \ge 0 \); moreover, these two terms sum to at most \( \alpha + \beta = 1 \), so \( e^{1 - \alpha (1 - |\mu_{A} (x) - \upsilon_{A} (x)|)^{p} - \beta (1 - \mu_{A} (x) - \upsilon_{A} (x))^{q}} \ge 1 \). It can be deduced from Eq. (7) that

i.e.,

Thus, we have

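(P3) This follows directly from the definition of the complement: \( A^{C} \) swaps \( \mu_{A} (x) \) and \( \upsilon_{A} (x) \), which leaves \( |\mu_{A} (x) - \upsilon_{A} (x)| \) and \( 1 - \mu_{A} (x) - \upsilon_{A} (x) \), and hence Eq. (7), unchanged.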

(P4) For any \( x \in X \), let \( E(A) = f(y,z) \), i.e.,

$$ f(y,z) = \alpha (1 - y)^{p} + \beta z^{q} e^{1 - \alpha (1 - y)^{p} - \beta z^{q}} , $$

where \( y = |\mu_{A} (x) - \upsilon_{A} (x)| \ge 0 \) and \( z = 1 - \mu_{A} (x) - \upsilon_{A} (x) \ge 0 \), with \( y \in [0,1] \) and \( z \in [0,1] \). So \( E(A) \ge 0 \). Taking the partial derivative of \( f(y,z) \) with respect to \( y \), we have \( f_{y} (y,z) = - \alpha p(1 - y)^{p - 1} (1 - \beta z^{q} e^{1 - \alpha (1 - y)^{p} - \beta z^{q}} ) \).

Since \( \alpha (1 - y)^{p} \ge 0 \), we have \( e^{1 - \alpha (1 - y)^{p} - \beta z^{q}} \le e^{1 - \beta z^{q}} \) and therefore \( 1 - \beta z^{q} e^{1 - \alpha (1 - y)^{p} - \beta z^{q}} \ge 1 - \beta z^{q} e^{1 - \beta z^{q}} \). Let \( M(\xi ) = 1 - \xi e^{1 - \xi} \) with \( \xi = \beta z^{q} \). Differentiating with respect to \( \xi \) gives \( M'(\xi ) = - (1 - \xi )e^{1 - \xi} \le 0 \) for \( \xi \le 1 \), so \( M \) is decreasing on \( [0,1] \). Because \( 0 \le \beta z^{q} \le 1 \), we obtain \( M(\beta z^{q}) \ge M(1) = 0 \), and hence \( 1 - \beta z^{q} e^{1 - \alpha (1 - y)^{p} - \beta z^{q}} \ge 0 \). Thus

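So \( f(y,z) \) decreases monotonically as \( y \) increases. Taking the partial derivative of \( f(y,z) \) with respect to \( z \), we have \( f_{z} (y,z) = \beta qz^{q - 1} e^{1 - \alpha (1 - y)^{p} - \beta z^{q}} (1 - \beta z^{q} ) \ge 0 \), since \( \beta z^{q} \le 1 \).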

So \( f(y,z) \) increases monotonically as \( z \) increases. When \( y = 0 \) and \( z = 1 \), \( E(A) = 1 \); when \( y = 1 \) and \( z = 0 \), \( E(A) = 0 \). Hence \( 0 \le E(A) \le 1 \).
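The monotonicity argument in (P4) can be spot-checked numerically. The sketch below is an illustration, not part of the proof: it evaluates \( f(y,z) \) on a grid restricted to the feasible region \( y + z \le 1 \) (since \( |\mu - \upsilon| \le \mu + \upsilon \)) and checks with forward differences that \( f \) never increases in \( y \) and never decreases in \( z \). The grid size, tolerance and parameter values are arbitrary choices.

```python
import math


def f(y: float, z: float, alpha: float, p: float, q: float) -> float:
    """f(y, z) from the proof of (P4): y = |mu - nu|, z = 1 - mu - nu, beta = 1 - alpha."""
    beta = 1.0 - alpha
    u = alpha * max(0.0, 1.0 - y) ** p
    v = beta * z ** q
    return u + v * math.exp(1.0 - u - v)


def check_monotone(alpha: float, p: float, q: float, n: int = 50, tol: float = 1e-12) -> bool:
    """Forward-difference check on grid points with y + z <= 1 (reachable by an IFS)."""
    for i in range(n):
        for j in range(n):
            if i + j + 1 > n:  # both stepped points must stay in y + z <= 1
                continue
            y, z = i / n, j / n
            base = f(y, z, alpha, p, q)
            if f((i + 1) / n, z, alpha, p, q) > base + tol:  # should not increase in y
                return False
            if f(y, (j + 1) / n, alpha, p, q) < base - tol:  # should not decrease in z
                return False
    return True


params = [(a, p, q) for a in (0.1, 0.5, 0.9) for p in (0.5, 1.0, 3.0) for q in (0.5, 1.0, 3.0)]
print(all(check_monotone(*t) for t in params))
```

Indices are divided by `n` directly, rather than accumulated in steps, so the boundary point \( y = 1 \) is hit exactly.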

Therefore, Eq. (7) satisfies axioms (P1)–(P4), and the GIFE is an IFE.

Let us compute the entropy values of the IFSs from [21]: \( x_{1} = \langle 0.7,0.3 \rangle \), \( x_{2} = \langle 0.6,0.2 \rangle \), \( x_{3} = \langle 0.5,0.1 \rangle \), \( y_{1} = \langle 0,0.6 \rangle \), \( y_{2} = \langle 0,0.5 \rangle \), \( y_{3} = \langle 0,0.4 \rangle \). Taking \( \alpha = \beta = 0.5 \) and \( p = q = 1 \) in Eq. (7), we have
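For readers who want to reproduce the computation, here is a short Python sketch that evaluates Eq. (7) with \( \alpha = \beta = 0.5 \) and \( p = q = 1 \) for the six IFSs above. The helper name `gife` is hypothetical; the printed values follow directly from the formula and are not copied from the paper.

```python
import math


def gife(mu, nu, alpha=0.5, p=1.0, q=1.0):
    """Eq. (7) for a single-element IFS <mu, nu>, with beta = 1 - alpha."""
    beta = 1.0 - alpha
    u = alpha * (1.0 - abs(mu - nu)) ** p
    v = beta * max(0.0, 1.0 - mu - nu) ** q  # clamp guards against tiny negative rounding
    return u + v * math.exp(1.0 - u - v)


ifs = {"x1": (0.7, 0.3), "x2": (0.6, 0.2), "x3": (0.5, 0.1),
       "y1": (0.0, 0.6), "y2": (0.0, 0.5), "y3": (0.0, 0.4)}

values = {name: gife(mu, nu) for name, (mu, nu) in ifs.items()}
for name, e in values.items():
    print(f"{name}: {e:.4f}")
```

With these parameters, \( x_{1} \) has no hesitancy, so its entropy reduces to the reliability term \( 0.5 \times 0.6 = 0.3 \).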

It is obvious that the new GIFE measure can distinguish these IFSs well. So the new GIFE measure is reasonable.

We now examine how the parameters affect the entropy. Since \( 0 \le 1 - y \le 1 \), we have \( \ln (1 - y) \le 0 \); similarly, \( \ln z \le 0 \). From the proof of (P4), \( 1 - \beta z^{q} e^{1 - \alpha (1 - y)^{p} - \beta z^{q}} \ge 0 \). Taking the partial derivatives of \( f(y,z) \) with respect to \( p \), \( q \), \( \alpha \) and \( \beta \), we have

Thus, with the other parameters held fixed, \( E(A) \) decreases as \( p \) or \( q \) increases and increases as \( \alpha \) or \( \beta \) increases.
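The sign claims can be checked with central finite differences. In this sketch α and β are passed as independent arguments, matching the partial derivatives above (each taken with the other parameters fixed). Under the constraint \( \alpha + \beta = 1 \) the two weights cannot both increase, so the α and β results should be read as partial effects. The step size `h` and the sample points are arbitrary choices.

```python
import math


def f(y, z, alpha, beta, p, q):
    """Eq. (7) as f(y, z); alpha and beta are independent arguments here."""
    u = alpha * (1.0 - y) ** p
    v = beta * z ** q
    return u + v * math.exp(1.0 - u - v)


def partials(y, z, alpha=0.5, beta=0.5, p=1.0, q=1.0, h=1e-6):
    """Central finite differences of f with respect to alpha, beta, p and q."""
    base = {"alpha": alpha, "beta": beta, "p": p, "q": q}
    result = {}
    for name in base:
        up = dict(base, **{name: base[name] + h})
        down = dict(base, **{name: base[name] - h})
        result[name] = (f(y, z, **up) - f(y, z, **down)) / (2.0 * h)
    return result


# Expected signs at an interior point: d/dp < 0, d/dq < 0, d/dalpha > 0, d/dbeta > 0.
print(partials(0.4, 0.3))
```

Central differences are used because their error is of order \( h^{2} \), which keeps the estimates far from the sign boundary at these sample points.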

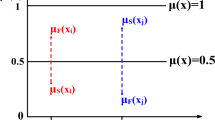

Figures 1 and 2 show how \( \alpha , \beta \) and \( p, q \) affect the entropy; similar figures can be drawn for other parameter settings.□

3.3 Comparison with the Existing IFE Measures

To demonstrate the advantages of the proposed GIFE measure, we compare it with existing IFE measures in three examples.

Example 3

\( A_{1} = \langle 0.6,0.1 \rangle \), \( A_{2} = \langle 0.6,0.2 \rangle \), \( A_{3} = \langle 0.6,0.3 \rangle \). Using Eq. (7), we calculate the entropy values and rank them in Table 1.
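To illustrate how the ordering in Example 3 depends on the weights, the sketch below ranks \( A_{1} \), \( A_{2} \), \( A_{3} \) under two illustrative settings, \( \alpha = 0.5 \) and \( \alpha = 0.9 \) with \( p = q = 1 \). These settings are assumptions for illustration and need not match those used in Table 1. The names `gife`, `SETS` and `ranking` are hypothetical.

```python
import math


def gife(mu, nu, alpha, p=1.0, q=1.0):
    """Eq. (7) for a single-element IFS <mu, nu>, with beta = 1 - alpha."""
    beta = 1.0 - alpha
    u = alpha * (1.0 - abs(mu - nu)) ** p
    v = beta * max(0.0, 1.0 - mu - nu) ** q
    return u + v * math.exp(1.0 - u - v)


SETS = {"A1": (0.6, 0.1), "A2": (0.6, 0.2), "A3": (0.6, 0.3)}


def ranking(alpha, p=1.0, q=1.0):
    """Set names ordered from largest to smallest entropy."""
    scores = {name: gife(mu, nu, alpha, p, q) for name, (mu, nu) in SETS.items()}
    return sorted(scores, key=scores.get, reverse=True)


print(ranking(0.5))  # ['A1', 'A2', 'A3']
print(ranking(0.9))  # ['A3', 'A2', 'A1']
```

With equal weights, the hesitancy term (largest for \( A_{1} \)) dominates. With \( \alpha = 0.9 \), the lack-of-reliability term (largest for \( A_{3} \)) dominates, and the ordering reverses.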

The entropy values and the ordering are determined by the weight coefficients \( \alpha , \beta \) and the IFE coefficients \( p, q \), i.e., by the weights and by the effects of lack of reliability and lack of knowledge. The GIFE is therefore more flexible than the measures in Example 1.

Example 4

\( A_{4} = \langle 0.2,0.2 \rangle \), \( A_{5} = \langle 0.4,0.4 \rangle \). \( A_{4} \) and \( A_{5} \) are on the line between \( \langle 0,0 \rangle \) and \( \langle 0.5,0.5 \rangle \). Using Eq. (7), we calculate the entropy values and rank them in Table 2.

No matter how \( \alpha \), \( \beta \), \( p \) and \( q \) change, \( E(A_{4} ) \) is larger than \( E(A_{5} ) \), so Eq. (7) distinguishes \( A_{4} \) and \( A_{5} \) well. This improves on the results of Example 2.
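The claim that \( E(A_{4}) > E(A_{5}) \) for all parameter values can be spot-checked on a grid. The grid below is an arbitrary, illustrative choice. The check is consistent with, but does not replace, the monotonicity in \( z \) proved for (P4): \( A_{4} \) and \( A_{5} \) share \( y = 0 \) and differ only in \( z \) (0.6 versus 0.2).

```python
import itertools
import math


def gife(mu, nu, alpha, p, q):
    """Eq. (7) for a single-element IFS <mu, nu>, with beta = 1 - alpha."""
    beta = 1.0 - alpha
    u = alpha * (1.0 - abs(mu - nu)) ** p
    v = beta * max(0.0, 1.0 - mu - nu) ** q
    return u + v * math.exp(1.0 - u - v)


alphas = (0.1, 0.3, 0.5, 0.7, 0.9)
exponents = (0.2, 0.5, 1.0, 2.0, 5.0)
always_larger = all(
    gife(0.2, 0.2, a, p, q) > gife(0.4, 0.4, a, p, q)  # E(A4) > E(A5)
    for a, p, q in itertools.product(alphas, exponents, exponents)
)
print(always_larger)
```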

Mao and Yao [8] introduced the IFE \( E_{\text{MJJ}} (A) \) and, based on a cross entropy measure, the parameterized IFE \( E_{\text{MJJ}}^{p,q} (A) \), where \( p \) and \( q \) represent the effects of fuzzy information (reliability) and intuitionistic information (knowledge), respectively. When \( p = q = 1 \), \( E_{\text{MJJ}}^{p,q} (A) \) reduces to \( E_{\text{MJJ}} (A) \).

Example 5

\( A_{6} = \langle 0.5,0.1 \rangle \), \( A_{7} = \langle 0.5,0.2 \rangle \), \( A_{8} = \langle 0.5,0.3 \rangle \). Using Eqs. (7) and (8), we calculate the entropy values and rank them in Table 3.

The calculations in Table 3 show that the results depend not only on the weight coefficients \( \alpha \) and \( \beta \) but also on the IFE coefficients \( p \) and \( q \). Equation (8) only covers the case \( p = \beta \) and \( q = \alpha \); it does not include weights \( \alpha \) and \( \beta \) for fuzziness and intuitionism that are independent of \( p \) and \( q \). The GIFE proposed in this paper is therefore more flexible and more general than \( E_{\text{MJJ}}^{p,q} (A) \).

Examples 3 to 5 show that the new GIFE measure is more flexible than, and performs better than, some existing IFE measures.

4 The New Generalized Exponential IVIFE

4.1 Some Existing IVIFE Measures and Disadvantages

Several IVIFE measures have been defined. Guo and Song [21] defined \( E_{\text{GKH}} (\widetilde{A}) \), Zhao and Mao [26] introduced \( E_{\text{ZYM}} (\widetilde{A}) \), and Chen et al. [27] gave \( E_{\text{CXH}} (\widetilde{A}) \). However, some of them have disadvantages, as the following examples show.

Example 6

\( \widetilde{A}_{1} = \langle [0.4,0.6],[0.1,0.3] \rangle \) and \( \widetilde{A}_{2} = \langle [0.45,0.55],[0.15,0.25] \rangle \). Intuitively, \( \widetilde{A}_{1} \) is fuzzier than \( \widetilde{A}_{2} \) because its intervals are wider. Note that the two membership intervals have the same midpoint, \( \frac{0.4 + 0.6}{2} = \frac{0.45 + 0.55}{2} \), and so do the two non-membership intervals, \( \frac{0.1 + 0.3}{2} = \frac{0.15 + 0.25}{2} \).

Using Eq. (10), we have

Using Eq. (11), we have

Using Eq. (12), we have

\( E_{\text{GKH}} (\widetilde{A}) \), \( E_{\text{ZYM}} (\widetilde{A}) \) and \( E_{\text{CXH}} (\widetilde{A}) \) cannot distinguish \( \widetilde{A}_{1} \) and \( \widetilde{A}_{2} \), which contradicts our intuition.

Meng and Chen [24] proposed \( E_{\text{MFY}} (\widetilde{A}) \). Mishra [33] defined \( E_{\text{ARM}} (\widetilde{A}) \).

Example 7

\( \widetilde{A}_{3} = \langle [0.1,0.1],[0.1,0.1] \rangle \), \( \widetilde{A}_{4} = \langle [0.3,0.3],[0.3,0.3] \rangle \). \( \widetilde{A}_{3} \) and \( \widetilde{A}_{4} \) are on the line between \( \langle [0,0],[0,0] \rangle \) and \( \langle [0.5,0.5],[0.5,0.5] \rangle \). Intuitively, \( \widetilde{A}_{3} \) and \( \widetilde{A}_{4} \) should have different entropy values.

Using Eq. (13), we have

Using Eq. (14), we have

\( E_{\text{ARM}} (\widetilde{A}) \) and \( E_{\text{MFY}} (\widetilde{A}) \) cannot distinguish \( \widetilde{A}_{3} \) and \( \widetilde{A}_{4} \), which again contradicts our intuition.

Thus, the above methods have limitations.

4.2 New Axioms of the IVIFE

Intuitively, the wider the membership and non-membership intervals, the fuzzier the IVIFS. From these intervals we can therefore deduce that \( <[0,0.5],[0,0.5]> \) is the fuzziest IVIFS: its hesitancy interval is \( [0,1] \), the largest possible. By axiom (P2), \( <0,0> \) is the fuzziest IFS, so \( <[0,0],[0,0]> \) is also a fuzziest IVIFS. The intrinsic area of an IVIFS, \( |\mu_{\widetilde{A}}^{U} (x) - \mu_{\widetilde{A}}^{L} (x)| \cdot |\upsilon_{\widetilde{A}}^{U} (x) - \upsilon_{\widetilde{A}}^{L} (x)| \), is the area enclosed by the membership and non-membership intervals. It vanishes whenever either interval degenerates to a single point, in particular when the IVIFS reduces to an IFS. We therefore introduce the interval area \( |\mu_{\widetilde{A}}^{U} (x) - \mu_{\widetilde{A}}^{L} (x)| \cdot |\upsilon_{\widetilde{A}}^{U} (x) - \upsilon_{\widetilde{A}}^{L} (x)| + (|\mu_{\widetilde{A}}^{U} (x) - \mu_{\widetilde{A}}^{L} (x)| + |\upsilon_{\widetilde{A}}^{U} (x) - \upsilon_{\widetilde{A}}^{L} (x)|)/4 \), which also accounts for the interval widths. Furthermore, the entropy value is determined not only by the interval area but also by the entropy values of \( < \mu_{\widetilde{A}}^{L} (x),\upsilon_{\widetilde{A}}^{L} (x) > \) and \( < \mu_{\widetilde{A}}^{U} (x),\upsilon_{\widetilde{A}}^{U} (x) > \).
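The interval area is straightforward to compute. This Python sketch (function names hypothetical) contrasts it with the intrinsic area. For \( <[0.4,0.4],[0.1,0.2]> \) the intrinsic area is 0 because the membership interval degenerates, whereas the interval area retains the width of the non-membership interval.

```python
def widths(mu_l, mu_u, nu_l, nu_u):
    """Widths of the membership and non-membership intervals."""
    return abs(mu_u - mu_l), abs(nu_u - nu_l)


def intrinsic_area(mu_l, mu_u, nu_l, nu_u):
    """Area enclosed by the two intervals: |mu_u - mu_l| * |nu_u - nu_l|."""
    w_mu, w_nu = widths(mu_l, mu_u, nu_l, nu_u)
    return w_mu * w_nu


def interval_area(mu_l, mu_u, nu_l, nu_u):
    """Interval area: intrinsic area plus a quarter of the summed widths."""
    w_mu, w_nu = widths(mu_l, mu_u, nu_l, nu_u)
    return w_mu * w_nu + (w_mu + w_nu) / 4.0


examples = {"<[0,0.5],[0,0.5]>": (0.0, 0.5, 0.0, 0.5),
            "<[0.4,0.4],[0.1,0.2]>": (0.4, 0.4, 0.1, 0.2)}
for name, s in examples.items():
    print(name, intrinsic_area(*s), interval_area(*s))
```

For the fuzziest IVIFS \( <[0,0.5],[0,0.5]> \) the interval area is 0.5. For an IFS (both widths zero) it is 0.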

Based on the description above and the axioms proposed in [8], we define the new axioms for the IVIFE as follows.

Definition 3

For any \( \widetilde{A} \in \text{IVIFS}(X) \), a real function \( E(\widetilde{A}) = f(\psi_{\widetilde{A}} ,\Delta_{\widetilde{A}} ):\text{IVIFS}(X) \to [0,1] \) is called an entropy for IVIFSs if it satisfies the following axioms:

(R1) \( E(\widetilde{A}) = 0 \Leftrightarrow \widetilde{A} \) is a crisp set, i.e., \( \widetilde{A} = \{ < x,[0,0],[1,1] > \mid x \in X\} \) or \( \widetilde{A} = \{ < x,[1,1],[0,0] > \mid x \in X\} \);

(R2) \( E(\widetilde{A}) = 1 \Leftrightarrow \widetilde{A} = \{ < x,[0,0],[0,0] > \mid x \in X\} \) or \( \widetilde{A} = \{ < x,[0,0.5],[0,0.5] > \mid x \in X\} \);

(R3) \( E(\widetilde{A}) = E(\widetilde{A}^{C} ) \);

(R4) \( E(\widetilde{A}) = f(\psi_{\widetilde{A}} ,\Delta_{\widetilde{A}} ) \) is a real continuous function that increases with the first variable \( \psi_{\widetilde{A}} \) and decreases with the second variable \( \Delta_{\widetilde{A}} \), where

$$ \psi_{\widetilde{A}} = 0.5(\pi_{\widetilde{A}}^{L} (x) + \pi_{\widetilde{A}}^{U} (x)), \quad \Delta_{\widetilde{A}} = 0.5(|\mu_{\widetilde{A}}^{L} (x) - \upsilon_{\widetilde{A}}^{L} (x)| + |\mu_{\widetilde{A}}^{U} (x) - \upsilon_{\widetilde{A}}^{U} (x)|). $$

Here \( \psi_{\widetilde{A}} \) is the average hesitancy, i.e., the average lack of knowledge, and \( \Delta_{\widetilde{A}} \) is the distance between the membership interval \( [\mu_{\widetilde{A}}^{L} (x),\mu_{\widetilde{A}}^{U} (x)] \) and the non-membership interval \( [\upsilon_{\widetilde{A}}^{L} (x),\upsilon_{\widetilde{A}}^{U} (x)] \), i.e., the average reliability.
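The two variables of axiom (R4) can be computed as follows (a sketch; `psi_delta` is a hypothetical name). Applied to the two IVIFSs of Example 6, both give \( (\psi, \Delta) = (0.3, 0.3) \). This illustrates problem (2) of the Introduction: a measure that depends only on these averages cannot separate them. Swapping the intervals, as the complement does, leaves \( \psi \) and \( \Delta \) unchanged.

```python
def psi_delta(mu_l, mu_u, nu_l, nu_u):
    """Variables of axiom (R4) for a single-element IVIFS."""
    pi_l = 1.0 - mu_u - nu_u   # lower hesitancy bound
    pi_u = 1.0 - mu_l - nu_l   # upper hesitancy bound
    psi = 0.5 * (pi_l + pi_u)  # average hesitancy
    delta = 0.5 * (abs(mu_l - nu_l) + abs(mu_u - nu_u))
    return psi, delta


a1 = (0.4, 0.6, 0.1, 0.3)      # Example 6, A~1
a2 = (0.45, 0.55, 0.15, 0.25)  # Example 6, A~2
print(psi_delta(*a1), psi_delta(*a2))

# The complement swaps the intervals: (nu_l, nu_u, mu_l, mu_u).
print(psi_delta(a1[2], a1[3], a1[0], a1[1]))
```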

4.3 The New Generalized Exponential IVIFE

To overcome the shortcomings of the IVIFE measures above, we define a GIVIFE measure from the perspective of reliability and amount of knowledge.

Definition 4

The new generalized exponential entropy for an IVIFS \( \widetilde{A} = \{ < x,[\mu_{\widetilde{A}}^{L} (x),\mu_{\widetilde{A}}^{U} (x)],[\upsilon_{\widetilde{A}}^{L} (x),\upsilon_{\widetilde{A}}^{U} (x)] > \mid x \in X\} \) with a single element is given as follows:

where \( \alpha ,\beta \) are weight coefficients with \( 0 < \alpha , \beta < 1 \) and \( \alpha + \beta = 1 \), and \( p, q > 0 \) are IVIFE coefficients. \( p \) and \( q \) also represent the effects of lack of reliability and lack of knowledge on the IVIFE, respectively.
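Eq. (15) itself does not appear in this copy of the text. The sketch below is an assumption. It reconstructs a candidate form from the variables of (R4), the interval area of Sect. 4.2, the form \( f(y,\zeta ) \) used in the proof of (R4), and the stated reduction to Eq. (7):

\( E(\widetilde{A}) = \alpha (1 - \Delta_{\widetilde{A}})^{p} + \beta (\psi_{\widetilde{A}} + m)^{q} e^{1 - \alpha (1 - \Delta_{\widetilde{A}})^{p} - \beta (\psi_{\widetilde{A}} + m)^{q}} \), with \( m \) the interval area.

This form is consistent with (R1) and (R2); for example, \( <[0,0.5],[0,0.5]> \) gives \( \psi + m = 1 \) and hence \( E = 1 \). It also reduces to Eq. (7) when both intervals are single points. It should not be read as the authors' exact formula, and the name `givife` is hypothetical.

```python
import math


def interval_area(mu_l, mu_u, nu_l, nu_u):
    """Interval area of Sect. 4.2."""
    w_mu, w_nu = mu_u - mu_l, nu_u - nu_l
    return w_mu * w_nu + (w_mu + w_nu) / 4.0


def givife(mu_l, mu_u, nu_l, nu_u, alpha=0.5, p=1.0, q=1.0):
    """ASSUMED reconstruction of Eq. (15), not quoted from the paper:
    E = a*(1 - Delta)^p + b*(psi + m)^q * exp(1 - a*(1 - Delta)^p - b*(psi + m)^q),
    with psi, Delta from (R4), m the interval area and b = 1 - a."""
    beta = 1.0 - alpha
    psi = 1.0 - 0.5 * (mu_l + mu_u + nu_l + nu_u)         # 0.5 * (pi_L + pi_U)
    delta = 0.5 * (abs(mu_l - nu_l) + abs(mu_u - nu_u))   # average |mu - nu|
    zeta = psi + interval_area(mu_l, mu_u, nu_l, nu_u)    # in [0, 1] for valid input
    zeta = min(1.0, max(0.0, zeta))                       # clamp floating-point rounding only
    u = alpha * max(0.0, 1.0 - delta) ** p
    v = beta * zeta ** q
    return u + v * math.exp(1.0 - u - v)


print(givife(0.0, 0.5, 0.0, 0.5))  # fuzziest IVIFS under (R2): 1.0
print(givife(1.0, 1.0, 0.0, 0.0))  # crisp set under (R1): 0.0
```

For a valid IVIFS one can show that \( \psi + m \le 1 \), with equality exactly at the two sets in (R2), so the clamp only absorbs rounding.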

Theorem 2

Let \( \widetilde{A} \in \text{IVIFS}(X) \). The real function \( E(\widetilde{A}) \in [0,1] \) defined by Eq. (15) is a generalized exponential entropy for IVIFSs.

Proof

(R1) For any \( x \in X, \) let \( \widetilde{A} \) be a crisp set, i.e., when \( \mu_{{\widetilde{A}}}^{L} (x) = \mu_{{\widetilde{A}}}^{U} (x) = 1 \), \( \upsilon_{{\widetilde{A}}}^{L} (x) = \upsilon_{{\widetilde{A}}}^{U} (x) = 0 \), we have

When \( \mu_{{\widetilde{A}}}^{L} (x) = \mu_{{\widetilde{A}}}^{U} (x) = 0 \), \( \upsilon_{{\widetilde{A}}}^{L} (x) = \upsilon_{{\widetilde{A}}}^{U} (x) = 1 \), we have

For any \( x \in X, \) we now suppose \( E(\widetilde{A}) = 0 \). Given that \( 0 < \alpha ,\beta < 1 \), \( \alpha + \beta = 1 \), we have

, and

, it can be deduced from Eq. (15) that

and

Thus \( \mu_{\widetilde{A}}^{L} (x) = \mu_{\widetilde{A}}^{U} (x) = 1 \), \( \upsilon_{\widetilde{A}}^{L} (x) = \upsilon_{\widetilde{A}}^{U} (x) = 0 \) or \( \mu_{\widetilde{A}}^{L} (x) = \mu_{\widetilde{A}}^{U} (x) = 0 \), \( \upsilon_{\widetilde{A}}^{L} (x) = \upsilon_{\widetilde{A}}^{U} (x) = 1 \).

So \( \widetilde{A} \) is a crisp set.

(R2) Let \( \mu_{{\widetilde{A}}}^{L} (x) = \mu_{{\widetilde{A}}}^{U} (x) = 0 \), \( \upsilon_{{\widetilde{A}}}^{L} (x) = \upsilon_{{\widetilde{A}}}^{U} (x) = 0 \) for any \( x \in X \), we get

Let \( \mu_{{\widetilde{A}}}^{L} (x) = \upsilon_{{\widetilde{A}}}^{L} (x) = 0 \), \( \mu_{{\widetilde{A}}}^{U} (x) = \upsilon_{{\widetilde{A}}}^{U} (x) = 0.5 \) for any \( x \in X \), we get

For any \( x \in X, \) we suppose \( E(\widetilde{A}) = 1 \). Given that \( 0 < \alpha ,\beta < 1 \), \( \alpha + \beta = 1 \), we have

and

it can be deduced from Eq. (15) that

and

Thus \( \mu_{{\widetilde{A}}}^{L} (x) = \mu_{{\widetilde{A}}}^{U} (x) = 0 \), \( \upsilon_{{\widetilde{A}}}^{L} (x) = \upsilon_{{\widetilde{A}}}^{U} (x) = 0 \) or \( \mu_{{\widetilde{A}}}^{L} (x) = \upsilon_{{\widetilde{A}}}^{L} (x) = 0 \), \( \mu_{{\widetilde{A}}}^{U} (x) = \upsilon_{{\widetilde{A}}}^{U} (x) = 0.5 \).

(R3) This follows directly from the definition of the complement: \( \widetilde{A}^{C} \) swaps the membership and non-membership intervals, and Eq. (15) is symmetric in these two intervals.

(R4) For any \( x \in X, \) let

where

\( y \in [0,1] \) and \( z \in [0,1] \). So \( E(\widetilde{A}) = f(y,z) \ge 0 \).

Taking the partial derivative of \( f(y,z) \) with respect to \( y \), we have

Since \( \beta (z + m)^{q} e^{1 - \alpha (1 - y)^{p} - \beta (z + m)^{q}} \le \beta (z + m)^{q} e^{1 - \beta (z + m)^{q}} \), we have \( 1 - \beta (z + m)^{q} e^{1 - \alpha (1 - y)^{p} - \beta (z + m)^{q}} \ge 1 - \beta (z + m)^{q} e^{1 - \beta (z + m)^{q}} \). Let \( M(\zeta ) = 1 - \zeta e^{1 - \zeta} \) with \( \zeta = \beta (z + m)^{q} \). Differentiating gives \( M'(\zeta ) = - (1 - \zeta )e^{1 - \zeta} \le 0 \), so \( 1 - \beta (z + m)^{q} e^{1 - \beta (z + m)^{q}} \) is decreasing in \( \beta (z + m)^{q} \) on \( [0,1] \). In addition, \( 0 \le \beta (z + m)^{q} \le 1 \), and the upper bound is approached only when \( \beta \to 1 \) at the two fuzziest IVIFSs of (R2), i.e., \( \mu_{\widetilde{A}}^{L} (x) = \mu_{\widetilde{A}}^{U} (x) = 0 \), \( \upsilon_{\widetilde{A}}^{L} (x) = \upsilon_{\widetilde{A}}^{U} (x) = 0 \) or \( \mu_{\widetilde{A}}^{L} (x) = \upsilon_{\widetilde{A}}^{L} (x) = 0 \), \( \mu_{\widetilde{A}}^{U} (x) = \upsilon_{\widetilde{A}}^{U} (x) = 0.5 \), for which \( z + m = 1 \). In that limiting case we have

Thus

So \( f(y,z) \) decreases monotonically as \( y \) increases.

Taking the partial derivative of \( f(y,z) \) with respect to \( z \), we have

Given that \( 1 \ge \beta (z + m)^{q} \ge 0 \), \( e^{{1 - \alpha (1 - y)^{p} - \beta (z + m)^{q} }} > 0 \), we know

So \( f(y,z) \) increases monotonically as \( z \) increases.

Letting \( \zeta = z + m \), we have \( f(y,\zeta ) = \alpha (1 - y)^{p} + \beta \zeta^{q} e^{1 - \alpha (1 - y)^{p} - \beta \zeta^{q}} \). Taking the partial derivative of \( f(y,\zeta ) \) with respect to \( \zeta \), we have

So \( f(y,\zeta ) \) increases monotonically as \( \zeta \) increases.

When \( \mu_{\widetilde{A}}^{L} (x) = \mu_{\widetilde{A}}^{U} (x) = 0 \), \( \upsilon_{\widetilde{A}}^{L} (x) = \upsilon_{\widetilde{A}}^{U} (x) = 0 \) or \( \mu_{\widetilde{A}}^{L} (x) = \upsilon_{\widetilde{A}}^{L} (x) = 0 \), \( \mu_{\widetilde{A}}^{U} (x) = \upsilon_{\widetilde{A}}^{U} (x) = 0.5 \), \( y \) attains its minimum value 0 and \( \zeta \) attains its maximum value 1, so \( E(\widetilde{A}) = 1 \).

When \( \mu_{\widetilde{A}}^{L} (x) = \mu_{\widetilde{A}}^{U} (x) = 0 \), \( \upsilon_{\widetilde{A}}^{L} (x) = \upsilon_{\widetilde{A}}^{U} (x) = 1 \) or \( \mu_{\widetilde{A}}^{L} (x) = \mu_{\widetilde{A}}^{U} (x) = 1 \), \( \upsilon_{\widetilde{A}}^{L} (x) = \upsilon_{\widetilde{A}}^{U} (x) = 0 \), \( y \) attains its maximum value 1 and \( \zeta \) attains its minimum value 0, so \( E(\widetilde{A}) = 0 \). Therefore \( 0 \le E(\widetilde{A}) \le 1 \).

Equation (15) satisfies axioms (R1)–(R4). Thus the GIVIFE is an IVIFE. When the IVIFSs reduce to IFSs, Eq. (15) reduces to Eq. (7).

Let us compute the entropy values of the IVIFSs \( \widetilde{z}_{1} = <[0.3,0.4],[0.3,0.4]> \), \( \widetilde{z}_{2} = <[0.2,0.3],[0.2,0.3]> \) and \( \widetilde{z}_{3} = <[0.1,0.2],[0.1,0.2]> \). Taking \( \alpha = \beta = 0.5 \) and \( p = q = 1 \) in Eq. (15), we have
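Under an assumed form of Eq. (15), reconstructed from (R4), the interval area and the function \( f(y,\zeta ) \) of the proof rather than quoted from the paper, the three IVIFSs can be evaluated as follows. In this form all three have \( \Delta = 0 \) and the same interval area 0.06. They therefore differ only through \( \psi \) (0.3, 0.5, 0.7), and the entropy increases from \( \widetilde{z}_{1} \) to \( \widetilde{z}_{3} \). The values are computed from the assumed form, not copied from the paper; `givife` is a hypothetical name.

```python
import math


def givife(mu_l, mu_u, nu_l, nu_u, alpha=0.5, p=1.0, q=1.0):
    """ASSUMED form of Eq. (15) (a reconstruction): zeta = psi + interval area."""
    beta = 1.0 - alpha
    psi = 1.0 - 0.5 * (mu_l + mu_u + nu_l + nu_u)        # average hesitancy
    delta = 0.5 * (abs(mu_l - nu_l) + abs(mu_u - nu_u))  # average |mu - nu|
    w_mu, w_nu = mu_u - mu_l, nu_u - nu_l
    zeta = min(1.0, max(0.0, psi + w_mu * w_nu + (w_mu + w_nu) / 4.0))
    u = alpha * max(0.0, 1.0 - delta) ** p
    v = beta * zeta ** q
    return u + v * math.exp(1.0 - u - v)


sets = {"z1": (0.3, 0.4, 0.3, 0.4), "z2": (0.2, 0.3, 0.2, 0.3), "z3": (0.1, 0.2, 0.1, 0.2)}
vals = [givife(*s) for s in sets.values()]
for name, e in zip(sets, vals):
    print(f"{name}: {e:.4f}")
```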

The new GIVIFE measure assigns different entropy values to these IVIFSs, so it distinguishes them well and is reasonable.□

4.4 Comparison with the Existing IVIFE Measures

To show the advantages of the GIVIFE measure proposed in this paper, we compare it with the existing IVIFE measures in five examples.

Example 8

\( \widetilde{A}_{5} = \langle [0.4,0.4],[0.1,0.2] \rangle \), \( \widetilde{A}_{6} = \langle [0.4,0.4],[0.2,0.3] \rangle \), \( \widetilde{A}_{7} = \langle [0.4,0.4],[0.4,0.5] \rangle \). Using Eqs. (10)–(12) and Eq. (15), we calculate the entropy values and rank them in Table 4.

The entropy values and the orderings are determined not only by the weight coefficients \( \alpha \) and \( \beta \), but also by the parameters \( p \) and \( q \), which reflect the effects of lack of reliability and lack of knowledge. Compared with \( E_{\text{GKH}} (\widetilde{A}) \), \( E_{\text{ZYM}} (\widetilde{A}) \) and \( E_{\text{CXH}} (\widetilde{A}) \), the results calculated by \( E(\widetilde{A}) \) are more flexible. This is one of the main differences between Eq. (15) and the existing IVIFE measures.
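
The following sketch illustrates how an ordering can depend on the weight coefficients. It evaluates the same assumed kernel \( f(y,\zeta) \) of Eq. (15) at two hypothetical points under two settings of \( \alpha \) and \( \beta \), with \( p = q = 1 \). The points `P1` and `P2` and the parameter values are chosen only for illustration. They are not derived from \( \widetilde{A}_{5} \), \( \widetilde{A}_{6} \), \( \widetilde{A}_{7} \) or Table 4.

```python
import math


def kernel(y, zeta, alpha, beta, p, q):
    """Assumed kernel of Eq. (15): alpha(1-y)^p + beta*zeta^q * exp(1 - alpha(1-y)^p - beta*zeta^q)."""
    u = alpha * (1.0 - y) ** p
    w = beta * zeta ** q
    return u + w * math.exp(1.0 - u - w)


# Two hypothetical (y, zeta) pairs; NOT computed from the IVIFSs of Example 8.
points = {"P1": (0.2, 0.2), "P2": (0.5, 0.8)}


def ranking(alpha, beta, p=1.0, q=1.0):
    """Return the point labels sorted from largest to smallest kernel value, plus the values."""
    scores = {name: kernel(y, z, alpha, beta, p, q) for name, (y, z) in points.items()}
    return sorted(scores, key=scores.get, reverse=True), scores


if __name__ == "__main__":
    for a, b in [(0.9, 0.1), (0.1, 0.9)]:
        order, scores = ranking(a, b)
        print(f"alpha={a}, beta={b}:", order, {k: round(v, 4) for k, v in scores.items()})
```

With \( \alpha = 0.9 \), the term in \( y \) dominates and `P1` ranks first (about 0.7459 versus 0.5780). With \( \beta = 0.9 \), the term in \( \zeta \) dominates and `P2` ranks first (about 0.9562 versus 0.4573).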

Example 9

\( \widetilde{A}_{1} = \langle [0.4,0.6],[0.1,0.3] \rangle \) and \( \widetilde{A}_{2} = \langle [0.45,0.55],[0.15,0.25] \rangle \). Intuitively, \( \widetilde{A}_{1} \) is fuzzier than \( \widetilde{A}_{2} \), because its membership and non-membership intervals are wider. The two IVIFSs have the same midpoints: \( \frac{0.4 + 0.6}{2} = \frac{0.45 + 0.55}{2} \) and \( \frac{0.1 + 0.3}{2} = \frac{0.15 + 0.25}{2} \). This is the first special case for IVIFSs in the text. Using Eq. (15), we calculate the entropy values and rank them in Table 5.

For all parameter settings of \( \alpha \), \( \beta \), \( p \) and \( q \) in Table 5, \( E(\widetilde{A}_{1} ) \) is larger than \( E(\widetilde{A}_{2} ) \), i.e., \( \widetilde{A}_{1} \) is fuzzier than \( \widetilde{A}_{2} \). So Eq. (15) distinguishes \( \widetilde{A}_{1} \) and \( \widetilde{A}_{2} \) well. This accords with our intuition and improves on the results of Example 6.

Example 10

\( \widetilde{A}_{3} = \langle [0.1,0.1],[0.1,0.1] \rangle \), \( \widetilde{A}_{4} = \langle [0.3,0.3],[0.3,0.3] \rangle \). \( \widetilde{A}_{3} \) and \( \widetilde{A}_{4} \) are on the line between \( \langle [0,0],[0,0] \rangle \) and \( \langle [0.5,0.5],[0.5,0.5] \rangle \). This is the second special case for IVIFSs in the text. Using Eq. (15), we calculate the entropy values and rank them in Table 6.

For all parameter settings of \( \alpha \), \( \beta \), \( p \) and \( q \) in Table 6, \( E(\widetilde{A}_{3} ) \) is larger than \( E(\widetilde{A}_{4} ) \), i.e., the measure assigns different entropy values to \( \widetilde{A}_{3} \) and \( \widetilde{A}_{4} \). So Eq. (15) distinguishes \( \widetilde{A}_{3} \) and \( \widetilde{A}_{4} \) well. This accords with our intuition and improves on the results of Example 7.

In practical problems, the same parameters should be used throughout a given problem, because different parameters describe different situations. Hence, we compare the measures under fixed parameters.

Gao et al. [25] defined the IVIFE from the amount of knowledge and reliability as follows:

Example 11

\( \widetilde{A}_{8} = \langle [0.5,0.5],[0.1,0.5] \rangle \), \( \widetilde{A}_{9} = \langle [0.5,0.5],[0.2,0.5] \rangle \), \( \widetilde{A}_{10} = \langle [0.5,0.5],[0.3,0.5] \rangle \). Intuitively, \( \widetilde{A}_{8} \) is fuzzier than \( \widetilde{A}_{9} \), and \( \widetilde{A}_{9} \) is fuzzier than \( \widetilde{A}_{10} \).

Using Eq. (10), we have

Using Eq. (16), we have

\( E_{\text{GMM}} (\widetilde{A}) \) cannot distinguish \( \widetilde{A}_{8} \), \( \widetilde{A}_{9} \) and \( \widetilde{A}_{10} \). The results above differ from our intuition.

Using Eq. (15), let \( \alpha = \beta = 0.5, \, p = q = 1 \), we have

Intuitively, when \( \mu_{\widetilde{A}}^{L} (x) = \mu_{\widetilde{B}}^{L} (x) = \mu_{\widetilde{A}}^{U} (x) = \mu_{\widetilde{B}}^{U} (x) \), the bigger the non-membership interval, the fuzzier the IVIFS. When \( \upsilon_{\widetilde{A}}^{L} (x) = \upsilon_{\widetilde{B}}^{L} (x) = \upsilon_{\widetilde{A}}^{U} (x) = \upsilon_{\widetilde{B}}^{U} (x) \), the bigger the membership interval, the fuzzier the IVIFS. The new GIVIFE distinguishes \( \widetilde{A}_{8} \), \( \widetilde{A}_{9} \) and \( \widetilde{A}_{10} \), and the results are consistent with our intuition. So \( E(\widetilde{A}) \) performs better than \( E_{\text{GMM}} (\widetilde{A}) \) and \( E_{\text{ZYM}} (\widetilde{A}) \) in this example.

Mishra [34] presented \( E_{ARM2} (\widetilde{A}) \) as follows:

Example 12

\( \widetilde{A}_{11} = \langle [0.3,0.4],[0.1,0.6] \rangle \), \( \widetilde{A}_{12} = \langle [0.2,0.3],[0,0.5] \rangle \). Both IVIFSs satisfy \( \mu_{\widetilde{A}}^{L} (x) + \mu_{\widetilde{A}}^{U} (x) = \upsilon_{\widetilde{A}}^{L} (x) + \upsilon_{\widetilde{A}}^{U} (x) \), since \( 0.3 + 0.4 = 0.1 + 0.6 \) and \( 0.2 + 0.3 = 0 + 0.5 \). Intuitively, the entropy value of \( \widetilde{A}_{11} \) should not equal the entropy value of \( \widetilde{A}_{12} \).

Using Eq. (12), we have

Using Eq. (17), we have

\( E_{\text{CXH}} (\widetilde{A}) \) and \( E_{ARM2} (\widetilde{A}) \) cannot distinguish \( \widetilde{A}_{11} \) and \( \widetilde{A}_{12} \). Using Eq. (15) with \( \alpha = \beta = 0.5, \, p = q = 1 \), we have

Hence, \( E(\widetilde{A}) \) distinguishes the special IVIFSs satisfying \( \mu_{\widetilde{A}}^{L} (x) + \mu_{\widetilde{A}}^{U} (x) = \upsilon_{\widetilde{A}}^{L} (x) + \upsilon_{\widetilde{A}}^{U} (x) \) well.

Examples 9–12 show that \( E(\widetilde{A}) \) can distinguish these IVIFSs well. Thus the new GIVIFE is well defined, and it is more reasonable and flexible than the existing IVIFE measures compared here.

Table 7 summarizes the other differences between \( E(\widetilde{A}) \) and the IVIFE measures mentioned above.

5 Conclusions and Discussion

Although many IFE and IVIFE measures have been proposed, some of them have drawbacks. To overcome these drawbacks, we propose a novel generalized exponential IFE measure with parameters from the perspective of knowledge and reliability. We also propose a novel generalized exponential IVIFE measure with parameters and interval area from the same perspective. The two novel generalized entropy measures can distinguish IFSs and IVIFSs well, respectively. The main contributions of this paper are summarized as follows:

1. The GIFE measure is defined by both the weight coefficients and the IFE coefficients. As these coefficients change, some entropy values and orderings change. Therefore, the GIFE is affected not only by the IFE coefficients but also by the weight coefficients.

2. Intuitively, the larger the interval, the fuzzier the IVIFS. \( \langle [0,0.5],[0,0.5],[0,1] \rangle \) is the fuzziest IVIFS.

3. We define new axioms for the IVIFE.

4. We define the interval area, which is unique to and inherent in the IVIFE. The bigger the interval area, the fuzzier the IVIFS. Using the interval area reduces the loss of the information contained in the IVIFS.

5. We propose the novel GIVIFE measure with interval area, which differs from the other IVIFE measures. The new GIVIFE is constructed from the weight coefficients and the IVIFE coefficients. When these coefficients change, some entropy values and orderings change.

6. When the means of the membership and non-membership intervals of one IVIFS are equal to those of another IVIFS, respectively, the new GIVIFE can still distinguish the two IVIFSs.

7. The two novel generalized exponential entropy measures can distinguish the IFSs on the line between \( \langle 0,0 \rangle \) and \( \langle 0.5,0.5 \rangle \).

8. When \( \mu_{\widetilde{A}}^{L} (x) = \mu_{\widetilde{B}}^{L} (x) = \mu_{\widetilde{A}}^{U} (x) = \mu_{\widetilde{B}}^{U} (x) \), the bigger the non-membership interval, the fuzzier the IVIFS. When \( \upsilon_{\widetilde{A}}^{L} (x) = \upsilon_{\widetilde{B}}^{L} (x) = \upsilon_{\widetilde{A}}^{U} (x) = \upsilon_{\widetilde{B}}^{U} (x) \), the bigger the membership interval, the fuzzier the IVIFS. The new GIVIFE distinguishes such IVIFSs, and the results are consistent with our intuition.

9. When \( \mu_{\widetilde{A}}^{L} (x) + \mu_{\widetilde{A}}^{U} (x) = \upsilon_{\widetilde{A}}^{L} (x) + \upsilon_{\widetilde{A}}^{U} (x) \), the new GIVIFE can distinguish the IVIFSs well.

We conclude that the two novel generalized exponential entropy measures are reasonable and more flexible than the existing measures. However, some problems remain unsolved. For example, we do not know whether other uncertainties affect the entropy, or how they do so. This will be studied in future work.

References

Zadeh, L.A.: Fuzzy sets. Inf. Control 8(3), 338–353 (1965)

Burillo, P., Bustince, H.: Entropy on intuitionistic fuzzy sets and on interval-valued fuzzy sets. Fuzzy Sets Syst. 78, 305–316 (1996)

Liu, X.D., Zheng, S.H., Xiong, F.L.: Entropy and subsethood for general interval-valued intuitionistic fuzzy sets. In: Wang, L., Jin, Y. (eds.) Fuzzy Systems and Knowledge Discovery, FSKD 2005. Lecture Notes in Computer Science, vol. 3613. Springer, Berlin (2005)

Szmidt, E., Kacprzyk, J.: Entropy for intuitionistic fuzzy sets. Fuzzy Sets Syst. 118(3), 467–477 (2001)

Gao, M.M., Sun, T., Zhu, J.J.: Revised axiomatic definition and structural formula of intuitionistic fuzzy entropy. Control Decis. 29(3), 470–474 (2014)

Zhu, Y.J., Li, D.F.: A new definition and formula of entropy for intuitionistic fuzzy sets. J. Intell. Fuzzy Syst. 30(6), 3057–3066 (2016)

Song, Y.F., Wang, X.D., Wu, W.H., Lei, L., Quan, W.: Uncertainty measure for Atanassov’s intuitionistic fuzzy sets. Appl. Intell. 46(4), 757–774 (2017)

Mao, J.J., Yao, D.B., Wang, C.C.: A novel cross-entropy and entropy measures of IFSs and their applications. Knowl. Based Syst. 48, 37–45 (2013)

Li, D.F., Chen, G.H., Huang, Z.G.: Linear programming method for multiattribute group decision making using IF sets. Inf. Sci. 180(9), 1591–1609 (2010)

Wan, S.P.: Fuzzy LINMAP approach to heterogeneous MADM considering comparisons of alternatives with hesitation degrees. Omega 41(6), 925–940 (2013)

Li, D.F., Wan, S.P.: Fuzzy heterogeneous multiattribute decision making method for outsourcing provider selection. Expert Syst. Appl. 41(6), 3047–3059 (2014)

Li, D.F., Wan, S.P.: A fuzzy inhomogenous multi-attribute group decision making approach to solve outsourcing provider selection problems. Knowl. Based Syst. 67, 71–89 (2014)

Yu, G.F., Fei, W., Li, D.F.: A compromise-typed variable weight decision method for hybrid multi-attribute decision making. IEEE Trans. Fuzzy Syst. 27(5), 861–872 (2019)

Yang, Y.Z., Yu, L., Wang, X., Zhou, Z.L., Chen, Y., Kou, T.: A novel method to evaluate node importance in complex networks. Phys. A 526, 121118 (2019)

Liu, M.F., Ren, H.P.: A new intuitionistic fuzzy entropy and application in multi-attribute decision making. Inf. 5(4), 587–601 (2014)

Liu, M.F., Ren, H.P.: A study of multi-attribute decision making based on a new intuitionistic fuzzy entropy measure. Syst. Eng. Theor. Pract. 35(11), 2909–2916 (2015)

Xiong, S.H., Wu, S., Chen, Z.S., Li, Y.L.: Generalized intuitionistic fuzzy entropy and its application in weight determination. Control Decis. 32(5), 845–854 (2017)

Mishra, A.R., Rani, P., Jain, D.: Information measures based TOPSIS method for multicriteria decision making problem in intuitionistic fuzzy environment. Iran. J. Fuzzy Syst. 14(6), 41–63 (2017)

Joshi, R., Kumar, S.: A new parametric intuitionistic fuzzy entropy and its applications in multiple attribute decision making. Int. J. Appl. Comput. Math. 4(1), 52 (2018)

Szmidt, E., Kacprzyk, J.: Amount of information and its reliability in the ranking of Atanassov’s intuitionistic fuzzy alternatives. In: Rakus-Andersson, E., Yager, R.R., Ichalkaranje, N., Jain, L.C. (eds.) Recent advances in decision making, pp. 7–19. Springer, Berlin (2009)

Guo, K.H., Song, Q.: On the entropy for Atanassov’s intuitionistic fuzzy sets: an interpretation from the perspective of amount of knowledge. Appl. Soft Comput. 24, 328–340 (2014)

Wei, C.P., Wang, P., Zhang, Y.Z.: Entropy, similarity measure of interval-valued intuitionistic fuzzy sets and their applications. Inf. Sci. 181(19), 4273–4286 (2011)

Wei, C.P., Zhang, Q.: Entropy measure for interval-valued intuitionistic fuzzy sets and their application in group decision-making. Math. Probl. Eng. 2015, 13 (2015)

Meng, F.Y., Chen, X.H.: Entropy and similarity measure of Atanassov’s intuitionistic fuzzy sets and their application to pattern recognition based on fuzzy measures. Fuzzy Optim. Decis. Mak. 15(1), 75–101 (2016)

Gao, M.M., Sun, T., Zhu, J.J.: Interval-valued intuitionistic fuzzy multiple attribute decision-making method based on revised fuzzy entropy and new scoring function. Control Decis. 31(10), 1757–1764 (2016)

Zhao, Y., Mao, J.J.: New type of interval-valued intuitionistic fuzzy entropy and its application. Comput. Eng. Appl. 52(12), 85–89 (2016)

Chen, X.H., Zhao, C.C., Yang, L.: A group decision-making based on interval-valued intuitionistic fuzzy numbers and its application on social network. Syst. Eng. Theor. Pract. 37(7), 1842–1852 (2017)

Yin, S., Yang, Z., Chen, S.Y.: Interval-valued intuitionistic fuzzy multiple attribute decision making based on improved fuzzy entropy. Syst. Eng. Electron. 40(5), 1079–1084 (2018)

Zhang, Q.S., Xin, H.Y., Liu, F.C., Ye, J., Tang, P.: Some new entropy measures for interval-valued intuitionistic fuzzy sets based on distances and their relationships with similarity and inclusion measures. Inf. Sci. 283, 55–69 (2014)

Bhandari, D., Pal, N.R.: Some new information measures for fuzzy sets. Inf. Sci. 67(3), 209–228 (1993)

Joshi, R., Kumar, S.: Parametric (R,S)-norm entropy on intuitionistic fuzzy sets with a new approach in multiple attribute decision making. Fuzzy Inf. Eng. 9(2), 181–203 (2017)

Joshi, R., Kumar, S.: An intuitionistic fuzzy (δ, γ)-norm entropy with its application in supplier selection problem. Comput. Appl. Math. 37(5), 5624–5649 (2018)

Mishra, A.R., Rani, P.: Biparametric information measures-based TODIM technique for interval-valued intuitionistic fuzzy environment. Arabian J. Sci. Eng. 43(6), 3291–3309 (2018)

Mishra, A.R., Rani, P.: Interval-valued intuitionistic fuzzy WASPAS method: application in reservoir flood control management policy. Group Decis. Negot. 27(6), 1047–1078 (2018)


Cite this article

Wei, AP., Li, DF., Jiang, BQ. et al. The Novel Generalized Exponential Entropy for Intuitionistic Fuzzy Sets and Interval Valued Intuitionistic Fuzzy Sets. Int. J. Fuzzy Syst. 21, 2327–2339 (2019). https://doi.org/10.1007/s40815-019-00743-6
