Abstract

In this paper, we apply the multistep collocation method to linear Volterra integro-differential equations of the third kind. First, the structure of the multistep collocation method is described; then the convergence of the method and its order are investigated. A comparison of the proposed method with the classical one-step collocation method shows that the order of convergence increases at the same computational cost. Numerical examples in the last part of the article illustrate the theoretical results.

1 Introduction

Many problems in mathematics, physics, biology and engineering involve integral equations, and some of them take the form of integro-differential equations. For example, determining the shape of a simply supported sandwich beam leads to a Volterra integro-differential equation (VIDE). It is therefore important to provide suitable numerical methods for such equations. Collocation methods in particular have received considerable attention in the literature. For example, Brunner (2004) applied collocation methods to various classes of Volterra integral and differential equations. Seyed Allaei et al. studied the analytical properties of third kind Volterra integral equations (VIEs) in Seyed Allaei et al. (2015) and applied collocation methods to these equations in Seyed Allaei et al. (2017). A spectral collocation method for a class of nonlinear Volterra Hammerstein integral equations of the third kind was investigated in Laeli Dastjerdi and Shayanfard (2021), and a multistep collocation method for third kind VIEs was studied in Shayanfard et al. (2019).

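In this paper we consider the first-order linear Volterra integro-differential equation of the third kind

$$\begin{aligned} t^{\beta }y'(t)=a_{1}(t)y(t)+g_{1}(t)+\int _{0}^{t}{k(t,x)y(x)dx},~~~~t\in I, \end{aligned}$$ (1)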

where \(\beta >0\), \(a_{1}(t)=t^{\beta }a(t)\), \(g_{1}(t)=t^{\beta }g(t)\), \(k\in C(D) \) and \(g(t),a(t)\in C(I)\), with \(I=[0,T]\) and \(D=\left\{ (t,x):~t\in I,~0\le x\le t \right\} \).

Numerical methods for (1) have also received attention. Recently, a collocation method for (1) was studied by Shayanfard et al. (2020). Cardone and Conte (2013) analyzed the multistep collocation method for a class of VIDEs of the second kind. In the multistep collocation method the solution is approximated by a piecewise polynomial that depends on the approximate solution at the r previous steps. The convergence order of this method is higher than that of classical one-step collocation methods at the same computational cost; see, for example, Cardone and Conte (2013) and Shayanfard et al. (2019). In this paper, we use the multistep collocation method to approximate the solution of the third kind Volterra integro-differential equation (1).

The paper is organized as follows. In Sect. 2, the structure of the multistep collocation method is described and applied to Eq. (1), and the corresponding equations are derived. In Sect. 3, we analyze the convergence of the method by stating and proving a convergence theorem. Finally, numerical examples illustrating the theoretical results are presented in Sect. 4.

2 The multistep collocation method

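We consider the first-order linear Volterra integro-differential equation of the third kind

$$\begin{aligned} t^{\beta }y'(t)=a_{1}(t)y(t)+g_{1}(t)+\int _{0}^{t}{k(t,x)y(x)dx},~~~~t\in I, \end{aligned}$$ (2)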

where \(\beta >0\), \(a_{1}(t)=t^{\beta }a(t)\), \(g_{1}(t)=t^{\beta }g(t)\), \(k\in C(D) \) and \(g(t),a(t)\in C(I)\), with \(I=[0,T]\) and \(D=\left\{ (t,x):~t\in I,~0\le x\le t \right\} \).

The existence of a unique solution of Eq. (2) is guaranteed by the next two theorems, proved in Seyed Allaei et al. (2015) and Jiang and Ma (2013), respectively.

Theorem 2.1

Suppose that \(q\ge 1\) is an integer, \(0<\beta <1\) and \(k(t,x)=x^{\beta +q-1}l(t,x)\), where:

(i) \(\frac{\partial ^{j}k}{\partial t^j} \in C(D),~~~~~j=0,1,\ldots ,q\),

(ii) \(\frac{\partial ^{j}l}{\partial t^j} \in C(D),~~~~~j=0,1,\ldots ,q-1\),

(iii) \(H_{j+1}(t)=\frac{\partial ^{j}l}{\partial t^j}(t,t) \in C^{q-j-1}(I),~~~~~j=0,1,\ldots ,q-1\);

then the operator

$$\begin{aligned} (\Omega _{\beta }y)(t)=\int _{0}^{t}{t^{-\beta }k(t,x)y(x)dx},~~~~t\in I, \end{aligned}$$ (3)

is continuous from \(C^{q-1}(I)\) to \(C^{q}(I)\).
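As a numerical illustration of the smoothing effect described in Theorem 2.1, the Python sketch below approximates \((\Omega _{\beta }y)(t)\) in (3) with a composite 3-point Gauss–Legendre rule and compares it with a closed form. The kernel \(k(t,x)=x^{\beta +q-1}\) (that is, \(l\equiv 1\)) and \(y\equiv 1\) are our own illustrative choices, for which \((\Omega _{\beta }y)(t)=t^{q}/(\beta +q)\), a smooth function even though the kernel is not smooth at \(x=0\) when \(0<\beta <1\); the function name omega_beta is hypothetical.

```python
import math

# 3-point Gauss-Legendre rule on [-1, 1]; exact for polynomials of degree <= 5.
_NODES = (-math.sqrt(3 / 5), 0.0, math.sqrt(3 / 5))
_WEIGHTS = (5 / 9, 8 / 9, 5 / 9)


def omega_beta(k, y, t, beta, panels=200):
    """Approximate (Omega_beta y)(t) = t**(-beta) * int_0^t k(t, x) y(x) dx.

    Composite 3-point Gauss-Legendre quadrature on `panels` equal subintervals
    of [0, t]. The nodes never touch x = 0, so kernels such as x**(beta + q - 1),
    which are not smooth at x = 0, can be evaluated safely.
    For 0 < beta < 1 and continuous k, y the value at t = 0 is the limit 0.
    """
    if t == 0.0:
        return 0.0
    width = t / panels
    total = 0.0
    for p in range(panels):
        centre = (p + 0.5) * width  # midpoint of the p-th panel
        for node, weight in zip(_NODES, _WEIGHTS):
            x = centre + 0.5 * width * node
            total += 0.5 * width * weight * k(t, x) * y(x)
    return t ** (-beta) * total


if __name__ == "__main__":
    beta, q = 0.5, 1
    kernel = lambda t, x: x ** (beta + q - 1)  # hypothetical choice l(t, x) = 1
    for t in (0.25, 0.5, 1.0):
        approx = omega_beta(kernel, lambda x: 1.0, t, beta)
        print(t, approx, t ** q / (beta + q))  # closed form t^q / (beta + q)
```

Increasing `panels` trades run time for accuracy; a graded mesh near \(x=0\) would be a natural refinement for strongly singular kernels.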

Theorem 2.2

If \(a(t),g(t)\in C^{q-1}(I)\) and the assumptions of Theorem 2.1 hold, then Eq. (2) has a unique solution in \(C^{q}(I)\).
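Under an assumption about the precise form of Eq. (2), the regularity part of Theorem 2.2 can be read as a bootstrap argument. Suppose Eq. (2) reads \(t^{\beta }y'(t)=a_{1}(t)y(t)+g_{1}(t)+\int _{0}^{t}k(t,x)y(x)dx\), the form suggested by \(a_{1}=t^{\beta }a\) and \(g_{1}=t^{\beta }g\). Dividing by \(t^{\beta }\) for \(t>0\) and using the operator (3) gives

$$\begin{aligned} y'(t)=a(t)y(t)+g(t)+(\Omega _{\beta }y)(t),~~~~t\in (0,T]. \end{aligned}$$

If \(y\in C^{q-1}(I)\), then \(ay+g\in C^{q-1}(I)\) and, by Theorem 2.1, \(\Omega _{\beta }y\in C^{q}(I)\subset C^{q-1}(I)\); hence \(y'\in C^{q-1}(I)\), that is, \(y\in C^{q}(I)\). This sketch only indicates where the hypotheses on a and g enter; existence and uniqueness are established in the cited references.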

We now construct the multistep collocation method for solving Eq. (2).

Let \(I_h=\left\{ t_n;~~0=t_0<t_1<\ldots <t_N=T\right\} \) be a uniform partition of the interval \(I=[0,T]\) with step size \(h=\frac{T}{N}\), so that \(t_n=nh\).

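We also suppose that \(0<c_1<c_2<\cdots <c_m\le 1\) are m collocation parameters and define the set of collocation points by

$$\begin{aligned} X_h=\left\{ t_{n,j}=t_n+c_jh:~~j=1,\ldots ,m,~~n=0,1,\ldots ,N-1\right\} . \end{aligned}$$ (4)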

We approximate the solution y(t) of Eq. (2) by a function \(P_{N}(t)\) whose restriction to the interval \(\sigma _{n}= (t_n,t_{n+1}]\) is a polynomial of degree at most \(m+r-1\) and depends on the r approximations \(y_{n-k}\simeq y(t_{n-k})\), \(k=0,1,\ldots ,r-1\), computed in the previous steps. In other words,

where \(Y_{n,j}={{P}'_{N}(t_{n,j})}\). The starting values \(y_1, y_2,\ldots , y_r\) needed in (5) may be approximated by an appropriate method such as the classical one-step collocation method. The functions \(\varphi _{k}(s)\) and \(\psi _{j}(s)\) are polynomials of degree \(m+r-1\), determined by interpolation conditions at the points \(t_{n,j}\) and \(t_{n-k}\), namely:

Substituting a given set of collocation parameters \(c_1,c_2,\ldots ,c_m\) into Eq. (5) and using (6) yields the Hermite–Birkhoff interpolation problem:

Since \(P_{N}(t)\) satisfies Eq. (2) at the collocation points \(t_{n,j}\), using (6) we obtain, for \(n=r-1,\ldots ,N-1\),

where

Now, using an appropriate change of variables and the definition of \(P_{N}(t)\) in (5), we can write:

and

Substituting (10) and (11) into (8) leads to

Next, we define the vectors \(Y^{(l)}\in {\mathbb {R}}^{r}\) and \(U^{(l)}, G_{n}, D_{n}^{(l)} \in {\mathbb {R}}^{m}\),

as well as the \((m \times m)\) matrices \(T^{\beta }_{n}\), A and \(A_{n}\), and the \((m \times r)\) matrices \({\overline{B}}_{n}^{(l)}\), \({\widetilde{B}}_{n}^{(l)}\), C and \(C_{n}\), as follows:

Using the above matrices, Eq. (8) can be rewritten in matrix form as follows:

Solving (26) yields the values \(Y_{n,j}\). Note that the values \(y_{0},y_{1},\ldots ,y_{r-1}\) can be obtained by one-step collocation methods. The integrals arising in (26) can be approximated by appropriate quadrature rules; see Cardone and Conte (2013).
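To make the step-by-step structure concrete, the following Python sketch implements the simplest case \(m=1\), \(r=2\) directly, one scalar equation per step, instead of through the matrix form (26). It is not the authors' implementation and rests on assumptions of ours: Eq. (2) is taken as \(t^{\beta }y'(t)=a_{1}(t)y(t)+g_{1}(t)+\int _{0}^{t}k(t,x)y(x)dx\); on \(\sigma _n\) the approximation is written as \(P_N(t_n+sh)=\varphi _0(s)y_n+\varphi _1(s)y_{n-1}+h\psi _1(s)Y_n\), with \(\varphi _k(0)=\delta _{0k}\), \(\varphi _k(-1)=\delta _{1k}\), \(\varphi _k'(c)=0\), \(\psi _1(0)=\psi _1(-1)=0\), \(\psi _1'(c)=1\); the piece on \([t_0,t_1]\) and the value \(y_1\) come from a user-supplied function standing in for a starting procedure; and all integrals use a 3-point Gauss rule. The name two_step_collocation is hypothetical.

```python
import math

# 3-point Gauss-Legendre rule on [0, 1] (exact for polynomials of degree <= 5).
_Q = (0.5 - 0.5 * math.sqrt(3 / 5), 0.5, 0.5 + 0.5 * math.sqrt(3 / 5))
_W = (5 / 18, 8 / 18, 5 / 18)


def _quad(f, lo, hi):
    """Integrate f over [lo, hi] with the 3-point Gauss rule."""
    length = hi - lo
    return length * sum(w * f(lo + q * length) for q, w in zip(_Q, _W))


def two_step_collocation(beta, a1, g1, k, T, N, c, start):
    """Hypothetical 2-step (r = 2), one-point (m = 1) collocation sketch for
        t^beta y'(t) = a1(t) y(t) + g1(t) + int_0^t k(t, x) y(x) dx  (assumed form).

    On (t_n, t_{n+1}]:  P(t_n + s h) = phi0(s) y_n + phi1(s) y_{n-1} + h psi1(s) Y_n,
    with Y_n = P'(t_n + c h). `start` gives y on [t_0, t_1] (starting procedure).
    Returns the list of mesh values y_0, ..., y_N.
    """
    h = T / N
    d = 1 + 2 * c
    # Closed-form basis: values at s = 0, -1 and derivative at s = c.
    phi0 = lambda s: 1 + (2 * c * s - s * s) / d
    phi1 = lambda s: (s * s - 2 * c * s) / d
    psi1 = lambda s: (s + s * s) / d

    ys = [start(0.0), start(h)]  # y_0 and the starting value y_1
    Ys = [None]                  # Ys[i] = Y_i for i >= 1

    def P(i, x):
        """Approximation on [t_i, t_{i+1}]; interval 0 uses the starting function."""
        if i == 0:
            return start(x)
        s = (x - i * h) / h
        return phi0(s) * ys[i] + phi1(s) * ys[i - 1] + h * psi1(s) * Ys[i]

    for n in range(1, N):
        tn = n * h
        t = tn + c * h  # collocation point t_{n,1}

        def known(x):
            """Part of P on [t_n, t_{n+1}] that does not involve the unknown Y_n."""
            s = (x - tn) / h
            return phi0(s) * ys[n] + phi1(s) * ys[n - 1]

        # Memory term over the completed intervals [t_i, t_{i+1}], i < n.
        lag = sum(_quad(lambda x, i=i: k(t, x) * P(i, x), i * h, (i + 1) * h)
                  for i in range(n))
        rhs = a1(t) * known(t) + g1(t) + lag + _quad(lambda x: k(t, x) * known(x), tn, t)
        # Coefficient of Y_n, analogous to the matrix T_n^beta - h(A_n + h B_n^(n)).
        coef = (t ** beta - a1(t) * h * psi1(c)
                - h * _quad(lambda x: k(t, x) * psi1((x - tn) / h), tn, t))
        Ys.append(rhs / coef)
        ys.append(phi0(1.0) * ys[n] + phi1(1.0) * ys[n - 1] + h * psi1(1.0) * Ys[n])
    return ys


if __name__ == "__main__":
    # Manufactured test: beta = 1/2, a1 = t^(1/2), k = 1, exact solution y = e^t.
    for N in (8, 16, 32):
        ys = two_step_collocation(0.5, math.sqrt, lambda t: 1 - math.exp(t),
                                  lambda t, x: 1.0, 1.0, N, 1 / math.sqrt(5), math.exp)
        print(N, max(abs(v - math.exp(i / N)) for i, v in enumerate(ys)))
```

The test problems are manufactured by us and are not Examples 1 and 2 (the constant kernel does not satisfy all hypotheses of Theorem 2.1). With \(\beta =1/2\), \(a_1(t)=t^{1/2}\), \(k\equiv 1\) and \(g_1(t)=2t^{3/2}-t^{5/2}-t^3/3\), the exact solution \(y(t)=t^2\) lies in the local polynomial space, so with exact starting values the sketch should reproduce it up to rounding; with \(g_1(t)=1-e^t\) the exact solution is \(e^t\) and the maximum error should decrease as N is doubled.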

To establish the solvability of the method, we have to show that the matrices \(\left( T^{\beta }_{n}-h(A_{n}+h{\widetilde{B}}_{n}^{(n)})\right) \) are nonsingular; this is done in the following theorem.

Theorem 2.3

Consider Eq. (2) and suppose that \(k\in C(D)\) and \(0<\beta <1\). Then for any choice of collocation parameters \(0<{{c}_{1}}<{{c}_{2}}<\cdots <{{c}_{m}}\le 1\) there exists an \({\bar{h}}>0\) such that all matrices \(\left( T^{\beta }_{n}-h(A_{n}+h{\widetilde{B}}_{n}^{(n)})\right) \) are nonsingular for each \(h\le {\bar{h}}\).

Proof

The proof is similar to that of Theorem 3.1 in Shayanfard et al. (2020). \(\square \)
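A short perturbation argument indicates why a restriction \(h\le {\bar{h}}\) appears and why \(\beta <1\) matters. This is our own sketch, not a transcription of the cited proof, and it assumes that \(T^{\beta }_{n}=\textrm{diag}(t_{n,1}^{\beta },\ldots ,t_{n,m}^{\beta })\) and that \(\Vert A_{n}\Vert _{\infty }\) and \(\Vert {\widetilde{B}}_{n}^{(n)}\Vert _{\infty }\) are bounded by a constant C independent of n and h. Since \(t_{n,j}=t_n+c_jh\ge c_1h\),

$$\begin{aligned} T^{\beta }_{n}-h\left( A_{n}+h{\widetilde{B}}_{n}^{(n)}\right) =T^{\beta }_{n}\left( I_m-h\,(T^{\beta }_{n})^{-1}\left( A_{n}+h{\widetilde{B}}_{n}^{(n)}\right) \right) , \end{aligned}$$

$$\begin{aligned} \left\Vert h\,(T^{\beta }_{n})^{-1}\left( A_{n}+h{\widetilde{B}}_{n}^{(n)}\right) \right\Vert _{\infty }\le h\,(c_1h)^{-\beta }\,C(1+h)=\frac{C(1+h)}{c_1^{\beta }}\,h^{1-\beta }. \end{aligned}$$

For \(0<\beta <1\) the right-hand side tends to zero as \(h\rightarrow 0\), so it is less than 1 for all sufficiently small h. The factor in parentheses is then invertible by the Neumann series, and so is the whole matrix, because \(T^{\beta }_{n}\) is a nonsingular diagonal matrix.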

3 Convergence analysis

In this section, we analyze the convergence properties of the multistep collocation method for Eq. (2).

Theorem 3.1

Consider Eq. (2) with \(y(0)=y_{0}\). Suppose that the conditions of Theorem 2.1 hold and \(a(t), g(t) \in C^{m+r}(I), k \in C^{m+r}(D)\). Let

where \(I_{r-1}\) is the identity matrix of order \(r-1\) and \(O_{r-1,1}\) is the \((r-1)\times 1\) zero vector. If the starting errors arising from the approximation of \(y_1, y_2,\ldots , y_r\) satisfy \(|e(t)|=O(h^{m+r})\) for \(t\in [t_0,t_{r}]\), and the spectral radius of the matrix \({\widetilde{\Phi }}\) is less than 1, then the global error of the multistep collocation method satisfies \({\Vert } e{\Vert }_{\infty }=O(h^{m+r})\).

Proof

By the assumptions of the theorem and Theorem 2.2, Eq. (2) has a unique solution in \(C^{m+r}[0,T]\).

Let y(t) and \(P_{N}(t)\) be the exact and approximate solutions of (2), respectively. Then the error \(e(t)=y(t)-{P_{N}(t)}\) satisfies the following equation for \(t \in X_{h}\):

By Peano’s theorem (Brunner 2004), and as in Lemma 4.1 of Conte and Paternoster (2009), we obtain:

where \(\varphi _{k}(s)\) and \(\psi _{j}(s)\) are the same as in (7) and

Thus by using Eq. (5), we have:

where \(e_{n-k}=e(t_{n-k})\) and \(\epsilon _{n,j}=e'(t_{n,j})\) for \(k=0,1,\ldots ,r-1\), \(j=1,2,\ldots ,m\).

On the other hand, both y(t) and \(P_{N}(t)\) satisfy Eq. (2) at the collocation points, which gives:

and

By subtracting Eqs. (32) and (33), we have:

By the assumption of the theorem on the starting errors, we have:

where \(\Vert \gamma _l(s)\Vert _{\infty } \le M_{1}\) for a constant \(M_{1}>0\). Inserting (35) and (31) into (34), we obtain:

Now, we define the vectors \({\overline{\omega }}_{n}^{(l)} \in {\mathbb {R}}^m\) as:

Then we can rewrite (36) as follows:

where \(E_{l}=\left( e_l,e_{l-1},\ldots ,e_{l-r+1}\right) ^{T}\) and \({\mathcal {E}}_{l}=\left( \epsilon _{l,1},\epsilon _{l,2},\ldots ,\epsilon _{l,m}\right) ^{T}\) for \(l=r,\ldots ,n\), and

Now, by choosing \(s=1\) and \(n=l-1\) in Eq. (31), we obtain the following system of linear equations:

where \({\widetilde{\Psi }}=\begin{bmatrix} \begin{matrix} \psi _{1}(1) & \psi _{2}(1) & \cdots & \psi _{m}(1) \end{matrix} \\ O_{r-1,m} \end{bmatrix}\) and \({\widetilde{Q}}_{m,r,j}=\begin{bmatrix} R_{m,r,j}(1)\\ O_{r-1,1} \end{bmatrix}\).

By solving (40), we conclude:

Substituting (41) into (38), we have:

By Theorem 2.3 and Brunner (2017), for \(0<\beta <1\) and \(h<{\overline{h}}\) there exists a constant \(M_{2}\) such that:

hence

Using (35), we conclude that:

also

where

On the other hand, since \(\rho ({\widetilde{\Phi }})<1\), there is a constant \(D_1\), independent of \(k \in {\mathbb {N}}\), such that:

and

where \(\alpha _1\) and \(\alpha _2\) are constants. From (42), we obtain:

where \(\lambda _1\) is a constant. Gronwall’s inequality then leads to:

Furthermore, from Eq. (41) we deduce:

where

and finally, from Eq. (31),

This inequality, together with (51) and (52), completes the proof. \(\square \)

4 Numerical experiments

In this section, we apply the multistep collocation method to two examples in order to verify the theoretical results numerically. The numerical order of convergence is defined by:

and the errors are compared via \({{\Vert {{e}_{N}} \Vert }_{\infty }}=\sup _{1\le i\le N}\,\left| {{e}_{N}}({{t}_{i}}) \right| \).
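The observed orders can be computed from the max-norm errors on successively doubled meshes. The Python sketch below assumes the common definition \(p_N=\log _2\left( \Vert e_{N/2}\Vert _{\infty }/\Vert e_N\Vert _{\infty }\right) \); the formula used for the tables may be indexed differently, and the function names observed_orders and max_error are hypothetical.

```python
import math


def observed_orders(errors_by_N):
    """Observed orders p_N = log2(||e_{N/2}|| / ||e_N||) for meshes with doubled N.

    `errors_by_N` maps N to the max-norm error ||e_N||_inf. Only those N whose
    half N/2 is also present receive an order.
    """
    orders = {}
    for N, err in sorted(errors_by_N.items()):
        coarse = errors_by_N.get(N // 2) if N % 2 == 0 else None
        if coarse is not None and err > 0:
            orders[N] = math.log2(coarse / err)
    return orders


def max_error(exact, values, T, N):
    """||e_N||_inf = max_{1 <= i <= N} |y(t_i) - y_i| on the uniform mesh t_i = i T / N."""
    return max(abs(exact(i * T / N) - values[i]) for i in range(1, N + 1))


if __name__ == "__main__":
    # Illustrative error values only (not taken from Tables 1 and 2).
    print(observed_orders({8: 2.0 ** -10, 16: 2.0 ** -13, 32: 2.0 ** -16}))  # {16: 3.0, 32: 3.0}
```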

Example 1

We consider the equation

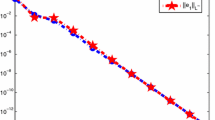

where \(g_{1}(t)=\frac{3}{2}t-\frac{2}{9}{t^{\frac{9}{2}}}\). The exact solution of this equation is \(y(t)=\sqrt{t^3}\). We applied the 2-step collocation method with \(m=1\) for different values of the collocation parameter c; the results are shown in Table 1. The absolute error on [0, 1] for the arbitrary choice \(c=\frac{1}{\sqrt{5}}\) and \(N=16\) is shown in Fig. 1. Note that when \(m=1\), the polynomials \(\varphi _i,~i=0,1\), and \(\psi _1\) are as follows:

Fig. 1 Absolute error for Example 1 with \(N=16\) and \(c=\frac{1}{\sqrt{5}}\)

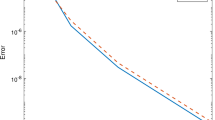

Fig. 2 Absolute error for Example 2 with Gauss points and \(N=8\)

Example 2

We applied the 2-step collocation method with \(m=2\) to the Volterra integro-differential equation

where \(g_1(t)=\frac{7}{3}{t}^{\frac{11}{6}}-\frac{9}{208}{t}^{\frac{16}{3}}\) and the exact solution is \(y(t)={t}^{\frac{7}{3}}\). To approximate the solution of this equation we used the two-point Radau II parameters \(c_1=\frac{1}{3}\), \(c_2=1\), the Gauss points \(c_1=\frac{3-\sqrt{3}}{6}\), \(c_2=\frac{3+\sqrt{3}}{6}\), and the arbitrary points \(c_1=\frac{3}{8}\), \(c_2=\frac{7}{8}\). The polynomials \(\varphi _i\), \(i=0,1\), and \(\psi _j\), \(j=1,2\), differ from case to case; for instance, for the Gauss points \(c_1=\frac{3-\sqrt{3}}{6}\), \(c_2=\frac{3+\sqrt{3}}{6}\) they are as follows:

The results are presented in Table 2. The absolute error for \(N=8\) with the Gauss points as collocation parameters is plotted in Fig. 2.
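Because \(\varphi _k\) and \(\psi _j\) change with the collocation parameters (Radau II, Gauss or arbitrary points), it can be convenient to compute them numerically. The Python sketch below assumes the local variable s with \(t=t_n+sh\), previous mesh points at \(s=0,-1,\ldots ,-(r-1)\), and the Hermite–Birkhoff conditions \(\varphi _k(-i)=\delta _{ik}\), \(\varphi _k'(c_j)=0\), \(\psi _j(-i)=0\), \(\psi _j'(c_l)=\delta _{jl}\); these conventions are our assumption and may differ from the normalization behind the polynomials listed above. The names hb_basis and poly_eval are hypothetical.

```python
import math


def hb_basis(c, r):
    """Coefficients of phi_0..phi_{r-1} and psi_1..psi_m (hypothetical convention).

    Each basis function is a polynomial of degree m + r - 1 in s (monomial
    coefficients, lowest degree first) satisfying, for i = 0..r-1 and j, l = 1..m,
        phi_k(-i) = delta_{ik},   phi_k'(c_j) = 0,
        psi_j(-i) = 0,            psi_j'(c_l) = delta_{jl}.
    Returns (phi, psi), two lists of coefficient lists.
    """
    m = len(c)
    n = m + r
    # Value conditions at s = -i, then derivative conditions at s = c_j.
    rows = [[float(-i) ** p for p in range(n)] for i in range(r)]
    rows += [[p * cj ** (p - 1) if p > 0 else 0.0 for p in range(n)] for cj in c]
    # Gauss-Jordan elimination with partial pivoting on [rows | I]; afterwards the
    # right block is rows^{-1}, whose q-th column is the q-th basis polynomial.
    aug = [row + [1.0 if q == i else 0.0 for q in range(n)] for i, row in enumerate(rows)]
    for col in range(n):
        piv = max(range(col, n), key=lambda i: abs(aug[i][col]))
        if abs(aug[piv][col]) < 1e-14:
            raise ValueError("Hermite-Birkhoff problem is not poised for these c_j")
        aug[col], aug[piv] = aug[piv], aug[col]
        pivot = aug[col][col]
        aug[col] = [v / pivot for v in aug[col]]
        for i in range(n):
            if i != col:
                factor = aug[i][col]
                aug[i] = [a - factor * b for a, b in zip(aug[i], aug[col])]
    inverse = [row[n:] for row in aug]
    basis = [[inverse[p][q] for p in range(n)] for q in range(n)]
    return basis[:r], basis[r:]


def poly_eval(coeffs, s):
    """Evaluate sum_p coeffs[p] * s**p with Horner's rule."""
    result = 0.0
    for a in reversed(coeffs):
        result = result * s + a
    return result


if __name__ == "__main__":
    gauss = [(3 - math.sqrt(3)) / 6, (3 + math.sqrt(3)) / 6]  # Gauss points, m = 2
    phi, psi = hb_basis(gauss, r=2)
    # Under the assumed form of P_N, values at s = 1 enter y_{n+1} = P_N(t_{n+1}).
    print([poly_eval(p, 1.0) for p in phi], [poly_eval(p, 1.0) for p in psi])
```

As a check, for \(m=1\), \(r=2\) the same conditions give by hand \(\varphi _0(s)=1+\frac{2cs-s^2}{1+2c}\), \(\varphi _1(s)=\frac{s^2-2cs}{1+2c}\) and \(\psi _1(s)=\frac{s+s^2}{1+2c}\), which the sketch reproduces; since constants satisfy the conditions with zero derivatives, \(\sum _k\varphi _k\equiv 1\) for any poised choice of parameters.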

5 Conclusions

In this paper we applied a multistep collocation method to a linear Volterra integro-differential equation of the third kind with an initial condition. Our results show that, under suitable conditions, the method has uniform order \(m+r-1\), and that increasing the number of collocation parameters and steps yields better approximations of the solution. The numerical experiments also indicate that specific choices of the collocation parameters lead to better results. However, the orders of convergence observed in the examples are slightly lower than the theoretical ones; this may be caused by the error in approximating the integrals in the system, which we intend to investigate in future work.

References

Brunner H (2004) Collocation methods for Volterra integral and related functional equations. Cambridge University Press, Cambridge

Brunner H (2017) Volterra integral equations, an introduction to theory and applications. Cambridge University Press, Cambridge

Cardone A, Conte D (2013) Multistep collocation methods for Volterra integro-differential equations. Appl Math Comput 221:770–785

Conte D, Paternoster B (2009) Multistep collocation methods for Volterra integral equations. Appl Numer Math 59:1721–1736

Jiang Y, Ma J (2013) Spectral collocation methods for Volterra integro-differential equations with noncompact kernels. J Comput Appl Math 244:115–124

Laeli Dastjerdi H, Shayanfard F (2021) A numerical method for the solution of nonlinear Volterra Hammerstein integral equations of the third kind. Appl Numer Math 170:353–363

Seyed Allaei S, Yang ZW, Brunner H (2015) Existence, uniqueness and regularity of solutions for a class of third kind Volterra integral equations. J Integral Equ Appl 27:325–342

Seyed Allaei S, Yang ZW, Brunner H (2017) Collocation methods for third kind VIEs. Numer Funct Anal Optim 37:1104–1124

Shayanfard F, Laeli Dastjerdi H, Maalek Ghaini FM (2019) A numerical method for solving Volterra integral equations of the third kind by multistep collocation method. Comput Appl Math 38:1–13

Shayanfard F, Laeli Dastjerdi H, Maalek Ghaini FM (2020) Collocation method for approximate solution of Volterra integro-differential equations of the third kind. Appl Numer Math 150:139–148

Ethics declarations

Conflict of interest

This work does not have any conflicts of interest.

Additional information

Communicated by Donatella Occorsio.

About this article

Cite this article

Shayanfard, F., Dastjerdi, H.L. A multistep collocation method for approximate solution of Volterra integro-differential equations of the third kind. Comp. Appl. Math. 43, 176 (2024). https://doi.org/10.1007/s40314-024-02635-4

Keywords

- Volterra integro-differential equation

- Multistep collocation

- Hermite Birkhoff interpolation

- Convergence