Abstract

In this paper, a multistep collocation method for solving Volterra integral equations of the third kind is presented and analyzed. The structure of the method, its solvability and its convergence are investigated. Moreover, some numerical examples are given to show the applicability of the method and to confirm the theoretical results.

1 Introduction

This work is concerned with the numerical solution of linear Volterra integral equations of the third kind of the form

$$x^{\beta}y(x)=f(x)+\int_{0}^{x}(x-t)^{-\alpha}k(x,t)\,y(t)\,dt,\quad x\in I=[0,T],$$(1)

where \(0\le \alpha <1\), \(0<\beta \le 1\), \(f(x)={x^{\beta }}g(x)\) with \(g(x)\in C(I)\), k is a real continuous function defined on \(D=\left\{ (x,t):0\le t\le x\le T \right\} \) and y(x) is an unknown function.

Volterra integral equations (VIEs) of the third kind arise in the modeling of numerous problems in science and engineering, such as heat transfer, population growth and shock wave problems (Brunner 2017). Designing appropriate methods for solving these equations is therefore of great importance.

Collocation methods are among the most suitable methods for solving integral equations. The idea is to approximate the solution of the integral equation by a linear combination of suitable functions, usually piecewise polynomials belonging to a finite-dimensional space, and to require the equation to hold exactly at a finite set of collocation points. This yields an algebraic system whose solution determines the approximating piecewise polynomial. Single-step collocation methods for VIEs of the second kind have been discussed in many works (see Brunner 2004 and the references therein).

The existence, uniqueness and regularity of solutions of Eq. (1) have been studied in Allaei et al. (2015). More recently, single-step collocation methods for the linear equation (1) were developed in Allaei et al. (2017), and collocation methods for nonlinear Volterra integral equations of the third kind were analyzed in Song et al. (2019). General multistep collocation methods for second-kind Volterra integral equations were established in Conte and Paternoster (2009), and multistep Hermite collocation methods were studied in Fazeli et al. (2012).

It follows from Allaei et al. (2015) that, under certain conditions on \(\alpha , \beta \) and k, the integral operator

$$({V}_{k,\beta,\alpha}y)(x)=x^{-\beta}\int_{0}^{x}(x-t)^{-\alpha}k(x,t)\,y(t)\,dt$$(2)

is compact, and therefore the algebraic system arising from the collocation method is uniquely solvable for all sufficiently small mesh diameters. In the noncompact case, however, the solvability of this system on a uniform or graded mesh is not guaranteed in general; for this reason, Allaei et al. (2017) used a modified graded mesh to solve Eq. (1) with a noncompact operator.

Our aim is to apply a multistep collocation method to approximate the solution of VIEs of the third kind when \({{V}_{k,\beta ,\alpha }}\) is compact.

To illustrate how VIEs of the third kind arise, consider the first-kind VIE

$$\int_{0}^{x}H(x,t)\,y(t)\,dt=f(x),\quad x\in[0,T].$$(3)

Differentiating with respect to x, we obtain

$$H(x,x)\,y(x)+\int_{0}^{x}H_{x}(x,t)\,y(t)\,dt=f'(x).$$(4)

If \(H(x,x)\ne 0\) for all \(x\in [0,T]\), then dividing (4) by H(x, x) yields the VIE of the second kind

$$y(x)=g(x)+\int_{0}^{x}k(x,t)\,y(t)\,dt,$$(5)

where \(g(x)=\frac{f'(x)}{H(x,x)}\) and \(k(x,t)=\frac{-H_{x}(x,t)}{H(x,x)}\). If, on the other hand, H(x, x) vanishes on a non-empty proper subset of [0, T], then (4) is said to be a VIE of the third kind.
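A concrete instance of this construction (our own illustration, not taken from the cited works): take \(H(x,t)=t+xt-t^{2}\) in the first-kind equation \(\int_0^x H(x,t)\,y(t)\,dt=f(x)\). Then \(H(x,x)=x\) and \(H_x(x,t)=t\), so differentiation gives

$$x\,y(x)+\int_{0}^{x}t\,y(t)\,dt=f'(x),\qquad\text{i.e.}\qquad x\,y(x)=f'(x)-\int_{0}^{x}t\,y(t)\,dt.$$

Since \(H(x,x)=x\) vanishes only at \(x=0\), this is a VIE of the third kind of the form (1) with \(\alpha=0\), \(\beta=1\), \(k(x,t)=-t\) (so that \(k(0,0)=0\)) and \(f'\) in place of f.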

The rest of this paper is organized as follows. In Sect. 2, we first present some preliminary theorems and then apply the multistep collocation method to the Volterra integral equation of the third kind (1). The solvability of the method is discussed in Sect. 3, Sect. 4 is devoted to its convergence, and in Sect. 5 the method is evaluated on some examples.

2 Implementation of the method

In this section, we first recall some theorems that give conditions under which the multistep collocation method can be applied to (1); we then describe the method and apply it to such equations.

Theorem 2.1

(Allaei et al. 2015) Suppose that in (1), \(\alpha =0\), \(0<\beta <1\) and \(k\in C(D)\). Then the integral operator \({{V}_{k,\beta ,0 }}\) is compact. Furthermore, if for some integer \(m\ge 1\), \(g\in C^m(I)\) and the kernel k has the form \(k(x,t)={t}^{\beta +m-1}h(x,t)\), where the following conditions hold:

(i) \(\frac{{{\partial }^{j}}k}{\partial {{x}^{j}}}\in C(D)\) for \(j=0,1,\ldots ,m\),

(ii) \(\frac{{{\partial }^{j}}h}{\partial {{x}^{j}}}\in C(D)\) for \(j=0,1,\ldots ,m-1\),

(iii) \({{H}_{j+1}}(x)=\frac{{{\partial }^{j}}h}{\partial {{x}^{j}}}(x,x) \in C^{m-j-1}(I)\) for \(j=0,1,\ldots ,m-1\),

then Eq. (1) has a unique solution in \(C^m(I)\).

Theorem 2.2

(Allaei et al. 2015) In Eq. (1), let \(0\le \alpha <1\) and \(\alpha +\beta =1\). Then, for a continuous kernel \(k\in C(D)\) with \(k(0,0)=0\), \({{V}_{k,\beta ,\alpha }}\) is a compact operator. Moreover, if \(g(x)\in C^m(I)\) and \(k\in C^m(D)\), then (1) has a unique solution in \(C^m(I)\).

In the rest of the paper, we assume that the conditions of Theorem 2.1 or Theorem 2.2 hold.

We now apply a multistep collocation method to (1) in one of the cases covered by the above theorems. To this end, let \(h=\frac{T}{N}\) for some \(N\in {\mathbb {N}}\) and consider the uniform mesh

$$I_h=\{x_n=nh:\ n=0,1,\ldots ,N\}.$$

Now, we approximate the solution of (1) in the space of all discontinuous piecewise polynomials with degrees at most \(m-1\)

where \({{\sigma }_{n}}=({{x}_{n}},{{x}_{n+1}})\).

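Suppose that the set of collocation points is given by

$$X_h=\{x_{n,j}=x_n+c_jh:\ 0<c_1<\cdots <c_m\le 1,\ n=0,1,\ldots ,N-1\}.$$

The approximate solution \({u}_{h}\) on \(({{x}_{n}},{{x}_{n+1}}]\) is then defined by

$$u_h(x_n+vh)=\sum_{k=0}^{r-1}\phi_k(v)\,y_{n-k}+\sum_{j=1}^{m}\psi_j(v)\,U_{n,j},\quad v\in(0,1],$$(8)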

where \({{y}_{n-k}}={{u}_{h}}({{x}_{n-k}})\), \({{U}_{n,j}}={{u}_{h}}({{x}_{n,j}})\), \({{\phi }_{k}}(v)\) and \({{\psi }_{j}}(v)\) are polynomials of degree \(m+r-1\), which are determined by collocation conditions at the points \({{x}_{n-k}}\), \(k=0,1,\ldots ,r-1\) and \({{x}_{n,j}}\), \(j=1,2,\ldots ,m\).

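Using the equalities \({{y}_{n-k}}={{u}_{h}}({{x}_{n-k}})\) and \({{U}_{n,j}}={{u}_{h}}({{x}_{n,j}})\) in (8), we obtain the following conditions, which form a linear system for the coefficients of \(\phi_k\) and \(\psi_j\) (Conte and Paternoster 2009):

$$\phi_k(-l)=\delta_{kl},\quad \psi_j(-l)=0,\quad \phi_k(c_i)=0,\quad \psi_j(c_i)=\delta_{ij},\qquad k,l=0,\ldots ,r-1,\ \ i,j=1,\ldots ,m.$$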
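Because these conditions prescribe the values of \(\phi_k\) and \(\psi_j\) at the m+r distinct points \(v=0,-1,\ldots,-(r-1),c_1,\ldots,c_m\), each basis polynomial is a Lagrange basis polynomial on these nodes. The following Python sketch (our own illustration; the name `multistep_basis` is hypothetical) builds them in Lagrange form rather than solving for monomial coefficients, which avoids an ill-conditioned Vandermonde matrix.

```python
import numpy as np


def multistep_basis(c, r):
    """Return lists phi (length r) and psi (length m) of polynomials of degree m + r - 1.

    Lagrange form on the nodes v = 0, -1, ..., -(r-1), c_1, ..., c_m, so that
    phi_k(-l) = delta_kl, phi_k(c_i) = 0, psi_j(-l) = 0 and psi_j(c_i) = delta_ij."""
    nodes = [-float(l) for l in range(r)] + [float(ci) for ci in c]

    def basis(idx):
        def p(v):
            v = np.asarray(v, dtype=float)
            out = np.ones_like(v)
            for l, z in enumerate(nodes):
                if l != idx:
                    out = out * (v - z) / (nodes[idx] - z)
            return out
        return p

    phi = [basis(k) for k in range(r)]
    psi = [basis(r + j) for j in range(len(c))]
    return phi, psi


# Three-step method (r = 3) with c_1 = 3/5, c_2 = 9/10, as in Example 1.
phi, psi = multistep_basis([0.6, 0.9], 3)
print([float(p(1.0)) for p in phi])   # the values phi_k(1)
```

Since the basis reproduces constants, \(\sum_k\phi_k(v)+\sum_j\psi_j(v)=1\) for every v, which is a cheap consistency check.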

According to the collocation principle, the function \(u_h\) in (8) must satisfy (1) exactly at the collocation points \({{x}_{n,j}}\), which gives the following system of equations:

in which

and

Inserting (8) into (9), we obtain

Now, let

and define the matrices \({\bar{B}}_{n}^{(d)}\in {{{\mathbb {R}}}^{m\times r}}\) and \({\tilde{B}}_{n}^{(d)}\in {{{\mathbb {R}}}^{m\times m}}\) by

for any \(1\le i\le m\), \(0\le k\le r-1\).

for every \(1\le {i,j}\le m\).

Then (10) can be written in the matrix form

Solving the above system yields the values \({{U}_{n,j}}\), \(j=1,\ldots ,m\), and hence \(u_h\) on \(\sigma_n\). The starting values \({{y}_{1}},{{y}_{2}},\ldots ,{{y}_{r}}\) can be obtained by a classical single-step method.
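The whole step-by-step procedure can be sketched in Python as follows. This is a hedged illustration under our own assumptions, not the authors' implementation: the weakly singular integrals are computed with the substitution \(u=(x-t)^{1-\alpha}\) followed by Gauss-Legendre quadrature (our choice), the basis polynomials are used in Lagrange form, and the hypothetical argument `y_start` supplies \(u_h\) on the starting interval \([0,x_{r-1}]\), a role played in the text by a classical single-step method (the sketch indexes starting values from \(y_0\)). At each step the \(m\times m\) matrix assembled from the collocation conditions at \(x_{n,i}\) plays the role of \(T_n^{\beta}-h^{1-\alpha}\tilde B_n^{(n)}\).

```python
import numpy as np

# 20-point Gauss-Legendre rule on [-1, 1] (exact for polynomials of degree <= 39).
GL_X, GL_W = np.polynomial.legendre.leggauss(20)


def lagrange(nodes, idx, v):
    """Lagrange basis polynomial attached to nodes[idx], evaluated at v."""
    v = np.asarray(v, dtype=float)
    out = np.ones_like(v)
    for l, z in enumerate(nodes):
        if l != idx:
            out = out * (v - z) / (nodes[idx] - z)
    return out


def wint(G, a, b, x, alpha):
    """int_a^b (x - t)^(-alpha) G(t) dt for a < b <= x.

    With u = (x - t)^(1 - alpha) and p = 1 / (1 - alpha) this becomes
    p * int G(x - u^p) du over [(x - b)^(1 - alpha), (x - a)^(1 - alpha)]."""
    p = 1.0 / (1.0 - alpha)
    lo = max(x - b, 0.0) ** (1.0 - alpha)
    hi = (x - a) ** (1.0 - alpha)
    u = 0.5 * (hi - lo) * GL_X + 0.5 * (hi + lo)
    return p * 0.5 * (hi - lo) * np.dot(GL_W, G(x - u ** p))


def multistep_collocation(f, k, alpha, beta, T, N, c, r, y_start):
    """r-step collocation sketch for x^beta y(x) = f(x) + int_0^x (x-t)^(-alpha) k(x,t) y(t) dt.

    y_start(t) (hypothetical) gives u_h on [0, x_{r-1}]; returns mesh and y_n = u_h(x_n)."""
    h, m = T / N, len(c)
    nodes = [-float(q) for q in range(r)] + [float(cj) for cj in c]
    x = h * np.arange(N + 1)
    y = np.zeros(N + 1)
    y[:r] = y_start(x[:r])
    U = {}  # U[n][j] = u_h(x_n + c_{j+1} h)

    def u_piece(d, t):
        # u_h on sigma_d: sum_k phi_k(s) y_{d-k} + sum_j psi_j(s) U_{d,j}, s = (t - x_d) / h
        s = (t - x[d]) / h
        val = sum(lagrange(nodes, q, s) * y[d - q] for q in range(r))
        return val + sum(lagrange(nodes, r + j, s) * U[d][j] for j in range(m))

    for n in range(r - 1, N):
        A, rhs = np.zeros((m, m)), np.zeros(m)
        for i in range(m):
            xi = x[n] + c[i] * h
            # Lag term: starting interval [0, x_{r-1}] and the completed pieces sigma_d, d < n.
            lag = sum(wint(lambda t: k(xi, t) * y_start(t), x[d], x[d + 1], xi, alpha)
                      for d in range(r - 1))
            lag += sum(wint(lambda t, d=d: k(xi, t) * u_piece(d, t), x[d], x[d + 1], xi, alpha)
                       for d in range(r - 1, n))
            # Increment term on [x_n, x_{n,i}]: known phi_k part and unknown psi_j part.
            known = sum(y[n - q] * wint(lambda t, q=q: k(xi, t) * lagrange(nodes, q, (t - x[n]) / h),
                                        x[n], xi, xi, alpha) for q in range(r))
            for j in range(m):
                A[i, j] = -wint(lambda t, j=j: k(xi, t) * lagrange(nodes, r + j, (t - x[n]) / h),
                                x[n], xi, xi, alpha)
            A[i, i] += xi ** beta
            rhs[i] = f(xi) + lag + known
        U[n] = np.linalg.solve(A, rhs)
        y[n + 1] = u_piece(n, x[n + 1])
    return x, y


# Example 2 data: alpha = 0, beta = 1, k(x, t) = t, exact y(x) = x, hence f(x) = x^2 - x^3/3.
# Here c = (3/5, 9/10) from Example 1 is used, and the exact solution serves as y_start.
x, y = multistep_collocation(lambda s: s**2 - s**3 / 3, lambda s, t: t, 0.0, 1.0,
                             1.0, 16, [0.6, 0.9], 3, lambda t: t)
print(np.max(np.abs(y - x)))
```

Because \(y(x)=x\) lies in the local polynomial space and all integrals in this run are evaluated exactly by the quadrature, the printed error should be at the level of rounding error; for a non-polynomial solution such as that of Example 1, it instead reflects the discretization error.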

3 Solvability of the method

We now prove the solvability of the linear system (14) arising from the multistep collocation method. To this end, we recall that the corresponding operators are compact.

Theorem 3.1

Suppose that \(k\in C(D)\) and \(g\in C(I)\), and let \(0\le \alpha <1\) and \(\beta >0\) satisfy either \(\alpha +\beta <1\), or \(\alpha +\beta =1\) with \(k(0,0)=0\). Then there exists an \({\bar{h}}>0\) such that, for any \(0<h\le {\bar{h}}\), the matrix \(\left( {T^\beta _{n}}-{h}^{1-\alpha }{\tilde{B}}_{n}^{(n)} \right) \) is invertible for every n.

Proof

Since \(c_1> 0\), the diagonal matrix \({{T}^{\beta }_{n}}=\mathrm{diag}(x_{n,1}^{\beta },\ldots ,x_{n,m}^{\beta })\) is invertible. By the Neumann lemma (Atkinson 1989), it suffices to prove that there exists an \({\bar{h}}>0\) such that \({{\left\| {{h}^{1-\alpha }}T_{n}^{-\beta }{\tilde{B}}_{n}^{(n)} \right\| }_{\infty }}<1\) for any \(0<h\le {\bar{h}}\). By the definitions of \({\tilde{B}}_{n}^{(n)}\) and \(T_{n}^{\beta }\), we have

and therefore

where \({\gamma } =\max _{0\le v\le {{c}_{m}}}\,\sum \nolimits _{j=1}^{m}{\left| {{\psi }_{j}}(v) \right| }\).

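We now consider the following two cases.

(a) If \(\alpha +\beta \in (0,1)\), then, since \(x_{n,1}=x_n+c_1h\ge c_1h\), it follows from (16) that

$$\begin{aligned} {{h}^{1-\alpha }}{{\left\| T_{n}^{-\beta }{\tilde{B}}_{n}^{(n)} \right\| }_{\infty }}&\le {{h}^{1-\alpha }}x_{n,1}^{-\beta }{{\left\| k \right\| }_{\infty }} \frac{{\gamma }c_{m}^{1-\alpha }}{1-\alpha } \\&\le \frac{{{h}^{1-\alpha -\beta }}c_{m}^{1-\alpha }{{\left\| k \right\| }_{\infty }}{\gamma } }{(1-\alpha )c_{1}^{\beta }}. \end{aligned}$$(17)

So, by choosing \({\bar{h}}<{{\left( \frac{(1-\alpha )c_{m}^{\alpha -1}c_{1}^{\beta }}{2{{\left\| k \right\| }_{\infty }}{\gamma } } \right) }^{\frac{1}{1-\alpha -\beta }}}\), we obtain \({{h}^{1-\alpha }}{{\left\| T_{n}^{-\beta }{\tilde{B}}_{n}^{(n)} \right\| }_{\infty }}<\frac{1}{2}\) for all \(0<h\le {\bar{h}}\).

(b) If \(\alpha +\beta =1\) and \(k(0,0)=0\), then, by the continuity of k, there exists \(\varepsilon >0\) such that \(\underset{0\le t\le x\le \varepsilon }{\mathop {\sup }}\,\left| k(x,t) \right| <\frac{c_{1}^{1-\alpha }c_{m}^{\alpha -1}(1-\alpha )}{2{\gamma } }\). Hence, if \(x_{n+1}\le \varepsilon \), then (16) and \(\beta =1-\alpha \) give

$$h^{1-\alpha }\left\| T_{n}^{-\beta }\tilde{B}_{n}^{(n)}\right\| _{\infty }\le h^{1-\alpha }(c_{1}h)^{-\beta }\frac{\gamma c_{m}^{1-\alpha }}{1-\alpha }\sup _{0\le t\le x\le \varepsilon }|k(x,t)| =\frac{c_{1}^{\alpha -1}c_{m}^{1-\alpha }\gamma }{1-\alpha }\sup _{0\le t\le x\le \varepsilon }|k(x,t)|<\frac{1}{2},$$

while if \(x_{n}\ge \frac{\varepsilon }{2}\), then

$$h^{1-\alpha }\left\| T_{n}^{-\beta }\tilde{B}_{n}^{(n)}\right\| _{\infty }\le h^{1-\alpha }\left( \frac{\varepsilon }{2}\right) ^{-\beta }\frac{\gamma c_{m}^{1-\alpha }\left\| k\right\| _{\infty }}{1-\alpha } =\left( \frac{2c_{m}h}{\varepsilon }\right) ^{1-\alpha }\frac{\gamma \left\| k\right\| _{\infty }}{1-\alpha }.$$

If \(h\le \frac{\varepsilon }{2}\), every n falls into one of these two situations, since \(x_{n+1}>\varepsilon \) implies \(x_n=x_{n+1}-h>\frac{\varepsilon }{2}\). Therefore, choosing \({\bar{h}}=\min \left\{ \frac{\varepsilon }{2},\frac{\varepsilon }{2{{c}_{m}}}{{\left( \frac{1-\alpha }{2{\gamma } {{\left\| k \right\| }_{\infty }}} \right) }^{\frac{1}{1-\alpha }}} \right\} \) makes both bounds at most \(\frac{1}{2}\) for all \(0<h\le {\bar{h}}\), which completes the proof. \(\square \)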
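The constants in case (a) can be evaluated for a concrete method. The sketch below (our own; all names are hypothetical) estimates \(\gamma\) by dense sampling of \(\sum_j|\psi_j(v)|\) on \([0,c_m]\), with the \(\psi_j\) taken in Lagrange form on the nodes \(0,-1,\ldots,-(r-1),c_1,\ldots,c_m\), and returns the right-hand side of the condition on \(\bar h\) in case (a). Sampling can only underestimate the maximum, so a small safety margin on \(\bar h\) is advisable.

```python
import numpy as np


def psi_basis(c, r):
    """psi_j: Lagrange basis polynomials for the nodes c_j on 0, -1, ..., -(r-1), c_1, ..., c_m."""
    nodes = [-float(l) for l in range(r)] + [float(ci) for ci in c]

    def make(idx):
        def p(v):
            out = np.ones_like(v, dtype=float)
            for l, z in enumerate(nodes):
                if l != idx:
                    out = out * (v - z) / (nodes[idx] - z)
            return out
        return p

    return [make(r + j) for j in range(len(c))]


def gamma_estimate(c, r, samples=10001):
    """Sampled estimate of gamma = max_{0 <= v <= c_m} sum_j |psi_j(v)| (may slightly underestimate)."""
    v = np.linspace(0.0, max(c), samples)
    return float(np.max(sum(np.abs(p(v)) for p in psi_basis(c, r))))


def h_bar_case_a(alpha, beta, c, k_sup, gamma):
    """Right-hand side of the case (a) condition on h_bar (alpha + beta < 1, k_sup = ||k||_inf > 0).

    Every h strictly below this value gives h^(1-alpha) ||T_n^(-beta) B_n^(n)|| < 1/2 via (17)."""
    c1, cm = min(c), max(c)
    base = (1.0 - alpha) * cm ** (alpha - 1.0) * c1 ** beta / (2.0 * k_sup * gamma)
    return base ** (1.0 / (1.0 - alpha - beta))


# Theorem 2.1 setting with alpha = 0, beta = 1/2, ||k|| = 1, and c = (3/5, 9/10), r = 3.
g = gamma_estimate([0.6, 0.9], 3)
print(g, h_bar_case_a(0.0, 0.5, [0.6, 0.9], 1.0, g))
```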

4 Convergence analysis

In this section, we investigate the order of convergence of the multistep collocation solution to the exact solution.

Theorem 4.1

Suppose that the hypotheses of Theorem 2.1 or Theorem 2.2 hold, that \(k\in {{C}^{m+r}}(D)\) and \(g\in {{C}^{m+r}}[0,T]\), and let \(e(x)=y(x)-{{u}_{h}}({x})\). If the starting error satisfies

and the spectral radius of the matrix

is less than 1, then \(\|e\|_{\infty }=O({{h}^{m+r}})\).
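The condition \(\rho(A)<1\) can be checked numerically for given collocation parameters. The form of A used here is an assumption: we take it to be the \(r\times r\) companion matrix with first row \((\phi_0(1),\ldots,\phi_{r-1}(1))\) and ones on the subdiagonal, which is our reading of the recursion obtained by setting \(v=1\) in the local error representation, in the spirit of Conte and Paternoster (2009); it may differ from the authors' exact definition. The function names are hypothetical.

```python
import numpy as np


def phi_at_one(c, r):
    """phi_k(1), k = 0..r-1, for the Lagrange basis on 0, -1, ..., -(r-1), c_1, ..., c_m."""
    nodes = [-float(l) for l in range(r)] + [float(ci) for ci in c]
    vals = []
    for k in range(r):
        val = 1.0
        for l, z in enumerate(nodes):
            if l != k:
                val *= (1.0 - z) / (nodes[k] - z)
        vals.append(val)
    return vals


def companion_spectral_radius(c, r):
    """Spectral radius of the ASSUMED form of A: companion matrix with first row phi_k(1)."""
    A = np.zeros((r, r))
    A[0, :] = phi_at_one(c, r)
    A[1:, :-1] = np.eye(r - 1)      # ones on the subdiagonal shift the history vector
    return float(np.max(np.abs(np.linalg.eigvals(A))))


print(companion_spectral_radius([0.6, 0.9], 3))
```

For \(c=(3/5,9/10)\) and \(r=3\) this quantity lies below 1, so under the stated assumption the hypothesis of Theorem 4.1 holds for that choice.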

Proof

By the hypotheses of the theorem and the results of Allaei et al. (2015), Eq. (1) has a unique solution in \(C^{m+r}[0,T]\), and by Peano’s theorem (Brunner 2004), for any \(v\in (0,1]\) we have

where \({{R}_{m,r,n}}(v)\) is given by

in which

is the Peano kernel, and

Then from (8) and (22), it follows that

where \({{e}_{n,j}}=e({{x}_{n,j}})\) and \({{e}_{n-k}}=e({{x}_{n-k}})\).

Now from (1), we have

and by using the first equation of (9), we have

By subtracting (25) from (24), we have

Moreover, by the assumption (20) on the starting error, we have

in which \({{\left\| {{\eta }_{d}} \right\| }_{\infty }}\le C\), where \(C>0\) is a constant.

Substituting (27) and (23) into (26), we obtain

Now define the vectors \({\bar{\rho }}_{n}^{(d)}\in {{{\mathbb {R}}}^{m}}\) by

for \( i=1,\ldots ,m\).

Then using (12), (13) and (29), we can rewrite (28) in the matrix form:

where

\(E_{d}^{(1)}={{\left[ {{e}_{d}},{{e}_{d-1}},\ldots ,{{e}_{d-r+1}} \right] }^{T},}\) \(E_{d}^{(2)}={{\left[ {{e}_{d,1}},{{e}_{d,2}},\ldots ,{{e}_{d,m}} \right] }^{T}}\).

Since \(c_1> 0,\) the diagonal matrix \({{T}^{\beta }_{n}}\) is invertible for all \(n=0,1,\ldots ,N-1\), with \({{\left\| T_{n}^{-\beta } \right\| }_{\infty }}\le {{\left( {{c}_{1}}h \right) }^{-\beta }}\).

Now letting \(n=d-1\) and \(v=1\) in (23), we have

and then the non-homogeneous linear difference system of equations

follows, in which

and

By solving this system, we obtain

Next, substituting (32) into (30), we obtain

where \({{\tilde{C}}}_{n}^{(d)}=T^{-\beta }_{n}{{\tilde{B}}}_{n}^{(d)}\), \({{\bar{C}}}_{n}^{(d)}=T^{-\beta }_{n}{{\bar{B}}}_{n}^{(d)}\), for \(d=r,\ldots ,n\).

Moreover, using the assumption \(\rho (A)<1\) and arguing as in Conte and Paternoster (2009), we obtain

and

Finally, from (34), (35) and the representation (23) of the local error, there exists a positive constant \(D_0\) such that

where \({{\Lambda }_{m,r}}=\max \left\{ {{\left\| {{\phi }_{k}} \right\| }_{\infty }},{{\left\| {{\psi }_{j}} \right\| }_{\infty }};k=0,...,r-1,j=1,...,m \right\} ,\) and \({{M}_{m,r}}={{\left\| {{y}^{(m+r)}} \right\| }_{\infty }}\), \({{k}_{m,r}}=\max _{v\in [0,1]}\int \limits _{-r+1}^{1}{\left| {{k}_{m,r}}(v,\tau ) \right| d\tau }.\)

\(\square \)

5 Numerical examples

In this section, we apply the multistep collocation method in the space \(S_{m-1}^{(-1)}({{I}_{h}})\) to some examples. In both examples \(\alpha +\beta =1\) and \(k(0,0)=0\), so they are covered by Theorem 2.2. The errors are measured by \({{\left\| {{e}_{h}} \right\| }_{\infty }}= \max \nolimits _{1\le i\le N} \,\left| {{e}_{h}}({{x}_{i}}) \right| \), and the numerical order of convergence is defined by

Example 1

In this example, we consider the equation

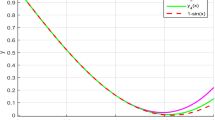

in which \(\alpha =\frac{1}{2}\), \(\beta =\frac{1}{2}\), \(k(x,t)={{t}^{2}}\) and \(f(x)=x^{2}-B(\frac{1}{2},\frac{9}{2})x^{4}\), where B(a, b) is the beta function. The exact solution is \(y(x)={{x}^{\frac{3}{2}}}\). We apply a three-step collocation method to this equation with the collocation parameters \({{c}_{1}}=\frac{3}{5}\), \({{c}_{2}}=\frac{9}{10}\), with the two Radau II points \({{c}_{1}}=\frac{1}{3}\), \({{c}_{2}}=1\), and with the three Radau II points \({{c}_{1}}=\frac{4-\sqrt{6}}{10}\), \({{c}_{2}}=\frac{4+\sqrt{6}}{10}\), \({{c}_{3}}=1\). The exact solution and the multistep collocation solution with \({{c}_{1}}=\frac{3}{5}\), \({{c}_{2}}=\frac{9}{10}\) and \(N=8\) are compared in Fig. 1. The absolute errors and orders of convergence for this example are reported in Table 1 and agree with Theorem 4.1. Interestingly, Table 1 also shows superconvergence when the Radau II points are used.
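The data of Example 1 can be cross-checked numerically. The sketch below (function names are ours) evaluates the residual of the equation at a few points for \(y(x)=x^{3/2}\), computing \(B(\frac12,\frac92)\) from the gamma function and the weakly singular integral after the substitution \(x-t=u^2\). It also shows a common way to compute observed orders such as those in Table 1 from errors on a sequence of meshes; the precise definition used in the paper may differ, so this formula is an assumption.

```python
import math

import numpy as np


def example1_residual(x, n=60):
    """Residual of Example 1 at x > 0 for y(t) = t^(3/2):
    x^(1/2) y(x) - f(x) - int_0^x (x - t)^(-1/2) t^2 y(t) dt, f(x) = x^2 - B(1/2, 9/2) x^4.
    With x - t = u^2 the integral equals 2 * int_0^sqrt(x) (x - u^2)^(7/2) du (no singularity)."""
    beta_fn = math.gamma(0.5) * math.gamma(4.5) / math.gamma(5.0)   # B(1/2, 9/2)
    f = x**2 - beta_fn * x**4
    nodes, weights = np.polynomial.legendre.leggauss(n)
    a = math.sqrt(x)
    u = 0.5 * a * (nodes + 1.0)                           # Gauss nodes mapped to [0, sqrt(x)]
    integral = a * np.dot(weights, (x - u**2) ** 3.5)     # 2 * (a / 2) * sum w_i g(u_i)
    return math.sqrt(x) * x**1.5 - f - integral


def observed_orders(Ns, errors):
    """Observed orders log(e_i / e_{i+1}) / log(N_{i+1} / N_i) between successive meshes."""
    return [math.log(e1 / e2) / math.log(n2 / n1)
            for n1, n2, e1, e2 in zip(Ns, Ns[1:], errors, errors[1:])]


print([example1_residual(x) for x in (0.25, 0.5, 1.0)])
print(observed_orders([8, 16, 32], [1e-3, 1.25e-4, 1.5625e-5]))   # made-up errors, not Table 1
```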

Fig. 1 The exact solution and the three-step collocation solution of Example 1 with \(N=8\)

Fig. 2 The exact solution and the three-step collocation solution of Example 2 with \(N=16\)

Example 2

We consider the following Volterra integral equation of the third kind

$$x\,y(x)=x^{2}-\frac{x^{3}}{3}+\int_{0}^{x}t\,y(t)\,dt,\quad x\in [0,1],$$

in which \(\alpha =0\), \(\beta =1\) and \(k(x,t)=t\), so that \(k(0,0)=0\). Thus, by Theorem 2.2, the equation has a unique solution in \(C^{m+r}[0,1]\), namely \(y(x)=x\). We applied a three-step collocation method to this equation with the Chebyshev nodes \({{c}_{1}}=\frac{1}{2}-\frac{1}{2\sqrt{2}}\) and \({{c}_{2}}=\frac{1}{2}+\frac{1}{2\sqrt{2}}\) (Stoer and Bulirsch 2002). The result for \(N=16\) is shown in Fig. 2.
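The collocation parameters used in the two examples belong to standard node families. As a hedged cross-check (function names are ours), the Radau II (Radau IIA) points can be computed as the zeros of \(P_m(s)-P_{m-1}(s)\) mapped from \([-1,1]\) to \([0,1]\), and the Chebyshev nodes as the zeros of \(T_m\) mapped the same way; for \(m=2\) and \(m=3\) these reproduce the values quoted in Examples 1 and 2.

```python
import numpy as np


def radau_iia_nodes(m):
    """Radau IIA collocation parameters in (0, 1]: zeros of P_m(s) - P_{m-1}(s), c = (1 + s) / 2."""
    coeffs = np.zeros(m + 1)
    coeffs[m], coeffs[m - 1] = 1.0, -1.0          # Legendre series of P_m - P_{m-1}
    s = np.sort(np.polynomial.legendre.legroots(coeffs).real)
    return (1.0 + s) / 2.0


def chebyshev_nodes(m):
    """Zeros of the Chebyshev polynomial T_m mapped from [-1, 1] to (0, 1), in increasing order."""
    j = np.arange(1, m + 1)
    return np.sort((1.0 - np.cos((2 * j - 1) * np.pi / (2 * m))) / 2.0)


print(radau_iia_nodes(2), radau_iia_nodes(3), chebyshev_nodes(2))
```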

6 Conclusions

In this paper, we have applied the multistep collocation method to some special cases of Volterra integral equations of the third kind. Under appropriate conditions on f(x) and k(x, t), the method attains the order of convergence m+r of Theorem 4.1, so that increasing the number of collocation parameters and steps yields a higher order of convergence than that of the single-step collocation method.

References

Atkinson KE (1989) An introduction to numerical analysis, 2nd edn. John Wiley, New York

Brunner H (2004) Collocation methods for Volterra integral and related functional equations. Cambridge University Press, Cambridge

Brunner H (2017) Volterra integral equations, an introduction to theory and applications. Cambridge University Press, Cambridge

Conte D, Paternoster B (2009) Multistep collocation methods for Volterra integral equations. Appl Numer Math 59:1721–1736

Fazeli S, Hojjati G, Shahmorad S (2012) Multistep Hermite collocation methods for solving Volterra integral equations. Numer Algorithms 60(1):27–50

Seyed Allaei S, Yang ZW, Brunner H (2015) Existence, uniqueness and regularity of solutions for a class of third kind Volterra integral equations. J Integral Equ Appl 27:325–342

Seyed Allaei S, Yang ZW, Brunner H (2017) Collocation methods for third-kind VIEs. IMA J Numer Anal 37:1104–1124

Song H, Yang ZW, Brunner H (2019) Analysis of collocation methods for nonlinear Volterra integral equations of the third-kind. Calcolo 56:7. https://doi.org/10.1007/s10092-019-0304-9

Stoer J, Bulirsch R (2002) Introduction to numerical analysis. Springer, New York

Additional information

Communicated by Hui Liang.

Cite this article

Shayanfard, F., Dastjerdi, H.L. & Ghaini, F.M.M. A numerical method for solving Volterra integral equations of the third kind by multistep collocation method. Comp. Appl. Math. 38, 174 (2019). https://doi.org/10.1007/s40314-019-0947-9

DOI: https://doi.org/10.1007/s40314-019-0947-9