Abstract

This paper deals with the global exponential stability of a class of Clifford-valued recurrent neural networks with time-varying delays and distributed delays (mixed time delays). The Clifford-valued neural network, an extension of the real-valued neural network that includes the familiar complex-valued and quaternion-valued neural networks as special cases, has recently been an active area of research. First, based on Brouwer’s fixed point theorem, the existence of the equilibrium point of Clifford-valued recurrent neural networks is established. Next, by an inequality technique and the method of Clifford-valued variation of parameters, some new criteria are given to ensure the global exponential stability of the addressed model; these complement some previous works. We illustrate the effectiveness of this approach with a numerical example.


1 Introduction

Over the last decades, the dynamics of neural networks have received considerable attention because they are a key factor in applications. As a result, research on real-valued neural networks is now mature; see Aouiti and Abed Assali (2019), Yang et al. (2013), Wu et al. (2012), Alimi et al. (2019), and Aouiti and Assali (2019). For example, in Aouiti and Abed Assali (2019), the authors studied the stability of a class of delayed inertial neural networks via a non-reduced-order method. In Yang et al. (2013), the synchronization of a class of coupled neural networks with mixed probabilistic time-varying delays and random coupling strengths was investigated by applying integral inequalities and the Lyapunov functional method. In Wu et al. (2012), the existence and global exponential stability of delayed impulsive cellular neural networks were studied by means of Lyapunov functions and the Razumikhin technique. In Alimi et al. (2019), the finite-time and fixed-time synchronization of inertial neural networks with proportional delays was discussed by using analytical techniques and a Lyapunov functional.

On the other hand, research on complex-valued and quaternion-valued neural networks has achieved significant success. Recently, various models of complex-valued and quaternion-valued neural networks have been proposed and actively studied (see Hu and Wang 2012; Zhang et al. 2017; Aouiti et al. 2020; Zhang et al. 2013; Aouiti and Bessifi 2020; Tu et al. 2019a, b and the references cited therein). In addition, time delays, particularly time-varying delays, are an essential characteristic of signal transmission between neurons and a main cause of instability and oscillation. It is, therefore, necessary to study the stability of neural networks with time delays (Rakkiyappan et al. 2015; Xu et al. 2020; Xu and Aouiti 2020). Furthermore, because of the parallel pathways formed by axons of various sizes and lengths, neural networks generally have a spatial nature, and it is desirable to model them by using distributed delays. Therefore, time-varying delays and distributed delays are important features of neural network models (Aouiti et al. 2018; Achouri et al. 2020).

Clifford algebra was introduced by the British mathematician William K. Clifford (Clifford 1878). It generalizes the real numbers, the complex numbers and the quaternions, and has significant and extensive application areas. It has been used in various domains, including image and signal processing, neural computing, control problems, computer and robot vision, and neural networks, thanks to its powerful and practical framework for representing and solving geometric problems (Hitzer et al. 2013; Rivera-Rovelo and Bayro-Corrochano 2006; Kuroe 2011; Dorst et al. 2007; Li and Xiang 2019). For example, in the field of neural networks, Pearson first suggested a Clifford-valued neural network in Pearson and Bisset (1992), which is described by Clifford-valued differential equations. Later, Buchholz (2005) concluded that Clifford-valued neural networks have advantages over real-valued neural networks. In recent years, research on Clifford-valued neural networks has become an active field and has received increasing attention. Because Clifford multiplication does not satisfy the commutative law, the study of Clifford-valued neural networks is considerably more difficult. Consequently, existing studies on the existence and global exponential stability of the equilibrium point of Clifford-valued neural networks are still very rare. Only a few works have been published to date on the stability of the equilibrium point of Clifford-valued neural networks (Liu et al. 2016; Zhu and Sun 2016; Boonsatit et al. 2021; Rajchakit et al. 2021a, b, c, d, e).

In Liu et al. (2016), the existence, uniqueness and global asymptotic stability of the equilibrium for a class of Clifford-valued recurrent neural networks with time delays were investigated by applying differential inequality techniques and the linear matrix inequality (LMI) technique. In Zhu and Sun (2016), the authors investigated the global exponential stability of Clifford-valued recurrent neural networks by Brouwer’s fixed point theorem and an inequality technique. It should be noted that time-varying delays and distributed delays are not taken into account in Zhu and Sun (2016). Moreover, the time delays in Liu et al. (2016) are constant, which means that the results therein cannot be applied to Clifford-valued recurrent neural networks with time-varying delays and distributed delays. In Boonsatit et al. (2021), finite-/fixed-time synchronization for Clifford-valued recurrent neural networks with time-varying delays was studied by a Lyapunov-Krasovskii functional and computational techniques. In Rajchakit et al. (2021a, 2021b), the global exponential stability of Clifford-valued recurrent neural networks with time delays was studied by using Lyapunov stability theory, linear matrix inequality techniques and analytical techniques. Global asymptotic stability and global exponential stability for delayed Clifford-valued neutral-type neural network models were investigated by employing homeomorphism theory, linear matrix inequalities and Lyapunov functional methods in Rajchakit et al. (2021e).

To the best of our knowledge, no major investigation of the global exponential stability of Clifford-valued recurrent neural networks involving time-varying delays and distributed delays (mixed time delays) has been carried out. Inspired by the above discussion and analysis, the main goal of this paper is to investigate the existence and global exponential stability of the equilibrium point of Clifford-valued recurrent neural networks with mixed time delays. The existence of the equilibrium of the system is obtained using a fixed point theorem. In addition, by constructing an appropriate delay differential inequality, some sufficient conditions for the global exponential stability of the equilibrium are proved. Finally, an example is given to illustrate the effectiveness of the obtained results.

The rest of the paper is organized as follows. In Sect. 2, some preliminaries that will be used later are introduced. The model description is given in Sect. 3. In Sect. 4, based on Brouwer’s fixed point theorem and an inequality technique, we prove the existence of the equilibrium point and the stability of the addressed model. In Sect. 5, an example is given to show the effectiveness of the obtained results. In Sect. 6, conclusions are provided.

2 Notations and preliminaries

The following section introduces notations, definitions and preliminary facts that are used throughout this work (see Buchholz 2005).

For convenience, let \({\mathbb {R}}\) and \({\mathbb {A}}\) denote the real space and the real Clifford space, respectively, and denote by \({\overline{x}}\) the conjugate of the Clifford number x. We define \({\mathbb {A}}\) as the Clifford algebra over the real numbers \({\mathbb {R}}\) equipped with m generators. The m multiplicative generators \(e_1,e_2,\ldots ,e_m\) are called Clifford generators and satisfy the relations

$$\begin{aligned} e_i^2=-1,\;\;i=1,2,\ldots ,m,\qquad e_ie_j+e_je_i=0,\;\;i\ne j,\;\;i,j=1,2,\ldots ,m. \end{aligned}$$

To keep the notation simple, when an element is the product of more than one Clifford generator, we write its subscripts together. For example, \(e_1e_2=e_{12}\), \(e_{2}e_3=e_{23}\) and \(e_8e_6e_4e_2=e_{8642}\). So, \({\mathbb {A}}\) has the following basis:

Consequently, the real Clifford algebra consists of elements of the form \(x=\sum \nolimits _{A}x^Ae_A\), in which each \(x^A\in {\mathbb {R}}\) is a real number. If \(A=\emptyset \), then \(e_{\emptyset }\) is written as \(e_0\) and \(x^0\) is the coefficient of the \(e_0\) component, i.e., \(x^0\) is the real part of x; for more detail see Buchholz (2005). It can be concluded from these properties that

Definition 1

(Buchholz 2005) For an arbitrary base vector, the conjugate is given:

Therefore,

Definition 2

(Buchholz 2005) The inner product in the Clifford domain is defined as:

in which \([\cdot ]_0\) indicates the coefficient of the \(e_0\)-component. The norm on \({\mathbb {A}}\) is correspondingly defined as follows:

Definition 3

(Buchholz 2005)

1. The derivative of \(x(t)=\sum \nolimits _{A}x^A(t)e_A\) is given as follows:

$$\begin{aligned} {\dot{x}}(t)=\displaystyle \sum _{A}{\dot{x}}^A(t)e_A \end{aligned}$$

2. The integral is taken componentwise; for \(x(t)=\sum \nolimits _{A}x^A(t)e_A\) it is given as follows:

$$\begin{aligned} \displaystyle \int _{0}^{t}{\dot{x}}(s)ds=\displaystyle \int _{0}^{t}\displaystyle \sum _{A}{\dot{x}}^A(s)e_Ads=\displaystyle \sum _{A}\left( \displaystyle \int _{0}^{t}{\dot{x}}^A(s)ds\right) e_A. \end{aligned}$$

Proposition 1

(Buchholz 2005) Let \(x_1\), \(x_2\), \(x_3\), \(c\in {\mathbb {A}}\), where c is a constant. Then,

1. \(x_1(x_2+x_3)=x_1x_2+x_1x_3\),

2. \((x_1+x_2)x_3=x_1x_3+x_2x_3\),

3. \(x_1x_2\ne x_2x_1\) in general,

4. \(\lambda x_1=x_1\lambda \) for every \(\lambda \in {\mathbb {R}}\),

5. \(|x_1+x_2|_0\le |x_1|_0+|x_2|_0\),

6. \(|x_1x_2|_0\le |x_1|_0|x_2|_0\),

7. \(\displaystyle \int _{0}^{t}cx_1(s)ds=c\displaystyle \int _{0}^{t}x_1(s)ds\).
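The algebraic rules in Proposition 1 can be checked numerically. The following is a minimal sketch, assuming the generator relations \(e_i^2=-1\) and \(e_ie_j=-e_je_i\) for \(i\ne j\), a bitmask encoding of the basis elements, and a plain Euclidean norm on the coefficients; the names `blade_product`, `clifford_product` and `coefficient_norm` are illustrative and are not taken from the cited references.

```python
import itertools
import math


def blade_product(a, b):
    """Multiply basis elements e_a * e_b encoded as bitmasks.

    Bit i-1 of a mask is set when generator e_i is present, so
    e_1 -> 0b01, e_2 -> 0b10, e_12 -> 0b11 and e_0 -> 0.
    Assumes e_i^2 = -1 and e_i e_j = -e_j e_i for i != j.
    Returns (sign, mask) such that e_a e_b = sign * e_mask.
    """
    sign = 1
    # Sorting the generators: each generator of b must be swapped past
    # every generator of a that has a higher index.
    shifted = a >> 1
    while shifted:
        if bin(shifted & b).count("1") % 2:
            sign = -sign
        shifted >>= 1
    # Every generator shared by a and b contributes e_i^2 = -1.
    if bin(a & b).count("1") % 2:
        sign = -sign
    return sign, a ^ b


def clifford_product(x, y):
    """Product of two Clifford numbers given as coefficient lists of length 2**m."""
    out = [0.0] * len(x)
    for a, b in itertools.product(range(len(x)), repeat=2):
        sign, mask = blade_product(a, b)
        out[mask] += sign * x[a] * y[b]
    return out


def coefficient_norm(x):
    """Euclidean norm of the coefficient vector (an assumed form of |x|_0)."""
    return math.sqrt(sum(c * c for c in x))


# m = 2: index 0 -> e_0, 1 -> e_1, 2 -> e_2, 3 -> e_12
e1 = [0, 1, 0, 0]
e2 = [0, 0, 1, 0]
print(clifford_product(e1, e2))  # the coefficient lands on e_12
print(clifford_product(e2, e1))  # same basis element, opposite sign
```

For \(m=2\) the algebra coincides with the quaternions, for which item 6 holds with equality under this norm; the swapped products of `e1` and `e2` illustrate item 3.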

Lemma 1

(Zhu and Sun 2016) Let x(t), \(f(t)\in {\mathbb {A}}\) be two Clifford-valued functions, where \(t>0\) and f(t) is a nonlinear function. In addition, let \(\varsigma \in {\mathbb {A}}\) and \(\sigma \in {\mathbb {R}}\). If \({\dot{x}}(t)+\sigma x(t)=f(t)\), then \(x(t)=\varsigma e^{-\sigma t}+e^{-\sigma t}\displaystyle \int e^{\sigma t}f(t)dt\).
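As a quick sanity check of Lemma 1, one can differentiate the claimed solution. The sketch below reads the indefinite integral as \(\int _0^t\) and takes \(\varsigma =x(0)\); both choices are assumptions made here for concreteness. Because \(\sigma \) is real, \(e^{\pm \sigma t}\) commutes with every Clifford number, so the usual product rule applies:

$$\begin{aligned} x(t)&=\varsigma e^{-\sigma t}+e^{-\sigma t}\int _{0}^{t}e^{\sigma s}f(s)ds,\\ {\dot{x}}(t)&=-\sigma \varsigma e^{-\sigma t}-\sigma e^{-\sigma t}\int _{0}^{t}e^{\sigma s}f(s)ds+e^{-\sigma t}e^{\sigma t}f(t)=-\sigma x(t)+f(t). \end{aligned}$$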

Lemma 2

(Minc 1988) Let \(M\ge 0\) be a square matrix. If \(\rho (M)<1\), then \((I_d-M)^{-1}\ge 0\), where \(\rho (M)\) is the spectral radius of M and \(I_d\) denotes the identity matrix.

Lemma 3

(Minc 1988) If \(M=(m_{ij})_{n\times n}\) is an M-matrix, then the matrices MC and CM are also M-matrices, in which \(C=diag(c_1,\ldots ,c_n)>0.\)

Lemma 4

(Shao 2009) Let \(M=(m_{ij})_{n\times n}\ge 0\) be a matrix, \(L=diag(l_1,\ldots ,l_n)\) (\(l_i>0,\;i=1,\ldots ,n\)) and \(C=diag(c_1,\ldots ,c_n)\) (\(c_i>0,\;i=1,\ldots ,n\)). The matrix \(CL^{-1}-|M|\) is an M-matrix if and only if \(\rho (C^{-1}|M|L)<1.\)
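The non-negativity of \((I_d-M)^{-1}\) for a non-negative matrix M with \(\rho (M)<1\) can be illustrated through the Neumann series \((I_d-M)^{-1}=\sum _{k\ge 0}M^k\), which converges in that case and is a sum of non-negative matrices. The sketch below is plain Python; the helper names and the \(2\times 2\) test matrix are illustrative assumptions, and the power iteration used for \(\rho \) is only justified for entrywise positive matrices.

```python
def matmul(X, Y):
    """Product of two square matrices stored as nested lists."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def spectral_radius(M, iters=200):
    """Power iteration for an entrywise positive matrix (Perron root)."""
    v = [1.0] * len(M)
    rho = 0.0
    for _ in range(iters):
        w = [sum(m * x for m, x in zip(row, v)) for row in M]
        rho = max(w)              # growth factor in the max norm
        v = [x / rho for x in w]  # renormalize so that max(v) == 1
    return rho


def neumann_inverse(M, terms=200):
    """Truncated Neumann series I + M + M^2 + ... approximating (I - M)^{-1}."""
    n = len(M)
    total = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    power = [row[:] for row in total]
    for _ in range(terms):
        power = matmul(power, M)
        total = [[total[i][j] + power[i][j] for j in range(n)]
                 for i in range(n)]
    return total


# Hypothetical non-negative test matrix with spectral radius below 1.
M = [[0.2, 0.3], [0.1, 0.4]]
rho = spectral_radius(M)
inverse = neumann_inverse(M)
print(rho < 1.0)
print(all(entry >= 0.0 for row in inverse for entry in row))
```

Every partial sum of the series has non-negative entries, so the limit does as well; this is the mechanism that the stability proof relies on when it inverts \(I_d\) minus a non-negative matrix.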

3 Setup of the problem

In this paper, we consider the following Clifford-valued recurrent neural network with mixed time delays:

where \(i,j=1,2,\ldots ,n\); n is the number of neurons; \(x_i(\cdot )\in {\mathbb {A}}\) denotes the state of neuron i; \(a_i>0\) is a real number denoting the self-feedback connection weight; \(b_{ij}\), \(c_{ij}\), \(d_{ij}\in {\mathbb {A}}\) are the connection weights; \(f_j(\cdot )\in {\mathbb {A}}\) is the nonlinear activation function; \(\tau _j(\cdot )\) is the transmission delay, which satisfies \(0\le \tau _j(t)\le \tau =\max _{1\le j\le n}\sup _{t\ge 0}\tau _j(t)\); \(K_{ij}(\cdot )\) is the delay kernel; and \(I_i\in {\mathbb {A}}\) is the constant external input.
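For orientation, a system of the type described by these symbols can be written as follows. This is a sketch assembled from the definitions above rather than a verbatim statement of system (2); in particular, the lower integration limit \(-\infty \) is an assumption suggested by the kernel domain \([0,+\infty )\).

$$\begin{aligned} {\dot{x}}_i(t)=-a_ix_i(t)+\sum _{j=1}^{n}b_{ij}f_j(x_j(t))+\sum _{j=1}^{n}c_{ij}f_j(x_j(t-\tau _j(t)))+\sum _{j=1}^{n}d_{ij}\int _{-\infty }^{t}K_{ij}(t-s)f_j(x_j(s))ds+I_i. \end{aligned}$$

Since the weights \(b_{ij}\), \(c_{ij}\), \(d_{ij}\) are Clifford numbers, the order of the factors matters: in this sketch each weight multiplies the activation from the left.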

The initial conditions of system (2) are

For convenience, the equation (2) can be expressed in the vector form

where \(x=(x_1,\ldots ,x_n)^T\in {\mathbb {A}}^n\) is the state vector, \(A=diag(a_1,\ldots ,a_n)\in {\mathbb {R}}^{n\times n}\), \(B=(b_{ij})_{n\times n}\in {\mathbb {A}}^{n\times n}\), \(C=(c_{ij})_{n\times n}\in {\mathbb {A}}^{n\times n}\), \(D=(d_{ij})_{n\times n}\in {\mathbb {A}}^{n\times n}\), \(f(\cdot )=(f_1(\cdot ),\ldots ,f_n(\cdot ))^T\), \(I=(I_1,\ldots ,I_n)^T\), \(K(\cdot )=(K_{ij}(\cdot ))_{n\times n}\).

Remark 1

The system model proposed in this paper is more general than the models considered in previous works (Zhu and Sun 2016; Rajchakit et al. 2021a). If the distributed delays are not considered, that is, \(d_{ij}=0,\;\;i,j=1,2,\ldots ,n\), then (2) reduces to the following system

which was studied in Rajchakit et al. (2021a), and if \(c_{ij}=d_{ij}=0,\;\;i,j=1,2,\ldots ,n\), then (2) reduces to the following Clifford-valued recurrent neural network without delays

which was studied in Zhu and Sun (2016). Thus, the model considered in this paper is more general than the ones in Zhu and Sun (2016) and Rajchakit et al. (2021a).

Throughout this paper, the following hypotheses are assumed:

- \((H_1)\): The activation functions \(f_j\) satisfy a Lipschitz condition on the Clifford algebra. That is to say, there exist constants \(L^f_j>0\) such that \(|f_j(x)-f_j(y)|_0\le L^f_j|x-y|_0\) for all \(x,y\in {\mathbb {A}}\) and \(j=1,2,\ldots ,n\).

- \((H_2)\): The delay kernels \(K_{ij}(\cdot ):[0,+\infty )\rightarrow [0,+\infty )\), \(i,j=1,2,\ldots ,n\), satisfy

$$\begin{aligned} \displaystyle \int _{0}^{+\infty }K_{ij}(s)ds=1,\;\;\displaystyle \int _{0}^{+\infty }e^{\lambda s}K_{ij}(s)ds=k_{ij}<+\infty , \end{aligned}$$

where \(\lambda \) is a positive number.
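As an illustration of \((H_2)\), consider the exponential kernel \(K_{ij}(s)=e^{-s}\), which is also the kernel chosen in the numerical example of Sect. 5. A direct computation, valid under the added assumption \(0<\lambda <1\), gives

$$\begin{aligned} \int _{0}^{+\infty }e^{-s}ds=1,\qquad \int _{0}^{+\infty }e^{\lambda s}e^{-s}ds=\int _{0}^{+\infty }e^{-(1-\lambda )s}ds=\frac{1}{1-\lambda }=k_{ij}<+\infty . \end{aligned}$$

For \(\lambda \ge 1\) the second integral diverges, so for this kernel \((H_2)\) restricts the admissible values of \(\lambda \). With \(\lambda =0.1\), for instance, one obtains \(k_{ij}=10/9\approx 1.111\).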

4 Main results

4.1 Existence and uniqueness of equilibrium point

We will study the existence and uniqueness of the equilibrium point of the model (2) in this subsection.

Theorem 1

Suppose that the system (3) satisfies (\(H_1\))–(\(H_2\)) and suppose that

$$\begin{aligned} \rho \left( A^{-1}|B|_0L^f+A^{-1}|C|_0L^f+A^{-1}|D|_0L^f\right) <1, \end{aligned}$$

then the system (3) has a unique equilibrium point \(x^*\in {\mathbb {A}}^n\), where \(A=diag(a_1,\ldots ,a_n)^T\), \(|B|_0=(|b_{ij}|_0)_{n\times n}\), \(|C|_0=(|c_{ij}|_0)_{n\times n}\), \(|D|_0=(|d_{ij}|_0)_{n\times n}\), \(L^f=diag(L^f_1,\ldots ,L^f_n)^T\).

Proof

The equilibrium point \(x^*=(x^*_1,\ldots ,x^*_n)^T\) clearly satisfies the Clifford-algebraic equation:

Define

where

Therefore,

where

Define

Then the vector form is rewritten in the following form:

Based on Lemma 3 and Lemma 4, since \(A^{-1}|B|_0L^f+A^{-1}|C|_0L^f+A^{-1}|D|_0L^f\) is a non-negative matrix and \(\rho \left( A^{-1}|B|_0L^f+A^{-1}|C|_0L^f+A^{-1}|D|_0L^f\right) <1\), the matrix \(I_d-\left( A^{-1}|B|_0L^f+A^{-1}|C|_0L^f+A^{-1}|D|_0L^f\right) \) is an M-matrix. Therefore, there exists a vector \(\xi =(\xi _1,\ldots ,\xi _n)^T\) such that

Hence, we have

Define \(\varOmega =\{x\in {\mathbb {A}}^n:\;|x|_0\le \xi \}\). For any \(x\in \varOmega \), we get \(|\varLambda (x)|_0\le \xi .\) Thus, the continuous operator \(\varLambda \) maps the compact and convex set \(\varOmega \) into itself. By Brouwer’s fixed point theorem, \(\varLambda \) has a fixed point \(x^*=(x^*_1,\ldots ,x^*_n)^T\) such that \(\varLambda (x^*)=x^*,\) which is the equilibrium point of system (3). Therefore, system (2) has a unique equilibrium point. \(\square \)

4.2 Global exponential stability

Some sufficient conditions to ensure the global exponential stability of the system (2) will be established in this subsection.

Theorem 2

Suppose that the spectral radius of the matrix \((A-\lambda I_d)^{-1}\big (|B|_0L^f+e^{\lambda \tau }|C|_0L^f+|J|_0L^f\big )\) is less than 1, that is, \(\rho \bigg ((A-\lambda I_d)^{-1}\big (|B|_0L^f+e^{\lambda \tau }|C|_0L^f+|J|_0L^f\big )\bigg )<1\), in which \(|J|_0=(k_{ij}|d_{ij}|_0)_{n\times n}\) and \(\lambda >0\) is a small real constant. Then the equilibrium point of system (3) is globally exponentially stable.
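It may help to see how this condition relates to a delay-free spectral condition on \(A^{-1}\big (|B|_0+|C|_0+|D|_0\big )L^f\). The following is a sketch, assuming that \((H_2)\) holds for some \(\lambda _0>0\) and writing \(k_{ij}(\lambda )\) for the dependence of \(k_{ij}\) on \(\lambda \) (a notation introduced here only for this remark). Since \(k_{ij}(\lambda )\rightarrow \int _{0}^{+\infty }K_{ij}(s)ds=1\) as \(\lambda \rightarrow 0^+\), we have \(|J|_0\rightarrow |D|_0\) and hence

$$\begin{aligned} \lim _{\lambda \rightarrow 0^+}(A-\lambda I_d)^{-1}\big (|B|_0L^f+e^{\lambda \tau }|C|_0L^f+|J|_0L^f\big )=A^{-1}\big (|B|_0L^f+|C|_0L^f+|D|_0L^f\big ). \end{aligned}$$

Because the spectral radius depends continuously on the matrix entries, if the limit matrix has spectral radius less than 1, then the condition of Theorem 2 is satisfied for every sufficiently small \(\lambda >0\).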

Proof

According to Lemma 1, the model (2) is transformed into the following equality:

therefore

Define

therefore, we get

By calculating the derivative of \(\theta _i(\cdot )\), we obtain

Let

then, we have

Let \({\widetilde{q}}_j(t)=\displaystyle \max _{-\infty \le u\le t}q_j(u)\), we obtain

On the other hand,

By integrating both sides from 0 to t, the following results are obtained

In addition, note that

therefore

Define

then, Eq. (10) can be written as the following vector form:

It follows that

then

Since \(\rho \bigg ((A-\lambda I_d)^{-1}\big (|B|_0L^f+e^{\lambda \tau }|C|_0L^f+|J|_0L^f\big )\bigg )<1\) and \((A-\lambda I_d)^{-1}\big (|B|_0L^f+e^{\lambda \tau }|C|_0L^f+|J|_0L^f\big )\ge 0\), using Lemma 2, we obtain

by using (13), we have

Because

from (14) and (15), we can obtain the following result:

Hence, model (3) is globally exponentially stable. This completes the proof. \(\square \)

Remark 2

Recently, the authors in Zhu and Sun (2016) provided sufficient conditions for the existence and global exponential stability of Clifford-valued recurrent neural networks without delays. The authors in Liu et al. (2016) studied the existence and the stability of Clifford-valued neural networks with a constant delay. At present, our results in Theorem 1 and Theorem 2 complement the aforementioned work.

Remark 3

In recent years, it has been found that complex-valued and quaternion-valued neural networks have more advantages than real-valued neural networks in some practical applications. Consequently, there have been many papers on complex-valued and quaternion-valued neural networks (Hu and Wang 2012; Zhang et al. 2017; Aouiti et al. 2020; Zhang et al. 2013; Aouiti and Bessifi 2020; Tu et al. 2019a, b). The above result can be easily applied to real-valued, complex-valued and quaternion-valued recurrent neural networks. The Clifford-valued neural network model (2) includes real-valued \((m = 0)\), complex-valued \((m = 1)\) and quaternion-valued \((m = 2)\) neural network models as its special cases, which has important and extensive application fields.

Remark 4

In Boonsatit et al. (2021) and Rajchakit et al. (2021a), the authors obtained stability or synchronization results for neural networks using the Lyapunov function method together with a decomposition method. In contrast to the approach in those papers, we obtain the global exponential stability of Clifford-valued neural networks by a non-decomposition method, and the proof relies on an inequality technique, matrix theory and spectral theory.

Remark 5

Because of their practical importance and extensive applications in many fields, such as telecommunications, robotics, aerospace, signal filtering, parallel computing and data mining, several Clifford-valued neural networks have been studied with different methods in recent years, especially with regard to stability, synchronization and pseudo almost automorphic problems. In Boonsatit et al. (2021), Rajchakit et al. (2021a), Rajchakit et al. (2021b), Rajchakit et al. (2021c), Rajchakit et al. (2021d), Rajchakit et al. (2021e) and Aouiti et al. (2021), the authors consider the stability problem for delayed neural networks via Lyapunov functions and Lyapunov-Krasovskii functionals, Banach’s fixed point principle, and the matrix inequality technique, with a great deal of integral calculations. For example, Boonsatit et al. (2021) consider the finite-time and fixed-time synchronization of delayed Clifford-valued recurrent neural networks, where the finite-/fixed-time synchronization criteria are established by a Lyapunov-Krasovskii functional and computational techniques. Rajchakit et al. (2021a) investigate the global exponential stability in the Lagrange sense of delayed Clifford-valued recurrent neural networks with Lyapunov stability theory, some analytical techniques and the linear matrix inequality (LMI) technique; the resulting conditions are given in terms of high-dimensional matrices. In this paper, instead of using the methods above, Brouwer’s fixed point theorem, the method of Clifford-valued variation of parameters, an inequality technique, matrix theory and spectral theory are applied to the considered Clifford-valued neural networks, which helps to avoid a large number of tedious calculations and high-dimensional matrices.

5 Numerical example

We consider the two-neuron Clifford-valued recurrent neural network with mixed time delays represented by:

where \(A=\left( \begin{array}{cc}10 &{} 0 \\ 0 &{} 20\end{array}\right) \),

\(B=\left( \begin{array}{cc} 2 &{} -1+e_{1}-e_{2}+e_{1} e_{2} \\ 1-e_{1}+e_{2}-e_{1} e_{2} &{} 3 \end{array}\right) \),

\(C=\left( \begin{array}{cc} 1-e_1-e_2 &{}\quad 1+2e_{1}+e_{1} e_{2} \\ 1-e_{1}+e_{2}&{}1+ e_1+e_2-e_{12} \end{array}\right) \),

\(D=\left( \begin{array}{cc} -2 &{}\quad -1-e_1 \\ 2-e_{1}+2e_{2}&{} \quad 2-2e_2 \end{array}\right) \),

\(I=\left( \begin{array}{c}\frac{3}{2} e_{1} \\ -\frac{1}{2}+\frac{3}{2} e_{2}+e_{1} e_{2}\end{array}\right) \),

and the activation functions are

where \(u_j=x^0_j+x^1_je_1+x^2_je_2+x^{12}_je_1e_2\in {\mathbb {A}}\), \(j=1,2.\)

We choose the time-varying delays \(\tau _1(t)=0.3+0.1\sin (t),\) \(\tau _2(t)=0.6+0.4\cos (t)\), so that \(\tau =1\), and the delay kernels \(K_{ij}(s)=e^{-s},\) \(s\in [0,+\infty ).\)

We have in this example

then,

So, the conditions of Theorem 1 hold and system (16) has an equilibrium point.

Now, we fix \(\lambda =0.1\), \(\tau =1\) then

then

Therefore, all the conditions of Theorem 2 hold, so the system (16) is globally exponentially stable.
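The spectral radii that enter these two checks can be computed with a few lines of plain Python. The sketch below uses the matrices A, B, C, D of this example and \(k_{ij}=1/(1-\lambda )\) for the kernel \(e^{-s}\). Several ingredients are assumptions made here and do not come from the example itself: the Lipschitz constants passed as `lipschitz` are placeholders that must be replaced by those of the actual activation functions, \(|\cdot |_0\) is taken to be the Euclidean norm of the coefficients, and `norm_scale` is a hypothetical knob for definitions that rescale this norm by a constant. The function names are illustrative.

```python
import math

# Coefficient lists [x^0, x^1, x^2, x^12] transcribed from the example.
B = [[[2, 0, 0, 0], [-1, 1, -1, 1]],
     [[1, -1, 1, -1], [3, 0, 0, 0]]]
C = [[[1, -1, -1, 0], [1, 2, 0, 1]],
     [[1, -1, 1, 0], [1, 1, 1, -1]]]
D = [[[-2, 0, 0, 0], [-1, -1, 0, 0]],
     [[2, -1, 2, 0], [2, 0, -2, 0]]]
A_DIAG = [10.0, 20.0]


def abs_matrix(M, norm_scale=1.0):
    """Entrywise norm matrix (|m_ij|_0), assuming a Euclidean coefficient norm."""
    return [[norm_scale * math.sqrt(sum(c * c for c in entry)) for entry in row]
            for row in M]


def spectral_radius(M, iters=200):
    """Power iteration; adequate here because every entry of M is positive."""
    v = [1.0] * len(M)
    rho = 0.0
    for _ in range(iters):
        w = [sum(m * x for m, x in zip(row, v)) for row in M]
        rho = max(w)
        v = [x / rho for x in w]
    return rho


def theorem1_radius(lipschitz=(1.0, 1.0), norm_scale=1.0):
    """rho(A^{-1} (|B|_0 + |C|_0 + |D|_0) L^f) with placeholder Lipschitz constants."""
    aB, aC, aD = (abs_matrix(M, norm_scale) for M in (B, C, D))
    S = [[(aB[i][j] + aC[i][j] + aD[i][j]) * lipschitz[j] / A_DIAG[i]
          for j in range(2)] for i in range(2)]
    return spectral_radius(S)


def theorem2_radius(lam, tau, lipschitz=(1.0, 1.0), norm_scale=1.0):
    """rho((A - lam I)^{-1} (|B|_0 + e^{lam tau} |C|_0 + |J|_0) L^f) for K(s) = e^{-s}."""
    k = 1.0 / (1.0 - lam)  # integral of e^{lam s} e^{-s} over [0, inf); needs lam < 1
    aB, aC, aD = (abs_matrix(M, norm_scale) for M in (B, C, D))
    S = [[(aB[i][j] + math.exp(lam * tau) * aC[i][j] + k * aD[i][j])
          * lipschitz[j] / (A_DIAG[i] - lam)
          for j in range(2)] for i in range(2)]
    return spectral_radius(S)


print(theorem1_radius())
print(theorem2_radius(lam=0.1, tau=1.0, lipschitz=(0.5, 0.5)))
```

Both radii scale linearly with the Lipschitz constants and with the norm scaling, so whether each value falls below 1 depends on the actual activation functions and norm definition; the placeholder values above are not a substitute for them.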

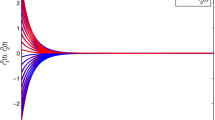

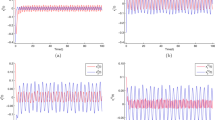

Using the Simulink toolbox in MATLAB, this conclusion is verified by the simulations in Figs. 1 and 2, which show the state trajectories of the system (16) for six different initial conditions.

Fig. 1 a The state trajectories of \(x_1^0(t)\) with 6 different initial conditions; b The state trajectories of \(x_1^1(t)\) with 6 different initial conditions; c The state trajectories of \(x_1^2(t)\) with 6 different initial conditions; d The state trajectories of \(x_1^{12}(t)\) with 6 different initial conditions

Fig. 2 a The state trajectories of \(x_2^0(t)\) with 6 different initial conditions; b The state trajectories of \(x_2^1(t)\) with 6 different initial conditions; c The state trajectories of \(x_2^2(t)\) with 6 different initial conditions; d The state trajectories of \(x_2^{12}(t)\) with 6 different initial conditions

6 Conclusion

In this paper, the existence and global exponential stability of the equilibrium point for a class of Clifford-valued recurrent neural networks with mixed time delays were proven using Brouwer’s fixed point theorem, an inequality technique, and the method of Clifford-valued variation of parameters. To the best of our knowledge, this is the first paper to study the global exponential stability of Clifford-valued neural networks with mixed time delays. The results of this article are essentially new, and they also cover the cases in which the system reduces to real-valued, complex-valued and quaternion-valued systems. Finally, the effectiveness of the obtained results is illustrated by a numerical example.

Data Availability Statement

No data availability statement applies.

References

Achouri H, Aouiti C, Hamed BB (2020) Bogdanov-Takens bifurcation in a neutral delayed Hopfield neural network with bidirectional connection. Int J Biomath 13(06):2050049

Alimi AM, Aouiti C, Assali EA (2019) Finite-time and fixed-time synchronization of a class of inertial neural networks with multi-proportional delays and its application to secure communication. Neurocomputing 332:29–43

Aouiti C, Abed Assali E (2019) Effect of fuzziness on the stability of inertial neural networks with mixed delay via non-reduced-order method. Int J Comput Math Comput Syst Theory 4(3–4):151–170

Aouiti C, Assali EA (2019) Nonlinear Lipschitz measure and adaptive control for stability and synchronization in delayed inertial Cohen-Grossberg-type neural networks. Int J Adapt Control Signal Process 33(10):1457–1477

Aouiti C, Bessifi M (2020) Periodically intermittent control for finite-time synchronization of delayed quaternion-valued neural networks. Neural Comput Appl 33:6527–6547

Aouiti C, Bessifi M, Li X (2020) Finite-time and fixed-time synchronization of complex-valued recurrent neural networks with discontinuous activations and time-varying delays. Circuits Syst Signal Process 39:5406–5428

Aouiti C, Dridi F, Hui Q, Moulay E (2021) \((\mu ,\nu )-\)Pseudo almost automorphic solutions of neutral type Clifford-valued high-order Hopfield neural networks with D operator. Neural Process Lett 53(1):799–828

Aouiti C, Gharbia IB, Cao J, M’hamdi MS, Alsaedi A (2018) Existence and global exponential stability of pseudo almost periodic solution for neutral delay BAM neural networks with time-varying delay in leakage terms. Chaos Solitons Fractals 107:111–127

Boonsatit N, Rajchakit G, Sriraman R, Lim CP, Agarwal P (2021) Finite-/fixed-time synchronization of delayed Clifford-valued recurrent neural networks. Adv Differ Equ 2021(1):1–25

Buchholz S (2005) A theory of neural computation with Clifford algebras. Ph.D. thesis, University of Kiel

Clifford P (1878) Applications of Grassmann’s extensive algebra. Am J Math 1(4):350–358

Dorst L, Fontijne D, Mann S (2007) Geometric algebra for computer science (revised edition). Morgan Kaufmann Publishers, Burlington, p 2009

Hitzer E, Nitta T, Kuroe Y (2013) Applications of Clifford’s geometric algebra. Adv Appl Clifford Algebras 23(2):377–404

Hu J, Wang J (2012) Global stability of complex-valued recurrent neural networks with time-delays. IEEE Trans Neural Netw Learn Syst 23(6):853–865

Kuroe Y (2011) Models of Clifford recurrent neural networks and their dynamics. In The 2011 International Joint Conference on Neural Networks. IEEE, pp 1035–1041

Li Y, Xiang J (2019) Existence and global exponential stability of anti-periodic solution for Clifford-valued inertial Cohen-Grossberg neural networks with delays. Neurocomputing 332:259–269

Liu Y, Xu P, Lu J, Liang J (2016) Global stability of Clifford-valued recurrent neural networks with time delays. Nonlinear Dyn 84(2):767–777

Minc H (1988) Nonnegative matrices. Wiley, New York

Pearson JK, Bisset DL (1992) Back propagation in a Clifford algebra. Artificial Neural Networks, 2

Rajchakit G, Sriraman R, Boonsatit N, Hammachukiattikul P, Lim CP, Agarwal P (2021a) Exponential stability in the Lagrange sense for Clifford-valued recurrent neural networks with time delays. Adv Differ Equ 2021(1):1–21

Rajchakit G, Sriraman R, Boonsatit N, Hammachukiattikul P, Lim CP, Agarwal P (2021b) Global exponential stability of Clifford-valued neural networks with time-varying delays and impulsive effects. Adv Differ Equ 2021(1):1–21

Rajchakit G, Sriraman R, Vignesh P, Lim CP (2021c) Impulsive effects on Clifford-valued neural networks with time-varying delays: an asymptotic stability analysis. Appl Math Comput 407:126309

Rajchakit G, Sriraman R, Lim CP, Sam-ang P, Hammachukiattikul P (2021d) Synchronization in finite-time analysis of Clifford-valued neural networks with finite-time distributed delays. Mathematics 9(11):1163

Rajchakit G, Sriraman R, Lim CP, Unyong B (2021e) Existence, uniqueness and global stability of Clifford-valued neutral-type neural networks with time delays. Math Comput Simul. https://doi.org/10.1016/j.matcom.2021.02.023

Rakkiyappan R, Velmurugan G, Li X (2015) Complete stability analysis of complex-valued neural networks with time delays and impulses. Neural Process Lett 41(3):435–468

Rivera-Rovelo J, Bayro-Corrochano E (2006) Medical image segmentation using a self-organizing neural network and Clifford geometric algebra. In The 2006 IEEE International Joint Conference on Neural Network Proceedings. IEEE, pp 3538–3545

Shao J (2009) Matrix analysis techniques with applications in the stability studies of cellular neural networks. Ph.D. thesis, University of Electronic Science and Technology of China

Tu Z, Zhao Y, Ding N, Feng Y, Zhang W (2019a) Stability analysis of quaternion-valued neural networks with both discrete and distributed delays. Appl Math Comput 343:342–353

Tu Z, Yang X, Wang L, Ding N (2019b) Stability and stabilization of quaternion-valued neural networks with uncertain time-delayed impulses: direct quaternion method. Physica A 535:122358

Wu B, Liu Y, Lu J (2012) New results on global exponential stability for impulsive cellular neural networks with any bounded time-varying delays. Math Comput Modell 55(3–4):837–843

Xu C, Aouiti C (2020) Comparative analysis on Hopf bifurcation of integer-order and fractional-order two-neuron neural networks with delay. Int J Circuit Theory Appl 48(9):1459–1475

Xu C, Aouiti C, Liu Z (2020) A further study on bifurcation for fractional order BAM neural networks with multiple delays. Neurocomputing 417:501–515

Yang X, Cao J, Lu J (2013) Synchronization of coupled neural networks with random coupling strengths and mixed probabilistic time-varying delays. Int J Robust Nonlinear Control 23(18):2060–2081

Zhang Z, Lin C, Chen B (2013) Global stability criterion for delayed complex-valued recurrent neural networks. IEEE Trans Neural Netw Learn Syst 25(9):1704–1708

Zhang Z, Liu X, Chen J, Guo R, Zhou S (2017) Further stability analysis for delayed complex-valued recurrent neural networks. Neurocomputing 251:81–89

Zhu J, Sun J (2016) Global exponential stability of Clifford-valued recurrent neural networks. Neurocomputing 173:685–689


Additional information

Communicated by Leonardo Tomazeli Duarte.


About this article

Cite this article

Assali, E.A. A spectral radius-based global exponential stability for Clifford-valued recurrent neural networks involving time-varying delays and distributed delays. Comp. Appl. Math. 42, 48 (2023). https://doi.org/10.1007/s40314-023-02188-y


DOI: https://doi.org/10.1007/s40314-023-02188-y