Abstract

In this paper, we study the stability of Clifford-valued recurrent neural networks (RNNs) with time delays. As an extension of the real-valued neural network, the Clifford-valued neural network, which includes the familiar complex-valued and quaternion-valued neural networks as special cases, has recently become an active research field. To the best of our knowledge, the stability problem for Clifford-valued systems with time delays has not yet been solved. We first establish the existence and uniqueness of the equilibrium of delayed Clifford-valued RNNs, and on this basis obtain sufficient conditions, expressed as a linear matrix inequality (LMI), that ensure the global asymptotic and exponential stability of such systems. A numerical simulation example is also provided to illustrate the effectiveness of the proposed results.

1 Introduction

In recent years, rising interest in artificial neural networks has led to considerable effort in applying them to different fields. After several decades of study, the theory of real-valued neural networks is mature, see [1–14]. Meanwhile, research on complex-valued neural networks has also made substantial progress. Complex-valued RNNs have become indispensable in practical applications such as physical systems dealing with electromagnetic, ultrasonic, quantum and light waves, and show a remarkable advantage in various fields of engineering.

Several models of complex-valued neural networks have been established [15–21]. In [15], a delayed complex-valued recurrent neural network with two types of complex-valued activation functions was studied, and sufficient conditions ensuring the existence and uniqueness of the equilibrium as well as its global asymptotic and exponential stability were obtained. In [17], a complex-valued recurrent neural network was studied by separating it into real and imaginary parts and forming an equivalent real-valued system; sufficient conditions were then provided to guarantee the existence, uniqueness and global asymptotic stability of the complex-valued equilibrium point. Moreover, quaternion-valued neural networks have been proposed and actively studied [22, 23].

Clifford algebra (geometric algebra) was introduced by William K. Clifford (1845–1879). It has been applied to various fields such as neural computing, computer and robot vision, image and signal processing, and control problems, because it offers a practical and powerful framework for the representation and solution of geometrical problems, see [24–30] and references therein. Recently, as an extension of real-valued models, Clifford neural networks have become an active research field. Neural networks for function approximation require feature enhancement, rotation and dilatation operations. These operations are limited by the Euclidean metric in real-valued neural networks and can be carried out more efficiently in Clifford-valued neural networks, owing to the coordinate-free framework of Clifford algebra, which allows patterns to be processed between layers and makes the metric possible only through the projective split. A multilayered Clifford neural network model was first proposed by Pearson in [31, 32]. Then, Buchholz showed in [33, 34] that Clifford multilayer neural networks perform better than the usual real-valued neural networks. In part II of [35], Moebius transformations were learned in the Clifford algebra setting, and it was pointed out that such transformations are not available to the usual real-valued neural networks. Clifford RNNs were first proposed by Y. Kuroe in [28] and [36]. In [28], three models of fully connected Clifford-valued Hopfield-type RNNs were proposed, and sufficient conditions for the existence of an energy function for two classes of Clifford-valued Hopfield-type RNNs were discussed. In [29], a novel self-organizing-type radial basis function (RBF) neural network was presented, and the neural computing field was extended to the geometric algebra. In [30], neural computation in the Clifford-valued domain was studied.

Time delay is a main cause of oscillation and instability, and it has been recognized as an inherent feature of signal transmission between neurons [5, 37, 38]. In this paper, we study Clifford-valued recurrent neural networks with propagation delay rather than the process delay (intrinsic delay such as the autapse type) investigated in [39, 40]. In the past few decades, the stability of neural networks with time delays has been an attractive subject of research, see, for example, [1, 3, 6, 12, 15–18, 41]. The stability criteria for delayed neural networks can be divided into two categories: delay-independent stability and delay-dependent stability. The latter is less conservative, especially when the delay is small [37].

Motivated by the above discussions, we investigate the stability of Clifford-valued recurrent neural networks with time delays in this paper. To the best of our knowledge, there is no result on this topic. Compared with complex-valued and quaternion-valued neural networks, the main challenge is that the product of a Clifford-valued element with its involution is not a real number in general. Therefore, in this paper, we use the property \(e_{A}\bar{e}_{A}=\bar{e}_{A}e_{A}=1\) to transform the complicated Clifford-valued RNNs into higher dimensional real-valued RNNs. Then, an asymptotic delay-independent stability condition and a delay-dependent exponential stability criterion for the considered Clifford-valued RNNs are derived. Moreover, we estimate the exponential convergence rate for constant delays. The results reduce to those for real-valued, complex-valued and quaternion-valued neural networks when \(m=0\), \(m=1\) and \(m=2\), respectively. Compared with the delay-independent results of [15–17] for complex-valued RNNs, our delay-dependent condition is less conservative when \(m=1\).

The paper is organized as follows. In Sect. 2, we introduce the notation used in Clifford analysis, the model description, and the definitions and lemmas needed later. Section 3 presents sufficient conditions that ensure the existence of a unique equilibrium, as well as the global asymptotic and exponential stability of the considered Clifford-valued RNNs. In Sect. 4, a numerical example is given to demonstrate the effectiveness of the proposed results.

2 Preliminaries

Throughout this paper, \(\mathbb {R}^{n}\), \(\mathscr {A}^{n}\), \(\mathbb {R}^{m\times n}\) and \(\mathscr {A}^{m\times n}\) represent, respectively, the n-dimensional real vector space, the n-dimensional real Clifford vector space, the set of all \(m\times n\) real matrices and the set of all \(m\times n\) real Clifford matrices. The superscripts ‘T’ and ‘*’ denote, respectively, matrix transposition and matrix involution transposition. For a square real matrix B, \([B]^{s}\) is defined by \([B]^{s}=(B+B^{T})/2\). For simplicity, we denote \(x^{\tau }=x(t-\tau )\) and \(x_{k}^{\tau }=x_{k}(t-\tau ).\)

2.1 The Clifford algebra \(\mathscr {A}\)

\(\mathscr {A}\) is defined as the Clifford algebra over the real numbers \(\mathbb {R}\) with m multiplicative generators \(e_{1},~e_{2},~\cdots ,~e_{m}\), called Clifford generators, which satisfy the following relations

\[e_{i}^{2}=-1,~i=1,2,\cdots ,m;\qquad e_{i}e_{j}+e_{j}e_{i}=0,~i\ne j.\]

For simplicity, when one element is the product of multiple Clifford generators, we will write its subscripts together. For example \(e_{1}e_{2}=e_{12}\) and \(e_{6}e_{2}e_{4}e_{5}=e_{6245}\). Then \(\mathscr {A}\) has its basis as follows:

Therefore the real Clifford algebra consists of elements such as \(a=\sum \limits _Ax^{A}e_{A}\), where \(x^{A}\in \mathbb {R}\) is a real number. In particular, when \(A=\emptyset \), then \(e_{\emptyset }\) can be denoted as \(e_{0}\) and \(x^{0}\) is the coefficient of the \(e_{0}\)-component, see [42]. From these properties, it is concluded that

Similar to the complex domain, the inversion for an arbitrary basic vector can be defined as follows:

or

where \(n(A)=r\) for \(e_{A}=e_{h_{1}h_{2}\cdots h_{r}}\).

Next, the main anti-automorphism in the Clifford algebra is called reversion or Hermitian conjugation and is given by

Now, we present the involution which is a combination of the reversion and the inversion introduced above. It is given as follows for a basic vector

From the definition, it is directly deduced that \(e_{A}\bar{e}_{A}=\bar{e}_{A}e_{A}=1.\) Moreover, for any Clifford number \(x=\sum \limits _{A}x^{A}e_{A},\) its involution can be denoted by \(\bar{x}=\sum \limits _{A}x^{A}\bar{e}_{A}\). In addition to this, the involution also satisfies \(\overline{xy}=\bar{y}\bar{x},~\forall x,~y\in \mathscr {A}.\)

The inner product in Clifford domain is defined as follows

where \([\gamma \bar{\beta }]_{0}\) denotes the coefficient of the \(e_{0}\)-component of \(\gamma \bar{\beta }\). The norm on \(\mathscr {A}\) is correspondingly defined as \(|\gamma |_{0}=\sqrt{(\gamma ,\gamma )_{0}}.\) Thus \(\mathscr {A}\) is a real Hilbert space and a Banach algebra with

Next we introduce a real functional on \(\mathscr {A}\), that is, \(\tau _{e_{A}}:\mathscr {A}\rightarrow \mathbb {R}\)

As a special case of \(A=\emptyset \), we have

Therefore, it is concluded that \(|\gamma |_{0}^{2}= 2^{m}[\gamma \bar{\gamma }]_{0}= \langle \tau _{e_{0}},\gamma \bar{\gamma }\rangle \).
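The product of basis elements and the involution introduced above can be checked mechanically. The following Python sketch is an illustration only. It assumes the generator relations \(e_{i}^{2}=-1\) and \(e_{i}e_{j}=-e_{j}e_{i}\) (\(i\ne j\)), which agree with the worked example \(e_{1}e_{2}e_{2}e_{3}=-e_{1}e_{3}\) used later in this section. A basis element \(e_{A}\) is stored as a bitmask (bit \(i-1\) set when \(e_{i}\) occurs in \(e_{A}\)) and a Clifford number as a list of \(2^{m}\) real coefficients. The names `blade_mul`, `mul` and `bar`, the bitmask ordering and the choice \(m=3\) are hypothetical and not part of the paper.

```python
M = 3            # number of generators (illustrative choice)
DIM = 2 ** M     # number of basis elements e_A


def blade_mul(a, b):
    """Return (sign, c) with e_a * e_b = sign * e_c, blades given as bitmasks."""
    # Count pairs (i in a, j in b) with i > j: each one costs a swap (factor -1).
    swaps = 0
    t = a >> 1
    while t:
        swaps += bin(t & b).count("1")
        t >>= 1
    # Each generator shared by both blades contributes e_i * e_i = -1.
    squares = bin(a & b).count("1")
    sign = -1 if (swaps + squares) % 2 else 1
    return sign, a ^ b


def mul(x, y):
    """Product of two Clifford numbers given as coefficient lists."""
    out = [0] * len(x)
    for a, xa in enumerate(x):
        for b, yb in enumerate(y):
            if xa and yb:
                s, c = blade_mul(a, b)
                out[c] += s * xa * yb
    return out


def bar(x):
    """Involution: bar(e_A) = (-1)^(n(A)(n(A)+1)/2) e_A, extended linearly."""
    out = []
    for a, xa in enumerate(x):
        n = bin(a).count("1")
        out.append(-xa if (n * (n + 1) // 2) % 2 else xa)
    return out


if __name__ == "__main__":
    x = [1, 2, -1, 0, 3, 0, -2, 1]
    y = [0, -1, 4, 2, 0, 1, 1, -3]
    # bar(xy) and bar(y)bar(x) should coincide
    print(bar(mul(x, y)) == mul(bar(y), bar(x)))
    # e_0-coefficient of x * bar(x) versus the sum of squared coefficients
    print(mul(x, bar(x))[0], sum(v * v for v in x))
```

In this representation the \(e_{0}\)-coefficient of \(x\bar{x}\) is the sum of the squared coefficients of \(x\), which is the quantity \([\gamma \bar{\gamma }]_{0}\) entering the norm.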

Finally, the definition of the derivative for \(z(t)=\sum \limits _{A}z^{A}(t)e_{A}\) is given as:

where \(z^{A}(t)\) is a function with real value.

Since \(e_{B}\bar{e}_{A}=(-1)^{\frac{n(A)(n(A)+1)}{2}}e_{B}e_{A}\), we can simplify and express \(e_{B}\bar{e}_{A}=e_{C}\) or \(e_{B}\bar{e}_{A}=-e_{C}\), with \(e_{C}\) being some basis element of the Clifford algebra in (1). For example, \(e_{12}\bar{e}_{23}=-e_{12}e_{23}=-e_{1}e_{2}e_{2}e_{3}=e_{1}e_{3}=e_{13}\). Hence it is possible to find a unique corresponding basis element \(e_{C}\) for the given \(e_{B}\bar{e}_{A}\). We define \(n(B\cdot \bar{A})=0\) when \(e_{B}\bar{e}_{A}=e_{C}\) and \(n(B\cdot \bar{A})=1\) when \(e_{B}\bar{e}_{A}=-e_{C}\), so that \(e_{B}\bar{e}_{A}=(-1)^{n(B\cdot \bar{A})}e_{C}\). Moreover, for \(K=\sum \limits _{C}K^Ce_C\in \mathscr {A}\), we define \(K^{B\cdot \bar{A}}=(-1)^{n(B\cdot \bar{A})}K^{C}\) for \(e_{B}\bar{e}_{A}=(-1)^{n(B\cdot \bar{A})}e_{C}\). Therefore,

2.2 Model formulation and basic lemmas

Consider the following Clifford-valued RNN with time delays:

\[\dot{z}(t)=-Dz(t)+Kf(z(t))+Lg(z(t-\tau ))+u,\qquad (2)\]

where \(z=(z_{1}(t),~z_{2}(t),~\cdots ,~z_{n}(t))^{T}\in \mathscr {A}^{n}\) denotes the state vector, the self-feedback connection weight matrix D satisfies \(D=\mathrm{diag}(d_{1},d_{2},\cdots ,d_{n})\in \mathbb {R}^{n\times n}\) with \(d_{i}>0~(i=1,2,\cdots ,n),\) \(K=(k_{ij})_{n\times n}\in \mathscr {A}^{n\times n}\), \(L=(l_{ij})_{n\times n}\in \mathscr {A}^{n\times n}\) are the connection weight matrices without and with time delay. \(f(z(t))=(f_{1}(z_{1}(t)),~f_{2}(z_{2}(t)),~\cdots ,~f_{n}(z_{n}(t)))^{T}:\mathscr {A}^{n}\rightarrow \mathscr {A}^{n}\) is the vector-valued activation function. \(g(z(t-\tau ))=(g_{1}(z_{1}(t-\tau _{1})),~g_{2}(z_{2} (t-\tau _{2})),~\cdots ,~g_{n}(z_{n}(t-\tau _{n})))^{T}:\mathscr {A}^{n}\rightarrow \mathscr {A}^{n}\) is the vector-valued activation function with time delay, where elements of f(z(t)) and g(z(t)) are composed of Clifford-valued nonlinear functions. \(\tau _{i}~(i=1,2,\cdots ,n)\) and \( u=(u_{1},u_{2},\cdots ,u_{n})^{T}\in \mathscr {A}^{n}\) are constant time delays and the external input vector, respectively.

We now present a definition and some lemmas that will be used in the following stability analysis.

Definition 1

A vector \(z\in \mathscr {A}^{n}\) is called an equilibrium point of the Clifford-valued RNN (2) if it satisfies

\[-Dz+Kf(z)+Lg(z)+u=0.\]

Lemma 1

([15]) If \(H(x):\mathbb {R}^{2^{m}n}\rightarrow \mathbb {R}^{2^{m}n}\) is a continuous function and satisfies the following conditions:

(1) H(x) is injective on \(\mathbb {R}^{2^{m}n};\)

(2) \(\Vert H(x)\Vert \rightarrow \infty \) as \(\Vert x\Vert \rightarrow \infty \), where \(\Vert \cdot \Vert \) denotes the norm of \(\mathbb R^{2^{m}n};\)

then H(x) is a homeomorphism of \(\mathbb {R}^{2^{m}n}\).

Lemma 2

([37]) For positive definite matrix \(P\in \mathbb {R}^{n\times n}\), positive real constant \(\varepsilon \) and \(a,b\in \mathbb {R}^{n}\), it holds that \(a^{T}b+b^{T}a\le \varepsilon a^{T}Pa+\varepsilon ^{-1}b^{T}P^{-1}b.\)
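For completeness, Lemma 2 can be verified by completing the square. The short derivation below is a sketch added for the reader and is not taken from [37]. It uses the symmetric positive definite square root \(P^{1/2}\) of \(P\), a notation that does not appear elsewhere in the paper.

```latex
\begin{aligned}
0 &\le \left\| \varepsilon^{1/2} P^{1/2} a - \varepsilon^{-1/2} P^{-1/2} b \right\|^{2} \\
  &= \varepsilon\, a^{T} P a - a^{T} P^{1/2} P^{-1/2} b - b^{T} P^{-1/2} P^{1/2} a
     + \varepsilon^{-1} b^{T} P^{-1} b \\
  &= \varepsilon\, a^{T} P a + \varepsilon^{-1} b^{T} P^{-1} b - \left( a^{T} b + b^{T} a \right).
\end{aligned}
```

Rearranging the last line gives \(a^{T}b+b^{T}a\le \varepsilon a^{T}Pa+\varepsilon ^{-1}b^{T}P^{-1}b\), with equality exactly when \(\varepsilon Pa=b\).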

Lemma 3

(Schur Complement) Given constant matrices P, Q and R, where \(P^{T}=P\), \(Q^{T}=Q\), then

is equivalent to the following inequalities

Assumption 1

The functions \(f_{i}(z),~g_{i}(z)~(i=1,2,\cdots ,n)\) satisfy a Lipschitz continuity condition with respect to the Clifford variable. That is, for each \(i=1,2,\cdots ,n,\) there exist positive constants \(\xi _{i},~\eta _{i}\) such that for any \(z, z'\in \mathscr {A}\),

where \(\xi _{i},~\eta _{i}~(i=1,2,\cdots ,n)\) are called Lipschitz constants.

3 Main Results

Firstly, we rewrite the Clifford-valued RNNs with the help of \(e_{A}\bar{e}_{A}=\bar{e}_{A}e_{A}=1\) and \(e_{B}\bar{e}_{A}e_{A}=e_{B}\). From the definition of \(K^C\), it is easy to find a unique \(K^C\) satisfying \(K^{C}e_{C}f^{A}e_{A}=(-1)^{n(B\cdot \bar{A})}K^{C}f^{A}e_{B}=K^{B\cdot \bar{A}}f^{A}e_{B}\), which implies the following system transformation. Decomposing (2) into \(\dot{z}=\sum \limits _{A}\dot{z}^{A}e_{A}\), it follows that

where \( K^{A}=(k_{ij}^{A})_{n\times n}, ~L^{A}=(l_{ij}^{A})_{n\times n}, ~u^{A}=(u_{1}^{A},u_{2}^{A},\cdots ,u_{n}^{A})^{T},\) and \(f^{A}(z)=(f_{1}^{A}(z_{1}),f_{2}^{A}(z_{2}), \cdots ,f_{n}^{A}(z_{n}))^{T}, g^{A}(z^{\tau })=(g_{1}^{A}(z_{1}^{\tau }),g_{2}^{A}(z_{2}^{\tau }),\cdots ,g_{n}^{A}(z_{n}^{\tau }))^{T}\).
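The component-wise rewriting above can be illustrated for a single Clifford weight (the case \(n=1\)). The Python sketch below is an illustration under stated assumptions, not the construction used in the paper. It assumes the generator relations \(e_{i}^{2}=-1\), \(e_{i}e_{j}=-e_{j}e_{i}\) (\(i\ne j\)), indexes basis elements by bitmask rather than in the paper's ordering, and assumes that the real matrix acting on the stacked components has entry \(K^{B\cdot \bar{A}}\) in row \(B\) and column \(A\). The function names are hypothetical.

```python
def blade_mul(a, b):
    """Return (sign, c) with e_a * e_b = sign * e_c, blades given as bitmasks."""
    swaps = 0
    t = a >> 1
    while t:
        swaps += bin(t & b).count("1")   # reordering swaps, each a factor -1
        t >>= 1
    squares = bin(a & b).count("1")      # shared generators, e_i * e_i = -1
    return (-1 if (swaps + squares) % 2 else 1), a ^ b


def bar_sign(a):
    """Sign of the involution on e_a: (-1)^(n(a)(n(a)+1)/2)."""
    n = bin(a).count("1")
    return -1 if (n * (n + 1) // 2) % 2 else 1


def index_and_sign(b, a):
    """Return (C, n) such that e_B * bar(e_A) = (-1)^n * e_C."""
    s, c = blade_mul(b, a)
    s *= bar_sign(a)
    return c, (0 if s > 0 else 1)


def real_matrix(k):
    """Real matrix with entry K^{B.A-bar} = (-1)^{n(B.A-bar)} K^C at (B, A)."""
    size = len(k)
    rows = []
    for b in range(size):
        row = []
        for a in range(size):
            c, n = index_and_sign(b, a)
            row.append((-1) ** n * k[c])
        rows.append(row)
    return rows


def clifford_mul(x, y):
    """Direct Clifford product, used only to cross-check the real matrix."""
    out = [0] * len(x)
    for a, xa in enumerate(x):
        for b, yb in enumerate(y):
            s, c = blade_mul(a, b)
            out[c] += s * xa * yb
    return out


if __name__ == "__main__":
    k = [1, 2, -1, 0, 3, 0, -2, 1]    # coefficients K^C of one weight, m = 3
    f = [0, -1, 4, 2, 0, 1, 1, -3]    # coefficients f^A of one activation value
    k_bar = real_matrix(k)
    matvec = [sum(k_bar[b][a] * f[a] for a in range(8)) for b in range(8)]
    print(matvec == clifford_mul(k, f))
```

With this arrangement, multiplying the real matrix by the stacked coefficients of \(f\) reproduces the coefficients of the Clifford product \(Kf\), which is the mechanism behind passing from the Clifford-valued system to a real-valued one of dimension \(2^{m}n\).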

Using the basis of the Clifford algebra, we now rewrite the Clifford-valued RNNs as new real-valued ones.

Let

then it is deduced from (5) that

Meanwhile, we can obtain the following inequalities:

according to

from Assumption 1, where

Theorem 1

Under Assumption 1, the Clifford-valued RNN (2) has a unique equilibrium point, and this point is globally asymptotically stable, if there exist a positive definite matrix \(P\in \mathbb {R}^{(2^{m}n)\times (2^{m}n)}\) and positive real constants \(\varepsilon _{1}, \varepsilon _{2}\) such that the following LMI holds:

Proof

It is obvious that the existence and uniqueness of the equilibrium point of the real-valued system (6) as well as its global asymptotic stability are equivalent to those of the Clifford-valued RNNs (2).

Define \(H(w)=-\bar{D}w+\bar{K}\bar{f}(w)+\bar{L}\bar{g}(w)+\bar{u}\) for convenience. First, we prove the injectivity of the map H(w) under the given condition. Suppose that there exist w and \(w'~(w'\ne w)\) satisfying \(H(w')=H(w)\); then we get

Left-multiplying both sides of the above equation by \(2(w-w')^{T}P\) gives that

that is to say

Using Lemma 2 and (7)–(8), the left side of equality (11) can be transformed into the following form

Due to Schur Complement and LMI condition (9), one derives

Therefore, \(H(w)-H(w')<0\), which is a contradiction to (11), and hence the map H(w) is injective.

Secondly, we show that \(\Vert H(w)\Vert \rightarrow \infty \) as \(\Vert w\Vert \rightarrow \infty \). It follows from (13) that

holds for some sufficiently small \(\varepsilon >0\). Setting \(w'=0\), we have

From the above inequality and the Cauchy–Schwarz inequality, it is obtained that

which means

Therefore, \(\Vert H(w)\Vert \rightarrow \infty \) as \(\Vert w\Vert \rightarrow \infty \). According to Lemma 1, the map H(w) is a homeomorphism on \(\mathbb {R}^{2^{m}n}\). Thus there exists a unique equilibrium point \(\hat{w}\) for (6).

In the following, we prove the global asymptotic stability of (6). First of all, we shift the equilibrium point of (6) to the origin by the transformation \(\tilde{w}=w-\hat{w}\), and rewrite (6) as

where \(\tilde{f}(\tilde{w})=\bar{f}(\tilde{w}+\hat{w})-\bar{f}(\hat{w})\) and \(\tilde{g}(\tilde{w}^{\tau })=\bar{g}(\tilde{w}^{\tau }+\hat{w})-\bar{g}(\hat{w})\). It is clear that the system (6) is globally asymptotically stable if the system (14) is globally asymptotically stable for the origin. Construct the following Lyapunov-Krasovskii functional:

The time derivative of \(V(\tilde{w}(t))\) along the trajectories of system (14) is given by

Considering the inequality condition (9) and the Schur Complement, we can get \(\bar{D}P+P\bar{D}-\varepsilon _{1}^{-1}P\bar{K}\bar{K}^{T} P-\varepsilon _{2}^{-1}P\bar{L}\bar{L}^{T}P -\varepsilon _{1}\bar{M}-\varepsilon _{2}\bar{N}>0\), which means \(\dot{V}(\tilde{w}(t))<0\) when \(\tilde{w}(t)\ne 0\). Therefore, the Clifford-valued RNNs (2) are globally asymptotically stable. \(\square \)

Remark 1

The obtained result can be easily applied to real-valued and complex-valued RNNs. When \(m=0\), the considered system reduces to a real-valued RNN. For \(m=1\), it can be regarded as a complex-valued RNN. In [15], the nonsingular M-matrix was used in the stability analysis of complex-valued RNNs. Comparing Assumption 1 in this paper with Assumption 1 of [15], we notice that [15] needs additional restrictions on the existence, continuity and boundedness of the partial derivatives of the activation functions. When \(m=2\), we obtain the global asymptotic stability of quaternion-valued neural networks, which has not been investigated either.

Corollary 1

Under Assumption 1, the Clifford-valued RNN (2) has a unique equilibrium point, and this point is globally exponentially stable, if there exist a positive definite matrix P and a scalar \(k>0\) such that the following LMI holds:

Moreover

Proof

The proof is similar to that of Theorem 1 and hence is omitted here. The main idea is sketched as follows.

The inequality (12) can be relaxed to the following form:

On the other hand, the corresponding Lyapunov-Krasovskii functional takes the following form:

based on which the global exponential stability could be obtained. \(\square \)

Remark 2

In [15–17], delay-independent global asymptotic stability of complex-valued RNNs is studied, while Corollary 1 gives a delay-dependent global exponential stability criterion, which is less conservative. Moreover, the exponential convergence rate is also estimated in the obtained results.

4 An Example

In this section, we will demonstrate Theorem 1 through the following example.

Consider a two-neuron Clifford-valued RNN described by

where

and the activation functions are

with \(z_{j}=\sum \limits _Ax_{j}^{A}e_{A}\in \mathscr {A}\) for \(j=1,~2\). We choose the constant delay parameters \(\tau =\{\tau _{1}, \tau _{2}\}=\{0.5, 1\}\) and the initial state \(\varphi _{1}(t)=2(2.5e_{0}-4.5e_{1}+4e_{2}-3e_{3}+1.5e_{12}-2e_{13}+6e_{23}-e_{123})\) for \(t \in [-\tau _{1},0]\), and \(\varphi _{2}(t)=2(-2.5e_{0}+1.5e_{1}-9e_{2}+3e_{3}-6e_{12}+5e_{13}-4e_{23}+8.5e_{123})\) for \(t \in [-\tau _{2},0]\). According to their definitions, we have

Based on the definition of \(\bar{K}\) and \(\bar{L}\), we can obtain

It can be checked that when \(\varepsilon _{1}=\varepsilon _{2}=1\), there exists a positive definite matrix \(P=(P_{1}~~P_{2}~~P_{3})\) satisfying (9) with

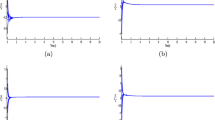

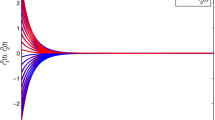

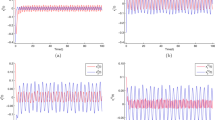

Therefore, by Theorem 1, the system is globally asymptotically stable. The numerical simulations of the trajectories are shown in Figs. 1 and 2. In these two figures, each neuron has 8 curves corresponding to its components.
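To make the simulation step concrete, the following Python sketch integrates a scalar real-valued delayed network (the case \(m=0\), \(n=1\)) with the forward Euler method and a history buffer. It is a toy illustration and not the two-neuron Clifford-valued example above: the parameters `d`, `k`, `l`, `u`, the activation `tanh` (Lipschitz constant 1), the step size and the function names are all hypothetical. The helper `scalar_condition` evaluates a scalar analogue of the matrix inequality used in the proof of Theorem 1, under the assumption that \(\bar{M}\) and \(\bar{N}\) reduce to \(\xi ^{2}\) and \(\eta ^{2}\) in the scalar case.

```python
import math


def scalar_condition(d, k, l, xi=1.0, eta=1.0, p=1.0, eps1=1.0, eps2=1.0):
    """Scalar analogue of DP + PD - P K K^T P/eps1 - P L L^T P/eps2 - eps1*M - eps2*N.

    Assumes M = xi**2 and N = eta**2; a positive value indicates the
    sufficient condition holds for this toy system.
    """
    return (2 * d * p - p * p * k * k / eps1 - p * p * l * l / eps2
            - eps1 * xi ** 2 - eps2 * eta ** 2)


def simulate(d=2.0, k=0.5, l=0.5, u=0.3, tau=0.5, x0=2.0, dt=0.01, t_end=30.0):
    """Forward Euler for x'(t) = -d x(t) + k tanh(x(t)) + l tanh(x(t - tau)) + u."""
    delay_steps = round(tau / dt)
    # Constant initial function on [-tau, 0], stored as a history buffer.
    xs = [x0] * (delay_steps + 1)
    for _ in range(round(t_end / dt)):
        x_now = xs[-1]
        x_delayed = xs[-1 - delay_steps]   # state tau time units earlier
        dx = -d * x_now + k * math.tanh(x_now) + l * math.tanh(x_delayed) + u
        xs.append(x_now + dt * dx)
    return xs


if __name__ == "__main__":
    print("condition value:", scalar_condition(2.0, 0.5, 0.5))
    x_final = simulate()[-1]
    # At an equilibrium the right-hand side vanishes with x(t - tau) = x(t).
    residual = -2.0 * x_final + 0.5 * math.tanh(x_final) + 0.5 * math.tanh(x_final) + 0.3
    print("final state:", x_final, "residual:", residual)
```

For a Clifford-valued network, the same scheme would be applied to the real-valued system of dimension \(2^{m}n\), with one history buffer per delay.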

5 Conclusion

In this paper, we have studied delayed Clifford-valued RNNs and explored the existence of a unique equilibrium and the stability of such systems. Sufficient conditions ensuring the global asymptotic stability and the global exponential stability of the delayed Clifford-valued RNNs have been obtained in terms of LMIs. When the system reduces to a complex-valued (\(m=1\)) or real-valued (\(m=0\)) neural network, the corresponding stability criterion is obtained as a special case. Finally, an example has been given to show the effectiveness of the results.

References

1. Yang, X., Cao, J., Lu, J.: Synchronization of coupled neural networks with random coupling strengths and mixed probabilistic time-varying delays. Int. J. Robust Nonlin. Control 23(18), 2060–2081 (2013)

2. Tang, Y., Wong, W.: Distributed synchronization of coupled neural networks via randomly occurring control. IEEE Trans. Neural Netw. Learn. Syst. 24(3), 435–447 (2013)

3. Wu, X., Tang, Y., Zhang, W.: Stability analysis of switched stochastic neural networks with time-varying delays. Neural Netw. 51, 39–49 (2014)

4. Zhang, W., Tang, Y., Fang, Ja, Wu, X.: Stability of delayed neural networks with time-varying impulses. Neural Netw. 36, 59–63 (2012)

5. Wu, Z., Su, H., Chu, J., Zhou, W.: Improved delay-dependent stability condition of discrete recurrent neural networks with time-varying delays. IEEE Trans. Neural Netw. 21(4), 692–697 (2010)

6. Liu, Y., Wang, Z., Liang, J., Liu, X.: Synchronization of coupled neutral-type neural networks with jumping-mode-dependent discrete and unbounded distributed delays. IEEE Trans. Cybern. 43(1), 102–114 (2013)

7. Liu, Y., Wang, Z., Liang, J., Liu, X.: Stability and synchronization of discrete-time Markovian jumping neural networks with mixed mode-dependent time delays. IEEE Trans. Neural Netw. 20(7), 1102–1116 (2009)

8. Liang, J., Wang, Z., Liu, Y., Liu, X.: Robust synchronization of an array of coupled stochastic discrete-time delayed neural networks. IEEE Trans. Neural Netw. 19(11), 1910–1921 (2008)

9. Lu, J., Ho, D.W., Cao, J., Kurths, J.: Exponential synchronization of linearly coupled neural networks with impulsive disturbances. IEEE Trans. Neural Netw. 22(2), 329–336 (2011)

10. Yang, X., Cao, J., Lu, J.: Synchronization of Markovian coupled neural networks with nonidentical node-delays and random coupling strengths. IEEE Trans. Neural Netw. Learn. Syst. 23(1), 60–71 (2012)

11. Wu, B., Liu, Y., Lu, J.: New results on global exponential stability for impulsive cellular neural networks with any bounded time-varying delays. Math. Comput. Model. 55(3), 837–843 (2012)

12. Yang, R., Wu, B., Liu, Y.: A Halanay-type inequality approach to the stability analysis of discrete-time neural networks with delays. Appl. Math. Comput. 265, 696–707 (2015)

13. Zhu, Q., Cao, J., Rakkiyappan, R.: Exponential input-to-state stability of stochastic Cohen-Grossberg neural networks with mixed delays. Nonlin. Dyn. 79(2), 1085–1098 (2015)

14. Rakkiyappan, R., Sivasamy, R., Cao, J.: Stochastic sampled-data stabilization of neural-network-based control systems. Nonlin. Dyn. pp. 1–17 (2015)

15. Hu, J., Wang, J.: Global stability of complex-valued recurrent neural networks with time-delays. IEEE Trans. Neural Netw. Learn. Syst. 23(6), 853–865 (2012)

16. Fang, T., Sun, J.: Further investigate the stability of complex-valued recurrent neural networks with time-delays. IEEE Trans. Neural Netw. Learn. Syst. 25(9), 1709–1713 (2014)

17. Zhang, Z., Lin, C., Chen, B.: Global stability criterion for delayed complex-valued recurrent neural networks. IEEE Trans. Neural Netw. Learn. Syst. 25(9), 1704–1718 (2014)

18. Chen, X., Song, Q.: Global stability of complex-valued neural networks with both leakage time delay and discrete time delay on time scales. Neurocomputing 121, 254–264 (2013)

19. Hirose, A.: Complex-valued neural networks. Springer, Berlin (2006)

20. Velmurugan, G., Rakkiyappan, R., Cao, J.: Further analysis of global \(\mu \)-stability of complex-valued neural networks with unbounded time-varying delays. Neural Netw. 67, 14–27 (2015)

21. Rakkiyappan, R., Velmurugan, G., Cao, J.: Finite-time stability analysis of fractional-order complex-valued memristor-based neural networks with time delays. Nonlin. Dyn. 78(4), 2823–2836 (2014)

22. Shang, F., Hirose, A.: Quaternion neural-network-based PolSAR land classification in Poincare-sphere-parameter space. IEEE Trans. Geosci. Remote Sens. 52(9), 5693–5703 (2014)

23. Isokawa, T., Kusakabe, T., Matsui, N., Peper, F.: Quaternion neural network and its application. In: Knowledge-Based Intelligent Information and Engineering Systems, pp. 318–324. Springer (2003)

24. Hitzer, E., Nitta, T., Kuroe, Y.: Applications of Clifford’s geometric algebra. Adv. Appl. Clifford Algebras 23(2), 377–404 (2013)

25. Dorst, L., Fontijne, D., Mann, S.: Geometric algebra for computer science (revised edition): An object-oriented approach to geometry. Morgan Kaufmann (2009)

26. Bayro-Corrochano, E., Scheuermann, G.: Geometric Algebra Computing: In Engineering and Computer Science. Springer, London Limited, London (2010)

27. Rivera-Rovelo, J., Bayro-Corrochano, E.: Medical image segmentation using a self-organizing neural network and Clifford geometric algebra. In: International Joint Conference on Neural Networks, pp. 3538–3545 (2006)

28. Kuroe, Y.: Models of Clifford recurrent neural networks and their dynamics. In: International Joint Conference on Neural Networks, pp. 1035–1041 (2011)

29. Bayro Corrochano, E., Buchholz, S., Sommer, G.: Selforganizing Clifford neural network. In: IEEE International Conference on Neural Networks, pp. 120–125 (1996)

30. Buchholz, S., Tachibana, K., Hitzer, E.M.: Optimal learning rates for Clifford neurons. In: International Conference on Artificial Neural Networks, pp. 864–873. Springer (2007)

31. Pearson, J., Bisset, D.: Back propagation in a Clifford algebra. Artif. Neural Netw. 2, 413–416 (1992)

32. Pearson, J., Bisset, D.: Neural networks in the Clifford domain. In: International Conference on Neural Networks, pp. 1465–1469 (1994)

33. Buchholz, S.: A theory of neural computation with Clifford algebras. Ph.D. thesis, University of Kiel (2005)

34. Buchholz, S., Sommer, G.: On Clifford neurons and Clifford multi-layer perceptrons. Neural Netw. 21(7), 925–935 (2008)

35. Li, H., Olver, P.J., Sommer, G. (eds.): Computer algebra and geometric algebra with applications. Springer, Berlin (2005)

36. Kuroe, Y., Tanigawa, S., Iima, H.: Models of Hopfield-type Clifford neural networks and their energy functions-hyperbolic and dual valued networks. In: Neural Information Processing, pp. 560–569. Springer (2011)

37. Liao, X., Chen, G., Sanchez, E.N.: Delay-dependent exponential stability analysis of delayed neural networks: an LMI approach. Neural Netw. 15(7), 855–866 (2002)

38. Liu, Y., Wang, Z., Liu, X.: Global exponential stability of generalized recurrent neural networks with discrete and distributed delays. Neural Netw. 19(5), 667–675 (2006)

39. Song, X., Wang, C., Ma, J., Tang, J.: Transition of electric activity of neurons induced by chemical and electric autapses. Sci. China Technol. Sci. 58(6), 1007–1014 (2015)

40. Qin, H., Ma, J., Jin, W., Wang, C.: Dynamics of electric activities in neuron and neurons of network induced by autapses. Sci. China Technol. Sci. 57(5), 936–946 (2014)

41. Song, B., Park, J.H., Wu, Z.G., Zhang, Y.: New results on delay-dependent stability analysis for neutral stochastic delay systems. J. Franklin Inst. 350(4), 840–852 (2013)

42. Brackx, F., Delanghe, R., Sommen, F.: Clifford analysis, vol. 76. Pitman Books Limited, London (1982)

Acknowledgments

The authors wish to thank the editor and reviewers for a number of constructive comments and suggestions that have improved the quality of the paper.

Additional information

This work was partially supported by Zhejiang Provincial Natural Science Foundation of China under Grant no. LY14A010008, the National Natural Science Foundation of China under Grant Nos. 61573102, 61374077, 61174136, and 61175119, and the China Postdoctoral Science Foundation under Grant No. 2015M580378.

Cite this article

Liu, Y., Xu, P., Lu, J. et al. Global stability of Clifford-valued recurrent neural networks with time delays. Nonlinear Dyn 84, 767–777 (2016). https://doi.org/10.1007/s11071-015-2526-y
