Abstract

In this paper, we study the indirect stability of the Timoshenko system with local or global Kelvin–Voigt damping, under fully Dirichlet or mixed boundary conditions. Unlike Zhao et al. (Acta Mathematica Sinica Engl Ser 21(3):655–666, 2004), Tian and Zhang (Mathematik und Physik 68(1), 2017), and Liu and Zhang (SIAM J Control Optim 56(6):3919–3947, 2018), we consider the Timoshenko system with only one locally or globally distributed Kelvin–Voigt damping D [see System (1.1)]. We prove that the energy of the system decays polynomially at the rate \(t^{-1}\) and that this decay rate is in some sense optimal. The method is based on the frequency domain approach combined with the multiplier method.

1 Introduction

In this paper, we study the indirect stability of a one-dimensional Timoshenko system with only one local or global Kelvin–Voigt damping. This system consists of two coupled hyperbolic equations:

System (1.1) is subject to the following initial conditions:

in addition to the following boundary conditions:

or

Here, the coefficients \(\rho _1,\ \rho _2,\ k_1\), and \(k_2\) are positive constants, and the function \(D\in L^{\infty }(0,L)\) satisfies \(D(x)\ge 0\) for all \(x\in [0, L]\). We assume that there exist \(D_0>0\) and \(\alpha ,\ \beta \in \mathbb {R}\) with \(0\le \alpha < \beta \le L\) such that:

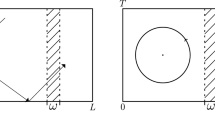

Hypothesis (H) allows the control D to be localized near the boundary (see Fig. 1a, b), localized in the interior (see Fig. 2a), or global (see Fig. 2b). In particular, when D is a local damping (i.e., \(\alpha \ne 0\) or \(\beta \ne L\)), D need not be continuous on (0, L) (see Figs. 1a, b and 2a).
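Hypothesis (H) itself is displayed as an image above. As an illustration only, the sketch below assumes that (H) requires \(D(x)\ge D_0\) for (almost) every \(x\in (\alpha ,\beta )\), in addition to \(D\in L^{\infty }(0,L)\) and \(D\ge 0\). It builds a piecewise-constant, locally internal damping that is bounded and nonnegative but discontinuous at \(\alpha \) and \(\beta \). The helper names `make_local_damping` and `satisfies_assumed_h` are hypothetical.

```python
# Hypothetical illustration. We ASSUME hypothesis (H) reads
#   D(x) >= D0 > 0 for (almost) every x in (alpha, beta),
# together with D in L^infty(0, L) and D >= 0 on [0, L].

def make_local_damping(alpha, beta, d0, length):
    """Return a piecewise-constant Kelvin-Voigt coefficient D on [0, length].

    D equals d0 on the open interval (alpha, beta) and 0 elsewhere, so it is
    bounded and nonnegative but jumps at x = alpha and x = beta whenever
    0 < alpha < beta < length (a locally internal damping).
    """
    if not (0.0 <= alpha < beta <= length) or d0 <= 0.0:
        raise ValueError("need 0 <= alpha < beta <= length and d0 > 0")

    def damping(x):
        if not 0.0 <= x <= length:
            raise ValueError("x must lie in [0, length]")
        return d0 if alpha < x < beta else 0.0

    return damping


def satisfies_assumed_h(damping, alpha, beta, d0, length, samples=1000):
    """Sample-based check of the ASSUMED form of (H) on a uniform grid."""
    for i in range(samples + 1):
        x = length * i / samples
        value = damping(x)
        if value < 0.0:
            return False
        if alpha < x < beta and value < d0:
            return False
    return True


if __name__ == "__main__":
    D = make_local_damping(0.25, 0.5, 2.0, 1.0)
    print(D(0.1), D(0.3), D(0.9))                          # 0.0 2.0 0.0
    print(satisfies_assumed_h(D, 0.25, 0.5, 2.0, 1.0))     # True
```

A grid check like this cannot certify an \(L^\infty \) condition; it only makes the assumed shape of (H) concrete.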

The Timoshenko system is commonly used to describe the transverse vibration of a beam when damping effects of any kind are ignored. The model, see Timoshenko (1921), reads:

where \(\varphi \) is the transverse displacement of the beam and \(\psi \) is the rotation angle of the filament of the beam. The coefficients \(\rho ,\ I_\rho ,\ E,\ I,\) and K are, respectively, the density (the mass per unit length), the polar moment of inertia of a cross section, Young’s modulus of elasticity, the moment of inertia of a cross section, and the shear modulus.

The stabilization of the Timoshenko system with different kinds of damping has been studied in a number of publications. For internal stabilization, Raposo et al. (2005) showed that the Timoshenko system with two internal distributed dissipations is exponentially stable, and Messaoudi and Mustafa (2008) extended this result to nonlinear feedback laws. Soufyane and Wehbe (2003) showed that the Timoshenko system with one internal distributed dissipation law is exponentially stable if and only if the wave propagation speeds are equal (i.e., \(k_1/\rho _1=k_2/\rho _2\)); otherwise, only strong stability holds. Muñoz Rivera and Racke (2008) improved the results of Soufyane and Wehbe (2003) by establishing exponential decay of the solution while allowing the feedback coefficient to have an indefinite sign. Wehbe and Youssef (2009) proved that the Timoshenko system with one locally distributed viscous feedback is exponentially stable if and only if the wave propagation speeds are equal (i.e., \(k_1/\rho _1=k_2/\rho _2\)); otherwise, only polynomial stability holds. Tebou (2015) showed that the Timoshenko beam with the same feedback control in both equations is exponentially stable. The stability of the Timoshenko system with thermoelastic dissipation has been studied in Sare and Racke (2009), Júnior et al. (2013), Fatori et al. (2014), and Hao and Wei (2018), and the stability of the Timoshenko system with memory-type damping in Ammar-Khodja et al. (2003), Sare and Racke (2009), Guesmia and Messaoudi (2009), Messaoudi and Said-Houari (2009), and Abdallah et al. (2018). For the boundary stabilization of the Timoshenko beam, Kim and Renardy (1987) showed that the Timoshenko beam under two boundary controls is exponentially stable. Ammar-Khodja et al. (2007) studied the decay rate of the energy of the nonuniform Timoshenko beam with two boundary controls acting in the rotation-angle equation. Under the equal-speed wave propagation condition, they established exponential decay results up to an unknown finite-dimensional space of initial data, and they showed that this condition is necessary for exponential stability. However, no decay rate was discussed in the non-equal speed case. This result was recently improved by Bassam et al. (2015), who proved non-uniform stability and an optimal polynomial energy decay rate for the Timoshenko system with only one dissipation law on the boundary. We also mention Akil et al. (2019) and Benaissa and Benazzouz (2017) for the stability of the Timoshenko system with fractional damping on the boundary. For the stabilization of the Timoshenko beam with nonlinear terms, we mention Muñoz Rivera and Racke (2002), Alabau-Boussouira (2004), Araruna and Zuazua (2008), Messaoudi and Mustafa (2008), Cavalcanti et al. (2013), and Hao and Wei (2018).

Kelvin–Voigt material is a viscoelastic structure having properties of both elasticity and viscosity. There are a number of publications concerning the stabilization of the wave equation with global or local Kelvin–Voigt damping. For the global case, the authors in Huang (1988) and Liu et al. (1998) proved the analyticity and the exponential stability of the semigroup. When the Kelvin–Voigt damping is localized on an interval of the string, the regularity and stability of the solution depend on the properties of the damping coefficient. Notably, the system is more effectively controlled by the local Kelvin–Voigt damping when the coefficient changes more smoothly near the interface (see Liu and Liu 1998; Renardy 2004; Zhang 2010; Liu and Zhang 2016; Liu et al. 2017).

Finally, the stability of the Timoshenko system with Kelvin–Voigt damping has been studied in only a few papers. Zhao et al. (2004) considered the Timoshenko system with locally distributed Kelvin–Voigt damping:

They proved that the energy of System (1.5) subject to Dirichlet–Neumann boundary conditions decays exponentially when the coefficient functions \(D_1,\ D_2 \in C^{1,1}([0, L])\) satisfy \(D_1 \le c D_2\) for some \(c > 0\). Tian and Zhang (2017) considered the Timoshenko System (1.5) under fully Dirichlet boundary conditions with locally or globally distributed Kelvin–Voigt damping, with coefficient functions \(D_1,\ D_2 \in C([0, L])\). First, when the Kelvin–Voigt damping is globally distributed, they showed that the associated semigroup is analytic. Next, for local Kelvin–Voigt damping, they analyzed exponential and polynomial stability according to the properties of the coefficient functions \(D_1,\ D_2.\) Liu and Zhang (2018) considered the Timoshenko System (1.5) on \((-1,1)\times \mathbb {R}_{+}\) under fully Dirichlet boundary conditions, with \(D_i \in L^\infty (-1,1)\), \(D_i(x)=0\) for \(x\in [-1,0],\) and \(D_i\) positive and continuous on \((0,1],\) for \(i=1,2.\) They also assumed that there exist positive constants \(k_1\), \(k_2\) and nonnegative constants \(\alpha _1,\ \alpha _2\) such that \(\lim _{x\rightarrow 0^+}{D_i(x)}/{x^{\alpha _i}}=k_i\) for \(i=1,2\), and analyzed exponential and polynomial stability according to the properties of \(D_1,\ D_2.\) From the above, we conclude that the number of dampings and their localization play a crucial role in the stabilization of the system. In practice, implementing more than one damping may be impossible or expensive, and the damping region cannot always be specified. These restrictions motivate us to study the stabilization of System (1.1) in a general setting.
Thus, in this paper, unlike Zhao et al. (2004), Tian and Zhang (2017) and Liu and Zhang (2018), we consider the Timoshenko system with only one locally (distributed in any subinterval of the domain) or globally distributed Kelvin–Voigt damping D [see System (1.1)]. Under hypothesis (H), we show that the energy of the Timoshenko System (1.1) subject to the initial conditions (1.2) and either the boundary conditions (1.3) or (1.4) decays polynomially at the rate \(t^{-1}\), and that this decay rate is in some sense optimal.

This paper is organized as follows. In Sect. 2, we first show that the Timoshenko System (1.1) subject to the initial conditions (1.2) and either the boundary conditions (1.3) or (1.4) can be reformulated as an evolution equation, and we deduce its well-posedness by the semigroup approach. Second, using a criterion of Arendt and Batty (1988), we show that our system is strongly stable. In Sect. 3, we prove the polynomial energy decay rate of type \(t^{-1}\) for System (1.1)–(1.2) subject to either the boundary conditions (1.3) or (1.4). In Sect. 4, we prove that the energy decay rate of type \(t^{-1}\) is in some sense optimal.

2 Well-posedness and strong stability

2.1 Well-posedness of the problem

In this part, under condition (H), we use a semigroup approach to establish a well-posedness result for the Timoshenko System (1.1)–(1.2) subject to either the boundary conditions (1.3) or (1.4). The energy of solutions of System (1.1) subject to the initial conditions (1.2) and either the boundary conditions (1.3) or (1.4) is defined by:

Let \(\left( u,y\right) \) be a regular solution of System (1.1). Multiplying the first and second equations of (1.1) by \(u_t\) and \(y_t,\) respectively, integrating over (0, L), and using the boundary conditions (1.3) or (1.4), we get:

Thus, System (1.1) subject to the initial conditions (1.2) and either the boundary conditions (1.3) or (1.4) is dissipative in the sense that its energy is non-increasing with respect to time t. Let us define the energy spaces \(\mathcal {H}_1\) and \(\mathcal {H}_2\) by:

and

such that:

It is easy to check that the space \(H_*^1\) is a Hilbert space over \(\mathbb {C}\) when equipped with the norm:

where \(\Vert \cdot \Vert \) denotes the usual norm of \(L^2\left( 0,L\right) \). Both energy spaces \(\mathcal {H}_1\) and \(\mathcal {H}_2\) are equipped with the inner product defined by:

for all \(U=\left( u,v,y,z\right) \) and \(\Phi =\left( \phi ,\varphi ,\psi ,\theta \right) \) in \(\mathcal {H}_j\), \(j=1,2\). We use \(\Vert U\Vert _{\mathcal {H}_j}\) to denote the corresponding norms. We now define the following unbounded linear operators \(\mathcal {A}_j\) in \(\mathcal {H}_j\) by:

and for \(j=1,2:\)

If \(U=\left( u,u_t,y,y_t\right) \) is the state of System (1.1)–(1.2) subject to either the boundary conditions (1.3) or (1.4), then the Timoshenko system is transformed into a first-order evolution equation on the Hilbert space \(\mathcal {H}_j\):

where:

Proposition 2.1

Under hypothesis (H), for \(j=1,2,\) the unbounded linear operator \(\mathcal {A}_j\) is m-dissipative in the energy space \(\mathcal {H}_j\).

Proof

Let \(j\in \{1,2\}\). For \(U=(u,v,y,z)\in D\left( \mathcal {A}_j\right) \), one has:

which implies that \(\mathcal {A}_j\) is dissipative under hypothesis (H). Here, \(\Re \) denotes the real part of a complex number. We next prove the maximality of \(\mathcal {A}_j\). For \(F=(f_1,f_2,f_3,f_4)\in \mathcal {H}_j\), we prove the existence of a unique solution \(U=(u,v,y,z)\in D(\mathcal {A}_j)\) of the equation:

Equivalently, one must consider the system given by:

with the boundary conditions:

Using the fact that \(F\in \mathcal {H}_j,\) we get that \((v,z)\in \mathcal {V}_j(0,L)\), where \(\mathcal {V}_1(0,L)=H_0^1(0,L)\times H_0^1(0,L)\) and \(\mathcal {V}_2(0,L)=H_0^1(0,L)\times H_*^1(0,L)\). Now, let \((\varphi ,\psi )\in \mathcal {V}_j(0,L)\). Multiplying Eqs. (2.3) and (2.5) by \(\overline{\varphi }\) and \(\overline{\psi }\), respectively, integrating over (0, L), summing, and then using Eq. (2.4) and the boundary condition (2.6), we get:

The left-hand side of (2.7) is a continuous coercive sesquilinear form on \(\mathcal {V}_j(0,L)\times \mathcal {V}_j(0,L)\), and the right-hand side of (2.7) is a continuous conjugate-linear form on \(\mathcal {V}_j(0,L)\). Then, using the Lax–Milgram theorem [see Pazy (1983)], we deduce that there exists a unique solution \((u,y)\in \mathcal {V}_j(0,L)\) of the variational Problem (2.7). Thus, using (2.2), (2.4), and classical regularity arguments, we conclude that \(-\mathcal {A}_jU=F\) admits a unique solution \(U\in D\left( \mathcal {A}_j\right) \) and consequently \(0\in \rho (\mathcal {A}_j)\), where \(\rho \left( \mathcal {A}_j\right) \) denotes the resolvent set of \(\mathcal {A}_j\). Then, \(\mathcal {A}_j\) is closed and consequently \(\rho \left( \mathcal {A}_j\right) \) is an open subset of \(\mathbb {C}\) [see Theorem 6.7 in Kato (1995)]. Hence, \(\lambda \in \rho \left( \mathcal {A}_j\right) \) for sufficiently small \(\lambda >0 \). This, together with the dissipativity of \(\mathcal {A}_j\), implies that \(D\left( \mathcal {A}_j\right) \) is dense in \(\mathcal {H}_j\) and that \(\mathcal {A}_j\) is m-dissipative in \(\mathcal {H}_j\) [see Theorems 4.5, 4.6 in Pazy (1983)]. Thus, the proof is complete. \(\square \)
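For orientation, the two computations behind Proposition 2.1 can be sketched as follows. Since the displays of System (1.1), of the inner product, and of \(\mathcal {A}_j\) are not reproduced here, this sketch assumes the standard forms \(\rho _1u_{tt}-k_1(u_x+y)_x=0\) and \(\rho _2y_{tt}-(k_2y_x+D\,y_{xt})_x+k_1(u_x+y)=0\), together with the inner product and operator written below. It also assumes that the boundary terms produced by integration by parts vanish for \(U\in D(\mathcal {A}_j)\) (that is, \(v=0\) at \(x=0,L\), and either \(z=0\) or \(k_2y_x+Dz_x=0\) there). Under these assumptions, \(\langle \mathcal {A}_jU,U\rangle _{\mathcal {H}_j}\) equals \(-\int _0^LD|z_x|^2\,\mathrm{d}x\) plus a purely imaginary quantity, and solving \(-\mathcal {A}_jU=F\) reduces to \(v=-f_1\), \(z=-f_3\), and a variational problem for \((u,y)\):

$$\begin{aligned} \langle U,\Phi \rangle _{\mathcal {H}_j}&=\int _0^L\Big (\rho _1 v\overline{\varphi }+\rho _2 z\overline{\theta }+k_1(u_x+y)\overline{(\phi _x+\psi )}+k_2y_x\overline{\psi }_x\Big )\,\mathrm{d}x,\\ \mathcal {A}_jU&=\Big (v,\ \tfrac{k_1}{\rho _1}(u_x+y)_x,\ z,\ \tfrac{1}{\rho _2}(k_2y_x+Dz_x)_x-\tfrac{k_1}{\rho _2}(u_x+y)\Big ),\\ \Re \langle \mathcal {A}_jU,U\rangle _{\mathcal {H}_j}&=-\int _0^L D(x)\,|z_x|^2\,\mathrm{d}x\le 0,\\ \int _0^L\Big (k_1(u_x+y)\overline{(\varphi _x+\psi )}+k_2y_x\overline{\psi }_x\Big )\,\mathrm{d}x&=\int _0^L\Big (\rho _1f_2\overline{\varphi }+\rho _2f_4\overline{\psi }+D\,(f_3)_x\overline{\psi }_x\Big )\,\mathrm{d}x,\quad \forall \ (\varphi ,\psi )\in \mathcal {V}_j(0,L). \end{aligned}$$

The last line is our reading of the variational problem (2.7). The term \(D\,(f_3)_x\overline{\psi }_x\) comes from substituting \(z=-f_3\) into the Kelvin–Voigt flux \(k_2y_x+Dz_x\), and it defines a continuous form because \(f_3\in H^1(0,L)\) and \(D\in L^\infty (0,L)\).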

Thanks to the Lumer–Phillips theorem [see Liu and Zheng 1999; Pazy 1983], we deduce that \(\mathcal {A}_j\) generates a \(C_0\)-semigroup of contractions \(e^{t\mathcal {A}_j}\) in \(\mathcal {H}_j\), and therefore, Problem (2.1) is well posed. We thus have the following result.

Theorem 2.2

Under hypothesis (H), for \(j=1,2\) and for any \(U_0\in \mathcal {H}_j\), Problem (2.1) admits a unique weak solution \(U(x,t)=e^{t\mathcal {A}_j}U_0(x)\) such that \(U\in C\left( \mathbb {R}_{+};\mathcal {H}_j\right) .\) Moreover, if \(U_0\in D\left( \mathcal {A}_j\right) ,\) then \(U\in C\left( \mathbb {R}_{+};D\left( \mathcal {A}_j\right) \right) \cap C^1\left( \mathbb {R}_{+};\mathcal {H}_j\right) .\) \(\square \)
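To make the energy concrete, the following sketch evaluates \(E=\frac{1}{2}\Vert U\Vert ^2_{\mathcal {H}_j}\) numerically with the composite Simpson rule. It assumes the standard Timoshenko energy density \(\rho _1|v|^2+\rho _2|z|^2+k_1|u_x+y|^2+k_2|y_x|^2\) (with \(v=u_t\), \(z=y_t\)), which is our reading of the energy and inner product defined in Sect. 2.1; the function name `timoshenko_energy` and the dictionary keys are hypothetical.

```python
import math


def timoshenko_energy(fields, params, length, n=1000):
    """Approximate E = (1/2) * int_0^length of the ASSUMED energy density.

    fields: dict of callables "u_x", "y", "y_x", "v", "z" (v = u_t, z = y_t);
            values may be real or complex.
    params: dict with positive constants "rho1", "rho2", "k1", "k2".
    n:      number of Simpson subintervals (must be even).
    """
    if n % 2:
        raise ValueError("n must be even for Simpson's rule")
    h = length / n

    def density(x):
        shear = fields["u_x"](x) + fields["y"](x)          # u_x + y
        return (params["rho1"] * abs(fields["v"](x)) ** 2
                + params["rho2"] * abs(fields["z"](x)) ** 2
                + params["k1"] * abs(shear) ** 2
                + params["k2"] * abs(fields["y_x"](x)) ** 2)

    # Composite Simpson weights 1, 4, 2, 4, ..., 2, 4, 1.
    total = density(0.0) + density(length)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * density(i * h)
    return 0.5 * total * h / 3.0


if __name__ == "__main__":
    zero = lambda x: 0.0
    fields = {"u_x": lambda x: math.pi * math.cos(math.pi * x),
              "y": zero, "y_x": zero, "v": zero, "z": zero}
    params = {"rho1": 1.0, "rho2": 1.0, "k1": 1.0, "k2": 1.0}
    print(timoshenko_energy(fields, params, 1.0), math.pi ** 2 / 4)
```

For \(u(x)=\sin (\pi x)\) on (0, 1), all other fields zero, and all coefficients equal to 1, the exact value is \(\frac{1}{2}\int _0^1\pi ^2\cos ^2(\pi x)\,\mathrm{d}x=\pi ^2/4\), which the quadrature reproduces to high accuracy.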

Before stating the main results of this work, we recall the notions of stability used below.

Definition 2.3

Let \(A:D(A)\subset H\rightarrow H \) generate a C\(_0-\)semigroup of contractions \(\left( e^{t A}\right) _{t\ge 0}\) on H. The \(C_0\)-semigroup \(\left( e^{t A}\right) _{t\ge 0}\) is said to be:

1. strongly stable if:

$$\begin{aligned} \lim _{t\rightarrow +\infty } \Vert e^{t A}x_0\Vert _{H}=0, \quad \forall \ x_0\in H; \end{aligned}$$

2. exponentially (or uniformly) stable if there exist two positive constants M and \(\epsilon \) such that:

$$\begin{aligned} \Vert e^{t A}x_0\Vert _{H} \le Me^{-\epsilon t}\Vert x_0\Vert _{H}, \quad \forall \ t>0, \ \forall \ x_0\in {H}; \end{aligned}$$

3. polynomially stable if there exist two positive constants C and \(\alpha \) such that:

$$\begin{aligned} \Vert e^{t A}x_0\Vert _{H}\le C t^{-\alpha }\Vert A x_0\Vert _{H}, \quad \forall \ t>0, \ \forall \ x_0\in D\left( A\right) . \end{aligned}$$

In that case, one says that solutions of (2.1) decay at a rate \(t^{-\alpha }\). The \(C_0\)-semigroup \(\left( e^{t A}\right) _{t\ge 0}\) is said to be polynomially stable with optimal decay rate \(t^{-\alpha }\) (with \(\alpha >0\)) if it is polynomially stable with decay rate \(t^{-\alpha }\) and, for any \(\varepsilon >0\) small enough, there exist solutions of (2.1) that do not decay at a rate \(t^{-(\alpha +\varepsilon )}\). \(\square \)

We now look for sufficient conditions for the strong stability of the \(C_0\)-semigroup \(\left( e^{t A}\right) _{t\ge 0}\). We will rely on the following result of Arendt and Batty (1988).

Theorem 2.4

(Arendt and Batty (1988)) Assume that A is the generator of a C\(_0-\)semigroup of contractions \(\left( e^{tA}\right) _{t\ge 0}\) on a Hilbert space H. If A has no pure imaginary eigenvalues and \(\sigma \left( A\right) \cap i\mathbb {R}\) is countable, where \(\sigma \left( A\right) \) denotes the spectrum of A, then the \(C_0\)-semigroup \(\left( e^{tA}\right) _{t\ge 0}\) is strongly stable. \(\square \)

Our results on polynomial stability rely on the following standard result, which gives necessary and sufficient conditions for a semigroup to be polynomially or exponentially stable [see Borichev and Tomilov 2010; Liu and Rao 2005; Batty and Duyckaerts 2008 for part (i) and Huang (1985); Prüss (1984) for part (ii)].

Theorem 2.5

Let \(A:D(A)\subset H\rightarrow H \) generate a C\(_0-\)semigroup of contractions \(\left( e^{t A}\right) _{t\ge 0}\) on H. Assume that \(i\lambda \in \rho (A),\ \forall \ \lambda \in \mathbb {R}\). Then, the \(C_0\)-semigroup \(\left( e^{t A}\right) _{t\ge 0}\) is:

(i) Polynomially stable of order \(\frac{1}{\ell }\, (\ell >0)\) if and only if:

$$\begin{aligned} \limsup _{\lambda \in \mathbb {R},\ |\lambda |\rightarrow \infty } |\lambda |^{-\ell }\left\| \left( i\lambda I-A\right) ^{-1}\right\| _{\mathcal {L}\left( H\right) }<+\infty ; \end{aligned}$$

(ii) Exponentially stable if and only if:

$$\begin{aligned} \limsup _{\lambda \in \mathbb {R},\ |\lambda |\rightarrow \infty }\left\| \left( i\lambda I-A\right) ^{-1}\right\| _{\mathcal {L}\left( H\right) }<+\infty . \end{aligned}$$

\(\square \)

2.2 Strong stability

In this part, we use the general criterion of Arendt and Batty (1988) (see Theorem 2.4) to show the strong stability of the \(C_0\)-semigroup \(e^{t\mathcal {A}_j}\) associated with the Timoshenko System (2.1). Our main result is the following theorem.

Theorem 2.6

Assume that (H) is true. Then, for \(j=1,2,\) the \(C_0-\)semigroup \(e^{t\mathcal {A}_j}\) is strongly stable in \(\mathcal {H}_j\); i.e., for all \(U_0\in \mathcal {H}_j\), the solution of (2.1) satisfies:

The proof of Theorem 2.6 relies on the following lemmas.

Lemma 2.7

Under hypothesis (H), for \(j=1,2,\) one has:

Proof

For \(j=1,2\), Proposition 2.1 gives \(0\in \rho \left( \mathcal {A}_j\right) \). It remains to show the result for \(\lambda \in \mathbb {R}^*\). Suppose that there exist a real number \(\lambda \ne 0\) and \( U=\left( u,v,y,z\right) \in D\left( \mathcal {A}_j\right) \) such that:

Equivalently, we have:

First, a straightforward computation gives:

using hypothesis (H), we deduce that:

Inserting (2.9) in (2.8), we get:

with the following boundary conditions:

In fact, System (2.11)–(2.13) admits a unique solution \((u,y)\in C^2((0,L))\). From (2.10) and the uniqueness of solutions, we get:

1. If \(j=1\), from (2.14) and the fact that \(y(0)=0,\) we get \(u=y=0\) in (0, L), and hence \(U=0\). In this case, the proof is complete.

2. If \(j=2\), from (2.14) and the fact that \(y\in H^1_*(0,L)\) (i.e., \(\int _0^L y\, \mathrm{d}x=0\)), we get \(u=y=0\) in (0, L); therefore, \(U=0\). Thus, the proof is complete.

\(\square \)
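Assuming, as is standard for this kind of damping, that \(\Re \langle \mathcal {A}_jU,U\rangle _{\mathcal {H}_j}=-\int _0^LD|z_x|^2\,\mathrm{d}x\) for \(U\in D(\mathcal {A}_j)\), and reading (H) as \(D\ge D_0\) on \((\alpha ,\beta )\), the first step of the proof of Lemma 2.7 can be written out as follows. This is a sketch under those assumptions, not a reproduction of (2.8)–(2.10). If \(\lambda \ne 0\) and \(i\lambda U=\mathcal {A}_jU\), then

$$\begin{aligned} 0=\Re \big \langle (i\lambda I-\mathcal {A}_j)U,U\big \rangle _{\mathcal {H}_j}=\Re \big (i\lambda \Vert U\Vert ^2_{\mathcal {H}_j}\big )-\Re \langle \mathcal {A}_jU,U\rangle _{\mathcal {H}_j}=\int _0^LD(x)\,|z_x|^2\,\mathrm{d}x\ \ge \ D_0\int _\alpha ^\beta |z_x|^2\,\mathrm{d}x, \end{aligned}$$

so \(z_x=0\) a.e. in \((\alpha ,\beta )\). Since the third component of \(i\lambda U=\mathcal {A}_jU\) gives \(z=i\lambda y\) (assuming the third component of \(\mathcal {A}_jU\) is \(z\), as for the state \(U=(u,u_t,y,y_t)\)) and \(\lambda \ne 0\), also \(y_x=0\) a.e. in \((\alpha ,\beta )\). The uniqueness argument for System (2.11)–(2.13) then propagates this information to the whole interval (0, L).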

Lemma 2.8

Under hypothesis (H), for \(j=1,2\) and for all \(\lambda \in \mathbb {R}\), the operator \(i\lambda I-\mathcal {A}_j\) is surjective.

Proof

Let \(F=(f_1,f_2,f_3,f_4) \in \mathcal {H}_j\); we look for a solution \(U=(u,v,y,z) \in D(\mathcal {A}_j)\) of:

Equivalently, we have:

with the boundary conditions:

such that:

We define the operator \(\mathcal {L}_j \) by:

where:

Using the Lax–Milgram theorem, it is easy to show that \(\mathcal {L}_j\) is an isomorphism from \(\mathcal {V}_j(0,L)\) onto \((H^{-1}\left( 0,L\right) )^2\). Setting \(\mathcal {U}=\left( u,y\right) \) and \(F=\left( -F_1,-F_2\right) \), we transform System (2.17)–(2.18) into the following form:

Using the compact embeddings of \(L^2(0,L)\) into \(H^{-1}(0,L)\), of \(H^1_0(0,L)\) into \(L^{2}(0,L)\), and of \(H^1_*(0,L)\) into \(L^{2}(0,L)\), we deduce that the operator \(\mathcal {L}_j^{-1}\) is compact from \(L^2(0,L)\times L^2(0,L)\) into \(L^2(0,L)\times L^2(0,L)\). Consequently, by the Fredholm alternative, proving the existence of a solution \(\mathcal {U}\) of (2.20) reduces to proving \(\ker \left( I-\lambda ^2\mathcal {L}^{-1}_j\right) =\{0\}\). Indeed, if \(\left( \varphi ,\psi \right) \in \ker (I-\lambda ^2\mathcal {L}_j^{-1})\), then \(\lambda ^2 \left( \varphi ,\psi \right) - \mathcal {L}_j \left( \varphi ,\psi \right) =0\). It follows that:

with the following boundary conditions:

It is now easy to see that if \((\varphi ,\psi )\) is a solution of System (2.21)–(2.22), then the vector V defined by:

belongs to \(D(\mathcal {A}_j)\) and \(i\lambda V-\mathcal {A}_jV=0.\) Therefore, \({V}\in \ker \left( i\lambda I-{\mathcal {A}_j}\right) \). Using Lemma 2.7, we get \(V=0\), and so:

Thanks to the Fredholm alternative, Eq. (2.20) admits a unique solution \((u,y) \in \mathcal {V}_j(0,L)\). Thus, using (2.15), (2.17), and classical regularity arguments, we conclude that \(\left( i\lambda -\mathcal {A}_j\right) U=F\) admits a unique solution \(U\in D\left( \mathcal {A}_j\right) \). Thus, the proof is complete. \(\square \)

We are now in a position to conclude the proof of Theorem 2.6.

Proof of Theorem 2.6

By Lemma 2.7, \({\mathcal {A}}_j\) has no purely imaginary eigenvalues. According to Lemmas 2.7 and 2.8 and the closed graph theorem of Banach, we deduce that \(\sigma ({\mathcal {A}}_j)\cap i\mathbb {R}=\emptyset \). Thus, we get the conclusion by applying Theorem 2.4 of Arendt and Batty. \(\square \)

3 Polynomial stability

In this section, we use the frequency domain approach to show the polynomial stability of \(\left( e^{t\mathcal {A}_j}\right) _{t\ge 0}\) associated with the Timoshenko System (2.1). We prove the following theorem.

Theorem 3.1

Under hypothesis (H), for \(j=1,2,\) there exists \(C>0\), such that for every \(U_0\in D\left( \mathcal {A}_j\right) \), we have:

Since \(i\mathbb {R}\subseteq \rho \left( \mathcal {A}_j\right) \), by Theorem 2.5, to prove Theorem 3.1 we need to show that:

We argue by contradiction. Suppose that there exists a sequence \(\left\{ (\lambda _n,U_n=(u_n,v_n,y_n,z_n))\right\} _{n\ge 1}\subset \mathbb {R}\times D\left( \mathcal {A}_j\right) \), with \(\lambda _n>1\) and:

such that:

Equivalently, we have:

where:

In the following, we check condition (3.2) by deriving a contradiction with (3.3), namely \(\left\| U_n\right\| _{\mathcal {H}_j} =o(1).\) For clarity, we divide the proof into several lemmas. From now on, for simplicity, we drop the index n.

Lemma 3.2

Under hypothesis (H), for \(j=1,2,\) the solution \(U=(u,v,y,z)\in D(\mathcal {A}_j)\) of System (3.5)–(3.8) satisfies the following asymptotic behavior estimates:

Proof

First, taking the inner product of (3.4) with U in \(\mathcal {H}_j\), then using the fact that U is uniformly bounded in \(\mathcal {H}_j\), we get:

hence, we get the first asymptotic estimate of (3.9). Next, using hypothesis (H) and the first asymptotic estimate of (3.9), we get the second asymptotic estimate of (3.9). Finally, from (3.4), (3.7), and (3.9), we get the asymptotic estimate of (3.10). Thus, the proof is complete. \(\square \)
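A sketch of the computation behind Lemma 3.2, under two assumptions: first, that the contradiction hypothesis is normalized as \(\Vert U\Vert _{\mathcal {H}_j}=1\) and \(\lambda ^2(i\lambda U-\mathcal {A}_jU)=F=(f_1,f_2,f_3,f_4)\rightarrow 0\) in \(\mathcal {H}_j\) (the case \(\ell =2\) of Theorem 2.5(i)); second, that \(\Re \langle \mathcal {A}_jU,U\rangle _{\mathcal {H}_j}=-\int _0^LD|z_x|^2\,\mathrm{d}x\), with (H) read as \(D\ge D_0\) on \((\alpha ,\beta )\). The third component of the equation gives \(i\lambda y-z=\lambda ^{-2}f_3\), and \((f_3)_x\rightarrow 0\) in \(L^2(0,L)\) because \(F\rightarrow 0\) in \(\mathcal {H}_j\):

$$\begin{aligned} \int _0^L D(x)\,|z_x|^2\,\mathrm{d}x&=\Re \big \langle i\lambda U-\mathcal {A}_jU,U\big \rangle _{\mathcal {H}_j}=\lambda ^{-2}\,\Re \langle F,U\rangle _{\mathcal {H}_j}\le \lambda ^{-2}\Vert F\Vert _{\mathcal {H}_j}\Vert U\Vert _{\mathcal {H}_j}=o(\lambda ^{-2}),\\ \Vert z_x\Vert _{L^2(\alpha ,\beta )}^2&\le D_0^{-1}\int _\alpha ^\beta D(x)\,|z_x|^2\,\mathrm{d}x=o(\lambda ^{-2}),\\ y_x&=\frac{1}{i\lambda }\Big (z_x+\lambda ^{-2}(f_3)_x\Big )\ \Longrightarrow \ \Vert y_x\Vert _{L^2(\alpha ,\beta )}=o(\lambda ^{-2}). \end{aligned}$$

The first line also yields, by the Cauchy–Schwarz inequality, \(\left| \int _0^LDz_x\overline{h}\,\mathrm{d}x\right| \le \Vert D\Vert _{L^\infty }^{1/2}\big (\int _0^LD|z_x|^2\,\mathrm{d}x\big )^{1/2}\Vert h\Vert =o(\lambda ^{-1})\) for any \(h\) bounded in \(L^2(0,L)\), which is the type of bound invoked in the proof of Lemma 3.8.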

Let \(g \in C^1\left( [\alpha ,\beta ]\right) \) be such that:

where \(c_g\) and \(c_{g'}\) are positive constants.

Remark 3.3

It is easy to see the existence of g(x). For example, we can take \(g(x)=\cos \left( \frac{ (\beta -x)\pi }{\beta -\alpha }\right) \) to get \(g(\beta )=-g(\alpha )=1\), \(g\in C^1([\alpha ,\beta ])\), \(|g(x)| \le 1\) and \(|g'(x)|\le \frac{\pi }{\beta -\alpha }\). Also, we can take:

\(\square \)
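The properties claimed in Remark 3.3 for \(g(x)=\cos \left( \frac{(\beta -x)\pi }{\beta -\alpha }\right) \) are easy to check numerically. The sketch below evaluates \(g\) and its exact derivative \(g'(x)=\frac{\pi }{\beta -\alpha }\sin \left( \frac{(\beta -x)\pi }{\beta -\alpha }\right) \) on a uniform grid and reports the endpoint values and the sup norms. The helper names `make_g` and `check_g` and the sample values of \(\alpha \), \(\beta \) are hypothetical.

```python
import math


def make_g(alpha, beta):
    """Return (g, g_prime) for g(x) = cos((beta - x) * pi / (beta - alpha))."""
    if not alpha < beta:
        raise ValueError("need alpha < beta")
    c = math.pi / (beta - alpha)

    def g(x):
        return math.cos(c * (beta - x))

    def g_prime(x):
        # d/dx cos(c (beta - x)) = c * sin(c (beta - x))
        return c * math.sin(c * (beta - x))

    return g, g_prime


def check_g(alpha, beta, samples=2000):
    """Evaluate the quantities listed in Remark 3.3 on a grid of [alpha, beta]."""
    g, g_prime = make_g(alpha, beta)
    grid = [alpha + (beta - alpha) * i / samples for i in range(samples + 1)]
    return {
        "g(beta)": g(beta),
        "g(alpha)": g(alpha),
        "max|g|": max(abs(g(x)) for x in grid),
        "max|g'|": max(abs(g_prime(x)) for x in grid),
        "pi/(beta-alpha)": math.pi / (beta - alpha),
    }


if __name__ == "__main__":
    for key, value in check_g(0.2, 0.7).items():
        print(f"{key:>16}: {value:.6f}")
```

The printed values should show \(g(\beta )=1\), \(g(\alpha )=-1\), \(\max |g|=1\), and \(\max |g'|\) equal to \(\pi /(\beta -\alpha )\), attained at the midpoint \((\alpha +\beta )/2\).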

Lemma 3.4

Under hypothesis (H), for \(j=1,2,\) the solution \(U=(u,v,y,z)\in D(\mathcal {A}_j)\) of System (3.5)–(3.8) satisfies the following asymptotic behavior estimates:

Proof

The proof is divided into two steps.

Step 1. In this step, we prove the asymptotic behavior estimate (3.11). To this end, first, from (3.7), we have:

Multiplying (3.13) by \(2\, g \overline{z}\) and integrating over \((\alpha ,\beta ),\) and then taking the real part, we get:

integrating by parts in the left-hand side of the above equation, we get:

Consequently, we have:

On the other hand, we have:

Inserting the above equation in (3.14), then using (3.10) and the fact that \((f_3)_x\rightarrow 0\) in \(L^2(\alpha ,\beta )\), we get:

hence, we get (3.11).

Step 2. In this step, we prove the asymptotic behavior estimate (3.12). To this end, multiplying (3.8) by \(-\frac{2\rho _2}{k_2}\, g \left( \overline{y}_x+\frac{D(x)}{k_2} \overline{z}_x\right) \), integrating over \((\alpha ,\beta ),\) and then taking the real part, we get:

integrating by parts in the left-hand side of the above equation, we get:

Consequently, we have:

Now, applying the Cauchy–Schwarz inequality to the right-hand side of the above equation, and using (3.9)–(3.10), the fact that \(f_4\rightarrow 0\) in \(L^2(\alpha ,\beta )\), and the fact that \(u_x+y\) is uniformly bounded in \(L^2(\alpha ,\beta )\), we get:

On the other hand, we have:

Inserting the above equation in (3.15), and then using Eqs. (3.9)–(3.10), we get:

hence, we get (3.12). Thus, the proof is complete. \(\square \)
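The integrations by parts against the multiplier \(g\) in Lemmas 3.4 and 3.5 are where boundary values at \(\alpha \) and \(\beta \) enter the estimates. For smooth complex \(z\) and any \(g\) with \(g(\beta )=-g(\alpha )=1\), one has \(\int _\alpha ^\beta g\,(|z|^2)_x\,\mathrm{d}x=|z(\beta )|^2+|z(\alpha )|^2-\int _\alpha ^\beta g'\,|z|^2\,\mathrm{d}x\). The sketch below checks this identity numerically for a sample \(z\) of our own choosing (an illustration, not a function from the proof), using \((|z|^2)_x=2\Re (z_x\overline{z})\) and composite Simpson quadrature; the helper names are hypothetical.

```python
import cmath
import math


def simpson(f, a, b, n=2000):
    """Composite Simpson rule for a real-valued f on [a, b] (n even)."""
    if n % 2:
        raise ValueError("n must be even")
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3.0


def ibp_sides(z, z_x, g, g_x, alpha, beta, n=2000):
    """Return (lhs, rhs) of int g (|z|^2)_x = [g |z|^2]_alpha^beta - int g_x |z|^2."""
    # (|z|^2)_x = 2 Re(z_x * conj(z))
    lhs = simpson(lambda x: g(x) * 2.0 * (z_x(x) * z(x).conjugate()).real,
                  alpha, beta, n)
    boundary = g(beta) * abs(z(beta)) ** 2 - g(alpha) * abs(z(alpha)) ** 2
    rhs = boundary - simpson(lambda x: g_x(x) * abs(z(x)) ** 2, alpha, beta, n)
    return lhs, rhs


if __name__ == "__main__":
    alpha, beta = 0.2, 0.7
    c = math.pi / (beta - alpha)
    g = lambda x: math.cos(c * (beta - x))            # g(beta) = -g(alpha) = 1
    g_x = lambda x: c * math.sin(c * (beta - x))
    z = lambda x: cmath.exp(3j * x) * (1 + x * x)     # sample complex profile
    z_x = lambda x: cmath.exp(3j * x) * (3j * (1 + x * x) + 2 * x)
    print(ibp_sides(z, z_x, g, g_x, alpha, beta))
```

Both sides agree to quadrature accuracy; with \(g(\beta )=-g(\alpha )=1\) the boundary term is exactly \(|z(\beta )|^2+|z(\alpha )|^2\), which is why traces at \(\zeta =\alpha \) and \(\zeta =\beta \) must be controlled later in Lemma 3.6.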

Lemma 3.5

Under hypothesis (H), for \(j=1,2,\) the solution \(U=(u,v,y,z)\in D(\mathcal {A}_j)\) of System (3.5)–(3.8) satisfies the following asymptotic behavior estimates:

Proof

Multiplying Eq. (3.6) by \(-\frac{2\rho _1}{k_1}g\left( \overline{u}_{x}+\overline{y}\right) \) and integrating over \((\alpha ,\beta ),\) and then taking the real part and using the fact that \(u_x+y\) is uniformly bounded in \(L^2(\alpha ,\beta )\), \(f_2\rightarrow 0 \) in \(L^2(\alpha ,\beta )\), we get:

We now divide the proof into two steps.

Step 1. In this step, we prove the asymptotic behavior estimates of (3.16)–(3.17). First, from (3.5), we have:

Inserting the above equation into the second term on the left-hand side of (3.19), and then using the fact that \(u_x\) is uniformly bounded in \(L^2(\alpha ,\beta )\) and \(f_1\rightarrow 0 \) in \(L^2(\alpha ,\beta )\), we get:

Integrating by parts in the above equation and using the fact that \(g(\beta )=-g(\alpha ) = 1\), we get:

consequently:

Next, since \(\lambda \, u,\ \lambda \, y\) and \(u_x+y\) are uniformly bounded, the above equation yields (3.16) and (3.17).

Step 2. In this step, we prove the asymptotic behavior estimates of (3.18). First, from (3.5), we have:

Inserting the above equation into the second term on the left-hand side of (3.19), and then using the fact that v is uniformly bounded in \(L^2(\alpha ,\beta )\) and \((f_1)_x\rightarrow 0 \) in \(L^2(\alpha ,\beta )\), we get:

As in Step 1, integrating by parts in the above equation, using the fact that \(g(\beta )=-g(\alpha ) = 1\), and then using the fact that \(v,\ \lambda \, u,\ \lambda \, y\) and \(u_x+y\) are uniformly bounded in \(L^2(\alpha ,\beta )\), we get (3.18). Thus, the proof is complete. \(\square \)

Lemma 3.6

Under hypothesis (H), for \(j=1,2,\) and for \(\lambda \) large enough, the solution \(U=(u,v,y,z)\in D(\mathcal {A}_j)\) of System (3.5)–(3.8) satisfies the following asymptotic behavior estimates:

Proof

The proof is divided into two steps.

Step 1. In this step, we prove the following asymptotic behavior estimate:

To this end, first note that:

Now, from (3.7) and using the fact that \(f_3\rightarrow 0\) in \(L^2(\alpha ,\beta )\) and z is uniformly bounded in \(L^2(\alpha ,\beta )\), we get:

Next, integrating by parts, we get:

consequently:

On the other hand, we have:

Inserting (3.11) and (3.17) in the above equation, we get:

Substituting the above equation in (3.25), and then using (3.9) and the fact that \(\lambda u\) is bounded in \(L^2(\alpha ,\beta )\), we get:

Finally, inserting the above equation and Eq. (3.24) in (3.23), we get (3.22).

Step 2. In this step, we prove the asymptotic behavior estimates (3.20)–(3.21). To this end, multiplying (3.8) by \(-i \lambda ^{-1}\rho _2^{-1} \overline{z}\), integrating over \((\alpha ,\beta ),\) and then taking the real part, we get:

consequently:

From the fact that z is uniformly bounded in \(L^2(\alpha ,\beta )\) and \(f_4\rightarrow 0\) in \(L^2(\alpha ,\beta )\), we get:

Inserting (3.22) and (3.27) in (3.26), we get:

Now, integrating by parts and using (3.9)–(3.10), we get:

Inserting (3.29) in (3.28), we get:

Now, for \(\zeta =\beta \) or \(\zeta =\alpha \), we have:

Inserting the above equation in (3.30), we get:

Substituting Eqs. (3.11) and (3.12) in the above inequality, we obtain:

consequently:

since \(\lambda \rightarrow +\infty \), for \(\lambda \) large enough, we get:

hence, we get the first asymptotic estimate of (3.20). Then, inserting the first asymptotic estimate of (3.20) in (3.7), we get the second asymptotic estimate of (3.20). Finally, inserting (3.20) in (3.12), we get (3.21). Thus, the proof is complete. \(\square \)

Lemma 3.7

Under hypothesis (H), for \(j=1,2,\) and for \(\lambda \) large enough, the solution \(U=(u,v,y,z)\in D(\mathcal {A}_j)\) of System (3.5)–(3.8) satisfies the following asymptotic behavior estimates:

Proof

The proof is divided into two steps.

Step 1. In this step, we prove the first asymptotic behavior estimate of (3.31). First, multiplying Eq. (3.8) by \( \frac{\rho _2}{k_1}\left( \overline{u}_x+\overline{y}\right) \) and integrating over \((\alpha ,\beta )\), we get:

integrating by parts in the second term on the left-hand side of the above equation, we get:

Next, multiplying Eq. (3.6) by \( \frac{\rho _1k_2}{k_1^2} \left( \overline{y}_x+\frac{D}{k_2}\overline{z}_x\right) \) and integrating over \((\alpha ,\beta )\), and then using the fact that \(f_2\rightarrow 0\) in \(L^2(0,L)\) and Eqs. (3.9)–(3.10), we get:

consequently:

Adding (3.32) and (3.33), we obtain:

therefore:

From (3.4), (3.9), (3.10), (3.16), (3.20), (3.21), and the fact that \(v,\ u_x+y\) are uniformly bounded in \(L^2(\alpha ,\beta )\), we obtain:

Inserting the above equation in (3.34), we get:

From the above equation and (3.20), we get the first asymptotic estimate of (3.31).

Step 2. In this step, we prove the second asymptotic behavior estimate of (3.31). Multiplying (3.6) by \(-i\lambda ^{-1} \overline{v}\) and integrating over \((\alpha ,\beta ),\) and then taking the real part, we get:

integrating by parts in the second term on the right-hand side of the above equation, we get:

Consequently, we obtain:

Finally, from (3.16), (3.18), (3.20), the first asymptotic behavior estimate of (3.31), the fact that \(\lambda ^{-1} v_x,\ v\) are uniformly bounded in \(L^2(\alpha ,\beta )\), and the fact that \(f_2\rightarrow 0\) in \(L^2(\alpha ,\beta )\), we get the second asymptotic behavior estimate of (3.31). Thus, the proof is complete. \(\square \)

Lemma 3.8

Under hypothesis (H), for \(j=1,2,\) the solution \(U=(u,v,y,z)\in D(\mathcal {A}_j)\) of System (3.5)–(3.8) satisfies the following asymptotic behavior estimate:

Proof

First, under hypothesis (H), for \(j=1,2,\) and for \(\lambda \) large enough, from Lemmas 3.5, 3.6 and 3.7, we deduce that:

Now, let \(\phi \in H^1_0\left( 0,L\right) \) be a given function. We proceed in two steps.

Step 1. Multiplying Eq. (3.6) by \(2{\rho _1 } \phi \overline{u}_x\), integrating over (0, L), and then using the fact that \(u_x\) is bounded in \(L^2(0,L)\), \(f_2\rightarrow 0\) in \( L^2(0,L)\), and \(\phi (0)=\phi (L)=0\), we get:

From (3.5), we have:

Inserting the above equation in (3.36), then using the fact that \((f_1)_x\rightarrow 0 \) in \(L^2(0,L)\), and the fact that v is bounded in \(L^2(0,L)\), we get:

Similarly, multiplying Eq. (3.8) by \(2\rho _2 \phi \left( \overline{y}_x+\frac{D}{k_2} \overline{z}_x\right) \), integrating over (0, L), and then integrating by parts and using \(\phi (0)=\phi (L)=0\), we get:

For every bounded \(h\in L^2(0,L)\), using the Cauchy–Schwarz inequality, the first estimate in (3.9), and the fact that \(D\in L^{\infty }(0,L)\), we obtain:

From (3.38), using (3.39), the fact that \(z,\ \lambda y,\ y_x\) are bounded in \(L^2(0,L)\), and the fact that \(f_4\rightarrow 0\) in \(L^2(0,L)\), we get:

On the other hand, from (3.7), we have:

Inserting the above equation in (3.40), then using the fact that \((f_3)_x\rightarrow 0 \) in \(L^2(0,L)\), and the fact that z is bounded in \(L^2(0,L)\), we get:

Adding (3.37) and (3.41), we get:

Step 2. Let \(\epsilon >0\) be such that \(\alpha +\epsilon <\beta \), and define the cut-off function \(\varsigma _1 \in C^1\left( \left[ 0,L\right] \right) \) by:

Taking \(\phi =x \varsigma _1\) in (3.42), and using the fact that \(\left\| U\right\| _{\mathcal {H}_j}=o\left( 1\right) \) on \(\left( \alpha ,\beta \right) \) (i.e., (3.35)), the fact that \(\alpha<\alpha +\epsilon <\beta \), and (3.9)–(3.10), we get:

Moreover, using the Cauchy–Schwarz inequality, the first estimate in (3.9), the fact that \(D\in L^{\infty }(0,L)\), and (3.43), we get:

Using (3.43) and (3.44), we get:

Similarly, by symmetry, we can prove that: \(\left\| U\right\| _{\mathcal {H}_j}=o\left( 1\right) \text { on }(\beta ,L)\) and therefore:

Thus, the proof is complete.\(\square \)
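The cut-off \(\varsigma _1\) is defined by a display that is not reproduced here. Since \(\phi =x\varsigma _1\) must belong to \(H^1_0(0,L)\) and the bound (3.35) is available on \((\alpha ,\beta )\), a natural reading is that \(\varsigma _1=1\) on \([0,\alpha ]\), \(\varsigma _1=0\) on \([\alpha +\epsilon ,L]\), with a \(C^1\) transition inside \((\alpha ,\alpha +\epsilon )\subset (\alpha ,\beta )\). The Python sketch below builds one such function under exactly this assumption; the cubic smoothstep transition, the sample values, and the names `cutoff`, `cutoff_derivative` and `phi` are our own illustrative choices, not the paper's definition.

```python
def cutoff(x: float, alpha: float, eps: float) -> float:
    """Hypothetical C^1 cut-off: 1 on [0, alpha], 0 on [alpha + eps, L],
    cubic smoothstep transition on (alpha, alpha + eps)."""
    if x <= alpha:
        return 1.0
    if x >= alpha + eps:
        return 0.0
    s = (x - alpha) / eps          # rescaled variable in (0, 1)
    return 1.0 - (3.0 * s**2 - 2.0 * s**3)


def cutoff_derivative(x: float, alpha: float, eps: float) -> float:
    """Derivative of cutoff; it vanishes at both ends of the transition (C^1 gluing)."""
    if x <= alpha or x >= alpha + eps:
        return 0.0
    s = (x - alpha) / eps
    return -(6.0 * s - 6.0 * s**2) / eps


def phi(x: float, alpha: float, eps: float) -> float:
    """Multiplier phi = x * cutoff(x): phi(0) = 0 and phi = 0 on [alpha + eps, L],
    so phi lies in H^1_0(0, L)."""
    return x * cutoff(x, alpha, eps)


# Sample values with 0 <= alpha < alpha + eps < beta <= L (illustrative only).
alpha, eps, L = 0.3, 0.2, 1.0
print([round(phi(x, alpha, eps), 4) for x in (0.0, 0.1, 0.3, 0.4, 0.5, 1.0)])
```

Under this assumption, \(\phi =x\) on \([0,\alpha ]\), while \(\varsigma _1'\) is supported in \((\alpha ,\alpha +\epsilon )\), where (3.35) applies.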

Proof of Theorem 3.1

Under hypothesis (H), for \(j=1,2,\) from Lemma 3.8, we have \(\Vert U\Vert _{\mathcal {H}_j}=o\left( 1\right) ,\) over (0, L), which contradicts (3.3). This implies that:

The result follows from Theorem 2.5 part (i). \(\square \)

Remark 3.9

From Lemmas 3.5, 3.6 and 3.7, we deduce that \(\Vert U\Vert _{\mathcal {H}_j}=o\left( 1\right) \) on \( \left( \alpha ,\beta \right) .\) Then, in Lemma 3.8, we chose a particular function \(\phi \in H^1_0\left( 0,L\right) \) to obtain \(\Vert U\Vert _{\mathcal {H}_j}=o\left( 1\right) \) on \( \left( 0,L\right) .\) We note that in proving these lemmas, we have not used the boundary conditions on u and y. Therefore, our analysis is valid for both fully Dirichlet and mixed boundary conditions. \(\square \)

A natural question is whether the decay rate (3.1) is optimal. In the next section, we prove that it is optimal in some cases.

4 Upper bound estimation of the polynomial decay rate

In this section, our goal is to show that the energy of the Timoshenko System (1.1)–(1.2) with Dirichlet–Neumann boundary conditions (1.4) has the optimal polynomial decay rate of type \(t^{-1}\).

4.1 Optimal polynomial decay rate of \(\mathcal {A}_2\) with global Kelvin–Voigt damping

In this part, we show that the energy of the Timoshenko System (1.1)–(1.2) with global Kelvin–Voigt damping and Dirichlet–Neumann boundary conditions (1.4) has the optimal polynomial decay rate of type \(t^{-1}\). To this end, assume that:

where \(D_0\) is a strictly positive constant number. We prove the following theorem.

Theorem 4.1

Under hypothesis (H1), for all initial data \(U_0\in D\left( \mathcal {A}_2\right) \) and for all \(t>0,\) the energy decay rate in (3.1) is optimal, i.e., for \(\epsilon >0\left( \text {small enough}\right) \), we cannot expect the energy decay rate \(t^{-\frac{2}{{2-\epsilon }}}\).

Proof

Following Borichev and Tomilov (2010) [see Theorem 2.4 part (i)], it suffices to show the existence of sequences \(\left( \lambda _n\right) _n\subset \mathbb {R}\) with \(\lambda _n\rightarrow +\infty \), \(\left( U_n\right) _n\subset D\left( \mathcal {A}_2\right) \), and \(\left( F_n\right) _n\subset \mathcal {H}_2\), such that \(F_n=\left( i\lambda _n I-\mathcal {A}_2\right) U_n\) is bounded in \(\mathcal {H}_2\) and \(\lambda _n^{-2+\epsilon }\Vert U_n\Vert _{\mathcal {H}_2}\rightarrow +\infty \). Set:

and

Clearly, \(U_n\in D\left( \mathcal {A}_2\right) \) and \(F_n\) is bounded in \(\mathcal {H}_2\). Let us show that:

Detailing \(\left( i\lambda _n I-\mathcal {A}_2\right) U_n\), we get:

where:

Inserting (4.1) in (4.2), we get:

hence, we obtain:

Now, we have:

Therefore, for \(\epsilon >0\left( \text {small enough}\right) \), we have:

Finally, following Borichev and Tomilov (2010) [see Theorem 2.4 part (i)], we cannot expect the energy decay rate \(t^{-\frac{2}{{2-\epsilon }}}\). \(\square \)
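For the reader's convenience, the contradiction behind this proof can be written out as follows. This is a sketch under two assumptions not restated in this section: that Theorem 2.4 (i) is the Borichev–Tomilov characterization for a bounded semigroup with \(i\mathbb {R}\subset \rho (\mathcal {A}_2)\), and that the energy is \(E(t)=\frac{1}{2}\Vert U(t)\Vert ^2_{\mathcal {H}_2}\).

```latex
\begin{aligned}
&\text{If } E(t)=\tfrac12\|e^{t\mathcal{A}_2}U_0\|^2_{\mathcal{H}_2}
\le \frac{C}{t^{\frac{2}{2-\epsilon}}}\,\|U_0\|^2_{D(\mathcal{A}_2)}
\ \text{ for all } U_0\in D(\mathcal{A}_2),\ t>0,
\ \text{ then }\ \|e^{t\mathcal{A}_2}U_0\|_{\mathcal{H}_2}
\le \frac{\sqrt{2C}}{t^{\frac{1}{2-\epsilon}}}\,\|U_0\|_{D(\mathcal{A}_2)},\\
&\text{so, by Borichev--Tomilov,}\quad
K:=\sup_{\lambda\in\mathbb{R}}
\frac{\|(i\lambda I-\mathcal{A}_2)^{-1}\|_{\mathcal{L}(\mathcal{H}_2)}}{1+|\lambda|^{2-\epsilon}}<\infty,\\
&\text{hence, for } \lambda_n\ge 1,\quad
\lambda_n^{-2+\epsilon}\|U_n\|_{\mathcal{H}_2}
=\lambda_n^{-2+\epsilon}\|(i\lambda_n I-\mathcal{A}_2)^{-1}F_n\|_{\mathcal{H}_2}
\le K\,\frac{1+\lambda_n^{2-\epsilon}}{\lambda_n^{2-\epsilon}}\,\sup_m\|F_m\|_{\mathcal{H}_2}
\le 2K\sup_m\|F_m\|_{\mathcal{H}_2}.
\end{aligned}
```

The last bound is incompatible with \(\lambda _n^{-2+\epsilon }\Vert U_n\Vert _{\mathcal {H}_2}\rightarrow +\infty \).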

Note that Theorem 4.1 also implies that the system is not uniformly (i.e., exponentially) stable.

Remark 4.2

In fact, when the Kelvin–Voigt damping is global (i.e., under hypothesis (H1)), the decay rate (3.1) is optimal if System (1.1)–(1.2) is considered with the mixed Dirichlet–Neumann boundary conditions (1.4) instead of the fully Dirichlet boundary conditions (1.3) [see Theorem 4.1]. The idea is to find a sequence \(\left( \lambda _n\right) _n\subset \mathbb {R}\) with \(\lambda _n\rightarrow +\infty \) and a sequence of vectors \(\left( U_n\right) _n\subset D\left( \mathcal {A}_2\right) \) such that \(\left( i\lambda _n I-\mathcal {A}_2\right) U_n\) is bounded in \(\mathcal {H}_2\) and \(\lambda _n^{-2+\epsilon }\Vert U_n\Vert _{\mathcal {H}_2}\rightarrow +\infty \) for \(\epsilon >0\) small enough. In the case of Dirichlet–Neumann boundary conditions, this approach works well because all eigenmodes are separable, i.e., the system operator can be decomposed into a block-diagonal form according to the frequency when the state variables are expanded in Fourier series. However, in the case of fully Dirichlet boundary conditions, to our knowledge this approach has not succeeded in the literature, and the problem remains open. \(\square \)

4.2 Optimal polynomial decay rate of \(\mathcal {A}_2\) with local Kelvin–Voigt damping

In this part, under the equal speed wave propagation condition (i.e., \(\frac{\rho _1}{k_1}=\frac{\rho _2}{k_2}\)), we use the classical method of Littman and Markus (1988) [see also Curtain and Zwart (1995)] to show that the Timoshenko System (1.1)–(1.2) with local Kelvin–Voigt damping and Dirichlet–Neumann boundary conditions (1.4) is not exponentially stable. We also prove the optimality of estimate (3.1). To this end, assume that:

where \(D_0\in \mathbb {R}^+_{*}\) and \(\alpha \in (0,L)\). For simplicity and without loss of generality, in this part, we take \(\frac{\rho _1}{k_1}=1\), \(D_0=k_2\), \(L=1\), and \(\alpha =\frac{1}{2}\), and hence, the above hypothesis becomes:

Our first result in this part is the following theorem.

Theorem 4.3

Under hypothesis (H2), the semigroup generated by the operator \(\mathcal {A}_2\) is not exponentially stable in the energy space \(\mathcal {H}_2.\)

For the proof of Theorem 4.3, we recall the following definitions: the growth bound \(\omega _0\left( \mathcal {A}_2\right) \) and the spectral bound \(s\left( \mathcal {A}_2\right) \) of \(\mathcal {A}_2\) are defined, respectively, as:

From the Hille–Yosida theorem [see also Theorem 2.1.6 and Lemma 2.1.11 in Curtain and Zwart (1995)], one has:

From the previous results, one clearly has \(s\left( \mathcal {A}_2\right) \le 0\), and the theorem follows if equality holds in this inequality. It therefore suffices to show the existence of a sequence of eigenvalues of \(\mathcal {A}_2\) whose real parts tend to zero.
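Written out with the standard definitions of the two bounds (which we assume coincide with the displays above), the argument reads as follows, where \(\left( \lambda _n\right) _n\) denotes any sequence of eigenvalues of \(\mathcal {A}_2\) with \(\Re \lambda _n\rightarrow 0\).

```latex
\begin{aligned}
s(\mathcal{A}_2)&=\sup\{\Re\lambda:\ \lambda\in\sigma(\mathcal{A}_2)\},\\
\omega_0(\mathcal{A}_2)&=\inf\{\omega\in\mathbb{R}:\ \exists M\ge 1 \text{ such that }
\|e^{t\mathcal{A}_2}\|\le Me^{\omega t}\ \ \forall t\ge 0\},\\
0=\lim_{n\to\infty}\Re\lambda_n&\le s(\mathcal{A}_2)\le\omega_0(\mathcal{A}_2)\le 0
\quad\Longrightarrow\quad \omega_0(\mathcal{A}_2)=0.
\end{aligned}
```

Since exponential stability is equivalent to \(\omega _0(\mathcal {A}_2)<0\), the existence of such a sequence rules it out.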

Since \(\mathcal {A}_2\) is dissipative, we fix \(\alpha _0>0\) small enough and we study the asymptotic behavior of the eigenvalues \(\lambda \) of \(\mathcal {A}_2\) in the strip:

First, we determine the characteristic equation satisfied by the eigenvalues of \(\mathcal {A}_2\). For this aim, let \(\lambda \in \mathbb {C}^*\) be an eigenvalue of \(\mathcal {A}_2\) and let \({U=\left( u,\lambda u,y,\lambda y\right) \in D(\mathcal {A}_2)}\) be an associated eigenvector. Then, the eigenvalue problem is given by:

with the boundary conditions:

where \(c=\sqrt{k_1 k_2^{-1}}\). We define:

Thus, System (4.3)–(4.5) becomes:

with the boundary conditions:

and the continuity conditions:

To proceed, we fix the following notation. Here and below, for a non-real complex number z, we denote by \(\sqrt{z}\) the square root of z, i.e., the unique complex number with positive real part whose square is equal to z. Our aim is to study the asymptotic behavior of the large eigenvalues \(\lambda \) of \({\mathcal {A}_2}\) in S. For \(\lambda \) large enough, the general solution of System (4.6)–(4.7) with boundary condition (4.10) is given by:

and the general solution of Eqs. (4.8)–(4.9) with boundary condition (4.11) is given by:

where \(\alpha _1,\ \alpha _2,\ \beta _1,\ \beta _2\in \mathbb {C}\):

and

The boundary conditions in (4.12) can be expressed by:

such that:

Consequently, denoting by det(M) the determinant of a matrix M, System (4.6)–(4.12) admits a nontrivial solution if and only if \(\displaystyle {det\left( M\right) }=0\). Using Gaussian elimination, \(\displaystyle {det\left( M\right) }=0\) is equivalent to \(\displaystyle {det\left( \tilde{M}\right) }=0\), where \(\tilde{M}\) is given by:

One gets that:

such that:

Proposition 4.4

Under hypothesis (H2), there exist \(n_0\in \mathbb {N}\) sufficiently large and two sequences \(\left( \lambda _{1,n}\right) _{ |n|\ge n_0} \) and \(\left( \lambda _{2,n}\right) _{ |n|\ge n_0} \) of simple roots of \(\det (\tilde{M})\) (that are also simple eigenvalues of \(\mathcal {A}_2\)) satisfying the following asymptotic behavior:

Case 1. If there is no \(\kappa \in \mathbb {N}\) such that \(c= 2\kappa \pi \) (i.e., \(\sin \left( \frac{c}{4}\right) \ne 0\) and \(\cos \left( \frac{c}{4}\right) \ne 0\)), then:

Case 2. If there exists \(\kappa _0\in \mathbb {N}\) such that \(c=2\left( 2\kappa _0+1\right) \pi \) (i.e., \(\cos \left( \frac{c}{4}\right) = 0\)), then:

Case 3. If there exists \(\kappa _1\in \mathbb {N}\) such that \(c=4\kappa _1 \pi \) (i.e., \(\sin \left( \frac{c}{4}\right) = 0\)), then:

Here, \({{\,\mathrm{sign}\,}}\) denotes the sign (signum) function.
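Since \(c>0\), the condition \(c=2\kappa \pi \) forces \(\kappa \ge 1\), and then \(\frac{c}{4}=\frac{\kappa \pi }{2}\); thus \(\cos \left( \frac{c}{4}\right) =0\) exactly when \(\kappa \) is odd, and \(\sin \left( \frac{c}{4}\right) =0\) exactly when \(\kappa \) is even. The short Python sketch below selects the case of Proposition 4.4 from \(c=\sqrt{k_1 k_2^{-1}}\) and cross-checks it against these trigonometric conditions; the function name `eigen_case` and the floating-point tolerance are illustrative choices.

```python
import math


def eigen_case(c: float, tol: float = 1e-9) -> int:
    """Case (1, 2 or 3) of Proposition 4.4 for c = sqrt(k1/k2) > 0."""
    if c <= 0:
        raise ValueError("c = sqrt(k1/k2) must be positive")
    kappa = c / (2 * math.pi)
    nearest = round(kappa)
    if nearest == 0 or abs(kappa - nearest) > tol:
        return 1                          # c is not of the form 2*kappa*pi
    # Here c = 2*kappa*pi, so c/4 = kappa*pi/2.
    return 2 if nearest % 2 == 1 else 3   # odd: cos(c/4) = 0, even: sin(c/4) = 0


for c in (1.0, 2 * math.pi, 4 * math.pi, 6 * math.pi, 10.0):
    print(f"c = {c:.4f}: case {eigen_case(c)}, "
          f"sin(c/4) = {math.sin(c / 4):+.2e}, cos(c/4) = {math.cos(c / 4):+.2e}")
```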

The proof of Proposition 4.4 relies on the following lemmas.

Lemma 4.5

Under hypothesis (H2), let \(\lambda \) be a large eigenvalue of \(\mathcal {A}_2\). Then, \(\lambda \) is a large root of the following asymptotic equation:

where:

Proof

Let \(\lambda \) be a large eigenvalue of \(\mathcal {A}_2\); then \(\lambda \) is a root of \(det\left( \tilde{M}\right) \). In this lemma, we derive an asymptotic expansion of the function \(det\left( \tilde{M}\right) \) for large \(\lambda \). First, using the asymptotic expansions in (4.13) and (4.14), we get:

Inserting (4.25) in (4.16), we get:

Substituting (4.26) in (4.15), and then using the fact that the real part of \(\lambda \) is bounded in S, we get:

where:

Next, from (4.25) and using the fact that the real part of \(\lambda \) is bounded in S, we get:

On the other hand, from (4.25) and (4.28), we obtain:

Since the real part of \(\sqrt{\lambda }\) is positive, we obtain:

hence:

Therefore, from (4.29), (4.30), and the fact that the real part of \(\lambda \) is bounded in S, we get:

Finally, inserting (4.28) and (4.31) in (4.27), we find that \(\lambda \) is a large root of F, where F is defined in (4.23). Thus, the proof is complete. \(\square \)
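The square-root convention fixed in the notation paragraph above, and used in the step "since the real part of \(\sqrt{\lambda }\) is positive", coincides for non-real arguments with the principal branch returned by Python's `cmath.sqrt`. The sketch below (the helper `sqrt_pos` and the sample values \(\lambda =-0.1+ib\) in a vertical strip are hypothetical) also illustrates the standard fact that, when \(\Re \lambda \) stays bounded and \(|\lambda |\rightarrow \infty \), one has \(\Re \sqrt{\lambda }\approx \sqrt{|\lambda |/2}\), so factors such as \(e^{-\sqrt{\lambda }}\) are exponentially small.

```python
import cmath
import math


def sqrt_pos(z: complex) -> complex:
    """Square root of a non-real z with positive real part (the convention above).

    For non-real z, cmath.sqrt (principal branch, cut along (-inf, 0]) already
    returns the root with strictly positive real part."""
    if z.imag == 0:
        raise ValueError("the convention is only stated for non-real z")
    return cmath.sqrt(z)


# Hypothetical large values in the vertical strip Re(lambda) = -0.1.
for b in (1e2, 1e3, 1e4):
    lam = complex(-0.1, b)
    w = sqrt_pos(lam)
    print(b, w.real, math.sqrt(b / 2), abs(cmath.exp(-w)))
```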

Lemma 4.6

Under hypothesis (H2), there exist \(n_0\in \mathbb {N}\) sufficiently large and two sequences \(\left( \lambda _{1,n}\right) _{ |n|\ge n_0} \) and \(\left( \lambda _{2,n}\right) _{ |n|\ge n_0} \) of simple roots of F (that are also simple eigenvalues of \(\mathcal {A}_2\)) satisfying the following asymptotic behavior:

and

Proof

First, we look at the roots of \(f_0\). From (4.24), we deduce that \(f_0\) can be written as:

The roots of \(f_0\) are given by:

Now, with the help of Rouché’s theorem, we show that the roots of F are close to those of \(f_0\). Let us start with the first family \(\mu _{1,n}\). Let \(B_n=B\left( 2n\pi i, r_n\right) \) be the disk with center \(2n\pi i\) and radius \(r_n=\frac{1}{|n|^{\frac{1}{4}}}\), and let \(\lambda \in \partial B_n\); i.e., \(\lambda =2n\pi i+r_n e^{i\theta },\ \theta \in [0,2\pi )\). Then:

Inserting (4.35) in (4.34), we get:

It follows that there exists a positive constant C, such that:

On the other hand, from (4.23), we deduce that:

It follows that, for |n| large enough:

Hence, by Rouché’s theorem, there exists \(n_0\in \mathbb {N}^*\) large enough such that, for all \(|n|\ge n_0\ \ \left( n\in \mathbb {Z}^*\right) \), the first branch of roots of F, denoted by \(\lambda _{1,n}\), is close to \(\mu _{1,n}\); hence, we get (4.32). The same procedure yields (4.33). Thus, the proof is complete. \(\square \)
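The functions \(F\) and \(f_0\) of (4.23)–(4.24) are not reproduced here, so the following Python sketch only illustrates the mechanism of the proof on a hypothetical toy pair: \(f_0(\lambda )=1-e^{-\lambda }\), whose zeros are exactly \(2n\pi i\) (the centers of the disks \(B_n\)), and \(F=f_0+1/\lambda \). It counts zeros in \(B_n\) with the argument principle and checks the Rouché condition \(|F-f_0|<|f_0|\) at sampled points of \(\partial B_n\), with \(r_n=|n|^{-1/4}\) as above.

```python
import cmath
import math


def circle(center, radius, samples):
    """Equally spaced points z_k = center + radius * exp(2*pi*i*k/samples)."""
    return [center + radius * cmath.exp(2j * math.pi * k / samples) for k in range(samples)]


def zeros_in_disk(f, center, radius, samples=4096):
    """Zeros of an analytic f in |z - center| < radius (argument principle):
    the winding number of f(z) around 0 as z runs once around the circle."""
    vals = [f(z) for z in circle(center, radius, samples)]
    total = 0.0
    for k in range(samples):
        # phase increment between consecutive samples (wrapping back to the first)
        total += cmath.phase(vals[(k + 1) % samples] / vals[k])
    return round(total / (2 * math.pi))


def rouche_margin(f0, g, center, radius, samples=4096):
    """Sampled min |f0| - max |g| on the circle; a positive value indicates |g| < |f0|
    there, so f0 and f0 + g have the same number of zeros inside (Rouche)."""
    pts = circle(center, radius, samples)
    return min(abs(f0(z)) for z in pts) - max(abs(g(z)) for z in pts)


# Hypothetical toy pair, NOT the paper's f_0 and F: zeros of f0 are exactly 2*n*pi*i.
def f0(lam):
    return 1 - cmath.exp(-lam)


def g(lam):
    return 1 / lam


def F(lam):
    return f0(lam) + g(lam)


for n in (5, 20, -20, 100):
    center, r_n = 2j * math.pi * n, abs(n) ** -0.25
    print(n, rouche_margin(f0, g, center, r_n),
          zeros_in_disk(f0, center, r_n), zeros_in_disk(F, center, r_n))
```

For this toy pair, the radius \(|n|^{-1/4}\) shrinks, so each disk isolates a single zero of \(f_0\), yet it shrinks slowly enough that \(|f_0|\) on \(\partial B_n\) (of order \(r_n\)) dominates the perturbation \(1/\lambda \), which is of order \(|n|^{-1}\).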

Remark 4.7

From Lemma 4.6, we deduce that the real parts of the eigenvalues of \(\mathcal {A}_2\) tend to zero, which is enough to prove Theorem 4.3. However, we also need the precise asymptotic behavior of the real parts of \(\lambda _{1,n}\) and \(\lambda _{2,n}\), since they are used at the end of this section to prove the optimality of the polynomial decay rate. \(\square \)

We are now in a position to conclude the proof of Proposition 4.4.

Proof of Proposition 4.4

The proof is divided into two steps.

Step 1. Calculation of \(\epsilon _{1,n}\). From (4.32), we have:

and

On the other hand, since \(\lim _{|n|\rightarrow +\infty }\epsilon _{1,n}=0\), we have the asymptotic expansion:

Inserting (4.38) in (4.36), we get:

Substituting (4.37) and (4.39) in (4.23), we get:

We distinguish two cases:

Case 1. If \(\sin \left( \frac{c}{4}\right) \ne 0,\) then:

therefore, from (4.40), we get:

hence, we get:

Inserting (4.41) in (4.32), we get (4.17) and (4.19).

Case 2. If \(\sin \left( \frac{c}{4}\right) =0,\) then:

Consequently, from (4.40), we get:

Solving Eq. (4.42), we get:

Inserting (4.43) in (4.32), we get (4.21).

Step 2. Calculation of \(\epsilon _{2,n}\). We distinguish three cases:

Case 1. If \(\sin \left( \frac{c}{4}\right) \ne 0\) and \(\cos \left( \frac{c}{4}\right) \ne 0\), then \(0<\cos ^2\left( \frac{c}{4}\right) < 1.\) Therefore:

From (4.33), we have:

Inserting (4.33) and (4.44) in (4.23), we get:

From (4.33), we obtain:

Since \(\zeta =\arccos \left( \cos ^2\left( \frac{c}{4}\right) \right) \in \left( 0,\frac{\pi }{2}\right) \), we have:

On the other hand, since \(\lim _{|n|\rightarrow +\infty }\epsilon _{2,n}=0\), we have the asymptotic expansion:

Inserting (4.47) and (4.48) in (4.46), we get:

Inserting (4.49) in (4.45), we get:

Consequently, since \(\cos \left( \frac{c}{4}\right) \ne 0\) in this case, we get:

Substituting (4.50) in (4.33), we get (4.18).

Case 2. If \(\cos \left( \frac{c}{4}\right) = 0\), then:

In this case, \(\lambda _{2,n}\) becomes:

Therefore, we have:

On the other hand, since \(\lim _{|n|\rightarrow +\infty }\epsilon _{2,n}=0\), we have the asymptotic expansion:

Inserting (4.54) in (4.53), we get:

Moreover, from (4.52), we get:

Inserting (4.51), (4.55), and (4.56) in (4.23), we get:

From (4.57), we get:

hence:

Inserting (4.58) in (4.57), we get:

therefore:

Inserting (4.58) in (4.59), we get:

Finally, inserting (4.60) in (4.52), we get (4.20).

Case 3. If \(\sin \left( \frac{c}{4}\right) = 0\), then:

In this case, \(\lambda _{2,n}\) becomes:

As in Case 2, from (4.62) and using the fact that \(\lim _{|n|\rightarrow +\infty }\epsilon _{2,n}=0\), we have the asymptotic expansion:

Moreover, from (4.62), we get:

Inserting (4.61), (4.63), and (4.64) in (4.23), we get:

As in Case 2, solving Eq. (4.65), we get:

Finally, inserting (4.66) in (4.62), we get (4.22). Thus, the proof is complete. \(\square \)

Proof of Theorem 4.3

From Proposition 4.4, the operator \(\mathcal {A}_2\) has two branches of eigenvalues whose real parts tend to zero. Hence, the energy associated with each of the two branches does not decay exponentially. Therefore, the total energy of the Timoshenko System (1.1)–(1.2) with local Kelvin–Voigt damping and Dirichlet–Neumann boundary conditions (1.4) does not decay exponentially in the equal speed case. \(\square \)

Our second result in this part is the following theorem.

Theorem 4.8

Under hypothesis (H2), for all initial data \(U_0\in D\left( \mathcal {A}_2\right) \) and for all \(t>0,\) if there exists \(\kappa \in \mathbb {N}\) such that \(c:=\sqrt{\frac{k_1}{k_2}}=2\kappa \pi \), then the energy decay rate in (3.1) is optimal; i.e., for \(\epsilon >0\) small enough, we cannot expect the energy decay rate \(t^{-\frac{2}{{2-\epsilon }}}\).

For the proof of Theorem 4.8, we first recall Theorem 3.4.1 stated in Nadine (2016).

Theorem 4.9

Let \(A:D(A)\subset H\rightarrow H \) generate a C\(_0\)-semigroup of contractions \(\left( e^{t A}\right) _{t\ge 0}\) on H. Assume that \(i\mathbb {R}\subset \rho (A)\). Let \(\left( \lambda _{k,n}\right) _{1\le k\le k_0,\ n\ge 1}\) denote the kth branch of eigenvalues of A and \(\left( e_{k,n}\right) _{1\le k\le k_0,\ n\ge 1}\) the system of normalized associated eigenvectors. Assume that for each \(1\le k\le k_0\), there exist a positive sequence \(\mu _{k,n}\rightarrow \infty \) as \(n\rightarrow \infty \) and two positive constants \(\alpha _k>0, \beta _k>0\) such that:

Here, \(\Im \) denotes the imaginary part of a complex number. Furthermore, assume that for \(u_0\in D(A)\), there exists a constant \(M>0\), independent of \(u_0\), such that:

Then, the decay rate (4.68) is optimal in the sense that for any \(\epsilon >0\), we cannot expect the energy decay rate \(t^{-\frac{2}{\ell _k-\epsilon }}.\) \(\square \)

Proof of Theorem 4.8

Assume that (H2) holds. First, by Theorem 3.1, for all initial data \(U_0\in D\left( \mathcal {A}_2\right) \) and for all \(t>0,\) we get (4.68) with \(\ell _k=2\). Furthermore, from Proposition 4.4 (Cases 2 and 3), we observe that:

Case 1. If there exists \(\kappa _0\in \mathbb {N}\), such that \(c=2\left( 2\kappa _0+1\right) \pi \), we have:

then (4.67) holds with \(\alpha _1=\frac{1}{2}\) and \(\alpha _2=2\). Therefore, \(\ell _{k}=2=\max (\alpha _1,\alpha _2).\) Then, applying Theorem 4.9, we get that the energy decay rate in (3.1) is optimal.

Case 2. If there exists \(\kappa _1\in \mathbb {N}\), such that \(c=4\kappa _1 \pi \), we have:

then (4.67) holds with \(\alpha _1=2\) and \(\alpha _2=2\). Therefore, \(\ell _{k}=2=\max (\alpha _1,\alpha _2).\) Then, applying Theorem 4.9, we get that the energy decay rate in (3.1) is optimal. \(\square \)
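Condition (4.67) is not reproduced here; in the proof above it enters through exponents \(\alpha _1,\alpha _2\) describing how fast the real parts of the two branches tend to zero, with \(\ell _k=\max (\alpha _1,\alpha _2)\) and energy decay \(t^{-2/\ell _k}\). As an illustration only, assuming that \(|\Re \lambda _{k,n}|\) decays like a power of \(|\Im \lambda _{k,n}|\), the Python sketch below estimates that power by a least-squares fit in log–log scale on synthetic branches with exponents \(\frac{1}{2}\) and \(2\), mimicking Case 1 above. The data and the name `real_part_exponent` are hypothetical; they are not the paper's formulas (4.19)–(4.20).

```python
import math


def real_part_exponent(branch):
    """Least-squares slope alpha in log|Re lam| ~ C - alpha * log|Im lam|."""
    xs = [math.log(abs(lam.imag)) for lam in branch]
    ys = [math.log(abs(lam.real)) for lam in branch]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return -sxy / sxx


# Synthetic branches (hypothetical data, not the eigenvalues of A_2):
# |Re| = |Im|^(-1/2) on the first branch and |Im|^(-2) on the second.
branch1 = [complex(-(2 * math.pi * n) ** -0.5, 2 * math.pi * n) for n in range(10, 200)]
branch2 = [complex(-((2 * n + 1) * math.pi) ** -2, (2 * n + 1) * math.pi) for n in range(10, 200)]

alphas = [real_part_exponent(b) for b in (branch1, branch2)]
ell = max(alphas)            # plays the role of ell_k = max(alpha_1, alpha_2)
energy_exponent = 2 / ell    # energy decay of type t^(-2/ell)
print(alphas, ell, energy_exponent)
```

With \(\ell =2\), the fitted energy exponent is \(2/\ell =1\), i.e., the rate \(t^{-1}\) of (3.1).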

Remark 4.10

It would be very interesting to study the optimal decay rate for the Timoshenko System (1.1)–(1.2) with Dirichlet–Neumann boundary conditions (1.4) when \(\frac{\rho _1}{k_1}\ne \frac{\rho _2}{k_2}\), or with fully Dirichlet boundary conditions (1.3). However, in these cases, the eigenvalues can no longer be computed explicitly as in Proposition 4.4. \(\square \)

Change history

05 March 2022

A Correction to this paper has been published: https://doi.org/10.1007/s40314-021-01755-5

References

Abdallah F, Ghader M, Wehbe A (2018) Stability results of a distributed problem involving Bresse system with history and/or Cattaneo law under fully Dirichlet or mixed boundary conditions. Math Methods Appl Sci 41(5):1876–1907

Akil M, Chitour Y, Ghader M, Wehbe A (2019) Stability and exact controllability of a Timoshenko system with only one fractional damping on the boundary. Asympt Anal 119:1–60

Alabau-Boussouira F (2004) A general formula for decay rates of nonlinear dissipative systems. Comptes Rendus Mathematique 338(1):35–40

Ammar-Khodja F, Benabdallah A, Rivera JM, Racke R (2003) Energy decay for Timoshenko systems of memory type. J Differ Equ 194(1):82–115

Ammar-Khodja F, Kerbal S, Soufyane A (2007) Stabilization of the nonuniform Timoshenko beam. J Math Anal Appl 327(1):525–538

Araruna FD, Zuazua E (2008) Controllability of the Kirchhoff system for beams as a limit of the Mindlin–Timoshenko system. SIAM J Control Optim 47(4):1909–1938

Arendt W, Batty CJK (1988) Tauberian theorems and stability of one-parameter semigroups. Trans Am Math Soc 306(2):837–852

Bassam M, Mercier D, Nicaise S, Wehbe A (2015) Polynomial stability of the Timoshenko system by one boundary damping. J Math Anal Appl 425(2):1177–1203

Batty CJK, Duyckaerts T (2008) Non-uniform stability for bounded semi-groups on Banach spaces. J Evol Equ 8(4):765–780

Benaissa A, Benazzouz S (2017) Well-posedness and asymptotic behavior of Timoshenko beam system with dynamic boundary dissipative feedback of fractional derivative type. Zeitschrift für angewandte Mathematik und Physik 68(4):68–94

Borichev A, Tomilov Y (2010) Optimal polynomial decay of functions and operator semigroups. Math Ann 347(2):455–478

Cavalcanti MM, Cavalcanti VND, Nascimento FAF, Lasiecka I, Rodrigues JH (2013) Uniform decay rates for the energy of Timoshenko system with the arbitrary speeds of propagation and localized nonlinear damping. Zeitschrift für angewandte Mathematik und Physik 65(6):1189–1206

Curtain RF, Zwart H (1995) An introduction to infinite-dimensional linear systems theory, texts in applied mathematics, vol 21. Springer, New York

Almeida Júnior DdaS, Santos ML, Muñoz Rivera JE (2013) Stability to 1-D thermoelastic Timoshenko beam acting on shear force. Zeitschrift für angewandte Mathematik und Physik 65(6):1233–1249

Fatori LH, Monteiro RN, Sare HDF (2014) The Timoshenko system with history and Cattaneo law. Appl Math Comput 228:128–140

Guesmia A, Messaoudi SA (2009) General energy decay estimates of Timoshenko systems with frictional versus viscoelastic damping. Math Methods Appl Sci 32(16):2102–2122

Hao J, Wei J (2018) Global existence and stability results for a nonlinear Timoshenko system of thermoelasticity of type III with delay. Bound Value Probl 2018(1):1–17

Huang F (1988) On the mathematical model for linear elastic systems with analytic damping. SIAM J Control Optim 26(3):714–724

Huang FL (1985) Characteristic conditions for exponential stability of linear dynamical systems in Hilbert spaces. Ann Differ Equ 1(1):43–56

Kato T (1995) Perturbation theory for linear operators. Springer, Berlin

Kim JU, Renardy Y (1987) Boundary control of the Timoshenko beam. SIAM J Control Optim 25(6):1417–1429

Littman W, Markus L (1988) Stabilization of a hybrid system of elasticity by feedback boundary damping. Annali di Matematica Pura ed Applicata 152(1):281–330

Liu K, Chen S, Liu Z (1998) Spectrum and stability for elastic systems with global or local Kelvin–Voigt damping. SIAM J Appl Math 59(2):651–668

Liu K, Liu Z (1998) Exponential decay of energy of the Euler–Bernoulli beam with locally distributed Kelvin–Voigt damping. SIAM J Control Optim 36(3):1086–1098

Liu K, Liu Z, Zhang Q (2017) Eventual differentiability of a string with local Kelvin–Voigt damping. ESAIM Control Optim Calc Var 23(2):443–454

Liu Z, Rao B (2005) Characterization of polynomial decay rate for the solution of linear evolution equation. Z Angew Math Phys 56(4):630–644

Liu Z, Zhang Q (2016) Stability of a string with local Kelvin–Voigt damping and non smooth coefficient at interface. SIAM J Control Optim 54(4):1859–1871

Liu Z, Zhang Q (2018) Stability and regularity of solution to the Timoshenko beam equation with local Kelvin–Voigt damping. SIAM J Control Optim 56(6):3919–3947

Liu Z, Zheng S (1999) Semigroups associated with dissipative systems. Research notes in mathematics, vol 398. Chapman & Hall, Boca Raton

Messaoudi SA, Mustafa MI (2008) On the internal and boundary stabilization of Timoshenko beams. NoDEA Nonlinear Differ Equ Appl 15(6):655–671

Messaoudi SA, Said-Houari B (2009) Uniform decay in a Timoshenko-type system with past history. J Math Anal Appl 360(2):459–475

Muñoz Rivera JE, Racke R (2002) Mildly dissipative nonlinear Timoshenko systems—global existence and exponential stability. J Math Anal Appl 276(1):248–278

Muñoz Rivera JE, Racke R (2008) Timoshenko systems with indefinite damping. J Math Anal Appl 341(2):1068–1083

Nadine N (2016) Étude de la stabilisation exponentielle et polynomiale de certains systèmes d’équations couplées par des contrôles indirects bornés ou non bornés. Thèse université de Valenciennes

Pazy A (1983) Semigroups of linear operators and applications to partial differential equations. Applied mathematical sciences, vol 44. Springer, New York

Prüss J (1984) On the spectrum of \(C_{0}\)-semigroups. Trans Am Math Soc 284(2):847–857

Raposo CA, Ferreira J, Santos ML, Castro NNO (2005) Exponential stability for the Timoshenko system with two weak dampings. Appl Math Lett 18(5):535–541

Renardy M (2004) On localized Kelvin–Voigt damping. ZAMM 84(4):280–283

Sare HDF, Racke R (2009) On the stability of damped Timoshenko systems: Cattaneo versus Fourier law. Arch Ration Mech Anal 194(1):221–251

Soufyane A, Wehbe A (2003) Uniform stabilization for the Timoshenko beam by a locally distributed damping. Electron J Differ Equ 9:1–14

Tebou L (2015) A localized nonstandard stabilizer for the Timoshenko beam. Comptes Rendus Mathematique 353(3):247–253

Tian X, Zhang Q (2017) Stability of a Timoshenko system with local Kelvin–Voigt damping. Zeitschrift für angewandte Mathematik und Physik 68(1):1–15

Timoshenko S (1921) LXVI. On the correction for shear of the differential equation for transverse vibrations of prismatic bars. Lond Edinb Dublin Philos Mag J Sci 41(245):744–746

Wehbe A, Youssef W (2009) Stabilization of the uniform Timoshenko beam by one locally distributed feedback. Appl Anal 88(7):1067–1078

Zhang Q (2010) Exponential stability of an elastic string with local Kelvin–Voigt damping. Zeitschrift für angewandte Mathematik und Physik 61(6):1009–1015

Zhao HL, Liu KS, Zhang CG (2004) Stability for the Timoshenko beam system with local Kelvin–Voigt damping. Acta Mathematica Sinica Engl Ser 21(3):655–666

Acknowledgements

The authors would like to thank the referees for their valuable comments and useful suggestions.

Additional information

Communicated by Eduardo Cerpa.

About this article

Cite this article

Wehbe, A., Ghader, M. A transmission problem for the Timoshenko system with one local Kelvin–Voigt damping and non-smooth coefficient at the interface. Comp. Appl. Math. 40, 297 (2021). https://doi.org/10.1007/s40314-021-01446-1

DOI: https://doi.org/10.1007/s40314-021-01446-1