Abstract

In this paper, we derive the limit distribution of the least squares estimator for an AR(1) model with a non-zero intercept and a possibly infinite variance. It turns out that the estimator has quite different limits in the cases \(|\rho | < 1\), \(|\rho | > 1\), and \(\rho = 1 + \frac{c}{n^\alpha }\) for some constant \(c \in {\mathbb {R}}\) and \(\alpha \in (0, 1]\). Moreover, whether or not the variance of the model errors is infinite has a great impact on both the convergence rate and the limit distribution of the estimator.


1 Introduction

As a simple but useful tool, autoregressive (AR) models have been widely used in economics and many other fields. The simplest among them is the first-order autoregressive process, i.e., the AR(1) model, which is usually defined as

$$\begin{aligned} y_t = \mu + \rho y_{t-1} + e_t, \quad t = 1, 2, \ldots , n, \qquad (1) \end{aligned}$$

where \(y_0\) is a constant and the \(e_t\)’s are independent and identically distributed (hereafter, i.i.d.) random errors with mean zero and finite variance. The process \(\{y_t\}\) is (i) stationary if \(|\rho |<1\) independent of n, (ii) a unit root process if \(\rho =1\), (iii) near unit root if \(\rho =1+c/n\) for some nonzero constant c, (iv) explosive if \(|\rho | > 1\) independent of n, and (v) a moderate deviation from a unit root if \(\rho =1+c/k_n\) for some nonzero constant c and a sequence \(\{k_n\}\) satisfying \(k_n\rightarrow \infty \) and \(k_n/n\rightarrow 0\) as \(n\rightarrow \infty \).
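The regimes listed above can be made concrete with a small simulation. The minimal Python sketch below assumes the recursion \(y_t = \mu + \rho y_{t-1} + e_t\) with a given initial value \(y_0\). The helper names (`simulate_ar1`, `student_t2`) and all parameter values are illustrative choices and are not part of the paper. Student's t errors with 2 degrees of freedom serve as one example of innovations with infinite variance.

```python
import random


def simulate_ar1(n, mu, rho, y0, errors):
    """Return [y_0, y_1, ..., y_n] from y_t = mu + rho * y_{t-1} + e_t.

    `errors` holds e_1, ..., e_n; the caller chooses their distribution.
    """
    if len(errors) != n:
        raise ValueError("need exactly n errors e_1, ..., e_n")
    y = [y0]
    for e in errors:
        y.append(mu + rho * y[-1] + e)
    return y


def student_t2(rng):
    """One draw from Student's t with 2 degrees of freedom (infinite variance).

    Uses t = Z / sqrt(V / 2) with Z ~ N(0, 1) and V ~ chi-square(2),
    where a chi-square(2) variable is a sum of two squared standard normals.
    """
    z = rng.gauss(0.0, 1.0)
    v = rng.gauss(0.0, 1.0) ** 2 + rng.gauss(0.0, 1.0) ** 2
    return z / (v / 2.0) ** 0.5


# Hypothetical example: one error sequence fed through several regimes.
n = 400
rng = random.Random(2023)
errors = [student_t2(rng) for _ in range(n)]
regimes = {
    "P1 stationary": 0.5,
    "P2 explosive": 1.02,
    "P3 unit root": 1.0,
    "P4 near unit root (c = -5)": 1.0 - 5.0 / n,
    "P5 moderate deviation (c = -1, alpha = 0.5)": 1.0 - 1.0 / n ** 0.5,
    "P6 moderate deviation (c = 1, alpha = 0.5)": 1.0 + 1.0 / n ** 0.5,
}
paths = {name: simulate_ar1(n, 1.0, rho, 0.0, errors) for name, rho in regimes.items()}
for name, path in paths.items():
    print(f"{name:45s} y_n = {path[-1]:.3e}")
```

Reusing one error sequence across regimes isolates the effect of \(\rho \). The explosive paths (P2 and P6) grow by orders of magnitude, while the others stay moderate.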

When \(\mu = 0\) and the error variance in model (1) is finite, it is well known that the least squares estimator of \(\rho \) has quite different limit distributions in the stationary, unit root, and near unit root cases; see [17]. The convergence rate of the estimator of the correlation coefficient is \(\sqrt{n}\) in case (i) and n in cases (ii) and (iii), and, as stated in [19], it may even be \((1 + c)^n\) in case (v) for some \(c > 0\). More studies on this model can be found in [1, 2, 4, 8, 9, 13, 16, 20] and the references therein.

When \(\mu \ne 0\) and the variance is finite, [21] and [11] studied the limit theory for the AR(1) model in cases (iv) and (v), respectively. They showed that, compared to [19], including a nonzero intercept may drastically change the large sample properties of the least squares estimator. More recently, [15] used the empirical likelihood method to construct a uniform confidence region for \((\mu , \rho )\) that is valid regardless of which of (i)–(v) holds.

Note that \(e_t\) may have an infinite variance in practice [5, 18], whereas most of the aforementioned research focused on the case where \(e_t\) has a finite variance. In this paper, we consider model (1) when \(\mu \ne 0\) and the variance of \(e_t\) is possibly infinite. We derive the limit distribution of the least squares estimator of \((\mu , \rho )\) in the following cases:

- (P1) \(|\rho |<1\) independent of n;
- (P2) \(|\rho | > 1\) independent of n;
- (P3) \(\rho = 1\);
- (P4) \(\rho = 1 + \frac{c}{n}\) for some constant \(c \ne 0\);
- (P5) \(\rho = 1 + \frac{c}{n^\alpha }\) for some constants \(c < 0\) and \(\alpha \in (0, 1)\);
- (P6) \(\rho = 1 + \frac{c}{n^\alpha }\) for some constants \(c > 0\) and \(\alpha \in (0, 1)\).

Since the current paper allows for both an intercept and a possibly infinite variance, it can be regarded as an extension of the existing literature, e.g., [11, 14, 17, 19, 21].

We organize the rest of this paper as follows. Section 2 provides the methodology and main limit results. Detailed proofs are put in Section 3.

2 Methodology and main results

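Under model (1), by minimizing the sum of squares

$$\begin{aligned} \sum _{t=1}^n (y_t - \mu - \rho y_{t-1})^2 \end{aligned}$$

with respect to \((\mu , \rho )^\top \), we obtain the least squares estimator of \((\mu ,\rho )^\top \):

$$\begin{aligned} \begin{pmatrix} {\hat{\mu }}\\ {\hat{\rho }} \end{pmatrix} = \begin{pmatrix} n & \sum _{t=1}^n y_{t-1}\\ \sum _{t=1}^n y_{t-1} & \sum _{t=1}^n y_{t-1}^2 \end{pmatrix}^{-1} \begin{pmatrix} \sum _{t=1}^n y_t\\ \sum _{t=1}^n y_{t-1}y_t \end{pmatrix}. \end{aligned}$$
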
Here \(A^\top \) denotes the transpose of the matrix or vector A. In the sequel, we will investigate the limit distribution of \(({\hat{\mu }}-\mu , {\hat{\rho }}-\rho )^\top .\)
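The Python sketch below transcribes the least squares estimator for model (1) directly. It assumes the regressors are an intercept and \(y_{t-1}\). The function name `ls_estimator` is illustrative. The sketch solves the 2×2 normal equations in closed form rather than calling a linear-algebra library.

```python
def ls_estimator(y):
    """Least squares estimate (mu_hat, rho_hat) for y_t = mu + rho * y_{t-1} + e_t.

    `y` is the observed path [y_0, y_1, ..., y_n].
    """
    n = len(y) - 1
    if n < 2:
        raise ValueError("need at least two transitions")
    lagged, current = y[:-1], y[1:]
    s_x = sum(lagged)                                    # sum of y_{t-1}
    s_xx = sum(v * v for v in lagged)                    # sum of y_{t-1}^2
    s_y = sum(current)                                   # sum of y_t
    s_xy = sum(a * b for a, b in zip(lagged, current))   # sum of y_{t-1} y_t
    det = n * s_xx - s_x * s_x   # determinant of the 2x2 design matrix
    if det == 0:
        raise ValueError("singular design: y_0, ..., y_{n-1} are all equal")
    # Inverse of [[n, s_x], [s_x, s_xx]] applied to (s_y, s_xy).
    mu_hat = (s_xx * s_y - s_x * s_xy) / det
    rho_hat = (n * s_xy - s_x * s_y) / det
    return mu_hat, rho_hat


# Noise-free path with mu = 1, rho = 0.5: the fit is exact up to rounding.
path = [0.0]
for _ in range(10):
    path.append(1.0 + 0.5 * path[-1])
print(ls_estimator(path))
```

The singular-design check matters in practice. If the path starts exactly at \(\mu /(1-\rho )\) and there is no noise, every regressor value is identical, and the intercept and slope cannot be separated.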

To derive the limit distribution of this least squares estimator, we follow [19] by assuming that

- (C1) The innovations \(\{e_t\}\) are i.i.d. with \(E[e_t]=0\);
- (C2) The process is initialized at \(y_0=O_p(1)\).

Since the variance of the \(e_t\)’s may not exist, we follow [14] and use the slowly varying function \(l(x)=E[e_t^2I(|e_t|\le x)]\) to characterize the dispersion of the random errors. We assume that it satisfies

- (C3) \(l(nx)/l(n) \rightarrow 1\) as \(n \rightarrow \infty \) for any \(x > 0\).

One example of a slowly varying function arises when l(x) has a finite limit, say \(\lim \limits _{x\rightarrow \infty }l(x)=\sigma ^2\), in which case \(e_t\) has finite variance \(\sigma ^2\). Another example is \(l(x)=\log (x)\), \(x>1\), in which case the variance of \(e_t\) does not exist. A known property of l(x) is that \(l(x)=o(x^{\varepsilon })\) as \(x\rightarrow \infty \) for any \(\varepsilon >0\); more properties of l(x) can be found in [10]. To deal with the possibly infinite variance, we introduce the following sequence \(\{b_k\}_{k=0}^\infty \), where

and

which imply directly \(n l(b_n)\le b_n^2\) for all \(n\ge 1\); see also [12].
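The two examples of l(x) given above can be checked numerically. The Python sketch below evaluates l(x) in closed form for two error distributions:

- Standard normal errors, where l(x) tends to \(\sigma ^2 = 1\).
- Student's t errors with 2 degrees of freedom, whose variance is infinite.

The t_2 choice is ours. Its density \(f(u) = (2+u^2)^{-3/2}\) yields the closed form \(l(x) = 2[\operatorname{asinh}(x/\sqrt{2}) - x/\sqrt{2+x^2}]\), which grows like \(2\log x\). In the output, the ratios l(2x)/l(x) drift toward 1, as (C3) requires, but only slowly.

```python
import math


def l_student_t2(x):
    """l(x) = E[e^2 I(|e| <= x)] for e ~ Student t with 2 degrees of freedom.

    The t_2 density is f(u) = (2 + u^2)^(-3/2), and an antiderivative of
    u^2 f(u) is asinh(u / sqrt(2)) - u / sqrt(2 + u^2).
    """
    return 2.0 * (math.asinh(x / math.sqrt(2.0)) - x / math.sqrt(2.0 + x * x))


def l_normal(x):
    """l(x) = E[e^2 I(|e| <= x)] for e ~ N(0, 1); it tends to sigma^2 = 1."""
    phi = math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)
    return math.erf(x / math.sqrt(2.0)) - 2.0 * x * phi


for x in (1e1, 1e3, 1e6, 1e12):
    print(f"x={x:.0e}  l_t2(x)={l_student_t2(x):7.3f}  "
          f"l_t2(2x)/l_t2(x)={l_student_t2(2 * x) / l_student_t2(x):.4f}  "
          f"l_normal(x)={l_normal(x):.6f}")
```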

For convenience, in the sequel we still call \(|\rho | < 1\) the stationary case, \(\rho = 1\) the unit root case, \(\rho = 1 + \frac{c}{n}\) for some \(c\ne 0\) the near unit root case, \(\rho = 1 + \frac{c}{n^\alpha }\) for some \(c \ne 0\) and \(\alpha \in (0, 1)\) the moderate deviation case, and \(|\rho | > 1\) the explosive case, even when the variance of \(e_t\) is infinite. We divide the theoretical derivations into four subsections.

2.1 Limit theory for the stationary case

We first consider the stationary case \(|\rho | < 1\), where \(\rho \) does not depend on n. Observe that

We write \({\bar{y}}_t = y_t - \frac{\mu }{1 - \rho }\), and then have

$$\begin{aligned} {\bar{y}}_t = \rho {\bar{y}}_{t-1} + e_t. \end{aligned}$$

To prove the main result for this case, we need the following preliminary lemma.

Lemma 1

Suppose conditions (C1)–(C3) hold. Under P1, as \(n\rightarrow \infty \), we have

and

Based on Lemma 1, we can show the following theorem.

Theorem 1

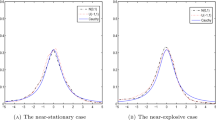

Under conditions (C1)–(C3), as \(n\rightarrow \infty \), we have under P1 that

where \(X_1= W_1 - \frac{\mu (1+\rho )}{\sigma ^2}W_2\) and \(X_2 = (1-\rho ^2)W_2\) if \(\lim \limits _{m \rightarrow \infty } l(b_m) = \sigma ^2\), and \(X_1 = W_1\) and \(X_2 = (1-\rho ^2)W_2\) if \(\lim \limits _{m \rightarrow \infty } l(b_m) = \infty \).
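A small Monte Carlo experiment can illustrate the classical \(\sqrt{n}\)-normalized behaviour of \({\hat{\rho }}\) in the finite-variance stationary case. The Python sketch below assumes standard normal errors (so \(\sigma ^2 = 1\)), \(\mu = 1\) and \(\rho = 0.5\). It compares the sample variance of \(\sqrt{n}({\hat{\rho }}-\rho )\) with \(1-\rho ^2\), the variance of \(X_2\) given in Remark 2. The sample size, replication count and seed are arbitrary illustrative choices. The helper `ls_estimator` repeats the closed-form 2×2 least squares solution so that the block is self-contained.

```python
import random
import statistics


def ls_estimator(y):
    """Closed-form least squares (mu_hat, rho_hat) from a path [y_0, ..., y_n]."""
    n = len(y) - 1
    lagged, current = y[:-1], y[1:]
    s_x, s_y = sum(lagged), sum(current)
    s_xx = sum(v * v for v in lagged)
    s_xy = sum(a * b for a, b in zip(lagged, current))
    det = n * s_xx - s_x * s_x
    return (s_xx * s_y - s_x * s_xy) / det, (n * s_xy - s_x * s_y) / det


def scaled_rho_errors(n, mu, rho, reps, seed):
    """Monte Carlo draws of sqrt(n) * (rho_hat - rho) under P1 with N(0, 1) errors."""
    rng = random.Random(seed)
    draws = []
    for _ in range(reps):
        y = [mu / (1.0 - rho)]  # start at the stationary mean
        for _ in range(n):
            y.append(mu + rho * y[-1] + rng.gauss(0.0, 1.0))
        _, rho_hat = ls_estimator(y)
        draws.append(n ** 0.5 * (rho_hat - rho))
    return draws


draws = scaled_rho_errors(n=1000, mu=1.0, rho=0.5, reps=400, seed=1)
print("sample variance:", statistics.variance(draws), " target 1 - rho^2:", 1 - 0.5 ** 2)
```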

Remark 1

Theorem 1 indicates that a possibly infinite variance may affect the convergence rate of the least squares estimator of the intercept, but has no impact on that of \(\rho \).

Remark 2

When \(\lim \limits _{m\rightarrow \infty } l(b_m)\) exists and is equal to \(\sigma ^2\), we have \((X_1, X_2)^\top \sim N(0, \Sigma _1)\), where \(\Sigma _1 = (\sigma _{ij}^2)_{1\le i,j\le 2}\) with \(\sigma _{11}^2 = 1 + \frac{\mu ^2(1 + \rho )}{\sigma ^4 (1 - \rho )}\), \(\sigma _{12}^2 = \sigma _{21}^2 = - \frac{\mu (1+\rho )}{\sigma ^2}\) and \(\sigma _{22}^2 = 1 - \rho ^2\). That is, the limit distribution reduces to the ordinary case; see [15] and references therein for details.
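The entries of \(\Sigma _1\) can be reproduced from the linear forms in Theorem 1 under one assumption, which is our reading since Lemma 1's display is not reproduced here: \(W_1\) and \(W_2\) are independent centered normals with \(\mathrm{Var}(W_1) = 1\) and \(\mathrm{Var}(W_2) = 1/(1-\rho ^2)\). Under that assumption, \((X_1, X_2)^\top = A(W_1, W_2)^\top \) for an upper-triangular matrix A.

The Python sketch below checks numerically that \(A\,\mathrm{diag}(1, 1/(1-\rho ^2))A^\top = \Sigma _1\). It also checks that \(\det \Sigma _1 = 1-\rho ^2\). This identity follows by expanding the determinant and shows that \(\Sigma _1\) is positive definite for \(|\rho |<1\).

```python
def sigma1_remark2(mu, rho, sigma2):
    """Entries (s11, s12, s22) of Sigma_1 as written in Remark 2 (sigma2 = sigma^2)."""
    s11 = 1.0 + mu ** 2 * (1.0 + rho) / (sigma2 ** 2 * (1.0 - rho))
    s12 = -mu * (1.0 + rho) / sigma2
    s22 = 1.0 - rho ** 2
    return s11, s12, s22


def sigma1_from_linear_map(mu, rho, sigma2):
    """A D A^T with A = [[1, -mu(1+rho)/sigma2], [0, 1-rho^2]] and
    D = diag(1, 1/(1-rho^2)), an ASSUMED covariance of (W_1, W_2)."""
    a12 = -mu * (1.0 + rho) / sigma2
    a22 = 1.0 - rho ** 2
    v1, v2 = 1.0, 1.0 / (1.0 - rho ** 2)
    return v1 + a12 ** 2 * v2, a12 * a22 * v2, a22 ** 2 * v2


for mu, rho, sigma2 in [(1.0, 0.5, 1.0), (-2.0, -0.3, 4.0), (0.5, 0.9, 0.25)]:
    direct = sigma1_remark2(mu, rho, sigma2)
    mapped = sigma1_from_linear_map(mu, rho, sigma2)
    s11, s12, s22 = direct
    print(mu, rho, sigma2, direct, mapped, "det:", s11 * s22 - s12 ** 2)
```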

2.2 Limit theory for the explosive case

For this case, let \({\tilde{y}}_t=\sum _{i=1}^t \rho ^{t-i}e_i+\rho ^t y_0\), then

Along the same lines as Section 2.1, we first derive a preliminary lemma.

Lemma 2

Suppose conditions (C1)–(C3) hold. Under P2, as \(n\rightarrow \infty \), we have

and

where \(U_1 \sim \lim \limits _{m\rightarrow \infty } \frac{1}{\sqrt{l(b_m)}}\sum \limits _{t=1}^m\rho ^{-(m-t)}e_t\), \(U_2 \sim \rho y_0 + \lim \limits _{m\rightarrow \infty } \frac{\rho }{\sqrt{l(b_m)}}\sum \limits _{t=1}^{m-1}\rho ^{-t}e_t\), and \(W_1\) are mutually independent random variables. \(W_1\) is specified in Lemma 1.

Using this lemma, we can obtain the following theorem.

Theorem 2

Under conditions (C1)–(C3), as \(n\rightarrow \infty \), we have

under P2.

Similar to the case of \(|\rho | < 1\), Theorem 2 indicates that a possibly infinite variance affects only the convergence rate of \({\hat{\mu }}\). The joint limit distribution reduces to that obtained in [21] if \(\lim \limits _{m\rightarrow \infty } l(b_m)\) is finite.
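The display that follows the definition of \({\tilde{y}}_t\) at the start of Section 2.2 is not reproduced here. Back-substituting model (1), assumed to read \(y_t = \mu + \rho y_{t-1} + e_t\), gives one identity of this kind: \(y_t = {\tilde{y}}_t + \mu (1-\rho ^t)/(1-\rho )\) for \(\rho \ne 1\). This is our own derivation rather than a quotation of the paper. The Python sketch below checks the identity on a simulated explosive path, with \(\rho = 1.05\) as an arbitrary choice.

```python
import random


def max_decomposition_gap(n=60, mu=0.7, rho=1.05, y0=0.3, seed=7):
    """Largest |y_t - (y_tilde_t + mu (1 - rho^t) / (1 - rho))| over t = 1..n.

    y_t follows y_t = mu + rho * y_{t-1} + e_t and
    y_tilde_t = sum_{i=1}^t rho^{t-i} e_i + rho^t y_0, as in Section 2.2.
    """
    rng = random.Random(seed)
    e = [rng.gauss(0.0, 1.0) for _ in range(n)]  # e[0] holds e_1
    y = [y0]
    for t in range(1, n + 1):
        y.append(mu + rho * y[-1] + e[t - 1])
    gap = 0.0
    for t in range(1, n + 1):
        y_tilde = sum(rho ** (t - i) * e[i - 1] for i in range(1, t + 1)) + rho ** t * y0
        drift = mu * (1.0 - rho ** t) / (1.0 - rho)  # mu * (1 + rho + ... + rho^{t-1})
        gap = max(gap, abs(y[t] - (y_tilde + drift)))
    return gap


print(max_decomposition_gap())  # rounding-level, far below the size of y_n
```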

2.3 Limit theory for the unit root and near unit root cases

In these two cases, \(\rho =:\rho _n=1+\frac{c}{n}, c\in {\mathbb {R}}\). (\(c = 0\) corresponds to \(\rho = 1\), i.e., the unit root case.) Let \({\tilde{y}}_t=\sum _{i=1}^t \rho ^{t-i}e_i\), then

We have the following lemma:

Lemma 3

Let \(E_n(s)=\frac{\sum \limits _{i=1}^{\lfloor ns\rfloor }e_i}{\sqrt{nl(b_n)}}\), \(s\in [0, 1]\). Then

where \(\{{{\bar{W}}}(s), s\ge 0\}\) is a standard Brownian motion, \(\lfloor \cdot \rfloor \) is the floor function, and \(\overset{D}{\longrightarrow }\) denotes weak convergence. Moreover, define \(J_c(s) = \lim \limits _{a \rightarrow c} \frac{1-e^{as}}{-a}\); then, as \(n\rightarrow \infty \), we have under P3 and P4 that

and in turn

where \(B_c(s)=e^{2c}(e^{-2cs}-1)/(-2c)\), interpreted as its limit s when \(c = 0\).
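The limit functions in Lemma 3 have simple closed forms:

- \(J_c(s) = (e^{cs}-1)/c\), with \(J_0(s) = s\).
- Reading the exponent in \(B_c\) as \(e^{-2cs}-1\), \(B_c(s) = e^{2c}(1-e^{-2cs})/(2c) = \int _0^s e^{2c(1-r)}\,dr\), which also tends to s as \(c \rightarrow 0\).

The Python sketch below evaluates both functions with `math.expm1` to avoid cancellation when \(|cs|\) is small. It also compares \(B_c\) with a midpoint-rule approximation of the integral. The function names are illustrative.

```python
import math


def J(c, s):
    """J_c(s) = lim_{a -> c} (1 - e^{a s}) / (-a) = (e^{c s} - 1) / c; J_0(s) = s."""
    if c == 0:
        return s
    return math.expm1(c * s) / c


def B(c, s):
    """B_c(s) = e^{2c} (1 - e^{-2 c s}) / (2c); B_0(s) = s."""
    if c == 0:
        return s
    return math.exp(2.0 * c) * -math.expm1(-2.0 * c * s) / (2.0 * c)


def B_midpoint(c, s, steps=20000):
    """Midpoint-rule approximation of the integral of e^{2c(1-r)} over [0, s]."""
    h = s / steps
    return h * sum(math.exp(2.0 * c * (1.0 - (k + 0.5) * h)) for k in range(steps))


for c in (-2.0, -1e-9, 0.0, 1.5):
    print(f"c={c:+.1e}  J_c(1)={J(c, 1.0):.6f}  B_c(1)={B(c, 1.0):.6f}  "
          f"midpoint={B_midpoint(c, 1.0):.6f}")
```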

This lemma can be easily proved using techniques similar to those in [6], based on the fact that

Using this lemma, it is easy to verify the following theorem.

Theorem 3

Under the conditions (C1)–(C3), as \(n\rightarrow \infty \), we have

under P3 and P4, where

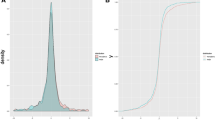

2.4 Limit theory for the moderate deviation cases

As stated in [19], the moderate deviation cases bridge the different convergence rates of cases P1–P4. That is, case P5 bridges the stationary case and the near unit root case, while case P6 bridges the explosive case and the near unit root case. These two cases must be handled differently because the martingale central limit theorem fails to hold when \(c > 0\). Following [19], we consider them separately.

The following lemma is useful in deriving the limit distribution of the least squares estimator under P5 and P6.

Lemma 4

Suppose conditions (C1)–(C3) hold.

- (i) Under P5, as \(n \rightarrow \infty \), we have

$$\begin{aligned} \begin{pmatrix} \frac{1}{\sqrt{n}}\sum \limits _{t=1}^n\frac{e_t}{\sqrt{l(b_n)}}\\ \frac{1}{\sqrt{n^\alpha }}\sum \limits _{t=1}^n\frac{\rho ^{t-1}e_t}{\sqrt{l(b_n)}}\\ \frac{1}{\sqrt{n^\alpha }}\sum \limits _{t=1}^n\frac{\rho ^{n-t}e_t}{\sqrt{l(b_n)}}\\ \frac{1}{\sqrt{n^{1+\alpha }}}\sum \limits _{t=2}^n \frac{e_t}{\sqrt{l(b_n)}} \sum \limits _{j=1}^{t-1} \frac{\rho ^{t-1-j}e_j}{\sqrt{l(b_n)}} \end{pmatrix} ~\overset{d}{\longrightarrow }~ \begin{pmatrix} V_{11}\\ V_{12}\\ V_{13}\\ V_{14} \end{pmatrix} \sim N(0, \Sigma _2), \end{aligned}$$where \(\Sigma _2= diag (1, -\frac{1}{2c},-\frac{1}{2c},-\frac{1}{2c})\), which implies that \(V_{1i}\)’s are independent;

- (ii) Under P6, as \(n \rightarrow \infty \), we have

$$\begin{aligned} \begin{pmatrix} \frac{1}{\sqrt{n}}\sum \limits _{t=1}^n\frac{e_t}{\sqrt{l(b_n)}}\\ \frac{1}{\sqrt{n^\alpha }}\sum \limits _{t=1}^n\frac{\rho ^{-t}e_t}{\sqrt{l(b_n)}}\\ \frac{1}{\sqrt{n^{\alpha }}}\sum \limits _{t=1}^n\frac{\rho ^{t-1-n}e_t}{\sqrt{l(b_n)}} \end{pmatrix} ~\overset{d}{\longrightarrow }~ \begin{pmatrix} V_{21}\\ V_{22}\\ V_{23} \end{pmatrix}, \end{aligned}$$and

$$\begin{aligned} \frac{1}{\rho ^n n^\alpha }\sum _{t=2}^n \left( \sum _{i=1}^{t-1}\frac{\rho ^{t-1-i}e_i}{\sqrt{l(b_n)}}\right) \frac{e_t}{\sqrt{l(b_n)}} \overset{d}{\longrightarrow }\ V_{22}V_{23}, \end{aligned}$$where \((V_{21}, V_{22}, V_{23})^\top \sim N(0, \Sigma _3)~\text {with}~\Sigma _3=diag(1, \frac{1}{2c},\frac{1}{2c})\), which implies that \(V_{2i}\)’s are independent.
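In the finite-variance case, where \(l(b_n) \rightarrow \sigma ^2\), the variances of the second components in Lemma 4 reduce to deterministic sums:

- Under P5: \(n^{-\alpha }\sum _{t=1}^n \rho ^{2(t-1)}\), which should approach \(-1/(2c)\).
- Under P6: \(n^{-\alpha }\sum _{t=1}^n \rho ^{-2t}\), which should approach \(1/(2c)\).

These limits are the corresponding diagonal entries of \(\Sigma _2\) and \(\Sigma _3\). The third components lead to the same sums after re-indexing \(t \mapsto n+1-t\). The Python sketch below evaluates the sums for one large n. The chosen n, c and \(\alpha \) are arbitrary.

```python
def scaled_sum_p5(n, c, alpha):
    """n^{-alpha} * sum_{t=1}^n rho^{2(t-1)} with rho = 1 + c / n^alpha (P5: c < 0)."""
    rho = 1.0 + c / n ** alpha
    total, weight = 0.0, 1.0          # weight = rho^{2(t-1)}, starting at t = 1
    for _ in range(n):
        total += weight
        weight *= rho * rho
    return total / n ** alpha


def scaled_sum_p6(n, c, alpha):
    """n^{-alpha} * sum_{t=1}^n rho^{-2t} with rho = 1 + c / n^alpha (P6: c > 0)."""
    rho = 1.0 + c / n ** alpha
    total, weight = 0.0, 1.0
    for _ in range(n):
        weight /= rho * rho           # weight = rho^{-2t}
        total += weight
    return total / n ** alpha


n, alpha = 10 ** 6, 0.5
print("P5, c = -1:", scaled_sum_p5(n, -1.0, alpha), "target", -1 / (2 * -1.0))
print("P6, c =  1:", scaled_sum_p6(n, 1.0, alpha), "target", 1 / (2 * 1.0))
```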

Theorem 4

Suppose conditions (C1)–(C3) hold.

- (i) Under P5, we have as \(n\rightarrow \infty \)

$$\begin{aligned} \left( \begin{array}{ccc} a_n({\hat{\mu }}-\mu ) \\ a_n n^\alpha ({\hat{\rho }}-\rho ) \end{array} \right) \overset{d}{\longrightarrow }\left( \begin{array}{ccc} \frac{\mu }{cd} \\ \frac{1}{d} \end{array} \right) Z, \end{aligned}$$where

$$\begin{aligned} a_n= & {} \frac{\max (n^{1-\alpha /2}l(b_n), n^{3\alpha /2})}{\max (n^{\alpha }\sqrt{l(b_n)}, n^{1/2}l(b_n))},\\ Z= & {} \frac{\mu }{c} V_{12} I(\alpha> 1/2) + V_{14} I(\alpha \le 1/2),\\ d= & {} \frac{\mu ^2}{-2c^3} I(\alpha > 1/2) + \frac{1}{-2c}I(\alpha \le 1/2), \end{aligned}$$if \(\lim \limits _{n\rightarrow \infty } l(b_n) = \infty \), and

$$\begin{aligned} a_n= & {} n^{\max (\alpha , 1/2) - \alpha /2},\\ Z= & {} \frac{\mu }{c} V_{12} I(\alpha \ge 1/2) + V_{14} I(\alpha \le 1/2),\\ d= & {} \frac{\mu ^2}{-2c^3} I(\alpha \ge 1/2) + \frac{1}{-2c}I(\alpha \le 1/2), \end{aligned}$$if \(\lim \limits _{m\rightarrow \infty } l(b_m) = \sigma ^2\).

- (ii) Under P6, we have as \(n\rightarrow \infty \)

$$\begin{aligned} \left( \begin{array}{ccc} \sqrt{\frac{n}{l(b_n)}}({\hat{\mu }}-\mu ) \\ \frac{n^{3\alpha /2}\rho ^n}{\sqrt{l(b_n)}}({\hat{\rho }}-\rho ) \end{array} \right) \overset{d}{\longrightarrow }\left( \begin{array}{ccc} V_{21} \\ \frac{2c^2}{\mu }V_{23} \end{array} \right) . \end{aligned}$$
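In the finite-variance case of Theorem 4(i), the normalizations are powers of n: \(a_n = n^{\max (\alpha , 1/2) - \alpha /2}\) for \({\hat{\mu }}\) and \(a_n n^\alpha \) for \({\hat{\rho }}\). The Python sketch below tabulates these exponents with exact fractions. The function name is illustrative. The formula implies three features:

- As \(\alpha \) decreases to 0, both exponents approach 1/2, the usual \(\sqrt{n}\) rate.
- As \(\alpha \) increases to 1, the exponent for \({\hat{\rho }}\) approaches 3/2.
- The exponent for \({\hat{\mu }}\) is smallest, equal to 1/4, at \(\alpha = 1/2\).

```python
from fractions import Fraction


def p5_rate_exponents(alpha):
    """Return (e_mu, e_rho) with a_n = n^{e_mu} and a_n * n^alpha = n^{e_rho}.

    Finite-variance case of Theorem 4(i): a_n = n^{max(alpha, 1/2) - alpha/2}.
    """
    alpha = Fraction(alpha)
    if not 0 < alpha < 1:
        raise ValueError("P5 requires alpha in (0, 1)")
    e_mu = max(alpha, Fraction(1, 2)) - alpha / 2
    return e_mu, e_mu + alpha


for alpha in (Fraction(1, 10), Fraction(1, 4), Fraction(1, 2), Fraction(3, 4), Fraction(9, 10)):
    e_mu, e_rho = p5_rate_exponents(alpha)
    print(f"alpha={alpha}:  rate for mu_hat n^{e_mu},  rate for rho_hat n^{e_rho}")
```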

Remark 3

Theorem 4 indicates that a possibly infinite variance affects the estimators of both \(\mu \) and \(\rho \). Similar to [21], the limit distribution of the least squares estimators under P5 is degenerate. Slightly differently, however, we obtain the exact limit distribution in this case.

Remark 4

Under some mild conditions, similar arguments should extend the current results to the case \(\rho = 1 + \frac{c}{k_n}\) for a general sequence \(\{k_n\}\) such that \(k_n \rightarrow \infty \) and \(k_n / n \rightarrow 0\) as \(n \rightarrow \infty \), as studied in [19].

3 Detailed proofs of the main results

In this section, we provide all detailed proofs of the lemmas and theorems stated in Section 2.

Proof of Lemma 1

To handle the possible infinite variance, we use the truncated random variables. Let

where \(I(\cdot )\) denotes the indicator function. The key step is to show that the effect of replacing \(e_t\) by \(e_t^{(1)}\) in the summations is negligible.

Let \(\{{{\bar{y}}}_t^{(1)}\}\) and \(\{{{\bar{y}}}_t^{(2)}\}\) be two time series satisfying

Obviously, \(\{e_t^{(1)} / \sqrt{l(b_n)}: t\ge 1\}\) are i.i.d. Under P1, it is easy to check that \(\{{{\bar{y}}}_{t-1}e_t^{(1)} / \sqrt{l(b_n)} : t\ge 1\}\) is a martingale difference sequence with respect to \({\mathcal {F}}_{t-1} =\sigma (\{e_s: s \le t-1\})\) for \(t = 1, 2, \cdots , n\), and that it satisfies the Lindeberg condition. Hence, by the Cramér-Wold device and the central limit theorem for martingale difference sequences, we have

Next, under condition (C3), it follows from [7] that

Then by \(nl(b_n)\le b_n^2\) and the Markov inequality, we have, for any \(\varepsilon > 0\),

That is,

Furthermore, note that \({{\bar{y}}}_{t-1}^{(k)}=\rho ^{t-1}\bar{y}_0^{(k)}+\sum _{i=1}^{t-1}\rho ^{t-1-i}e_i^{(k)},~k=1,2\). By the Hölder inequality, we have

Using the Markov inequality, we have

i.e.,

Similarly, we can show

This, together with (6)-(8), shows

which, combined with (5), shows (3).

Note that \({{\bar{y}}}_t=\rho {{\bar{y}}}_{t-1}+e_t\). Multiplying both sides by \({{\bar{y}}}_t\) and \(y_{t-1}\), respectively, and summing over t, we have

Since

and

we have

by noting that \(\frac{\sum _{t=1}^n e_t^2}{nl(b_n)} \overset{p}{\longrightarrow }1\) (see (3.4) in [12]). Hence,

which implies that as \(n\rightarrow \infty \)

Note that

and

Using these, the rest of the proof follows directly from the law of large numbers.

Proof of Theorem 1

For the least squares estimator, it is easy to check that

For convenience, hereafter write

Observe that

Hence, by Lemma 1 we have, as \(n\rightarrow \infty \),

Next, relying on

we obtain

Following a similar fashion, we have

Then this theorem follows immediately by using the continuous mapping theorem.

Proof of Lemma 2

For the first part, following arguments similar to those in the proof of Lemma 1, we can show that

The rest of the proof is similar to [3] and [21]. We omit the details.

For the second part, we only prove the case of \(\lim \limits _{m\rightarrow \infty }l(b_m)=\infty \). Let \({\tilde{y}}_t^{(k)}=\sum \limits _{i=1}^t \rho ^{t-i}e_i^{(k)}+\rho ^t y_0\), \(k=1,2\), \(t = 1, 2, \cdots , n\). Similar to Lemma 1, we have

for \((k,j) \in \{(1, 2), (2, 1), (2, 2)\}\), as \(n\rightarrow \infty \), and in turn, we can obtain that

The rest of the proof is similar to the first part. We omit the details.

Proof of Theorem 2

Using the same arguments as [21], it follows from Lemma 2 that

Then as \(n\rightarrow \infty \), we have

and

This completes the proof of the theorem.

Proof of Theorem 3

Similar to the proof of Theorem 1, by Lemma 3, we have, as \(n \rightarrow \infty \),

which implies

and

which leads to

and

which results in

This completes the proof of the theorem.

Proof of Lemma 4

(i) Similar to Lemma 1, under P5, by the Markov inequality and the fact \(nl(b_n)\le b_n^2\), we have for any \(\varepsilon > 0\)

This implies that

Similarly, we can show that

Next, if one of i or j equals 2, it follows from Lemma A.2 of [14] that

We actually obtain

The result then follows from the Cramér-Wold device and the central limit theorem for martingale difference sequences. The Lindeberg condition for the first term on the right-hand side of (9) can be verified by the same arguments as in [19] and [14]. We omit the details.

(ii) The proof of the case under P6 is similar to that of (i) and [19], thus is omitted.

Proof of Theorem 4

(i) Under P5, observe that \(\rho ^n = o(n^{-\alpha })\), \(y_0 = o(n^{\alpha /2})\), and

which implies

It is easy to verify that

Hence,

and

and

These, together with Lemma 4, lead directly to (i).

(ii) Similarly, we have under P6

as \(n \rightarrow \infty \), which implies that

and

Using a similar technique, we obtain, as \(n \rightarrow \infty \),

and

Then as \(n\rightarrow \infty \), we have

Therefore, the result holds.

4 Concluding remarks

In this paper, we investigated the limit distribution of the least squares estimator of \((\mu , \rho )\) for the first-order autoregressive model with \(\mu \ne 0\). The analysis was carried out under the assumption that the error variance may be infinite, a setting in which existing results fail to hold. Our results show that a possibly infinite variance affects the convergence rate of the estimator of the intercept in all cases, but affects that of the correlation coefficient only in some cases; see Sections 2.3 and 2.4 for details. Based on the current results, one could build testing procedures, e.g., t-statistics. However, their limit distributions may be quite complex, because the least squares estimator has different limit distributions in different cases and is even degenerate in the case of moderate deviations from a unit root. Hence, it is of interest to construct uniform statistical inference procedures that are robust to all of the cases above, e.g., a confidence region for \((\mu , \rho )^\top \). This topic is beyond the scope of the current paper and will be pursued in future work.

References

Andrews, D.W.K. and Guggenberger, P. (2009). Hybrid and size-corrected subsampling methods. Econometrica 77, 721–762.

Andrews, D.W.K. and Guggenberger, P. (2014). A conditional-heteroskedasticity-robust confidence interval for the autoregressive parameter. The Review of Economics and Statistics 96, 376–381.

Anderson, T.W. (1959). On asymptotic distributions of estimates of parameters of stochastic difference equations. The Annals of Mathematical Statistics 30, 676–687.

Chan, N.H., Li, D. and Peng, L. (2012). Toward a unified interval estimation of autoregressions. Econometric Theory 28, 705–717.

Cavaliere, G. and Taylor, A.M.R. (2009). Heteroskedastic time series with a unit root. Econometric Theory 25, 1228–1276.

Chan, N.H. and Wei, C.Z. (1987). Asymptotic inference for nearly nonstationary AR(1) processes. The Annals of Statistics 15, 1050–1063.

Csörgő, M., Szyszkowicz, B. and Wang, Q.Y. (2003). Donsker’s theorem for self-normalized partial sums processes. The Annals of Probability 31, 1228–1240.

Dickey, D.A. and Fuller, W.A. (1981). Likelihood ratio statistics for autoregressive time series with a unit root. Econometrica 49, 1057–1072.

Dios-Palomares, R. and Roldan, J.A. (2006). A strategy for testing the unit root in AR(1) model with intercept: a Monte Carlo experiment. Journal of Statistical Planning and Inference 136, 2685–2705.

Embrechts, P., Klüppelberg, C. and Mikosch, T. (1997). Modelling Extremal Events: for Insurance and Finance. Springer.

Fei, Y. (2018). Limit theory for mildly integrated process with intercept. Economics Letters 163, 98–101.

Giné, E., Götze, F. and Mason, D.M. (1997). When is the Student t-statistic asymptotically standard normal? The Annals of Probability 25, 1514–1531.

Hill, J.B., Li, D. and Peng, L. (2016). Uniform interval estimation for an AR(1) process with AR errors. Statistica Sinica 26, 119–136.

Huang, S.H., Pang, T.X. and Weng, C. (2014). Limit theory for moderate deviations from a unit root under innovations with a possibly infinite variance. Methodology and Computing in Applied Probability 16, 187–206.

Liu, X. and Peng, L. (2017). Asymptotic theory and uniform confidence region for an autoregressive model. Technical report.

Mikusheva, A. (2007). Uniform inference in autoregressive models. Econometrica 75, 1411–1452.

Phillips, P.C.B. (1987). Towards a unified asymptotic theory for autoregression. Biometrika 74, 535–547.

Phillips, P.C.B. (1990). Time series regression with a unit root and infinite variance errors. Econometric Theory 6, 44–62.

Phillips, P.C.B. and Magdalinos, T. (2007). Limit theory for moderate deviations from a unit root. Journal of Econometrics 136, 115–130.

So, B.S. and Shin, D.W. (1999). Cauchy estimators for autoregressive processes with applications to unit root tests and confidence intervals. Econometric Theory 15, 165–176.

Wang, X. and Yu, J. (2015). Limit theory for an explosive autoregressive process. Economics Letters 126, 176–180.

Acknowledgements

The authors thank an anonymous referee for his/her comments, which have led to many improvements in this paper. Qing Liu’s research was supported by the Key Research Base Project of Humanities and Social Sciences in Jiangxi Province Universities (Grant No. JD20021), the NSF project of Jiangxi provincial education department (Grant No. GJJ190261), and the China Postdoctoral Science Foundation funded project (Grant No. 2020M671961). Xiaohui Liu’s research is supported by the NSF of China (Grant No. 11971208), the National Social Science Foundation of China (Grant No. 21&ZD152), and the Outstanding Youth Fund Project of the Science and Technology Department of Jiangxi Province (Grant No. 20224ACB211003).


About this article

Cite this article

Liu, Q., Xia, C. & Liu, X. Limit theory for an AR(1) model with intercept and a possible infinite variance. Indian J Pure Appl Math (2023). https://doi.org/10.1007/s13226-023-00506-y


DOI: https://doi.org/10.1007/s13226-023-00506-y