Abstract

Anderson (The Annals of Mathematical Statistics 30(3):676–687, 1959) studied the limiting distribution of the least squares estimator for the explosive AR(1) process under the condition of independent and identically distributed (iid) errors, i.e., \(X_t=\rho X_{t-1}+e_t\), where \(\rho >1\) and \(e_t\) are iid errors with \(Ee=0\) and \(Ee^2<\infty \). This paper is mainly concerned with the limiting distribution of the least squares estimator \(\hat{\rho }\) of \(\rho \) when the errors are not identically distributed. In addition, we provide an approximate description of the limiting distribution of \(\sum _{j=0}^{n-1}\rho ^{-j}e_{n-j}\) when \(\rho >1\) as \(n\rightarrow \infty \).


1 Introduction and main results

Consider the following non-stationary AR(1) process defined by

\[ X_t=\rho X_{t-1}+e_t,\qquad t=1,2,\ldots , \tag{1} \]
where the coefficient \(\rho \ge 1\), the initial value \(X_0=0\) and the error process \(\{e_t, t\ge 1\}\) is iid with mean zero and finite variance. Observe that

\[ X_t=\sum _{j=1}^{t}\rho ^{t-j}e_j,\qquad t\ge 1. \]
The random walk (RW) corresponds to \(\rho =1\). For \(\rho >1\), \(\{X_t, t\ge 0\}\) is referred to as an ‘explosive’ process; see, for instance, Hwang and Basawa (2005) and Hwang (2013). Both cases, \(\rho =1\) (RW) and \(\rho >1\) (explosive), are well investigated and documented in the literature for iid errors. In this paper, we are mainly concerned with the explosive case (\(\rho >1\)), whose behavior depends critically on the initial condition \(X_0\) or \(e_0\). In the literature this case is used to model economic bubbles and is often referred to as a random chaotic system, in the sense that it is highly sensitive to the initial condition. See, e.g., Kim and Hwang (2019) or Lee (2018).

When \(X_1,X_2, \ldots ,X_n\) denote a sample of size n, the least squares estimator of \(\rho \),

\[ \hat{\rho }=\frac{\sum _{t=1}^{n}X_{t-1}X_t}{\sum _{t=1}^{n}X_{t-1}^2}, \]

is known to be n-consistent for \(\rho =1\) and \(\rho ^n\)-consistent for \(\rho >1\) (see Fuller 1996, Ch. 10). The following expression is useful for deriving the limiting distribution of \(\hat{\rho }\),

where

and

For \(\rho >1\), Anderson (1959) showed that under the iid condition on error process \(\{e_t, t\ge 1\}\)

and

where

(refer to Theorem 2.1 and Theorem 2.2 there). Now observe that by (2) and (3)

in probability, i.e., in the sense of \(\operatorname{plim}_{n\rightarrow \infty }\). Thus

in probability. By showing that \(Y_n\) and \(Z_n\) asymptotically depend on disjoint sets of errors, and are therefore asymptotically independent (refer to Theorems 2.3 and 2.4 there), he derived the limiting distribution of \(\hat{\rho }\) for \(\rho >1\). Indeed,

\[ \rho ^n(\rho ^2-1)^{-1}(\hat{\rho }-\rho )\Rightarrow \frac{Y}{Z}\quad \text{as } n\rightarrow \infty , \]

where Y and Z denote the limits in distribution of \(Y_n\) and \(Z_n\), respectively, and are independent. Refer to Theorem 2.5 there.
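The approximation of the normalized estimator by \(Y_n/Z_n\) can be checked numerically. The sketch below is a minimal illustration, not the authors' code. It assumes Gaussian errors and takes \(Y_n=\sum _{j=0}^{n-1}\rho ^{-j}e_{n-j}\) as in the abstract. It also assumes \(Z_n=\sum _{j=1}^{n}\rho ^{-(j-1)}e_j\), by analogy with \(Z_n^{(1)}\) in Theorem 1 below, since the displayed definition of \(Z_n\) is not reproduced here. The function names are hypothetical.

```python
import numpy as np

def simulate_ar1(rho, e):
    """Model (1): X_t = rho * X_{t-1} + e_t with X_0 = 0; returns X_0, ..., X_n."""
    x = np.zeros(len(e) + 1)
    for t in range(1, len(e) + 1):
        x[t] = rho * x[t - 1] + e[t - 1]  # e[t-1] holds e_t
    return x

def lse(x):
    """Least squares estimator: sum X_{t-1} X_t / sum X_{t-1}^2."""
    lag, cur = x[:-1], x[1:]
    return np.dot(lag, cur) / np.dot(lag, lag)

def normalized_stat(rho, x):
    """rho^n (rho^2 - 1)^{-1} (rho_hat - rho) for a path X_0, ..., X_n."""
    n = len(x) - 1
    return rho**n / (rho**2 - 1) * (lse(x) - rho)

def y_over_z(rho, e):
    """Y_n / Z_n with Y_n = sum_j rho^{-j} e_{n-j} and (assumed) Z_n = sum_j rho^{-(j-1)} e_j."""
    w = float(rho) ** -np.arange(len(e))  # weights rho^0, rho^-1, ..., rho^-(n-1)
    return np.dot(w, e[::-1]) / np.dot(w, e)

rng = np.random.default_rng(0)
rho, n = 1.5, 40
e = rng.standard_normal(n)
x = simulate_ar1(rho, e)
# the two values should typically agree closely when rho**(-n) is tiny
print(normalized_stat(rho, x), y_over_z(rho, e))
```

A moderately large \(\rho \) is used here so that \(\rho ^{-n}\) is small yet \(\hat{\rho }-\rho \) remains well above double-precision rounding. For \(\rho \) very close to 1 a larger n is needed before the two quantities agree.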

We are mainly concerned with the limiting distribution of \(\rho ^n(\rho ^2-1)^{-1}(\hat{\rho }-\rho )\) when the errors are not identically distributed. This is a practical issue, because economic bubbles might be caused by non-identical shocks and be severely affected by the initial shock. From this point of view, it is essential to check how sensitively \(\hat{\rho }\) responds to the initial condition under non-identical shocks. Our main results, given below, address these issues.

Theorem 1

Let \(Y_n^{(1)}=\sum _{j=0}^{[c_{n}^{(2)}]-1}\rho ^{-j} e_{n-j}\) and \(Z_n^{(1)}=\sum _{j=1}^{[c_{n}^{(1)}]}\rho ^{-(j-1)}e_j\), where \(1\le c_{n}^{(i)}=o(n)\), \(i=1,2\), are sequences tending to infinity slowly. Assuming \(\sup _t Ee_t^2<\infty \) and \(\rho >1\), the distributions of \(Y_n/Z_n\) and \(Y_{n}^{(1)}/Z_n^{(1)}\) are asymptotically equivalent in the sense that

Remark 1

Theorem 1 extends Theorem 2.5 of Anderson (1959) to the relaxed condition \(\sup _t Ee_t^2<\infty \). We also introduce \(c_n^{(1)}\) and \(c_n^{(2)}\) to give more concise descriptions of \(Y_n\) and \(Z_n\). These mainly follow from

It is worth mentioning that the sequences \(c_n^{(1)}\) and \(c_n^{(2)}\) precisely account for the independence of \(Y_n^{(1)}\) and \(Z_n^{(1)}\). As candidates for \(c_n^{(1)}\) and \(c_n^{(2)}\), one may consider a slowly varying function, defined as a function \(L:(0,\infty )\rightarrow (0,\infty )\) such that

\[ \lim _{x\rightarrow \infty }\frac{L(ax)}{L(x)}=1\quad \text{for all } a>0. \]
For example, the function \(L(x) = (\log x)^\beta \) is slowly varying for any \(\beta \in R\). In this case one can take \(c_n^{(i)}=(\log n)^{\beta _i}\), \(\beta _i>0\), \(i=1,2\). This means that all errors except a very slowly increasing number at both ends are negligible, so the error distribution may be allowed to vary over most of the sample. In other words, any type of error distribution is allowed between the indices \(c_{n}^{(1)}+1\) and \(n-c_{n}^{(2)}\). Refer to Figs. 1–18 for related simulation results.
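The truncation in Theorem 1 with the slowly varying choice \(c_n^{(1)}=c_n^{(2)}=(\log n)^{\beta }\) can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation:

- \(Z_n\) is taken to be \(\sum _{j=1}^{n}\rho ^{-(j-1)}e_j\) (an assumption).
- \([\cdot ]\) is implemented as floor, with the truncation point clipped at n.
- The errors are a hypothetical non-identical sequence: uniform in the first half, normal in the second.

Function names are hypothetical.

```python
import math
import numpy as np

def y_z(rho, e):
    """Full sums Y_n = sum_{j=0}^{n-1} rho^{-j} e_{n-j} and (assumed) Z_n = sum_{j=1}^{n} rho^{-(j-1)} e_j."""
    w = float(rho) ** -np.arange(len(e))
    return np.dot(w, e[::-1]), np.dot(w, e)

def y_z_truncated(rho, e, c1, c2):
    """Y_n^(1) uses the last [c2] errors, Z_n^(1) the first [c1] errors (Theorem 1)."""
    n = len(e)
    k1, k2 = min(math.floor(c1), n), min(math.floor(c2), n)
    y1 = np.dot(float(rho) ** -np.arange(k2), e[::-1][:k2])  # e_n, e_{n-1}, ..., e_{n-k2+1}
    z1 = np.dot(float(rho) ** -np.arange(k1), e[:k1])        # e_1, ..., e_{k1}
    return y1, z1

rng = np.random.default_rng(0)
n, rho, beta = 500, 1.5, 2.0
# hypothetical non-identical errors: U(-1, 1) in the first half, N(0, 1) in the second half
e = np.concatenate([rng.uniform(-1, 1, n // 2), rng.standard_normal(n - n // 2)])
c = math.log(n) ** beta  # c_n^(1) = c_n^(2) = (log n)^beta
y, z = y_z(rho, e)
y1, z1 = y_z_truncated(rho, e, c, c)
print(y / z, y1 / z1)
```

Only about \((\log n)^{\beta }\) errors at each end enter the truncated ratio. Whatever distribution the middle errors have, they affect \(Y_n\) and \(Z_n\) only through geometrically small weights.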

Remark 2

Since \(c_{n}^{(1)}\) and \(c_{n}^{(2)}\) go to infinity slowly, in practice one may reasonably approximate the limiting distribution of \(Y/Z\) by that of \(e_n/e_1\), because \(e_n\) and \(e_1\) have the largest influence on Y and Z, respectively. For instance, if both \(e_1\) and \(e_n\) are \(N(0,\sigma _0^2)\) and \(\rho >1\) is large, then one may reasonably approximate

\[ \frac{Y}{Z}\approx \frac{e_n}{e_1}\sim \text{Cauchy}(0,1). \]

If both \(e_1\) and \(e_n\) are uniform\((-1,1)\) and \(\rho \) is large,

\[ \frac{Y}{Z}\approx \frac{e_n}{e_1}, \]

whose density is given by

\[ f(w)=\begin{cases} 1/4, & |w|\le 1,\\ 1/(4w^2), & |w|>1. \end{cases} \]
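The two reference distributions in Remark 2 can be checked by Monte Carlo. For independent \(U,V\sim U(-1,1)\), the ratio \(U/V\) has density 1/4 on \(|w|\le 1\) and \(1/(4w^2)\) otherwise. The ratio of two independent \(N(0,\sigma _0^2)\) variables is standard Cauchy. The sketch below only illustrates these two ratio laws; it does not measure how good the approximation \(Y/Z\approx e_n/e_1\) is for a given \(\rho \). Function names are hypothetical.

```python
import numpy as np

def uniform_ratio_pdf(w):
    """Density of U/V for independent U, V ~ Uniform(-1, 1): 1/4 on |w| <= 1, 1/(4 w^2) otherwise."""
    w = np.asarray(w, dtype=float)
    return 0.25 / np.maximum(w * w, 1.0)

def uniform_ratio_cdf(w):
    """Distribution function obtained by integrating uniform_ratio_pdf."""
    w = np.asarray(w, dtype=float)
    inner = 0.5 + w / 4                        # |w| <= 1
    upper = 1 - 1 / (4 * np.maximum(w, 1.0))   # w > 1
    lower = 1 / (4 * np.maximum(-w, 1.0))      # w < -1
    return np.where(w > 1, upper, np.where(w < -1, lower, inner))

rng = np.random.default_rng(1)
m = 200_000
w_unif = rng.uniform(-1, 1, m) / rng.uniform(-1, 1, m)
w_norm = rng.standard_normal(m) / rng.standard_normal(m)  # standard Cauchy in distribution

# empirical vs. exact probability of |W| <= 2 under each model
print(np.mean(np.abs(w_unif) <= 2), uniform_ratio_cdf(2) - uniform_ratio_cdf(-2))
print(np.mean(np.abs(w_norm) <= 2), 2 / np.pi * np.arctan(2))
```

Both laws put mass 1/2 on \([-1,1]\). The uniform ratio has lighter tails than the Cauchy only beyond \(|w|=1\), so the two are easiest to tell apart in the tails.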
From Theorem 1, one may establish the limiting distribution of \(\hat{\rho }\) by imposing an additional condition.

Theorem 2

Assume that \(\sup _t Ee_t^2<\infty \) and \(\rho >1\). Let \(\{e_{t,n}\}\) be a triangular array of errors, \(t=1,\ldots ,n\), \(n=1,2,\ldots \). If

as \(n\rightarrow \infty \), then we have

\[ \rho ^n(\rho ^2-1)^{-1}(\hat{\rho }-\rho )\Rightarrow \frac{Y}{Z}, \]
where Y and Z are independent.

Remark 3

Theorem 2 corresponds to Theorem 2.5 of Anderson (1959). The major difference between the two theorems is that the assumptions of identically distributed e’s and \(Ee^2<\infty \) are replaced by (6) and \(\sup _t Ee_t^2<\infty \). Note that (6) allows any type of distribution for \(e_{t,n}\), \(t\le n-1\). Refer to Figs. 19–22 for related simulation results. One may apply Theorem 2 to test for economic bubbles under non-identical shocks. For such tests it is usually recommended to fix \(\rho >1\) close to 1, because it is unrealistic for \(\rho \) to be far greater than 1 during an economic bubble.

Remark 4

Notice that if \(Ee_t^2=\sigma _0^2\) for all t, then \(Var(Y)=Var(Z)=\sigma _0^2/(1-\rho ^{-2})\), and a central limit theorem could make the standardized Y and Z approximately \(N(0,1)\) as \(\rho \) decreases to one from above. In fact, Phillips and Magdalinos (2007) verify this by taking \(\rho _n=1+1/n^k\) for some \(0<k<1\) (see Theorem 4.3 there). This in turn suggests that, as \(\rho \) decreases to one, Y and Z become approximately normal and centered at zero, at the cost of increased variance.
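The variance formula in Remark 4 can be checked by simulation. The sketch below makes two assumptions:

- The errors are iid \(N(0,1)\), so \(\sigma _0^2=1\).
- The infinite series defining Y is truncated once \(\rho ^{-2J}\) drops below a tolerance.

It is meant only to illustrate how \(Var(Y)\) grows as \(\rho \) approaches one. Function names are hypothetical.

```python
import numpy as np

def var_Y_theory(rho, sigma2=1.0):
    """Var(Y) = sigma_0^2 / (1 - rho^{-2}) for Y = sum_{j>=0} rho^{-j} e_j with Var(e_j) = sigma_0^2."""
    return sigma2 / (1 - rho ** -2.0)

def var_Y_monte_carlo(rho, reps=5000, tol=1e-12, seed=0):
    """Sample variance of the truncated series sum_{j<J} rho^{-j} e_j with iid N(0, 1) errors."""
    rng = np.random.default_rng(seed)
    # choose J so that the neglected weights satisfy rho^{-2J} < tol
    J = int(np.ceil(np.log(1 / tol) / (2 * np.log(rho)))) + 1
    w = rho ** -np.arange(J, dtype=float)
    y = rng.standard_normal((reps, J)) @ w
    return y.var()

for rho in (2.0, 1.2, 1.05):
    print(rho, var_Y_theory(rho), var_Y_monte_carlo(rho))
```

Because \(1-\rho ^{-2}\approx 2(\rho -1)\) near one, the variance grows roughly like \(\sigma _0^2/(2(\rho -1))\) as \(\rho \downarrow 1\). This is why a normalization is needed before any normal limit can appear.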

2 Simulation study

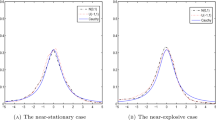

In this section, we carry out Monte Carlo simulations to check the validity of Theorems 1 and 2 at reasonable finite sample sizes. For this, we compare the kernel density estimate \(\hat{f}_n\) and the empirical distribution function \(\hat{F}_n\) of \(Y_n/Z_n\) with those of \(Y_n^{(1)}/Z_n^{(1)}\) in Theorem 1 under various non-identical error settings. In particular, we fix \(\rho = 1.02\) as recommended in Remark 3 and then generate model (1) with the following non-identical error sequences \(\{e_t\}\):

- (i) \(e_1,\ldots ,e_{[n/2]}\sim U(-1,1)\), \(e_{[n/2]+1},\ldots ,e_{n}\sim N(0,1)\);
- (ii) \(e_1,\ldots ,e_{[n/2]}\sim t(3)\), \(e_{[n/2]+1},\ldots ,e_{n}\sim N(0,1)\);
- (iii) \(e_1,\ldots ,e_{[n/10]}\sim U(-1,1)\), \(e_{[n/10]+1},\ldots ,e_{[9n/10]} \sim N(0,1)\), \(e_{[9n/10]+1},\ldots ,e_{n}\sim t(3)\).

Recall that [x] denotes the greatest integer not exceeding x. We also set

at \(n=200\) and 500. For each simulation setting, 1,000 repetitions are made. Simulation results are reported in Figs. 1–6 (error sequence (i)), Figs. 7–12 (error sequence (ii)) and Figs. 13–18 (error sequence (iii)). These simulations show that the distributions of \(Y_n/Z_n\) (blue line) and \(Y_n^{(1)}/Z_n^{(1)}\) (red line) get closer to each other as n or \(\beta \) increases, as expected.
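A reader wishing to reproduce the flavor of this experiment could start from the sketch below. It is not the authors' code, and it makes the following choices:

- It generates the error sequences (i)–(iii) as listed above, with [x] implemented as floor.
- It takes \(c_n^{(1)}=c_n^{(2)}=(\log n)^{\beta }\) as suggested in Remark 1.
- It assumes \(Z_n=\sum _{j=1}^{n}\rho ^{-(j-1)}e_j\).
- It summarizes the gap between \(Y_n/Z_n\) and \(Y_n^{(1)}/Z_n^{(1)}\) by a median absolute difference over repetitions. The paper instead uses kernel density and empirical distribution plots.

The \(\beta \) values shown are illustrative, not the ones used in the figures. The names `errors_scheme` and `ratio_gap` are hypothetical.

```python
import math
import numpy as np

def errors_scheme(scheme, n, rng):
    """Error sequences (i)-(iii); array index k holds e_{k+1}, and [x] is floor."""
    e = np.empty(n)
    if scheme in ("i", "ii"):
        h = n // 2                                   # [n/2]
        e[:h] = rng.uniform(-1, 1, h) if scheme == "i" else rng.standard_t(3, h)
        e[h:] = rng.standard_normal(n - h)
    elif scheme == "iii":
        a, b = n // 10, (9 * n) // 10                # [n/10], [9n/10]
        e[:a] = rng.uniform(-1, 1, a)
        e[a:b] = rng.standard_normal(b - a)
        e[b:] = rng.standard_t(3, n - b)
    else:
        raise ValueError(f"unknown scheme {scheme!r}")
    return e

def ratio_gap(rho, n, beta, scheme, reps=1000, seed=0):
    """Median |Y_n/Z_n - Y_n^(1)/Z_n^(1)| over reps, with c_n^(1) = c_n^(2) = (log n)^beta."""
    rng = np.random.default_rng(seed)
    k = min(math.floor(math.log(n) ** beta), n)
    w = float(rho) ** -np.arange(n)                  # rho^0, ..., rho^-(n-1)
    gaps = []
    for _ in range(reps):
        e = errors_scheme(scheme, n, rng)
        full = np.dot(w, e[::-1]) / np.dot(w, e)
        trunc = np.dot(w[:k], e[::-1][:k]) / np.dot(w[:k], e[:k])
        gaps.append(abs(full - trunc))
    return float(np.median(gaps))

for scheme in ("i", "ii", "iii"):
    print(scheme, [ratio_gap(1.02, 200, beta, scheme) for beta in (1.0, 2.0, 2.5)])
```

With \(\rho =1.02\) the weights \(\rho ^{-j}\) decay slowly, so noticeably larger \(c_n\) (that is, larger n or \(\beta \)) is needed than for, say, \(\rho =1.5\). This agrees with the pattern described above.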

Regarding Theorem 2, the following triangular arrays of errors \(\{e_{t,n}\}\) are considered:

- (iv) \(e_{t,n}\sim N(0,1-1/n)\), \(t=1,\ldots ,n\);
- (v) \(e_{1,n}\sim N(0,1-1/n)\), \(e_{2,n},\ldots , e_{n-1,n}\sim U(-1,1)\), \(e_{n,n}\sim N(0,1-1/n)\),

and then compare the kernel density estimate \(\hat{f}_n\) and the qq plot \(\hat{Q}_n\) of \(\rho ^n(\rho ^2-1)^{-1}(\hat{\rho }-\rho )\) with those of the standard Cauchy distribution. Note that, according to Theorem 2, Cauchy(0,1) is the limiting distribution for the error sequences (iv) and (v). Simulation results are reported in Figs. 19 and 20 (error sequence (iv)) and Figs. 21 and 22 (error sequence (v)). In Figs. 19–22, the distribution of \(\rho ^n(\rho ^2-1)^{-1}(\hat{\rho }-\rho )\) (blue line) and the standard Cauchy (red line) get closer to each other as the sample size increases. Our simulation results confirm that the conclusion of Theorem 2 holds regardless of the type of error distribution between the first and the last normal errors.
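The Theorem 2 experiment can be approximated with the sketch below. It is a minimal illustration, not the authors' code. It simulates model (1) with the triangular arrays (iv) or (v), computes \(\rho ^n(\rho ^2-1)^{-1}(\hat{\rho }-\rho )\), and compares a few sample quantiles with the standard Cauchy quantile function \(\tan (\pi (p-1/2))\). The paper uses kernel density estimates and qq plots instead. Function names and the chosen probabilities are hypothetical.

```python
import numpy as np

def triangular_errors(scheme, n, rng):
    """Scheme (iv): all N(0, 1 - 1/n); scheme (v): N(0, 1 - 1/n) at t = 1 and t = n, U(-1, 1) in between."""
    sd = np.sqrt(1 - 1 / n)
    if scheme == "iv":
        return rng.normal(0.0, sd, n)
    if scheme == "v":
        e = rng.uniform(-1, 1, n)
        e[0], e[-1] = rng.normal(0.0, sd, 2)
        return e
    raise ValueError(f"unknown scheme {scheme!r}")

def normalized_stats(rho, n, scheme, reps=1000, seed=0):
    """Draws of rho^n (rho^2 - 1)^{-1} (rho_hat - rho) for model (1) with X_0 = 0."""
    rng = np.random.default_rng(seed)
    out = np.empty(reps)
    for r in range(reps):
        e = triangular_errors(scheme, n, rng)
        x = np.zeros(n + 1)
        for t in range(1, n + 1):
            x[t] = rho * x[t - 1] + e[t - 1]
        rho_hat = np.dot(x[:-1], x[1:]) / np.dot(x[:-1], x[:-1])
        out[r] = rho ** n / (rho ** 2 - 1) * (rho_hat - rho)
    return out

def cauchy_quantile(p):
    """Quantile function of the standard Cauchy distribution."""
    return np.tan(np.pi * (np.asarray(p) - 0.5))

probs = np.array([0.1, 0.25, 0.5, 0.75, 0.9])
for n in (200, 500):
    s = normalized_stats(1.02, n, "v")
    print(n, np.round(np.quantile(s, probs), 2), np.round(cauchy_quantile(probs), 2))
```

Quantiles are used rather than moments because the Cauchy limit has no mean. Quantile comparisons are the numerical counterpart of the qq plots in Figs. 19–22.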

3 Proofs

Proof of Theorem 1

Below, the results of Anderson (1959), obtained under \(Ee^2=\sigma _0^2<\infty \), are extended to the case \(\sup _t Ee_t^2< \infty \). First, Anderson's proofs of (2) and (3) (see Theorems 2.1 and 2.2 there) extend easily to our case of \(\sup _t Ee_t^2<\infty \). Then, using (4), the proof of Theorem 1 will be completed once (5) is established, i.e.,

Without loss of generality, it is sufficient to consider the case \(\sup _t|e_t|\le M\). This is so because by (14) and (15) in the proof of Lemma 1,

and

as \(M\rightarrow \infty \). Now (5) follows by (12) and (13) of Lemma 1. For \(Z_n\), letting

where \(\lceil x\rceil \) denotes the least integer greater than or equal to x, we have

Owing to (12) of Lemma 1, we obtain for any \(\epsilon >0\),

Since the above holds for any positive integer r (recall \(\sup _t|e_t|\le M\) at this moment) and \(c_{n}^{(1)}\rightarrow \infty \) as \(n\rightarrow \infty \), we have

For \(Y_n\), letting

we have

For any \(\epsilon >0\), by using (13) of Lemma 1,

Since the above holds for any positive integer r and \(c_{n}^{(2)}\rightarrow \infty \) as \(n\rightarrow \infty \), we have

Recall that

in probability. \(\square \)
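A direct second-moment computation also shows why truncating at \(c_n^{(i)}\) costs nothing asymptotically. It is offered as a complementary sketch, not as part of the proof above, which works through Lemma 1 and bounded errors. It assumes independent mean-zero errors with \(S=\sup _t Ee_t^2<\infty \). It also assumes \(Z_n=\sum _{j=1}^{n}\rho ^{-(j-1)}e_j\), by analogy with \(Z_n^{(1)}\).

```latex
% Assumptions: independent errors, E e_t = 0, S := \sup_t E e_t^2 < \infty,
% Z_n = \sum_{j=1}^{n} \rho^{-(j-1)} e_j (assumed form). Cross terms vanish by independence.
\begin{aligned}
E\bigl(Y_n - Y_n^{(1)}\bigr)^2
  &= \sum_{j=[c_n^{(2)}]}^{n-1} \rho^{-2j}\, E e_{n-j}^2
   \le \frac{S\,\rho^{-2[c_n^{(2)}]}}{1-\rho^{-2}} \longrightarrow 0, \\
E\bigl(Z_n - Z_n^{(1)}\bigr)^2
  &= \sum_{j=[c_n^{(1)}]+1}^{n} \rho^{-2(j-1)}\, E e_{j}^2
   \le \frac{S\,\rho^{-2[c_n^{(1)}]}}{1-\rho^{-2}} \longrightarrow 0 .
\end{aligned}
```

Hence \(Y_n-Y_n^{(1)}\) and \(Z_n-Z_n^{(1)}\) tend to zero in \(L^2\), and therefore in probability. Passing to the ratio additionally requires \(Z_n^{(1)}\) not to concentrate near zero, a point this sketch does not address.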

Proof of Theorem 2

Choose \(c_n^{(1)}=c_n^{(2)}=(\log n)^{\beta }\) for \(\beta >0\). Then by Theorem 1, we have \(\sum _{j=0}^{[c_{n}^{(2)}]-1} \rho ^{-j} e_{n-j}\Rightarrow Y\) and \(\sum _{j=1}^{[c_{n}^{(1)}]} \rho ^{-(j-1)}e_j\Rightarrow Z\), which leads to

Since the above holds for any \(\beta >0\), the proof is completed. \(\square \)

Lemma 1

Assume that \(\sup _t Ee_t^{2r}<\infty \) for \(r>2\). Then we have

Furthermore

where \(c_{n}^{(1)}\rightarrow \infty \) as \(n\rightarrow \infty \) and

where \(c_{n}^{(2)}\rightarrow \infty \) as \(n\rightarrow \infty \).

Proof

Assume \(Ee^2=\sigma _0^2\) for the moment. Using independence and \(Ee=0\), one may obtain

and

This establishes (11) under \(Ee^2=\sigma _0^2<\infty \), and its extension to \(\sup _t Ee_t^2 <\infty \) is straightforward. To verify (12), let r be a positive integer. Observe that

The above holds by the Minkowski inequality and \(\sup _t Ee_t^{2r}<\infty \), which validates (12). Moreover, (13) follows similarly to (12). Hence, the lemma is established. \(\square \)

References

Anderson, T. W. (1959). On asymptotic distributions of estimates of parameters of stochastic difference equations. The Annals of Mathematical Statistics, 30(3), 676–687.

Fuller, W. A. (1996). Introduction to statistical time series (2nd ed.). New York: Wiley.

Hwang, S. Y. (2013). Arbitrary initial values and random norm for explosive AR(1) processes generated by stationary errors. Statistics & Probability Letters, 83(1), 127–134.

Hwang, S. Y., & Basawa, I. (2005). Explosive random-coefficient AR(1) processes and related asymptotics for least-squares estimation. Journal of Time Series Analysis, 26(6), 807–824.

Kim, T. Y., & Hwang, S. Y. (2019). Slow-explosive AR(1) processes converging to random walk. Communications in Statistics-Theory and Methods. https://doi.org/10.1080/03610926.2019.1568486.

Lee, J. H. (2018). Limit theory for explosive autoregression under conditional heteroskedasticity. Journal of Statistical Planning and Inference, 196, 30–55.

Phillips, P. C., & Magdalinos, T. (2007). Limit theory for moderate deviations from a unit root. Journal of Econometrics, 136(1), 115–130.

Acknowledgements

We would like to thank the Editor, an AE, and the two referees for their careful reading and valuable comments and helpful suggestions. This work was supported by a grant from the National Research Foundation of Korea (NRF-2019R1F1A1060152(TY Kim), NRF-2018R1A2B2004157(SY Hwang)).


Cite this article

Kim, T.Y., Hwang, S.Y. & Oh, H. Explosive AR(1) process with independent but not identically distributed errors. J. Korean Stat. Soc. 49, 702–721 (2020). https://doi.org/10.1007/s42952-019-00032-w
