Abstract

This paper presents the stability analysis of three real large rock slopes prone to failure with different mechanisms to investigate the applicability of the response surface methodology (RSM) for reliability-based rock slope problems. Initially, a detailed review of studies was performed to identify the gaps and common types of reliability-based rock slope problems. The applicability of RSMs based reliability methods was then investigated for three types of identified rock slope reliability problems via three case studies–type (1) Chenab rock slope prone to stress-controlled failures neglecting spatial variability, type (2) Deccan gold mine slope prone to stress-controlled failures considering spatial variability, and type (3) Rishikesh-Badrinath Highway slope prone to structurally controlled failures neglecting spatial variability. Analysis was performed by coupling MATLAB coded RSMs with advanced numerical tools and results were compared with those of direct Monte-Carlo Simulations (MCSs) based reliability method. Further, a detailed comparative study was carried out to evaluate the effect of the use of different response surfaces, i.e., polynomial based, Radial Basis Functions (RBFs) based, Support Vector Machine (SVM) based, kriging, Moving Least Square (MLS) and Gaussian Process Regression (GPR) on the accuracy and efficiency of RSMs based reliability analysis. Accuracy was evaluated using the Nash–Sutcliffe Efficiency (NSE) and Relative Difference in Reliability Index (RDRI), while computational efficiency was evaluated via the computational time required to evaluate the reliability with acceptable accuracy. RSMs based methods are observed to be highly accurate and efficient for the reliability analysis of large rock slopes. Further, the accuracy and efficiency are observed to be dependent upon the employed response surfaces and type of the problem. Suggestive guidelines are provided for selecting most suitable response surfaces for different problem types– a) for type 1 problem, Kriging and Least Square (LS)-SVM, b) for type 2 problem, some RBFs based and LS-SVM, and c) for type 3 problem, some RBFs based and LS-SVM are the most suitable RSMs.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Risk is defined as the possibility of suffering damage which is considered to be a function of the probability of event occurrence and the resulting consequence in the engineering domain (Baecher and Christian 2005). Slope failures are such major events resulting in significant damage to human lives and the economy. For India, an annual landslide damage of $1.35 billion is reported for the total 89,000 km of roads in northern India's landslide-prone and fragile mountainous territories, along with significant casualties (Mathur 1982). The most important step for managing and preventing these risks is to conduct a probabilistic risk assessment of the rock slopes. Reliability technique is an important tool to estimate these risks using modern probabilistic and statistical methods. Different reliability methods such as Point Estimate Methods (PEMs), First Order Reliability Method (FORM), Second-Order Reliability Method (SORM) and Monte-Carlo Simulations (MCSs) have proven their accuracy and practical utility for a wide range of rock slope reliability problems (Low and Einstein 1992; Düzgün et al. 1995; Park and West 2001; Duzgun and Bhasin 2009; Ahmadabadi and Poisel 2016). Table 1 shows the studies and classification of the reliability studies on rock slopes based on the major types of failure mechanisms and analysis methods. It can be observed that the majority of initial studies were related to the reliability analysis of structurally controlled rock slopes (type 3 problems) and analysis were performed via the coupling of analytically formulated performance functions with methods like FORM or MCSs. However, due to the limitations of analytical formulations like inability to incorporate the advanced constitutive models, spatial variability, complex in-situ stresses and boundary conditions (Jiang et al. 2015; Qian et al. 2017; Kumar et al. 2018; Ji et al. 2020; Abdulai and Sharifzadeh 2021), the focus has currently shifted towards the coupling of advanced numerical tools like finite element, finite difference, etc., with reliability methods (Huang et al. 2017; Pandit et al. 2018; Tiwari and Latha 2019; Zhao et al. 2020; Zhang et al. 2021). However, due to the unavailability of explicit performance functions in many reliability problems, traditional reliability methods like FORM/SORM cannot be applied as they require the derivative of the performance function. Further, the simulation-based methods like MCSs are computationally inefficient for large rock slopes as they require repeated evaluation of the numerical models for the random sets of input properties.

For these cases, Response Surface Methods (RSMs) can be employed which develop surrogate performance functions based on the limited numerical simulations. This provides a computationally efficient and accurate en route for the risk assessment and remedial measures design of rock slopes utilizing reliability methods (Pandit et al. 2018, 2019; Tiwari and Latha 2019). Studies on the consistent developments to enhance the accuracy and efficiency of the RSMs, and their practical applicability for different engineering problems are available in the literature (Hill and Hunter 1966; Mead and Pike 1975; Myers et al. 1989; Myers 1999; Bucher and Most 2008; Li et al. 2016; Zhu et al. 2019b). Table 2 summarizes the studies related to the application of RSMs for the reliability-based stability analysis of rock slopes. It can be observed that the studies on the development and application of RSMs for the reliability-based stability analyses of rock slopes are limited. Most of these studies employed the polynomial based RSM due to its mathematical simplicity despite many disadvantages related to its accuracy and efficiency (Shamekhi and Tannant et al. 2015; Pandit et al. 2016; Dadashzadeh et al. 2017; Basahel and Mitri 2019; Roy et al. 2019). From the extensive literature review, major gaps are identified as: (i) lack of any guideline for the selection of the most appropriate RSM among the available RSMs and their required sampling points for the reliability-based stability analysis of different types of rock slope problems, (ii) lack of studies demonstrating the applicability and advantages of advanced RSMs for rock slope problems as most studies employed polynomial RSMs due to their mathematical simplicity, and (iii) no studies on the applicability of RSMs for the reliability-based stability analysis of rock slopes considering the spatial variation of properties (type 2 problem).

To address these issues, this study demonstrates and compares the applicability and advantages of traditional and advanced RSMs for the reliability-based stability analysis of different types of rock slope problems. These problem types are identified based on an extensive literature review: type (1) stress-controlled failures neglecting spatial variability, type (2) stress-controlled failures considering spatial variability, and type (3) structurally controlled failures neglecting spatial variability. Performances of sixteen traditional and advanced RSMs are evaluated for the reliability-based stability analysis of three real rock slopes corresponding to the above-mentioned problem types to identify the dependence of the accuracy and efficiency of RSMs on the problem types. Real and large natural rock slopes with complex geometries and geologies were used for the analyses to get a more realistic idea of the applicability of the RSMs. Based on the extensive numerical simulations (\(\sim 5\times {10}^{3}\)) and data analyses, guidelines are suggested for the selection of the most appropriate RSM and the number of the required sampling points for the stability analysis of different types of rock slope problems. This paper is organized into five sections. “Response surface methods (RSMs)” provides the theoretical details of the considered RSMs. “Evaluation of RSMs performance” provides the methodology adopted to compare the performances of the considered RSMs. “Application of RSMs to major slope reliability problems” describes the major rock slope reliability problems identified through literature review and analyses of the case studies to demonstrate the applicability of RSMs along with the suggestive guidelines. “Conclusions” provides the important conclusions and limitations of the study.

Response surface methods (RSMs)

RSMs basically consist of developing an explicit surrogate functional relationship between response parameters (e.g., Factor of Safety (FOS) for the rock slopes) with input random variables (e.g., rock properties) based on the limited numerical experiments. The basic mathematical formulation of the RSMs is given below (Bucher and Most 2008).

Let the required response parameter for the rock slopes, i.e., \(G({\varvec{Z}})\) (\(FOS({\varvec{Z}})\) for this study), depend upon a vector \({\varvec{Z}}\) of \(d\) input random variables (rock properties), i.e. \({\varvec{Z}} ={({z}_{1}, {z}_{2}, {z}_{3},\dots \dots {z}_{d})}^{T}\). In many rock slope problems, it is impossible to derive an explicit relation between the response parameter and input rock properties. RSMs provide an alternative relationship between response (\(FOS({\varvec{Z}})\)) and input variables (\({\varvec{Z}}\)) through a flexible function \(\widehat{G}({\varvec{Z}})\) and can be approximately represented as following.

where, \({\varvec{\theta}}={\theta }_{1}, {\theta }_{2}, {\theta }_{3},\dots \dots ,{\theta }_{m}\) is the vector of the parameters of the approximating function \(\widehat{G}({\varvec{Z}})\) and is evaluated through numerical experiments carried out for limited input realizations. This section will provide the theoretical details of the considered RSMs and methodology to determine parameters for these RSMs. In the following sections, \(\widehat{G}\left({\varvec{\theta}};{\varvec{Z}}\right)\) is represented as \(\widehat{G}\left({\varvec{Z}}\right)\) for simplicity. The Latin Hypercube Sampling (LHS) based space-filling design is adopted for generating random input vector realizations (i.e., sampling points) from the distributions of input parameters (Montgomery 2001).

Polynomial model with cross terms (Q-I)

This is the most commonly used RSM in rock engineering due to its computational simplicity and its final form which is a closed-form algebraic expression (Krishnamurthy 2003; Li et al. 2016). The efficiency of this RSM depends on the order and cross-terms of the polynomial function, which are used to represent the whole input parameter domain. Figure 1a shows an example of the polynomial RSM. A general quadratic (second-order) polynomial model can be defined as following.

where, \({z}_{i}\) and \({z}_{j}\) are the design variables and \({a}_{0}\), \({a}_{i}\) and \({a}_{ij}\) are the unknown coefficients. The unknown coefficients (\(m\)= \(\left(d+1\right)\left(d+2\right)/2\)) can be determined uniquely with \(m\) numerical experiments corresponding to \(m\) sampling points. For a larger number of sampling points \(n\)(\(>m\)), the system of equations becomes overdetermined. In that case, the method of least squares that minimizes the sum of the squares of the residual error (i.e., the difference between the actual/true and the approximated response at a sampling point) can be used in the coefficient estimation process.

Polynomial model without cross terms (Q-II)

This is a type of the previous RSM in which a quadratic polynomial without cross (interaction) terms is used. The expression for this RSM is defined as following.

where, \({a}_{0}\), \({a}_{i}\) and \({b}_{i}\) are the \(m=\left(2d+1\right)\) unknown coefficients which can be determined as explained for the previous RSM (Q-I).

Classical radial basis function (RBF)

The RBF-based RSMs are developed to efficiently approximate the multi-variable performance functions which are highly non-linear in their entire input domain. The classical RBF methods approximate the numerical model output \(G({\varvec{Z}})\) by a weighted linear combination of radially symmetric non-linear functions which depend on the Euclidean norm between observation point \({\varvec{Z}}\) and \({i}^{th}\) sampling point input vector \({{\varvec{Z}}}_{i}\), i.e., \({\| {\varvec{Z}}-{{\varvec{Z}}}_{i}\| }_{2}\). Figure 1b illustrates the basic concept of the RBF RSM. The functional form for the RBF RSM can be written as given below (Krishnamurthy 2003).

where, \(\phi\) is the radial basis function, \(n\) is the number of sampling points obtained using Latin Hypercube Sampling (LHS), and \({\lambda }_{i}\) are the interpolation/weightage coefficients to be determined. The unknown coefficients \({\varvec{\lambda}}\) can be determined by solving the system of linear equations as given below.

where, \({\varvec{Y}}={[G\left({{\varvec{Z}}}_{1}\right) G\left({{\varvec{Z}}}_{2}\right) ...\boldsymbol{ }\boldsymbol{ }G\left({{\varvec{Z}}}_{n}\right)]}^{{\varvec{T}}}\) is the known vector of numerical model outputs estimated by solving numerical models at \(n\) sampling points and matrix \({\varvec{A}}\) can be obtained as given below.

Popular RBFs include Linear (L–RBF), Cubic (C–RBF), Gaussian (G–RBF) and Multiquadric (MQ-RBF) basis functions, i.e., \(\phi\) as shown in Appendix 1.

The approximation accuracy of these RBFs models depends on a parameter, i.e., \(c\), known as the shape or shift parameter whose optimal estimation can be made with the leave-one-out cross-validation (\(LOOCV\)) technique (Rippa 1999). The \(LOOCV\) uses \(n-1\) sampling points to const`ruct the response surface and the remaining sample point as cross-validation. This procedure of leaving out one sampling point is repeated for all \(n\) points and squares of error are added cumulatively with varying shape parameter (\(c\)) values. Thus, the value of \(c\) for which \(LOOCV\) is minimum is adopted for RBF response surface construction.

where, \(\widehat{G}({{\varvec{Z}}}_{i})\) is approximated output of the left-out point from the response surface constructed from \(n-1\) sampling points and \(G\left({{\varvec{Z}}}_{i}\right)\) is the actual output obtained from the numerical model at the left-out point.

Compactly supported RBF

Compactly supported RBFs are developed to increase the computational efficiency by converting the globally supported system of classical RBFs into a local one. This is achieved by defining the basis functions in such a way that they only give weightage to sample points that are within a certain distance (i.e., the radius of compact support) from the observation point. This makes the resultant matrix \({\varvec{A}}\) (Eq. 6) sparse and banded. Thus, compactly supported RBF approximations are more feasible for solving large-scale problems.

Apart from the basis function, definitions of all the other parameters in Eq. 4 are valid for compactly supported RBFs. In this study, the following two compactly supported RBFs are considered (Krishnamurthy 2003).

Compact-I (C-I-RBF):

Compact-II (C-II-RBF):

where, \(t = {\raise0.7ex\hbox{$r$} \!\mathord{\left/ {\vphantom {r {r_{0} }}}\right.\kern-\nulldelimiterspace} \!\lower0.7ex\hbox{${r_{0} }$}}\); \(r_{0}\) is the compact support radius of the domain which is a free parameter chosen by minimizing the \(LOOCV\) error.

Augmented RBF

The compactly supported RBFs require a large number of sampling points to reproduce the simple polynomials, i.e., constant, linear and quadratic (Krishnamurthy 2003). Augmented RBFs are developed to approximate the simple polynomials efficiently by augmenting polynomial terms to the basis functions of compactly supported RBFs as given below (Krishnamurthy 2003).

where, \({P}_{j}({\varvec{Z}})\) are the monomial terms of polynomial \(P({\varvec{Z}})\) and \({c}_{j}\) are the \(m\) constant coefficients introduced due to augmentation of the polynomial. For quadratic polynomial without cross term, value of \(m\) is \(2d+1\) (i.e.,\({P}_{j}({\varvec{Z}})={[1 {z}_{1} {z}_{2} ... {z}_{d} {z}_{1}^{2}{ z}_{2}^{2} ... {z}_{d}^{2}]}_{1\times m}\)). Additional \(m\) coefficients are estimated by employing orthogonality conditions as given below.

By combining Eq. 10 and Eq. 11, unknown coefficients \({\varvec{\lambda}}\) and \({\varvec{c}}\) can be estimated by solving the following system of equations.

where, \({{\varvec{C}}}_{ij}={P}_{j}\left({{\varvec{Z}}}_{i}\right) \mathrm{for} i=\mathrm{1,2},...n; j=\mathrm{1,2},...m\) and\({\varvec{c}}={[{c}_{1} {c}_{2} ... {c}_{m}]}^{T}\). Matrices\({\varvec{\lambda}}\),\({\varvec{A}}\), and \({\varvec{Y}}\) can be formed similarly as mentioned in “Classical Radial Basis Function (RBF)”. In this study, the two compactly supported RBFs defined in “Compactly supported RBF” (Eq. 8 and Eq. 9) are considered.

Moving least square (MLS)

The MLS is a local RSM with high approximation accuracy compared to the conventional least square method for the polynomial RSMs (Krishnamurthy 2003). Figure 1c shows the basic concept of the MLS RSM approximation. The MLS RSM approximates the polynomial surface from a set of sampling points within the influence domain of/around the observation point (\({\varvec{Z}}\)) through the least square measures. The sampling points are weighted according to their distance from the observation point. In MLS-RSM, an explicit polynomial approximation of the implicit performance function in terms of the random variables is made as given below (Krishnamurthy 2003).

where, \({\varvec{p}}({\varvec{Z}})={[1 {z}_{1} {z}_{2} ... {z}_{d} {z}_{1}^{2} {z}_{2}^{2} ... {z}_{d}^{2}]}_{1\times m}\) is a quadratic polynomial basis of function (\(m=2d+1\)). \({\varvec{a}}({\varvec{Z}})\) is a set of unknown coefficients which is expressed as a function of the observation point \({\varvec{Z}}\) to consider the variation of the coefficients for every new observation point.\({\varvec{a}}({\varvec{Z}})\) can be determined as given below.

where, \({\varvec{Y}}={[G\left({{\varvec{Z}}}_{1}\right) G\left({{\varvec{Z}}}_{2}\right)...\boldsymbol{ }G\left({{\varvec{Z}}}_{n}\right)]}^{{\varvec{T}}}\) is the vector of known performance function values obtained from the numerical modeling at \(n\) sampling points and matrix \({\varvec{A}}\) and \({\varvec{B}}\) are defined as given below.

with

where, \({w}_{i}({\varvec{Z}})\) is the weighting or smoothing function representing the weightage/contribution of different sampling points to the approximated \(\widehat{G}\left({\varvec{Z}}\right)\) for observation point \({\varvec{Z}}\). Among the available weighting functions, the spline weighting function (\({C}^{1}\) continuous) with compact support is considered in this study which can be expressed as following.

where, \(r = {\raise0.7ex\hbox{${\left\| {{\varvec{Z}}{-}{\varvec{Z}}_{i} } \right\|_{2} }$} \!\mathord{\left/ {\vphantom {{\left\| {{\varvec{Z}}{-}{\varvec{Z}}_{i} } \right\|_{2} } {l_{i} }}}\right.\kern-\nulldelimiterspace} \!\lower0.7ex\hbox{${l_{i} }$}}\); \({l}_{i}\) = size of the domain of influence which is chosen as twice the distance between the \({(1+2d)}^{th}\) sample point and the observation point \({\varvec{Z}}\); \(d\) is the number of random variables and \(n\) is the number of sampling points.

Kriging

Kriging is a statistical RSM that assumes the deterministic response as a stochastic process. Figure 1d shows the concept of the kriging approximation. This method approximates the response at an observation point by giving more weightage to nearby sampling points utilizing spatial correlation among the sample points and minimizing the variance of the residual errors. Kriging model postulates the approximation of performance function in the form as given below (Krishnamurthy 2003).

where, \(\beta\) is the unknown coefficient; \({\varvec{Y}}\) is the matrix of \(n\) sample points response values obtained by numerical analysis; \({\varvec{f}}\) is a \(n\times 1\) matrix with all values equal to unity; \({\varvec{r}}({\varvec{Z}})\) is the matrix of correlations between the observation point \({\varvec{Z}}\) and all \(n\) sample points (\({{\varvec{Z}}}_{i}\)) in parameter space, and \({\varvec{R}}\) is the correlation matrix of all \(n\) sample points. The terms in the correlation matrices are computed using a Gaussian correlation function as following.

The value of the parameter \(\theta\) is estimated from \(n\) sample response values via maximum likelihood estimation (\(MLE\)) and this \(MLE\) problem can be solved by reducing it to a one-dimensional optimization problem as following.

where, \({\sigma }^{2}\) variance and unknown coefficient \(\beta\) are estimated using generalized least squares as following.

Support vector machine (T-SVM)

The SVM formulates the performance function approximation problem as an inequality constraint based convex optimization problem (quadratic programming problem). Figure 1e shows the concept of the SVM for linear approximation. The SVM based RSM sets a tolerance approximation margin (\(\pm \varepsilon\)) and uses only values outside the margin to approximate the performance function. For mapping of non-linearly separable functions, SVM uses kernel functions to transfer input space to high dimensional feature space and then linear separation is carried out. The optimization problem is given below (Zhao 2008).

where, \(C\) is the penalty parameter (\(C>0\)); \(\varepsilon\) is the approximation accuracy; \({\xi }_{i}\) and \({\xi }_{i}^{*}\) are the slack variables; \({\varvec{Y}}\) is the matrix of response values at \(n\) sample points obtained by numerical analysis; \(w\) is the weightage vector and \(b\) is a bias coefficient.

Solving the above optimization problem, the resulting SVM regression model for the non-linear function approximation takes the form as given below.

where, \(b\) can be estimated as following.

where, \({\alpha }_{i}\) and \({\alpha }_{i}^{*}\) are Lagrange multipliers and \(K(.)\) represents the kernel function. In this study, the Gaussian radial kernel (or covariance) function with kernel width \(\sigma\) is considered which is defined as following.

Least square-support vector machine (LS-SVM)

The LS-SVM is an improvement over the SVM method to increase its computational efficiency which involves the equality instead of inequality constraints. The LS-SVM works with the least-squares errors as shown in Fig. 1f instead of nonnegative errors to the loss function as for the SVM. In this way, the solution follows a linear Karush–Kuhn–Tucker system instead of a quadratic programming problem which thus reduces the computation time significantly.

For LS-SVM function, the optimization problem for approximations is formulated as following (Samui et al. 2013).

where, \({e}_{i}\) is the approximation error term for \({i}^{th}\) sampling point, \(\gamma \ge 0\) is the regularization constant. The resulting LS-SVM response surface for function approximation can be written as following

where, \({\alpha }_{i}\) are Lagrange multipliers and bias coefficient \(b\) can be estimated as following.

where, \(\boldsymbol{\alpha }={\left[{\alpha }_{1} {\alpha }_{2}\dots {\alpha }_{n}\right]}^{T}\); \({\varvec{f}}={\left[1 1\dots 1\right]}_{n\times 1}^{T}\) and \({{\varvec{\Omega}}}_{ij}=K({{\varvec{Z}}}_{i}, {{\varvec{Z}}}_{j})\) \(i,j=1, 2, \dots , n\). The Gaussian radial kernel, as in Eq. 28, is considered for the LS-SVM method.

Gaussian progress regression (GPR)

The GPR is a Bayesian framework-based non-parametric RSM. It provides the probability of responses with prediction errors compared to other regression methods. GPR uses the concept of kernel functions-based regression and hence, can approximate the non-linear performance functions with high accuracy and efficiency. In GPR RSM, response \(\widehat{G}({\varvec{Z}})\) for the observations \({\varvec{Z}}\) can be modeled using the posterior distribution based on the Baye's rule as given below (Kang et al. 2015).

where, \({\varvec{Z}}\) represents sampling points and \({\varvec{Y}}\) is the corresponding numerical model output vector, \({\varvec{K}}\) is the covariance matrix and its elements are \({K}_{ij}=K({{\varvec{Z}}}_{{\varvec{i}}},{{\varvec{Z}}}_{{\varvec{j}}})\), \({\varvec{I}}\) is the unit matrix and \({\sigma }_{n}\) is the standard deviation of Gaussian noise for the training data. The squared exponential covariance function with automatic relevance determination (Rasmussen and Williams 2006) is used in this study as following.

where, \({\sigma }_{f}\) is the standard deviation of the field, \(\boldsymbol{\Lambda }=diag(\lambda )\) and a set of hyperparameter \(\theta =(\mathrm{ln}{\lambda }_{1}, \mathrm{ln}{\lambda }_{2}, \dots ,\mathrm{ln}{\lambda }_{n},\mathrm{ln}{\sigma }_{f})\) can be obtained optimally by the gradient‐based marginal likelihood optimization with the log‐likelihood function of the hyperparameter as following.

Evaluation of RSMs performance

This section provides the details of the methodology adopted for the estimation of the reliability index (\(\beta\)) and the evaluation of the performances of RSMs.

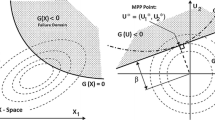

Estimation of the reliability index

Estimation of \(\beta\) using RSMs involved two major steps. Firstly, RSMs were used to obtain the performance function (\(\widehat{G}\)) and then the MCSs were performed on this function to estimate the \(\beta\). MCSs method was adopted to estimate the reliability index (\(\beta\)) due to its accuracy, robustness and concept simplicity. MCSs involves the generation of a large number of random sets '\(N\)' of input rock properties, i.e., \({z}_{1}, {z}_{2}, {z}_{3},\dots ,{z}_{d}\), by random sampling based on their probability distributions (PDs). These random sets are treated as an outcome of the laboratory or field investigations. The values of the performance function (constructed using RSMs for this case), i.e., \(G\left({\theta }_{1}, {\theta }_{2}, {\theta }_{3},\dots ,{\theta }_{m};{z}_{1}, {z}_{2}, {z}_{3},\dots ,{z}_{d}\right)\) are estimated for generated random sets as a deterministic analysis. Figure 2 shows the basic concept of MCSs. The reliability index using RSMs-MCSs, i.e., \({\beta }_{RSM}\) can be estimated by evaluating the statistics of resulting performance function values as given below.

where, \(E\left[\widehat{G}\right]\) and \(\sigma \left[\widehat{G}\right]\) are the mean and standard deviation (SD) of the performance function.

Additionally, the \(\beta\) was also estimated directly using the MCSs. This was done by evaluating \(G\left({\theta }_{1}, {\theta }_{2}, {\theta }_{3},\dots \dots ,{\theta }_{m};{z}_{1}, {z}_{2}, {z}_{3},\dots \dots ,{z}_{d}\right)\) for '\(N\)' random sets of input rock properties, i.e., \({z}_{1}, {z}_{2}, {z}_{3},\dots \dots {z}_{d}\) (generated via MCSs) using the original numerical program adopted to assess the stability of different rock slopes. Finally, \(\beta\) can be estimated similarly using Eq. 38 as explained above. This direct methodology and estimated \(\beta\) were termed as the direct MCSs (DMCSs) and \({\beta }_{DMC}\) respectively. Different accuracy indicators based on the \({\beta }_{DMC}\) and \({\beta }_{RSM}\) were then used to measure the accuracy of RSMs as explained in the next section.

Evaluation of the accuracy of RSMs

The accuracy of the RSMs was evaluated using two approaches for the current study as described below.

a) Initially, the accuracy of the RSMs was estimated using an accuracy indicator known as Nash–Sutcliffe Efficiency (NSE) (Moriasi et al. 2007). NSE can be estimated by generating \(k\) off-sample points (20 in this study) of input rock properties. For these off-sample points, FOSs were estimated using both the original numerical program and RSMs represented as \({FOS}_{i}^{original}\) and \({FOS}_{i}^{RSM}\) respectively. NSE can be estimated as given below.

where, \({FOS}^{mean}\) represents the mean value of \({FOS}_{i}^{original}\).

Performance of RSMs for NSE > 0.75 is considered very good. A complete description of the performance rating of RSMs based on NSE is shown in Appendix 2.

b) In addition to the NSE, the accuracy of RSMs was also evaluated with respect to the accuracy in the estimation of \(\beta\). For this analysis, an indicator namely Relative Difference in Reliability Index (RDRI) was used for this purpose which can be estimated as given below.

Higher values of RDRI mean higher inaccuracies in the prediction of \(\beta\) by the RSMs.

Evaluation of computational efficiency of RSMs

The computational efficiency of the RSMs was defined in terms of the total time required to compute the performance function values for minimum number of sampling points (\({N}_{sp}^{min}\)) required to construct the RSMs with acceptable accuracy. This time was represented as \({T}_{sp}\) and can be estimated by multiplying \({N}_{sp}^{min}\) with the time required to evaluate one sample point (\({T}_{sp}^{1}\)) using the original numerical program as following.

Acceptable accuracy for the RSMs is the condition when the NSE values corresponding to very good performance rating (0.75–1.0) are achieved. NSE was used in the present study to determine the computational efficiency due to its clear guidelines on the expected performance of RSMs as compared to RDRI (Appendix 2). Further, the number of sample points used for the construction of RSMs was represented as \({N}_{sp}\) and the maximum number of sample points used for RSMs construction for an application example was represented as \({N}_{sp}^{max}\).

Application of RSMs to major slope reliability problems

This section investigates the applicability of RSMs for three types of rock slope reliability problems– type (1) stress-controlled slope failure neglecting spatial variability, type (2) stress-controlled slope failure considering spatial variability, and type (3) structurally controlled failure neglecting spatial variability. Three case studies corresponding to these problem types were analyzed using different RSMs to rank them based on their accuracy and computational efficiency.

Type 1 problem: stress-controlled slope failure neglecting spatial variability

Stress-controlled failures along the rock slopes occur generally along highly weathered or closely fractured rocks with approximately circular failure surfaces. These types of failures occur along the rock slopes when–i) individual blocks in the rock mass are very small compared to the slope dimensions or, in other words, discontinuity spacing is very small, and ii) no single discontinuity is dominating the slope behavior. Reliability analyses for this type of problem are performed by considering the homogenous distribution of input properties, i.e., neglecting their spatial variability, as shown in Table 1. This assumption is more appropriate for the slopes with higher correlation lengths between rock properties, i.e., low spatial variability. Reliability analyses for this problem types were generally performed by coupling analytical limit equilibrium methods with traditional reliability methods in the past. However, there were several subjective assumptions made in these analyses especially for the failure surfaces and material models (Wyllie and Mah 2004; Kumar et al. 2018; Abdulai and Sharifzadeh 2021). This often leads to inaccuracies in the estimated results. Hence, the analyses for these slopes are performed with advanced numerical tools (FDM, FEM etc.) in the recent years as it helps to overcome the limitations related to the prior assumptions of the failure surface and provides flexibility to consider different material models. However, it is not possible to obtain explicit performance functions for these analyses which are required to apply reliability methods efficiently (Pandit et al. 2019; Kumar and Tiwari 2022a). RSMs can provide a better alternative under these conditions which will be investigated through a systematic analysis of a case study for this article.

The case study considered for this problem type is a large rock slope (dimensions of 293 m × 196 m) supporting piers of the world's highest railway bridge crossing the Chenab River in Jammu and Kashmir, India. Rock present at the site was dolomite intersected with closely spaced three major joint/discontinuity sets along with some random joint sets (Tiwari and Latha 2019). Physical and mechanical properties of intact rock were estimated by International Society for Rock Mechanics (ISRM) suggested methods (ISRM 1981) and are summarized in Table 3. Table 4 shows the properties of the major joint sets along the rock slope. Rock mass along the slope was assumed to follow elastic-perfectly plastic behavior with the Hoek–Brown criterion. Numerical modeling for this slope was carried out in Phase2 (Rocscience 2014). FOS was estimated using the shear strength reduction (SSR) method (Dawson et al. 1999). Rock mass properties (i.e., \({m}_{b}\), \({s}_{b}\), \({E}_{m}\)) required as input for the numerical model were calculated using their well-accepted relations with rock mass classification rating GSI (Hoek et al. 2013) and intact rock properties, i.e., \({m}_{i}\), \({E}_{i}\) (Appendix 3) (Hoek et al. 2002; Hoek and Diederichs 2006). Figure 3 shows the prepared numerical model of the slope in Phase2 along with the corresponding shear strain contour, FOS and velocity vector pattern obtained after the analysis for a typical model.

Performances of different RSMs were evaluated for this slope as explained in “Polynomial model with cross terms (Q-I)”. Necessary parameters required for the comparative analysis between the considered RSMs were estimated initially. Table 3 shows the properties considered in the analysis. DMCSs was performed for 1000 random sets of input properties, i.e., \({m}_{i}\), \({E}_{i}\), UCS and GSI (total 4 random properties; Table 3) generated according to their PD function (“Estimation of the reliability index”). Corresponding values of rock mass properties, i.e., \({m}_{b}\), \({s}_{b}\), \({E}_{m}\) were estimated using GSI based relations (Appendix 3). FOS values were then estimated for these random sets from the numerical model of the slope in Phase2. The number of DMCSs random sets was decided based on the convergence of FOS statistics, i.e., mean and standard deviation (SD). Figure 4 shows the variation of mean and SD of FOS with the number of random sets and a good convergence can be observed for 1000 random sets. The estimated FOS for these random sets ranged between 1.02 and 5.17 and Fig. 5a also shows the histogram of the FOS. The reliability index from DMCSs (\({\beta }_{DMC}\)) was estimated to be 1.99 through Eq. 38. Further, \({\beta }_{RSM}\) was estimated using 10,000 MCSs on the constructed RSMs. RSMs were constructed with different numbers of sample points (\({N}_{sp}\)) generated using LHS sampling as explained in “Response Surface Methods (RSMs).” Figure 5b-c shows the results for this application example. It can be seen that with the increase in the number of sample points (\({N}_{sp}\)), NSEs of the considered RSMs were continuously increasing. Values were converging towards their maximum magnitude of 1, indicating the continuous improvement in their accuracy with the increasing \({N}_{sp}\). This trend was similar for the estimated β using RSMs as the RDRI was also converging towards 0% for all the considered RSMs with the increasing \({N}_{sp}\). It can be observed that the acceptable accuracies for all the considered RSMs were achieved for \({N}_{sp}\)<\({N}_{sp}^{max}\)(100 points). However, there were noticeable differences in the minimum required sampling points (\({N}_{sp}^{min}\)) for the RSMs. Table 5 shows the \({N}_{sp}^{min}\) and the associated \({T}_{sp}\) for the RSMs. It can be observed that the Kriging and LS-SVM were the most and C-II RBF was the least computationally efficient RSM with \({N}_{sp}^{min}\) values of 9 \(({T}_{sp}\)=1.35 × 104 s) and 75 \(({T}_{sp}\)=1.12 × 105 s), respectively. It can be concluded that the accuracies of the considered RSMs were highly comparable. However, differences exist in the computational efficiencies of the RSMs for type 1 problem.

Results of Response Surface Methods (RSMs) based reliability analysis for type 1 problem a Histogram of Factor of Safety (FOS) evaluated using Direct Monte-Carlo Simulations (DMCSs), b Nash-Stucliffe Efficiency (NSE) vs number of sample points (\({N}_{sp}\)) showing the improving accuracy of RSMs with increasing sampling points, and c Relative Difference in Reliability Index (RDRI) vs number of sample points (\({N}_{sp}\)) concluding the similar result as (b)

Type 2 problem: stress-controlled slope failure considering spatial variability

Failure mode and in-situ rock conditions required for these types of slope failure analysis remain the same as that of type 1 problem. However, if the correlation length for properties is low, i.e., spatial variability is high then the reliability analyses performed by assuming a homogenous distribution of input properties can lead to high inaccuracies. In these cases, reliability analysis performed by considering spatial variability of rock properties is more accurate and realistic (Huang et al. 2017; Pandit et al. 2018). The analysis is generally performed using direct MCSs on randomly generated models. Limited studies are available on the analysis of this type of problem (Huang et al. 2017; Pandit et al. 2018) possibly due to complex mathematical formulation and the requirement of high computational efforts (Table 1). RSMs can provide a better alternative to the traditional reliability analysis under these conditions which will be investigated through a systematic analysis of a case study.

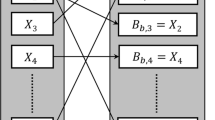

The case study considered for the type 2 problem is a gold mine slope located in the Haveri district of Karnataka state in India (Pandit et al. 2018). The major rock type present at the site was greywacke. All the properties were estimated by detailed ISRM suggested methods (ISRM 1981) and relevant physical and mechanical properties are shown in Table 6. Table 7 shows the details of the major joint sets along the rock slope. Rock mass along the slope was assumed to follow elastic-perfectly plastic behavior with the Hoek–Brown criterion. Uniaxial compressive strength (UCS) was modeled as a Random Field (RF), GSI as a Random Variable (RV) and all other properties as deterministic variables based on their sensitivity towards FOS. More details on the estimation of properties and sensitivity analysis can be seen in Pandit et al. 2018. FOS was estimated using the SSR method (Dawson et al. 1999).

Spatial variability of rock properties was considered in the analysis using the random field modeling. For random field modeling, a single exponential 2D autocorrelation model was adopted which can be expressed as following.

where, \({\rho }_{w}(\Delta x,\Delta z)\) is correlation value at a distance of \(\Delta x\) in the horizontal direction and \(\Delta z\) in the vertical direction from the point \(\left(x,z\right)\); \({\delta }_{x}\) and \({\delta }_{z}\) represent the horizontal and vertical scale of fluctuations (SOFs) estimated from the rock properties along different boreholes using the maximum likelihood method in the logarithmic domain. For the present study, the values of both SOFs were considered as 64 m. For the discretization, the Expansion Optimal Linear Estimation (EOLE) method (Li and Kiureghian 1993) was adopted, which discretizes the Gaussian random field \(Z\) as shown below.

where, \({\mu }_{\mathrm{ln}Z}\) and \({\sigma }_{\mathrm{ln}Z}\) are the mean and standard deviation of the random field \(Z\) in logarithmic space. \({\lambda }_{j}\), \({\phi }_{j}\) are eigenvalues and eigenvectors of \(\Sigma\), and \(\Omega\) is the correlation vector between the random field value at a point \(\left(x,z\right)\) and all other points in the grid. \({\xi }_{j}\) is a vector of \(N\) independent standard normal random variables where \(N\) is the number of terms retained in the EOLE. \(N\) was obtained by arranging the eigenvalues in decreasing order and selecting the first \(N\) eigenvalues so that the mean error variance was smaller than the target value. Expression of the error variance is given as follows.

where, \(Var\) is variance, \({\left({\phi }_{j}\right)}^{T}\) is the transpose of the vector \({\phi }_{j}\) and \(Z\left(x,z\right)-\widetilde{Z}\left(x,z\right)\) is the difference between the true and approximate value of the random field at point \(\left(x,z\right)\). The target mean error variance was taken as 10% in this study giving the value of \(N\) = 43. This means that the underlying continuous random field was approximated by 43 discrete random variables. Numerical modeling was carried out using a random field approach implemented in FLAC-2D (Itasca 2011) coupled with MATLAB. Figure 6 shows a typical numerical model along with the corresponding shear strain contour, FOS and velocity vector pattern obtained after the analysis for a typical model.

For comparison between the performances of RSMs, required parameters were estimated initially. DMCSs was performed by generating 1000 random finite difference models (RFDMs) with spatial variability in rock properties. For each simulation in DMCSs, the random input set \({\xi }_{j}\); \(j=1,\dots , N\) were obtained and utilized in Eq. 43 to generate spatially variable properties. For the next simulation in DMCSs, a different set of \({\xi }_{j}\); \(j=1,\dots , N\) was employed. Figure 6 shows a typical random finite difference model (RFDM) with spatial variation in UCS. FOS values for random finite difference models (RFDMs) were estimated using the shear strength reduction (SSR) method in FLAC. The estimated FOS for these random finite difference models were ranging between 2.17 and 7.10 and Fig. 7a shows the histogram of the estimated FOS. \({\beta }_{DMC}\) for the slope was estimated to be 3.07 using Eq. 38. Further, \({\beta }_{RSM}\) was estimated using 10,000 MCSs on the constructed RSMs. RSMs were constructed with different numbers of sample points generated using Latin Hypercube Sampling (LHS) as explained in “Response Surface Methods (RSMs).”. Figure 7b-c shows the results for this application example. Similar to the previous case, the accuracy of RSMs was continuously improving as indicated by the increasing Nash–Sutcliffe Efficiency (NSE) and decreasing Relative Difference in Reliability Index (RDRI) with the increasing number of sample points (\({N}_{sp}\)). However, some of the RSMs could not achieve the acceptable accuracy within the considered maximum number of sample points (\({N}_{sp}^{max}=150)\) for this application example. Further, a considerable difference exists in the estimated minimum number of sample points (\({N}_{sp}^{min}\)) and the associated total numerical models run time required (\({T}_{sp}\)) for the considered RSMs in this study. Table 8 shows the \({N}_{sp}^{min}\) and the associated \({T}_{sp}\) for the RSMs. It can be observed that almost all of the RBF based RSMs and LS-SVM are the most while Q-I, Q-II, MLS, Kriging, T-SVM and GPR are the least computationally efficient RSMs with \({N}_{sp}^{min}\) values of 75 \(({T}_{sp}\)=3.38 × 105 s) and undetermined (> \({N}_{sp}^{max}\)) respectively. It can be observed that the overall requirement of the \({N}_{sp}^{min}\) for this example was significantly higher than the previous example due to the involvement of the higher number of random variables (i.e., 44 RVs) (Zhou and Huang 2018). This should be emphasized that the number of the sampling points required for constructing RSM was problem type dependent. This could be due to the positive correlation between the number of sampling points and the number of input random variables. It can be seen that the number of random variables for type 2 problem was 90% higher as compared to type 1 problem (number of variables were 4). Hence, the required sampling points to achieve the acceptable accuracy for type 2 problem was 90% higher for most RSMs. Further, many RSMs could not achieve the acceptable accuracy for this problem type even for a higher number of sampling points possibly due to the higher number of input variables. This issue was not present in the type 1 problem where all RSMs achieved acceptable accuracy between 50 and 75 sampling points. For type 2 problem, RSMs like polynomial (Q-II) and C-RBF have significantly lower accuracy for sampling points ranging between 75 and 150 with an approximate percentage difference of 50% in NSE and RDRI as compared to other RSMs. Hence, it is suggested to choose the RSM for this type of problem with utmost attention to get more accurate results along with computational efficiency.

Results of Response Surface Methods (RSMs) based reliability analysis for type 2 problem a Histogram of Factor of Safety (FOS) evaluated using Direct Monte-Carlo Simulations (DMCSs), b Nash-Stucliffe Efficiency (NSE) vs number of sample points (\({N}_{sp}\)) showing the improving accuracy of most of the RSMs with increasing sampling points and failure of Q-I, Q-II, MLS and GPR to achieve acceptable accuracy within the maximum number of sample points (\({N}_{sp}^{max}\)), and c Relative Difference in Reliability Index (RDRI) vs number of sample points (\({N}_{sp}\)) concluding the similar result as (b)

Type 3 problem: structurally controlled failure neglecting spatial variability

Structurally controlled failures along the rock slopes are generally referred to the block failures along discontinuities. These types of failures occur along those slopes for which the discontinuity orientation and shear strength favor the block movement along them (Park and West 2001; Park et al. 2005; Tatone and Grasselli 2010; Ahmadabadi and Poisel 2016). The potential of structurally controlled failures and identification of their failure modes (planar, wedge, toppling) can be evaluated using kinematic analysis (Park and West 2001; Wyllie and Mah 2004). Reliability analyses for the type 3 problem are generally performed by considering the homogenous distribution of discontinuity properties along with other rock properties in the past (Table 1). Reliability analysis is generally performed by coupling analytical formulation of performance functions using limit equilibrium methods with traditional reliability methods. However, some studies have been recently performed to study these problems with advanced numerical tools (Tiwari and Latha 2016; Dadashzadeh et al. 2017; Sengani et al. 2021) like DEM, FEM etc., to study the displacements of slopes and the effect of post-peak strength behavior of discontinuities. Limit equilibrium methods cannot be applied under these conditions and hence, it becomes difficult to obtain an explicit performance function under these conditions (Dadashzadeh et al. 2017; Pandit et al. 2018, 2019). RSMs can provide an efficient alternative under these conditions. This section investigates the applicability of RSMs for these problems through a systematic analysis of a case study.

The case study considered for the type 3 problem is that of a potential massive rockslide along the Rishikesh-Badrinath highway located in the Uttarakhand state of India. The expected failure mechanism for this potential rockslide was planar failure as per kinematic stereographic analysis (Pain 2012). The major rock type present at the site was Quartzite. All the properties of the intact rock were estimated by ISRM suggested methods (ISRM 1981) as shown in Table 9. Table 10 also shows the properties of the major discontinuities.

Along the rock slope. Geometrical parameters of the slope and discontinuity were considered deterministic. As the slope showed the potential of failure along the discontinuity, strength properties of the discontinuities were considered to be random variables. Numerical modeling of the slope was carried out in Phase2 (Rocscience 2014) and FOS was evaluated using the SSR method (Dawson et al. 1999). Figure 8 shows the numerical model of the slope prepared in Phase2 along with the corresponding displacement contour, FOS and velocity vector pattern obtained after the analysis for a typical model.

For comparison between the performances of RSMs, required parameters were estimated initially. 1000 random sets of the random discontinuity properties (total 3 random properties; Table 9) were generated to perform the DMCSs. FOS values were estimated for these randomly generated random sets of rock properties using Phase2. Figure 9a shows the histogram of the estimated FOS ranging between 1.20 and 2.10. The \({\beta }_{DMC}\) was estimated to be 3.54 using Eq. 38. Further, \({\beta }_{RSM}\) was estimated using 10,000 MCSs on the constructed RSMs. RSMs were constructed with a different number of sampling points generated using LHS sampling as explained in “Response surface methods (RSMS)”. Figure 9b-c shows the results for the considered application example. Similar to the previous cases, the accuracy of the RSMs was improving as indicated by the increasing NSEs and decreasing RDRI with the increase in the \({N}_{sp}\). All of the RSMs achieved acceptable accuracy at \({N}_{sp}\) much lesser than the \({N}_{sp}^{max}\) (100 points). However, a difference was observed in \({N}_{sp}^{min}\) for the considered RSMs. Table 11 shows the \({N}_{sp}^{min}\) and \({T}_{sp}\) for the considered RSMs. It can be observed that the AC-I-RBF, AC-II-RBF, Kriging and LS-SVM are the most, while L-RBF, G-RBF, C-II-RBF and MLS are the least efficient RSMs with \({N}_{sp}^{min}\) values of 7 \(({T}_{sp}\)= 6.3 × 103 s) and 25 \(({T}_{sp}\)=2.25 × 104 s) respectively. It can be observed that accuracies of the RSMs were highly comparable similar to type 1 problem. However, differences exist in the computational efficiencies of the RSMs for this problem type.

Results of Response Surface Methods (RSMs) based reliability analysis for type 2 problem a Histogram of Factor of Safety (FOS) evaluated using Direct Monte-Carlo Simulations (DMCSs), b Nash-Stucliffe Efficiency (NSE) vs number of sample points (\({N}_{sp}\)) showing the improving accuracy of RSMs with increasing sampling points, and (c) Relative Difference in Reliability Index (RDRI) vs number of sample points (\({N}_{sp}\)) concluding the similar result as (b)

Suggested RSMs for reliability analysis of modelled rock slopes

It was discussed in the literature review that most of the previous studies employed polynomial RSM due to its mathematical simplicity. Although advanced RSMs provide a better alternative to traditional RSMs for most engineering problems, their applicability is questionable for the rock slope problems. This issue has been addressed in the previous sections as most of the advanced RSMs achieved acceptable accuracy with fewer sampling points for all problem types. This could be possibly due to their inherent feature of weighing the data samples near the observation points more heavily rather than giving them equal weights. However, one major issue for the users will be the selection of the most appropriate RSM among these advanced RSMs and the corresponding number of sampling points. To the author's best knowledge, no such guidelines for the rock slope problems exist in the current literature. This study has made an effort to provide the guidelines for the users for the initial selection of the most appropriate RSM and an appropriate number of sampling points based on the type of rock slope reliability problem under consideration.

Table 12 provides a comparison between the performances of the RSMs based on their accuracy and computational efficiency for different types of rock slope reliability problems. Symbol ‘’ means that the RSMs have achieved the acceptable accuracy for \({{N}_{sp}<N}_{sp}^{max}\), while ‘’ represents the RSM which could not. For computational efficiency, RSMs were ranked according to their estimated ‘\({T}_{sp}\)’ with ‘1’ referring to the most efficient RSM. It can be observed that for—a) type 1 problems, Kriging and LS-SVM, b) type 2 problems, L-RBF, G-RBF, MQ-RBF, C-II-RBF, AC-I-RBF, AC-II-RBF and LS-SVM, and c) type 3 problems, AC-I-RBF, AC-II-RBF, Kriging and LS-SVM were the accurate and most efficient RSMs. Another important recommendation is regarding the number of sampling points to obtain RSMs with acceptable accuracy. It is recommended to use \({N}_{sp}^{min} \approx 2\times d\) (number of involved random variables) as the initial trial and then increase the \({N}_{sp}\) if the acceptable accuracy is not achieved.

Conclusions

This study investigates the performance of different RSMs for the risk assessment of rock slope stability problems on the criteria of accuracy and computational efficiency. Three types of slope reliability problems were identified from a detailed literature review – type (1) stress-controlled failures neglecting spatial variability, type (2) stress-controlled failures considering spatial variability, and type (3) structurally controlled failures neglecting spatial variability. Analyses were performed for large sized rock slopes corresponding to these problem types along different engineering sites in India. In general, RSMs based reliability analysis was observed to be highly accurate and computationally efficient for all problem types. However, the suitability of different RSMs was observed to be problem dependent. Traditional global RSM such as polynomial was observed to perform inferior to advanced RSMs which weigh the data samples near the observation points more heavily rather than giving equal weights. Some specific recommendations were provided based on the extensive simulations and data analyses for the selection of the appropriate RSM based on the problem types. Kriging and LS-SVM for type 1; L-RBF, G-RBF, MQ-RBF, C-II-RBF, AC-I-RBF, AC-II-RBF and LS-SVM for type 2; and AC-I-RBF, AC-II-RBF, Kriging and LS-SVM for type 3 problems were suggested to be the most suitable RSMs. The number of sampling points required for the construction of RSM was also observed to be dependent on the type of problem. A possible reason could be the direct dependence of the number of sampling points on the number of the input random variables which in turn depends upon the problem type. For this study, the number of random variables for type 2 problem was 44 which was 90% higher as compared to type 1 (number of variables were 4) and 3 (number of variables were 4) problems. Hence, the number of sampling points required to achieve the acceptable accuracy for type 2 problem was 90% higher for most RSMs. Further, the minimum number of sampling points required to construct the response surface of acceptable accuracy was recommended to be twice the number of input random variables and more points can be included quantitatively by assessing the accuracy of the constructed RSM.

Although the study provides important conclusions on the applicability of RSMs for the reliability analysis of rock slopes, some issues need to be investigated in future studies. For type 2 problem, suitability of RSMs for rock slopes with different auto-correlation lengths, especially smaller lengths, should be further studied. This is because of the increasing number of random variables for the problems with smaller correlation lengths. Further, the applicability of RSMs for the reliability analysis of structurally controlled rock slopes considering spatial variability of properties should be investigated.

References

Abbaszadeh M, Shahriar K, Sharifzadeh M, Heydari M (2011) Uncertainty and reliability analysis applied to slope stability: a case study from Sungun copper mine. Geotech Geol Eng 29:581–596

Abdulai M, Sharifzadeh M (2021) Probability methods for stability design of open pit rock slopes: an overview. Geosciences 11:319

Afrapoli AM, Osanloo M 2012 Geomechanical Property Uncertainty Effect on Stability of Open-Pit Mine Slope (Comparison between two Important Copper Mines in Middle East and Europe). ISRM Reg. Symp. - 7th Asian Rock Mech. Symp. ISRM-ARMS7–2012–147

Ahmadabadi M, Poisel R (2015) Assessment of the application of point estimate methods in the probabilistic stability analysis of slopes. Comput Geotech 69:540–550. https://doi.org/10.1016/j.compgeo.2015.06.016

Ahmadabadi M, Poisel R (2016) Probabilistic analysis of rock slopes involving correlated non-normal variables using point estimate methods. Rock Mech Rock Eng 49:909–925. https://doi.org/10.1007/s00603-015-0790-2

Aladejare AE, Akeju VO (2020) Design and sensitivity analysis of rock slope using Monte Carlo simulation. Geotech Geol Eng 38:573–585. https://doi.org/10.1007/s10706-019-01048-z

Baecher GB, Christian JT (2005) Reliability and statistics in geotechnical engineering. John Wiley Sons

Basahel H, Mitri H (2019) Probabilistic assessment of rock slopes stability using the response surface approach–a case study. Int J Min Sci Technol 29:357–370

Bucher C, Most T (2008) A comparison of approximate response functions in structural reliability analysis. Probabilistic Eng Mech 23:154–163. https://doi.org/10.1016/j.probengmech.2007.12.022

Chowdhury RN (1986) Geomechanics risk model for multiple failures along rock discontinuities. International Journal of Rock Mechanics and Mining Sciences & Geomechanics Abstracts. Elsevier, Amsterdam, pp 337–346

Dadashzadeh N, Duzgun HSB, Yesiloglu-Gultekin N (2017) Reliability-based stability analysis of rock slopes using numerical analysis and response surface method. Rock Mech Rock Eng 50:2119–2133

Dawson EM, Roth WH, Drescher A (1999) Slope stability analysis by strength reduction. Geotechnique 49:835–840

Düzgün HŞB, Paşamehmetoğlu AG, Yücemen MS (1995) Plane failure analysis of rock slopes: a reliability approach. Int J Surf Mining, Reclam Environ 9:1–6. https://doi.org/10.1080/09208119508964707

Duzgun HSB, Bhasin RK (2009) Probabilistic stability evaluation of oppstadhornet rock slope, Norway. Rock Mech Rock Eng 42:729–749. https://doi.org/10.1007/s00603-008-0011-3

Duzgun HSB, Yucemen MS, Karpuz C (2003) A Methodology for reliability-based design of rock slopes. Rock Mech Rock Eng 36:95–120. https://doi.org/10.1007/s00603-002-0034-0

He TT, Shang YQ, Lu Q, Ren SS (2013) Slope reliability analysis using support vector machine. Rock Soil Mech 34(11):3269–3276

Hill WJ, Hunter WG (1966) A review of response surface methodology: a literature survey. Technometrics 8:571–590

Hoek E, Diederichs MS (2006) Empirical estimation of rock mass modulus. Int J Rock Mech Min Sci 43:203–215. https://doi.org/10.1016/j.ijrmms.2005.06.005

Hoek E, Carranza C, Corkum B 2002 Hoek-brown failure criterion 2002 edition. Narms-Tac 267–273

Hoek E, Carter TG, Diederichs MS (2013) Quantification of the geological strength index chart. In: 47th US rock mechanics/geomechanics symposium. OnePetro

Huang XC, Zhou XP, Ma W et al (2017) Two-dimensional stability assessment of rock slopes based on random field. Int J Geomech 17:4016155

ISRM (1981) Rock characterization, testing and monitoring. ISRM Suggest. Methods. 211

Itasca (2011) FLAC-Fast Lagrangian analysis and continua version. Itasca Consulting Group Inc, Minneapolis

Ji J, Zhang W, Zhang F et al (2020) Reliability analysis on permanent displacement of earth slopes using the simplified bishop method. Comput Geotech 117:103286

Jiang Q, Qi Z, Wei W, Zhou C (2015) Stability assessment of a high rock slope by strength reduction finite element method. Bull Eng Geol Environ 74:1153–1162

Jimenez-Rodriguez R, Sitar N (2007) Rock wedge stability analysis using system reliability methods. Rock Mech Rock Eng 40:419–427. https://doi.org/10.1007/s00603-005-0088-x

Jimenez-Rodriguez R, Sitar N, Chacón J (2006) System reliability approach to rock slope stability. Int J Rock Mech Min Sci 43:847–859

Johari A, Lari AM (2016) System reliability analysis of rock wedge stability considering correlated failure modes using sequential compounding method. Int J Rock Mech Min Sci 82:61–70

Kang F, Han S, Salgado R, Li J (2015) System probabilistic stability analysis of soil slopes using gaussian process regression with Latin hypercube sampling. Comput Geotech 63:13–25. https://doi.org/10.1016/j.compgeo.2014.08.010

Krishnamurthy T (2003) Response surface approximation with augmented and compactly supported radial basis functions. AIAA/ASME/ASCE/AHS/ASC Struct Struct Dyn Mater Conf. https://doi.org/10.2514/6.2003-1748

Kumar A, Tiwari G (2022a) Jackknife based generalized resampling reliability approach for rock slopes and tunnels stability analyses with limited data: theory and applications. J Rock Mech Geotech Eng 14:714–730. https://doi.org/10.1016/j.jrmge.2021.11.003

Kumar A, Tiwari G (2022b) Application of re-sampling stochastic framework for rock slopes support design with limited investigation data: slope case studies along an Indian highway. Environ Earth Sci 81:1–25

Kumar N, Verma AK, Sardana S et al (2018) Comparative analysis of limit equilibrium and numerical methods for prediction of a landslide. Bull Eng Geol Environ 77:595–608

Laifu S, Rui P, Bin X, Yang Z (2022) Dynamic reliability analysis of the stability of the 3D Slope of a Rockfill Dam Based on the copula function and generalized probability density evolution method. Int J Geomech 22:4022038. https://doi.org/10.1061/(ASCE)GM.1943-5622.0002341

Lee YF, Chi YY, Juang CH, Lee DH (2012) Reliability analysis of rock wedge stability: knowledge-based clustered partitioning approach. J Geotech Geoenvironmental Eng 138:700–708

Li H (2021) Stability rating of mine rock slope based on artificial intelligence. Adv Civ Eng. https://doi.org/10.1155/2021/6638333

Li CC, Der Kiureghian A (1993) Optimal discretization of random fields. J Eng Mech 119:1136–1154

Li D, Zhou C, Lu W, Jiang Q (2009) A system reliability approach for evaluating stability of rock wedges with correlated failure modes. Comput Geotech 36:1298–1307

Li D, Chen Y, Lu W, Zhou C (2011) Stochastic response surface method for reliability analysis of rock slopes involving correlated non-normal variables. Comput Geotech 38:58–68

Li DQ, Jiang SH, Cao ZJ et al (2015) Efficient 3-D reliability analysis of the 530 m high abutment slope at Jinping I Hydropower Station during construction. Eng Geol 195:269–281

Li DQ, Zheng D, Cao ZJ et al (2016) Response surface methods for slope reliability analysis: review and comparison. Eng Geol 203:3–14. https://doi.org/10.1016/j.enggeo.2015.09.003

Li S, Zhao H, Ru Z (2017) Relevance vector machine-based response surface for slope reliability analysis. Int J Numer Anal Methods Geomech 41:1332–1346

Liu X, Li DQ, Cao ZJ, Wang Y (2020) Adaptive Monte Carlo simulation method for system reliability analysis of slope stability based on limit equilibrium methods. Eng Geol 264:105384. https://doi.org/10.1016/j.enggeo.2019.105384

Low BK (1997) Reliability analysis of rock wedges. J Geotech Geoenvironmental Eng 123:498–505

Low BK (2007) Reliability analysis of rock slopes involving correlated nonnormals. Int J Rock Mech Min Sci 44:922–935. https://doi.org/10.1016/j.ijrmms.2007.02.008

Low BK (2008) Efficient probabilistic algorithm illustrated for a rock slope. Rock Mech Rock Eng 41:715–734

Low BK, Einstein HH 1992 Simplified relaibility analysis for wedge mechanisms in rock slopes. In: International symposium on landslides 499–507

Mathur HN 1982 Influence of human activities on landslides, mudflows and slope movements in India and efforts at reducing their negative impact. In Landslides and mudflows–reports of the Alma-Ata International Seminar 00:20–44

Mead R, Pike DJ (1975) A biometrics invited paper. A review of response surface methodology from a biometric viewpoint. Biometrics 31:803–851

Montgomery DC (2001) Design and analysis of experiments. John Wiley & Sons. Inc, New York 1997:200–201

Moriasi DN, Arnold JG, Van Liew MW et al (2007) Model evaluation guidelines for systematic quantification of accuracy in watershed simulations. Trans ASABE 50:885–900

Myers RH (1999) Response surface methodology—current status and future directions. J Qual Technol 31:30–44

Myers RH, Khuri AI, Carter WH (1989) Response surface methodology: 1966–l988. Technometrics 31:137–157

Obregon C, Mitri H (2019) Probabilistic approach for open pit bench slope stability analysis–A mine case study. Int J Min Sci Technol 29:629–640

Ortiz R, Silva G R, Michalak N (2015) Application of the response surface methodology to 3dec analysis of open pit slopes. In: ISRM Regional Symposium-8th South American Congress on Rock Mechanics. OnePetro

Pain A 2012 Rock slope stability assessment using rock mass characterization and discontinuity modelling. Masters Dissertation, AcSIR - Central Building Research Institute, Roorkee, India

Pan Q, Jiang YJ, Dias D (2017) Probabilistic stability analysis of a three-dimensional rock slope characterized by the Hoek-Brown failure criterion. J Comput Civ Eng 31:4017046

Pan Q, Qu X, Liu L, Dias D (2020) A sequential sparse polynomial chaos expansion using Bayesian regression for geotechnical reliability estimations. Int J Numer Anal Methods Geomech 44:874–889

Pandit B, Babu GLS (2018) Reliability-based robust design for reinforcement of jointed rock slope. Georisk 12:152–168. https://doi.org/10.1080/17499518.2017.1407800

Pandit B, Doraiswamy RG, Babu GLS (2016) Robust geotechnical design of rock bolts for stability of rock slopes using response surface. Recent Advances in Rock Engineering (RARE 2016). Atlantis Press, Amsterdem, pp 217–222

Pandit B, Tiwari G, Latha GM, Sivakumar Babu GL (2018) Stability analysis of a large gold mine open-pit slope using advanced probabilistic method. Rock Mech Rock Eng 51:2153–2174. https://doi.org/10.1007/s00603-018-1465-6

Pandit B, Tiwari G, Latha GM, Babu GLS (2019) Probabilistic characterization of rock mass from limited laboratory tests and field data: associated reliability analysis and its interpretation. Rock Mech Rock Eng 52:2985–3001. https://doi.org/10.1007/s00603-019-01780-1

Pandit B, Babu GLS (2016) Reliability analysis of rock slopes using stochastic response surface. INDOROCK Proc.

Park H, West TR (2001) Development of a probabilistic approach for rock wedge failure. Eng Geol 59:233–251. https://doi.org/10.1016/S0013-7952(00)00076-4

Park HJ, West TR, Woo I (2005) Probabilistic analysis of rock slope stability and random properties of discontinuity parameters, Interstate Highway 40, Western North Carolina, USA. Eng Geol 79:230–250. https://doi.org/10.1016/j.enggeo.2005.02.001

Park HJ, Um J-G, Woo I, Kim JW (2012) The evaluation of the probability of rock wedge failure using the point estimate method. Environ Earth Sci 65:353–361

Peng X, Li DQ, Cao ZJ et al (2017) Reliability-based robust geotechnical design using Monte Carlo simulation. Bull Eng Geol Environ 76:1217–1227

Qian ZG, Li A-J, Lyamin AV, Wang CC (2017) Parametric studies of disturbed rock slope stability based on finite element limit analysis methods. Comput Geotech 81:155–166

Rasmussen CE, Williams CK (2006) Gaussian processes for machine learning, vol. 1

Rippa S (1999) An algorithm for selecting a good value for the parameter c in radial basis function interpolation. Adv Comput Math 11:193–210. https://doi.org/10.1023/a:1018975909870

Rocscience (2014) Phase version 8.020, finite element analysis for excavations and slopes. Rocscience Inc, Toronto

Roy N, Sarkar R, Bharti SD (2019) Relative influence of strength and geometric parameters on the behavior of jointed rock slopes. Arab J Geosci 12:1–19

Samui P, Lansivaara T, Bhatt MR (2013) Least square support vector machine applied to slope reliability analysis. Geotech Geol Eng 31:1329–1334

Scavia C, Barla G, Bernaudo V (1990) Probabilistic stability analysis of block toppling failure in rock slopes. International journal of rock mechanics and mining sciences & geomechanics abstracts. Elsevier, Amsterdam, pp 465–478

Sengani F, Muavhi N, Mulenga F (2021) Advanced analysis of road-slope stability in a brittle and faulted rockmass terrain by several techniques. Transp Geotech 28:100545

Shamekhi E, Tannant DD (2015) Probabilistic assessment of rock slope stability using response surfaces determined from finite element models of geometric realizations. Comput Geotech 69:70–81

Tamimi S, Amadei B, Frangopol DM (1989) Monte Carlo simulation of rock slope reliability. Comput Struct 33:1495–1505

Tatone BSA, Grasselli G (2010) ROCKTOPPLE: a spreadsheet-based program for probabilistic block-toppling analysis. Comput Geosci 36:98–114. https://doi.org/10.1016/j.cageo.2009.04.014

Tiwari G, Latha GM (2016) Design of rock slope reinforcement: an Himalayan case study. Rock Mech Rock Eng 49:2075–2097. https://doi.org/10.1007/s00603-016-0913-4

Tiwari G, Latha GM (2019) Reliability analysis of jointed rock slope considering uncertainty in peak and residual strength parameters. Bull Eng Geol Environ 78:913–930. https://doi.org/10.1007/s10064-017-1141-1

Tiwari G, Pandit B, Latha GM, Sivakumar Babu GL (2017) Probabilistic analysis of tunnels considering uncertainty in peak and post-peak strength parameters. Tunn Undergr Sp Technol 70:375–387. https://doi.org/10.1016/j.tust.2017.09.013

Wang L, Hwang JH, Juang CH, Atamturktur S (2013) Reliability-based design of rock slopes—a new perspective on design robustness. Eng Geol 154:56–63

Wang Y, Han M, Li B, Wan Y (2022) Stability evaluation of earth-rock dam reinforcement with new permeable polymer based on reliability method. Constr Build Mater 320:126294. https://doi.org/10.1016/j.conbuildmat.2021.126294

Wyllie DC, Mah C (2004) Rock slope engineering. CRC Press

Zhang W, Meng F, Chen F, Liu H (2021) Effects of spatial variability of weak layer and seismic randomness on rock slope stability and reliability analysis. Soil Dyn Earthq Eng 146:106735. https://doi.org/10.1016/j.soildyn.2021.106735

Zhao L, Yu C, Li L et al (2020) Rock slope reliability analysis using Barton-Bandis failure criterion with modified pseudo-dynamic approach. Soil Dyn Earthq Eng 139:106310

Zhaobo H (2008) Slope reliability analysis using a support vector machine. Comput Geotech 35:459–467. https://doi.org/10.1016/j.compgeo.2007.08.002

Zhou X-P, Huang X-C (2018) Reliability analysis of slopes using UD-based response surface methods combined with LASSO. Eng Geol 233:111–123

Zhu B, Pei H, Yang Q (2019a) An intelligent response surface method for analyzing slope reliability based on Gaussian process regression. Int J Numer Anal Methods Geomech 43:2431–2448

Zhu X, He J, Zhao S et al (2019b) A comprehensive comparison and analysis of computational predictors for RNA N6-methyladenosine sites of Saccharomyces cerevisiae. Brief Funct Genomics 18:367–376

Funding

This work was financially supported with the initiation grant funded by Indian Institute of Technology (IIT) Kanpur.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This article is part of a Topical Collection in Environmental Earth Sciences on “Geoenvironmental resources and risks: management strategies and techniques for eco-sustainable development and planning of fragile territories”, guest edited by Gioacchino Francesco Andriani, Domenico Calcaterra, Juan Carlos Mora Chaparro, Ratan Das, Lidia Loiotine, Michael Tarullo.

Appendices

Appendix 1: basis functions for RBF based RSMs

Method | Basis function |

|---|---|

Linear | \(\phi \left( r \right) = cr\) |

Cubic | \(\phi \left( r \right) = \left( {c + r} \right)^{3}\) |

Gaussian | \(\phi \left( r \right) = e^{{{-}cr^{2} }}\) |

Multiquadratic | \(\phi \left( r \right) = \left( {r^{2} + c^{2} } \right)^{\frac{1}{2}}\) |

Appendix 2: performance rating guidelines corresponding to NSE

Performance rating | NSE |

|---|---|

Very good | 0.75 – 1.00 |

Good | 0.65 – 0.75 |

Satisfactory | 0.50 – 0.65 |

Unsatisfactory | < 0.50 |

Appendix 3: estimation of rock mass properties using GSI under the undisturbed condition

a) Estimation of Hoek–Brown strength parameters, i.e., \({m}_{b}\) and \({s}_{b}\) of rock mass

b) Estimation of deformation modulus (\({E}_{m}\)) of rock mass

where \({m}_{i}\) is the Hoek–Brown strength parameter for intact rock and GSI is the geological strength index.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Kumar, A., Pandit, B. & Tiwari, G. Reliability-based stability analysis of large rock slopes with different failure mechanisms using response surface methodology. Environ Earth Sci 81, 511 (2022). https://doi.org/10.1007/s12665-022-10624-1

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s12665-022-10624-1