Abstract

Diverse plant diseases have a major impact on the yield of food crops, and if plant diseases are not recognized in time, they may spread widely and directly cause losses to crop yield. In this work, we studied the deep learning techniques and created a convolutional ensemble network to improve the capability of the model for identifying minute plant lesion features. Using the method of ensemble learning, we aggregated three lightweight CNNs including SE-MobileNet, Mobile-DANet, and MobileNet V2 to form a new network called Es-MbNet to recognize plant disease types. The transfer learning and two-stage training strategy were adopted in model training, and the first phase implemented the initialization of network weights. The second phase re-trained the network using the target dataset by injecting the weights trained in the first phase, thereby gaining the optimum parameters of the model. The proposed method attained a 99.37% average accuracy on the local dataset. To verify the robustness of the model, it was also tested on the open-source PlantVillage dataset and reached an average accuracy of 99.61%. Experimental findings prove the validity and deliver superior performance of the proposed method compared to other state-of-the-arts. Our data and codes are provided at https://github.com/xtu502/Ensemble-learning-for-crop-disease-detection.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The 2020 World Population Data Sheet declares that the population of the world is expected to increase to over 9 billion by 2050 (https://interactives.prb.org/2020-wpds/), which requires increasing crop yields and minimizing plant damage by timely recognition of plant diseases. As a result, recent studies on plant disease recognition that involve food security have attracted a lot of attention. The diverse plant diseases have great threats to food production, and serious disease types can even cause no harvest entirely. In particular, the major food crops such as rice and maize, which occupy important positions from the production perspective, are consumed as staple foods by over half of the world population, but they are quite susceptible to various diseases too. In the past several years, the degree of damage caused by plant diseases is rapidly growing at an alarming rate due to primary changes in methods adopted for crop cultivation and the influence of harmful organisms. Early recognition and diagnosis acts a pivotal part in suppressing the outbreak of plant diseases (Atoum et al. 2016). It can effectively reduce the losses caused by various plant diseases and has the dual effects of increasing crop yields while avoiding the excessive usage of biocides. However, to date, the most used approach for plant disease recognition still relies on the visual observations of plant disease experts or experienced growers in many areas, explicitly in developing countries. This approach requires experts to monitor continuously, which is prohibitively labor-intensive, expensive, and unfeasible for many farms (Chug et al. 2022; Ding and Taylor 2016). Additionally, it is not always possible to meet plant disease experts at any time. In most cases, farmers must go long distances to access plant protection experts for plant disease problems, which makes consultation time-consuming and expensive. Other than that, this approach cannot be transplanted in a broad range. Hence, there are realistic importance and a great need to construct a useful, simple, quick, and reliable method to automatically recognize diverse plant diseases.

In the last few decades, a new manner for identifying plant diseases is being provided with the advancement of pattern recognition and machine learning (ML) techniques. A specific classifier that categorizes the plant images into diseased or healthy types is usually employed in the identification of plant diseases. For instance, Kaur et al. (2018) introduced a support vector machine (SVM) classifier to recognize 3 varieties of soybean leaf diseases comprised of downy mildew, frog eye, and septoria leaf blight; their average accuracy approximately reached 90.00%. After performing the extraction of Scale invariant feature transform (SIFT) features, Mohan et al. (2016) used the classifiers including k-nearest neighbour (k-NN) and SVM to identify three types of paddy diseases, and they realized the recognition accuracy of 93.33% with k-NN while 91.10% with SVM. Kumari et al. (2019) utilized an artificial neural network (ANN) method to identify cotton and tomato diseases; they attained an average accuracy of 92.5%. Chouhan et al. (2018) trained an ANN model called BRBFNN, which was based on the radial function, to identify tomato and cotton crop diseases, and they realized an average accuracy of 86.21%, etc. Despite promising results introduced in the literature, the aforementioned methods primarily rely on the manual feature extraction of the preprocessed corpus, which has a certain subjectivity and consumes a large amount of work, especially, when the image dataset is large-scale. In recent years, a novel ML technique named deep learning (DL), explicitly convolutional neural network (CNN), which can overcome the above-mentioned challenges and automatically extract image features (Chen et al. 2021a, b, c), has been developed to become a preferred method owing to the competitive performance (Tuncer 2021; Qi et al. 2021). Jayagopal et al. (2022) trained a CNN model to recognize leaf-borne infestation and they achieved the best accuracy of 95%. Sethy et al. (2020) introduced a network structure, which used CNN to extract features and SVM for classification. In the experiment of 5,932 samples, they identified 4 classes of rice plant diseases including tungro, bacterial blight, brown spot, and blast, and the combination of SVM and ResNet50 performed better with a 98.38% F1-score. Coulibaly et al. (2019) reported a deep CNN-based approach and transfer learning to identify the mildew disease of pearl millet. They identified 6 mildew diseases as well as healthy types on a dataset of 500 natural rice crop images and realized an accuracy of 95.00%. By using 500 natural rice plant images, Lu et al. (2017) proposed a deep CNN model to recognize 10 typical rice plant diseases, and they attained a 95.48% accuracy. In another research, Gandhi et al. (2018) used generative adversarial networks (GAN) to enrich the local images, and the MobileNet along with Inception V3 models were employed to identify plant diseases. For their experiments on the PlantVillage dataset (Hughes and Salathé 2015), their models attained the accuracy of 92% and 88.6%, respectively. Based on the ResNet50, Wenchao and Zhi (2022) introduced the focal loss function and proposed a new model, namely G-ResNet50, to recognize four types of Strawberry leaf diseases. They achieved an average recognition accuracy of 98.67%. Mohanty et al. (2016) trained a CNN model on the PlantVillage dataset to recognize plant disease types comprised of 26 plant diseases and 14 species, and their model realized a 99.35% accuracy, etc. Although very useful results were attained in the aforementioned literature, the difference and diversity of the images used in the research are limited because most materials are photographed in laboratory environments rather than in natural on-field scenarios. In practice, images should be taken under a wide range so that they contain diverse symptom features of plant diseases (Barbedo 2018). Next, various crop diseases can appear on any part of the plant, whether it is stems, leaves, fruits, or roots. Additionally, the deep learning models used above are primarily the classical deep CNN models with a large volume, which are not easy to be deployed into embedded systems. Despite the limitations, previous research has successfully demonstrated the capability of deep CNNs (DCNNs) to identify various plant diseases. In this study, using the approaches of convolutional ensemble learning, three lightweight CNNs including SE-MobileNet, Mobile-DANet, and MobileNet V2 were employed as the backbone extractor to generate a new convolutional ensemble network called Es-MbNet, where three logistic regressions were designed as the base-classifiers and the Softmax were explored as the meta-classifiers depending upon a two-level stacking strategy. The first-level classifier produces output values for training data that are highly differentiated and have good predictive ability, and the second-level classifier learns from the first-level classifier to improve the accuracy and stability of model prediction. Furthermore, the transfer learning and two-stage training strategy were adopted in model training. The first stage performed the initialization of the network weights, and the second stage fine-tuned the network weights using the target dataset. In brief, the major contributions of this study can be recapitulated below.

-

We have collected a plant disease dataset of major food crops like rice and maize. It contains approximately 1000 images captured from real field wild conditions, and each image has been assigned to a specific category. This dataset is expected to facilitate further research on plant disease recognition.

-

A two-level stacking-based convolutional ensemble learning model, namely Es-MbNet, is proposed for the recognition of crop diseases. In the proposed Es-MbNet, three lightweight CNNs were used as the feature extractors and the corresponding three logistic regression models were designed as the base-classifiers. The Softmax was finally explored as the meta-classifiers based on the stacking strategy.

-

The two-stage transfer learning was executed in model training. This progressive strategy helps the model discover the coarse features of crop disease images first, and then transform its attention to delicate details gradually, without the need for learning all scale features of the images meantime.

The rest of this writing is organized as follows. Section 2 presents the details of the acquired images followed by an introduction of related work. This section primarily discusses the proposed method for crop disease recognition. Section 3 brings the experimental analyses; extensive experiments are conducted and assessed through comparative analysis. Finally, Sect. 4 concludes the paper and advises on future work.

2 Materials and methods

2.1 Acquired images

In the experiments of plant disease recognition, we have obtained around 1000 plant disease images containing 466 maize and 500 rice plant disease samples, which are both captured in an on-field cultivation farm environment from the innovation base of ZeHuo Digital Technology Co., Ltd. The plant disease type of each image is known in advance referring to the expertise of the domain experts. It is noteworthy that all the plant disease images are provided in JPG format and have complicated backdrop conditions. For example, some images were photographed with the background of other plant leaves or clutter field grasses, and in other images, the background conditions are the on-field soils of diverse colors. Also, the photographers’ fingers are included in the context of the images sometimes. Besides, the lighting strengths were uneven because of the varied weather conditions at the different photographing time. After acquiring the raw images, we used Photoshop software to uniformly adjusted the size of these photographs to 256 × 256 pixels. The rice plant diseases mainly consist of rice blast, brown spot, leaf smut, leaf scald, stackburn, white tip, straighthead, and stem rot. The maize diseases contain crazy top, gibberella ear rot, maize eyespot, Goss’s bacterial wilt, gray leaf spot, common smut, and phaeosphaeria spot. Figure 1 displays the sample images of those plant disease types.

Moreover, the publicly accessible datasets, such as the PlantVillage dataset, AI Challenger dataset (https://www.kaggle.com/jinbao/ai-challenger-pdr2018), and Sethy et al. (2020) dataset, are also used in our experiments. Among them, PlantVillage is an open-source plant image repository used for the algorithm test of identifying and classifying diverse plant diseases. It includes 54,306 plant leaf images comprised of 12 healthy plants and 26 disease types in 14 species, and all the images are raw colored photographs of plant leaves captured under controlled backdrop conditions. All the sample images have been adjusted to a fixed size of 256 × 256 pixels to fit the model. The partial data of the AI Challenger dataset is derived from the PlantVillage, and 4 types of potato plant disease images, including early blight fungus general, early blight fungus serious, late blight fungus general, and late blight fungus serious, as well as the healthy category are selected in our experiments. The Sethy et al. dataset is a paddy leaf image dataset, in which 5,932 paddy leaf images are collected under a natural field environment. Figure 2 presents the partial sample images of the public paddy and potato datasets, and the detailed sample numbers and categories are summarized in Table 1.

2.2 Related work

2.2.1 Mobile-DANet

To our knowledge, DenseNet (Huang et al. 2017) reveals the latest state-of-the-art with excellent feature extraction capability because the feature layers receive all the features from its preceding layers and its feature maps are output to all later layers. In many image recognition fields, DenseNet has attained impressive performance. Nevertheless, such dense connections also increase the amount of calculation and consume more memory. Besides, each layer connects feature maps gained from the previous layers without considering the channel relationship characteristics, which does not involve the inter-dependencies between channels. Therefore, on the basis of this, Chen et al. (2021a, b, c) proposed a new network architecture named Mobile-DANet which compressed the size of the model using depthwise separable convolutions (DWSC) (Sifre 2014) in place of the standard convolution layers. Further, a hybrid attention mechanism including channel-wise and spatial attention modules was incorporated into the network to learn the importance of inter-channel relationships and space-wise points for the input features, thereby improving the accuracy of model classification. Their experimental findings demonstrate the effectiveness and feasibility of the proposed method. Hence, the Mobile-DANet was used in our network.

2.2.2 SE-MobileNet

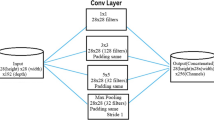

Due to plenty of parameters and great volume for the classical deep CNN models, it is hard for them to be practically applied in mobile portable devices. As a consequence, the research and application of lightweight networks has gained significant attention in the past years. MobileNet is a type of lightweight network architecture depending upon DWSC and has shown promising capability in addressing both large-scale and small-scale problems (Sifre 2014; Li et al. 2019). However, as mentioned earlier, the inter-dependencies between channels are not considered in the classical MobileNet architecture, which does not involve the channel relationship characteristics. Therefore, the SE block incorporated MobileNet, namely SE-MobileNet, was introduced in reference (Chen et al. 2021a, b, c), where the existing MobileNet was optimized by embedding the SE block into the pre-trained model. Concretely, the top layers of classical MobileNet were truncated and the SE block was added behind the pre-trained MobileNet, which was followed by an additional convolutional layer of 3 × 3 × 512 for high-dimensional feature extraction. Then, the completely linked (CL) layer was replaced by a global pooling layer, which was followed by a new CL ReLU layer with 1024 neutrons. At last, a CL Softmax layer with the actual number of categories was used as the top layer of the modified network for the classification. Thus, SE-MobileNet is also used as the feature extractor of our proposed network.

2.3 Proposed approach

2.3.1 Es-MbNet model

As mentioned previously, SE-MobileNet is memory efficient. It achieves considerable accuracy compared with other deep CNNs, while saving more overhead. The volume of SE-MobileNet is only half of the deep convolution neural network Densenet-121 and about 1/30 of VGGNet-19. On the other hand, using the deep separable convolution in place of the traditional convolution, Mobile-DANet compresses the model volume and realizes the maximum reuse of input features by incorporating the channel-wise and spatial attention mechanism and preserving the structure of transition layers in DenseNet. Additionally, in the mainstream lightweight networks, MobileNet V2 (Sandler et al. 2018) is relatively small and has fewer parameters. Derived from the MobileNet V1, the inverted residual connections and linear bottleneck architecture are introduced in MobileNet V2, which primarily aims to solve the problem of vanishing gradient and achieves some improvement over MobileNet V1. Therefore, based on the three lightweight CNN models, this study integrates the SE-MobileNet, Mobile-DANet, and MobileNet V2 to form a new convolutional ensemble network, which we termed Es-MbNet, to enhance the stability and robustness of the model for plant disease recognition.

In general, there are three main ensemble learning strategies, such as Bagging, Boosting, and Stacking. Among them, the Stacking strategy can be used to represent Bagging and Boosting methods, and it also has better classification performance (Doan 2017). Therefore, in this study, we adopted the Stacking strategy and a two-level stacking architecture was utilized in the proposed method. Where the first-level classifier produces output values for training data that are highly differentiated and have good predictive ability, and the second-level classifier learns further from the first-level classifier to enhance the accuracy and stability of model prediction. Three logistic regressions are designed as the base-classifiers in Es-MbNet and the Softmax is explored as the meta-classifier by applying the idea of stacking strategy. Algorithm 1 depicts the specific procedure of stacking-based ensemble learning. More specifically, the detailed processes of the proposed method are presented below.

-

1.

Use three network models to extract the features from the training images and construct a migrated feature training set: D = {D1, D2, D3}, where Dk = {(xk,1, yk,1), (xk,2, yk,2),…, (xk,N, yk,N)}, (xk,i, yk,i) denotes the features extracted by image \(i\) using the CNN model k and the corresponding category information, k ∈ {1, 2, 3}, yk,i ∈ {1, 2, …, C}, C indicates the number of image classes and N is the total number of training samples.

-

2.

Construct a base classifier H = {H1, H2, H3} composed of three logistic regression models, and train a logistic regression model using each of the three features in the migration feature training set.

-

3.

The probability feature space is constructed and a meta-classifier L comprised of Softmax classification function is trained. For arbitrary data xk,1 ∈ Dk, the classifier Hk is used to predict and obtain the probability distribution \(P_{i,k} = [p_{i,k}^{1} ,p_{i,k}^{2} ,...,p_{i,k}^{M} ]\), where \(p_{i,k}^{j}\) denotes the probability that sample i is predicted by the classifier Hk to the category j. The dataset \(D_{H} = \{ (x_{1}^{^{\prime}} ,y_{1} ),(x_{2}^{^{\prime}} ,y_{2} ),...,(x_{N}^{^{\prime}} ,y_{N} )\}\),\(x_{i}^{^{\prime}} = [P_{i,1} ,P_{i,2} ,P_{i,3} ]\) is then constructed depending on the probability distribution, and it is used for training the classifier L. Figure 3 depicts the architecture of the proposed Es-MbNet.

Furthermore, the network heads of these three backbone extractors were discarded and the global average pooling (GAP) layers were embedded behind these lightweight network models, which were used to take care of the resolution of various input images. One block comprised of the dropout, batch normalization, and completely associated Sigmoid layer with regularization items is added in each network. In the dropout layer, we adopted a dropout factor of 0.5, and a batch normalization layer was utilized to enhance the robustness of the network and suppress the over-fitting risk to a certain extent. To prevent the over-fitting, the L1 regularization term was applied in this dense layer with a fixed value of 1 × 10–4, and the Adam (adaptive moment estimation) (Kingma and Ba 2014) was employed as the inner optimizer with a 1 × 10–3 learning rate. Next, the binary cross-entropy was employed as the loss function for the pre-trained backbone networks, and the logistic regression classifier was added to each network for the classification. Then, a fully connected (FC) Softmax layer with the practical number of categories was used as the classification layer of the convolutional ensemble network, and particularly, the optimized focal loss function was applied to process the multi-classification problems. Last but not least, inspired by the performance of Grad_CAM (gradient-weighted class activation mapping) (Selvaraju et al. 2017), the visualization technique was used to position the defect areas of crop disease images, and the plant disease activation map (PDAM) was formed using a weighted sum of the activation maps of last convolutional layer with the weights learned in the last FC Softmax layer, where the significant regions of the images that caused the classification results made by the CNN were presented.

2.3.2 Training procedure and loss function

The transfer learning and two-stage training strategy were adopted in our model training. In the first stage, the parameters were initialized and an adaptation was implemented for the networks, where the original top layers of the backbone networks were substituted by new Sigmoid classification layers, and all the bottom convolution layers were kept frozen, while the newly extended layers were trained using the target dataset. Adam solver was used to update the weights. In the second stage, the network was re-trained (fine-tuned) using the target dataset by injecting the weights learned in the first stage, and all the weights were updated by the SGD optimizer in this stage. The first-level classifiers of the ensemble model used the binary cross-entropy as the loss function, and a logistic regression classifier was added to each network for classification. The formula of the loss function is expressed by

where y denotes the label (1 indicates the positive sample, and 0 is the negative sample), and p(y) is the prediction probability of the positive sample for all N samples. The second-level classifiers used the FC Softmax layer with the actual category numbers as the classification layer of the network, and the boost focal loss (γ = 2) function was used as the loss function of classification for plant disease recognition. The hyper-parameters of the model are the minimum batch size of 64, the learning rate of 1 × 10–3, the momentum of 0.9, and other parameters are set referring to Sethy et al. (2020) work. Algorithm 2 summarizes the detailed procedures of model training.

3 Experimental analysis

We have primarily used Python 3.6 to perform the experiments apart from some image processing operations executed by the Photoshop and Matlab software. Where OpenCV-python3, Tensorflow, and Keras libraries are utilized for algorithm running. The hardware configuration includes the Intel® Xeon(R) E5-2620V4 CPU, 64G memory, and GeForce RTX 2080 TI GPU.

3.1 Experiments on the open database

Using the method proposed in Sect. 2.3, we performed the model training and test on the PlantVillage dataset. Except that some raw images were drawn from the database as the test set to evaluate the model, the ratio of the samples randomly assigned to the training set to those in the validation set was 4:1, and the choice of the division ratio referred to the work of Mohanty et al. (2016). In particular, to further investigate the performance of the proposed method, we considered the well-known CNNs, such as VGGNet (Simonyan and Zisserman 2014), Inception V3 (Szegedy et al. 2016), ResNet (He et al. 2016), DenseNet, InceptionResNet (Szegedy et al. 2017), and others, to compare models. Through transfer learning, these baseline CNN models were built and the weights of the bottom convolutional layers were initialized by injecting the weights pre-trained on ImageNet (Russakovsky et al. 2015). The completely associated layers of all the network heads that are used for the classification were substituted by new FC Softmax layers with the actual number of classes, and the parameter training optimizer was Adam, with a 64 mini-batch size, 100 epochs, and a 1 × 10–3 learning rate. By doing this, we accomplished the training of these CNN models and multiple experiments were carried out on the publicly accessible dataset.

An exhaustive analysis was implemented on the results output by these CNN models, and we measured the performance of the plant disease recognition model using the metrics like Accuracy, Recall, and F1-Score, which were separately calculated as follows.

where TP (true positive) means the number of correctly identified crop images in each class, TN (true negative) indicates the sum of accurately identified crop samples in all other types except for the related ones, FP (false positive) denotes the number of samples that don’t belong to the category but mistakenly classified as this one, and FN (false negative) is the number of inaccurately identified samples. Table 2 displays the training and validation accuracy of the different methods.

As seen in Table 2, the proposed Es-MbNet has achieved a superior performance gain compared to other state-of-the-art methods. After training for 20 and 100 epochs, the training Accuracy of the model has separately reached 97.12% and 98.37%, and especially, the validation accuracy of the proposed method has attained the top value of 98.96% in all the compared methods except for DenseNet-121, which is a deep CNNs with a relatively large volume. Because of the dense connection, DenseNet-121 requires more storage and computational resource to train the model, e.g., it took more than 4 h for DenseNet-121 to complete the entire 100 epochs of iteration training, while the time-consuming of Es-MbNet is around 24 min. In contrast, the proposed Es_MbNet achieves the best trade-off between classification accuracy and model efficiency. The crucial explanation behind the solid performance of the proposed method is that the Es-MbNet integrated the merits of multiple networks through the stacking-based convolutional ensemble learning, which enhances the feature extraction of plant lesion symptoms. In addition, the transfer learning and two-stage training strategy make the network acquire the optimal weight parameters, which helps the model obtain satisfying results. Last but not least, the time-consuming of the proposed method is even less than that of either skeleton model used in the Es-MbNet, which is because that the three simple classifiers, the logistic regressions instead of Softmax classifiers are designed as the base-classifiers in the completely associated layers of the Es-MbNet, reducing the computational complexity and the number of model parameters. Conversely, owing to the single network, other CNNs did not achieve the best results through the parameters of the networks were initialized by loading the models pre-trained on ImageNet instead of inferring from scratch. As a consequence, the model trained by the proposed method was further used to recognize plant diseases on new unseen images, and referring to the work introduced by Hari et al. (2019), the partial crop species like maize, tomato, grape, apple, and potato were chosen to perform the test of plant disease identification. Table 3 represents the recognition results and related metrics measurement.

It can be visualized from Table 3 that most of the samples in each class have been accurately recognized by the proposed method and an average Accuracy reaches 99.61%, which reveals that the Es-MbNet model has an outstanding capability to recognize plant diseases under controlled backdrop conditions. Moreover, apart from recognizing the normal or diseased crops, the Es-MbNet also distinguishes the specific types of plant diseases, and both the average Recall and F1-Score achieve 99.08%, respectively. On another front, the experimental findings on the PlantVillage dataset obtained by some previous research have also been summarized in Table 4. The details such as the references, years, used models, the number of classifications, and the highest accuracy are all included in this table. The comparison of the proposed approach with other influential methods demonstrates the superiority of our approach for identifying plant diseases under simple backdrop conditions.

Furthermore, a series of experiments were carried out on the public paddy and potato image datasets, and Fig. 4 portrays the training performance of different methods. After training for 30 epochs, the proposed method has attained a validation Accuracy of 98.51%, which is the highest accuracy of all the methods, as shown in Fig. 4f. Thereupon, the model trained by the proposed method was further employed to identify the classes of new sample images, and the test results are summarized in Table 5. Plus, Table 6 presents the report in literature (Sethy et al. 2020) for comparison, and Table 7 lists the results compared with the existing work (Liang et al. 2019).

As seen in Table 5, the tested 200 samples per category for all the paddy disease images have been properly identified by the proposed method, and the recognition Accuracy reaches 100%, outperforming the results reported by Sethy et al. (2020) (see Table 6). In addition, for the healthy potato plant, all the 204 samples have been accurately identified by the proposed method except for 1 misidentification, and the Accuracy achieves 99.91%. The 27 potato “Early Blight Fungus general” disease samples have been correctly recognized by the proposed method apart from 2 incorrect identification, and thus the proposed method has gained an Accuracy of 99.25%. Among 73 potato “Early Blight Fungus serious” disease images, 66 samples have been accurately identified by the proposed method, and the Accuracy rate realizes 99.41%. To sum up, in the 1206 paddy and potato plant images, 1183 samples have been successfully identified by the proposed method and the average Accuracy reaches 99.57%. Besides, the average Recall and F1-Score realize 98.09%, respectively. More than that, from the comparison results between the proposed method and the existing work (Liang et al. 2019) on the identification of potato plant diseases, the proposed Es_MbNet has also shown strong recognition capability, as shown in Table 7.

3.2 Experiments on the local dataset

In like manner, the proposed method was further tested on the local image dataset. To enhance the diversity and difference of local sample images, the data enhancement scheme was utilized to produce new synthetic images for model training, thereby alleviating the risks of over-fitting. The conventional data enhancement techniques paired with the improved DCGAN method were adopted in our data enhancement scheme. In general, the conventional data enhancement techniques including random translation, scale transformation, angle rotation, color jittering, and horizontal or vertical flipping, can generate new images but may destroy the linear relationship of original images too. In comparison, the latest DCGAN can generate diverse images automatically, although the process is more complex than the traditional methods. The classical architecture of DCGAN is designed to produce new images with a size of 64 × 64 pixels since training DCGAN with larger scales is not stable as one module (generator or discriminator) becomes stronger than the other one. But for most of the successful CNNs, the dimensions of input images are assigned a greater value like 256 × 256 pixels to improve the performance of the model. Due to this reason, we enhanced the classical DCGAN by modifying the input assignment as 256 × 256 pixels and embedding a convolutional block of 128 × 64 × 3 followed by a 32 × 3 convolutional block in the generator module. Correspondingly, the input size of the discriminator was assigned as 256 × 256 × 3, and then the convolutional block of 32 × 3 followed by a 64 × 128 × 3 convolutional block was also incorporated into the networks. The hyper-parameters of model training were set to the mini-batch size of 16, the learning rate of 1 × 10–4, the epochs of 5 × 105, and the Adam solver. By doing this, the new samples were synthesized and greater than 200 images were guaranteed per category.

In addition to preserving a certain number of original images for the model test, the augmented samples were divided into the training and validation sets with the ratio of 4:1 to separately train and determine whether the model was over-fitting. Using the model trained by the proposed method, the new sample images outside modeling were selected for the test of plant disease identification. Figure 5 shows the ROC curve and confusion matrix of the identification results, and the corresponding evaluation metrics are summarized in Table 8.

It can be visualized from Fig. 5a that the identification result of the proposed method shows ideal operating points and the curves of all the categories are generally convex to the left upper corner of the figure. The recognized plant disease types are with a greater true positive rate (TPR) while a smaller false positive rate (FPR), which can also be reflected by the confusion matrix of recognition results in Fig. 5b. Most images in various categories have been accurately recognized by the proposed Es-MbNet method. For instance, except for one misidentification, the other 37 examples were correctly identified in the "common rust" category, and the identification accuracy realizes 99.72%. Likewise, all 28 “Crazy Top” disease samples have been properly recognized by the proposed method, and also, the proposed method has accurately recognized 27 instances of "gibberellin ear rot" in 28 samples, with an accuracy of 99.72%. In summary, a total of 347 instances have been successfully identified in 365 samples, and the average recognition accuracy achieves 99.37%. Again, the average Recall and F1-Score have attained no less than 95.32% and 95.06%, respectively, as shown in Table 8. In another aspect, there are individual identification errors, such as 2 misclassified samples in the category "Leaf scald", and 4 errors in the category "White tip". This is due to that some different disease types like rice "Leaf scald" and "White tip" have some similarities in their own pathological characteristics, such as whitening, twisting, and withering of leaves. Additionally, the serious clutter field backdrop conditions and irregular illumination intensities, which impact the feature extraction of disease spot images, can lead to the misclassification of crop diseases too. Figure 6 shows the examples of partially recognized results. Among them, the upper samples are the original images, the middle samples are the lesion images presented by the classification activation map (CAM) visualization technology, and the bottom images are the plant disease samples recognized by our method.

As can be observed in Fig. 6, most of the tested sample images have been accurately recognized by the proposed method. Such as Fig. 6a, the practical category of this sample is “Rice Stackburn”, which is successfully recognized with a 0.89 probability. Similarly in Fig. 6b, c, these samples are both correctly recognized with a probability greater than 0.99 and 0.74, respectively. In contrast, individual samples such as Fig. 6d are mistakenly categorized because of the extreme clutter field backdrops and non-uniform lighting intensities. Besides, some different crop diseases appearing on the same crop may also influence the identification results. Although individual disease spot images are misclassified, the types of most crop disease samples that have been identified are consistent with their actual types. Furthermore, from the middle defect positioning images exhibited by PDAM in Fig. 6, it can also be observed that most disease areas have been successfully located by the proposed method. Consequently, based on the results of experimental analysis, we can conclude that the proposed Es-MbNet has a significant ability to recognize diverse crop diseases under natural field wild scenarios and can also be transplanted in other related fields.

4 Conclusions

To guarantee a substantial crop yield, the timely and efficient recognition of plant diseases plays a crucial role, and therefore looking for a useful, simple, and fast tool to automatically recognize various plant diseases has a great need and important realistic significance. In this study, a novel convolutional ensemble learning method is proposed to recognize plant disease types. Using a two-level stacking strategy, the three lightweight networks including SE-MobileNet, Mobile-DANet, and MobileNet V2 were employed as the backbone extractor to generate a new convolutional ensemble network, namely Es-MbNet, in which three logistic regressions were designed as the base-classifiers and the Softmax were explored as the meta-classifiers. Further, the transfer learning and two-stage training strategy were adopted in model training, and the first phase only trained the weights on the newly extended layers from scratch while freezing the parameters of the bottom convolutional layers, thereby initializing the network weights. The second phase re-trained the network using the target dataset by injecting the weights trained in the first phases, and thus the optimum weight parameters are gained for the ensemble convolutional network. Experimental findings reveal the proposed method with solid efficacy for plant disease recognition on both the open-source and local datasets. Though competitive performance has been achieved by the proposed method, individual misidentifications still exist in samples with extremely complicated background conditions. In the future, we will collect a wider range of plant disease images under practical on-field wild scenarios to automatically recognize the broad range of plant disease images. Additionally, we want to extend the method to other related fields, such as online fault inspection, computer-aided evaluation, and so on.

References

Atoum Y, Afridi MJ, Liu X, McGrath JM, Hanson LE (2016) On developing and enhancing plant-level disease rating systems in real fields. Pattern Recognit 53:287–299

Barbedo JG (2018) Factors influencing the use of deep learning for plant disease recognition. Biosyst Eng 172:84–91

Chen J, Wang W, Zhang D, Zeb A, Nanehkaran YA (2021a) Attention embedded lightweight network for maize disease recognition. Plant Pathol 70(3):630–642

Chen J, Zhang D, Suzauddola M, Nanehkaran YA, Sun Y (2021b) Identification of plant disease images via a squeeze-and-excitation MobileNet model and twice transfer learning. IET Image Proc 15(5):1115–1127

Chen J, Zhang D, Zeb A, Nanehkaran YA (2021c) Identification of rice plant diseases using lightweight attention networks. Expert Syst Appl 169:1–12

Chouhan SS, Kaul A, Singh UP, Jain S (2018) Bacterial foraging optimization based radial basis function neural network (BRBFNN) for identification and classification of plant leaf diseases: an automatic approach towards plant pathology. IEEE Access 6:8852–8863

Chug A, Bhatia A, Singh AP, Singh D (2022) A novel framework for image-based plant disease detection using hybrid deep learning approach. Soft Comput 1–26

Coulibaly S, Kamsu-Foguem B, Kamissoko D, Traore D (2019) Deep neural networks with transfer learning in millet crop images. Comput Ind 108:115–120

Ding W, Taylor G (2016) Automatic moth detection from trap images for pest management. Comput Electron Agric 123:17–28

Doan TS (2017) Ensemble learning for multiple data mining problems. University of Colorado at Colorado Springs. PhD thesis

Elhassouny A, Smarandache F (2019) Smart mobile application to recognize tomato leaf diseases using Convolutional Neural Networks. In: 2019 international conference of computer science and renewable energies (ICCSRE). IEEE, pp 1–4

Gandhi R, Nimbalkar S, Yelamanchili N, Ponkshe S (2018) Plant disease detection using CNNs and GANs as an augmentative approach. In: 2018 IEEE international conference on innovative research and development (ICIRD). IEEE, pp 1–5

Geetharamani G, Pandian A (2019) Identification of plant leaf diseases using a nine-layer deep convolutional neural network. Comput Electr Eng 76:323–338

Hari SS, Sivakumar M, Renuga P, Suriya S (2019) Detection of plant disease by leaf image using convolutional neural network. In: 2019 international conference on vision towards emerging trends in communication and networking (ViTECoN). IEEE, pp 1–5

Hassan SM, Maji AK (2022) Plant disease identification using a novel convolutional neural network. IEEE Access 10:5390–5401

He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 770–778

Huang G, Liu Z, Van Der Maaten L, Weinberger KQ (2017) Densely connected convolutional networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 4700–4708

Hughes D, Salathé M (2015) An open access repository of images on plant health to enable the development of mobile disease diagnostics. http://arxiv.org/abs/1511.08060

Jayagopal P, Rajendran S, Mathivanan SK, Sathish Kumar SD, Raja KT, Paneerselvam S (2022) Identifying region specific seasonal crop for leaf borne diseases by utilizing deep learning techniques. Acta Geophys pp 1–14

Karthik R, Hariharan M, Anand S, Mathikshara P, Johnson A, Menaka R (2020) Attention embedded residual CNN for disease detection in tomato leaves. Appl Soft Comput 86:1–27

Kaur S, Pandey S, Goel S (2018) Semi-automatic leaf disease detection and classification system for soybean culture. IET Image Proc 12(6):1038–1048

Kingma DP, Ba J (2014) Adam: a method for stochastic optimization. http://arxiv.org/abs/1412.6980

Kumari CU, Prasad SJ, Mounika G (2019) Leaf disease detection: feature extraction with K-means clustering and classification with ANN. In: 2019 3rd international conference on computing methodologies and communication (ICCMC). IEEE, pp 1095–1098

Li X, Zhang S, Jiang B, Qi Y, Chuah MC, Bi N (2019) Dac: data-free automatic acceleration of convolutional networks. In: 2019 IEEE Winter conference on applications of computer vision (WACV). IEEE, pp 1598–1606

Liang Q, Xiang S, Hu Y, Coppola G, Zhang D, Sun W (2019) PD2SE-Net: computer-assisted plant disease diagnosis and severity estimation network. Comput Electron Agric 157:518–529

Lu Y, Yi S, Zeng N, Liu Y, Zhang Y (2017) Identification of rice diseases using deep convolutional neural networks. Neurocomputing 267:378–384

Mohan KJ, Balasubramanian M, Palanivel S (2016) Detection and recognition of diseases from paddy plant leaf images. Int J Comput Appl 144(12):34–41

Mohanty SP, Hughes DP, Salathé M (2016) Using deep learning for image-based plant disease detection. Front Plant Sci 7:1–10

Qi H, Liang Y, Ding Q, Zou J (2021) Automatic identification of peanut-leaf diseases based on stack ensemble. Appl Sci 11(4):1–14

Russakovsky O, Deng J, Su H, Krause J, Satheesh S, Ma S, Fei-Fei L (2015) Imagenet large scale visual recognition challenge. Int J Comput vis 115(3):211–252

Sandler M, Howard A, Zhu M, Zhmoginov A, Chen LC (2018) Mobilenetv2: inverted residuals and linear bottlenecks. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 4510–4520

Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D (2017) Grad-cam: visual explanations from deep networks via gradient-based localization. In: Proceedings of the IEEE international conference on computer vision, pp 618–626

Sethy PK, Barpanda NK, Rath AK, Behera SK (2020) Deep feature based rice leaf disease identification using support vector machine. Comput Electron Agric 175:1–9

Sifre L (2014) Rigid-motion scattering for image classification. Ecole Polytechnique, CMAP. PhD thesis

Simonyan K, Zisserman A (2014) Very deep convolutional networks for large-scale image recognition. http://arxiv.org/abs/1409.1556

Szegedy C, Vanhoucke V, Ioffe S, Shlens J, Wojna Z (2016) Rethinking the inception architecture for computer vision. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 2818–2826

Szegedy C, Ioffe S, Vanhoucke V, Alemi AA (2017) Inception-v4, inception-ResNet and the impact of residual connections on learning. In: Thirty-first AAAI conference on artificial intelligence(AAAI-17), pp 4278–4284

Tm P, Pranathi A, SaiAshritha K, Chittaragi NB, Koolagudi SG (2018) Tomato leaf disease detection using convolutional neural networks. In: 2018 eleventh international conference on contemporary computing (IC3). IEEE, pp 1–5

Tuncer A (2021) Cost-optimized hybrid convolutional neural networks for detection of plant leaf diseases. J Ambient Intell Humaniz Comput 12(8):8625–8636

Wenchao X, Zhi Y (2022) Research on strawberry disease diagnosis based on improved residual network recognition model. Math Probl Eng 2022:1–12

Zeng T, Li C, Zhang B, Wang R, Fu W, Wang J, Zhang X (2022) Rubber leaf disease recognition based on improved deep convolutional neural networks with an cross-scale attention mechanism. Front Plant Sci 274:1–12

Acknowledgements

The authors want to thank Fundamental Research Funds for the Central Universities with Grant No. 20720181004. The authors also thank editors and unknown reviewers for providing useful suggestions.

Author information

Authors and Affiliations

Corresponding authors

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Chen, J., Zeb, A., Nanehkaran, Y.A. et al. Stacking ensemble model of deep learning for plant disease recognition. J Ambient Intell Human Comput 14, 12359–12372 (2023). https://doi.org/10.1007/s12652-022-04334-6

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12652-022-04334-6