Abstract

Food adulteration imposes a significant health concern on the community. Being one of the key ingredients used for spicing up food dishes. Red chilli powder is almost used in every household in the world. It is also common to find chilli powder adulterated with brick powder in global markets. We are amongst the first research attempts to train a machine learning-based algorithms to detect the adulteration in red chilli powder. We introduce our dataset, which contains high quality images of red chilli powder adulterated with red brick powder at different proportions. It contains 12 classes consists of 0%, 5%, 10%, 15%, 20%, 25%, 30%, 35%, 40%, 45%, 50%, and 100% adulterant. We applied various image color space filters (RGB, HSV, Lab, and YCbCr). Also, extracted features using mean and histogram feature extraction techniques. We report the comparison of various classification and regression models to classify the adulteration class and to predict the percentage of adulteration in an image, respectively. We found that for classification, the Cat Boost classifier with HSV color space histogram features and for regression, the Extra Tree regressor with Lab color space histogram features have shown the best performance.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Food adulteration is an illegal practice of adding cheaper ingredients or fake substances to pure food products to increase their quantity, making them unhygienic to eat. It poses a significant health concern, and when consumed, it deprives necessary nutrition in the human body (Manasha and Janani 2016). Adulterated foods can cause various health issues ranging from mild to life-threatening, including allergic reactions, skin diseases, loss of vision, cancer, and heart diseases (Bansal et al. 2017). Sometimes, it shows immediate side effects in the human body, including vomiting, abdominal pain, and diarrhoea.

Individuals in the food supply chain perform adulteration intentionally to maximize profits. They reduce the food quality to increase its quantity. They put consumers’ health in danger for personal economic gains. Adulterants are also used to expand the shelf life. Improper processing of these food items also leads to abdominal pain and food poisoning or other food infections, usually with fever (Nascimento et al. 2017).

Food adulteration also results in economic losses to the countries as it leads to a decrement in consumer demand. It impacts consumer confidence. Food quality assurance efforts are significant to make consumers’ trust intact. Food adulteration at the production level usually happens due to a lack of monitoring and testing measures (Bansal et al. 2017).

Red chilli powder is one of the key ingredients used for spicing up Indian cooking. It is rich in vitamins and minerals. It also comes in several varieties like Kashmiri Chilli powder, Guntur Chilli, ghost pepper, bird-eye Chilli, Byadagi Daggi Chilli, etc. (Jamaluddin et al. 2022). The variety of the Chilli powder depends on the type of Chilli used and the process followed for drying and grinding. As it is typically consumed in small amounts, it does not contribute much towards one’s diet. Red chilli powder typically constitutes 88% water, 0.3% protein, 1.3% carbohydrates, 0.8% sugar, 0.2% fiber, and 0.1% fat and has a Caloric value of 6 Cal. It contains vitamins like vitamin C, vitamin B6, vitamin K1, and vitamin A (Khan et al. 2019). It also contains minerals like potassium and copper. Consumption of red chilli powder has many benefits, such as providing pain relief, promoting weight loss, fighting inflammation, clearing congestion, boosting immunity, preventing stomach ulcers, boosting immunity, etc. (Ayob et al. 2021; Mi et al. 2022). It is not uncommon to find Chilli powder adulterated with brick powder in Indian markets. Typically, people identify this kind of adulteration using visual cues like adulterated Chilli powder looks more orangish instead of the rich color of unadulterated Chilli powder.

Artificial Intelligence enables computers to do tasks that earlier needs human interference. Computer vision is a field of artificial intelligence that allows computers to see, understand, and recognize digital content by processing images and videos (Ma et al. 2016). Adulteration in food is detectable by training algorithms on the labelled dataset. Therefore, the need to create a genuine dataset in presence of a domain expert for mapping food images with various percentages/ratios of adulteration or purity is a must. Image Augmentation techniques are used to make the image classification-based computer vision model more robust and image pre-processing techniques to improve image quality (Subashini 2010). Various filters including grayscale (Vincent 1993), RGB (Li et al. 2016), HSV (Sadhukhan et al. 2019), median smoothening (Vijaykumar et al. 2010), adaptive thresholding (Bao and Zhang 2003), Canny edge detection (Sarkar et al. 2022), Sobel edge detection (Gao et al. 2010; Hussein et al. 2011), and YCrCb (Lakhwani et al. 2015) are used to enhance features in the images. Feature extraction techniques are also used to extract features from the training dataset consisting of images.

Machine learning algorithms enable computers to make informative decisions based on raw data. Deep learning (subset of machine learning) algorithms are able to learn complex features from unstructured data as they mimic the way the human brain works. Researchers have used neural networks containing one or more hidden layers consisting of neurons to extract and learn complex information. Deep learning (DL)-based image recognition methods are spread across various fields. DL is being used to recognize food objects in an image and to recognize possible food allergens in an image (Salim et al. 2021). DL also provide food recommendations to the user based on their past choices (content-based filtering for food recommendation) (Bianchini et al. 2016). DL is also being used in detecting food adulteration by mixing cheap ingredients in order to maximize the quantity (Goyal et al. 2022).

Machine learning is essential to detect food adulteration in an image captured using mobile phone or any other device (Goyal et al. 2022). This will enable the detection of food adulteration even if it is not distinguishable by a human being. Deep learning-based food adulteration detection models are able to be deployed at a large scale as in this digital age major chunk of the population is having smartphones and access to an internet connection (Rateni et al. 2017). Deep learning models efficiently and precisely can detect food adulterants and their percentage using an image. Recent advances in adulteration detection using DL models for various foods ensures that this methodology is well tested and effective (Calle et al. 2022b).

The aim of this study is to detect the adulteration of red chilli powder; adulterated with red brick dust/powder. We have considered various machine learning algorithms for detecting the percentage of adulteration in red chilli powder.

Literature Review

Machine learning (ML) algorithms outperformed the earlier rule-based classical algorithms. ML enables systems to define rules on their own by recognizing hidden patterns in data. In order to make ML model perform precisely and accurately, there is a need to provide the best suitable and as many features during training the model. Image processing is generally used to improve image quality and extract useful features from an image. Various filters including grayscale, RGB, HSV, median smoothening, adaptive thresholding, canny edge detection, Sobel edge detection, and YCrCb are used to enhance features in the images. Feature extraction using various methods including neural networks is also used to extract various features from the images.

Researchers have trained the Linear Discriminant Analysis (LDA) Classifier on 1080 greyscale images for detecting the quality of wheat seeds into 9 categories with an accuracy of 98.15% by extracting the features using various feature extraction matrices including local similarity numbers (LSN), local similarity patterns (LSP), gray level run length matrix (GLRM), gray level cooccurrence matrix (GLCM), and local binary patterns (LBP) (Pourreza et al. 2012). Various image processing techniques have been applied to differentiate the quality of rice by using various filters including canny edge detection, adaptive thresholding, median smoothing, and grayscale (Pratibha et al. 2017) (Table 1). Researchers have designed the automated mango grading system. They used the fuzzy rule-based algorithm by processing video images captured using a CCD camera including background elimination and contour detection using the Graph Contour tracking system. They have predicted the maturity using RFE-SVM and gradation with multi attributes decision method (MADM). They reported an average grading precision rate of 90% (Nandi 2014). Scholars have applied image processing techniques via performing various morphological operations including dilation, erosion, and intensity of border to classify oranges based on maturity level (Carolina and David 2014).

Researchers have trained PLS-DA and LDA models to detect the adulteration of mined beef with pork and vice-versa using the dataset consisting of 18 different wavelengths of 220 meat samples spread across nine adulteration classes (Ropodi et al. 2015). They reported accuracy of 98.48%. Researchers have trained three variants of Partial Least Squares Regression (PLSR) including R-PLSR, A-PLSR, and KM-PLSR with the data retrieved by visible near infrared hyperspectral imaging. They reported an R2 score of 0.96 and RMSEP of 2.83% for R-PLSR; R2 score of 0.97 and RMSEP of 2.61% for A-PLSR; and R2 score of 0.96 and RMSEP of 3.05% For KM-PLSR (Kamruzzaman et al. 2016).

ANN classifier has been used to classify healthy (65% images) and unhealthy nuts (35% images) based on two different sets of features. First consists of 22 original features including 6 texture properties and 16 features extracted using gray level co-occurrence matrix (GLCM) at angles of 0, 45, 90, and 135. The second consists of a feature set obtained from the principal component analysis (PCA) algorithm. They split the dataset into 70:15:15 for training, validation and testing respectively. They reported an accuracy of 81.8% using the first set of features and 100% using the second set of features on the testing dataset (Khosa and Pasero 2014).

Decision tree, random forest, SVM, K-nearest neighbor (KNN), and ANN classifiers have been used to detect white rice adulteration using the dataset containing 330 samples spread across 7 different ratios. They reported that SVM and random forest outperformed other classifiers and are more robust in distinguishing white rice and adulterated mixtures (Lim et al. 2017). Scholars have proposed the model based on ANN, PLSR, and PCR algorithms for recognizing milk adulteration using the dataset containing milk samples mixed with bromothymol blue. They used RGB values and luminosity as features (Kobek 2017). MLP classifier has been considered to classify three varieties of rice, the dataset contains 222 images of each variety of rice, a total of 666 images. They used PCA for feature ranking and extracted 41 textural features and 17 morphological features from the images. They obtained the classification accuracy of 55.93 to 84.62% on the test dataset (Fayyazi et al. 2017).

Researchers have applied a window local segmentation algorithm to detect the surface defects in oranges on the dataset comprised of 1191 grey level images of oranges (Rong et al. 2017). Anami et al. 2019 trained BPNN, SVM, and k-NN classifiers to detect paddy adulteration into 7 different varieties and then classify it into 5 different adulteration levels (10%, 15%, 20%, 25%, and 30%). They extracted color features, GLCM features, and LBP features from RGB images. They also applied PCA and sequential forward floating selection (SFFS) algorithm for feature selection. They reported an accuracy of 41.31% using the BPNN classifier trained on extracted color features, the accuracy of 44.74% when trained on extracted GLCM features, and accuracy of 39.03% when trained on extracted LBP features. They also reported an accuracy of 35.80% using SVM classifier trained on extracted color features, accuracy of 37.00% when trained on extracted GLCM features, and accuracy of 35.00% when trained on extracted LBP features. They also reported an accuracy of 36.40% using k-NN classifier trained on extracted color features, accuracy of 34.40% when trained on extracted GLCM features, and accuracy of 34.71% when trained on extracted LBP features. They found significant improvement in performance after applying feature selection algorithms and using combined color-texture features. On Selected features using SFFS algorithm, they reported an accuracy of 91% using BPNN classifier, 86.91% using SVM classifier, and 82.14% using k-NN classifier. On Selected features using PCA algorithm, they reported an accuracy of 93.31% using BPNN classifier, 89.29% using SVM classifier, and 83.66% using k-NN classifier (Anami et al. 2019).

Materials and Methods

Sample preparation

Full grown red chilli of variety Bullet Lanka-5 (Capsicum annuum L.) were collected from Kaliachak, Malda (24°86'N 88°01' E). The samples were rinsed with double distilled water, followed by Sun drying (37 ± 3 °C, relative humidity 75–81%, and for consecutive 3 days from 9.00 am to 5.00 pm). The final moisture content of the dried samples were 4–5% (dry weight basis) and ash content was 4.5%. The dried samples were grinded with mixer grinder (500 W, grinding jar capacity 0.5 L, hybrid motor) for 9 min (3 min per cycle, in total 3 cycles, 18,000 rpm). The final product was passed through a screen of 60 mesh, and the undersized powdered samples were considered as pure red chilli powder.

Burnt clay bricks (IS: 1077–1992) were procured from local market, and passed through jaw crusher, the crushed brick powder was passed through a screen of 60 mesh, and the undersized powdered materials were considered as adulterant.

The chilli powder sample was mixed thoroughly with the brick powder (5%, 10%, 15%, 20%, 25%, 30%, 35%, 40%, 45%, and 50%) to prepare a homogeneous mixture of adulterated samples.

Image Acquisition

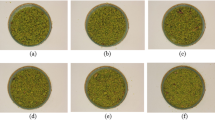

In total, 12 sets of samples were prepared, 100% pure red chilli powder, 100% brick powder, and 10 sets of adulterated samples (5–50%). A total of 20 equal sized cells were drawn on the white A4 paper sheet an white A4 paper sheet (210 mm × 297 mm, 80 gsm). The samples were spread over the paper in thin layer. Thus, 20 equal sized cells were there loaded with similar samples (Fig. 1). In total,12 × 20 = 240 images were captured.

Each of the cells were captured separately with Realme 8 Pro (Android 11, Realme UI 2.0, Adreno 618, Octa-core, 108 MP, f/1.9, 26 mm (wide), 1/1.52", 0.7 µm, PDAF, 8 MP, f/2.3, 119˚, 16 mm (ultrawide), 1/4.0", 1.12 µm, 2 MP, f/2.4, (macro), 2 MP, f/2.4, (depth)) without flash. The perpendicular distance between each of the cells and the camera lens was 15 cm. The schematic diagram for image acquisition has been shown in Fig. 2.

Color Space Representation

The color space representation chosen to represent images matters, as it can make ignoring or emphasizing certain information easier. For example, in HSV representation emphasizing color (Hue) is easy and it is also easy to ignore brightness (Value) information. In our study we have explored four different color space representations which are discussed below.

RGB

Single RGB pixel consists of intensities of red, green, and blue components of light illuminating that spot in the image. These intensities can take values from 0 to 255. RGB is one of simplest and straight forward ways of representing an image digitally.

HSV

Single HSV pixel consists of hue, saturation, and value (brightness) components of light illuminating that spot in the image. This representation is better at modelling the way humans perceive color when compared to RGB. Hue can take values from 0 to 360. Saturation and value can take values from 0 to 100. The formula for conversion from RGB to HSV is given below. The inverse formula can be used for converting the other way around.

Hue calculation:

Saturation calculation:

Value calculation:

Lab

This color space representation represents lightness, and green–red and yellow-blue values of pixels. It is also referred to as L*a*b* color space. It is commonly used for detecting minor variations in color. The L component takes value from 0 to 100, a and b components value from –128 to 128. Conversion from RGB color space (IEC 61,966–2-1:1999) to the CIE Lab colorspace is carried out using the D65 illuminant function and aperture angle of “2” for the observer (van der Walt 2014).

YCrCb

This color space represents an image using luma, blue-difference, and red-difference chroma components. This representation is perceptually uniform. The luma component varies from 0 to 1. The blue-difference and red-difference components vary from –0.5 to 0.5. The digital version of the below RGB to YCrCb is used in this study.

Feature Extraction

Instead of using images directly we use feature extraction methods for speeding up training and inferencing, ease of model comprehension, improving stability of the model.

Color Histogram

This feature extraction method is based on making histogram for each individual channel and concatenating the bin-count values of every channel. 256 was the number of bins picked for RGB, Lab, YCrCb color space images. 360 was the number of bins picked for HSV images. This representation only has color information and it ignores spatial information. This is suitable for our application as we want to be sensitive to the color of the Chilli powder while being insensitive to its geometric distribution.

Channel Mean Value

This feature extraction method simply utilizes the mean channel intensities of the image. This is primarily used for providing comparison with color histogram features.

Classification Techniques

Classification is performed on the extracted features to determine the level of adulteration. The techniques used for classification are discussed below.

Cat Boost Classifier

Cat Boost (Dorogush et al. 2018) classifier is a open-source gradient boosted tree-based classifier method by Yandex. It is highly scalable making it suitable for big data applications. It is also one of the best-known algorithms for performing classification on tabular data. This algorithm is implemented using the Cat Boost library. Cat Boost classifier name consist of two words which are “Category” and “Boosting”. It works well with the various type of categorical data such as text, image, and audio. It uses ensemble-based learning technique named as Boosting to create an ensemble of well chosen strong and diverse models by combining all weak learners. It relies on the ordering principle and called target based with prior (TBS). It automatically converts categorical data into numerical data using various statistics on combination of categorical features and combination of categorical and numerical features without using any explicit pre-processing technique. It uses oblivious decision trees, where the same splitting rule is used across all intermediate nodes within the same level of tree. It is a robust classifier as it is less prone to overfitting. It also lowers the need for parameter tuning, providing great results using default parameters only. The Oblivious Decision Tree used by CatBoost Classifier has been shown in Fig. 3.

Random Forest Classifier

Random forest (Breiman 2001) classifier is a model based on an ensemble of decision trees. It uses bagging technique for creating the ensemble. In bagging technique, we have various base learners. Similarly, random forest consist of various decision trees as base learners. We pick some sample of rows (known as row sampling) and features (known as feature sampling) from the dataset various times with replacement to train various decision trees (known as base learners). Then, we use the majority vote on decision trees inference to calculate the prediction and accuracy of Radom Forest Algorithm. That’s why, Random Forest Classifier shows low variance while Decision Trees shows high variance. An implementation by the scikit-learn (Pedregosa et al. 2011) library is used by us. The working of Random Forest Classifier has been shown in Fig. 4.

Extra Trees Classifier

Extra Trees classifier (Geurts et al. 2006) is a variant of the random forest classifier which also shows low variance. Unlike random forest it uses entire dataset instead of subsampling with replacement. It also sometimes uses random splits instead of always picking locally optimum splits like random forest classifier. An implementation of this algorithm is provided by the scikit-learn (Pedregosa et al. 2011) library.

Extreme Gradient Boosting Classifier

Extreme gradient boosting (Chen and He 2017) is a technique similar to gradient boosting classifier but it utilizes a second-order Taylor approximation making it behave more like Newton–Raphson method rather than gradient descent in function space. It splits the tree level wise (depth wise) (Fig. 5). There are various hyper parameters that can be optimized. XGBoost library is used for implementing this classifier.

Light Gradient Boosting Machine Classifier

Light gradient boosting machine (Ke et al. 2017) classifier is fast, distributed, high performance gradient boosting-based model that uses gradient-based one side sampling and exclusive feature bundling for better performance. It splits the tree leaf wise (best first) (Fig. 6). Like XGBoost, there are various hyper parameters that can be optimized. It can lead to overfitting which can be minimized by defining the depth for splitting. The LightGBM library is used for implementing this algorithm.

Gradient Boosting Classifier

Gradient boosting classifier (Friedman 2001; Hastie et al. 2009) algorithm is similar to Ada Boost Classifier with the main difference being this algorithm has a fixed base estimator. It also has a differentiable loss function and training is done by utilizing the technique of gradient boosting. In Gradient boosting algorithm, suppose we have some function Y(β0, β1). We want to minimize this function by starting with some β0, β1 and keep changing β0, β1 to reduce Y(β0, β1) until we hopefully end up at a minimum.

Repeat until convergence

The implementation by scikit-learn library is used (Pedregosa et al. 2011).

K Nearest Neighbors Classifier

K nearest neighbors (KNN) (Fix and Hodges 1989) classifier is a lazy learner that picks the nearest k points and predicts the modal class of the k points. Different spatial metrics can be used for picking nearest points (Fig. 7). An implementation of KNN by scikit-learn library is used in this study.

This method may utilize any distance metric like Euclidean distance, Manhattan distance, Minkowski distance, etc. Distance between vectors \({x}_{i}\) and \({y}_{i}\) in a n-dimensional vector space is given for the above-mentioned metrics below,

Ridge Classifier

Ridge classifier (McDonald 2009) method converts output to range –1 to 1 and solves it as a ridge regression problem. One versus all approach is used for multi-class classification.

This model converts class 0 into value of –1 and keeps class 1 values unmodified. Then it applies Ridge regression to model the data (Fig. 8). Then it predicts class 1 for given input if the value of Ridge regression output is greater than 0, otherwise it predicts class 0. Typically, the parameters are optimized either analytically using a formula or estimated using methods based on gradient descent or Newton–Raphson method. Ridge classifier is realized by utilizing the scikit-learn library (Pedregosa et al. 2011).

Support Vector Machine Classifier

Support vector machine (SVM) classifier uses support vector machine and kernel trick for performing classification (Platt 2000). One versus rest technique is utilized for multi-classification. SVM implementation by scikit-learn is utilized in this study.

This technique utilizes a support vector \(w\) to describe a hyperplane that nearly separates the two classes (Fig. 9), in such a way that the margin between the two classes is large. The support vector is learned by minimizing the following cost function \(l\),

Here, \(\lambda\) is a hyperparameter that determines the trade-off between the size of the margin and the classification accuracy. \(b\) is some real constant, \(n\) denotes number of inputs, \({x}_{i}\) denotes ith input and \({y}_{i}\) denotes ith output. The loss function is typically minimized using L-BFGS technique.

Ada Boost Classifier

Ada Boost (Freund and Schapire 1997; Zhu et al. 2006) uses a technique called adaptive boosting for ensembling several decision stumps (weak-learners) into one strong learner. A decision tree with one depth is known as a decision stump. In boosting techniques, there are various sequential base learners, where incorrectly classified records by first base learner will pass to the next base learner, incorrectly classified records by second base learner will pass to the next base learner and so on. In Ada Boost algorithm (Fig. 10), all the base learners are decision trees and weights will decide the sequence of base learners. We calculate either entropy or Gini index of all decision stumps (for each feature) to calculate the updated weights and normalized weights to decide the sequence of all base learners. An implementation by scikit-learn library is utilized (Pedregosa et al. 2011).

Logistic Regression

Logistic regression (Kleinbaum and Klein 2002) performs binary classification using the logistic function applied on a linear regression model to get probabilities (Fig. 11). One versus rest approach is used for multi-class classification. This technique is utilized by us with the help of scikit-learn library (Pedregosa et al. 2011).

This technique models the log odds of binary classification as a linear combination of the inputs. The probability of true class \(p\left(x\right)\) associated with input \(x\) is computed as follows where \({\beta }_{0}\) and \({\beta }_{1}\) are trainable parameters,

The log loss function \(l\) is used for optimization and parameter estimation. Here, \({p}_{k}\) denotes the true class probability associated with input at index \(k\) and \({y}_{k}\) denotes actual class for input at index \(k\).

The parameters are usually solved by direct formula or gradient descent-based techniques.

Dummy Classifier

This classification technique predicts the modal class and is used as a baseline. An implementation by scikit-learn with the ‘prior’ strategy is utilized by us.

Quadratic Discriminant Analysis (Quadratic Classifier)

Quadratic discriminant analysis (Tharwat 2016) is a generalization of linear discriminant analysis. This technique is implemented using sckit-learn library in this study. Unlike linear discriminant analysis, this technique does not make the assumption that measurements of each class are identically distributed. This technique utilizes quadratic decision surfaces for performing classification (Fig. 12). This technique works for binary classification. It can be used for multi-class classification using one vs rest technique. This technique works by computing the likelihood ratio and comparing it against some threshold value \(t\); based on the comparison the prediction is made. Let \({\mu }_{0}\) and \({\mu }_{1}\) be the means associated with both classes and \({\Sigma }_{0}\) and \({\Sigma }_{1}\) be the covariance matrices associated with both the classes. Then the likelihood ratio \({l}_{r}\) is computed as follows,

The decision boundary we get when thresholding \({l}_{r}\) is quadratic in nature. Hence, we call it quadratic discriminant analysis.

Classification Performance Evaluation

Different classifiers are trained on 70% of the total data using threefold cross-validation. Then the classifiers are evaluated on the remaining 30% test data and metrics like accuracy, precision, recall, F1 score, Cohen’s kappa (κ), Matthew’s correlation coefficient (MCC), and ROC-AUC score (receiver operator characteristic curve – area under the curve score) are computed.

The above-mentioned metrics are computed using the formulas presented below, where TP denotes the number of true positive predictions, TN is the number of true negative predictions, FN is the number false negative predictions (i.e., type II error), FP is the number false positive predictions (i.e., type I error), P is the total number of positive tuples, and N is the total number of negative tuples. As we are dealing with multi-class classification one vs rest technique is utilized, i.e., the one particular class is treated as positive class and remaining classes are treated as negative class. This is done considering every class as a positive class and the final metrics are computed by averaging over metrics computed by treating one class as positive class or by a natural generalization.

The ROC curve plots the false positive rate (FPR) against the true positive rate (TPR) as the classification threshold is varied, where true positive rate is the same as recall and FPR is defined below. The area under this curve is the AUC score.

Regression Techniques

Different regression models are trained to predict the percentage of chilli powder present in the given observation. Once again, instead of using the image directly the extracted features from the image are used for this purpose.

Ada Boost Regressor

Ada boost regressor (Drucker 1997) uses a technique called adaptive boosting for ensembling several decision stumps (weak-learners) into one strong learner. A decision tree with one depth is known as a decision stump. It uses ensemble-based learning technique named as Boosting to create an ensemble of well chosen strong and diverse models by combining all weak learners. An implementation by scikit-learn library is utilized (Pedregosa et al. 2011).

Gradient Boosting Regressor

Gradient boosting regression algorithm (Friedman 2001) starts by making a single leaf, instead of a tree or stump. This leaf represents an initial guess for the target feature values of all of the sample. When we predict a continuous value, the first guess is the average value. Then, Gradient Boosting algorithm builds a tree. Like AdaBoost, this tree is based on the errors made by the previous tree. But unlike Adaboost, this tree is usually larger than a stump. Gradient Boost still restricts the size of the tree. Thus, like AdaBoost, gradient boost builds fixed sized trees based on the previous tree’s errors. Also, it scales all trees by the same amount. An implementation by scikit-learn library is utilized (Pedregosa et al. 2011).

Light Gradient Boosting Machine Regressor

This is an enhancement of the gradient boosting regressor algorithm. LightGBM Regressor is fast, distributed, high performance gradient boosting-based model that uses gradient-based one side sampling and exclusive feature bundling for better performance (Ke et al. 2017). It splits the tree leaf wise (best first) (Fig. 6). Like XGBoost, there are various hyper parameters that can be optimized. It can lead to overfitting which can be minimized by defining the depth for splitting. The LightGBM library is used for implementing this algorithm.

Extreme Gradient Boosting Regressor

Extreme gradient boosting (Chen and He 2017) is a technique similar to gradient boosting regression algorithm but it utilizes a second-order Taylor approximation making it behave more like Newton–Raphson method rather than gradient descent in function space. It splits the tree level wise (depth wise) (Fig. 5). There are various hyper parameters that can be optimized. XGBoost library is used for implementing this classifier.

Random Forest Regressor

Random forest regressor (Breiman 2001) is a model based on an ensemble of decision trees. It uses bagging technique for creating the ensemble. In bagging technique, we have various base learners. Similarly, random forest consists of various decision trees as base learners. We pick some sample of rows (known as row sampling) and features (known as feature sampling) from the dataset various times with replacement to train various decision trees (known as base learners). Then, we use the majority vote on decision trees inference to calculate the prediction and accuracy of radom forest algorithm. An implementation by the scikit-learn (Pedregosa et al. 2011) library is used by us. The working of random forest regressor has been shown in Fig. 4.

Extra Trees Regressor

Extra Trees regressor (Geurts et al. 2006) is a variant of the random forest regressor which also shows low variance. Unlike random forest it uses entire dataset instead of subsampling with replacement. It also sometimes uses random splits instead of always picking locally optimum splits like random forest regressor. An implementation of this algorithm is provided by the scikit-learn (Pedregosa et al. 2011) library.

Cat Boost Regressor

Cat boost regressor (Dorogush et al. 2017) is a a open-source gradient boosted regression method by Yandex. It is highly scalable making it suitable for big data applications. It is also one of the best-known algorithms for performing regression on tabular data. This algorithm is implemented using the Cat Boost library. Cat Boost regressor name consist of two words which are “Category” and “Boosting.” It works well with the various type of categorical data such as text, image, and audio. It uses ensemble-based learning technique named as Boosting to create an ensemble of well chosen strong and diverse models by combining all weak learners. It automatically converts categorical data into numerical data using various statistics on combination of categorical and numerical features without using any explicit pre-processing technique.

K Nearest Neighbors Regressor

This technique utilizes a lazy regressor that predicts the mean (or weighted mean in weighted nearest neighbors) of the k nearest neighbors (Song et al. 2017). It has higher prediction power as compare to linear regression as it takes care of the non-linearity. Implementation from scikit-learn of the KNN regressor algorithm is used in this study.

Linear Regressor

This regression technique works by fitting a line of best fit to the data. It uses the mean squared error function as the loss function (Schneider et al. 2010). The mean squared error loss (L) is defined as follows,

where N is the total number of training points, \({y}_{i}\) and \(\widehat{{y}_{i}}\) are the actual output and the estimated output.

Huber Regressor

This regressor is similar to a linear regressor however it uses Huber loss instead of standard loss of mean squared error making it more robust when dealing with outliers (Huber and Ronchetti 2009). Scikit-learn-based implementation is used in this study. The Huber loss function on residual \(a\) with parameter \(\delta\) is defined as follows:

Bayesian Ridge Regressor

This technique adds to the basic linear regressor model an additional L2-regularization term in the loss function (MacKay 1992). It also uses Bayesian techniques for determining priors and hyperparameter selection. Scikit-learn-based Bayesian ridge regression implementation is used in this study. L2-regularization term is mathematically defined as follows,

where \({w}_{i}\) denotes the weight of the ith input parameter out of N total input parameters.

Lasso Regressor

This technique adds an L1-regularization term to the loss function of basic linear regression (Ranstam and Cook 2018). This gives it the capability making the contributions of certain terms zero. Scikit-learn-based Lasso regression implementation is used in this study. The L1-regularization term is defined similarly to the L2-regularization term as follows,

Elastic Net Regressor

This technique utilizes both L1 and L2 regularization with the basic linear regression model (Hans 2012). This gives it capabilities of Lasso and Ridge regression to some degree. Scikit-learn-based elastic net regression is used in this study.

Lasso Least Angle Regressor

This technique utilizes the least angle regressor algorithm in lasso mode (Januaviani et al. 2019). This provides some guarantees on convergence and is usually preferred over least angle regressor. Scikit-learn-based Lasso least angle regressor is used in this study.

Least Angle Regressor

Least angle regressor (LAR) algorithm is a forward-stepwise algorithm for regression related to Lasso regression (Efron et al. 2004). This algorithm works stage-wise and does not provide any guarantees on convergence. Scikit-learn-based least angle regressor is used in this study.

Dummy Regressor

This regressor predicts the arithmetic mean of the target class. This technique is used only as a baseline.

Regression Performance Evaluation

Different regressors are trained on 70% of the total data using threefold cross-validation. Then the regressors are evaluated on the remaining 30% test data and metrics like MAE (mean absolute error), MAPE (mean absolute percentage error), MSE (mean squared Error), R2 score (Pearson correlation coefficient R squared), RMSE (root mean squared error), and RMSLE (root mean squared logarithmic error) are computed.

The above-mentioned metrics are defined below, where N is the number of predictions, ȳ is the mean of actual values, yi is the ith actual value and ŷ is the ith predicted value.

Model Development Process

To create the machine learning-based models, first we created the dataset by capturing the high-quality Images of red chilli powder adulteration with red brick powder at different proportions. We constructed 12 classes consist of 0%, 5%, 10%, 15%, 20%, 25%, 30%, 35%, 40%, 45%, 50%, and 100% adulterant. The dataset contains 240 images (20 images per class) spread across 12 classes. We split each 4 × zoom image into 4 segments and applied various image color space filters (RGB, HSV, Lab, and YCbCr). Then, extracted features using mean and histogram feature extraction techniques. We used various classification models to classify the adulteration percentage class. We trained Extra Trees Classifier, CatBoost Classifier, Random Forest Classifier, Light Gradient Boosting Machine, Gradient Boosting Classifier, K Neighbors Classifier, Naive Bayes, Decision Tree Classifier, Ridge Classifier, SVM—Linear Kernel, Linear Discriminant Analysis, Logistic Regression, Ada Boost Classifier, Quadratic Discriminant Analysis, Dummy Classifier, and Extreme Gradient Boosting Classifier. Then, we evaluated all the classification models by computing various classification model performance metrics.

We also used regression models to predict the percentage of adulteration in an image. We trained extra trees regressor, K neighbors regressor, CatBoost regressor, random forest regressor, gradient boosting regressor, extreme gradient boosting, light gradient boosting machine, AdaBoost regressor, least angle regression, Bayesian ridge, ridge regression, linear regression, lasso regression, elastic net, Huber regressor, decision tree regressor, orthogonal matching pursuit, Lasso least angle regression, dummy regressor, and passive aggressive regressor to determine the percentage of red brick powder adulteration in red chilli powder using an image. Then, we evaluated all the regression models by computing various regression model performance metrics. We used various python libraries for implementing these machine learning models including scikit-learn, pandas, NumPy, scikit-image, XGBoost, LGBMBoost, CatBoost, and PyCaret. We used GoogleColab to train all the machine learning models. Also, threefold cross-validation was used during training process for the model to generalize better and prevent overfitting. The results of this process are presented and discussed in the following section. The work flow adopted to detect adulterated chilli powder and quantification of adulteration has been shown in Fig. 13. We have modeled our dataset for performing adulteration quantification. The link to the dataset for adulterated red chilli powder with brick powder is https://doi.org/10.17632/cpm7y44746.1. The code which was used for performing adulteration quantification is available at https://github.com/arun5309/chilli_adulteration_quantification.

Result and Discussion

The overall experimental results indicate that the combination of color space, feature extraction and prediction techniques adopted in this study yield good results and they do not take too much time to train or make predictions. The experimental results are discussed in detail in the remaining part of this section.

From the outcomes of the experiments carried out summarized in Tables 2–17 one can see that histogram feature extraction technique consistently outperformed mean feature extraction techniques in majority of cases. With approximately a difference of 0.08–0.3 in term of R2 score for regression and by around 30% difference in classification accuracy. Also, regression techniques yield better and more useful prediction than classification techniques. This being due to classification techniques treating all classes representing adulteration ratio as independent and unrelated, in contrast to regression treating it on a spectrum. This is why classification models have a prediction accuracy around 90% and regression models can explain about 98% of the variance as inferred from the R2 score.

To predict the percentage of adulteration, we found that Extra Tree regressor was best performing with an R2 score of 0.9812 and 0.9837 for YCbCr and Lab color spaces histogram features respectively. The YCbCr and Lab color spaces have similar performance characteristics in case of regression for histogram features, with around 0.98 R2 score as seen from Tables 11 and Table 13. The HSV and RGB color spaces perform slightly worse in regression when using histogram feature extraction technique. Around, 0.97 and 0.96 R2 respectively as seen from Tables 15 and Table 17. Performance of RGB and YCbCr color space is somewhat worse than Lab for channel mean value-based features. With R2 scores of 0.87, 0.86, and 0.9 respectively as seen from Tables 16, 10, and 12. However, HSV perform significantly worse than other color spaces for regression using channel mean value features. With an R2 of 0.61 as seen in Table 14 R2 score of regression methods with different color spaces for grading chilli powder images is reported in Fig. 14.

To classify the adulteration class, we found that Cat Boost classifier was best performing with an Accuracy of 0.9049 and 0.908 for YCbCr and HSV color spaces histogram features respectively. Also, YCbCr and Lab have similar performance characteristics. With a classification accuracy score of around 60% for channel mean value features and 90% score for histogram-based features as seen from Tables 2, 3, 4, and 5. HSV has similar performance Charteristics to YCbCr and Lab when it comes to histogram features (accuracy score of around 90%) but it performs significantly worse when it comes to channel mean value features (accuracy score of 44%) as seen from Table 6 and Table 7. RGB performs somewhat worse than other color spaces when histogram features (around 87% classification accuracy from Table 8) are used but surprisingly outperforms YCbCr and Lab color spaces by a small margin (approximately 63% classification accuracy from Table 9) when channel mean value features are used. Classification accuracy of classifiers with different color spaces for grading chilli powder images is reported in Fig. 15. Figure 16 shows the confusion matrix related to the image grading of red chilli powder adulteration with red brick powder. Most of the images are predicted correctly by the classifier, and a few images were misclassified, with a difference of 5% adulteration in actual and predicted classes.

One can infer from Tables 10, 11, 12, 13, 14, 15, 16, and 17 the tree-based algorithms and KNN are the regressors with the best performance across color space and feature extraction methods. There are only minor variations in their performance characteristics and achieving best scores on all the regression experiments. Other regressors perform somewhat or significantly worse than the regressors mentioned previously.

Once again, the tree-based algorithms perform well in classification algorithms as seen from Tables 2 to Table 9. With Ada boost being a notable exception, which performs slightly worse than SVM. These results aren’t surprising as these algorithms have great success when dealing with tabular data. KNN, SVM, logistic regression and naive Bayes algorithms lag slightly behind the tree-based algorithms in terms of performance. Quadratic and linear discriminant analysis classifiers had a good success when it came to classification based on channel mean value-based features often outperforming the classifiers (including tree-based ones) mentioned earlier.

Most of these algorithms are fast to train and can be trained in a few seconds on datasets of this size and hardware configuration used in this study as seen from Tables 2–17. This indicates that these algorithms can be deployed on low-end devices in real-time scenarios and these would still yield decent results. However, CatBoost, LightGBM, and gradient boosted trees took much longer to train than other algorithms. These anomalies can be explained by stating that these three algorithms do not utilize hardware acceleration (which they were designed to take advantage of) in our benchmarks rather than having inefficiencies in the design of these algorithms.

The methods employed in this study perform nearly as well as other methods employed in the literature (Jahanbakhshi et al. 2021b), while consuming little computational resources for both training and inferencing. This show cases the practicality and utility of the methods employed in this study especially in situations with low computational resources and lack of advanced scientific equipment. For example, performing adulteration quantification of chilli powder using a low end smartphone.

Tree-based techniques outperformed most other techniques in both regression and classification. KNN closely followed the tree-based techniques in terms of performance in both regression and classification. SVM, logistic regression, naive Bayes classifier, and linear and quadratic discriminant analysis had good performance in case of classification, although discriminant analysis-based methods were only successful when using channel mean value-based features for classification.

We also saw that regression-based techniques were more successful than classification-based techniques for solving this problem. The reason being strictly grouping percentage of classification into bins isn’t a very good idea in practice and for this particular application small amounts of errors are acceptable in practice. This is clear when we compare Tables 10–17 with Tables 2–9.

The Lab and YCbCr color spaces had a good performance overall and produced stable results. The RGB color space had moderate performance and stability although it produced a few surprisingly good results. The HSV had performance comparable to that of Lab and YCbCr but it was the most unstable among the four color spaces and had a few performance dips. This clearly highlights that the choice of color space is extremely important when performing analysis on chilli powder. As the distinction between chilli powder and brick powder is made primarily based on color.

Overall, from the results of extensive experimentation carried out in this study one can infer that histogram-based feature technique outperforms channel mean value-based feature extraction, YCbCr and Lab color spaces outperform HSV and RGB, tree-based algorithms have best performance characteristics across the board and training time isn’t a significant factor for datasets of this size with modern computational resources. It is also clear that regression techniques are more suitable than classification for quantifying amount of adulteration in red chilli powder. It is clear from these results that the approach taken in this study is a promising one for adulteration quantification. However, further research is needed to extend this result into adulteration quantification problems on other foods.

Conclusion

Many models and features extraction techniques have been compared and it is clear that these techniques can be used for efficiently in the detecting and quantifying the percentage of brick powder present in given chilli powder sample. Utilizing feature extraction techniques instead of utilizing the entire image makes our method suitable for large-scale and mobile application. The use of simple statistical machine learning algorithms instead of deep learning algorithms makes the model size smaller as well. In this study many image processing and ML techniques were employed and compared for performing adulteration quantification of chilli powder. To classify the adulteration class, we found that Cat Boost classifier was best performing with an accuracy of 0.9049 and 0.908 for YCbCr and HSV color spaces histogram features respectively. To predict the percentage of adulteration, we found that Extra Tree regressor was best performing with an R2 score of 0.9812 and 0.9837 for YCbCr and Lab color spaces histogram features respectively. The results achieved were comparable to that of other results found in the literature employing DL (deep learning) methods. Evidencing that the methodology adopted in this study is viable, efficient, and especially suited for use in low resource and mobile environments. In the future a mobile application can be made for utilizing this technology in a more accessible manner.

Data Availability

All the data used in the manuscript are available in the tables and figures.

Code Availability

Not applicable.

References

Al-Awadhi MA, Deshmukh RR (2021) Detection of adulteration in coconut milk using infrared spectroscopy and machine Learning. Sana'a, Yemen, In: 2021 Int Conf Modern Trends Inf Commun Technol Ind (MTICTI) 1–4. https://doi.org/10.1109/MTICTI53925.2021.9664764

Al-Awadhi MA, Deshmukh RR (2022) Honey Adulteration detection using hyperspectral imaging and machine learning. Vijayawada, India, In: 2022 2nd Int Conf Artif Intell Signal Proc (AISP) 1–5. https://doi.org/10.1109/AISP53593.2022.9760585

Anami BS, Malvade NN, Palaiah S (2019) Automated recognition and classification of adulteration levels from bulk paddy grain samples. Inf Process Agric 6:47–60. https://doi.org/10.1016/j.inpa.2018.09.001

Ayob O, Hussain PR, Suradkar P et al (2021) Evaluation of chemical composition and antioxidant activity of Himalayan red chilli varieties. LWT 146:111413. https://doi.org/10.1016/j.lwt.2021.111413

Bansal S, Singh A, Mangal M et al (2017) Food adulteration: sources, health risks, and detection methods. Crit Rev Food Sci Nutr 57:1174–1189. https://doi.org/10.1080/10408398.2014.967834

Bao P, Zhang L (2003) Noise reduction for magnetic resonance images via adaptive multiscale products thresholding. IEEE Trans Med Imaging 22:1089–1099. https://doi.org/10.1109/TMI.2003.816958

Bianchini D, De Antonellis V, Franceschi N, Melchiori M (2016) PREFer: a prescription-based food recommender system. Comput Stand Interfaces 54:64–75. https://doi.org/10.1016/j.csi.2016.10.010

Boateng AA, Sumaila S, Lartey M et al (2022) Evaluation of chemometric classification and regression models for the detection of syrup adulteration in honey. LWT 163:113498. https://doi.org/10.1016/j.lwt.2022.113498

Breiman L (2001) Random Forests. Mach Learn 45:5–32. https://doi.org/10.1023/A:1010933404324

Brighty SPS, Harini GS, Vishal N (2021) Detection of adulteration in fruits using machine learning. Chennai, India, In: 2021 Sixth Int Conf Wirel Commun, Signal Proc Netw (WiSPNET) 37–40. https://doi.org/10.1109/WiSPNET51692.2021.9419402

Calle JLP, Barea-Sepúlveda M, Ruiz-Rodríguez A et al (2022a) Rapid Detection and quantification of adulterants in fruit juices using machine learning tools and spectroscopy data. Sensors (Basel) 22:. https://doi.org/10.3390/s22103852

Calle JLP, Ferreiro-González M, Ruiz-Rodríguez A et al (2022b) Detection of adulterations in fruit juices using machine learning methods over FT-IR Spectroscopic data. Agron 12. https://doi.org/10.3390/agronomy12030683

Carolina CPD, David NTD (2014) Classification of oranges by maturity, using image processing techniques. In: 2014 III Int Congr Eng Mechatron Autom (CIIMA) 1–5. https://doi.org/10.1109/CIIMA.2014.6983466

Chen T, He T (2017) Xgboost: extreme gradient boosting. R package version 0.4-2 1, no. 4:1–4

Dorogush A, Gulin A, Gusev G et al (2017) Fighting biases with dynamic boosting

Dorogush AV, Ershov V, Gulin A (2018) CatBoost: gradient boosting with categorical features support. ArXiv abs/1810.1

Drucker H (1997) Improving Regressors Using Boosting Techniques. Proc 14th Int Conf Mach Learn

Efron B, Hastie T, Johnstone I, Tibshirani R (2004) Least angle regression. Ann Stat 32:407–499. https://doi.org/10.1214/009053604000000067

Fatima N, Areeb QM, Khan IM, Khan MM (2021) Siamese network-based computer vision approach to detect papaya seed adulteration in black peppercorns. J Food Process Preserv n/a:e16043. https://doi.org/10.1111/jfpp.16043

Fayyazi S, Abbaspour-Fard M, Rohani A, et al (2017) Identification and classification of three iranian rice varieties in mixed bulks using image processing and MLP neural network. Int J Food Eng 13:. https://doi.org/10.1515/ijfe-2016-0121

Fix E, Hodges JL (1989) Discriminatory analysis. nonparametric discrimination: consistency properties. Int Stat Rev / Rev Int Stat 57:238–247

Freund Y, Schapire RE (1997) A Decision-Theoretic generalization of on-line learning and an application to boosting. J Comput Syst Sci 55:119–139. https://doi.org/10.1006/jcss.1997.1504

Friedman JH (2001) Greedy function approximation: A grADIENT BOOsting machine. Ann Stat 29:1189–1232. https://doi.org/10.1214/aos/1013203451

Gao W, Zhang X, Yang L, Liu H (2010) An improved Sobel edge detection. In: 2010 3rd Int Conf Comput Sci Inf Technol 67–71. https://doi.org/10.1109/ICCSIT.2010.5563693

Geurts P, Ernst D, Wehenkel L (2006) Extremely randomized trees. Mach Learn 63:3–42. https://doi.org/10.1007/s10994-006-6226-1

Goyal K, Kumar P, Verma K (2022) Food Adulteration detection using artificial intelligence: a systematic review. Arch Comput Methods Eng 29:397–426. https://doi.org/10.1007/s11831-021-09600-y

Hans C (2012) Elastic Net regression modeling with the orthant normal prior. J Am Stat Assoc 106:1383–1393. https://doi.org/10.1198/jasa.2011.tm09241

Hastie T, Tibshirani R, Friedman J (2009) Overview of supervised learning. In: Hastie T, Tibshirani R, Friedman J (eds) The Elements of Statistical Learning: Data Mining, Inference, and Prediction. Springer, New York, New York, NY, pp 9–41

Hendrawan Y, Widyaningtyas S, Fauzy MR (2022) Deep Learning to detect and classify the purity level of Luwak Coffee green beans. Pertanika J Sci Technol 30:1–18

Huang B, Liu J, Jiao J et al (2022) Applications of machine learning in pine nuts classification. Sci Rep 12:8799. https://doi.org/10.1038/s41598-022-12754-9

Huang W, Guo L, Kou W et al (2022) Identification of adulterated milk powder based on convolutional neural network and laser-induced breakdown spectroscopy. Microchem J 176:107190. https://doi.org/10.1016/j.microc.2022.107190

Huber PJ, Ronchetti EM (2009) Robust Statistics concomitant scale estimate. Wiley

Hussein WB, Moaty AA, Hussein MA, Becker T (2011) A novel edge detection method with application to the fat content prediction in marbled meat. Pattern Recognit 44:2959–2970. https://doi.org/10.1016/j.patcog.2011.04.028

Jahanbakhshi A, Abbaspour-Gilandeh Y, Heidarbeigi K, Momeny M (2021) Detection of fraud in ginger powder using an automatic sorting system based on image processing technique and deep learning. Comput Biol Med 136:104764. https://doi.org/10.1016/j.compbiomed.2021.104764

Jahanbakhshi A, Abbaspour-Gilandeh Y, Heidarbeigi K, Momeny M (2021) A novel method based on machine vision system and deep learning to detect fraud in turmeric powder. Comput Biol Med 136:104728. https://doi.org/10.1016/j.compbiomed.2021.104728

Jamaluddin F, Noranizan MA, Mohamad Azman E et al (2022) A review of clean-label approaches to chilli paste processing. Int J Food Sci Technol 57:763–773. https://doi.org/10.1111/ijfs.15293

Januaviani TMA, Gusriani N, Joebaedi K et al (2019) The best model of LASSO with the LARS (least angle regression and shrinkage) Algorithm Using Mallow’s Cp

Jin H, Wang Y, Lv B et al (2022) Rapid detection of avocado oil adulteration using low-Field nuclear magnetic resonance. Foods 11. https://doi.org/10.3390/foods11081134

Kamruzzaman M, Makino Y, Oshita S (2016) Rapid and non-destructive detection of chicken adulteration in minced beef using visible near-infrared hyperspectral imaging and machine learning. J Food Eng 170:8–15. https://doi.org/10.1016/j.jfoodeng.2015.08.023

Ke G, Meng Q, Finley T et al (2017) LightGBM: A highly efficient gradient boosting decision tree. In: Guyon I, Luxburg U Von, Bengio S, et al. (eds) Advances in Neural Information Processing Systems. Curran Associates, Inc

Khan N, Ahmed MJ, Shah SZA (2019) Comparative analysis of mineral content and proximate composition from chilli pepper (Capsicum annuum L.) germplasm. Pure Appl Biol 8. https://doi.org/10.19045/bspab.2019.80075

Khosa I, Pasero E (2014) Defect detection in food ingredients using multilayer perceptron neural network. In: 2014 World Symp Comput Appl Res WSCAR 2014

Kleinbaum DG, Klein M (2002) Important special cases of the logistic model. In: Kleinbaum DG, Klein M (eds) Logistic Regression: A Self-Learning Text. Springer, New York, New York, NY, pp 39–72

Kobek JA (2017) Vision based model for identification of adulterants in milk. http://su-plus.strathmore.edu/handle/11071/5652

Lakhwani K, Murarka P, Narendra M (2015) Color space transformation for visual enhancement of noisy color image. IET Image Process

Lanjewar MG, Morajkar PP, Parab J (2022) Detection of tartrazine colored rice flour adulteration in turmeric from multi-spectral images on smartphone using convolutional neural network deployed on PaaS cloud. Multimed Tools Appl 81:16537–16562. https://doi.org/10.1007/s11042-022-12392-3

Lapcharoensuk R, Danupattanin K, Kanjanapornprapa C, Inkawee T (2020) Combination of NIR spectroscopy and machine learning for monitoring chili sauce adulterated with ripened papaya. E3S Web Conf 187:4001. https://doi.org/10.1051/e3sconf/202018704001

Li Y, Huang J-B, Ahuja N, Yang M-H (2016) Deep joint image filtering BT - Computer Vision – ECCV 2016. In: Leibe B, Matas J, Sebe N, Welling M (eds). Springer International Publishing, Cham, 154–169

Lim DK, Long NP, Mo C et al (2017) Combination of mass spectrometry-based targeted lipidomics and supervised machine learning algorithms in detecting adulterated admixtures of white rice. Food Res Int 100:814–821. https://doi.org/10.1016/j.foodres.2017.08.006

Ma J, Sun D-W, Qu J-H et al (2016) Applications of computer vision for assessing quality of agri-food products: a review of recent research advances. Crit Rev Food Sci Nutr 56:113–127. https://doi.org/10.1080/10408398.2013.873885

MacKay DJC (1992) Bayesian interpolation. Neural Comput 4:415–447. https://doi.org/10.1162/neco.1992.4.3.415

Manasha S, Janani M (2016) Food Adulteration and its problems (Intentional, Accidental and Natural Food Adulteration). Int J Res Financ Int J Res Financ Mark 6:2231–5985

McDonald GC (2009) Ridge regression. WIREs. Comput Stat 1:93–100. https://doi.org/10.1002/wics.14

Mi S, Zhang X, Wang Y et al (2022) Effect of different genotypes on the fruit volatile profiles, flavonoid composition and antioxidant activities of chilli peppers. Food Chem 374:131751. https://doi.org/10.1016/j.foodchem.2021.131751

Nandi C (2014) Computer Vision Based Mango Fruit Grading System. https://doi.org/10.15242/IIE.E1214004

Nascimento CF, Santos PM, Pereira-Filho ER, Rocha FRP (2017) Recent advances on determination of milk adulterants. Food Chem 221:1232–1244. https://doi.org/10.1016/j.foodchem.2016.11.034

Pedregosa F, Varoquaux G, Gramfort A et al (2011) Scikit-learn: machine learning in python. J Mach Learn Res 12:2825–2830

Pinheiro Claro Gomes W, Gonçalves L, Barboza da Silva C, Melchert WR (2022) Application of multispectral imaging combined with machine learning models to discriminate special and traditional green coffee. Comput Electron Agric 198:107097. https://doi.org/10.1016/j.compag.2022.107097

Platt J (2000) Probabilistic outputs for support vector machines and comparisons to regularized likelihood methods. Adv Large Margin Classif 10

Pourreza A, Pourreza H, Abbaspour-Fard M-H, Sadrnia H (2012) Identification of nine Iranian wheat seed varieties by textural analysis with image processing. Comput Electron Agric 83:102–108. https://doi.org/10.1016/j.compag.2012.02.005

Pradana-López S, Pérez-Calabuig AM, Cancilla JC, Torrecilla JS (2022) Standard photographs convolutionally processed to indirectly detect gluten in chickpea flour. J Food Compos Anal 110:104547. https://doi.org/10.1016/j.jfca.2022.104547

Pratibha N, Hemlata M, Krunali M (2017) Analysis and identification of rice granules using image processing and neural network. International Journal of Electronics and Communication Engineering 10:25–33

Ranstam J, Cook JA (2018) LASSO regression. Br J Surg 105:1348

Rateni G, Dario P, Cavallo F (2017) Smartphone-based food diagnostic technologies: a review. Sensors (Basel) 17:. https://doi.org/10.3390/s17061453

Rong D, Rao X, Ying Y (2017) Computer vision detection of surface defect on oranges by means of a sliding comparison window local segmentation algorithm. Comput Electron Agric 137:59–68. https://doi.org/10.1016/j.compag.2017.02.027

Ropodi AI, Pavlidis DE, Mohareb F et al (2015) Multispectral image analysis approach to detect adulteration of beef and pork in raw meats. Food Res Int 67:12–18. https://doi.org/10.1016/j.foodres.2014.10.032

Sadhukhan T, Chatterjee S, Das RK et al (2019) Efficient Removal of noise from an image using HSV filtering. In: 2019 Global Conf Adv Technol (GCAT), Bangalore, pp 1–4

Salim NOM, Zeebaree SRM, Sadeeq MAM et al (2021) Study for food recognition system using deep learning. J Phys Conf Ser 1963:12014. https://doi.org/10.1088/1742-6596/1963/1/012014

Sarkar T, Mukherjee A, Chatterjee K et al (2022) Edge detection aided geometrical shape analysis of Indian gooseberry (Phyllanthus emblica) for freshness classification. Food Anal Methods. https://doi.org/10.1007/s12161-021-02206-x

Schneider A, Hommel G, Blettner M (2010) Linear regression analysis: part 14 of a series on evaluation of scientific publications. Dtsch Arztebl Int 107:776–782. https://doi.org/10.3238/arztebl.2010.0776

Shafiee S, Polder G, Minaei S et al (2016) Detection of honey adulteration using hyperspectral imaging. IFAC-PapersOnLine 49:311–314. https://doi.org/10.1016/j.ifacol.2016.10.057

Soltani Firouz M, Rashvand M, Omid M (2021) Rapid identification and quantification of sesame oils adulteration using low frequency dielectric spectroscopy combined with chemometrics. LWT 140:110736. https://doi.org/10.1016/j.lwt.2020.110736

Song Y, Liang J, Lu J, Zhao X (2017) An efficient instance selection algorithm for k nearest neighbor regression. Neurocomputing 251:26–34. https://doi.org/10.1016/j.neucom.2017.04.018

Sowmya N, Ponnusamy V (2021) Development of spectroscopic sensor system for an IoT application of adulteration identification on milk using machine learning. IEEE Access 9:53979–53995. https://doi.org/10.1109/ACCESS.2021.3070558

Srinath K, Kiranmayee AH, Bhanot S, Panchariya PC (2022) Detection of palm oil adulteration in sunflower oil using ATR-MIR spectroscopy coupled with chemometric algorithms. Mapan. https://doi.org/10.1007/s12647-022-00558-1

Subashini P (2010) Comparison of filters used for underwater image pre-processing. Int J Comput Sci Netw Secur 10:58–64

Tharwat A (2016) Linear vs. quadratic discriminant analysis classifier: a tutorial. Int J Appl Pattern Recognit 3:145. https://doi.org/10.1504/IJAPR.2016.079050

van der Walt S, Schönberger JL, Nunez-Iglesias J, Boulogne F, Warner JD, Yager N, Gouillart E, Yu T; scikit-image contributors (2014) scikit-image: Image processing in Python. PeerJ 2:e453. https://doi.org/10.7717/peerj.453

VijaykumarVanathiKanagasabapathy VRPTP (2010) Fast and efficient algorithm to remove gaussian noise in digital images. IAENG Int J Comput Sci 37:78–84

Vincent L (1993) Morphological grayscale reconstruction in image analysis: applications and efficient algorithms. IEEE Trans Image Process 2:176–201. https://doi.org/10.1109/83.217222

Zhang Y, Zheng M, Zhu R, Ma R (2022) Detection of adulteration in mutton using digital images in time domain combined with deep learning algorithm. Meat Sci 192:108850. https://doi.org/10.1016/j.meatsci.2022.108850

Zhu J, Rosset S, Zou H, Hastie T (2006) Multi-class AdaBoost. Stat Interface 2:. https://doi.org/10.4310/SII.2009.v2.n3.a8

Acknowledgements

We acknowledge Mr. Aniket Ghosh, Mr. Gopal Roy, Mr. Prasun Kumar Saha final year student (2022 batch) of department of Food Processing Technology and Sri Snehashis Guha, PIC Malda polytechnic, Malda for their support to conduct this study. Thanks to GAIN (Axencia Galega de Innovación) for supporting this research (grant number IN607A2019/01).

Funding

Funding for open access charge: Universidade de Vigo/CISUG.

Author information

Authors and Affiliations

Contributions

T.R.: conceptualization, methodology, investigation, validation, formal analysis, writing-original draft preparation; T.C.: methodology, investigation, validation, formal analysis, contribution in writing; V.R.A.: methodology, investigation, validation, formal analysis, and contribution in writing in relevant section; M.K.: data analysis, writing-review and editing, final draft supervision, and monitoring; M.A.S.: review and editing, final draft supervision, and monitoring; J.M.L.: review and editing, final draft supervision and monitoring. All authors read and approved the final manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethics Approval

Not applicable.

Consent to Participate

All authors has given their full consent to participate.

Consent for Publication

All authors has given their full consent for publication.

Conflict of Interest

Tanmay Sarkar declares that she has no conflict of interest. Tanupriya Choudhury declares that she has no conflict of interest. Nikunj Bansal declares that he has no conflict of interest. VR Arunachalaeshwaran declares that she has no conflict of interest. Mars Khayrullin declares that she has no conflict of interest. Mohammad Ali Shariati declares that he has no conflict of interest. Jose Manuel Lorenzo declares that he has no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Sarkar, T., Choudhury, T., Bansal, N. et al. Artificial Intelligence Aided Adulteration Detection and Quantification for Red Chilli Powder. Food Anal. Methods 16, 721–748 (2023). https://doi.org/10.1007/s12161-023-02445-0

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12161-023-02445-0