Abstract

Among different functional data analyses, clustering analysis aims to determine underlying groups of curves in the dataset when there is no information on the group membership of each curve. In this work, we develop a novel variational Bayes (VB) algorithm for clustering and smoothing functional data simultaneously via a B-spline regression mixture model with random intercepts. We employ the deviance information criterion to select the best number of clusters. The proposed VB algorithm is evaluated and compared with other methods (k-means, functional k-means and two other model-based methods) via a simulation study under various scenarios. We apply our proposed methodology to two publicly available datasets. We demonstrate that the proposed VB algorithm achieves satisfactory clustering performance in both simulation and real data analyses.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Functional data analysis (FDA), term first coined by Ramsay and Dalzell (1991), deals with the analysis of data that are defined on some continuum such as time. Theoretically, data are in the form of functions, but in practice they are observed as a series of discrete points representing an underlying curve. Ramsay and Silverman (2005) establish a foundation for FDA on topics including smoothing functional data, functional principal components analysis and functional linear models. Ramsay et al. (2009) provide a guide for analyzing functional data in R and Matlab using publicly available datasets. Wang et al. (2016) present a comprehensive review of FDA, in which clustering and classification methods for functional data are also discussed. Functional data analysis has been applied to various research areas such as energy consumption (Lenzi et al. 2017; De Souza et al. 2017; Franco et al. 2023), rainfall data visualization (Hael et al. 2020), income distribution (Hu et al. 2020), spectroscopy (Dias et al. 2015; Yang et al. 2021; Frizzarin et al. 2021), and Covid-19 pandemic (Boschi et al. 2021; Souza et al. 2023; Collazos et al. 2023), to mention a few.

Cluster analysis of functional data aims to determine underlying groups in a set of observed curves when there is no information on the group label of each curve. As described in Jacques and Preda (2014), there are three main types of methods used for functional data clustering: dimension reduction-based (or filtering) methods, distance-based methods, and model-based methods. Functional data generally belongs to the infinite-dimensional space, making those clustering methods for finite-dimensional data ineffective. Therefore, dimension reduction-based methods have been proposed to solve this problem. Before clustering, a dimension reduction step (also called filtering in James and Sugar, 2003) is carried out by the techniques including spline basis function expansion (Tarpey and Kinateder 2003) and functional principal component analysis (Jones and Rice 1992). Clustering is then performed using the basis expansion coefficients or the principal component scores, resulting in a two-stage clustering procedure. Distance-based methods are the most well-known and popular approaches for clustering functional data since no parametric assumptions are necessary for these algorithms. Nonparametric clustering techniques, including k-means clustering (Hartigan and Wong 1979) and hierarchical clustering (Ward 1963), are usually applied using specific distances or dissimilarities between curves (Delaigle et al. 2019; Martino et al. 2019; Zambom et al. 2019; Li and Ma 2020). It is important to note that distance-based methods are sometimes equivalent to dimension reduction-based methods if, for example, distances are computed using the basis expansion coefficients. Another widely-used approach is model-based clustering, where functional data are assumed to arise from a mixture of underlying probability distributions. For example, in Bayesian hierarchical clustering, a common methodology is to assume that the set of coefficients in the basis expansion representing functional data follow a mixture of Gaussian distributions (Wang et al. 2016).

Chamroukhi and Nguyen (2019) recently provided a comprehensive review for model-based clustering of functional data. A common model-based approach is to represent functional data as a linear combination of basis functions (e.g., B-splines) and consider a finite regression mixture model (Grün 2019) with the matrix of basis function evaluations as the design matrix and a set of basis expansion coefficients for each mixture component. The estimation and inference of the mixture parameters as well as the regression (or basis expansion) coefficients are usually conducted via the Expectation-Maximization (EM) algorithm (Samé et al. 2011; Jacques and Preda 2013; Giacofci et al. 2013; Chamroukhi 2016a; Grün 2019) or Markov Chain Monte Carlo (MCMC) sampling techniques (Ray and Mallick 2006; Fruhwirth-Schnatter et al. 2019). An alternative approach to EM and MCMC is the use of variational inference techniques.

Bayesian variational inference has found versatile applications within the field of FDA. Variational Bayes for fast approximate inference was applied in functional regression analysis by Goldsmith et al. (2011). Beyond functional regression, another pivotal facet of FDA lies in functional data registration, with a growing interest in the joint clustering and registration of functional data (Zhang and Telesca 2014). A novel adapted variational Bayes algorithm for smoothing and registration of functional data simultaneously via Gaussian processes was proposed by Earls and Hooker (2017). Nguyen and Gelfand (2011) considered a random allocation process, namely the Dirichlet labelling process, to cluster functional data and inferred model parameters by Gibbs sampling and variational Bayes. In a recent development, Rigon (2023) extended the work of Blei and Jordan (2006) and proposed an enriched Dirichlet mixture model for functional clustering via a variational Bayes algorithm. Rigon (2023) considered a Bayesian functional mixture model without random effects and introduced a functional Dirichlet multinomial process to allow the estimation of the number of clusters.

In this paper, we develop a novel variational Bayes algorithm for clustering functional data via a regression mixture model. In contrast to Rigon (2023), we consider a regression mixture model with random intercepts and take on a two-fold scheme for choosing the best number of clusters using the deviance information criterion (Spiegelhalter et al. 2002). We model the raw data, simultaneously obtaining clustering assignments and cluster-specific smooth mean curves. We compare the posterior estimation results from our proposed VB with the ones from MCMC. Our proposed method is implemented in R, and codes are available at https://github.com/chengqianxian/funclustVI.

The remainder of the paper is organized as follows. Section 2 presents an overview of variational inference, our two model settings and proposed algorithms. In Sect. 3, we conduct simulation studies to assess the performance of our methods under various scenarios. In Sect. 4, we apply our proposed methodology to real datasets. A conclusion of our study and a discussion on the proposed method are provided in Sect. 5.

2 Methodology

2.1 Overview of variational inference

Variational inference (VI) is a method from machine learning that approximates the posterior density in a Bayesian model through optimization (Jordan et al. 1999; Wainwright et al. 2008). Blei et al. (2017) provide an interesting review of VI from a statistical perspective, including some guidance on when to use MCMC or VI. For example, one may apply VI to large datasets and scenarios where the interest is to develop probabilistic models. In contrast, one may apply MCMC to small datasets for more precise samples but with a higher computational cost. In Bayesian inference, our goal is to find the posterior density, denoted by \(p(\cdot \vert y)\), where y corresponds to the observed data. One can apply Bayes’ theorem to find the posterior, but this might not be easy if there are many parameters and non-conjugate prior distributions. Therefore, one can aim to find an approximation to the posterior. To be specific, one wants to find \(q^*\) coming from a family of possible densities Q to approximate \(p(\cdot \vert y)\), which can be solved in terms of an optimization problem with criterion f as follows:

The criterion f measures the closeness between the possible densities q in the family Q and the exact posterior density p. When we consider the Kullback–Leibler (KL) divergence (Kullback and Leibler 1951) as criterion f, i.e.,

this optimization-based technique to approximate the posterior density is called Variational Bayes (VB). Jordan et al. (1999) and Blei et al. (2017) show that minimizing the KL divergence is equivalent to maximizing the so-called evidence lower bound (ELBO). Let \(\theta \) be a set of latent model variables, the KL divergence is defined as

and it can be shown that

where the last term is the ELBO. Since \(\log p(y)\) is a constant with respect to \(q(\theta )\), this changes the problem in (1) to

We, therefore, derive a VB algorithm for clustering functional data. We consider the mean-field variational family in which the latent variables are mutually independent, and a distinct factor governs each of them in the variational density. Finally, we apply the coordinate ascent variational inference algorithm (Bishop 2006) to solve the optimization problem in (2).

2.2 Assumptions and model settings

Let \({\textbf{Y}}_i\), \(\{i=1,\ldots ,N\}\), denote the observed data from N curves, and for each curve i there are \(n_i\) evaluation points, \(t_{i1},..., t_{in_i}\), so that \({\textbf{Y}}_i =(Y_i(t_{i1}),\ldots ,Y_i(t_{in_i}))^T\). Let \(Z_i\) be a hidden variable taking values in \(\{ 1,\ldots ,K\}\) that determines which cluster \({\textbf{Y}}_i\) belongs to. We assume \(Z_1,\ldots ,Z_N\) are independent and identically distributed with \(P(Z_i=k) = \pi _k, \, k=1,...,K\), and \(\sum _{k=1}^K \pi _k =1\). For the ith curve from cluster k, there is a smooth function \(f_k\) evaluated at \(\textbf{t}_i =(t_{i1},..., t_{in_i})^T\) so that \(f_k(\textbf{t}_i) = (f_k(t_{i1}),\ldots ,f_k(t_{in_i}))^T\). Given that \(Z_i=k\), we consider two different models for \({\textbf{Y}}_i\) based on the correlation structure of the errors. In Model 1, described in Sect. 2.2.1, we assume independent errors, and in Model 2, described in Sect. 2.2.2, we add a random intercept to induce a correlation between observations within each curve.

2.2.1 Model 1

Let us assume that

with conditionally independent errors \({\varvec{\epsilon }}_1,..., {\varvec{\epsilon }}_N,\) where \({\varvec{\epsilon }}_i=(\epsilon _{i1},..., \epsilon _{in_i})\) and \({\varvec{\epsilon _i}} \sim MVN (\textbf{0}, \mathrm{{I}}_{n_i}), i=1,...,N\), where \( \mathrm{{I}}_{n_i}\) is an identity matrix of size \(n_i\) and MVN represents the multivariate normal distribution. The functions \(f_1,\ldots ,f_K\) can be written as a linear combination of M known B-spline basis functions, that is, \(f_k(t_{ij}) = \sum _{m=1}^M B_m(t_{ij})\phi _{km}, \; j=1,..., n_i\), such that \(f_k(\textbf{t}_i) = \textbf{B}_{i({n_i}\times M)}{\varvec{\phi }}_{k(M\times 1)}, i=1,..., N, k=1,...,K\), \(\textbf{B}_i\) is an \(n_i\times M\) matrix for the ith curve whose each entry (j, m) is the mth basis function evaluated at \(t_{ij}\), \(B_m(t_{ij})\), and \({\varvec{\phi }}_{k}\) is the basis coefficient vector for cluster k. Therefore,

The proposed model is within the framework of a mixture of linear models, also known as the finite regression mixture model (Chamroukhi and Nguyen 2019). The finite regression mixture model offers a statistical framework for characterizing complex data from various unknown classes of conditional probability distributions (Peel and MacLahlan 2000; Melnykov and Maitra 2010; Chamroukhi 2016a; Grün 2019; Fruhwirth-Schnatter et al. 2019; McLachlan et al. 2019; Rigon 2023). In our model, we specifically consider Gaussian regression mixtures to deal with functional data that originate from a finite number of groups and are represented through a linear combination of B-spline basis functions plus some Gaussian random noise (Chamroukhi 2016b). Our model aligns with the classical finite Gaussian regression mixture model of order K, which can be expressed as follows:

where g is the density function of a \(MVN(\textbf{B}_i{\varvec{\phi }}_{k}, \sigma _k^2 \mathrm{{I}}_{n_i})\).

In our proposed models, we employ B-spline basis functions to represent and smooth functional data. However, it is worth noting that alternative basis systems, such as the Fourier bases, wavelets, and polynomial bases can also be considered for this purpose (Ramsay and Silverman 2005). As discussed in Chamroukhi and Nguyen (2019), the B-spline basis system offers greater flexibility, allowing researchers to tailor their choice of B-spline order and the number of knots to suit their specific needs. For smoothing functional data, cubic B-splines, corresponding to an order of four, are sufficient and can provide satisfactory performance (Chamroukhi and Nguyen 2019). As in previous studies of functional data, we use cubic B-splines with equally spaced knots and assume that the number of basis functions M is predefined and known (Dias et al. 2009, 2015; Lenzi et al. 2017; Franco et al. 2023).

Let \(\textbf{Z}=(Z_1,\ldots ,Z_N)^T\), \({\varvec{\phi }}=\{{\varvec{\phi }}_1,\ldots ,{\varvec{\phi }}_K\}\), \({\varvec{\pi }} = (\pi _1,\ldots ,\pi _K)^T \) and \({\varvec{\tau }} = (\tau _1,\ldots ,\tau _K)^T \), where \(\tau _k = 1/\sigma ^2_k\) is the precision parameter. We take on a Bayesian approach to infer \({\varvec{Z}}\), \({\varvec{\phi }}\), \({\varvec{\pi }}\) and \({\varvec{\tau }}\), and assume the following marginal prior distributions for parameters in Model 1:

-

\({\varvec{\pi }} \sim \text{ Dirichlet }(\textbf{d}^0)\) where \(\textbf{d}^0\) is the parameter vector for a Dirichlet distribution;

-

\(Z_i\vert {\varvec{\pi }} \sim \text{ Categorical }({\varvec{\pi }})\);

-

\({\varvec{\phi }}_k \sim MVN(\textbf{m}_k^0,s^0\textbf{I})\) with precision \(v^0 = 1/s^0\) and \(\textbf{I}\) an \(M \times M\) identity matrix;

-

\(\tau _k = 1/\sigma ^2_k \sim \text{ Gamma }(a^0,r^0), \; k=1,...,K\).

We develop a novel VB algorithm which, for given data, approximates the posterior distribution by finding the variational distribution (VD), \( q(\textbf{Z},{\varvec{\pi }},{\varvec{\phi }},{\varvec{\tau }})\), with smallest KL divergence to the posterior distribution \(p(\textbf{Z},{\varvec{\pi }},{\varvec{\phi }},{\varvec{\tau }}\vert {\textbf{Y}})\). Minimizing the KL divergence is equivalent to maximizing the ELBO given by

where \(\log p({\textbf{Y}},\textbf{Z},{\varvec{\pi }},{\varvec{\phi }},{\varvec{\tau }})\) is the complete data log-likelihood.

2.2.2 Model 2

We extend the model in Sect. 2.2.1 by adding a curve-specific random intercept \(a_i\) which induces correlation among observations within each curve. The model now becomes:

where \(\epsilon _{ij}\sim N(0, 1)\) and \(a_i\sim N(0, \sigma _a^2)\) with \(a_i\) and \(\epsilon _{ij}\) independent for all i and j. We can write Model 2 in a vector form as

in which \(\textbf{1}_{n_i}\) is a column vector of length \(n_i\) with all elements equal to 1, and further assume that \({\varvec{\epsilon _i}}\sim MVN(\textbf{0}, \mathrm{{I}}_{n_i})\) and \(a_i\sim N(0, \sigma _a^2)\). This model can be rewritten as a two-step model:

and \(a_i\sim N(0, \sigma _a^2), i=1,2,...,N\). Let \(\textbf{a}=(a_1,\ldots ,a_N)^T\) and \(\tau _a=1/\sigma ^2_a\). We assume the following marginal prior distributions for parameters in Model 2:

-

\({\varvec{\pi }} \sim \text{ Dirichlet }(\textbf{d}^0)\);

-

\(Z_i\vert {\varvec{\pi }} \sim \text{ Categorical }({\varvec{\pi }})\);

-

\({\varvec{\phi }}_k \sim MVN(\textbf{m}_k^0,s^0\textbf{I})\) with precision \(v^0 = 1/s^0\);

-

\(\tau _k = 1/\sigma ^2_k \sim \text{ Gamma }(b^0,r^0), \; k=1,...,K\);

-

\(\tau _a = 1/\sigma ^2_a \sim \text{ Gamma }(\alpha ^0,\beta ^0)\);

-

\(a_i \vert \tau _a \sim N(0, \sigma _a^2)\) with \(\tau _a=1/\sigma ^2_a\).

As in Model 1, we develop a VB algorithm to infer \({\varvec{Z}}\), \({\varvec{\phi }}\), \({\varvec{\pi }}\), \({\varvec{\tau }}\), \(\textbf{a}\) and \(\tau _a\). The ELBO under Model 2 is given by

2.3 Steps of the VB algorithm

This section describes the main steps of the VB algorithm under Model 2 for inferring \({\varvec{Z}}\), \({\varvec{\phi }}\), \({\varvec{\pi }}\), \({\varvec{\tau }}\), \(\textbf{a}\) and \(\tau _a\). The proposed VB is summarized in Algorithm 1. The VB algorithm’s main steps and the ELBO calculation for Model 1 can be found in Appendix A.

First, we assume that the variational distribution belongs to the mean-field variational family, where \({\varvec{Z}}\), \({\varvec{\phi }}\), \({\varvec{\pi }}\) \({\varvec{\tau }}\), \(\textbf{a}\) and \(\tau _a\) are mutually independent and each governed by a distinct factor in the variational density, that is:

We then derive a coordinate ascent algorithm to obtain the VD (Jordan et al. 1999; Blei et al. 2017). That is, we derive an update equation for each term in the factorization (6) by calculating the expectation of \(\log p(\textbf{Y},\textbf{Z},{\varvec{\pi }},{\varvec{\phi }},{\varvec{\tau }}, \textbf{a}, \tau _a)\) (the joint distribution of the observed data \(\textbf{Y}\), hidden variables \(\textbf{Z}\) and parameters \({\varvec{\pi }},{\varvec{\phi }},{\varvec{\tau }}, \textbf{a}, \tau _a\), which is also called complete-data log-likelihood) over the VD of all random variables except the one of interest, where

So, for example, the optimal update equation for \(q({\varvec{\pi }})\), \(q^*({\varvec{\pi }})\), is given by calculating

where \(-{\varvec{\pi }}\) indicates that the expectation is taken with respect to the VD of all other latent variables but \({\varvec{\pi }}\), i.e., \(\textbf{Z},{\varvec{\phi }}\), \({\varvec{\tau }}\), \(\textbf{a}\) and \(\tau _a\). In what follows we derive the update equation for each component in our model. For convenience, we use \(\overset{\mathrm{\tiny {+}}}{\approx }\) to denote equality up to a constant additive factor.

2.3.1 VB update equations

(i) Update equation for \(q({\varvec{\pi }})\)

Since only the second term, \(\log p(\textbf{Z} \vert {\varvec{\pi }})\), and the fifth term, \(\log p({\varvec{\pi }})\), in (7) depend on \({\varvec{\pi }}\), the update equation \(q^*({\varvec{\pi }})\) can be derived as follows.

Therefore, \(q^*({\varvec{\pi }})\) is a Dirichlet distribution with parameters \(\textbf{d}^*=(d_1^*,\ldots ,d_K^*)\), where

(ii) Update equation for \(q(Z_i)\)

Note that we can write \(\log p({\textbf{Y}}\vert \textbf{Z},{\varvec{\phi }},{\varvec{\tau }}, \textbf{a})\) and \(\log p(\textbf{Z} \vert {\varvec{\pi }})\) into two parts, one that depends on \(Z_i\) and one that does not, that is:

Now when taking the expectation in (9), the parts that do not depend on \(Z_i\) in \(\log p({\textbf{Y}}\vert \textbf{Z},{\varvec{\phi }},{\varvec{\tau }},\textbf{a})\) and \(\log p(\textbf{Z} \vert {\varvec{\pi }})\) will be added as a constant in the expectation. So, we obtain

Therefore, \(q^*(Z_i)\) is a categorical distribution with parameters

where

Note that all expectations involved in the VB update equations are calculated in Sect. 2.3.2.

(iii) Update equation for \(q({\varvec{\phi }}_k)\)

Only the first term, \(\log p({\textbf{Y}}\vert \textbf{Z},{\varvec{\phi }},{\varvec{\tau }}, \textbf{a})\), and the third term, \(\log p({\varvec{\phi }})\), in (7) depend on \({\varvec{\phi }}_k\). In addition, similarly to the previous case for \(q^*(Z_i)\), we can write \(\log p({\textbf{Y}}\vert \textbf{Z},{\varvec{\phi }},{\varvec{\tau }}, \textbf{a})\) and \(\log p({\varvec{\phi }})\) in two parts, one that depends on \({\varvec{\phi }}_k\) and the other that does not. Therefore, we obtain

All expectations are defined in Sect. 2.3.2, but note that, for example, \({{\mathbb {E}}}_{q^*(Z_i)}[ \mathrm{{I}}(Z_i=k)] = p^*_{ik}\) and

where \(\mu _{a_i}^*\) is the posterior mean of \(q^*(a_i)\) which is derived later. We focus on the quadratic forms that appear in (11) and (12). Let \({\textbf{Y}}_i^*={\textbf{Y}}_i-\mu _{a_i}^*\textbf{1}_{n_i}\), we can write:

Now let

We can then rewrite (13) as

Therefore, \(q^*({\varvec{\phi }}_k)\) is \(MVN(\textbf{m}^*_k,\mathbf {\Sigma }^*_k)\) with \(\mathbf {\Sigma }^*_k\) as in (14) and mean vector

(iv) Update equation for \(q(\tau _k)\)

Similarly to the calculations in iii) we can write

Therefore, \(q^*(\tau _k)\) is a Gamma distribution with parameters

and

(v) Update equation for \(q(a_i)\)

Let \({\textbf{Y}}_{ik}^*={\textbf{Y}}_i-\textbf{B}_i\textbf{m}^*_k\), then

Let

and

Then \(q^*(a_i)\) is \(N({\mu }^{*}_{a_i},{\sigma }^{*2}_{a_i})\).

(vi) Update equation for \(q(\tau _a)\)

Let

and

\(q^*(\tau _a)\) is Gamma(\(\alpha ^*, \beta ^*\)).

2.3.2 Expectations

In this section, we calculate the expectations in the update equations derived in Sect. 2.3.1 for each component in the VD. Let \( {\varvec{\Psi }}\) be the digamma function defined as

which can be easily calculated via numerical approximation. The values of the expectations taken with respect to the approximated distributions are given as follows.

In addition, using the fact that \({{\mathbb {E}}}({\textbf{X}}^T {\textbf{X}}) = \text{ trace }[\text{ Var }({\textbf{X}})] + {{\mathbb {E}}}({\textbf{X}})^T{{\mathbb {E}}}({\textbf{X}})\), we obtain

and

2.4 ELBO calculation

In this section, we show how to calculate the ELBO under Model 2, which is the convergence criterion of our proposed VB algorithm and is updated at the end of each iteration until convergence. Equation (6) gives the ELBO:

where

and

Therefore, we can write the ELBO as the summation of 7 terms:

where,

Specifically,

The other terms in (31) are calculated as follows:

and

Therefore, at iteration c, we calculate \(\text{ ELBO}^{(c)}\) using all parameters obtained at the end of iteration c. Convergence of the algorithm is achieved if \(\text{ ELBO}^{(c)}-\text{ ELBO}^{(c-1)}\) is smaller than a given threshold. It is important to note that we use the fact that \(\displaystyle \lim \nolimits _{p^{*}_{ik} \rightarrow 0} p^{*}_{ik}\log p^{*}_{ik}=0\) to avoid numerical issues when calculating (32). Numerical issues also exist in calculating the term \(\{A^*_k \log R_k^{*} - \log \Gamma (A^*_k) +(A^*_k-1){{\mathbb {E}}}_{q^*(\tau _k)}(\log \tau _{k}) - R_k^{*}{{\mathbb {E}}}_{q^*(\tau _k)}(\tau _{k}) \}\) in (33), so we will approximate it by the following digamma and log-gamma approximations. Note that we use (23) and (24) for \({{\mathbb {E}}}_{q^*(\tau _k)}(\tau _{k})\) and \({{\mathbb {E}}}_{q^*(\tau _k)}(\log \tau _{k})\), respectively.

-

(1)

digamma approximation based on asymptotic expansion:

$$\begin{aligned} {\varvec{\Psi }}(A_k^{*})\approx \log A_k^{*} - 1/(2A_k^{*}). \end{aligned}$$ -

(2)

log-gamma Stirling’s series approximation:

$$\begin{aligned} \log \Gamma (A_k^{*})\approx A_k^{*}\log (A_k^{*}) - A_k^{*} - \frac{1}{2}\log (A_k^{*}). \end{aligned}$$

Therefore, plugging in these two approximations, we obtain

3 Simulation studies

In Sect. 3.1, we present the metrics used to evaluate the performance our proposed methodology. Sections 3.2 and 3.3 present the simulation scenarios and results for Model 1 and Model 2, respectively.

3.1 Performance metrics

We evaluate the clustering performance of our proposed algorithm by two metrics: mismatches (Zambom et al. 2019) and V-measure (Rosenberg and Hirschberg 2007). Mismatch rate is the proportion of subjects misclassified by the clustering procedure. In our case, each subject corresponds to a curve in our functional dataset. V-measure, a score between zero and one, evaluates the subject-to-cluster assignments and indicates the homogeneity and completeness of a clustering procedure result. Homogeneity is satisfied if the clustering procedure assigns only those subjects that are members of a single group to a single cluster. Completeness is symmetrical to homogeneity, and it is satisfied if all those subjects that are members of a single group are assigned to a single cluster. The V-measure is one when all subjects are assigned to their correct groups by the clustering procedure. One may also consider alternative metrics to evaluate clustering performance, such as the Rand index (Rand 1971) and the mutual information (Cover 1999). The Rand index measures the similarity between two data partitions by counting the number of pairs of observations that are either correctly grouped together (i.e., true positives) or correctly separated (i.e., true negatives) in both partitions. Mutual information, on the other hand, quantifies the information shared between two data partitions. Along with the V-measure, these metrics are commonly used for clustering and partition evaluation, but they each have different mathematical formulations and emphasize different aspects of clustering performance.

For comparison purposes, we also investigate the performance, in terms of mismatch and V-measure, of the classical clustering algorithms including k-means for raw data (discrete observed points), and k-means for functional data (referred to as functional k-means, Febrero-Bande and de la Fuente (2012)), and two other model-based algorithms: funFEM (Bouveyron et al. 2015) and SaS-Funclust (Centofanti et al. 2023). The funFEM method was proposed for the inference of the discriminative functional mixture model to cluster functional data via the EM algorithm. The SaS-Funclust method, short for sparse and smooth functional clustering, was developed to facilitate sparse clustering for functional data via a functional Gaussian mixture model and penalized maximum likelihood estimation.

To further evaluate the performance of the proposed VB algorithm in terms of the estimated mean curves, we calculate the empirical mean integrated squared error (EMISE) for each cluster in each simulation scenario. For simplicity, we generate curves with equal number of observed values, that is n, in our simulation study. The EMISE is obtained as follows:

where T is the curve evaluation interval length, n is total number of observed evaluation points, and the empirical mean squared error (EMSE) at point \(t_j\) for cluster k, \(\text {EMSE}_k(t_j)\), is given by

in which s corresponds to the sth simulated dataset among S datasets in total, \(f_k(t_j)\) is the value of the true mean function in cluster k evaluated at point \(t_j\) and \({\hat{f}}_k^s(t_j)\) is its corresponding estimated value for the sth simulated dataset. The estimated value \({\hat{f}}_k^s(t_j)\) is calculated using the B-spline basis expansion with coefficients corresponding the to posterior mean (15) obtained at the convergence of the VB algorithm.

3.2 Simulation study on Model 1

In Sects. 3.2.1 and 3.2.2, we first conduct simulation studies for Model 1 which comprises six different scenarios, five of which have three clusters (\(K=3\)) while the last scenario has four clusters (\(K=4\)). For each simulation scenario, we generate 50 datasets and apply the proposed VB algorithm to each dataset, considering the number of basis functions to be six except for Scenario 5, which uses 12 basis functions. The ELBO convergence threshold is 0.01, with a maximum of 100 iterations. We use the clustering results of k-means to initialize \(p^{*}_{ik}\) in our VB algorithm.

We further conduct simulation studies on Model 1 to investigate the performance of the VB algorithm, including a prior sensitivity analysis in Sect. 3.2.3, choice of the number of clusters in Sect. 3.2.4 and misspecification of the type of basis functions in Sect. 3.2.5. We compare the posterior estimation results from VB to the ones from MCMC in Sect. 3.2.6.

3.2.1 Simulation scenarios

Scenarios 1 and 2 are adopted from Zambom et al. (2019). Each dataset is generated from 3 possible clusters (\(k=1,2,3\)) with \(N=50\) curves per cluster. For each curve, we assume there are \(n=100\) observed values across a grid of equally spaced points in the interval \([0, \pi /3]\).

Scenario 1, \(K = 3\):

where \(Y_{ik}(t_j)\) denotes the value at point \(t_j\) of the ith curve from cluster k, \(a_i\sim U(-1/4,1/4)\), \(\delta _{ij}\sim N(0, 0.4^2)\), \(b_1=0.3\), \(b_2=1\), \(b_3=0.2\), \(c_1=1/1.3\), \(c_2=1/1.2\), and \(c_3=1/4\).

Scenario 2, \(K = 3\):

where \(Y_{ik}(t_j)\) denotes the value at point \(t_j\) of the ith curve from cluster k, \(a_i\sim U(-1/4,1/4)\), \(\delta _{ij}\sim N(0, 0.3^2)\), \(b_1=1/1.8\), \(b_2=1/1.7\), \(b_3=1/1.5\), \(c_1=1.1\), \(c_2=1.4\), and \(c_3=1.5\).

In Scenarios 3 and 4, each dataset is also generated considering three clusters (\(k=1,2,3\)) with 50 curves each. The mean curve of the functional data in each cluster is generated from a pre-specified linear combination of B-spline basis functions. The number of basis functions is the same across clusters but the coefficients of the linear combination are different, one set per cluster (see Table 1). We apply the function create.bspline.basis in the R package fda to generate six B-spline basis functions of order 4, \(B_l(\cdot )\), \(l=1,...,6,\) evaluated on equally spaced points, \(t_j\), \(j=1,...,100\), in the interval [0, 1].

Scenarios 3 and 4, \(K = 3\):

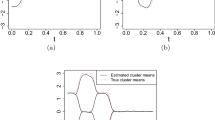

where \(Y_{ik}(t_j)\) denotes the value at point \(t_j\) of the ith curve from cluster k and \(\delta _{ij}\sim N(0, 0.4^2)\). Table 1 presents the vector of coefficients for each cluster k, \({\varvec{\phi }}_k = (\phi _{k1},\ldots ,\phi _{k6})^T\), used in Scenarios 3 and 4. Figure 1 illustrates the true mean curves for the three clusters and their corresponding basis functions for Scenarios 3 and 4.

Scenario 5 (\(K=3\)) is based on one of the simulation scenarios used in Dias et al. (2009) in which the curves mimic the energy consumption of different types of consumers in Brazil. There are 50 curves per cluster and for each curve we generate 96 points based on equally spaced time points, \(t_j, \; j=1,...,96\) in the interval [0, 24] (corresponding to one observation every 15 min over a 24-hour period).

Scenario 5, \(K = 3\):

where \(Y_{ik}(t_j)\) denotes the value at time \(t_j\) of the ith curve from cluster k, \(i=1,...,50\), \(j=1,...,96\), \(k=1,2,3\), and \(\delta _{ij}\sim N(0, 0.012^2)\).

Scenario 6 also corresponds to one of the simulation scenarios considered by Zambom et al. (2019), where there are \(K=4\) clusters with 50 curves each. Each curve has 100 observed values based on equally spaced points, \(t_j\), \(j=1,...,100\), in the interval \([0, \pi /3]\).

Scenario 6, \(K = 4\):

where \(Y_{ik}(t_j)\) denotes the value at point \(t_j\) of the ith curve from cluster k, \(a_i\sim U(-1/3,1/3)\), \(\delta _{ij}\sim N(0, 0.4^2)\), \(b_1=0.2\), \(b_2=0.5\), \(b_3=0.7\), \(b_4=1.3\), \(c_1=1.1\), \(c_2=1.4\), \(c_3=1.6\) and \(c_4=1.8\).

3.2.2 Simulation results for Model 1

Figure 2 shows the raw curves (color-coded by cluster) from one of the 50 generated datasets for each simulation scenario. In addition, the true mean curves (\(f_k(\textbf{t})\), \(k=1,\ldots ,K\)) and the estimated smoothed mean curves (\({\hat{f}}_k(\textbf{t})=\textbf{B}\textbf{m}^*_k\), \(k=1,\ldots ,K\)) are shown in black and red, respectively. We can observe that the true and estimated mean curves almost coincide within each cluster in all scenarios.

Table 2 displays the mean and standard deviation of mismatch rates (M) and V-measure values (V) across 50 simulated datasets for each scenario. For the sake of completeness, we have included the results from Scenario 7 in Sect. 3.2.4 and Scenario 8 in Sect. 3.2.5 in Table 2 as they pertain to the study of Model 1. The proposed VB algorithm performs the best in all scenarios except for Scenario 5 where we simulate the curves that mimic daily energy consumption. Across Scenarios 1 to 6, VB demonstrates impressive results with a mean mismatch rate of 5.13% and a mean V-measure of 88.06%. Notably, the mean mismatch rate achieved by VB is 55.71%, 83.6%, 85.86%, and 73.41% lower than that of classical k-means, functional k-means, funFEM, and SaS-Funclust, respectively. Meanwhile, VB’s mean V-measure surpasses the compared methods by 5.36%, 38.75%, 85.9%, and 8.46%, respectively. In Scenarios 3 and 4, where data is simulated through a linear combination of six predefined basis functions, VB exhibits perfect classification, with \(M=0\) and \(V=1\), which aligns with expectations since the raw data in these scenarios share the same structure as the proposed model. Comparatively, classical k-means generally outperforms functional k-means, funFEM, and SaS-Funclust in Scenarios 1, 2, 3, and 6, as similarly found in Zambom et al. (2019). The SaS-Funclust method excels in Scenario 5, with a slightly (0.0067) lower mismatch rate and a marginally (0.0053) higher V-measure than VB. Functional k-means also demonstrates competitive performance in Scenario 5, comparable to VB and SaS-Funclust.

In terms of computational efficiency, the run times for the proposed VB algorithm of Model 1 across the 50 simulated datasets from Scenarios 1 to 6 are as follows: 1.97 min, 5.41 min, 1.41 min, 1.61 min, 3.60 min, and 5.32 min. For comparison, SaS-Funclust required significantly longer computation times: 60.16 min, 68.94 min, 65.04 min, 68.19 min, 72.26 min, and 129.47 min for the respective scenarios. On average, the proposed VB algorithm demonstrates exceptional speed, being approximately 20 times faster than SaS-Funclust. The algorithm was implemented in R version 3.6.3 on a computer using the Mac OS X operating system with a 1.6 GHz processor and 8 GBytes of random access memory, same for the simulation study for Model 2 in Sect. 3.3.

Table 3 presents the EMISE for each cluster in each Scenario. We can observe small EMISE values, which are consistent with the results shown in Fig. 2, where there is a small difference between the red curves (i.e., the estimated mean functions) and the black curves (i.e., the true mean functions). A plot of EMSE values versus observed points for each cluster in Scenario 1 is presented in Fig. 3 while plots of EMSE values for Scenarios 2, 3, 4, 5 and 6 are provided in Fig. 11 in Appendix B.

3.2.3 Prior sensitivity analysis

In Bayesian analysis, it is important to assess the effects of different prior settings in the posterior estimation. In this section, we carry out a sensitivity analysis on how different prior settings may affect the results of our proposed VB algorithm. Our sensitivity analysis focuses on the prior distribution of the coefficients \({\varvec{\phi }}_k\) of the B-spline basis expansion of each cluster-specific mean curve. We assume \({\varvec{\phi }}_k\) follows a multivariate normal prior distribution with a mean vector \(\textbf{m}_k^0 \) and \(s^0\textbf{I}\) as the covariance matrix. We simulated data according to Scenario 3 in Sect. 3.2.1 and four different prior settings as follows:

-

Setting 1: use the true coefficients as the prior mean vector and consider a small variance (\(s^0=0.01\)).

-

Setting 2: use the true coefficients as the prior mean vector but consider a larger variance than in Setting 1 (\(s^0=1\)).

-

Setting 3: use a prior mean vector that is different than the true vector of coefficients with a small variance (\(s^0=0.01\)).

-

Setting 4: set the prior mean vector of coefficients to a vector of zeros with a small variance (\(s^0=0.01\)).

Setting 1 has the strongest prior information among these four prior settings, while setting 4 is the most non-informative prior case. In setting 3, the prior mean vector of coefficients is generated from sampling from a multivariate normal distribution with a mean vector corresponding to the true coefficients and covariance matrix \(\sigma ^2\textbf{I}\), with \(\sigma ^2 =0.5\). For each prior setting, we simulate 50 datasets as in Scenario 3, obtaining the average mismatch rate and V-measure, which are displayed in Table 4. First, we can observe that all the curves are correctly clustered under Setting 1, which has the strongest prior information. Then, as we relax the prior assumptions in two possible directions (i.e., more considerable variance or less informative mean vector), the mismatch rate increases, and the V-measure decreases. However, the clustering performance does not decrease much, only 4.67% higher in mismatches and 3.73% lower in V-measure.

3.2.4 Choosing the number of clusters

Choosing an appropriate number of clusters, denoted as K, holds paramount importance within clustering procedures. This decision aligns with determining the number of mixture components in a regression mixture model. One of the most widely applied methodologies to deal with uncertainty in the cluster numbers is the two-fold scheme that one first fits the mixture model with different predefined numbers of mixtures and then use some information criteria to select the best one (Chen et al. 2012; Nieto-Barajas and Contreras-Cristán 2014; Wang and Lin 2022). Alternatively, one can explore concurrent approaches for optimal cluster number selection, including techniques such as overfitted Bayesian mixtures, tailored to address scenarios with large unknown K (Rousseau and Mengersen 2011), selection through penalized maximum likelihood (Chamroukhi 2016b), and the application of infinite mixture models such as Dirichlet process mixture models (Escobar and West 1995; Ray and Mallick 2006; Petrone et al. 2009; Rodríguez et al. 2009; Angelini et al. 2012; Heinzl and Tutz 2013; Rigon 2023).

In our study, we employ the afterward model selection (i.e., two-fold) scheme to determine the most suitable number of clusters. Assuming some prior knowledge of K, we establish a clustering model for a range of integers based on this prior information, employing the VB algorithm for each K. For model comparison, we utilize the deviance information criterion (DIC) (Spiegelhalter et al. 2002), which can be applied to select the optimal number of clusters within a comparable Bayesian clustering framework (Gao et al. 2011; Anderson et al. 2014; Komárek 2009). DIC is built to balance the model fitness and complexity under a Bayesian framework, and a lower DIC indicates a better model. Nonetheless, the DIC is not an integral component of the core methodology and can be substituted with alternative model selection criteria such as the WAIC (Watanabe and Opper 2010) and LPML (Geisser and Eddy 1979) when someone’s concern is predictive goodness-of-fit. In our Model 1 setting, the DIC can be obtained as follows:

where \({{\mathbb {E}}}_{q^*} \left[ \log p({\textbf{Y}}\vert \textbf{Z},{\varvec{\pi }},{\varvec{\phi }},{\varvec{\tau }}) \right] \) can be computed after the convergence of our proposed VB algorithm based on the ELBO. The term \({\overline{D}}\) corresponds to the log-likelihood \(\log p({\textbf{Y}}\vert \textbf{Z},{\varvec{\pi }},{\varvec{\phi }},{\varvec{\tau }})\) evaluated at the expected value of each parameter posterior. For example, when we calculate the term \(\log \tau _{k}\) in \(\log p({\textbf{Y}}\vert \textbf{Z},{\varvec{\pi }},{\varvec{\phi }},{\varvec{\tau }})\), we replace it by \(\log \,({{\mathbb {E}}}_{q^*(\tau _k)}(\tau _{k}))\).

Simulation results for Model 1, Scenario 7, \(K=6\). Left: boxplots of DIC values under different \(K \in \{1, 2,..., 10\}\). The best number of clusters is six which has the smallest DIC. Right: the clustering results for \(K=6\) for one of the simulated data sets. Raw curves (different colors correspond to different clusters), cluster-specific true mean curves (in black) and corresponding VB estimated mean curves (in red) (color figure online)

We consider a more complex scenario, namely Scenario 7, where \(K=6\) in this simulation study which was also analyzed in Zambom et al. (2019). The data are generated as follows:

Scenario 7, \(K = 6\):

where \(Y_{ik}(t_j)\) denotes the value at point \(t_j\) of the ith curve from cluster k, \(a_i\sim U(-1/4,1/4)\), \(\delta _{ij}\sim N(0, 0.3^2)\), \(b_1=1\), \(b_2=1.2\), \(b_3=1.4\), \(b_4=1.6\), \(b_5=1.8\) and \(b_6=2\).

We assume a prior information of the number of clusters that K is around 6. Accordingly, we evaluate a range of potential K values, specifically \(\{2, 3,..., 10\}\). For each K, we apply the VB algorithm to cluster the observed functional data and calculate the resulting DIC. Within this scope, for each \(K \in \{2, 3,..., 10\}\), we repeat the simulation analysis for 50 times utilizing different random seeds to generate data. The left plot in Fig. 4 displays a boxplot representation of the DIC values for each K. It is evident that our DIC-based approach adeptly identifies the correct K (in this case, \(K=6\)), yielding the lowest DIC. The accompanying right plot in Fig. 4 showcases the clustering results for one of the simulated data sets under Scenario 7, demonstrating a highly satisfactory estimation of the true mean curves.

The quantitative evaluation of VB clustering performance in Scenario 7, along with a comparison to the other methods, is presented in Table 2. The VB algorithm performs the best among the others with a mean mismatch rate of 0.3001 and a mean V-measure of 0.7528. The mean mismatch rate of VB is 0.03%, 61.33%, 63.33%, and 58.13% lower than that of the classical k-means, functional k-means, funFEM and SaS-Funclust methods, while the mean V-measure is 0.41%, 36.25%, 1393.65%, and 21.83% higher, respectively. It is important to note that Scenario 7, characterized by a more complex structure with multiple groups of curves and overlapping patterns, poses a greater challenge for all methods, leading to overall reduced performance compared to other scenarios. FunFEM, in particular, encounters significant difficulties, with a V-measure approaching 0 due to the misclassification of more than 80% of curves.

3.2.5 Misspecification of the type of basis functions

This section illustrates the performance of the VB algorithm in case of misspecification of the type of basis functions via a simulation study, namely Scenario 8. We generate seven Fourier basis functions with equally spaced points on the interval [0, 1], which are shown in Fig. 5b, and simulate the data for three clusters \((k=1, 2, 3)\) with 50 curves (\(i=1, 2,..., 50\)) and 100 values \((t_j, j=1, 2,..., 100)\) on each curve in each cluster using a linear combination of these Fourier basis functions as follows:

Scenario 8, \(K = 3\):

where \(Y_{ik}(t_j)\) denotes the value at point \(t_j\) for the ith curve from cluster k, \(G_l(t_j)\) is the lth Fourier basis function evaluated at point \(t_j\), \(\phi _{kl}\) is the corresponding basis function coefficient, and \(\delta _{ij}\sim N(0, 4)\). In this simulation study, the vectors of basis function coefficients for each cluster are:

Figure 5c presents the raw curves with each cluster distinguished by a unique color. Notably, when compared to the B-spline bases, the Fourier bases exhibit a more intricate curve structure, suggesting the potential need for an increased number of B-spline basis functions to adequately represent these functional curves, as observed in Souza et al. (2023). Consequently, we have generated 15 B-spline bases from the interval [0, 1], as illustrated in Fig. 5a, to cluster the curves derived from a linear combination of the Fourier bases. The resulting VB estimated mean curves (solid lines) are juxtaposed with the true mean curves (dashed lines) in Fig. 5d from one of the simulated data sets.

While a minor discrepancy is observable between the true and estimated mean curves at the left boundary for the red and green groups, it is evident that the VB algorithm achieves highly accurate estimations of the true mean curves across all clusters. As shown in Table 2, the computed mean mismatch rate (sd) and mean V-measure (sd) from clustering 50 different simulated datasets are 0.067 (0.135) and 0.947 (0.108), respectively. In comparison to classical k-means, functional k-means, and funFEM, the mean mismatch rate from VB is 30.52%, 71.26%, and 87.65% lower, while the mean V-measure is 2%, 49.72%, and 711.92% higher. Unfortunately, SaS-Funclust struggles to cluster the curves, resulting in a V-measure of zero. This simulation illustrates the robustness of the VB algorithm in clustering functional data, even when confronted with the misspecification of basis function types.

Simulation results for Model 1, Scenario 8, \(K=3\). a B-spline basis functions for model fit. b Fourier basis functions for data generation. c Raw curves from three clusters (distinct colors for each cluster). d Cluster-specific true mean curves (dashed) and corresponding VB estimated mean curves (solid) (color figure online)

3.2.6 Comparison with MCMC posterior estimation

In our simulation study on Model 1, VB is shown to yield accurate mean curve estimates and satisfactory outcomes in clustering functional data. Although mean-field VB, as an alternative to MCMC, boasts a lower computational cost, it may potentially underestimate the posterior variance (Wang and Titterington 2005). To investigate this concern in the context of clustering functional data through a B-spline regression mixture model, we employ the MCMC-based Gibbs sampling algorithm for simulated data under Scenario 1. The resulting posterior distribution from Gibbs is based on 9000 MCMC samples following a 1000-sample burn-in and with a thinning of 1 from one chain. The convergence of the MCMC algorithm was well assessed and checked by the trace plot. Figure 6 illustrates the marginal posterior density of each basis coefficient \(\phi _{km}\), \(k=1, 2, 3\), \(m = 1, \ldots , 6\), and the precision parameter \(\tau _k\), \(k=1, 2, 3\), for each cluster, organized by columns. In each plot, the dashed red line represents the corresponding posterior density from VB, while the solid blue line is derived from MCMC. We observe a robust consistency in the estimated posterior distributions between MCMC and VB. A similar consistency between VB and MCMC in posterior estimation under a regression setting was found by Faes et al. (2011), Luts and Wand (2015), Xian et al. (2024).

To elucidate the uncertainty from the estimated mean curves, we utilize Scenarios 1 and 3 as illustrative examples. We construct 95% credible bands, both from MCMC and VB, for the true mean curves based on the posterior distribution of the B-spline coefficients. Figure 7 presents the results, with the first row corresponding to Scenario 1 and the second row to Scenario 3. In each plot, the solid colored lines depict the estimated mean curves from VB or MCMC, while the black solid lines represent the true mean curves. The 95% credible bands are shown as dashed lines, with different colors for different clusters. In Scenario 1, VB provides comparable point and interval estimation results with MCMC. In contrast, in Scenario 3, VB provides more accurate estimated mean curves, particularly at the left tails. Importantly, we observed no substantial differences in the resulting credible bands between VB and MCMC. In terms of computational cost for one simulation, VB took 5.5 s to produce the results, while the Gibbs sampler took 2.9 min for Scenario 1. In Scenario 3, VB took 5.8 s, while MCMC took 2.6 min. Overall, VB was more than 20 times faster than MCMC.

Simulation results for Model 1, Scenarios 1 and 3. The 95% credible bands for the true mean curves from VB (the left column) and MCMC (the right column). The solid colored lines represent the estimated mean curves, with the true mean curves depicted by black solid lines. The 95% credible bands are illustrated by the corresponding dashed lines (color figure online)

3.3 Simulation study on Model 2

3.3.1 Simulation scenarios

We also investigate the performance of our proposed VB algorithm under Model 2 using simulated data. We consider the simulation schemes of Scenario 1 and Scenario 3 in Sect. 3.2.1, but add a random intercept to each curve, to construct four different scenarios namely Scenario 9, Scenario 10, Scenario 11, and Scenario 12.

Scenario 9, \(K = 3\):

Scenario 9 is constructed based on Scenario 1. The data are simulated as follows.

where \(Y_{ik}(t_j)\) denotes the value at point \(t_j\) of the ith curve from cluster k, \(a_{ik}\sim N(0, 0.4^2)\), \(\delta _{ij}\sim N(0, 0.2^2)\), \(b_1=-0.25\), \(b_2=1.25\), \(b_3=2.50\), \(c_1=1/1.3\), \(c_2=1/1.2\), and \(c_3=1/4\).

Scenario 10, \(K = 3\):

Scenario 10 is developed based on Scenario 3. In this scenario, we consider a very small variance for the random intercept which almost resembles the case without a random intercept. Data are generated as follows.

where \(Y_{ik}(t_j)\) denotes the value at point \(t_j\) of the ith curve from cluster k, \(a_{ik}\sim N(0, 0.05^2)\), \(\delta _{ij}\sim N(0, 0.4^2)\). The B-spline coefficients, \(\phi _{kl}\), remain the same and are presented in Table 1, which are also used in Scenarios 9 and 10.

Scenario 11, \(K = 3\):

Scenario 11 is similar to Scenario 10, but with larger variance for the random intercept but smaller variance for the random error. Data are generated as follows.

where \(Y_{ik}(t_j)\) denotes the value at point \(t_j\) of the ith curve from cluster k, \(a_{ik}\sim N(0, 0.3^2)\), \(\delta _{ij}\sim N(0, 0.15^2)\).

Scenario 12, \(K = 3\):

Scenario 12 is similar to Scenario 10, but with larger variance for the random intercept. In this scenario, we use larger variance for the random error compared with that in Scenario 11, indicating a more complex case. Data are generated as follows.

where \(Y_{ik}(t_j)\) denotes the value at point \(t_j\) of the ith curve from cluster k, \(a_{ik}\sim N(0, 0.6^2)\), \(\delta _{ij}\sim N(0, 0.4^2)\).

3.3.2 Simulation results for Model 2

Figure 8 shows the curves from one of the 50 simulated datasets for Scenarios 9 and 11. Due to the similarity among Scenarios 10, 11 and 12, the curves for Scenarios 10 and 12 are presented in Fig. 12 of Appendix B. In Fig. 8, we can observe a slight difference between each cluster’s true mean curve and the estimated mean curve. Furthermore, more variation occurs after adding the random intercept. Especially in Scenario 12, with large variances, there is a more substantial overlap among curves from different clusters, resulting in a more complex scenario for clustering than the corresponding Scenario 3 in Sect. 3.2.

Table 5 presents the numerical results, including the mean mismatch rate and the mean V-measure with their corresponding standard deviations from the 50 different simulated datasets under each scenario considered. In Scenario 9, where the true mean curves exhibit relative parallelism, we do not observe a significant difference in the mean mismatch rate (approximately 10%) and the mean V-measure (approximately 0.7) among our VB model, the classical k-means, and SaS-Funclust. In contrast, in Scenario 9, the functional k-means and funFEM methods exhibit a larger mean mismatch rate and an 18.78% lower mean V-measure than VB. In Scenario 10, where the true mean curves intersect, our proposed model achieves a significantly lower mean mismatch rate of 0.0299, in contrast to the other methods: 0.1404 for classical k-means, 0.2799 for functional k-means, 0.1845 for funFEM, and 0.3333 for SaS-Funclust. Moreover, the mean V-measure obtained from VB is 0.9767, which is 9.28%, 69.33%, 34.09%, and 33.12% higher than the results from the aforementioned methods, respectively.

When the random intercept variance becomes larger in Scenario 11, even with a smaller random error variance, clustering curves via our proposed model becomes more challenging. The mean mismatch rate increases to 0.1453 from 0.0299, while the mean V-measure drops to 0.7923 from 0.9767 in Scenario 10. Nonetheless, our model continues to outperform the other considered methods, with differences in mismatch rates of 0.0118 for classical k-means, 0.1974 for functional k-means, 0.0576 for funFEM, and 0.0519 for SaS-Funclust. In Scenario 12, where there is a further increase in variance in the random intercept, we observe that the clustering performance of all methods deteriorates, leading to higher mismatch rates and lower V-measure values. Nevertheless, the VB algorithm still stands out by achieving the lowest mean mismatch rate and the highest mean V-measure compared to the other methods. The larger standard deviation of mismatch rates and V-measure of VB compared to other methods happen because, among the 50 different runs, there are 11 runs where our method can 100% correctly assign each curve to the cluster it belongs to, resulting in a mismatch rate of zero and a V-measure of one. At the same time, using the classical k-means as an example, there is no run where the classical k-means provides such perfect clustering results. Besides, among the 50 different runs, there are 41 runs where our method provides lower mismatch rates and higher V-measures than the classical k-means.

Table 6 shows the EMISE for each cluster in Scenarios 9, 10, 11 and 12 based on Model 2. Small EMISE values once again indicate that the true mean curves and the corresponding curves have a small difference. We also find that compared with Table 3 based on Model 1, the EMISE values based on Model 2 are larger. This is in our expectation since adding a random intercept to each curve will bring more variation to the curves, and as a result, more variation in the estimated mean curves, in Scenario 12 especially when we have a larger variance for generating random intercepts. Plots of EMSE values in Scenarios 7, 8, 9, and 10 based on Model 2 are provided in Fig. 13 in Appendix B.

For the computational cost, the run times of the proposed VB algorithm of Model 2 for 50 simulated datasets from Scenarios 9, 10, 11 and 12 are 40.96 min, 1.52 min, 10.46 min, and 11.52 min, respectively. For comparison, SaS-Funclust takes longer computation times: 45.06 min, 65.17 min, 64.35 min and 64.2 min for the respective scenarios.

4 Application to real data

In this section, we apply our proposed method in Sect. 2 to the growth and the Canadian weather datasets, which are both publicly available in the R package fda.

The Growth data (Tuddenham and Snyder 1954) includes heights (in cm) of the 93 children over 31 unevenly spaced time points from the age of one to eighteen. Raw curves without any smoothing are shown in Fig. 9, where the green curves correspond to boys and blue curves to girls. In this case, we apply our proposed method to the growth curves considering two clusters and compare the inferred cluster assignments (boys or girls) to the true ones.

The Canadian weather data (raw data are presented in Fig. 14 in Appendix B) contains the daily temperature at 35 different weather stations (cities) in Canada, averaged out from the year of 1960 to 1994. However, unlike the growth data, we do not know the true number of clusters in the weather data. Therefore, in order to find the best number of clusters, we apply the DIC for model comparison.

The number of B-spline basis functions is fixed and known within the VB algorithm. As discussed in Rossi et al. (2004), a low number of basis functions can be applied to get rid of the measurement noise. Another feature of the B-spline basis system is that increasing the number of B-spline bases does not always improve certain aspects of the fit to the data (Ramsay and Silverman 2005). Based on Liu and Yang (2009), ten B-spline basis functions are relatively reasonable for clustering the Growth data with two clusters. The Canadian weather data presents a higher variation (larger noise) than the Growth data. Therefore, curves with a moderate smoothing, rather than with more roughness, may more accurately reflect the underlying functional structures, and the underlying clusters. So, we use six B-spline basis functions to represent the weather data within the VB algorithm. It is important to note that we do not have a strong prior knowledge of these real datasets but still need to provide appropriate prior hyperparameters for the VB algorithm. As a solution, we randomly select one underlying curve in each dataset and fit a B-spline regression to obtain a vector of coefficients which is then modified across different clusters resulting in the prior mean vectors \(\textbf{m}_k^0\) for \(k=1,...,K\). We set \(s^0=0.1\), corresponding to a precision of 10, as the prior variance of these coefficients which provides a useful information as assumed in real world. For the Dirichlet prior distribution of \({\varvec{\pi }}\), we use \(\textbf{d}^0=(1/K,...,1/K)\), indicating that for each curve, the probability of assignment to each cluster is a priori equal across clusters. For the Gamma prior distribution of the precision, \(\tau _k=1/\sigma _k^2\), we prefer a large prior mean (e.g., 10) and a small prior variance (e.g., 0.1) which serve as informative prior knowledge, and therefore, we set \(a^0 = 2000\) and \(r^0 = 100\) for the growth data, and \(a^0 = 1000\) and \(r^0 = 800\) for the weather data. The ELBO convergence threshold is 0.001.

Since we know there are two clusters (boys and girls) in the growth dataset, \(K=2\) is preset for the clustering procedure. We apply the proposed VB algorithms under Models 1 and 2 to cluster the growth curves with 50 runs corresponding to 50 different initializations. The classical k-means method is also applied to the raw curves for performance comparison purposes. Figure 9 presents the estimated mean curves for each cluster corresponding to the the best VB run (the one with maximum ELBO after convergence) along with the empirical mean curves from both models (left graph for Model 1 while right for Model 2). The empirical mean curves are calculated by considering the true clusters and calculating their corresponding point-wise mean at each time point. Some difference between the estimated and the empirical curves can be observed for the girls due to a potential outlier. Regarding clustering performance, the mean mismatch rates for the VB algorithms under Model 1 and Model 2, and k-means are 33.33%, 20.47% and 34.41%, respectively. V-measure is more sensitive to misclassification than mismatch rate and, therefore, we obtain low mean V-measure values of 7.75% for VB under Model 1, 33.75% for VB under Model 2, and 6.37% for k-means. We can see the clustering performance significantly improved after adding a random intercept to each curve. Compared with Model 1, the mean mismatch rate from Model 2 is lower by 12.86%, and the mean V-measure is higher by 26%.

For the Canadian weather dataset analysis, we considered temperature data from all stations except those located in Vancouver and Victoria because they present relatively flat temperature curves compared to other locations. We applied the proposed VB algorithm under Model 1 to the weather data. The left plot in Fig. 10 shows the DIC values for different possible numbers of clusters (\(K=2,3,4,5\)). We can observe that the best number of clusters for separating the Canadian weather data is three, which corresponds to the smallest DIC. Finally, we present the clustering results with \(K=3\) on a map of Canada in the right plot in Fig. 10. As can be seen, when \(K=3\), we have three resulting groups in three different colors. In general, most of the weather stations in purple are located in northern Canada. In contrast, stations in southern Canada are separated into two groups color-coded in blue and red on the map of Canada. Although some stations may be incorrectly clustered, we can still see a potential pattern that makes sense geographically.

Raw curves (dashed curves) from the Growth dataset where green curves refer to the boys’ heights while the blue ones are for the girls’, with empirical mean curves (in solid black) and our VB estimated mean curves (in solid red). The left graph is resulted from Model 1 while the right is from Model 2 (color figure online)

Left: DIC values for different clusters (\(K=2,3,4,5\)) in Canadian weather data. The best number of clusters is three which has the smallest DIC. Right: Clustering results under Model 1 (cities with same color are predicted in the same cluster) for Canadian weather data with preset three clusters (\(K=3\)) (color figure online)

5 Conclusion and discussion

This paper develops a new model-based algorithm to cluster functional data via Bayesian variational inference. We first provide an overview of variational inference, a method used to approximate the posterior distribution under the Bayesian framework through optimization. We then derive a mean-field Variational Bayes (VB) algorithm. Next, the coordinate ascent variational inference is applied to update each term in the variational distribution factorization until convergence of the evidence lower bound. Finally, each observed curve is assigned to the cluster with the largest posterior probability.

We build our proposed VB algorithm under two different models. In Model 1, we assume the errors are independent, which may be a strong assumption. Motivated by the Growth data for the children’s heights, which show a parallel structure indicating a shift among curves, we extended our approach to Model 2, which includes more complex variance-covariance structures by adding a random intercept for each curve.

The performance of our proposed VB algorithm in clustering functional data is supported by simulations and real data analyses. In simulation studies, VB accurately estimates mean curves, closely aligning with true curves, resulting in minimal empirical mean integrated squared errors and demonstrating a good fit. In most scenarios, VB consistently outperforms other considered methods (classical k-means, functional k-means, funFEM, and SaS-Funclust) with the highest V-measure and the lowest mismatch rate. We provide insight into the selection of the number of clusters (mixture components) through a two-fold scheme based on DIC. Robustness is assessed via a sensitivity analysis across different prior settings and a study involving a misspecified type of basis functions. In our simulations, the proposed VB algorithm demonstrated computational efficiency, averaging 4 s to cluster each simulated dataset. In particular, for simulated data under Scenarios 1 and 3, VB is over 20 times faster than MCMC (Gibbs sampler). Moreover, VB demonstrates strong consistency with MCMC in estimating the marginal posterior distribution of B-spline basis coefficients and precision parameters. In addition to simulation studies, applying the VB algorithm to the Growth data reveals that Model 2 with a random intercept surpasses Model 1 in both mean curve estimation and clustering performance when the curves from the same cluster show a parallel structure.

The main advantage of our proposed VB algorithm is that we model the raw data and obtain clustering assignments and cluster-specific smooth mean curves simultaneously. In other words, compared to some previous methods where researchers first smooth the data and then cluster the data using only the information after smoothing (e.g., the coefficients of B-spline basis functions); our model, as a regression mixture model, directly uses the raw data as input, performing smoothing and clustering simultaneously. In addition, as we take a Bayesian inference approach, we can measure the uncertainty of our proposed clustering using the obtained cluster assignment posterior probabilities.

While our study has introduced the VB algorithm to cluster functional data using a B-spline regression mixture model, it is important to recognize its limitations. Although our Model 2, which includes a random intercept, provides a more flexible dependence structure, one could explore more intricate Gaussian processes for modeling the random errors. Additionally, it is worth noting that VB is not the sole method for clustering functional data with regression mixtures; alternatives like Gibbs sampler (as used for comparison here) or other MCMC-based algorithms can also be considered. In this work, we focus on the case where, for each curve, the number of basis functions is smaller than the number of evaluation points (\(M < n\)). So, future work may include investigation and further extension of the proposed VB under high-dimensional settings (\(M>>n\)), paying special attention to the issue of underestimation of the variability of the posterior estimates (Mukherjee and Sen 2022; Devijver 2017). For large datasets (large number of curves, N), the coordinate ascent variational inference algorithm, which considers all data points, may result in a high computational cost. Therefore, one may consider scalable algorithms such as the stochastic variational inference (Hoffman et al. 2013) for approximating the posterior distributions.

Furthermore, our approach relies on the assumption that the number of B-spline basis functions (M) is known prior to applying the VB algorithm. This assumption aligns with practical scenarios where researchers may subjectively determine M based on their expertise and/or visual inspection of the curves (Franco et al. 2023; Günther et al. 2021; Lenzi et al. 2017). However, to enhance the model’s adaptability and automate the selection process, future investigations could explore the integration of a mechanism for selecting the number of B-spline bases directly within the VB algorithm itself. Relevant approaches and references for the selection of the number of basis functions include Souza et al. (2023); Devijver et al. (2020); Gálvez et al. (2015); Yuan et al. (2013); Dias and Garcia (2007), and DeVore et al. (2003).

References

Anderson C, Lee D, Dean N (2014) Identifying clusters in Bayesian disease mapping. Biostatistics 15(3):457–469

Angelini C, De Canditiis D, Pensky M (2012) Clustering time-course microarray data using functional Bayesian infinite mixture model. J Appl Stat 39(1):129–149

Bishop C (2006) Pattern recognition and machine learning. Springer, Berlin

Blei DM, Jordan MI (2006) Variational inference for Dirichlet process mixtures. Bayesian Anal 1(1):121–143. https://doi.org/10.1214/06-BA104

Blei DM, Kucukelbir A, McAuliffe JD (2017) Variational inference: a review for statisticians. J Am Stat Assoc 112(518):859–877

Boschi T, Di Iorio J, Testa L, Cremona MA, Chiaromonte F (2021) Functional data analysis characterizes the shapes of the first Covid-19 epidemic wave in Italy. Sci Rep. https://doi.org/10.1038/s41598-021-95866-y

Bouveyron C, Côme E, Jacques J (2015) The discriminative functional mixture model for a comparative analysis of bike sharing systems. Ann Appl Stat 1726–1760

Centofanti F, Lepore A, Palumbo B (2023) Sparse and smooth functional data clustering. Stat Pap 1–31

Chamroukhi F (2016) Piecewise regression mixture for simultaneous functional data clustering and optimal segmentation. J Classif 33(3):374–411. https://doi.org/10.1007/s00357-016-9212-8

Chamroukhi F (2016) Unsupervised learning of regression mixture models with unknown number of components. J Stat Comput Simul 86(12):2308–2334

Chamroukhi F, Nguyen HD (2019) Model-based clustering and classification of functional data. Wiley Interdiscipl Rev Data Min Knowl Discov 9(4):e1298

Chen T, Zhang NL, Liu T, Poon KM, Wang Y (2012) Model-based multidimensional clustering of categorical data. Artif Intell 176(1):2246–2269

Collazos JAA, Dias R, Medeiros MC (2023) Modeling the evolution of deaths from infectious diseases with functional data models: The case of covid-19 in brazil. Stat Med . https://doi.org/10.1002/sim.9654. https://onlinelibrary.wiley.com/doi/pdf/10.1002/sim.9654

Cover TM (1999) Elements of information theory. Wiley, New York

De Souza CP, Heckman NE, Xu F (2017) Switching nonparametric regression models for multi-curve data. Can J Stat 45(4):442–460

Delaigle A, Hall P, Pham T (2019) Clustering functional data into groups by using projections. J R Stat Soc Ser B (Stat Methodol) 81(2):271–304. https://doi.org/10.1111/rssb.12310

Devijver E (2017) Model-based regression clustering for high-dimensional data: application to functional data. Adv Data Anal Classif 11:243–279

Devijver E, Goude Y, Poggi JM (2020) Clustering electricity consumers using high-dimensional regression mixture models. Appl Stoch Model Bus Ind 36(1):159–177

DeVore R, Petrova G, Temlyakov V (2003) Best basis selection for approximation in lp. Found Comput Math 3:161–185

Dias R, Garcia NL (2007) Consistent estimator for basis selection based on a proxy of the Kullback–Leibler distance. J Econ 141(1):167–178

Dias R, Garcia NL, Ludwig G, Saraiva MA (2015) Aggregated functional data model for near-infrared spectroscopy calibration and prediction. J Appl Stat 42(1):127–143

Dias R, Garcia NL, Martarelli A (2009) Non-parametric estimation for aggregated functional data for electric load monitoring. Environmetrics 20:111–130. https://doi.org/10.1002/env.914

Earls C, Hooker G (2017) Variational Bayes for functional data registration, smoothing, and prediction. Bayesian Anal 12(2):557–582. https://doi.org/10.1214/16-BA1013

Escobar MD, West M (1995) Bayesian density estimation and inference using mixtures. J Am Stat Assoc 90(430):577–588

Faes C, Ormerod JT, Wand MP (2011) Variational bayesian inference for parametric and nonparametric regression with missing data. J Am Stat Assoc 106(495):959–971

Febrero-Bande M, de la Fuente MO (2012) Statistical computing in functional data analysis: the r package fda.usc. J Stat Softw 51(4):1–28. https://doi.org/10.18637/jss.v051.i04

Franco G, de Souza CPE, Garcia NL (2023) Aggregated functional data model applied on clustering and disaggregation of uk electrical load profiles. J R Stat Soc: Ser C: Appl Stat 72(1):48–75

Frizzarin M, Bevilacqua A, Dhariyal B, Domijan K, Ferraccioli F, Hayes E, Ifrim G, Konkolewska A, Nguyen TL, Mbaka U, Ranzato G, Singh A, Stefanucci M, Casa A (2021) Mid infrared spectroscopy and milk quality traits: a data analysis competition at the "international workshop on spectroscopy and chemometrics 2021"

Fruhwirth-Schnatter S, Celeux G, Robert CP (2019) Handbook of mixture analysis. CRC Press, Cambridge

Gálvez A, Iglesias A, Avila A, Otero C, Arias R, Manchado C (2015) Elitist clonal selection algorithm for optimal choice of free knots in b-spline data fitting. Appl Soft Comput 26:90–106

Gao H, Bryc K, Bustamante CD (2011) On identifying the optimal number of population clusters via the deviance information criterion. PLoS ONE 6(6):e21014

Geisser S, Eddy WF (1979) A predictive approach to model selection. J Am Stat Assoc 74(365):153–160

Giacofci M, Lambert-Lacroix S, Marot G, Picard F (2013) Wavelet-based clustering for mixed-effects functional models in high dimension. Biometrics 69(1):31–40. https://doi.org/10.1111/j.1541-0420.2012.01828.x

Goldsmith J, Wand MP, Crainiceanu C (2011) Functional regression via variational bayes. Electron J Stat 5:572

Grün B (2019) Model-based clustering, Handbook of mixture analysis. CRC Press, Taylor & Francis Group, pp 157–192

Günther S, Pazner W, Qi D (2021) Spline parameterization of neural network controls for deep learning. arXiv preprint arXiv:2103.00301

Hael MA, Yongsheng Y, Saleh BI (2020) Visualization of rainfall data using functional data analysis. SN Appl Sci 2(3):461. https://doi.org/10.1007/s42452-020-2238-x

Hartigan J, Wong M (1979) A k-means clustering algorithm. J R Stat Soc Ser C 28:100–108

Heinzl F, Tutz G (2013) Clustering in linear mixed models with approximate Dirichlet process mixtures using em algorithm. Stat Model 13(1):41–67

Hoffman MD, Blei DM, Wang C, Paisley J (2013) Stochastic variational inference. J Mach Learn Res 14:1303–1347

Hu G, Geng J, Xue Y, Sang H (2020) Bayesian spatial homogeneity pursuit of functional data: an application to the u.s. income distribution

Jacques J, Preda C (2013) Funclust: a curves clustering method using functional random variables density approximation. Neurocomputing 112:164–171. https://doi.org/10.1016/j.neucom.2012.11.042

Jacques J, Preda C (2014) Functional data clustering: a survey. Adv Data Anal Classif 8(3):24

James G, Sugar C (2003) Clustering for sparsely sampled functional data. J Am Stat Assoc 98(462):397–408

Jones MC, Rice JA (1992) Displaying the important features of large collections of similar curves. Am Stat 46(2):140

Jordan MI, Ghahramani Z, Jaakkola T, Saul L (1999) Introduction to variational methods for graphical models. Mach Learn 37:183–233

Komárek A (2009) A new R package for Bayesian estimation of multivariate normal mixtures allowing for selection of the number of components and interval-censored data. Comput Stat Data Anal 53(12):3932–3947

Kullback S, Leibler RA (1951) On information and sufficiency. Ann Math Stat 22(1):79–86. https://doi.org/10.1214/aoms/1177729694

Lenzi A, de Souza CP, Dias R, Garcia NL, Heckman NE (2017) Analysis of aggregated functional data from mixed populations with application to energy consumption. Environmetrics 28(2):e2414. https://doi.org/10.1002/env.2414

Li T, Ma J (2020) Functional data clustering analysis via the learning of gaussian processes with Wasserstein distance. In: Kwok JT, Chan JH, King I (eds) Yang H, Pasupa K, Leung ACS (eds) Neural information processing, Springer International Publishing, Cham pp 393–403

Liu X, Yang MC (2009) Simultaneous curve registration and clustering for functional data. Comput Stat Data Anal 53(4):1361–1376

Luts J, Wand MP (2015) Variational inference for count response semiparametric regression. Bayesian Anal 10(4):991–1023. https://doi.org/10.1214/14-BA932

Martino A, Ghiglietti A, Ieva F, Paganoni AM (2019) A k-means procedure based on a mahalanobis type distance for clustering multivariate functional data. Stat Methods Appl 28(2):301–322. https://doi.org/10.1007/s10260-018-00446-6

McLachlan GJ, Lee SX, Rathnayake SI (2019) Finite mixture models. Annu Rev Stat Appl 6:355–378

Melnykov V, Maitra R (2010) Finite mixture models and model-based clustering. Stat Surv 4:80–116. https://doi.org/10.1214/09-SS053

Mukherjee S, Sen S (2022) Variational inference in high-dimensional linear regression. J Mach Learn Res 23(1):13703–13758

Nguyen X, Gelfand AE (2011) The dirichlet labeling process for clustering functional data. Stat Sinica 1249–1289

Nieto-Barajas LE, Contreras-Cristán A (2014) A Bayesian nonparametric approach for time series clustering. Bayesian Anal 9(1):147–170. https://doi.org/10.1214/13-BA852

Peel D, MacLahlan G (2000) Finite mixture models. Wiley

Petrone S, Guindani M, Gelfand AE (2009) Hybrid dirichlet mixture models for functional data. J R Stat Soc Ser B Stat Methodol 71(4):755–782

Ramsay J, Hooker G, Graves S (2009) Functional data analysis with R and MATLAB. Springer, New York

Ramsay JO, Dalzell CJ (1991) Some tools for functional data analysis. J Roy Stat Soc: Ser B (Methodol) 53(3):539–561. https://doi.org/10.1111/j.2517-6161.1991.tb01844.x

Ramsay JO, Silverman BW (2005) Functional data analysis, 2nd edn. Springer, Berlin

Rand WM (1971) Objective criteria for the evaluation of clustering methods. J Am Stat Assoc 66(336):846–850

Ray S, Mallick B (2006) Functional clustering by Bayesian wavelet methods. J R Stat Soc Ser B (Stat Methodol) 68(2):305–332

Rigon T (2023) An enriched mixture model for functional clustering. Appl Stoch Model Bus Ind 39(2):232–250

Rodríguez A, Dunson DB, Gelfand AE (2009) Bayesian nonparametric functional data analysis through density estimation. Biometrika 96(1):149–162

Rosenberg A, Hirschberg J (2007, June) V-measure: a conditional entropy-based external cluster evaluation measure. In: Proceedings of the 2007 joint conference on empirical methods in natural language processing and computational natural language learning (EMNLP-CoNLL), Prague, Czech Republic, pp 410–420. Association for Computational Linguistics

Rossi F, Conan-Guez B, El Golli A (2004) Clustering functional data with the som algorithm. In: ESANN, pp 305–312. Citeseer

Rousseau J, Mengersen K (2011) Asymptotic behaviour of the posterior distribution in overfitted mixture models. J R Stat Soc Ser B Stat Methodol 73(5):689–710

Samé A, Chamroukhi F, Govaert G, Aknin P (2011) Model-based clustering and segmentation of time series with changes in regime. Adv Data Anal Classif 5(4):301–321. https://doi.org/10.1007/s11634-011-0096-5

Sousa PHTO, de Souza CPE, Dias R (2023) Bayesian adaptive selection of basis functions for functional data representation. J Appl Stat. https://doi.org/10.1080/02664763.2023.2172143

Spiegelhalter DJ, Best NG, Carlin BP, Van Der Linde A (2002) Bayesian measures of model complexity and fit. J R Stat Soc Ser B (Stat Methodol) 64(4):583–639. https://doi.org/10.1111/1467-9868.00353

Tarpey T, Kinateder K (2003) Clustering functional data. J Classif 20(1):93–114

Tuddenham RD, Snyder MM (1954) Physical growth of california boys and girls from birth to eighteen years. Publications in child development. University of California, Berkeley 12:183–364

Wainwright MJ, Jordan MI, et al (2008) Graphical models, exponential families, and variational inference. Found Trends® Mach Learn 1(1–2):1–305

Wang B, Titterington DM (2005) Inadequacy of interval estimates corresponding to variational bayesian approximations. In: International workshop on artificial intelligence and statistics, pp 373–380. PMLR

Wang JL, Chiou JM, Müller HG (2016) Functional data analysis. Ann Rev Stat Appl 3:257–295

Wang WL, Lin TI (2022) Model-based clustering via mixtures of unrestricted skew normal factor analyzers with complete and incomplete data. Stat Methods Appl 1–31

Ward J (1963) Hierarchical grouping to optimize an objective function. J Am Stat Assoc 58:236–244

Watanabe S, Opper M (2010) Asymptotic equivalence of bayes cross validation and widely applicable information criterion in singular learning theory. J Mach Learn Res 11(12)

Xian C, de Souza CP, He W, Rodrigues FF, Tian R (2024) Variational bayesian analysis of survival data using a log-logistic accelerated failure time model. Stat Comput 34(2):67

Yang Y, Yang Y, Shang HL (2021) Feature extraction for functional time series: theory and application to nir spectroscopy data