Abstract

Laplacian support vector machine (LapSVM), which is based on the semi-supervised manifold regularization learning framework, performs better than the standard SVM, especially for the case where the supervised information is insufficient. However, the use of hinge loss leads to the sensitivity of LapSVM to noise around the decision boundary. To enhance the performance of LapSVM, we present a novel semi-supervised SVM with the asymmetric squared loss (asy-LapSVM) which deals with the expectile distance and is less sensitive to noise-corrupted data. We further present a simple and efficient functional iterative method to solve the proposed asy-LapSVM, in addition, we prove the convergence of the functional iterative method from two aspects of theory and experiment. Numerical experiments performed on a number of commonly used datasets with noise of different variances demonstrate the validity of the proposed asy-LapSVM and the feasibility of the presented functional iterative method.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

As a powerful supervised learning algorithm based on solid theoretical foundations, support vector machine (SVM) (Cristianini and Shawe-Taylor 2000; Vapnik 1995) has gained substantial attention in many research areas. By simultaneously minimizing the regularization term and the hinge loss function, SVM implements the structural risk minimization principle rather than the traditional empirical risk minimization principle. Due to its remarkable characteristics such as the good generalization performance, the absence of local minima and sparse representation of the solution, SVM and its variants (Zhao et al. 2019; Melki et al. 2018) have been successfully applied to a wide range of applications, including classification, regression, clustering, representational learning and so on. However, there still exists a great space for improvements on the traditional SVM.

On the one hand, the use of hinge loss function leads to the sensitivity of SVM to re-sampling and noise around the decision boundary. To alleviate this disadvantage, various methods have been proposed so far (Bi and Zhang 2004; Shivaswamy et al. 2006; Xu et al. 2009; Zhong 2012; Wang et al. 2015; Wang and Zhong 2014). Recently, motivated by the link between the hinge loss and the shortest distance, Huang et al. (2014) proposed a new SVM classifier with the pinball loss (Pin-SVM). The pinball loss, which shares many good properties and brings noise insensitivity for classification, is related to the quantile distance (Koenker 2005; Jumutc et al. 2013) between two classes. The theoretical analysis and experimental results show that Pin-SVM is more stable to noise-corrupted data compared with the traditional SVM. Lately, to speed up the training process for Pin-SVM, Huang et al. (2014) further exploited the expectile distance as a surrogate of the quantile distance and propose a new SVM classifier with the asymmetric squared loss (aLS-SVM). This is motivated by the fact that the expectile value, which is related to minimizing the asymmetric squared loss (Lu et al. 2018), has similar statistical properties to the quantile value. In other words, aLS-SVM is an approximation of Pin-SVM which can be effectively solved.

On the other hand, a main challenge for the standard SVM is its dependence on sufficient supervised information. In many real-world applications, such as natural language parsing (Tur et al. 2005), spam filtering (Guzella and Caminhas 2009), and video surveillance (Zhang et al. 2011), the acquisition of enough labeled data is usually difficult while unlabeled data are available in large quantity. In such situations, the performance of SVM usually deteriorates since a lot of information carried by the unlabeled data is simply ignored. To handle the problem, semi-supervised learning (SSL) is proposed. It has become an efficient paradigm (Chapelle et al. 2006; Scardapane et al. 2016; Li et al. 2017; Calma et al. 2018), especially that with manifold regularization (MR) (Belkin et al. 2006; Chen et al. 2014) which tries to capture the geometric information from both labeled and unlabeled data and makes the smoothness of classifiers along the intrinsic manifold via an additional regularization term. With the addition of the MR into the conventional SVM, Belkin et al. (2006) presented the classical Laplacian SVM (LapSVM), in which the geometric information embedded in the abundant unlabeled data is fully considered to build more reasonable classifiers. After that, under the semi-supervised MR learning framework, a number of SVM-based SSL algorithms have emerged (Sun 2013; Khemchandani and Pal 2016; Pei et al. 2017a, b). However, similar to SVM, LapSVM is sensitive to noise-corrupted data, since it also employs the minimal distance which is related to the hinge loss to measure the margin between two classes.

In this paper, inspired by the studies above, we propose a novel semi-supervised support vector machine with the asymmetric squared loss (asy-LapSVM). Our motivation mainly depends on the facts that the MR has the ability to encode the geometric information embedded in the unlabeled data and the expectile distance is stable to noise-corrupted data. In other words, we hope that the proposed asy-LapSVM possesses the ability to make full use of the abundant unlabeled data, and moreover, it is stable to noise-corrupted data. Moreover, to speed up the training process, we present a simple and efficient functional iterative method to replace the traditional quadratic programming (QP) to solve the involved optimization problems. The convergence property of the iterative method is proved by both of the theory and experiments. Experimental results on a number of commonly used datasets show that the proposed asy-LapSVM achieves a significant performance in comparison with several popular supervised learning (SL) and SSL algorithms.

In summary, by incorporating the properties of the SSL and the asymmetric squared loss, the advantages of the proposed algorithm are as follows:

-

We construct a robust LapSVM framework by adopting an asymmetric squared loss, and it can be effectively solved with the help of a simple functional iterative method.

-

The proposed model belongs to inductive learning and is natural for out-of-sample data, which can avoid expensive graph computation.

-

The extensive comparison experiments with several related methods on widely used benchmark datasets demonstrate the effectiveness of the proposed method.

Moreover, compared with the very recently published algorithms, such as the pure SSL algorithms in references (Huang et al. 2014; Ma et al. 2019), the proposed asy-LapSVM not only maintains their primary advantages such as the ability to make use of unlabeled data and handle unseen data in the testing phase directly, but also makes them less sensitive to noise-corrupted data (feature noises). As for the robust SSL algorithms algorithms in references (Du et al. 2019; Pei et al. 2018; Gu et al. 2019), they are all proposed for outliers among labeled data (label noises).

The remainder of this paper is organized as follows. Section 2 briefly outlines the background. The novel asy-LapSVM is proposed in Sect. 3, which includes both the linear and nonlinear cases. Moreover, the proof of convergence of the iterative algorithm is given. After presenting the experimental results on multiple datasets in Sect. 4, we conclude this paper in Sect. 5.

2 Background

In this section, some background knowledge of the proposed algorithm including the asymmetric squared loss function and the semi-supervised manifold regularization learning framework is briefly reviewed.

2.1 Asymmetric squared loss function

In the field of machine learning, loss function is usually one of the key issues in designing learning algorithms since most problems require it to describe the cost of the discrepancy between the prediction and the observation. In fact, the use of the loss function can be traced back to a long time ago. For example, the least-square loss function for regression was already employed by Legendre, Gauss, and Adrain in the early 19th century (Steinwart and Christmann 2008). At present, various margin-based loss functions, such as hinge loss, squared loss, exponential loss, logistic loss, and brown boost loss have been exploited to search for the optimal classification and regression functions.

In a binary classification problem, a large margin between two classes plays an important role to obtain a good classifier. Traditionally, the margin is measured by the minimal distance between two sets, which is related to the hinge loss (1) or the squared hinge loss (2):

where \(r=1-yf({\varvec{x}})\), in which \({\varvec{x}}\in R^{d}\) is the input sample, \(y\in \{+1,-1\}\) is the corresponding output, and \(f({\varvec{x}})\) is the prediction. However, measuring the margin by the minimal value leads to the sensitivity of the corresponding classifiers to re-sampling and noise around the decision boundary. To overcome this weak point, Huang et al. (2014) employed the quantile value which has been deeply studied and widely applied in regression problems, as a surrogate of the minimal value to measure the margin between classes, and proposed the following pinball loss (3) which brings noise insensitivity:

where \(0<u< 1\). Different from the hinge loss, the pinball loss gives an additional penalty on the correctly classified samples. So, the pinball loss can be regarded as an extension to the hinge loss. However, the pinball loss is non-smooth and its minimization is more difficult than that of smooth loss functions. Hence, Huang et al. (2014) modified the measurement of margin by taking the expectile value, which is related to the following smooth asymmetric squared loss:

The property of asymmetric squared loss is similar to that of the pinball loss. From the definition of \(v_{\mathrm{asy}}(\cdot )\), one observes that when the value of u is 1, it becomes the squared hinge loss \(v_{\mathrm{shin}}(\cdot )\). Similar to the difference between the pinball loss and the hinge loss, the asymmetric squared loss, which gives an asymmetric penalty for negative and positive losses, can be seen as a generalized squared hinge loss. The plots of \(v_{\mathrm{hin}}(r)\), \(v_{\mathrm{shin}}(r)\), \(v_{\mathrm{pin}}(r)\) and \(v_{\mathrm{asy}}(r)\) with \(u=0.83\) are shown in Fig. 1.

2.2 Semi-supervised manifold regularization learning framework

The idea of regularization, which is widely used in machine learning, has its root in mathematics to solve ill-posed problems (Tikhonov 1963). A number of popular learning algorithms can be interpreted as a supervised regularization learning framework that consists of different loss functions and complexity measures in an appropriately chosen Reproducing Kernel Hilbert Space (RKHS).

Given a set of labeled samples \({\mathcal {S}}_{l}=\{{\varvec{x}}_{i},y_{i}\}_{i=1}^{l}\) generated according to a probability distribution, the standard supervised regularization learning framework can be written as:

where \(r_{i}=1-y_{i}f({\varvec{x}}_{i})\) for \(i=1,\ldots ,l\), v stands for some loss function on the labeled samples, \(\lambda _{1}\) is the weight of \(\Vert f\Vert ^{2}_{k}\) that controls the complexity of the unknown function f, in which \(\Vert \cdot \Vert _{k}\) is a norm related to a Mercer kernel k in the RKHS \({\mathcal {H}}_{k}\). For the choice of k, it can be the linear kernel function, the polynomial kernel function (POLY) and the radial basis function kernel function (RBF) and so on. In the numerical experiments, the RBF kernel (6) which gains an advantages over others (Lu et al. 2018) will be employed:

where \(\sigma \) is the kernel parameter.

It is clear that the excellent performance of standard supervised regularization learning framework (5) is in premise of sufficient labeled samples. However, in many real-world applications, the acquisition of labeled data is generally more difficult than the collection of unlabeled ones. In such situations, a learning framework that is able to make full use of unlabeled data to improve recognition performance is of potentially great significance. In the light of this idea, by adding a manifold regularization term \(\Vert f\Vert ^{2}_{{\mathcal {I}}}\) in formulation (5), Belkin et al. (2006) proposed the following semi-supervised manifold regularization learning framework:

where \(\lambda _{2}\) is the weight of \(\Vert f\Vert ^{2}_{{\mathcal {I}}}\) in the low dimensional manifold (or intrinsic norm), which enforces f smoothness along the intrinsic manifold.

Given l labeled and m unlabeled data in the training set

where \({\varvec{x}}_{i}\in R^{d}, i=1,\ldots ,n\) (\(n=l+m\) is the number of training samples), and \(y_{i}\in \{-1,1\}\) for \(i=1,\ldots ,l\). \({\mathcal {S}}_{u}\) denotes a set of m unlabeled data which are drawn according to a marginal distribution. The manifold regularization term \(\Vert f\Vert ^{2}_{{\mathcal {I}}}\) can be reexpressed as:

where \({\mathbf{f }}=[f({\varvec{x}}_{1}), \ldots , f({\varvec{x}}_{n})]^{T}\) is the vector of the n values of f on the training data, L is the graph Laplacian associated to \({\mathcal {S}}\), given by \(L=G-W\), where W is the adjacency matrix which can be defined by the p nearest neighbors, and its non-negative edge weight \(W_{ij}\) represents the similarity of every pair of input instances. G is a diagonal matrix with its i-th diagonal element \(G_{ii}=\mathop \sum \nolimits ^{n}_{j=1}W_{ij}\) represents the weight degree of vertex i. When the manifold regularization term (9) is used in the semi-supervised regularization learning framework (7), we can understand it by the means: if the samples \({\varvec{x}}_{i}\) and \({\varvec{x}}_{j}\) has higher similarity (\(W_{ij}\) is larger), the difference of \(f({\varvec{x}}_{i})\) and \(f({\varvec{x}}_{j})\) will obtain a big punishment.

By applying the hinge loss function to the semi-supervised manifold regularization learning framework (7), Belkin et al. (2006) proposed the classical LapSVM which can exploit the geometric information of the marginal distribution embedded in unlabeled data to construct a more reasonable classifier. Due to its excellent performance, LapSVM becomes a powerful choice for SSL. More details about LapSVM can be found in Belkin et al. (2006).

3 Semi-supervised SVM with asymmetric squared loss

In this section, we elaborate the formulation of the proposed semi-supervised SVM with the asymmetric squared loss (asy-LapSVM) for semi-supervised binary classification problem. After giving the detailed derivations of the proposed asy-LapSVM in the linear and nonlinear cases, we present a simple and efficient functional iterative method to optimize them. Then we prove the convergence of the proposed functional iterative method in theory. And in the end, we summary the proposed asy-LapSVM.

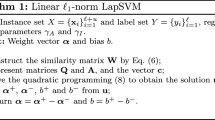

3.1 Linear asy-LapSVM

Although LapSVM has shown good generalization, we notice that hinge loss function is noise sensibility. To further improve its generalization performance, we provide a stable asy-LapSVM, especially for noise-corrupted data. In specific, unlike LapSVM, we exploit the asymmetric squared loss (4) as a surrogate of the hinge loss for the proposed linear asy-LapSVM. Meanwhile, we maximize the margin measured by the expectile distance between two classes by optimizing with respect to both the weight vector \({\varvec{w}}\) and the bias term b of the linear asy-LapSVM classifier \(f({\varvec{x}})= {\varvec{w}}^{T}{\varvec{x}}+b\). Thus, the regularization term \(\Vert f\Vert ^{2}_{k}\) can be expressed as:

As for the manifold regularization term \(\Vert f\Vert ^{2}_{{\mathcal {I}}}\), it has the following form:

where \(D=[{\varvec{x}}_{1},\ldots ,{\varvec{x}}_{n}]^{T}\) and \({\mathbf{f }}=[f({\varvec{x}}_{1}), \dots ,f({\varvec{x}}_{n})]^{T}= [{\varvec{w}}^{T}{\varvec{x}}_{1}, \dots ,{\varvec{w}}^{T}{\varvec{x}}_{n}]^{T}=D{\varvec{w}}\), in which we deliberately reduce the bias term b for convenience.

By substituting the asymmetric squared loss (4), the regularization term (10) and the manifold regularization term (11) into the semi-supervised manifold regularization learning framework (7), the primal problem of the linear asy-LapSVM can be formulated as

where \(0<u<1\), c and \(\lambda \) are the regularization parameters, \({\varvec{\xi }}\) is the error variable vector, \({\varvec{e}}_{l}\) is the vector of ones of l dimension, \({\varvec{e}}\) is the vector of ones of n dimension, \(Y\in R^{l\times n}\) is a matrix with elements \(Y_{ii}=y_{i}\) and other elements are zeros.

Introducing the nonnegative Lagrange parameter vectors \({\varvec{\alpha }}_{1}\) and \({\varvec{\alpha }}_{2}\), the Lagrangian function for the problem (12) can be expressed as

According to the following Karush–Kuhn–Tucker (KKT) conditions

we get

Then the following dual problem of (12) can be derived by substituting the above Eqs. (17)–(19) into the Lagrangian function (13)

where \(F=Y(D(I+\lambda D^{T}LD)^{-1}D^{T}+{\varvec{e}}{\varvec{e}}^{T})Y^{T}\).

Although the proposed asy-LapSVM can be solved by the classical quadratic programming, inspired by the idea in Balasundaram and Benipal (2016), we adopt a simple functional iterative method, which leads to the minimization of a differentiable convex function in a space of dimensionality equal to the number of classified points and in some cases is dramatically faster than a standard quadratic programming SVM solver, to solve the dual problem (20). Specifically, based on the Karush–Kuhn–Tucker (KKT) necessary and sufficient optimality conditions for the dual problem (20), we have

\(\Leftrightarrow \)

\(\Leftrightarrow \)

where the symbol “\(\perp \)” represents two vectors are orthogonal. By exploiting the easily established identity between any two vectors \({\varvec{a}}\) and \({\varvec{b}}\) (Fung and Mangasarian 2004):

where \({\varvec{b}}_{+}=\max \{{\varvec{0}},{\varvec{b}}\}\) and \(|{\varvec{b}}|\) denotes the vector with all of its components set to absolute values, the optimality conditions (23) can be written in the following equivalent form:

Next, let \({\varvec{\alpha }}={\varvec{\alpha }}_{1}-{\varvec{\alpha }}_{2}\), we obtain the following absolute value equation problem:

\(\Leftrightarrow \)

The problem (27) can be solved by a simple functional iterative method given by

After the optimal solution \({\varvec{\alpha }}^{*}\) is obtained, we get the following linear asy-LapSVM classifier which is represented by the dual variables

3.2 Nonlinear asy-LapSVM

The same as the linear case, the asymmetric squared loss (4) is exploited in the proposed nonlinear asy-LapSVM, and the margin measured by the expectile distance is maximized by optimizing with respect to both the weight vector \({\varvec{w}}\) and the bias term b of the nonlinear asy-LapSVM classifier \(f({\varvec{x}})= {\varvec{w}}^{T}\phi ({\varvec{x}})+b\), where \(\phi (\cdot )\) is a nonlinear mapping function from a low-dimensional input space to a high-dimensional Hilbert space \({\mathcal {H}}\). Thus, the regularization term \(\Vert f\Vert ^{2}_{k}\) can be expressed as:

As for the manifold regularization term \(\Vert f\Vert ^{2}_{{\mathcal {I}}}\), on the basis of the constructed graph laplacian matrix L, the manifold regularization term is defined as:

where \(D_{1}=[\phi ({\varvec{x}}_{1}),\ldots ,\phi ({\varvec{x}}_{n})]^{T}\) includes all of the labeled and unlabeled samples in \({\mathcal {S}}\) and \({\mathbf{f }}=[f({\varvec{x}}_{1}),\dots ,f({\varvec{x}}_{n})]^{T}=[{\varvec{w}}^{T}\phi ({\varvec{x}}_{1}),\dots ,{\varvec{w}}^{T}\phi ({\varvec{x}}_{n})]^{T}=D_{1}{\varvec{w}}\), in which we deliberately reduce the bias term b for convenience.

By substituting the asymmetric squared loss (4), the regularization term (30) and the manifold regularization term (31) into the the semi-supervised manifold regularization learning framework (7), the primal problem of the nonlinear asy-LapSVM can be formulated as

where the constant u, the parameters c and \(\lambda \), the vectors \({\varvec{\xi }}\), \({\varvec{e}}_{l}\) and \({\varvec{e}}\), the matrix Y have the same meanings as the ones in problems (12).

According to the Representer Theorem (Belkin et al. 2006), \({\varvec{w}}\) can be expressed as \({\varvec{w}}=\mathop \sum \nolimits ^{n}_{i=1}\rho _{i}\phi ({\varvec{x}}_{i})=D_{1}^{T}{\varvec{\rho }}\), where \({\varvec{\rho }}\in R^{n}\) is a parameter vector. Then the terms containing \({\varvec{w}}\) in the optimization problem (32) can be rewritten as

where \(K\in R^{n\times n}\) is a Gram matrix with elements \(K_{i,j}=k({\varvec{x}}_{i},{\varvec{x}}_{j})\).

Based on the above analysis, the nonlinear optimization problem (32) can be converted into the following form:

where \(F_{1}=K+\lambda KLK\) is a symmetric positive semi-definite matrix since the Gram matrix K and the graph Laplacian L are two symmetric positive semi-definite matrices.

The Lagrange function of the optimization problem (35) can be written as

where \({\varvec{\alpha }}_{1}\) and \({\varvec{\alpha }}_{2}\) are Lagrange parameter vectors.

Differentiating the Lagrange function (36) with respect to \({\varvec{\rho }}\), b, \({\varvec{\xi }}\) and setting them equal to zero, we can obtain

It is worth noting that although the matrix \(F_{1}\) in (37) is always positive semi-definite, it may not be well conditioned in some situations. In the light of the idea of regularization, \(F_{1}^{-1}\) can be revised by \((\epsilon I+F_{1})^{-1}\), where \(\epsilon I\) \((\epsilon >0)\) is a regularization term. In the following, we shall continue to use \(F_{1}^{-1}\) with the understanding that, if need be, \((\epsilon I+F_{1})^{-1}\) is to be used.

Then the following dual problem of (35) can be derived by substituting the above Eqs. (37)–(39) into the Lagrangian function (36)

where \(F_{2}=Y(KF_{1}^{-1}K+ee^{T})Y^{T}\).

We solve the dual problem (40) by a functional iterative method. Specifically, based on the Karush–Kuhn–Tucker (KKT) necessary and sufficient optimality conditions for the dual problem (40) , we have

\(\Leftrightarrow \)

\(\Leftrightarrow \)

By exploiting the identity (24), the optimality conditions (43) can be written in the following equivalent form:

Let \({\varvec{\alpha }}={\varvec{\alpha }}_{1}-{\varvec{\alpha }}_{2}\), we obtain the following absolute value equation problem:

\(\Leftrightarrow \)

The problem (46) can be solved by the simple functional iterative method given by

After the optimal solution \({\varvec{\alpha }}^{*}\) is obtained, we get the following nonlinear asy-LapSVM classifier which is represented by the dual variables

where \({\varvec{\rho }}^{*}=F_{1}^{-1}KY^{T}{\varvec{\alpha }}^{*}\) and \(b^{*}={\varvec{e}}^{T}Y^{T}{\varvec{\alpha }}^{*}\).

Next, we prove the convergence of the proposed functional iterative method in the following Theorem.

Theorem 1

Assume that \({\varvec{\alpha }}\in R^{l}\) is the solution of the absolute value Eq. (46) and \(0.5<u<1\), then, for any starting vector \({\varvec{\alpha }}^{0}\in R^{l}\), the sequence of iterates generated by (47) will always converge to \({\varvec{\alpha }}\) with linear rate of convergence.

Proof

According to the conditions given in the above theorem, by using (46) and (47), we get

Since

we have

Let \(\{q_{1},\ldots ,q_{l}\}\) be the set of the nonnegative eigenvalues of the positive semi-definite matrix \(F_{2}\). Clearly, the eigenvalues of \((F_{2}-\frac{I}{cu(1-u)})(F_{2}+\frac{I}{cu(1-u)})^{-1}\) will become:

So, \((2u-1) ||(F_{2}-\frac{1}{cu(1-u)}) (F_{2}+ \frac{1}{cu(1-u)})^{-1}| |=(2u-1) \max \{|\frac{q_{1}- \frac{1}{cu(1-u)}}{q_{1}+ \frac{1}{cu(1-u)}}|, \ldots ,|\frac{q_{l}- \frac{1}{cu(1-u)}}{q_{l}+\frac{1}{cu(1-u)}}|\}<1\) is always true. This shows the Theorem 1 holds. \(\square \)

Although we only prove the convergence of the nonlinear case, the convergence of the linear case also can be easily gotten in the similar way. In the end, we summary the proposed nonlinear asy-LapSVM as follows:

Input. A set of l labeled data and m unlabeled data \({\mathcal {S}}={\mathcal {S}}_{l}\cup {\mathcal {S}}_{u}= \{({\varvec{x}}_{i},y_{i})\}_{i=1}^{l} \cup \{{\varvec{x}}_{i}\}_{i=l+1}^{n}\), the constant u, the positive parameters c and \(\lambda \), the kernel parameter \(\sigma \), the number of the nearest neighbors p, the vector of ones of l dimension \({\varvec{e}}_{l}\), the vector of ones of n dimension e, the matrix \(Y\in R^{l\times n}\) with elements \(Y_{ii}=y_{i}\) according to the class of each sample, the maximum number of iterations \(maxI =15\), the tolerance \(tol=10^{-4}\), and initial iteration number \(t=0\).

Step 1. Compute the graph Laplacian matrix \(L=G-W\), in which W denotes the adjacency matrix which is constructed by p nearest neighbors with n nodes in \({\mathcal {S}}\) and edge weights \(W_{ij}\) are calculated by binary weights. G denotes the diagonal matrix with its diagonal elements \(G_{ii}=\mathop \sum \nolimits ^{n}_{j=1}W_{ij}\) representing the weight degree of the vertex i.

Step 2. Choose a proper kernel function \(k(\cdot ,\cdot )\), and compute the Gram matrix K.

Step 3. Compute the matrices

where \(I \in {\mathcal {R}}^{l\times l}\) is an identity matrix.

Step 4. Compute the initial vector

Step 5. Compute \({\varvec{\alpha }}^{t+1}\) via

Step 6. If \(\Vert w^{t+1}-w^{t}\Vert < tol\) or \(t> maxI\), stop; else, let \(t=t+1\) and goto Step 5.

Step 7. Compute the bias term \(b={\varvec{e}}^{T}Y^{T}{\varvec{\alpha }}^{t}\).

Step 8. Derive the nonlinear asy-LapSVM classifier

where \({\varvec{\rho }}=(K+\lambda KLK)^{-1}KY^{T}{\varvec{\alpha }}^{t}\).

4 Numerical experiments

In this section, we will verify the validity of the proposed asy-LapSVM by comparing it with several closely relevant algorithms on different kinds of datasets with noise of different variances. After the experimental setup and data are described in Sects. 4.1 and 4.2, we carefully analyze the performance of our proposed asy-LapSVM in the following subsections.

4.1 Experimental setup

We compare the proposed asy-LapSVM with a group of popular supervised learning (SL) algorithms [SVM (Cristianini and Shawe-Taylor 2000), regularized least squares (RLS) (Belkin et al. 2006), extreme learning machine (ELM) (Huang et al. 2006), aLS-SVM (Huang et al. 2014)] and semi-supervised learning (SSL) algorithms (LapSVM (Belkin et al. 2006), Laplacian RLS (LapRLS) (Belkin et al. 2006), semi-supervised ELM (SSELM) (Huang et al. 2014)). In the experiments, the sigmoid activation function is used for the two algorithms with ELM, and the number of hidden neurons is set to 1000. For the remaining algorithms and the proposed asy-LapSVM, the RBF kernel (6) which meets the Mercer’s theorem is employed. For the choice of parameters, a set of possible values are first predefined, and 5-fold cross validation is used for all the compared methods. In order to avoid the bias caused by different sample partition, 5-fold cross validation with different partition repeats 10 times at random, and the average testing accuracies are computed to obtain the final evaluation results. The ranges of the five parameters are listed as follows:

-

\(c: \{2^{-3},2^{-2},2^{-1},2^{0},2^{1},2^{2},2^{3}\}\),

-

\(\lambda : \{2^{-3},2^{-2},2^{-1},2^{0},2^{1},2^{2},2^{3}\}\),

-

\(p: \{5,10,15,20\}\),

-

\(\sigma : \{2^{-3},2^{-2},2^{-1},2^{0},2^{1},2^{2},2^{3}\}\),

-

\(u: \{0.55, 0.65, 0.75, 0.83, 0.95, 0.99\}\).

In the model training, we randomly select 10% training samples as labeled samples, and the remaining training samples are regarded as unlabeled samples. For supervised algorithms, we use only the selected labeled samples to train the classifier, while for semi-supervised algorithms, we use all the training set with both labeled and unlabeled samples to train the classifier. The simulations of all the algorithms are carried out in MATLAB R2016a on a personal computer with system configuration: Intel Core i7 (3.6 GHz) and 8 GB random access memory. We use the quadratic programming (QP) solver embedded in MATLAB to solve all the QP problems and the MATLAB operation “\(\setminus \)” to realize the matrix inverse involved in the algorithms.

4.2 Data specification

In this subsection, several publicly available datasets including one artificial dataset, six UCIFootnote 1 datasets and five image datasets are employed to study the performance of the proposed asy-LapSVM. The G50CFootnote 2 artificial dataset is generated from two unit covariance normal distributions with equal probabilities. The class means are adjusted so that the Bayes error is 5%. For the image datasets, the Coil-20Footnote 3 dataset includes 1440 grey-scale images sampled from 20 objects, and each object has 72 images with the size of \(32\times 32\). The USPSFootnote 4 handwritten digit dataset consists of 7,291 training images and 2,007 test images with size of \(16\times 16\). The YaleBFootnote 5 face recognition dataset admittedly contains manifold structures includes 2414 grey human facial images sampled from 38 persons, and each person has about 64 different images with size of \(32\times 32\). The Multiple Features (MF)Footnote 6 handwritten numeral dataset consists of 10 classes and 2,000 samples. The NUS-WIDE-OBJECT (NWO)Footnote 7 dataset contains 31 categories and 30,000 network images (17,927 for training and 12,703 for testing) created by the media search laboratory of National University of Singapore.

In order to fit into the binary-class environment, we choose two pairwise digits in the USPS dataset, three pairwise objects in the Coil-20 dataset, three pairwise facial images in the YaleB face recognition dataset, eight pairwise subsets in the MF dataset, and seven pairwise subsets in the NWO dataset to constitute 23 binary-class image datasets, namely, USPS1, USPS2, Coil-201, Coil-202, Coil-203, YaleB1, YaleB2, YaleB3, MF1, MF2, MF3, MF4, MF5, MF6, MF7, MF8, NWO1, NWO2, NWO3, NWO4, NWO5, NWO6, and NWO7. To facilitate the calculation, the well-known principal component analysis (PCA) is adopted to preprocess these image datasets, where the dimension and accumulative contribution rate after processing are displayed in Table 1. The features of each dataset are corrupted by zero-mean Gaussian noise, and the ratio of the variance of noise to that of the features, denoted as z, is set to be 0 (i.e., noise-free), 0.05, and 0.1. The training and test data are aggravated by the same noise. The important statistics of these employed datasets are summarized in Table 1, where “No.” denotes the ordinal numbers of the datasets. “Selected class” and “Selected size” denote the classes and sizes selected from the original datasets, respectively. “Dimension” denotes the number of the original features.

4.3 Experimental results and discussions

The results of the eight algorithms on the aforementioned datasets with different proportion noise are shown in Table 2, where the best ones are presented in bold. In addition, the learning time (second) taken by each method under the optimal parameters obtained by one 5-fold cross validation is listed in Table 3. For better interpretation, the comparison results of the time among the proposed asy-LapSVM and other three SSL algorithms in the absence of noise are visualized in Fig. 2, where indexes on the horizontal axis denote the ordinal numbers of the employed datasets.

From the experimental results and experimental runtime reported in Tables 2 and 3 and Fig. 2, we can get the following conclusions:

-

(1)

The SSL algorithms including the proposed asy-LapSVM perform better than the corresponding SL ones in most cases, which might be attribute to the manifold regularization which helps classifiers gain more geometric information embedded in unlabeled data to achieve better performance.

-

(2)

The proposed asy-LapSVM stands out the other three SSL algorithms (LapSVM, LapRLS and SSELM) on 54 cases, which implies that adopting the asymmetric squared loss can improve the prediction accuracy, especially for noise-corrupted data. For example, the accuracy of asy-LapSVM in YaleB3 dataset with \(z=0.10\) is 92.55%, and nearly increases by 2.55% compared with the second best method LapSVM.

-

(3)

From Table 3, it can be easy to know that the SSL algorithms generally consume longer time than the SL algorithms. The major reason is that, in addition to the few labeled data which is considered in SL algorithms, the SSL algorithms need to consider lots of unlabeled data.

-

(4)

From Fig. 2, we can see that although the presented functional iterative method for the proposed asy-LapSVM costs more time than LapRLS, it shows faster learning speed than the other two SSL algorithms in most cases, which implies the feasibility of the presented functional iterative method.

To sum up, the proposed method is always the best in terms of classification accuracy and also preferable in terms of learning time among all the SSL algorithms, which implies that the asy-LapSVM is a powerful SSL algorithm for semi-supervised binary-class classification problems in the presence of noise.

4.4 Statistical test

To further compare the performance of the proposed asy-LapSVM with the relevant SSL algorithms, we employ the well-known nonparametric Friedman test with the corresponding post hoc tests (Demsar 2006). The average ranks of four methods on 90 employed datasets in terms of accuracy are shown in Table 4. Under the null hypothesis that all the algorithms are equivalent, we compute the Friedman statistics

and

which is distributed according to the \({\mathcal {F}}\)-distribution with (3, 87) degrees of freedom.

For \(\alpha =0.05\), \({\mathcal {F}}_{\alpha }(3,267)=2.6384<{5.15}\), so we reject the null hypothesis. Next, the Nemenyi post-hoc test is exploited to further compare the four algorithms in pairs. Based on the Studentized range statistic divided by \(\sqrt{2}\), we know \(q_{\alpha }=2.569\) and the critical difference

Thus, if the average ranks of two algorithms differ by at least CD, then their performance is significantly different. From Table 4, we can derive the differences between the proposed asy-LapSVM and other three SSL algorithms as follows:

where \(\mathrm d(a-b)\) denotes the differences between two algorithms \(\mathrm{a}\) and \(\mathrm{b}\). Then we can summarize that the proposed asy-LapSVM performs significantly better than the other SSL algorithms on the employed datasets with noise of different variances.

4.5 Effect of the parameter u

In this subsection, we study the influence of the parameter u in loss function on the performance of the proposed asy-LapSVM. In the experiments, the value of u is tuned in the range \(\{0.55,0.65,0.75,0.83,0.95,0.99\}\). By changing it in the given range and setting other parameters to be optimal values obtained by one 5-fold cross validation, we estimate its influence on seven employed datasets with \(z=0\) and the results are displayed in Fig. 3. As shown in Fig. 3, all the curves are almost invariable with the different values of u, which means that the value of u has little effect on the performance of the proposed algorithm. In other words, the proposed asy-LapSVM is insensitive to the parameter u.

4.6 Analysis for the convergence

In this subsection, by exploiting an empirical justification (Ye 2005), we discuss the convergence of the proposed asy-LapSVM on four employed datasets with \(z=0\). The learning results are illustrated in Fig. 4, where the abscissa denotes the number of iterations and the ordinate denotes the logarithm values of the objective function (35). From Fig. 4, it can be clearly seen that the logarithm values of the objective function change with the iterations and go fast steady in less than 10 iterations. Therefore, we can conclude that the proposed algorithm converges in limited iterations.

5 Conclusion

A novel asy-LapSVM is proposed in this paper to enhance the generalization performance of the classical LapSVM. To our knowledge, it is almost the first time to employ the asymmetric squared loss to improve the performance of the SSL algorithms. Moreover, we present a simple and efficient functional iterative method to solve the proposed asy-LapSVM. And we further investigate the convergence of the functional iterative method from both theoretical and experimental aspects. The validity of the proposed asy-LapSVM and the feasibility of the presented functional iterative method are demonstrated by numerical experiments on a series of popular datasets with noise of different variances.

However, limitations of the proposed asy-LapSVM still exist, such as it is not suitable for online learning. The research along this line will be our future work.

Notes

References

Balasundaram S, Benipal G (2016) On a new approach for Lagrangian support vector regression. Neural Comput Appl. 29(9):533–551

Belkin M, Niyogi P, Sindhwani V (2006) Manifold regularization: a geometric framework for learning from labeled and unlabeled examples. J Mach Learn Res 7:2399–2434

Bi J, Zhang T (2004) Support vector classification with input data uncertainty. Neural Inf Process Syst (NIPS) 17:161–168

Calma A, Reitmaier T, Sick B (2018) Semi-supervised active learning for support vector machines: a novel approach that exploits structure information in data. Inform Sci 456:13–33

Chapelle O, Scholkopf B, Zien A (2006) Semi-supervised learning. MIT Press, Cambridge

Chen W, Shao Y, Xu D, Fu Y (2014) Manifold proximal support vector machine for semi-supervised classification. Appl Intell 40:623–638

Cristianini N, Shawe-Taylor J (2000) An introduction to support vector machines and other kernel-based learning methods. Cambridge University Press, Cambridge

Demsar J (2006) Statistical comparisons of classifiers over multiple data sets. J Mach Learn Res 7:1–30

Du B, Tang X, Wang Z, Zhang L, Tao D (2019) Robust graph-based semisupervised learning for noisy labeled data via maximum correntropy criterion. IEEE Trans Cybern 49(4):1440–1453

Fung G, Mangasarian OL (2004) A feature selection Newton method for support vector machine classification. Comput Optim Appl 28(2):185–202

Gu N, Fan P, Fan M, Wang D (2019) Structure regularized self-paced learning for robust semi-supervised pattern classification. Neural Comput Appl 31(10):6559–6574

Guzella TS, Caminhas WM (2009) A review of machine learning approaches to spam filtering. Expert Syst Appl 36(7):10206–10222

Huang G, Zhu Q, Siew C (2006) Extreme learning machine: theory and applications. Neurocomputing 70:489–501 (Neural Networks Selected Papers from the 7th Brazilian Symposium on Neural Networks, SBRN’04)

Huang X, Shi L, Suykens JAK (2014) Support vector machine classifier with pinball loss. IEEE Trans Pattern Anal Mach Intell 36(5):984–997

Huang X, Shi L, Suykens JAK (2014) Asymmetric least squares support vector machine classifiers. Comput Stat Data Anal 70:395–405

Huang G, Song S, Gupta J, Wu C (2014) Semi-supervised and unsupervised extreme learning machines. IEEE Trans Cybern 44:2405–2417

Jumutc V, Huang X, Suykens JAK (2013) Fixed-size Pegasos for hinge and pinball loss SVM. In: Proceedings of the international joint conference on neural network, Dallas, TX, USA. pp 1122–1128

Khemchandani R, Pal A (2016) Multi-category Laplacian least squares twin support vector machine. Appl Intell 45:458–474

Koenker R (2005) Quantile regression. Cambridge University Press, Cambridge

Li Z, Tian Y, Li K, Zhou F, Yang W (2017) Reject inference in credit scoring using semi-supervised support vector machines. Expert Syst Appl 74:105–114

Lu L, Lin Q, Pei H, Zhong P (2018) The aLS-SVM based multi-task learning classifiers. Appl Intell 48:2393–2407

Ma J, Wen Y, Yang L (2019) Lagrangian supervised and semi-supervised extreme learning machine. Appl Intell 49(2):303–318

Melki G, Kecman V, Ventura S, Cano A (2018) OLLAWV: online learning algorithm using worst-violators. Appl Soft Comput 66:384–393

Pei H, Chen Y, Wu Y, Zhong P (2017) Laplacian total margin support vector machine based on within-class scatter. Knowl-Based Syst 119:152–165

Pei H, Wang K, Zhong P (2017) Semi-supervised matrixized least squares support vector machine. Appl Soft Comput 61:72–87

Pei H, Wang K, Lin Q, Zhong P (2018) Robust semi-supervised extreme learning machine. Knowl-Based Syst 159:203–220

Scardapane S, Fierimonte R, Lorenzo PD, Panella M, Uncini A (2016) Distributed semi-supervised support vector machines. Neural Netw. 80:43–52

Shivaswamy P, Bhattacharyya C, Smola A (2006) Second order cone programming approaches for handling missing and uncertain data. J Mach Learn Res 7:1283–1314

Steinwart I, Christmann A (2008) Support vector machines. Springer, New York

Sun S (2013) Multi-view Laplacian support vector machines. Appl Intell 41(4):209–222

Tikhonov AN (1963) Regularization of incorrectly posed problems. Sov. Math. Dokl 4:1624–1627

Tur G, Hakkani-Tür D, Schapire RE (2005) Combining active and semi-supervised learning for spoken language understanding. Speech Commun 45(2):171–186

Vapnik VN (1995) The nature of statistical learning theory. Springer, New York

Wang K, Zhong P (2014) Robust non-convex least squares loss function for regression with outliers. Knowl-Based Syst 71:290–302

Wang K, Zhu W, Zhong P (2015) Robust support vector regression with generalized loss function and applications. Neural Process Lett 41:89–106

Xu H, Caramanis C, Mannor S (2009) Robustness and regularization of support vector machines. J Mach Learn Res 10:1485–1510

Ye J (2005) Generalized low rank approximations of matrices. Mach Learn 61(1–3):167–191

Zhang T, Liu S, Xu C, Lu H (2011) Boosted multi-class semi-supervised learning for human action recognition. Pattern Recognit 44(10–11):2334–2342

Zhao J, Xu Y, Fujita H (2019) An improved non-parallel Universum support vector machine and its safe sample screening rule. Knowl-Based Syst 170:79–88

Zhong P (2012) Training robust support vector regression with smooth non-convex loss function. Optim Methods Softw 27(6):1039–1058

Acknowledgements

The authors gratefully acknowledge the helpful comments and suggestions of the reviewers, which have improved the presentation.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Pei, H., Lin, Q., Yang, L. et al. A novel semi-supervised support vector machine with asymmetric squared loss. Adv Data Anal Classif 15, 159–191 (2021). https://doi.org/10.1007/s11634-020-00390-y

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11634-020-00390-y