Abstract

Over the past two decades, support vector machines (SVMs) have become a popular supervised machine learning model, and plenty of distinct algorithms are designed separately based on different KKT conditions of the SVM model for classification/regression with different losses, including convex and or nonconvex loss. In this paper, we propose an algorithm that can train different SVM models in a unified scheme. First, we introduce a definition of the least squares type of difference of convex loss (LS-DC) and show that the most commonly used losses in the SVM community are LS-DC loss or can be approximated by LS-DC loss. Based on the difference of convex algorithm (DCA), we then propose a unified algorithm called UniSVM which can solve the SVM model with any convex or nonconvex LS-DC loss, wherein only a vector is computed by the specifically chosen loss. UniSVM has a dominant advantage over all existing algorithms for training robust SVM models with nonconvex losses because it has a closed-form solution per iteration, while the existing algorithms always need to solve an L1SVM/L2SVM per iteration. Furthermore, by the low-rank approximation of the kernel matrix, UniSVM can solve large-scale nonlinear problems efficiently. To verify the efficacy and feasibility of the proposed algorithm, we perform many experiments on small artificial problems and large benchmark tasks both with and without outliers for classification and regression for comparison with state-of-the-art algorithms. The experimental results demonstrate that UniSVM can achieve comparable performance in less training time. The foremost advantage of UniSVM is that its core code in Matlab is less than 10 lines; hence, it can be easily grasped by users or researchers.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Over the past two decades, support vector machines (SVMs) (Vapnik 1999, 2000), which are based on structural risk minimization, have become a computationally powerful machine learning method for supervised learning. They are widely used in classification and regression tasks (Vapnik 2000; Schölkopf and Smola 2002; Steinwart and Christmann 2008), such as disease diagnosis, face recognition, and image classification, etc.

Assuming that a training data set \(\mathbb {T}=\{(\varvec{x}_i, y_i)\}_{i=1}^m\) is drawn independently and identically from a probability distribution on \((\mathcal {X},\mathcal {Y})\) with \(\mathcal {X}\subset \mathbb {R}^d\) and \(\mathcal {Y}= \{-1, +1\}\) for classification or \(\mathcal {Y}= \mathbb {R}\) for regression, the SVM model solves the following optimization problem:

where \(\mathbb {H}\) is a reproducing kernel Hilbert space (RKHS) induced by a kernel function \(\kappa (\varvec{x},\varvec{z}) = \langle \phi (\varvec{x}), \phi (\varvec{z})\rangle \) with a feature mapping \(\phi :\mathbb {R}^d\mapsto \mathbb {H}\), \(\ell (\cdot ,\cdot )\) is a margin-based loss with different choices, and \(\lambda \) is the regularizer. The output prediction function f is parameterized by \(\varvec{w}\) as \(f(\varvec{x}) = \langle \varvec{w}, \phi (\varvec{x})\rangle \). Herein, we take the form without offset for f as in previous papers (Steinwart 2003; Keerthi et al. 2006; Steinwart et al. 2011). The offset can also be considered by adding an extra attribute 1 to every sample \(\varvec{x}\) or to its feature mapping \(\phi (\varvec{x})\).

For nonlinear problems, the model (1) cannot be solved efficiently because \(\phi (\cdot )\) is always a high-dimensional mapping and possibly infinite. By applying the representer theorem (Schölkopf et al. 2001; Schölkopf and Smola 2002; Steinwart and Christmann 2008; Shalev-Shwartz and Ben-David 2014), there exists a vector \(\varvec{\alpha }^*\in \mathbb {R}^m\) such that the solution of (1) admits \(\varvec{w}^*=\sum _{i=1}^{m}\alpha ^*_i \phi (\varvec{x}_i).\) Hence, substituting \(\varvec{w}=\sum _{i=1}^{m}\alpha _i \phi (\varvec{x}_i)\) in (1), we have the following equivalent finite dimensional optimization problem,

where the kernel matrix \({\varvec{K}}\) satisfies \({\varvec{K}}_{i,j}=\kappa (\varvec{x}_i,\varvec{x}_j)\) and \({\varvec{K}}_i\) is the k-th row of \({\varvec{K}}\). The similar model of (2) can also be derived by duality (Vapnik 2000; Boyd and Vandenberghe 2009), where the coefficients \(\varvec{\alpha }\) may be properly bounded (see (5), (6) and (9) for details).

Many scholars have studied different SVM models based on different loss functions. The typical works (Vapnik 2000; Suykens and Vandewalle 1999a; Keerthi et al. 2006; Zhou et al. 2009; Steinwart et al. 2011; Zhou 2013; Zhou et al. 2013; Zhou 2016) are focused on SVM models with convex loss, such as L1-SVM with the hinge loss, L2-SVM with the squared hinge loss, LS-SVM with the least squares least loss, and the support vector regression (SVR) with \(\varepsilon \)-insensitive loss, etc. The state-of-the-art SVM tool, LibSVM (including SVC and SVR) (Chang and Lin 2011), covers some cases with convex losses and has numerous applications.

The algorithms based on convex losses are sensitive to outliers, where “outlier” refers to the contaminated samples far away from the majority of instances with the same labels (Hampel et al. 2011) that may emerge through mislabeling. This is because the contaminated data have the largest weights (support values) to represent the output function in this case.

Many researchers have used nonconvex loss functions to weaken the influence of outliers. For example, Shen et al. (2003), Collobert et al. (2006), and Wu and Liu (2007) study the robust SVM with the truncated hinge loss; Tao et al. (2018) study the robust SVM with the truncated hinge loss and the truncated squared hinge loss. Based on difference of convex algorithm (DCA) procedure (Le Thi and Pham Dinh 2018; Yuille and Rangarajan 2003), researchers have presented algorithms to iteratively solve L1SVM/L2SVM to obtain the solutions of their proposed nonconvex models. By introducing the smooth nonconvex losses, Feng et al. (2016) propose the robust SVM models which solve a re-weighted L2SVM many times. See Subsect. 2.2.2 for the representative robust SVMs.

All the robust SVM algorithms mentioned above have double-layer loops. The inner loop is used to solve a convex problem with parameters adjustable by the outer loop, and the outer loop adjusts those parameters to reach the solution of the nonconvex model.

However, the inner loop of these algorithms is computationally expensive. For example, Collobert et al. (2006), Wu and Liu (2007), Feng et al. (2016), Tao et al. (2018) solve a constrained quadratic programming (QP) defined by L1SVM, L2SVM or re-weighted L2SVM, and all state-of-the-art methods for quadratic programming require substantial numbers of iterations (such as SMO (Platt 1999; Keerthi et al. 2001; Chen et al. 2006) or the tools quadprog in Matlab). In Tao et al. (2018), some efficient techniques based on the coordinate descent are given to reduce the cost of the inner loop, but it remains necessary to solve L1SVM/L2SVM, perhaps with smaller size.

There are three weaknesses to the existing algorithms of the robust SVM models. The first is that the total computational complexity is high, resulting in long training time which limits the algorithms in processing large-scale problems. The second is that most of the existing algorithms are only suitable for classification problems and require complicated modifications when applied for regression problems. The third is that all the existing algorithms are designed separately based on the special kinds of losses, thus costing much effort for the readers/users to learn the different algorithms or to change the losses before making use of them.

Recently, Chen and Zhou (2018) proposed the robust LSSVM based on the truncated least squares loss, which partly resolves the first two weaknesses by removing the inner loop and solving classification/regression tasks similarly). To extend this benefit to all the other losses, by defining an LS-DC loss, we propose a unified solution for different models with different losses, named as UniSVM, which overcomes all three aforementioned weaknesses.

Here, we only focus on the positive kernel case, namely, where \(\mathbb {H}\) is an RKHS. For the nonpositive kernel case, Ong et al. (2004) generalized this type of learning problem to reproducing kernel Kreĭn spaces (RKKS) and verified that the representer theorem still holds in RKKS even if its regularization part is nonconvex. Recently, Xu et al. (2017) decomposed the regularization part as DC form and proposed an efficient learning algorithm where the loss is chosen as only the squared hinge loss. Our results in this work can be seamlessly generalized to the nonpositive case by the methods in Xu et al. (2017).

Our contributions in this work can be summarized as follows:

-

We define a kind of loss with a DC decomposition, called LS-DC loss, and show that all the commonly used losses are LS-DC loss or can be approximated by LS-DC loss.

-

We propose a UniSVM algorithm which can deal with any LS-DC loss in a unified scheme, including both convex and nonconvex losses and both classification and regression losses, in which only one vector is dominated by the specifically chosen loss. Hence, it can train classification problems and regression problems in the same scheme.

-

The proposed UniSVM has low computational complexity, even for nonconvex models, because it solves a system of linear equations iteratively, which has a closed-form solution. Hence, the inner loop disappears.

-

By the efficient low-rank approximation of the kernel matrix, UniSVM can solve large-scale problems efficiently.

-

In view of the theories of DCA, UniSVM converges to the globally optimal solution of the convex model, or to a critical point of the nonconvex model.

-

UniSVM can be easily grasped by users or researchers because its core code in Matlab is less than 10 lines.

The notations in this paper are as follows. All the vectors and matrices are in bold style, such as \(\varvec{v},\varvec{x}_i\) or \({\varvec{K}}\), and the set or space is noted as \(\mathbb {M}, \mathbb {B}\), \(\mathbb {R}^m\), etc. The scalar \(v_i\) is the i-th element of \(\varvec{v}\), the row vector \({\varvec{K}}_i\) is the i-th row of \({\varvec{K}}\), and \({\varvec{K}}_\mathbb {B}\) is the submatrix of \({\varvec{K}}\) with all rows in the index set \(\mathbb {B}\). The transpose of the vector \(\varvec{v}\) or matrix \({\varvec{K}}\) is noted as \(\varvec{v}^\top \) or \({\varvec{K}}^\top \). \({\varvec{I}}\) is an identity matrix with proper dimensions, \(t_+:=\max \{t,0\}\), and \(\mathbb {1}_{a} =1\) if the event a is true, and otherwise 0.

The rest of the paper is organized as follows. In Sect. 2 we review the DCA procedure and the related SVM models. In Sect. 3 we define an LS-DC loss which has a desirable DC decomposition and reveal its properties. In Sect. 4 we propose a UniSVM algorithm that can train the SVM model with any different LS-DC loss based on DCA. In Sect. 5 we verify the effectiveness of UniSVM with numerous experiments, and Sect. 6 concludes the paper.

2 Reviews of related works

We review the DCA procedure and some SVM models with convex and nonconvex loss in this section.

2.1 DC programming and DCA

DCA is an efficient nonconvex optimization technique, first introduced in Pham Dinh and El-Bernoussi (1986) and recently reviewed in Le Thi and Pham Dinh (2018), and has been successfully applied in machine learning (Yuille and Rangarajan 2003; Neumann et al. 2004; Collobert et al. 2006; Le Thi et al. 2008, 2009; Ong and Le Thi 2013; Xu et al. 2017; Tao et al. 2018; Chen and Zhou 2018). A function F(x) is called a difference of convex (DC) function if \(F(\varvec{x})=H(\varvec{x})-G(\varvec{x})\), where \(H(\varvec{x})\) and \(G(\varvec{x})\) are convex functions. DC programming is used to solve

where \(H(\varvec{x})\) and \(G(\varvec{x})\) are convex functions and \(\mathcal {X}\) is a convex set.

DCA is a majorization-minimization algorithm (Naderi et al. 2019) which works by optimizing a sequence of upper-bounded convex functions of \(F(\varvec{x})\). For the current approximated solution \(\varvec{x}^k\), since \(G(\varvec{x})\ge G(\varvec{x}^k)+\langle \varvec{x}-\varvec{x}^k, \varvec{v}^k\rangle \) with \(\varvec{v}^k\in \partial G(\varvec{x}^k)\), \(H(\varvec{x})-\langle \varvec{v}^k,\varvec{x}-\varvec{x}^k\rangle - G(\varvec{x}^k)\) is an upper-bounded convex function of \(F(\varvec{x})\). Thus, to solve the DC problem (3), DCA iteratively obtains a new solution \(\varvec{x}^{k+1}\) by solving the convex programming as follows.

There is a convergence guarantee (Le Thi and Pham Dinh 2018). Particularly, if \(H(\varvec{x})\) is a quadratic function, the optimal problem (4) has a closed form solution. Thus, the DCA procedure has no inner iterations. This motivates us to design a DC decomposition for the losses of SVMs models to speed up the algorithm in Sect. 3.

2.2 SVM models with convex losses and nonconvex losses

2.2.1 SVM models with convex losses

If the hinge loss \(\ell (y,t):=\max \{0,1-yt\}\) is chosen in (1) for classification, then L1SVM is obtained by duality through the following expression (Vapnik 2000, 1999; Keerthi et al. 2006; Steinwart et al. 2011; Zhou 2013):

where \({\widetilde{{\varvec{K}}}}_{i,j} = y_iy_j{\varvec{K}}_{i,j}\) and \(\varvec{e}=(1,\cdots , 1)^\top \in \mathbb {R}^m\). If the squared hinge loss \(\ell (y,t):=\frac{1}{2}\max \{0,1-yt\}^2\) is chosen in (1), then L2SVM is obtained by duality through the following expression (Vapnik 2000, 1999; Steinwart et al. 2011; Zhou 2013; Zhou et al. 2013, 2009):

where \({\varvec{I}}\) is the identity matrix. With the solution \(\varvec{\beta }^*\), the unknown sample \(\varvec{x}\) is predicted as \(sgn(f(\varvec{x}))\) with \(f(\varvec{x}) = \frac{1}{\lambda }\sum _{i=1}^{m}y_i\beta _i^*\kappa (x,x_i)\) for model (5) or (6).

If the least squares loss \(\ell (y,t):=\frac{1}{2}(1-yt)^2 = \frac{1}{2}(y-t)^2\) is chosen in (1), the LSSVM model obtained by duality (Suykens and Vandewalle 1999a, b; Suykens et al. 2002; Jiao et al. 2007) is

with a unique nonsparse solution, where \(\varvec{y}=(y_1,\cdots ,\varvec{y}_m)^\top \). By choosing the least squares loss in (1), Zhou (2016) recently proposed the primal LSSVM (PLSSVM) based on the representer theoremFootnote 1 as

which may have a sparse solution if \({\varvec{K}}\) has low rank or can be approximated as a low rank matrix. With the solution \(\varvec{\beta }^*\), the unknown sample \(\varvec{x}\) is predicted as \(sgn(f(\varvec{x}))\)(classification) or \(f(\varvec{x})\) (regression) with \(f(\varvec{x}) = \frac{1}{\lambda }\sum _{i=1}^{m}\beta _i^*\kappa (x,x_i)\) for model (7) or (8).

If the \(\varepsilon \)-insensitive loss \(\ell _\varepsilon (y,t):= (|y-t|-\varepsilon )_+\) is chosen in (1) for the regression problem, then SVR is obtained as

and with the solution \((\varvec{\beta }^*,{\hat{\varvec{\beta }}}^*)\), the prediction of the new input \(\varvec{x}\) is \(f(\varvec{x}) = \frac{1}{\lambda }\sum _{i=1}^{m}(\beta _i^*-{\hat{\beta }_i}^*)\kappa (x,x_i).\)

2.2.2 Robust SVM models with nonconvex losses

To improve the robustness of the L1SVM model (5), in Collobert et al. (2006) the hinge loss \((1-yt)_+\) in (1) is truncated as the ramp loss \(\min \{(1-yt)_+, a\}\) with \(a>0\) and decomposed into DC form \((1-yt)_+ - (1-yt-a)_+\). The problem (1) is posed as a DC programming:

Then, based on the DC procedure and Lagrange duality, a robust L1SVM is proposed by iteratively solving the following L1SVM problem at the \((k+1)-\)th iteration.

where \(\varvec{v}^{k}\) satisfies \(v^{k}_i = \frac{1}{m}\cdot \mathbb {1}_{1-{\widetilde{{\varvec{K}}}}_i(\varvec{\beta }^{k}-\varvec{v}^k)/\lambda >a}, i=1,\cdots ,m\). Similar analysis with a different form appears in Wu and Liu (2007) and Tao et al. (2018).

To improve the robustness of L2SVM (6), Tao et al. (2018) truncated the squared hinge loss \((1-yt)_+^2\) as \( \min \{(1-yt)_+^2, a\}\) in (1) and decomposed into DC form \((1-yt)_+^2-\left( (1-yt)_+^2 - a\right) _+\). Based on DCA, the robust solution of L2SVM is given by iteratively solving

where \(\varvec{v}^{k}\) satisfies \(v^{k}_i = \frac{1}{m}(1-\tfrac{1}{\lambda }{\widetilde{{\varvec{K}}}}_i(\varvec{\beta }^{k}-\varvec{v}^k))\cdot \mathbb {1}_{1-{\widetilde{{\varvec{K}}}}_i(\varvec{\beta }^{k}-\varvec{v}^k)/\lambda >\sqrt{a}}, i=1,\cdots ,m\). However, the analysis in Tao et al. (2018) pointed out that (11) is not a “satisfactory learning algorithm” in this case. By deleting the current outliers, which satisfy \(1-y_i f(\varvec{x}_i)>\sqrt{a}\) from the training set iteratively, Tao et al. (2018) proposed a multistage SVM (MS-SVM) to solve the robust L2SVM. They first solve an original L2SVM (6) and then solve smaller L2SVMs iteratively.

Although Tao et al. (2018) propose improved methods using coordinate descent and an inexpensive scheme, all of the given algorithms still solve a constrained QP per iteration, and possibly with smaller size.

In contrast, Feng et al. (2016) propose a robust SVM model, in which the following smooth nonconvex loss

with \(a> 0\) is chosen in (1). The loss (12) is approximated as the squared hinge loss \((1-yt)_+^2\) when \(a\rightarrow +\infty \) and can be considered as a smooth approximation of the truncated squared hinge loss \( \min \{(1-yt)_+^2, a\}\). After analysis of the KKT conditions of the given model, Feng et al. (2016) proposed the algorithm by solving the following re-weighted L2SVM iteratively:

where \({\varvec{D}}^k\) is a diagonal matrix satisfying \({\varvec{D}}_{i,i}^k=\left( \psi '((1-{\widetilde{{\varvec{K}}}}_i\varvec{\beta }^k)_+^2)\right) ^{-1}\) with \(\psi (u)= a\left( 1-\exp (-\tfrac{1}{a}u)\right) \).

All of these algorithms for robust SVM models (Collobert et al. 2006; Wu and Liu 2007; Tao et al. 2018; Feng et al. 2016) must solve a constrained QP in the inner loops, which results in a long training time.

Based on the decomposition of the truncated least squares loss \(\min \{(1-yt)^2,a\}\) in (1) or (2) as \((1-yt)^2-((1-yt)^2-a)_+\) and the representer theorem, the robust sparse LSSVM (RSLSSVM) was studied in Chen and Zhou (2018) by solving

with \(\varvec{v}^k\) as \(v^k_i = -(y_i-{\varvec{K}}_i\varvec{\alpha }^k)\mathbb {1}_{|1-y_i{\varvec{K}}_i\varvec{\alpha }^k|>\sqrt{a}}\).

The model (14) for solving the nonconvex SVM has three advantages. The first is that it has a closed-form solution since it is an unconstrained QP. Second, it has a sparse solution if \({\varvec{K}}\) has low rank or can be approximated by a low rank matrix (see (Zhou 2016) for details). Thus, it can be solved efficiently. Furthermore, (14) can also be applied to regression problems directly.

To extend those benefits to all the other losses for classification tasks and regression tasks simultaneously, we define LS-DC loss in Sect. 3 and propose a unified algorithm in Sect. 4, which includes all SVM models (including the classification/regression SVM with convex loss and nonconvex loss) in a unified scheme.

3 LS-DC loss function

Here, we first define a kind of loss called LS-DC loss, and then show that most popular losses are in fact LS-DC loss or can be approximated by LS-DC loss.

For any margin-based loss \(\ell (y,t)\) of SVM, let \(\psi (u)\) satisfy \(\psi (1-yt):=\ell (y,t)\) for classification loss or \(\psi (y-t):=\ell (y,t)\) for regression loss. To obtain a useful DC decomposition of the loss \(\ell (y,t)\), we propose the following definition:

Definition 1

(LS-DC loss) We call \(\ell (y,t)\) a least squares type DC loss, abbreviated as LS-DC loss, if there exists a constant A (\(0<A<+\infty \)) such that \(\psi (u)\) has the following DC decomposition:

The essence of the definition demands that \(Au^2-\psi (u)\) be convex. The following theorem is clear:

Theorem 1

If the loss \(\psi (u)\) is second-order derivable and \(\psi ''(u)\le M\), then it is an LS-DC loss with parameter \(A\ge \frac{M}{2}\).

Not all losses are LS-DC losses, even the convex losses. We will show that the hinge loss and the \(\varepsilon \)-insensitive loss are not LS-DC losses. However, they can be approximated by LS-DC losses.

Next, we will show that most losses used in the SVM community are LS-DC losses or can be approximated by LS-DC loss. The proofs are listed in Appendix A.

Proposition 1

(LS-DC property of classification losses) The most commonly used classification losses are cases of LS-DC loss or can be approximated by LS-DC losses. We enumerate them as follows.

-

(a)

The least squares loss \(\ell (y,t) = (1-yt)^2\) is an LS-DC loss with \(Au^2-\psi (u)\) vanished.

-

(b)

The truncated least squares loss \(\ell (y,t) =\min \{(1-yt)^2,a\}\) is an LS-DC loss with \(A\ge 1\).

-

(c)

The squared hinge loss \(\ell (y,t)=(1-yt)_+^2\) is an LS-DC loss with \(A \ge 1\).

-

(d)

The truncated squared hinge loss \(\ell (y,t) =\min \{(1-yt)_+^2,a\}\) is an LS-DC loss with \(A\ge 1\).

-

(e)

The hinge loss \(\ell (y,t) = (1-yt)_+\) is not an LS-DC loss. However, if it is approximated as \(\frac{1}{p}\log (1+\exp (p(1-yt)))\) with a finite p, we obtain an LS-DC loss with \(A\ge p/8\).

-

(f)

The ramp loss \(\ell (y,t) =\min \{(1-yt)_+, a\}\) is also not an LS-DC loss. However, we can give two smoothed approximations of the ramp loss:

$$\begin{aligned} \ell _{a}(y,t)= & {} \left\{ \begin{array}{ll} \frac{2}{a} (1-yt)_+^2, &{} 1-yt \le \frac{a}{2}, \\ a-\frac{2}{a} (a-(1-yt))_+^2, &{} 1-yt > \frac{a}{2}. \end{array} \right. \end{aligned}$$(16)$$\begin{aligned} \ell _{(a,p)}(y,t)= & {} \frac{1}{p}\log \left( \frac{1+\exp (p(1-yt))}{1+\exp (p(1-yt-a))}\right) . \end{aligned}$$(17)The first one has the same support set as the ramp loss, and the second one is derivable with any order. The loss (16) is an LS-DC loss with \(A\ge 2/a\) and (17) is an LS-DC loss with \(A\ge p/8\).

-

(g)

The nonconvex smooth loss (12) proposed in Feng et al. (2016) is an LS-DC loss with \(A\ge 1\).

-

(h)

Following the nonconvex smooth loss (12), we generalize it as

$$\begin{aligned} \ell _{(a,b,c)}(y,t) = a\left( 1-\exp \left( -\tfrac{1}{b}(1-yt)_+^c\right) \right) , \end{aligned}$$(18)where \(a,b>0, c\ge 2\). The loss (18) is an LS-DC loss with the parameter \(A\ge \frac{1}{2}{M(a,b,c)}\), where

$$\begin{aligned} M(a,b,c):= \tfrac{ac}{b^{2/c}}\left( (c-1)(h(c))^{1-2/c}-c (h(c))^{2-2/c}\right) e^{-h(c)}, \end{aligned}$$(19)with \(h(c):=\left( 3(c-1)-\sqrt{5c^2-6c+1}\right) /(2c)\).

Regarding the new proposed loss (18), we offer the following comments:

Remark 1

Two more parameters are introduced to the loss (18) to make it more flexible. The parameter a, which is the limitation of the loss function if \(1-yt\rightarrow \infty \), describes the effective value (or saturated value) of the loss function for large inputs. The parameter b, which characterizes the localization property of the loss functions, describes the rate of the loss function saturated to its maximum and minimum. By uncoupling a and b, we improve the flexibility of the robust loss. For example, the inflection point of (12) is \(yt=1-\sqrt{a/2}\), which is directly controlled by the saturated value a, and the inflection point of (18) is \(yt=1-\sqrt{b/2}\) if \(c=2\), which is only controlled by the parameter b. In experiments, by simply adjusting a, b, and c, we can obtain better performance.

The most commonly used losses for classification are summarized in Table 5 in Appendix B. Some classification losses and their LS-DC decompositions are also plotted in Fig. 1.

The plots of some LS-DC losses for classification and their DC decompositions: “Red curve = Green curve-Blue curve”. In the plot, the Black curve (if it exists) is the plot of the original non-LS-DC loss which is approximated by an LS-DC loss (Red curve), the Green curve is the function Aµ2 and the Blue cruve is the convex function Aµ2-Ψ(u). The loss names in the legends are defined in Table 5 in Appendix B. All of the LS-DC parameters A are chosen as the lower bounds in Table 5, and increasing the value of A can make the Blue cruve “smoother”

Proposition 2

(LS-DC property of regression losses) The commonly used regression losses are LS-DC loss or can be approximated by LS-DC loss. We enumerate them as follows.

-

(1)

The least squares loss and the truncated least squares loss are all LS-DC losses with \(A\ge 1\).

-

(2)

The \(\varepsilon \)-insensitive loss \(\ell _\varepsilon (y,t):= (|y-t|-\varepsilon )_+\), mostly used for SVR, is not an LS-DC loss. However, we can smooth it as

$$\begin{aligned} \ell _{(\varepsilon ,p)}(y,t):=\tfrac{1}{p}\log (1+\exp (-p(y-t+\varepsilon ))) + \tfrac{1}{p}\log (1+\exp (p(y-t-\varepsilon ))), \end{aligned}$$(20)which is LS-DC loss with \(A\ge p/4\).

-

(3)

The absolute loss \(\ell (y,t) = |y-t|\) is also not an LS-DC loss. However, it can be smoothed by LS-DC losses. For instance, we can use the Huber loss

$$\begin{aligned} \ell _\delta (y,t) = \left\{ \begin{array}{ll} \frac{1}{2\delta }(y-t)^2, &{} |y-t|\le \delta , \\ |y-t|-\frac{\delta }{2}, &{} |y-t|>\delta , \end{array}\right. \end{aligned}$$which approximates the absolute loss is an LS-DC loss with \(A\ge 1/(2\delta )\); Setting \(\varepsilon =0\) in (20), \(A\ge p/4\).

-

(4)

The truncated absolute loss \(\ell _a(y,t):=\min \{|y-t|,a\}\) can be approximated by the truncated Huber loss \(\min \{\ell _\delta (y,t),a\}\), which is an LS-DC loss with \(A\ge 1/(2\delta )\).

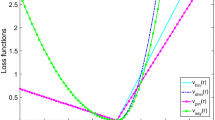

Some regression losses and their DC decompositions are plotted in Fig. 2.

The plots of some LS-DC losses for regression and their DC decompositions: “Red curve = Green curve-Blue curve”. In the plot, the Black curve (if it exists) is the plot of the original non-LS-DC loss which is approximated by an LS-DC loss (Red curve), the Green curve is the function Aµ2 and the Blue curve is the convex function Aµ2-Ψ(u). The loss names in the legends are defined in Table 5 in Appendix B. All of the LS-DC parameters A are chosen as the lower bounds in Table 5, and increasing the value of A can make the Blue curve “smoother”

4 Unified algorithm for SVM models with LS-DC losses

Let \(\ell (y,t)\) be any LS-DC loss discussed in Section 3, and let \(\psi (u)\) satisfying \(\psi (1-yt)=\ell (y,t)\) (for classification task) or \(\psi (y-t)=\ell (y,t)\) (for regression tasks) have the DC decomposition (15) with parameter \(A>0\). The SVM model (2) with any loss can then be decomposed as

where \(r_i = 1-y_i{\varvec{K}}_i\varvec{\alpha }\) (for classification) and \(r_i = y_i - {\varvec{K}}_i\varvec{\alpha }\) (for regression).

Using the DCA procedure (4) with an initial point \(\varvec{\alpha }^0\), a stationary point of (21) can be iteratively reached by solving

where \(\varvec{\xi }^k = {\varvec{K}}\varvec{\alpha }^k\) and \(\varvec{\gamma }^k = (\gamma ^k_1,\gamma ^k_2,\cdots , \gamma ^k_m)^\top \) satisfies

where \(\partial \psi (u)\) indicates the subdifferential of the convex function \(\psi (u)\). The related losses and their derivatives or subdifferentials for updating \(\varvec{\gamma }^k\) in (23) are listed in Table 5 in Appendix B.

The KKT conditions of (22) are

By solving (24), we propose a unified algorithm that can train SVM models with any LS-DC loss. For different LS-DC losses (either classification loss or regression loss), we just need to calculate the different \(\varvec{\gamma }\) by (23). We refer to the algorithm as UniSVM, which is summarized as Algorithm 1.

The new algorithm possesses the following advantages:

-

It is suitable for training any kind of SVM models with any LS-DC losses, including convex loss and nonconvex loss. The training process for classification problems is also the same as for regression problems. The proposed UniSVM is therefore a truly unified algorithm.

-

Unlike the existing algorithms for nonconvex loss (Collobert et al. 2006; Wu and Liu 2007; Tao et al. 2018; Feng et al. 2016) that must iteratively solve L1SVM/L2SVM or reweighted L2SVM in the inner loops, UniSVM is free of the inner loop because it solves a system of linear equations (24) with a closed-form solution per iteration.

-

According to the studies on LSSVM in Zhou (2016), the problem (24) may have multiple solutions, including some sparse solutions, if \({\varvec{K}}\) has low rankFootnote 2. This is of vital importance for training large-scale problems efficiently. Details will be discussed in Subsect. 4.2.

-

In experiments, we always set \(\varvec{\xi }^0 =\varvec{y}\) and \(\varvec{\gamma }^0=0\) instead of giving an \(\varvec{\alpha }^0\) to begin the algorithm. This is equivalent to starting the algorithm from the solution of LSSVM, which is a moderate guess of the initial point, even for nonconvex loss.

In Subsect. 4.1, we present an easily grasped version of the proposed UniSVM in the case that the full kernel \({\varvec{K}}\) is available. In Subsect. 4.2, we propose an efficient method to solve the KKT conditions (24) for UniSVM even if the full kernel matrix is unavailable. The Matlab code is also given in Appendix C.

4.1 Solving UniSVM with full kernel matrix available

If the full kernel matrix \({\varvec{K}}\) is available and \(\tfrac{\lambda m}{A}{\varvec{I}}+ {\varvec{K}}\) can be inverted cheaply, we note that \({\varvec{Q}}=\left( \tfrac{\lambda m}{A}{\varvec{I}}+{\varvec{K}}\right) ^{-1}\) and can prove that

is one nonsparse solution of (24). It should be noted that \({\varvec{Q}}\) is only calculated once. Hence, after the first iteration, \(\varvec{\alpha }^{k+1}\) will be reached within \(O(m^2)\).

Furthermore, if \({\varvec{K}}\) is low rank and can be factorized as \({\varvec{K}}={\varvec{P}}{\varvec{P}}^\top \) with a full-column rank \({\varvec{P}}\in \mathbb {R}^{m\times r}\) (\(r<m\)), the cost of the process can be reduced through two methods. One is SMW identity (Golub and Loan 1996), which determines the cost \(O(mr^2)\) to compute \({\varvec{P}}^\top {\varvec{P}}\), the cost \(O(r^3)\) to obtain the inversion \({\hat{{\varvec{Q}}}}=\left( \tfrac{\lambda m}{A}{\varvec{I}}+{\varvec{P}}^\top {\varvec{P}}\right) ^{-1}\in \mathbb {R}^{r\times r}\) once, and the cost within O(mr) to update the nonsparse \(\varvec{\alpha }^{k+1}\) per iteration as

The other is the method employed in subsection 4.2 to obtain a sparse solution of (24).

4.2 Solving UniSVM for large-scale training with a sparse solution

For large-scale problems, the full kernel matrix \({\varvec{K}}\) is always unavailable because of the limited memory and the computational complexity. Hence, we should obtain the sparse solution of the model, since in this case \({\varvec{K}}\) is always low rank or can be approximated by a low-rank matrix.

To obtain the low-rank approximation of \({\varvec{K}}\), we can use the Nyström approximation (Sun et al. 2015), which is a random sampling method, or the pivoted Cholesky factorization method proposed in Zhou (2016) that has a guarantee to minimize the trace norm of the approximation error greedily. The gaining approximation of \({\varvec{K}}\) is \({\varvec{P}}{\varvec{P}}^\top \), where \({\varvec{P}}=[{\varvec{P}}_\mathbb {B}^\top ~~{\varvec{P}}_\mathbb {N}^\top ]^\top \) is a full column rank matrix with \({\varvec{P}}_\mathbb {B}\in \mathbb {R}^{r\times r}\) (\(r\ll m\)) and \(\mathbb {B}\subset \{1,2, \cdots ,m\}\) is the index set corresponding to the only visited r columns of \({\varvec{K}}\). Both algorithms satisfy the condition that the total computational complexity is within \(O(mr^2)\), and \({\varvec{K}}_\mathbb {B}\)—the visited rows of \({\varvec{K}}\) corresponding to set \(\mathbb {B}\)—can be reproduced exactly as \({\varvec{P}}_\mathbb {B}{\varvec{P}}^\top \).

Replacing \({\varvec{K}}\) with \({\varvec{P}}{\varvec{P}}^\top \) in (24), we have

which can be simplified as

This is because \({\varvec{P}}\) is a full column rank matrix. By simple linear algebra, if we let \(\varvec{\alpha }=[\varvec{\alpha }_\mathbb {B}^\top ~~\varvec{\alpha }_\mathbb {N}^\top ]^\top \) be a partition of \(\varvec{\alpha }\) corresponding to the partition of \({\varvec{P}}\), then we can set \(\varvec{\alpha }_\mathbb {N}=0\) to solve (27). Thus, (27) is equivalent to

We then have

where \({\overline{{\varvec{Q}}}}=\left( (\tfrac{\lambda m}{A}{\varvec{I}}+ {\varvec{P}}^\top {\varvec{P}}){\varvec{P}}_\mathbb {B}^\top \right) ^{-1}\), \(\varvec{\xi }^k = {\varvec{P}}{\varvec{P}}_\mathbb {B}^\top \varvec{\alpha }_\mathbb {B}^k\), and \(\varvec{\gamma }^k\) is updated by (23).

Notice that \({\overline{{\varvec{Q}}}}\) is only calculated in the first iteration with the cost \(O(r^3)\). The cost of the algorithm is \(O(mr^2)\) for the first iteration, and O(mr) for the following iterations. Hence, UniSVM can be run very efficiently.

5 Experimental studies

In this section, we present experimental results to illustrate the effectiveness of the proposed unified model. All the experiments are run on a computer with an Intel Core i5-6500 CPU @3.20GHz\(\times 4\) and a maximum memory of 8GB for all processes; the computer runs Windows 7 with Matlab R2016b. The comparators include L1SVM and SVR solved by LibSVM, L2SVM and the robust SVM modes in Collobert et al. (2006), Tao et al. (2018), Feng et al. (2016).

5.1 Intuitive comparison of UniSVM with other SVM models on small data sets

In this subsection, we first present experiments to show that the proposed UniSVM with convex loss can obtain a comparable performance by solving L1SVM, L2SVM and SVR on the small data sets. Second, we perform experiments to illustrate that UniSVM with nonconvex loss more efficiently obtains comparable performance to the algorithms in Collobert et al. (2006), Tao et al. (2018), and Feng et al. (2016) by solving robust SVMs with nonconvex loss. We have implemented UniSVM in two cases; one is in (25) with the full kernel matrix \({\varvec{K}}\) available, called UniSVM-full, and the other obtains the sparse solution of the model by (28), where \({\varvec{K}}\) is approximated as \({\varvec{P}}{\varvec{P}}^\top \) with \({\varvec{P}}\in \mathbb {R}^{m\times r} (r\ll m)\), noted as UniSVM-app. The latter has the potential to resolve large-scale tasks. L1SVM and SVR are solved by the efficient tools LibSVM (Chang and Lin 2011), and the other related models (L2SVM, the robust L1SVM and the robust L2SVM) are solved by the solver of quadratic programming quadprog.m in Matlab.

5.1.1 On convex loss cases

The first experiment is a hard classification task on the highly nonlinearly separable “xor" dataset shown in Fig. 3, where the instances are generated by uniform sampling with 400 training samples and 400 test samples. The kernel function is \(\kappa (\varvec{x},\varvec{z}) = \exp (-\gamma \Vert \varvec{x}-\varvec{z}\Vert ^2)\) with \(\gamma = 2^{-1}\), \(\lambda \) is set as \(10^{-5}\) and \(r=10\) for UniSVM-app. The experimental results are plotted in Fig. 3 and the detailed information of the experiments is given as the captions and the subtitles of the figures.

Comparison of the related algorithms of SVM with convex losses. In (a), (b), (d) and (e), the classification results of the algorithms are plotted as red solid curves (since the differences between them are slight, the classification curves of UniSVM-app are not plotted and its test accuracies and the training times are correspondingly noted in (b) and (e)). In (c) and (f), the iterative processes of UniSVM are plotted. The blue curves with respect to the left y-axis are the iterative test accuracies of UniSVM, and the red curves with respect to the right y-axis are the iterative objective values of (2). The accuracies and the objective values of L1SVM/L2SVM are plotted as the horizontal lines for reference

From the experimental results in Fig. 3, we have the following findings:

-

The proposed UniSVM for solving L1SVM or L2SVM can obtain similar performance compared with the state-of-the-art algorithms (LibSVM/quadprog). Of course, in those smaller cases, LibSVM is more efficient than UniSVM, since they are only designed for SVM with convex losses.

-

The low-rank approximation of the kernel matrix can significantly accelerate UniSVM, and the acceleration rate is approximated as \(\frac{m}{r}\).

-

The red curves in Fig. 3c and f reveal that UniSVM is the majorization-minimization algorithm (Naderi et al. 2019). All cases of UniSVM reach the optimal value of L1SVM or L2SVM from above. In this setting, if \(r>15\), the difference of the objective values between UniSVM-full and UniSVM-app will vanish.

Thus, the advantage of UniSVM lies in solving the large-scale tasks with low-rank approximation.

The second set of experiments is based on a regression problem with an SVR model (9) and \(\varepsilon \)-insensitive loss, where 1,500 training samples and 1,014 test samples are generated by the Sinc function with Gaussian noise \(y = \frac{\sin (x)}{x} + \zeta \) with \(x\in [-4\pi , 4\pi ]\) discretized by the step of 0.01 and \(\zeta \sim N(0,0.05)\). Since the \(\varepsilon \)-insensitive loss is not LS-DC loss, we compare LibSVM with UniSVM with smoothed \(\varepsilon \)-insensitive loss (20) for solving SVR (9). Here, the kernel function \(\kappa (\varvec{x},\varvec{z}) = \exp (-\gamma \Vert \varvec{x}-\varvec{z}\Vert ^2)\) with \(\gamma = 0.5\), \(\lambda \) is set as \(10^{-4}\) and \(r=50\) for UniSVM-app. The experimental results are plotted in Fig. 4.

The comparison of solving SVR by UniSVM with smooth \(\varepsilon \)-insensitive loss and LibSVM on Sinc regression problem. In (a), the training data and the ground truth are plotted; in (b), the regression results of LibSVM, UniSVM-full and UniSVM-app are given, where the respective MSRs are 0.0027, 0.0025, and 0.0025, and the training times are 0.062s, 0.144s and 0.035s; in (c), the regression errors of three algorithms are plotted. In (b) and (c), the difference between UniSVM-full and UniSVM-app can be neglected, while the training time of the latter is less than one-fifth of that of the former

From the experimental results in Fig. 4, we have two findings. One is that the new UniSVM can achieve better performance than LibSVM. This may be because the added noise follows a Gaussian distribution while UniSVM is initialized as LSSVM. The other finding is that the low-rank approximation of the kernel matrix is highly efficient here, since UniSVM-app can obtain results similar to those of UniSVM-full; this is similar to findings in Zhou (2016), Chen and Zhou (2018). The speedup rate here is less than \(\frac{m}{r}\), which is because the number of iterations of UniSVM is only 3.

5.1.2 On nonconvex loss cases

The first set of experiments was again performed on the “xor” dataset as shown in Fig. 4, where some training samples (10%) are contaminated to simulate outliers by flipping labels (the test samples are noise-free). The compared algorithms include robust L1SVM in Collobert et al. (2006), MS-SVM of robust L2SVM in Tao et al. (2018), and the re-weighted L2SVM in Feng et al. (2016). The results are presented in Fig. 5, in which the classification results of L1SVM and L2SVM are listed as the references. In these experiments, only UniSVM was implemented as UniSVM-app with \(r=10\), shortened as UniSVM. The truncated parameter \(a=2\) for nonconvex loss.

Comparison of the classification results of the related algorithms of SVM with convex and nonconvex losses. See the titles of the subfigures for details, where the loss (18) used in figure (h) with \(a=b=c=2\) is the same as (12) used in figure (e). It is worth noting that the results of (d) and (g) are based on the same model with the truncated squared hinge loss with the different algorithms, and (f) and (i) are based on the same model with nonconvex smooth loss (12) but are solved with a different algorithm

From the experimental results in Fig. 5, we observe that the models based on nonconvex loss improve the classification results in cases with outliers. The algorithms in Fig. 5c–e, which need to iteratively solve L1SVM or L2SVM many times, are also affected by the outliers of the upper-right corner, where the outliers are dominated locally. However, the proposed UniSVM based on the effective LS-DC loss can completely resolve this problem. In particular, as highlighted by the results in Fig. 5d and g where the same SVM model with truncated squared hinge loss is solved by different algorithms, the proposed UniSVM can solve the robust SVM with high performance. The comparison between the results of Fig. 5e and h reveals a similar performance. The reason for this may be because the proposed UniSVM can obtain a better local minimum with a good initial point (a sparse solution of LSSVM) based on the DC decomposition of the corresponding nonconvex loss.

The second set of experiments is performed to compare the effectiveness of the related algorithms, also on the “xor" problem. The training samples are randomly generated with varied sizes from 400 to 10,000, and the test samples are generated similarly with the same sizes. The training data is contaminated by randomly flipping the labels of 10% of instances to simulate outliers. We set \(r=0.1m\) for all UniSVM algorithms to approximate the kernel matrix, and the other parameters are set as in the former experiments. The corresponding training time and test accuracies (averaged over ten trials) of the related robust SVM algorithms are plotted in Fig. 6, where the results of LibSVM (with outliers and without outliers) are also given as references.

From the experimental results in Fig. 6, we have the following findings:

-

In Fig. 6a, it is clear that the performances of all the related robust SVM algorithms based on nonconvex losses are better than that of the L1SVM with convex loss on the contaminated training datasets and that all of them match the results of the noise-free case. At the same time, we notice that the differences of the test accuracies between the selected robust algorithms are very small.

-

From Fig. 6b, we observe that the differences of the training time between the related algorithms are large, especially for the larger training set. The training time of the proposed UniSVM is significantly less, while the robust L1SVM (Collobert et al. 2006), Robust L2SVM (Tao et al. 2018) and re-weighted L2SVM (Feng et al. 2016)—which need to solve the constrained QP several times—have long training times. Even they can be more efficiently implemented (such as SMO) than quadprog.m, but their training time will be longer than that of LibSVM since all of them at least solve a QP which is similar to the QP solved by LibSVM.

-

In our setting, the outliers greatly affect the results of LibSVM, not only with respect to test accuracies but also training time.

-

All in all, the new proposed UniSVM with low rank kernel approximation is not only robust to noise but also highly efficient with respect to the training process.

5.2 Experiments on larger benchmark datasets

In this section, we perform experiments to show that UniSVM can quickly train the convex and nonconvex SVM models with comparable performance using a unified scheme on large data sets. We choose only the state-of-the-art SVM tool LibSVM (Chang and Lin 2011) (including SVC and SVR) as the comparator, rather than other robust SVM algorithms in previous papers (Collobert et al. 2006; Wu and Liu 2007; Tao et al. 2018; Feng et al. 2016), to conserve experimental time.

First, we select four classification tasks and three regression tasks from the UCI database to illustrate the performance of the related algorithms. The detailed information of the data sets and the hyper-parameters (training size, test size, dimension, \(\lambda \), \(\gamma \)) is given as follows:

Here, the classification tasks have the default splitting, and the regression tasks are split randomly. The \(\lambda \) (regularizer) and \(\gamma \) (for Gaussian kernel \(\kappa (\varvec{x},\varvec{z}) = \exp (-\gamma \Vert \varvec{x}-\varvec{z}\Vert ^2)\)) are roughly chosen by the grid search. For the parameters of loss functions, we simply use the default value (given next). Fine-tuning all parameters will certainly improve the performance further.

To implement the UniSVM for larger training data, we use the pivoted Cholesky factorization method proposed in Zhou (2016) to approximate the kernel matrix \({\varvec{K}}\), and the low-rank approximation error is controlled by the first matched criterion \(trace({\varvec{K}}-{\tilde{{\varvec{K}}}})<0.001 \cdot m\) or \(r\le 1000\), where m is the training size and r is the upper bound of the rank.

The first set of experiments shows that the proposed UniSVM can train SVM models with convex or nonconvex loss for classification problems. The chosen losses for \(\hbox {UniSVM}_1\) to \(\hbox {UniSVM}_{{10}}\) are listed as following:

The results in Table 1 are obtained based on the original data sets, and those of Table 2 are based on the contaminated data sets, where 20% of the labels of training instances are randomly flipped. Since the kernel approximation in Zhou (2016) undergoes random initialization, the training times recorded in Matlab are not very stable, and since random flipping is observed for noise cases, all results are averaged over ten random trials.

From the results in Tables 1 and 2, we draw the following conclusions:

-

UniSVMs with different losses work well using a unified scheme in all cases. They are mostly faster than LibSVM and offer comparable performance in noise-free cases. The training time of LibSVM in Table 2 is notably longer than its training time in Table 1 because the flipping process adds a large number of support vectors. However, owing to the sparse solution of (28), this influence on UniSVMs is quite weak.

-

Comparing the training time (including the time to obtain \({\varvec{P}}\) for approximating the kernel matrix \({\varvec{K}}\)) of \(\hbox {UniSVM}_1\) (least squares) with other methods, it is clear that the proposed UniSVM requires a very low cost after the first iteration, as other UniSVMs always run \(\hbox {UniSVM}_1\) in their first iteration.

-

All the UniSVMs with nonconvex losses are working as efficiently as those with convex losses. Particularly, the UniSVMs with nonconvex losses maintain high performance on the contaminated data sets. The new proposed loss (18) with two more parameters always achieves the highest performance.

The second set of experiments examined the performance of the UniSVM for solving regression tasks with convex and nonconvex losses. The experimental results are listed in Table 3. The chosen losses for \(\hbox {UniSVM}_1\) to \(\hbox {UniSVM}_{{6}}\) are listed as follows:

From the results in Table 3, it is again observed that UniSVMs with different losses work well for a unified scheme. All of them are more efficient than LibSVM with comparable performance. For example, LibSVM requires a very long training time on the second 3D-Spatial task because of excessive training samples, and LibSVM cannot finish the task on the last Slice data set, possibly because of an excessive number of support vectors. In two cases, all UniSVMs function well and exhibit comparable performance, which is primarily attributed to the efficient low-rank approximation of the kernel matrix. It is also noted that the UniSVMs with nonconvex losses function as efficiently as those with convex loss.

In the third set of experiments, we challenge UniSVM with two classification tasks on very large data sets (up to millions of samples) on the same computer. The selected data sets are:

-

Covtype: a binary class problem with 581,012 samples, where each example has 54 features. We randomly split it into 381,012 training samples and 200,000 test samples. The parameters used are \(\gamma =2^{-2}\) and \(\lambda =10^{-8}\).

-

Checkerboard3M: based on the noise-free version of the 2-dimensional Checkerboard data set (\(4\times 4\)-grid XOR problem), which was widely used to show the effectiveness of nonlinear kernel methods. The dataset was sampled by uniformly discretizing the regions \([0,1]\times [0,1]\) to \(2000^2 = 4000000\) points and labeling two classes by the \(4\times 4\)-grid XOR problem, and was then split randomly into 3,000,000 training samples and 1,000,000 test samples. The parameters used are \(\gamma =2^{4}\) and \(\lambda =10^{-7}\).

Those datasets are also used in Zhou (2016). Because of the limited memory of our computer, the kernel matrix on Covtype is approximated as \({\varvec{P}}{\varvec{P}}^\top \) with \({\varvec{P}}\in \mathbb {R}^{m\times 1000}\), and the kernel matrix on Checkerboard3M is approximated as \({\varvec{P}}{\varvec{P}}^\top \) with \({\varvec{P}}\in \mathbb {R}^{m\times 300}\), where m is the training size. The experimental results are given in Table 4, where LibSVM cannot accomplish the tasks because of its long training time. The losses used in the algorithms are the same as those in Table 1.

From the results in Table 4, we observe that the UniSVM works well on very large data sets. We also reach conclusions which are consistent with the results in Tables 1 and 2 . For example, UniSVMs with different losses work well using a unified scheme and offer comparable performance, and all of the UniSVMs with nonconvex losses function as efficiently as those with convex losses. Particularly, the UniSVMs with nonconvex losses maintain high performance because many contaminated samples may exist in very large training cases.

6 Conclusion and future work

In this work, we first define a kind of LS-DC loss with an effective DC decomposition. Based on the DCA procedure, we then propose a unified algorithm (UniSVM) for training SVM models with different losses for both classification problems and regression problems. Particularly, for training robust SVM models with nonconvex losses, UniSVM has a dominant advantage over all the existing algorithms because it always has a closed-form solution per iteration, while the existing ones must solve a constraint programming per iteration. Furthermore, UniSVM can solve large-scale nonlinear problems efficiently after the kernel matrix has the low-rank matrix approximation.

Several experimental results verify the efficacy and feasibility of the proposed algorithm. The most prominent advantage of the proposed algorithm is that it can be easily grasped by users or researchers since its core code in Matlab is less than 10 lines (See Appendix C).

In this work, we mainly discussed the methods to deal with the (convex or nonconvex) loss of the regularized loss minimization (Shalev-Shwartz and Ben-David 2014) by DCA to enhance the sparseness of the samples or robustness of the learner. However, there are also some works which handle the nonconvex regularizer part of the regularized loss minimization by DCA, which can strengthen the sparseness of the features and serve as a highly efficient tool for feature selection. For example, in Neumann et al. (2004), Le Thi et al. (2008, 2009), Ong and Le Thi (2013), some smooth approximations of the nonconvex “\(\ell _0\) norm” are decomposed as DC forms, then DCA is used to perform feature selection and many satisfactory results are produced. We will intensively study whether or not our new LS-DC decomposition can improve those kinds of learning problems.

Notes

For consistency with the model induced by duality, we let \(\varvec{w}=\frac{1}{\lambda }\sum _{i=1}^{m}\varvec{\beta }_i \phi (\varvec{x}_i)\) here.

\({\varvec{K}}\) is always low rank in computing, for there are always many similar samples in the training set, causing the corresponding columns of the kernel matrix to be (nearly) linearly dependent.

References

Boyd, S. P., & Vandenberghe, L. (2009). Convex optimization (7th ed.). Cambridge University Press.

Chang, C. C., & Lin, C. J. (2011). LIBSVM: A library for support vector machines. ACM Transactions on Intelligent Systems and Technology, 2, 1–27.

Chen, L., & Zhou, S. (2018). Sparse algorithm for robust lssvm in primal space. Neurocomputing, 257(31), 2880–2891. https://doi.org/10.1016/j.neucom.2017.10.011.

Chen, P. H., Fan, R. E., & Lin, C. J. (2006). A study on SMO-type decomposition methods for support vector machines. IEEE Transactions on Neural Networks, 17(4), 893–908.

Collobert, R., Sinz, F., Weston, J., & Bottou, L. (2006). Trading convexity for scalability. In: Proceedings of the 23rd international conference on Machine learning - ICML’06, ACM Press, pp 201–208.

Feng, Y., Yang, Y., & Huang, X. (2016). Robust support vector machines for classification with nonconvex and smooth losses. Neural Computation, 28(6), 1217–1247.

Golub, G. H., & Loan, C. F. V. (1996). Matrix Computations. The John Hopkins University Press.

Hampel, F. R., Ronchetti, E. M., Rousseeuw, P. J., & Stahel, W. A. (2011). Robuststatistics: The approach based on influence functions. Wiley.

Jiao, L., Bo, L., & Wang, L. (2007). Fast sparse approximation for least squares support vector machines. IEEE Transactions on Neural Networks, 18(3), 685–697.

Keerthi, S. S., Shevade, S. K., Bhattacharyya, C., & Murthy, K. R. K. (2001). Improvements to Platt’s SMO algorithm for SVM classifier design. Neural Computation, 13, 637–649.

Keerthi, S. S., Chapelle, O., & Decoste, D. (2006). Building support vector machines with reduced classifier complexity. Journal of Machine Learning Research, 7, 1493–1515.

Le Thi, H. A., Le Hoai, M., Nguyen, V. V., & Pham Dinh, T. (2008). A DC programming approach for feature selection in support vector machines learning. Journal of Advances in Data Analysis and Classification, 2(3), 259–278.

Le Thi, H. A., Nguyen, V. V., & Ouchani, S. (2009). Gene selection for cancer classification using DCA. Journal of Frontiers of Computer Science and Technology, 3(6), 612–620.

Le Thi, H. A., & Pham, Dinh T. (2018). DC programming and DCA: Thirty years of developments. Mathematical Programming, 169(1), 5–68.

Neumann, J., Schnrr, C., & Steidl, G. (2004). SVM-based feature selection by direct objective minimization. In: Lecture Notes in Computer Science (Vol. 3175, pp. 212–219) Springer.

Naderi, S., He, K., Aghajani, R., Sclaroff, S., & Felzenszwalb, P. (2019). Generalized majorization-minimization. In: Proceedings of the 36-th International Conference on Machine Learning, Long Beach, CA, USA.

Ong, C. S., & Le Thi, H. A. (2013). Learning sparse classifiers with difference of convex functions algorithms. Optimization Methods and Software, 28(4), 830–854.

Ong, C.S., Mary, X., Canu, S., & Smola, A.J. (2004). Learning with non-positive kernels. In: Twenty-first International Conference on Machine learning - ICML’04, ACM Press.

Pham Dinh, T., & El-Bernoussi, S. (1986). Algorithms for solving a class of nonconvex optimization problems. methods of subgradients. In: Fermat Days 85: Mathematics for Optimization, Elsevier, pp 249–271.

Platt, J. C. (1999). Fast training of support vector machines using sequential minimal optimization. In C. J. Burges & A. J. Smola (Eds.), Advances in Kernel method-support vector learning (pp. 185–208). MIT Press.

Rockafellar, R. T. (1972). Convex analysis (2nd ed.) Princeton University Press.

Schölkopf, B., & Smola, A. J. (2002). Learning with Kernels-support vector machines, regularization, optimization and beyond. The MIT Press.

Schölkopf, B., Herbrich, R., & Smola, A.J. (2001). A generalized representer theorem. In: Proceedings of the Annual Conference on Computational Learning Theory, Springer, pp 416–426.

Shalev-Shwartz, S., & Ben-David, S. (2014). Understanding machine learning: From theory to algorithms. Cambridge University Press.

Shen, X., Tseng, G. C., Zhang, X., & Wong, W. H. (2003). On \(\phi \)-learning. Journal of the American Statistical Association, 98(463), 724–734.

Steinwart, I. (2003). Sparseness of support vector machines. Journal of Machine Learning Research, 4, 1071–1105.

Steinwart, I., & Christmann, A. (2008). Support vector machines. Springer-Verlag.

Steinwart, I., Hush, D., & Scovel, C. (2011). Training SVMs without offset. Journal of Machine Learning Research, 12, 141–202.

Sun, S., Zhao, J., & Zhu, J. (2015). A review of nyström methods for large-scale machine learning. Information Fusion, 26, 36–48.

Suykens, J. A. K., & Vandewalle, J. (1999a). Least square spport vector machine classifiers. Neural Processing Letters, 9(3), 293–300.

Suykens, J. A. K., & Vandewalle, J. (1999b). Least squares support vector machine classifiers. Neural Processing Letters, 9(3), 293–300.

Suykens, J. A. K., Gestel, T. V., Brabanter, J. D., Moor, B. D., & Vandewalle, J. (2002). Least squares Support Vector Machines. World Scientific.

Tao, Q., Wu, G., & Chu, D. (2018). Improving sparsity and scalability in regularized nonconvex truncated-loss learning problems. IEEE Transactions on Neural Networks and Learning Systems, 29(7), 2782–2793.

Vapnik, V. N. (1999). An overview of statistical learning theory. IEEE Transactions on Neural Network, 10(5), 988–999.

Vapnik, V. N. (2000). The nature of dtatistical learning theory. Springer-Verlag.

Wu, Y., & Liu, Y. (2007). Robust truncated hinge loss support vector machines. Publications of the American Statistical Association, 102(479), 974–983.

Xu, H.M., Xue, H., Chen, X.H., & Wang, Y.Y. (2017). Solving indefinite kernel support vector machine with difference of convex functions programming. In: Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, AAAI Press, AAAI’17, pp 2782–2788.

Yuille, A. L., & Rangarajan, A. (2003). The concave-convex procedure. Neural Computation, 15(4), 915–936.

Zhou, S. (2013). Which is better? regularization in RKHS vs \({\mathfrak{R}}^m\) on reduced SVMs. Statistics, Optimization and Information Computing, 1(1), 82–106.

Zhou, S. (2016). Sparse LSSVM in primal using Cholesky factorization for large-scale problems. IEEE Transactions on Neural Networks and Learning Systems, 27(4), 783–795.

Zhou, S., Liu, H., Ye, F., & Zhou, L. (2009). A new iterative algorithm training SVM. Optimization Methods and Software, 24(6), 913–932.

Zhou, S., Cui, J., Ye, F., Liu, H., & Zhu, Q. (2013). New smoothing SVM algorithm with tight error bound and efficient reduced techniques. Computational Optimization and Applications, 56(3), 599–618.

Acknowledgements

We would like to acknowledge support for this project from the National Natural Science Foundation of China under Grant No. 61772020. We also thank the anonymous reviewers for their useful comments that greatly improved the presentation.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This work was supported by the National Natural Science Foundation of China under Grant No. 61772020.

Editors: Daniel Fremont, Mykel Kochenderfer, Alessio Lomuscio, Dragos Margineantu, Cheng Soon-Ong.

Appendices

Appendix

Appendix A: The proof of propositions

1.1 The proof of propositions 1

Proof

We illustrate them one by one.

-

(a)

It is clear.

-

(b)

It is because \(u^2-\min \{u^2, a\}= (u^2 - a)_+\) is a convex function.

-

(c)

It is because \(Au^2-u_+^2\) with \(A\ge 1\) is a convex function.

-

(d)

It is because \(Au^2-\min \{u_+^2,a\} = Au^2-u_+^2 + (u_+^2-a)_+\) with \(A\ge 1\) is convex.

-

(e)

First we show that the hinge loss \(\ell (y,t) = (1-yt)_+\) is not an LS-DC loss. If let \(g(u)=Au^2-u_+\), we have \(g_-'(0)=0>g'_+(0)=-1\). Hence by Theorem 24.1 in Rockafellar (1972), we conclude that g(u) is not convex for all A (\(0<A<+\infty \)).

Notice that \((1-yt)_+ = \lim _{p\rightarrow +\infty }\frac{1}{p}\log (1+\exp (p(1-yt)))\). Let \(\psi (u) = \frac{1}{p}\log (1+\exp (pu))\), and we have \(\psi ''(u) = \frac{p\exp (pu)}{(1+\exp (pu))^2}\le \frac{p}{4}\). By Theorem 1, we know that \(\ell _p(y,t)=\frac{1}{p}\log (1+\exp (p(1-yt)))\) is an LS-DC loss with \(A\ge p/8\). In experiments, letting \(1\le p\le 100\), \(\ell _p(y,t)\) is a good approximation of the hinge loss.

-

(f)

The reason that the ramp loss is not an LS-DC loss is the same as that of the hinge loss. Its two smoothed approximations (16) and (17) are LS-DC loss. The proof of the first is similar to that of the squared hinge loss and the proof of the second is similar to that of the approximation of the hinge loss.

-

(g)

Let \(g(u) = Au^2-a\left( 1-\exp (-\tfrac{1}{a}u_+^2)\right) \). Then g(u) is a convex function because \(g'(u)=2Au-2u_+\exp (-\tfrac{1}{a}u_+^2))\) is monotonically increasing if \(A\ge 1\).

-

(h)

Let \(\psi (u)=a(1-e^{-\tfrac{1}{b} u^c_+})\). If \(c=2\), set \(A\ge \frac{a}{b}=\frac{1}{2} M(a,b,2)\) and let \(g(u) = A u^2-\psi (u)\). Hence g(u) is convex because \(g'(u)=2A u- \frac{2a}{b} u_+ e^{-\frac{1}{b} u^2_+}\) is monotonically increasing.

For \(c>2\), \(\psi (u)\) is second-order derivable, and according to Theorem 1 we only need to obtain the upper bound of \(\psi ''(u)\). If \(u\le 0\), we have \(\psi ''(u)=0\). If \(u>0\), then \(\psi ''(u)=\tfrac{ac}{b} \left( (c-1)u^{c-2}-\tfrac{c}{b} u^{2c-2}\right) e^{-\frac{1}{b} u^c}\) and

$$\begin{aligned} \psi '''(u)=\tfrac{ac}{b} u^{c-3}e^{-\frac{1}{b} u^c}\left( \tfrac{c^2}{b^2}u^{2c}-\tfrac{3c(c-1)}{b} u^{c}+(c-1)(c-2)\right) . \end{aligned}$$Letting \(\psi '''(u)=0\), we get the roots \(u^*_1\) and \(u^*_2\) (\(0<u^*_1<u^*_2\)), where

$$\begin{aligned} u^*_{1} = (b\cdot h(c))^{\frac{1}{c}} \end{aligned}$$with \(h(c)=(3(c-1) - \sqrt{5c^2-6c+1})/(2c)\), which is a local maximum of \(\psi ''(u)\). Noting that \(\lim _{u\rightarrow 0} \psi ''(u) = \lim _{u\rightarrow \infty }\psi ''(u) =0\), we have that the global maximum of \(\psi ''(u)\) reaches at \(u^*_1\). Putting \(u^*_1\) in \(\psi ''(u)\), we prove that \(\psi ''(u)\le \psi ''(u^*_1)=:M(a,b,c)\) for any u, where \(M(a,b,c)=\tfrac{ac}{b^{2/c}}\left( (c-1)(h(c))^{1-2/c}-c (h(c))^{2-2/c}\right) e^{-h(c)}\).

For example, \(M(2,2,2) = 2\), \(M(2,2,4) \approx 4.5707<5\), \(M(2,3,4) \approx 3.7319<4\). Thus, the parameter \(A= \frac{1}{2} M(a,b,c)\) is not very large.

\(\square \)

1.2 The proof of propositions 2

Proof

We illustrate them one by one.

-

(1)

It is clear.

-

(2)

The \(\varepsilon \)-insensitive loss \(\ell _\varepsilon (y,t):= (|y-t|-\varepsilon )_+\) is not an LS-DC loss. The reason is similar to that of the hinge loss in item (e). However, its smoothed approximation (20) is an LS-DC loss with \(A\ge p/4\). Let \(\psi (u) = \frac{1}{p}\log (1+\exp (-p(u+\varepsilon ))) + \frac{1}{p}\log (1+\exp (p(u-\varepsilon )))\). We have

$$\begin{aligned} \psi ''(u) = \frac{p\exp (-p(u+\varepsilon ))}{(1+\exp (-p(u+\varepsilon )))^2} + \frac{p\exp (p(u-\varepsilon ))}{(1+\exp (p(u-\varepsilon )))^2}\le \frac{p}{2}. \end{aligned}$$ -

(3)

The absolute loss \(\ell (y,t) = |y-t|\) is also not an LS-DC loss. Clearly, the Huber loss \(\ell _\delta (y,t)\) which approximates the absolute loss, is an LS-DC loss with \(A\ge 1/(2\delta )\); setting \(\varepsilon =0\) in (20) we obtain another smoothed absolute loss, which is an LS-DC loss with \(A\ge p/4\).

-

(4)

It is clear.

\(\square \)

Appendix B: The lists of some related losses and their subdifferentials

The most related losses and their subdifferentials for updating \(\varvec{\gamma }^k\) by (23) are listed in Table 5. The LS-DC parameters of the LS-DC losses are also given in the last column. In experiments, we always use the lower-bound of the parameter.

Appendix C: Matlab code for UniSVM

Matlab code for solving UniSVM with the full kernel matrix available in Subsect. 4.1 is listed as follows; please see the notes for other cases. The demo codes can also be found at https://github.com/stayones/code-UNiSVM.

Rights and permissions

About this article

Cite this article

Zhou, S., Zhou, W. Unified SVM algorithm based on LS-DC loss. Mach Learn 112, 2975–3002 (2023). https://doi.org/10.1007/s10994-021-05996-7

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10994-021-05996-7