Abstract

In the recent paper of Giorgi et al. (J Optim Theory Appl 171:70–89, 2016), the authors introduced the so-called approximate Karush–Kuhn–Tucker (AKKT) condition for smooth multiobjective optimization problems and obtained some AKKT-type necessary optimality conditions and sufficient optimality conditions for weak efficient solutions of such a problem. In this note, we extend these optimality conditions to locally Lipschitz multiobjective optimization problems using Mordukhovich subdifferentials. Furthermore, we prove that, under some suitable additional conditions, an AKKT condition is also a KKT one.

1 Introduction

Karush–Kuhn–Tucker (KKT) optimality conditions are one of the most important results in optimization theory. However, KKT optimality conditions need not be fulfilled at local minimum points unless some constraint qualification is satisfied; see, for example [4, p. 97], [5, Sect. 3.1] and [12, p. 78]. Andreani et al. [2] introduced the so-called complementary approximate Karush–Kuhn–Tucker (CAKKT) condition for scalar optimization problems with smooth data. The authors proved that this condition is necessary for a point to be a local minimizer without any constraint qualification. Moreover, they also showed that the augmented Lagrangian method with lower-level constraints introduced in [1] generates sequences converging to CAKKT points under certain conditions. Optimality conditions of CAKKT-type have been recognized to be useful in designing algorithms for finding approximate solutions of optimization problems; see, for example [3, 5, 8, 10, 11].

Recently, Giorgi et al. [9] extended the results in [2] to multiobjective problems with continuously differentiable data. The authors proposed the so-called approximate Karush–Kuhn–Tucker (AKKT) condition for multiobjective optimization problems. Then, they proved that the AKKT condition holds for local weak efficient solutions without any additional requirement. Under the convexity of the related functions, an AKKT-type sufficient condition for global weak efficient solutions is also established.

An interesting question arises: How does one obtain AKKT-type optimality conditions for weak efficient solutions of locally Lipschitz multiobjective optimization problems? This paper is aimed at solving the problem. We hope that our results will be useful in finding approximate efficient solutions of nonsmooth multiobjective optimization problems.

The paper is organized as follows. In Sect. 2, we recall some basic definitions and preliminaries from variational analysis, which are widely used in the sequel. Section 3 is devoted to presenting the main results.

2 Preliminaries

We use the following notation and terminology. Fix \(n \in {{\mathbb {N}}}:=\{1, 2, \ldots \}\). The space \({\mathbb {R}}^n\) is equipped with the usual scalar product and Euclidean norm. The topological closure and the topological interior of a subset S of \({\mathbb {R}}^n\) are denoted, respectively, by \(\mathrm {cl}\,{S}\) and \(\mathrm {int}\,{S}\). The closed unit ball of \({\mathbb {R}}^n\) is denoted by \({\mathbb {B}}^n.\)

Definition 2.1

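(See [13]) Let \({\bar{x}}\in \mathrm {cl}\,S\). The set

$$\begin{aligned} N({\bar{x}}; S):=\left\{ z^*\in {\mathbb {R}}^n\,:\, \exists \, x^k\xrightarrow {{S}} {\bar{x}},\ \varepsilon _k\rightarrow 0^+,\ z^*_k\rightarrow z^*,\ z^*_k\in {\widehat{N}}_{\varepsilon _k}(x^k; S),\ \forall k\in {\mathbb {N}}\right\} \end{aligned}$$

is called the Mordukhovich/limiting normal cone of S at \({\bar{x}}\), where

$$\begin{aligned} {\widehat{N}}_\varepsilon (x; S):=\left\{ z^*\in {\mathbb {R}}^n\,:\, \limsup _{u\xrightarrow {{S}} x}\frac{\langle z^*, u-x\rangle }{\Vert u-x\Vert }\leqq \varepsilon \right\} \end{aligned}$$

is the set of \(\varepsilon \)-normals of S at x and \(u\xrightarrow {{S}} x\) means that \(u \rightarrow x\) and \(u \in S\).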

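Let \(\varphi :{\mathbb {R}}^n \rightarrow \overline{{\mathbb {R}}}\) be an extended-real-valued function. The epigraph and domain of \(\varphi \) are denoted, respectively, by

$$\begin{aligned} \mathrm {epi}\,\varphi :=\{(x, \alpha )\in {\mathbb {R}}^n\times {\mathbb {R}}\,:\, \alpha \geqq \varphi (x)\} \;\;\text {and}\;\; \mathrm {dom}\,\varphi :=\{x\in {\mathbb {R}}^n\,:\, |\varphi (x)|<+\infty \}. \end{aligned}$$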

Definition 2.2

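(See [13]) Let \({\bar{x}}\in \text{ dom } \varphi \). The set

$$\begin{aligned} \partial \varphi ({\bar{x}}):=\left\{ x^*\in {\mathbb {R}}^n\,:\, (x^*, -1)\in N(({\bar{x}}, \varphi ({\bar{x}})); \mathrm {epi}\,\varphi )\right\} \end{aligned}$$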

is called the Mordukhovich/limiting subdifferential of \(\varphi \) at \({\bar{x}}\). If \({\bar{x}}\notin \text{ dom } \varphi \), then we put \(\partial \varphi ({\bar{x}})=\emptyset \).

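Recall that \(\varphi : {\mathbb {R}}^n\rightarrow {\mathbb {R}}^m\) is strictly differentiable at \({\bar{x}}\) iff there is a linear continuous operator \(\nabla \varphi ({\bar{x}}) : {\mathbb {R}}^n\rightarrow {\mathbb {R}}^m\), called the Fréchet derivative of \(\varphi \) at \({\bar{x}}\), such that

$$\begin{aligned} \lim _{\begin{array}{c} x\rightarrow {\bar{x}}\\ u\rightarrow {\bar{x}} \end{array}}\frac{\varphi (x)-\varphi (u)-\nabla \varphi ({\bar{x}})(x-u)}{\Vert x-u\Vert }=0. \end{aligned}$$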

As is well-known, any function \(\varphi \) that is continuously differentiable in a neighborhood of \({\bar{x}}\) is strictly differentiable at \({\bar{x}}\). We now summarize some properties of the Mordukhovich subdifferential that will be used in the next section.

Proposition 2.1

(See [14, Proposition 6.17(d)]) Let \(\varphi :{\mathbb {R}}^n\rightarrow \overline{{\mathbb {R}}}\) be lower semicontinuous around \({\bar{x}}\). Then, for all \(\lambda \geqq 0\), one has \(\partial (\lambda \varphi )({\bar{x}})=\lambda \partial \varphi ({\bar{x}})\).

Proposition 2.2

(See [13, Corollary 1.81]) If \(\varphi :{\mathbb {R}}^n\rightarrow \overline{{\mathbb {R}}}\) is Lipschitz continuous around \({\bar{x}}\) with modulus \(L>0\), then \(\partial \varphi ({\bar{x}})\) is a nonempty compact set in \({\mathbb {R}}^n\) and contained in \(L{\mathbb {B}}^n\).

Proposition 2.3

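(See [13, Theorem 3.36]) Let \(\varphi _l:{\mathbb {R}}^n\rightarrow \overline{{\mathbb {R}}}\), \(l=1, \ldots , p\), \(p\geqq 2\), be lower semicontinuous around \({\bar{x}}\) and let all but one of these functions be locally Lipschitz around \({\bar{x}}\). Then we have the following inclusion

$$\begin{aligned} \partial (\varphi _1+\cdots +\varphi _p)({\bar{x}})\subset \partial \varphi _1({\bar{x}})+\cdots +\partial \varphi _p({\bar{x}}). \end{aligned}$$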

Proposition 2.4

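(See [13, Theorem 3.46]) Let \(\varphi _l:{\mathbb {R}}^n\rightarrow \overline{{\mathbb {R}}}\), \(l=1, \ldots , p\), be locally Lipschitz around \({\bar{x}}\). Then the function \( \phi (\cdot ):=\max \{\varphi _l(\cdot ):l=1, \ldots , p\}\) is also locally Lipschitz around \({\bar{x}}\) and one has

$$\begin{aligned} \partial \phi ({\bar{x}})\subset \bigcup \left\{ \partial \left( \sum _{l=1}^{p}\lambda _l\varphi _l\right) ({\bar{x}})\,:\, (\lambda _1, \ldots , \lambda _p)\in \Lambda ({\bar{x}})\right\} , \end{aligned}$$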

where \(\Lambda ({\bar{x}}):=\{(\lambda _1, \ldots , \lambda _p): \lambda _l\geqq 0, \sum _{l=1}^{p}\lambda _l=1, \lambda _l[\varphi _l({\bar{x}})-\phi ({\bar{x}})]=0\}.\)
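The complementarity requirement in \(\Lambda ({\bar{x}})\) says that a weight \(\lambda _l\) may be positive only when \(\varphi _l\) attains the maximum at \({\bar{x}}\). The following Python sketch illustrates this membership test on plain function values; the helper names `active_indices` and `in_Lambda` and the tolerance `tol` are hypothetical and serve only as an illustration.

```python
def active_indices(values, tol=1e-12):
    """Indices l whose value attains max(values) up to the tolerance tol."""
    top = max(values)
    return [l for l, v in enumerate(values) if top - v <= tol]


def in_Lambda(lam, values, tol=1e-12):
    """Check whether lam lies in Lambda for the given values phi_l(x_bar)."""
    active = set(active_indices(values, tol))
    nonnegative = all(w >= 0.0 for w in lam)
    sums_to_one = abs(sum(lam) - 1.0) <= 1e-9
    # lam_l * (phi_l(x_bar) - phi(x_bar)) = 0: inactive indices carry no weight.
    complementary = all(abs(w) <= tol or l in active for l, w in enumerate(lam))
    return nonnegative and sums_to_one and complementary


# phi_1(x_bar) = 1, phi_2(x_bar) = phi_3(x_bar) = 3, so only indices 1 and 2 are active.
values_at_x_bar = [1.0, 3.0, 3.0]
print(active_indices(values_at_x_bar))
print(in_Lambda([0.0, 0.5, 0.5], values_at_x_bar))
print(in_Lambda([0.5, 0.5, 0.0], values_at_x_bar))
```

Only the weights, not the subgradients, are checked here; the subdifferential of the weighted sum still has to be computed separately.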

Proposition 2.5

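(See [13, Theorem 3.41]) Let \(g:{\mathbb {R}}^n\rightarrow {\mathbb {R}}^m\) be locally Lipschitz around \({\bar{x}}\) and \(\varphi :{\mathbb {R}}^m\rightarrow {\mathbb {R}}\) be locally Lipschitz around \(g({\bar{x}})\). Then one has

$$\begin{aligned} \partial (\varphi \circ g)({\bar{x}})\subset \bigcup _{y^*\in \partial \varphi (g({\bar{x}}))}\partial \langle y^*, g\rangle ({\bar{x}}). \end{aligned}$$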

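In particular, if \(m=1\) and \(\varphi \) is strictly differentiable at \(g({\bar{x}})\), then

$$\begin{aligned} \partial (\varphi \circ g)({\bar{x}})\subset \partial \big (\nabla \varphi (g({\bar{x}}))\,g\big )({\bar{x}}). \end{aligned}$$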

Proposition 2.6

(See [13, Proposition 1.114]) Let \(\varphi :{\mathbb {R}}^n\rightarrow \overline{{\mathbb {R}}}\) be finite at \({\bar{x}}\). If \(\varphi \) has a local minimum at \({\bar{x}}\), then \( 0\in \partial \varphi ({\bar{x}}).\)

Proposition 2.7

(See [6, Proposition 5.2.28]) Let \(\varphi :{\mathbb {R}}^n\rightarrow \overline{{\mathbb {R}}}\) be a lower semicontinuous function. Then the set-valued mapping \(\partial \varphi :{\mathbb {R}}^n\rightrightarrows {\mathbb {R}}^n\) is closed.

3 Main results

Let \({\mathcal {L}}:=\{1, \ldots , p\}\), \({\mathcal {I}}:=\{1, \ldots , m\}\) and \({\mathcal {J}}:=\{1, \ldots , r\}\) be index sets. Suppose that \(f=(f_1, \ldots , f_p):{\mathbb {R}}^n\rightarrow {\mathbb {R}}^p\), \(g=(g_1, \ldots , g_m):{\mathbb {R}}^n\rightarrow {\mathbb {R}}^m\), and \(h=(h_1, \ldots , h_r):{\mathbb {R}}^n\rightarrow {\mathbb {R}}^r\) are vector-valued functions with locally Lipschitz components defined on \({\mathbb {R}}^n\). Let \({\mathbb {R}}^p_+\) be the nonnegative orthant of \({\mathbb {R}}^p\). For \(a, b\in {\mathbb {R}}^p\), by \(a\leqq b\), we mean \(a-b\in -{\mathbb {R}}^p_+\); by \(a\le b\), we mean \(a-b\in -{\mathbb {R}}^p_+\setminus \{0\}\); and by \(a<b\), we mean \(a-b\in -\text {int}\,{\mathbb {R}}^p_+\).
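The three order relations on \({\mathbb {R}}^p\) can be checked componentwise. The following Python sketch is only an illustration; the function names `leqq`, `le` and `lt` are hypothetical labels for \(\leqq \), \(\le \) and \(<\).

```python
from typing import Sequence


def leqq(a: Sequence[float], b: Sequence[float]) -> bool:
    """a ≦ b: a - b lies in -R^p_+, i.e. a_l <= b_l for every l."""
    return len(a) == len(b) and all(x <= y for x, y in zip(a, b))


def le(a: Sequence[float], b: Sequence[float]) -> bool:
    """a ≤ b: a ≦ b and a differs from b in at least one component."""
    return leqq(a, b) and any(x < y for x, y in zip(a, b))


def lt(a: Sequence[float], b: Sequence[float]) -> bool:
    """a < b: a - b lies in -int R^p_+, i.e. a_l < b_l for every l."""
    return len(a) == len(b) and all(x < y for x, y in zip(a, b))


print(leqq([1, 2], [1, 2]), le([1, 2], [1, 2]), lt([1, 2], [1, 2]))
print(leqq([1, 2], [1, 3]), le([1, 2], [1, 3]), lt([1, 2], [1, 3]))
print(leqq([0, 1], [1, 3]), le([0, 1], [1, 3]), lt([0, 1], [1, 3]))
```

The strict relation \(<\) is the one used for weak efficiency below.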

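We focus on the following constrained multiobjective optimization problem:

$$\begin{aligned} \min \, f(x)\quad \text {subject to}\quad x\in {\mathcal {F}}, \qquad \text {(MOP)} \end{aligned}$$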

where \({\mathcal {F}}\) is the feasible set given by \( {\mathcal {F}}:=\{x\in {\mathbb {R}}^n\,:\, g(x)\leqq 0, h(x)=0\}. \)

Definition 3.1

Let \({\bar{x}}\in {\mathcal {F}}\). We say that:

-

(i)

\({\bar{x}}\) is a (global) weak efficient solution of (MOP) iff there is no \(x\in {\mathcal {F}}\) satisfying \(f(x)<f({\bar{x}})\).

-

(ii)

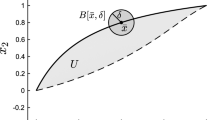

\({\bar{x}}\) is a local weak efficient solution of (MOP) iff there exists a neighborhood U of \({\bar{x}}\) such that \({\bar{x}}\) is a weak efficient solution on \(U\cap {\mathcal {F}}\).
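Weak efficiency can be refuted, though never proved, by testing a finite sample of feasible points. The Python sketch below does this for a toy bi-objective problem; the function name `is_weakly_efficient_on_sample`, the objective \(f(x)=(x^2, (x-1)^2)\) and the sampling grid are all assumptions made for illustration and do not come from the paper.

```python
def is_weakly_efficient_on_sample(f, x_bar, feasible_sample):
    """Return False if some sampled x satisfies f(x) < f(x_bar) componentwise."""
    fx_bar = f(x_bar)
    for x in feasible_sample:
        if all(a < b for a, b in zip(f(x), fx_bar)):
            return False  # x strictly improves every objective
    return True  # no sampled point refutes weak efficiency


def f(x):
    # Toy objectives: squared distances to 0 and to 1.
    return (x * x, (x - 1.0) ** 2)


# Sample of the (here unconstrained) feasible set on the interval [-1, 2].
sample = [i / 100.0 for i in range(-100, 201)]

print(is_weakly_efficient_on_sample(f, 0.5, sample))
print(is_weakly_efficient_on_sample(f, 1.5, sample))
```

For this toy problem, moving from 1.5 towards 1 decreases both objectives, so the second test fails, while no point improves both objectives at 0.5.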

We now introduce the concept of approximate Karush–Kuhn–Tucker condition for (MOP) inspired by the work of Giorgi et al. [9].

Definition 3.2

We say that the approximate Karush–Kuhn–Tucker condition (AKKT) is satisfied for (MOP) at a feasible point \({\bar{x}}\) iff there exist sequences \(\{x^k\}\subset {\mathbb {R}}^n\) and \(\{(\lambda ^k, \mu ^k, \tau ^k)\}\subset {\mathbb {R}}^p_+\times {\mathbb {R}}^m_+\times {\mathbb {R}}^r\) such that

- (A0) \(x^k\rightarrow {\bar{x}}\),

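- (A1) \({\mathfrak {m}}(x^k; \lambda ^k, \mu ^k, \tau ^k)\rightarrow 0\) as \(k\rightarrow \infty \), where

$$\begin{aligned} {\mathfrak {m}}(x^k; \lambda ^k, \mu ^k, \tau ^k):=\inf \left\{ \left\| \sum _{l=1}^{p}\lambda _l^k\xi _l+\sum _{i=1}^{m}\mu _i^k\eta _i+\sum _{j=1}^{r}\tau _j^k\gamma _j\right\| \,:\, \xi _l\in \partial f_l(x^k),\ \eta _i\in \partial g_i(x^k),\ \gamma _j\in \partial h_j(x^k)\cup \partial (-h_j)(x^k)\right\} , \end{aligned}$$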

- (A2) \(\sum _{l=1}^{p}\lambda ^k_l=1\),

- (A3) \(g_i({\bar{x}})<0\Rightarrow \mu ^k_i=0\) for sufficiently large k and \(i\in {\mathcal {I}}\).
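A classical scalar example shows how AKKT can hold at a point where exact KKT multipliers do not exist. The Python sketch below takes \(p=m=1\), \(f(x)=x\), \(g(x)=x^2\) and no equality constraints, so that \({\bar{x}}=0\) is the only feasible point. It assumes that \({\mathfrak {m}}\) measures the norm of the multiplier-weighted combination of subgradients; since both functions are smooth, each subdifferential is a single gradient. The function names and the particular sequences are hypothetical choices made for illustration.

```python
def f_grad(x):
    return 1.0  # f(x) = x


def g(x):
    return x * x  # constraint g(x) <= 0, feasible only at x = 0


def g_grad(x):
    return 2.0 * x


def stationarity_residual(x, lam, mu):
    """|lam * f'(x) + mu * g'(x)|: the residual for smooth data (assumption)."""
    return abs(lam * f_grad(x) + mu * g_grad(x))


def akkt_iterate(k):
    """Hypothetical AKKT sequence: x^k = -1/k, lambda^k = 1, mu^k = k/2."""
    return -1.0 / k, 1.0, k / 2.0


for k in (1, 10, 100, 1000):
    x_k, lam_k, mu_k = akkt_iterate(k)
    print(k, x_k, mu_k, stationarity_residual(x_k, lam_k, mu_k))

# At x_bar = 0 the residual equals 1 for every mu >= 0, so exact KKT fails.
print(stationarity_residual(0.0, 1.0, 5.0))
```

Along this sequence (A0) and (A2) hold, the residual vanishes up to rounding, and (A3) is vacuous because \(g({\bar{x}})=0\); the multipliers \(\mu ^k\) are unbounded, which is why no limit multiplier exists.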

We are now ready to state and prove our main results.

Theorem 3.1

If \({\bar{x}}\in {\mathcal {F}}\) is a local weak efficient solution of (MOP), then there exist sequences \(\{x^k\}\) and \(\{(\lambda ^k, \mu ^k, \tau ^k)\}\) satisfying the AKKT condition at \({\bar{x}}\). Furthermore, we can choose these sequences such that the following conditions hold:

- (E1) \(\mu _i^k=b_k\max (g_i(x^k), 0)\geqq 0\), \(\forall i\in {\mathcal {I}}\), and \(\tau _j^k=c_kh_j(x^k)\geqq 0\), \(\forall j\in {\mathcal {J}}\), where \(b_k, c_k>0\), \(\forall k\in {\mathbb {N}}\),

- (E2) \(f_l(x^k)-f_l({\bar{x}})+\frac{1}{2}\left[ \sum _{i=1}^{m}\mu _i^kg_i(x^k)+ \sum _{j=1}^{r}\tau ^k_jh_j(x^k)\right] \leqq 0\), \(\forall k\in {\mathbb {N}}\), \(l\in {\mathcal {L}}\).

Proof

Since \({\bar{x}}\) is a local weak efficient solution of (MOP) and \(f_l\), \(g_i\), \(h_j\) are locally Lipschitz functions, we can choose \(\delta >0\) such that these functions are Lipschitz on \(B({\bar{x}}, \delta ):=\{x\in {\mathbb {R}}^n: \Vert x-{\bar{x}}\Vert \leqq \delta \}\) and \({\bar{x}}\) is a global weak efficient solution of f on \({\mathcal {F}}\cap B({\bar{x}}, \delta )\). It is easily seen that \({\bar{x}}\) is also a global minimizer of the function \(\phi (\cdot ):=\max \{f_l(\cdot )-f_l({\bar{x}}): l\in {\mathcal {L}}\}\) on \({\mathcal {F}}\cap B({\bar{x}}, \delta )\).

For each \(k\in {\mathbb {N}}\), we consider the following problem

where

Clearly, \(\varphi _k\) is continuous on the compact set \(B({\bar{x}}, \delta )\). Thus, by the Weierstrass theorem, the problem (\(\hbox {P}_k\)) admits an optimal solution, say \(x^k\). This and the fact that \(\varphi _k({\bar{x}})=0\) imply that

or, equivalently,

By the continuity of \(\phi \) and the bound \(\Vert x^k-{\bar{x}}\Vert \leqq \delta \), the right-hand side of (2) tends to zero as k tends to infinity. Hence,

This and the continuity of the functions \(\max (g_i(\cdot ),0)\) and \(h_j\) imply that every accumulation point of \(\{x^k\}\) must belong to \({\mathcal {F}}\). Since \(\{x^k\}\subset B({\bar{x}}, \delta )\), the sequence has at least one accumulation point, say \({\tilde{x}}\in {\mathcal {F}}\). By (1), one has

Passing the last inequality to the limit as \(k\rightarrow \infty \), we get

This and \(\phi ({\tilde{x}})\geqq 0\) imply that \({\tilde{x}}={\bar{x}}\). This means that the bounded sequence \(\{x^k\}\) has the unique accumulation point \({\bar{x}}\) and thus converges to \({\bar{x}}\). Consequently, \(x^k\) belongs to the interior of \(B({\bar{x}}, \delta )\) for k large enough. Thanks to Proposition 2.6, we have

By Propositions 2.1–2.5, one has

where

with

and

Hence, (3) implies that

This means that there exist \((\lambda _1^k, \ldots , \lambda _p^k)\in \Lambda (x^k)\), \(\xi _l^k\in \partial f_l (x^k)\), \(\eta _i^k\in \partial g_i(x^k)\) and \(\gamma _j^k\in [\partial h_j(x^k)\cup \partial (-h_j)(x^k)]\) such that

Hence,

Setting \( \lambda ^k=(\lambda _1^k, \ldots , \lambda _p^k), \mu ^k=(\mu _1^k, \ldots , \mu _m^k), \tau ^k=(\tau _1^k, \ldots , \tau _r^k),\) where

For each \(k\in {\mathbb {N}}\), we have

This and \(\lim \nolimits _{k\rightarrow \infty }x^k={\bar{x}}\) imply that \(\lim \nolimits _{k\rightarrow \infty }{\mathfrak {m}}(x^k; \lambda ^k, \mu ^k, \tau ^k)=0.\) Thus, \({\bar{x}}\) satisfies conditions (A0)–(A2). If \(g_i({\bar{x}})<0\) for some \(i\in {\mathcal {I}}\), then \(g_i(x^k)<0\) for k large enough. Consequently, \(\mu _i^k=0\) for k large enough and we therefore get condition (A3).

For each \(j\in {\mathcal {J}}\), by passing to a subsequence if necessary, we may assume that \(h_j(x^k)\geqq 0\) for all \(k\in {\mathbb {N}}\), or \(h_j(x^k)< 0\) for all \(k\in {\mathbb {N}}\). For the last case, by replacing \(h_j\) by \({\bar{h}}_j:=-h_j\), one has

and \({\bar{h}}_j(x^k)\geqq 0\) for all \(k\in {\mathbb {N}}\). Hence we may assume that \(h_j(x^k)\geqq 0\) for all \(k\in {\mathbb {N}}\) and \(j\in {\mathcal {J}}\). This means that \(\tau ^k_j=kh_j(x^k)\geqq 0\) for all \(k\in {\mathbb {N}}\) and \(j\in {\mathcal {J}}\) and we therefore get condition (E1). Moreover, we see that

Thus, (1) can be rewritten as

and condition (E2) follows. The proof is complete. \(\square \)
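The construction in the proof can be tried on a one-dimensional nonsmooth toy problem. The Python sketch below is a minimal illustration under stated assumptions: it takes \(f(x)=|x-1|\), \(g(x)=x\), no equality constraints and \({\bar{x}}=0\), and it assumes a penalized objective of the form \(\phi (x)+\frac{k}{2}\max (g(x),0)^2+\frac{1}{2}\Vert x-{\bar{x}}\Vert ^2\) with \(b_k=k\), which is consistent with the multiplier formula in (E1) but is a choice made here for illustration. The names `penalized` and `argmin_on_ball` and the ternary search are hypothetical.

```python
def penalized(x, k, x_bar=0.0):
    """Assumed penalized objective for f(x) = |x - 1| and g(x) = x <= 0."""
    shifted_objective = abs(x - 1.0) - abs(x_bar - 1.0)  # phi(x) with p = 1
    infeasibility = max(x, 0.0)                          # max(g(x), 0)
    return shifted_objective + 0.5 * k * infeasibility ** 2 + 0.5 * (x - x_bar) ** 2


def argmin_on_ball(k, x_bar=0.0, delta=1.0, iters=200):
    """Ternary search on [x_bar - delta, x_bar + delta]; valid since penalized is convex."""
    lo, hi = x_bar - delta, x_bar + delta
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3.0
        m2 = hi - (hi - lo) / 3.0
        if penalized(m1, k, x_bar) <= penalized(m2, k, x_bar):
            hi = m2
        else:
            lo = m1
    return 0.5 * (lo + hi)


for k in (1, 10, 100, 1000):
    x_k = argmin_on_ball(k)
    mu_k = k * max(x_k, 0.0)  # multiplier of the form b_k * max(g(x^k), 0)
    e2_value = abs(x_k - 1.0) - 1.0 + 0.5 * mu_k * x_k  # left-hand side of (E2)
    print(k, x_k, mu_k, e2_value)
```

For this toy problem the stationarity equation on \((0, 1)\) is \(-1+kx+x=0\), so the minimizers are \(x^k=1/(k+1)\); they converge to \({\bar{x}}=0\), and \(\mu ^k=k/(k+1)\) tends to 1, which is the exact KKT multiplier at \({\bar{x}}\) because \(\partial f(0)=\{-1\}\) and \(\partial g(0)=\{1\}\).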

Remark 3.1

If \(h_j\), \(j\in {\mathcal {J}}\), are continuously differentiable functions, then

In this case, we can choose \(\gamma _j^k=\nabla h_j(x^k)\) for all \(j\in {\mathcal {J}}\) and \(k\in {\mathbb {N}}\). Thus, the conclusions of Theorem 3.1 still hold if condition (A1) is replaced by the following condition:

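- (A1)\(^\prime \): \({\mathfrak {m}}^\prime (x^k; \lambda ^k, \mu ^k, \tau ^k)\rightarrow 0\) as \(k\rightarrow \infty \), where

$$\begin{aligned} {\mathfrak {m}}^\prime (x^k; \lambda ^k, \mu ^k, \tau ^k):=\inf \left\{ \left\| \sum _{l=1}^{p}\lambda _l^k\xi _l+\sum _{i=1}^{m}\mu _i^k\eta _i+\sum _{j=1}^{r}\tau _j^k\nabla h_j(x^k)\right\| \,:\, \xi _l\in \partial f_l(x^k),\ \eta _i\in \partial g_i(x^k)\right\} . \end{aligned}$$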

Conditions (A0), (A1)\(^\prime \), (A2) and (A3) are collectively called the AKKT\(^\prime \) condition. If the problem (MOP) has no equality constraints, then conditions (A1) and (A1)\(^\prime \) coincide. In general, condition (A1)\(^\prime \) is stronger than condition (A1) because

$$\begin{aligned} 0\leqq {\mathfrak {m}}(x^k; \lambda ^k, \mu ^k, \tau ^k)\leqq {\mathfrak {m}}^\prime (x^k; \lambda ^k, \mu ^k, \tau ^k). \end{aligned}$$

Thus, if \({\bar{x}}\) satisfies the AKKT\(^\prime \) condition with respect to sequences \(\{x^k\}\) and \(\{(\lambda ^k, \mu ^k, \tau ^k)\}\), then it also satisfies the AKKT condition with respect to the same sequences.

Definition 3.3

(See [9, Remark 3.2]) Let \({\bar{x}}\) be a feasible point of (MOP). We say that:

- (i) \({\bar{x}}\) satisfies the sign condition (SGN) with respect to sequences \(\{x^k\}\subset {\mathbb {R}}^n\) and \(\{(\lambda ^k, \mu ^k, \tau ^k)\}\subset {\mathbb {R}}^p_+\times {\mathbb {R}}^m_+\times {\mathbb {R}}^r\) iff, for every \(k\in {\mathbb {N}}\), one has

$$\begin{aligned} \mu _i^k g_i(x^k)\geqq 0, i\in {\mathcal {I}},\;\;\text {and}\;\; \tau _j^k h_j(x^k)\geqq 0, j\in {\mathcal {J}}. \end{aligned}$$

- (ii) \({\bar{x}}\) satisfies the sum converging to zero condition (SCZ) with respect to sequences \(\{x^k\}\subset {\mathbb {R}}^n\) and \(\{(\lambda ^k, \mu ^k, \tau ^k)\}\subset {\mathbb {R}}^p_+\times {\mathbb {R}}^m_+\times {\mathbb {R}}^r\) iff

$$\begin{aligned} \sum _{i=1}^{m}\mu _i^kg_i(x^k)+\sum _{j=1}^{r} \tau _j^kh_j(x^k)\rightarrow 0\;\;\text {as}\;\;k\rightarrow \infty . \end{aligned}$$
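Both conditions can be monitored numerically along given sequences. The Python sketch below is only an illustration: the helper names `sgn_holds` and `scz_sum` are hypothetical, and the data are a toy choice with one inequality constraint \(g(x)=x^2\), no equality constraints, \(x^k=-1/k\) and \(\mu ^k=k/2\).

```python
def sgn_holds(mu, tau, g_vals, h_vals):
    """SGN at one index k: mu_i * g_i(x^k) >= 0 and tau_j * h_j(x^k) >= 0."""
    inequality_ok = all(m * gv >= 0.0 for m, gv in zip(mu, g_vals))
    equality_ok = all(t * hv >= 0.0 for t, hv in zip(tau, h_vals))
    return inequality_ok and equality_ok


def scz_sum(mu, tau, g_vals, h_vals):
    """The sum that SCZ requires to tend to zero as k grows."""
    return (sum(m * gv for m, gv in zip(mu, g_vals))
            + sum(t * hv for t, hv in zip(tau, h_vals)))


def toy_data(k):
    """Toy sequences (assumption): g(x) = x**2, x^k = -1/k, mu^k = k/2, no h."""
    x_k = -1.0 / k
    return [k / 2.0], [], [x_k * x_k], []


for k in (1, 10, 100, 1000):
    mu, tau, g_vals, h_vals = toy_data(k)
    print(k, sgn_holds(mu, tau, g_vals, h_vals), scz_sum(mu, tau, g_vals, h_vals))
```

In this toy case the sum equals \(1/(2k)\) up to rounding, so SGN holds for every k and the SCZ sum decreases to zero.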

Remark 3.2

Clearly, if condition (E1) holds at \({\bar{x}}\), then so does condition SGN. Moreover, thanks to [9, Remark 3.2], conditions (A0), SGN and (E2) imply condition SCZ. The converse does not hold in general; see [9, Remark 3.4].

The following result gives sufficient optimality conditions for (global) weak efficient solutions of convex problems.

Theorem 3.2

Assume that \(f_l\) \((l=1, \ldots , p)\) and \(g_i\) \((i=1, \ldots , m)\) are convex and \(h_j\) \((j=1, \ldots , r)\) are affine. If \({\bar{x}}\) satisfies conditions AKKT\(^\prime \) and SCZ with respect to sequences \(\{x^k\}\subset {\mathbb {R}}^n\) and \(\{(\lambda ^k, \mu ^k, \tau ^k)\}\subset {\mathbb {R}}^p_+\times {\mathbb {R}}^m_+\times {\mathbb {R}}^r\), then \({\bar{x}}\) is a weak efficient solution of (MOP).

Proof

Suppose, to the contrary, that \({\bar{x}}\) is not a weak efficient solution of (MOP). Then, there exists \({{\hat{x}}}\in {\mathcal {F}}\) such that

$$\begin{aligned} f({\hat{x}})<f({\bar{x}}). \end{aligned}$$ (4)

By condition (A2), without any loss of generality, we may assume that \(\lambda ^k\rightarrow \lambda \) with \(\lambda \ge 0\) and \(\sum _{l=1}^{p}\lambda _l=1\). For k large enough, the sets \(\partial f_l(x^k)\) and \(\partial g_i(x^k)\) are compact. Hence, there exist \(\xi ^k_l\in \partial f_l(x^k)\) and \(\eta ^k_i\in \partial g_i(x^k)\) such that

As \(f_l\) and \(g_i\) are convex and \(h_j\) are affine, for each \(k\in {\mathbb {N}}\), we have

Multiplying (5) by \(\lambda _l^k\), (6) by \(\mu _i^k\), (7) by \(\tau ^k_j\) and adding up, we obtain

where \(\sigma _k:=(\sum _{l=1}^{p}\lambda _l^k\xi ^k_l+\sum _{i=1}^{m}\mu ^k_i\eta ^k_i+\sum _{j=1}^{r}\tau ^k_j\nabla h_j(x^k))({\hat{x}}-x^k).\) Since \(x^k\rightarrow {\bar{x}}\) and \({\mathfrak {m}}^\prime (x^k; \lambda ^k, \mu ^k, \tau ^k)\rightarrow 0\) as \(k\rightarrow \infty \), and

we have \(\lim \limits _{k\rightarrow \infty }\sigma _k= 0\). By condition SCZ, taking the limit in (8), we obtain

Moreover, by \(\lambda \ge 0\) and (4), we have \( \sum _{l=1}^{p}\lambda _lf_l({\hat{x}})<\sum _{l=1}^{p}\lambda _lf_l({\bar{x}}),\) contrary to (9). The proof is complete. \(\square \)

Clearly, if f, g and h are continuously differentiable, then Theorems 3.1 and 3.2 reduce to [9, Theorems 3.1, 3.2], respectively.

We now show that, under the additional assumptions that the quasi-normality constraint qualification and condition (E1) hold at a given feasible solution \({\bar{x}}\), an AKKT condition is also a KKT one.

Definition 3.4

We say that \({\bar{x}}\in {\mathcal {F}}\) satisfies the KKT optimality condition iff there exists a multiplier \((\lambda , \mu , \tau )\) in \({\mathbb {R}}^p_+\times {\mathbb {R}}^m_+\times {\mathbb {R}}^r\) such that

- (i) \(\lambda \ge 0\),

- (ii) \(0\in \sum _{l=1}^{p}\lambda _l\partial f_l({\bar{x}})+\sum _{i=1}^{m}\mu _i\partial g_i({\bar{x}})+\sum _{j=1}^{r}\tau _j[\partial h_j({\bar{x}})\cup \partial (-h_j)({\bar{x}})]\),

- (iii) \(\mu _i g_i({\bar{x}})=0\), \(i\in {\mathcal {I}}\).

Definition 3.5

We say that \({\bar{x}}\in {\mathcal {F}}\) satisfies the quasi-normality constraint qualification (QNCQ) iff there is no multiplier \((\mu , \tau )\in {\mathbb {R}}^m_+\times {\mathbb {R}}^r\) satisfying

- (i) \((\mu , \tau )\ne 0\),

- (ii) \(0\in \sum _{i=1}^{m}\mu _i\partial g_i({\bar{x}})+\sum _{j=1}^{r}\tau _j[\partial h_j({\bar{x}})\cup \partial (-h_j)({\bar{x}})]\),

- (iii) in every neighborhood of \({\bar{x}}\) there is a point \(x\in {\mathbb {R}}^n\) such that \(g_i(x)>0\) for all i having \(\mu _i> 0\), and \(\tau _jh_j(x)>0\) for all j having \(\tau _j\ne 0\).

Theorem 3.3

Let \({\bar{x}}\in {\mathcal {F}}\) be such that conditions AKKT and (E1) are satisfied with respect to sequences \(\{x^k\}\) and \(\{(\lambda ^k, \mu ^k, \tau ^k)\}\). If the QNCQ holds at \({\bar{x}}\), then so does the KKT optimality condition.

Proof

For each \(k\in {\mathbb {N}}\), put \(\delta _k=\Vert (\lambda ^k, \mu ^k, \tau ^k)\Vert \). By condition (A2), we have

Since \(\Vert \frac{1}{\delta _k}(\lambda ^k, \mu ^k, \tau ^k)\Vert =1\) for all \(k\in {\mathbb {N}}\), we may assume that the sequence \(\{\frac{1}{\delta _k}(\lambda ^k, \mu ^k, \tau ^k)\}\) converges to \((\lambda , \mu , \tau )\in ({\mathbb {R}}^p_+\times {\mathbb {R}}^m_+\times {\mathbb {R}}^r){\setminus }\{0\}\) as k tends to infinity. By condition (A0) and Proposition 2.2, for k large enough, the sets \(\partial f_l(x^k)\), \(\partial g_i(x^k)\) and \([\partial h_j(x^k)\cup \partial (-h_j)(x^k)]\) are compact. Thus, there exist \(\xi _l^k\in \partial f_l(x^k)\), \(\eta _i^k\in \partial g_i(x^k)\) and \(\gamma _j^k\in [\partial h_j(x^k)\cup \partial (-h_j)(x^k)]\) such that

for k large enough. Since \(f_l, g_i\) and \(h_j\) are locally Lipschitz around \({\bar{x}}\), without any loss of generality, we may assume that these functions are locally Lipschitz around \({\bar{x}}\) with the same modulus L. Again by condition (A0) and Proposition 2.2, for k large enough, one has \( (\xi _l^k, \eta _i^k, \gamma _j^k)\in L {\mathbb {B}}^n\times L{\mathbb {B}}^n\times L{\mathbb {B}}^n.\) By replacing \(\{(\xi _l^k, \eta _i^k, \gamma _j^k)\}\) by a subsequence if necessary, we may assume that this sequence converges to some \((\xi _l, \eta _i, \gamma _j)\in {\mathbb {R}}^n\times {\mathbb {R}}^n\times {\mathbb {R}}^n\). By Proposition 2.7, we have

From conditions (A1) and (10), dividing both sides of (11) by \(\delta _k\) and taking the limits, we have

Thanks to condition (A3), one has \(\mu _i g_i({\bar{x}})=0 \ \ \text {for all} \ \ i\in {\mathcal {I}}\). We claim that \(\lambda \ne 0\). Indeed, if otherwise, one has \((\mu , \tau )\ne 0\) and

By condition (10) and \(\frac{1}{\delta _k}\mu _i^k\rightarrow \mu _i\) as \(k\rightarrow \infty \), we see that if \(\mu _i>0\), then \(\mu _i^k>0\) for k large enough. Hence, due to condition (E1), we obtain \(g_i(x^k)>0\) for all k large enough. Similarly, if \(\tau _j\ne 0\), then \(\tau _j h_j(x^k)>0\) for k large enough. Since \(x^k\rightarrow {\bar{x}}\), the multiplier \((\mu , \tau )\) satisfies conditions (i)–(iii) in Definition 3.5, contrary to the fact that \({\bar{x}}\) satisfies the QNCQ. The proof is complete. \(\square \)

We finish this section with the following remarks.

Remark 3.3

- (i) It is well known that if \({\bar{x}}\) is a weak efficient solution of (MOP) and satisfies the QNCQ, then the KKT condition holds at this point; see [7, Theorem 3.3]. This fact may not hold if \({\bar{x}}\) is not a weak efficient solution.

- (ii) If condition (E1) does not hold, then the AKKT condition and the QNCQ do not guarantee that the KKT optimality condition holds, even for smooth scalar optimization problems; see [4, Example 4] for more details.

- (iii) Analysis similar to that in the proof of Theorem 3.3 shows that Theorems 4.1–4.5 in [9] can be extended to multiobjective optimization problems with locally Lipschitz data. We leave the details to the reader.

References

1. Andreani, R., Birgin, E.G., Martínez, J.M., Schuverdt, M.L.: On augmented Lagrangian methods with general lower-level constraints. SIAM J. Optim. 18, 1286–1309 (2007)

2. Andreani, R., Martínez, J.M., Svaiter, B.F.: A new sequential optimality condition for constrained optimization and algorithmic consequences. SIAM J. Optim. 20, 3533–3554 (2010)

3. Andreani, R., Haeser, G., Martínez, J.M.: On sequential optimality conditions for smooth constrained optimization. Optimization 60, 627–641 (2011)

4. Andreani, R., Martínez, J.M., Ramos, A., Silva, P.J.S.: A cone-continuity constraint qualification and algorithmic consequences. SIAM J. Optim. 26, 96–110 (2016)

5. Birgin, E.G., Martínez, J.M.: Practical augmented Lagrangian methods for constrained optimization. In: Nicholas, J.H. (ed.) Fundamentals of Algorithms, pp. 1–220. SIAM Publications, Philadelphia (2014)

6. Borwein, J.M., Zhu, Q.J.: Techniques of Variational Analysis. Springer, New York (2005)

7. Chuong, T.D., Kim, D.S.: Optimality conditions and duality in nonsmooth multiobjective optimization problems. Ann. Oper. Res. 217, 117–136 (2014)

8. Dutta, J., Deb, K., Tulshyan, R., Arora, R.: Approximate KKT points and a proximity measure for termination. J. Glob. Optim. 56, 1463–1499 (2013)

9. Giorgi, G., Jiménez, B., Novo, V.: Approximate Karush–Kuhn–Tucker condition in multiobjective optimization. J. Optim. Theory Appl. 171, 70–89 (2016)

10. Haeser, G., Schuverdt, M.L.: On approximate KKT condition and its extension to continuous variational inequalities. J. Optim. Theory Appl. 149, 528–539 (2011)

11. Haeser, G., Melo, V.V.: Convergence detection for optimization algorithms: approximate-KKT stopping criterion when Lagrange multipliers are not available. Oper. Res. Lett. 43, 484–488 (2015)

12. Mangasarian, O.L.: Nonlinear Programming. McGraw-Hill, New York (1969)

13. Mordukhovich, B.S.: Variational Analysis and Generalized Differentiation I: Basic Theory. Springer, Berlin (2006)

14. Penot, J.P.: Calculus without derivatives. In: Sheldon, A., Kenneth, R. (eds.) Graduate Texts in Mathematics, vol. 266, pp. 1–524. Springer, New York (2013)

Acknowledgements

The authors would like to thank the referees for their constructive comments, which significantly improved the presentation of the paper. J.-C. Yao and C.-F. Wen are supported by the Taiwan MOST (Grant Nos. 106-2923-E-039-001-MY3, 106-2115-M-037-001), respectively, as well as the Grant from Research Center for Nonlinear Analysis and Optimization, Kaohsiung Medical University, Taiwan.

Cite this article

Van Tuyen, N., Yao, JC. & Wen, CF. A note on approximate Karush–Kuhn–Tucker conditions in locally Lipschitz multiobjective optimization. Optim Lett 13, 163–174 (2019). https://doi.org/10.1007/s11590-018-1261-y

DOI: https://doi.org/10.1007/s11590-018-1261-y