Abstract

We extend the so-called approximate Karush–Kuhn–Tucker condition from a scalar optimization problem with equality and inequality constraints to a multiobjective optimization problem. We prove that this condition is necessary for a point to be a local weak efficient solution without any constraint qualification, and is also sufficient under convexity assumptions. We also state that an enhanced Fritz John-type condition is also necessary for local weak efficiency, and under the additional quasi-normality constraint qualification becomes an enhanced Karush–Kuhn–Tucker condition. Finally, we study some relations between these concepts and the notion of bounded approximate Karush–Kuhn–Tucker condition, which is introduced in this paper.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Karush–Kuhn–Tucker (KKT) optimality conditions play an important role in optimization theory, both for scalar optimization and for multiobjective optimization. The KKT conditions are satisfied at a weak efficient point, provided a suitable constraint qualification holds.

Other kind of optimality conditions, named Fritz John-type conditions, do not require any constraint qualification. Such conditions are true in the scalar case and also for the multiobjective problem.

Another type of necessary optimality conditions in scalar problems, that do not require a constraint qualification, are the so-called “approximate optimality conditions” or also “asymptotic optimality conditions” or “sequential optimality conditions,” in which appropriate sequences of points and multipliers are considered. These conditions are investigated since several decades. We can quote the works of Kortanek and Evans [1], for pseudoconcave programming, the paper of Fiacco and McCormick [2], the paper of Zlobec [3], where this last author generalizes, in an asymptotic way, the classic optimality conditions given by Guignard and the book of Hestenes [4]. Asymptotic versions of the Karush–Kuhn–Tucker conditions are considered also by McShane [5], Craven [6] and Trudzik [7].

Recently, several works have been devoted to the study of such conditions for the interest they have in the design and analysis of algorithms to check this kind of approximate optimality conditions; see, for example, Haeser and Schuverdt [8], Andreani, Haeser and Martínez [9], Andreani, Martínez and Svaiter [10], Dutta et al. [11] and Haeser and Melo [12].

Despite of its great interest, this type of optimality conditions has not been studied in multiobjective optimization; in our knowledge this paper is the first dedicated to this topic.

In this paper, we deal with the study of the approximate KKT condition for a continuously differentiable multiobjective problem in finite dimensional spaces, whose feasible set is defined by inequality and equality constraints. In the main result (Sect. 3) we extend to this context the necessary optimality conditions obtained for scalar problems via approximate KKT conditions. We also prove that these conditions are also sufficient conditions under convexity assumptions. Illustrative examples are given.

In Sect. 4 we obtain a necessary optimality condition of enhanced Fritz John-type (in this case, in addition to the classic Fritz John conditions, the multipliers satisfy some additional sensitivity-like conditions). This class of multipliers was obtained by Bertsekas and Ozdaglar [13] for scalar optimization problems. Moreover, we study the relationships between these conditions and the above one, we introduce the notions of enhanced (respectively weak enhanced) KKT-point and state that the approximate KKT condition is sufficient to have an enhanced KKT-point under a weak constraint qualification, called quasi-normality constraint qualification. We also introduce the notion of bounded approximate KKT condition and study the relations with the above concepts.

2 Preliminaries

Recall that, for a scalar function, the so-called Clarke subdifferential is defined as follows [14]: The upper Clarke directional derivative of a locally Lipschitz function \(\varphi :\mathbb {R}^n\rightarrow \mathbb {R}\) at \(x\in \mathbb {R}^n\) in the direction \(d\in \mathbb {R}^n\) is

and the Clarke subdifferential of \(\varphi \) at x is given by

where \(\langle \cdot , \cdot \rangle \) stands for the Euclidean scalar product. When \(\varphi \) is continuously differentiable, one has \(\partial _C \varphi (x)=\{\nabla \varphi (x)\}\). Next, we collect some properties of this subdifferential that can be found in [14].

Proposition 2.1

-

(i)

Let \(\varphi :\mathbb {R}^n\rightarrow \mathbb {R}\) be locally Lipschitz and \(\psi :\mathbb {R}^n\rightarrow \mathbb {R}\) continuously differentiable. Then,

$$\begin{aligned} \partial _C (\varphi +\psi )(x)=\partial _C \varphi (x)+\{\nabla \psi (x)\}. \end{aligned}$$ -

(ii)

If \(x^0\) is a local minimum of \(\varphi \), then \(0\in \partial _C \varphi (x^0)\).

-

(iii)

If \(\varphi (x)=\max \{\varphi _1(x),\dots ,\varphi _p(x)\}\), where \(\varphi _1,\dots ,\varphi _p:\mathbb {R}^n\rightarrow \mathbb {R}\) are continuously differentiable, then

$$\begin{aligned} \partial _C\varphi (x)=\mathop {\mathrm{conv}}\nolimits \{\nabla \varphi _j(x):\,j=1,\dots ,p~\text {such that } \varphi _j(x)=\varphi (x)\}, \end{aligned}$$(here \(\mathop {\mathrm{conv}}\nolimits \) denotes the convex hull).

We denote by \(B(x^0,\delta )\) (respectively \(\bar{B}(x^0,\delta )\)) the open (respectively closed) ball centered at \(x^0\in \mathbb {R}^n\) and radius \(\delta >0\). In general, for a vector \(x\in \mathbb {R}^n\), we denote its components by \(x_i\) (\(i=1,\dots ,n\)). We denote by \(\mathbb {R}_+^n\) the nonnegative orthant of \(\mathbb {R}^n\).

For \(a\in \mathbb {R}\), we denote \(a_+:=\max \{0,a\}\), \(a_+^2:=(a_+)^2\), \(\Vert \cdot \Vert \) is the Euclidean norm of \(\mathbb {R}^n\) except otherwise is specified. For \(y,z\in \mathbb {R}^n\), as usual we write \(y\le z\) if \(y_i\le z_i\) for \(i=1,\dots ,n\); \(y<z\) if \(y_i< z_i\) for \(i=1,\dots ,n\).

We consider the following multiobjective optimization problem:

where \(S:=\{x\in \mathbb {R}^n: ~ g(x)\le 0, ~ h(x)=0\}\), \(f:\mathbb {R}^n\rightarrow \mathbb {R}^p\), \(g:\mathbb {R}^n\rightarrow \mathbb {R}^m\) and \(h:\mathbb {R}^n\rightarrow \mathbb {R}^r\) are continuously differentiable functions. The components of these functions are \(f_l\) \((l=1,\dots , p)\), \(g_j\) \((j=1\), \(\dots ,m)\) and \(h_i\) \((i=1,\dots ,r)\). The set of active indexes at a point \(x\in S\) is given by \(J(x):=\{j:\,g_j(x)=0\}\).

A point \(x^0 \in S\) is an efficient (respectively weak efficient) solution for (MOP) iff there exists no \(x\in S\) such that \(f(x)\le f(x^0)\), \(f(x)\ne f(x^0)\) (respectively \(f(x)<f(x^0))\). The set of all efficient (respectively weak efficient) solutions of (MOP) is denoted by \(\mathop {\mathrm{Min}}(f,S)\) (respectively \(\mathop {\mathrm{WMin}}\nolimits (f,S))\). A point \(x^0 \in S\) is a local weak efficient solution for (MOP) if there exists a neighborhood U of \(x^0\) such that \(x^0\) is a weak efficient solution on \(S\cap U\).

3 Approximate KKT Condition for Multiobjective Optimization Problems

Next we introduce the concept of approximate Karush–Kuhn–Tucker condition for the multiobjective problem (MOP) inspired by the works by Andreani, Haeser and Martínez [9], Andreani, Martínez and Svaiter [10], Haeser and Schuverdt [8] and Dutta et al. [11]. We point out that all the mentioned authors are concerned with scalar optimization problems.

Definition 3.1

We say that the approximate Karush–Kuhn–Tucker condition (AKKT) is satisfied for (MOP) at a feasible point \(x^0\in S\) iff there exist sequences \((x^k)\subset \mathbb {R}^n\) and \((\lambda ^k,\mu ^k,\tau ^k)\subset \mathbb {R}_+^p\times \mathbb {R}_+^m\times \mathbb {R}^r\) such that

This condition is introduced here for the first time in multiobjective optimization problems, and it extends in a natural way the AKKT condition studied in scalar optimization problems (see, for example, [8]). Points satisfying the AKKT condition will be called AKKT points. Let us observe that the sequence of points \((x^k)\) is not required to be feasible.

Remark 3.1

Assuming \(\mu ^k\in \mathbb {R}_+^m\), condition (A3) is clearly equivalent to

Each of these conditions implies the condition

In order to establish necessary optimality conditions for problem (MOP), we are going to scalarize it. To this aim, we consider the nonsmooth function \(\phi :\mathbb {R}^p\rightarrow \mathbb {R}\) defined by

Clearly, \(\phi (y)\le 0\) \(\Leftrightarrow \) \(y\le 0\), and \(\phi (y)< 0\) \(\Leftrightarrow \) \(y<0\).

Furthermore the following result is well known. We report here for the reader’s convenience the result and its proof.

Lemma 3.1

If \(x^0\in \mathop {\mathrm{WMin}}\nolimits (f,S)\), then \(x^0\in \mathop {\mathrm{Min}}(\phi (f(\cdot )-f(x^0)),S)\).

Proof

Suppose that \(x^0\notin \mathop {\mathrm{Min}}(\phi (f(\cdot )-f(x^0),S)\), then there exists \(\hat{x}\in S\) such that \(\phi (f(\hat{x})-f(x^0))<\phi (f(x^0)-f(x^0))=0\). It follows that \(f(\hat{x})-f(x^0)<0\), which contradicts the hypothesis. \(\square \)

Theorem 3.1

If \(x^0\in S\) is a local weak efficient solution of problem (MOP), then \(x^0\) satisfies the AKKT condition with sequences \((x^k)\) and \((\lambda ^k,\mu ^k,\tau ^k)\). In addition, for these sequences we have that

Proof

By assumption, there exists \(\delta >0\) such that \(x^0\in \mathop {\mathrm{WMin}}\nolimits (f,S\cap B(x^0,\delta ))\), and by Lemma 3.1, we deduce that \(x^0\in \mathop {\mathrm{Min}}(\phi (f(\cdot )-f(x^0)),S\cap B(x^0,\delta ))\). In consequence, we may suppose that \(x^0\) is the unique solution of problem

(choosing a small \(\delta \) if necessary).

We define, for each \(k\in \mathbb {N}\),

and let \(x^k\) be a solution of problem

Let us observe that \(x^k\) exists because \(\psi _k\) is continuous and \(\bar{B}(x^0,\delta )\) is compact.

Let z be an accumulation point of \((x^k\)). We may suppose that \(x^k\rightarrow z\) (choosing a subsequence if necessary).

On one hand, we have

because

On the other hand, as \(x^0\) is a feasible point of problem (4) and \(x^k\) is a solution, one has

since \(x^0\in S\) and \(\phi (f(x^0)-f(x^0))=\phi (0)=0\).

Let us prove that z is a feasible point of problem (3).

Indeed, first as \(\Vert x^k-x^0\Vert \le \delta \) if follows that \(\Vert z-x^0\Vert \le \delta \).

Second, suppose that

Then, there exists \(c>0\) such that

for all k large enough, by continuity and because \(x^k\rightarrow z\). Now, as

taking the limit we obtain \(\psi _k(x^k)\rightarrow +\infty \), which contradicts (5). In consequence, \(\sum _{j=1}^m g_j(z)_+^2+ \sum _{i=1}^{r}h_i(z)^2=0\), and this implies that \(z\in S\).

From (5) one has

and, as \(\sum _{j=1}^m k g_j(x^k)_+^2+ \sum _{i=1}^{r} k h_i(x^k)^2\ge 0\), we get

Taking the limit it results

As \(x^0\) is the unique solution of problem (3) with value 0, we conclude that \(z=x^0\). Therefore, \(x^k\rightarrow x^0\) and \(\Vert x^k-x^0\Vert <\delta \) for all k sufficiently large.

Now, as \(x^k\) is a solution of the nonsmooth problem (4) and it is an interior point of the feasible set, for k large enough, from Proposition 2.1(ii) it follows that \(0\in \partial _C\psi _k(x^k)\). By applying Proposition 2.1, parts (i) and (iii), we have

Hence, there exist \(\lambda _l^k\ge 0\), \(l=1,\dots , p\), such that \(\sum _{l=1}^p \lambda _l^k=1\) and

Choosing for each k

we see that \(x^0\) satisfies conditions (A1), (A2) and (E1). If \(g_j(x^0)<0\), then \(g_j(x^k)<0\) for all k large enough, and so \(\mu _j^k=kg_j(x^k)_+=0\); therefore (A3) is also satisfied.

From (7), clearly for all \(j=1,\dots , m\) and for all \(i=1,\dots , r\),

In consequence, (6) can be rewritten as

From here, condition (E2) follows and the proof is finished. \(\square \)

We highlight that in Theorem 3.1, we do not require any constraint qualification, that is, these necessary optimality conditions are true for any local weak efficient solution without additional requirements. Let us observe also that condition (E1) says that the multipliers \(\mu ^k\) and \(\tau ^k\) are proportional, respectively, to \(g(x^k)_+\) and \(h(x^k)\). Condition (E1) is usually satisfied, for example, when the external penalty method is applied [8].

Next we illustrate Theorem 3.1 with an example.

Example 3.1

Consider the following multiobjective problem:

where

Let us note that it is a nonconvex problem. The point \(x^0=(1,1)\) is a weak efficient solution as can be checked, and so Theorem 3.1 can be applied. In order to find sequences satisfying (A0)–(A3), (E1) and (E2), first let us solve the equation

with \(\lambda _1,\lambda _2,\mu \ge 0\), \(\lambda _1+\lambda _2=1\). We obtain for \(x_2<-1\) or \(x_2\ge 0\),

Second, consider the nonfeasible points \(x^\varepsilon =(1,1+6\varepsilon )\), \(\varepsilon >0\). Then, from (9) it results

Let \(\mu ^\varepsilon =\frac{8}{11+60\varepsilon }\) and \(\tilde{\mu }^\varepsilon =\frac{24\varepsilon }{11+60\varepsilon }\) so that \(\bar{\mu }^\varepsilon =\mu ^\varepsilon +\tilde{\mu }^\varepsilon \). In view of (8), one has \(\lambda _1^\varepsilon \nabla f_1(x^\varepsilon )+\lambda _2^\varepsilon \nabla f_2(x^\varepsilon )+(\mu ^\varepsilon +\tilde{\mu }^\varepsilon )\nabla g(x^\varepsilon )=0,~\forall \varepsilon >0\). From here,

Clearly, (A0)–(A3) are satisfied with \((x^\varepsilon ,\lambda _1^\varepsilon ,\lambda _2^\varepsilon ,\mu ^\varepsilon )\) (if necessary we transform the points in sequences selecting \(\varepsilon =\frac{1}{k}\)). Moreover, (E1) is fulfilled selecting \(b_\varepsilon =\frac{\mu ^\varepsilon }{g(x^\varepsilon )_+}>0\). Condition (E2) is also satisfied since (after some calculations)

Conditions (E1) and (E2) are good properties as it is showed in Remark 3.2 and in Theorem 4.1, but it may not be easy to find a sequence with such properties. However, sequences satisfying (A0)–(A3) are easily obtained. As instance, if \(x^0\) is a KKT-point (see Definition 4.4) and \(\sum _{l=1}^p\lambda _l=1\), then every sequence \((x^k,\lambda ^k,\mu ^k,\tau ^k)\subset \mathbb {R}^n\times \mathbb {R}_+^p\times \mathbb {R}_+^m\times \mathbb {R}^r\) converging to \((x^0,\lambda ,\mu ,\tau )\) satisfy (A0)–(A3) whenever \(\mu _j^k=0\) for sufficiently large k if \(g_j(x^0)<0\).

The reciprocal of Theorem 3.1 is not true, as the following example shows.

Example 3.2

Consider problem (MOP) with the following data:

One has

Therefore conditions (A0)–(A3) are fulfilled. Moreover, (E1) is satisfied with \(b_k=\frac{\mu ^k}{g(x^k)_+}=k\). Condition (E2) is also fulfilled since

However \(x^0\) is not a local weak efficient solution, because for the feasible points \(x(t)=(t^2,t)\) one has \(f(x(t))=(-t^2,-t)<f(x^0)\) for all \(t>0\).

In the following remark we study the relationships between conditions (E1) and (E2) and other conditions, some of them already considered in the literature.

Remark 3.2

The following implications are true:

-

(i)

Condition (E1) implies the following condition (sign condition, SGN in short): for every k one has

$$\begin{aligned} (\textit{SGN})~\mu _j^k g_j(x^k)\ge 0 ~(\forall j=1,\dots ,m) \text { and } \tau _i^k h_i(x^k)\ge 0~ (\forall i=1,\dots ,r),\nonumber \\ \end{aligned}$$(10)and, moreover, \(\mu _j^k>0\) \(\Leftrightarrow \) \(g_j(x^k)>0\), and \(\tau _i^k\ne 0\) \(\Leftrightarrow \) \(h_i(x^k)\ne 0\).

-

(ii)

Conditions (A0), (E2) and (SGN) imply the following convergence condition:

$$\begin{aligned} (\textit{CVG})~\mu _j^k g_j(x^k)\rightarrow 0 ~(\forall j=1,\dots ,m) \text { and } \tau _i^k h_i(x^k)\rightarrow 0~ (\forall i=1,\dots ,r).\nonumber \\ \end{aligned}$$(11) -

(iii)

(CVG) implies the following condition, that we call sum converging to zero condition:

$$\begin{aligned} (\textit{SCZ})~ \sum _{j=1}^m \mu _j^k g_j(x^k)+ \sum _{i=1}^{r} \tau _i^k h_i(x^k) \rightarrow 0. \end{aligned}$$ -

(iv)

(SGN) and (SCZ) imply (CVG).

Indeed, part (i) is immediate since \(\mu _j^kg_j(x^k)=b_kg_j(x^k)^2\) if \(g_j(x^k)\ge 0\) and \(\mu _j^kg_j(x^k)=0\) if \(g_j(x^k)<0\). So \(\mu _j^kg_j(x^k)\ge 0\). Similarly, \(\tau _i^k h_i(x^k)=c_kh_i(x^k)^2\ge 0\). The rest of part (i) is obvious.

For part (ii), let \(s_k:=\sum _{j=1}^m \mu _j^k g_j(x^k) + \sum _{i=1}^{r} \tau _i^k h_i(x^k)\). Using (SGN) and (E2), we have \(0\le s_k\le f_l(x^0)-f_l(x^k)\) for all k and l. From here, \(s_k\rightarrow 0\) follows by the continuity of \(f_l\) and property (A0).

Parts (iii)–(iv) are obvious.

Taking into account Remark 3.2(i), from (E2) it follows that \(f_l(x^k)\le f_l(x^0)\), \(l=1,\dots ,p\), i.e., the points \(x^k\) are better than \(x^0\). This condition is similar to the property given by (22), which is used further on to define a strong EFJ-point. As a consequence, if we wish a sequence \((x^k)\) satisfying (E1) and (E2), then we have to look for it in the set defined by the system \(f_l(x)\le f_l(x^0)\), \(l=1,\dots , p\).

Remark 3.3

In some works, in order to define the AKKT condition for scalar optimization problems, several variants of condition (A3) or some of the above ones are used. For example, in [10] the authors use the CAKKT condition, in which (A3) is replaced with

which is clearly equivalent to (CVG).

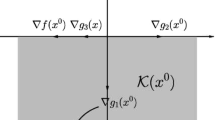

We summarize the above relations in the following diagram (where the arrow means “implies”):

In next remark, we show that the reciprocal implications of Remark 3.2 are not true and the invalidity if any assumption is not satisfied.

Remark 3.4

-

(i)

(SGN) \(\not \Rightarrow \) (E1). Consider Example 3.1, with \(x^0=(1,1)\), \(x^k=(1+\frac{1}{k},1)\), \(\lambda _1^k=\frac{5}{11}\), \(\lambda _2^k=\frac{6}{11}\), \(\mu ^k=\frac{8}{11}\). With these data, (SGN) is satisfied but (E1) is not since \(g(x^k)_+=0\), and so \(b_k g(x^k)_+=0\ne \mu ^k\) for all \(b_k>0\).

-

(ii)

(CVG) \(\not \Rightarrow \) (E2) even if (SGN) holds. Consider Example 3.1, with \(x^0=(1,1)\), \(x^k=(1-\frac{1}{k},1)\), \(\lambda _1^k=\frac{5}{11}\), \(\lambda _2^k=\frac{6}{11}\), \(\mu ^k=\frac{8}{11}\). One has \(\mu ^k g(x^k)\rightarrow 0\) and (SGN) is satisfied, but (E2) is not since

$$\begin{aligned} f_2(x^k)-f_2(x^0)+\mu ^k g(x^k) = \frac{6k-8}{11k^2}>0 \quad \forall k>1. \end{aligned}$$ -

(iii)

(E2) \(\not \Rightarrow \) (CVG) if (SGN) is not satisfied. Consider the following data in problem (MOP):

$$\begin{aligned} f_1=x_1+x_2,~f_2=-x_1+x_2,~g_1=x_1^2-x_2\le 0,~ g_2=-2x_1^2+x_2\le 0, \end{aligned}$$\(x^0=(0,0)\), \(x^k=(0,-\frac{1}{k})\), \(\lambda _1^k=\frac{1}{2}\), \(\lambda _2^k=\frac{1}{2}\), \(\mu _1^k=1+k\), \(\mu _2^k=k\). One has that (E2) is satisfied since

$$\begin{aligned} f_l(x^k)-f_l(x^0)+\mu _1^k g_1(x^k)+\mu _2^k g_2(x^k) = 0\quad \forall k,~l=1,2, \end{aligned}$$however (CVG) is not satisfied because \(\mu _1^k g_1(x^k)=\left( 1+k\right) \frac{1}{k}=\frac{1}{k}+1\rightarrow 1\).

-

(iv)

(SCZ) \(\not \Rightarrow \) (CVG) if (SGN) is not satisfied. The same data of part (iii) show this fact since

$$\begin{aligned} \mu _1^k g_1(x^k)+\mu _2^k g_2(x^k)=\left( 1+k\right) \frac{1}{k}+k\frac{-1}{k}=\frac{1}{k}\rightarrow 0, \end{aligned}$$so (SCZ) holds but (CVG) does not.

Theorem 3.1 extends to multiobjective optimization (and improves in some cases) Theorem 3.3 in Andreani, Martínez and Svaiter [10], Theorem 2.1 in Haeser and Schuverdt [8] and Theorem 2.1 (with \(I=\emptyset \)) in Andreani, Haeser and Martínez [9].

Next we establish that the reciprocal of Theorem 3.1 is true for convex programs.

Theorem 3.2

Assume that \(f_l\) (\(l=1,\dots , p\)) and \(g_j\) (\(j=1,\dots m\)) are convex and \(h_i\) (\(i=1,\dots , r\)) are affine. If \(x^0\in S\) satisfies the AKKT condition and (SCZ) is fulfilled, then \(x^0\) is a (global) weak efficient solution of (MOP).

Proof

Suppose that \(x^0\) is not a weak efficient solution. Then, there exists \(\hat{x}\in S\) such that

Let \((x^k)\), and \((\lambda ^k,\mu ^k,\tau ^k)\) be the sequences that satisfy (A0)–(A3). Without any loss of generality we may assume that \(\lambda ^k\rightarrow \lambda ^0\), with \(\lambda ^0\ge 0\) and \(\sum _{l=1}^p \lambda _l^0=1\). As \(f_l\), \(g_j\) are convex and \(h_i\) are affine, for all k one has

Multiplying (14) by \(\lambda _l^k\), (15) by \(\mu _j^k\) and (16) by \(\tau _i^k\) and adding up, it results (the first inequality is valid because \(\hat{x}\in S\))

where \(\gamma _k=(\sum _{l=1}^p \lambda _l^k \nabla f_l(x^k)+\sum _{j=1}^m \mu _j^k \nabla g_j(x^k)+ \sum _{i=1}^{r} \tau _i^k \nabla h_i(x^k))(\hat{x}-x^k)\). As \(x^k\rightarrow x^0\), \(\gamma _k\rightarrow 0\) by (A1) and \(\sum _{j=1}^m \mu _j^k g_j(x^k)+ \sum _{i=1}^{r} \tau _i^k h_i(x^k)\rightarrow 0\) by (SCZ), taking the limit in (17) we obtain

As \(\lambda ^0\ge 0\) and \(\lambda ^0\ne 0\), from (13) it follows that \(\sum _{l=1}^p \lambda _l^0 f_l(\hat{x}) < \sum _{l=1}^p \lambda _l^0 f_l(x^0)\), which contradicts (18). \(\square \)

This theorem extends to multiobjective optimization Theorem 4.2 in Andreani, Martínez and Svaiter [10] and Theorem 2.2 in Haeser and Schuverdt [8].

We illustrate Theorem 3.2 with the following example.

Example 3.3

Consider problem (MOP) with the following data:

With these data, \(x^0\) satisfies AKKT and moreover (SCZ) holds, so by applying Theorem 3.2 we conclude that \(x^0\) is a global weak efficient solution.

The conclusion of Theorem 3.2 cannot be that \(x^0\) is a global efficient solution as this example shows, because \(x^0\) is not efficient since \(f(2,0)\le f(x^0)\) and \(f(2,0)\ne f(x^0)\).

4 Relations of the AKKT Condition with Other Optimality Conditions

Next we state necessary optimality conditions, which are alternative to the conditions of Theorem 3.1 and we study the relations with AKKT conditions. These conditions, in scalar optimization, are called by Bertsekas and Ozdaglar [13] “enhanced Fritz John” conditions because the associated multipliers have some additional properties (see also Hestenes [4, Theorem5.7.1]).

Definition 4.1

We say that a point \(x^0\in S\) satisfies the enhanced Fritz John conditions (EFJ) (or it is an EFJ-point) iff there exist \((\lambda ,\mu ,\tau )\in \mathbb {R}_+^p\times \mathbb {R}_+^m\times \mathbb {R}^r\) such that

- (EFJ1):

-

\(\sum \nolimits _{l=1}^{p} \lambda _l\nabla f_l(x^0)+\sum \nolimits _{j=1}^m \mu _j\nabla g_j(x^0)+ \sum \nolimits _{i=1}^{r} \tau _i\nabla h_i(x^0)= 0,\)

- (EFJ2):

-

\((\lambda ,\mu ,\tau )\ne 0\),

- (EFJ3):

-

if the index set \(J\cup I\) is nonempty, where

$$\begin{aligned} J=\{j: ~\mu _j\ne 0\},\quad I=\{i:~\tau _i\ne 0\}, \end{aligned}$$then there exists a sequence \((x^k)\subset \mathbb {R}^p\) that converges to \(x^0\) and is such that, for all k,

$$\begin{aligned}&\mu _j g_j(x^k)>0,\, \forall j\in J,~~ \tau _i h_i(x^k)>0, \, \forall i\in I, \end{aligned}$$(19)$$\begin{aligned}&g_j(x^k)_+=o(w(x^k)),\, \forall j\notin J,~~ |h_i(x^k)|=o(w(x^k)),\, \forall i\notin I, \end{aligned}$$(20)where

$$\begin{aligned} w(x)=\min \left\{ \min _{j\in J}\{g_j(x)_+\},\min _{i\in I}\{ |h_i(x)|\}\right\} . \end{aligned}$$(21)

We say that \(x^0\) satisfies the strong EFJ-condition (or it is a strong EFJ-point) iff it satisfies (EFJ1)–(EFJ3) and, in addition, the sequence \((x^k)\) in (EFJ3) satisfies

We say that \(x^0\) satisfies the weak EFJ-condition (or it is a weak EFJ-point) iff it satisfies (EFJ1)–(EFJ3), but conditions (20)–(21) are removed.

Remark 4.1

Condition (EFJ3) implies \(\mu _j=0\) if \(g_j(x^0)<0\), i.e., the usual complementary condition, since if \(\mu _j>0\), then in view of (19) one has that \(g_j(x^k)>0\) for \(x^k\) near \(x^0\), and this is a contradiction.

Extending the above definition, we say that \(x^0\in S\) is an EKKT-point iff it satisfies the conditions (EFJ1), (EFJ3) and, moreover, \(\lambda \ne 0\) instead of (EFJ2). Similarly it is defined a strong EKKT-point and a weak EKKT-point.

In scalar optimization, multipliers satisfying (EFJ1), (EFJ2) and (EFJ3) with (22) are called by Bertsekas and Ozdaglar [13] informative multipliers. See [13] for interesting properties of this class of multipliers. Let us observe that (EFJ3) with (22) says that, whenever \((\mu ,\tau )\ne 0\), the constraints whose indexes are in the set \(J\cup I\) can be violated by a sequence of (infeasible) points \(x^k\) converging to \(x^0\), that improve all the objectives \(f_l\); the remaining constraints, whose indices do not belong to \(J\cup I\), may also be violated, but the degree of their violation is arbitrarily small relative to the other constraints according to (20)–(21). Another consequence of (19) is the following: if \(g_j(x)\le 0\) on some neighborhood of \(x^0\), then \(\mu _j=0\).

In order to obtain a multiplier rule with \(\lambda \ne 0\), usually a constraint qualification is utilized. The following constraint qualification is one of the weakest and was introduced by Hestenes [4].

Definition 4.2

We say that \(x^0\in S\) satisfies the quasi-normality constraint qualification (QNCQ) iff there is not any multiplier \((\mu ,\tau )\in \mathbb {R}_+^m\times \mathbb {R}^r\) such that

-

(i)

\((\mu ,\tau )\ne 0\),

-

(ii)

\(\sum _{j=1}^m \mu _j\nabla g_j(x^0)+\sum _{i=1}^{r} \tau _i\nabla h_i(x^0)=0\),

-

(iii)

in every neighborhood of \(x^0\) there is a point \(x\in \mathbb {R}^n\) such that \(\mu _j g_j(x)>0\) for all j having \(\mu _j\ne 0\), and \(\tau _i h_i(x)>0\) for all i having \(\tau _i\ne 0\).

It is well known that quasi-normality implies regularity (see Hestenes [4, Theorem5.8.1]), i.e., the Abadie constraint qualification is satisfied, and this property implies the existence of KKT multipliers at a local minimum.

Next, we establish that a point satisfying conditions AKKT and (E1)–(E2) is an EFJ-point, and if additionally quasi-normality holds, then it is an EKKT-point.

Theorem 4.1

Assume that \(x^0\in S\) satisfies the AKKT condition with the sequences \((x^k)\) and \((\lambda ^k,\mu ^k,\tau ^k)\) and condition (E1), with \(c_k=b_k\) for all k. Then,

-

(i)

\(x^0\) is an EFJ-point.

-

(ii)

If, in addition, condition (E2) is satisfied, then \(x^0\) is a strong EFJ-point.

Proof

We simultaneously prove parts (i) and (ii). Let \(t_k:=\Vert (\lambda ^k,\mu ^k,\tau ^k)\Vert \), where \(\Vert ~\Vert \) is the \(\ell _1\)-norm. Then, \(t_k\ge 1\) since \(\sum _{l=1}^{p} \lambda _l^k=1\) and \(\lambda ^k\ge 0\). As \(\Vert \frac{1}{t_k}(\lambda ^k,\mu ^k,\tau ^k)\Vert =1\) \(\forall k\), without any loss of generality we may suppose that \(\frac{1}{t_k}(\lambda ^k,\mu ^k,\tau ^k) \rightarrow (\lambda ^0,\mu ^0,\tau ^0)\) with \((\lambda ^0,\mu ^0,\tau ^0)\ne 0\). Clearly \(\lambda ^0\ge 0\) and \(\mu ^0\ge 0\). Dividing in (A1) by \(t_k\) and taking the limit it results (EFJ1) (with \((\lambda ,\mu ,\tau )=(\lambda ^0,\mu ^0,\tau ^0)\)).

For checking condition (EFJ3), assume that \((\mu ^0,\tau ^0)\ne 0\) and set \(s_k:=\sum _{j=1}^m \mu _j^k g_j(x^k) + \sum _{i=1}^{r} \tau _i^k h_i(x^k)\). By Remark 3.2(i), one has that (SGN) holds.

If \(\mu _j^0>0\), as \(\mu _j^0=\lim \frac{\mu _j^k}{t_k}\), then it follows that \(\mu _j^k>0\) for all k large enough. By Remark 3.2(i), we derive that \(g_j(x^k)>0\) [the first part of (19) holds] and so \(\mu _j^0g_j(x^k)>0\) and \(\mu _j^k g_j(x^k)>0\) for all k large enough. Therefore, taking into account (10), one has \(s_k>0\).

If \(\tau _i^0\ne 0\), as \(\tau _i^0=\lim \frac{\tau _i^k}{t_k}=\lim \frac{c_k h_i(x^k)}{t_k}\) one has \(h_i(x^k)\ne 0\) and the second part of (19) follows. To prove (22), we analyze two possibilities:

-

(a)

If \(\tau _i^0>0\), then \(\tau _i^k>0\) and \(h_i(x^k)>0\), so \(\tau _i^k h_i(x^k)>0\) and \(\tau _i^0 h_i(x^k)>0\) for all k large enough. In consequence, according with (10), one has \(s_k>0\).

-

(b)

If \(\tau _i^0<0\), then \(\tau _i^k<0\) and \(h_i(x^k)<0\), so \(\tau _i^k h_i(x^k)>0\) and \(\tau _i^0 h_i(x^k)>0\) for all k large enough. In consequence, in view of (10), one has \(s_k>0\).

Therefore, if \((\mu ^0,\tau ^0)\ne 0\), then one has \(s_k>0\), and consequently, making use of (E2), \(f_l(x^0)-f_l(x^k)<0\) for all \(l=1,\dots ,p\) and condition (22) is proved.

In order to prove (20), assume that in (E1) \(c_k=b_k\) for all k. Then, \(g_j(x^k)_+=\frac{\mu _j^k}{b_k}\) for \(j=1,\dots ,m\) and \(|h_i(x^k)|=\frac{|\tau _i^k|}{b_k}\) for \(i=1,\dots ,r\), and so

Therefore

Now, clearly this quotient tends to zero for \(j_0\notin J\) because \(\mu _{j_0}/t_k\rightarrow \mu _{j_0}^0=0\) and the denominator is equal to \(\varepsilon _k\ge \alpha >0\) for some \(\alpha \) and for all k large enough, since \(\mu _j^k/t_k\rightarrow \mu _j^0>0\) \(\forall j\in J\) and \(|\tau _i^k|/t_k\rightarrow |\tau _i^0|>0\) \(\forall i\in I\). \(\square \)

Remark 4.2

Note that the multipliers given in Theorem 3.1, which are defined by (7), satisfy condition (E1) with \(b_k=c_k\).

Proposition 4.1

If \(x^0\in S\) is an EFJ-point (respectively strong EFJ-point or weak EFJ-point) and (QNCQ) holds, then \(x^0\) an EKKT-point (respectively strong EKKT-point or weak EKKT-point).

Proof

If \(\lambda ^0=0\), it follows that (QNCQ) does not hold since in every neighborhood of \(x^0\) there is a point \(x^k\) that does not satisfy condition (iii) of the definition of (QNCQ). \(\square \)

Part (ii) of Theorem 4.1, taking into account Proposition 4.1, extends to multiobjective optimization and improves Theorem 2.3 in Haeser and Schuverdt [8], which is true for scalar problems and where it is not required that the multipliers satisfy (20)–(22).

The following result is an immediate consequence of Theorems 3.1 and 4.1 taking into account Remark 4.2 and Proposition 4.1.

Theorem 4.2

If \(x^0\in S\) is a local weak solution of (MOP), then

-

(i)

\(x^0\) is a strong EFJ-point.

-

(ii)

If, in addition, (QNCQ) holds at \(x^0\), then \(x^0\) is a strong EKKT-point.

Part (i) of this theorem extends to multiobjective optimization and improves Theorem 5.7.1 in Hestenes [4] and Proposition 3.3.5 in Bertsekas [15] (in both, only it is proved that \(x^0\) is a weak EFJ-point). It also extends (partially) Proposition 2.1 in Bertsekas and Ozdaglar [13], where a scalar problem is considered with equality and inequality constraints, but also with a set constraint.

Next we introduce a new notion of approximate KKT type, requiring boundedness of the multipliers, and then we will prove that this condition is stronger than AKKT, and we will study its relations with weak EKKT-points and KKT-points.

Definition 4.3

We say that the bounded approximate Karush–Kuhn–Tucker condition (BAKKT, in short) is satisfied at a feasible point \(x^0\in S\) iff there exist sequences \((x^k)\subset \mathbb {R}^n\) and \((\lambda ^k,\mu ^k,\tau ^k)\subset \mathbb {R}_+^p\times \mathbb {R}_+^m\times \mathbb {R}^r\) such that conditions (A0)–(A3) hold and

Clearly condition (23) can be replaced with the convergence condition of the multipliers:

Proposition 4.2

If \(x^0\in S\) satisfies (BAKKT), then \(x^0\) satisfies also the AKKT condition and, in addition, (CVG) holds.

Proof

We only have to prove condition (CVG), but this follows immediately from (24), the continuity of \(g_j\) and \(h_i\) and the fact that \(x^k\rightarrow x^0\). \(\square \)

The converse implication is not true, as the next example shows.

Example 4.1

Consider problem (MOP) with the data \(f(x_1,x_2)=x_1\) and \(g(x_1,x_2)=-x_1^3+x_2^2\). Clearly \(x^0=(0,0)\) is a minimum of f subject to \(g\le 0\). So by Theorem 3.1, the AKKT condition is satisfied.

Let us check that (BAKKT) does not hold. Suppose that there exist sequences \(x^k=(x_1^k,x_2^k)\in \mathbb {R}^2\) and \(\mu ^k\in \mathbb {R}_+\) such that \(x^k\rightarrow x^0\) and

From here,\((1,0)+\mu ^k\left( -3(x_1^k)^2,2x_2^k\right) =(w_1^k,w_2^k)\). Therefore there is solution \(\mu ^k=\frac{1-w_1^k}{3(x_1^k)^2}\rightarrow +\infty \) whenever \(x_1^k\ne 0\) and \(\frac{x_2^k}{(x_1^k)^2}\rightarrow 0\). So every solution \((\mu ^k)\) is not bounded, and consequently (BAKKT) is not satisfied at \(x^0\). Note that this can also be deduced from Theorem 4.4 since \(x^0\) is not a KKT-point.

Let us note that by Theorem 4.2(i), \(x^0\) is an EFJ-point, and also that any constraint qualification does not hold because \(x^0\) is not a KKT-point.

Next theorem proves that the converse of Proposition 4.2 is true if a constraint qualification holds at the point \(x^0\).

Recall that the Mangasarian–Fromovitz constraint qualification (MFCQ) holds at \(x^0\in S\) if the gradients \(\nabla h_1(x^0),\dots , \nabla h_r(x^0)\) are linearly independent and there exists a vector \(d\in \mathbb {R}^n\) such that \(\nabla g_j(x^0)d<0\) for all \(j\in J(x^0)\) and \(\nabla h_i(x^0)d=0\) for all \(i=1,\dots ,r\). It is well known that (MFCQ) is equivalent to the positive linearly independent constraint qualification [4], i.e., the following implication is true: \(\mu _j\ge 0\), \(j\in J(x^0)\), \(\tau \in \mathbb {R}^r\),

It is also known that (MFCQ) is stronger than the so-called constant positive linear dependence condition, which implies (QNCQ) [16].

Theorem 4.3

Assume that (MFCQ) holds at \(x^0\). Then, for every pair of sequences \((x^k)\subset \mathbb {R}^n\) and \((\lambda ^k,\mu ^k,\tau ^k)\subset \mathbb {R}_+^p\times \mathbb {R}_+^m\times \mathbb {R}^r\) that satisfy (A0)–(A3), one has that the second sequence is bounded.

Proof

Let \((s_k)\) be the sequence defined by

If the sequence \((\mu ^k,\tau ^k)\) is not bounded, then, without any loss of generality we can suppose that \(t_k:=\Vert (\mu ^k,\tau ^k)\Vert \rightarrow +\infty \) and that \(\frac{1}{t_k}(\mu ^k,\tau ^k)\) converges to \((\mu ^0,\tau ^0)\) with \(\Vert (\mu ^0,\tau ^0)\Vert =1\). Dividing (25) by \(t_k\), one has

The sequence \((\lambda ^k)\) is bounded since \(\lambda \ge 0\) and (A2) holds. So \(\lambda ^k/t_k\rightarrow 0\), and as \(s_k/t_k\rightarrow 0\), taking the limit it results

By (A3), \(\mu _j^0=0\) for all \(j\notin J(x^0)\). With this observation, (26) contradicts (MFCQ) because \((\mu ^0,\tau ^0)\ne 0\) and \(\mu ^0\ge 0\). Therefore, the sequence \((\mu ^k,\tau ^k)\) is bounded and so condition (23) holds. \(\square \)

Definition 4.4

We say that a feasible point \(x^0\in S\) is a KKT-point iff there exist \((\lambda ,\mu ,\tau )\subset \mathbb {R}_+^p\times \mathbb {R}_+^m\times \mathbb {R}^r\) such that

Theorem 4.4

If \(x^0\in S\) satisfies (BAKKT), then \(x^0\) is a KKT-point.

Proof

Let \((x^k)\), \((\lambda ^k,\mu ^k,\tau ^k)\) be the sequences that satisfy (A0)–(A3) and (24). Then, taking the limit in (A1) and (A2), we obtain

and \(\sum _{l=1}^{p} \lambda _l^0=1\), with \(\lambda ^0\ge 0\), \(\mu ^0\ge 0\). The condition \(\mu _j^0g_j(x^0)=0\) for all \(j\notin J(x^0)\) follows from (A3). \(\square \)

The following result is a direct consequence of Theorems 4.3 and 4.4

Theorem 4.5

If \(x^0\in S\) satisfies the AKKT condition and (MFCQ) holds at \(x^0\), then \(x^0\) is a KKT-point.

Following a notion due to Dutta et al. [11], given a sequence of positive numbers \(\varepsilon _k\downarrow 0\) and points \(x^k\rightarrow x^0\), we say that \((x^k)\) is a sequence of \(\varepsilon _k\)-KKT-points if there exist \((\lambda ^k,\mu ^k,\tau ^k)\subset \mathbb {R}_+^p\times \mathbb {R}_+^m\times \mathbb {R}^r\) such that

\(\mu _j^kg_j(x^k)=0\) for \(j=1,\dots ,m\) and (A2) holds.

Note that we do not require \(x^k\) to be feasible. It is obvious that, if \((x^k)\) is a sequence of \(\varepsilon _k\)- KKT-points, then \(x^0\) satisfies the AKKT condition, and so the following result is an immediate consequence of Theorem 4.5.

Proposition 4.3

If \((x^k)\) is a sequence of \(\varepsilon _k\)-KKT-points and (MFCQ) holds at \(x^0\), then \(x^0\) is a KKT-point.

Theorem 3.2 in Dutta et al. [11] is a particular case of Proposition 4.3. These authors only consider a scalar function f and inequality constraints.

Theorem 4.6

If \(x^0\in S\) is a KKT-point, then \(x^0\) is a weak EKKT-point. In addition, the sequence \((x^k)\) satisfying (19) also satisfies

Proof

To give a unified treatment to the multipliers we transform the multipliers associated with equality constraints as follows: if \(\tau _i\ge 0\), then we define \(g_{m+i}(x):=h_i(x)\) and \(\mu _{m+i}:=\tau _i\); if \(\tau _i< 0\), then we define \(g_{m+i}(x):=-h_i(x)\) and \(\mu _{m+i}:=-\tau _i>0\). In this way, (K1) can be written

where \(s=m+r\). Rearranging, we may assume that \(\mu _1,\dots ,\mu _q\) are positive and \(\mu _{q+1},\dots ,\mu _s\) are zero. Let us observe that the indices j satisfying (K3) are in the second group. Through the following reduction process, we can assume that the gradients \(\nabla g_1(x^0),\dots ,\nabla g_q(x^0)\) are positive linearly independent.

Indeed, assume that there exist nonnegative numbers \(\alpha _1,\dots ,\alpha _q\), not all zero, such that \(\sum _{j=1}^q\alpha _j\nabla g_j(x^0)=0\). Then, the multipliers \(\hat{\mu }_j=\mu _j-t\alpha _j\) (\(j=1,\dots ,q\)), \(\hat{\mu }_j=\mu _j\) (\(\forall j>q\)) satisfy (28) for all \(t\in \mathbb {R}\). So we can choose \(t_0>0\) so that \(\hat{\mu }_j\ge 0\) \(\forall j=1,\dots , q\) and \(\hat{\mu }_{j_0}=0\) for some index \(j_0\le q\). So, rearranging we obtain a proper subset of subgradients \(\nabla g_1(x^0),\dots ,\nabla g_{q'}(x^0)\) and positive multipliers \(\hat{\mu }_1,\dots ,\hat{\mu }_{q'}\) with \(q'<q\) (the rest of multipliers is zero).

If \(q'=0\) (i.e., if for \(t_0\), \(\hat{\mu }_j=0\) for all \(j=1,\dots ,q\)), then (28) is satisfied with \(\mu _j=0\), \(j=1,\dots , s\), and there is nothing to do in order to prove (EFJ3).

If \(q'>0\), repeating the process if necessary, we can assume that the gradients \(\nabla g_1(x^0),\dots ,\nabla g_q(x^0)\) are positive linearly independent. So, by a theorem of the alternative, there exists \(u\in \mathbb {R}^n\) such that

As \(g_j(x^0)=0\), there exists \(\delta >0\) such that \(g_j(x^0+tu)>0\) \(\forall t\in ]0,\delta [\) and for all \(j=1,\dots ,q\). Therefore (22) is satisfied for this set of multipliers since \(\mu _j g_j(x(t))>0\) \(\forall t\in ]0,\delta [\) and for all \(j=1,\dots ,q\), where \(x(t)=x^0+tu\). Let us observe that, if \(g_j=-h_i\) (and \(\mu _j=-\tau _i>0\)), then one has \(\tau _i h_i(x(t))>0\). This proves that \(x^0\) is a weak EKKT-point.

Finally, if we apply (28) to the vector u, then it results that

and so for a suitable \(\delta >0\) we have that \(\sum _{l=1}^{p} \lambda _l (f_l(x(t))- f_l(x^0))<0\). \(\square \)

Corollary 4.1

The following statements are equivalent for \(x^0\in S\):

-

(a)

\(x^0\) is a KKT-point.

-

(b)

\(x^0\) is a weak EKKT-point.

-

(c)

\(x^0\) satisfies (BAKKT).

Proof

(a) \(\Rightarrow \) (b) and (c) \(\Rightarrow \) (a) follow from Theorems 4.6 and 4.4, respectively. Let us prove the implication (b) \(\Rightarrow \) (c). Assume that \(x^0\) is a weak EKKT-point. We may suppose that (EFJ1) is satisfied with \(\sum _{l=1}^p\lambda _l=1\). Then, we can choose \(x^k=x^0\), \(\lambda ^k=\lambda \), \(\mu ^k=\mu \), \(\tau ^k=\tau \), and it is obvious that (BAKKT) is satisfied. \(\square \)

5 Conclusions

We have presented two type of results: sequential optimality conditions and enhanced FJ and KKT conditions for differentiable multiobjective optimization problems with equality and inequality constraints.

In scalar optimization, Bertsekas and Ozdaglar [13] considered a problem with a set constraint, but in [8–10, 16] the set constraint is not considered. It would be very interesting to extend the results of the present paper to a differentiable multiobjective problem with a set constraint, in such a way that in particular the results of [13] will be also generalized.

In the proof of Theorem 3.1, mainly properties of Clarke subdifferential are used. For this reason, we think that another promising research line is to extend our results to a multiobjective problem involving locally Lipschitz functions.

Another interesting investigation line is to study a necessary sequential AKKT condition for a point to be a solution of a vector variational inequality extending the results in [8, Sect. 3]. We believe that the sequential optimality conditions here exposed may be useful in order to generate or improve algorithms. Thus, in our opinion, further investigation is needed to provide suitable conditions that can be utilized in numerical structures for solving multiobjective problems and therefore for practical applications. See Haeser and Melo [12] where an AKKT-type condition is discussed as a stop criterion for an interactive algorithm.

References

Kortanek, K.O., Evans, J.P.: Asymptotic Lagrange regularity for pseudoconcave programming with weak constraint qualification. Oper. Res. 16, 849–857 (1968)

Fiacco, A.V., McCormick, G.P.: The slacked unconstrained minimization technique for convex programming. SIAM J. Appl. Math. 15, 505–515 (1967)

Zlobec, S.: Extensions of asymptotic Kuhn–Tucker conditions in mathematical programming. SIAM J. Appl. Math. 21, 448–460 (1971)

Hestenes, M.R.: Optimization Theory: The Finite Dimensional Case. Wiley, New York (1975)

McShane, E.J.: The Lagrange multiplier rule. Am. Math. Mon. 80, 922–925 (1973)

Craven, B.D.: Modified Kuhn–Tucker conditions when a minimum is not attained. Oper. Res. Lett. 3, 47–52 (1984)

Trudzik, L.I.: Asymptotic Kuhn–Tucker conditions in abstract spaces. Numer. Funct. Anal. Optim. 4, 355–369 (1982)

Haeser, G., Schuverdt, M.L.: On approximate KKT condition and its extension to continuous variational inequalities. J. Optim. Theory Appl. 149, 528–539 (2011)

Andreani, R., Haeser, G., Martínez, J.M.: On sequential optimality conditions for smooth constrained optimization. Optimization 60, 627–641 (2011)

Andreani, R., Martínez, J.M., Svaiter, B.F.: A new sequential optimality condition for constrained optimization and algorithmic consequences. SIAM J. Optim. 20, 3533–3554 (2010)

Dutta, J., Deb, K., Tulshyan, R., Arora, R.: Approximate KKT points and a proximity measure for termination. J. Glob. Optim. 56, 1463–1499 (2013)

Haeser, G., Melo, V.V.: Convergence detection for optimization algorithms: approximate-KKT stopping criterion when Lagrange multipliers are not available. Oper. Res. Lett. 43, 484–488 (2015)

Bertsekas, D.P., Ozdaglar, A.E.: Pseudonormality and a Lagrange multiplier theory for constrained optimization. J. Optim. Theory Appl. 114, 287–343 (2002)

Clarke, F.H.: Optimization and Nonsmooth Analysis. Wiley, New York (1983)

Bertsekas, D.P.: Nonlinear Programming. Athena Scientific, Belmont (1999)

Andreani, R., Martínez, J.M., Schuverdt, M.L.: On the relation between constant positive linear dependence condition and quasinormality constraint qualification. J. Optim. Theory Appl. 125, 473–483 (2005)

Acknowledgments

This research was partially supported (for the second and third author) by the Ministerio de Economía y Competitividad (Spain) under project MTM2012-30942. The authors are grateful to the associate editor and the anonymous referees for their helpful comments and suggestions.

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Fabian Flores-Bazán.

Rights and permissions

About this article

Cite this article

Giorgi, G., Jiménez, B. & Novo, V. Approximate Karush–Kuhn–Tucker Condition in Multiobjective Optimization. J Optim Theory Appl 171, 70–89 (2016). https://doi.org/10.1007/s10957-016-0986-y

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10957-016-0986-y

Keywords

- Approximate optimality conditions

- Sequential optimality conditions

- Enhanced Fritz John conditions

- Enhanced Karush–Kuhn–Tucker conditions

- Vector optimization problems