Abstract

This paper presents a computationally efficient technique for reducing blur caused by handshakes in images captured by mobile devices. The technique uses a short-exposure or low-exposure image that is captured at the same time as a normal or auto-exposure image. The short-exposure image is enhanced by utilizing a low rank image approximation of the auto-exposure image without requiring any user-specified parameters. Based on three quantitative measures of image quality, it is shown that this technique outperforms similar image deblurring techniques while also offering computational efficiency. A GPU implementation of this technique is also reported.

1 Introduction

Images captured by mobile devices sometimes appear blurred due to handshakes. Users often recapture images to address such blur. However, in many cases, the moment of interest cannot be repeated. In this work, a computationally efficient technique has been developed to deblur such images based on two images that are captured with two different exposures or shutter speeds. First, a normal or auto-exposure image is captured. Then, a second image with a short exposure or shutter speed is captured automatically, with no user intervention, immediately after the normal exposure image is taken. It is also possible to generate the short-exposure image electronically by allocating a memory location to a shorter portion of the exposure time of the normal or auto-exposure image. This second image appears free of blur but dark. The developed technique uses the information from both images to generate a deblurred image.

The problem of image deblurring has been extensively studied in the image processing literature. Most existing techniques, e.g. [1–4], attempt to estimate the point spread function (PSF) of the camera motion and use it to achieve deblurring by applying deconvolution filtering. Such techniques are not only computationally demanding but also often generate undesirable deconvolution ringing or other artifacts.

In [3, 4], an inertial measurement unit (IMU), which is now available on mobile devices, was used to estimate the camera motion PSF. In [5, 6], handshake blur removal techniques were reported based on a short-exposure image and a normal exposure image. In [6], an adaptive tonal correction (ATC) technique was introduced to enhance the short-exposure image to obtain a deblurred image using the statistics of the normal or auto-exposure image. In [7], it was shown that the ATC technique achieved a better outcome compared to the IMU estimated PSF technique since in practice an accurate calibration between the camera and the IMU is not easily achievable.

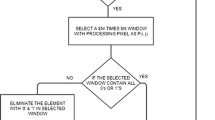

Inspired by the ATC technique, an alternative blur reduction technique is presented in this paper that is computationally more efficient than the ATC technique. This new technique does not require the search performed by the ATC technique, thus making it more suitable for use on mobile platforms. The first step of this technique involves a low rank image matrix approximation using singular value decomposition (SVD) and the use of the Akaike information criterion (AIC) to find an appropriate number of eigenvalues from the normal or auto-exposure but blurred image. This approximation image is largely unaffected by blurring and contains the image brightness and contrast information. The second step consists of incorporating the eigenvalues of the approximation image into the short-exposure image.

The rest of the paper is organized as follows: Sects. 2 and 3 present a description of the developed technique for image blur reduction, while Sect. 4 presents the improvement gained in computational efficiency compared with the ATC technique. Section 5 covers the implementation aspects, including the GPU implementation of the developed technique. Finally, the results and conclusion are stated in Sects. 6 and 7, respectively.

2 Low rank image approximation via singular value decomposition

In general, an image/matrix I with size \( m \times n \) can be decomposed into three matrices via SVD, that is

\[ I = {\mathbf{U}}\varSigma {\mathbf{V}}^{t} \tag{1} \]

where \( {\mathbf{U}} = [{\mathbf{u}}_{1} \, {\mathbf{u}}_{2} \ldots {\mathbf{u}}_{m} ] \) and \( {\mathbf{V}} = [{\mathbf{v}}_{1} \, {\mathbf{v}}_{2} \ldots {\mathbf{v}}_{n} ] \) denote unitary matrices, and \( \varSigma = {\text{diag}}(\sigma_{1} ,\sigma_{2} , \ldots ,\sigma_{m} ) \) denotes an \( m \times n \) diagonal matrix with singular values \( \sigma_{1} \ge \sigma_{2} \ge \cdots \ge \sigma_{m} \ge 0 \).

2.1 Low rank approximation image

Consider a blurred image \( \hat{I} \) of size \( m \times n \) where m ≤ n. This image can be expressed as:

\[ \hat{I} = E + R + Z \tag{2} \]

where \( E \) represents a low rank approximation image, \( R \) the detail content of the image and \( Z \) the blurring effect. In general, \( E \) does not suffer from the blurring effect as it is rank deficient:

\[ {\text{rank}}(E) = p < m \tag{3} \]

The SVD of \( E \) can be expressed as follows:

where

are unitary matrices and \( \varSigma_{{E_{1} }} = {\text{diag}}(\sigma_{1} ,\sigma_{2} , \ldots ,\sigma_{p} ) \in {\mathbb{R}}^{p \times p} \) is a diagonal matrix consisting of the eigenvalues of \( E \). For simplicity, let \( T = R + Z \). As a result, \( \hat{I} \) can be written as \( \hat{I} = E + T \). By utilizing the property of unitary matrices (i.e., \( {\mathbf{VV}}^{t} \) equals the identity matrix), the image \( \hat{I} \) can be rewritten as:

The SVD based on Eq. (5) can be stated as

where

In Eq. (6), there exists a gap in the eigenvalue matrix: the smallest eigenvalue in \( \tilde{\varSigma }_{{E_{1} }} \) is larger than the largest one in \( \tilde{\varSigma }_{{E_{2} }} \). As shown in Eqs. (3) and (4), the eigenvalue matrix \( \tilde{\varSigma }_{{E_{1} }} \) of \( E \) has \( p \) eigenvalues. These \( p \) eigenvalues belong to the low rank approximation image conveying the mean, contrast and rough structure of the image \( \hat{I} \). The \( m - p \) eigenvalues of \( \tilde{\varSigma }_{{E_{2} }} \) can be interpreted as the eigenvalues of the detail and blur components of the image \( \hat{I} \).
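As a rough illustration of splitting an image matrix into a rank-\( p \) part and a remainder, the following pure-Python sketch extracts the \( p \) largest singular triplets by power iteration with deflation. The helper names (`matvec`, `top_singular_triplet`, `low_rank_approx`), the fixed start vector and the iteration count are assumptions made for this example only; a practical implementation would call an optimized SVD routine instead.

```python
import math


def matvec(a, x):
    """Multiply matrix a (a list of rows) by vector x."""
    return [sum(aij * xj for aij, xj in zip(row, x)) for row in a]


def top_singular_triplet(a, iters=200):
    """Largest singular value and vectors of a, via power iteration on a^T a."""
    at = [list(col) for col in zip(*a)]
    v = [1.0 / (j + 1) for j in range(len(at))]  # fixed, non-uniform start vector
    for _ in range(iters):
        w = matvec(at, matvec(a, v))
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:  # nothing left to extract
            break
        v = [x / norm for x in w]
    av = matvec(a, v)
    sigma = math.sqrt(sum(x * x for x in av))
    u = [x / sigma for x in av] if sigma > 0.0 else [0.0] * len(a)
    return sigma, u, v


def low_rank_approx(a, p):
    """Return (rank-p approximation of a, list of the p singular values used)."""
    rows, cols = len(a), len(a[0])
    residual = [row[:] for row in a]
    approx = [[0.0] * cols for _ in range(rows)]
    sigmas = []
    for _ in range(p):
        sigma, u, v = top_singular_triplet(residual)
        sigmas.append(sigma)
        for i in range(rows):
            for j in range(cols):
                term = sigma * u[i] * v[j]  # one outer-product layer
                approx[i][j] += term
                residual[i][j] -= term      # deflate before the next triplet
    return approx, sigmas
```

Subtracting the returned approximation from the input leaves the remainder, which plays the role of \( T \) in \( \hat{I} = E + T \). Power iteration converges slowly when neighbouring singular values are close, which is one reason the sketch is only illustrative.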

3 Akaike information criterion

For deblurring purposes, it is first required to determine the number of eigenvalues to represent the low rank approximation image. Several methods have been utilized for this purpose and a comprehensive study can be found in [8]. The eigenvalues \( (\sigma_{1} ,\sigma_{2} , \ldots \sigma_{p} ) \) of the low rank approximation image have this property:

The remaining \( m - p \) eigenvalues \( (\sigma_{p + 1} ,\sigma_{p + 2} , \ldots \sigma_{m} ) \) correspond to the subspace with

To determine the low rank value \( p \) from a set of given eigenvalues, several measures have been considered, including the ratio \( \sigma_{p} /\sigma_{p + 1} \), the eigenvalue difference \( \sigma_{p} - \sigma_{p + 1} \) and the percentage of total energy \( \sigma_{p} /\mathop \sum \nolimits_{i = 1}^{m} \sigma_{i} \) [9]. In practice, when these measures are used for the deblurring application under consideration here, they fluctuate as the number of eigenvalues is varied, due to the different amounts of blurring present in an image. A user-defined threshold thus needs to be applied.
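The three measures above can be evaluated for each candidate rank as in the following minimal sketch. The function name `rank_measures` and the sample eigenvalues are hypothetical and serve only to show how the quantities are formed.

```python
def rank_measures(sigmas, p):
    """Return (ratio, difference, energy share) for candidate rank p (1-based).

    sigmas must be sorted in descending order, with 1 <= p < len(sigmas)
    and sigmas[p] > 0.
    """
    ratio = sigmas[p - 1] / sigmas[p]        # sigma_p / sigma_{p+1}
    difference = sigmas[p - 1] - sigmas[p]   # sigma_p - sigma_{p+1}
    energy = sigmas[p - 1] / sum(sigmas)     # sigma_p / sum_i sigma_i
    return ratio, difference, energy


# Hypothetical eigenvalues, only to show how the measures vary with p.
example = [50.0, 20.0, 4.0, 2.0, 1.0]
for p in range(1, len(example)):
    print(p, rank_measures(example, p))
```

Each measure still needs a cut-off to turn it into a decision, which is the user-defined threshold mentioned above.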

To avoid specifying a user-defined threshold, the Akaike information criterion (AIC) is used here [10, 11]. The advantage of using AIC is that it does not require a prior threshold value. AIC is defined as follows:

As can be seen in Fig. 1b, the AIC value decreases over the first few, largest eigenvalues of the low rank image and then increases approximately linearly over the smaller eigenvalues. Thus, the minimum of the AIC function is selected to determine the number of eigenvalues in the low rank image. The number of eigenvalues \( p^{\text{AIC}} \in \{ 1,2, \ldots ,m\} \) is determined as

By using \( p^{\text{AIC}} \) as the rank of the low rank approximation image \( E \) in Eq. (4), the SVD of \( E \) can be expressed as

where \( \otimes \) denotes the outer product. Typical outcomes when using the AIC and the eigenvalue ratio measures are shown in Fig. 1. As can be seen from this figure, the eigenvalue ratio generates multiple similar ratios, while the AIC provides an easily identifiable minimum.
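The threshold-free selection can be sketched as follows. The AIC expression is not reproduced in this text, so the sketch assumes the classical Wax–Kailath form used for eigenvalue-based model order selection, which may differ in detail from the definition in [10, 11]. The observation count `n_obs` and the function names are likewise assumptions. The candidate rank here runs from 0 to m − 1 rather than from 1 to m.

```python
import math


def aic_curve(eigs, n_obs):
    """AIC(k) for k = 0 .. m-1, ASSUMING the Wax-Kailath form (see lead-in).

    eigs: positive eigenvalues sorted in descending order.
    n_obs: number of observations (a hypothetical parameter of this sketch).
    """
    m = len(eigs)
    values = []
    for k in range(m):
        tail = eigs[k:]  # the m - k smallest eigenvalues
        geometric = math.exp(sum(math.log(x) for x in tail) / len(tail))
        arithmetic = sum(tail) / len(tail)
        log_likelihood = n_obs * len(tail) * math.log(geometric / arithmetic)
        penalty = 2.0 * k * (2 * m - k)  # grows with the number of free parameters
        values.append(-2.0 * log_likelihood + penalty)
    return values


def select_rank(eigs, n_obs):
    """Return the k that minimises the AIC curve; no threshold is needed."""
    values = aic_curve(eigs, n_obs)
    return min(range(len(values)), key=values.__getitem__)
```

For eigenvalues with two dominant values followed by a flat tail, the likelihood term vanishes once the dominant values are excluded, and the penalty term then makes the curve rise, giving a single minimum at the gap.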

3.1 Eigenvalue transformation

After obtaining the number of eigenvalues of the low rank approximation image, the next step consists of adjusting the eigenvalues to enhance the brightness and contrast of the short-exposure image. It is important to keep the original singular value ratios of the short-exposure image to avoid introducing distortions. This is achieved by considering the following enhancement ratio based on the eigenvalues of \( I^{s} \):

The enhanced image \( I_{d} \) recovered from the short-exposure image can then be written as:

where \( {\mathbf{U}}^{s} \), \( \varSigma^{s} \) and \( V^{{s^{t} }} \) are the SVD factors of the short-exposure image \( I^{s} \) and \( \varSigma^{s}_{d} \) represents the enhanced eigenvalue matrix. As can be seen, this approach does not require any search iterations for finding the enhancement parameters, unlike the ATC technique. In other words, the information of the blurred image is directly used to enhance the short-exposure image.

4 Computational efficiency

The computational complexity of performing SVD on a matrix of size \( m \times n \) is \( O(mn^{2} ) \). Thus, it would be computationally demanding to process high-resolution images. To gain computational efficiency, the 2D discrete wavelet transform (DWT) [12] is used here to transform an image to a lower resolution image. For an image of size \( m \times n \), let the scaling function be \( \phi \) and the wavelet function be \( \psi \). A single level of the two-dimensional DWT then yields the approximation coefficient image \( W_{\phi } \), the horizontal detail image \( W_{\psi }^{H} \), the vertical detail image \( W_{\psi }^{V} \) and the diagonal detail image \( W_{\psi }^{D} \), each of size \( m/2 \times n/2 \). Since the approximation coefficient image \( W_{\phi } \) provides an approximate representation of the original image at a lower resolution level, our blur reduction technique is first applied to the approximation image to improve the computational efficiency.
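The size reduction obtained from one decomposition level can be sketched as below. For brevity the sketch uses the orthonormal Haar wavelet rather than the Daubechies D4 wavelet used in the experiments, and it computes only the approximation band. The function name `haar_approximation` is an assumption of this example.

```python
def haar_approximation(image):
    """Single-level 2D Haar approximation band (Haar is a stand-in for D4).

    image: list of rows with an even number of rows and columns.
    Returns a (rows/2) x (cols/2) list of approximation coefficients.
    """
    rows, cols = len(image), len(image[0])
    return [
        [
            # Orthonormal Haar low-pass in both directions: sum of a 2x2 block / 2.
            (image[i][j] + image[i][j + 1] + image[i + 1][j] + image[i + 1][j + 1]) / 2.0
            for j in range(0, cols, 2)
        ]
        for i in range(0, rows, 2)
    ]


image = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
approx = haar_approximation(image)
print(len(approx), len(approx[0]))  # half the rows and half the columns
```

Applying the function again to its own output corresponds to a second decomposition level, halving each dimension once more.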

4.1 Computational complexity comparison

The computational complexity of the ATC technique is \( O(2*\alpha *\beta *m*n) \) for calculating the two tonal curve parameters \( \alpha \) and \( \beta \) on a single channel image of size \( m \times n \). In [7], the parameter \( \alpha \) was varied from 1 through 20 in steps of 1.0, and \( \beta \) was varied from 1 to 5 in steps of 0.3. This makes the computational complexity of the ATC technique \( O\left( {\frac{2000}{3}mn} \right) \). The computational complexity of the introduced technique is \( O\left( {\frac{{mn^{2} }}{{8^{r} }}} \right) \), with \( r \) representing the wavelet decomposition level. In practice, one level of wavelet decomposition is adequate to gain computational efficiency. However, if desired, higher levels of wavelet decomposition can be considered to further reduce the computational complexity. The PSF technique in [1] applies the Gauss–Newton method to search for the kernel parameters, with each iteration being \( O(m^{2} n^{2} ) \). In comparison, the conventional PSF technique in [2] requires optical flow to extract the PSF. Its computational complexity is \( O((a*l^{2} + w^{2} )*m*n) \), where \( a \) is the number of iterations for searching the optical flow, \( l \) is the number of warp parameters, and \( w \) is the filter size for deconvolution. Conventionally, the number of warp parameters is \( l > 5 \), \( a \) varies from 5 to 10 and \( w \) is selected to be 5–7. Thus, such computational complexity is not suitable for real-time implementation.
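The two expressions can be evaluated for a concrete image size as in the sketch below. The function names are assumptions, and the numbers ignore the constant factors hidden by the O-notation, so they indicate how the costs scale rather than actual run times. The constant 2000/3 equals 2·20·(5/0.3); enumerating the \( \beta \) grid with both endpoints gives 14 values, so an exact count of grid points is somewhat smaller.

```python
from fractions import Fraction


def atc_cost(m, n):
    """Operation-count estimate for the ATC search, using the 2000/3 constant."""
    return Fraction(2000, 3) * m * n


def low_rank_cost(m, n, r=1):
    """Operation-count estimate m * n^2 / 8^r after r wavelet levels."""
    return Fraction(m * n * n, 8 ** r)


# Grid sizes implied by the stated search ranges (endpoints included).
alpha_count = len(range(1, 21))     # 1, 2, ..., 20
beta_count = len(range(10, 51, 3))  # 1.0, 1.3, ..., 4.9, expressed in tenths

m, n = 768, 1024                    # a 1024*768 image, with m <= n
print("ATC estimate     :", atc_cost(m, n))
print("low rank, r = 1  :", low_rank_cost(m, n, 1))
print("low rank, r = 2  :", low_rank_cost(m, n, 2))
print("grid points      :", alpha_count * beta_count)
```

Because the low rank estimate grows with \( n^{2} \) while the ATC estimate grows with \( n \), the decomposition level \( r \) matters more as the image resolution increases.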

5 Implementation aspects

In this section, the implementation aspects of capturing two consecutive images with two different exposures or shutter speeds are stated. In addition, a GPU implementation of the developed technique is reported.

5.1 Image capture pipeline

To capture two images consecutively with different exposure or shutter speed settings, the raw RGB image data are captured directly. In a conventional image acquisition pipeline, an image goes through several stages, including a buffer and an encoder, to complete its data transfer. This leads to delays, and such delays may prevent the two images from covering the same scene area due to handshakes. Thus, in our implementation, a camera with the Mobile Industry Processor Interface-Camera Serial Interface (MIPI-CSI) was used to capture images. MIPI has become a commonly used interface protocol on mobile devices as it provides a scalable serial interface for image data transfer to the host processor. The raw image data are directly mapped into a memory stack by enabling the camera output port. The memory size can be pre-defined based on a user-defined image size, and the pre-defined memory is synchronized to the camera. The encoder and buffer are both deactivated. This way, delays caused by the data transfer are avoided. When the first image is captured, the image data are mapped into the memory without a time delay. Next, the camera control parameters are updated using a different shutter speed. Meanwhile, the camera port is connected to a second memory space. As a result, two consecutive images are captured, each corresponding to a different exposure or shutter speed setting, without suffering from the delay caused by the data buffer and encoder. The above implementation steps appear in Algorithm 1, and a timing chart comparison is provided in Fig. 2.
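The order of the capture steps described above can be sketched with a mock camera object. Algorithm 1 is not reproduced in this text, so this is not that algorithm. The class `MockCamera`, all of its methods and the 1/30 s normal shutter value are hypothetical and do not correspond to any real MIPI-CSI driver API.

```python
class MockCamera:
    """Stand-in for a MIPI-CSI camera; every method here is hypothetical."""

    def __init__(self):
        self.shutter_s = None
        self.encoder_enabled = True
        self.buffer_enabled = True
        self.target = None

    def disable_encoder_and_buffer(self):
        self.encoder_enabled = False
        self.buffer_enabled = False

    def set_shutter(self, seconds):
        self.shutter_s = seconds

    def connect_port(self, memory):
        self.target = memory

    def capture(self):
        # A real driver would transfer raw pixels; the mock records the setting.
        self.target["shutter_s"] = self.shutter_s
        self.target["filled"] = True


def capture_pair(camera, normal_shutter_s, short_shutter_s):
    """Capture a normal and a short exposure into two pre-defined memory regions."""
    first, second = {"filled": False}, {"filled": False}
    camera.disable_encoder_and_buffer()  # bypass the stages that add delay
    camera.set_shutter(normal_shutter_s)
    camera.connect_port(first)           # map the output port to the first region
    camera.capture()
    camera.set_shutter(short_shutter_s)  # update the control parameters
    camera.connect_port(second)          # switch to the second memory space
    camera.capture()
    return first, second


normal, short = capture_pair(MockCamera(), 1 / 30, 1 / 200)
print(normal["shutter_s"], short["shutter_s"])
```

The point of the ordering is that no encoder or buffer stage sits between the two captures, so the only work done between them is the shutter update and the port switch.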

5.2 GPU implementation

A GPU implementation was carried out to gain further computational efficiency, given the many matrix and vector operations involved in the developed deblurring algorithm. Figure 3 shows the configuration of the GPU implementation. Captured image data are copied into the GPU memory for the wavelet transformation and SVD [13] computation. For the AIC part, only the \( n \) eigenvalues are needed. Hence, these eigenvalues are extracted and sent to the CPU to run the AIC algorithm, which avoids an extra GPU initialization step. Since the eigenvalue matrix \( \varSigma_{d} \) is calculated and stored in the previous stage, the eigenvalue transformation is applied using a vector scaling operation on the GPU. Also, the composition of \( \varSigma_{d}^{\prime } \) and \( V^{t} \) is done via vector scaling in shared memory to avoid a matrix multiplication, and the result can be easily written to memory in column-major order for the subsequent matrix multiplication and inverse wavelet transform.
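The reason vector scaling can replace a matrix multiplication here is that multiplying by a diagonal matrix only scales rows. The following CPU-side sketch, with assumed function names, compares the two; it does not reproduce the GPU kernels themselves.

```python
def scale_rows(sigmas, vt):
    """Compute diag(sigmas) @ vt by scaling row i of vt by sigmas[i]."""
    return [[s * x for x in row] for s, row in zip(sigmas, vt)]


def diag_matmul(sigmas, vt):
    """Reference: the same product via an explicit diagonal matrix multiplication."""
    k = len(sigmas)
    diag = [[sigmas[i] if i == j else 0.0 for j in range(k)] for i in range(k)]
    return [
        [sum(diag[i][t] * vt[t][j] for t in range(k)) for j in range(len(vt[0]))]
        for i in range(k)
    ]


sigmas = [2.0, 3.0]
vt = [[1.0, 2.0], [3.0, 4.0]]
print(scale_rows(sigmas, vt))
print(diag_matmul(sigmas, vt))
```

For \( k \) eigenvalues and \( n \) columns, row scaling needs \( kn \) multiplications, whereas the explicit product needs \( k^{2} n \), most of them multiplications by zero.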

6 Experimental results

In this section, sample experimental results obtained using the Daubechies D4 wavelet transform with r = 1 are provided. For the ATC algorithm, the search parameters were set according to the ones in [7]. Five sets of 15 images were captured with different image resolutions (width*height): Set 1 (800*600), Set 2 (960*720), Set 3 (1024*768), Set 4 (1296*864) and Set 5 (2592*1936). The developed technique was implemented on both a CPU and a GPU. The CPU version was implemented using C/C++ on a 2.5 GHz PC. The GPU version was implemented using CUDA [14] on a GeForce GT 650 GPU. All data were stored in memory in column-major order. As shown in Fig. 4, the GPU implementation achieved higher computational throughput. The computational improvement was about 20 % for lower resolution images and about 50 % over that of the ATC technique for higher resolution images. Our implementation used approximately 7 % of the CPU resources. Most of the memory consumption comes from image storage, which takes (2*3*m*n) bytes on both the GPU and CPU sides. In addition, the self-tunable transformation technique in [15] was applied using the comparison scheme provided in [7]. The self-tunable technique was found to be computationally very demanding, taking close to one minute of processing time; thus, its time is not included in Fig. 4.

Next, three images were consecutively captured for each of 60 different scenes. The first image was captured with a user-defined exposure or shutter speed with no handshake, while the second and third images were captured with a short and a user-defined exposure or shutter speed, respectively, in the presence of handshake movements. The first image was used as the reference. The resolution of the captured images was 1024*768, and the two short shutter speeds were 1/100 s and 1/200 s. Table 1 shows the average SSIM [16], PSNR [17, 18] and FSIM [19] image quality measures for each technique.

From this table, it can be seen that the developed technique generated a better outcome in both cases. A sample outcome is shown in Fig. 5. Figure 5a, b show the short-exposure and the handshake blurred images. The reference image appears in Fig. 5c, and the deblurred images obtained by applying the ATC, self-tunable and introduced techniques are shown in Fig. 5d–f, respectively. The cumulative histograms of the short-exposure, blurred and deblurred images are shown in Fig. 5g. From this figure, it is evident that the eigenvalues of the low rank approximation image provide a mean and contrast closer to those of the short-exposure image, resulting in a histogram shape closer to that of the blurred image. In contrast, the ATC technique exhibits over-compensation of the short-exposure image since its parameter search does not necessarily match the parameters of the short-exposure image.
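Of the three quality measures, PSNR is the simplest to state. A minimal sketch for 8-bit grayscale images, using the standard definition \( 10\log_{10} ({\text{MAX}}^{2} /{\text{MSE}}) \), is given below. The function name and the list-of-rows image representation are assumptions of this example; SSIM and FSIM are considerably more involved and are not sketched here.

```python
import math


def psnr(reference, test, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized grayscale images."""
    squared_errors = [
        (r - t) ** 2
        for ref_row, test_row in zip(reference, test)
        for r, t in zip(ref_row, test_row)
    ]
    mse = sum(squared_errors) / len(squared_errors)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


reference = [[100, 100], [100, 100]]
deblurred = [[101, 101], [101, 101]]
print(psnr(reference, deblurred))
```

A higher PSNR against the no-handshake reference image indicates a deblurred result that is closer to it in the mean-squared-error sense.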

The results for all the images examined are provided as supplementary materials to this paper. The deblurred results for sample images examined can be viewed at: http://www.utdallas.edu/~kehtar/Deblurring.html.

Finally, it is worth stating that an Android smartphone implementation and app of the deblurring technique discussed in this paper was also reported in [20]. This app can be downloaded from http://www.utdallas.edu/~kehtar/DeblurApp.apk.

7 Conclusion

A new approach for deblurring handshake blurred images captured by a mobile device was introduced in this work. An image acquisition pipeline was provided to capture two consecutive images with different shutter speeds. The introduced approach uses a short-exposure image that is captured at the same time as a normal or auto-exposure image. It involves appropriately selecting the eigenvalues of the blurred but normal exposure image and the eigenvalues of the dark but short-exposure image without requiring any search procedure. A GPU implementation of the developed blur reduction technique was also reported, leading to a 40 % improvement in computational efficiency compared to the existing techniques.

References

1. Joshi, N., Szeliski, R., Kriegman, D.: PSF estimation using sharp edge prediction. In: Proceedings of IEEE Conference on CVPR, pp. 1–8, 2008

2. Portz, T., Zhang, L., Jiang, H.: Optical flow in the presence of spatially-varying motion blur. In: Proceedings of IEEE Conference on CVPR, pp. 1752–1759, 2012

3. Fergus, R., Singh, B., Hertzmann, A., Roweis, S., Freeman, W.: Removing camera shake from a single photograph. ACM Trans. Graph. 25, 787–794 (2006)

4. Šindelář, O., Šroubek, F.: Image deblurring in smartphone device using built-in inertial measurement sensors. J. Electron. Imaging 22(1), 1003–1015 (2013)

5. Razligh, Q., Kehtarnavaz, N.: Image blur reduction for cell-phone cameras via adaptive tonal correction. Proc. IEEE Int. Conf. Image Process. 1, 113–116 (2007)

6. Jia, J., Sun, J., Tang, C., Shum, H.: Bayesian correction of image intensity with spatial consideration. In: ECCV 2004, LNCS, 2004, pp. 342–354

7. Chang, C.-H., Parthasarthy, S., Kehtarnavaz, N.: Comparison of two computationally feasible image deblurring techniques for smartphones. In: Proceedings of IEEE Global Conference on Signal and Information Processing, 2013

8. Shen, H., Huang, J.: Sparse principal component analysis via regularized low rank matrix approximation. J. Multivar. Anal. 99(6), 1015–1034 (2008)

9. Ye, J.: Generalized low rank approximations of matrices. Mach. Learn. 61(1), 887–894 (2004)

10. Umapathi, V., Biradar, L.S.: SVD-based information theoretic criteria for detection of the number of damped/undamped sinusoids and their performance analysis. IEEE Trans. Signal Process. 41(9), 2872–2880 (1993)

11. De Moor, B.: The singular value decomposition and long and short spaces of noisy matrices. IEEE Trans. Signal Process. 41(9), 2826–2838 (1993)

12. Lewis, A.S.: Image compression using the 2-D wavelet transform. IEEE Trans. Image Process. 1(2), 244–250 (1992)

13. Ma, L., Moisan, L., Yu, J., Zeng, T.: A dictionary learning approach for Poisson image deblurring. IEEE Trans. Med. Imaging. 32(7), 1277–1289 (2013)

14. Sanders, J., Kandrot, E.: CUDA by example: an introduction to general-purpose GPU programming, 1st edn. Addison-Wesley Professional, New York (2010)

15. Arigela, S., Asari, V.K.: Self-tunable transformation function for enhancement of high contrast color images. J. Electron. Imaging 2(22), 23010–23033 (2013)

16. Dixi, M., Ziou, D.: Image quality metrics: PSNR vs. SSIM. In: Proceedings of IEEE International Conference on Pattern Recognition, pp. 2366–2369, 2010

17. Hore, A., Ziou, D.: Image quality metrics: PSNR vs. SSIM. In: Proceedings of IEEE International Conference on Pattern Recognition, pp. 2366–2369, 2010

18. Wang, Z., Bovik, A., Sheikh, H., Simoncelli, E.: Image quality assessment from error visibility to structural similarity. IEEE Trans. Image Process. 13(4), 600–612 (2004)

19. Zhang, L., Zhang, L., Mou, X., Zhang, D.: FSIM: a feature similarity index for image quality assessment. IEEE Trans. Image Process. 20(8), 2378–2386 (2011)

20. Pourreza, R., Chang, C.-H., Kehtarnavaz, N.: Real-time deblurring of handshake blurred image on smartphone. In: Proceedings of SPIE Conference on Real-Time Image and Video Processing, Feb 2015

Cite this article

Chang, CH., Kehtarnavaz, N. Computationally efficient image deblurring using low rank image approximation and its GPU implementation. J Real-Time Image Proc 12, 567–573 (2016). https://doi.org/10.1007/s11554-015-0539-x

DOI: https://doi.org/10.1007/s11554-015-0539-x