Abstract

In this paper, we propose a new image blind deblurring model, based on a novel low-rank prior. As the low-rank prior, we employ the weighted Schatten p-norm minimization (WSNM), which can represent both the sparsity and self-similarity of the image structure more accurately. In addition, the L0-regularized gradient prior is introduced into our model, to extract significant edges quickly and effectively. Moreover, the WSNM prior can effectively eliminate harmful details and maintain dominant edges, to generate sharper intermediate images, which is beneficial for blur kernel estimation. To optimize the model, an efficient optimization algorithm is developed by combining the half-quadratic splitting strategy with the generalized soft-thresholding algorithm. Extensive experiments have demonstrated the validity of the WSNM prior. Our flexible low-rank prior enables the proposed algorithm to achieve excellent results in various special scenarios, such as the deblurring of text, face, saturated, and noise-containing images. In addition, our method can be extended naturally to non-uniform deblurring. Quantitative and qualitative experimental evaluations indicate that the proposed algorithm is robust and performs favorably against state-of-the-art algorithms.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Blind image deblurring has become an important research topic in the field of image processing and computer vision. It is a challenging and interesting problem and has been applied widely in various fields, including biomedicine, aerospace, and public safety. A common type of blurring is motion blurring, which is caused by the motion of an object relative to the camera during the exposure time.

When the motion blurring is uniform and spatially invariant, the relationship between the latent sharp image \( L \) and the observed blurred image \( B \) can be established by using the following model:

where *, \( k \), and \( n \) represent the convolution operator, blur kernel, and additive noise, respectively. According to (1), we need to restore both \( L \) and \( k \), with the blurred image \( B \) as the only input. This problem is challenging and ill-posed because there are an infinite number of different solution sets (\( L \), \( k \)), each of which can correspond to the same \( B \). In addition, the effect of noise makes blind image restoration more difficult. Therefore, additional constraints on \( L \) and \( k \) are required to ensure that the final optimized solution is close to the true solution.

1.1 Previous Work

In recent decades, many scholars have carried out in-depth research on blind deblurring and have proposed numerous fruitful blind deblurring methods [2,3,4, 7, 11, 14, 18, 19, 21, 22, 25,26,27,28, 32, 33, 35,36,37,38,39,40,41,42,43,44,45, 49, 51, 52]. These methods can be classified into three mainly categories: explicit edge prediction strategies, image statistical priors, and deep-learning-based methods.

1.1.1 Explicit Edge Prediction

Because strong edges have a significant beneficial effect for blur kernel estimation, many methods based on explicit edge prediction have been proposed. Joshi et al. [18] directly detected and predicted latent sharp edges to improve blur kernel estimation. Cho et al. [4] used a combination of bilateral filtering, shock filtering, and edge gradient thresholding to predict salient edges. Xu and Jia [49] developed an effective salient edge selection strategy and proposed a two-phase kernel estimation framework. Subsequently, Pan et al. [37] proposed a self-adaptive edge selection algorithm. Although explicit edge prediction methods are valid for image blind recovery, they rely heavily on heuristic filters. These methods are likely to amplify noise and over-sharpen images, and salient edges are not always present in natural images.

1.1.2 Prior Based on Image Statistics

To mitigate the negative impact of heuristic filters on the selection of strong edges, numerous methods based on image statistical priors have been proposed. To match natural image gradient, which obeys a heavy-tailed distribution, Fergus et al. [11] applied a Gaussian mixture model and performed kernel estimation through a variational Bayesian framework. Shan et al. [42] connected two piecewise functions to approximate the image gradient distribution. Levin et al. [25, 26] elaborated on the limitations of the naive maximum a posteriori method, which is conducive to the generation of delta kernels and blurred images, and an effective maximum marginal optimization algorithm was proposed. Krishnan et al. [21] proposed the normalized sparsity prior and provided a new L1/L2 regularization function to estimate the kernel.

To enhance the search for strong edges, Xu et al. [51] proposed a generalized L0 sparse gradient prior, which can extract strong edges quickly and efficiently. Inspired by this work, many methods utilize L0 regularization to enhance the sparsity of image gradients, and incorporate various image priors to improve the kernel estimation. Pan et al. [35] further adopted the L0-regularized priors of intensity and gradient to deblur text images. Li et al. [27] applied L0 regularization of image gradient and kernel intensity. Interestingly, Pan et al. [38] observed that the dark channel of a clear image is sparser than the blurred image, and L0 regularization can be performed on the dark channel to improve the restoration performance further. However, not all images have obvious dark channels. Therefore, Yan et al. [52] proposed an extreme channel prior, which integrates the dark channel and bright channel priors of the image. Recently, Li et al. [28] used the effective combination of a convolutional neural network (CNN) and the L0-regularized gradient for blind deblurring. Chen et al. [3] developed a local maximum gradient prior and combined it with L0-regularized gradient to obtain good results, but this method is sensitive to noise.

Most of the above methods focus only on the relationship between adjacent pairs of pixels, or the simplicity of pixel intensity, or both. However, they ignore that the salient structure is dependent on a larger range of pixels. Using these methods cannot restore images with complex geometries completely and effectively. To avoid such restrictions, image patch-based methods have been proposed for image restoration [10, 14, 31, 32, 43, 44, 55]. Zoran et al. [55] proposed a Gaussian mixture prior, which learns from natural image patches and restores images using patch likelihoods. Sun et al. [43] learned two types of patch priors, to model image edge primitives: natural images and synthetic structures. Michaeli et al. [32] used internal patch recurrence property for kernel estimation, because cross-scale internal patches were repeated in clear images and significantly reduced in blurred images. Guo et al. [14] proposed an adaptive edge-based patch prior to reconstruct salient edges and other features. Tang et al. [44] used external patch priors, combined with the sparse representation method for kernel estimation. In general, a patch-based prior can cover more pixels than an image gradient or intensity prior can. Therefore, patch-based priors are more beneficial for complex structure extraction and noise suppression.

To extend the effectiveness of patch-based priors further, low-rank matrix approximation (LRMA) methods, based on non-local similarity patches, have been extensively studied and successfully applied to many vision tasks, such as image or video denoising [9, 13, 16, 48], non-blind deblurring [8, 30, 53], blind deblurring [7, 40], and other tasks [1, 9, 12, 17, 29, 48, 53]. LRMA methods can be classified into two categories: nuclear norm minimization (NNM) methods and low-rank matrix factorization methods. In this paper, we mainly study the former type as a regularization term. Although conventional NNM has been used widely in image restoration, it still has some limitations. To pursue convex solutions, standard NNM penalizes each singular value equally. However, this is unreasonable and limits its flexibility to deal with various practical problems. Because each singular value of the matrix represents a different meaning and importance, they need to be processed separately.

To promote the flexibility and effectiveness of NNM, the weighted nuclear norm minimization (WNNM) model was proposed by Gu et al. [12, 13]; this model assigns a different weight to each singular value. WNNM imposes less penalty on larger singular values than on smaller ones, thereby retaining the main structure of the image more rationally. Subsequently, Ma et al. [30] used WNNM and total variation regularization to perform non-blind deblurring of the images. Ren et al. [40] exploited the WNNM low-rank prior to perform blind deblurring.

Inspired by Schatten p-norm minimization (\( 0 \le p \le 1 \)) sparse optimization algorithms [34, 56] and WNNM [13], a novel low-rank prior—weighted Schatten p-norm Minimization (WSNM)—was proposed by Xie et al. [48], who applied it to background subtraction and image denoising. When considering different rank components, WSNM has more flexibility than WNNM and can approximate the original LRMA problem better. It is worth noting that WNNM is only a special case of WSNM, in which \( p = 1 \). Zha et al. [53] further developed the alternative direction multiplier method to solve the WSNM model, and applied this method to non-blind restoration.

In blind image deblurring, low-rank priors have inherent essential advantages. They favor sharp images over blurred images [7] and produce sharper intermediate latent images for kernel estimation, by eliminating harmful subtle details while preserving the main structures [40]. Although two low-rank-based methods [7, 40] have been proposed for blind deblurring, both of them have some limitations. The method of [7] combines explicit salient edge extraction with conventional NNM for kernel estimation. The explicit edge extraction of the method of [7] utilizes a conventional structure–texture decomposition strategy and heuristic filtering, which significantly increases the complexity of the algorithm. In addition, because of the negative influence of heuristic filtering and the inflexible NNM, the effect of restoration is reduced. The method of [40] employs the WNNM of image intensity and gradient to recover the image. However, because of the low-rank minimization of the gradient, the computational complexity of the algorithm is greatly increased. Moreover, this method employs only the low-rank prior in the finest layer pyramid, reducing the role of the low-rank prior in the entire blind restoration. In addition, the WNNM prior of this method still lacks sufficient flexibility and accuracy, compared with the latest WSNM prior.

1.1.3 Method Based on Deep Learning

Recently, many deblurring methods [2, 22, 28, 33, 41, 45] based on CNNs have been proposed. However, because of the variability and complexity of real-world blurred images, compared with conventional optimization-based methods, most existing CNN-based methods have difficulty in restoring real-world blurred images effectively, especially the large-scale motion blur. Therefore, the closely related optimization-based methods are mainly introduced in the introduction.

1.2 Motivation and Proposed Approach

The motivation for our work is twofold. First, in view of the latest research work, low-rank priors show obvious advantages and great potential for image restoration. However, existing low-rank blind deblurring methods [7, 40] still have some limitations. Therefore, we can develop better blind deblurring methods based on the latest low-rank priors. Second, several state-of-the-art blind deblurring algorithms adopt a strategy that effectively combines the L0-regularized gradient prior with different image priors, to further improve kernel estimation. However, these image priors have limitations, which reduce the universality and effectiveness of the methods. Therefore, we can develop a more pervasive, sparse, and efficient prior to replace the existing image priors.

Based on the above two aspects, we propose a new blind deblurring method by extending the application of the flexible WSNM prior and the L0-regularized gradient prior. Our main contributions are summarized as follows:

-

1.

We propose a new image blind deblurring model based on the low-rank prior and L0-regularized gradient prior. The L0-regularized gradient prior can extract the main edges quickly. Our low-rank prior can further effectively eliminate harmful subtle details, while preserving the main edges. To implement low-rank regularization effectively, a more accurate and flexible Schatten p-norm minimization (i.e., WSNM) is employed.

-

2.

To solve our model effectively, an iterative optimization algorithm, based on the half-quadratic splitting (HQS) strategy and the generalized soft-thresholding (GST) algorithm, is developed.

-

3.

The validity of the WSNM prior is demonstrated by extensive experiments. Our low-rank prior enhances the sparsity and self-similarity of the intermediate latent image, thereby improving kernel estimation.

-

4.

Our algorithm achieves outstanding results on both natural images and domain-specific images. In addition, our method can be extended naturally to non-uniform deblurring.

The remainder of this paper is organized as follows. Section 2 describes the WSNM prior employed in our model. Section 3 shows the proposed model and derives a numerical optimization algorithm, based on HQS and GST, to solve the non-convex regularization terms. Section 4 shows that our algorithm can be effectively extended to non-uniform deblurring. Section 5 presents experimental comparisons with other state-of-the-art methods. Section 6 analyzes and discusses the effectiveness of the proposed algorithm. Section 7 concludes the paper.

2 Weighted Schatten p-norm Minimization Prior

To enhance the low-rank prior, inspired by Schatten p-norm minimization [34, 56] and WNNM [13], Xie et al. [48] proposed a weighted Schatten p-norm minimization (WSNM) model:

where \( X \) and \( Y \) are the desired low-rank approximation matrix and degraded observation matrix, respectively. The first term in (2) is the F-norm data fidelity term, and the second term is the low-rank regularization term. \( \left\| X \right\|_{{w,S_{p} }}^{{}} \) represents the weighted Schatten p-norm of matrix \( X \in \Re^{m \times n} \), and is defined as

where \( 0 \le p \le 1 \), \( w \) is a nonnegative weight vector, \( w = \left\{ {w_{1} , \ldots ,w_{{{\mathrm{min}} (n,m)}} } \right\} \) and \( w_{i} \ge 0 \), and \( \sigma_{i} \) is the ith singular value of \( X \). \( \left\| X \right\|_{{w,S_{p} }} \) to the power of \( p \) is

where \( \Delta \) and \( W \) are diagonal matrices composed of all values of \( \sigma_{i} \) and \( w_{i} \), respectively. Because \( \left\| X \right\|_{{w,S_{p} }}^{p} \) contains the weight vector \( w \) and the non-convex Schatten p-norm, problem (2) is difficult to solve effectively. To obtain the optimal solution, we introduce the following theorem:

Theorem 1

Let \( Y = U\sum V^{{\mathrm{T}}} \) be the singular value decomposition (SVD) of \( Y \in \Re^{m \times n} \), \( \sum = {\text{diag}}(\delta_{1} , \ldots ,\delta_{r} ) \), and \( r = {\mathrm{min}} \left( {m,n} \right) \); then the optimal solution to problem (2) is \( X = U\Delta V^{{\mathrm{T}}} \), where \( \Delta = {\text{diag}}(\sigma_{1} , \ldots,\sigma_{r} ) \), and a singular value \( \sigma_{i} \) of \( X \) can be obtained by solving the following problem:

(The detailed proof can be found in [48].) Therefore, solving problem (2) can be transformed to solving problem (5); the GST algorithm [56] can solve problem (5) as follows:

where \( t \) represents the number of iterations. The weights \( w_{i} \) are in non-descending order: \( 0 \le w_{1} \le \cdots \le w_{r} \). This means that larger singular values represent the main components and should be penalized less, whereas smaller values represent harmful details and noise and should be penalized more. Therefore, the preservation of the main data components can be guaranteed. More details can be found in [48, 56]. In blind deblurring, this weight setting is favorable for preserving significant edges for kernel estimation.

3 Model and Optimization

Based on the above introduction and analysis, we propose a novel image blind deblurring model, which is based on the WSNM prior and L0-regularized gradient prior, and develop an effective optimization algorithm to estimate the kernels. Our model is defined as follows:

where \( \gamma \), \( \mu \), and \( \lambda \) are the weight parameters corresponding to each term. \( \nabla = (\nabla_{{\mathrm{h}}} ,\nabla_{{\mathrm{v}}} ) \) is the gradient operator; \( \nabla_{{\mathrm{v}}} \) and \( \nabla_{{\mathrm{h}}} \) denote the vertical and horizontal directions of the gradient operator, respectively.

The first term in (7) denotes data fidelity, which constrains the convolution of the clear image \( L \) with the blur kernel \( k \) to be close to the blurred image \( B \). The second term constrains the estimated kernel to obtain a stable solution. The third term is the L0 regularization gradient prior, which preserves the large gradients and removes the harmful fine structures. The last term is the WSNM prior, which uses the low-rank characteristic of non-local self-similarity patches to eliminate further harmful micro-textures and noise. \( R(L) \) is defined as

Image \( L \in \Re^{N} \) is divided into \( d \) overlapping patches \( l_{i} \), of size \( \sqrt n \times \sqrt n \), \( i = 1, \ldots ,d \). For each example patch \( l_{i} \), the \( m \) most similar patches are collected through an \( S \times S \) search window, and the \( m \) similar patches are stacked into the matrix \( L_{i} \in \Re^{n \times m} \), of which the columns are composed of \( m \) vectorized similar patches, such as \( L_{i} = \left\{ {l_{i1} ,l_{i2} , \ldots ,l_{im} } \right\} \), and \( l_{im} \) is the mth similar patch of the ith group \( L_{i} \). Because the \( m \) similar patches have consistent geometric structures, their permutation combination results in matrix \( L_{i} \) with a low-rank property, and our model (7) can be reformulated as

Problem (9) involves solving for two unknown variables, \( L \) and \( k \). To optimize (9), we divide it into two subproblems (of \( L \) and \( k \)) to minimize alternately, where the subproblem for \( L \) is

and the subproblem for \( k \) is

3.1 Intermediate Latent Image \( L \) Estimation

Because subproblem (10) includes both the L0-regularized gradient and WSNM terms, which are non-convex, performing the calculation directly is difficult. We adopt the HQS strategy [50] and the GST algorithm [56] to optimize these two non-convex terms.

First, we adopt the HQS strategy, where \( u \) and \( g = \left( {g_{{\mathrm{h}}} ,g_{{\mathrm{v}}} } \right) \) are introduced as two new auxiliary variables; \( u \) and \( g \) correspond to the latent image \( L \) and image gradient \( \nabla L \), respectively. Subproblem (10) can be reformulated as

where \( \alpha \) and \( \beta \) are positive regularization parameters. We can solve (12) by alternately minimizing \( L \), \( u \), and \( g \), to avoid solving the non-convex L0 gradients and the weighted Schatten p-norm directly.

We fix \( u \) and \( g \), to solve the intermediate latent image \( L \) by optimizing the following objective function:

This is a least squares optimization problem, and its closed solution can be effectively solved by the fast Fourier transform (FFT) method:

where \( F( \cdot ) \) and \( F^{ - 1} ( \cdot ) \) denote FFT and inverse FFT, respectively, \( \overline{F( \cdot )} \) denotes the complex conjugate operator, and \( F_{G} = \overline{{F(\nabla_{{\mathrm{h}}} )}} F(g_{{\mathrm{h}}} ) + \overline{{F(\nabla_{{\mathrm{v}}} )}} F(g_{{\mathrm{v}}} ) \).

Fixing \( u \) and \( L \), we can solve \( g \) by

Because (15) is a pixel-wise minimization problem, it can be directly solved by [50] to obtain \( g \):

Fixing \( g \) and \( L \), we can solve \( u \) by

However, the second term is severely non-convex. This problem has complex structures and is difficult to optimize directly. To solve (17) more efficiently, we make the following assumptions, based on [53, 54].

Theorem 2

Define \( L,u \in \Re^{N} \), \( L_{i} ,u_{i} \in \Re^{n \times m} \), and error vector \( e = L - u \); \( e(j) \) is each element of \( e \) and \( j = 1, \ldots,N \). Suppose that \( e(j) \) satisfies the independent zero-mean distribution and that its variance is \( \sigma^{2} \). Then, for any \( \varepsilon > 0 \), the following property describes the relationship between \( \left\| {L - u} \right\|_{2}^{2} \) and \( \sum\limits_{i = 1}^{d} {\left\| {L_{i} - u_{i} } \right\|_{F}^{2} } \):

where \( P( \bullet ) \) denotes the probability, and \( R = d \times n \times m \).

Proof

Each \( e(j) \) is assumed to be an independent zero-mean distribution with variance \( \sigma^{2} \), i.e., \( Var[e(j)] = \sigma^{2} \) and \( E[e(j)] = 0 \). Therefore, each \( e(j)^{2} \) is also independent, and the mean of each \( e(j)^{2} \) is

By invoking the law of large numbers in probability theory, for any \( \varepsilon > 0 \), \( \mathop {\lim }\limits_{N \to \infty } P\left\{ {\left| {\frac{1}{N}\sum\nolimits_{j = 1}^{N} {e(j)^{2} } - \sigma^{2} } \right|} \right.\left. { < \frac{\varepsilon }{2}} \right\} = 1 \), i.e.,

Further, we let \( {\mathbf{L}} \) and \( {\mathbf{u}} \) denote the concatenation of all groups \( L_{i} \) and \( u_{i} \), \( i = 1, \ldots,d \), respectively, and denote the error of each element of \( {\mathbf{L}} - {\mathbf{u}} \) by \( e(r) \), \( r = 1,2, \ldots ,R \). Suppose \( e(r) \) also follows an independent zero-mean distribution with variance \( \sigma^{2} \).

Therefore, a process similar to that mentioned above is applied to \( e(r)^{2} \) to obtain \( \mathop {\lim }\limits_{R \to \infty } P\left\{ {\left| {\frac{1}{R}\sum\nolimits_{r = 1}^{R} {e(r)^{2} } - \sigma^{2} } \right|} \right.\left. { < \frac{\varepsilon }{2}} \right\} = 1 \), i.e.,

Considering (20) and (21) together, we prove (18).□

Therefore, according to Theorem 2, in each iteration we obtain an equation of high probability (approaching 1):

From (17) and (22), we obtain the following equation:

According to (2), (5), and (6), the optimal solution of (23) can be obtained by the GST algorithm [56], as follows:

where \( \delta_{ij} (L_{i} ) \) is the jth singular value of the stacked similar patch matrix \( L_{i} \), the definition of \( \sigma_{ij} (u_{i} ) \) is similar to that of \( \delta_{ij} (L_{i} ) \), and \( \eta = {{\lambda R} \mathord{\left/ {\vphantom {{\lambda R} {\beta N}}} \right. \kern-0pt} {\beta N}} \). Because large singular values contain the main structure of the image, they should be penalized less, whereas small ones mainly contain the harmful detail and should be penalized more. Therefore, referring to [48], we define the weight \( w_{ij} \) as

where \( \varepsilon \) is a very small constant and \( m \) is the number of similar patches. Because \( \sigma_{ij} (u_{i} ) \) is unknowable before estimating \( u_{i} \), we follow [40, 48] and initialize \( \sigma_{ij} (u_{i} ) \) as

Each singular value of \( u_{i} \) can be calculated from (24) to form \( u_{i} = U\Delta V^{{\mathrm{T}}} \), where \( \Delta = {\text{diag}}\left( {\left. {\sigma_{i1} (u_{i} ), \ldots ,\sigma_{ir} (u_{i} )} \right)} \right. \), \( r = {\mathrm{min}} \left( {n,m} \right) \), \( i = 1, \ldots ,d \), and \( s \) denotes the size of the blur kernel. Finally, all values of \( u_{i} \) are aggregated, to reconstruct \( u \).

In addition, we add a stopping criterion to the internal iterative process, to enhance the fast convergence of our algorithm:

where \( x \) is the number of internal iterations and \( tol = 10^{ - 5} \).

3.2 Blur Kernel \( k \) Estimation

After obtaining the intermediate latent image \( L \), subproblem (11) can be used directly to calculate the closed solution by FFT. However, (11) is based on image intensity, and the direct solution cannot yield good results [4, 26, 35, 51]. Therefore, image gradients are used to estimate the blur kernel \( k \), and this method is more effective. We reformulate (11) to

The objective function (28) can be solved directly by FFT:

After obtaining the blur kernel \( k \), the negative elements of \( k \) are set to 0, and normalization is performed. In the concrete implementation, similar to other state-of-the-art algorithms [4, 38, 51], a coarse-to-fine multiscale framework based on the image pyramid [4] is adopted in the whole deblurring process; this enables our algorithm to facilitate large-scale blur kernel estimation. We alternately solve \( L \) and \( k \) at each layer of the pyramid and then perform up-sampling, using the estimated \( k \) as the initial value for the next layer of the pyramid. Algorithm 1 presents the main steps of the proposed deblurring algorithm at each layer of the pyramid.

3.3 Non-blind Deconvolution

When using our blind deblurring algorithm (Algorithm 1) to restore the final latent image, the image details may be lost. Therefore, after obtaining the final blur kernel, various state-of-the-art non-blind deconvolution algorithms can be used to restore the final latent image. In this paper, the sparse deconvolution algorithm [24] is adopted, unless otherwise stated.

4 Extension to Non-uniform Deblurring

The proposed deblurring method can be easily extended to non-uniform deblurring, where the blur kernel is spatial-variant. Based on the geometric model of camera motion [47], we can model the blurred image \( B \) as the sum of all the different views in the scene:

where \( t \) is the number of each view, \( H_{t} \) is a homography matrix, and \( k_{t} \) is the weight corresponding to the tth view, with \( k_{t} \ge 0 \) and \( \sum\nolimits_{t} {k_{t} = 1} \). Similarly to [47], (30) can be rewritten as

where \( K = \sum\nolimits_{t} {k_{t} H_{t} } \), \( A = [H_{1} L,H_{2} L, \ldots ,H_{t} L] \), and \( {\text{k}} = [k_{1} ,k_{2} , \ldots ,k_{t} ]^{{\mathrm{T}}} \). We can solve non-uniform blur by alternatively minimizing

and

Similarly to the case of uniform deblurring, (32) can be rewritten as

For (34), we use the same optimization strategies as (15) and (17) to solve for \( g \) and \( u \).

The minimization problem for \( L \) is

Obviously, (35) cannot be solved directly by FFT. Because the blur kernels are similar in a small region, we use local uniform blur to approximate non-uniform blur. Based on the fast forward approximation method [15], the blurred image is divided into \( Q \) overlapping patches. The matrix \( K \) can be expressed as

\( C_{r} ( \cdot ) \) denotes the operator that crops the rth patch from the image, \( C_{r}^{ - 1} ( \cdot ) \) denotes the operator that replaces the patch in the reconstructed image, and \( {\text{diag}}( \cdot ) \) denotes a diagonal matrix. The matrix \( M_{r} \) is a window function that has the same size as \( L \). In addition, \( a_{r} = C_{r} {J}_{r} {\text{k}} \) denotes the blur kernel of the rth patch, and the elements of each matrix \( {J}_{r} \) are simply a rearrangement of the elements of \( H_{t} \) [46].

Based on (36), the optimized solution of (35) can be calculated quickly by frequency-domain convolutions and correlations. For the blur kernel optimization problem (33), we use the method of [46] to estimate the kernel. The main difference between the proposed method and that of [46] is that we do not employ a bilateral filter and shock filter to predict significant edges. This is because the effective combination of WSNM and L0-regularized gradient priors can eliminate harmful structures and obtain the intermediate latent images with sharp edges.

5 Experimental Results

We evaluated our method on three natural image datasets [20, 25, 43] and real-world images and compared it with state-of-the-art methods. We also evaluated our method on specific domain datasets, such as text [35], face [23], and saturated images [23] and compared it with related specially designed methods. In addition, we evaluated the robustness of our algorithm to blurred images with Gaussian noise. Finally, we tested non-uniform blurred images and other types of blurred images.

In all experiments of the uniform deblurring, we empirically set the parameter values of the proposed algorithm as follows: \( \lambda = 0.005 \), \( \mu = 0.004 \), \( \gamma = 40 \), \( \alpha_{{{\mathrm{max}} }} = 10^{5} \), \( \beta_{{{\mathrm{max}} }} = 0.06 \), \( p = 0.9 \), and \( T_{{{\mathrm{max}} }} = 5 \); we collected \( 9 \times 9 \) non-local similar patches through a \( 30 \times 30 \) search window by using the block matching algorithm [6], and the overlap of adjacent example patches was set to one pixel. For a fair comparison, the other methods were tested with the default parameters of the authors’ original code. Our numerical experiments were performed in MATLAB R2017a on a desktop computer with Intel Core i7-8770 CPU at 3.20 GHz and 32 GB RAM.

5.1 Natural Images

5.1.1 Dataset of Levin et al.

First, we performed a quantitative evaluation on the dataset of Levin et al. [25], which comprises 32 images generated from four grayscale images and eight uniform blur kernels. The sizes of the kernels ranged from \( 13 \times 13 \) to \( 27 \times 27 \). We compared the proposed method with other state-of-the-art methods [4, 7, 11, 26, 28, 38,39,40, 43, 49] and uniformly adopted the non-blind method [24], to ensure a fair comparison. We adopted three measurement criteria to evaluate the recovery results: the cumulative error ratio [25], the peak signal-to-noise ratio (PSNR), and the structural similarity index (SSIM). The error ratio is

where \( L_{t} \), \( L \), and \( L_{k} \) represent the ground-truth sharp image, the recovered latent image, and the deblurred image obtained by the ground-truth kernel deconvolution, respectively. When the error ratio \( r \) is reduced, the recovered image is closer to the ground-truth sharp image. According to [25], when \( r \le 2 \), the deconvolution result is usually visually plausible.

We first compared our method quantitatively with our method without WSNM. Figure 1 shows the average PSNR and average SSIM of four images with eight kernels. Our method achieved a higher PSNR and SSIM, on average. Figure 2a shows that our method achieved a higher success rate for each error ratio. It is worth noting that, when our method omits WSNM, it contains only the L0 gradient term, which is equivalent to the method of [51]. Compared with other competing methods, our method achieved the highest PSNR and SSIM values, on average, as shown in Table 1. Figure 2b shows that our method also achieved the highest success rate when the error ratio was 2.

Quantitative evaluation of our method and our method without WSNM on the dataset of [25]. a Comparison of average PSNR. b Comparison of average SSIM. The rightmost column shows the average PSNR and average SSIM of all images

Comparison of cumulative error ratios of various methods on the dataset of [25]. a Comparison of our two methods. b Comparison of our method with other competing methods

For a better comparison, Fig. 3 visually shows an example image and the results recovered by different methods. Our method estimated the accurate blur kernel and recovered comparable, or even better, image quality. However, the kernel estimated by our method without WSNM produced interruption in the upper right part of the image. Table 2 shows that our method achieved the highest PSNR and the second-best SSIM for the image of Fig. 3.

Visual comparison on an example image from the dataset of [25]

5.1.2 Dataset of Sun et al.

Second, we conducted a quantitative evaluation on the benchmark dataset of Sun et al. [43], which produced 640 blurred images from 80 natural images and eight kernels. Our method was compared with seven optimization-based methods [4, 21, 26, 38, 39, 43, 49] and two CNN-based methods [2, 28]. To ensure fairness, we uniformly used the non-blind deconvolution method [55] to recover the final results and employed the error ratio criterion for evaluation. According to [32], if the error ratio \( r \le 5 \), the final recovery result is satisfactory. Figure 4a shows that our method yielded a higher success rate than our method without WSNM, for every error ratio. Figure 4b shows that our method achieved a higher success rate than the other methods for \( r \ge 2 \).

Comparison of cumulative error ratios of various methods on the dataset of [43]. a Comparison of our two methods. b Comparison of our method with other competing methods

We then chose two challenging images for comparison. They have fewer significant edges but contain more small details, which is not conducive to blur kernel estimation. As shown in Figs. 5 and 6, our method estimated the most accurate blur kernel, yielding the most pleasing recovered images. However, the other methods estimated either poor blur kernels or kernels containing noise, resulting in serious ringing artifacts. It is worth noting that the kernel estimated by our method without WSNM produced a serious deviation in both images. The reason is that the main advantage of L0 gradient is the extraction of significant edges. When there are fewer edges or complex structures in the image, the L0 gradient prior cannot extract edges very well. However, the WSNM prior in our model can use the low-rank property of locally similar patches to remove harmful small details, so that useful information can be better preserved for kernel estimation. Table 3 also shows that our method produced the highest PSNR and SSIM values in the deblurring results of both Figs. 5 and 6.

Visual comparison on an example image from the dataset of [43]

Visual comparison on another example image from the dataset of [43]. Part of the recovered image is enlarged for comparison

5.1.3 Dataset of Köhler et al.

Third, we tested the proposed algorithm against other advanced methods [4, 7, 11, 15, 21, 28, 38, 40, 42, 46, 49, 52] on the real dataset [20], which contains 48 blurred images generated from 12 blur kernels and four images. The mean structural similarity index (MSSIM) and PSNR were selected as the evaluation metrics. The result of each restoration was compared to 199 sharp images taken along the camera trajectory, and the highest PSNR and MSSIM values were calculated.

Figure 7 shows that our method achieved a higher PSNR and MSSIM, on average, than our method without WSNM. The average PSNR and MSSIM differences between the two methods were 0.533 dB and 0.025, respectively. This proves that the WSNM prior can improve the deblurring performance of real images. Table 4 shows that our method achieved a competitive MSSIM and the highest PSNR values, on average, compared to other state-of-the-art methods.

Quantitative evaluation of our method and our method without WSNM on the dataset of [20]. a Comparisons of average PSNR. b Comparisons of average MSSIM. The rightmost column shows the average PSNR and average MSSIM of all images

Figure 8 illustrates a challenging visual example with severe motion blur. Our method produced the best visual results. Although two methods [28, 38] achieved acceptable deblurring results, the result of the method of [38] has severe ringing artifacts, and the result of the method of [28] is over-smooth. The deblurring results of the other two low-rank methods show severe distortion. Table 5 shows that our method obtained the highest PSNR and MSSIM values in the deblurring results of Fig. 8.

Visual comparison of deblurring results using a severely blurred image on the benchmark dataset of [20]

5.1.4 Other Real-World Images

We further compared our approach with two other low-rank methods on real-world images. We used the same non-blind method [24] to ensure fairness. As shown in Fig. 9, the method of [40] estimated the kernel with large error and produced a poor quality image. The kernel estimated by the method of [7] contained substantial noise, resulting in obvious artifacts in the restored images, whereas our method recovered a clearer result. It is worth noting that our method without WSNM estimated an incorrect blur kernel. Figure 10a shows an example of large-scale motion blur. Our method is the only one that recovered good visual effects; the images recovered by the other methods have serious biases.

5.2 Domain-Specific Images

5.2.1 Text Images

Text images mostly contain two tones (white and black) that do not comply with the heavy-tailed distribution of natural images; processing them is a daunting task for most deblurring methods. Our approach was compared with the other methods [7, 21, 26, 28, 35, 38, 40, 52] on the text dataset of [35], consisting of 15 images and eight kernels. It is worth noting that the method of [35] was designed specifically for text images. We employed the same non-blind approach [24] to ensure fairness. Table 6 shows that our method yielded the highest PSNR, on average, whereas our method without WSNM yielded a lower value. Figure 11 shows a typical case, for which our method recovered the best quality image, whereas most other methods produced severe artifacts and blur residues. Table 7 shows the PSNR values of all the images compared in Fig. 11.

Visual comparison on an example image from the text dataset of [35]. Part of the recovered image is enlarged for comparison

Figure 12 shows another challenging image from [35], on which the deblurring result of our method yielded the best visual effect. Among the other methods, only the result of method [35] was acceptable. In particular, our method without WSNM produced a poor result.

5.2.2 Face Images

Face images contain fewer edge structures, so they are not conducive to blur kernel estimation. Our method was compared with other methods [7, 26, 28, 38, 40, 43, 49, 52] on the face dataset of Lai et al. [23], which contains 20 blurred images generated from four kernels and five images. The same non-blind deconvolution algorithm [24] was used to restore the results. The average PSNR values of the deblurring results are shown in Table 8; our method achieved the highest PSNR value, on average. Figure 13 shows that our method recovered clearer face structures and background details than others. However, our method without WSNM produced serious distortions in the face. The PSNR values for the deblurring results in Fig. 13 are shown in Table 9.

Visual comparison on an example image from the face dataset of [23]

In addition, we selected a real face-blurred image for intuitive comparison. As shown in Fig. 14, our method produced the best result, whereas our method without WSNM restored the result with great error, especially in the eye region.

Visual comparison on a real face-blurred image. The results were obtained by the same non-blind method [24]

5.2.3 Saturated Images

The recovery of saturated images is a challenging problem. This is because saturated pixels greatly affect the selection of significant edges, making it difficult for conventional methods to obtain good results. We performed comparative experiments using a saturated image dataset of Lai et al. [23], which produced 20 blurred images by convolution of four kernels and five images. To ensure fairness, the same non-blind algorithm [5] was adopted. Table 10 shows that our method achieved a better PSNR, on average, than the algorithm of [36], which was designed specifically for saturated pixels. Our method without WSNM achieved a lower average value. Figure 15 shows a visual comparison of the deblurring results on a challenging example. The deblurring result of our method obviously has fewer artifacts and clearer details; Table 11 shows that our method achieved the highest PSNR value.

Visual comparison on an example image from the saturated images dataset of [23]

5.2.4 Blurred Images with Gaussian Noise

Noise has a significant impact on the restoration of blurred images. We compared our approach with other advanced methods [28, 35, 38, 52] involving the L0 gradient prior on blurred images with Gaussian noise. We added Gaussian noise, with a variance ranging from 1 to 10, to the images in the dataset of Levin et al. [25] and performed comparative experiments. The same non-blind deconvolution algorithm [24] was employed. As Fig. 16a shows, at different noise levels, our method produced better results than the other methods, in terms of average PSNR. Figure 16b shows that our method without WSNM achieved a lower average PSNR value. The comparison data in Fig. 16 demonstrate that our WSNM prior is robust to Gaussian noise. This is mainly because the WSNM prior is based on local similar patches, the influence of noise on the similar patch structure is relatively small, and the WSNM penalty for singular values reduces the influence of noise further. In contrast, the other algorithms are based on pixel intensity, and weak noise will seriously affect the intensity of pixels.

Figure 17a shows an example of a blurred image with a noise level of 5. Figure 17 shows that our method achieved the best visual effect, but there are still some noise points in the kernel of the estimation. Our method also obtained the highest PSNR and SSIM values, as shown in Table 12. When the noise level is close to 10, all methods failed to recover the image well. This is mainly caused by two factors: one is that these blind deblurring methods cannot estimate the blur kernel effectively in the case of severe noise, and the other is that the non-blind method is also sensitive and not robust to severe noise. For future work, we will delve more deeply into the restoration of images with severe noise, develop effective blind and non-blind methods for severe noise, and integrate deblurring and denoising into one recovery framework.

5.3 Non-uniform Images

In this section, we show that our method can be extended naturally to deblurring non-uniformly blurred images. The proposed method was compared with the other advanced non-uniform deblurring methods [15, 35, 47, 49, 51]. Figure 18 shows that our method estimated the exact kernels and obtained the best restoration results. In Fig. 19, our method achieved results that were comparable to, or even better than, the results of the other methods.

5.4 Other Types of Blurred Images

The proposed method is designed mainly for types of motion blur. We quantitatively evaluated other types of blur, such as Gaussian blur, out-of-focus blur, and average blur. For a fair comparison, we uniformly employed sparse deconvolution [24] for final non-blind restoration. Figures 20, 21, and 22 show that our method could accurately estimate these types of blur kernels, and finally recover high-quality images. Table 13 shows that our method achieved the highest PSNR and SSIM values on the three types of deblurring images.

6 Analysis and Discussion

In this section, we further analyze and discuss the effectiveness of the proposed method, the effect of similar patch sizes, computational complexity and execution time of the algorithm, the sensitivity of the main parameters, and the limitations of our algorithm.

6.1 Effectiveness of the Proposed Method

Numerous experimental comparisons, reported in Sect. 5, have proved that our method has excellent deblurring performance. In this section, we select two further challenging examples for deblurring comparison, to verify the effectiveness of the algorithm.

Figure 23 shows that our method estimated the exact blur kernel and achieved a pleasant restoration result, whereas the other two low-rank methods estimated poor kernels, causing the restoration results to contain serious artifacts and residual blur. Figure 24a shows a blurred image with few edge contours, so that it is difficult for general optimization methods to extract the main edges. Our method estimated the most accurate kernel and yielded the best recovery results. The results of other advanced methods all contained some artifacts. Tables 14 and 15 show that our method achieved the highest PSNR values for the images in both Figs. 23 and 24. When only one prior is employed in our model, the blur kernel estimation fails, and residual blur is apparent in the restored image. This proves that the two priors can complement each other in the process of blind deblurring, thereby performing better kernel estimation. The results shown in Fig. 24 also clarify that the WSNM prior has better universality and effectiveness than other image priors [28, 38, 52] combined with the L0 gradient. In the following, we further analyze the role of each prior in our model separately.

Visual comparison of the results deblurred using three low-rank methods. The results were obtained by the same non-blind method [47]

Visual comparison of the results deblurred using different methods. The results were obtained by the same non-blind method [24]. Part of the recovered image is enlarged for comparison

6.1.1 Effectiveness of WSNM Prior

The two methods (with and without the WSNM prior) in our model have been compared experimentally on five datasets [20, 23, 25, 35, 43], and the method with the WSNM prior has achieved better results, proving that the WSNM prior can improve the deblurring effect.

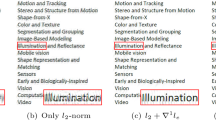

Figure 25 shows a challenging example from [20], comparing the intermediate latent images of our method (with and without WSNM) and two well-known methods [35, 38] involving L0 gradient. As shown in Fig. 25b–e, our method (with WSNM) estimated the most exact kernel and achieved the highest PSNR and MSSIM, as shown in Table 16, whereas our method without WSNM and the method of [35] estimated the kernels with large errors, resulting in poor recovery results. Figure 25h, i shows that, as the number of iterations increases, the use of WSNM effectively promoted the formation of sharper intermediate latent images, thereby improving kernel estimation. Figure 25g, i shows that, although the method of [38] ultimately yielded good recovery results, our method produced sharp intermediate latent images and precise kernels more quickly.

To better illustrate the validity of the WSNM prior, we used the NNM, WNNM, and WSNM priors, both alone and with our method, to compare their performance on the dataset of [25]. To ensure fairness, we used the same patch size and number of patches. Figure 26 shows that the method with only WSNM achieved a higher average PSNR and success rate than the methods with only NNM or only WNNM. Similarly, our method with WSNM achieved better results than our methods with either NNM or WNNM. This is mainly because NNM only penalizes each singular value uniformly. WNNM has penalties that differ according to the importance of different singular values, but WNNM is just a special case of WSNM. As a result, WSNM can penalize singular values more reasonably and flexibly, thereby better eliminating harmful details and retaining significant edges. However, the method with only the WSNM prior does not achieve excellent deblurring results on the dataset of [25]. This is mainly because the WSNM prior cannot extract edges for kernel estimation directly. Therefore, it is not appropriate to perform deblurring tasks using WSNM alone.

Quantitative evaluation on the dataset of [25]. a Comparison of average PSNR. b Comparison of cumulative error ratio

6.1.2 Effectiveness of L0-regularized Gradient Prior

Currently, because of the strong sparse property of the L0 regularization, several deblurring methods [28, 35, 38, 52] with a L0-regularized gradient have been developed. In the comparisons performed in the numerous experiments reported above, our method demonstrated superior deblurring performance, compared with these methods. This is because they are mainly based on simple pixel intensity [28, 35] or extreme channels (dark and bright) [38, 52]. When blurred images have complex structures or lack sufficient extreme channel pixels, these methods cannot perform the task of deblurring well. As shown in Fig. 25f, i, our approach is better and forms a sharp intermediate latent image more rapidly.

In our method, the L0 gradient prior is used mainly for the extraction of strong edges, and the WSNM prior eliminates further harmful details and better preserves the main edges, thereby providing a powerful guarantee for the main edge extraction performed in the next iteration. Numerous experiments have proved that, if only the L0-regularized gradient is used, this method is unable to deblur effectively when the blurred image is complex or lacks sufficient strong edges. In addition, Fig. 26 shows that satisfactory results cannot be obtained without the L0-regularized gradient. Therefore, the combination of the two priors (L0-regularized gradient and WSNM) can perform the deblurring task better than either of them alone.

To further illustrate the effectiveness of the L0 gradient, we adopted different norms for image gradients in our algorithm, for comparison on the dataset of [25]. Figure 27a shows that the combination of the L0 gradient prior and the WSNM prior achieved the best results. This proves that the L0 constraint is more suitable for our model than the other norm constraints.

Quantitative evaluation on the dataset of [25]. a Comparison of cumulative error ratios under different norm constraints. The red curve shows that our method with L0 gradient achieved the best results. b Comparison of average PSNR of different similar patch sizes (Color figure online)

6.2 Effect of Similar Patch Size

Because WSNM involves a search for similar patches, the size of the patch is an important factor. We evaluated different patch sizes on the dataset of [25]. Figure 27b shows that, within a certain range, the patch size had little effect on the average PSNR; this proves that our algorithm is insensitive to patch size, within a reasonable range. With a patch size of \( 9 \times 9 \), the average PSNR obtained was relatively high; therefore, to ensure fairness, we used a \( 9 \times 9 \) patch size in all experiments.

6.3 Computational Complexity and Execution Time

A comparison of the execution times of our method, with and without the low-rank prior, is shown in Table 17. The dominant computational cost of the proposed algorithm is the calculation of the WSNM prior, that is, the calculation of the intermediate auxiliary variable \( u \) in Algorithm 1. This step mainly involves a search for similar patches, and the SVD and GST operation of the group structure \( L_{i} \in \Re^{n \times m} \). We assume that the number of pixels is \( N \), and the average time to search for \( m \) similar patches for each example patch \( l_{i} \in \Re^{\sqrt n \times \sqrt n } \) is \( T_{S} \). The complexity of the SVD and GST operations for each similar group \( L_{i} \) is \( O(nm^{2} ) \). Therefore, the complexity of the low-rank prior is \( O(N(nm^{2} + T_{S} )) \). The other steps of Algorithm 1, such as the calculation of intermediate latent images and kernel estimation, can be accelerated by FFTs and inverse FFTs.

We also measured the execution time of other competing algorithms [7, 26, 38, 40, 51, 52] on blurred images with different sizes, as shown in Table 17. The execution time of our method was greater than that of the methods of [26, 38, 51, 52]. However, compared with the other two low-rank methods [7, 40], the execution time of our algorithm was much less. Because we adopted the L0 gradient prior, our method can quickly extract the main edges, so that only a fewer external iterations, involving WSNM operations, are required to maintain further significant edges.

6.4 Main Parameter Analysis

Our model (7) includes the three regularized weight parameters \( \mu \), \( \lambda \), and \( \gamma \), and the \( p \) in Schatten p-norm minimization. We analyzed the sensitivity of these parameters in our algorithm, on the dataset of [25], by changing each parameter and fixing the others.

We evaluated the sensitivity of the three weight parameters \( \mu \), \( \lambda \), and \( \gamma \) by measuring the accuracy of estimated kernels using the kernel similarity criterion. As shown in Fig. 28a–c, our algorithm was insensitive to the setting of weight parameters within a certain range.

Because of the uncertainty and complexity of blur kernels in the real world, we can choose different \( p \) (\( 0 \le p \le 1 \)) for optimal solution, according to the actual situation. However, we must set \( p \) uniformly, to ensure fairness of comparison. As shown in Fig. 28d, a higher average PSNR value was obtained when \( p = 0.9 \). Therefore, we set \( p = 0.9 \) in all the experiments.

6.5 Limitations

Our method is inefficient when dealing with blurred images with nonlinear relations, because our hypothetical model (1) is based on linear convolution. For example, blurred images caused by out-of-focus or dynamic scene object motion in the real world are usually severely nonlinear and non-uniform. The existing algorithms primarily use depth estimation or image segmentation to recover images. As shown in Figs. 29 and 30, the blurred regions of the two example images are still blurred after deblurring. The next task is to design a robust dedicated algorithm for such highly nonlinear and non-uniform blurred images.

7 Conclusions

In this paper, we present a new blind restoration model based on the low-rank prior and L0-regularized gradient prior. We adopt the flexible non-convex weighted Schatten p-norm minimization as our low-rank prior. In the coarse-to-fine multiscale framework, we propose an efficient optimization algorithm to perform blur kernel estimation, which combines the HQS strategy with the GST algorithm. Experimental analysis shows that the WSNM prior in our model can further promote the accurate estimation of the kernel, while ensuring the sparsity and continuity of the estimated kernel and improving the robustness of our method. In addition, our method does not require additional complex kernel estimation or a salient edge prediction strategy. Moreover, our method can be naturally extended to non-uniform deblurring. Extensive experiments show that our method has achieved excellent visual effects in blurred images of various specific scenarios. The results of qualitative and quantitative evaluations indicate that the proposed method performs favorably against other state-of-the-art methods.

References

E.J. Candes, X. Li, Y. Ma, J. Wright, Robust principal component analysis? J. ACM 58(3), 1–37 (2011)

A. Chakrabarti, A neural approach to blind motion deblurring, in European Conference on Computer Vision (ECCV) (2016), pp. 221–235

L. Chen, F. Fang, T. Wang, G. Zhang, Blind image deblurring with local maximum gradient prior, in IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2019), pp. 1742–1750

S. Cho, S. Lee, Fast motion deblurring. ACM Trans. Graph. 28(5), 1–8 (2009)

S. Cho, J. Wang, S. Lee, Handling outliers in non-blind image deconvolution, in International Conference on Computer Vision (ICCV) (2011), pp. 495–502

K. Dabov, A. Foi, V. Katkovnik, K. Egiazarian, Image denoising by sparse 3-D transform-domain collaborative filtering. IEEE Trans. Image Process. 16(8), 2080–2095 (2007)

J. Dong, J. Pan, Z. Su, Blur kernel estimation via salient edges and low rank prior for blind image deblurring. Sig. Process. Image Commun. 58, 134–145 (2017)

W. Dong, G. Shi, X. Li, Image deblurring with low-rank approximation structured sparse representation, in Proceedings of The 2012 Asia Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC) (2012), pp. 1–5

W. Dong, G. Shi, X. Li, Nonlocal image restoration with bilateral variance estimation: a low-rank approach. IEEE Trans. Image Process. 22(2), 700–711 (2013)

W. Dong, L. Zhang, G. Shi, X. Wu, Image deblurring and super-resolution by adaptive sparse domain selection and adaptive regularization. IEEE Trans. Image Process. 20(7), 1838–1857 (2011)

R. Fergus, B. Singh, A. Hertzmann, S.T. Roweis, W.T. Freeman, Removing camera shake from a single photograph. ACM Trans. Graph. 25(3), 787–794 (2006)

S. Gu, Q. Xie, D. Meng, W. Zuo, X. Feng, L. Zhang, Weighted nuclear norm minimization and its applications to low level vision. Int. J. Comput. Vision 121(2), 183–208 (2017)

S. Gu, L. Zhang, W. Zuo, X. Feng, Weighted nuclear norm minimization with application to image denoising, in IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2014), pp. 2862–2869

Y. Guo, H. Ma, Image blind deblurring using an adaptive patch prior. Tsinghua Sci. Technol. 24(2), 238–248 (2019)

M. Hirsch, C.J. Schuler, S. Harmeling, B. Schölkopf, Fast removal of non-uniform camera shake, in International Conference on Computer Vision (ICCV) (2011), pp. 463–470

H. Ji, C. Liu, Z. Shen, Y. Xu, Robust video denoising using low rank matrix completion, in IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2010), pp. 1791–1798

K.H. Jin, J.C. Ye, Annihilating filter-based low-rank hankel matrix approach for image inpainting. IEEE Trans. Image Process. 24(11), 3498–3511 (2015)

N. Joshi, R. Szeliski, D.J. Kriegman, PSF estimation using sharp edge prediction, in IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2008), pp. 1–8

B.R. Kapuriya, D. Pradhan, R. Sharma, Detection and restoration of multi-directional motion blurred objects. SIViP 13(5), 1001–1010 (2019)

R. Köhler, M. Hirsch, B. Mohler, B. Schölkopf, S. Harmeling, Recording and playback of camera shake: benchmarking blind deconvolution with a real-world database, in European Conference on Computer Vision (ECCV) (2012), pp. 27–40

D. Krishnan, T. Tay, R. Fergus, Blind deconvolution using a normalized sparsity measure, in IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2011), pp. 233–240

O. Kupyn, V. Budzan, M. Mykhailych, D. Mishkin, J. Matas, DeblurGAN: blind motion deblurring using conditional adversarial networks, in IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2017), pp. 8183–8192

W.-S. Lai, J.-B. Huang, Z. Hu, N. Ahuja, M.-H. Yang, A comparative study for single image blind deblurring, in IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2016), pp. 1701–1709

A. Levin, R. Fergus, F. Durand, W.T. Freeman, Image and depth from a conventional camera with a coded aperture. ACM Trans. Graph. 26(3), 70–78 (2007)

A. Levin, Y. Weiss, F. Durand, W.T. Freeman, Understanding and evaluating blind deconvolution algorithms, in IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2009), pp. 1964–1971

A. Levin, Y. Weiss, F. Durand, W.T. Freeman, Efficient marginal likelihood optimization in blind deconvolution, in IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2011), pp. 2657–2664

J. Li, W. Lu, Blind image motion deblurring with L0-regularized priors. J. Vis. Commun. Image Represent. 40, 14–23 (2016)

L. Li, J. Pan, W.-S. Lai, C. Gao, N. Sang, M.-H. Yang, Blind image deblurring via deep discriminative priors. Int. J. Comput. Vision 127(8), 1025–1043 (2019)

M. Li, J. Liu, Z. Xiong, X. Sun, Z. Guo, MARLow: a joint multiplanar autoregressive and low-rank approach for image completion, in European Conference on Computer Vision (ECCV), (2016), pp. 819–834

L. Ma, L. Xu, T. Zeng, Low rank prior and total variation regularization for image deblurring. J. Sci. Comput. 70(3), 1336–1357 (2017)

J. Mairal, F. Bach, J. Ponce, G. Sapiro, A. Zisserman, Non-local sparse models for image restoration, in International Conference on Computer Vision (ICCV) (2009), pp. 2272–2279

T. Michaeli, M. Irani, Blind deblurring using internal patch recurrence, in European Conference on Computer Vision (ECCV) (2014), pp. 783–798

S. Nah, T. Kim, K. Lee, Deep multi-scale convolutional neural network for dynamic scene deblurring, in IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2017), pp. 3883–3891

F. Nie, H. Huang, C. Ding, Low-rank matrix recovery via efficient Schatten p-norm minimization, in AAAI Conference on Artificial Intelligence (AAAI) (2012), pp. 655–661

J. Pan, Z. Hu, Z. Su, M.-H. Yang, Deblurring text images via L0-regularized intensity and gradient prior, in IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2014), pp. 2901–2908

J. Pan, Z. Lin, Z. Su, M.-H. Yang, Robust kernel estimation with outliers handling for image deblurring, in IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2016), pp. 2800-2808

J. Pan, R. Liu, Z. Su, X. Gu, Kernel estimation from salient structure for robust motion deblurring. Sig. Process. Image Commun. 28(9), 1156–1170 (2013)

J. Pan, D. Sun, H. Pfister, M.-H. Yang, Blind image deblurring using dark channel prior, in IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2016), pp. 1628–1636

D. Perrone, R. Diethelm, P. Favaro, Blind deconvolution via lower-bounded logarithmic image priors, in International Conference on Energy Minimization Methods in Computer Vision and Pattern Recognition (EMMCVPR) (2015), pp. 112–125

W. Ren, X. Cao, J. Pan, X. Guo, W. Zuo, M.-H. Yang, Image deblurring via enhanced low rank prior. IEEE Trans. Image Process. 25(7), 3426–3437 (2016)

C.J. Schuler, M. Hirsch, S. Harmeling, B. Scholkopf, Learning to Deblur. IEEE Trans. Pattern Anal. Mach. Intell. 38(7), 1439–1451 (2016)

Q. Shan, J. Jia, A. Agarwala, High-quality motion deblurring from a single image. ACM Trans. Graph. 27(3), 1–10 (2008)

L. Sun, S. Cho, J. Wang, J. Hays, Edge-based blur kernel estimation using patch priors, in IEEE International Conference on Computational Photography (ICCP) (2013), pp. 1–8

Y. Tang, Y. Xue, Y. Chen, L. Zhou, Blind deblurring with sparse representation via external patch priors. Digit. Signal Proc. 78, 322–331 (2018)

X. Tao, H. Gao, X. Shen, J. Wang, J. Jia, Scale-recurrent network for deep image deblurring, in IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2018), pp. 8174–8182

O. Whyte, J. Sivic, A. Zisserman, Deblurring shaken and partially saturated images. Int. J. Comput. Vision 110(2), 185–201 (2014)

O. Whyte, J. Sivic, A. Zisserman, J. Ponce, Non-uniform deblurring for shaken images. Int. J. Comput. Vis. 98(2), 168–186 (2011)

Y. Xie, S. Gu, Y. Liu, W. Zuo, W. Zhang, L. Zhang, Weighted Schatten p-norm minimization for image denoising and background subtraction. IEEE Trans. Image Process. 25(10), 4842–4857 (2016)

L. Xu, J. Jia, Two-phase kernel estimation for robust motion deblurring, in European Conference on Computer Vision (ECCV) (2010), pp. 157–170

L. Xu, C. Lu, Y. Xu, J. Jia, Image smoothing via L0 gradient minimization. ACM Trans. Graph. 30(6), 1–12 (2011)

L. Xu, S. Zheng, J. Jia, Unnatural L0 sparse representation for natural image deblurring, in IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2013), pp. 1107–1114

Y. Yan, W. Ren, Y. Guo, R. Wang, X. Cao, Image deblurring via extreme channels prior, in IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2017), pp. 6978–6986

Z. Zha, X. Zhang, Y. Wu, Q. Wang, X. Liu, L. Tang, X. Yuan, Non-convex weighted lp nuclear norm based ADMM framework for image restoration. Neurocomputing 311, 209–244 (2018)

J. Zhang, D. Zhao, W. Gao, Group-based sparse representation for image restoration. IEEE Trans. Image Process. 23(8), 3336–3351 (2014)

D. Zoran, Y. Weiss, From learning models of natural image patches to whole image restoration, in International Conference on Computer Vision (ICCV) (2011), pp. 479–486

W. Zuo, D. Meng, L. Zhang, X. Feng, D. Zhang, A generalized iterated shrinkage algorithm for non-convex sparse coding, in International Conference on Computer Vision (ICCV) (2013), pp. 217–224

Acknowledgements

We thank the anonymous reviewers for their valuable comments, which have helped to improve the quality of this paper. Additionally, we thank Liwen Bianji, Edanz Editing China (www.liwenbianji.cn/ac), for editing the English text of a draft of this manuscript.

Funding

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Author information

Authors and Affiliations

Contributions

ZX contributed to conceptualization, methodology, and writing—original draft preparation; ZX and HC performed formal analysis and investigation and provided software; ZX, HC, and ZL performed writing—review and editing; ZL helped in supervision and project administration.

Corresponding authors

Ethics declarations

Conflicts of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Xu, Z., Chen, H. & Li, Z. Blind Image Deblurring via the Weighted Schatten p-norm Minimization Prior. Circuits Syst Signal Process 39, 6191–6230 (2020). https://doi.org/10.1007/s00034-020-01457-z

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00034-020-01457-z