Abstract

Recently, topic modeling has been advanced by neural variational inference, which both permits deeper model structures and yields efficient update rules through the reparameterization trick. We refer to this line of work as neural topic models. In this paper, we investigate a problem of neural topic models: they formulate topics as embeddings and measure the word weights within topics by a linear transformation between topic and word embeddings, which results in redundant and inaccurate topic representations. To solve this problem, we propose a novel neural topic model, namely Generative Model with Nonlinear Neural Topics (GMnnt). The insight of GMnnt is to replace the topic embeddings with neural networks of topics, named neural topics, so as to capture nonlinear relationships between words in the embedding space and thereby induce more accurate topic representations. We derive the inference process of GMnnt under the framework of neural variational inference. Extensive empirical studies have been conducted on several widely used document collections, including datasets of both short texts and normal long texts. The experimental results validate that GMnnt outputs more semantically coherent topics than traditional topic models and existing neural topic models.


1 Introduction

Nowadays, the volume of text data grows larger every day, e.g., online news and reports generated by a variety of web services [33]. Automatically mining the latent thematic information from such data with unsupervised learning is a significant and challenging research subject. Topic models [2], e.g., Latent Dirichlet Allocation (LDA) [6] and Hierarchical Dirichlet Processes (HDP) [48], have become one of the most successful unsupervised techniques for inducing latent topics from text documents. In the past decades, topic models, surveyed in [7], have been applied to a number of fields, e.g., sociology, marketing, and political science, to name just a few.

Most traditional topic models, built on the spirit of LDA [6], are probabilistic generative models of documents, which suppose that each document is represented by a topic proportion and each topic is a multinomial distribution over words. With conjugate designs, e.g., Dirichlet-Multinomial distributions, the posterior distributions associated with the topics can be efficiently inferred by either variational inference [3, 6, 24] or sampling methods, e.g., collapsed Gibbs sampling [18]. Generally, the expressiveness of topic models grows with more complicated model structures; however, this also results in intractable inference problems. Recently, topic modeling has been advanced by neural variational inference [26, 35, 39, 45], which approximates the posterior distribution of a generative model with a variational distribution parameterized by a neural network [34]. This both permits deeper model structures and yields efficient update rules through the reparameterization trick [26, 49], opening a new trend of topic modeling, referred to as neural topic models, i.e., models that marry topic modeling with deep neural networks.

The literature has introduced dozens of neural topic models [8, 10, 12, 13, 19, 22, 33, 34, 35, 41, 43, 47, 52, 53, 54]. From the perspective of variational Bayes, the neural network in a neural topic model serves as a variational distribution that approximates the target distribution, usually the posterior of the latent variables. Alternatively, these models can be read as Variational Auto-Encoders (VAE) [26]. As an example, the Neural Variational Document Model (NVDM) [35] consists of two halves, i.e., an encoding network for latent topics and a generative decoding model that reconstructs documents from topics. The subsequent study [34] normalizes the latent topics of NVDM to obtain topic distributions, and proposes three versions of neural topic models with different neural structures. However, the aforementioned models share a weakness: they formulate topics as embeddings, i.e., distributed representations in the word embedding space, and measure the word weights within topics by a linear transformation between topic and word embeddings. This results in redundant and inaccurate topic representations, as discussed in the following part.

1.1 Problem, motivation and contribution

To discuss the prior neural topic models in more depth, we briefly introduce a standard generative formulation of documents [13, 34]. Specifically, the generative process of a document \(\{{w_{dn}}\}_{n=1}^{N_d}\) can be described as follows:

where 𝜃d denotes the topic proportion drawn from G(μ0, σ0), i.e., a neural network conditioned on an isotropic Gaussian \(\mathcal {N}(\mu _{0},\sigma _{0})\), e.g., logistic-normal distribution [13], Gaussian softmax distribution, and Gaussian stick breaking distribution [34]; zdn the topic assignment drawn from 𝜃d; and ϕt the topic distribution over words, constructed by the softmax function of the product of word embeddings ρ and topic embedding βt:

For simplicity, we keep the notation to a minimum here; it is described in detail in a later section (also see Table 1).
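Written out under the definitions above, (1) and (2) take the following form. This is a sketch reconstructed from the surrounding text, with \(\rho \in \mathbb{R}^{L \times V}\) and \(\beta_t \in \mathbb{R}^{L}\); the exact typesetting is an assumption.

```latex
% (1) generative process of a document, reconstructed from the definitions above
\theta_d \sim \mathbf{G}(\mu_0, \sigma_0), \qquad
z_{dn} \sim \mathbf{Multinomial}(\theta_d), \qquad
w_{dn} \sim \mathbf{Multinomial}(\phi_{z_{dn}})

% (2) topic-word distribution: softmax over inner products of word and topic embeddings
\phi_t = \mathrm{softmax}\left(\rho^{\top} \beta_t\right), \qquad
\phi_{tv} = \frac{\exp\left(\rho_v^{\top} \beta_t\right)}
                 {\sum_{v'=1}^{V} \exp\left(\rho_{v'}^{\top} \beta_t\right)}
```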

Referring to (2), we notice that the word weights within topics are computed from the inner product between topic and word embeddings. In this situation, neighboring words tend to share similar weights in the same topic, resulting in potentially redundant top topical words, as also observed in earlier models [1, 11, 28]. To visualize this problem, Figure 1 shows two examples (i.e., topic embeddings and embeddings of top words) learnt by the prior model [13] on NewYorkTimes. We can observe that the top word lists contain many similar words, resulting in redundancy.
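The effect of the inner product can be illustrated with a toy example. The sketch below uses made-up 2-dimensional embeddings (the words, vectors and the name `linear_topic_weights` are hypothetical, not taken from any trained model) and shows that two neighboring word embeddings receive nearly equal weights under a softmax over inner products.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def linear_topic_weights(word_embeddings, topic_embedding):
    """Word weights of one topic: softmax of inner products rho_v . beta_t."""
    scores = [
        sum(r * b for r, b in zip(vec, topic_embedding))
        for vec in word_embeddings
    ]
    return softmax(scores)


# Hypothetical toy embeddings: the first two words are close neighbors.
words = ["restaurant", "restaurants", "senate"]
rho = [[1.00, 0.20], [0.98, 0.22], [-0.50, 0.90]]
beta_t = [2.0, 0.5]  # hypothetical topic embedding

phi_t = linear_topic_weights(rho, beta_t)
for word, weight in zip(words, phi_t):
    print(f"{word:12s} {weight:.3f}")
```

Because the score is linear in the word embedding, any two words that are close in the embedding space end up with close weights, whichever topic embedding is chosen.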

Figure 1: Top topical word redundancy in ETM [13]. Each topic is visualized by its 24 top words in a 2-dimensional space obtained by principal component analysis. The topic embeddings and word embeddings are represented by red and black points, respectively.

In this paper, we propose a generative model that extracts nonlinear neural topics in embedding spaces, namely Generative Model with Nonlinear Neural Topics (GMnnt). In GMnnt we replace the topic embeddings with neural networks of topics, referred to as neural topics, which can capture nonlinear relationships between words in the embedding space. Therefore, even similar words, i.e., neighbors measured by word embeddings, can receive very different probabilities in the same topic distribution, leading to more accurate topic representations.

The main contributions of this paper are described below:

- We investigate the problem of prior neural topic models, where they may output inaccurate topics with redundant top topical words.

- We develop a new GMnnt model that uses neural topics, describing nonlinear relationships between word embeddings.

- Empirical studies show that GMnnt can generate semantically coherent topics in contrast to traditional neural topic models.

The rest of this paper is organized as follows: In Section 2, we introduce the most related works. We present the proposed GMnnt and its inference process in Section 3. The empirical studies are shown in Section 4. Finally, we conclude this work in Section 5.

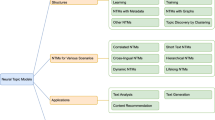

2 Related work

In this section, we briefly introduce the previous studies on traditional topic models and neural topic models.

2.1 Traditional topic model

As reviewed in [2, 7], traditional topic models, such as LDA [6] and its nonparametric version HDP [48], have been well studied as probabilistic generative models of documents. For example, LDA considers the corpus as a mixture of K topics, and each document corresponds to a topic proportion 𝜃d drawn from a Dirichlet prior with parameter α. Each topic ϕk is a multinomial distribution over the vocabulary, drawn from a Dirichlet prior with parameter β. Specifically, the generative process of a document collection can be described as follows:

- For each topic k ∈ [K]
  - Sample a topic \(\phi _{k} \sim \mathbf {Dirichlet}(\beta )\)
- For each document wd, d ∈ [D]
  - Sample a topic proportion \(\theta _{d} \sim \mathbf {Dirichlet}(\alpha )\)
  - For each word token wdn, n ∈ [Nd]
    - Sample a topic assignment \(z_{dn} \sim \mathbf {Multinomial}(\theta _{d})\)
    - Sample a word \(w_{dn} \sim \mathbf {Multinomial}(\phi _{z_{dn}})\)

where zdn represents the topic assignment for each word token.
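The generative process above can be sketched in a few lines of standard-library Python. This is an illustration only: the function names, the toy sizes and the prior values in the usage line are assumptions, and words are represented by integer vocabulary indices.

```python
import random


def sample_dirichlet(concentration, dim, rng):
    """Draw from a symmetric Dirichlet by normalizing Gamma draws."""
    draws = [rng.gammavariate(concentration, 1.0) for _ in range(dim)]
    total = sum(draws)
    return [g / total for g in draws]


def generate_corpus(num_topics, vocab_size, doc_lengths, alpha, beta, seed=0):
    """Sample topics and documents following the LDA generative process."""
    rng = random.Random(seed)
    # For each topic k: phi_k ~ Dirichlet(beta)
    topics = [sample_dirichlet(beta, vocab_size, rng) for _ in range(num_topics)]
    corpus = []
    for n_d in doc_lengths:
        # For each document d: theta_d ~ Dirichlet(alpha)
        theta_d = sample_dirichlet(alpha, num_topics, rng)
        doc = []
        for _ in range(n_d):
            # z_dn ~ Multinomial(theta_d), then w_dn ~ Multinomial(phi_{z_dn})
            z_dn = rng.choices(range(num_topics), weights=theta_d)[0]
            w_dn = rng.choices(range(vocab_size), weights=topics[z_dn])[0]
            doc.append(w_dn)
        corpus.append(doc)
    return topics, corpus


topics, corpus = generate_corpus(
    num_topics=3, vocab_size=20, doc_lengths=[5, 8], alpha=0.1, beta=0.1
)
print(corpus)
```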

In the past decades, researchers have developed many extensions built on the above formulation, and these have been successfully applied to various problems and kinds of text data, e.g., topic correlations [5, 23, 27, 30], dynamic topics varying over time [4, 51], and sparse topics within short texts [9, 16, 31, 44]. Popular inference methods include variational inference, often with mean-field approximations [6, 24], Gibbs sampling [18], and hybrid methods [29, 38]. However, to keep inference efficient, traditional topic models tend to be designed as shallow structures with conjugate priors, which limits their expressiveness.

2.2 Neural topic model

Recently, a new trend of topic modeling, i.e., the neural topic model, has attracted much attention [8, 10, 12, 13, 19, 22, 33, 34, 35, 41, 47, 53, 54]. For ease of understanding, we briefly introduce these models from the viewpoint of VAE, i.e., the encoder-decoder perspective of documents. Encoding: the original document representations, e.g., bag-of-words, are encoded as (unnormalized) topic proportions by variational neural networks (Footnote 1). Decoding: the reconstructed document representations, i.e., probabilities over the vocabulary, are computed by an LDA-like generative process [13, 34], which starts from topic proportions with topic-word distributions specified by topic embeddings. In particular, the variational distributions are often fixed as Gaussians so that the reparameterization trick [26, 39, 45] can be applied efficiently. Typical neural topic models include NVDM [35], Gaussian Softmax Model (GSM) [34], LDA with Products of Experts (ProdLDA) [47], Neural Variational LDA (NVLDA) [47], and Embedded Topic Model (ETM) [13].

Due to the reparameterization property of the Gaussian distribution, many existing neural topic models [13, 34, 35] employ it as the prior distribution for efficient inference. NVDM [35] is an early attempt at modeling topics with a Gaussian prior, in which a two-layer multi-layer perceptron is applied for encoding. NVDM can achieve rather strong perplexity scores but generates incoherent topics in many cases, as reported in [13]. Inspired by the context embedding [37], ETM [13] incorporates word and topic embeddings into neural topic models, assuming that each topic is represented in the word embedding space. ETM learns topic and word embeddings simultaneously during training and has a variant with pre-trained word embeddings. Due to the inner product of the topic and word embeddings in the decoder of ETM, words with high semantic correlations are more likely to gather around the same topic embedding, leading to topical word redundancy. Besides, to capture topic correlations, [33] proposed the Neural Variational Correlated Topic Model (NVCTM), which incorporates a Centralized Transformation Flow (CTF) that enables modeling Gaussian distributions with a covariance matrix.

Another group of attempts approximates the Dirichlet distribution as the prior for neural topic models, as in LDA, because the Dirichlet distribution lacks an intuitive non-central differentiable reparameterization under neural variational inference [8]. ProdLDA [47] explores the Laplace approximation of the Dirichlet prior. A relatively high learning rate and batch normalization prevent ProdLDA from component collapsing. [40] approaches the Dirichlet prior with a rejection sampler on the Gamma distribution. The proposed Rejection Sampling Variational Inference (RSVI) offers an elegant and extensible way of solving the reparameterization challenge and studies approximations of the Gamma and Dirichlet distributions. Based on RSVI, [54] approximates the Gamma distribution with the Weibull distribution, for which the reparameterization trick is available. [8] proposes the Dirichlet Variational Autoencoder (DVAE) and decouples sparsity and smoothness in the Dirichlet distribution.

There also exist studies on variants of VAE-based topic models. The Adversarial-neural Topic Model (ATM) [52] adapts Generative Adversarial Nets (GANs) with a Dirichlet prior to topic modeling. [41] extends the Wasserstein Auto-encoder [50] and proposes W-LDA, which matches aggregated posteriors to priors using the Maximum Mean Discrepancy (MMD).

3 Model

In this section, we introduce the proposed generative model that extracts nonlinear neural topics in embedding spaces, namely Generative Model with Nonlinear Neural Topics (GMnnt).

3.1 Model description

Consider a corpus of D documents, denoted by \(\{w_{d}\}_{d=1}^{D}\), with a fixed vocabulary of V words. Each document of Nd word tokens is represented by \(\{w_{dn}\}_{n=1}^{N_{d}}\). With any existing embedding technique, e.g., Word2Vec [36] and GloVe [42], pre-trained L-dimensional word embeddings covering almost all of the current vocabulary are available, denoted by \(\rho \in \mathbb {R}^{L \times V}\), where each column ρv is the embedding of word v.

In traditional neural topic models [13, 34], the documents are generated from distributions associated with topics. The topics are represented by L-dimensional topic embeddings, and referring to (2), the word weights within topics are measured by a linear transformation between topic and word embeddings. However, the resulting topic-word distributions may contain many redundant top topical words, i.e., they yield inaccurate topic representations, as explained before (see Figure 1). To solve this problem, in GMnnt we replace the topic embeddings with neural networks of topics (i.e., neural topics), which can capture nonlinear relationships between words in the embedding space [32]. Therefore, even similar words, i.e., neighbors measured by word embeddings, can receive very different probabilities in the same topic distribution, leading to more accurate topic representations. Specifically, we let NT(ρ|φt) denote the neural topic t with word embeddings ρ, formulated as follows:

where f(ρv|φt) is a neural network parameterized by φt with the input of one word embedding ρv and the output of the corresponding untransformed word weight.
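Under this description, (3) can be written as a softmax over the network outputs, one per word embedding. The softmax normalization is an assumption here, inferred from the phrase "untransformed word weight" and from the parallel with (2):

```latex
% (3) neural topic t, reconstructed: softmax over per-word network outputs
\phi_t \triangleq \mathbf{NT}(\rho \mid \varphi_t)
  = \mathrm{softmax}\big( f(\rho_1 \mid \varphi_t), \ldots, f(\rho_V \mid \varphi_t) \big),
\qquad
\phi_{tv} = \frac{\exp f(\rho_v \mid \varphi_t)}
                 {\sum_{v'=1}^{V} \exp f(\rho_{v'} \mid \varphi_t)}
```

Setting \(f(\rho_v \mid \varphi_t) = \rho_v^{\top} \beta_t\) recovers the linear form of (2), so the neural topic can be read as a nonlinear generalization of the topic embedding.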

Overall, the model structure of GMnnt follows the framework of traditional neural topic models described in (1). For clarity, we now formally introduce the generative process of GMnnt. Suppose that there are T neural topics ϕ in total (i.e., (3)), each representing a multinomial distribution over words. For each document wd, GMnnt first draws a topic proportion 𝜃d from a neural-network prior G(μ0, σ0), named the topic proportion generator. Then, it draws a topic assignment zdn from 𝜃d, and draws a word wdn from \(\phi _{z_{dn}}\). This process is repeated Nd times for the Nd word tokens. In summary, the generative process of GMnnt is described below:

- For each document wd, d ∈ [D]
  - Sample a topic proportion \(\theta _{d} \sim \mathbf {G}(\mu _{0},\sigma _{0})\)
  - For each word token wdn, n ∈ [Nd]
    - Sample a topic assignment \(z_{dn} \sim \mathbf {Multinomial}(\theta _{d})\)
    - Sample a word \(w_{dn} \sim \mathbf {Multinomial}(\phi _{z_{dn}}) \triangleq \mathbf {NT}(\rho |\varphi _{z_{dn}})\)

In this work, we specify the topic proportion generator G(μ0, σ0) as the Gaussian softmax distribution [34]. For each document wd, it first generates an untransformed topic proportion δd from an isotropic Gaussian \(\mathcal {N}(\mu _{0},\sigma _{0})\) and then applies the softmax function to compute the final 𝜃d:

where \(W \in \mathbb {R}^{T \times T}\) is the linear transformation matrix. We note that GMnnt can also accommodate more complex topic proportion generators, leading to more practical variants of GMnnt. The important notations of this paper are shown in Table 1 for convenience.
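The generative process of GMnnt with a Gaussian softmax generator can be sketched in NumPy as follows. Several choices are assumptions made for illustration and are not specified in the text: the topic proportion is taken to be \(\theta_d = \mathrm{softmax}(W^{\top}\delta_d)\), each neural topic is a small two-layer tanh network, σ0 is treated as a standard deviation, and all function names and toy sizes are hypothetical.

```python
import numpy as np


def softmax(x):
    """Numerically stable softmax along the last axis."""
    x = x - np.max(x, axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)


def init_neural_topic(embed_dim, hidden, rng):
    """Random parameters varphi_t of one hypothetical two-layer network."""
    return {
        "W1": rng.normal(0.0, 0.5, size=(embed_dim, hidden)),
        "b1": np.zeros(hidden),
        "w2": rng.normal(0.0, 0.5, size=hidden),
    }


def neural_topic(rho, params):
    """NT(rho | varphi_t): softmax over per-word network outputs; rho is L x V."""
    hidden = np.tanh(rho.T @ params["W1"] + params["b1"])  # V x hidden
    scores = hidden @ params["w2"]                          # one score per word
    return softmax(scores)


def generate_document(rho, topic_params, W, mu0, sigma0, n_words, rng):
    """Sample theta_d, topic assignments and word indices for one document."""
    num_topics = len(topic_params)
    phi = np.stack([neural_topic(rho, p) for p in topic_params])  # T x V
    delta_d = rng.normal(mu0, sigma0, size=num_topics)  # untransformed proportion
    theta_d = softmax(W.T @ delta_d)                    # assumed Gaussian softmax
    z = rng.choice(num_topics, size=n_words, p=theta_d)
    words = np.array([rng.choice(phi.shape[1], p=phi[t]) for t in z])
    return theta_d, z, words


rng = np.random.default_rng(0)
L, V, T = 8, 30, 4  # toy sizes, chosen only for the example
rho = rng.normal(size=(L, V))
topic_params = [init_neural_topic(L, 16, rng) for _ in range(T)]
W = rng.normal(size=(T, T))
theta_d, z, words = generate_document(
    rho, topic_params, W, mu0=0.0, sigma0=1.0, n_words=12, rng=rng
)
print(theta_d.round(3), words)
```

Each topic owns its own network, so two words with nearly identical embeddings can still be scored differently by one topic if its network is sharp enough around them.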

3.2 Inference

From the perspective of topic modeling, the model parameters of GMnnt include the neural topic parameter φ and the Gaussian hyper-parameters {μ0, σ0}, while the latent variables include the untransformed topic proportion δ and topic assignment z. In our situation, {μ0, σ0} are fixed as known priors and z can be analytically integrated out. Therefore, the inference problem refers to finding the optimum of {φ, δ} by fitting GMnnt given a collection of documents \(\mathcal {D}\) and word embeddings ρ.

The inference problem of GMnnt is intractable to compute exactly; we therefore resort to approximate inference by neural variational inference [34, 35] with the reparameterization trick [26]. In the spirit of amortized inference [17], we posit the following variational distribution over the untransformed topic proportion δ:

where g(wd|λ) is the variational neural network parameterized by λ (i.e., the variational parameter). That is, the network ingests wd and outputs {μd, σd}. Following [13], we form the input wd by normalizing its bag-of-words representation by the number of word tokens Nd. Applying this variational distribution, we can formulate the following variational objective, i.e., the Evidence Lower BOund (ELBO), with respect to {φ, λ}:

The likelihood of each document in (6) is given by:

where ϕ and 𝜃 are obtained by (3) and (4), respectively.
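For reference, the variational distribution, the ELBO and the document likelihood described above can be written as follows. These are reconstructions from the surrounding definitions (a Gaussian variational family, a Gaussian prior on δd, and z integrated out), so the exact notation is an assumption:

```latex
% (5) amortized Gaussian variational distribution
q(\delta_d \mid w_d, \lambda) = \mathcal{N}(\delta_d \mid \mu_d, \sigma_d),
\qquad \{\mu_d, \sigma_d\} = g(w_d \mid \lambda)

% (6) evidence lower bound with respect to \{\varphi, \lambda\}
\mathcal{L}(\varphi, \lambda) = \sum_{d=1}^{D} \Big(
  \mathbb{E}_{q(\delta_d \mid w_d, \lambda)}\big[ \log p(w_d \mid \delta_d, \varphi, \rho) \big]
  - \mathrm{KL}\big( q(\delta_d \mid w_d, \lambda) \,\|\, \mathcal{N}(\delta_d \mid \mu_0, \sigma_0) \big)
\Big)

% (7) document likelihood after integrating out the topic assignments z
p(w_d \mid \delta_d, \varphi, \rho) = \prod_{n=1}^{N_d} \sum_{t=1}^{T} \theta_{dt} \, \phi_{t w_{dn}}
```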

Since variational distributions are Gaussians, we can replace the variational objective of (6) with its Monte Carlo approximation by using the reparameterization trick [26]:

where S is the number of Monte Carlo samples (Footnote 2) and \(h(\delta _{d}^{(s)})= \delta _{d}^{(s)} \sigma _{d} + \mu _{d}\) is the mapping function.
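A minimal NumPy sketch of this Monte Carlo estimate for a single document is given below. It assumes that σ denotes a standard deviation, that \(\theta_d = \mathrm{softmax}(W^{\top}\delta_d)\), and that the KL term between the two diagonal Gaussians is computed in closed form; the function names and toy inputs are illustrative and not taken from the authors' implementation.

```python
import numpy as np


def softmax(x):
    """Numerically stable softmax along the last axis."""
    x = x - np.max(x, axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)


def log_likelihood(word_ids, theta_d, phi):
    """log p(w_d | delta_d) = sum_n log sum_t theta_dt * phi[t, w_dn]."""
    word_probs = theta_d @ phi[:, word_ids]  # one probability per token
    return float(np.sum(np.log(word_probs)))


def gaussian_kl(mu_d, sigma_d, mu0, sigma0):
    """KL( N(mu_d, sigma_d^2) || N(mu0, sigma0^2) ) for diagonal Gaussians."""
    return float(np.sum(
        np.log(sigma0 / sigma_d)
        + (sigma_d ** 2 + (mu_d - mu0) ** 2) / (2.0 * sigma0 ** 2)
        - 0.5
    ))


def elbo_estimate(word_ids, mu_d, sigma_d, W, phi, mu0, sigma0, num_samples, rng):
    """Monte Carlo ELBO for one document using the reparameterization trick."""
    total = 0.0
    for _ in range(num_samples):
        eps = rng.standard_normal(mu_d.shape)  # noise sample from N(0, I)
        delta = eps * sigma_d + mu_d           # mapping h(.) from the text
        theta = softmax(W.T @ delta)
        total += log_likelihood(word_ids, theta, phi)
    return total / num_samples - gaussian_kl(mu_d, sigma_d, mu0, sigma0)


rng = np.random.default_rng(0)
T, V = 3, 6                              # toy sizes for the example
phi = softmax(rng.normal(size=(T, V)))   # stand-in topic-word distributions
W = np.eye(T)
word_ids = np.array([0, 2, 2, 5])        # a toy document as vocabulary indices
value = elbo_estimate(
    word_ids, np.zeros(T), np.ones(T), W, phi, 0.0, 1.0, num_samples=5, rng=rng
)
print(value)
```

Because the randomness enters only through `eps`, the estimate is a deterministic function of μd and σd given the noise, which is what allows gradients to flow back to the variational network.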

Given this approximate variational objective, we can form its gradients with respect to {φ, λ}, where the subgradients of the neural networks (i.e., variational neural networks and neural topics) can be computed by backpropagation. We then update {φ, λ} with their gradients over a number of updating cycles until {φ, λ} are almost unchanged. To deal efficiently with corpora of massive documents, the data subsampling methodology from [20, 21] can also be applied. Finally, for fast and stable updates, we can adopt any adaptive learning rate method, e.g., Adagrad [15], Adam [25], and Nadam [14]. The full inference procedure of GMnnt is outlined in Algorithm 1. In particular, we note that the approximate variational objective of (8) can be re-formed by drawing new Monte Carlo samples during the updating cycles. We omit this detail in Algorithm 1 for conciseness.

4 Experiment

Corpora

The experiments have been conducted on 5 publicly available datasets, whose statistics are summarized in Table 2. Specifically, they include Trec (Footnote 3), StackOverflow (Footnote 4), Abstract (Footnote 5), Tmc2007 (Footnote 6), and NewYorkTimes (Footnote 7). For each dataset, we removed the standard stop words and infrequent words that occur in fewer than 5 documents. We randomly selected 85% of the instances as the training set, 10% as the test set and 5% as the validation set.

Besides, we employed the pre-trained GloVe (Footnote 8) word embeddings [42], i.e., the 300-dimensional version trained on Wikipedia2014 and Gigaword5. We randomly generated the embeddings of words that are not covered by the GloVe embeddings.

Comparing Topic Models

We compare the performance of GMnnt with five existing topic models, including four neural topic models, i.e., NVDM, ProdLDA, GSM and ETM, and also the standard online LDA model. Details of all compared models are described below:

- LDA [6, 20, 21] is the standard LDA model trained by stochastic variational inference. Here, the Dirichlet priors of document-topic proportions and topics are set to 0.1 and 0.01, respectively. The code is available on the net (Footnote 9).

- NVDM [35] is an unnormalized neural topic model with Gaussian prior. Following [14], the encoder used in NVDM defines a fully connected network with 2 layers and 500 hidden neurons. It involves an inner iteration for optimizing the encoder. The code is provided by its authors (Footnote 10).

- ProdLDA [47] is a neural topic model with Dirichlet prior, solved by Laplace approximation. Following [47], we define its encoder as a fully connected network with 3 layers and 100 hidden neurons, where the tricks of batch normalization and 0.2 dropout are also used. The code is provided by its authors (Footnote 11).

- GSM [34] is a neural topic model using Gaussian Softmax which constructs a finite topic distribution. We inherit the same encoder from NVDM and the number of hidden neurons and word vectors dimension are both set as 500. We adopt the document model version. The code is available on the net (Footnote 12).

- ETM [13] is a neural topic model with Gaussian prior. Following [13], the encoder used in ETM defines a fully connected network with 3 layers and 800 hidden neurons. The code is provided by its authors (Footnote 13).

- GMnnt is our proposed neural topic model with nonlinear neural topics. We use the same encoder as ETM. The neural topics are designed as fully-connected networks with λd layers and λw hidden neurons, and the batch normalization is applied. The parameters of neural topics are tuned over the following ranges: λd ∈{2,3,4,5,6,7,8} and λw ∈{60,80,100,120}. We will analyze these parameters later.

For all compared models, the mini-batch size is set to 1000. The learning rate and number of epochs for GMnnt are set to 0.0001 and 2000, respectively. Besides, the Adam method is used for adaptively tuning the learning rate with the following settings: β1 = 0.9, β2 = 0.999. Following [13], we study two variants of both ETM and GMnnt: one applies the pre-trained word embeddings, and the other leaves the word embeddings as trainable parameters. The versions with pre-trained word embeddings are called p-ETM and p-GMnnt, respectively.

4.1 Qualitative study

The first concern is whether GMnnt can alleviate the problem of redundant top topical words observed in previous neural topic models. To answer it, Table 3 lists 10 matching topics learned by p-ETM and p-GMnnt on the NewYorkTimes dataset. We find that p-ETM severely suffers from topical word redundancy: 17 pairs of words with the same stem exist in the 10 topics, e.g., “restaurant” and “restaurants”. For p-GMnnt, there exist only 4 such pairs in 4 topics, which indicates that our method significantly relieves topical word redundancy.

More specifically, ETM and p-ETM apply an inner product decoder, which gathers words that are close in the word embedding space and generates topics closest to these gathered words. Such close words are mostly semantically related and result in topical word redundancy. GMnnt and p-GMnnt learn the word weights of each topic independently and in a nonlinear manner, which weakens the gathering of words in the embedding space. Furthermore, different topics in ETM and p-ETM live in the same embedding space, and therefore words may be duplicated across topics when the word embeddings are not discriminative enough, especially for the version without pre-trained embeddings. The topic uniqueness results in Section 4.2.3 agree with this analysis.

We further compare topic quality over all methods and present topics about “aircraft fire” from Tmc2007 in Table 4. We find that NVDM and ProdLDA generate many identical topics and thus severely suffer from topic redundancy. Compared with the baselines, GMnnt and p-GMnnt each generate only one such topic, with more meaningful words, which corresponds with their high topic uniqueness in Section 4.2.3. There seems to exist a trade-off between the number of topics and the topic uniqueness scores for NVDM and ETM. In fact, the topical words generated by NVDM on Tmc2007 are quite infrequent and therefore rarely repeated, leading to higher topic uniqueness scores, which will be analyzed below. As for ETM, it generates plenty of identical words among different topics, e.g., “aircrafts”, which severely harms topic uniqueness.

We also notice that NVDM and ProdLDA generate “low-quality” topics on larger datasets. For smaller datasets, e.g., Trec and StackOverflow, the two methods generate “high-quality” topics with meaningful words. However, on the larger Abstract and Tmc2007 datasets, they begin to generate some infrequent words, which harm topic quality. On the large NewYorkTimes dataset, they generate rather poor topics with numerous infrequent words, e.g., names of persons and places. To validate the conjecture that the topic quality of NVDM and ProdLDA declines as the dataset grows, we examine NVDM and ProdLDA on NewYorkTimes truncated to different sizes, and the results show the same behavior.

4.2 Quantitative study

We quantitatively evaluate the proposed GMnnt model on three tasks: held-out likelihood, topic coherence and topic uniqueness.

4.2.1 Evaluation on held-out likelihood

Perplexity

The perplexity is a widely used metric for measuring the held-out likelihood. Considering a test dataset \(\widehat W = \{\widehat w_{d}\}_{d=1}^{\widehat D}\), its perplexity can be computed as follows:

where \(\log p(\widehat w_{d})\) represents the log probability of document \(\widehat w_{d}\). Following [35], we use the variational lower bound to approximate the perplexity.
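As a sketch, the per-word form of perplexity commonly used with neural document models, \(\exp\big(-\frac{1}{\widehat D}\sum_{d} \frac{1}{\widehat N_{d}} \log p(\widehat w_{d})\big)\), can be computed as below. That this is the exact form used here is an assumption, and the function name and inputs are hypothetical; in practice the log probabilities would be replaced by their variational lower bounds.

```python
import math


def perplexity(doc_log_probs, doc_lengths):
    """exp of the negative average per-word log probability over test documents."""
    if not doc_log_probs or len(doc_log_probs) != len(doc_lengths):
        raise ValueError("need one log probability and one length per document")
    per_word = [lp / n for lp, n in zip(doc_log_probs, doc_lengths)]
    return math.exp(-sum(per_word) / len(per_word))


# Sanity check: a model that is uniform over 10 words has perplexity 10.
uniform_docs = [3 * math.log(0.1), 5 * math.log(0.1)]
print(perplexity(uniform_docs, [3, 5]))
```

Since the ELBO is a lower bound on the log probability, plugging it in yields an upper bound on the true perplexity.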

Results

We show the results in Table 5. Overall, GMnnt with trainable word embeddings achieves the best perplexities among all models, and p-GMnnt with pre-trained word embeddings ranks second. According to the average ranks, the performance order of perplexity is given by GMnnt > p-GMnnt > NVDM > ETM ≈ p-ETM > LDA > ProdLDA > GSM. More observations and discussions are detailed below.

GMnnt achieves the best perplexities, and both versions perform well in all settings. GSM has the worst results on the short text datasets, i.e., Trec and StackOverflow, and LDA also performs badly on these two datasets, which coincides with the fact that LDA fails to handle short texts due to the lack of word co-occurrence patterns. On the long text datasets, i.e., Abstract, Tmc2007 and NewYorkTimes, LDA lies in the middle and performs better than ProdLDA and GSM, which obtain the worst perplexities on long text datasets. NVDM performs well on long text datasets, and its performance gaps to GMnnt are quite small. NVDM achieves the best perplexity on NewYorkTimes, corresponding with the best topic coherence and topic uniqueness, which will be analyzed later. ProdLDA has the worst perplexities in most settings, especially on long text datasets. The extremely high perplexity is caused by the high KL-divergence incurred when approximating the Dirichlet prior in the encoder; these observations are consistent with [8]. We make the same observations on the objective values in Section 4.3.

4.2.2 Evaluation on topic coherence

Topic coherence

Broadly speaking, Topic Coherence (TC) measures the quality of topics by counting the co-occurrences of their top words. In the experiment, we compute the topic coherence score using the publicly available project Palmetto (Footnote 14) developed by the previous study [46]. We employ the \(C_V\) version suggested by [46].

Results

Table 6 shows the results of topic coherence. p-GMnnt and GMnnt achieve significant improvements over the other methods, especially on Trec and Abstract. The performance improvements are up to 0.025 and 0.059 on Trec and Abstract when K = 25 and K = 50, respectively. GMnnt achieves much higher topic coherence scores than ETM, especially on Trec, Abstract and NewYorkTimes, which indicates that our method generates more coherent topics with fewer redundant words; such words, e.g., semantically related words, may seldom co-occur and therefore harm topic coherence. This observation confirms the effectiveness of our motivation. Besides, both versions of GMnnt give stable results across different topic numbers T. LDA suffers from the sparsity problem on short text datasets and therefore performs badly on Trec and StackOverflow, while it beats most neural topic models on Abstract and Tmc2007.

Meanwhile, we find that high topic coherence scores do not always correspond to higher topic quality. On NewYorkTimes, NVDM achieves the best topic coherence, up to 0.557. Recalling the topic quality decline discussed in Section 4.1, NVDM and ProdLDA tend to generate more infrequent words for each topic on larger datasets, e.g., names of persons or places. These infrequent words may strongly co-occur in a large external corpus, yet they lead to less meaningful topics.

4.2.3 Evaluation on topic uniqueness

Topic Uniqueness

Topic Uniqueness (TU) measures the redundancy of top-M words of topics [41]. Given learned top-M words of all topics \(\{{\Omega }_{t}\}_{t=1}^{T}\), TU is computed as follows:

where cnt(wi) denotes the number of times that the word wi appears in top-M word lists of all topics.
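A short sketch of this metric, assuming the form \(\mathrm{TU} = \frac{1}{T}\sum_{t=1}^{T} \frac{1}{M}\sum_{w_i \in \Omega_t} \frac{1}{\mathrm{cnt}(w_i)}\) from [41] and assuming that the words within each top-M list are distinct; the function name and the toy topics are hypothetical.

```python
from collections import Counter


def topic_uniqueness(top_words_per_topic):
    """Average over topics of the mean inverse count of each top word."""
    counts = Counter(w for topic in top_words_per_topic for w in topic)
    per_topic = [
        sum(1.0 / counts[w] for w in topic) / len(topic)
        for topic in top_words_per_topic
    ]
    return sum(per_topic) / len(per_topic)


# Two toy topics sharing one word out of two: TU = (0.75 + 0.75) / 2 = 0.75.
print(topic_uniqueness([["aircraft", "fire"], ["aircraft", "engine"]]))
```

TU equals 1 when no word is shared between topics and approaches 1/T when all topics list the same words.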

Results

Table 7 shows the results of topic uniqueness. Overall, p-GMnnt achieves the best topic uniqueness in most settings and ranks first. p-GMnnt beats p-ETM in 7 of 11 settings, and GMnnt with trainable word embeddings beats ETM in all settings, which indicates the effectiveness of the topic-specific generation decoder.

p-ETM performs well on short text datasets, while NVDM obtains higher topic uniqueness scores on long text datasets. GSM applies a topic uniqueness regularization and achieves 0.940 on NewYorkTimes, but the regularization plays a weak part and has limited effect in reducing topic redundancy. Furthermore, we find that GSM tends to collapse to identical topics as training proceeds; this observation is similar to the component collapsing mentioned in [8], from which most of the VAE family suffers. The reason for component collapsing is that the KL-divergence in the objective tends to converge much faster than the reconstruction loss, so the model falls into a local optimum early in training. We make the same observation: the topic uniqueness scores tend to rise first and then descend. For GMnnt and p-GMnnt, we apply a relatively high learning rate of 0.0001 and employ Batch Normalization before the reparameterization trick to avoid component collapsing, following [8]. These settings yield gradually rising topic uniqueness values during the entire training process.

4.3 Convergence analysis

In this section, we present the convergence analysis of neural topic models and plot the objective, i.e., the negative evidence lower bound (NELBO), on Trec, StackOverflow, Abstract and Tmc2007 in Figure 2. Overall, GMnnt and p-GMnnt converge fast, within 40 epochs. Owing to a smaller learning rate than that of the baselines, GMnnt and p-GMnnt converge slightly more slowly than the other methods. ProdLDA converges with difficulty due to the approximation of the Dirichlet prior. In summary, GMnnt is practical in real applications due to its fast convergence.

4.4 Sensitivity analysis of parameters

In this section, we examine the impact of the number of layers λd and the number of hidden neurons λw in neural topics on perplexity, topic coherence and topic uniqueness, using p-GMnnt. Because of memory limitations, we run the sensitivity experiments on Trec, StackOverflow, Abstract and Tmc2007 only.

For λd, we vary it over {2,3,4,5,6,7,8} and present the results in Figure 3. In general, a more complicated network leads to worse perplexity under the same settings. On all four datasets, the perplexity of p-GMnnt changes little and shows only a slight rising trend. As for topic coherence, p-GMnnt is insensitive on Trec and StackOverflow and shows slight decreases on Abstract and Tmc2007 as the network gets deeper. As for topic uniqueness, a deeper network leads to better scores on most datasets, which coincides with the fact that topic coherence and topic uniqueness tend to move in opposite directions. In short, GMnnt is insensitive to the number of layers in the neural topic, which makes the model more robust in real scenarios.

For λw, we vary it over {60,80,100,120} and plot the results in Figure 4. As with λd, perplexity shows only small fluctuations across λw. Topic coherence scores on Trec and StackOverflow are stable and insensitive to λw, and a wider network leads to higher topic uniqueness scores. In brief, GMnnt is robust to both λd and λw, which makes it practical in real-world applications.
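To clarify what λd and λw control, the following is a minimal sketch of a single neural topic as a small feed-forward network that scores each word embedding and normalizes the scores over the vocabulary. Several details are assumptions made only for illustration: λd is counted as the number of affine layers including the scalar output layer, hidden layers use tanh without biases, the scores are normalized with a softmax, and the names `init_neural_topic`, `topic_score` and `topic_word_distribution` are hypothetical.

```python
import math
import random

def init_neural_topic(embed_dim, n_layers, n_hidden, rng):
    """Random weights for one topic: n_layers affine maps, the last one outputs a scalar."""
    sizes = [embed_dim] + [n_hidden] * (n_layers - 1) + [1]
    return [
        [[rng.gauss(0.0, 1.0 / math.sqrt(fan_in)) for _ in range(fan_in)]
         for _ in range(fan_out)]
        for fan_in, fan_out in zip(sizes[:-1], sizes[1:])
    ]

def topic_score(layers, word_vec):
    """Nonlinear score of one word under one topic (tanh on hidden layers only)."""
    h = word_vec
    for i, weights in enumerate(layers):
        h = [sum(w * x for w, x in zip(row, h)) for row in weights]
        if i < len(layers) - 1:
            h = [math.tanh(v) for v in h]
    return h[0]

def topic_word_distribution(layers, word_vecs):
    """Softmax of the per-word scores over the whole vocabulary."""
    scores = [topic_score(layers, v) for v in word_vecs]
    top = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

rng = random.Random(0)
# Toy vocabulary of 5 words with 8-dimensional embeddings (assumed sizes).
vocab_vecs = [[rng.gauss(0.0, 1.0) for _ in range(8)] for _ in range(5)]
topic = init_neural_topic(embed_dim=8, n_layers=3, n_hidden=60, rng=rng)  # λd = 3, λw = 60
beta_k = topic_word_distribution(topic, vocab_vecs)
```

Because the score is a nonlinear function of the embedding rather than an inner product with a single topic vector, two words with nearby embeddings need not receive similar weights. Increasing `n_layers` or `n_hidden` enlarges each topic's network, which is the capacity varied in Figures 3 and 4.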

5 Conclusion

In this paper, we aim to alleviate the problem that existing neural topic models often output redundant and inaccurate topic representations. To this end, we propose a novel model, GMnnt, which replaces the topic embeddings with neural topics, enabling it to capture nonlinear relationships between words in the embedding space. With this design, even similar words can receive different probabilities in the same topic distribution, leading to more accurate topic representations. Following neural variational inference, we can train GMnnt efficiently with the reparameterization trick. We conduct a number of experiments to empirically compare GMnnt against several existing neural topic models and the standard LDA model. Experimental results show that GMnnt is on a par with the existing baseline models in terms of held-out likelihood, topic coherence and topic uniqueness. Specifically, the topics learned by GMnnt are more semantically coherent, both qualitatively and quantitatively.

References

Batmanghelich, K., Saeedi, A., Narasimhan, K., Gershman, S.: Nonparametric spherical topic modeling with word embeddings. In: Annual Meeting of the Association for Computational Linguistics, pp. 537–542 (2016)

Blei, D.M.: Probabilistic topic models. Communications of The ACM 55(4), 77–84 (2012)

Blei, D.M., Kucukelbir, A., McAuliffe, J.: Variational inference: a review for statisticians. J. Am. Stat. Assoc. 112(518), 859–877 (2017)

Blei, D.M., Lafferty, J.: Dynamic topic models. In: International Conference on Machine Learning, pp. 113–120 (2006)

Blei, D.M., Lafferty, J.: A correlated topic model of science. The Annals of Applied Statistics 1(1), 17–35 (2007)

Blei, D.M., Ng, A.Y., Jordan, M.I.: Latent Dirichlet allocation. J. Mach. Learn. Res. 3(Jan), 993–1022 (2003)

Boyd-Graber, J., Hu, Y., Mimno, D.: Applications of topic models. Foundations and Trends in Information Retrieval 11(2-3), 143–296 (2017)

Burkhardt, S., Kramer, S.: Decoupling sparsity and smoothness in the Dirichlet variational autoencoder topic model. J. Mach. Learn. Res. 20(131), 1–27 (2019)

Cheng, X., Yan, X., Lan, Y., Guo, J.: BTM: topic modeling over short texts. IEEE Trans. Knowl. Data Eng. 26(12), 2928–2941 (2014)

Cong, Y., Chen, B., Liu, H., Zhou, M.: Deep latent Dirichlet allocation with topic-layer-adaptive stochastic gradient Riemannian MCMC. In: International Conference on Machine Learning, pp. 864–873 (2017)

Das, R., Zaheer, M., Dyer, C.: Gaussian LDA for topic models with word embeddings. In: International Joint Conference on Natural Language Processing, pp. 795–804 (2015)

Dieng, A.B., Ruiz, F.J.R., Blei, D.M.: The dynamic embedded topic model. arXiv:1907.05545 (2019)

Dieng, A.B., Ruiz, F.J.R., Blei, D.M.: Topic modeling in embedding spaces. Transactions of the Association for Computational Linguistics 8, 439–453 (2020)

Dozat, T.: Incorporating nesterov momentum into adam. In: International Conference on Learning Representations Workshop (2016)

Duchi, J.C., Hazan, E., Singer, Y.: Adaptive subgradient methods for online learning and stochastic optimization. J. Mach. Learn. Res. 12(7), 2121–2159 (2011)

Feng, J., Rao, Y., Xie, H., Wang, F., Li, Q.: User group based emotion detection and topic discovery over short text. World Wide Web 23, 1553–1587 (2020)

Gershman, S., Goodman, N.D.: Amortized inference in probabilistic reasoning. In: Annual Meeting of the Cognitive Science Society (2014)

Griffiths, T.L., Steyvers, M.: Finding scientific topics. Proc. Natl. Acad. Sci. U.S.A. 101(suppl 1), 5228–5235 (2004)

Gui, L., Leng, J., Pergola, G., Zhou, Y., Xu, R., He, Y.: Neural topic model with reinforcement learning. In: Conference on Empirical Methods in Natural Language Processing, pp. 3478–3483 (2019)

Hoffman, M.D., Bach, F., Blei, D.M.: Online learning for latent dirichlet allocation. In: Neural Information Processing Systems, pp. 856–864 (2010)

Hoffman, M.D., Blei, D.M., Wang, C., Paisley, J.: Stochastic variational inference. J. Mach. Learn. Res. 14(1), 1303–1347 (2013)

Isonuma, M., Mori, J., Bollegala, D., Sakata, I.: Tree-structured neural topic model. In: Annual Meeting of the Association for Computational Linguistics, pp. 800–806 (2020)

Jiang, H., Zhou, R., Zhang, L., Wang, H., Zhang, Y.: Sentence level topic models for associated topics extraction. World Wide Web 22(6), 2545–2560 (2019)

Jordan, M.I., Ghahramani, Z., Jaakkola, T.S., Saul, L.K.: An introduction to variational methods for graphical models. Mach. Learn. 37(2), 105–161 (1999)

Kingma, D.P., Ba, J.: Adam: A method for stochastic optimization. In: International Conference on Learning Representations (2015)

Kingma, D.P., Welling, M.: Auto-encoding variational bayes. In: International Conference on Learning Representations (2014)

Li, W., McCallum, A.: Pachinko allocation: DAG-structured mixture models of topic correlations. In: International Conference on Machine Learning, pp. 577–584 (2006)

Li, X., Chi, J., Li, C., Ouyang, J., Fu, B.: Integrating topic modeling with word embeddings by mixtures of VMFs. In: International Conference on Computational Linguistics, pp. 151–160 (2016)

Li, X., Ouyang, J., Zhou, X.: Sparse hybrid variational-gibbs algorithm for latent dirichlet allocation. In: SIAM International Conference on Data Mining, pp. 729–737 (2016)

Li, X., Zhang, A., Li, C., Ouyang, J., Cai, Y.: Exploring coherent topics by topic modeling with term weighting. Inform. Process. Manage. 54(6), 1345–1358 (2018)

Li, X., Zhang, J., Ouyang, J.: Dirichlet multinomial mixture with variational manifold regularization: Topic modeling over short texts. In: AAAI Conference on Artificial Intelligence, pp. 7884–7891 (2019)

Li, Z., Wang, X., Li, J., Zhang, Q.: Deep attributed network representation learning of complex coupling and interaction. Knowl.-Based Syst. 212, 106618 (2021)

Liu, L., Huang, H., Gao, Y., Zhang, Y., Wei, X.: Neural variational correlated topic modeling. In: The Web Conference, pp. 1142–1152 (2019)

Miao, Y., Grefenstette, E., Blunsom, P.: Discovering discrete latent topics with neural variational inference. In: International Conference on Machine Learning, pp. 2410–2419 (2017)

Miao, Y., Yu, L., Blunsom, P.: Neural variational inference for text processing. In: International Conference on Machine Learning, pp. 1727–1736 (2016)

Mikolov, T., Chen, K., Corrado, G.S., Dean, J.: Efficient estimation of word representations in vector space. In: International Conference on Learning Representations (2013)

Mikolov, T., Sutskever, I., Chen, K., Corrado, G.S., Dean, J.: Distributed representations of words and phrases and their compositionality. In: Neural Information Processing Systems, pp. 3111–3119 (2013)

Mimno, D.M., Hoffman, M.D., Blei, D.M.: Sparse stochastic inference for latent dirichlet allocation. In: International Conference on Machine Learning, pp. 1515–1522 (2012)

Mnih, A., Gregor, K.: Neural variational inference and learning in belief networks. In: International Conference on Machine Learning, pp. 1791–1799 (2014)

Naesseth, C.A., Ruiz, F.J.R., Linderman, S.W., Blei, D.M.: Reparameterization gradients through acceptance-rejection sampling algorithms. In: International Conference on Artificial Intelligence and Statistics, pp. 489–498 (2017)

Nan, F., Ding, R., Nallapati, R., Xiang, B.: Topic modeling with wasserstein autoencoders. In: Annual Meeting of the Association for Computational Linguistics, pp. 6345–6381 (2019)

Pennington, J., Socher, R., Manning, C.D.: GloVe: Global vectors for word representation. In: Conference on Empirical Methods in Natural Language Processing, pp. 1532–1543 (2014)

Pergola, G., Gui, L., He, Y.: TDAM: a topic-dependent attention model for sentiment analysis. Inform. Process. Manage. 56(6), 102084 (2019)

Rashid, J., Shah, S.M.A., Irtaza, A.: Fuzzy topic modeling approach for text mining over short text. Inform. Process. Manage. 56(6), 102060 (2019)

Rezende, D.J., Mohamed, S., Wierstra, D.: Stochastic Backpropagation and Approximate Inference in Deep Generative Models. In: International Conference on Machine Learning, pp. 1278–1286 (2014)

Röder, M., Both, A., Hinneburg, A.: Exploring the Space of Topic Coherence Measures. In: International Conference on Web Search and Data Mining, pp. 399–408 (2015)

Srivastava, A., Sutton, C.A.: Autoencoding Variational Inference for Topic Models. In: International Conference on Learning Representations (2017)

Teh, Y.W., Jordan, M.I., Beal, M.J., Blei, D.M.: Hierarchical Dirichlet processes. J. Am. Stat. Assoc. 101(476), 1566–1581 (2006)

Titsias, M., Lázaro-Gredilla, M.: Doubly stochastic variational bayes for non-conjugate inference. In: International Conference on Machine Learning, pp. 4056–4069 (2014)

Tolstikhin, I., Bousquet, O., Gelly, S., Schoelkopf, B.: Wasserstein auto-encoders. In: International Conference on Learning Representations (2018)

Wang, C., Blei, D.M., Heckerman, D.: Continuous time dynamic topic model. In: Uncertainty in Artificial Intelligence, pp. 579–586 (2008)

Wang, R., Zhou, D., He, Y.: ATM: Adversarial-neural topic model. Inform. Process. Manage. 56(6), 102098 (2019)

Wang, Y., Li, X., Ouyang, J.: Layer-assisted neural topic modeling over document networks. In: International Joint Conference on Artificial Intelligence, pp. 3148–3154 (2021)

Zhang, H., Chen, B., Guo, D., Zhou, M.: WHAI: Weibull hybrid autoencoding inference for deep topic modeling. In: International Conference on Learning Representations (2018)

Acknowledgements

This work was supported by the National Natural Science Foundation of China (NSFC) [No.61876071] and Scientific and Technological Developing Scheme of Jilin Province [No.20180201003SF, No.20190701031GH] and Energy Administration of Jilin Province [No.3D516L921421].

Cite this article

Wang, Y., Li, X., Ouyang, J. et al. Extracting nonlinear neural topics with neural variational bayes. World Wide Web 25, 131–149 (2022). https://doi.org/10.1007/s11280-021-00970-8
