Abstract

The kth Fréchet derivative of a matrix function f is a multilinear operator from the Cartesian product of k copies of the space \(\mathbb {C}^{n\times n}\) into \(\mathbb {C}^{n\times n}\). We show that the kth Fréchet derivative of a real-valued matrix function f at a real matrix A in real direction matrices \(E_1, E_2, \dots, E_k\) can be computed using the complex step approximation. We combine the algorithm of Higham and Relton (SIAM J. Matrix Anal. Appl. 35(3):1019–1037, 2014) with the complex step approximation and with a mixed derivative scheme built from the complex step and the central finite difference. Compared with their approach, our cost analysis and numerical experiments show that about one half and seven-eighths of the computational cost can be saved by the complex step and the mixed derivative scheme, respectively. When an algorithm is available for computing the action of f on a vector, the computational cost drops significantly as the dimension of the problem and k increase.

1 Introduction

Matrix functions play important roles in a variety of applications such as quantum graphs [10], network analysis [11], computer animation [29], and the solution of systems of differential equations [3, 7]. In the computation of matrix functions, it is important to understand how perturbations in the input data affect the results. The condition number measures the sensitivity of the result to perturbations in the data, and the norm of the Fréchet derivative is the main component of the condition number. Apart from sensitivity analysis, the Fréchet derivative is also used in image reconstruction in tomography [28], the computation of choice probabilities [2], the analysis of carcinoma treatment [14], and the computation of the matrix geometric mean and Karcher mean [19]. The literature contains numerical algorithms for the Fréchet derivatives of the matrix exponential, square root, logarithm, and fractional powers; see [4, 8, 12, 15, 17, 21]. Using the Daleckiĭ-Kreĭn formula, Noferini gives an explicit expression for the Fréchet derivative of generalized matrix functions [27].

The second-order Fréchet derivative is used to extend iterative methods for solving nonlinear scalar equations to Banach spaces [9]. The computation of higher order Fréchet derivatives of matrix functions was first studied by Higham and Relton [18]. They develop algorithms for computing the kth derivative and its Kronecker form. They also analyze the level-2 absolute condition number of a matrix function, which is the condition number of the condition number, and bound it in terms of the norm of the second Fréchet derivative.

The use of complex arithmetic to approximate derivatives of analytic functions was introduced by Lyness [25] and Lyness and Moler [26]. The work of Squire and Trapp [30] appears to be the earliest use of the complex step approximation for the derivative of a real function at a real number. Later, many authors extended the complex step approach to obtain approximations with a higher order of convergence (see, for instance, [1, 22,23,24]). The complex step approximation was first extended to the first-order Fréchet derivative of real matrix functions by Al-Mohy and Higham [6].

The aim of this work is to make the computation of higher order Fréchet derivatives of matrix functions as efficient as possible by using two derivative techniques, the complex step and finite differences, and by using the action of the matrix function on a thin tall matrix whenever an algorithm for it is available. The paper is organized as follows. In Section 2 we review the definition and computational aspects of the kth Fréchet derivative given by Higham and Relton [18]. Based on the definition, we derive a recurrence relation for the kth Fréchet derivative of a monomial. Section 3 contains the main body of the paper. First, using the definition of the kth Fréchet derivative, we derive the central finite difference scheme and the complex step approximation and establish their orders of convergence. Second, we use the block matrix \(X_{k-1}\) (half the size of \(X_k\)) together with the complex step approximation to compute the kth Fréchet derivative, saving about 50% of the computational cost. Third, we derive a mixed derivative scheme that combines the central finite difference and the complex step approximation. This allows us to use the block matrix \(X_{k-2}\) (one-fourth the size of \(X_k\)) with suitable inputs to compute the kth Fréchet derivative, saving about 87% of the computational cost. Fourth, since the kth Fréchet derivative is extracted from \(f(X_k)\) by reading off its top right n × n block, it is attractive to extract that block from the action of \(f(X_k)\) on a certain thin and tall matrix. We explain how to obtain the kth Fréchet derivative as a whole matrix and how to obtain its action on a vector. This approach yields a significant reduction in computational cost and CPU time. Finally, we present numerical experiments in Section 4 and draw conclusions in Section 5.

2 Higher order Fréchet derivative

The kth-order Fréchet derivative of \(f : {\mathbb {C}}^{{n \times n}} \rightarrow {\mathbb {C}}^{{n \times n}}\) at \(A\in {\mathbb {C}}^{{n \times n}}\) can be defined recursively as the unique multilinear operator \(L^{(k)}_{f}(A)\) of the direction matrices \(E_{i}\in {\mathbb {C}}^{{n \times n}}\), i = 1: k, that satisfies

\[ L_f^{(k-1)}(A+E_k,E_1,\dots,E_{k-1}) - L_f^{(k-1)}(A,E_1,\dots,E_{k-1}) - L_f^{(k)}(A,E_1,\dots,E_k) = o(\|E_k\|), \tag{2.1} \]

where \(L_{f}^{(0)}(A)=f(A)\) and \(L_{f}^{(1)}(A,E_{1})\) is the first-order Fréchet derivative. To shorten our expressions, we denote the k-tuple \((E_1,E_2,\dots,E_k)\) by \({\mathcal {E}}_{k}\); the order of the \(E_i\) is immaterial since the multilinear operator \(L_{f}^{(k)}(A)\) is symmetric.

If \(E_j = E\) for j = 1: k, we denote the kth Fréchet derivative of f at A in the direction E by \(L_{f}^{(k)}(A,E)\); that is, \(L_{f}^{(k)}(A,E)=L_{f}^{(k)}(A,E,E,\cdots ,E)\).

For the monomial \(x^r\), where r is a nonnegative integer, we obtain the following recurrence for \(L_{x^{r}}^{(k)}(A,{\mathcal {E}}_{k})\), which can be proven by induction on k using the product rule for Fréchet derivatives.

Lemma 2.1

The kth Fréchet derivative of \(x^{r}\) is given by

with \(L_{x^{r}}^{(k)}(A,E_{1},E_{2},\cdots ,E_{k})=0\) if k > r.

In particular, if \(E_j = E\) for j = 1: k, the recurrence relation (2.2) reduces to the recurrence relation [6, Eq. (3.1)].
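The product-rule argument behind Lemma 2.1 can be checked numerically. The Python sketch below implements a recurrence that we obtain by writing \(x^r = x^{r-1}\cdot x\) and differentiating; it is an assumed form that may arrange the terms differently from (2.2), and the function name monomial_frechet is hypothetical. It is checked against closed forms such as \(L^{(2)}_{x^2}(A,E_1,E_2)=E_1E_2+E_2E_1\) and \(L^{(3)}_{x^3}(A,E)=6E^3\).

```python
import numpy as np


def monomial_frechet(A, r, Es):
    """kth Frechet derivative of x**r at A in the directions Es = (E_1, ..., E_k).

    Assumed product-rule recurrence from x**r = x**(r-1) * x:
      L^{(k)}_{x^r}(A, E_1..E_k) = L^{(k)}_{x^{r-1}}(A, E_1..E_k) A
          + sum_i L^{(k-1)}_{x^{r-1}}(A, E_1..E_k with E_i removed) E_i
    """
    Es = tuple(Es)
    k = len(Es)
    if k > r:  # derivatives of order higher than r vanish
        return np.zeros_like(A)
    if r == 0:  # here k == 0, so this is x**0 = I
        return np.eye(A.shape[0], dtype=A.dtype)
    # Trailing factor x left undifferentiated.
    result = monomial_frechet(A, r - 1, Es) @ A
    # Trailing factor x differentiated in the direction E_i.
    for i in range(k):
        rest = Es[:i] + Es[i + 1:]
        result = result + monomial_frechet(A, r - 1, rest) @ Es[i]
    return result


rng = np.random.default_rng(0)
A, E1, E2 = (rng.standard_normal((4, 4)) for _ in range(3))
L2 = monomial_frechet(A, 2, (E1, E2))  # should equal E1 E2 + E2 E1
```

With all directions equal, this recursion collapses to \(L^{(k)}_{x^r}(A,E) = L^{(k)}_{x^{r-1}}(A,E)A + k\,L^{(k-1)}_{x^{r-1}}(A,E)E\), since each of the k terms of the sum is the same.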

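We next recall an important result of Higham and Relton [18] that allows the computation of the kth Fréchet derivative as a block of \(f(X_k)\), where

\[ X_i = I_2\otimes X_{i-1} + \begin{bmatrix} 0 & 1 \\ 0 & 0 \end{bmatrix}\otimes I_{2^{i-1}}\otimes E_i, \quad i = 1\colon k, \qquad X_0 = A. \tag{2.3} \]

The symbol ⊗ denotes the Kronecker product [15, Chap. 12] and \(I_m\) denotes the m × m identity matrix.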

The following theorem establishes the existence of the kth Fréchet derivative.

Theorem 2.1 ([18, Theorem 3.5])

Let \(A\in {\mathbb {C}}^{n\times n}\) have largest Jordan block of size p and spectrum in an open subset \(\mathcal {D}\subset {\mathbb {C}}\). Let \(f:\mathcal {D}\to {\mathbb {C}}\) be \(2^{k}p-1\) times continuously differentiable on \(\mathcal {D}\). Then the kth Fréchet derivative \(L^{(k)}_{f}(A)\) exists and \(L_{f}^{(k)}(A,{\mathcal {E}}_{k})\) is continuous in A and \(E_{1},E_{2},\dots ,E_{k}\in {\mathbb {C}}^{n\times n}\). Moreover, \(L_{f}^{(k)}(A,{\mathcal {E}}_{k})\) is the upper right n × n block of \(f(X_k)\), where \(X_k\) is given by (2.3).
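Theorem 2.1 translates directly into code. The following Python sketch (NumPy/SciPy; the helper names build_X and frechet_block are ours) forms \(X_k\) by the recurrence (2.3), applies scipy.linalg.expm, and reads off the upper right n × n block. For k = 1 the result can be compared with scipy.linalg.expm_frechet, and for f(x) = x² with the closed form \(E_1E_2+E_2E_1\).

```python
import numpy as np
from scipy.linalg import expm, expm_frechet


def build_X(A, Es):
    """Block matrix X_k of (2.3): X_i = I_2 (x) X_{i-1} + N (x) I_{2^{i-1}} (x) E_i, X_0 = A."""
    N = np.array([[0.0, 1.0], [0.0, 0.0]])
    X = A
    for i, E in enumerate(Es, start=1):
        X = np.kron(np.eye(2), X) + np.kron(N, np.kron(np.eye(2 ** (i - 1)), E))
    return X


def frechet_block(f, A, Es):
    """L_f^{(k)}(A, E_1, ..., E_k) as the upper right n x n block of f(X_k)."""
    n = A.shape[0]
    return f(build_X(A, Es))[:n, -n:]


rng = np.random.default_rng(1)
n = 4
A = rng.standard_normal((n, n)) / 2
E1, E2 = rng.standard_normal((n, n)), rng.standard_normal((n, n))

L1 = frechet_block(expm, A, (E1,))      # exponential of an 8 x 8 matrix
L2 = frechet_block(expm, A, (E1, E2))   # exponential of a 16 x 16 matrix
```

The matrix \(X_k\) doubles in dimension with each direction, which is the cost that the techniques of Section 3 aim to reduce.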

3 Complex step approximation

3.1 Scalar case

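If f is a real function of a real variable and is analytic, then, for a real scalar h, it can be expanded in the Taylor series

\[ f(x+\mathrm{i}h) = f(x) + \mathrm{i}hf'(x) - \frac{h^2}{2!}f''(x) - \mathrm{i}\frac{h^3}{3!}f'''(x) + O(h^4). \]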

Thus, Im f(x + ih)/h and Re f(x + ih) are \(O(h^2)\) approximations to \(f^{\prime }(x)\) and f(x), respectively. Approximating \(f^{\prime }(x)\) by the imaginary part of the function avoids the subtractive cancellation that occurs in finite difference schemes. Consequently, the complex step approximation allows the use of an arbitrarily small h without sacrificing accuracy. Numerical results in a guide to numerical algorithm design in metrology [13] demonstrate accurate results even with \(h = 10^{-100}\).
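A minimal Python illustration of the scalar complex step follows; the test function \(f(x)=\mathrm{e}^x\sin x\) and the point x = 1.5 are our own choices, not taken from the source. It compares Im f(x+ih)/h with the central difference (f(x+h) − f(x−h))/(2h). For \(h = 10^{-100}\) the central difference returns exactly zero, because x ± h rounds to x, while the complex step remains accurate.

```python
import numpy as np


def f(x):
    return np.exp(x) * np.sin(x)


def df_exact(x):
    return np.exp(x) * (np.sin(x) + np.cos(x))


def complex_step(f, x, h):
    # Im f(x + ih) / h involves no subtraction, so h may be tiny.
    return np.imag(f(x + 1j * h)) / h


def central_difference(f, x, h):
    # O(h^2) truncation error, but f(x + h) - f(x - h) cancels for small h.
    return (f(x + h) - f(x - h)) / (2 * h)


x = 1.5
exact = df_exact(x)
for h in (1e-4, 1e-8, 1e-100):
    err_cs = abs(complex_step(f, x, h) - exact) / abs(exact)
    err_cd = abs(central_difference(f, x, h) - exact) / abs(exact)
    print(f"h = {h:.0e}: complex step {err_cs:.1e}, central difference {err_cd:.1e}")
```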

Next we investigate how to apply complex step techniques to compute higher order Fréchet derivatives of matrix functions.

3.2 Matrix case

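Assume that A and \(E_i\), i = 1: k, are real matrices and that f is real-valued at real arguments and satisfies the assumptions of Theorem 2.1. Replacing the matrix \(E_k\) in the definition (2.1) of the kth Fréchet derivative by \(hE_k\), where h is a positive real number, and exploiting the linearity of the operator \(L_{f}^{(k)}(A)\), we have

\[ L_f^{(k-1)}(A+hE_k,\mathcal{E}_{k-1}) - L_f^{(k-1)}(A,\mathcal{E}_{k-1}) - hL_f^{(k)}(A,\mathcal{E}_k) = o(h). \]

Thus

\[ L_f^{(k)}(A,\mathcal{E}_k) = \lim_{h\to 0}\frac{L_f^{(k-1)}(A+hE_k,\mathcal{E}_{k-1}) - L_f^{(k-1)}(A,\mathcal{E}_{k-1})}{h}, \tag{3.2} \]

which yields an O(h) finite difference approximation to \(L_{f}^{(k)}(A,{\mathcal {E}}_{k})\). The central finite difference approximation is derived by replacing h in (3.2) by −h and adding the resulting limit to (3.2), which cancels the term \(L_{f}^{(k-1)}(A,{\mathcal {E}}_{k-1})\). That is,

\[ L_f^{(k)}(A,\mathcal{E}_k) = \lim_{h\to 0}\frac{L_f^{(k-1)}(A+hE_k,\mathcal{E}_{k-1}) - L_f^{(k-1)}(A-hE_k,\mathcal{E}_{k-1})}{2h}, \tag{3.3} \]

which yields an \(O(h^2)\) approximation to \(L_{f}^{(k)}(A,{\mathcal {E}}_{k})\), as shown below.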

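Now replacing the matrix \(E_k\) in the definition (2.1) of the kth Fréchet derivative by \(\mathrm{i}hE_k\) yields

\[ L_f^{(k-1)}(A+\mathrm{i}hE_k,\mathcal{E}_{k-1}) - L_f^{(k-1)}(A,\mathcal{E}_{k-1}) - \mathrm{i}hL_f^{(k)}(A,\mathcal{E}_k) = o(h). \]

Since \(L_{f}^{(k)}(A,{\mathcal {E}}_{k})\) and \(L_{f}^{(k-1)}(A,{\mathcal {E}}_{k-1})\) are real, taking imaginary parts gives

\[ L_f^{(k)}(A,\mathcal{E}_k) = \lim_{h\to 0}\frac{\operatorname{Im} L_f^{(k-1)}(A+\mathrm{i}hE_k,\mathcal{E}_{k-1})}{h}. \]

This yields the complex step approximation of \(L_{f}^{(k)}(A)\) via \(L_{f}^{(k-1)}(A)\),

\[ L_f^{(k)}(A,\mathcal{E}_k) \approx \frac{\operatorname{Im} L_f^{(k-1)}(A+\mathrm{i}hE_k,\mathcal{E}_{k-1})}{h}, \]

for a sufficiently small scalar h. Similarly, taking real parts gives

\[ L_f^{(k-1)}(A,\mathcal{E}_{k-1}) = \lim_{h\to 0}\operatorname{Re} L_f^{(k-1)}(A+\mathrm{i}hE_k,\mathcal{E}_{k-1}). \]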

However, this derivation of the complex step approximation does not reveal the rate of convergence of the approximation as h goes to zero. To determine the rate of convergence, we need stronger assumptions on f.

Theorem 3.1

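Let \(A,E_{i}\in {\mathbb {R}}^{{n \times n}}\), i = 1: k, and let \(f:\mathcal {D}\subset {\mathbb {C}}\to {\mathbb {C}}\) be analytic on an open subset \(\mathcal {D}\) containing the spectrum of A. Assume further that f is real-valued at real arguments. Let h be a sufficiently small real number such that the spectrum of \(A + \mathrm{i}hE_k\) lies in \(\mathcal {D}\). Then

\[ L_f^{(k)}(A,\mathcal{E}_k) = \frac{\operatorname{Im} L_f^{(k-1)}(A+\mathrm{i}hE_k,\mathcal{E}_{k-1})}{h} + O(h^2), \tag{3.6} \]

\[ L_f^{(k-1)}(A,\mathcal{E}_{k-1}) = \operatorname{Re} L_f^{(k-1)}(A+\mathrm{i}hE_k,\mathcal{E}_{k-1}) + O(h^2), \tag{3.7} \]

where \({\mathcal {E}}_{k}=(E_{1},E_{2},\cdots ,E_{k})\).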

Proof

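The analyticity of f on \(\mathcal {D}\) implies that f has a power series expansion there. Recall [6, Theorem 3.1] and denote by \(E_{k}^{(j)}\) the j-tuple \((E_k,E_k,\dots,E_k)\), so that

\[ f(A+\mathrm{i}hE_k) = \sum_{j=0}^{\infty}\frac{(\mathrm{i}h)^j}{j!}L_f^{(j)}(A,E_k^{(j)}). \tag{3.8} \]

Since the power series converges uniformly on \(\mathcal {D}\), we can repeatedly Fréchet differentiate the series (3.8) term by term in the directions \(E_{1}, E_{2},\dots ,E_{k-1}\) and obtain

\[ L_f^{(k-1)}(A+\mathrm{i}hE_k,\mathcal{E}_{k-1}) = \sum_{j=0}^{\infty}\frac{(\mathrm{i}h)^j}{j!}L_f^{(k-1+j)}(A,\mathcal{E}_{k-1},E_k^{(j)}). \]

Equations (3.6) and (3.7) follow immediately by equating the imaginary and real parts of the series, respectively. □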
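For k = 1, where \(L_f^{(0)} = f\), Theorem 3.1 says that \(\operatorname{Im} f(A+\mathrm{i}hE)/h\) approximates \(L_f(A,E)\) and \(\operatorname{Re} f(A+\mathrm{i}hE)\) approximates f(A), both with error \(O(h^2)\). The following Python sketch contrasts this with the central difference (3.3) for f = exp, using scipy.linalg.expm for f and scipy.linalg.expm_frechet as the reference; the random test matrices and the list of step sizes are our own assumptions.

```python
import numpy as np
from scipy.linalg import expm, expm_frechet

rng = np.random.default_rng(1)
n = 5
A = rng.standard_normal((n, n)) / 2
E = rng.standard_normal((n, n))
L_ref = expm_frechet(A, E, compute_expm=False)  # reference L_exp(A, E)


def rel_err(X, Y):
    return np.linalg.norm(X - Y, 1) / np.linalg.norm(Y, 1)


def cs_frechet(A, E, h):
    # Complex step, (3.6) with k = 1: L_f(A, E) ~ Im f(A + ihE) / h.
    return expm(A + 1j * h * E).imag / h


def cd_frechet(A, E, h):
    # Central difference, (3.3) with k = 1: (f(A + hE) - f(A - hE)) / (2h).
    return (expm(A + h * E) - expm(A - h * E)) / (2 * h)


for h in (1e-3, 1e-6, 1e-10, 1e-20):
    print(f"h = {h:.0e}: complex step {rel_err(cs_frechet(A, E, h), L_ref):.1e}, "
          f"central difference {rel_err(cd_frechet(A, E, h), L_ref):.1e}")
```

As h decreases, the central difference first improves and then loses accuracy to cancellation, whereas the complex step error stays at the level of the rounding errors of expm.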

The \(O(h^2)\) rate of convergence of the central finite difference approximation (3.3) can now be shown from the expansion of \(L_{f}^{(k-1)}(A+{\mathrm {i}} hE_{k},{\mathcal {E}}_{k-1})\) above by replacing the scalar h by ih and then by −ih, which gives the expansions of \(L_{f}^{(k-1)}(A-hE_{k},{\mathcal {E}}_{k-1})\) and \(L_{f}^{(k-1)}(A+hE_{k},{\mathcal {E}}_{k-1})\), respectively. Subtracting the first expansion from the second and dividing through by 2h yields the approximation in (3.3) and reveals its order, \(O(h^2)\). The benefit of this analysis is that any algorithm that computes \(L_{f}^{(k-1)} (A,{\mathcal {E}}_{k-1})\) can be used to compute \(L_{f}^{(k)} (A,{\mathcal {E}}_{k})\). In particular, we can use the complex step approximation to compute \(L_{f}^{(k)} (A,{\mathcal {E}}_{k})\) from the imaginary part of the upper right n × n block of \(f(X_{k-1})\), divided by h, where \(X_{k-1}\) is formed by (2.3) with \(X_0 = A + \mathrm{i}hE_k\). This leads to the following result, analogous to Theorem 2.1.

Theorem 3.2

Let \(A,E_{i}\in {\mathbb {R}}^{{n \times n}}\), i = 1: k, and let \(\mathcal {D}\subset {\mathbb {C}}\) be an open subset containing the spectrum of A. Let h be a sufficiently small real number such that the spectrum of \(A + \mathrm{i}hE_k\) lies in \(\mathcal {D}\), and let \(p_k\) be the size of the largest Jordan block of \(A + \mathrm{i}hE_k\). Let \(f:\mathcal {D}\to {\mathbb {C}}\) be \(2^{k-1}p_k-1\) times continuously differentiable on \(\mathcal {D}\), and assume further that f is real-valued at real arguments. Then the kth Fréchet derivative exists and

\[ L_f^{(k)}(A,\mathcal{E}_k) = \lim_{h\to 0}\frac{\operatorname{Im}\,[f(X_{k-1})]_{1n}}{h}, \tag{3.9} \]

where \(X_{k-1}\) is given by (2.3) with \(X_0 = A + \mathrm{i}hE_k\) and \([\,\cdot\,]_{1n}\) denotes the upper right n × n block.

Proof

In view of Theorem 2.1, the upper right n × n block of \(f(X_{k-1})\), where \(X_0 = A + \mathrm{i}hE_k\), is \(L_{f}^{(k-1)} (A+{\mathrm {i}} hE_{k},{\mathcal {E}}_{k-1})\). By (3.6), \(L_{f}^{(k)} (A,{\mathcal {E}}_{k})\) is the upper right n × n block of \(\lim_{h\to 0}\operatorname{Im} f(X_{k-1})/h\). □
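Theorem 3.2 can be sketched in Python by reusing the block construction (2.3): the complex step direction \(E_k\) is folded into \(X_0 = A + \mathrm{i}hE_k\), and only \(E_1,\dots,E_{k-1}\) enter the recurrence. The helper names are hypothetical, f = expm and \(h = 10^{-20}\) are our choices, and the sketch compares the 16 × 16 complex evaluation with the 32 × 32 real one for k = 3 and n = 4.

```python
import numpy as np
from scipy.linalg import expm


def build_X(A, Es):
    """Block matrix of (2.3) with initial matrix X_0 = A (A may be complex)."""
    N = np.array([[0.0, 1.0], [0.0, 0.0]])
    X = A
    for i, E in enumerate(Es, start=1):
        X = np.kron(np.eye(2), X) + np.kron(N, np.kron(np.eye(2 ** (i - 1)), E))
    return X


def frechet_block(f, A, Es):
    """Higham-Relton: L_f^{(k)} is the upper right n x n block of f(X_k), X_0 = A."""
    n = A.shape[0]
    return f(build_X(A, Es))[:n, -n:]


def frechet_complex_step(f, A, Es, h=1e-20):
    """(3.9): Im of the upper right block of f(X_{k-1}), X_0 = A + ihE_k, divided by h."""
    *head, E_k = Es
    n = A.shape[0]
    return f(build_X(A + 1j * h * E_k, head))[:n, -n:].imag / h


rng = np.random.default_rng(2)
n = 4
A = rng.standard_normal((n, n)) / 2
Es = tuple(rng.standard_normal((n, n)) / 2 for _ in range(3))

L_full = frechet_block(expm, A, Es)       # exponential of a 32 x 32 real matrix
L_cs = frechet_complex_step(expm, A, Es)  # exponential of a 16 x 16 complex matrix
print(np.linalg.norm(L_cs - L_full, 1) / np.linalg.norm(L_full, 1))
```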

The advantage of this approach is that the size of the matrix \(X_{k-1}\) is half that of \(X_k\), which can lead to a faster computation. Assuming that the algorithm for computing f(A) requires \(O(n^3)\) operations, the computation of \(f(X_k)\) requires \(O(8^kn^3)\) operations, since \(X_k\) has dimension \(2^kn\times 2^kn\) [18]. On the other hand, the computation of \(f(X_{k-1})\) with \(X_0 = A + \mathrm{i}hE_k\) requires \(O(4\cdot 8^{k-1}n^3)\) operations, bearing in mind that complex arithmetic costs about four times as much as real arithmetic. Thus, the cost of \(f(X_{k-1})\) with complex arguments is about half the cost of \(f(X_k)\) with real arguments. Our numerical experiments below support this analysis.
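Under the stated assumptions (cubic cost in the dimension, and a factor of about four for complex arithmetic), the cost ratio works out as follows.

```latex
\frac{\text{cost of } f(X_k),\ X_0 = A}{\text{cost of } f(X_{k-1}),\ X_0 = A + \mathrm{i}hE_k}
  \approx \frac{(2^k n)^3}{4\,(2^{k-1} n)^3}
  = \frac{8^k n^3}{4\cdot 8^{k-1} n^3} = 2 .
```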

In fact, we can evaluate the kth Fréchet derivative using the block matrix \(X_{k-2}\) instead of \(X_k\), by means of the mixed derivative scheme below.

Lemma 3.1

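Suppose f, A, and \(E_j\), j = 1: k, satisfy the assumptions of Theorem 2.1. Let \(B = A + \mathrm{i}h_1E_{k-1}\). Then

\[ L_f^{(k)}(A,\mathcal{E}_k) = \lim_{h_1,h_2\to 0}\frac{\operatorname{Im}\bigl(L_f^{(k-2)}(B+h_2E_k,\mathcal{E}_{k-2}) - L_f^{(k-2)}(B-h_2E_k,\mathcal{E}_{k-2})\bigr)}{2h_1h_2}. \tag{3.10} \]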

Proof

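Using (3.3) and (3.6), we obtain the mixed derivative

\[ L_f^{(k)}(A,\mathcal{E}_k) = \lim_{h_2\to 0}\frac{1}{2h_2}\Bigl(\lim_{h_1\to 0}\frac{\operatorname{Im} L_f^{(k-2)}(B+h_2E_k,\mathcal{E}_{k-2})}{h_1} - \lim_{h_1\to 0}\frac{\operatorname{Im} L_f^{(k-2)}(B-h_2E_k,\mathcal{E}_{k-2})}{h_1}\Bigr). \]

Thus

\[ L_f^{(k)}(A,\mathcal{E}_k) \approx \frac{\operatorname{Im}\bigl(L_f^{(k-2)}(B+h_2E_k,\mathcal{E}_{k-2}) - L_f^{(k-2)}(B-h_2E_k,\mathcal{E}_{k-2})\bigr)}{2h_1h_2} \]

for sufficiently small real scalars \(h_1\) and \(h_2\). □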

We can take advantage of the scheme (3.10) to reduce the computational cost of computing \(L_{f}^{(k)}(A,{\mathcal {E}}_{k})\) for k ≥ 3. Form \(X_{k-2}\) by the recurrence (2.3) with \(X_0 = A + \mathrm{i}h_1E_{k-1} + h_2E_k\), and denote by \(Y_{k-2}\) the matrix obtained from the same recurrence with \(X_0 = A + \mathrm{i}h_1E_{k-1} - h_2E_k\). The top right n × n blocks of \(f(X_{k-2})\) and \(f(Y_{k-2})\) are \(L_{f}^{(k-2)}(A+{\mathrm {i}} h_{1}E_{k-1}+h_{2}E_{k},{\mathcal {E}}_{k-2})\) and \(L_{f}^{(k-2)}(A+{\mathrm {i}} h_{1}E_{k-1}-h_{2}E_{k},{\mathcal {E}}_{k-2})\), respectively. Therefore,

\[ L_f^{(k)}(A,\mathcal{E}_k) \approx \frac{\operatorname{Im}\bigl([f(X_{k-2})]_{1n} - [f(Y_{k-2})]_{1n}\bigr)}{2h_1h_2}, \tag{3.11} \]

where \([\,\cdot\,]_{1n}\) denotes the top right n × n block.

We will see in our numerical experiments that the parameter \(h_1\) can be chosen as small as desired. However, the parameter \(h_2\) is a finite difference step and must be chosen carefully. Nevertheless, this approximation reduces the computational cost significantly. It requires two matrix function evaluations at complex matrices of size \(2^{k-2}n\times 2^{k-2}n\), each costing about four times a real evaluation of cost \(O(8^{k-2}n^3)\), so the total cost is \(O(8\cdot 8^{k-2}n^3)\), which is about one-eighth the cost of \(f(X_k)\). Our numerical experiments below confirm this factor.
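A Python sketch of the mixed derivative scheme (3.11) follows, again with f = expm and hypothetical helper names. The values \(h_1 = 10^{-20}\) and \(h_2 = 10^{-5}\) are our illustrative choices (the experiments below use \(h_2 = 10^{-6}\)). For k = 3 and n = 4 the scheme needs two exponentials of 8 × 8 complex matrices instead of one 32 × 32 real exponential, and printing the error for several \(h_2\) exposes the sensitivity to the difference step.

```python
import numpy as np
from scipy.linalg import expm


def build_X(A, Es):
    """Block matrix of (2.3) with initial matrix X_0 = A (A may be complex)."""
    N = np.array([[0.0, 1.0], [0.0, 0.0]])
    X = A
    for i, E in enumerate(Es, start=1):
        X = np.kron(np.eye(2), X) + np.kron(N, np.kron(np.eye(2 ** (i - 1)), E))
    return X


def frechet_block(f, A, Es):
    n = A.shape[0]
    return f(build_X(A, Es))[:n, -n:]


def frechet_mixed(f, A, Es, h1=1e-20, h2=1e-5):
    """(3.11): complex step in E_{k-1}, central difference in E_k, block size 2^{k-2} n."""
    *head, E_km1, E_k = Es
    n = A.shape[0]
    FX = f(build_X(A + 1j * h1 * E_km1 + h2 * E_k, head))
    FY = f(build_X(A + 1j * h1 * E_km1 - h2 * E_k, head))
    return (FX[:n, -n:] - FY[:n, -n:]).imag / (2 * h1 * h2)


def rel_err(X, Y):
    return np.linalg.norm(X - Y, 1) / np.linalg.norm(Y, 1)


rng = np.random.default_rng(3)
n = 4
A = rng.standard_normal((n, n)) / 2
Es = tuple(rng.standard_normal((n, n)) / 2 for _ in range(3))
L_ref = frechet_block(expm, A, Es)  # one 32 x 32 real exponential

for h2 in (1e-2, 1e-4, 1e-5, 1e-8, 1e-11):
    err = rel_err(frechet_mixed(expm, A, Es, h2=h2), L_ref)
    print(f"h2 = {h2:.0e}: relative error {err:.1e}")
```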

3.3 Exploiting action of matrix functions

Some matrix functions have algorithms that compute their action on a thin matrix B without explicitly forming f(X); instead, they compute f(X)B using matrix–matrix products with X. For example, the algorithm of Al-Mohy and Higham [7, Alg. 3.2] computes \(\mathrm{e}^{X}B\). More recently, Al-Mohy [3] and Higham and Kandolf [16] developed algorithms to compute the actions of trigonometric and hyperbolic matrix functions. These algorithms use truncated Taylor series, so f(X)B is obtained by repeatedly multiplying the current block by X and accumulating the terms. Krylov subspace methods can also be used to evaluate the product of a matrix function with a vector [15, Ch. 13].

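As mentioned above, the kth Fréchet derivative is obtained by reading off the upper right n × n block of the matrix \(f(X_k)\), where \(X_0 = A\). This is equivalent to reading off the upper n × n block of the thin and tall matrix \(f(X_k)B\), where

\[ B = \begin{bmatrix} 0_n \\ \vdots \\ 0_n \\ I_n \end{bmatrix} \in \mathbb{R}^{2^kn\times n}. \tag{3.12} \]

The blocks \(0_n\) and \(I_n\) are the zero and identity matrices of size n × n, respectively, so \(f(X_k)B\) is the last block column of \(f(X_k)\). Thus,

\[ L_f^{(k)}(A,\mathcal{E}_k) = \begin{bmatrix} I_n & 0_n & \cdots & 0_n \end{bmatrix} f(X_k)B. \tag{3.13} \]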
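Formula (3.13) with f = exp can be sketched with scipy.sparse.linalg.expm_multiply, SciPy's implementation of the algorithm of Al-Mohy and Higham [7] for \(\mathrm{e}^{X}B\) (the MATLAB code used in the experiments below is expmv). Storing \(X_k\) as a SciPy sparse matrix lets the action use only sparse matrix products. The helper names are hypothetical, and the dense block method serves as the reference.

```python
import numpy as np
import scipy.sparse as sp
from scipy.linalg import expm
from scipy.sparse.linalg import expm_multiply


def build_X_sparse(A, Es):
    """Sparse version of the recurrence (2.3) with X_0 = A."""
    N = sp.csr_matrix(np.array([[0.0, 1.0], [0.0, 0.0]]))
    X = sp.csr_matrix(A)
    for i, E in enumerate(Es, start=1):
        X = sp.kron(sp.identity(2), X) + sp.kron(
            N, sp.kron(sp.identity(2 ** (i - 1)), sp.csr_matrix(E)))
    return sp.csr_matrix(X)


def frechet_action(A, Es):
    """(3.13) with f = exp: upper n x n block of e^{X_k} B, B = [0; ...; 0; I_n]."""
    n = A.shape[0]
    X = build_X_sparse(A, Es)
    B = np.zeros((X.shape[0], n))
    B[-n:, :] = np.eye(n)  # only the last block of B is nonzero
    return expm_multiply(X, B)[:n, :]


rng = np.random.default_rng(4)
n, k = 4, 4
A = rng.standard_normal((n, n)) / 2
Es = [rng.standard_normal((n, n)) / 2 for _ in range(k)]

L_action = frechet_action(A, Es)
L_dense = expm(build_X_sparse(A, Es).toarray())[:n, -n:]  # full 64 x 64 exponential
```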

The advantage of this approach is that we compute only the rightmost n columns of \(f(X_k)\) instead of the whole matrix, as the algorithm of Higham and Relton does [18, Algorithm 3.6]. In addition, \(X_k\) has a special structure, so the best algorithm would be one that exploits this structure optimally; to our knowledge, no such algorithm is available yet. However, evaluating \(f(X_k)B\) through matrix products with \(X_k\) takes advantage of the sparsity of \(X_k\). From (2.3), the number of nonzero elements of \(X_k\), \(\mathrm{nnz}(X_k)\), can be counted in terms of nnz(A) and \(\mathrm{nnz}(E_i)\), i = 1: k, since each step of the recurrence doubles \(\mathrm{nnz}(X_{i-1})\) and adds \(2^{i-1}\mathrm{nnz}(E_i)\). Thus, we have

\[ \mathrm{nnz}(X_k) = 2^k\,\mathrm{nnz}(A) + 2^{k-1}\sum_{i=1}^{k}\mathrm{nnz}(E_i). \]

Since nnz(A) and \(\mathrm{nnz}(E_i)\) never exceed \(n^2\), the number of nonzero elements of \(X_k\) is bounded above by \(2^{k-1}(k+2)n^2\), that is, by a fraction \((k+2)/2^{k+1}\) of the \(4^kn^2\) elements of \(X_k\). For example, for k = 6, nnz(\(X_k\)) is at most 6.25% of the elements of \(X_k\), and this percentage drops rapidly as k increases.
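The nonzero count of \(X_k\) and the bound \(2^{k-1}(k+2)n^2\) can be checked with SciPy sparse matrices. In the sketch below (our own construction, with n = 5 and k = 6), A is made upper triangular only to have an example with \(\mathrm{nnz}(A) < n^2\); the directions are dense.

```python
import numpy as np
import scipy.sparse as sp


def build_X_sparse(A, Es):
    """Sparse version of the recurrence (2.3) with X_0 = A."""
    N = sp.csr_matrix(np.array([[0.0, 1.0], [0.0, 0.0]]))
    X = sp.csr_matrix(A)
    for i, E in enumerate(Es, start=1):
        X = sp.kron(sp.identity(2), X) + sp.kron(
            N, sp.kron(sp.identity(2 ** (i - 1)), sp.csr_matrix(E)))
    return sp.csr_matrix(X)


rng = np.random.default_rng(5)
n, k = 5, 6
A = np.triu(rng.standard_normal((n, n)))             # nnz(A) = n(n+1)/2 = 15
Es = [rng.standard_normal((n, n)) for _ in range(k)]  # dense directions

X = build_X_sparse(A, Es)
nnz = X.count_nonzero()
# Unrolling nnz(X_i) = 2 nnz(X_{i-1}) + 2^{i-1} nnz(E_i) from X_0 = A:
predicted = 2**k * np.count_nonzero(A) + 2**(k - 1) * sum(np.count_nonzero(E) for E in Es)
bound = 2**(k - 1) * (k + 2) * n**2
print(nnz, predicted, bound, nnz / X.shape[0] ** 2)
```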

In the numerical experiments below, the methods that compute the kth Fréchet derivative via the action of matrix functions are significantly faster, increasingly so as k grows, than the methods that explicitly form the matrix \(f(X_k)\) and then extract its top right n × n block.

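Using the complex step approximation, we can halve the size of the acting matrix, as shown in Theorem 3.2. Thus, for \(X_0 = A + \mathrm{i}hE_k\) and with B reduced to size \(2^{k-1}n\times n\) by deleting its top half, which consists of zero blocks, we have

\[ L_f^{(k)}(A,\mathcal{E}_k) \approx \frac{1}{h}\operatorname{Im}\Bigl(\begin{bmatrix} I_n & 0_n & \cdots & 0_n \end{bmatrix} f(X_{k-1})B\Bigr). \tag{3.15} \]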
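Combining the complex step with the action, as in (3.15), only requires a complex sparse matrix of half the size. In the sketch below, the helper names are hypothetical, f = exp is computed by scipy.sparse.linalg.expm_multiply applied here to a complex sparse matrix, and \(h = 10^{-20}\) is our choice; the reduced B keeps only the bottom \(2^{k-1}\) blocks of the original B.

```python
import numpy as np
import scipy.sparse as sp
from scipy.linalg import expm
from scipy.sparse.linalg import expm_multiply


def build_X_sparse(A, Es):
    """Sparse version of the recurrence (2.3) with X_0 = A (A may be complex)."""
    N = sp.csr_matrix(np.array([[0.0, 1.0], [0.0, 0.0]]))
    X = sp.csr_matrix(A)
    for i, E in enumerate(Es, start=1):
        X = sp.kron(sp.identity(2), X) + sp.kron(
            N, sp.kron(sp.identity(2 ** (i - 1)), sp.csr_matrix(E)))
    return sp.csr_matrix(X)


def frechet_action_cs(A, Es, h=1e-20):
    """(3.15) with f = exp: X_0 = A + ihE_k and B of size 2^{k-1} n x n."""
    *head, E_k = Es
    n = A.shape[0]
    X = build_X_sparse(A + 1j * h * E_k, head)
    B = np.zeros((X.shape[0], n))
    B[-n:, :] = np.eye(n)
    return expm_multiply(X, B)[:n, :].imag / h


rng = np.random.default_rng(6)
n, k = 4, 4
A = rng.standard_normal((n, n)) / 2
Es = [rng.standard_normal((n, n)) / 2 for _ in range(k)]

L_cs = frechet_action_cs(A, Es)  # acts with a 32 x 32 complex sparse matrix
L_ref = expm(build_X_sparse(A, Es).toarray())[:n, -n:]  # 64 x 64 dense reference
```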

We can go further and compute the action of the kth Fréchet derivative on a vector or, more generally, on a thin matrix b of size \(n\times n_0\), where \(n_0 \ll n\). We could compute \(L_{f}^{(k)}(A,{\mathcal {E}}_{k})b\) by multiplying both sides of (3.13) from the right by b, but this would not be efficient. The most efficient approach in this setting is to replace the matrix B in (3.13) by

\[ B_b := \begin{bmatrix} 0_{n\times n_0} \\ \vdots \\ 0_{n\times n_0} \\ b \end{bmatrix} \in \mathbb{R}^{2^kn\times n_0}. \]

Thus,

\[ L_f^{(k)}(A,\mathcal{E}_k)b = \begin{bmatrix} I_n & 0_n & \cdots & 0_n \end{bmatrix} f(X_k)B_b. \tag{3.16} \]

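By using the complex step with \(X_0 = A + \mathrm{i}hE_k\) and reducing \(B_b\) to size \(2^{k-1}n\times n_0\) by deleting its top half, we obtain

\[ L_f^{(k)}(A,\mathcal{E}_k)b \approx \frac{1}{h}\operatorname{Im}\Bigl(\begin{bmatrix} I_n & 0_n & \cdots & 0_n \end{bmatrix} f(X_{k-1})B_b\Bigr). \tag{3.17} \]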
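The two formulas (3.16) and (3.17) for the action on a thin matrix b can be sketched in Python as follows, with f = exp through scipy.sparse.linalg.expm_multiply. The helper names, the sizes n = 6, k = 3, \(n_0 = 2\), and \(h = 10^{-20}\) are our assumptions; the dense block method followed by a product with b is the reference.

```python
import numpy as np
import scipy.sparse as sp
from scipy.linalg import expm
from scipy.sparse.linalg import expm_multiply


def build_X_sparse(A, Es):
    """Sparse version of the recurrence (2.3) with X_0 = A (A may be complex)."""
    N = sp.csr_matrix(np.array([[0.0, 1.0], [0.0, 0.0]]))
    X = sp.csr_matrix(A)
    for i, E in enumerate(Es, start=1):
        X = sp.kron(sp.identity(2), X) + sp.kron(
            N, sp.kron(sp.identity(2 ** (i - 1)), sp.csr_matrix(E)))
    return sp.csr_matrix(X)


def padded(b, rows):
    """B_b = [0; ...; 0; b]: zero blocks on top, b in the last n rows."""
    Bb = np.zeros((rows, b.shape[1]))
    Bb[-b.shape[0]:, :] = b
    return Bb


def frechet_times_b(A, Es, b):
    """(3.16) with f = exp: L^{(k)}(A, E_1..E_k) b from e^{X_k} B_b."""
    n = A.shape[0]
    X = build_X_sparse(A, Es)
    return expm_multiply(X, padded(b, X.shape[0]))[:n, :]


def frechet_times_b_cs(A, Es, b, h=1e-20):
    """(3.17) with f = exp: X_0 = A + ihE_k and B_b of size 2^{k-1} n x n0."""
    *head, E_k = Es
    n = A.shape[0]
    X = build_X_sparse(A + 1j * h * E_k, head)
    return expm_multiply(X, padded(b, X.shape[0]))[:n, :].imag / h


rng = np.random.default_rng(7)
n, k, n0 = 6, 3, 2
A = rng.standard_normal((n, n)) / 2
Es = [rng.standard_normal((n, n)) / 2 for _ in range(k)]
b = rng.standard_normal((n, n0))

Lb_ref = expm(build_X_sparse(A, Es).toarray())[:n, -n:] @ b  # dense reference
Lb = frechet_times_b(A, Es, b)
Lb_cs = frechet_times_b_cs(A, Es, b)
```

Only \(n_0\) columns are propagated, so the work scales with \(n_0\) rather than n.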

Kandolf and Relton [20] propose an algorithm for computing the action \(L_f(A,E)b\) of the first Fréchet derivative. Their approach is a particular case of (3.16) with k = 1; that is,

\[ L_f(A,E)b = \begin{bmatrix} I_n & 0_n \end{bmatrix} f\left(\begin{bmatrix} A & E \\ 0_n & A \end{bmatrix}\right)\begin{bmatrix} 0_{n\times n_0} \\ b \end{bmatrix}. \]

In fact, the computation of \(L_f(A,E)b\) can be made more efficient by using the complex step approximation

\[ L_f(A,E)b \approx \frac{1}{h}\operatorname{Im}\bigl(f(A+\mathrm{i}hE)\,b\bigr), \]

which requires the action of n × n matrices instead of 2n × 2n matrices. This approximation is a particular case of (3.17) with k = 1. Similarly, we can implement (3.11) to reduce the size of the acting matrix to \(2^{k-2}n\times 2^{k-2}n\) for k ≥ 3.
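For k = 1 the two routes can be compared directly. The Python sketch below (our example, with f = exp, n = 50 and \(h = 10^{-20}\) chosen for illustration) computes \(L_f(A,E)b\) once from the 2n × 2n block matrix and once by the complex step acting with the n × n matrix A + ihE; scipy.linalg.expm_frechet provides a reference. It does not reproduce the block Krylov method of Kandolf and Relton, only the k = 1 formulas above.

```python
import numpy as np
from scipy.linalg import expm_frechet
from scipy.sparse.linalg import expm_multiply

rng = np.random.default_rng(8)
n = 50
A = rng.standard_normal((n, n)) / np.sqrt(n)
E = rng.standard_normal((n, n)) / np.sqrt(n)
b = rng.standard_normal((n, 1))

# (3.16) with k = 1: act with the 2n x 2n matrix [[A, E], [0, A]] on [0; b].
X1 = np.block([[A, E], [np.zeros((n, n)), A]])
Lb_block = expm_multiply(X1, np.vstack([np.zeros((n, 1)), b]))[:n]

# (3.17) with k = 1: complex step, acting with the n x n matrix A + ihE only.
h = 1e-20
Lb_cs = expm_multiply(A + 1j * h * E, b).imag / h

Lb_ref = expm_frechet(A, E, compute_expm=False) @ b
```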

4 Numerical experiments

In this section, we present several numerical experiments that illustrate how the complex step approximation and the actions of matrix functions reduce the computational cost. We use MATLAB R2017a on a machine with a Core i7 processor. For experiments in which CPU time matters, we restrict MATLAB to a single processor.

Experiment 1

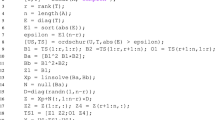

In this experiment we measure the execution time for computing \(L_{f}^{(k)}(A,{\mathcal {E}}_{k})\), k ≥ 2, by the methods described above. We denote by \({{T}}^{(j)}_{k}\) the execution time for computing \(L_{f}^{(k)}(A,{\mathcal {E}}_{k})\) by method j. We take f to be the matrix exponential, implemented in MATLAB as expm, which is based on the algorithm of Al-Mohy and Higham [5, Algorithm 5.1]. We take A = gallery('lesp',5) and generate ten random matrices \(E_k\), k = 1: 10, of size 5 × 5. Method 1 is the algorithm of Higham and Relton [18, Algorithm 3.6], which forms \(X_k\) in (2.3) with \(X_0 = A\), computes \(f(X_k)\), and extracts \(L_{f}^{(k)}(A,{\mathcal {E}}_{k})\) as \([f(X_k)]_{1n}\); its execution time is \({{T}}_{k}^{(1)}\). Method 2 runs the algorithm of Higham and Relton with the initial matrix \(X_0 = A + \mathrm{i}hE_1\), where \(h = 10^{-8}\), and the direction matrices \(E_k\) for k = 2: 10. It computes \(f(X_{k-1})\), from which \(L_{f}^{(k)}(A,{\mathcal {E}}_{k})\approx {{\text {Im}}}[f(X_{k-1})]_{1n}/h\) as shown in (3.9); its execution time is \({{T}}_{k}^{(2)}\). Method 3 uses (3.11) and invokes the algorithm of Higham and Relton for \(f(X_{k-2})\) and \(f(Y_{k-2})\) in the directions \(E_k\), k = 3: 10, with initial matrices \(X_0 = A + \mathrm{i}h_1E_1 + h_2E_2\) and \(Y_0 = A + \mathrm{i}h_1E_1 - h_2E_2\), respectively, where \(h_2 = 10^{-6}\); its execution time is \({{T}}_{k}^{(3)}\). Method 4 evaluates \(L_{f}^{(k)}(A,{\mathcal {E}}_{k})\) via (3.13) using the algorithm of Al-Mohy and Higham [7, Alg. 3.2], expmv, which computes the action \({\mathrm {e}}^{X_{k}}B\), where \(X_k\) is given by (2.3) with \(X_0 = A\) and B is defined in (3.12). The code is available at https://github.com/higham/expmv. Its running time is \({{T}}^{(4)}_{k}\). Method 5 uses expmv with the complex step approximation (3.15); its running time is \({{T}}^{(5)}_{k}\). Finally, method 6 uses (3.11) with expmv; its execution time is \({{T}}^{(6)}_{k}\).

Table 1 presents \({{T}}^{(j)}_{k}\) for k ≥ 2 and j = 1: 6, and Fig. 1 plots the ratios \({{T}}^{(1)}_{k}/{{T}}^{(j)}_{k}\) for j = 2: 6. We use \({{T}}^{(1)}_{k}\) as the reference to show how our approaches based on the complex step and matrix function actions improve on the approach of Higham and Relton. We observe that the ratio \({{T}}^{(1)}_{k}/{{T}}^{(2)}_{k}\), k ≥ 2, approaches 2 as k increases. This supports our cost analysis following Theorem 3.2, namely that the complex step approximation saves about 50% of the computational cost of the algorithm of Higham and Relton. A larger saving is obtained with the mixed derivative scheme: the ratio \({{T}}^{(1)}_{k}/{{T}}^{(3)}_{k}\), k ≥ 3, approaches 8 as k increases, which supports our cost analysis following Lemma 3.1. The ratios \({{T}}^{(1)}_{k}/{{T}}^{(j)}_{k}\) for j = 4, 5, 6, which use actions of the matrix exponential, grow by roughly a factor of 4 as k increases by one. The implementations based on expmv outperform the direct use of expm for k ≥ 6, when the dimensions of the input matrices start to grow rapidly. expmv fully exploits the sparsity of \(X_k\), whereas expm involves explicit matrix powering and solves linear systems with multiple right-hand sides, so the sparsity is quickly lost. In addition, most of the entries of expm(\(X_k\)) are not needed. The three implementations based on expmv behave similarly, with slightly better performance for methods 5 and 6, which implement the complex step and the mixed derivative approximations, respectively.

Fig. 1 The ratios \({{T}}^{(1)}_{k}/{{T}}^{(j)}_{k}\) for k = 2: 10 and j = 2: 6 of the data in Table 1

We now look at the accuracy of these methods. For each k we compute the 1-norm relative errors of \(L_{f}^{(k)}(A,{\mathcal {E}}_{k})\), taking the output of the algorithm of Higham and Relton with expm as the “exact” reference. Figure 2 displays the results.

The relative errors for \(L_{f}^{(k)}(A,{\mathcal {E}}_{k})\) computed using the complex step approximation (3.9) (err1), the matrix function action formula (3.13) (err3), and the complex step approximation with the matrix function action (3.15) (err4) are the smallest, whereas the mixed derivative scheme (3.11) produces less accurate results in both of its implementations (err2 and err5).

The accuracy of the mixed derivative scheme is quite sensitive to changes in the parameter \(h_2\), because the scheme is prone to subtractive cancellation in floating-point arithmetic. Thus, \(h_2\) has to be chosen to balance truncation errors against errors due to subtractive cancellation. Hence, the smallest relative error that can be obtained is of order \(u^{1/2}\), where u is the unit roundoff [15, Section 3.4]. In contrast, the complex step approximation involves no subtraction, so the parameter \(h_1\) can be taken arbitrarily small without affecting the accuracy. The next experiment illustrates these points.

Experiment 2

The purpose of this experiment is to illustrate the robustness of the accuracy of the complex step approximation and a weakness of the mixed derivative approach. We take the matrices A and \(E_k\) from the previous experiment and \(f=\cos\circ\sqrt{\cdot}\), that is, \(f(A)=\cos(\sqrt{A})\). We use the algorithm of Al-Mohy [3, Algorithm 5.1], which computes the action of \(\cos(\sqrt{A})\) on a thin tall matrix B. The MATLAB implementation of this algorithm, funmv, is available at https://github.com/aalmohy/funmv. The algorithm supports half, single, and double precision arithmetic; in this experiment we use double precision. We compute \(L:=L_{f}^{(10)}(A,{\mathcal {E}}_{10})\) using (3.13) and take it as the reference. Then we compute L via the complex step approximation with steps \(h_1 = 10^{-r}\), r = 1: 50. The top part of Fig. 3 shows the 1-norm relative errors for each \(h_1\). The error reaches the order of machine precision for all r ≥ 6, which demonstrates the main advantage of complex step approximations of derivatives. The bottom plot of Fig. 3 shows the sensitivity of the mixed derivative scheme (3.11) to the difference step \(h_2\). We fix \(h_1 = 10^{-50}\) and take \(h_2 = 10^{-r}\), r = 1: 16. The relative error decreases, attains its minimum at r = 4, and then grows as r increases. Selecting an optimal r is a delicate matter; it depends on the inputs and on the method by which f is computed.

Fig. 3 The change of the relative error in (3.11) with the choice of \(h_1\) and \(h_2\)

5 Concluding remarks

The evaluation of higher order Fréchet derivatives of a matrix function is still a challenging problem if the algorithm that computes f produces \(f(X_k)\) as a full matrix. The dimension of \(X_k\), \(2^kn\times 2^kn\), grows exponentially with k. The algorithm of Higham and Relton computes the kth Fréchet derivative for general complex functions and matrices, but it does not exploit the special structure and sparsity pattern of \(X_k\). We improve the efficiency of the computation of the kth Fréchet derivative in two ways: we use the complex step approximation to reduce the size of the problem, and the action of matrix functions to exploit its structure. The complex step approximation applies to functions that are real-valued at real arguments. Our use of the complex step approximation halves the dimension of the input matrix, and the mixed derivative scheme reduces it by three quarters. These implementations lead to significant computational savings compared with the direct use of \(X_k\) proposed by Higham and Relton. Although the mixed derivative approach is computationally efficient, it has to be used with caution since subtractive cancellation is likely to occur: the finite difference step has to be chosen to balance truncation errors against rounding errors. The complex step approximation is reliable, since its accuracy is retained as the complex step parameter becomes smaller.

References

Abreu, R., Stich, D., Morales, J.: On the generalization of the complex step method. J. Comput. Appl. Math. 241, 84–102 (2013)

Ahipasaoglu, S.D., Li, X., Natarajan, K.: A convex optimization approach for computing correlated choice probabilities with many alternatives. IEEE Trans. Automat. Control 64(1), 190–205 (2019)

Al-Mohy, A.H.: A truncated Taylor series algorithm for computing the action of trigonometric and hyperbolic matrix functions. SIAM J. Sci. Comput. 40(3), A1696–A1713 (2018)

Al-Mohy, A.H., Higham, N.J.: Computing the Fréchet derivative of the matrix exponential, with an application to condition number estimation. SIAM J. Matrix Anal. Appl. 30(4), 1639–1657 (2009)

Al-Mohy, A.H., Higham, N.J.: A new scaling and squaring algorithm for the matrix exponential. SIAM J. Matrix Anal. Appl. 31(3), 970–989 (2009)

Al-Mohy, A.H., Higham, N.J.: The complex step approximation to the Fréchet derivative of a matrix function. Numer. Algorithms 53(1), 133–148 (2010)

Al-Mohy, A.H., Higham, N.J.: Computing the action of the matrix exponential, with an application to exponential integrators. SIAM J. Sci. Comput. 33(2), 488–511 (2011)

Al-Mohy, A.H., Higham, N.J., Relton, S.D.: Computing the Fréchet derivative of the matrix logarithm and estimating the condition number. SIAM J. Sci. Comput. 35(4), C394–C410 (2013)

Amat, S., Busquier, S., Gutiérrez, J.M.: Geometric constructions of iterative functions to solve nonlinear equations. J. Comput. Appl. Math. 157(1), 197–205 (2003)

Arioli, M., Benzi, M.: A finite element method for quantum graphs. IMA J. Numer. Anal. 38(3), 1119–1163 (2018)

Benzi, M., Estrada, E., Klymko, C.: Ranking hubs and authorities using matrix functions. Linear Algebra Appl. 438(5), 2447–2474 (2013)

Cardoso, J.A.R.: Computation of the matrix pth root and its Fréchet derivative by integrals. Electron. Trans. Numer. Anal. 39, 414–436 (2012)

Cox, M.G., Harris, P.M.: Numerical analysis for algorithm design in metrology. Technical report 11, Software Support for Metrology Best Practice Guide, National Physical Laboratory, Teddington, UK (2004)

García-Mora, B., Santamaría, C., Rubio, G., Pontones, J.L.: Computing survival functions of the sum of two independent Markov processes: an application to bladder carcinoma treatment. Internat. J. Comput. Math. 91(2), 209–220 (2014)

Higham, N.J.: Functions of matrices: theory and computation. Society for Industrial and Applied Mathematics, Philadelphia, PA, USA (2008)

Higham, N.J., Kandolf, P.: Computing the action of trigonometric and hyperbolic matrix functions. SIAM J. Sci. Comput. 39(2), A613–A627 (2017)

Higham, N.J., Lin, L.: An improved Schur–Padé algorithm for fractional powers of a matrix and their Fréchet derivatives. SIAM J. Matrix Anal. Appl. 34(3), 1341–1360 (2013)

Higham, N.J., Relton, S.D.: Higher order Fréchet derivatives of matrix functions and the level-2 condition number. SIAM J. Matrix Anal. Appl. 35(3), 1019–1037 (2014)

Jeuris, B., Vandebril, R., Vandereycken, B.: A survey and comparison of contemporary algorithms for computing the matrix geometric mean. Electron. Trans. Numer. Anal. 39, 379–402 (2012)

Kandolf, P., Relton, S.D.: A block Krylov method to compute the action of the Fréchet derivative of a matrix function on a vector with applications to condition number estimation. SIAM J. Sci. Comput. 39(4), A1416–A1434 (2017)

Kenney, C.S., Laub, A.J.: A Schur–Fréchet algorithm for computing the logarithm and exponential of a matrix. SIAM J. Matrix Anal. Appl. 19(3), 640–663 (1998)

Lai, K.-L., Crassidis, J.: Extensions of the first and second complex-step derivative approximations. J. Comput. Appl. Math. 219(1), 276–293 (2008)

Lai, K.-L., Crassidis, J., Cheng, Y., Kim, J.: New complex-step derivative approximations with application to second-order Kalman filtering. AIAA Guidance, Navigation, and Control Conference and Exhibit, 5944 (2005)

Lantoine, G., Russell, R.P., Dargent, T.: Using multicomplex variables for automatic computation of high-order derivatives. ACM Trans. Math. Softw. 38, 16:1–16:21 (2012)

Lyness, J.N.: Numerical algorithms based on the theory of complex variable. In: Proceedings of the 1967 22nd National Conference, Washington, D.C., USA, pp. 125–133 (1967)

Lyness, J.N., Moler, C.B.: Numerical differentiation of analytic functions. SIAM J. Numer. Anal. 4(2), 202–210 (1967)

Noferini, V.: A formula for the Fréchet derivative of a generalized matrix function. SIAM J. Matrix Anal. Appl. 38(2), 434–457 (2017)

Powell, S., Arridge, S.R., Leung, T.: Gradient-based quantitative image reconstruction in ultrasound-modulated optical tomography: first harmonic measurement type in a linearised diffusion formulation. IEEE Trans. Med. Imag. 35 (2015)

Rossignac, J., Vinacua, A.: Steady affine motions and morphs. ACM Trans. Graph. 30, 116:1–116:16 (2011)

Squire, W., Trapp, G.: Using complex variables to estimate derivatives of real functions. SIAM Rev. 40(1), 110–112 (1998)

Acknowledgments

We thank the reviewers for their insightful comments and suggestions that helped to improve the presentation of this paper.

Funding

This work received funding from the Deanship of Scientific Research at King Khalid University through the Research Groups Program under Grant No. R.G.P.1/113/40.

Cite this article

Al-Mohy, A.H., Arslan, B. The complex step approximation to the higher order Fréchet derivatives of a matrix function. Numer Algor 87, 1061–1074 (2021). https://doi.org/10.1007/s11075-020-00998-3

DOI: https://doi.org/10.1007/s11075-020-00998-3