Abstract

We derive several explicit formulae for finding infinitely many solutions of the equation \(AXA=XAX\), when A is singular. We start by splitting the equation into a couple of linear matrix equations and then show how the projectors commuting with A can be used to get families containing an infinite number of solutions. Some techniques for determining those projectors are proposed, which use, in particular, the properties of the Drazin inverse, spectral projectors, the matrix sign function, and eigenvalues. We also investigate in detail how well-known similarity transformations like Jordan and Schur decompositions can be used to obtain new representations of the solutions. The computation of solutions by the suggested methods using finite precision arithmetic is also a concern. Difficulties arising in their implementation are identified and ideas to overcome them are discussed. Numerical experiments shed some light on the methods that may be promising for solving numerically the matrix equation.


1 Introduction

This paper deals with the equation

$$AXA=XAX, \tag{1.1}$$

where \(A \in \mathbb {C}^{n\times n}\) is a given complex matrix and \(X\in \mathbb {C}^{n\times n}\) has to be determined. This equation is called the Yang–Baxter-like (YB-like, for short) matrix equation. If A is singular (nonsingular), then the equation (1.1) is said to be the singular (nonsingular) Yang–Baxter-like matrix equation. The equation (1.1) has its origins in the classical papers by Yang [30] and Baxter [1]. Their pioneering works have led to extensive research on the various forms of the Yang–Baxter equation arising in braid groups, knot theory and quantum theory (see, e.g., the books [22, 31]). The YB-like equation (1.1) is also known as the star-triangle-like equation in statistical mechanics; see, e.g., [20, Part III].

A possible way of solving (1.1) is to multiply out both sides, which leads to a system of \(n^2\) quadratic equations with \(n^2\) variables. However, this strategy may have little practical interest, unless n is very small, say \(n=2\) or \(n=3.\)

Note that the YB-like matrix equation (1.1) has at least two trivial solutions: \(X = \mathbf {0}\) and \( X = A.\) Of course, the interest lies in calculating non-trivial solutions. Discovering collections of solutions of (1.1), or characterizing its full set of solutions, has attracted the interest of many researchers in the last few years. Since a complete description of the solution set for an arbitrary matrix A seems very challenging, many authors have instead obtained such descriptions by imposing restrictive conditions on A. See, for instance, [5, 19] for A idempotent, [7, 9] for A diagonalizable, and [28] for matrices with rank one.

Our interest in this paper is to solve the equation for a general singular matrix A, without additional assumptions. We recall that, among the published works on the YB-like equation (1.1), few are devoted to the numerical computation of its solutions. With this paper, we expect to contribute to filling this gap. In our recent paper [16], we proposed efficient and stable iterative methods for finding commuting solutions for an arbitrary matrix A. Nevertheless, those methods are not designed for determining non-commuting solutions, and there are a few cases where it is difficult to choose a good initial approximation (e.g., when A is non-diagonalizable).

The principal contributions of this work regarding the solutions of the singular YB-like equation \(AXA=XAX\) are:

(i) To establish a new connection between the YB-like equation and a set of two linear matrix equations whose general solution is known; this connection is also valid for a nonsingular matrix A (cf. Sect. 3);

(ii) To explain clearly the role of projectors commuting with A in the process of deriving new families containing infinitely many solutions and to discuss how to find such projectors (cf. Sect. 4);

(iii) To show how similarity transformations can be utilized for obtaining more explicit representations of the solutions (cf. Sect. 7);

(iv) To propose effective numerical methods for solving the singular YB-like equation, along with a thorough discussion of their numerical behaviour and practical hints for implementation in MATLAB (cf. Sects. 8 and 9).

By \({{\mathbf {0}}}\) and I, we mean respectively the zero and identity matrices of appropriate orders. For a given matrix Y, we denote by N(Y) and R(Y), the null space and the range of Y, respectively; \(v(\lambda )\) stands for the index of a complex number \(\lambda \) with respect to a square matrix Y, that is, \(v(\lambda )\) is the index of the matrix \(Y-\lambda I\) (check the beginning of Sect. 2 for the definition of the index of a matrix).

2 Basics

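Given an arbitrary matrix \( A\in \mathbb {C}^{n\times n},\) consider the following conditions, where \(X\in \mathbb {C}^{n\times n}\) is unknown:

$$\begin{aligned} &\text {(gi.1)}\ \ AXA=A, \qquad \text {(gi.2)}\ \ XAX=X, \qquad \text {(gi.3)}\ \ (AX)^*=AX, \\ &\text {(gi.4)}\ \ (XA)^*=XA, \qquad \text {(gi.5)}\ \ AX=XA, \qquad \text {(gi.6)}\ \ A^{\ell +1}X=A^{\ell },\ \ \ell ={\mathrm {ind}}(A), \end{aligned}$$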

where \(Y^*\) denotes the conjugate transpose of the matrix Y and \(\text {ind}(A)\) stands for the index of a square matrix A, which is the smallest non-negative integer \(\ell \) such that \({\mathrm {rank}}(A^\ell )={\mathrm {rank}}(A^{\ell +1}).\) If \(m(\lambda )\) is the minimal polynomial of A, then \(\ell \) is the multiplicity of \(\lambda = 0\) as a zero of \(m(\lambda )\) [2, p. 154]. Thus, \(\ell \le n,\) where n is the order of A.

A complex matrix \(X \in \mathbb {C}^{n\times n}\) satisfying the condition (gi.2) is called a generalized outer inverse or a \(\{2\}\)-inverse of A, while the unique matrix X verifying the conditions (gi.1) to (gi.4) is the well-known Moore–Penrose inverse of A, which is denoted by \(A^\dag \) [23]; the unique matrix X obeying the conditions (gi.2), (gi.5) and (gi.6) is the Drazin inverse, which is denoted by \(A^D\) and is given by

$$A^D=A^\ell \left( A^{2\ell +1}\right) ^\dag A^\ell , \tag{2.1}$$

where \(\ell \ge {\mathrm {ind}}(A).\) Other instances of generalized inverses may be defined [2], but are not used in this paper. We refer the reader to [2, 17, Chapter 4], and [18, Sect. 3.6] for the theory of generalized inverses. For both theory and computation, see [29].
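As a small worked illustration (a hand computation on a hypothetical matrix, not taken from the original text), consider the representation \(A^D=A^\ell (A^{2\ell +1})^\dag A^\ell \), valid for \(\ell \ge {\mathrm {ind}}(A)\), applied to \(A=\left[ \begin{array}{cc} 2 & 1 \\ 0 & 0 \end{array}\right] .\) Since \(A^2=2A\), we have \({\mathrm {rank}}(A)={\mathrm {rank}}(A^2)=1\), so \({\mathrm {ind}}(A)=1\) and \(A^3=4A.\) Using \((cM)^\dag =c^{-1}M^\dag \) for \(c\ne 0\) and \(AA^\dag A=A\), we get

$$A^D=A\left( A^{3}\right) ^\dag A=\tfrac{1}{4}\,AA^\dag A=\tfrac{1}{4}\,A=\left[ \begin{array}{cc} 1/2 & 1/4 \\ 0 & 0 \end{array}\right] .$$

One can check directly that \(X=A/4\) satisfies \(XAX=X\), \(AX=XA\) and \(A^{2}X=A\), without ever forming \(A^\dag \) explicitly.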

In the following, we revisit two important matrix decompositions, the Jordan and the Schur decompositions, whose proofs can be found in many Linear Algebra and Matrix Theory textbooks (see, for instance, [14]). Both decompositions will be used later in Sect. 7 to detect explicit solutions of the singular matrix equation \(AXA=XAX.\) In addition, due to the numerical stability of the Schur decomposition, it is the basis of the algorithm that will be displayed in Fig. 1.

Lemma 2.1

(Jordan Canonical Form). Let \(A\in \mathbb {C}^{n\times n}\) and let \(J:={\mathrm {diag}}(J_{n_1}(\lambda _1), \ldots , J_{n_s}(\lambda _s))\), (\(n_1+\cdots +n_s=n\)), where \( \lambda _1, \ldots ,\lambda _s\) are the eigenvalues of A, not necessarily distinct, and \(J_k(\lambda )\in \mathbb {C}^{k\times k}\) denotes a Jordan block of order k. Then there exists a nonsingular matrix \(S\in \mathbb {C}^{n\times n}\) such that \(\,A=SJS^{-1}.\,\) The Jordan matrix J is unique up to the ordering of the blocks \(J_k,\) but the transforming matrix S is not.

For a singular matrix A of order n with \({\mathrm {rank}}(A)=r<n,\) it is possible to reorder the Jordan blocks in a way that those blocks associated with the eigenvalue 0 appear in the bottom-right of J with decreasing size, that is, \(J:={\mathrm {diag}}\left( J_{n_1}(\lambda _1), \ldots , J_{n_p}(\lambda _p),J_{n_{p+1}}(0), \ldots , J_{n_s}(0)\right) \), with \(n_{p+1}\ge \ldots \ge n_s\) (\(0\le p\le s\)). So A can be decomposed in the form

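$$A=S\left[ \begin{array}{cc} J_1 & {{\mathbf {0}}} \\ {{\mathbf {0}}} & J_0 \end{array}\right] S^{-1}, \tag{2.2}$$

where \(J_1={\mathrm {diag}}\left( J_{n_1}(\lambda _1), \ldots , J_{n_p}(\lambda _p)\right) \) is nonsingular and \(J_0={\mathrm {diag}}\left( J_{n_{p+1}}(0), \ldots , J_{n_s}(0)\right) \) is nilpotent.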

Lemma 2.2

(Schur Decomposition). For a given matrix \(A\in \mathbb {C}^{n\times n}\), there exists a unitary matrix U and an upper triangular T such that \(\,A=UTU^{*},\,\) where \(U^{*}\) stands for the conjugate transpose of U. The matrices U and T are not unique.

If A is singular, then by reordering the eigenvalues on the diagonal of T so that the zero eigenvalues appear in the bottom-right, the Schur decomposition of A can be written in the form

$$A=U\left[ \begin{array}{cc} B_1 & B_2 \\ {{\mathbf {0}}} & {{\mathbf {0}}} \end{array}\right] U^{*}, \tag{2.3}$$

where \(B_1\) is \(s \times s \) and \(B_2\) is \(s \times (n-s),\) with \( r={\mathrm {rank}}(A) \le s \le n-1.\) Note that \(B_2\) is not, in general, the zero matrix.

Now we recall a lemma that provides an explicit solution for a well-known pair of linear matrix equations.

Lemma 2.3

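([4, 24]). Let \(A, B, C, D\in \mathbb {C}^{n\times n}.\) The pair of matrix equations \(AX=B,\ XC=D\) is consistent if and only if

$$AA^\dag B=B, \qquad DC^\dag C=D, \qquad AD=BC,$$

and its general solution is given by

$$X=A^\dag B+(I-A^\dag A)DC^\dag +(I-A^\dag A)Y(I-CC^\dag ), \tag{2.4}$$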

where Y is an arbitrary \(n\times n\) complex matrix.

Necessary and sufficient conditions for the equations \(AX=B,\ XC=D\) to have a common solution are attributed to Cecioni [4] and the expression (2.4) for a general common solution to Rao and Mitra [24, p. 25]. See also [2, p. 54] and [23].

3 Splitting the YB-Like Matrix Equation

In the next lemma, we split a general YB-like matrix equation into a system of matrix equations similar to the one in Lemma 2.3. Such a result will be useful in the next section.

Lemma 3.1

Let \(A\in \mathbb {C}^{n\times n}\) be given and let \(B\in \mathbb {C}^{n\times n}\) be such that the set of matrix equations

$$AX=B, \qquad XB=BA \tag{3.1}$$

has at least one solution \(X_0.\) Then \(X_0\) is a solution of (1.1). Conversely, if \(X_0\) is a solution of (1.1), then there exists a matrix B such that

$$AX_0=B, \qquad X_0B=BA.$$

Proof

If \(X_0\) is a solution of the simultaneous equations in (3.1), then \(AX_0=B \) and \(X_0B=BA.\) Therefore \(\,AX_0A=BA=X_0B=X_0AX_0.\,\) Conversely, suppose that \(X_0\) is a solution of \(AXA=XAX\), i.e. \(AX_0A=X_0AX_0.\) Letting \(B:=AX_0\), we have \(BA=X_0B\), which implies that \(X_0\) is a solution of (3.1). \(\square \)

Note that Lemma 3.1 is also valid for the nonsingular YB-like matrix equation. In view of Lemmas 2.3 and 3.1, we must look for a matrix B that makes (3.1) consistent, that is,

$$AA^\dag B=B, \qquad BAB^\dag B=BA, \qquad ABA=B^2. \tag{3.2}$$

For a given singular matrix A and any B satisfying (3.2), the matrices of the form

$$X=A^\dag B+(I-A^\dag A)BAB^\dag +(I-A^\dag A)Y(I-BB^\dag ) \tag{3.3}$$

constitute an infinite family of solutions to (1.1), where \(Y\in \mathbb {C}^{n\times n}\) is arbitrary.
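The construction can be tried out on a small case. The sketch below is illustrative only and rests on several assumptions: the consistency conditions are taken to be those of Lemma 2.3 applied to \(AX=B,\ XB=BA\) (namely \(AA^\dag B=B\), \(BAB^\dag B=BA\), \(ABA=B^2\)), the family is taken to be \(X=A^\dag B+(I-A^\dag A)BAB^\dag +(I-A^\dag A)Y(I-BB^\dag )\), and A is the \(3\times 3\) all-ones matrix, for which \(A^2=3A\) gives \(A^\dag =A/9\) and \((A^2)^\dag =A/27\) (both checked in the code through the four Penrose conditions). All helper names are hypothetical, and exact rational arithmetic is used so that equalities can be tested exactly.

```python
from fractions import Fraction


def matmul(P, Q):
    """Product of two matrices stored as lists of rows."""
    return [[sum(p * q for p, q in zip(row, col)) for col in zip(*Q)] for row in P]


def add(P, Q):
    return [[p + q for p, q in zip(rp, rq)] for rp, rq in zip(P, Q)]


def sub(P, Q):
    return [[p - q for p, q in zip(rp, rq)] for rp, rq in zip(P, Q)]


def scale(c, P):
    return [[c * p for p in row] for row in P]


def transpose(P):
    return [list(col) for col in zip(*P)]


def identity(n):
    return [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]


def is_pinv(M, G):
    """Check the four Penrose conditions for a real matrix M and a candidate G."""
    MG, GM = matmul(M, G), matmul(G, M)
    return (matmul(MG, M) == M and matmul(GM, G) == G
            and transpose(MG) == MG and transpose(GM) == GM)


def is_consistent(A, A_pinv, B, B_pinv):
    """Assumed conditions: A A^+ B = B, B A B^+ B = B A, A B A = B^2."""
    BA = matmul(B, A)
    return (matmul(matmul(A, A_pinv), B) == B
            and matmul(matmul(BA, B_pinv), B) == BA
            and matmul(matmul(A, B), A) == matmul(B, B))


def family_member(A, A_pinv, B, B_pinv, Y):
    """X = A^+ B + (I - A^+ A) B A B^+ + (I - A^+ A) Y (I - B B^+)."""
    I = identity(len(A))
    left = sub(I, matmul(A_pinv, A))
    right = sub(I, matmul(B, B_pinv))
    particular = add(matmul(A_pinv, B),
                     matmul(left, matmul(matmul(B, A), B_pinv)))
    return add(particular, matmul(matmul(left, Y), right))


def solves_yb(A, X):
    return matmul(matmul(A, X), A) == matmul(matmul(X, A), X)


A = [[Fraction(1)] * 3 for _ in range(3)]        # singular, A^2 = 3A
A_pinv = scale(Fraction(1, 9), A)                # A^+ = A/9
B = matmul(A, A)                                 # choice B = A^2
B_pinv = scale(Fraction(1, 27), A)               # (A^2)^+ = A/27
zero = [[Fraction(0)] * 3 for _ in range(3)]     # choice B = 0, with 0^+ = 0
Y = [[Fraction(v) for v in row] for row in ([1, 2, 3], [4, 5, 6], [7, 8, 10])]

X = family_member(A, A_pinv, B, B_pinv, Y)       # member of the B = A^2 family
X0 = family_member(A, A_pinv, zero, zero, Y)     # member of the B = 0 family

print(is_consistent(A, A_pinv, B, B_pinv), solves_yb(A, X), solves_yb(A, X0))
print(matmul(A, X0) == matmul(X0, A))            # does X0 commute with A?
```

Varying Y sweeps out the family; for this particular Y, the member built from \(B={{\mathbf {0}}}\) does not commute with A, which shows that the construction is not restricted to commuting solutions. In floating-point practice one would replace the hand-supplied pseudoinverses by a numerical routine.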

4 Commuting Projectors-Based Solutions

Finding all the matrices B in (3.2) may be a very hard task, apparently as difficult as solving the YB-like matrix equation itself. However, if A is singular and B is taken as in the following lemma, then B is guaranteed to satisfy the conditions in (3.2). Thus, many collections containing infinitely many solutions to the singular YB-like matrix equation can be obtained, as shown below.

Lemma 4.1

Let \(A\in \mathbb {C}^{n\times n}\) be singular and P be any idempotent matrix commuting with A, that is, \(P^2=P\) and \(PA=AP.\) Then, for \(B\in \left\{ A^2 P,\, A^2 (I-P)\right\} \), any matrix X obtained as in (3.3) is a solution of the singular YB-like matrix equation (1.1).

Proof

If \(B=A^2 P\), then the equality \(PA=AP\) implies that B commutes with A. Using the equalities \(P^2=P\) and \(BB^\dag B=B\), it is not difficult to show that the conditions in (3.2) hold for the matrix B and hence the result follows. Similar arguments apply to \(B=A^2(I-P).\) \(\square \)
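The verification left to the reader can be sketched as follows, under the assumption that the conditions in (3.2) are the consistency conditions of Lemma 2.3 applied to \(AX=B,\ XB=BA\), namely \(AA^\dag B=B\), \(BAB^\dag B=BA\) and \(ABA=B^2.\) For \(B=A^2P\) with \(P^2=P\) and \(PA=AP\) (so that \(AB=BA\)):

$$\begin{aligned} AA^\dag B&=(AA^\dag A)AP=A^2P=B, \\ BAB^\dag B&=A\,(BB^\dag B)=AB=BA, \\ ABA&=A^4P=A^4P^2=(A^2P)^2=B^2. \end{aligned}$$

Replacing P by \(I-P\), which is also idempotent and commutes with A, gives the case \(B=A^2(I-P).\)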

By Lemma 4.1, we must look for matrices P that are idempotent and commute with a given singular matrix A, to define B. Below, several cases with examples of matrices B satisfying the conditions of Lemma 4.1 will be presented when A is a singular matrix.

Case 1. \(B\in \left\{ {{\mathbf {0}}},\, A^2\right\} .\)

This case arises, for instance, when P is a trivial commuting projector, that is, \(P={{\mathbf {0}}}\) or \(P=I.\) Let us assume first that \(B={{\mathbf {0}}}.\) Now the system (3.1) reduces to the matrix equation \(AX={{\mathbf {0}}}\), which is clearly solvable. From (3.3), its general solution can be determined through the formula

$$X=(I-A^\dag A)Y, \qquad Y\in \mathbb {C}^{n\times n}\ \text {arbitrary}. \tag{4.1}$$

Geometrically speaking, the set of matrices constructed by (4.1) is a vector subspace of \(\mathbb {C}^{n\times n}\), and hence sums and scalar multiples of solutions of this form are again solutions of the YB-like matrix equation. Since \({\mathrm {rank}}(A)={\mathrm {rank}}(A^\dag A)\) and \({\mathrm {rank}}(I-A^\dag A)=n-{\mathrm {rank}}(A)\), such a subspace has dimension equal to \(n(n-{\mathrm {rank}}(A)).\)

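Now, if we assume that \(B=A^2,\) then, by (3.3),

$$X=A^\dag A^2+(I-A^\dag A)A^3\left( A^2\right) ^\dag +(I-A^\dag A)Y\left( I-A^2\left( A^2\right) ^\dag \right) , \tag{4.2}$$

where \(Y\in \mathbb {C}^{n\times n}\) is arbitrary, gives another family of solutions to (1.1).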

In the following result, we identify the solutions defined by (4.1) and (4.2) that commute with A.

Proposition 4.2

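Let \(A\in \mathbb {C}^{n\times n}\) be singular. For arbitrary matrices \(Y_1,Y_2\in \mathbb {C}^{n\times n}\), the following formulae generate solutions of the equation (1.1) that commute with \(A\):

$$X_1=(I-A^\dag A)Y_1(I-AA^\dag ), \tag{4.3}$$

$$X_2=A^\dag A^2+(I-A^\dag A)A^2A^\dag +(I-A^\dag A)Y_2(I-AA^\dag ). \tag{4.4}$$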

Proof

Every solution \(X_1\) of \(AX={{\mathbf {0}}}\), \(XA={{\mathbf {0}}}\) belongs to the solution space defined by \(AX={{\mathbf {0}}}\), whose general solution is determined by (4.1). Let \(X_1\) be a common solution of the equations \(AX={{\mathbf {0}}}\), \(XA={{\mathbf {0}}}.\) Then \(X_1\) commutes with A and satisfies \(AXA=XAX.\) By Lemma 2.3, \(X_1\) is of the form (4.3).

Clearly, the set of matrix equations \(AX=A^2,\ XA=A^2\) is consistent and its solution set agrees with that of \(AX=A^2,\ XA^2=A^3\), which is delivered by (4.2). Hence, \(AXA=XAX.\) If \(X_2\) is a solution of the coupled matrix equations \(AX=A^2,\ XA=A^2\), then it is a commuting solution of (1.1) and, again by Lemma 2.3, \(X_2\) is given by (4.4). \(\square \)

The following lemma gives theoretical support for Case 2.

Lemma 4.3

Let \(A \in \mathbb {C}^{n \times n}\) be a given singular matrix and let \(M \in \mathbb {C}^{n \times n}\) be any matrix such that \(AM=MA.\) Then \(P_M=MM^D\) is an idempotent matrix commuting with A.

Proof

Using the properties of the Drazin inverse, in particular, \(M^DMM^D=M^D\), it is easily proven that \(P_M\) is an idempotent matrix. It follows from Theorem 7 in [2, Chapter 4] that \(M^D\) is a polynomial in M and hence \(M^DA=AM^D\), because \(AM=MA.\) This shows that \(P_M\) commutes with A. \(\square \)

Case 2. \(B\in \left\{ A^2P_M,\, A^2\left( I-P_M\right) \right\} .\)

It should be mentioned that the matrix M in Lemma 4.3 must be singular to avoid trivial cases. Examples of such matrices M can be taken from the infinite collection

for each \( \lambda _i \in \sigma (A)\), where \(\sigma (A)\) is the spectrum of A. A trivial example is to take \(M=A\) yielding \(P_M=AA^D\).

The next case (Case 3) involves the matrix sign function. Before proceeding, let us recall its definition [13, Chapter 5]. Let the \(n\times n\) matrix A have the Jordan canonical form \(A=ZJZ^{-1}\) so that \(J= \left[ \begin{array}{cc} J^{(1)} &{} {{\mathbf {0}}} \\ {{\mathbf {0}}} &{} J^{(2)} \end{array}\right] \), where the eigenvalues of \( J^{(1)} \in \mathbb {C}^{p \times p}\) lie in open left half-plane and those of \( J^{(2)} \in \mathbb {C}^{q \times q}\) lie in open right half-plane. Then \(S:={\mathrm {sign}}(A)=Z\begin{bmatrix}-I_{p\times p} &{}{} \mathbf {0} \\ \mathbf {0} &{}{} I_{q\times q} \end{bmatrix}Z^{-1}\) is named as the matrix sign function of A. If A has any eigenvalue on the imaginary axis, then \({\mathrm {sign}}(A)\) is undefined. Here \(S^2=I\) and \(AS=SA.\) Note also that \(P=(I+ S)/2\) and \(Q=(I- S)/2\) are projectors onto the invariant subspaces associated with the eigenvalues in the right half-plane and left half-plane, respectively. For more properties and approximation of the matrix sign function, see [13].

Since A is assumed to be singular in this work, we cannot use \({\mathrm {sign}}(A)\) directly because it is undefined. To overcome this, the authors of [25] shifted and scaled the eigenvalues of A so that the matrix sign function of the resulting matrices exists and commutes with A. Nevertheless, a systematic, algorithmic approach for generating these new matrices is lacking.

Towards this aim, let us consider the matrix \(A_\alpha :=\alpha I+A\), where \(\alpha \) is a suitable complex number. Note that the scalar \(\alpha \) must be carefully chosen so that the spectrum of \(A_\alpha \) does not intersect the imaginary axis. Assuming that A has at least one eigenvalue that does not lie on the imaginary axis, a simple procedure for calculating values of \(\alpha \) that yield the maximal number of projectors is as follows:

1. Let \(\{r_1,\ldots ,r_s\}\) be the set of distinct real parts of the eigenvalues of A written in ascending order, that is, \(r_1<r_2<\cdots <r_s;\)

2. For \(k=1,\ldots ,s-1\), choose \(\alpha _k = -(r_k+r_{k+1})/2.\)

This way of calculating \(\alpha _k\) guarantees that the eigenvalues of the successive \(A_{\alpha _k}\) do not lie on the imaginary axis and avoids the trivial situations in which the spectrum of \(A_{\alpha _k}\) lies entirely in the open right half-plane or entirely in the open left half-plane, where \({\mathrm {sign}}(A_{\alpha _k})=I\) or \({\mathrm {sign}}(A_{\alpha _k})=-I\), respectively. If \(S_{\alpha }:={\mathrm {sign}}(A_{\alpha })\), we see that \(S_{\alpha _k}\) and A commute, because \(S_{\alpha _k}\) commutes with \(A_{\alpha _k}=\alpha _k I+A.\) Hence \(\left( I+S_{\alpha _k}\right) /2\) and \(\left( I-S_{\alpha _k}\right) /2\) are projectors commuting with A. In the particular case when all the eigenvalues of A are purely imaginary, we may consider \(\widetilde{A} = -iA\) and then apply the above procedure to \(\widetilde{A}\) instead of A.
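The two-step choice of shifts can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names and the tolerance `tol` used to decide when two real parts coincide are assumptions, and the eigenvalues are supposed to be already available (for instance from an eigenvalue solver). The matrix sign function itself is not computed here.

```python
def shift_candidates(eigenvalues, tol=1e-12):
    """Return the shifts alpha_k = -(r_k + r_{k+1}) / 2 for the distinct,
    sorted real parts r_1 < ... < r_s of the given eigenvalues."""
    real_parts = sorted(complex(ev).real for ev in eigenvalues)
    distinct = []
    for r in real_parts:
        # Real parts closer than `tol` are treated as equal (assumed tolerance).
        if not distinct or r - distinct[-1] > tol:
            distinct.append(r)
    return [-(lo + hi) / 2 for lo, hi in zip(distinct, distinct[1:])]


def splits_spectrum(eigenvalues, alpha):
    """True if the shifted eigenvalues alpha + lambda avoid the imaginary axis
    and lie on both sides of it, so sign(alpha*I + A) exists and is not +-I."""
    shifted = [complex(ev).real + alpha for ev in eigenvalues]
    return (all(x != 0 for x in shifted)
            and any(x > 0 for x in shifted)
            and any(x < 0 for x in shifted))


spectrum = [0, 1, 2]                      # a singular matrix with three real eigenvalues
alphas = shift_candidates(spectrum)
print(alphas)
print(all(splits_spectrum(spectrum, a) for a in alphas))
```

If all eigenvalues share the same real part, the list of shifts is empty, which corresponds to the situation where the rotation \(\widetilde{A}=-iA\) has to be tried first.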

Case 3. \(B \in \{A^2\left( \frac{I+S_{\alpha }}{2}\right) , \, {A^2\left( \frac{I-S_{\alpha }}{2}\right) }\}.\)

The upcoming case depends on the spectral projectors of A, which have played an important role in the theory of the YB-like matrix equation [6,7,8]. Yet, there is no definite procedure for computing them in computer algebra systems. The next proposition helps to settle this.

Proposition 4.4

Let \(\lambda _1,\ldots ,\lambda _s\) be the distinct eigenvalues of \(A \in \mathbb {C}^{n\times n}\) and assume that \(G_{\lambda _i}\) denotes the spectral projector onto the generalized eigenspace \(N((A-\lambda _iI)^{v(\lambda _i)})\) along \(R((A-\lambda _iI)^{v(\lambda _i)})\), associated with the eigenvalue \( \lambda _i \). Then, for any \(i=1,\ldots ,s\), \(G_{\lambda _i}\) can be represented as \(G_{\lambda _i} =I-(A-\lambda _i I)(A-\lambda _i I)^D\), where \(v(\lambda _i)\) is the index of \(\lambda _i.\)

Proof

Let \(r_i:={\mathrm {rank}}((A-\lambda _i I)^{v(\lambda _i)}).\) Since \(N((A-\lambda _iI)^{v(\lambda _i)})\) and \(R((A-\lambda _iI)^{v(\lambda _i)})\) are complementary subspaces of \( \mathbb {C}^{n},\) the spectral projector \(G_{\lambda _i}\) onto \(N((A-\lambda _iI)^{v(\lambda _i)})\) along \(R((A-\lambda _iI)^{v(\lambda _i)})\) can be written as: \( G_{\lambda _i}=Q_i \, {\mathrm {diag}}\left( {{\mathbf {0}}}_{r_i \times r_i},\right. \) \(\, \left. I_{(n-r_i) \times (n-r_i)}\right) \, Q_i^{-1},\) with \(Q_i=[X_i | Y_i]\), in which the columns of \(X_i\) and \(Y_i\) are bases for \(R((A-\lambda _iI)^{v(\lambda _i)})\) and \(N((A-\lambda _iI)^{v(\lambda _i)})\), respectively; see, for example, [2] and [21, Chapters 5 and 7].

On the other hand, the core-nilpotent decomposition of the matrix \((A-\lambda _i I)\) via \(Q_i\) can be written in the form \( Q_i^{-1}(A-\lambda _iI)Q_i={\mathrm {diag}}(C_{r_i \times r_i}, \, N_{(n-r_i) \times (n-r_i)}), \) where \(C_{r_i \times r_i}\) is nonsingular, and \( N_{(n-r_i) \times (n-r_i)}\) is nilpotent of index \(v(\lambda _i),\) [21, Chapter 5, p. 397]. Now we have, \( (A-\lambda _iI)=Q_i \, {\mathrm {diag}}\left( C_{r_i \times r_i}, \, N_{(n-r_i) \times (n-r_i)}\right) \, Q_i^{-1}\) and hence the Drazin inverse of \( (A-\lambda _iI)\) is given by \( (A-\lambda _iI)^D=Q_i \, {\mathrm {diag}}\left( C^{-1}_{r_i \times r_i}, \, {{\mathbf {0}}}_{(n-r_i) \times (n-r_i)} \right) \, Q_i^{-1},\) [21, Chapter 5, p. 399]. This further implies that \( I-(A-\lambda _i I)(A-\lambda _i I)^D=Q_i \, {\mathrm {diag}}({{\mathbf {0}}}_{r_i \times r_i}, \, I_{(n-r_i) \times (n-r_i)}) \, Q_i^{-1},\) which coincides with \(G_{\lambda _i}.\) \(\square \)
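A small worked example may help to fix ideas (a hand computation on a hypothetical matrix, not taken from the original text). Let \(A=\left[ \begin{array}{cc} 2 & 1 \\ 0 & 0 \end{array}\right] \), with eigenvalues 0 and 2. Since \(A^2=2A\), the matrix \(A/4\) satisfies the defining conditions of the Drazin inverse, so \(A^D=A/4.\) Likewise, \(M:=A-2I\) satisfies \(M^2=-2M\), so \(M^D=M/4.\) Therefore

$$\begin{aligned} G_{0}&=I-AA^D=I-\tfrac{1}{2}A=\left[ \begin{array}{cc} 0 & -1/2 \\ 0 & 1 \end{array}\right] , \\ G_{2}&=I-MM^D=I+\tfrac{1}{2}M=\left[ \begin{array}{cc} 1 & 1/2 \\ 0 & 0 \end{array}\right] . \end{aligned}$$

Both matrices are idempotent and commute with A, and they satisfy \(G_0G_2={{\mathbf {0}}}\) and \(G_0+G_2=I.\) The columns of \(G_0\) span \(N(A)\), as expected of the projector associated with the eigenvalue 0.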

Now, we revisit a well-known result, whose proof can be found in the literature (e.g., [2, 8, 21]).

Lemma 4.5

Let us assume that the notations and conditions of the Proposition 4.4 are valid. Then:

(a) \(G_{\lambda _i}^2=G_{\lambda _i}\), \(AG_{\lambda _i}=G_{\lambda _i}A\), and \(G_{\lambda _i}G_{\lambda _j}={{\mathbf {0}}}\), for \(i \ne j\);

(b) \(P_{\lambda _i}=I-G_{\lambda _i}=(A-\lambda _i I)(A-\lambda _i I)^D\) is the complementary projector onto \(R((A-\lambda _iI)^{v(\lambda _i)})\) along \(N((A-\lambda _iI)^{v(\lambda _i)})\) commuting with A. In addition, \(P_{\lambda _i}P_{\lambda _j}=P_{\lambda _j}P_{\lambda _i}\);

(c) \(\sum _{i=1}^s G_{\lambda _i}=I\);

(d) The sum of any number of matrices among the \(G_{\lambda _i}\)’s is also a commuting projector with A. Thus, for any nonempty subset \(\Gamma \) of \(\{1,2, \dotsc , s\}\), \(E_\Gamma \) is a projector commuting with A, where \(E_\Gamma :=\sum _{i\in \Gamma } G_{\lambda _i}\);

(e) \(P_{\lambda _i}=\sum _{j=1,\, j \ne i}^{s} G_{\lambda _j}.\)

Note that the number of projectors \(E_\Gamma \)’s is \(2^s-1.\) Next, in Case 4, we present a new choice of B in Lemma 4.1, using the projectors described above.

Case 4. \(B \in \{A^2E_\Gamma \}.\)

To derive the last case (see Case 5 below), we use again the matrix sign function. It is based on the following result.

Proposition 4.6

Let \(\lambda _1,\ldots ,\lambda _s\) be the distinct eigenvalues of \(A \in \mathbb {C}^{n\times n}\). For any scalar \(\alpha \) and \(i=1,\ldots , s\), the eigenvalues of the matrix \(\hat{A}{_{\lambda _i}}=A+(\alpha -\lambda _i)G_{\lambda _i}\), consist of those of A, except that one eigenvalue \(\lambda _i\) of A is replaced by \(\alpha .\) Moreover, if \({\mathrm {sign}}(\hat{A}{_{\lambda _i}})\) exists, then it commutes with A.

Proof

Let \(A=P\, {\mathrm {diag}}\left( \widetilde{J}_1, \ldots , \widetilde{J}_i, \ldots , \widetilde{J}_s\right) \, P^{-1} \) be the Jordan decomposition of A, where \(\widetilde{J}_i\) is the Jordan segment corresponding to \(\lambda _i\) and P is nonsingular. Here \( A-\lambda _i I=P \,{\mathrm {diag}}\left( \widetilde{J}_1-\lambda _i \widetilde{I}_1, \ldots , \widetilde{J}_i-\lambda _i \widetilde{I}_i, \ldots , \widetilde{J}_s-\lambda _i \widetilde{I}_s\right) \, P^{-1},\) where \(\widetilde{I}_{i}\) is the identity matrix of the same order as \(\widetilde{J}_i.\) From [2, Chapter 4, Theorem 8], it follows that \((A-\lambda _i I)^D=P \, {\mathrm {diag}}\left( (\widetilde{J}_1-\lambda _i \widetilde{I}_1)^{-1}, \ldots ,{{\mathbf {0}}}, \ldots , (\widetilde{J}_s-\lambda _i \widetilde{I}_s)^{-1} \right) \, P^{-1}\) and we get \(G_{\lambda _i} =I-(A-\lambda _i I)(A-\lambda _i I)^D=P \, {\mathrm {diag}}\left( {{\mathbf {0}}}, \ldots ,\widetilde{I}_i, \right. \) \(\left. \ldots , {{\mathbf {0}}} \right) \, P^{-1}.\) Thus,

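$$\hat{A}{_{\lambda _i}}=A+(\alpha -\lambda _i)G_{\lambda _i}=P\, {\mathrm {diag}}\left( \widetilde{J}_1, \ldots , \widetilde{J}_i(\alpha ), \ldots , \widetilde{J}_s\right) \, P^{-1},$$

where \(\widetilde{J}_i(\alpha )\) is the matrix \(\widetilde{J}_i\) with \(\alpha \) in the place of \(\lambda _i\). This shows that the eigenvalues of the matrix \(\hat{A}{_{\lambda _i}}\) coincide with those of A with the exception that \(\lambda _i\) is replaced by \(\alpha \) in \(\hat{A}{_{\lambda _i}}.\) This proves our first claim in the proposition.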

It is clear that no eigenvalue of \(\hat{A}{_{\lambda _i}}\) lies on the imaginary axis, since we are assuming that \({\mathrm {sign}}(\hat{A}{_{\lambda _i}})\) exists. Let \(\hat{S}{_{\lambda _i}}={\mathrm {sign}}(\hat{A}{_{\lambda _i}})=P \, {\mathrm {diag}}\left( {\mathrm {sign}}(\widetilde{J}_1), \ldots , {\mathrm {sign}}(\widetilde{J}_i(\alpha )), \ldots , {\mathrm {sign}}(\widetilde{J}_s) \right) \, P^{-1}\). Then a simple calculation shows that \(\hat{S}{_{\lambda _i}}\) commutes with A because \({\mathrm {sign}}(\widetilde{J}_i)=\pm \widetilde{I}_{ i}\). This proves our second claim. \(\square \)

Case 5. \(B \in \{A^2\left( \frac{I+ \hat{S}{_{\lambda _i}}}{2}\right) , \, A^2\left( \frac{I- \hat{S}{_{\lambda _i}}}{2}\right) \}.\)

We stop here and do not pursue further possibilities for B; these could be considered in future work.

5 Connections Between the Projectors and B

For a given singular matrix A, the five cases presented in the previous section aimed at finding a commuting projector P (i.e., \(AP=PA\) and \(P^2=P\)) to obtain a matrix B that will be inserted in (3.3) to produce a family of solutions to the YB-like equation (1.1).

One issue arising in this approach for spotting B is that distinct projectors may correspond to the same B. That is to say, if \(P_1\) and \(P_2\) are two distinct commuting projectors then we may have \(B=A^2P_1=A^2P_2\), which means that \(A^2(P_1-P_2)={{\mathbf {0}}}\), that is, \(R(P_1-P_2)\subseteq N(A^2).\) To get more insight into this connection between the projectors and B, we will present two simple examples.

Example 1. Let \(A=\left[ \begin{array}{c@{\quad }c@{\quad }c} 1 &{} 1 &{} 1 \\ 0 &{} 1 &{} 0 \\ 1&{} 1 &{} 1 \end{array}\right] ,\) which is a diagonalizable singular matrix with spectrum \(\sigma (A)=\{0,1,2\}.\) Solving directly the equations \(AP=PA\) and \(P^2=P\), we achieve a total of eight distinct commuting projectors:

However, there are just four distinct \(B_i=A^2P_i\) (\(i=1,\ldots ,8\)):

because \(B_5=B_2\), \(B_6=B_1\), \(B_7=B_4\) and \(B_8=B_3.\) The same four distinct \(B_i\)’s can be obtained by means of the sign function (Case 3) for \(\alpha \in \{-5/2,-3/2,-1/2,1/2\}.\) However, Case 3 gives only six distinct projectors: \(P_1,P_2,P_3,P_5,P_6,P_7,\) instead of eight projectors. Note that the matrix sign function of \(A_\alpha \) just depends on the sign of its eigenvalues, so choosing other values for \(\alpha \) would not change the results. We have found those values of \(\alpha \) by the method described in the previous section for Case 3. If we now find the six spectral projectors \(P_{\lambda _i}\)’s and \(G_{\lambda _i}\)’s, for all \(\lambda _i\in \sigma (A)\) (see Proposition 4.4 and Lemma 4.5), we obtain all the commuting projectors, except the trivial ones \(P_1\) and \(P_5.\) Those six spectral projectors suffice to collect the four distinct matrices, \(B_i\)’s.

Note that, for this matrix A, we can use (3.3) to obtain four infinite families of solutions to the equation \(AXA=XAX.\)

Example 2. Let \(A=\left[ \begin{array}{ccc} 1 &{} 1 &{} 1 \\ 1 &{} 1 &{} 1 \\ 1 &{} 1 &{} 1 \end{array}\right] \), which is a diagonalizable singular matrix: \(A=S\,{\mathrm {diag}}(3,0,0)\,S^{-1},\) where \(S=\left[ \begin{array}{rrr} 1 &{} 1 &{} 1 \\ 1 &{} -1 &{} 1 \\ 1 &{} 0 &{} -2 \end{array}\right] .\) It can be proven that all the distinct commuting projectors P are given by

$$P=S\left[ \begin{array}{cc} \mu & {{\mathbf {0}}} \\ {{\mathbf {0}}} & \widetilde{P} \end{array}\right] S^{-1},$$

where \(\mu \in \{0,1\}\) and \(\widetilde{P}\) is any idempotent matrix of order 2. Since for any of those projectors \(B=A^2P={{\mathbf {0}}}\) if \(\mu =0\), and \(B=A^2P=A^2\) if \(\mu =1\), there are just two distinct matrices: \(B={{\mathbf {0}}}\) and \(B=A^2.\) The same result is given independently by Cases 3 and 4, leading to two infinite families of solutions to the equation \(AXA=XAX\) given by (3.3).

6 More Families of Explicit Solutions

In this section, we provide more explicit representations for solutions to the singular YB-like equation, but now with the help of the index of A.

Proposition 6.1

Assume that \(A\in \mathbb {C}^{n\times n}\) is a given singular matrix such that \({\mathrm {ind}}(A)=\ell .\)

(i) If

$$\begin{aligned} Y=\left( A^{\ell +1}\right) ^\dag A^\ell (I-AZ)+Z, \end{aligned} \tag{6.1}$$

where \(Z\in \mathbb {C}^{n\times n}\) is an arbitrary matrix, then, for any \(V\in \mathbb {C}^{n\times n},\)

$$\begin{aligned} X=A^{\ell -1}\left( AY-I\right) V \end{aligned} \tag{6.2}$$

is a solution of the YB-like matrix equation \(AXA=XAX.\)

(ii) If

$$\begin{aligned} Y=(I-ZA)A^\ell \left( A^{\ell +1}\right) ^\dag +Z, \end{aligned} \tag{6.3}$$

where \(Z\in \mathbb {C}^{n\times n}\) is an arbitrary matrix, then, for any \(V\in \mathbb {C}^{n\times n},\)

$$\begin{aligned} X=V\left( Y A -I\right) A^{\ell -1} \end{aligned} \tag{6.4}$$

is a solution of the YB-like matrix equation \(AXA=XAX.\)

Proof

It is well known that any square matrix has a Drazin inverse, which implies in particular that the matrix equation (gi.6) is solvable. From [17, Theorem 6.3], it follows that \(A^{\ell +1}\left( A^{\ell +1}\right) ^\dag A^\ell =A^\ell .\) Now, a simple calculation shows that the matrix Y given in (6.1) is a solution of the matrix equation (gi.6), that is, \(A^{\ell +1}Y=A^\ell \), while Y in (6.3) satisfies \(YA^{\ell +1}=A^\ell .\) Moreover, any solution of the matrix equation \(A^{\ell +1}X=A^\ell \) is of the form given in (6.1), and any solution of \(XA^{\ell +1}=A^\ell \) can be calculated from (6.3). The proof that both X in (6.2) and X in (6.4) satisfy the singular YB-like matrix equation (1.1), follows from a few matrix calculations. \(\square \)
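The final matrix calculations can be sketched as follows (a short derivation under the hypotheses of the proposition; recall that \(\ell \ge 1\) because A is singular). If \(A^{\ell +1}Y=A^\ell \) and \(X=A^{\ell -1}(AY-I)V\), then

$$AX=A^{\ell }(AY-I)V=\left( A^{\ell +1}Y-A^{\ell }\right) V={{\mathbf {0}}}, \qquad \text {so}\qquad AXA=(AX)A={{\mathbf {0}}}=X(AX)=XAX.$$

Similarly, if \(YA^{\ell +1}=A^\ell \) and \(X=V(YA-I)A^{\ell -1}\), then

$$XA=V\left( YA^{\ell +1}-A^{\ell }\right) ={{\mathbf {0}}}, \qquad \text {so}\qquad AXA=A(XA)={{\mathbf {0}}}=(XA)X=XAX.$$

In particular, the solutions in (6.2) annihilate A from the right (\(AX={{\mathbf {0}}}\)) and those in (6.4) annihilate A from the left (\(XA={{\mathbf {0}}}\)).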

7 Solutions Based on Similarity Transformations

Lemma 7.1

Let \(A,B\in \mathbb {C}^{n\times n}\) be similar matrices, that is, \(A=SBS^{-1},\) for some nonsingular complex matrix S. If Y is a solution of the YB-like matrix equation \(BYB=YBY,\) then \(X=SYS^{-1}\) is a solution of the YB-like matrix equation \(AXA=XAX.\) Reciprocally, if X satisfies \(AXA=XAX\) then there exists Y verifying \(BYB=YBY\) such that \(X=SYS^{-1}.\)

The previous result, whose proof is easy, can be used in particular with similarity transformations like the Jordan canonical form or the Schur decomposition (cf. Sect. 2).
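For completeness, the argument behind Lemma 7.1 can be sketched in one line. With \(A=SBS^{-1}\) and \(X=SYS^{-1}\),

$$AXA=SBS^{-1}\,SYS^{-1}\,SBS^{-1}=S(BYB)S^{-1}=S(YBY)S^{-1}=SYS^{-1}\,SBS^{-1}\,SYS^{-1}=XAX.$$

For the converse, set \(Y:=S^{-1}XS\); then \(BYB=S^{-1}(AXA)S=S^{-1}(XAX)S=YBY.\)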

Let us assume that \(A=SJS^{-1}=S\, \left[ \begin{array}{cc} J_1 &{} {{\mathbf {0}}} \\ {{\mathbf {0}}} &{} J_0 \end{array}\right] \, S^{-1}\) is the Jordan decomposition of A, where S, \(J_0\) and \(J_1\) are as in (2.2). If \(Y=\left[ \begin{array}{cc} Y_{1} &{} Y_{2} \\ Y_{3} &{} Y_{4} \end{array}\right] \, \) is a solution of \(YJY=JYJ,\) conformally partitioned as J, then

$$\begin{aligned} Y_1J_1Y_1+Y_2J_0Y_3&=J_1Y_1J_1, &\quad Y_1J_1Y_2+Y_2J_0Y_4&=J_1Y_2J_0, \\ Y_3J_1Y_1+Y_4J_0Y_3&=J_0Y_3J_1, &\quad Y_3J_1Y_2+Y_4J_0Y_4&=J_0Y_4J_0. \end{aligned} \tag{7.1}$$

Hence, one can determine all the solutions of equation (1.1) by solving (7.1) for the matrices \(Y_i\) (\(i=1,2,3,4\)). However, building up the complete set of solutions of (7.1) seems to be unattainable. If we consider the special case for Y in which \(Y_{2}={{\mathbf {0}}}\) and \(Y_{3}={{\mathbf {0}}}\), then (7.1) reduces to

$$Y_1J_1Y_1=J_1Y_1J_1, \qquad Y_4J_0Y_4=J_0Y_4J_0, \tag{7.2}$$

consisting of two independent YB-like matrix equations, a nonsingular one for \(J_1\) and a singular one for \(J_0\). Now, we arrive at the following proposition with the help of Lemma 7.1.

Proposition 7.2

Let \(A\in \mathbb {C}^{n\times n}\) be a singular matrix and consider the notations used in (2.2). Then, \( X=S\, \left[ \begin{array}{cc} Y_1 &{} {{\mathbf {0}}} \\ {{\mathbf {0}}} &{} Y_4 \end{array}\right] \, S^{-1}\) is a solution of equation (1.1), where \(Y_1\) and \(Y_4\) satisfy their corresponding YB-like equations in (7.2).

Now an important issue arises: how to solve (7.2)? A possible way is to take \(Y_1=J_1\) or \(Y_1={{\mathbf {0}}}\), either of which satisfies the first equation in (7.2), and then to find \(Y_4\) by any of the representations discussed in Sects. 4 and 6. Hence, a family of solutions to (1.1) resulting from Proposition 7.2 is commuting or non-commuting according to whether \(Y_4\) is commuting or non-commuting, respectively.
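The recipe can be illustrated on a toy case. In the sketch below, which is only an illustration with hypothetical data, the Jordan form is \(J={\mathrm {diag}}(J_1,J_0)\) with \(J_1=[2]\) and \(J_0=J_2(0)\), the transforming matrix S is chosen by hand together with its inverse, \(Y_1=J_1\), and \(Y_4\) is any matrix with \(J_0Y_4={{\mathbf {0}}}\) (which makes both sides of \(Y_4J_0Y_4=J_0Y_4J_0\) vanish). Integer arithmetic keeps all comparisons exact.

```python
def matmul(P, Q):
    """Product of two matrices stored as lists of rows."""
    return [[sum(p * q for p, q in zip(row, col)) for col in zip(*Q)] for row in P]


def block_diag(P, Q):
    """Block diagonal matrix diag(P, Q) for square P and Q."""
    p, q = len(P), len(Q)
    return [row + [0] * q for row in P] + [[0] * p + row for row in Q]


def solves_yb(A, X):
    return matmul(matmul(A, X), A) == matmul(matmul(X, A), X)


# Hand-picked nonsingular S with its exact inverse (hypothetical data).
S = [[1, 1, 0], [0, 1, 1], [0, 0, 1]]
S_inv = [[1, -1, 1], [0, 1, -1], [0, 0, 1]]

J1 = [[2]]                 # nonsingular part
J0 = [[0, 1], [0, 0]]      # nilpotent Jordan block J_2(0)
J = block_diag(J1, J0)
A = matmul(matmul(S, J), S_inv)

Y1 = J1                    # trivial solution of the nonsingular block equation
Y4 = [[5, 7], [0, 0]]      # second row zero, so J0 * Y4 = 0
Y = block_diag(Y1, Y4)
X = matmul(matmul(S, Y), S_inv)

print(solves_yb(A, X))                       # X solves A X A = X A X
print(matmul(A, X) == matmul(X, A))          # X need not commute with A
```

Here \(Y_4\) does not commute with \(J_0\), and accordingly the resulting X does not commute with A.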

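If \(Z=\left[ \begin{array}{cc} Z_{1} & Z_{2} \\ Z_{3} & Z_4 \end{array}\right] \), which is assumed to be conformally partitioned as T in the matrix decomposition (2.3), is a solution of \(ZTZ=TZT\), then we arrive at the following set of four equations:

$$\begin{aligned} Z_1(B_1Z_1+B_2Z_3)&=(B_1Z_1+B_2Z_3)B_1, &\quad Z_1(B_1Z_2+B_2Z_4)&=(B_1Z_1+B_2Z_3)B_2, \\ Z_3(B_1Z_1+B_2Z_3)&={{\mathbf {0}}}, &\quad Z_3(B_1Z_2+B_2Z_4)&={{\mathbf {0}}}. \end{aligned} \tag{7.3}$$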

Solving (7.3) is again a challenging task; therefore, we restrict ourselves to the particular situation when \(Z_{3}={{\mathbf {0}}}.\) Now (7.3) becomes

$$Z_1B_1Z_1=B_1Z_1B_1, \qquad Z_1(B_1Z_2+B_2Z_4)=B_1Z_1B_2, \tag{7.4}$$

which leads us to the following proposition:

Proposition 7.3

Let \(A\in \mathbb {C}^{n\times n}\) be a singular matrix of the form (2.3). Then, \( X=U\, \left[ \begin{array}{cc} Z_1 &{} Z_2 \\ {{\mathbf {0}}} &{} Z_4 \end{array}\right] \, U^{*}\), where \(Z_1,\) \(Z_2,\) and \(Z_4\) satisfy simultaneously the equations (7.4), is a solution of the equation (1.1).
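For reference, the systems cited as (7.3) and (7.4) can be reconstructed by block multiplication. The reconstruction assumes that the matrix T in (2.3) has the form \(T=\left[ \begin{array}{cc} B_1 & B_2 \\ {{\mathbf {0}}} & {{\mathbf {0}}} \end{array}\right]\), with \(B_1\) of size \(s\times s\). This is an assumption on our part, although it agrees with the fact that \(Z=T\) must solve \(ZTZ=TZT\). Equating the blocks of \(ZTZ\) and \(TZT\) gives

\[
\begin{aligned}
Z_1B_1Z_1+Z_1B_2Z_3 &= B_1Z_1B_1+B_2Z_3B_1, & Z_1B_1Z_2+Z_1B_2Z_4 &= B_1Z_1B_2+B_2Z_3B_2,\\
Z_3B_1Z_1+Z_3B_2Z_3 &= {{\mathbf {0}}}, & Z_3B_1Z_2+Z_3B_2Z_4 &= {{\mathbf {0}}}.
\end{aligned}
\]

With \(Z_3={{\mathbf {0}}}\), the last two equations hold trivially and the first two reduce to

\[
Z_1B_1Z_1 = B_1Z_1B_1, \qquad Z_1B_1Z_2+Z_1B_2Z_4 = B_1Z_1B_2 .
\]

For a fixed \(Z_1\), the second equation is linear in the remaining unknowns and can be written as

\[
\left[ \begin{array}{cc} Z_1B_1 & Z_1B_2 \end{array}\right] \left[ \begin{array}{c} Z_2 \\ Z_4 \end{array}\right] = B_1Z_1B_2 ,
\]

which is presumably the linear system used later in this section.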

Some examples of solutions to (7.4) are:

- (i) \(Z_1={{\mathbf {0}}}\), \(Z_2\) and \(Z_4\) arbitrary;

- (ii) \(Z_1=B_1\), \(Z_2=B_2\), and \(Z_4={{\mathbf {0}}}\);

- (iii) Any commuting solution of \(Z_{1}B_{1}Z_{1}=B_1Z_{1}B_1\), along with \(Z_2=B_2\) and \(Z_4= {{\mathbf {0}}}\);

- (iv) \(Z_1=B_1^2B_1^D\), \(Z_2=B_1B_1^DB_2\), \(Z_4= {{\mathbf {0}}}\), for the case when \(B_1\) is singular.

Other solutions to (7.4) may be determined by finding \(Z_1\) from the first equation \(Z_{1}B_{1}Z_{1}=B_1Z_{1}B_1\), which is a YB-like equation, and then determining the unknowns \(Z_2\) and \(Z_4\) simultaneously by solving the multiple linear system

provided it is consistent. For instance, if we fix \(Z_1=B_1\), we know that (7.5) is consistent, because \(Z_2=B_2\) and \(Z_4={{\mathbf {0}}}\) satisfy it. Moreover, since \(B_1\) is \(s \times s \) and \(B_2\) is \(s \times (n-s),\) with \( r={\mathrm {rank}}(A)\le s \le n-1,\) the system has more unknowns than equations and hence infinitely many solutions.
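The following Python sketch checks some of these solutions exactly on a small hypothetical example (\(n=3\), \(s=2\), \(U=I\)), assuming that T has the block form \(\left[ \begin{array}{cc} B_1 & B_2 \\ {{\mathbf {0}}} & {{\mathbf {0}}} \end{array}\right]\). The matrices and function names are illustrative only. For \(Z_1=B_1\) nonsingular, the linear system gives \(Z_2=B_2-B_1^{-1}B_2Z_4\) with \(Z_4\) free, which yields a one-parameter family.

```python
def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def is_yb_solution(T, Z):
    """True when ZTZ == TZT holds exactly."""
    return matmul(matmul(Z, T), Z) == matmul(matmul(T, Z), T)

# Hypothetical blocks: B1 is 2 x 2 and nonsingular, B2 is 2 x 1.
B1 = [[1, 2], [0, 1]]
B1_inv = [[1, -2], [0, 1]]
B2 = [[4], [5]]

def assemble(Z1, Z2, z4):
    """Build the 3 x 3 matrix [[Z1, Z2], [0, z4]] from its blocks."""
    return [Z1[0] + Z2[0], Z1[1] + Z2[1], [0, 0, z4]]

T = assemble(B1, B2, 0)

def family_member(t):
    """Solution with Z1 = B1, Z4 = t and Z2 = B2 - t * B1^{-1} B2."""
    w = matmul(B1_inv, B2)
    Z2 = [[B2[i][0] - t * w[i][0]] for i in range(2)]
    return assemble(B1, Z2, t)

# Example (i): Z1 = 0 with arbitrary Z2 and Z4.
Z_i = assemble([[0, 0], [0, 0]], [[7], [-3]], 11)
print("example (i): ", is_yb_solution(T, Z_i))
# Example (ii) is the member t = 0 of the family; other t give new solutions.
print("family check:", all(is_yb_solution(T, family_member(t)) for t in range(-3, 4)))
```

Because the arithmetic is exact, the checks are not affected by rounding. For a general \(Z_1\), the system would have to be solved numerically.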

8 Numerical Issues

We shall now consider the problem of solving the singular YB-like matrix equation in finite precision environments.

Most of the explicit formulae derived in Sect. 4 involve the computation of generalized inverses. We recall that the Moore–Penrose inverse is available in MATLAB through the function pinv, which is based on the singular value decomposition of A. Many other methods and scripts are available in the literature. For instance, some iterative methods of Schulz-type (e.g., hyperpower methods) have received much attention in the last few years; see [26] and the references therein. See also [27] and [29] for the Drazin and other inverses. Formula (3.3) with B given in Cases 3 and 5 requires the computation of the matrix sign function, for which many methods are available (see [13, Chapter 5]). In Sect. 9, a Schur decomposition-based algorithm available in [12] is used to calculate the matrix sign function. The accuracy of the computed solution to the singular equation (1.1) therefore depends on how accurately these intermediate quantities (Moore–Penrose inverses, sign functions, or Drazin inverses) are computed, since their errors propagate to the relative error of the computed solution.

Although the Jordan canonical decomposition is a very important tool in the theory of matrices, we must recall that its determination in finite precision arithmetic is a very ill-conditioned problem [10, 15]. Except in a few particular cases, the numerical calculation of solutions of the YB-like matrix equation by means of the Jordan decomposition must be avoided. Instead, we shall resort to the Schur decomposition, whose stability properties make it well suited for numerical computation. Hence, we shall focus on designing an algorithm based on (2.3).

Even this approach is not free of risks when applied to matrices with multiple eigenvalues. We recall that the computation of repeated eigenvalues may be very sensitive to small perturbations. There are also the problems of knowing when it is reasonable to interpret a small quantity as being zero and how to correctly order the eigenvalues in the diagonal of the triangular matrix to get the form (2.3).

To illustrate this, let us consider the matrix

which is nilpotent. All of its eigenvalues are zero and its Jordan canonical form is \(J_4(0)\), that is, it just involves a Jordan block of order 4. Hence, rank\((A)=3.\) However, if we calculate the eigenvalues of A in MATLAB, which has unit roundoff \(u\approx 2^{-53}\), by the function eig, we get

\(\begin{array}{r} \texttt {2.2968e-04 + 2.2974e-04i} \\ \texttt {2.2968e-04 - 2.2974e-04i}\\ \texttt {-2.2968e-04 + 2.2963e-04i}\\ \texttt {-2.2968e-04 - 2.2963e-04i} \end{array},\)

instead of values with magnitudes closer to u. This is to be expected and cannot be viewed as a failure of the algorithm used by MATLAB, because the condition number (evaluated through the function condeig) of the single eigenvalue of A is about 4.7934e+11. This example illustrates the shortcomings that may arise in the numerical calculation of solutions of the YB-like matrix equation by Schur decomposition when A has badly conditioned eigenvalues.
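The order of magnitude of these computed eigenvalues can be explained with a standard model problem. We use it here as an assumption, not as the matrix of the example: the Jordan block \(J_4(0)\) perturbed by \(\varepsilon \) in position (4, 1). The fourth power of this matrix equals \(\varepsilon I\), so its eigenvalues are the four fourth roots of \(\varepsilon \), all of modulus \(\varepsilon ^{1/4}\). The Python sketch below verifies the identity in exact rational arithmetic and evaluates the roots for \(\varepsilon =2^{-53}\).

```python
import cmath
from fractions import Fraction

def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def perturbed_jordan_block(n, eps):
    """J_n(0) with the exact rational value of eps placed in position (n, 1)."""
    C = [[Fraction(0)] * n for _ in range(n)]
    for i in range(n - 1):
        C[i][i + 1] = Fraction(1)
    C[n - 1][0] = Fraction(eps)
    return C

def matrix_power(C, k):
    """C**k for k >= 1 by repeated multiplication."""
    P = C
    for _ in range(k - 1):
        P = matmul(P, C)
    return P

def scaled_identity(n, eps):
    """eps times the identity, with exact rational entries."""
    return [[Fraction(eps) if i == j else Fraction(0) for j in range(n)] for i in range(n)]

def perturbed_eigenvalues(n, eps):
    """The n-th roots of eps, i.e. the eigenvalues of the perturbed block."""
    radius = eps ** (1.0 / n)
    return [radius * cmath.exp(2j * cmath.pi * k / n) for k in range(n)]

u = 2.0 ** -53
C = perturbed_jordan_block(4, u)
print("C^4 == u*I:", matrix_power(C, 4) == scaled_identity(4, u))
for lam in perturbed_eigenvalues(4, u):
    print(f"|lambda| = {abs(lam):.4e}")
```

A perturbation of the size of the unit roundoff therefore moves the zero eigenvalue of such a block by roughly \(10^{-4}\), which is the order observed above. The precise values returned by eig depend on the actual matrix and on the rounding errors committed.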

Despite examples of this type, the Schur decomposition performs very well for general singular matrices, as will be shown in Sect. 9.

Fig. 1 MATLAB script for finding solutions of the singular YB-like matrix equation by Schur decomposition combined with the solution of (7.5), with \(s=r\)

In Fig. 1, we provide a MATLAB script based on (7.5) for obtaining solutions of the singular YB-like matrix equation. It involves the Schur decomposition, \(A=UTU^*\), which is reordered to move all the diagonal elements of T with magnitude smaller than or equal to a certain quantity epsilon to the bottom-right. The tolerance epsilon determines which diagonal elements of T are viewed as corresponding to the zero eigenvalue. To identify a suitable epsilon, we sort the eigenvalues of T in increasing order of magnitude and take epsilon to be the magnitude of the \((n-r)\)-th eigenvalue in the ordered vector, where \(r={\mathrm {rank}}(A).\) Then a solution of the rank-deficient linear system (7.5) is computed by an appropriate solver.

If all of the eigenvalues of A are well conditioned or if A is diagonalizable, epsilon is in general small; otherwise, it can be large (say, \(10^{-4}\)); cf. Sect. 9.
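The rule for choosing epsilon can be sketched as follows. This is a Python illustration, not the script of Fig. 1: the function names `choose_epsilon` and `zero_cluster` are hypothetical, and the diagonal of T and the rank r are assumed to have been computed beforehand.

```python
def choose_epsilon(diag_T, rank):
    """Magnitude of the (n - rank)-th smallest diagonal entry of T (1-based)."""
    n = len(diag_T)
    if not 0 <= rank < n:
        raise ValueError("rank must satisfy 0 <= rank < n for a singular matrix")
    magnitudes = sorted(abs(t) for t in diag_T)
    return magnitudes[n - rank - 1]

def zero_cluster(diag_T, epsilon):
    """Positions of the diagonal entries treated as zero eigenvalues."""
    return [i for i, t in enumerate(diag_T) if abs(t) <= epsilon]

# Hypothetical diagonal of a 5 x 5 triangular factor of a rank-2 matrix:
# three entries are perturbations of a zero eigenvalue.
diag_T = [2.0, 3e-4j, -1.5, 1e-16, 2.9e-4]
eps = choose_epsilon(diag_T, rank=2)
print("epsilon      =", eps)
print("zero cluster =", zero_cluster(diag_T, eps))
```

The positions returned by `zero_cluster` are those that a reordering of the Schur form would move to the bottom-right corner. A large epsilon, as in this example, signals ill-conditioned eigenvalues.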

9 Numerical Experiments

We have considered several YB-like matrix equations corresponding to 15 singular matrices with sizes ranging from \(3\times 3\) to \(20\times 20.\) The first three matrices (labelled with numbers from 1 to 3) are randomized and the next five matrices (from 4 to 8) were taken from the function matrix in the Matrix Computation Toolbox [11]; matrices labelled with 9 to 15 are academic examples, most of which are non-diagonalizable. We have selected the following four methods to get solutions of those 15 YB-like matrix equations in MATLAB:

- alg-Case1: script based on Case 1, with \(B=A^2\), and subsequent use of (4.2), with Y being a randomized matrix;

- alg-sign: script based on finding a B as in Case 3, with \(\alpha =-(r_{s-1}+r_s)/2\), and subsequent insertion in (3.3), with Y being a randomized matrix; here \(\{r_1,\ldots ,r_s\}\) is the set constituted by the distinct real parts of the eigenvalues of A written in ascending order; for all the matrices in the experiments we have \(s>1\);

- alg-spectral: script based on Case 4, with \(B=A^2P_{\lambda _s}\), where \(\lambda _s\) is the n-th component of the vector eig(A) obtained in MATLAB, and subsequent use of (3.3), with Y being a randomized matrix;

- alg-schur: script provided in Fig. 1.

Experiments related to other suggested formulae are not shown here. alg-Case1, alg-sign, and alg-spectral involve the computation of the Moore–Penrose inverse, which has been carried out by the function pinv of MATLAB. The computation of the Drazin inverse in alg-spectral has been based on (2.1). To estimate the quality of the approximation \(\widetilde{X}\) to a solution X of equation (1.1), we use the expression provided in [16, Equation (15)] for estimating the relative error, which is recalled here for convenience:

where \(\Vert .\Vert \) stands for the Frobenius norm, \(R(X):=AXA-XAX\) and \(M(X):=A^T\otimes A-I\otimes (XA)-(AX)^T\otimes I \in \mathbb {C}^{n^2\times n^2}\) (\(\otimes \) denotes the Kronecker product).
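The two ingredients of this estimate, \(R(X)\) and \(M(X)\), can be formed directly from their definitions. The Python sketch below uses hypothetical helper names and small integer matrices. It also illustrates why \(M(X)\) appears: with the column-stacking operator vec, \(M(X)\,\mathrm{vec}(E)=\mathrm{vec}(AEA-EAX-XAE)\), that is, \(M(X)\) is the Kronecker form of the derivative of R at X in the direction E.

```python
import math

def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def sub(A, B):
    return [[a - b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

def identity(n):
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]

def kron(A, B):
    """Kronecker product of A and B."""
    return [[a * b for a in row_a for b in row_b] for row_a in A for row_b in B]

def vec(E):
    """Stack the columns of E into one list."""
    return [E[i][j] for j in range(len(E[0])) for i in range(len(E))]

def residual(A, X):
    """R(X) = AXA - XAX."""
    return sub(matmul(matmul(A, X), A), matmul(matmul(X, A), X))

def M_matrix(A, X):
    """M(X) = A^T kron A - I kron (XA) - (AX)^T kron I."""
    I = identity(len(A))
    first = kron(transpose(A), A)
    second = kron(I, matmul(X, A))
    third = kron(transpose(matmul(A, X)), I)
    return sub(sub(first, second), third)

def frobenius_norm(A):
    return math.sqrt(sum(abs(a) ** 2 for row in A for a in row))

A = [[1, 2], [0, 0]]           # singular test matrix
X = A                          # X = A is always a solution
print("||R(X)||_F =", frobenius_norm(residual(A, X)))
print("||M(X)||_F =", frobenius_norm(M_matrix(A, X)))
```

For matrices of order n, \(M(X)\) has order \(n^2\), so forming it explicitly is only reasonable for small n such as those used in the experiments.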

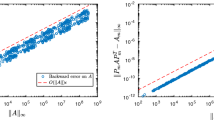

At the top of Fig. 2, we observe that alg-Case1 performs very well for all the test matrices, with the exception of matrices 7 and 8, where the computation of the Moore–Penrose inverse causes some difficulties. Fortunately, in these two cases, alg-schur gives good results. So the two methods seem to complement each other well, in the sense that when one gives poor results, the other performs well. Matrices 7 and 8 have, respectively, sizes \(19\times 19\) and \(20\times 20\), and ranks 12 and 13. In the case of alg-schur, relative errors are larger for matrices 6, 9, 11, and 15, which are non-diagonalizable and have ill-conditioned eigenvalues. It is interesting to note that a comparison of the two plots shows that the relative errors follow the values of epsilon. alg-sign and alg-spectral give quite poor results for some matrices, for which large errors arise mainly in the calculation of Moore–Penrose or Drazin inverses. In the case of alg-sign, the choice of \(\alpha \) may also influence the accuracy of the computed solutions. It is worth pointing out that arbitrary matrices Y with a large norm in (3.3) may also cause difficulties.

10 Conclusion

At this point, it is worth highlighting the main features of the proposed techniques for computing solutions of the singular YB-like matrix equation:

- They are valid for any singular matrix;

- They generate infinitely many solutions;

- They perform well in finite precision environments;

and also our main theoretical contributions:

- We have provided a novel connection between the YB-like matrix equation and a well-known system of linear matrix equations, and

- We have investigated the role of commuting projectors in the process of designing explicit formulae and have been able to find a large set of examples of those projectors.

We have also overcome the main difficulties arising in the implementation of the Schur decomposition-based formula of Proposition 7.3 combined with (7.5), by designing an effective algorithm. We recall that many ideas of the paper (for instance, the splitting of the YB-like equation) can be extended to the nonsingular case.

References

[1] Baxter, R.: Partition function of the eight-vertex lattice model. Ann. Phys. 70(1), 193–228 (1972)

[2] Ben-Israel, A., Greville, T.N.E.: Generalized inverses theory and applications, 2nd edn. Springer, New York (2003)

[3] Campbell, S.L., Meyer, C.D.: Generalized inverses of linear transformations. SIAM, Philadelphia (2009)

[4] Cecioni, F.: Sopra alcune operazioni algebriche sulle matrici. Annali della Scuola Normale Superiore di Pisa-Classe di Scienze 11(Talk no. 3), 17–20 (1910)

[5] Cibotarica, A., Ding, J., Kolibal, J., Rhee, N.H.: Solutions of the Yang–Baxter matrix equation for an idempotent. Numer. Algebra Control Optim. 3(2), 347–352 (2013)

[6] Ding, J., Rhee, N.H.: Spectral solutions of the Yang–Baxter matrix equation. J. Math. Anal. Appl. 402(2), 567–573 (2013)

[7] Ding, J., Rhee, N.H.: Computing solutions of the Yang–Baxter-like matrix equation for diagonalisable matrices. East Asian J. Appl. Math. 5(1), 75–84 (2015)

[8] Ding, J., Zhang, C.: On the structure of the spectral solutions of the Yang–Baxter matrix equation. Appl. Math. Lett. 35, 86–89 (2014)

[9] Dong, Q., Ding, J.: Complete commuting solutions of the Yang–Baxter-like matrix equation for diagonalizable matrices. Comput. Math. Appl. 72(1), 194–201 (2016)

[10] Golub, G.H., Wilkinson, J.H.: Ill conditioned eigensystems and the computation of the Jordan canonical form. SIAM Rev. 18(4), 578–619 (1976)

[11] Higham, N.J.: The matrix computation toolbox. URL http://www.ma.man.ac.uk/~higham/mctoolbox

[12] Higham, N.J.: The Matrix Function Toolbox. URL http://www.maths.manchester.ac.uk/~higham/mftoolbox

[13] Higham, N.J.: Functions of matrices: theory and computation. SIAM, Philadelphia (2008)

[14] Horn, R.A., Johnson, C.R.: Matrix analysis, 2nd edn. Cambridge University Press, New York (2013)

[15] Kågström, B., Ruhe, A.: An algorithm for numerical computation of the Jordan normal form of a complex matrix. ACM Trans. Math. Softw. 6(3), 398–419 (1980)

[16] Kumar, A., Cardoso, J.R.: Iterative methods for finding commuting solutions of the Yang–Baxter-like matrix equation. Appl. Math. Comput. 333, 246–253 (2018)

[17] Laub, A.J.: Matrix analysis for scientists and engineers. SIAM, Philadelphia (2004)

[18] Lütkepohl, H.: Handbook of Matrices. Wiley, Chichester (1996)

[19] Mansour, S., Ding, J., Huang, Q.: Explicit solutions of the Yang–Baxter-like matrix equation for an idempotent matrix. Appl. Math. Lett. 63, 71–76 (2017)

[20] McCoy, B.M.: Advanced statistical mechanics. Oxford University Press, New York (2009)

[21] Meyer, C.D.: Matrix analysis and applied linear algebra. SIAM, Philadelphia (2000)

[22] Nichita, F.F.: Nonlinear equations, quantum groups and duality theorems: a primer on the Yang–Baxter equation. VDM Verlag, Saarbrücken (2009)

[23] Penrose, R.: A generalized inverse for matrices. Proc. Camb. Philos. Soc. 51(3), 406–413 (1955)

[24] Rao, C., Mitra, S.: Generalized inverse of matrices and its applications. Wiley, New York (1971)

[25] Soleymani, F., Kumar, A.: A fourth-order method for computing the sign function of a matrix with application in the Yang–Baxter-like matrix equation. Comp. Appl. Math. 38, 64 (2019)

[26] Soleymani, F., Stanimirović, P.S., Haghani, F.K.: On hyperpower family of iterations for computing outer inverses possessing high efficiencies. Linear Algebra Appl. 484, 477–495 (2015)

[27] Stanimirović, P.S., Pappas, D., Katsikis, V.N., Stanimirović, I.V.: Full-rank representations of outer inverses based on the QR decomposition. Appl. Math. Comput. 218(20), 10321–10333 (2012)

[28] Tian, H.: All solutions of the Yang–Baxter-like matrix equation for rank-one matrices. Appl. Math. Lett. 51, 55–59 (2016)

[29] Wang, G., Wei, Y., Qiao, S.: Generalized inverses: theory and computations. Science Press, Beijing (2018)

[30] Yang, C.N.: Some exact results for the many-body problem in one dimension with repulsive delta-function interaction. Phys. Rev. Lett. 19(23), 1312–1315 (1967)

[31] Yang, C.N., Ge, M.L.: Braid group, knot theory and statistical mechanics. World Scientific, Singapore (1991)

Acknowledgements

The author, Ashim Kumar, acknowledges the I. K. Gujral Punjab Technical University Jalandhar, Kapurthala for providing research support to him. The work of the author João R. Cardoso is partially supported by the Centre for Mathematics of the University of Coimbra - UIDB/00324/2020, funded by the Portuguese Government through FCT/MCTES.


Cite this article

Kumar, A., Cardoso, J.R. & Singh, G. Explicit Solutions of the Singular Yang–Baxter-like Matrix Equation and Their Numerical Computation. Mediterr. J. Math. 19, 85 (2022). https://doi.org/10.1007/s00009-022-01982-y


DOI: https://doi.org/10.1007/s00009-022-01982-y

Keywords

- Yang–Baxter-like matrix equation

- generalized outer inverse

- spectral projector

- matrix sign function

- Schur decomposition