Abstract

The aim of this paper is to study a classical pseudo-monotone and non-Lipschitz continuous variational inequality problem in real Hilbert spaces. Weak and strong convergence theorems are presented under mild conditions. Our methods generalize and extend some related results in the literature and the main advantages of proposed algorithms there is no use of Lipschitz condition of the variational inequality associated mapping. Numerical illustrations in finite and infinite dimensional spaces illustrate the behaviors of the proposed schemes.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The main purpose of this paper to study the classical variational inequality of Fichera [13, 14] and Stampacchia [38] (see also Kinderlehrer and Stampacchia [25]) in real Hilbert spaces. Precisely, the classical variational inequality problem (VIP) is of the form: finding a point x∗∈ C such that

Let us denote by VI(C, A) the solutions set of VIP (1).

This problem plays an important role as a modeling tool in diverse fields such as in economics, engineering mechanics, transportation, and many more (see for example, [2, 3, 15, 26, 28]). Recently, many iterative methods have been constructed for solving variational inequalities and their related optimization problems (see monographs [12, 28] and references therein).

One of the most popular methods for solving variational inequalities with monotone and Lipschitz continuous mappings is the method proposed by Korpelevich [30] (also independently by Antipin [1]) which is called the extragradient method in the finite dimensional Euclidean space. This method was based on a double-projection method onto the feasible set.

The extragradient method has been studied and extended in infinite-dimensional spaces by many authors (see, e.g., [6,7,8,9, 20, 22, 23, 32, 33, 39, 41,42,43,44] and the references therein). It is easy to observe that, when the mapping-associated variational inequality is not Lipschitz continuous or the Lipschitz constant of the associated variational inequality mapping is very difficult to compute, it is clear that the extragradient method is not applicable to implement because we can not determine the stepsize.

Khobotov [27] proposed the linesearch for the extragradient method and Marcotte’s paper [34] contains its implementation. The first extrapolation method using Armijo-type linesearch was proposed in [29] and the method [19] follows the same approach (see comments of Section 1.3 in [28] and and Section 12.1 in [12]).

This modification in [19, 29] allows convergence without Lipschitz continuity of the mapping-associated variational inequality in finite-dimensional Euclidean space. The algorithm is of the form

Moreover, Algorithm 1 converges under the condition the mapping-associated variational inequality is monotone and continuous on the feasible set in finite-dimensional spaces. This brings the following natural question.

Question:

Can we obtain convergence result for VIP using a new modification of the extragradient method under a much weaker condition than monotonicity of the cost function?

Our aim in this paper is to answer the above question in the affirmative. Precisely, our contributions in this paper are:

to construct another modification of extragradient algorithm that converges under a weaker condition in an infinite-dimensional Hilbert space;

to introduce a modification of extragradient method for solving VIP with uniformly continuous pseudo-monotone mapping in infinite-dimensional real Hilbert spaces;

to use a different Armijo-type linesearch and obtain convergence results (weak and strong convergence results) when the mapping is pseudo-monotone in the sense of Karamardian [24]

to compare, using numerical examples, our proposed methods with some methods in the literature. Our numerical analysis (performed both in finite- and infinite-dimensional Hilbert spaces) shows that our methods outperform certain already established methods for solving variational inequality problem with pseudo-monotone mapping in the literature.

We organize the paper as follows: In Sect. 2, we give some definitions and preliminary results to be used in our convergence analysis. In Sect. 3, we deal with analyzing the convergence of the proposed algorithms. Finally, in Sect. 4, several numerical experiments are performed to illustrate the implementation of our proposed algorithms and compare our proposed algorithms with previously known algorithms.

2 Preliminaries

Let C be a non-empty, closed, and convex subset of a real Hilbert space H, A : H → H is a single-valued mapping, and 〈⋅,⋅〉 and ∥⋅∥ are the inner product and the norm in H, respectively.

The weak convergence of \(\{x_{n}\}_{n=1}^{\infty }\) to x is denoted by \(x_{n}\rightharpoonup x\) as n →∞, while the strong convergence of \(\{x_{n}\}_{n=1}^{\infty }\) to x is written as xn → x as n →∞. For each x, y ∈ H and \(\alpha \in \mathbb {R}\), we have

Definition 2.1

Let T : H → H be a mapping.

- 1.

The mapping T is called L-Lipschitz continuous with L > 0 if

$$ \|Tx-Ty\|\le L \|x-y\| \ \ \ \forall x,y \in H. $$if L = 1 then the mapping T is called non-expansive and if L ∈ (0,1), T is called contraction.

- 2.

The mapping T is called monotone if

$$ \langle Tx-Ty,x-y \rangle \geq 0 \ \ \ \forall x,y \in H. $$ - 3.

The mapping T is called pseudo-monotone if

$$ \langle Tx,y-x \rangle \geq 0 \Longrightarrow \langle Ty,y-x \rangle \geq 0 \ \ \ \forall x,y \in H. $$ - 4.

The mapping T is called α-strongly monotone if there exists a constant α > 0 such that

$$ \langle Tx-Ty,x-y\rangle\geq \alpha \|x-y\|^{2} \ \ \forall x,y\in H. $$ - 5.

The mapping T is called sequentially weakly continuous if for each sequence {xn} we have: xn converges weakly to x implies xn converges weakly to Tx.

It is easy to see that very monotone mapping is pseudo-monotone but the converse is not true. For example, take \(Tx:=\frac {1}{1+x}, x >0\).

For every point x ∈ H, there exists a unique nearest point in C, denoted by PCx such that ∥x − PCx∥≤∥x − y∥ ∀y ∈ C. PC is called the metric projection of H onto C.

Lemma 2.1

[16] Givenx ∈ Handz ∈ C.Thenz = PCx⇔〈x − z, z − y〉≥ 0 ∀y ∈ C.

Lemma 2.2

[16] Letx ∈ H.Then

- i)

∥PCx − PCy∥2 ≤〈PCx − PCy, x − y〉 ∀y ∈ H;

- ii)

∥PCx − y∥2 ≤∥x − y∥2 −∥x − PCx∥2 ∀y ∈ C;

- iii)

〈(I − PC)x − (I − PC)y, x − y〉≥∥(I − PC)x − (I − PC)y∥2 ∀y ∈ H.

Lemma 2.3

[5] Givenx ∈ Handv ∈ H,v≠ 0 and letT = {z ∈ H : 〈v, z − x〉 ≤ 0}.Then, for allu ∈ H,the projectionPT(u) is defined by

In particular, if u∉T then

Lemma 2.3 gives us an explicit formula to find the projection of any point onto a half-space.

For properties of the metric projection, the interested reader could be referred to Section 3 in [16] and Chapter 4 in [5].

The following Lemmas are useful for the convergence of our proposed methods.

Lemma 2.4

[11] Forx ∈ Handα ≥ β > 0 the following inequalities hold.

Lemma 2.5

[21] LetH1andH2be two real Hilbert spaces. SupposeA : H1 → H2is uniformly continuous on bounded subsets ofH1and M is a bounded subset ofH1.Then,A(M) is bounded.

Lemma 2.6

[[10], Lemma 2.1] Consider the problemVI(C, A) withC being a non-empty, closed, convex subset of a real Hilbert spaceH andA : C → Hbeing pseudo-monotone and continuous. Then,x∗is a solution ofVI(C, A) if and only if

Lemma 2.7

[36] LetC be a non-empty set ofH and{xn} be asequence inH such that the following two conditions hold:

- i)

for everyx ∈ C,\(\lim _{n\to \infty }\|x_{n}-x\|\)exists;

- ii)

every sequential weak cluster point of{xn} is in C.

Then, {xn} converges weakly to a point inC.

The proof of the following lemma is the same with Lemma 2.3 and was given in [17]. Hence, we state the lemma and omit the proof in real Hilbert spaces.

Lemma 2.8

LetH be a real Hilbert space and h be a real-valued function onH and defineK := {x ∈ H : h(x) ≤ 0}.If K is nonempty and h is Lipschitz continuous onH with modulus𝜃 > 0,then

where dist(x, K) denotes the distance function from x to K.

Lemma 2.9

[31] Let {an} be a sequence of non-negative real numbers such that there exists asubsequence\(\{a_{n_{j}}\}\)of{an} such that\(a_{n_{j}}<a_{n_{j}+1}\)forall\(j\in \mathbb {N}\).Then there exists a non-decreasing sequence{mk} of\(\mathbb {N}\)suchthat\(\lim _{k\to \infty }m_{k}=\infty \)andthe following properties are satisfied by all (sufficiently large)number\(k\in \mathbb {N}\):

In fact, mk is the largest number n in the set {1,2,⋯ ,k} such that an < an+ 1.

Lemma 2.10

[45] Let{an} be a sequence of non-negative real numbers such that:

where {αn}⊂ (0,1) and {bn} is a sequence such that

- a)

\({\sum }_{n=0}^{\infty } \alpha _{n}=\infty \);

- b)

\(\limsup _{n\to \infty }b_{n}\le 0.\)

Then ,\(\lim _{n\to \infty }a_{n}=0.\)

3 Main results

The following conditions are assumed for the convergence of the methods.

Condition 1

The feasible set C is a non-empty, closed, and convex subset of the real Hilbert space H.

Condition 2

The mapping A : H → H is a pseudo-monotone, uniformly continuous on H and sequentially weakly continuous on C. In finite-dimensional spaces, it suffices to assume that A : H → H is a continuous pseudo-monotone on H.

Condition 3

The solution set of the VIP (1) is non-empty, that is VI(C, A)≠∅.

3.1 Weak convergence

In this section, we introduce a new algorithm for solving VIP which is constructed based on modified projection-type methods.

Remark 3.1

We note that our Algorithm 3.1 in this paper is proposed in infinite-dimensional real Hilbert spaces while the method proposed by Solodov and Tseng in [40] was done in finite-dimensional spaces. Furthermore, our method is much more general than that of Solodov and Tseng [40] even with a more general cost function than that of Solodov and Tseng [40]. This is confirmed in our numerical examples, where we give examples of variational inequalities with pseudomonotone functions which are not monotone (as assumed in the paper of Solodov and Tseng [40]) even in finite-dimensional spaces.

We start the analysis of the algorithm’s convergence by proving the following lemmas

Lemma 3.1

Assume that Conditions 1–2 hold. The Armijo-line search rule (2) is welldefined.

Proof

If xn ∈ VI(C, A) then xn = PC(xn − γAxn) and mn = 0. We consider the situation xn∉VI(C, A) and assume the contrary that for all m we have

By Cauchy-Schwartz inequality, we have

This implies that

We consider two possibilities of xn. First, if xn ∈ C, then since PC and A are continuous, we have \(\lim _{m\to \infty }\|x_{n}-P_{C}(x_{n}-\gamma l^{m} Ax_{n})\|=0.\) From the uniform continuity of the mapping A on bounded subsets of C, it implies that

Assume that zm = PC(xn − γlmAxn) we have

This implies that

Taking the limit m →∞ in (8) and using (7) we obtain

which implies that xn ∈ VI(C, A) is a contraction.

Now, if xn∉C, then we have

and

Combining (4), (9), and (10), we get a contradiction. □

Remark 3.2

-

1.

In the proof of Lemma 3.1, we do not use the pseudo-monotonicity of A.

-

2.

Now, we show that if xn = yn then stop and yn is a solution of VI(C, A). Indeed, we have 0 < λn ≤ γ, which together with Lemma 2.4, we get

$$ 0=\frac{\|x_{n}-y_{n}\|}{\lambda_{n}}=\frac{\|x_{n}-P_{C}(x_{n}-\lambda_{n} Ax_{n})\|}{\lambda_{n}}\geq \frac{\|x_{n}-P_{C}(x_{n}-\gamma Ax_{n})\|}{\gamma}. $$This implies that xn is a solution of VI(C, A), thus yn is a solution of VI(C, A).

-

3.

Next, we show that if Ayn = 0 then stop and yn is a solution of VI(C, A). Indeed, since yn ∈ C, it is easy to see that if Ayn = 0 then yn ∈ VI(C, A).

Lemma 3.2

Assume that Conditions 1–3 hold. Letx∗be a solution of problem (1) and the functionhnbe defined by (5). Thenhn(x∗) ≤ 0 andhn(xn) ≥ (1 − μ)∥xn − yn∥2.In particular, ifxn≠ynthenhn(xn) > 0.

Proof

Since x∗ be a solution of problem (1), using Lemma 2.6 we have

It is implied from (11) and yn = PC(xn − λnAxn) that

The first claim of Lemma 3.2 is proven. Now, we prove the second claim. Using (2), we have

□

Remark 3.3

Lemma 3.2 implies that xn∉Cn. According to Lemma 2.3, then xn+ 1 is of the form

Lemma 3.3

Assume that Conditions 1–3 hold. Let{xn} be a sequence generated by Algorithm 3.1. If there exists asubsequence\(\{x_{n_{k}}\}\)of{xn} such that\(\{x_{n_{k}}\}\)convergesweakly toz ∈ Hand\(\lim _{k\to \infty }\|x_{n_{k}}-y_{n_{k}}\|=0\)thenz ∈ VI(C, A).

Proof

From \(x_{n_{k}}\rightharpoonup z, \lim _{k\to \infty }\|x_{n_{k}}-y_{n_{k}}\|=0\), and {yn}⊂ C, we get z ∈ C. We have \(y_{n_{k}}=P_{C}(x_{n_{k}}-\lambda _{n_{k}}Ax_{n_{k}}) \) thus,

or equivalently

This implies that

Now, we show that

For showing this, we consider two possible cases. Suppose first that \(\liminf _{k\to \infty }\lambda _{n_{k}}>0\). We have \(\{x_{n_{k}}\}\) is a bounded sequence, A is uniformly continuous on bounded subsets of H. By Lemma 2.6, we get that \(\{Ax_{n_{k}}\}\) is bounded. Taking k →∞ in (12) since \(\|x_{n_{k}}-y_{n_{k}}\|\to 0\), we get

Now, we assume that \(\liminf _{k\to \infty }\lambda _{n_{k}}=0\). Assume \(z_{n_{k}}=P_{C}(x_{n_{k}}-\lambda _{n_{k}}.l^{-1}Ax_{n_{k}})\), we have \(\lambda _{n_{k}}l^{-1}>\lambda _{n_{k}}\). Applying Lemma 2.4, we obtain

Consequently, \(z_{n_{k}}\rightharpoonup z\in C\), this implies that \(\{z_{n_{k}}\}\) is bounded, which the uniformly continuity of the mapping A on bounded subsets of H follows that

By the Armijo linesearch rule (2), we must have

By Cauchy-Schwartz inequality, we have

That is,

Combining (14) and (15), we obtain

Furthermore, we have

This implies that

Taking the limit k →∞ in (16), we get

Therefore, the inequality (13) is proven.

On the other hand, we have

Since \(\lim _{k\to \infty }\|x_{n_{k}}-y_{n_{k}}\|=0\) and the uniformly continuity of A on H, we get

which, together with (13) and (17) implies that

Next, we show that z ∈ VI(C, A). Indeed, we choose a sequence {𝜖k} of positive numbers decreasing and tending to 0. For each k, we denote by Nk the smallest positive integer such that

where the existence of Nk follows from (18). Since {𝜖k} is decreasing, it is easy to see that the sequence {Nk} is increasing. Furthermore, for each k, since \(\{y_{N_{k}}\}\subset C\) we have \(Ay_{N_{k}}\ne 0\), and setting

we have \(\langle Ay_{N_{k}}, v_{N_{k}}\rangle = 1\) for each k. Now, we can deduce from (19) that for each k

Since the fact that A is pseudo-monotone, we get

This implies that

Now, we show that \(\lim _{k\to \infty }\epsilon _{k} v_{N_{k}}=0\). Indeed, since \(x_{n_{k}}\rightharpoonup z\) and \(\lim _{k\to \infty }\|x_{n_{k}}-y_{n_{k}}\|=0, \)we obtain \(y_{N_{k}}\rightharpoonup z \text { as} k \to \infty \). Since A is sequentially weakly continuous on C, \(\{ Ay_{n_{k}}\}\) converges weakly to Az. We have that Az≠ 0 (otherwise, z is a solution). Since the norm mapping is sequentially weakly lower semicontinuous, we have

Since \(\{y_{N_{k}}\}\subset \{y_{n_{k}}\}\) and 𝜖k → 0 as k →∞, we obtain

which implies that \(\lim _{k\to \infty } \epsilon _{k} v_{N_{k}} = 0.\)

Now, letting k →∞, then the right hand side of (20) tends to zero by A is uniformly continuous, \(\{x_{N_{k}}\}, \{v_{N_{k}}\}\) are bounded and \(\lim _{k\to \infty }\epsilon _{k} v_{N_{k}}=0\). Thus, we get

Hence, for all x ∈ C we have

By Lemma 2.6, we obtain z ∈ VI(C, A) and the proof is complete. □

Remark 3.4

When the function A is monotone, it is not necessary to impose the sequential weak continuity on A.

Theorem 3.5

Assume that Conditions 1–3 hold. Then any sequence{xn} generated by Algorithm 3.1 converges weakly to an element ofVI(C, A).

Proof

Claim 1

{xn} is a bounded sequence. Indeed, let p ∈ VI(C, A) we have

This implies that

This implies that \(\lim _{n\to \infty }\|x_{n}-p\|\) exists. Thus, the sequence {xn} is bounded and we also have {yn} is bounded.

Claim 2

for some M > 0. Indeed, since {xn},{yn} are bounded, thus {Axn},{Ayn} are bounded, thus there exists M > 0 such that ∥xn − yn − λn(Axn − Ayn)∥≤ M for all n. Using this fact, we get for all u, v ∈ H that

This implies that hn(⋅) is M-Lipschitz continuous on H. By Lemma 2.8, we obtain

which, together with Lemma 3.2, we get

Combining (21) and (22), we obtain

which implies Claim 2 is proved.

Claim 3

The sequence {xn} converges weakly to an element of VI(C, A). Indeed, since {xn} is a bounded sequence, there exists the subsequence \(\{x_{n_{k}}\}\) of {xn} such that \(\{x_{n_{k}}\}\) converges weakly to z ∈ H.

According to Claim 2, we find

It is implied from Lemma 3.3 and (23) that z ∈ VI(C, A).

Therefore, we proved that:

- i)

For every p ∈ VI(C, A), \(\lim _{n\to \infty }\|x_{n}-p\|\) exists;

- ii)

Each sequential weak cluster point of the sequence {xn} is in VI(C, A).

By Lemma 2.7 the sequence {xn} converges weakly to an element of VI(C, A).

□

3.2 Strong convergence

In this section, we introduce an algorithm for strong convergence which is constructed based on viscosity method [35] and modified projection-type methods for solving VIs. In addition, we assume that f : C → H is a contractive mapping with a coefficient ρ ∈ [0,1), and we add the following condition

Condition 4

Let {αn} be a real sequences in (0,1) such that

Theorem 3.6

Assume that Conditions 1–4 hold. Then any sequence{xn} generated by Algorithm 3.2 converges strongly top ∈ VI(C, A),wherep = PVI(C, A) ∘ f(p).

Proof

Claim 1

The sequence {xn} is bounded. Indeed, let \(z_{n}=P_{C_{n}}(x_{n})\), according to Claim 1 in Theorem 3.5, we get

This implies that

Therefore,

This implies that the sequence {xn} is bounded. Consequently, {f(xn)},{yn}, and {zn} are bounded.

Claim 2

Indeed, we have

On the other hand, we have

Substitute (26) into (25), we get

This implies that

Claim 3

Indeed, from the definition of the sequence {xn} and (24) we obtain

This implies that

Claim 4

Indeed, we have

Claim 5

The sequence {∥xn − p∥2} converges to zero. We consider two possible cases on the sequence {∥xn − p∥2}.

Case 1

There exists an \(N\in {\mathbb N}\) such that ∥xn+ 1 − p∥2 ≤∥xn − p∥2 for all n ≥ N. This implies that \(\lim _{n\to \infty }\| x_{n}-p\|^{2}\) exists. It is implied from Claim 2 that

Now, according to Claim 3,

Since the sequence {xn} is bounded, it implies that there exists a subsequence \(\{x_{n_{k}}\}\) of {xn} that weak convergence to some z ∈ C such that

Since \(x_{n_{k}}\rightharpoonup z\) and (28), it implies from Lemma 3.3 that z ∈ VI(C, A). On the other hand,

Thus,

Since p = PVI(C, A)f(p) and \(x_{n_{k}} \rightharpoonup z\in VI(C,A)\), using (29), we get

This implies that

which, together with Claim 4, implies from Lemma 2.10 that

Case 2

There exists a subsequence \(\{\| x_{n_{j}}-p\|^{2}\}\) of {∥xn − p∥2} such that \(\| x_{n_{j}}-p\|^{2} < \| x_{n_{j}+1}-p\|^{2}\) for all \(j\in \mathbb {N}\). In this case, it follows Lemma 2.9 that there exists a nondecreasing sequence {mk} of \(\mathbb {N}\) such that \(\lim _{k\to \infty }m_{k}=\infty \) and the following inequalities hold for all \(k\in \mathbb {N}\):

According to Claim 2, we have

According to Claim 3, we have

Using the same arguments as in the proof of Case 1, we obtain

and

Since (27), we get

which, together with (30), implies that

Therefore, \(\limsup _{k\to \infty }\|x_{k}-p\|\le 0\), that is xk → p. The proof is completed.

□

Applying Algorithm 3.2 with f(x) := x1 for all x ∈ C, we obtain the following corollary.

Corollary 3.7

Givenγ > 0,l ∈ (0,1),μ ∈ (0,1).Letx1 ∈ Cbe arbitrary. Compute

whereλnis chosen to be the largestλ ∈{γ, γl, γl2,...} satisfying

Ifyn = xn then stop and xnis the solution of VIP. Otherwise, compute

where

and

Assume that Conditions 1–4 hold. Then the sequence {xn} converges strongly top ∈ VI(C, A), wherep = PVI(C, A)x1.

4 Numerical illustrations

Some numerical implementations of our proposed methods in this paper are provided in this section. We give the test examples both in finite-dimensional and infinite-dimensional Hilbert spaces and give numerical comparisons in all cases.

In the first two examples, we consider test examples in finite dimensional and implement our proposed Algorithm 3.1. We compare our method with Algorithm 1 of Iusem [19] (Iusem Alg. 1.1).

Example 4.1

Let us consider VIP (1) with

and

This VIP has unique solution x∗ = (0,− 1)T. It is easy to see that A is not a monotone map on C. However, using the Monte Carlo approach (see [18]), it can be shown that A is pseudo-monotone on C. Let x1 be the initial point be randomly generated vector in C, l = 0.1, γ = 2. We terminate the iterations if ∥xn − yn∥2 ≤ ε with ε = 10− 3, ∥.∥2 is the Euclidean norm on \(\mathbb {R}^{2}\). The results are listed in Table 1 and Figs. 1, 2, 3, and 4 below. We consider different values of μ (Table 2).

Example 4.2

Consider VIP (1) with

and

Then A is not monotone on C but pseudo-monotone (see [18]). Furthermore, the VIP (1) has a unique solution x∗ = (2.707,2.707)T. Take l = 0.1, γ = 3, and μ = 0.2. We terminate the iterations if ∥xn − yn∥2 ≤ ε with ε = 10− 2. The results are listed in Table 3 and Figs. 5, 6, 7, and 8 below. We consider different choices of initial point x1 in C.

Next, we give the following two examples in infinite-dimensional spaces to illustrate our proposed Algorithm 2. Here, we compare our proposed Algorithm 2 with the method proposed by Vuong and Shehu in [46] with \(\alpha _{n}=\frac {1}{n+1}\).

Example 4.3

Consider H := L2([0,1]) with inner product \(\langle x,y\rangle :={{\int }_{0}^{1}} x(t)y(t)dt\) and norm \(\|x\|_{2}:=({{\int }_{0}^{1}} |x(t)|^{2}dt)^{\frac {1}{2}}\). Suppose C := {x ∈ H : ∥x∥2 ≤ 2}. Let \(g: C\rightarrow \mathbb {R}\) be defined by

Observe that g is Lg-Lipchitz continuous with \(L_{g}=\frac {16}{25}\) and \(\frac {1}{5}\leq g(u)\leq 1, \forall u \in C\). Define the Volterra integral mapping F : L2([0,1]) → L2([0,1]) by

Then F is bounded linear monotone (see Exercise 20.12 of [4]). Now, define A : C → L2([0,1]) by

As given in [37], A is pseudo-monotone mapping but not monotone since

with v = 1 and u = 2.

Take l = 0.015, γ = 3 and μ = 0.1 (Table 4). We terminate the iterations if ∥xn − PC(xn − A(xn))∥2 ≤ ε with ε = 10− 2. The results are listed in Table 5 and Figs. 9, 10 ,and 11 below. We consider different choices of initial point x1 in C (Table 6).

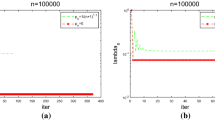

Example 4.4

Take

Define A : L2([0,1]) → L2([0,1]) by

It can also be shown that A is pseudo-monotone but not monotone on H.

Take l = 0.015, γ = 4, and μ = 0.1. We terminate the iterations if ∥xn − PC(xn − A(xn))∥2 ≤ ε with ε = 10− 2. The results are listed in Table 7 and Figs. 12, 13, and 14 below. We consider different choices of initial point x1 in C.

Remark 4.5

-

1.

Our proposed algorithms are efficient and easy to implement evident from many examples provided above.

-

2.

We observe that the choices of initial point x1 and μ have no significant effect on the number of iterations and the CPU time required to reach the stopping criterion. See all the examples above.

-

3.

Clearly from the numerical examples presented above, our proposed algorithms outperformed Algorithm 1 proposed by Iusem both in the number of iterations and CPU time required to reach the stopping criterion. The same observation is seen when compared with the algorithm proposed by Vuong and Shehu in [46].

-

4.

Furthermore, comparison of our proposed algorithms 3.2 and 3.3 are made with both algorithms proposed by Iusem and Vuong and Shehu using the inner loop to obtain λn, see Tables 2, 4, 6, and 8. Again, we could observe great advantages of our proposed algorithms over others.

5 Conclusions

We obtain weak and strong convergence of two projection-type methods for solving VIP under pseudo-monotonicity and non-Lipschitz continuity of the VI-associated mapping A. These two properties emphasize the applicability and advantages over several existing results in the literature. Numerical experiments performed in both finite- and infinite-dimensional spaces real Hilbert spaces show that our proposed methods outperform some already known methods for solving VIP in the literature.

References

Antipin, A.S.: On a method for convex programs using a symmetrical modification of the Lagrange function. Ekon. Mat. Metody. 12, 1164–1173 (1976)

Aubin, J.P., Ekeland, I.: Applied Nonlinear Analysis. Wiley, New York (1984)

Baiocchi, C., Capelo, A.: Variational and Quasivariational Inequalities Applications to Free Boundary Problems. Wiley, New York (1984)

Bauschke, H.H., Combettes, P.L.: Convex Analysis and Monotone Operator Theory in Hilbert Spaces. CMS Books in Mathematics. Springer, New York (2011)

Cegielski, A.: Iterative Methods for Fixed Point Problems in Hilbert Spaces Lecture Notes in Mathematics, vol. 2057. Springer, Berlin (2012)

Censor, Y., Gibali, A, Reich, S.: The subgradient extragradient method for solving variational inequalities in Hilbert space. J. Optim. Theory Appl. 148, 318–335 (2011)

Censor, Y., Gibali, A., Reich, S.: Strong convergence of subgradient extragradient methods for the variational inequality problem in Hilbert space. Optim. Meth. Softw. 26, 827–845 (2011)

Censor, Y., Gibali, A., Reich, S.: Extensions of Korpelevich’s extragradient method for the variational inequality problem in Euclidean space. Optimization 61, 1119–1132 (2011)

Censor, Y., Gibali, A., Reich, S.: Algorithms for the split variational inequality problem. Numer. Algorithms. 56, 301–323 (2012)

Cottle, R.W., Yao, J.C.: Pseudo-monotone complementarity problems in Hilbert space. J. Optim. Theory Appl. 75, 281–295 (1992)

Denisov, S.V., Semenov, V.V., Chabak, L.M.: Convergence of the modified extragradient method for variational inequalities with non-Lipschitz operators. Cybern. Syst. Anal. 51, 757–765 (2015)

Facchinei, F., Pang, J.S.: Finite-Dimensional Variational Inequalities and Complementarity Problems. Springer Series in Operations Research, Vols I And II. Springer, New York (2003)

Fichera, G.: Sul problema elastostatico di Signorini con ambigue condizioni al contorno. Atti Accad. Naz. lincei, VIII. Ser. Rend. Cl. Sci. Fis. Mat. Nat. 34, 138–142 (1963)

Fichera, G.: Problemi elastostatici con vincoli unilaterali: il problema di Signorini con ambigue condizioni al contorno. Atti Accad. Naz. Lincei. Mem. Cl. Sci. Fis. Mat. Nat. Sez. I VIII Ser. 7, 91–140 (1964)

Glowinski, R., Lions, J.L., Trémoliéres, R.: Numerical Analysis of Variational Inequalities. Elsevier, Amsterdam (1981)

Goebel, K., Reich, S.: Uniform Convexity, Hyperbolic Geometry, and Nonexpansive Mappings. Marcel Dekker, New York (1984)

He, Y.R.: A new double projection algorithm for variational inequalities. J. Comput. Appl. Math. 185, 166–173 (2006)

Hu, X., Wang, J.: Solving pseudo-monotone variational inequalities and pseudo-convex optimization problems using the projection neural network. IEEE Trans. Neural Netw. 17, 1487–1499 (2006)

Iusem, A.N.: An iterative algorithm for the variational inequality problem. Comput. Appl. Math. 13, 103–114 (1994)

Iusem, A.N., Svaiter, B.F.: A variant of Korpelevich’s method for variational inequalities with a new search strategy. Optimization 42, 309–321 (1997)

Iusem, A.N., Gárciga Otero, R.: Inexact versions of proximal point and augmented Lagrangian algorithms in Banach spaces. Numer. Funct. Anal. Optim. 22, 609–640 (2001)

Iusem, A.N., Nasri, M.: Korpelevich’s method for variational inequality problems in Banach spaces. J. Glob. Optim. 50, 59–76 (2011)

Kanzow, C., Shehu, Y.: Strong convergence of a double projection-type method for monotone variational inequalities in Hilbert spaces. J. Fixed Point Theory Appl. 20. Article 51 (2018)

Karamardian, S.: Complementarity problems over cones with monotone and pseudo-monotone maps. J. Optim. Theory Appl. 18, 445–454 (1976)

Kinderlehrer, D., Stampacchia, G.: An Introduction to Variational Inequalities and Their Applications. Academic Press, New York (1980)

Kinderlehrer, D., Stampacchia, G.: An Introduction to Variational Inequalities and Their Applications. Academic Press, New York (1980)

Khobotov, E.N.: Modifications of the extragradient method for solving variational inequalities and certain optimization problems. USSR Comput. Math. Math. Phys. 27, 120–127 (1987)

Konnov, I.V.: Combined Relaxation Methods for Variational Inequalities. Springer, Berlin (2001)

Konnov, I.V.: Combined relaxation methods for finding equilibrium points and solving related problems. Russ. Math. (Iz. VUZ). 37, 44–51 (1993)

Korpelevich, G.M.: The extragradient method for finding saddle points and other problems. Ekon. Mat. Metody. 12, 747–756 (1976)

Maingé, P. E.: A hybrid extragradient-viscosity method for monotone operators and fixed point problems. SIAM J. Control Optim. 47, 1499–1515 (2008)

Malitsky, Y.V.: Projected reflected gradient methods for monotone variational inequalities. SIAM J. Optim. 25, 502–520 (2015)

Malitsky, Y.V., Semenov, V.V.: A hybrid method without extrapolation step for solving variational inequality problems. J. Glob. Optim. 61, 193–202 (2015)

Marcotte, P.: Application of Khobotov’s algorithm to variational inequalities and network equilibrium problems. Inf. Syst. Oper. Res. 29, 258–270 (1991)

Moudafi, A.: Viscosity approximating methods for fixed point problems. J. Math. Anal. Appl. 241, 46–55 (2000)

Opial, Z.: Weak convergence of the sequence of successive approximations for nonexpansive mappings. Bull. Amer. Math. Soc. 73, 591–597 (1967)

Shehu, Y., Dong, Q.L., Jiang, D.: Single projection method for pseudo-monotone variational inequality in Hilbert spaces Optimization. https://doi.org/10.1080/02331934.2018.1522636 (2018)

Stampacchia, G.: Formes bilineaires coercitives sur les ensembles convexes. C. R. Acad. Sci. Paris. 258, 4413–4416 (1964)

Solodov, M.V., Svaiter, B.F.: A new projection method for variational inequality problems. SIAM J. Control Optim. 37, 765–776 (1999)

Solodov, M.V., Tseng, P.: Modified projection-type methods for monotone variational inequalities. SIAM J. Control Optim. 34, 1814–1830 (1996)

Thong, D.V., Hieu, D.V.: Weak and strong convergence theorems for variational inequality problems. Numer. Algorithm. 78, 1045–1060 (2018)

Thong, D.V., Hieu, D.V.: Modified subgradient extragradient algorithms for variational inequality problems and fixed point problems. Optimization 67, 83–102 (2018)

Thong, D.V., Hieu, D.V.: Modified subgradient extragradient method for variational inequality problems. Numer. Algorithm. 79, 597–610 (2018)

Thong, D.V., Hieu, D.V.: Inertial extragradient algorithms for strongly pseudomonotone variational inequalities. J. Comput. Appl. Math. 341, 80–98 (2018)

Xu, H.K.: Iterative algorithms for nonlinear operators. J. Lond. Math. Soc. 66, 240–256 (2002)

Vuong, P.T., Shehu, Y.: Convergence of an extragradient-type method for variational inequality with applications to optimal control problems. Numer. Algorithms. https://doi.org/10.1007/s11075-018-0547-6 (2018)

Acknowledgments

The authors would like to thank Professor Aviv Gibali and two anonymous reviewers for their comments on the manuscript which helped us very much in improving and presenting the original version of this paper.

Author information

Authors and Affiliations

Corresponding author

Additional information

Dedicated to Professor Le Dung Muu on the occasion of his 70th birthday

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Thong, D.V., Shehu, Y. & Iyiola, O.S. Weak and strong convergence theorems for solving pseudo-monotone variational inequalities with non-Lipschitz mappings. Numer Algor 84, 795–823 (2020). https://doi.org/10.1007/s11075-019-00780-0

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11075-019-00780-0

Keywords

- Projection-type method

- Variational inequality

- Viscosity method

- Pseudo-monotone mapping

- Non-Lipschitz mapping