Abstract

This paper investigates the positive effects of delay-dependent impulses on the synchronization of delayed memristor neural networks (DMNNs). Different from traditional impulsive control, the impulsive sequence in this paper is assumed to have the Markovian property and is not always stabilizing. Based on a useful inequality, a mean square synchronization criterion is derived under this kind of impulsive effect. It is shown that the stochastic impulses can act as an impulsive controller if they are stabilizing in an “average” sense. The validity of the theoretical results is illustrated by a numerical example.


1 Introduction

Nowadays, neural networks (NNs) have become a hot research topic due to their wide applications [1,2,3,4]. In 1971, the concept of the memristor was first proposed, and some of its properties were discussed at the theoretical level [5]. The memristor, as a new passive two-terminal circuit element, has been applied in designing integrated circuits and artificial NNs due to its good properties, such as low energy consumption, nanoscale size, memory capability and a good ability to mimic the human brain. Hence, memristor neural networks (MNNs) have recently been designed to emulate the human brain [6].

In recent years, synchronization, as a typical dynamical behavior of the NNs, has been studied extensively [7,8,9,10,11]. Compared with the traditional continuous NNs, the synchronization of MNNs is more difficult to investigate because of the switching characteristics of the connection weights [6, 12, 13]. In addition, the internal parameters of memristor, such as length, cross-sectional area and heterogeneity, may also affect the performance of memristor. In [13], the synchronization issue of MNNs with the uncertain parameter is addressed by using the theory of differential inclusion. In [14], by constructing Lyapunov-Krasovskii functionals, two effective synchronization criteria are provided for the coupled MNNs.

In many real networked systems, information transmission and exchange between neighboring nodes suffer from uncertain factors, such as network attacks [15, 16] or communication delay [17,18,19]. Undoubtedly, time delay has become an important factor that should be considered in the synchronization problem of MNNs [12, 20]. In [12], it has been shown that fixed-time synchronization of delayed MNNs (DMNNs) can be achieved by designing state-feedback controllers and adaptive controllers, respectively. In [20], the conservatism of synchronization criteria is reduced by using the average impulsive interval (AII) approach.

Impulsive effects, whether artificial or natural, are common in real networks [21]. In the past few years, more and more attention has been paid to the synchronization of NNs with impulsive effects [22]. On the one hand, impulsive control is an effective and economical control technique in networked systems, since it is a discontinuous control that is applied to the nodes only at some discrete time instants [2, 23, 24]. On the other hand, a real-world network may suffer from impulsive disturbances. Generally, according to the impulsive intensity, impulses can be divided into two types: synchronizing impulses and desynchronizing impulses [25, 26]. In [27,28,29], a unified synchronization criterion for impulsive dynamical networks is proposed based on the AII, where the impulsive strengths are assumed to be deterministic constants.

As is well known, when the impulsive intensity is assumed to be deterministic, synchronization of a network can be destroyed by desynchronizing impulses, and a pre-designed impulsive controller can synchronize an asynchronous network [30,31,32]. However, impulsive disturbances are often stochastic, and an impulsive controller can also exhibit randomness because of unstable factors. Hence, it seems more reasonable to assume that the impulsive strengths are stochastic [33,34,35]. The above classification may no longer be applicable when the impulsive strengths are not deterministic. An interesting question naturally arises: can a stochastic impulsive sequence act as an impulsive controller? If yes, what conditions should be satisfied?

Inspired by the discussions above, this paper focuses on the positive effects that stochastic impulses might have on synchronizing DMNNs. The main contributions of this paper are as follows:

(1) A useful inequality is proposed in this paper which enriches the famous Halanay inequality. Compared with the previous result in [17], it is no longer required that all impulsive strengths are a fixed constant.

(2) Different from [17, 27], the impulsive sequence in this paper is assumed to have the Markovian property. An easy-to-verify criterion is derived to guarantee the mean square synchronization of the concerned DMNNs.

(3) Discussions on the synchronization criterion are made in this paper. It is revealed that the stochastic impulsive sequence can act as a controller and contribute to the synchronous behavior. Unlike the traditional impulsive controller [17], impulses in this paper only need to be stabilizing in an “average” sense, rather than always be stabilizing.

Notations: See Table 1.

2 Preliminaries & Model Description

In this section, some assumptions, definitions, lemmas and the model description are given so as to get the main results.

Definition 1

[23]. Consider \(\frac{{\text {d}}x}{{\text {d}}t}=\digamma (t,x)\), where \(\digamma (t,x)\) is discontinuous in x. Define the set-valued map of \(\digamma (t,x)\) as

\[\varPhi (t,x)=\bigcap _{\varkappa >0}\,\bigcap _{\mu ({\mathcal {N}})=0}{\bar{co}}\left[ \digamma \left( t,{\mathcal {B}}(x,\varkappa )\setminus {\mathcal {N}}\right) \right] ,\]

where \({\mathcal {B}}(x,\varkappa )=\{y:||y-x||\le \varkappa \}\) and \(\mu ({\mathcal {N}})\) is the Lebesgue measure of the set \({\mathcal {N}}\). For this system, a Filippov solution x(t) with initial condition \(x(0)=x_0\) is a function that is absolutely continuous on any subinterval \([t_1, t_2]\) of [0, T] and satisfies the differential inclusion \(\frac{{\text {d}}x}{{\text {d}}t}\in \varPhi (t, x)\) for almost every \(t\in [0, T]\).

Definition 2

[27]. (Average Impulsive Interval (AII)) \(T_a\) is said to be the AII of the impulsive sequence \(\zeta =\{t_1,t_2,\cdots \}\), if there exist a positive integer \(\daleth _0\) and a positive scalar \(T_a\) such that

\[\frac{T-t}{T_a}-\daleth _0\le \daleth _\zeta (t,T)\le \frac{T-t}{T_a}+\daleth _0,\quad \forall \, T\ge t\ge 0,\]

where \(\daleth _\zeta (t,T)\) stands for the number of impulses during the time interval (t, T).

Remark 1

The concept of AII reduces the conservatism for the synchronization of the NNs under impulsive effects since the positive number \(T_a\) can be used to estimate the number of impulses during the time interval (t, T).
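To make the counting role of \(T_a\) concrete, the following minimal sketch counts impulses in a window and measures how far the count deviates from \((T-t)/T_a\). It assumes the AII bound takes the usual two-sided form \(|\daleth _\zeta (t,T)-(T-t)/T_a|\le \daleth _0\) from [27]; the function names, the evaluation grid and the periodic impulse sequence are hypothetical choices made only for illustration.

```python
from bisect import bisect_left, bisect_right

def count_impulses(times, t, T):
    """Number of impulse instants t_k with t < t_k < T (times must be sorted)."""
    return max(0, bisect_left(times, T) - bisect_right(times, t))

def aii_deviation(times, T_a, horizon, step=0.05):
    """Largest |N(t, T) - (T - t) / T_a| over a grid of windows in [0, horizon]."""
    grid = [i * step for i in range(int(round(horizon / step)) + 1)]
    worst = 0.0
    for a, t in enumerate(grid):
        for T in grid[a + 1:]:
            n = count_impulses(times, t, T)
            worst = max(worst, abs(n - (T - t) / T_a))
    return worst

# Hypothetical periodic impulse instants with period 1.05 (illustration only).
times = [1.05 * k for k in range(1, 20)]
dev = aii_deviation(times, T_a=1.05, horizon=20.0)
print(dev)  # any integer N_0 >= dev is compatible with the assumed bound on this grid
```

For a strictly periodic sequence the deviation never exceeds one impulse, so \(\daleth _0 = 1\) would suffice here; irregular sequences with the same \(T_a\) generally need a larger \(\daleth _0\).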

Definition 3

[17] (Average Impulsive Delay(AID)) \({\bar{\tau }} > 0\) is called the AID of a sequence of impulsive delays \(\{\tau _k\}_{k \in {\mathbb {Z}}_+}\), if there is a constant \(\tau ^{(0)} > 0\) satisfying

This paper considers the DMNNs described by the following equations:

where \(x_i(t)=(x_{i1}(t),\cdots ,x_{in}(t))^T\in {\mathbb {R}}^n\) denotes the state variable of the ith neuron at time t, the matrix \(D>0\). \(A(x_i(t)) = (a_{lj}(x_{ij}(t)))_{n \times n}\) and \(B(x_i(t)) = (b_{lj}(x_{ij}(t)))_{n \times n}\) indicate the connection weight matrix and the delayed connection weight matrix, respectively. \(f(\cdot )\) represents the activation function. \({\bar{I}}(t)\) denotes the external input, and \(\tau \) is a positive constant which represents the transmission delay. \({\mathcal {L}}_H = (H_{ij})_{N \times N}\) is the negative Laplacian matrix of the DMNNs. The initial condition of system (1) is given by \(x_i (t)=\varphi _i(t)\in {{\mathbb {P}}}{{\mathbb {C}}}([-\tau , 0],{\mathbb {R}}^n)\).

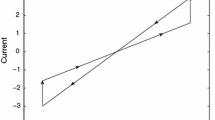

According to the typical current-voltage characteristics of the memristor (see Fig. 1), \(a_{lj}(x_{ij}(t))\) and \(b_{lj}(x_{ij}(t))\) in (1) are defined as

and

Here, \({T_j>0}\) are memristive switching rules, \({{\hat{a}}_{lj}}\), \({{\check{a}}_{lj}}\), \({{\hat{b}}_{lj}}\), \({{\check{b}}_{lj}}\) are constants relating to memristance. For further discussion, based on [23] and Definition 1, the system (1) can be rewritten as:

where \({\bar{co}}[A(x_i(t))]=[{\underline{A}}, {\bar{A}}]\), \({\bar{co}}[B(x_i(t))]=[{\underline{B}}, {\bar{B}}]\), \({\underline{A}} = (\acute{a}_{lj})_{n \times n}\), \({\bar{A}} = (\grave{a}_{lj})_{n \times n}\), \({\underline{B}} = (\acute{b}_{lj})_{n \times n}\), \({\bar{B}} = (\grave{b}_{lj})_{n \times n}\), \(\acute{a}_{lj} \triangleq \min \{{\hat{a}}_{lj}, {\check{a}}_{lj}\}\), \(\grave{a}_{lj} \triangleq \max \{{\hat{a}}_{lj}, {\check{a}}_{lj}\}\), \(\acute{b}_{lj} \triangleq \min \{{\hat{b}}_{lj}, {\check{b}}_{lj}\}\), \(\grave{b}_{lj} \triangleq \max \{{\hat{b}}_{lj}, {\check{b}}_{lj}\}\).

Let \(a_{lj}^*=\frac{1}{2}(\grave{a}_{lj}+\acute{a}_{lj})\) and \(b_{lj}^*=\frac{1}{2}(\grave{b}_{lj}+\acute{b}_{lj})\) denote the midpoints of the intervals \([\acute{a}_{lj},\grave{a}_{lj}]\) and \([\acute{b}_{lj}, \grave{b}_{lj}]\), and let \(a_{lj}^{**}=\frac{1}{2}(\grave{a}_{lj}-\acute{a}_{lj})\) and \(b_{lj}^{**}=\frac{1}{2}(\grave{b}_{lj}-\acute{b}_{lj})\) denote the corresponding half-lengths. By denoting \(A^*\triangleq (a_{lj}^*)_{n \times n}\) and \(B^*\triangleq (b_{lj}^*)_{n \times n}\), we can rewrite system (2) as follows:
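As a small illustration of the midpoint and half-length decomposition, the sketch below computes both matrices entrywise from the two memristive weight levels. The function name and the numerical weights are hypothetical and are not taken from the paper.

```python
def interval_decomposition(hat, check):
    """Entrywise midpoints and half-lengths of [min(hat, check), max(hat, check)]."""
    n = len(hat)
    mid = [[0.0] * n for _ in range(n)]
    half = [[0.0] * n for _ in range(n)]
    for l in range(n):
        for j in range(n):
            lo = min(hat[l][j], check[l][j])   # lower end of the interval
            hi = max(hat[l][j], check[l][j])   # upper end of the interval
            mid[l][j] = 0.5 * (hi + lo)        # a*_{lj}
            half[l][j] = 0.5 * (hi - lo)       # a**_{lj}
    return mid, half

# Hypothetical weight levels for a 2 x 2 example.
a_hat = [[1.0, -0.5], [0.25, 2.0]]
a_check = [[0.5, -1.5], [0.75, 2.0]]
A_star, A_half = interval_decomposition(a_hat, a_check)
print(A_star)  # [[0.75, -1.0], [0.5, 2.0]]
print(A_half)  # [[0.25, 0.5], [0.25, 0.0]]
```

Every admissible weight then lies within one half-length of the corresponding midpoint, which is what allows the switching weights to be treated as a nominal matrix plus a bounded uncertainty.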

where \(R_A = E_A \Sigma _A F_A\), \(R_B = E_B \Sigma _B F_B\), \(\Sigma _{A,B} \in \Sigma \), and

where \(e_i\) stands for the i-th column of the identity matrix \(I_n\).

To deal with the uncertain terms \(R_A\) and \(R_B\), let \(E = [E_A, E_B]\) and

Then, we can recast system (2) as

Throughout this paper, the following assumptions are made.

Assumption 1

The function f is Lipschitz continuous. That is, for some constant \(\varpi > 0\) and \(\forall y_1,\,y_2 \in {\mathbb {R}}^n\), \(\Vert f(y_1) - f(y_2) \Vert \le \varpi \Vert y_1 - y_2 \Vert \).

Assumption 2

\({\mathcal {L}}_H\) is symmetric and irreducible.

This paper focuses on how a stochastic impulsive sequence can promote the synchronization of network (1). Due to limited transmission speed, imperfect pulse output devices and other factors, time delay inevitably occurs in real-world networks. For this reason, we consider the following delayed impulsive effect:

where \(\zeta = \{t_k\}_{k=1,2,\cdots }\) is a strictly increasing sequence which represents the impulsive instants subject to Definition 2, and \({\lim _{k \rightarrow +\infty }t_k=\infty }\). \(\tau _k\) is a delay sequence as described in Definition 3. Moreover, it is assumed that \(t_k - t_{k-1} \ge \tau > \tau _k \ge 0\), \(\forall k \in {\mathbb {Z}}_+\). \(\{\sigma _k\}\) is the sequence of impulsive strengths, and satisfies the following assumption.

Assumption 3

Let \(r_k \triangleq r(t_k)\) be a discrete Markov chain which is independent of the initial conditions \(\varphi _i(\cdot )\). \(\varOmega = \{1,2,\cdots ,\beta \}\) is the set of states from which \(r(t_k)\) takes its values. \({\mathcal {P}} = (p_{ij})_{\beta \times \beta }\) and \(\Pi _1 = (\pi _{11},\cdots ,\pi _{1\beta })\) are the transition matrix and the initial distribution of \(r(t_k)\), respectively. \(r(t_k)\) determines the impulsive strength at \(t_k\), that is, \(\sigma _k = \sigma ^{(r_k)}\), where \(\sigma ^{(i)} > 0\), \(i = 1,\cdots ,\beta \), are \(\beta \) distinct constants representing different impulsive strengths.
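A sequence of impulsive strengths of this kind can be generated as in the following minimal sketch. The transition matrix, initial distribution and strengths are borrowed from Example 1 purely for illustration; the function name, the seed and the 0-based state indexing are assumptions of the sketch.

```python
import random

def sample_strengths(P, pi1, sigma, num_impulses, seed=0):
    """Draw sigma_1, ..., sigma_K from a Markov chain with transition matrix P.

    States are indexed 0..beta-1 here, whereas the paper uses 1..beta.
    """
    rng = random.Random(seed)
    states = list(range(len(sigma)))
    r = rng.choices(states, weights=pi1)[0]       # r_1 drawn from Pi_1
    strengths = [sigma[r]]
    for _ in range(num_impulses - 1):
        r = rng.choices(states, weights=P[r])[0]  # r_{k+1} drawn from row r_k of P
        strengths.append(sigma[r])
    return strengths

P = [[0.7, 0.3], [0.8, 0.2]]   # transition matrix used in Example 1
pi1 = [0.8, 0.2]               # initial distribution used in Example 1
sigma = [0.6, 1.05]            # impulsive strengths sigma^(1), sigma^(2)
strengths = sample_strengths(P, pi1, sigma, num_impulses=1000)
print(strengths[:10])
```

Each draw depends only on the previous mode, so a given realization may contain runs of the destabilizing strength 1.05 even though the stabilizing strength 0.6 is more likely at every step.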

By combining (5) with (6), we can obtain the following DMNNs with delay-dependent impulsive effect:

Remark 2

Under Assumption 3, the impulsive network (7) can be regarded as a Markovian mode-jump system which switches among \(\beta \) different “impulsive modes” \(\sigma ^{(1)},\cdots ,\sigma ^{(\beta )}\). As mentioned in [37], systems with Markovian jump can be used to describe many real-world applications, such as economic systems, chemical systems, power systems and so on. Dynamic systems that experience random abrupt variations in their structures or parameters can be well described by Markovian jump systems. Hence, it is meaningful to study impulsive DMNNs with such Markovian property.

To proceed, we introduce \({\bar{x}}(t) \triangleq \frac{1}{N}\sum _{i=1}^{N}x_i(t)\) as the average state. Denote the synchronization error as \(\varepsilon _i(t)=x_i(t)-{\bar{x}}(t)\) and define \(\varepsilon (t) \triangleq (\varepsilon _1^T (t),\cdots ,\varepsilon _N^T (t))^T\). The following definition and lemmas are needed in the rest of this paper.
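As a brief aside before those statements, the averaging step can be sketched numerically. The snippet below computes the average state, the errors \(\varepsilon _i\), and the quadratic function \(\frac{1}{2}\sum _{i}\varepsilon _i^T\varepsilon _i\) that is used as the Lyapunov candidate in Sect. 3. The function names and the three node states are hypothetical.

```python
def sync_error(states):
    """Return the average state x_bar and the errors eps_i = x_i - x_bar."""
    N = len(states)
    n = len(states[0])
    x_bar = [sum(x[d] for x in states) / N for d in range(n)]
    eps = [[x[d] - x_bar[d] for d in range(n)] for x in states]
    return x_bar, eps

def lyapunov(eps):
    """V = (1/2) * sum_i eps_i^T eps_i."""
    return 0.5 * sum(e_d * e_d for e in eps for e_d in e)

# Hypothetical snapshot of N = 3 nodes with n = 2 states each.
states = [[1.0, 2.0], [3.0, 0.0], [2.0, 4.0]]
x_bar, eps = sync_error(states)
print(x_bar)          # [2.0, 2.0]
print(eps)            # [[-1.0, 0.0], [1.0, -2.0], [0.0, 2.0]]
print(lyapunov(eps))  # 5.0
```

By construction the errors sum to zero in every coordinate, and V vanishes exactly when all nodes share the same state.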

Definition 4

System (7) is said to achieve global exponential synchronization (GES) in mean square, if

where M and \(\chi \) are two positive constants.

Lemma 1

Assume that there exist \({\underline{\mu }} > 0\) and \({\bar{\mu }} \ge 1\) such that \({\underline{\mu }} \le \mu _k \le {\bar{\mu }}\). Let \(w(\cdot ) \in {\mathbb {R}}\) be a piecewise continuous function satisfying

where \(q_1 \in {\mathbb {R}}\), \(q_2 \in {\mathbb {R}}_+\), \(t_k-t_{k-1} \ge \tau \ge \tau _k \ge 0\). Then, it holds that

where constant \({\bar{\kappa }} > 0\) satisfies \(-q_1 + \frac{{\bar{\mu }}}{{\underline{\mu }}}q_2 - {\bar{\kappa }} < 0\).

Proof

It directly follows from Theorem 3.9 in [17] that (9) is true on \([t_0-\tau , t_1)\). Assume (9) is true for all \(k \le m-1\), then

It can be shown by contradiction that (9) is true for \(k=m\). Otherwise, there must exist a \(\acute{t} \in [t_m, t_{m+1})\) such that (9) is true on \([t_0, \acute{t})\),

and

But according to (8), (9) and \(t_m - t_{m-1} \ge \tau \ge \tau _k\), we have

which contradicts (12). The proof is completed. \(\square \)

Remark 3

In particular, if \(\mu _k \equiv \mu _0 \in (0,1)\), then Lemma 1 reduces to Theorem 3.9 in [17] by letting \({\underline{\mu }} = \mu _0\) and \({\bar{\mu }} = 1\). Hence, Lemma 1 can be seen as a generalization of the previous results in [17].

Lemma 2

[36] Assume that a matrix \(G = (g_{ij})_{n \times n} \in {\mathbb {R}}^{n \times n}\) satisfies the following conditions:

(a) G is irreducible and symmetric;

(b) \(\forall i \ne j\), \(g_{ij} \ge 0\);

(c) \(g_{ii} = -\sum _{j=1,j \ne i}^{n}g_{ij}\), \(\forall i=1,\cdots ,n\).

Then,

(1) All eigenvalues of G are non-positive;

(2) G has an eigenvalue 0 with multiplicity 1. In addition, \((1,\cdots ,1)^T\) is an eigenvector of 0.
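The two conclusions can be checked by hand on a small example. The sketch below uses a hypothetical 3-node path graph and verifies that the all-ones vector is mapped to zero and that the quadratic form \(x^TGx\) equals \(-\frac{1}{2}\sum _{i\ne j}g_{ij}(x_i-x_j)^2\), which is non-positive under (b). This is a numerical illustration only; it does not check the multiplicity claim, which relies on irreducibility.

```python
import random

def quad_form(G, x):
    """x^T G x for a square matrix G given as nested lists."""
    n = len(G)
    return sum(G[i][j] * x[i] * x[j] for i in range(n) for j in range(n))

def edge_sum(G, x):
    """-(1/2) * sum_{i != j} g_ij (x_i - x_j)^2, equal to x^T G x under (a)-(c)."""
    n = len(G)
    return -0.5 * sum(G[i][j] * (x[i] - x[j]) ** 2
                      for i in range(n) for j in range(n) if i != j)

# Hypothetical matrix: negative Laplacian of the path graph 1 - 2 - 3.
G = [[-1.0, 1.0, 0.0],
     [1.0, -2.0, 1.0],
     [0.0, 1.0, -1.0]]
ones = [1.0, 1.0, 1.0]
G_ones = [sum(G[i][j] * ones[j] for j in range(3)) for i in range(3)]

rng = random.Random(1)
samples = [[rng.uniform(-5, 5) for _ in range(3)] for _ in range(100)]
max_form = max(quad_form(G, x) for x in samples)
max_gap = max(abs(quad_form(G, x) - edge_sum(G, x)) for x in samples)
print(G_ones)    # [0.0, 0.0, 0.0]
print(max_form)  # non-positive up to rounding
print(max_gap)   # close to zero
```

Since G is symmetric, a non-positive quadratic form is equivalent to all eigenvalues being non-positive, which is conclusion (1).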

3 Main Result

For the sake of convenience, we denote \(q_1 = c \iota - \lambda _{max}\{-D+\frac{1}{2}B^*B^{*T} + \frac{1}{2} EE^T\} - \varpi \Vert A^*\Vert - \frac{\varpi ^2}{2}\Vert F_A \Vert ^2\) and \(q_2 = \frac{\varpi ^2}{2}(1+\Vert F_B \Vert ^2)\), where \(c,\,\varpi ,\,A^*,\,B^*,\,F_A,\,E,\,F_B\) are given in Sect. 2, and \(\iota = -\lambda _2({\mathcal {L}}_H)\) is the negative of the second-largest eigenvalue of \({\mathcal {L}}_H\).

Now we are ready to provide the main theoretical result.

Theorem 1

Suppose that Assumptions 1–3 hold for DMNNs (1). Let \({\bar{\kappa }} > 0\) satisfy \(-q_1 + \frac{{\bar{\sigma }}^2}{{\underline{\sigma }}^2} q_2 - {\bar{\kappa }} < 0\). Then, system (1) under impulsive effect (6) achieves GES in mean square if

and

where \({\bar{\sigma }} = \max _{i \in \varOmega }\{1, \sigma ^{(i)}\}\), \({\underline{\sigma }} = \min _{i \in \varOmega } \{\sigma ^{(i)}\}\) and \({\widetilde{\mu }} = \sum _{l=1}^{\beta } [(\sigma ^{(l)})^2 \max _{i \in \varOmega } \{p_{il}\}]\).
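The quantity \({\widetilde{\mu }}\) can be computed directly from the transition matrix and the strengths. Because \({\widetilde{\mu }}\) uses the column-wise maximum of \({\mathcal {P}}\), it is an upper bound on the conditional second moment \(\sum _{l}p_{il}(\sigma ^{(l)})^2\) for every current mode i. The sketch below checks this with the data of Example 1; the function names and the 0-based indexing are assumptions of the sketch.

```python
def mu_tilde(P, sigma):
    """sum_l (sigma^(l))^2 * max_i p_il, as defined in Theorem 1."""
    beta = len(sigma)
    return sum(sigma[l] ** 2 * max(P[i][l] for i in range(beta))
               for l in range(beta))

def conditional_second_moments(P, sigma):
    """For each mode i, sum_l p_il * (sigma^(l))^2."""
    beta = len(sigma)
    return [sum(P[i][l] * sigma[l] ** 2 for l in range(beta))
            for i in range(beta)]

P = [[0.7, 0.3], [0.8, 0.2]]   # transition matrix from Example 1
sigma = [0.6, 1.05]            # impulsive strengths from Example 1
mt = mu_tilde(P, sigma)
moments = conditional_second_moments(P, sigma)
print(mt)       # approximately 0.61875
print(moments)  # approximately [0.58275, 0.5085]
```

Both conditional moments stay below \({\widetilde{\mu }}\), and \({\widetilde{\mu }}\) itself is below 1 even though \(\sigma ^{(2)} > 1\).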

Proof

Recall that \(\varepsilon _i(t)=x_i(t)-\frac{1}{N}\sum _{j=1}^{N}x_j(t)\) and construct a Lyapunov candidate function \(V(t) = \frac{1}{2} \sum _{i=1}^{N}\varepsilon _i^T (t) \varepsilon _i(t)\). By repeatedly using the fact that \(\sum _{i=1}^{N}\varepsilon _i(t) = 0\), the derivative of V(t) on \([t_k, t_{k+1})\) can be estimated as

Recalling (4) and \(E = [E_A, E_B]\), we have

which further indicates that

where Assumption 1 is used. Considering Assumption 2, \({\mathcal {L}}_H\) can be decomposed as

where \(U = (u_1,u_2,\cdots ,u_N)\) is an orthogonal matrix, \(\Lambda _H = {\text {diag}}\{\lambda _1({\mathcal {L}}_H),\lambda _2({\mathcal {L}}_H),\cdots ,\lambda _N({\mathcal {L}}_H)\}\), and \(0=\lambda _1({\mathcal {L}}_H)>\lambda _2({\mathcal {L}}_H)\ge \cdots \ge \lambda _N({\mathcal {L}}_H)\) are the eigenvalues of \({\mathcal {L}}_H\). In addition, \(u_1 = \frac{1}{\sqrt{N}}(1,\cdots ,1)^T\) according to Lemma 2. Letting \(y(t) = (U^T \otimes I_n) \varepsilon (t) = (y_1^T(t),\cdots ,y_N^T(t))^T\), we have \(y_1(t) = (u_1^T \otimes I_n) \varepsilon (t) = \frac{1}{\sqrt{N}}\sum _{i=1}^{N}\varepsilon _i(t) = 0\). Hence,

On the other hand, according to Assumption 2, for any two nodes i and j, there is at least one path between i and j. More precisely, there exist some integers \(m_1,\,m_2,\,\cdots ,\,m_s\), such that \(H_{im_1}>0\), \(H_{m_1 m_2}>0\), \(\cdots \), \(H_{m_s j}>0\). Hence, at an impulsive instant \(t_k\), we can infer from (7) that

According to (21) and (22), V(t) satisfies all conditions in Lemma 1 by letting \(\sigma _k^2 = \mu _k\), \({\bar{\sigma }}^2 = {\bar{\mu }}\) and \({\underline{\sigma }}^2 = {\underline{\mu }}\). Hence, for \({\bar{\kappa }} > -q_1 + \frac{{\bar{\mu }}}{{\underline{\mu }}}q_2\), we have

Taking expectation on both sides of (23), we have

Define \(\mu ^{(i)} = (\sigma ^{(i)})^2,\,i = 1,\cdots ,\beta \). Considering Assumption 3, we have

By (24), (25) and the fact that \({\widetilde{\mu }} < 1\), we have

where the second and third inequalities use the AII and AID assumptions, \(\rho _{{\bar{\kappa }}}\) is defined in (15). This completes the proof. \(\square \)

Remark 4

In order to achieve synchronization, the impulsive strengths should be stabilizing in an “average” sense, as shown in (14). It is worth mentioning that (14) does not require \(\mu ^{(i)} < 1\) for all \(i \in \varOmega \), which is different from traditional impulsive controllers [17].

Remark 5

According to (15), small \({\bar{\kappa }}\) and \({\widetilde{\mu }}\) are helpful for synchronous behavior of the network. This can be explained by the following physical meaning: smaller \({\bar{\kappa }}\) means a better dynamic property of the network without impulses, while smaller \({\widetilde{\mu }}\) indicates higher costs at an impulsive instant. Another interesting fact is that the impulsive delay \({\bar{\tau }}\) may promote synchronization in some way according to (15). In fact, (15) is equivalent to

Obviously, a larger \({\bar{\tau }}\) is preferred in order to meet the requirement of (15).
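The role of \({\bar{\tau }}\) can be quantified with a short calculation. The sketch below assumes the condition in the form evaluated in Example 1, namely \({\bar{\kappa }}({\bar{\tau }} - T_a) - \ln {{\widetilde{\mu }}} > 0\), and solves it for the smallest admissible average delay, \({\bar{\tau }} > T_a + \ln ({\widetilde{\mu }})/{\bar{\kappa }}\). The function name is hypothetical, and the numbers are those of Example 1.

```python
import math

def min_average_delay(kappa_bar, T_a, mu_tilde):
    """Threshold on tau_bar implied by kappa_bar*(tau_bar - T_a) - ln(mu_tilde) > 0."""
    return T_a + math.log(mu_tilde) / kappa_bar

# Values from Example 1: kappa_bar = 6.8236, T_a = 1.05, mu_tilde = 0.61875.
tau_min = min_average_delay(6.8236, 1.05, 0.61875)
print(tau_min)          # roughly 0.9796
print(0.98 > tau_min)   # True: the delay used in Example 1 passes
print(0.0 > tau_min)    # False: with no impulsive delay the condition fails
```

With these values the sufficient condition holds only for average delays slightly above 0.9796. Failing the condition does not by itself prove that synchronization is lost, since the criterion is only sufficient.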

Remark 6

It should be mentioned that the criterion in Theorem 1 is only a sufficient condition and therefore carries some conservatism, which is mainly caused by the inequality relaxations used in the proof.

An important special case of Theorem 1 is when all \(\tau _k\) are the same, that is, \(\tau _k \equiv \tau _1\). In this case, we have the following two corollaries by utilizing Theorem 1.

Corollary 1

In Theorem 1, let the impulsive delays \(\tau _k \equiv \tau _1\). Then, system (1) under impulsive effect (6) is globally exponentially synchronized, if

and

where the parameters \({\widetilde{\mu }}\), \({\bar{\kappa }}\) and \(T_a\) are the same as in Theorem 1.

Proof

When \(\tau _k \equiv \tau _1\), we have \({\bar{\tau }} = \tau _1\). The proof can be completed by directly using Theorem 1. \(\square \)

Corollary 2

Let the impulsive delays \(\tau _k \equiv 0\) in Theorem 1. Then, system (1) under impulsive effect (6) is globally exponentially synchronized, if

and

where the parameters \({\widetilde{\mu }}\), \({\bar{\kappa }}\) and \(T_a\) are the same as in Theorem 1.

When the impulsive sequence has no randomness, we have the following corollary.

Corollary 3

Let the impulsive strengths \(\sigma _k \equiv \sigma _1\) in Theorem 1. Then, system (1) under impulsive effect (6) is globally exponentially synchronized, if

and

where the parameters \({\bar{\tau }}\), \({\bar{\kappa }}\) and \(T_a\) are the same as in Theorem 1.

Proof

Noticing that \({\widetilde{\mu }} = \sigma _1^2\), the proof is trivial. \(\square \)

4 Numerical Example

This section gives a simple example to illustrate the validity of our theoretical results. Moreover, the case of \({\bar{\tau }} = 0\) is also simulated to show how the impulsive delay promotes synchronization.

Example 1

Consider DMNNs (1) with \(N=50\) and \(n=1\). \(A(x_i (t))\) and \(B(x_i (t))\) are chosen to be

and

Moreover, \(D = 0.5\), \(f(x_i) = 1.5x_i + 0.5\tanh {(x_i)}\), \(\tau = 1\), \({\bar{I}}(t) = 0\), \(c = 1\), \({\mathcal {L}}_H\) is randomly generated with \(\lambda _2({\mathcal {L}}_H) = -0.6239\) and the initial conditions are randomly chosen from \([-5,5]\). Figure 2 shows the state trajectories of all nodes when the DMNNs are free from impulsive effects. It can be seen that the system is not synchronized without impulses.

Fig. 2 State trajectories of all nodes without impulsive effect in Example 1

Now we exert the delay-dependent impulsive effect (6) on (1). Let \(\sigma ^{(1)} = 0.6\), \(\sigma ^{(2)} = 1.05\). The initial distribution and the transition matrix are \(\Pi _1 = (0.8,0.2)\) and \({\mathcal {P}} = \begin{bmatrix} 0.7 &{} 0.3\\ 0.8 &{} 0.2 \end{bmatrix}\), respectively. Furthermore, we choose \(T_a = 1.05\) and \({\bar{\tau }} = 0.98\). Figure 3a shows the randomly generated impulsive sequence, and Fig. 3b is the impulsive delays.

Fig. 3 Impulsive strengths and delays in Example 1

Using the parameters above, we easily calculate that \(q_1 = -0.0761\), \(q_2 = 2.2000\), \({\widetilde{\mu }} = 0.6187\), \({\bar{\sigma }} = 1.05\) and \({\underline{\sigma }} = 0.6\). Choosing \({\bar{\kappa }} = 6.8236\), which satisfies \(-q_1 + \frac{{\bar{\sigma }}^2}{{\underline{\sigma }}^2} q_2 - {\bar{\kappa }} = -0.01 < 0\), we obtain \({\bar{\kappa }}({\bar{\tau }} - T_a) - \ln {{\widetilde{\mu }}} = 0.0024 > 0\). By applying Theorem 1, the DMNNs (1) under delayed impulsive effect (6) can achieve synchronization, which can be seen from Fig. 4.

Fig. 4 State trajectories of all nodes under delayed impulsive effects in Example 1
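The arithmetic behind the two conditions checked in Example 1 can be reproduced as follows. This is a minimal sketch that takes \(q_1\) and \(q_2\) as reported in the text rather than recomputing them from the model; the variable names are hypothetical.

```python
import math

# Quantities reported in Example 1.
q1, q2 = -0.0761, 2.2
sigma = [0.6, 1.05]
P = [[0.7, 0.3], [0.8, 0.2]]
T_a, tau_bar, kappa_bar = 1.05, 0.98, 6.8236

sigma_max = max(1.0, *sigma)   # sigma_bar = max{1, sigma^(i)}
sigma_min = min(sigma)         # sigma_underbar
mu_t = sum(s ** 2 * max(row[l] for row in P) for l, s in enumerate(sigma))

# Condition on kappa_bar: -q1 + (sigma_bar^2 / sigma_underbar^2) * q2 - kappa_bar < 0
kappa_margin = -q1 + (sigma_max / sigma_min) ** 2 * q2 - kappa_bar
# Condition evaluated in the example: kappa_bar * (tau_bar - T_a) - ln(mu_tilde) > 0
sync_margin = kappa_bar * (tau_bar - T_a) - math.log(mu_t)

print(round(mu_t, 4))          # 0.6187
print(round(kappa_margin, 4))  # -0.01
print(round(sync_margin, 4))   # 0.0024
```

The positive margin is small, which indicates that the chosen \(T_a\) and \({\bar{\tau }}\) lie close to the boundary of the sufficient condition.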

As mentioned at the end of Remark 5, the impulsive delay \({\bar{\tau }}\) may promote synchronization in some way. To illustrate this, the impulsive delay is changed to \({\bar{\tau }}=0\), while all other parameters remain the same as above. Figure 5 shows the state trajectories under such impulsive effects without impulsive delay, and it can be seen that synchronization is not achieved before \(t = 12\). To some extent, this reflects the promoting effect of the impulsive delay \({\bar{\tau }}\).

Fig. 5 State trajectories under impulsive effects with \({\bar{\tau }}=0\) in Example 1

Remark 7

In Example 1, \(\sigma ^{(1)} = 0.6 < 1\) is a stabilizing impulsive intensity, and \(\sigma ^{(2)} = 1.05 > 1\) is destabilizing [17, 27]. Hence, the impulsive sequence in Example 1 is neither synchronizing nor desynchronizing according to the classification in [27]. By calculating \({\widetilde{\mu }}\), this paper provides a method to judge whether a stochastic impulsive sequence promotes synchronization or not.

5 Conclusion

This paper investigated the positive effects that stochastic delayed impulses might have on the synchronization of DMNNs. Based on an extended Halanay inequality, a sufficient condition was derived to guarantee mean square synchronization. It was revealed that the impulsive effect only needs to be stabilizing in an “average” sense, rather than always be stabilizing. The theoretical results were verified by a numerical example.

It is worth mentioning that our main results are sometimes conservative. For instance, the hypothesis \(t_k - t_{k-1} \ge \tau _k\), \(\forall k \in {\mathbb {Z}}_+\) is hard to satisfy in many cases, especially when the interval between two consecutive impulses is short. An interesting direction for future work is to reduce the conservatism by allowing \(t_k - t_{k-1} < \tau _k\). Another interesting topic is the case with stochastic impulsive intervals.

References

1. Liu Y, Zheng Y, Liu J, Cao J, Rutkowski L (2020) Constrained quaternion-variable convex optimization: a quaternion-valued recurrent neural network approach. IEEE Trans Neural Netw Learn Syst 31(3):1022–1035

2. Wu A, Wen S, Zeng Z (2012) Synchronization control of a class of memristor-based recurrent neural networks. Inform Sci 183:106–116

3. Rakkiyappan R, Chandrasekar A, Cao J (2015) Passivity and passification of memristor-based recurrent neural networks with additive time-varying delays. IEEE Trans Neural Netw Learn Syst 26(9):2043–2057

4. Li L, Mu G (2019) Synchronization of coupled complex-valued impulsive neural networks with time delays. Neural Process Lett 50(3):2515–2527

5. Chua L (1971) Memristor-missing circuit element. IEEE Trans Circ Theory 18(5):507–519

6. Marco MD, Forti M, Pancioni L (2018) New conditions for global asymptotic stability of memristor neural networks. IEEE Trans Neural Netw Learn Syst 29(5):1822–1834

7. Wu A, Zeng Z (2012) Exponential stabilization of memristive neural networks with time delays. IEEE Trans Neural Netw Learn Syst 23(12):1919–1929

8. Zhang W, Li C, Huang T, Xiao M (2015) Synchronization of neural networks with stochastic perturbation via aperiodically intermittent control. Neural Netw 71:105–111

9. Wang Y, Lu J, Liang J, Cao J, Perc M (2019) Pinning synchronization of nonlinear coupled Lur'e networks under hybrid impulses. IEEE Trans Circ Syst II: Express Briefs 66(3):432–436

10. Li L, Shi X, Liang J (2019) Synchronization of impulsive coupled complex-valued neural networks with delay: the matrix measure method. Neural Netw 117:285–294

11. He W, Xu T, Tang Y, Du W, Tian Y-C, Qian F (2020) Secure communication based on quantized synchronization of chaotic neural networks under an event-triggered strategy. IEEE Trans Neural Netw Learn Syst 31(9):3334–3345

12. Yang C, Huang L, Cai Z (2019) Fixed-time synchronization of coupled memristor-based neural networks with time-varying delays. Neural Netw 116:101–109

13. Yang Z, Luo B, Liu D, Li Y (2020) Adaptive synchronization of delayed memristive neural networks with unknown parameters. IEEE Trans Syst Man Cybernetics-syst 50(2):539–549

14. Li N, Zheng W (2018) Synchronization criteria for inertial memristor-based neural networks with linear coupling. Neural Netw 106:260–270

15. Xu W, Hu G, Ho D, Feng Z (2020) Distributed secure cooperative control under denial-of-service attacks from multiple adversaries. IEEE Trans Cybern 50(8):3458–3467

16. Xu W, Ho D, Zhong J, Chen B (2019) Event/self-triggered control for leader-following consensus over unreliable network with DoS attacks. IEEE Trans Neural Netw Learn Syst 30(10):3137–3149

17. Jiang B, Lu J, Liu Y (2020) Exponential stability of delayed systems with average-delay impulses. SIAM J Control Optim 58(6):3763–3784

18. Liu Y, Xu P, Lu J, Liang J (2016) Global stability of Clifford-valued recurrent neural networks with time delays. Nonlinear Dynam 84(2):767–777

19. Yang X, Song Q, Cao J, Lu J (2019) Synchronization of coupled Markovian reaction-diffusion neural networks with proportional delays via quantized control. IEEE Trans Neural Netw Learn Syst 3(3):951–958

20. Rakkiyappan R, Gayathri D, Velmurugan G, Cao J (2019) Exponential synchronization of inertial memristor-based neural networks with time delay using average impulsive interval approach. Neural Processing Letters

21. Lakshmikantham V, Bainov D, Simeonov P (1989) Theory of impulsive differential equations. World Scientific, Singapore

22. Duan S, Wang H, Wang L, Huang T, Li C (2017) Impulsive effects and stability analysis on memristive neural networks with variable delays. IEEE Trans Neural Netw Learn Syst 28(2):476–481

23. Filippov AF (1988) Differential equations with discontinuous right-hand side. Mathematics and its Applications (Soviet Series), Kluwer Academic, Dordrecht

24. Yang Z, Xu D (2007) Stability analysis and design of Impulsive control systems with time delay. IEEE Trans Autom Control 52(8):1448–1454

25. Zhang W, Qi J, He X (2018) Input-to-state stability of impulsive inertial memristive neural networks with time-varying delayed. J Franklin Inst 355(17):8971–8988

26. Yang Z, Xu D (2005) Stability analysis of delay neural networks with impulsive effects. IEEE Trans Circ Syst II: Express Briefs 52(8):517–521

27. Lu J, Ho D, Cao J (2010) A unified synchronization criterion for impulsive dynamical networks. Automatica 46(7):1215–1221

28. Wang N, Li X, Lu J, Alsaadi F (2018) Unified synchronization criteria in an array of coupled neural networks with hybrid impulses. Neural Netw 101:25–32

29. Liu X, Zhang K (2016) Synchronization of linear dynamical networks on time scales: pinning control via delayed impulses. Automatica 72:147–152

30. Li X, Yang X, Huang T (2019) Persistence of delayed cooperative models: Impulsive control method. Appl Math Comput 342:130–146

31. Li X, Shen J, Rakkiyappan R (2018) Exponential synchronization of discontinuous neural networks with time-varying mixed delays via state feedback and impulsive control. Appl Math Comput 329:14–22

32. Rakkiyappan R, Velmurugan G, Li X (2015) Complete stability analysis of complex-valued neural networks with time delays and impulses. Neural Process Lett 41:435–468

33. Sun Y, Li L, Liu X (2020) Exponential synchronization of neural networks with time-varying delays and stochastic impulses. Neural Netw 132:342–352

34. Tang Y, Wu X, Shi P, Qian F (2020) Input-to-state stability for nonlinear systems with stochastic impulses. Automatica 113:108766

35. Wang Z, Liu Y, Liu X, Shi Y (2010) Robust state estimation for discrete-time stochastic neural networks with probabilistic measurement delays. Neurocomputing 74(1):256–264

36. Lu W, Chen T (2006) New approach to synchronization analysis of linearly coupled ordinary differential systems. Physica D: Nonlinear Phenomena 213(2):214–230

37. Shi P, Li F (2015) A survey on Markovian jump systems: modeling and design. Int J Control Autom Syst 13:1–16

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China under Grant 61503115.


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.


Cite this article

Li, L., Sun, Y., Wang, M. et al. Synchronization of Coupled Memristor Neural Networks with Time Delay: Positive Effects of Stochastic Delayed Impulses. Neural Process Lett 53, 4349–4364 (2021). https://doi.org/10.1007/s11063-021-10600-z
