Abstract

This paper investigates anti-synchronization control of memristive neural networks with multiple proportional delays. The proportional delay, a kind of unbounded time-varying delay, is studied here for memristive neural networks for the first time, and differential inclusion theory is used to handle the discontinuous right-hand side of the network equations. Several new criteria ensuring anti-synchronization of memristive neural networks with multiple proportional delays are presented. The proposed criteria are easy to verify and less conservative than those in earlier publications on anti-synchronization control of memristive neural networks. Finally, two numerical examples are given to show the effectiveness of the results.


1 Introduction

Recently, memristor-based neural networks have been designed by replacing the resistors in conventional neural networks with memristors, because memristors are well suited to characterizing the nonvolatile feature of a memory cell: owing to hysteresis effects, they behave much as the neurons in the human brain do [1–7]. A memristive neural network can remember its past dynamical history, store a continuous set of states, and be “plastic” according to the presynaptic and postsynaptic neuronal activity. Because of these features, the study of memristive neural networks benefits many applications, such as associative memories [1] and new classes of artificial neural systems [2–7].

In addition, anti-synchronization control of neural networks [8, 9] plays an important role in many potential applications, e.g., non-volatile memories and neuromorphic devices that simulate learning, adaptive and spontaneous behavior. Moreover, the anti-synchronization analysis of memristive neural networks can provide a designer with an exciting variety of properties, richness of flexibility, and opportunities [10]. Therefore, the problem of anti-synchronization control of memristive neural networks is an important area of study.

Moreover, many researchers have concentrated on the dynamics of memristive networks with constant time delays [2, 3], time-varying delays [6], and distributed or bounded time-varying delays [11]. In [12], the authors studied mixed delays, which comprise time-varying discrete delays and unbounded distributed delays. A proportional delay, by contrast, is a time-varying delay that grows in proportion to time; it is unbounded and differs from all of the above delay types. Proportional delays are required in practice, for example in Web quality of service (QoS) routing decisions. The proportional delay system is an important mathematical model that often arises in fields such as physics, biological systems and control theory, and it has attracted the interest of many scholars [13–18]. To the best of the authors’ knowledge, few researchers have considered the anti-synchronization control of memristive neural networks with multiple proportional delays.

Motivated by the above discussion, our aim in this paper is to close this gap by dealing with the anti-synchronization problem for memristive neural networks with proportional delays. The main contributions of this paper are as follows. Firstly, we study proportional delays in memristive neural networks for the first time. Secondly, the anti-synchronization control criteria obtained for memristive neural networks complement and extend earlier publications. Lastly, by using the concept of Filippov solutions for differential equations with discontinuous right-hand sides and the theory of differential inclusions, some new criteria are derived to ensure anti-synchronization of memristive neural networks with multiple proportional delays; the proposed criteria are easy to verify and improve on earlier published results.

2 Preliminaries

In this paper, solutions of all the systems considered are understood in Filippov’s sense. \(co\{\hat{\xi },\check{\xi }\}\) denotes the closure of the convex hull generated by \(\hat{\xi }\) and \(\check{\xi }\). \([\cdot , \cdot ]\) represents an interval. For a continuous function \(k(t):\mathbb {R} \rightarrow \mathbb {R}\), \(D^{+}k(t)\) is called the upper right Dini derivative and is defined as \(D^{+}k(t)=\limsup _{h\rightarrow 0^{+}}\frac{1}{h}(k(t+h)-k(t))\).

The Hopfield neural network model can be implemented in a circuit in which the self-feedback connection weights and the connection weights are realized by resistors. In [10, 19], the authors use memristors instead of resistors to build the memristive Hopfield neural network model, where the time-varying delays are bounded. In the following, we describe a general class of memristive Hopfield neural networks with multiple proportional delays, where the delays are unbounded:

where

and

\(M_{ij}\) and \(W_{ij}\) denote the memductances of the memristors \(R_{ij}\) and \(\hat{R}_{ij}\), respectively. \(R_{ij}\) represents the memristor between the neuron activation function \(f_{j}(x_{j}(t))\) and \(x_{i}(t)\), and \(\hat{R}_{ij}\) represents the memristor between the neuron activation function \(f_{j}(x_{j}(q_{ij}t))\) and \(x_{i}(t)\). \(a_{ij}(x_{i}(t))\) and \(b_{ij}(x_{i}(t))\) represent the memristive synaptic connection weights, which denote the strength of connectivity between neurons \(j\) and \(i\) at times \(t\) and \(q_{ij}(t)\), respectively. Here \(q_{ij}\) is a proportional delay factor and \(q_{ij}(t)=q_{ij}t=t-(1-q_{ij})t\), in which \((1-q_{ij})t\) corresponds to the time delay required in processing and transmitting a signal from the \(j\hbox {th}\) neuron to the \(i\hbox {th}\) neuron. The capacitor \(\mathcal {C}_{i}\) is constant, while the memductances \(M_{ij}\) and \(W_{ij}\) respond to changes in the pinched hysteresis loops, so \(a_{ij}(x_{i}(t))\) and \(b_{ij}(x_{i}(t))\) change as the pinched hysteresis loops change. According to the feature of the memristor and its current-voltage characteristic, we have

in which the switching jump \(T>0\) and \(\hat{a}_{ij}\), \(\check{a}_{ij},\, \hat{b}_{ij}\) and \(\check{b}_{ij}\) are constants, and \(\bar{a}_{ij}=\max \{\hat{a}_{ij}, \check{a}_{ij}\},\, \underline{a}_{ij}=\min \{\hat{a}_{ij}, \check{a}_{ij}\},\, \bar{b}_{ij}=\max \{\hat{b}_{ij}, \check{b}_{ij}\},\, \underline{b}_{ij}=\min \{\hat{b}_{ij}, \check{b}_{ij}\}\).
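The state-dependent switching of the weights can be sketched in a few lines of Python. This is only an illustration under an assumed switching rule (the weight equals \(\hat{a}_{ij}\) when \(|x_{i}(t)|\le T\) and \(\check{a}_{ij}\) otherwise, a form commonly used for memristive networks); the function names `memristive_weight` and `weight_hull` are hypothetical.

```python
def memristive_weight(x_i, w_hat, w_check, T=1.0):
    """Assumed switching rule: w_hat if |x_i| <= T, otherwise w_check."""
    return w_hat if abs(x_i) <= T else w_check


def weight_hull(x_i, w_hat, w_check, T=1.0):
    """Interval of admissible weights at state x_i.

    Away from the switching threshold the interval collapses to a single
    value; exactly at |x_i| == T it is the closed interval [min, max]
    spanned by the two weight values (the convex hull used in the text).
    """
    if abs(x_i) < T:
        return (w_hat, w_hat)
    if abs(x_i) > T:
        return (w_check, w_check)
    return (min(w_hat, w_check), max(w_hat, w_check))


# Example with hypothetical values w_hat = 2.0, w_check = -1.0:
inside = memristive_weight(0.5, 2.0, -1.0)
outside = memristive_weight(1.5, 2.0, -1.0)
at_threshold = weight_hull(1.0, 2.0, -1.0)
```

The interval returned at the threshold corresponds to \([\underline{a}_{ij},\bar{a}_{ij}]\) in the notation above.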

We give some definitions and assumptions which will be used in the following:

Definition 1

Suppose \(E\subseteq \mathbb {R}^{n}\), then \(x\rightarrow F(x)\) is called a set-valued map from \(E\rightarrow \mathbb {R}^{n}\), if for each point \(x\in E\), there exists a nonempty set \(F(x)\subseteq \mathbb {R}^{n}\). A set-valued map \(F\) with nonempty values is said to be upper semicontinuous at \(x_{0}\in E\), if for any open set \(N\) containing \(F(x_{0})\), there exists a neighborhood \(M\) of \(x_{0}\) such that \(F(M)\subseteq N\). The map \(F(x)\) is said to have a closed (convex, compact) image if for each \(x\in E\), \(F(x)\) is closed (convex, compact).

Definition 2

(See [20]) For the system \(\dot{x}(t)=g(x)\), \(x\in \mathbb {R}^{n}\), with discontinuous right-hand sides, a set-valued map is defined as

\[F(x)=\bigcap _{\delta >0}\,\bigcap _{\mu (N)=0}co[g(B(x,\delta )\setminus N)],\]

where \(co[E]\) is the closure of the convex hull of set \(E\), \(B(x,\delta )=\{y:\Vert y-x\Vert \le \delta \}\) and \(\mu (N)\) is the Lebesgue measure of the set \(N\). A solution in Filippov’s sense of the Cauchy problem for this system with initial condition \(x(0)=x_{0}\) is an absolutely continuous function \(x(t)\), which satisfies \(x(0)=x_{0}\) and the differential inclusion

\[\dot{x}(t)\in F(x(t))\quad \text{for almost all } t.\]
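As a standard textbook illustration of Definition 2 (not an example from this paper), consider the scalar system \(\dot{x}=-\mathrm{sgn}(x)\), whose right-hand side is discontinuous at \(x=0\). Filling in the jump with the closed convex hull of the neighbouring values gives the set-valued map and the inclusion below.

```latex
F(x)=
\begin{cases}
\{-1\}, & x>0,\\
[-1,1], & x=0,\\
\{1\}, & x<0,
\end{cases}
\qquad
\dot{x}(t)\in F(x(t)) \ \text{for almost all } t .
```

The memristive weights are treated in the same spirit: at a switching point, a weight is replaced by the interval between its two possible values.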

Assumption 1

The function \(f_{i}\) is odd and bounded, and satisfies a Lipschitz condition with Lipschitz constant \(L_{i}\), i.e.,

\[|f_{i}(x)-f_{i}(y)|\le L_{i}|x-y|\]

for all \(x,y\in \mathbb {R}\).

Assumption 2

For \( i,j=1,2,\ldots , n\),

The system (1) is a differential equation with discontinuous right-hand sides. Based on the theory of differential inclusions, if \(x_{i}(t)\) is a solution of (1) in the sense of Filippov, then system (1) can be rewritten as the following differential inclusion

or equivalently, there exist \(a_{ij}(t)\in co\{a_{ij}(x_{i}(t))\}\) and \(b_{ij}(t)\in co\{b_{ij}(x_{i}(t))\}\), such that

Lemma 1

If Assumption 1 holds, then there is at least one local solution \(x(t)\) of system (1), and the local solution \(x(t)\) can be extended to the interval \([0,+\infty )\) in the sense of Filippov.

In this paper, we consider system (2) or (3) as the drive system and the corresponding response system is:

or equivalently, there exist \(a_{ij}(t)\in co\{a_{ij}(y_{i}(t))\}\) and \(b_{ij}(t)\in co\{b_{ij}(y_{i}(t))\}\), such that

Let \(e(t)=(e_{1}(t),e_{2}(t),\ldots , e_{n}(t))^{T}\) be the anti-synchronization error, where \(e_{i}(t)=x_{i}(t)+y_{i}(t)\). Using Assumption 2 and the theories of set-valued maps and differential inclusions, we get the anti-synchronization error system as follows

or equivalently, there exist \(a_{ij}(t)\in co\{a_{ij}(e_{i}(t))\}\) and \(b_{ij}(t)\in co\{b_{ij}(e_{i}(t))\}\), such that

where \(F_{j}(e_{j}(t))=f_{j}(x_{j}(t))+f_{j}(y_{j}(t)),\, F_{j}(q_{ij}(t))=f_{j}(x_{j}(q_{ij}t))+f_{j}(y_{j}(q_{ij}t))\).
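The reason the error is built from a sum rather than a difference can be made explicit. The short derivation below is a sketch that uses only the oddness and the Lipschitz condition stated in Assumption 1, with \(L_{j}\) the Lipschitz constant of \(f_{j}\).

```latex
|F_{j}(e_{j}(t))|
 = |f_{j}(x_{j}(t)) + f_{j}(y_{j}(t))|
 = |f_{j}(x_{j}(t)) - f_{j}(-y_{j}(t))|
 \le L_{j}\,|x_{j}(t) + y_{j}(t)|
 = L_{j}\,|e_{j}(t)| .
```

In particular, \(F_{j}\) vanishes whenever \(e_{j}(t)=0\), that is, whenever \(y_{j}(t)=-x_{j}(t)\).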

Remark 1

The model of memristive neural networks with multi-proportional delays in (6) or (7) is different from the neural networks with multi-proportional delays in [13–18], so that those stability results cannot be directly applied to it.

Remark 2

Equation (7) is a discontinuous system with proportional delays. Since the proportional delays can be transformed into ordinary time-varying delays, the discontinuous system (7) with proportional delays has a local solution. The proof is similar to the proof of the local existence theorem for Filippov solutions in [20] and to the proof of Theorem 6 in Ref. [21], so it is omitted here.

Remark 3

According to Assumption 1, the activation functions \(f_{j}\) are odd functions. Then \(F_{i}(e_{i}(t))\) possesses the following properties:

and

We transform system (7) by

then we get the following system (see [16–18])

where \(\tau _{ij}=-\log q_{ij}\ge 0,\, \tau =\max _{1\le i,j\le n}\{\tau _{ij}\}\).
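The reduction from proportional delays to constant delays can be sketched as follows. The explicit change of variable \(z_{i}(t)=e_{i}(e^{t})\) is an assumption here: it is the exponential transformation customarily used for proportional delays, and it is consistent with the definition \(\tau _{ij}=-\log q_{ij}\) given above.

```latex
z_{i}(t) = e_{i}(e^{t})
\quad\Longrightarrow\quad
e_{j}(q_{ij}e^{t}) = e_{j}\big(e^{\,t+\log q_{ij}}\big) = z_{j}(t-\tau_{ij}),
\qquad \tau_{ij} = -\log q_{ij} \ge 0 \ \ (0<q_{ij}\le 1),
\qquad
\dot{z}_{i}(t) = e^{t}\,\dot{e}_{i}(e^{t}) .
```

Under this substitution, the unbounded delay \((1-q_{ij})t\) in the original time variable becomes the constant delay \(\tau _{ij}\), at the price of a factor \(e^{t}\) multiplying the right-hand side.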

3 Main Results

In this section, two anti-synchronization criteria are given under designed controllers for memristive neural networks with proportional delays.

Theorem 1

If there exist positive diagonal matrices \(P=diag \{P_{1}, P_{2}, \ldots , P_{n}\},\, K=diag\{k_{1}, k_{2}, \ldots , k_{n}\},\, N_{i}=diag\{n_{i1},n_{i2},\ldots ,n_{in}\}\) and a constant \(r>0\), such that the following inequality holds:

\[-2PL^{-1}-2PKL^{-1}+P\bar{A}+\bar{A}^{T}P+\sum _{i=1}^{n}\big (r^{-1}PW_{i}N_{i}^{-1}W_{i}^{T}P+rN_{i}Q_{i}^{-1}\big )<0,\qquad (12)\]

where \(W_{i}\) is an \(n\times n\) square matrix whose \(i\)th row is \((\overline{b}_{i1}, \overline{b}_{i2},\ldots , \overline{b}_{in})\) and whose other rows are zero, \(Q_{i}^{-1}=diag \big (q_{i1}^{-1}, q_{i2}^{-1}, \ldots , q_{in}^{-1}\big )\), \(i=1,2, \ldots ,n\), \(L=diag(L_{1},L_{2},\ldots , L_{n})\) and \(\bar{A}=(\bar{a}_{ij})_{n\times n}\), then the drive system and the response system become anti-synchronized under the controller \(u_{i}(t)\),

Proof

The error system (7) under the controller (13) can be described by

Construct the following Lyapunov functional:

where \(P_{i}>0, n_{ij}>0\), and \(r>0\).

According to (8), we get

In (15), by (16), if \(e(t)=(e_{1}(t), e_{2}(t), \ldots , e_{n}(t))=0\), i.e. \(e_{i}(t)=0,\, i=1,2,\ldots ,n\), then \(F(e_{i}(t))=0,\, i=1,2,\ldots ,n\), and thus \(V(e(t))=0\). We now show that \(V(e(t))>0\) for \(e(t)\ne 0\).

In fact, since \(e(t)\ne 0\), there exists at least one index \(i\) such that \(e_{i}(t)\ne 0\). By the integral mean value theorem, \(\int _{0}^{e_{i}(t)}F_{i}(s)ds=F_{i}(\theta _{i})e_{i}(t)\), where \(\theta _{i}\) is a number between \(0\) and \(e_{i}(t)\). From (8), when \(e_{i}(t)>0\), we get \(\theta _{i}>0\), \(F_{i}(\theta _{i})\ge 0\) and \(F_{i}(\theta _{i})e_{i}(t)\ge 0\); when \(e_{i}(t)< 0\), we have \(\theta _{i}<0\), \(F_{i}(\theta _{i})\le 0\) and \(F_{i}(\theta _{i})e_{i}(t)\ge 0\). Thus, we obtain \(\int _{0}^{e_{i}(t)}F_{i}(s)ds\ge 0\), and \(\sum _{i=1}^{n}2P_{i}\int _{0}^{e_{i}(t)}F_{i}(s)ds\ge 0\) for \(e(t)\ne 0\). Next, we prove that \(\sum _{i=1}^{n}2P_{i}\int _{0}^{e_{i}(t)}F_{i}(s)\,ds=0\) cannot hold when \(e(t)\ne 0\). Assume that \(\sum _{i=1}^{n}2P_{i}\,\int _{0}^{e_{i}(t)}F_{i}(s)ds=0\) with \(e(t)\ne 0\). Then there must be numbers \(\theta _{i},\, i=1,2,\ldots ,n\), such that \(\sum _{i=1}^{n}2P_{i}\int _{0}^{e_{i}(t)}F_{i}(s)\,ds=\sum _{i=1}^{n}2P_{i}\,F_{i}(\theta _{i})e_{i}(t)=0\), where \(\theta _{i}\) is a number between \(0\) and \(e_{i}(t)\). Since every term of this sum is nonnegative, we obtain \(F_{i}(\theta _{i})=0\) or \(e_{i}(t)=0\) for \(i=1,2,\ldots ,n\). If \(e_{i}(t)=0\) for all \(i=1,2, \ldots , n\), then \(e(t)=0\), which contradicts \(e(t)\ne 0\). If \(F_{i}(\theta _{i})=0,\, i=1,2,\ldots ,n\), we have \(F_{i}(\theta _{i})=f_{i}(x_{i}+\theta _{i})+f_{i}(y_{i})=0\), i.e. \(f_{i}(x_{i}+\theta _{i})=-f_{i}(y_{i}),\, i=1,2,\ldots ,n\). According to Assumption 1, \(f_{i}(\theta _{i}+x_{i})\) is then a constant function for \(\theta _{i}\in [0, e_{i}(t)]\) or \(\theta _{i}\in [e_{i}(t),0]\), which contradicts the nonlinearity of the activation function \(f_{i}(x_{i}(t))\). Thus \(\sum _{i=1}^{n}2P_{i}\int _{0}^{e_{i}(t)}F_{i}(s)ds >0\) for \(e(t)\ne 0\).

That is to say, the first term of \(V(e(t))\) is positive definite. Clearly, the second term of \(V(e(t))\ge 0\). That is \(V(e(t))>0\), \(e(t)\ne 0\). Thus \(V(e(t))\) is positive definite.

Calculating the upper right Dini derivative of \(V(e(t))\) along the trajectories of system (14), we obtain

where

\(Q_{i}^{-1}=diag\big (q_{i1}^{-1}, q_{i2}^{-1}, \ldots , q_{in}^{-1}\big )\), and \(F(e(t))=\big (F(e_{1}(t)),\, F(e_{2}(t)), \ldots ,\, F(e_{n}(t))\big )^{T},\, P=diag(P_{1},P_{2},\ldots , P_{n}),\, A(t)\,=(a_{ij}(t))_{n\times n}\).

From the following condition

Substituting (18) into (17) yields

From Assumption 1, we obtain

that is

Thus we have

and

Let \(F(e(t))\ne 0\), which implies that \(e(t)\ne 0\). From (19)–(23), we get

Thus, if (12) holds, then \(D^{+}V(e(t))\le 0\).

Consider the case where \(F(e(t))=0\) and \(e(t)\ne 0\), then we have

if there exists at least one index \(i\) such that \(F(e(\overline{q}_{i}t))\ne 0\). In that case, \(D^{+}V(e(t))<0\).

Assume that \(F(e(\overline{q}_{i}t))=0\) for all \(i\). Since \(F(e(\overline{q}_{i}t))=(F_{1}(e(\overline{q}_{i1}t)),F_{2}(e(\overline{q}_{i2}t)),\ldots , F_{n}(e(\overline{q}_{in}t)))^{T}\), we get \(F_{j}(e_{j}(q_{ij}t))=0,\, i,j=1,2,\ldots ,n\), i.e. \(F_{j}(e_{j}(q_{ij}t))=f_{j}(x_{j}(q_{ij}t))+f_{j}(y_{j}(q_{ij}t))=0\), so \(f_{j}(x_{j}(q_{ij}t))=-f_{j}(y_{j}(q_{ij}t))\). Because \(f_{j}\) is an odd function, we get \(x_{j}(q_{ij}t)=-y_{j}(q_{ij}t)\), so

Since \(e(t)\ne 0\), we have \(e(\overline{q}_{i}t)\ne 0\), so there exists an index \(j\) such that \(e_{j}(q_{ij}t)\ne 0\), which contradicts (26). Thus, we have proven that \(D^{+}V(e(t))<0\) for every \(e(t)\ne 0\).

Next, we let \(e(t)=0\) which implies that \(F(e(t))=0\), then

If there exists an index \(i\) such that \(F(e(\overline{q}_{i}t))\ne 0\), we get \(D^{+}V(e(t))<0\). Moreover, \(D^{+}V(e(t))=0\) if and only if \(F(e(\overline{q}_{i}t))=0,\, i=1,2,\ldots ,n\). Hence \(D^{+}V(e(t))\) is negative definite.

In the following, we give another anti-synchronization criterion, which is delay-independent.

Theorem 2

If there exist positive diagonal matrices \(P=diag \{P_{1}, P_{2}, \ldots , P_{n}\}\), \(K=diag\{k_{1}, k_{2}, \ldots , k_{n}\}\), \(N_{i}=diag\{n_{i1},n_{i2},\ldots ,n_{in}\}\) and a constant \(r>0\), such that the following inequality holds:

\[P\bar{A}+\bar{A}^{T}P+\sum _{i=1}^{n}\big (rN_{i}+r^{-1}PW_{i}N_{i}^{-1}W_{i}^{T}P\big )-2PL^{-1}-2PKL^{-1}<0,\]

where \(L=diag(L_{1},L_{2},\ldots , L_{n})\), \(\bar{A}=(\bar{a}_{ij})_{n\times n}\), and \(W_{i}\) is an \(n\times n\) square matrix whose \(i\hbox {th}\) row is \((\overline{b}_{i1},\overline{b}_{i2},\ldots ,\overline{ b}_{in})\) and whose other rows are all zero, \(i,j=1,2, \ldots ,n\), and if the error system under the controller \(u_{i}(t)\) is described by

and

then the drive system and the response system get anti-synchronized under the controller (30).

Proof

The system (29) under the controller (30) can be described by

Consider the following Lyapunov functional:

where \(P_{i}>0,\, n_{ij}>0,i,j=1,2,\ldots ,n\).

Since \(V(z(t))\ge \sum _{i=1}^{n}\sum _{j=1}^{n}\int _{t-\tau _{ij}}^{t}rn_{ij}F_{j}^{2}(z_{j}(s))ds\), where \(\sum _{i=1}^{n}\sum _{j=1}^{n}\int _{t-\tau _{ij}}^{t}rn_{ij}F_{j}^{2}(z_{j}(s))ds\) is positive definite, and \(V(0)\equiv 0\), \(V(z(t))\) is positive definite. Moreover, \(V(z(t))\le U(z(t))\), where

is positive definite.

We calculate the upper right Dini derivative of \(V(z(t))\) along the trajectories of system (31):

where \(F(z(t-\overline{\tau }_{i}))=(F_{1}(z_{1}(t-\tau _{i1})),F_{2}(z_{2}(t-\tau _{i2})),\, \ldots ,F_{n}(z_{n}(t-\tau _{in}))),\, i,j=1,2,\ldots ,n\).

The following condition holds:

then we get

If \(P\bar{A}+\bar{A}^{T}P+\sum _{i=1}^{n}\big (rN_{i}+r^{-1}PW_{i}N_{i}^{-1}W_{i}^{T}P\big ) -2PL^{-1}-2PKL^{-1}<0\), then similar to the proof of Theorem 1, we get \(D^{+}V(z(t))<0\) for any \(z(t)\ne 0\). And \(D^{+}V(z(t))=0\) if and only if \(z(t)=F(z(t))=F(z(t-\overline{\tau }_{i}))=0\), \(i=1,2,\ldots ,n\).

4 Illustrative Example

In this section, two numerical examples are given to illustrate the effectiveness of the results obtained above.

Example 1

We consider a two-dimensional memristive neural network as follows:

where

We take the activation function as \(f(e_{i}(t))=\frac{1}{2}\big (\mid e_{i}(t)+1\mid -\mid e_{i}(t)-1\mid \big )\). Obviously, \(f(e_{i}(t))\) is an odd, bounded and Lipschitz continuous function, with Lipschitz constants \(L_{1}=L_{2}=0.1\); we also take \(r=1\).

And

We choose

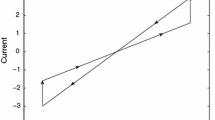

Then we get \(-2PL^{-1}-2PKL^{-1}+P\bar{A}+\bar{A}^{T}P+\sum _{i=1}^{n}\big (r^{-1}PW_{i}N_{i}^{-1}W_{i}^{T}P +rN_{i}Q_{i}^{-1}\big )<0\), which satisfies the condition of Theorem 1. Two initial conditions are chosen randomly for \(e_{1}(t)\) and \(e_{2}(t)\). The simulation results are depicted in Figs. 1 and 2, which show the evolution of the errors \(e_{1}(t),e_{2}(t)\) for the controlled system (36) in Example 1. The simulation results thus confirm the effectiveness of Theorem 1.

Fig. 1 (Color online) The error curves \(e_{1}(t)\) of system (36) with multi-proportional delays and different initial values

Fig. 2 (Color online) The error curves \(e_{2}(t)\) of system (36) with multi-proportional delays and different initial values
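A matrix condition of the kind checked in Example 1 can be tested numerically by assembling the left-hand side and inspecting its largest eigenvalue. The sketch below follows the form of the inequality quoted in Example 1, reading the last factor as \(W_{i}^{T}\). All numerical values are hypothetical placeholders, not the parameters of Example 1, and the function names `theorem1_lhs` and `is_negative_definite` are illustrative only.

```python
import numpy as np


def theorem1_lhs(P, K, L, A_bar, B_bar, N, Q, r=1.0):
    """Assemble -2PL^-1 - 2PKL^-1 + P*A + A^T*P
    + sum_i (r^-1 * P W_i N_i^-1 W_i^T P + r * N_i Q_i^-1).

    P, K, L      : diagonals of the matrices P, K, L (length n).
    A_bar, B_bar : (n, n) arrays of the bounds a-bar_ij and b-bar_ij.
    N, Q         : (n, n) arrays; row i holds the diagonals of N_i and Q_i.
    """
    P, K, L = (np.asarray(v, dtype=float) for v in (P, K, L))
    A_bar, B_bar, N, Q = (np.asarray(m, dtype=float) for m in (A_bar, B_bar, N, Q))
    n = P.size
    Pm, Km, L_inv = np.diag(P), np.diag(K), np.diag(1.0 / L)
    M = -2.0 * Pm @ L_inv - 2.0 * Pm @ Km @ L_inv + Pm @ A_bar + A_bar.T @ Pm
    for i in range(n):
        W_i = np.zeros((n, n))
        W_i[i, :] = B_bar[i, :]  # only the i-th row of W_i is nonzero
        N_i_inv = np.diag(1.0 / N[i])
        M = M + (Pm @ W_i @ N_i_inv @ W_i.T @ Pm) / r + r * np.diag(N[i] / Q[i])
    return M


def is_negative_definite(M):
    """True if the symmetric part of M has only negative eigenvalues."""
    S = 0.5 * (M + M.T)
    return bool(np.max(np.linalg.eigvalsh(S)) < 0.0)


# Hypothetical data (NOT the values of Example 1).
A_bar = [[0.5, 0.2], [0.1, 0.4]]
B_bar = [[0.3, 0.2], [0.2, 0.3]]
ones, half = np.ones((2, 2)), 0.5 * np.ones((2, 2))
M_ctrl = theorem1_lhs([1, 1], [3, 3], [1, 1], A_bar, B_bar, ones, half)
M_free = theorem1_lhs([1, 1], [0, 0], [1, 1], A_bar, B_bar, ones, half)
ok_with_gain = is_negative_definite(M_ctrl)
ok_without_gain = is_negative_definite(M_free)
```

With these placeholder values the condition holds for the gain \(K=diag\{3,3\}\) and fails for \(K=0\), which illustrates how a sufficiently large control gain makes the inequality feasible.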

Example 2

In order to illustrate Theorem 2, we consider the following two-dimensional memristive neural network

where \(\tau _{ij}=0.5,\, r=1\), and the other parameters are the same as those in Example 1. We verified that \(P\bar{A}+\bar{A}^{T}P+rN+r^{-1}P^{T}\bar{B}N^{-1}\bar{B}P-2PDL^{-1}-2PKL^{-1}<0\), which satisfies the condition of Theorem 2. Figs. 3 and 4 show that the curves converge, which demonstrates the effectiveness of Theorem 2: the drive system and the response system become anti-synchronized.

Fig. 3 (Color online) The error curves \(z_{1}(t)\) of system (37) with constant delay \(\tau _{ij}=0.5\) and different initial values

Fig. 4 (Color online) The error curves \(z_{2}(t)\) of system (37) with constant delay \(\tau _{ij}=0.5\) and different initial values
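Simulating a system with proportional delays requires evaluating the state at the earlier time \(qt\), which always lies in the already computed history. The sketch below shows one simple way to do this with forward Euler and linear interpolation. It integrates a scalar test equation \(\dot{x}(t)=-ax(t)+bx(qt)\), which is not the system of Examples 1 or 2; the function name and all parameter values are hypothetical.

```python
import numpy as np


def simulate_proportional_delay(a=2.0, b=0.5, q=0.5, x0=1.0, t_end=20.0, dt=0.01):
    """Forward-Euler sketch for x'(t) = -a*x(t) + b*x(q*t), x(0) = x0."""
    if not 0.0 < q <= 1.0:
        raise ValueError("q must lie in (0, 1]")
    n = int(round(t_end / dt))
    t = dt * np.arange(n + 1)
    x = np.empty(n + 1)
    x[0] = x0
    for k in range(n):
        # q*t[k] <= t[k], so the delayed state is interpolated from stored history.
        x_delayed = np.interp(q * t[k], t[: k + 1], x[: k + 1])
        x[k + 1] = x[k] + dt * (-a * x[k] + b * x_delayed)
    return t, x


t, x = simulate_proportional_delay()
delay = (1.0 - 0.5) * t  # the delay (1 - q) * t grows without bound as t grows
```

Because the delay \((1-q)t\) grows with \(t\), the whole trajectory has to be kept in memory, unlike the constant-delay case where a fixed-length buffer suffices.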

5 Conclusion

In this paper, we adopted differential inclusion theory to handle memristive neural networks with multiple proportional delays. In particular, new sufficient conditions were derived for the anti-synchronization control of memristive neural networks with multiple proportional delays; these conditions differ from the existing ones, and they complement and extend earlier publications. Finally, two numerical examples were given to illustrate the effectiveness of the proposed results.

References

1. Pershin Y, Di Ventra M (2010) Experimental demonstration of associative memory with memristive neural networks. Neural Netw 23:881–886

2. Hu X, Wang J (2010) Global uniform asymptotic stability of memristor-based recurrent neural networks with time delays. International Joint Conference on Neural Networks (IJCNN 10), Barcelona, pp 1–8

3. Wu A, Zeng Z, Zhu X, Zhang J (2011) Exponential synchronization of memristor-based recurrent neural networks with time delays. Neurocomputing 74:3043–3050

4. Wu A, Wen S, Zeng Z (2012) Synchronization control of a class of memristor-based recurrent neural networks. Inf Sci 183:106–116

5. Wu A, Zhang J, Zeng Z (2011) Dynamic behaviors of a class of memristor-based Hopfield networks. Phys Lett A 375:1661–1665

6. Wen S, Zeng Z (2012) Dynamics analysis of a class of memristor-based recurrent networks with time-varying delays in the presence of strong external stimuli. Neural Process Lett 35:47–59

7. Wang W, Li L, Peng H, Xiao J, Yang Y (2014) Synchronization control of memristor-based recurrent neural networks with perturbations. Neural Netw 53:8–14

8. Ren F, Cao J (2009) Anti-synchronization of stochastic perturbed delayed chaotic neural networks. Neural Comput Appl 18:515–521

9. Song Q, Cao J (2007) Synchronization and anti-synchronization for chaotic systems. Chaos Solitons Fractals 33(3):929–939

10. Wu A, Zeng Z (2013) Anti-synchronization control of a class of memristive recurrent neural networks. Commun Nonlinear Sci Numer Simul 18:373–385

11. Chandrasekar A, Rakkiyappan R, Cao J, Lakshmanan S (2014) Synchronization of memristor-based recurrent neural networks with two delay components based on second-order reciprocally convex approach. Neural Netw 57:79–93

12. Yang X, Cao J, Yu W (2014) Exponential synchronization of memristive Cohen-Grossberg neural networks with mixed delays. Cogn Neurodyn 8:239–249

13. Liu Y (1996) Asymptotic behavior of functional differential equations with proportional time delays. Eur J Appl Math 7(1):11–30

14. van Brunt B, Marshall J, Wake G (2004) Holomorphic solutions to pantograph type equations with neutral fixed points. J Math Anal Appl 295(2):557–569

15. Zhou L (2011) On the global dissipativity of a class of cellular neural networks with multipantograph delays. Adv Artif Neural Syst 941426

16. Zhou L (2013) Dissipativity of a class of cellular neural networks with proportional delays. Nonlinear Dyn 73:1895–1903

17. Zhou L (2013) Delay-dependent exponential stability of cellular neural networks with multi-proportional delays. Neural Process Lett 38:347–359

18. Zheng C, Li N, Cao J (2015) Matrix measure based stability criteria for high-order neural networks with proportional delay. Neurocomputing 149:1149–1154

19. Wu A, Zeng Z (2014) New global exponential stability results for a memristive neural system with time-varying delays. Neurocomputing 144:553–559

20. Filippov A (1988) Differential equations with discontinuous right-hand sides. Kluwer, Dordrecht

21. Wu H, Zhang L (2013) Almost periodic solution for memristive neural networks with time-varying delays. J Appl Math 2013(716172):12

Acknowledgments

This paper is supported by the National Natural Science Foundation of China (Grant Nos. 61170269, 61472045), the Beijing Higher Education Young Elite Teacher Project (Grant No. YETP0449), the Asia Foresight Program under NSFC Grant (Grant No. 61411146001) and the Beijing Natural Science Foundation (Grant No. 4142016).


Cite this article

Wang, W., Li, L., Peng, H. et al. Anti-synchronization Control of Memristive Neural Networks with Multiple Proportional Delays. Neural Process Lett 43, 269–283 (2016). https://doi.org/10.1007/s11063-015-9417-6


DOI: https://doi.org/10.1007/s11063-015-9417-6