Abstract

This paper proposes and develops new linesearch methods with inexact gradient information for finding stationary points of nonconvex continuously differentiable functions on finite-dimensional spaces. Some abstract convergence results for a broad class of linesearch methods are established. A general scheme for inexact reduced gradient (IRG) methods is proposed, where the errors in the gradient approximation automatically adapt with the magnitudes of the exact gradients. The sequences of iterations are shown to obtain stationary accumulation points when different stepsize selections are employed. Convergence results with constructive convergence rates for the developed IRG methods are established under the Kurdyka–Łojasiewicz property. The obtained results for the IRG methods are confirmed by encouraging numerical experiments, which demonstrate advantages of automatically controlled errors in IRG methods over other frequently used error selections.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Consider the unconstrained optimization problem formulated as follows:

with a continuously differentiable (\({\mathcal {C}}^1\)-smooth) objective function \(f:\mathrm{I\!R}^n\rightarrow \mathrm{I\!R}\). One of the most natural and classical approaches to solve (1.1) is by using linesearch methods; see, e.g., [8, 10, 24, 38, 40, 45]. Given a starting point \(x^1\in \mathrm{I\!R}^n\), such methods construct the iterative procedure

where \(t_k\ge 0\) is a stepsize at the kth iteration, and where the direction \(d^k\) satisfies the condition

The classical choice for the direction is \(d^k=-\nabla f(x^k)\) when the resulting algorithm is known as the gradient descent method; see the aforementioned books and the references therein. If f is twice continuously differentiable (\({\mathcal {C}}^2\)-smooth) and the Hessian matrix \(\nabla ^2 f(x^k)\) is positive-definite, then \(d^k\) is chosen by solving the linear equation

and it is known as a Newton direction [10, 21, 24]. Additionally, more general choices of descent directions widely used are the gradient related directions [10, Page 41], directions satisfying an angle condition [1, Page 541], etc. Together with the descent directions, stepsizes are usually chosen to ensure the decreasing property of the entire sequence \(\left\{ f(x^k)\right\} \) or sometimes only its tail. Well-known stepsize selections are constant stepsize, diminishing stepsize (not summable), stepsizes following Armijo rule, and Wolfe conditions; see, e.g., [1, 8, 10, 24, 38, 40, 45].

The stationarity of accumulation points generated by linesearch methods with gradient related directions and stepsizes following the Armijo rule is established in [10, Proposition 1.2.1]. When the Lipschitz continuity of the gradient is additionally assumed, the same type of convergence is achieved if either the stepsize is constant and directions are gradient related, or the stepsize is diminishing and directions satisfy more involved conditions [10, Proposition 1.2.3]. The global convergence of some linesearch methods to an isolated stationary point relies on the Ostrowski condition; see [42] and [21, Theorem 8.3.9]. If there is no guarantee on the isolation of stationary points, algebraic geometry tools introduced by Łojasiewicz [36] and Kurdyka [33] are used for linesearch methods with directions satisfying the angle conditions and the stepsize following the Wolfe conditions; see [1, Theorem 4.1]. While rates of convergence of general linesearch methods are not considered, some specific methods achieve certain convergence rates for particular classes of functions. For instance, the gradient descent method achieves a local linear rate of convergence if the objective function is twice differentiable with Lipschitz continuous Hessian as in [38, Theorem 1.2.4], and the (generalized) damped Newton method attains a superlinear convergence rate of under the positive-definiteness of the (generalized) Hessian and some additional assumptions; [27, Theorem 4.5]. Furthermore, a linear rate of convergence for the gradient descent method is achieved under either the Polyak–Łojasiewicz condition as in [43] and [26], or under the weak convexity of the objective function as in [48].

Due to its simplicity, the gradient descent method is broadly used to solve various optimization problems; see, e.g., [13, 17, 18, 39, 49]. However, errors in gradient calculations may appear in many situations, which can be found in practical problems arising, e.g., in the design of space trajectories [2] and computer-aided design of microwave devices [23]. Moreover, many nonsmooth optimization problems can be transformed into its smoothed versions by using Moreau envelopes [47] and forward-backward envelopes [50]. Nevertheless, gradients of smoothed functions cannot be usually computed precisely, and therefore, various gradient methods with inexact gradient information have been suggested. We mention the following major developments in this vein:

-

Devolder et al. [20] introduce the notion of inexact oracle and analyze behavior of several first-order methods of smooth convex optimization employed such an oracle. Nesterov [37] develops new methods of this type for nonsmooth convex optimization in the framework of inexact oracle.

-

Gilmore and Kelley [23] propose an implicit filtering algorithm to deal with certain box constrained optimization problems, where the objective function is a sum of a \({\mathcal {C}}^1\)-smooth function with Lipschitz continuous gradient and a noise function.

-

Bertsekas shows in [10, pp. 44–45] that if the objective function is \({\mathcal {C}}^1\)-smooth with a Lipschitz continuous gradient and if the error of inexact gradient is either small relative to the norm of the exact gradient, or proportional to the stepsize, then convergence behavior of gradient methods is similar to the case where there are no errors.

Recently, [22] proposed a frequency-domain analysis of inexact gradient descent methods; [51] analyzed accelerated gradient methods with absolute and relative noise in the gradient; [35] presented a zero-order mini-batch stochastic gradient descent methods. All the convergence results for inexact gradient methods mentioned above assume that the objective function is either \({\mathcal {C}}^1\)-smooth with a Lipschitz continuous gradient, or convex. To the best of our knowledge, general methods of solving nonconvex \({\mathcal {C}}^1\)-smooth optimization problems with inexact information on non-Lipschitzian gradients are not available in the literature. One of the reasons for this is that verifying the descent property of the sequence of function values without the Lipschitz continuity of \(\nabla f\) via the Armijo linesearch requires exact information on gradients. To deal with inexactness, we need a descent direction that allows us to replace the Armijo linesearch procedure by another one not demanding exact gradients. In addition, a practical inexact gradient method that uses constant stepsize for a general nonconvex \({\mathcal {C}}^1\)-smooth function with the Lipschitz gradient is also not established. Although an inexact gradient method with constant stepsize is proposed in [10, pp. 44–45] by using an error smaller than the norm of the exact gradient, the problem of how to control this error while the exact gradient is unknown is still questionable.

Having in mind the above discussion, we introduce new inexact reduced gradient (IRG) methods to find stationary points for a general class of nonconvex \({\mathcal {C}}^1\)-smooth functions. Although our proposed methods address smooth problems, some motivations for them partly come from a certain nonsmooth algorithm and generalized differential tools of variational analysis. Specifically, to find a Clarke stationary point of a nonsmooth locally Lipschitzian function, the gradient sampling (GS) method, introduced by Burke et al. [14] and modified by Kiwiel [30], approximates at each iteration the \(\varepsilon \)-generalized gradient by the convex hull of nearby gradients. In the GS method, the negative projection of the origin onto this convex hull is chosen as the descent direction and the stepsizes are chosen from the backtracking linesearch as in [30, Section 4.1]. Although the GS method works well for nonsmooth problems, using them for smooth functions seems to be challenging due to, in particular, the necessity to solve subproblems of finding projections onto convex hulls. However, replacing the \(\varepsilon \)-generalized gradient by the Fréchet-type \(\varepsilon \)-subdifferential makes our methods much simpler and suitable for smooth problems. Indeed, the latter construction for a \({\mathcal {C}}^1\)-smooth function at the point in question is just the closed ball centered at its gradient with radius \(\varepsilon \). Thus, the projection of the origin onto this ball has an explicit and simple form. The descent direction chosen by this projection also allows us to replace the exact gradient by its approximation and to use a linesearch procedure that does not require exact gradients. Developing this idea, we design our inexact reduced gradient methods with non-normalized directions together with some stepsize selections such as backtracking stepsize, constant stepsize, and diminishing stepsize. To the best of our knowledge, the IRG methods that we propose and develop in this paper are completely new even in the exact case. It should also be emphasized that the proposed IRG methods are not special versions of the GS one since the latter needs exact gradients at multiple points in each iteration, while the IRG methods need only one inexact gradient. Moreover, the iterative sequence of the GS method is chosen randomly, while IRG iterations are designed deterministically. Our main results include the following:

-

Designing a general framework for IRG methods and revealing their basic properties. The inexact criterion used in IRG methods is universal and appears in various contexts of nonsmooth optimization as well as in its smooth derivative-free counterpart.

-

Finding stationary accumulation points of iterations in the IRG methods with backtracking stepsizes as well as with either constant stepsizes, or diminishing ones under an additional descent condition on the objective functions.

-

Obtaining the global convergence of iterations in the IRG methods with constructive convergence rates depending on the exponent of the imposed Kurdyka–Łojasiewicz (KL) property of the objective functions.

These results are achieved by using our newly developed scheme for general linesearch methods described in the following way. To begin with, some conditions are proposed to ensure the stationary of accumulation points in general linesearch methods. If the KL property is additionally assumed, then the global convergence of the iterative sequence to a stationary point is guaranteed. Moreover, the rates of convergence are established if the stepsize is bounded away from zero.

From a practical viewpoint, our IRG methods automatically adjust the errors required for finding approximate gradients, which will be shown to have numerical advantages over decreasing errors, e.g., \(\varepsilon _k=k^{-p}\) as \(p\ge 1\), that are frequently used in the existing methods [10, 20, 23]. To elaborate more on this issue, observe that since the magnitude of the exact gradient is small near the stationary points and is larger elsewhere, decreasing errors that do not take the information of the exact or inexact gradients into consideration may encounter the following phenomena:

-

Over approximation, which happens when the magnitude of the exact gradient is large but the error is too small. In this case, the procedures of finding an approximate gradient may execute longer than needed to obtain a good approximation of the exact gradient.

-

Under approximation, which happens when the magnitude of the exact gradient is small but the error is too large, which may lead us to an approximate gradient that is not good enough. As a consequence of using such an approximate gradient, the next iterative element can be worse instead of being better than the current one.

In contrast to methods using decreasing errors, our IRG methods, by performing a low-cost checking step in each iteration to determine whether the error for the approximation procedure should decrease or stay the same in the next iteration, use errors that automatically adapt with the magnitudes of the exact gradients to avoid the aforementioned phenomena and exhibit a better performance. Note that the bounded errors are not compared here for inexact gradient methods since they are even worse than the decreasing errors in the sense that employing them may cause the divergence in sequences of iterates, gradients, and function values as illustrated in [44, Section 4].

The rest of the paper is organized as follows. Section 2 discusses basic notions related to the methods. A unified convergence framework for general linesearch methods is developed in Sect. 3. In Sect. 4, we introduce a general form of IRG methods and investigate their principal properties. Our main results about the convergence behavior of the IRG methods with different stepsize selections are given in Sect. 5 by adapting the convergence framework of Section 3. The numerical experiments conducted in Sect. 6 support the theoretical results obtained in Sect. 5 and show that the IRG methods with the new type of automatically controlled errors have a better performance in comparison with the inexact proximal point method in the Least Absolute Deviations Curve-Fitting problem taken from [11]. In Sect. 6, we also compare numerically the performance of our IRG methods with those of the reduced gradient method and the gradient decent method employing the exact gradient calculations for two well-known benchmark functions in global optimization. The last Sect. 7 discusses some directions of our future research.

2 Linesearch Methods and Related Properties

First we recall some notions and notation frequently used in what follows. All our considerations are given in the space \(\mathrm{I\!R}^n\) with the Euclidean norm \(\Vert \cdot \Vert \) and scalar/inner product \(\left\langle \cdot ,\cdot \right\rangle \). We use \(\mathrm{I\!N}:=\{1,2,\ldots \}\), \(\mathrm{I\!R}_+\), and \(\overline{\mathrm{I\!R}}:=\mathrm{I\!R}\cup \left\{ \infty \right\} \) to denote the collections of natural numbers, positive numbers, and the extended real line, respectively. The symbol \(x^k{\mathop {\rightarrow }\limits ^{J}}\overline{x}\) means that \(x^k\rightarrow \overline{x}\) as \(k\rightarrow \infty \) with \(k\in J\subset \mathrm{I\!N}\). For a \({\mathcal {C}}^1\)-smooth function \(f:\mathrm{I\!R}^n\rightarrow \mathrm{I\!R}\), \({\bar{x}}\) is a stationary point of f if \(\nabla f({\bar{x}})=0\). The function f is said to satisfy the L-descent condition with some \(L>0\) if

We see that L-descent condition (2.1) means that the graphs of the quadratic functions \(f_{L,x}(y):=f(x)+\left\langle \nabla f(x),y-x\right\rangle +\frac{L}{2}\left\| y-x\right\| ^2\) lie above that of f for all \(x\in \mathrm{I\!R}^n.\) This condition is equivalent to the convexity of \(\dfrac{L}{2}\left\| x\right\| ^2-f(x)\) [52, Lemma 4], while being a direct consequence of the L-Lipschitz continuity of \(\nabla f\), i.e., the Lipschitz continuity of \(\nabla f\) with constant L; see, e.g., [10, Proposition A.24] and [24, Lemma A.11]. The converse implication holds when f is convex [6, 52] but fails otherwise. A major class of real-valued functions satisfying the L-descent condition but not having the Lipschitz continuous gradient is given by

where A is an \(n\times n\) matrix, \(b\in \mathrm{I\!R}^n\), \(c\in \mathrm{I\!R}\) and \(h:\mathrm{I\!R}^n\rightarrow \mathrm{I\!R}\) is a smooth convex function whose gradient is not Lipschitz continuous, e.g., \(h(x):=\left\| Cx-d\right\| ^4\), where C is an \(m\times n\) matrix and \(d\in \mathrm{I\!R}^m.\) Indeed, we can find some \(L>0\) such that the matrix \(LI-A\) is positive-semidefinite, where I is the \(n\times n\) identity matrix. It follows from the second-order characterization of convex functions that

Combining this with the convexity of h, we get the convexity of \(\dfrac{L}{2}\left\| x\right\| ^2-f(x),\) which means that f satisfies the L-descent property (2.1).

Even when \(\nabla f\) is Lipschitz continuous with constant \(L>0\), f can satisfy the \({\tilde{L}}\)-descent condition with \({\tilde{L}}<L\). For example, consider the univariate function f together with its gradient \(\nabla f\) given by

The latter representation implies that \(L=3\) is the smallest constant for the Lipschitz continuity of \(\nabla f\). Meanwhile, we see in Fig. 1 that f satisfies the \({\tilde{L}}\)-descent property with \({\tilde{L}}=3/2.\)

Next we recall by following [10, Section 1.2] some basic stepsize selections for the iterative procedure (1.2). The stepsize sequence \(\left\{ t_k\right\} \) satisfies the Armijo rule if there exist a scalar \(\beta \) and a reduction factor \(\gamma \in (0,1)\) such that for all \(k\in \mathrm{I\!N}\) we have the representation

This stepsize selection ensures the nonincreasing property of the entire sequence \(\left\{ f(x^k)\right\} \). However, Armijo stepsizes may be small and thus require a large number of stepsize reducing steps in order to make just small changes of the iterative sequence.

To significantly simplify the iterative sequence design, it is possible to consider a constant stepsize, i.e., \(t_k:=\alpha \) for all \(k\in \mathrm{I\!N}\). For this rule, the nonincreasing property of \(\left\{ f(x^k)\right\} \) is not ensured in general but holds under the L-descent condition (2.1) whenever \(\alpha \) is chosen to be sufficiently small with respect to 1/L, see, e.g., [10, 40]. However, even when the L-descent condition is satisfied for f, while an approximate value of L is unknown, using constant stepsizes becomes inefficient. In such a case, it is possible to use the diminishing stepsize selection, i.e.,

Drawbacks of the latter selection are the eventual slow convergence due to its small stepsizes and the absence of the descent property for the iterative sequence \(\left\{ f(x^k)\right\} \).

Now, we formulate a general type of directions that plays a crucial role in our subsequent analysis of various linesearch methods.

Definition 2.1

Let \(\left\{ x^k\right\} \) be a sequence in \(\mathrm{I\!R}^n\). The direction sequence \(\left\{ d^k\right\} \) is called gradient associated with \(\left\{ x^k\right\} \) if we have the implication

It can be easily checked that if either

or there exists some constant \(c>0\) such that

then \(\left\{ d^k\right\} \) is gradient associated with \(\left\{ x^k\right\} \). Many methods such as the gradient descent, the generalized damped Newton method [27, 28], and the methods appeared in [10, Proposition 1.2.3] satisfy (2.6), while (2.5) can be considered as a standard condition for inexact gradient directions. It should be also mentioned that the notion of gradient associated directions is different from the notion of gradient-related directions proposed by Bertsekas in [10].

Finally in this section, we discuss two versions of the fundamental KL property playing a crucial role in the results on global convergence and convergence rates established in what follows. The first version, which is mainly used in the paper, is due to Absil et al. [1, Theorem 3.4].

Definition 2.2

Let \(f:\mathrm{I\!R}^n\rightarrow \mathrm{I\!R}\) be a differentiable function. We say that f satisfies the KL property at \(\bar{x}\in \mathrm{I\!R}^n\) if there exist a number \(\eta >0\), a neighborhood U of \(\bar{x}\), and a nondecreasing function \(\psi :(0,\eta )\rightarrow (0,\infty )\) such that the function \(1/\psi \) is integrable over \((0,\eta )\) and we have

for all \(x\in U\) with \(f({\bar{x}})<f(x)<f({\bar{x}})+\eta \).

Remark 2.3

By using rather standard arguments, we observe that for a smooth function f, the KL property from Definition 2.2 is weaker than the KL property of f at \(\overline{x}\) introduced by Attouch et al. in [5]. It has been realized that KL property in the sense of Attouch et al., and hence, the one from Definition 2.2, is satisfied in broad settings. In particular, it holds at every nonstationary point of f; see [5, Lemma 2.1 and Remark 3.2(b)]. Furthermore, it is proved at the original paper by Łojasiewicz [36] that any analytic function \(f:\mathrm{I\!R}^n\rightarrow \mathrm{I\!R}\) satisfies the KL property at every point \(\overline{x}\) with \(\varphi (t)~=~Mt^{1-q}\) for some \(q\in [0,1)\). Typical smooth functions satisfying this property are semialgebraic functions and also those from the more general class of functions definable in o-minimal structures; see [12, 33]. For other examples of functions satisfying the KL property, we refer the reader to [5, 34] and the bibliographies therein.

Next we present the convergence result for linesearch methods under the fulfillment of the KL property, which is taken from [1, Theorem 3.4].

Proposition 2.4

Let \(f:\mathrm{I\!R}^n\rightarrow \mathrm{I\!R}\) be a \({\mathcal {C}}^1\)-smooth function, and let the sequence of iterations \(\left\{ x^k\right\} \subset \mathrm{I\!R}^n\) satisfy the following conditions:

- (H1):

-

(primary descent condition). There exists \(\sigma >0\) such that for sufficiently large \(k\in \mathrm{I\!N}\) we have

$$\begin{aligned} f(x^k)-f(x^{k+1})\ge \sigma \left\| \nabla f(x^k)\right\| \cdot \left\| x^{k+1}-x^k\right\| . \end{aligned}$$ - (H2):

-

(complementary descent condition). For sufficiently large \(k\in \mathrm{I\!N}\), we have

$$\begin{aligned} \big [f(x^{k+1})=f(x^k)\big ]\Longrightarrow [x^{k+1}=x^k]. \end{aligned}$$

If \({\bar{x}}\) is an accumulation point of \(\left\{ x^k\right\} \) and f satisfies the KL property at \({\bar{x}}\), then \(x^k\rightarrow {\bar{x}}\) as \(k\rightarrow \infty \).

Although the convergence of linesearch methods under the KL property is widely exploited, the convergence rates of such methods under conditions (2.8) below have not been established. The following result presents convergence rates of general linesearch methods under these conditions. It should be noted that the proofs in [4, 41] for the convergence rates of proximal-type methods under the KL property cannot be generalized directly to Theorem 2.5. To the best of our knowledge, the results in [26, 43] are the closest to this theorem. However, the known results consider only the convergence of the exact gradient method for smooth functions with Lipschitz gradients under the Polyak–Łojasiewicz (PL) property [43]. Since the exact gradient method is a special case of the linesearch method, while the PL property is a special case of the KL property, we conclude that Theorem 2.5 has a broader range of applications than the results in [26, 43]. The proof of this result can be conducted similarly to the corresponding one from [3, Theorem 1] and thus is omitted.

Theorem 2.5

Let the sequences \(\left\{ x^k\right\} \subset \mathrm{I\!R}^n\), \(\left\{ t_k\right\} \subset \mathrm{I\!R}_+\) and the numbers \(\beta>0,\;c>0\) be such that \(x^{k+1}\ne x^k\) for all \(k\in \mathrm{I\!N}\), and that we have

for sufficiently large \(k\in \mathrm{I\!N}\). Suppose that the sequence \(\left\{ t_k\right\} \) is bounded away from 0, that \({\bar{x}}\) is an accumulation point of \(\left\{ x^k\right\} \), and that f satisfies the KL property at \({\bar{x}}\) with \(\psi (t)=Mt^{q}\) for some \(M>0\) and \(q\in (0,1)\). The following convergence rates are guaranteed:

- (i):

-

If \(q\in (0,1/2]\), then the sequence \(\left\{ x^k\right\} \) converges linearly to \({\bar{x}}\).

- (ii):

-

If \(q\in (1/2,1)\), then there exists a positive constant \(\varrho \) such that

$$\begin{aligned} \left\| x^k-{\bar{x}}\right\| \le \varrho k^{-\frac{1-q}{2q-1}}\;\text { for sufficiently large }\;k\in \mathrm{I\!N}. \end{aligned}$$

3 A Unified Convergence Framework for Some Linesearch Methods

In this section, we establish properties for a general class of linesearch methods of type (1.2), which provide major tools for convergence analysis of IRG methods in Sect. 5. One of the most important results desired for linesearch methods is as follows:

By the continuity of the gradient mapping, the desired property (3.1) automatically holds if for each accumulation point \({\bar{x}}\) of \(\left\{ x^k\right\} \) we can find an infinite set \(J\subset \mathrm{I\!N}\) such that \(x^k\overset{J}{\rightarrow }{\bar{x}}\) and \(\nabla f(x^k)\overset{J}{\rightarrow }0\). If the exact information on the gradient is unknown, while \(\left\{ d^k\right\} \) is gradient associated with \(\left\{ x^k\right\} \), i.e., (2.4) is satisfied, then property (3.1) is satisfied when

We are going to show that (3.2) holds whenever 0 is an accumulation point of \(\left\{ d^k\right\} \) and

The following new result gives us a unified convergence analysis for many linesearch methods.

Lemma 3.1

Let \(\left\{ x^k\right\} \) and \(\left\{ d^k\right\} \) be sequences satisfying (3.3). If \({\bar{x}}\) is an accumulation point of \(\left\{ x^k\right\} \) and if 0 is an accumulation point of \(\left\{ d^k\right\} \), then there exists an infinite set \(J\subset \mathrm{I\!N}\) such that

Proof

If \(x^k\rightarrow {\bar{x}}\) as \(k\rightarrow \infty \), the conclusion obviously holds, so suppose that \(x^k\not \rightarrow {\bar{x}}\). It suffices to show that for any \(\delta >0\) sufficiently small and any \(N\in \mathrm{I\!N}\) there is a number \(k_N\ge N\) such that

Fix such \(\delta >0\) and \(N\in \mathrm{I\!N}\). Since \(\delta \) is sufficiently small and \(x^k\not \rightarrow {\bar{x}}\), suppose that the set

As \({\bar{x}}\) is an accumulation point of \(\left\{ x^k\right\} \) and 0 is an accumulation point of \(\left\{ d^k\right\} \), we get that

It suffices to verify that \(A_2\cap A_4\ne \emptyset .\) Suppose on the contrary that \(A_2\cap A_4=\emptyset \), i.e.,

By (3.3), we have the estimates

which ensure the series convergence

Taking any number \(K\in A_3\), we also have \(K\in A_2.\) Since \(A_1\) is infinite and \(A_1,A_2\) form a partition of the set \(\left\{ N,N+1,\ldots \right\} \) including K, there exists a number \({\widehat{K}}\in A_1\) with \({\widehat{K}}>K\) such that \(K,K+1,\ldots ,{\widehat{K}}-1\in A_2\). Then, we have the estimates

Using the triangle inequality and (3.5) gives us

which brings us to a contradiction that completes the proof of the lemma. \(\square \)

Remark 3.2

Let us now present some observations in Lemma 3.1.

- (i):

-

Lemma 3.1 develops a unified convergence analysis framework for linesearch methods (1.2) under a general condition on the directions \(d^k\) without specifying a class of functions f and stepsizes \(t_k\). Note to this end that Bertsekas develops in [10, Section 1.2] some general schemes for linesearch methods (1.2) with specific classes of functions, directions, and stepsizes, while Absil et al. [1, Theorem 4.1] present convergence properties of linesearch methods for smooth functions under the angle and Wolfe conditions for directions. Devolder et al. [20] develop schemes only for convex functions. To the best of our knowledge, the framework presented in Lemma 3.1 is more general than the other schemes mentioned above.

- (ii):

-

Since Lemma 3.1 does not require any assumption on f, its usage is not limited to linesearch methods for smooth functions. Convergence analysis of different nonsmooth optimization methods can be found in [15, 30,31,32, 41] and the references therein.

- (iii):

-

If 0 is not an accumulation point of \(\{d^k\}\), then (3.3) implies that \(\{x^k\}\) is convergent. Indeed, the negation of the statement that 0 is an accumulation point of \(\{d^k\}\) yields the existence of \(\tau >0\) and \(K\in \mathrm{I\!N}\) such that

$$\begin{aligned} \Vert d^k\Vert \ge \;\tau \text { for all }\;k\ge K. \end{aligned}$$It follows from (3.3) that

$$\begin{aligned} \tau \sum _{k=K}^\infty \left\| x^{k+1}-x^k\right\| \le \sum _{k=K}^\infty \left\| x^{k+1}-x^k\right\| \cdot \left\| d^k\right\| <\infty , \end{aligned}$$which implies that \(\{x^k\}\) is a Cauchy sequence, and thus, it converges.

Next we recall the classical results from [21, 42] that describe important properties of the set of accumulation points generated by a sequence satisfying the Ostrowski condition; see (3.6).

Lemma 3.3

Let \(\left\{ x^k\right\} \) be a sequence satisfying the Ostrowski condition

Then, the following assertions hold:

- (i):

-

If \(\left\{ x^k\right\} \) is bounded, then the set of accumulation points of \(\left\{ x^k\right\} \) is nonempty, compact, and connected in \(\mathrm{I\!R}^n\).

- (ii):

-

If \(\left\{ x^k\right\} \) has an isolated accumulation point, then this sequence converges to it.

Now, we are ready to establish the main result of this section revealing major convergence properties of a general class of linesearch methods.

Theorem 3.4

Let \(\left\{ x^k\right\} \) be a sequence generated by a linesearch method (1.2) such that:

- (a):

-

\(\left\{ d^k\right\} \) is gradient associated with \(\left\{ x^k\right\} \);

- (b):

-

0 is an accumulation point of \(\left\{ d^k\right\} ;\)

- (c):

-

\(\displaystyle {\sum _{k=1}^\infty }t_k\Vert d^k\Vert ^2<\infty .\)

Then, every accumulation point of \(\left\{ x^k\right\} \) is a stationary point of f. Moreover, if \(\left\{ t_k\right\} \) is bounded from above, then the following assertions hold:

- (i):

-

If \(\left\{ x^k\right\} \) is bounded, then the set of accumulation points of \(\left\{ x^k\right\} \) is nonempty, compact, and connected.

- (ii):

-

If \(\left\{ x^k\right\} \) has an isolated accumulation point, then this sequence converges to it.

Proof

Let \({\bar{x}}\) be an accumulation point of \(\left\{ x^k\right\} \). Note that (c) is equivalent to (3.3) under the linesearch relationship \(x^{k+1}=x^k+t_kd^k\) in (1.2). Applying Lemma 3.1 with taking into account (b) and (c), we can find an infinite set \(J\subset \mathrm{I\!N}\) such that \(x^k\overset{J}{\rightarrow }{\bar{x}}\) and \(d^k\overset{J}{\rightarrow }0\). Then, (a) implies that \(\nabla f(x^k)\overset{J}{\rightarrow }0\). Employing the continuity of \(\nabla f\), we have

which tells us that \({\bar{x}}\) is a stationary point of f. Suppose now that \(\left\{ t_k\right\} \) is bounded from above by some \(\tau >0\). Using (c), we immediately get

This leads us to \(\left\| x^{k+1}-x^k\right\| \rightarrow 0\) and verifies assertions (i) and (ii) by applying Lemma 3.3. \(\square \)

Theorem 3.4 also allows us to ensure the stationarity of accumulation points generated by linesearch methods (1.2) applied to functions satisfying the L-descent condition (2.1), where the stepsize is either constant or diminishing, and where the direction is gradient associated while satisfying the following sufficient descent condition

with some constant \(\kappa >0\). Note that condition (3.7) is different from the gradient associated condition from Definition 2.1, since from (3.7) we only have that \(\nabla f(x^k)\overset{J}{\rightarrow }0\) yields \(d^k\overset{J}{\rightarrow }0\) but the reverse implication may not hold. In addition to the gradient descent and generalized Newton methods discussed above, there exist many other linesearch methods using direction (3.7), e.g., the boosted difference of convex functions algorithm as in [3, Proposition 4]), the inexact Levenberg–Marquardt method as in [19, Algorithm 3.1]), and the GS method for nonsmooth functions with non-normalized direction given in [30, Section 4.1].

We have the following effective consequence of Theorem 3.4.

Corollary 3.5

Let \(\left\{ x^k\right\} \) be a sequence generated by a linesearch method (1.2). Suppose that \(\inf f(x^k)>-\infty \), and that we have the conditions:

- (a):

-

f satisfies the L-descent condition (2.1) for some \(L>0\);

- (b):

-

the sequence \(\left\{ d^k\right\} \) is gradient associated with \(\left\{ x^k\right\} \) and satisfies (3.7) for some \(\kappa >0;\)

- (c):

-

the sequence \(\left\{ t_k\right\} \) is not summable, i.e.,

$$\begin{aligned} \displaystyle {\sum _{k=1}^\infty } t_k=\infty , \end{aligned}$$(3.8)and there are numbers \(\delta >0\) and \(N\in \mathrm{I\!N}\) such that

$$\begin{aligned} t_k\le \dfrac{2\kappa -\delta }{L}\;\text { for all }\;k\ge N. \end{aligned}$$(3.9)

Then, every accumulation point of \(\left\{ x^k\right\} \) is stationary for f, and the assertions (i), (ii) in Theorem 3.4 hold. Moreover, if \(\left\{ t_k\right\} \) is bounded away from 0, then \(\nabla f(x^k)\rightarrow 0\) as \(k\rightarrow \infty \).

Proof

It follows from (3.9) in condition (c) that

Since f satisfies L-descent condition (2.1), we deduce from (3.7) and the latter inequality that

Then, summing up the relationships \(\dfrac{\delta }{2}t_k\left\| d^k\right\| ^2\le f(x^k)-f(x^{k+1})\) over \(k=N,N+1,\ldots \) and using the assumption \(\inf f(x^k)>-\infty \) give us

Now, we show that 0 is an accumulation point of \(\left\{ d^k\right\} \). Suppose on the contrary that there exist a positive number u and a natural number \(K\ge N\) such that

Using this together with (3.11) implies that \(\sum _{k=K}^\infty t_k<\infty \), which contradicts (3.8). Therefore, 0 is an accumulation point of \(\left\{ d^k\right\} \). Combining the latter with (b), (3.11), and (3.9) allows us to confirm that all the assumptions of Theorem 3.4 are satisfied. Thus, every accumulation point of \(\{x_k\}\) is a stationary point of f, and both assertions in (i) and (ii) hold.

If finally \(\left\{ t_k\right\} \) is bounded away from 0, it follows from (3.11) that \(d^k\rightarrow 0\) as \(k\rightarrow \infty \). Since the sequence \(\left\{ d^k\right\} \) is gradient associated with \(\left\{ x^k\right\} \) by (b), we get \(\nabla f(x^k)\rightarrow 0\). \(\square \)

By employing the iterative procedure \(x^{k+1}=x^k+t_kd^k,\) the conditions in (2.8) can be rewritten as the following estimates:

4 General Scheme for Inexact Reduced Gradient Methods

In this section, we design a general framework for our novel IRG methods and establish their basic properties prior to constructing particular methods of this type with various stepsize selections. Now, we are ready to formulate our general algorithmic framework (the Master Algorithm) for IRG methods without considering yet particular stepsize selections.

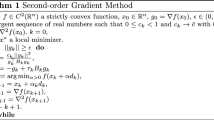

Algorithm 1

(general framework for IRG methods)

Step 0

(initialization) Select an initial point \(x^1\in \mathrm{I\!R}^n\), initial radii \(\varepsilon _1,r_1> 0\), radius reduction factors \(\mu ,\theta \in (0,1)\).

Step 1

(inexact gradient and stopping criterion) Choose \(g^k\) such that

Step 2

(radius update) If \(\left\| g^k\right\| \le r_{k}+\varepsilon _k\), then set \(r_{k+1}:=\mu r_k,\;\varepsilon _{k+1}:=\theta \varepsilon _k\), \(d^k:=0\), and go to Step 3. Otherwise, set \(r_{k+1}:=r_k\), \(\varepsilon _{k+1}:=\varepsilon _k\), and

Step 3

(stepsize) Choose \(t_k>0\) by a specific rule.

Step 4

(iteration update) Set \(x^{k+1}:=x^{k}+t_kd^k\).

Step 5

Increase k by 1 and go back to Step 1.

Let us make some comments on the constructions of Algorithm 1. The first remark concerns the novelty in the choice of errors and directions.

Remark 4.1

We have the following observations on the error criterion used in Algorithm 1:

- (i):

-

The inexact criterion (4.1) is universal and appears in many contexts even when only information on function values is available as in derivative-free optimization [16]. In addition, as shown in [20, Section 2.2], condition (4.1) is also satisfied if f is convex, smooth, and equipped with a first-order oracle, which covers various well-known models in nonsmooth optimization, e.g., Nesterov’s smoothing techniques, Moreau–Yosida regularization, augmented Lagrangians as in [20, Section 3] and [29, Example 1].

- (ii):

-

From (4.1), the radius \(\varepsilon _k\) can be considered as an automatically controlled error for the calculation of \(\nabla f(x^k)\), which does not need to decrease after each iteration. This is different than the choice \(\varepsilon _k=ck^{-p}\) for \(p\ge 1,c>0\) frequently used in the well-known methods [10, 20, 23]. Moreover, Steps 1 and 2 also show that \(\left\| \nabla f(x^k)\right\| \le r_k+2\varepsilon _k\) when \(\varepsilon _k\) is reduced. Therefore, we can conclude intuitively that \(\left\| \nabla f(x^k)\right\| \) is decreasing when \(\varepsilon _k\) is decreasing.

- (iii):

-

In the exact case when \(g^k=\nabla f(x^k)\) for all \(k\in \mathrm{I\!N}\), we label our methods as the reduced gradient (RG) ones, which are different from the standard gradient descent method. Indeed, it follows from Step 2 that \(d^k\) is either 0 or is given by

$$\begin{aligned} d^k=-\left( \dfrac{\Vert \nabla f(x^k)\Vert -\varepsilon _k}{\Vert \nabla f(x^k)\Vert }\right) \nabla f(x^k). \end{aligned}$$(4.3)Therefore, the vector \(d^k\) in (4.3) has the same direction as \(-\nabla f(x^k)\), but its length is \(\left\| \nabla f(x^k)\right\| \) reduced by \(\varepsilon _k\) for each \(k\in \mathrm{I\!N}\).

Further we discuss and illustrate behavior of Algorithm 1 at the major steps of iterations.

Remark 4.2

Notice first that:

- (i):

-

If \(d^k\ne 0\), it follows from (4.2) and the definition of projections that \(d^k=-\textrm{Proj}(0,{\mathbb {B}}(g^k,\varepsilon _k))\).

- (ii):

-

An illustration for Algorithm 1 can be seen in Fig. 2.

Let \(g^1\) be an approximate gradient of \(\nabla f(x^1)\) at the 1st iteration. Then, Fig. 2 shows that the two balls \({\mathbb {B}}(g^1,\varepsilon _1)\) and \({\mathbb {B}}(0,r_1)\) do not intersect. This means by Step 2 that \(r_2=r_1\), \(\varepsilon _2=\varepsilon _1\), and \(d^1=-\textrm{Proj}(0,{\mathbb {B}}(g^1,\varepsilon _1))\). Then, we have a new point \(x^2=x^1+t_1d^1\) after choosing the stepsize \(t_1>0\) as in Step 3 and Step 4.

At the 2nd iteration, it can be seen in Fig. 2 that the two balls \({\mathbb {B}}(g^2,\varepsilon _2)\) and \({\mathbb {B}}(0,r_2)\) intersect each other. Thus by Step 2 of Algorithm 1, the radii \(r_2,\varepsilon _2\) are reduced to \(r_3=\mu r_2\) and \(\varepsilon _3=\theta \varepsilon _2\), while the direction \(d^2\) is zero. The latter means that the iterative point \(x^2\) stays the same, i.e., \(x^3=x^2\) from Step 4.

At the 3rd iteration, although \(\nabla f(x^3)=\nabla f(x^2)\), we still need to recalculate an approximate gradient \(g^3\) with a new error \(\varepsilon _3\). In this iteration, the two balls \({\mathbb {B}}(g^3,\varepsilon _3)\) and \({\mathbb {B}}(0,r_3)\) do not intersect, and hence, the procedure is similar to that at the first iteration.

- (iii):

-

For each \(k\in \mathrm{I\!N}\), we have from Step 2 and Step 3 the equivalences

$$\begin{aligned} x^{k+1}=x^k\Longleftrightarrow d^k=0\Longleftrightarrow r_{k+1}=\mu r_k\Longleftrightarrow \varepsilon _{k+1}=\theta \varepsilon _k\Longleftrightarrow \left\| g^k\right\| \le r_k+\varepsilon _k. \end{aligned}$$(4.4)

The next proposition verifies the decent property of Algorithm 1.

Proposition 4.3

In Algorithm 1, \(\left\{ d^k\right\} \) satisfies the sufficient descent condition with constant 1, i.e.,

Proof

Note that (4.5) automatically holds if \(d^k=0\). Supposing that \(d^k\ne 0\) and using the construction of \(d^k\) in Step 2, we have the expression

It follows from (4.1) that \(\left\| g^k-\nabla f(x^k)\right\| \le \varepsilon _k\), which means that \(\nabla f(x^k)\in {\mathbb {B}}(g^k,\varepsilon _k)\). Invoking the projection description for convex sets yields

which is in turn equivalent to

and thus verifies the claim in (4.5). \(\square \)

Now, we introduce the notion of null iterations and establish some properties of such iterations related to the IRG methods.

Definition 4.4

The kth iteration of Algorithm 1 is called a null iteration if \(x^{k+1}=x^k\). The set of all null iterations is denoted by

The next proposition collects important properties of null iterations.

Proposition 4.5

Let \(\left\{ x^k\right\} ,\left\{ g^k\right\} ,\left\{ d^k\right\} ,\left\{ \varepsilon _k\right\} \), and \(\left\{ r_k\right\} \) be sequences generated by Algorithm 1. The following assertions hold:

- (i):

-

\(k\in {\mathcal {N}}\) if and only if either one of the equivalent conditions in (4.4) holds.

- (ii):

-

\(\varepsilon _k\downarrow 0\) if and only if \(r_k\downarrow 0\), which is equivalent to the set \({\mathcal {N}}\) being infinite.

- (iii):

-

If \({\mathcal {N}}\) is finite, then we have

$$\begin{aligned} \left\| g^k\right\|>r_N+\varepsilon _N\;\text { and }\;\left\| d^k\right\| >r_N \end{aligned}$$for all \(k\ge N\), where \(N:=\max {\mathcal {N}}+1\).

- (iv):

-

If \(\mathrm{I\!N}\setminus {\mathcal {N}}\) is finite, then \(\nabla f(x^K)=0\) and \(\left\{ x^k\right\} _{k\ge K}\) is a constant sequence, where we denote \(K:=\max \{\mathrm{I\!N}\setminus {\mathcal {N}}\}+1\). Otherwise, \({\bar{x}}\) is an accumulation point of \(\left\{ x^k\right\} \) if and only if it is an accumulation point of \(\left\{ x^k\right\} _{k\in \mathrm{I\!N}{\setminus }{\mathcal {N}}}\), and therefore \(x^k\rightarrow {\bar{x}}\) if and only if \(x^k\overset{\mathrm{I\!N}\setminus {\mathcal {N}}}{\longrightarrow }{\bar{x}}\).

Proof

Assertions (i) and (ii) follow directly from Definition 4.4. To verify (iii), observe that for any natural number \(k\ge N\), the kth iteration is not a null one and then deduce from (i) that

Together with Step 2 in Algorithm 1, this ensures that

which readily justifies assertion (i).

The proof of (iv) is a bit more involved. Supposing that the set \(\mathrm{I\!N}\setminus {\mathcal {N}}\) is finite, we have that \(k\in {\mathcal {N}}\) for all \(k\ge K\). This means by (i) that

This tells us that \(x^k=x^{K}\) for all \(k\ge K\), and that \(\varepsilon _k\downarrow 0,\;r_k\downarrow 0\), and \(g^k\rightarrow 0\) as \(k\rightarrow \infty \). Taking the limit as \(k\rightarrow \infty \) in \(\left\| g^k-\nabla f(x^k)\right\| \le \varepsilon _k\) gives us \(\nabla f(x^k)\rightarrow 0\), which yields \(\nabla f(x^{K})=0\).

Supposing otherwise that the set \(\mathrm{I\!N}\setminus {\mathcal {N}}\) is infinite, we obviously get that every accumulation point of \(\left\{ x^k\right\} _{k\in \mathrm{I\!N}\setminus {\mathcal {N}}}\) is an accumulation point of \(\left\{ x^k\right\} \). Conversely, taking any accumulation point \({\bar{x}}\) of \(\left\{ x^k\right\} \), it suffices to show that

To verify this, fixing \(\delta >0\) and \(N\in \mathrm{I\!N}\) and remembering that \({\bar{x}}\) is an accumulation point of \(\left\{ x^k\right\} \), we find \(K\ge N\) such that \(\left\| x^K-{\bar{x}}\right\| <\delta \). If \(K\in \mathrm{I\!N}\setminus {\mathcal {N}}\), choose \(k_N:=K\). Otherwise, using that \(\mathrm{I\!N}\setminus {\mathcal {N}}\) is infinite allows us to find \({\widehat{K}}\in \mathrm{I\!N}\setminus {\mathcal {N}}\) for which \(K,K+1,\ldots ,{\widehat{K}}-1\in {\mathcal {N}}\). This ensures that

and therefore, with \(k_N:={\widehat{K}}\), we get that \(\left\| x^{k_N}-{\bar{x}}\right\| <\delta \). Since \(\delta \) was chosen arbitrarily, this clearly shows that \({\bar{x}}\) is an accumulation point of \(\left\{ x^k\right\} _{k\in \mathrm{I\!N}\setminus {\mathcal {N}}}\) and thus completes the proof. \(\square \)

The last proposition here establishes relationships between convergence properties of the sequences \(\left\{ g^k\right\} \) and \(\left\{ d^k\right\} \) in Algorithm 1.

Proposition 4.6

Let \(\left\{ g^k\right\} ,\left\{ d^k\right\} ,\;\left\{ \varepsilon _k\right\} \), and \(\left\{ r_k\right\} \) be sequences generated by Algorithm 1. Then, for any \(k\in \mathrm{I\!N}\) we have the estimates

Consequently, the following assertions hold:

- (i):

-

\(\varepsilon _k\downarrow 0\) if and only if there is an infinite set \(J\subset \mathrm{I\!N}\) such that \(g^k\overset{J}{\rightarrow }0\).

- (ii):

-

For any infinite set \(J\subset \mathrm{I\!N}\), we have the equivalence

$$\begin{aligned} g^k\overset{J}{\rightarrow }0\Longleftrightarrow d^k\overset{J}{\rightarrow }0. \end{aligned}$$

Proof

Fix any \(k\in \mathrm{I\!N}\). If \(k\in {\mathcal {N}}\), then we get by Proposition 4.5(i) that \(d^k=0\) and \(\left\| g^k\right\| \le \varepsilon _k+r_k\). Otherwise, Step 2 in Algorithm 1 yields \(\left\| d^k\right\| =\left\| g^k\right\| -\varepsilon _k\le \left\| g^k\right\| \). In both cases, (4.6) holds.

To deduce (i) from (4.6), suppose that \(\varepsilon _k\downarrow 0\) as \(k\rightarrow \infty \). By Proposition 4.5(ii) we have that \(r_k\downarrow 0\) and the set \({\mathcal {N}}\) is infinite. Then, for any \(\delta >0\) and \(N\in \mathrm{I\!N}\) there is \(k\ge N\) with \(k\in {\mathcal {N}}\) such that

where the first inequality follows from (4.4). Thus, we can construct an infinite set \(J\subset \mathrm{I\!N}\) such that \(g^k\overset{J}{\longrightarrow }0\). If conversely the sequence \(\left\{ \varepsilon _k\right\} \) does not converge to 0, then the set \({\mathcal {N}}\) is finite by Proposition 4.5(ii). Using Proposition 4.5(iii) confirms that \(\left\{ g^k\right\} \) is bounded away from 0, which tells us that such an index set J does not exist.

To verify now assertion (ii), observe that assuming \(g^k\overset{J}{\rightarrow }0\) implies by the first inequality in (4.6) that \(d^k\overset{J}{\rightarrow }0\). Conversely, suppose that \(d^k\overset{J}{\rightarrow }0\) and deduce from Proposition 4.5(i) that the set \({\mathcal {N}}\) is infinite. Then, it follows from Proposition 4.5(ii) that \(\varepsilon _k\downarrow 0\) and \(r_k\downarrow 0\) as \(k\rightarrow \infty \). Using the second inequality in (4.6), we arrive at \(g^k\overset{J}{\rightarrow }0\) and thus complete the proof of the proposition. \(\square \)

Finally in this section, we deduce from the obtained results the following desired property of the direction sequence \(\left\{ d^k\right\} \) in Algorithm 1.

Corollary 4.7

The sequence \(\left\{ d^k\right\} \) in Algorithm 1 is gradient associated with \(\left\{ x^k\right\} \).

Proof

It follows from Proposition 4.6 that the convergence \(d^k\overset{J}{\rightarrow }0\) yields \(g^k\overset{J}{\rightarrow }0\) and \(\varepsilon _k\downarrow 0.\) Thus, we get \(\nabla f(x^k)\overset{J}{\rightarrow }0\) by taking into account \(\left\| g^k-\nabla f(x^k)\right\| \le \varepsilon _k\) from (4.1). This shows therefore that the sequence \(\left\{ d^k\right\} \) is gradient associated with \(\left\{ x^k\right\} \). \(\square \)

5 Inexact Reduced Gradient Methods with Stepsize Selections

In this section, we develop novel IRG methods with the following selections of stepsize rules: backtracking stepsize, constant stepsize, and diminishing stepsize. Address first an IRG method with the backtracking linesearch. Choose a linesearch scalar \(\beta \in (0,1)\), a reduction factor \(\gamma \in (0,1)\), and an artificial stepsize of null iterations \(\tau \in (0,1)\). Consider the Master Algorithm 1 with the stepsize sequence \(\left\{ t_k\right\} \) in Step 3 calculated as follows. If \(d^k=0\), then put \(t_k:=\tau \). Otherwise, we set

The next proposition shows that the stepsize sequence \(\left\{ t_k\right\} \) is well defined.

Proposition 5.1

If \(d^k\ne 0\), then there exists a positive number \({\bar{t}}\) such that

which ensures the existence of \(t_k\) in (5.1).

Proof

Suppose that \(d^k\ne 0\) and get by Proposition 4.3 that \(\left\langle \nabla f(x^k),d^k\right\rangle \le -\left\| d^k\right\| ^2\). By the differentiability of f at \(x^k\), for each \(t>0\) sufficiently small we have

Since \(o(t)/t\rightarrow 0\) as \(t\downarrow 0\) and since \((\beta -1)\left\| d^k\right\| ^2<0\), there exists \({\bar{t}}>0\) such that

Therefore, the selection of \(t_k\) in (5.1) is well defined. \(\square \)

Now, we are ready to establish a major result about the stationarity of accumulation points of the iterative sequence generated by Algorithm 1 with the backtracking line search.

Theorem 5.2

Let \(\left\{ x^k\right\} \) be the sequence of iterations generated by Algorithm 1 with the sequence of stepsizes \(\left\{ t_k\right\} \) being chosen via the backtracking linesearch as in (5.1). Assume in addition to the inexact gradient condition (4.1) that \(\left\| g^k-\nabla f(x^k)\right\| \le \rho _k\) with \(\rho _k\downarrow 0\) as \(k\rightarrow \infty \). Suppose furthermore that \(\inf f(x^k)>-\infty \). Then, the following assertions hold:

- (i):

-

\(\varepsilon _k\downarrow 0\) and \(r_k\downarrow 0\) as \(k\rightarrow \infty \).

- (ii):

-

Every accumulation point of \(\left\{ x^k\right\} \) is a stationary point of f.

- (iii):

-

If the sequence \(\left\{ x^k\right\} \) is bounded, then the set of accumulation points of \(\left\{ x^k\right\} \) is nonempty, compact, and connected.

- (iv):

-

If \(\left\{ x^k\right\} \) has an isolated accumulation point, then the entire sequence \(\left\{ x^k\right\} \) converges to this point.

Proof

From the choice of \(\left\{ t_k\right\} \) and Step 4 in Algorithm 1, for every \(k\in \mathrm{I\!N}\) we have

Since \(\inf f(x^k)>-\infty \), summing up on both sides of (5.2) over \(k=1,2,\ldots \) and using the relation \(x^{k+1}=x^k+t_kd^k\), we get that

To verify assertion (i), recall by Proposition 4.5 (ii) that the convergence \(\varepsilon _k\downarrow 0\) is equivalent to \(r_k\downarrow 0\) and to the set of null iterations \({\mathcal {N}}\) being infinite. Assume on the contrary that \({\mathcal {N}}\) is finite. By Proposition 4.5(iii) with \(N=\max {\mathcal {N}}+1\), we have

Then, (5.3) gives us \(\sum _{k=1}^\infty t_k<\infty \) and thus \(t_k\downarrow 0\) as \(k\rightarrow \infty \). Choosing a larger number N if necessary, we get that \(t_k<1\) for all \(k\ge N\). For such k, it follows from the exit condition of the algorithm that

By the classical mean value theorem, there exists some \({\tilde{x}}^k\in [x^k,x^k+\gamma ^{-1}t_kd^k]\) such that

The latter equality together with (5.5) tells us that

Using (5.3) and (5.4), we have \(\sum _{k=1}^\infty \left\| x^{k+1}-x^k\right\| <\infty \), and thus, \(\left\{ x^k\right\} \) converges to some \({\bar{x}}\in \mathrm{I\!R}^n\). The continuity of \(\nabla f\) ensures that \(\nabla f(x^k)\rightarrow \nabla f({\bar{x}})\). Then, employing \(\left\| g^k-\nabla f(x^k)\right\| \le \rho _k\rightarrow 0\) yields \(g^k\rightarrow \nabla f({\bar{x}})\) as \(k\rightarrow \infty \). It follows from Step 2 that

Letting \(k\rightarrow \infty \) leads us to the equalities

Using \(t_k\downarrow 0\), we get that \({\tilde{x}}^k\rightarrow {\bar{x}}\), and thus \(\nabla f({\tilde{x}}^k)\rightarrow \nabla f({\bar{x}})\) as \(k\rightarrow \infty \). Combining the latter with (5.6), (5.7), and the projection characterization verifies the estimates

This tells us that \({\bar{g}}=0\), which contradicts the condition \(\left\| {\bar{g}}\right\| \ge r_N\) by (5.4). Therefore, we arrive at \(\varepsilon _k\downarrow 0\) and \(r_k\downarrow 0\) as \(k\rightarrow \infty \), which completes the proof of assertion (i).

To justify assertions (ii)–(iv), recall from Corollary 4.7 that \(\left\{ d^k\right\} \) is gradient associated with \(\left\{ x^k\right\} \). Since \(\varepsilon _k\downarrow 0\), we deduce from Proposition 4.6 that 0 is an accumulation point of \(\left\{ d^k\right\} \). Combining these facts with (5.3) and \(t_k\le 1\) whenever \(k\in \mathrm{I\!N}\) ensures that all the assumptions of Theorem 3.4 are satisfied. Therefore, we verify assertions (ii)–(iv) and finish the proof of the theorem. \(\square \)

Next we consider problem (1.1) with the objective function f satisfying the L-descent condition for some \(L>0\). The following result establishes convergence properties of IRG method, which uses either diminishing or constant stepsizes.

Theorem 5.3

Let \(\left\{ x^k\right\} \) be the sequence generated by Algorithm 1, where

- (a):

-

f satisfies the L-descent condition;

- (b):

-

either \(\left\{ t_k\right\} \) is diminishing, i.e.,

$$\begin{aligned} t_k\downarrow 0\; \text{ as } \;k\rightarrow \infty \;\text { and }\quad \sum _{k=1}^\infty t_k=\infty , \end{aligned}$$(5.9)or there exist \(\delta ,\delta '>0\) such that \(\delta '\le \dfrac{2-\delta }{L}\) and

$$\begin{aligned} t_k\in \left[ \delta ',\dfrac{2-\delta }{L}\right] \;\text { for all }\;k\in \mathrm{I\!N}. \end{aligned}$$(5.10)

Assume that \(\inf f(x^k)>-\infty \). Then, all the conclusions of Theorem 5.2 hold. Moreover, if \(\left\{ t_k\right\} \) is chosen as (5.10), then \(\nabla f(x^k)\rightarrow 0\) as \(k\rightarrow \infty \).

Proof

We know from Remark 4.7 that the direction sequence \(\left\{ d^k\right\} \) is gradient associated with \(\left\{ x^k\right\} \). Furthermore, Proposition 4.3 tells us that \(\left\{ d^k\right\} \) satisfies the sufficient descent condition (3.7) with the constant \(\kappa =1\). Note that if \(\left\{ t_k\right\} \) is chosen as either (5.9) or (5.10), then we always get that

Combining these facts with the imposed L-descent condition on f yields the fulfillment of assumptions (a), (b), (c) in Corollary 3.5. Therefore, conclusions (ii)–(iv) of Theorem 5.2 hold. The proof of Corollary 3.5 also ensures that 0 is an accumulation point of \(\left\{ d^k\right\} \). Thus, it follows from Proposition 4.6 that \(\varepsilon _k\downarrow 0\). Using Proposition 4.5(ii), we have \(r_k\downarrow 0\), which verifies conclusion (i) of Theorem 5.2. If \(\left\{ t_k\right\} \) is chosen as (5.10), its boundedness away from 0 is guaranteed, and so Corollary 3.5 yields \(\nabla f(x^k)\rightarrow 0\) as \(k\rightarrow \infty \) and thus completes the proof of the theorem. \(\square \)

The final part of our convergence analysis of the proposed IRG methods applies the KL property to establishing the global convergence of the entire sequence of iterations to a stationary point with deriving convergence rates. We start with the following simple albeit useful lemma.

Lemma 5.4

Let \(\left\{ x^k\right\} \) be the sequence generated by Algorithm 1 with \(\theta <\mu \). Assume that \(\varepsilon _k\downarrow 0\) and \(r_k\downarrow 0\) as \(k\rightarrow \infty \). Then, there exists some \(N\in \mathrm{I\!N}\) such that

where the set \({\mathcal {N}}\) is taken from Definition 4.4.

Proof

It follows directly from the assumptions of the lemma that there exists a natural number N such that \(\varepsilon _k\le r_k\) for all \(k\ge N\). By Step 2 of the algorithm, for any \(k\ge N\) with \(k\notin {\mathcal {N}}\) we have \(\left\| g^k\right\| >r_k+\varepsilon _k\) with the direction \(d^k\) calculated in (4.2). Thus, for such k we get the estimates

It follows from (4.1) in Step 1 and from (5.12) that

which verifies the conclusion of the lemma. \(\square \)

The following two theorems provide conditions ensuring the global convergence of iterative sequences generated by Algorithm 1 with different stepsize selections to a stationary point of f. The first theorem concerns the IRG methods with the backtracking stepsize.

Theorem 5.5

Let \(\left\{ x^k\right\} \) be the iterative sequence generated by Algorithm 1 with the backtracking linesearch under the condition \(\theta <\mu \). Suppose in addition to the inexact gradient condition (4.1) that \(\left\| g^k-\nabla f(x^k)\right\| \le \rho _k\) with \(\rho _k\downarrow 0\) as \(k\rightarrow \infty \). Assume furthermore that \(\left\{ x^k\right\} \) has an accumulation point \({\bar{x}}\), and f satisfies the KL property at \({\bar{x}}\). Then, \({\bar{x}}\) is a stationary point of f, and \(x^k\rightarrow {\bar{x}}\) as \(k\rightarrow \infty \).

Proof

Since \({\bar{x}}\) is an accumulation point of \(\left\{ x^k\right\} \), we can find some infinite set \(J\subset \mathrm{I\!N}\) such that \(x^k\overset{J}{\rightarrow }{\bar{x}}\). It follows from the choice of \(\left\{ t_k\right\} \) in (5.1) that \(\left\{ f(x^k)\right\} \) is nonincreasing, which implies that

Therefore, the results of Theorem 5.2 tell us that \({\bar{x}}\) is a stationary point of f and that \(\varepsilon _k\downarrow 0\), \(r_k\downarrow 0\) as \(k\rightarrow \infty \). We employ Proposition 2.4 to verify that \(x^k\rightarrow {\bar{x}}\) along the entire sequence of iterations. Indeed, the imposed assumptions and the convergence \(\varepsilon _k\downarrow 0\), \(r_k\downarrow 0\) as \(k\rightarrow \infty \) guarantee that all the requirements of Lemma 5.4 are satisfied. Pick \(N\in \mathrm{I\!N}\) such that (5.11) holds. The choice of \(\left\{ t_k\right\} \) in (5.1) ensures the lower estimate

Combining this with (5.11) and the relation \(x^{k+1}=x^k+t_kd^k\) yields

Observe that when \(k\in {\mathcal {N}}\), both sides of (5.14) reduce to zero, and so (5.14) is satisfied. Therefore, assumption (H1) in Proposition 2.4 holds. Moreover, for \(k\ge N\) the conditions \(f(x^{k+1})=f(x^k)\) and (5.13) imply that \(d^k=0\), and hence, \(x^{k+1}=x^k\). Thus, assumption (H2) in Proposition 2.4 is satisfied as well. Applying the latter proposition, we arrive at \(x^k\rightarrow {\bar{x}}\) as \(k\rightarrow \infty \) and complete the proof. \(\square \)

The second theorem of the above type addresses the IRG methods with diminishing and constant selections of the stepsize sequence \(\left\{ t_k\right\} \).

Theorem 5.6

Let the objective function f satisfy the L-descent condition (2.1) for some \(L>0\), and let \(\left\{ x^k\right\} \) be the sequence generated by Algorithm 1 with \(\theta <\mu \), and either diminishing (5.9) or constant stepsizes (5.10). Assume in addition that \({\bar{x}}\) is an accumulation point of the sequence \(\left\{ x^k\right\} \) and that f satisfies the KL property at \({\bar{x}}\). Then, \({\bar{x}}\) is a stationary point of f, and we have the convergence \(x^k\rightarrow {\bar{x}}\) as \(k\rightarrow \infty \).

Proof

Observe first that the assumptions imposed here yield those in Theorem 5.3 and Corollary 3.5 but \(\inf f(x^k)>-\infty \). Similarly to the proof of Corollary 3.5, we can show that \(\left\{ f(x^k)\right\} _{k\ge K}\) is nonincreasing for some \(K\in \mathrm{I\!N}.\) Since \({\bar{x}}\) is an accumulation point of \(\left\{ x^k\right\} \), similarly to the proof of Theorem 5.5, we deduce that \(\inf f(x^k)=f({\bar{x}})>-\infty \), which verifies the remaining assumption. Therefore, \({\bar{x}}\) is a stationary point of f and \(\varepsilon _k\downarrow 0\), \(r_k\downarrow 0\) as \(k\rightarrow \infty \). The latter convergence together with the imposed assumptions guarantees the fulfillment of all the conditions of Lemma 5.4. Let \(N\in \mathrm{I\!N}\) be such that (5.11) holds. Let \(\delta >0\) be the constant given in (5.10). From the proof of Corollary 3.5, we find some \(N_1\ge N\) such that

The relation \(x^{k+1}=x^k+t_kd^k\) and (5.11), (5.15) tell us that

Similarly to the proof of Theorem 5.5, we get \(x^k\rightarrow {\bar{x}}\) as \(k\rightarrow \infty \) and thus complete the proof. \(\square \)

We will see below that the boundedness of stepsizes away from 0 plays a crucial role in establishing the rate of convergence of the IRG methods. This property automatically holds for constant stepsizes while may fail for diminishing ones. The next proposition shows that the property is satisfied for the backtracking stepsize selection provided that the gradient of the objective function is locally Lipschitzian around accumulation points of iterative sequence. Observe that this property is strictly weaker than the (global) Lipschitz continuous of \(\nabla f\). Indeed, \({\mathcal {C}}^2\)-smooth functions have locally Lipschitzian gradients but do not need to have a globally Lipschitzian one as, e.g., for \(f(x):=x^4\).

Proposition 5.7

Let \(\left\{ x^k\right\} \) be a sequence generated by Algorithm 1 with the backtracking stepsize. Suppose in addition to the inexact gradient condition (4.1) that \(\left\| g^k-\nabla f(x^k)\right\| \le \rho _k\) with \(\rho _k\downarrow 0\) as \(k\rightarrow \infty \). Assume moreover that there exists an infinite set \(J\subset \mathrm{I\!N}\) such that \(\left\{ x^k\right\} _{k\in J}\) converges to some \({\bar{x}}\in \mathrm{I\!R}^n\) and that \(\nabla f\) is locally Lipschitzian around \({\bar{x}}\). Then, the stepsize sequence \(\left\{ t_k\right\} _{k\in J}\) is bounded away from zero.

Proof

Assume on the contrary that \(\left\{ t_k\right\} _{k\in J}\) is not bounded away from zero. Then, we find an infinite set \({\bar{J}}\subset J\) such that \(t_k\overset{{\bar{J}}}{\longrightarrow }0\). Let \(\tau \in (0,1)\) be an artificial stepsize of null iterations. Since \(t_k\overset{{\bar{J}}}{\longrightarrow }0\), there exists a number \(N\in \mathrm{I\!N}\) such that

This means that \(k\notin {\mathcal {N}}\) whenever \(k\ge N,\;k\in {\bar{J}}\). By Proposition 4.5(i), we have \(d^k\ne 0\) for all \(k\ge N,\;k\in {\bar{J}}\). Then, condition (4.2) in Step 2 leads us to

Since \(x^k\overset{{\bar{J}}}{\rightarrow }{\bar{x}}\), the continuity of \(\nabla f\) and the estimate \(\left\| \nabla f(x^k)-g^k\right\| \le \rho _k\rightarrow 0\) yield that \(g^k\overset{{\bar{J}}}{\rightarrow }\nabla f({\bar{x}})\). Using (5.18), we get that the sequence \(\left\{ d^k\right\} _{k\in {\bar{J}}}\) is bounded, and thus,

Since \(\nabla f\) is locally Lipschitzian around \({\bar{x}}\), there exists a positive number \(\delta \) such that \(\nabla f\) is Lipschitz continuous on \({\mathbb {B}}({\bar{x}},\delta )\) with some modulus \(L>0\). By (5.19) and \(x^k\overset{{\bar{J}}}{\rightarrow }{\bar{x}}\), we find \(N_1\ge N\) with \(x^k,x^k+\gamma ^{-1}t_kd^k\in {\mathbb {B}}({\bar{x}},\delta )\) for all \(k\ge N_1,\;k\in {\bar{J}}\). The Lipschitz continuity of \(\nabla f\) on \({\mathbb {B}}({\bar{x}},\delta )\) with modulus L yields by [10, Proposition A.24] the L-descent condition, i.e.,

Fixing \(k\in {\bar{J}},\;k\ge N_1\), we deduce from the above that \(d^k\ne 0\), and \(t_k<1\). The exit condition for the backtracking linesearch implies that

Applying (5.20) for \(x=x^{k}+\gamma ^{-1}t_kd^k\) and \(y=x^k\), we have that

Combining this with (5.21) leads us to

or equivalently to the inequality

Proposition 4.3 and \(d^k\ne 0\) tell us that \(0<\left\| d^k\right\| ^2\le \left\langle \nabla f(x^k),-d^k\right\rangle \). Then, we deduce from (5.22) the fulfillment of the estimate

Dividing both sides above by \(\gamma ^{-1}t_k\left\langle \nabla f(x^k),-d^k\right\rangle >0\), we get \(0<\beta -1+\dfrac{L\gamma ^{-1}t_k}{2}\). Letting \(k\overset{{\bar{J}}}{\longrightarrow }\infty \) yields \(\beta \ge 1\), which contradicts the choice of \(\beta \in (0,1)\). Thus, we verify that the sequence \(\left\{ t_k\right\} _{k\in J}\) is bounded away from zero, which completes the proof of the proposition. \(\square \)

The last two theorems establish sufficient conditions ensuring the convergence rates in Algorithm 1 under different stepsize selections. Having the sequence of iterations \(\left\{ x^k\right\} \) generated by this algorithm, we obtain first from Proposition 4.5(iii) that if \(\mathrm{I\!N}\setminus {\mathcal {N}}\) is finite, then \(\left\{ x^k\right\} \) stops after a finite number of iterations. Thus, we consider the case where the set \(\mathrm{I\!N}\setminus {\mathcal {N}}\) is infinite and can be numerated as \(\left\{ j_1,j_2,\ldots \right\} \). Construct the sequence \(\left\{ z^k\right\} \) by

We have \(j_{k+1}\ge j_{k}+1\) whenever \(k\in \mathrm{I\!N}\). If the equality holds therein, then \(z^{k+1}=x^{j_k+1}\). Otherwise, by taking into account that the indices \(j_{k}+1,\ldots ,j_{k+1}-1\) correspond to null iterations, we get that

Therefore, it follows from \(j_k\notin {\mathcal {N}}\) that

Furthermore, Proposition 4.5(iv) tells us that \({\bar{x}}\) is an accumulation point of \(\left\{ z^k\right\} \) if and only if \({\bar{x}}\) is also an accumulation point of \(\left\{ x^k\right\} \).

The first theorem about the convergence rates concerns Algorithm 1 with the backtracking stepsize.

Theorem 5.8

Consider Algorithm 1 with the backtracking stepsize selections under the condition \(\theta <\mu \). Let \(\left\{ x^k\right\} \) be the iterative sequence generated by this algorithm. Suppose in addition to the inexact gradient condition (4.1) that \(\left\| g^k-\nabla f(x^k)\right\| \le \rho _k\) with \(\rho _k\downarrow 0\) as \(k\rightarrow \infty \). Assume further that \(\left\{ x^k\right\} \) has an accumulation point \({\bar{x}}\), that f satisfies the KL property at \({\bar{x}}\) with \(\psi (t)=Mt^{q}\) for some \(M>0\) and \(q\in (0,1)\), and that \(\nabla f\) is locally Lipschitzian around \({\bar{x}}\). The following convergence rates are guaranteed for the sequence \(\left\{ z^k\right\} \) defined in (5.23):

- (i):

-

If \(q\in (0,1/2]\), then the sequence \(\left\{ z^k\right\} \) converges linearly to \({\bar{x}}\).

- (ii):

-

If \(q\in (1/2,1)\), then there exists a positive constant \(\varrho \) such that

$$\begin{aligned} \left\| z^k-{\bar{x}}\right\| \le \varrho k^{-\frac{1-q}{2q-1}}\;\text { for all large }\;k\in \mathrm{I\!N}. \end{aligned}$$

Proof

The imposed assumptions yield the fulfillment of those in Theorem 5.5, and so lead us to the convergence \(x^k\rightarrow {\bar{x}}\) as \(k\rightarrow \infty \). Then, the local Lipschitz continuity of \(\nabla f\) around \({\bar{x}}\) and Proposition 5.7 ensure that the sequence \(\left\{ t_k\right\} \) is bounded away from zero.

To deduce now the claimed convergence rates in (i)–(iii) from Theorem 2.5, define \(\tau _k:=t_{j_k}\) for all \(k\in \mathrm{I\!N}\). Then, \(\left\{ \tau _k\right\} \) is also bounded away from zero as a subsequence of \(\left\{ t_k\right\} \). Furthermore, using (5.24) and the linesearch conditions, we have

for all \(k\in \mathrm{I\!N}\). Note that all the assumptions of Theorem 5.2 are satisfied, and so Lemma 5.4 holds. Pick any \(N\in \mathrm{I\!N}\) from (5.11) and fix \(k\ge N\). Then, using (5.24) and (5.11) with taking into account that \(j_k\notin {\mathcal {N}}\) for \(j_k\ge k\) leads us to

Apply finally Theorem 2.5 to \(\left\{ z^k\right\} \) and \(\left\{ \tau _k\right\} \) while remembering that \(z^{k+1}\ne z^k\) for all \(k\in \mathrm{I\!N}\) from (5.25). This verifies the convergence rates (i)–(iii) claimed in the theorem. \(\square \)

The next theorem on the convergence rates addresses Algorithm 1 with the constant stepsizes.

Theorem 5.9

Let f satisfy the L-descent condition for some \(L>0\), and let \(\left\{ x^k\right\} \) be the iterative sequence generated by Algorithm 1 with the constant stepsizes (5.10) under the condition \(\theta <\mu \). Suppose that \(\left\{ x^k\right\} \) has an accumulation point \({\bar{x}}\) and that f satisfies the KL property at \({\bar{x}}\) with \(\psi (t)=Mt^{q}\) for some \(M>0\) and \(q\in (0,1)\). Then, the following convergence rates are guaranteed for the iterative sequence \(\left\{ z^k\right\} \) defined in (5.23):

- (i):

-

If \(q\in (0,1/2]\), then the sequence \(\left\{ z^k\right\} \) converges linearly to \({\bar{x}}\).

- (ii):

-

If \(q\in (1/2,1)\), then there exists a positive constant \(\varrho \) such that

$$\begin{aligned} \left\| z^k-{\bar{x}}\right\| \le \varrho k^{-\frac{1-q}{2q-1}}\;\text { for all large }\;k\in \mathrm{I\!N}. \end{aligned}$$

Proof

Note that our assumptions yield the fulfillment of those in Theorem 5.6, and thus, we have that \(x^k\rightarrow {\bar{x}}\) as \(k\rightarrow \infty \). Defining \(\tau _k:=t_{j_k}\) for all \(k\in \mathrm{I\!N}\) ensures that the stepsize sequence \(\left\{ \tau _k\right\} \) is bounded away from zero. Note that all the assumptions in Corollary 3.5 hold, and let \(\delta >0\) be the constant taken from in (5.10). By the L-descent property of f and the constant stepsize selection, we find by arguing similarly to the proof of Corollary 3.5 a number \( N\in \mathrm{I\!N}\) such that

Since \(j_k\ge k\ge N\) for such k, it follows that

Note that all the assumptions of Theorem 5.3 are satisfied. Using this result together with Lemma 5.4 and then arguing as in the proof of Theorem 5.8, we complete the proof of this theorem. \(\square \)

6 Applications and Numerical Experiments

In this section, we present efficient implementations of the developed IRG methods to solving particular classes of optimization problems that appear in practical modeling. We conduct numerical experiments and compare the results of computations by using our algorithms with those obtained by applying some other well-known methods. This section is split into two subsections addressing different classes of problems with the usage of different algorithms.

6.1 Comparison with Classical Inexact Proximal Point Method

This subsection addresses the Least Absolute Deviations (LAD) Curve-Fitting problem which is formulated as follows:

where A is an \(m\times n\) matrix, b is a vector in \(\mathrm{I\!R}^m\), and \(\left\| u\right\| _1:=\sum _{k=1}^m|u_k|\) for any \(u=(u_1,\ldots ,u_m)\in \mathrm{I\!R}^m\). Problem (6.1) exhibits robustness in outliers resistance and appears in many applied areas; see, e.g., [11] for more discussions. Observe that (6.1) is a problem of nonsmooth convex optimization, but we can reduce it to a smooth problem by using a regularization procedure. In this way, we solve (6.1) by using our IRG method with constant stepsize and compare our approach with the usage of the inexact proximal point method (IPPM) proposed by Rockafellar in [46].

To proceed, recall that the Moreau envelope and the proximal mapping of g are defined by

where the minimization mapping \(\varphi _{x}:\mathrm{I\!R}^n\rightarrow \mathrm{I\!R}\) is given by

Since g is convex, it follows from [7, Propositions 12.28 and 12.30] that \(e_g \) is \({\mathcal {C}}^1\)-smooth and that its gradient is Lipschitz continuous with constant 1 being represented by

Moreover, the set of minimizers of g coincides with the set of zeros of the gradient mapping \(\nabla e_g\).

This tells us that problem (6.1) can be equivalently transformed into the problem of finding stationary points of the smooth function \(f:=e_g\). Therefore, it is possible to solve (6.1) by using Algorithm 1 with constant stepsize, where an inexact gradient \(g^k\) of \(\nabla f(x^k)\) in Step 1 satisfying the condition (4.1) can be chosen from the conditions

Meanwhile, the iterative procedure of IPPM in [46, page 878] for solving (6.1) is given by

Since the function \(\varphi _{x^k}\) in (6.3) is strongly convex with constant 1 [38, Definition 2.1.3], the error bound for the distance between the inexact proximal point \(p^k\) and the exact one \(\textrm{Prox}_g(x^k)\) in (6.5) and (6.6) is satisfied if

where \(\omega _k:=\dfrac{\varepsilon _k^2}{2}\) for (6.5) and \(\omega _k:=\dfrac{\delta _k^2}{2}\) for (6.6) by using [38, Theorem 2.1.8]. In this numerical experiment, we run the Fast Iterative Shrinkage-Thresholding Algorithm (FISTA) of Beck and Teboulle [9] for the dual function of \(\varphi _{x^k}\) until the duality gap is below \(\omega _k\), which therefore ensures (6.7).

The initial points are chosen as \(x^1:=0_{\mathrm{I\!R}^n}\) for both algorithms, while the detailed settings of each algorithm are given as follows:

-

IRG: \(\varepsilon _1=10, \theta =\mu =0.5\). Two selections of the initial radius \(r_1\) are 20 and 5, which correspond to versions IRG-20 and IRG-5, respectively. To simplify the iterative sequence of Algorithm 1 when \(\left\| g^k\right\| \le r_k+\varepsilon _k\), we put \(x^{k+1}:=p^k\), which corresponds to the choice of stepsize \(t_k=\frac{\left\| g^k\right\| }{\left\| g^k\right\| -\varepsilon _k}\).

-

IPPM: \(\omega _k=\dfrac{1}{k^p}\) for all \(k\in \mathrm{I\!N}\), where \(p=4\) or \(p=2.1.\) These selections together with the definition of \(\omega _k\) in (6.7) ensure that \(\sum _{k=1}^\infty \delta _k<\infty \) as required for IPPM in (6.6). We also use the labels IPPM-4 and IPPM-2.1 for these versions of IPPM, respectively.

In this numerical experiment, we let IPPM-2.1 run for 200 iterations and record the function value obtained by this method. Then, other methods run until their function values are lower than the recorded one of IPPM-2.1. We stop the methods when the time reaches the limit of 4000 seconds. The data A, b are generated randomly with i.i.d. (identically and independent distributed) standard Gaussian entries. To avoid algorithms from reaching the solution promptly, we consider only the cases where \(m\le n\) in (6.1).

The numerical experiment is conducted on a computer with 10th Gen Intel(R) Core(TM) i5-10400 (6-Core 12 M Cache, 2.9–4.3 GHz) and 16GB RAM memory. The codes are written in MATLAB R2021a. Detailed information for the results is presented in Table 1, where ‘Test #,’ ‘iter,’ ‘fval,’ ‘time’ mean test number, the number of iterations, value of the objective function at the last iteration, and the computational time, respectively. The bold text indicates the running time of the fastest algorithms in each test. The errors \(\omega _k\) in the inexact proximal point calculations (6.7) and the function values obtained by the algorithms over the duration of time are also graphically illustrated in Figs. 3 and 4.

It can be seen from Table 1 that IRG-5 has the best performance in this numerical experiment. IRG-20 is the second fastest algorithm in Tests 1, 3, 5, while it is slightly slower than IPPM-4 in Tests 2, 4. In Test 5 with the largest dimensions \(m=n=1200,\) IRG-5 is around 4 times faster than IPPM-2.1, while IPPM-4 even cannot reach the value obtained by IPPM-2.1 within the time limit. In this test, IRG-20 is also around 2.5 times faster than IPPM-2.1.

The graphs in Figs. 3 and 4 show that the errors (in inexact proximal point calculations) of IRG are automatically adjusted to be suitable for different problems:

-

In Tests 1, 3, 5 with \(m=n\), it can be seen from Fig. 4 that IPPM-2.1 is faster than IPPM-4, which means that the use of larger errors is preferred in this case. Then, Fig. 3 shows that the errors used in IRG stagnate at most of the iterations. As a result, the IRG methods use the errors larger than that of the IPPM methods and thus achieve better performances.

-

In Tests 2, 4 with \(m<n\), IPPM-4 with smaller errors performs better than IPPM-2.1. In this case, the IRG methods decrease in almost every iteration and achieve smaller errors in comparison with IPPM-4.

6.2 Comparison with Exact Gradient Descent Methods

In the numerical experiments presented in this subsection, we show that our IRG method with backtracking stepsize, based on the usage of inexact gradients, performs well compared with the famous methods employing the exact gradient calculation, which are the reduced gradient (RG) method and gradient descent (GD) method in the following setting:

-

1.

The accuracy of the inexact gradient \(g^k\) is low, i.e., \(\left\| g^k-\nabla f(x^k)\right\| \le \delta _k\), where \(\delta _k\) is not too small relative to \(\left\| \nabla f(x^k)\right\| \).

-

2.

The accuracy required for the solution is increasing.

To demonstrate this, we choose the following two well-known smooth benchmark functions in global optimization taken from the survey paper [25].

-

The Dixon and Price function is defined by

$$\begin{aligned} f_{\textrm{dixon}}({\textbf{x}}):=\left( x_{1}-1\right) ^{2}+\sum _{i=2}^{n}i\left( 2 x_{i}^{2}-x_{i-1}\right) ^{2},\quad x\in \mathrm{I\!R}^n. \end{aligned}$$The global minimum of this function is \({\bar{f}}_{\textrm{dixon}}=0\), and the two solutions \(x^*,y^*\in \mathrm{I\!R}^n\) are

$$\begin{aligned} {\left\{ \begin{array}{ll} x^*_1=1,&{}\\ x^*_{k}=\sqrt{\dfrac{x_{k-1}}{2}}&{}\text { for }\;k=2,\ldots ,n \end{array}\right. } \end{aligned}$$and by \(y^*_k=x^*_k\) for all \(k=1,\ldots ,n-1,\) \(y^*_n=-x^*_n\).

-

The Rosenbrock 1 function defined by

$$\begin{aligned} f_{\textrm{rosen}}({\textbf{x}}):=\sum _{i=1}^{n-1}\left[ 100\left( x_{i+1}-x_{i}^{2}\right) ^{2}+\left( x_{i}-1\right) ^{2}\right] ,\quad x\in \mathrm{I\!R}^n. \end{aligned}$$The global minimum of this function is \({\bar{f}}_{\textrm{rosen}}=0\), and the unique solution is \((1,\ldots ,1)\in \mathrm{I\!R}^n\).

Since the information about the convexity and the Lipschitz continuity of gradients of the chosen objective functions is unknown, our experiments are conducted by algorithms, where stepsizes are obtained from the corresponding linesearches. We use the following abbreviations:

-

GD: Gradient descent method with the backtracking linesearch.

-

RGB and IRGB: Reduced gradient method with the backtracking linesearch and Inexact reduced gradient method with the backtracking linesearch; see (5.1).