Abstract

We propose a family of spectral gradient methods, whose stepsize is determined by a convex combination of the long Barzilai–Borwein (BB) stepsize and the short BB stepsize. Each member of the family is shown to share certain quasi-Newton property in the sense of least squares. The family also includes some other gradient methods as its special cases. We prove that the family of methods is R-superlinearly convergent for two-dimensional strictly convex quadratics. Moreover, the family is R-linearly convergent in the any-dimensional case. Numerical results of the family with different settings are presented, which demonstrate that the proposed family is promising.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Consider the unconstrained optimization problem

where \(f(x): \mathbb {R}^n\rightarrow \mathbb {R}\) is a continuously differentiable function. The gradient method solves problem (1) by updating iterates as

where \(g_k=\nabla f(x_k)\) and \(\alpha _k>0\) is the stepsize. Different gradient methods use different formulae for stepsizes.

The simplest gradient method is the steepest descent (SD) method due to Cauchy [6], which computes the stepsize by exact line search,

As is well known, every two consecutive gradients generated by the SD method are perpendicular to each other. Moreover, if f(x) is a strictly convex quadratic function, i.e.,

where \(A\in \mathbb {R}^{n\times n}\) is symmetric positive definite and \(b\in \mathbb {R}^n\), it can be shown that the gradients will asymptotically reduce to a two-dimensional subspace spanned by the two eigenvectors corresponding to the largest and smallest eigenvalues of the matrix A and hence zigzag occurs, see [1, 32] for more details. This property seriously deteriorates the performance of the SD method, especially when the condition number of A is large.

An important approach that changes our perspectives on the effectiveness of gradient methods is proposed by Barzilai and Borwein [2]. They viewed the updating rule (2) as

where \(D_k=\alpha _kI\). Similar to the quasi-Newton method [19], \(D_k^{-1}\) is required to satisfy the secant equation

to approximate the Hessian as possible as it can. Here, \(s_{k-1}=x_k-x_{k-1}\) and \(y_{k-1}=g_k-g_{k-1}\). However, since \(D_k\) is diagonal with identical diagonal elements, it is usually impossible to find an \(\alpha _k\) such that \(D_k^{-1}\) fulfills (5) if the dimension \(n>1\). Thus, Barzilai and Borwein required \(D_k^{-1}\) to meet the secant equation in the sense of least squares,

which yields

Here and below, \(\Vert \cdot \Vert \) means the Euclidean norm. On the other hand, one can also calculate the stepsize by requiring \(D_k\) to satisfy

That is,

which gives

Apparently, when \(s_{k-1}^Ty_{k-1}>0\), there holds \(\alpha _{k}^{BB1}\ge \alpha _{k}^{BB2}\). In other words, \(\alpha _{k}^{BB1}\) is a long stepsize while \(\alpha _{k}^{BB2}\) is a short one, which implies that \(\alpha _{k}^{BB1}\) is more aggressive than \(\alpha _{k}^{BB2}\) in decreasing the objective value. Extensive numerical experiments show that the long stepsize is superior to the short one in many cases, see [4, 22, 34] for example. In what follows we will refer to the gradient method with the long stepsize \(\alpha _{k}^{BB1}\) as the BB method without specification.

Barzilai and Borwein [2] proved their method with the short BB stepsize \(\alpha _{k}^{BB2}\) is R-superlinearly convergent for two-dimensional strictly convex quadratics. An improved R-superlinear convergence result for the BB method was given by Dai [8]. Global and R-linear convergence of the BB method for general n-dimensional strictly convex quadratics were established by Raydan [33] and Dai and Liao [14], respectively. The BB method has also been extended to solve general nonlinear optimization problems. By incorporating the nonmontone line search proposed by Grippo et al. [26], Raydan [34] developed the global BB method for general unconstrained problems. Later, Birgin et al. [3] proposed the so-called spectral projected gradient method which extends Raydan’s method to smooth convex constrained problems. Dai and Fletcher [11] designed projected BB methods for large-scale box-constrained quadratic programming. Recently, by resorting to the smoothing techniques, Huang and Liu [27] generalized the projected BB method with modifications to solve non-Lipschitz optimization problems.

The relationship between the stepsizes in BB-like methods and the spectrum of the Hessian of the objective function has been explored in several studies. Frassoldati et al. [23] tried to exploit the long BB stepsize close to the reciprocal of the smallest eigenvalue of the Hessian, yielding the ABBmin1 and ABBmin2 methods. De Asmundis et al. [18] developed the so-called SDA method which employs a short stepsize approximates the reciprocal of the largest eigenvalue of the Hessian. Following the line of [18], Gonzaga and Schneider [25] suggested a monotone method for quadratics where the stepsizes are obtained in a way similar to the SD method. De Asmundis et al. [17] proposed the SDC method which exploits the spectral property of Yuan’s stepsize [16]. Kalousek [30] considered the SD method with random stepsizes lying between the reciprocal of the largest eigenvalue and the smallest eigenvalue of the Hessian and analysed the optimal distribution of random stepsizes that guarantees the maximum asymptotic convergence rate.

Applications of the BB method and its variants have largely been developed for problems arising in various different areas including image restoration [37], signal processing [31], eigenvalue problems [29], nonnegative matrix factorization [28], sparse reconstruction [38], machine learning [36], etc. We refer the reader to [4, 7, 13, 20, 22, 39] and references therein for more spectral gradient methods and extensions.

The success of the BB method and its variants motivates us to consider spectral gradient methods. Our goal is to present a family of spectral gradient methods for optimization. Notice that the Broyden class of quasi-Newton methods [5] approximate the inverse of the Hessian by

where \(\tau \in [0,1]\) is a scalar parameter and \(H_k^{BFGS}\) and \(H_k^{DFP}\) are the BFGS and DFP matrices, respectively, that satisfy the secant equation (8), which further implies that

i.e.,

Since the inverse of \(H_k^{BFGS}\), say \(B_k^{BFGS}\), satisfies (5), we can modify (12) as

Motivated by the above observation, we employ the idea of the BB method to approximate the Hessian and its inverse by diagonal matrices. Particularly, we require the matrix \(D=\alpha I\) to be the solution of

In the next section, we will show that the stepsize given by the convex combination of the long BB stepsize \(\alpha _k^{BB1}\) and the short BB stepsize \(\alpha _k^{BB2}\), i.e.,

where \(\gamma _k\in [0,1]\), is a solution to (14). Clearly, this is a one-parametric family of stepsizes, which include the two BB stepsizes as special instances. Moreover, any stepsize lies in the interval \([\alpha _{k}^{BB2},\alpha _{k}^{BB1}]\) is a special case of the family. For example, the positive stepsize given by the geometrical mean of \(\alpha _{k}^{BB1}\) and \(\alpha _{k}^{BB2}\) [9],

We further prove that the family of spectral gradient methods (15) is R-superlinearly convergent for two-dimensional strictly convex quadratics. For the n-dimensional case, the family is proved to be R-linearly convergent. Numerical results of the family (15) with different settings of \(\gamma _k\) are presented and compared with other gradient methods, including the BB method [2], the alternate BB method (ALBB) [11], the adaptive BB method (ABB) [40], the cyclic BB method with stepsize \(\alpha _k^{BB1}\) (CBB1) [12], the cyclic BB method with stepsize \(\alpha _k^{BB2}\) (CBB2), the cyclic method with stepsize \(\alpha _k^{P}\) (CP), the Dai-Yuan method (DY) [16], the ABBmin1 and ABBmin2 methods [23], and the SDC method [17]. The comparisons demonstrate that the proposed family is promising.

The paper is organized as follows. In Sect. 2, we show that each stepsize in the family (15) solves some least squares problem (14) and hence possesses certain quasi-Newton property. In Sect. 3, we establish R-superlinear convergence of the family (15) for two-dimensional strictly convex quadratics and R-linear convergence for the n-dimensional case, respectively. In Sect. 4, we discuss different selection rules for the parameter \(\gamma _k\). In Sect. 5, we conduct some numerical comparisons of our approach and other gradient methods. Finally, some conclusions are drawn in Sect. 6.

2 Quasi-Newton property of the family (15)

In this section, we show that each stepsize in the family (15) enjoys certain quasi-Newton property.

For the sake of simplicity, we discard the subscript of \(s_{k-1}\) and \(y_{k-1}\) in the following part of this section, i.e., \(s=s_{k-1}\), \(y=y_{k-1}\). Let

Then, the derivative of \(\phi _\tau (\alpha )\) with respect to \(\alpha \) is

Proposition 1

If \(s^Ty>0\) and \(\tau \in [0,1]\), the equation \(\phi '_\tau (\alpha )=0\) has a unique root in \([\alpha _k^{BB2},\alpha _k^{BB1}]\).

Proof

We only need to consider the case \(\tau \in (0,1)\). Notice that

If \(s^Ty>0\), we have \(y\ne 0\) and \(y^Ty>0\). This implies that \(\psi (\tau ,\alpha _k^{BB2})<0\) and \(\psi (\tau ,\alpha _k^{BB1})>0\). Thus, \(\psi (\tau ,\alpha )=0\) has a root in \((\alpha _k^{BB2},\alpha _k^{BB1})\). Since \(\alpha >0\), we know that the equation \(\phi '_\tau (\alpha )=0\) has a root in \([\alpha _k^{BB2},\alpha _k^{BB1}]\).

Now we show the uniqueness of such a root by contradiction. Suppose that \(\alpha _1<\alpha _2\) and \(\alpha _1,\alpha _2\in [\alpha _k^{BB2},\alpha _k^{BB1}]\) such that \(\phi '_\tau (\alpha _1)=0\) and \(\phi '_\tau (\alpha _2)=0\). It follows from (17) that

By rearranging terms, we obtain

Since \(\alpha _1\ne \alpha _2\), it follows that

which gives \((\alpha _1^2+\alpha _1\alpha _2+\alpha _2^2) -(\alpha _1+\alpha _2)\alpha _k^{BB2}<0\). This is not possible since \(\alpha _k^{BB2}\le \alpha _1<\alpha _2\). This completes the proof. \(\square \)

Proposition 2

If \(s^Ty>0\) and \(\tau \in [0,1]\), the root of \(\phi '_\tau (\alpha )=0\) in \([\alpha _k^{BB2},\alpha _k^{BB1}]\) is monotone with respect to \(\tau \).

Proof

It suffices to show the statement holds for \(\tau \in (0,1)\). By the proof of Proposition 1, \(\alpha \) is an implicit function of \(\tau \) determined by the equation \(\psi (\tau ,\alpha )=0\). The derivative of \(\psi (\tau ,\alpha )\) with respect to \(\tau \) is

Let \(\frac{\partial \psi (\tau ,\alpha )}{\partial \tau }=0\). By simple calculations, we obtain

For \(\alpha \in (\alpha _k^{BB2},\alpha _k^{BB1})\), \(\alpha '>0\). This completes the proof. \(\square \)

Theorem 1

For each \(\gamma _k\in [0,1]\), the stepsize \(\alpha _k\) defined by (15) is a solution of (14).

Proof

We only need to show that, for \(\gamma _k\in (0,1)\), \(\phi '_{\tau }(\alpha _k)\) vanishes at some \(\tilde{\tau }\in (0,1)\). From (17) and (15), we have

Clearly,

is a root of \(\psi (\tau ,\alpha _k)=0\). This completes the proof. \(\square \)

3 Convergence analysis

In this section, we analyze the convergence properties of the family (15) for the quadratic function (3). Since the gradient method (2) is invariant under translations and rotations when applying to problem (3), we assume that the matrix A is diagonal, i.e.,

where \(0<\lambda _1\le \lambda _2\le \cdots \le \lambda _n\).

3.1 Two-dimensional case

In this subsection, based on the techniques in [9], we establish the R-superlinear convergence of the family (15) for two-dimensional quadratic functions.

Without loss of generality, we assume that

where \(\lambda \ge 1\). Furthermore, assume that \(x_1\) and \(x_2\) are such that

Let

Then it follows that

Thus, the stepsize (15) can be written as

By the update rule (2) and \(g_k=Ax_k\), we have

Thus,

From (21), (22) and the definition of \(q_k\), we get

Let

Then we have

Since

we obtain \(h'(w)>0\) for \(\gamma _k\in (0,1)\). Thus,

Denoting \(M_k=\log q_k\). By (23), we have

Let \(\theta \) such that \(\theta ^2-\theta +2=0\). Then, \(\theta =\frac{1\pm \sqrt{7}i}{2}\). Denote by

We have the following result.

Lemma 1

If \(\gamma _k\in (0,1)\) and

there exists \(c_1>0\) such that

Proof

It follows from (26), the definition of \(\theta \), and (27) that

By (25), we know that

Since \(|\theta |=\sqrt{2}\), we get by (30) that

where \(c_1=2\log \lambda \). From (28), we have

This completes the proof. \(\square \)

Since \(|\theta -1|=\sqrt{2}\), we obtain by (27) that

which, together with (29), gives

Lemma 2

Under the conditions of Lemma 1, for \(k\ge 2\), the following inequalities hold:

Proof

The inequality (32) holds true if

or

Suppose that the above two inequalities are false. By (31), we know that either

or

From (26), we have

(i) \(M_{k-1}\le -(\sqrt{2}-1)^22^{\frac{k}{2}}c_1\). If \(M_{k}<0\), it follows from (34) that

Otherwise, if \(M_{k}\ge 0\), by (26), we get

(ii) \(M_{k}\le -(\sqrt{2}-1)^22^{\frac{k}{2}}c_1\). Similar to (i), we can show that

or

Thus, the inequality (32) is valid. The inequality (33) can be established in a similar way. \(\square \)

Theorem 2

If \(\gamma _k\in (0,1)\), (19) and (28) hold, the sequence \(\{\Vert g_k\Vert \}\) converges to zero R-superlinearly.

Proof

From (22), we have

Since \(\alpha _k\in (\lambda ^{-1},1)\), we have

which gives

It follows from (35), (36) and (37) that

which indicates that

As \(M_k=\log q_k\), we know by Lemma 2 and (39) that

Similarly to (35), we have

which together with (37) yields that

By (35) and (40), for any k, we have

That is, \(\{\Vert g_k\Vert \}\) converges to zero R-superlinearly. \(\square \)

Theorem 2, together with the analysis for the BB method in [2, 8], shows that, for any \(\gamma _k\in [0,1]\), the family (15) is R-superlinearly convergent.

3.2 n-dimensional case

In this subsection, we show R-linear convergence of the family (15) for n-dimensional quadratics.

Dai [7] has proved that if A has the form (18) with \(1=\lambda _1\le \lambda _2\le \cdots \le \lambda _n\) and the stepsizes of gradient method (2) have the following Property (A), then either \(g_k=0\) for some finite k or the sequence \(\{\Vert g_k\Vert \}\) converges to zero R-linearly.

Property (A)

[7] Suppose that there exist an integer m and positive constants \(M_1\ge \lambda _1\) and \(M_2\) such that

-

(i)

\(\lambda _1\le \alpha _k^{-1}\le M_1\);

-

(ii)

for any integer \(l\in [1,n-1]\) and \(\epsilon >0\), if \(G(k-j,l)\le \epsilon \) and \((g_{k-j}^{(l+1)})^2\ge M_2\epsilon \) hold for \(j\in [0,\min \{k,m\}-1]\), then \(\alpha _k^{-1}\ge \frac{2}{3}\lambda _{l+1}\).

Here,

Now we show R-linear convergence of the proposed family by proving that the stepsize (15) satisfies Property (A).

Theorem 3

Suppose that the sequence \(\{\Vert g_k\Vert \}\) is generated by the family (15) applied to n-dimensional quadratics with the matrix A has the form (18) and \(1=\lambda _1\le \lambda _2\le \cdots \le \lambda _n\). Then either \(g_k=0\) for some finite k or the sequence \(\{\Vert g_k\Vert \}\) converges to zero R-linearly.

Proof

Let \(M_1=\lambda _n\) and \(M_2=2\). We have by (15) and the fact \(\gamma _k\in [0,1]\) that

Thus, (i) of Property (A) holds. If \(G(k-j,l)\le \epsilon \) and \((g_{k-j}^{(l+1)})^2\ge M_2\epsilon \) hold for \(j\in [0,\min \{k,m\}-1]\), we have

That is, (ii) of Property (A) holds. This completes the proof. \(\square \)

4 Selecting \(\gamma _{k}\)

In this section, we present three different selection rules for the parameter \(\gamma _{k}\) of the family (15).

The simplest scheme for choosing \(\gamma _{k}\) is to fix it for all iterations. For example, we can set \(\gamma _{k}=0.1, 0.2\), etc. However, since the information carried by the two BB stepsizes changes as the iteration process going on such a fixed scheme may deteriorate the performance because it fixes the ratios of the long BB stepsize \(\alpha _{k}^{BB1}\) and the short BB stepsize \(\alpha _{k}^{BB2}\) contributed to the stepsize \(\alpha _{k}\). Thus, it is better to vary \(\gamma _{k}\) at each iteration.

One direct way for modifying \(\gamma _{k}\) is, as the randomly relaxed Cauchy method [35], randomly choose it in the interval (0, 1). But this scheme determines the value of \(\gamma _{k}\) without using any information at the current and former iterations.

The next strategy borrows the idea of cyclic gradient methods [7, 10, 12, 35], where a stepsize is reused for m iterations. Such a cyclic scheme is superior to its noncyclic counterpart in both theory and practice. Dai and Fletcher [10] showed that if the cyclic length m is greater than n / 2, the cyclic SD method is likely to be R-superlinearly convergent. Similar numerical convergence evidences were also observed for the CBB1 method in [12]. Motivated by those advantages of the cyclic scheme, for the family (15), we choose \(\gamma _{k}\) such that the current stepsize approximates the former one as much as possible. That is,

which yields

Clearly, \(\gamma _{k}^{C}=0\) when \(\alpha _{k-1}\le \alpha _{k}^{BB2}\); \(\gamma _{k}^{C}=1\) when \(\alpha _{k-1}\ge \alpha _{k}^{BB1}\). This gives the following stepsize:

Recall that, for quadratics, \(\alpha _{k}^{BB1}=\alpha _{k-1}^{SD}\) and \(\alpha _{k}^{BB2}=\alpha _{k-1}^{MG}\), where

which is a short stepsize and satisfies \(\alpha _{k}^{MG}\le \alpha _{k}^{SD}\). We refer the reader to [15] for additional details on \(\alpha _{k}^{MG}\). It follows from (42) that \(\tilde{\alpha }_{k}\) is adaptively selected and truncated by making use of the information of the former iteration. In particular, the stepsize is increased if the former one is too short (i.e., \(\alpha _{k-1}\le \alpha _{k-1}^{MG}\)) while it is decreased if the former one is too long (i.e., \(\alpha _{k-1}\ge \alpha _{k-1}^{SD}\)). Moreover, the former stepsize will be reused if it lies in \([\alpha _{k}^{BB2},\alpha _{k}^{BB1}]\). Thus, (42) is an adaptive truncated cyclic scheme and we will call it ATC for short.

As cyclic methods, we need to update the stepsize every m iterations to avoid using a stepsize for too many iterations. Many different stepsizes can be employed. Here, we suggest three candidates. The first is the long BB stepsize \(\alpha _{k}^{BB1}\), i.e.,

The second is the short BB stepsize \(\alpha _{k}^{BB2}\), i.e.,

The last is \(\alpha _{k}^P\) given by (16), which is a special case of the family (15). That is,

In what follows we shall refer (43), (44) and (45) to as ATC1, ATC2 and ATC3, respectively.

We tested the family (15) with fixed \(\gamma _{k}\) on quadratic problems to see how the values of \(\gamma _{k}\) affect the performance. In particular, \(\gamma _{k}\) is set to 0.1, 0.3, 0.5, 0.7 and 0.9 for all k, respectively. The examples in [15, 24, 40] were employed, where \(A=QVQ^T\) with

and \(w_1\), \(w_2\), and \(w_3\) are unitary random vectors, \(V=diag(v_1,\ldots ,v_n)\) is a diagonal matrix where \(v_1=1\), \(v_n=\kappa \), and \(v_j\), \(j=2,\ldots ,n-1\), are randomly generated between 1 and \(\kappa \). We stopped the iteration if the number of iteration exceeds 20,000 or

is satisfied for some given \(\epsilon \).

Four values of the condition number \(\kappa \): \(10^3, 10^4, 10^5, 10^6\) as well as three values of \(\epsilon \): \(10^{-6}, 10^{-9}, 10^{-12}\) were used. For each value of \(\kappa \) and \(\epsilon \), 10 instances with \(v_j\) evenly distributed in \([1,\kappa ]\) were generated. For each instance, the entries of b were randomly generated in \([-10,10]\) and the vector \(e=(1,\ldots ,1)^T\) was used as the starting point.

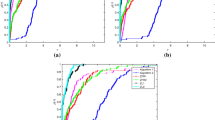

The BB method was also run for comparison, i.e. \(\gamma _{k}=1\). We compared the performance of the algorithms by the required number of iterations, as described in [21]. In other words, for each method, we plot the ratio of problems for which the method is within a factor \(\rho \) of the least iterations. For the 100-dimensional case, we can see from Fig. 1 that the performance of the family improves as \(\gamma _{k}\) becomes larger. However, for the 1000-dimensional case, Fig. 2 shows that the family (15) with \(\gamma _{k}=0.7\) or 0.9 can outperform the BB method for some \(\rho \) around 1.5. That is, for some problems, the long BB stepsize \(\alpha _{k}^{BB1}\) may not be the best choice in the family.

Performance profile of the family (15) with fixed \(\gamma _{k}\) based on number of iterations for 100-dimensional problems

Performance profile of the family (15) with fixed \(\gamma _{k}\) based on number of iterations for 1000-dimensional problems

Then, we applied ATC1, ATC2 and ATC3 to the above problem with \(n=1000\) and different \(v_j\) distributions. Particularly, two sets were generated: (1) \(v_j\) are evenly distributed in \([1,\kappa ]\) as the former example; (2) 20% of \(v_j\) are evenly distributed in [1, 100] and others are in \([\frac{\kappa }{2},\kappa ]\). We used three values of the condition number \(\kappa =10^4, 10^5, 10^6\) and also three values of \(\epsilon \) as the above problem. Other settings were same as the former example.

We tested the three methods with different m. The average number of iterations are presented in Table 1. We can see that, for each \(\kappa \) and m, ATC1 often outperforms ATC2 and ATC3. The performances of the three methods do not improve as m increases. For the first set of problems, ATC1 with \(m=30\) performs better than other values. For the second set of problems, ATC1 with \(m=8\) dominates others. Thus, in the next section we only run ATC1 using these settings.

We close this section by mentioning that there are many other different rules for computing the parameter \(\gamma _{k}\). For example, as the alternate gradient method [7, 11], we can choose \(\gamma _{k}\) to alternate short stepsizes and long stepsizes. In addition, we can also use sophisticated random schemes for \(\gamma _{k}\), see [30] and references therein.

5 Numerical results

In this section, we present numerical results of the family (15) with different settings of the parameter \(\gamma _k\). We compare the performance of the ATC1 method with the following methods: the family (15) with \(\gamma _k\) randomly chose in (0, 1) (RAND), BB [2], ALBB [11], ABB [40], CBB1 [12], CBB2, CP, Dai-Yuan (DY) [16], ABBmin1 and ABBmin2 [23], SDC [17], and the family (15) with basic adaptive truncated cyclic scheme (42) (ATC). Since the SDC method performs better than its monotone counterpart for most problems, we only run SDC.

All methods were implemented in MATLAB (v.9.0-R2016a). All the runs were carried out on a PC with an Intel Core i7, 2.9 GHz processor and 8 GB of RAM running Windows 10 system. Moreover, we stopped the iteration if the number of iteration exceeds 20,000 or (46) is satisfied for some given \(\epsilon \).

Firstly, we considered randomly generated problems in the former section with different \(v_j\) distributions as shown in Table 2. The first two sets are same as the former section. For the third set, half of \(v_j\) are in [1, 100] and the other half in \([\frac{\kappa }{2},\kappa ]\); for the fourth set, 80% of \(v_j\) are evenly distributed in [1, 100] and others are in \([\frac{\kappa }{2},\kappa ]\). The fifth set has 20% of \(v_j\) are in [1, 100], 20% of \(v_j\) are in \([100,\frac{\kappa }{2}]\) and the others in \([\frac{\kappa }{2},\kappa ]\). The last two sets only has either 10 small \(v_j\) or 10 large \(v_j\). Other settings were also same as the former section.

For the three cyclic methods: CBB1, CBB2 and CP, the best m among \(\{3,4,\ldots ,10\}\) was chosen. In particular, \(m=3\) for CBB1 and \(m=4\) for CBB2 and CP. As in [23], the parameters used by the ABBmin1 and ABBmin2 methods are set to \(\tau =0.8\), \(m=9\) and \(\tau =0.9\), respectively. While \(\tau =0.1\) was used for the ABB method which is better than 0.15 and 0.2 in our test. The pair (h, m) of the SDC method was set to (8, 6) which is better than other choices. As for our ATC1 method, we set \(m=30\) for the first and fifth sets of problems and \(m=8\) for other sets.

It can be observed from Table 3 that, the rand scheme performs worse than the fixed scheme with \(\gamma _k=1\), i.e., the BB method. The ATC method is competitive with the BB method and dominates the ALBB and CP methods for most test problems. The CBB2 method clearly outperforms the other two cyclic methods: CBB1 and CP. Moreover, the CBB2 method performs much better than the ATC and ABB methods except the first and fifth sets of problems. The CBB2 method is even faster than the DY method. Although the ABBmin2 method is the fastest one for solving the first and sixth sets of problems, it is worse than the ABBmin1, SDC and ATC1 methods for the other five sets of problems. Our ATC1 method is faster than the CBB2 method except the last set of problems. In addition, the ATC1 method is much better than the ABBmin1 and SDC methods on the first set of problems and comparable to them on the other sets of problems. Furthermore, for each tolerance, our ATC1 method is the fastest one in the sense of total number of iterations.

Then, the compared methods were applied to the non-rand quadratic minimization problem in [17] which is more difficult than its rand counterpart. In particular, A is diagonal whose elements are given by

where \(ncond=\log _{10} \kappa \), and the vector b is null. We tested 10,000-dimensional problems with three different condition numbers: \(\kappa =10^4, 10^5, 10^6\). The stopping condition (46) was employed with \(\epsilon =10^{-6}, 10^{-9}, 10^{-12}\) for all methods. For each value of \(\kappa \) or \(\epsilon \), 10 different starting points with entries in \([-10,10]\) were randomly generated.

Due to the performances of these compared methods on the above problems, only fast methods were tested, i.e., ABB, CBB2, DY, ABBmin1, ABBmin2, SDC, ATC and ATC1. The numbers of iterations averaged over those starting points of each method are listed in Table 4. Here, the results of the SDC method were obtained with the best choice of parameter setting in [17], i.e., \(h=30\) and \(m=2\).

From Table 4 we can see that the ATC method is slightly better than the CBB2 method and comparable to the DY method for most problems. The ABB method performs better than the ABBmin1 method. Although the ABB method is slower than the ABBmin2 method when the tolerance is low, it wins if a tight tolerance is required. Our ATC1 method is competitive with other methods especially for a tight tolerance. Moreover, our ATC1 method takes least total number of iterations to meet the required tolerance.

6 Conclusions

We have proposed a family of spectral gradient methods which calculates the stepsize by the convex combination of the long BB stepsize and the short BB stepsize, i.e., \(\alpha _k=\gamma _k\alpha _k^{BB1}+(1-\gamma _k)\alpha _k^{BB2}\). Similar to the two BB stepsizes, each stepsize in the family possesses certain quasi-Newton property. In addition, R-superlinear and R-linear convergence of the family were established for two-dimensional and n-dimensional strictly convex quadratics, respectively. Furthermore, with different choices of the parameter \(\gamma _k\), we obtain different stepsizes and gradient methods. The family provides us an alternative for the stepsize of gradient methods which can be easily extended to general problems by incorporating some line searches.

Since the parameter \(\gamma _k\) affects the value of \(\alpha _k\) and hence the efficiency of the method, it is interesting to investigate how to choose a proper \(\gamma _k\) to achieve satisfactory performance. We have proposed and tested three different selection rules for \(\gamma _k\), among which the adaptive truncated cyclic scheme with the long BB stepsize \(\alpha _k^{BB1}\), i.e., the ATC1 method performs best. In addition, our ATC1 method is comparable to the state-of-the-art gradient methods including the Dai-Yuan, ABBmin1, ABBmin2 and SDC methods. One interesting question is how to design an efficient scheme to choose m adaptively for the proposed method. This will be our future work.

References

Akaike, H.: On a successive transformation of probability distribution and its application to the analysis of the optimum gradient method. Ann. Inst. Stat. Math. 11(1), 1–16 (1959)

Barzilai, J., Borwein, J.M.: Two-point step size gradient methods. IMA J. Numer. Anal. 8(1), 141–148 (1988)

Birgin, E.G., Martínez, J.M., Raydan, M.: Nonmonotone spectral projected gradient methods on convex sets. SIAM J. Optim. 10(4), 1196–1211 (2000)

Birgin, E.G., Martínez, J.M., Raydan, M., et al.: Spectral projected gradient methods: review and perspectives. J. Stat. Softw. 60(3), 539–559 (2014)

Broyden, C.G.: A class of methods for solving nonlinear simultaneous equations. Math. Comput. 19(92), 577–593 (1965)

Cauchy, A.: Méthode générale pour la résolution des systemes d’équations simultanées. Comp. Rend. Sci. Paris 25, 536–538 (1847)

Dai, Y.H.: Alternate step gradient method. Optimization 52(4–5), 395–415 (2003)

Dai, Y.H.: A new analysis on the Barzilai–Borwein gradient method. J. Oper. Res. Soc. China 2(1), 187–198 (2013)

Dai, Y.H., Al-Baali, M., Yang, X.: A positive Barzilai–Borwein-like stepsize and an extension for symmetric linear systems. In: Numerical Analysis and Optimization, pp. 59–75. Springer (2015)

Dai, Y.H., Fletcher, R.: On the asymptotic behaviour of some new gradient methods. Math. Program. 103(3), 541–559 (2005)

Dai, Y.H., Fletcher, R.: Projected Barzilai–Borwein methods for large-scale box-constrained quadratic programming. Numer. Math. 100(1), 21–47 (2005)

Dai, Y.H., Hager, W.W., Schittkowski, K., Zhang, H.: The cyclic Barzilai–Borwein method for unconstrained optimization. IMA J. Numer. Anal. 26(3), 604–627 (2006)

Dai, Y.H., Kou, C.: A Barzilai–Borwein conjugate gradient method. Sci. China Math. 59, 1511–1524 (2016)

Dai, Y.H., Liao, L.Z.: \(R\)-linear convergence of the Barzilai and Borwein gradient method. IMA J. Numer. Anal. 22(1), 1–10 (2002)

Dai, Y.H., Yuan, Y.X.: Alternate minimization gradient method. IMA J. Numer. Anal. 23(3), 377–393 (2003)

Dai, Y.H., Yuan, Y.X.: Analysis of monotone gradient methods. J. Ind. Manag. Optim. 1(2), 181 (2005)

De Asmundis, R., Di Serafino, D., Hager, W.W., Toraldo, G., Zhang, H.: An efficient gradient method using the Yuan steplength. Comput. Optim. Appl. 59(3), 541–563 (2014)

De Asmundis, R., Di Serafino, D., Riccio, F., Toraldo, G.: On spectral properties of steepest descent methods. IMA J. Numer. Anal. 33(4), 1416–1435 (2013)

Dennis Jr., J.E., Moré, J.J.: Quasi-Newton methods, motivation and theory. SIAM Rev. 19(1), 46–89 (1977)

Di Serafino, D., Ruggiero, V., Toraldo, G., Zanni, L.: On the steplength selection in gradient methods for unconstrained optimization. Appl. Math. Comput. 318, 176–195 (2018)

Dolan, E.D., Moré, J.J.: Benchmarking optimization software with performance profiles. Math. Program. 91(2), 201–213 (2002)

Fletcher, R.: On the Barzilai–Borwein method. In: Optimization and Control with Applications, pp. 235–256 (2005)

Frassoldati, G., Zanni, L., Zanghirati, G.: New adaptive stepsize selections in gradient methods. J. Ind. Manag. Optim. 4(2), 299 (2008)

Friedlander, A., Martínez, J.M., Molina, B., Raydan, M.: Gradient method with retards and generalizations. SIAM J. Numer. Anal. 36(1), 275–289 (1998)

Gonzaga, C.C., Schneider, R.M.: On the steepest descent algorithm for quadratic functions. Comput. Optim. Appl. 63(2), 523–542 (2016)

Grippo, L., Lampariello, F., Lucidi, S.: A nonmonotone line search technique for Newton’s method. SIAM J. Numer. Anal. 23(4), 707–716 (1986)

Huang, Y., Liu, H.: Smoothing projected Barzilai–Borwein method for constrained non-Lipschitz optimization. Comput. Optim. Appl. 65(3), 671–698 (2016)

Huang, Y., Liu, H., Zhou, S.: Quadratic regularization projected Barzilai–Borwein method for nonnegative matrix factorization. Data Min. Knowl. Discov. 29(6), 1665–1684 (2015)

Jiang, B., Dai, Y.H.: Feasible Barzilai–Borwein-like methods for extreme symmetric eigenvalue problems. Optim. Methods Softw. 28(4), 756–784 (2013)

Kalousek, Z.: Steepest descent method with random step lengths. Found. Comput. Math. 17(2), 359–422 (2017)

Liu, Y.F., Dai, Y.H., Luo, Z.Q.: Coordinated beamforming for MISO interference channel: complexity analysis and efficient algorithms. IEEE Trans. Signal Process. 59(3), 1142–1157 (2011)

Nocedal, J., Sartenaer, A., Zhu, C.: On the behavior of the gradient norm in the steepest descent method. Comput. Optim. Appl. 22(1), 5–35 (2002)

Raydan, M.: On the Barzilai and Borwein choice of steplength for the gradient method. IMA J. Numer. Anal. 13(3), 321–326 (1993)

Raydan, M.: The Barzilai and Borwein gradient method for the large scale unconstrained minimization problem. SIAM J. Optim. 7(1), 26–33 (1997)

Raydan, M., Svaiter, B.F.: Relaxed steepest descent and Cauchy–Barzilai–Borwein method. Comput. Optim. Appl. 21(2), 155–167 (2002)

Tan, C., Ma, S., Dai, Y.H., Qian, Y.: Barzilai–Borwein step size for stochastic gradient descent. In: Advances in Neural Information Processing Systems, pp. 685–693 (2016)

Wang, Y., Ma, S.: Projected Barzilai–Borwein method for large-scale nonnegative image restoration. Inverse Probl. Sci. Eng. 15(6), 559–583 (2007)

Wright, S.J., Nowak, R.D., Figueiredo, M.A.: Sparse reconstruction by separable approximation. IEEE Trans. Signal Process. 57(7), 2479–2493 (2009)

Yuan, Y.X.: Step-sizes for the gradient method. AMS IP Stud. Adv. Math. 42(2), 785–796 (2008)

Zhou, B., Gao, L., Dai, Y.H.: Gradient methods with adaptive step-sizes. Comput. Optim. Appl. 35(1), 69–86 (2006)

Acknowledgements

The authors are very grateful to the associate editor and the two anonymous referees whose suggestions and comments greatly improved the quality of this paper.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This work was supported by the National Natural Science Foundation of China (Grant Nos. 11631013, 11701137, 11671116) and the National 973 Program of China (Grant No. 2015CB856002).

Rights and permissions

About this article

Cite this article

Dai, YH., Huang, Y. & Liu, XW. A family of spectral gradient methods for optimization. Comput Optim Appl 74, 43–65 (2019). https://doi.org/10.1007/s10589-019-00107-8

Received:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10589-019-00107-8