Abstract

This paper considers the microbial batch culture process for producing 1,3-propanediol (1,3-PD) via glycerol fermentation. Our goal is to design an optimal control scheme for this process, with the aim of balancing two (perhaps competing) objectives: (i) the process should yield a sufficiently high concentration of 1,3-PD at the terminal time and (ii) the process should be robust with respect to changes in various uncertain system parameters. Accordingly, we pose an optimal control problem in which both process yield and process sensitivity are considered in the objective function. The control variables in this problem are the terminal time of the batch culture process and the initial concentrations of biomass and glycerol in the batch reactor. By performing a time-scaling transformation and introducing an auxiliary dynamic system to calculate process sensitivity, we obtain an equivalent optimal control problem in standard form. We then develop a particle swarm optimization algorithm for solving this equivalent problem. Finally, we explore the trade-off between process efficiency and process robustness via numerical simulations.

1 Introduction

1,3-Propanediol (1,3-PD) is an important chemical product with numerous applications in cosmetics, adhesives, lubricants, and medicines. In particular, 1,3-PD has been used as a monomer to synthesize a new type of polyester called polytrimethylene terephthalate [1]. At present, there are two methods for producing 1,3-PD: chemical synthesis and microbial conversion. Microbial conversion, in which a substrate such as glycerol is converted to 1,3-PD via fermentation, is now attracting significant interest because it is relatively easy to implement and does not generate toxic byproducts. However, when compared with traditional chemical synthesis methods, microbial conversion usually yields a lower 1,3-PD concentration. Therefore, optimization techniques are urgently needed to improve the productivity of the microbial conversion process and thus make it competitive with chemical synthesis.

There are three common methods of microbial fermentation: batch culture, continuous culture, and fed-batch culture. In batch culture, the bacteria and substrate are added to the bioreactor at the beginning of the process, and nothing is added during the process. In continuous culture, fresh medium flows into the fermentor continuously to replenish consumed substrate. Fed-batch culture combines batch and continuous culture: the time horizon is divided into periods, and the fermentation process switches between a continuous phase (in which substrate is added continuously to the reactor) and a batch phase (in which no substrate is added to the reactor). In this paper, we focus on the batch culture process with glycerol as the substrate. Previous research indicates that this batch culture process is highly promising for producing commercially viable 1,3-PD of high concentration [2–6].

The microbial conversion process for synthesizing 1,3-PD has been studied since the 1980s [7]. An experimental investigation into the multiple inhibitions of the fermentation process is given in [8], and studies based on metabolic flux and metabolic pathway analysis are given in [9–13]. Mathematical models of the microbial conversion process, together with various process control strategies, have been considered in [14–20]. However, these references do not take parameter uncertainty into account. Parameter uncertainty is a key issue in practice because it is difficult (if not impossible) to determine the exact values of many parameters in the dynamic equations describing microbial conversion. Thus, in this paper, we consider the robust control of the microbial batch culture process in the presence of parameter uncertainties. The problem is to design a control scheme that maximizes the yield of 1,3-PD at the terminal time, and also minimizes the process sensitivity with respect to parameter uncertainties.

Sensitivity analysis deals with the influence that uncertain factors (e.g., random noise) exert on system performance. In the microbial batch culture process, the control variables are the concentrations of biomass and glycerol in the batch reactor at the initial time, as well as the terminal time of the process. The dynamic model contains 9 uncertain model parameters, and the influence that these parameters exert on the final 1,3-PD yield needs to be minimized. Thus, inspired by the work in [21–26], we propose an optimal control formulation that incorporates a non-standard sensitivity term to measure the sensitivity of the 1,3-PD yield with respect to the uncertain parameters. The trade-off in the objective function between process sensitivity and process yield is governed by a non-negative weight factor. When the weight factor is small, the objective function favors maximizing yield over minimizing sensitivity; when the weight factor is large, minimizing sensitivity is the priority.

This paper is organized as follows. In Sect. 2, we introduce a nonlinear dynamic model with uncertain parameters to describe the microbial batch culture process. In Sect. 3, we formulate an optimal control problem that balances the competing objectives of high 1,3-PD yield and low process sensitivity. Because our objective function contains a non-standard sensitivity term, the optimal control problem cannot be solved using conventional techniques. Thus, in Sect. 4, we develop a computational method for evaluating the process sensitivity term and then use this method to obtain an equivalent optimal control problem in standard form. In Sect. 5, we develop a particle swarm optimization method for solving the equivalent problem. Finally, numerical results are reported in Sect. 6.

2 Dynamic Model

The dynamic model of the batch culture process is based on the following assumptions [27, 28].

Assumption 2.1

Nothing is added to, or removed from, the batch reactor during the batch culture process.

Assumption 2.2

The solution in the reactor is sufficiently well mixed so that the concentrations of reactants are uniform.

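Under Assumptions 2.1 and 2.2, the mass balance relationships for biomass, substrate, and products in the microbial batch culture can be expressed as the following nonlinear dynamic system:

$$\begin{aligned} \dot{x}_1(t)&=\mu x_1(t),\\ \dot{x}_2(t)&=-q_2 x_1(t),\\ \dot{x}_i(t)&=q_i x_1(t),\quad i=3,4,5,\quad t\in [0,t_f], \end{aligned}\tag{1}$$

and

$$\begin{aligned} x_i(0)=x_{0i},\quad i=1,2,3,4,5, \end{aligned}\tag{2}$$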
where \(t\) denotes process time (in hours); \(t_f\) denotes the terminal time of the process; \(x_i(t)\), \(i=1,2,3,4,5,\) are, respectively, the concentrations (in \(\text{ mmol }\ \text{ L }^{-1}\)) of biomass, glycerol, 1,3-PD, acetate and ethanol at time \(t\) in the reactor; and \(x_{0i}\), \(i=1,2,3,4,5,\) are, respectively, the initial concentrations of biomass, glycerol, 1,3-PD, acetate, and ethanol. Furthermore, \(\mu \) is the specific growth rate of cells (in h\(^{-1}\)), \(q_2\) is the specific consumption rate of substrate (in h\(^{-1}\)), and \(q_i\), \(i=3,4,5\), are the specific formation rates of products (in h\(^{-1}\)). These quantities can be expressed by the following equations [27]:

where \(\mu _m\) is the maximum specific growth rate (in \(\text{ h }^{-1}\)); \(k_2\) is the Monod saturation constant for substrate (in \(\text{ mmol }\) \(\text{ L }^{-1}\)); \(x_i^{*}\), \(i=2,3,4,5,\) are, respectively, the critical concentrations of glycerol, 1,3-PD, acetate, and ethanol above which cells cease to grow; \(m_i\), \(i=2,3,4,5,\) are, respectively, the maintenance terms of substrate consumption and product formation (in \(\text{ mmol }\) \(\text{ g }^{-1}\) \(\text{ h }^{-1}\)) under substrate-limited conditions; \(Y_2\) is the maximum growth yield (in \(\text{ mmol }\) \(\text{ g }^{-1}\)); and \(Y_i\), \(i=3,4,5,\) are the maximum product yields (in \(\text{ mmol }\) \(\text{ g }^{-1}\)). The values of \(\mu _m\) and \(x_i^{*}\), \(i=2,3,4,5,\) are well established [6]:

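However, the values of the other model parameters are uncertain and difficult to determine exactly. We collect the uncertain parameters into a vector \(\sigma \):

$$\begin{aligned} \sigma =(k_2,m_2,m_3,m_4,m_5,Y_2,Y_3,Y_4,Y_5)^{\top }\in \mathbb {R}^{9}. \end{aligned}$$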
Methods for estimating the values of these uncertain parameters using experimental data are given in [18, 19, 29–32]. The following estimates are used in [6]:

We will use these estimates as nominal parameter values in this paper.

The initial concentrations of 1,3-PD, acetate, and ethanol in the dynamic model (1)–(2) are given:

The initial concentrations of biomass and glycerol, on the other hand, are control variables to be optimized, as is the terminal time \(t_f\). Our aim is to choose these control variables so that the 1,3-PD yield is high while its sensitivity with respect to changes in the nominal parameter values (7) is low.
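The model (1)–(2) is straightforward to simulate once the rate expressions are fixed. The Python sketch below is illustrative only. Because the constitutive equations and the nominal values (7) are not reproduced here, it assumes Monod-type growth with linear inhibition, \(\mu =\mu _m x_2/(x_2+k_2)\prod _{i=2}^{5}(1-x_i/x_i^{*})\), together with \(q_2=m_2+\mu /Y_2\) and \(q_i=m_i+\mu Y_i\), \(i=3,4,5\). Every numerical constant (`MU_M`, `X_CRIT`, `SIGMA_NOM`, and the zero initial product concentrations) is a hypothetical placeholder, not a value from [6]. A fixed-step classical fourth-order Runge–Kutta scheme (rather than the sixth-order method used in Sect. 6) keeps the example dependency-free.

```python
# Hypothetical placeholder constants: NOT the values given in [6] or the estimates (7).
MU_M = 0.6                                  # maximum specific growth rate (1/h)
X_CRIT = (2000.0, 1000.0, 1000.0, 400.0)    # critical conc. of glycerol, 1,3-PD, acetate, ethanol
# sigma = (k2, m2, m3, m4, m5, Y2, Y3, Y4, Y5), illustrative values only
SIGMA_NOM = (0.5, 2.0, 1.0, 0.5, 0.2, 0.08, 60.0, 30.0, 10.0)


def rates(x, sigma):
    """Assumed kinetics: Monod growth with linear substrate/product inhibition."""
    k2, m2, m3, m4, m5, y2, y3, y4, y5 = sigma
    inhibition = 1.0
    for xi, xc in zip(x[1:], X_CRIT):       # x[1:] = (x2, x3, x4, x5)
        inhibition *= 1.0 - xi / xc
    mu = MU_M * x[1] / (x[1] + k2) * inhibition
    q2 = m2 + mu / y2                       # specific glycerol consumption rate
    q_products = (m3 + mu * y3, m4 + mu * y4, m5 + mu * y5)
    return mu, q2, q_products


def rhs(x, sigma):
    """Batch mass balances: nothing is added to or removed from the reactor."""
    mu, q2, (q3, q4, q5) = rates(x, sigma)
    x1 = x[0]
    return [mu * x1, -q2 * x1, q3 * x1, q4 * x1, q5 * x1]


def simulate(x01, x02, tf, sigma=SIGMA_NOM, x_products0=(0.0, 0.0, 0.0), steps=2000):
    """Integrate the model on [0, tf] with classical RK4 and return the state at tf.

    x_products0 stands in for the given values (8); zeros are a placeholder.
    """
    x = [x01, x02, *x_products0]
    h = tf / steps
    for _ in range(steps):
        s1 = rhs(x, sigma)
        s2 = rhs([a + 0.5 * h * b for a, b in zip(x, s1)], sigma)
        s3 = rhs([a + 0.5 * h * b for a, b in zip(x, s2)], sigma)
        s4 = rhs([a + h * b for a, b in zip(x, s3)], sigma)
        x = [a + h / 6.0 * (b + 2.0 * c + 2.0 * d + e)
             for a, b, c, d, e in zip(x, s1, s2, s3, s4)]
    return x


if __name__ == "__main__":
    x_final = simulate(x01=0.1, x02=500.0, tf=5.0)
    print("1,3-PD at t_f:", x_final[2])
```

For example, `simulate(0.1, 500.0, 5.0)[2]` gives the placeholder model's 1,3-PD concentration at \(t_f=5\) h; indices follow the ordering \(x_1,\ldots ,x_5\) used in the text.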

3 Optimal Control Versus Robust Control: A Trade-off

The control variables in the microbial fermentation process (1)–(2) are the initial concentrations of biomass and glycerol and the terminal time of the process. Let \(x_i(\cdot |x_{01},x_{02},t_f,\sigma )\), \(i=1,2,3,4,5\), denote the solution of (1)–(2) corresponding to the control variables \(x_{01}\), \(x_{02}\), and \(t_f\) and the parameter vector \(\sigma \in \mathbb {R}^{9}\).

Suppose that we are given a nominal parameter vector \(\sigma \in \mathbb {R}^{9}\). The control objective in microbial fermentation is to maximize the yield of 1,3-PD. Thus, we want to choose the control variables \(x_{01}\), \(x_{02}\), and \(t_f\) to maximize the following objective function:

which is proportional to the final 1,3-PD yield.

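The control variables are subject to the following bound constraints:

$$\begin{aligned} 0.01\le x_{01}\le 1,\qquad 200\le x_{02}\le 1700,\qquad 2\le t_f\le 10. \end{aligned}\tag{10}$$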
The problem of maximizing 1,3-PD yield can be formulated as follows.

Problem P Given the nominal parameter vector \(\sigma \in \mathbb {R}^{9}\), choose the initial concentration of biomass \(x_{01}\), the initial concentration of glycerol \(x_{02}\), and the process terminal time \(t_f\) to maximize (9) subject to the bound constraints (10).

In Problem P, the optimal control variables are determined under the assumption that the nominal parameter estimates are exact. However, this is usually not the case in practice; the nominal estimates are only approximations of the true model parameters. Thus, inspired by the work in [21–26], we consider the following measure of system sensitivity with respect to the uncertain model parameters:

Clearly, (11) measures the rate at which the process yield changes in response to small changes in the model parameters. Thus, a low sensitivity value indicates that the yield is robust to small deviations of the parameters from their nominal values. We now propose the following modified objective function that incorporates our desire to maximize (9) and minimize (11):

where \(\alpha \ge 0\) is a weight factor selected by the system operator.

Our new optimal control problem is stated below.

Problem P \(_{\alpha }\) Given the nominal parameter vector \(\sigma \in \mathbb {R}^{9}\), choose the initial concentration of biomass \(x_{01}\), the initial concentration of glycerol \(x_{02}\), and the process terminal time \(t_f\) to maximize (12) subject to the bound constraints (10).

4 Problem Transformation

4.1 Time-Scaling Transformation

Problem P\(_{\alpha }\) exhibits two non-standard aspects: (i) the terminal time is free instead of fixed and (ii) the objective function contains a non-standard sensitivity term. To circumvent the first difficulty, we treat \(t_f\) as an optimization variable and apply the transformation \(t=t_f\tau \), where \(\tau \in [0,1]\) is a new time variable. Then the original dynamic system (1) can be converted into an equivalent form as follows:

where

The initial conditions (2) stay the same:

where \(x_{03}\), \(x_{04}\), \(x_{05}\) are given by (8), and \(x_{01}\) and \(x_{02}\) are control variables. Under the time-scaling transformation \(t=t_f\tau \), the objective function (9) becomes:

Furthermore, the modified objective function in Problem P\(_{\alpha }\) becomes:

It follows that Problem P\(_{\alpha }\) is equivalent to the following optimal control problem with fixed terminal time.

Problem \(\tilde{\text {P}}_{\alpha }\). Given the nominal parameter vector \(\sigma \in \mathbb {R}^{9}\), choose the initial concentration of biomass \(x_{01}\), the initial concentration of glycerol \(x_{02}\), and the process terminal time \(t_f\) to maximize (19) subject to the bound constraints (10).

In Problem \(\tilde{\text {P}}_{\alpha }\), the trade-off between process yield and process sensitivity can be adjusted through the weight \(\alpha \). When \(\alpha =0\), the sensitivity term in \(\tilde{J}_\alpha \) disappears, and Problem \(\tilde{\text {P}}_{\alpha }\) involves maximizing process yield without regard for process robustness. In this case, Problem \(\tilde{\text {P}}_{\alpha }\) is a standard optimal control problem and can be solved using conventional optimal control methods. However, when \(\alpha >0\), conventional optimal control methods are not applicable because the objective function (19) contains a non-standard sensitivity term. In the next subsection, we introduce an auxiliary dynamic system to compute the sensitivity term.
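The time-scaling step in Sect. 4.1 is an application of the chain rule. Writing the dynamics (1) compactly as \(\dot{x}(t)=f(x(t),\sigma )\), where \(f\) is notation introduced here for illustration, and setting \(\tilde{x}(\tau )=x(t_f\tau )\):

```latex
\begin{aligned}
\tilde{x}(\tau) &:= x(t_f\tau), \qquad \tau\in[0,1],\\
\frac{d\tilde{x}(\tau)}{d\tau} &= \dot{x}(t)\Big|_{t=t_f\tau}\,\frac{dt}{d\tau}
  = t_f\, f\big(\tilde{x}(\tau),\sigma\big),\\
\tilde{x}(0) &= x(0), \qquad \tilde{x}(1) = x(t_f).
\end{aligned}
```

The free horizon \([0,t_f]\) thus becomes the fixed interval \([0,1]\), and \(t_f\) appears only as a multiplicative factor in the right-hand side, so it can be optimized alongside \(x_{01}\) and \(x_{02}\) like any other parameter.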

4.2 Computing System Sensitivity

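Denote

$$\begin{aligned} \sigma =(\sigma _1,\sigma _2,\ldots ,\sigma _9)^{\top }=(k_2,m_2,m_3,m_4,m_5,Y_2,Y_3,Y_4,Y_5)^{\top }. \end{aligned}$$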
Thus, \(\sigma _1\) corresponds to \(k_2\), \(\sigma _2\) corresponds to \(m_2\), and so on. For each \(k=1,2,\ldots ,9,\) consider the following auxiliary dynamic system:

with the initial conditions

where \(\partial \tilde{\mu }/\partial \sigma _k\), \(\partial \tilde{\mu }/\partial x_j\), \(\partial \tilde{q}_i/\partial \sigma _k\), \(\partial \tilde{q}_i/ \partial x_j\) are defined in the obvious manner (explicit formulas for these derivatives are given in the appendix). Let \(\tilde{\psi }_i^k(\cdot |x_{01},x_{02},t_f,\sigma )\), \(i=1,2,3,4,5\), denote the solution of (20)–(21) corresponding to the control variables \(x_{01},x_{02}\), and \(t_f\) and the nominal parameter vector \(\sigma \in \mathbb {R}^9\).
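Informally, the auxiliary system is the forward-sensitivity (variational) equation of the scaled dynamics. Write (13) compactly as \(d\tilde{x}/d\tau =F(\tilde{x},\sigma )\), where \(F\) is notation introduced here for illustration, and set \(\tilde{\psi }^k=\partial \tilde{x}/\partial \sigma _k\). Assuming this derivative exists and the order of differentiation can be exchanged (which is what Theorem 4.1 justifies), differentiating both sides with respect to \(\sigma _k\) gives the first line below. The initial condition is zero because \(\tilde{x}(0)=(x_{01},\ldots ,x_{05})\) depends only on the control variables and the given values (8), not on \(\sigma \). The second line spells out the first component, assuming the first equation of (13) has the form \(d\tilde{x}_1/d\tau =t_f\tilde{\mu }\tilde{x}_1\); it shows where \(\partial \tilde{\mu }/\partial x_j\) and \(\partial \tilde{\mu }/\partial \sigma _k\) enter.

```latex
\begin{aligned}
\frac{d\tilde{\psi}^k(\tau)}{d\tau} &= \frac{\partial F}{\partial x}\big(\tilde{x}(\tau),\sigma\big)\,\tilde{\psi}^k(\tau)
  + \frac{\partial F}{\partial \sigma_k}\big(\tilde{x}(\tau),\sigma\big),
  \qquad \tilde{\psi}^k(0) = 0\in\mathbb{R}^5,\\
\frac{d\tilde{\psi}_1^k(\tau)}{d\tau} &= t_f\Big[\Big(\sum_{j=1}^{5}\frac{\partial \tilde{\mu}}{\partial x_j}\,\tilde{\psi}_j^k(\tau)
  + \frac{\partial \tilde{\mu}}{\partial \sigma_k}\Big)\tilde{x}_1(\tau) + \tilde{\mu}\,\tilde{\psi}_1^k(\tau)\Big].
\end{aligned}
```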

The following important result shows that the solution of the auxiliary system (20)–(21) gives the sensitivity of the state with respect to the model parameters.

Theorem 4.1

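Let \(x_{01}\), \(x_{02}\), \(t_f\), and \(\sigma \) be fixed. Furthermore, assume that there exists an open neighborhood containing \(\sigma \), and a corresponding constant \(L_1>0\), such that for all \(\sigma '\) in the neighborhood,

$$\begin{aligned} |\tilde{x}_i(\tau |x_{01},x_{02},t_f,\sigma ')|\le L_1,\quad \tau \in [0,1],\quad i=1,2,3,4,5. \end{aligned}$$

Then for each \(k=1,2,\ldots ,9,\)

$$\begin{aligned} \frac{\partial \tilde{x}_i(\tau |x_{01},x_{02},t_f,\sigma )}{\partial \sigma _k}=\tilde{\psi }_i^k(\tau |x_{01},x_{02},t_f,\sigma ),\quad \tau \in [0,1],\quad i=1,2,3,4,5. \end{aligned}$$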
Proof

Let \(k\in \{1,\ldots ,9\}\) be arbitrary but fixed. Furthermore, let \(e^k\) denote the \(k\)th unit basis vector in \(\mathbb {R}^{9}\), and let \(g\) denote the right-hand side of the dynamic system (13):

where

To prove the theorem, we need to show that

where \(\tilde{\psi }^k(\cdot )\) denotes the vector-valued solution of (20) and (21) with respect to \(x_{01}\), \(x_{02}\), \(t_f\), and \(\sigma \), and \(\tilde{x}^{\delta }(\cdot )\) denotes the vector-valued solution of (13) and (17) with respect to \(x_{01}\), \(x_{02}\), \(t_f\), and \(\sigma +\delta e^k\). That is,

We will prove equation (22) in four steps.

Step 1 Preliminaries

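For each real number \(\delta \in \mathbb {R}\), define a corresponding function \(v^{\delta }:[0,1]\rightarrow \mathbb {R}^5\) as follows:

$$\begin{aligned} v^{\delta }(\tau )=\tilde{x}^{\delta }(\tau )-\tilde{x}^{0}(\tau ),\quad \tau \in [0,1]. \end{aligned}$$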
Thus, using the definition of \(g\),

Since \(\tilde{x}^{\delta }\) and \(\tilde{x}^{0}\) are continuous, \(v^{\delta }\) is also continuous. It follows from the mean value theorem that, for all \(\tau \in [0,1]\),

where

According to the theorem hypothesis, there exists an open bounded neighborhood of \(0\), denoted by \(\Delta \), such that for all \(\delta \in \Delta \),

where \(B_{5}(\sqrt{5}L_1)\) denotes the closed ball in \(\mathbb {R}^5\) of radius \(\sqrt{5}L_1\) centered at the origin. Since \(B_{5}(\sqrt{5}L_1)\) is convex, for each \(\delta \in \Delta \), we have

Furthermore, it is obvious that there exists a constant \(L_2>0\) such that for each \(\delta \in \Delta \),

where \(B_{9}(L_2)\) denotes the closed ball in \(\mathbb {R}^9\) of radius \(L_2\) centered at the origin.

Clearly, from (25) and (26) and the definitions of \(\partial \tilde{\mu }/\partial \sigma _k\), \(\partial \tilde{\mu }/\partial x_j\), \(\partial \tilde{q}_i/\partial \sigma _k\), \(\partial \tilde{q}_i/ \partial x_j\) in the appendix, there exists a real number \(L_3>0\) such that for each \(\delta \in \Delta \),

and

where \(|\cdot |_{5}\) denotes the Euclidean norm in \(\mathbb {R}^5\) and \(|\cdot |_{5\times 5}\) denotes the corresponding induced matrix norm in \(\mathbb {R}^{5\times 5}\).

Step 2 The function \(v^\delta \) is of order \(\delta \)

Let \(\delta \in \Delta \) be arbitrary. Taking the norm of both sides of (24) and applying the definition of \(L_3\) gives

Thus, applying Gronwall’s Lemma gives

Since \(\delta \in \Delta \) was selected arbitrarily, this inequality holds whenever the magnitude of \(\delta \) is sufficiently small. Thus, the function \(v^\delta \) is of order \(\delta \), as required.
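The estimate in Step 2 can be traced in three lines. The display below uses the integral (vector-valued) form of the mean value theorem, the bounds defining \(L_3\), and Gronwall's Lemma in the form "if \(u(\tau )\le a+b\int _0^\tau u(s)\,ds\) on \([0,1]\) with \(a,b\ge 0\), then \(u(\tau )\le a e^{b\tau }\)". It is our reconstruction of the argument, consistent with the definitions of \(v^\delta \), \(\Delta \), and \(L_3\) above, rather than a copy of the omitted displays:

```latex
\begin{aligned}
v^{\delta}(\tau) &= \int_0^{\tau}\!\!\int_0^1 \Big[\frac{\partial g}{\partial x}\big(\tilde{x}^0(s)+\eta v^{\delta}(s),\sigma+\eta\delta e^k\big)\,v^{\delta}(s)
 + \frac{\partial g}{\partial \sigma_k}\big(\tilde{x}^0(s)+\eta v^{\delta}(s),\sigma+\eta\delta e^k\big)\,\delta\Big]\,d\eta\,ds,\\
|v^{\delta}(\tau)|_5 &\le L_3|\delta| + L_3\int_0^{\tau}|v^{\delta}(s)|_5\,ds, \qquad \tau\in[0,1],\\
|v^{\delta}(\tau)|_5 &\le L_3|\delta|\,e^{L_3\tau} \le L_3 e^{L_3}|\delta|.
\end{aligned}
```

The first line uses \(v^\delta (0)=0\) (both trajectories share the initial conditions), and the second uses \(\tau \le 1\) to bound \(\int _0^\tau L_3|\delta |\,ds\) by \(L_3|\delta |\).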

Step 3 Definition and limiting behavior of \(\rho _1\)

For each \(\delta \in \Delta \), define two corresponding functions \(\lambda ^{1,\delta }:[0,1]\rightarrow \mathbb {R}^5\) and \(\lambda ^{2,\delta }:[0,1]\rightarrow \mathbb {R}^5\) as follows:

and

Furthermore, define another function \(\rho _1:\Delta \setminus \{0\}\rightarrow \mathbb {R}\) as follows:

Now, clearly:

- \(\tilde{x}^0(\tau )+\eta v^\delta (\tau )\rightarrow \tilde{x}^0(\tau )\) as \(\delta \rightarrow 0\), uniformly with respect to \(\tau \in [0,1]\) and \(\eta \in [0,1]\);
- \(\sigma +\eta \delta e^k\rightarrow \sigma \) as \(\delta \rightarrow 0\), uniformly with respect to \(\eta \in [0,1]\).

Moreover, since these convergences take place inside the balls \(B_{5}(\sqrt{5}L_1)\) and \(B_{9}(L_2)\), respectively, and \(\partial g/\partial \sigma _k\) and \(\partial g/\partial x\) are uniformly continuous on the compact set \(B_5(\sqrt{5}L_1)\times B_{9}(L_2)\),

and

uniformly with respect to \(\tau \in [0,1]\) and \(\eta \in [0,1]\). These results, together with inequality (27), imply that \(\delta ^{-1}\lambda ^{1,\delta }\rightarrow 0\) and \(\delta ^{-1}\lambda ^{2,\delta }\rightarrow 0\) uniformly on \([0,1]\) as \(\delta \rightarrow 0\). Consequently,

Step 4 Comparing \(\delta ^{-1}v^\delta \) with \(\tilde{\psi }^k(\cdot |x_{01},x_{02},t_f,\sigma )\)

Now, we use the results proved in the previous steps to establish (22). First, let \(\delta \in \Delta \) be arbitrary but fixed. Using (24), we have

Furthermore, using the definition of \(g\), the vector-valued solution of the auxiliary system (20)–(21) is

Multiplying (29) by \(\delta ^{-1}\) and then subtracting (30) gives

Therefore,

By Gronwall’s Lemma,

Since \(\delta \in \Delta \) was selected arbitrarily, we can take the limit as \(\delta \rightarrow 0\) in the above inequality and apply (28) to establish

which proves equation (22), as required. \(\square \)
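Theorem 4.1 can also be checked numerically: integrate the scaled state together with \(\tilde{\psi }^k\) and compare \(\tilde{\psi }_3^k(1)\) with a central finite difference of \(\tilde{x}_3(1)\) in \(\sigma _k\). The Python sketch below reuses assumed kinetics (Monod growth with linear inhibition, \(q_2=m_2+\mu /Y_2\), \(q_i=m_i+\mu Y_i\)) and hypothetical placeholder constants as a stand-in for (13), and assumes \(x_{03}=x_{04}=x_{05}=0\). Unlike the paper, which uses the explicit derivative formulas of its appendix, it approximates \(\partial F/\partial x\) and \(\partial F/\partial \sigma _k\) pointwise by central differences for brevity.

```python
# Assumed kinetics and hypothetical placeholder constants standing in for (13).
MU_M = 0.6
X_CRIT = (2000.0, 1000.0, 1000.0, 400.0)
SIGMA_NOM = [0.5, 2.0, 1.0, 0.5, 0.2, 0.08, 60.0, 30.0, 10.0]  # (k2, m2..m5, Y2..Y5)


def F(x, sigma, tf):
    """Right-hand side of the time-scaled system: tf times the batch mass balances."""
    k2, m2, m3, m4, m5, y2, y3, y4, y5 = sigma
    inhibition = 1.0
    for xi, xc in zip(x[1:], X_CRIT):
        inhibition *= 1.0 - xi / xc
    mu = MU_M * x[1] / (x[1] + k2) * inhibition
    q = (m3 + mu * y3, m4 + mu * y4, m5 + mu * y5)
    return [tf * mu * x[0], -tf * (m2 + mu / y2) * x[0]] + [tf * qi * x[0] for qi in q]


def partials(x, sigma, tf, k, h=1e-6):
    """Central differences: columns of dF/dx (cols[j][i] = dF_i/dx_j) and dF/dsigma_k."""
    cols = []
    for j in range(5):
        step = h * max(1.0, abs(x[j]))
        xp, xm = list(x), list(x)
        xp[j] += step
        xm[j] -= step
        cols.append([(a - b) / (2 * step) for a, b in zip(F(xp, sigma, tf), F(xm, sigma, tf))])
    step = h * max(1.0, abs(sigma[k]))
    sp, sm = list(sigma), list(sigma)
    sp[k] += step
    sm[k] -= step
    return cols, [(a - b) / (2 * step) for a, b in zip(F(x, sp, tf), F(x, sm, tf))]


def augmented_rhs(z, sigma, tf, k):
    """z[:5] is the scaled state, z[5:] the auxiliary variable psi^k."""
    x, psi = z[:5], z[5:]
    cols, dsig = partials(x, sigma, tf, k)
    dpsi = [sum(cols[j][i] * psi[j] for j in range(5)) + dsig[i] for i in range(5)]
    return F(x, sigma, tf) + dpsi


def rk4(rhs, z, steps):
    """Classical RK4 over the scaled interval tau in [0, 1]."""
    h = 1.0 / steps
    for _ in range(steps):
        s1 = rhs(z)
        s2 = rhs([a + 0.5 * h * b for a, b in zip(z, s1)])
        s3 = rhs([a + 0.5 * h * b for a, b in zip(z, s2)])
        s4 = rhs([a + h * b for a, b in zip(z, s3)])
        z = [a + h / 6.0 * (b + 2.0 * c + 2.0 * d + e) for a, b, c, d, e in zip(z, s1, s2, s3, s4)]
    return z


def sensitivity_vs_fd(x01, x02, tf, k, steps=1000, rel=1e-4):
    """Return (psi_3^k(1), central-difference estimate of d x3(1) / d sigma_k)."""
    x0 = [x01, x02, 0.0, 0.0, 0.0]          # hypothetical x03 = x04 = x05 = 0
    z1 = rk4(lambda z: augmented_rhs(z, SIGMA_NOM, tf, k), x0 + [0.0] * 5, steps)

    def x3_at_1(sig):
        return rk4(lambda x: F(x, sig, tf), x0, steps)[2]

    d = rel * abs(SIGMA_NOM[k])
    sp, sm = list(SIGMA_NOM), list(SIGMA_NOM)
    sp[k] += d
    sm[k] -= d
    return z1[7], (x3_at_1(sp) - x3_at_1(sm)) / (2 * d)   # z1[7] = psi_3^k(1)


if __name__ == "__main__":
    for k in range(9):
        print(k + 1, *sensitivity_vs_fd(0.1, 500.0, 5.0, k, steps=400))
```

With a fixed step size, integrating the auxiliary system with the same Runge–Kutta scheme reproduces the derivative of the discrete state map (up to the differencing error in the Jacobians), so a large discrepancy between the two numbers would point to an error in the auxiliary equations rather than to discretization.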

According to Theorem 4.1, the state is differentiable with respect to the uncertain parameter vector \(\sigma \). Moreover, the partial derivative of the state with respect to \(\sigma \) satisfies the auxiliary system (20)–(21). We now use this result to derive a formula for the system sensitivity in Problem \(\tilde{\text {P}}_{\alpha }\).

Theorem 4.2

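Let \(x_{01}\), \(x_{02}\), \(t_f\), and \(\sigma \) be fixed. Furthermore, as in Theorem 4.1, assume that there exists an open neighborhood containing \(\sigma \) and a corresponding constant \(L_1>0\), such that for all \(\sigma '\) in the neighborhood,

$$\begin{aligned} |\tilde{x}_i(\tau |x_{01},x_{02},t_f,\sigma ')|\le L_1,\quad \tau \in [0,1],\quad i=1,2,3,4,5. \end{aligned}$$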
Then

Proof

By Theorem 4.1,

Thus, differentiating \(\tilde{G}(x_{01},x_{02},t_f|\sigma )\) with respect to \(\sigma _k\) yields

Consequently,

as required. \(\square \)

Theorem 4.2 shows that the system sensitivity can be computed by solving the auxiliary system (20)–(21). We will now use this result to convert Problem \(\tilde{\text {P}}_{\alpha }\) into a Mayer optimal control problem in which the objective only depends on the final state reached by the system.

4.3 Transformation into Mayer Form

By combining the state and auxiliary systems, we obtain the following expanded system of ordinary differential equations:

where \(k=1,2,\ldots ,9\), and \(\tilde{\mu }\) and \(\tilde{q}_i\) are defined by (15)–(16), with the initial conditions

According to Theorem 4.2, the objective function (19) can be expressed as follows:

This equation expresses \(\tilde{J}_\alpha (x_{01},x_{02},t_f|\sigma )\) in Mayer form as a function of the solution of the expanded system (31)–(32) at the terminal time. Thus, Problem \(\tilde{\text {P}}_{\alpha }\) is equivalent to the following optimal control problem in Mayer form.

Problem \(\tilde{\text {Q}}_{\alpha }\). Given the nominal parameter vector \(\sigma \in \mathbb {R}^{9}\), choose the initial concentration of biomass \(x_{01}\), the initial concentration of glycerol \(x_{02}\), and the process terminal time \(t_f\) to maximize (33) subject to the bound constraints (10).
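For bookkeeping, it helps to see the size of the expanded system. Stacking the state and the nine auxiliary trajectories gives a single vector \(z\); here \(\Phi _\alpha \) is our notation for the terminal function defined by (33), with the second argument covering a possible direct dependence on \(t_f\):

```latex
\begin{aligned}
z(\tau) &= \big(\tilde{x}(\tau)^{\top},\ \tilde{\psi}^{1}(\tau)^{\top},\ \ldots,\ \tilde{\psi}^{9}(\tau)^{\top}\big)^{\top}\in\mathbb{R}^{5+9\cdot 5}=\mathbb{R}^{50},\\
z(0) &= \big(x_{01},x_{02},x_{03},x_{04},x_{05},\underbrace{0,\ldots,0}_{45}\big)^{\top},\\
\tilde{J}_\alpha(x_{01},x_{02},t_f\,|\,\sigma) &= \Phi_\alpha\big(z(1),\,t_f\big).
\end{aligned}
```

One evaluation of \(\tilde{J}_\alpha \) therefore costs a single forward integration of 50 ordinary differential equations on \([0,1]\), with no backward (adjoint) pass; this is the work done for each particle in Step 4 of the PSO algorithm.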

In the next section, we introduce a particle swarm optimization algorithm to solve Problem \(\tilde{\text {Q}}_{\alpha }\).

5 Particle Swarm Optimization Algorithm

Because the expanded system (31)–(32) consists of complex nonlinear differential equations, Problem \(\tilde{\text {Q}}_{\alpha }\) is, in general, a non-convex dynamic optimization problem. Thus, gradient-based optimization algorithms applied to Problem \(\tilde{\text {Q}}_{\alpha }\) are likely to become trapped at local solutions. To overcome this difficulty, we introduce a particle swarm optimization (PSO) algorithm, similar to those described in [33–35], to solve Problem \(\tilde{\text {Q}}_{\alpha }\). The main idea of the PSO algorithm is to construct a “swarm” of particles in the feasible region defined by the box constraints (10). As the algorithm progresses, each particle updates its position using both the best position it has found so far (local information) and the best position found by the whole swarm (global information). Previous studies [36] have shown that the standard PSO algorithm converges quickly in its initial stages but slows down considerably near the optimal solution. Improved PSO algorithms were therefore proposed in [36, 37]; by significantly enhancing information exchange during the evolutionary process, they are less prone to getting trapped at local optima and thus to premature convergence. In this paper, we adapt the algorithm in [37] to solve Problem \(\tilde{\text {Q}}_{\alpha }\).

The parameters in the PSO algorithm are defined below.

- \(N\) is the total number of particles in the swarm.
- \(l\) is an integer used in the convergence test (if the best objective value has changed by no more than \(\tau \) over the last \(l\) iterations, then the algorithm terminates; see Step 8).
- \(c_1\) and \(c_2\) are the cognitive and social scaling parameters.
- \(w_{\min }\) and \(w_{\max }\) are the minimum and maximum inertia weights.
- \(V_{\min }\) and \(V_{\max }\) are vectors containing the minimum and maximum particle velocities.
- \(K_{\min }\) and \(K_{\max }\) are the minimum and maximum numbers of iterations.
- \(d_1\) and \(d_2\) are control factors.
- \(\tau \) is the convergence tolerance (not to be confused with the scaled time variable \(\tau \) in Sect. 4).

The following variables in the PSO algorithm are updated as the algorithm proceeds.

- \(w\) is the inertia weight.
- \(k\) is the iteration index.
- \(\tilde{J}_\alpha ^{n*}\) is the best objective value found by the \(n\)th individual particle.
- \((x_{01}^{n*},x_{02}^{n*},t_f^{n*})\) is the best control strategy found by the \(n\)th individual particle.
- \(\tilde{J}_\alpha ^{*}\) is the best objective value found by any member of the swarm.
- \((x_{01}^{*},x_{02}^{*},t_f^{*})\) is the best control strategy found by any member of the swarm.
- \(\tilde{\mathcal {J}}_{\alpha }^{*,k}\) is the value of \(\tilde{J}_\alpha ^{*}\) at the end of the \(k\)th iteration.

The detailed steps of the PSO algorithm are described below.

PSO Algorithm

- Step 1 Initialize the parameters \(N\), \(l\), \(c_1\), \(c_2\), \(d_1\), \(d_2\), \(\tau \), \(w_{\min }\), \(w_{\max }\), \(V_{\min }\), \(V_{\max }\), \(K_{\min }\), \(K_{\max }\).

- Step 2 Initialize the variables:

$$\begin{aligned} 1\rightarrow k, \quad \quad -\infty \rightarrow \tilde{J}_\alpha ^{n*}\ (n=1,\ldots ,N), \quad \quad -\infty \rightarrow \tilde{J}_\alpha ^{*}, \quad \quad -\infty \rightarrow \tilde{\mathcal {J}}_{\alpha }^{*,k}. \end{aligned}$$

- Step 3 According to the uniform distribution, randomly generate the positions of \(N\) particles in the rectangular region defined by constraints (10), and randomly generate the particle velocities in the rectangular region defined by \(V_{\min }\) and \(V_{\max }\). Let \((x_{01}^{n},x_{02}^n,t_f^n)\) denote the position of the \(n\)th particle, and let \((v^n_1, v^n_2, v^n_3)\) denote the velocity of the \(n\)th particle.

- Step 4 For each \(n=1,\ldots ,N,\) solve the expanded dynamic system (31)–(32) and calculate the corresponding objective value \(\tilde{J}_\alpha (x_{01}^n,x_{02}^n,t_f^n|\sigma )\) according to (33).

- Step 5 For each \(n=1,\ldots ,N\), if \(\tilde{J}_\alpha (x_{01}^n,x_{02}^n,t_f^n|\sigma )> \tilde{J}_\alpha ^{n*}\), then set \(\tilde{J}_\alpha (x_{01}^n,x_{02}^n,t_f^n|\sigma ) \rightarrow \tilde{J}_\alpha ^{n*}\), and \((x_{01}^n,x_{02}^n,t_f^n)\rightarrow (x_{01}^{n*},x_{02}^{n*},t_f^{n*})\).

- Step 6 For each \(n=1,\ldots ,N\), if \(\tilde{J}_\alpha (x_{01}^n,x_{02}^n,t_f^n|\sigma )> \tilde{J}_\alpha ^{*}\), then set \(\tilde{J}_\alpha (x_{01}^n,x_{02}^n,t_f^n|\sigma ) \rightarrow \tilde{J}_\alpha ^{*}\), and \((x_{01}^n,x_{02}^n,t_f^n)\rightarrow (x_{01}^{*},x_{02}^{*},t_f^{*})\).

- Step 7 Set \(\tilde{J}_\alpha ^{*}\rightarrow \tilde{\mathcal {J}}_{\alpha }^{*,k} \).

- Step 8 If \(k \ge K_{\max }\), or if \(k> K_{\min }\) and \(\mid \tilde{\mathcal {J}}_{\alpha }^{*,k}-\tilde{\mathcal {J}}_{\alpha }^{*,k-l}\mid \le \tau \), then stop. Otherwise, go to Step 9.

- Step 9 Update the inertia weight according to the following formula:

$$\begin{aligned} (w_{\max }-w_{\min }-d_1)\exp \Big \{\frac{1}{K_{\max }+d_2(k-1)}\Big \}\rightarrow w. \end{aligned}$$

- Step 10 For each \(n=1,2,\ldots ,N\), compute:

$$\begin{aligned} \hat{v}^{n}_{1}&= wv^{n}_1+c_1r'_{1}(x_{01}^{n*}-x_{01}^n)+c_2r''_{1}(x_{01}^{*}-x_{01}^n),\\ \hat{v}^{n}_{2}&= wv^{n}_2+c_1r'_{2}(x_{02}^{n*}-x_{02}^n)+c_2r''_{2}(x_{02}^{*}-x_{02}^n), \\ \hat{v}^{n}_{3}&= wv^{n}_3+c_1r'_{3}(t_{f}^{n*}-t_{f}^n)+c_2r''_{3}(t_{f}^{*}-t_{f}^n), \end{aligned}$$

where \(r'_{j}\in (0,1)\) and \(r''_{j}\in (0,1)\), \(j=1,2,3\), are random numbers.

- Step 11 For each \(n=1,2,\ldots ,N\), update the velocity of the \(n\)th particle according to the following formula:

$$\begin{aligned} v^{n}_{j}\leftarrow \left\{ \begin{array}{lll} V_{\min }^j, &{}&{} \text{ if } \ \hat{v}^{n}_{j}<V_{\min }^j,\\ \hat{v}^{n}_{j},&{}&{} \text{ if } \ \hat{v}^{n}_{j} \in [V_{\min }^j,V_{\max }^j],\\ V_{\max }^j,&{}&{} \text{ if } \ \hat{v}^{n}_{j}>V_{\max }^j, \end{array} \right. \end{aligned}$$

where \(V_{\min }^j\) and \(V_{\max }^j\) denote the \(j\)th components of \(V_{\min }\) and \(V_{\max }\), respectively.

- Step 12 For each \(n=1,2,\ldots ,N\), compute:

$$\begin{aligned} \hat{x}^{n}_{01}=x_{01}^n+v_{1}^{n}, \quad \quad \hat{x}^{n}_{02}=x_{02}^n+v_{2}^{n}, \quad \quad \hat{t}^{n}_{f}=t_{f}^n+v_{3}^{n}. \end{aligned}$$

- Step 13 For each \(n=1,2,\ldots ,N\), update the position of the \(n\)th particle according to the following formula:

$$\begin{aligned}&x^{n}_{01}\leftarrow \left\{ \begin{array}{lll} 0.01, &{}&{} \text{ if } \ \hat{x}^{n}_{01}<0.01,\\ \hat{x}^{n}_{01},&{}&{} \text{ if } \ \hat{x}^{n}_{01} \in [0.01, 1],\\ 1,&{}&{} \text{ if } \ \hat{x}^{n}_{01}>1, \end{array}\right. \qquad x^{n}_{02}\leftarrow \left\{ \begin{array}{lll} 200, &{}&{} \text{ if } \ \hat{x}^{n}_{02}<200,\\ \hat{x}^{n}_{02},&{}&{} \text{ if } \ \hat{x}^{n}_{02} \in [200, 1700],\\ 1700,&{}&{} \text{ if } \ \hat{x}^{n}_{02}>1700, \end{array} \right. \qquad \\&t^{n}_{f}\leftarrow \left\{ \begin{array}{lll} 2, &{}&{} \text{ if } \ \hat{t}^{n}_{f}<2,\\ \hat{t}^{n}_{f},&{}&{} \text{ if } \ \hat{t}^{n}_{f} \in [2, 10],\\ 10,&{}&{} \text{ if } \ \hat{t}^{n}_{f}>10. \end{array} \right. \end{aligned}$$

- Step 14 Set \(k+1\rightarrow k\) and return to Step 4.
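Steps 1–14 translate almost line by line into code. The Python sketch below follows them, including the inertia-weight formula of Step 9 exactly as printed. It is not the authors' FORTRAN program: the default parameter values are illustrative (the values used in Sect. 6 are not reproduced in the text), \(V_{\max }\) is assumed to be 20 % of each box width with \(V_{\min }=-V_{\max }\), the random factors come from `random.random()` on \([0,1)\) rather than the open interval \((0,1)\), and `toy_objective` is a hypothetical smooth stand-in for \(\tilde{J}_\alpha \), whose evaluation would require integrating (31)–(32).

```python
import math
import random


def pso_maximize(objective, lower, upper, n_particles=30, l=10, c1=1.5, c2=1.5,
                 w_min=0.4, w_max=0.9, d1=0.2, d2=7.0, k_min=50, k_max=300,
                 tol=1e-8, vel_frac=0.2, seed=0):
    """Maximize `objective` over the box [lower, upper] following Steps 1-14.

    All default parameter values are illustrative assumptions.
    Returns (best position, best value, iteration at which Step 8 stopped).
    """
    assert k_min >= l, "Step 8 looks back l iterations, so K_min >= l is required"
    rng = random.Random(seed)
    dim = len(lower)
    # Step 1 (assumed choice): V_max is a fraction of each box width, V_min = -V_max.
    v_max = [vel_frac * (hi - lo) for lo, hi in zip(lower, upper)]
    v_min = [-v for v in v_max]
    # Step 2
    pbest_val, pbest_pos = [-math.inf] * n_particles, [None] * n_particles
    gbest_val, gbest_pos = -math.inf, None
    history = {}
    # Step 3: uniformly distributed initial positions and velocities
    pos = [[rng.uniform(lower[j], upper[j]) for j in range(dim)] for _ in range(n_particles)]
    vel = [[rng.uniform(v_min[j], v_max[j]) for j in range(dim)] for _ in range(n_particles)]
    k = 1
    while True:
        for n in range(n_particles):
            val = objective(pos[n])                          # Step 4
            if val > pbest_val[n]:                           # Step 5
                pbest_val[n], pbest_pos[n] = val, list(pos[n])
            if val > gbest_val:                              # Step 6
                gbest_val, gbest_pos = val, list(pos[n])
        history[k] = gbest_val                               # Step 7
        if k >= k_max or (k > k_min and abs(history[k] - history[k - l]) <= tol):
            return gbest_pos, gbest_val, k                   # Step 8
        # Step 9: inertia weight, implemented exactly as printed
        w = (w_max - w_min - d1) * math.exp(1.0 / (k_max + d2 * (k - 1)))
        for n in range(n_particles):
            for j in range(dim):
                v_hat = (w * vel[n][j]
                         + c1 * rng.random() * (pbest_pos[n][j] - pos[n][j])
                         + c2 * rng.random() * (gbest_pos[j] - pos[n][j]))    # Step 10
                vel[n][j] = min(max(v_hat, v_min[j]), v_max[j])               # Step 11
                x_hat = pos[n][j] + vel[n][j]                                 # Step 12
                pos[n][j] = min(max(x_hat, lower[j]), upper[j])               # Step 13
        k += 1                                               # Step 14


def toy_objective(p):
    """Hypothetical smooth stand-in for J_alpha, maximized at (0.5, 1000, 6)."""
    x01, x02, tf = p
    return -(100.0 * (x01 - 0.5) ** 2 + 100.0 * ((x02 - 1000.0) / 1000.0) ** 2 + (tf - 6.0) ** 2)


if __name__ == "__main__":
    # Box constraints (10): 0.01 <= x01 <= 1, 200 <= x02 <= 1700, 2 <= tf <= 10
    best, value, iters = pso_maximize(toy_objective, [0.01, 200.0, 2.0], [1.0, 1700.0, 10.0])
    print(best, value, iters)
```

Handling each particle's velocity and position update in a single loop is equivalent to Steps 10–13, because the global best is not modified between those steps and each component is updated independently.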

6 Numerical Results

For the parameters in the PSO algorithm, we choose the following values:

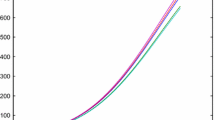

Using the PSO algorithm (implemented in FORTRAN), we solved Problem \(\tilde{\text {Q}}_{\alpha }\) for various values of \(\alpha \). The optimal control variables and optimal objective values generated by the PSO algorithm are listed in Table 1. The results in Table 1 show that, as the weight \(\alpha \) increases, the system sensitivity with respect to the uncertain parameters in \(\sigma \) decreases substantially, with little change to the optimal 1,3-PD yield. This suggests that the optimal control strategies for \(\alpha >0\) are far more robust than the optimal control strategy for \(\alpha =0\). The optimal state trajectories corresponding to the solutions in Table 1 are shown in Fig. 1. Note that our FORTRAN program for implementing PSO uses a sixth-order Runge–Kutta method to solve the expanded system (31)–(32).

To investigate the robustness properties of the solutions in Table 1, we randomly perturbed the parameter vector \(\sigma \) and calculated the corresponding 1,3-PD yield (as measured by \(\tilde{G}\)) for each optimal control strategy in Table 1. It turns out that the 6th component of \(\sigma \), i.e., \(Y_2\), is the most critical parameter in terms of process sensitivity: \(\partial \tilde{G}/\partial \sigma _6=\partial \tilde{G}/\partial Y_2\) is the dominant term in the sensitivity values in Table 1. Accordingly, in our simulations, we generated the perturbed parameter vectors as follows: for each \(k\ne 6\), we perturbed \(\sigma _k\) by \(1~\%\) (in the negative direction); for \(k=6\), we perturbed \(\sigma _6\) by a random percentage from the intervals

where the upper limit of each interval is referred to as the “disturbance percentage”. For each disturbance percentage \((5~\%,10~\%,\ldots ,50~\%),\) we generated 1,000 random parameter vectors according to the above procedure and calculated the corresponding value of \(\tilde{G}\) under the optimal control strategies for \(\alpha =0\) and \(\alpha =1\). Our results are shown as box plots in Fig. 2. Note that, as expected, the results for \(\alpha =1\) show far less variation in the 1,3-PD yield than the results for \(\alpha =0\). Thus, the optimal control strategy for \(\alpha =1\) gives more robust performance, at minimal cost to the final 1,3-PD yield.

For our next set of simulations, we varied the disturbance percentage from \(0.1~\%\) to \(50~\%\) in increments of \(0.1~\%\). For each disturbance percentage, a single perturbed parameter vector was generated as follows: \(\sigma _6\) was perturbed by the given disturbance percentage (in the negative direction), and the other parameters were perturbed by \(1~\%\) (in the negative direction). For each perturbed parameter vector, the value of \(\tilde{G}\) under the optimal control schemes for \(\alpha =0\) and \(\alpha =1\) was computed. The results are plotted in Fig. 3. Again, as expected, \(\alpha =1\) gives more robust results than \(\alpha =0\), especially for large values of the disturbance percentage.
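The deterministic sweep behind Fig. 3 is easy to reproduce. The Python sketch below reads “perturbed by \(p\) in the negative direction” as multiplying the component by \(1-p\) (an assumption about the convention), uses 0-based index 5 for \(\sigma _6=Y_2\), and builds the 500 perturbed vectors for \(p=0.1~\%,0.2~\%,\ldots ,50~\%\). Each vector would then be passed to a solver of (13) and (17) to evaluate \(\tilde{G}\) under the \(\alpha =0\) and \(\alpha =1\) strategies; `placeholder_sigma` is hypothetical and should be replaced by the nominal values (7).

```python
def perturbed_sigma(sigma, p6, p_others=0.01, critical_index=5):
    """Perturb every component in the negative direction.

    Assumed convention: "perturbed by p" means multiplied by (1 - p).
    critical_index = 5 is sigma_6 = Y2 in 0-based indexing.
    """
    return [s * (1.0 - (p6 if k == critical_index else p_others))
            for k, s in enumerate(sigma)]


def disturbance_sweep(sigma, max_tenths=500):
    """(p, perturbed sigma) for p = 0.1 %, 0.2 %, ..., max_tenths / 10 %."""
    # p = i / 1000 avoids the drift of repeatedly adding 0.001
    return [(i / 1000, perturbed_sigma(sigma, i / 1000)) for i in range(1, max_tenths + 1)]


if __name__ == "__main__":
    placeholder_sigma = [1.0] * 9   # hypothetical; substitute the nominal values (7)
    sweep = disturbance_sweep(placeholder_sigma)
    print(len(sweep), sweep[-1])
```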

7 Conclusions

This paper introduces a nonlinear dynamic system with uncertain parameters to describe the batch fermentation process for producing 1,3-PD. To maximize the productivity of the process, we propose an optimization model in which the objective function measures the final yield of 1,3-PD. In practice, the parameters in the dynamic model are not known exactly and thus need to be estimated, and there is inevitably an error between the estimated values and the true values. We therefore augment the optimization model with a non-standard sensitivity term, which penalizes deviations in the 1,3-PD yield with respect to parameter changes. A computational method, based on the time-scaling transformation, sensitivity analysis, and particle swarm optimization, was developed for solving the non-standard optimization model. The numerical results in Sect. 6 show that the method succeeds in producing robust control strategies that achieve good 1,3-PD yields while keeping the sensitivity with respect to parameter changes low. Future work will involve investigating the theoretical properties of the cost function (33) to develop tailored optimization procedures, which could accelerate numerical convergence.

References

Biebl, H., Menzel, K., Zeng, A.P., Deckwer, W.D.: Microbial production of 1,3-propanediol. Appl. Microbiol. Biot. 52, 289–297 (1999)

Günzel, B.: Mikrobielle Herstellung von 1,3-Propandiol durch Clostridium butyricum und adsorptive Abtrennung von Diolen. Ph.D. Dissertation, TU Braunschweig, Germany (1991)

Sun, Y.Q., Qi, W.T., Teng, H., Xiu, Z.L., Zeng, A.P.: Mathematical modeling of glycerol fermentation by Klebsiella pneumoniae: concerning enzyme-catalytic reductive pathway and transport of glycerol and 1,3-propanediol across cell membrane. Biochem. Eng. J. 38, 22–32 (2008)

Gao, C.X., Wang, Z.T., Feng, E.M., Xiu, Z.L.: Parameter identification and optimization of process for bio-dissimilation of glycerol to 1,3-propanediol in batch culture. J. Dalian Univ. Technol. 46, 771–774 (2006)

Li, X.H., Feng, E.M., Xiu, Z.L.: Optimal control and property of nonlinear dynamic system for microorganism in batch culture. Oper. Res. Trans. 9, 89–96 (2005)

Wang, L., Feng, E.M., Ye, J.X., Xiu, Z.L.: An improved model for multistage simulation of glycerol fermentation in batch culture and its parameter identification. Nonlinear Anal. Hybrid Syst. 3, 455–462 (2009)

Zeng, A.P., Rose, A., Biebl, H.: Multiple product inhibition and growth modeling of Clostridium butyricum and Klebsiella pneumoniae in fermentation. Biotechnol. Bioeng. 44, 902–911 (1994)

Zeng, A.P., Biebl, H.: Bulk-chemicals from biotechnology: the case of microbial production of 1,3-propanediol and the new trends. Adv. Biochem. Eng. Biot. 74, 237–433 (2002)

Gong, Z.H., Liu, C.Y., Feng, E.M., Zhang, Q.R.: Computational method for inferring objective function of glycerol metabolism in Klebsiella pneumoniae. Comput. Biol. Chem. 33, 1–6 (2008)

Zhang, Q.R., Xiu, Z.L.: Metabolic pathway analysis of glycerol metabolism in Klebsiella pneumoniae incorporating oxygen regulatory system. Biotechnol. Progr. 25, 103–115 (2009)

Wang, L.: Determining the transport mechanism of an enzyme-catalytic complex metabolic network based on biological robustness. Bioproc. Biosyst. Eng. 36, 433–441 (2013)

Yuan, J.L., Zhang, X., Zhu, X., Feng, E.M., Xiu, Z.L., Yin, H.C.: Modeling and pathway identification involving the transport mechanism of a complex metabolic system in batch culture. Commun. Nonlinear Sci. Numer. Simul. 19, 2088–2103 (2014)

Yuan, J.L., Zhu, X., Zhang, X., Feng, E.M., Xiu, Z.L., Yin, H.C.: Robust identification of enzymatic nonlinear dynamical systems for 1,3-propanediol transport mechanisms in microbial batch culture. Appl. Math. Comput. 232, 150–163 (2014)

Wang, J., Sun, Q.Y., Feng, E.M.: Modeling and properties of a nonlinear autonomous switching system in fed-batch culture of glycerol. Commun. Nonlinear Sci. Numer. Simul. 17, 4446–4454 (2012)

Zeng, A.P., Deckwer, W.D.: A kinetic model for substrate and energy consumption of microbial growth under substrate-sufficient conditions. Biotechnol. Progr. 11, 71–79 (1995)

Gao, C.X., Feng, E.M., Wang, Z.T., Xiu, Z.L.: Parameters identification problem of the nonlinear dynamical system in microbial continuous cultures. Appl. Math. Comput. 169, 476–484 (2005)

Xiu, Z.L., Zeng, A.P., An, L.J.: Mathematical modeling of kinetics and research on multiplicity of glycerol bioconversion to 1,3-propanediol. J. Dalian Univ. Technol. 40, 428–433 (2000)

Liu, C.Y.: Sensitivity analysis and parameter identification for a nonlinear time-delay system in microbial fed-batch process. Appl. Math. Model. 38, 1449–1463 (2014)

Liu, C.Y.: Modeling and parameter identification for a nonlinear time-delay system in microbial batch fermentation. Appl. Math. Model. 37, 6899–6908 (2013)

Wang, G., Feng, E.M., Xiu, Z.L.: Vector measure for explicit nonlinear impulsive system of glycerol bioconversion in fed-batch cultures and its parameter identification. Appl. Math. Comput. 188, 1151–1160 (2007)

Rehbock, V., Teo, K.L., Jennings, L.S.: A computational procedure for suboptimal robust controls. Dynam. Control 2, 331–348 (1992)

Wei, W., Teo, K.L., Zhan, Z.: A numerical method for an optimal control problem with minimum sensitivity on coefficient variation. Appl. Math. Comput. 218, 1180–1190 (2011)

Loxton, R.C., Teo, K.L., Rehbock, V.: Robust suboptimal control of nonlinear systems. Appl. Math. Comput. 217, 6566–6576 (2011)

Loxton, R.C., Teo, K.L., Rehbock, V., Yiu, K.F.C.: Optimal control problems with a continuous inequality constraint on the state and the control. Autom. J. IFAC 45, 2250–2257 (2009)

Loxton, R.C., Teo, K.L., Rehbock, V., Ling, W.K.: Optimal switching instants for a switched-capacitor DC/DC power converter. Autom. J. IFAC 45, 973–980 (2009)

Loxton, R.C., Teo, K.L., Rehbock, V.: Optimal control problems with multiple characteristic time points in the objective and constraints. Autom. J. IFAC 44, 2923–2929 (2008)

Gao, C.X., Wang, Z.T., Feng, E.M., Xiu, Z.L.: Nonlinear dynamical systems of bio-dissimilation of glycerol to 1,3-propanediol and their optimal controls. J. Ind. Manag. Optim. 1, 377–388 (2005)

Wang, L., Feng, E.M., Xiu, Z.L.: Modeling nonlinear stochastic kinetic system and stochastic optimal control of microbial bioconversion process in batch culture. Nonlinear Anal. Model. Control 18, 99–111 (2013)

Chai, Q., Loxton, R., Teo, K.L., Yang, C.: A unified parameter identification method for nonlinear time-delay systems. J. Ind. Manag. Optim. 9, 471–486 (2013)

Lin, Q., Loxton, R., Teo, K.L.: The control parameterization method for nonlinear optimal control: a survey. J. Ind. Manag. Optim. 10, 275–309 (2014)

Liu, C.Y., Loxton, R., Teo, K.L.: Optimal parameter selection for nonlinear multistage systems with time-delays. Comput. Optim. Appl. (2014). doi:10.1007/s10589-013-9632-x

Lin, Q., Loxton, R.C., Xu, C., Teo, K.L.: State-delay estimation for nonlinear systems using inexact output data. In: Proceedings of the Chinese Control Conference, July 2014

Gao, W.F., Liu, S.Y., Huang, L.L.: Particle swarm optimization with chaotic opposition-based population initialization and stochastic search technique. Commun. Nonlinear Sci. Numer. Simul. 17, 4316–4327 (2012)

Gandomi, A.H., Yun, G.J., Yang, X.S., Talatahari, S.: Chaos-enhanced accelerated particle swarm optimization. Commun. Nonlinear Sci. Numer. Simul. 18, 327–340 (2013)

Zhang, J., Zhang, C., Chu, T., Perc, M.: Resolution of the stochastic strategy spatial prisoner’s dilemma by means of particle swarm optimization. PLoS One 6, e21787 (2011)

Ma, G., Zhou, W., Chang, X.L.: A novel particle swarm optimization algorithm based on particle migration. Appl. Math. Comput. 218, 6620–6626 (2012)

Kennedy, J., Eberhart, R.: Particle swarm optimization. In: Proceedings of the IEEE International Conference on Neural Networks, Nov–Dec 1995

Acknowledgments

This work was supported by the National Natural Science Foundation of China (Grant Nos. 11171050, 11101262, and 11371164) and the National Natural Science Foundation for the Youth of China (Grant Nos. 11301081, 11401073).

Additional information

Communicated by Mimmo Iannelli.

Appendix

The explicit formulas for the derivatives of \(\tilde{\mu }\) in (20)–(21) are given below.

The explicit formulas for the derivatives of \(\tilde{q}_2\) in (20)–(21) are given below.

The explicit formulas for the derivatives of \(\tilde{q}_i\), \(i=3,4,5\), in (20)–(21) are given below.

Cite this article

Cheng, G., Wang, L., Loxton, R. et al. Robust Optimal Control of a Microbial Batch Culture Process. J Optim Theory Appl 167, 342–362 (2015). https://doi.org/10.1007/s10957-014-0654-z
