Abstract

Copositive programming (CP) can be regarded as a special instance of linear semi-infinite programming (SIP). We study CP from the viewpoint of SIP and discuss optimality and duality results. Different approximation schemes for solving CP are interpreted as discretization schemes in SIP. This leads to sharp explicit error bounds for the values and solutions in dependence on the mesh size. Examples illustrate the structure of the original program and the approximation schemes.


1 Introduction

In recent years, copositive programming has attracted much attention because many difficult (NP-hard) quadratic and integer programs can be reformulated equivalently as CP (see, e.g., [1–3]). In particular, this implies that copositive programming is itself NP-hard.

However, CP represents a specially structured convex program. So, the hope is that this structure allows the construction of new methods for approximately solving integer programs.

CP can also be regarded as a special instance of linear semi-infinite programming (SIP). The aim of this paper is to take advantage of this relation.

The article is organized as follows. Section 2 provides an introduction to copositive programming and linear semi-infinite optimization. In Sect. 3, first-order optimality conditions and duality results of SIP are applied to CP, leading to known results but also to new insight. In Sect. 4, we reinterpret approximation schemes for solving CP (in [1, 4, 5]) as discretization methods in SIP. This leads to new explicit error bounds between the approximate and the original problem. For the schemes in [5], the approximation errors for the feasible sets and the value functions are shown to behave like \({\mathcal{O}}(d^{2})\) as the mesh-size d goes to zero. Some examples compare the structure of the original program with that of the approximation schemes.

In Sect. 5, it is proven that for another scheme (in [1]) the (sharp) order of convergence is \({\mathcal{O}}(d)\). Section 6 gives error bounds for the maximizers in dependence on the order of the maximizer of the original program. We also show by examples that maximizers of any order can occur in copositive programming. Section 7 comments briefly on the fact that the results can easily be extended from copositive to set-copositive programming.

2 Preliminaries

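In this paper, we consider the pair of primal/dual copositive programs (CP):

$$ (P)\quad \max_{x \in \mathbb{R}^{n}} c^{T}x \quad \text{s.t.}\quad B - \sum_{i=1}^{n} x_{i}A_{i} \in {\mathcal {C}}_{m}, \qquad (D)\quad \min_{Y \in \mathcal{S}_{m}} \langle Y,B\rangle \quad \text{s.t.}\quad \langle Y,A_{i}\rangle = c_{i}\ (i=1,\ldots,n),\ Y \in {\mathcal {C}}^{*}_{m}, $$

with \(c \in \mathbb{R}^{n}\) and \(A_{i}, B \in \mathcal{S}_{m}\). Here \(\mathcal{S}_{m}\) denotes the set of symmetric m×m-matrices, and \(\langle Y,B\rangle = \sum_{ij} y_{ij} b_{ij}\) the inner product of \(Y=(y_{ij})\) and \(B=(b_{ij})\). We assume throughout that the matrices \(A_{i}\) (i=1,…,n) are linearly independent. \({\mathcal {C}}_{m}\) denotes the cone of copositive matrices, and \({\mathcal {C}}^{*}_{m}\) its dual, the cone of completely positive matrices:

$$ {\mathcal {C}}_{m} := \{ A \in \mathcal{S}_{m} : z^{T}Az \geq 0 \ \forall z \in \mathbb{R}^{m}_{+} \}, \qquad {\mathcal {C}}^{*}_{m} := \Bigl\{ A \in \mathcal{S}_{m} : A = \sum_{j=1}^{k} v_{j}v_{j}^{T} \text{ with } v_{j} \in \mathbb{R}^{m}_{+},\ k \in \mathbb{N} \Bigr\}. $$

In this definition, \(\mathbb{R}^{m}_{+}\) denotes the non-negative orthant. It will be convenient to use the fact [6] that the interior \(\operatorname{int} {\mathcal {C}}_{m}\) of the cone \({\mathcal {C}}_{m}\) is the cone of so-called strictly copositive matrices:

$$ \operatorname{int} {\mathcal {C}}_{m} = \{ A \in \mathcal{S}_{m} : z^{T}Az > 0 \ \forall z \in \mathbb{R}^{m}_{+} \setminus \{0\} \}. \tag{1} $$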

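For a characterization of the interior of \({\mathcal {C}}^{*}_{m}\) we refer to [7, 8]. Linear semi-infinite programs are of the form:

$$ (\mathit{SIP}_{P})\quad \max_{x \in \mathbb{R}^{n}} c^{T}x \quad \text{s.t.}\quad b(z) - a(z)^{T}x \geq 0 \quad \forall z \in Z, $$

with an (infinite) compact index set \(Z \subset \mathbb{R}^{m}\) and continuous functions \(a: Z \to \mathbb{R}^{n}\) and \(b:Z \to \mathbb{R}\). The (Haar-) dual reads:

$$ (\mathit{SIP}_{D})\quad \min_{y_{z}} \sum_{z \in Z} y_{z}\, b(z) \quad \text{s.t.}\quad \sum_{z \in Z} y_{z}\, a(z) = c,\quad y_{z} \geq 0, $$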

where the min is taken over all finite sums. For an introduction to (linear) SIP we refer, e.g., to [9, 10]. Note that the condition \(A \in {\mathcal {C}}_{m}\) can be equivalently expressed by either of the conditions:

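In view of this relation, the primal CP can be written as a SIP with

$$ a_{i}(z) := z^{T}A_{i}z \ (i=1,\ldots,n), \qquad b(z) := z^{T}Bz. \tag{2} $$

In this paper, we always take \(Z=\varDelta_{m} := \{ z \in \mathbb{R}^{m}_{+} : e^{T}z = 1 \}\), the unit simplex (with e the all-ones vector), and defining \(F(x):= (B - \sum_{i=1}^{n} x_{i} A_{i} )\), we write the copositive primal problem (P) in SIP form:

$$ (P)\quad \max_{x \in \mathbb{R}^{n}} c^{T}x \quad \text{s.t.}\quad z^{T}F(x)z \geq 0 \quad \forall z \in Z. \tag{3} $$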

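In view of (2), the feasibility condition for \((\mathit{SIP}_{D})\) becomes

$$ c_{i} = \sum_{z \in Z} y_{z}\, z^{T}A_{i}z = \Bigl\langle \sum_{z \in Z} y_{z}\, zz^{T}, A_{i} \Bigr\rangle, \quad i=1,\ldots,n, $$

and with \(Y:= \sum_{z \in Z} y_{z} zz^{T} \in {\mathcal {C}}_{m}^{*}\) this coincides with the feasibility condition \(c_{i} = \langle Y,A_{i}\rangle\) (i=1,…,n) of (D). Moreover,

$$ \sum_{z \in Z} y_{z}\, b(z) = \sum_{z \in Z} y_{z}\, z^{T}Bz = \langle Y,B\rangle. $$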

So, the dual (SIP D ) of (P) in SIP form (3) is equivalent to the CP dual (D) and we simply denote both versions by (D).
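The translation between the SIP data and the conic data can be checked numerically. The following is a minimal sketch in plain Python; the matrices, simplex points and weights are hypothetical illustration data, and the helper names `quad`, `inner` and `dual_matrix` are not taken from the paper. It verifies that \(\sum_{z} y_{z} z^{T}A_{i}z = \langle Y,A_{i}\rangle\) and \(\sum_{z} y_{z} z^{T}Bz = \langle Y,B\rangle\) for \(Y = \sum_{z} y_{z} zz^{T}\).

```python
# Sketch: SIP data a_i(z) = z^T A_i z, b(z) = z^T B z versus the conic data <Y, A_i>, <Y, B>.
# All numbers below are hypothetical illustration data.

def quad(M, z):
    """Return the quadratic form z^T M z for a square matrix given as nested lists."""
    n = len(z)
    return sum(z[i] * M[i][j] * z[j] for i in range(n) for j in range(n))

def inner(X, Y):
    """Return the inner product <X, Y> = sum_ij x_ij * y_ij."""
    n = len(X)
    return sum(X[i][j] * Y[i][j] for i in range(n) for j in range(n))

def dual_matrix(points, weights):
    """Return Y = sum_j y_j z_j z_j^T for points z_j and weights y_j >= 0."""
    m = len(points[0])
    Y = [[0.0] * m for _ in range(m)]
    for z, y in zip(points, weights):
        for i in range(m):
            for j in range(m):
                Y[i][j] += y * z[i] * z[j]
    return Y

A = [[[2.0, 1.0], [1.0, 0.0]], [[0.0, -1.0], [-1.0, 3.0]]]  # A_1, A_2
B = [[1.0, 0.5], [0.5, 2.0]]
points = [[1.0, 0.0], [0.25, 0.75], [0.5, 0.5]]  # finitely many z in the unit simplex
weights = [0.5, 2.0, 1.5]                         # multipliers y_z >= 0

Y = dual_matrix(points, weights)
sip_constraints = [sum(y * quad(Ai, z) for z, y in zip(points, weights)) for Ai in A]
conic_constraints = [inner(Y, Ai) for Ai in A]
sip_objective = sum(y * quad(B, z) for z, y in zip(points, weights))
conic_objective = inner(Y, B)
```

Both pairs of quantities agree up to rounding, which is the identity behind the equivalence of the two dual formulations.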

3 Optimality Conditions and Duality for CP via SIP

From the SIP form of CP it follows that any result for linear SIP can directly be translated to CP. We will do this for some optimality conditions and duality results.

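Optimality conditions for SIP are usually presented in terms of Karush–Kuhn–Tucker (KKT) conditions for a feasible candidate maximizer \(\bar {x}\). Denoting the active index-set at a point \(\bar {x}\) by \(Z(\bar {x}) := \{ z \in Z : z^{T} F(\bar {x}) z = 0 \}\) and the Lagrange multipliers by \(y_{j}\), the KKT conditions for the general linear semi-infinite problem \((\mathit{SIP}_{P})\) read:

$$ c = \sum_{j=1}^{k} y_{j}\, a(z_{j}) \quad \text{with } z_{j} \in Z(\bar {x}),\ y_{j} \geq 0,\ j=1,\ldots,k. \tag{4} $$

Using (2), for the copositive problem in SIP-form (3), this condition translates to

$$ c_{i} = \sum_{j=1}^{k} y_{j}\, z_{j}^{T}A_{i}z_{j} = \Bigl\langle \sum_{j=1}^{k} y_{j}\, z_{j}z_{j}^{T}, A_{i} \Bigr\rangle, \quad i=1,\ldots,n. $$

It is important to note that any solution of the KKT system with feasible \(\bar {x}\) automatically yields a minimizer \(\overline {Y}\) of the dual program (D):

$$ \overline {Y} := \sum_{j=1}^{k} y_{j}\, z_{j}z_{j}^{T}. \tag{5} $$

Observe that, by Carathéodory’s Lemma for cones (see, e.g., [11]), we can assume that

$$ k \leq n. \tag{6} $$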

This implies that the dual minimizer \(\overline {Y}\) allows a representation (5) with k≤n, i.e., \(\overline {Y}\in {\mathcal {C}}_{m}^{*}\) has CP-rank ≤n. The CP-rank of a matrix \(Y \in {\mathcal {C}}_{m}^{*}\) is defined as the smallest number r possible in a factorization \(Y = \sum_{j=1}^{r} v_{j} v_{j}^{T}\) with \(v_{j} \in \mathbb{R}^{m}_{+}\). Determining the CP-rank of an arbitrary matrix in \({\mathcal {C}}^{*}_{m}\) is an open problem, see [6].

Before applying the standard results of SIP to copositive programming, we have to translate the primal/dual constraint qualification (Slater condition) from SIP to the copositive terminology.

Lemma 3.1

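Consider the copositive problem in its SIP-formulation (3). The primal SIP constraint qualification

$$ (\mathit{CQ}_{P})\quad z^{T}F(x_{0})z > 0 \quad \text{for all } z \in Z $$

is satisfied for \(x_{0} \in \mathbb{R}^{n}\) if and only if \(F(x_{0}) \in \operatorname{int} {\mathcal {C}}_{m}\). The dual SIP constraint qualification

$$ (\mathit{CQ}_{D})\quad c \in \operatorname{int} M, \quad \text{where } M := \operatorname{cone} \{ a(z) : z \in Z \}, $$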

holds if and only if there exists Y 0 feasible for (D) such that \(Y_{0} \in \operatorname{int} {\mathcal {C}}_{m}^{*}\).

Proof

The fact that (CQ P ) implies \(F(x_{0}) \in \operatorname{int} {\mathcal {C}}_{m}\) follows immediately from (1).

For the converse we use the (Frobenius) matrix-norm \(\|A\| = (\sum_{ij} a_{ij}^{2} )^{1/2}\). Let \(F(x_{0}) \in \operatorname{int} {\mathcal {C}}_{m}\). Then there exists ε>0 such that \(F \in {\mathcal {C}}_{m}\) for all F with \(\|F-F(x_{0})\| \leq \varepsilon\). Define \(F := F(x_{0}) - \frac{\varepsilon}{\sqrt{m}} I\). Then \(\|F-F(x_{0})\| \leq \varepsilon\) and thus \(F \in {\mathcal {C}}_{m}\). Consequently,

$$ 0 \leq z^{T}Fz = z^{T}F(x_{0})z - \frac{\varepsilon}{\sqrt{m}}\, z^{T}z \quad \text{for all } z \in Z. $$

Using \(z^{T} z \geq \frac{1}{m}\) for z∈Z, we obtain \(z^{T} F(x_{0}) z \geq \frac{\varepsilon}{\sqrt{m}m} =: \sigma_{0} > 0\) for all z∈Z.

To prove the equivalence of the dual constraint qualifications we define the mapping \(c(Y):=(\langle A_{1},Y\rangle,\ldots,\langle A_{n},Y\rangle)^{T}\). We first show that

$$ M = \{ c(Y) : Y \in {\mathcal {C}}^{*}_{m} \}. \tag{8} $$

To see this, note that \(Y \in {\mathcal {C}}^{*}_{m}\) has a rank-one representation \(Y = \sum_{j=1}^{k} v_{j} v_{j}^{T}\) with \(0 \neq v_{j} \geq 0\) for all j=1,…,k. Define \(z_{j} := v_{j}/(v_{j}^{T}e)\) to obtain \(z_{j} \in Z\), and \(y_{j} := (v_{j}^{T} e )^{2} > 0\). Then \(Y = \sum_{j=1}^{k} y_{j} z_{j} z_{j}^{T}\). Therefore we get

$$ c(Y) = \sum_{j=1}^{k} y_{j}\, c(z_{j}z_{j}^{T}) = \sum_{j=1}^{k} y_{j}\, a(z_{j}) \in M, $$

and (8) is proved. Now let \(Y_{0} \in \operatorname{int} {\mathcal {C}}^{*}_{m}\) be feasible for (D), i.e., \(c(Y_{0})=c\). To prove \(c \in \operatorname{int} M\) we assert that there exists some ε>0 such that, for any \(\gamma \in \mathbb{R}\), |γ|<ε, the relation

$$ c + \gamma e_{k} \in M, \quad k=1,\ldots,n, \tag{9} $$

holds, where \(e_{k}\) denotes the k-th unit vector in \(\mathbb{R}^{n}\).

To show this we note that, since the \(A_{i}\)’s are linearly independent, for any k the linear system \(c(Y)=(\langle A_{1},Y\rangle,\ldots,\langle A_{n},Y\rangle)^{T}=e_{k}\) has a solution \(\widetilde{Y}_{k} \in {\mathcal {S}}_{m}\). Since \(Y_{0} \in \operatorname{int} {\mathcal {C}}^{*}_{m}\), there exists some ε>0 such that for all γ, |γ|<ε:

$$ Y_{k} := Y_{0} + \gamma \widetilde{Y}_{k} \in {\mathcal {C}}^{*}_{m}, \quad k=1,\ldots,n. $$

Using (8) and \(c(Y_{0})=c\) we get \(M \ni c(Y_{k}) = c(Y_{0}) + \gamma c(\widetilde{Y}_{k}) = c + \gamma e_{k}\), which proves (9).

We finally show that \((\mathit{CQ}_{D})\) yields some \(Y_{0}\) feasible for (D) with \(Y_{0} \in \operatorname{int} {\mathcal {C}}_{m}^{*}\). To do so, choose any \(Y_{*} \in \operatorname{int} {\mathcal {C}}^{*}_{m}\) and define \(b:=c(Y_{*})=(\langle A_{1},Y_{*}\rangle,\ldots,\langle A_{n},Y_{*}\rangle)^{T}\). Since \(c \in \operatorname{int} M\), we have for some ε>0 that \(c-\varepsilon b \in M\), which means that for some \(y_{j} \geq 0\), \(z_{j} \in Z\) (j=1,…,k) we have

$$ c - \varepsilon b = \sum_{j=1}^{k} y_{j}\, a(z_{j}). $$

Defining \(Y:= \sum_{j=1}^{k} y_{j} z_{j}z_{j}^{T} \in {\mathcal {C}}^{*}_{m}\), we find that c(Y)=c−εb by construction. Next, define Y 0:=Y+εY ∗. Then \(Y_{0} \in \operatorname{int} {\mathcal {C}}^{*}_{m}\) because \(Y \in {\mathcal {C}}^{*}_{m}, Y_{*} \in \operatorname{int} {\mathcal {C}}^{*}_{m}\) and \({\mathcal {C}}^{*}_{m}\) is a convex cone. Moreover, c(Y 0)=c(Y)+εc(Y ∗)=c−εb+εb=c, which means that Y 0 is feasible for (D). This completes the proof. □
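The rescaling step used in this proof, which turns an arbitrary completely positive factorization into one supported on the simplex, is easy to reproduce. The sketch below is a plain-Python illustration with hypothetical factors \(v_{j}\); the function names are assumptions made for this example. It maps each \(v_{j}\) to \(z_{j} = v_{j}/(v_{j}^{T}e)\) and \(y_{j} = (v_{j}^{T}e)^{2}\) and confirms that the matrix is unchanged.

```python
# Sketch: rescale a factorization Y = sum_j v_j v_j^T with v_j >= 0, v_j != 0
# into Y = sum_j y_j z_j z_j^T with z_j in the unit simplex and y_j > 0.

def normalize_factorization(vectors):
    """Return (weights, points) with y_j = (e^T v_j)^2 and z_j = v_j / (e^T v_j)."""
    weights, points = [], []
    for v in vectors:
        s = sum(v)  # e^T v_j, positive for a nonnegative nonzero vector
        if min(v) < 0 or s <= 0:
            raise ValueError("each factor must be nonnegative and nonzero")
        weights.append(s * s)
        points.append([vi / s for vi in v])
    return weights, points

def weighted_rank_one_sum(points, weights):
    """Return sum_j weights[j] * points[j] points[j]^T as nested lists."""
    m = len(points[0])
    Y = [[0.0] * m for _ in range(m)]
    for z, y in zip(points, weights):
        for i in range(m):
            for j in range(m):
                Y[i][j] += y * z[i] * z[j]
    return Y

factors = [[1.0, 2.0, 0.0], [0.0, 0.5, 1.5], [3.0, 0.0, 1.0]]  # hypothetical v_j
weights, points = normalize_factorization(factors)
Y_original = weighted_rank_one_sum(factors, [1.0] * len(factors))
Y_rescaled = weighted_rank_one_sum(points, weights)
```

Since the rescaled points lie in Z, the same data can be used directly as a feasible multiplier set for the SIP dual.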

We mention the standard first order optimality conditions for (the convex program) SIP (see, e.g., [10, Theorem 3] and [12, Theorem 2(b)] for a proof in SIP context):

If a feasible point \(\bar {x}\) satisfies the KKT condition (4) then \(\bar {x}\) is a (global) maximizer of (P). On the other hand, under (CQ P ) a maximizer \(\bar {x}\) of (P) must satisfy the KKT conditions.

We emphasize that relation (6) implies that, under \((\mathit{CQ}_{P})\), for any maximizer \(\bar {x}\) of (P) there always exists a corresponding (complementary) optimal solution \(\overline {Y}\) of (D) that has CP-rank ≤ n.
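To make the KKT mechanism concrete, the following sketch checks it on a small hypothetical instance that is not taken from the paper: n=1, m=2, c=1, with `B = [[1, -1], [-1, 1]]` and `A1 = [[0, 1], [1, 0]]`. Here \(z^{T}F(x)z = (z_{1}-z_{2})^{2} - 2x z_{1}z_{2}\), so x is feasible exactly when x ≤ 0 and the maximizer is \(\bar {x}=0\) with active index \(\bar {z}=(\frac{1}{2},\frac{1}{2})^{T}\). The code builds the multiplier and the dual matrix and verifies feasibility, zero duality gap and complementarity.

```python
# Sketch: KKT check for the hypothetical program max x s.t. B - x*A1 copositive.

B = [[1.0, -1.0], [-1.0, 1.0]]
A1 = [[0.0, 1.0], [1.0, 0.0]]
c = [1.0]
x_bar = [0.0]          # candidate maximizer
z_bar = [0.5, 0.5]     # candidate active index in the unit simplex

def quad(M, z):
    """Return z^T M z."""
    return sum(z[i] * M[i][j] * z[j] for i in range(2) for j in range(2))

def inner(X, Y):
    """Return <X, Y> = sum_ij x_ij * y_ij."""
    return sum(X[i][j] * Y[i][j] for i in range(2) for j in range(2))

def F(x):
    """Return F(x) = B - x_1 * A1."""
    return [[B[i][j] - x[0] * A1[i][j] for j in range(2)] for i in range(2)]

active_value = quad(F(x_bar), z_bar)   # zero means z_bar is an active index
y = c[0] / quad(A1, z_bar)             # multiplier from c_1 = y * z_bar^T A1 z_bar
Y_bar = [[y * z_bar[i] * z_bar[j] for j in range(2)] for i in range(2)]

dual_feasibility = inner(Y_bar, A1)    # should equal c_1
primal_value = c[0] * x_bar[0]
dual_value = inner(Y_bar, B)
complementarity = inner(Y_bar, F(x_bar))
```

In this instance a single active index suffices, so the dual solution has CP-rank 1, in line with the bound CP-rank ≤ n.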

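Finally, we apply some standard duality and existence results in SIP to the copositive problem. Let v(P), v(D) and \({\mathcal {F}}(P)\), \({\mathcal {F}}(D)\) denote the optimal values and feasible sets of (P), (D), respectively. We introduce the upper/lower level sets

$$ {\mathcal {F}}_{\alpha}(P) := \{ x \in {\mathcal {F}}(P) : c^{T}x \geq \alpha \}, \qquad {\mathcal {F}}_{\alpha}(D) := \{ Y \in {\mathcal {F}}(D) : \langle Y,B\rangle \leq \alpha \}. $$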

Let \({\mathcal {S}}(P)\) denote the set of maximizers of (P). Note that, in general, for SIP (and CP) strong duality need not hold and solutions of (P) and/or (D) need not exist (see [13] for many examples). However, the following is true for linear SIP.

Theorem 3.1

We have:

(a) If either \((\mathit{CQ}_{P})\) or \((\mathit{CQ}_{D})\) holds, then v(D)=v(P).

(b) Let \({\mathcal {F}}(P)\) be nonempty. Then

$$(\mathit{CQ}_D) \text{ \textit{holds}} \quad \Leftrightarrow\quad \forall \alpha \in \mathbb{R}{:}\ {\mathcal {F}}_\alpha (P) \text{ \textit{is compact}}\quad \Leftrightarrow \quad \emptyset \neq {\mathcal {S}}(P) \text{ \textit{compact}}. $$

Thus, if one of these equivalent conditions holds, then a solution of (P) exists.

A result as in (b) also holds for the dual problem.

Proof

See, e.g., [12, Theorems 6.9, 6.11] and [10, Theorem 4] for the second equivalence in (b). □

4 Application of SIP: Discretization Methods for CP

Due to the SIP representation of CP, any solution method of SIP can directly be applied to CP. In this paper, we only consider discretization methods. Recently an inner and outer approximation algorithm for CP has been proposed and analyzed by Bundfuss and Dür [5]. We re-analyze this approach in the light of discretization methods in SIP as outlined in [14]. This will lead to additional insight and explicit error bounds.

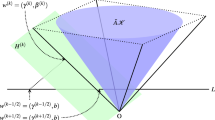

We start with the CP in SIP-form (3) with Z=Δ m . The approach in [5] is based on the following partition of Δ m .

Definition 4.1

We partition the unit simplex Z=Δ m into finitely many sub-simplices Δ 1,…,Δ k of Δ m such that

This partition defines a mesh-size d, a discretization Z d and a set E d of “edges” (pairs of vertices):

In [5], the following outer and inner approximation schemes for (3) are given:

Note that (P d ) represents a special instance of a discretization scheme in SIP. (\(\widetilde {P}_{d}\)) provides feasible points for the original copositive problem (P), see [5] and below. Observe that both (P d ) and (\(\widetilde {P}_{d}\)) are LPs.

Remark 4.1

Note that any point z∈Z=Δ m is contained in one of the sub-simplices Δ l and thus z∈Δ l can be written as a convex combination z=∑ ν λ ν v ν , with ∑ ν λ ν =1,λ ν ≥0 of vertices v ν of Δ l. Consequently, for any z∈Z, the inequality \(\min_{z_{j} \in Z_{d}} \| z- z_{j}\| \leq d\) holds, so that d above really defines a mesh-size:

$$ \max_{z \in Z}\ \min_{z_{j} \in Z_{d}} \| z - z_{j} \| \leq d. $$
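The mesh-size property can be observed numerically on a uniform grid. The sketch below is a plain-Python illustration under assumptions: it uses the grid of all points v/r with nonnegative integer v summing to r on the simplex in \(\mathbb{R}^{3}\), takes \(\sqrt{2}/r\) as its mesh-size, and estimates the covering radius on a finer sample grid. The function names and the choices r=4 and 23 are hypothetical.

```python
import math
from itertools import product

def simplex_grid(m, r):
    """Return all points v/r with v a nonnegative integer vector of length m summing to r."""
    return [[vi / r for vi in v] for v in product(range(r + 1), repeat=m) if sum(v) == r]

def distance(a, b):
    """Euclidean distance between two points."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def covering_radius(grid, samples):
    """Largest distance from a sample point to its nearest grid point."""
    return max(min(distance(z, g) for g in grid) for z in samples)

r = 4
grid = simplex_grid(3, r)              # discretization Z_d of the simplex in R^3
mesh = math.sqrt(2.0) / r              # edge length of the small triangles
samples = simplex_grid(3, 23)          # finer grid used only as test points
radius = covering_radius(grid, samples)
```

The sampled covering radius stays below the mesh-size, as the convex-combination argument predicts; the sample grid gives only a lower estimate of the true covering radius.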

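In the following, \({\mathcal {F}}(P)\), \({\mathcal {F}}(P_{d})\), \({\mathcal {F}}(\widetilde {P}_{d})\) and v(P), \(v(P_{d})\), \(v(\widetilde {P}_{d})\) denote the feasible sets and the maximum values of (P), \((P_{d})\), \((\widetilde {P}_{d})\), respectively. The vector \(\bar {x}\) is always a maximizer of (P) and \(\bar {x}_{d}\), \(\widetilde {x}_{d}\) are feasible points (possibly maximizers) of \((P_{d})\), \((\widetilde {P}_{d})\). We are now going to discuss some of the convergence results of [14] for our special program (P) in terms of the mesh-size d in an explicit form. The proofs are independent and mainly based on the following two relations: For any \(F \in \mathcal{S}_{m}\) and \(z, u \in \mathbb{R}^{m}\) we have

$$ u^{T}Fz = \tfrac{1}{2} \bigl[ z^{T}Fz + u^{T}Fu - (z-u)^{T}F(z-u) \bigr]. \tag{10} $$

Moreover, as mentioned earlier, for every z∈Δ l⊆Z we have the representation \(z=\sum_{\nu} \lambda_{\nu} v_{\nu}\) with \(v_{\nu}\) the vertices of Δ l, \(\lambda_{\nu} \geq 0\), and \(\sum_{\nu} \lambda_{\nu} = 1\). This gives:

$$ z^{T}Fz = \sum_{\nu,\mu} \lambda_{\nu}\lambda_{\mu}\, v_{\nu}^{T}Fv_{\mu} \geq \gamma \quad \text{if } v_{\nu}^{T}Fv_{\mu} \geq \gamma \text{ for all } \nu,\mu. \tag{11} $$

Clearly, \({\mathcal {F}}(P) \subset {\mathcal {F}}(P_{d})\) holds, and using (11) for γ=0 we obtain the relations

$$ {\mathcal {F}}(\widetilde {P}_{d}) \subset {\mathcal {F}}(P) \subset {\mathcal {F}}(P_{d}) \quad \text{and} \quad v(\widetilde {P}_{d}) \leq v(P) \leq v(P_{d}). \tag{12} $$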

We are interested in accurate bounds, e.g., for v(P d )−v(P) and \(v(P)-v(\widetilde {P}_{d})\), depending explicitly on the mesh-size d. From [14], we know that even for nonlinear SIP under a constraint qualification, the approximation error between \({\mathcal {F}}(P), v(P)\) and \({\mathcal {F}}(P_{d}), v(P_{d})\) behaves like \(\mathcal{O}(d^{2})\) in the mesh-size d, provided that the discretization Z d of Z “covers all boundary parts of Z of all dimensions.” In the above discretization scheme this is automatically fulfilled.

The next lemma shows that the inner approximation \((\widetilde {P}_{d})\) yields points feasible for the original program (P) and the outer approximation (P d ) generates points with an infeasibility error of order \({\mathcal {O}}(d^{2})\). In the following, ∥z∥ denotes the 2-norm in \(\mathbb{R}^{m}\) and ∥F∥, the Frobenius norm in \(\mathcal{S}_{m}\).

Lemma 4.1

Let \(\bar {x}_{d}, \widetilde {x}_{d}\) be feasible for \((P_{d}), (\widetilde {P}_{d})\). Then for all z∈Z and for all d we have:

(a) \(z^{T} F(\bar {x}_{d}) z \geq - \frac{1}{2} \|F(\bar {x}_{d}) \| \cdot d^{2}\).

(b) \(z^{T} F(\widetilde {x}_{d}) z \geq 0\).

So \(\widetilde {x}_{d}\) is feasible for (P), and \(\bar {x}_{d}\) is feasible up to an error of order \(\mathcal{O}(d^{2})\).

Proof

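Let \(F=F(\bar {x}_{d})\). Using \(z^{T}Fz \geq 0\) for all \(z \in Z_{d}\), we find from (10) that for all \((z,u) \in E_{d}\)

$$ u^{T}Fz \geq -\tfrac{1}{2} (z-u)^{T}F(z-u) \geq -\tfrac{1}{2} \|F\| \, \|z-u\|^{2} \geq -\tfrac{1}{2} \|F\| \, d^{2}. $$

The second inequality follows from the fact that with the 2-norms the relation ∥Fz∥≤∥F∥∥z∥ holds. In view of (11), this shows (a). Letting \(F:=F(\widetilde {x}_{d})\), (b) follows from (11) with γ=0. □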
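The bound in Lemma 4.1(a) can be observed on a one-variable toy program. The sketch below is a plain-Python illustration under stated assumptions: it uses the hypothetical data `B = [[1, -1], [-1, 1]]`, `A = [[0, 1], [1, 0]]` with objective max x, the uniform grid {(i/r, 1-i/r)} with mesh-size \(d=\sqrt{2}/r\), and solves the outer discretization by a ratio test, which is valid here because \(z^{T}Az \geq 0\) on the grid. The worst constraint value is only estimated on a sample of the simplex.

```python
import math

B = [[1.0, -1.0], [-1.0, 1.0]]   # hypothetical data, F(x) = B - x*A
A = [[0.0, 1.0], [1.0, 0.0]]

def quad(M, z):
    """Return z^T M z."""
    return sum(z[i] * M[i][j] * z[j] for i in range(2) for j in range(2))

def F(x):
    """Return F(x) = B - x*A."""
    return [[B[i][j] - x * A[i][j] for j in range(2)] for i in range(2)]

def frobenius(M):
    """Return the Frobenius norm of M."""
    return math.sqrt(sum(M[i][j] ** 2 for i in range(2) for j in range(2)))

def outer_solution(r):
    """Maximize x subject to z^T F(x) z >= 0 on the grid; assumes z^T A z >= 0 there."""
    best = math.inf
    for i in range(r + 1):
        z = [i / r, 1.0 - i / r]
        a, b = quad(A, z), quad(B, z)
        if a > 0:
            best = min(best, b / a)      # the constraint reads x * a <= b
        elif b < 0:
            raise ValueError("discretized problem is infeasible")
    return best

def worst_value(x, samples=2000):
    """Smallest sampled value of z^T F(x) z over the simplex."""
    return min(quad(F(x), [k / samples, 1.0 - k / samples]) for k in range(samples + 1))

r = 5
d = math.sqrt(2.0) / r
x_d = outer_solution(r)                          # solution of the outer problem
violation = worst_value(x_d)                     # negative: x_d is infeasible for (P)
bound = -0.5 * frobenius(F(x_d)) * d ** 2        # lower bound from Lemma 4.1(a)
```

For r=5 the outer solution is infeasible for the original program, but its worst constraint value stays above the bound, so the infeasibility is of order d².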

Assuming a strictly feasible point \(x_{0}\), we show that small perturbations of any feasible point \(\bar {x}_{d}\) for \((P_{d})\) lead to points in \({\mathcal {F}}(P)\) or even \({\mathcal {F}}(\widetilde {P}_{d})\).

Lemma 4.2

Let (CQ P ) be satisfied for \(x_{0} \in {\mathcal {F}}(P)\) with σ 0>0 (see (7)). Then, for any \(\bar {x}_{d}\) feasible for (P d ) and d small enough, we have:

(a) \(\bar {x}_{d}^{*} := \bar {x}_{d} + \rho d^{2} (x_{0} - \bar {x}_{d}) \in {\mathcal {F}}(P)\) for \(\rho \geq \frac{\|F(\bar {x}_{d})\|}{ 2 \sigma_{0}}\) and \(0<\rho d^{2}<1\).

(b) \(\widetilde {x}_{d}^{*} := \bar {x}_{d} + \tau d^{2} (x_{0} - \bar {x}_{d}) \in {\mathcal {F}}(\widetilde {P}_{d})\) for \(\tau \geq \frac{\|F(\bar {x}_{d})\|}{ 2 \sigma_{0}+ d^{2}(\|F(\bar {x}_{d})\| - \|F(x_{0})\|)}\) and \(0<\tau d^{2}<1\). Recall that \({\mathcal {F}}(\widetilde {P}_{d}) \subset {\mathcal {F}}(P)\) holds, cf. (12).

(c) If \(\bar {x}_{d}\) is a solution of \((P_{d})\), i.e., \(c^{T}\bar {x}_{d} = v(P_{d})\), it follows that \(0 \leq v(P_{d}) - v(\widetilde {P}_{d}) \leq \tau [c^{T}(\bar {x}_{d} - x_{0})] \cdot d^{2}\) for τ satisfying the bound in (b).

Proof

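Recall that \((\mathit{CQ}_{P})\) means that \(z^{T}F(x_{0})z \geq \sigma_{0} > 0\) for all z∈Z. Using this, the fact that \(F(\bar {x}_{d}^{*}) = (1- \rho d^{2}) F(\bar {x}_{d}) + \rho d^{2} F(x_{0}) \), and Lemma 4.1, we see that for any z∈Z and \(0 \leq 1-\rho d^{2}\),

$$ z^{T}F(\bar {x}_{d}^{*})z \geq -(1-\rho d^{2})\, \tfrac{1}{2}\|F(\bar {x}_{d})\|\, d^{2} + \rho d^{2}\sigma_{0} \geq d^{2} \bigl( \rho\sigma_{0} - \tfrac{1}{2}\|F(\bar {x}_{d})\| \bigr) \geq 0, $$

which shows (a). Part (b) is proven similarly. Here, observing

$$ F(\widetilde {x}_{d}^{*}) = (1-\tau d^{2})\, F(\bar {x}_{d}) + \tau d^{2} F(x_{0}), $$

we find for any pair \((z,u) \in E_{d}\), using (10), \(z^{T}F(x_{0})z \geq \sigma_{0}\) and \(z^{T} F(\bar {x}_{d}) z \geq 0\):

$$ u^{T}F(\widetilde {x}_{d}^{*})z \geq -(1-\tau d^{2})\, \tfrac{1}{2}\|F(\bar {x}_{d})\|\, d^{2} + \tau d^{2} \bigl( \sigma_{0} - \tfrac{1}{2}\|F(x_{0})\|\, d^{2} \bigr) \geq 0 $$

if τ is chosen as stated (assuming \(\|F(x_{0})\| d^{2} \leq \sigma_{0}\), implying τ>0). The inequality (c) for the maximum values is deduced easily using that \(\widetilde {x}^{*}_{d}\) is feasible for (\(\widetilde {P}_{d}\)):

$$ v(\widetilde {P}_{d}) \geq c^{T}\widetilde {x}_{d}^{*} = c^{T}\bar {x}_{d} - \tau d^{2}\, c^{T}(\bar {x}_{d} - x_{0}) = v(P_{d}) - \tau d^{2}\, c^{T}(\bar {x}_{d} - x_{0}). $$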

□
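The construction in Lemma 4.2(a) can be tried out on a one-variable toy program. The sketch below is plain Python under stated assumptions: the data `B`, `A`, the Slater point `x0 = -1` (for which F(x0) is the identity, so that σ₀ = 1/2 on the simplex in \(\mathbb{R}^{2}\)) and all function names are hypothetical and not taken from the paper. It shifts the outer solution towards x0 by the factor ρd² with ρ = ‖F(x̄_d)‖/(2σ₀) and checks feasibility on a sample of the simplex.

```python
import math

B = [[1.0, -1.0], [-1.0, 1.0]]   # hypothetical data, F(x) = B - x*A
A = [[0.0, 1.0], [1.0, 0.0]]
x0 = -1.0                        # Slater point: F(x0) = I
sigma0 = 0.5                     # min of z^T z over the unit simplex in R^2

def quad(M, z):
    """Return z^T M z."""
    return sum(z[i] * M[i][j] * z[j] for i in range(2) for j in range(2))

def F(x):
    """Return F(x) = B - x*A."""
    return [[B[i][j] - x * A[i][j] for j in range(2)] for i in range(2)]

def frobenius(M):
    """Return the Frobenius norm of M."""
    return math.sqrt(sum(M[i][j] ** 2 for i in range(2) for j in range(2)))

def outer_solution(r):
    """Maximize x subject to z^T F(x) z >= 0 on the grid {(i/r, 1 - i/r)}."""
    ratios = []
    for i in range(r + 1):
        z = [i / r, 1.0 - i / r]
        a = quad(A, z)
        if a > 0:                 # vertices have a = 0 and impose no bound here
            ratios.append(quad(B, z) / a)
    return min(ratios)

def shifted_point(r):
    """Return x_d + rho*d^2*(x0 - x_d) with rho = ||F(x_d)|| / (2*sigma0)."""
    d2 = 2.0 / r ** 2             # squared mesh-size, d = sqrt(2)/r
    x_d = outer_solution(r)
    rho = frobenius(F(x_d)) / (2.0 * sigma0)
    if not 0.0 < rho * d2 < 1.0:
        raise ValueError("mesh too coarse for this construction")
    return x_d + rho * d2 * (x0 - x_d)

def is_feasible_on_sample(x, samples=2000, tol=1e-12):
    """Check z^T F(x) z >= 0 on a sample of the simplex (a check, not a proof)."""
    return all(quad(F(x), [k / samples, 1.0 - k / samples]) >= -tol
               for k in range(samples + 1))

shifted = {r: shifted_point(r) for r in (3, 5, 9)}
```

For this toy program feasibility is equivalent to x ≤ 0, so the sign of each shifted value gives an independent confirmation of the sampled check.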

Observe that the bounds in Lemma 4.2(c) depend on the actual solutions \(\bar {x}_{d}\) of \((P_{d})\). In order to use these bounds a priori, we must ensure that the solutions \(\bar {x}_{d}\) exist and that they are bounded. As we shall see below, the key assumption here is a dual constraint qualification. We define the distance between a point x and the set \({\mathcal {S}}(P)\) of maximizers of (P),

$$ \delta(x, {\mathcal {S}}(P)) := \min \{ \|x - \bar {x}\| : \bar {x} \in {\mathcal {S}}(P) \}. $$

Under feasibility of (P), the existence of solutions \(\bar {x}_{d}\) of (P d ) and the convergence towards \({\mathcal {S}}(P)\) follow by only assuming the dual constraint qualification (CQ D ), or equivalently, the boundedness of the level sets \({\mathcal {F}}_{\alpha}(P)\) (or the condition \(\emptyset \neq {\mathcal {S}}(P)\) compact), see Theorem 3.1.

Theorem 4.1

Let (P) be feasible and let (CQ D ) be satisfied. Then, for any mesh-size d small enough, the sets \({\mathcal {S}}(P_{d})\) of optimal solutions of (P d ) are nonempty and compact. Moreover, for any sequence of solutions \(\bar {x}_{d} \in {\mathcal {S}}(P_{d})\) we have \(\delta(\bar {x}_{d} , {\mathcal {S}}(P)) \to 0 \) for d→0.

Proof

See [10, Theorem 9] for a proof. See also [5, Theorem 4.2(b), (c)] for a proof under slightly stronger assumptions. □

Since the feasible set \({\mathcal {F}}(P)\) may consist of a single point, it is clear that, in order to ensure the existence of a feasible point of the inner approximation \((\widetilde {P}_{d})\), we have to assume that \({\mathcal {F}}(P)\) has interior points (see also [5, Theorem 4.2(a), (c)]).

Theorem 4.2

Let (CQ P ) and (CQ D ) hold. Then for any mesh-size d small enough the sets \({\mathcal {S}}(\widetilde {P}_{d})\) of optimal solutions of \((\widetilde {P}_{d})\) are nonempty and compact. Moreover, for any sequence of solutions \(\widetilde {x}_{d} \in {\mathcal {S}}(\widetilde {P}_{d})\) we have \(\delta(\widetilde {x}_{d} , {\mathcal {S}}(P)) \to 0 \) for d→0.

Proof

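If \((\mathit{CQ}_{P})\) holds for \(x_{0}\), then we find from (10) that for all \((u,v) \in E_{d}\) and d small enough,

$$ u^{T}F(x_{0})v \geq \sigma_{0} - \tfrac{1}{2}\|F(x_{0})\|\, d^{2} > 0. $$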

Hence, \(x_{0} \in {\mathcal {F}}(\widetilde {P}_{d})\) if d is small. By (CQ D ) the level sets \({\mathcal {F}}_{\alpha}(P)\) are bounded (compact) (see Theorem 3.1). Since \({\mathcal {F}}_{\alpha}(\widetilde {P}_{d}) \subset {\mathcal {F}}_{\alpha}(P)\) (see (12)), also the level sets \({\mathcal {F}}_{\alpha}(\widetilde {P}_{d})\) are bounded. Therefore, solutions \(\widetilde {x}_{d}\) of the linear programs \((\widetilde {P}_{d})\) exist and the sets \({\mathcal {S}}(\widetilde {P}_{d})\) of maximizers are nonempty and compact.

Suppose now that a sequence \(\widetilde {x}_{d_{k}}\) of such solutions does not satisfy

$$ \delta(\widetilde {x}_{d_{k}}, {\mathcal {S}}(P)) \to 0 \quad \text{for } k \to \infty. $$

Then there exists ε>0 and a subsequence \(\widetilde {x}_{d_{k_{\nu}}}\) such that

$$ \delta(\widetilde {x}_{d_{k_{\nu}}}, {\mathcal {S}}(P)) \geq \varepsilon \quad \text{for all } \nu. \tag{13} $$

Since the maximizers \(\widetilde {x}_{d_{k_{\nu}}}\) are elements of a compact set \({\mathcal {F}}_{\alpha}(P)\), we can select a convergent subsequence and w.l.o.g. we can assume \(\widetilde {x}_{d_{k_{\nu}}}\to \widehat {x}\in {\mathcal {F}}_{\alpha}(P)\) for ν→∞. In view of Lemma 4.2(c) we have \(v(P_{d})- v(\widetilde {P}_{d}) \to 0\) and thus, by (12), \(v(\widetilde {P}_{d}) \to v(P)\), d→0. This yields \(c^{T} \widetilde {x}_{d_{k_{\nu}}} = v(\widetilde {P}_{d_{k_{\nu}}}) \to c^{T} \widehat {x}= v(P)\), ν→∞, and since \(\widehat {x}\in {\mathcal {F}}_{\alpha}(P) \) is feasible for (P), we obtain \(\widehat {x}\in {\mathcal {S}}(P)\), contradicting (13). □

The next example shows that it may happen that every program (P d ) and \((\widetilde {P}_{d})\) has a solution while no solution of the original program (P) exists.

Example 4.1

Consider the copositive program (based on [13, Theorem 3.1]) with c=(1,1,0)T and

Then (P) becomes:

The feasibility conditions for this program read:

Obviously, x 1+x 2≤1 holds for any feasible x and for any ϵ>0 the point x=(1,−ϵ,−1/ϵ)T is feasible with objective value x 1+x 2=1−ϵ. On the other hand, no feasible \(\bar {x}\) exists with objective \(\bar {x}_{1} + \bar {x}_{2} =1\) (\(\bar {x}_{2} =0\) is excluded). So, the sup value of (P) is v(P)=1 but a maximizer does not exist. Now, consider the program (P d ):

where Z d is any (finite) discretization of Δ 3 containing the basis vectors \(z=e_{i} \in \mathbb{R}^{3}\), i=1,2,3. Then (P d ) in particular contains the constraints

This implies x 1+x 2≤1. So, the linear program (P d ) is bounded and a solution exists. In fact, any program (P d ) has a solution \(\bar {x}_{d} = (1,0, \bar {x}_{3}(d))^{T}\) with objective value v(P d )=1 (and \(\bar {x}_{3}(d) \to -\infty \) for d→0).

Note that also the inner LP-approximations \((\widetilde {P}_{d})\) have solutions. Indeed, since the feasible sets \({\mathcal {F}}(\widetilde {P}_{d})\) are contained in \({\mathcal {F}}(P)\), the values \(v(\widetilde {P}_{d})\) are bounded by 1. Moreover, the feasible sets are nonempty. To see this, take e.g. the (CQ P )-point x 0=(0,−2,−2)T in the interior of \({\mathcal {F}}(P)\). Then, as in the proof of Theorem 4.2, it follows that \(x_{0} \in {\mathcal {F}}(\widetilde {P}_{d})\), provided d is small enough.

We finish this section with some remarks. Note that for any solution \(\bar {x}_{d}\) of the standard linear program (P d ) the KKT condition holds:

where \(Z_{d}(\bar {x}_{d}) := \{ z \in Z_{d} : z^{T} F(\bar {x}_{d}) z = 0\}\). Again, any such solution \(\bar {x}_{d}\) generates a dual feasible matrix

Remark 4.2

Any solution \(\widetilde {x}_{d} \) of \((\widetilde {P}_{d})\) also satisfies the KKT condition

where \(E_{d}(\widetilde {x}_{d}) := \{ (u,v) \in E_{d} : u^{T} F(\widetilde {x}_{d}) v = 0 \}\). Such a solution \(\widetilde {x}_{d}\) generates the matrix \(\widetilde {Y}_{d} := \sum_{j=1}^{s} \widetilde {y}_{j} \cdot \frac{1}{2} ( u_{j} v_{j}^{T} + v_{j} u_{j}^{T})\) which satisfies the constraints \(\langle \widetilde {Y}_{d} , A_{i} \rangle =c_{i}\) for all i. However, in general, \(\widetilde {Y}_{d}\notin {\mathcal {C}}_{m}^{*}\), so \(\widetilde {Y}_{d}\) is not necessarily feasible for (D). Using (10), we see (under the assumption of Theorem 4.1) that \(\widetilde {Y}_{d}\) is in \({\mathcal {C}}_{m}^{*}\) up to an error of order \({\mathcal {O}}(d^{2})\).

5 Comparison with an Inner Approximation via Sets \({\mathcal {C}}^{r}_{m}\)

In this section, we consider a special discretization scheme first considered in [2] which is connected to an inner approximation of \({\mathcal {C}}_{m}\) by subsets \({\mathcal {C}}^{r}_{m} \subset {\mathcal {C}}_{m}\). For \(r \in \mathbb{N}\), let us define

The following is shown in [2] :

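The interesting connection with the discretization approach above is based on the following description of the sets \({\mathcal {C}}^{r}_{m}\) (see [1]) (with \(\operatorname{diag}(A):=(a_{11},\ldots,a_{mm}) \in \mathbb{R}^{m}\)):

$$ {\mathcal {C}}^{r}_{m} = \{ A \in \mathcal{S}_{m} : v^{T}Av - v^{T}\operatorname{diag}(A) \geq 0 \ \forall v \in I^{r+2}_{m} \}, \tag{15} $$

where \(I^{r}_{m}\) is the grid \(I^{r}_{m} = \{ v \in \mathbb{N}^{m}_{0} : \sum_{j=1}^{m} v_{j} = r\}\). By (15), we can write

$$ {\mathcal {C}}^{r}_{m} = \Bigl\{ A \in \mathcal{S}_{m} : z^{T}Az - \frac{1}{r+2}\, z^{T}\operatorname{diag}(A) \geq 0 \ \forall z \in \frac{1}{r+2} I^{r+2}_{m} \Bigr\}. \tag{16} $$

It is not difficult to see that the set \(Z^{0}_{d} := \frac{1}{r} I^{r}_{m}\) defines a uniform discretization of the simplex \(Z=\varDelta_{m}\) with mesh-size of \(Z_{d}^{0}\) given by

$$ d = \frac{\sqrt{2}}{r}. $$

So it is natural to compare the outer and inner approximations \((P_{d}), (\widetilde {P}_{d})\) of (P) in Sect. 4 with the following approximation, where \(d=\sqrt{2}/r, r \in \mathbb{N}\):

$$ (\widehat {P}_{d})\quad \max_{x \in \mathbb{R}^{n}} c^{T}x \quad \text{s.t.}\quad z^{T}F(x)z - \frac{1}{r}\, z^{T}\operatorname{diag}(F(x)) \geq 0 \quad \forall z \in Z^{0}_{d}. $$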

Denote the solution, the optimal value and the feasible set of (\(\widehat {P}_{d}\)) by \(\widehat {x}_{d}\), \(v(\widehat {P}_{d})\) and \({\mathcal {F}}(\widehat {P}_{d})\), respectively. Note that, by (16), a point x is feasible for \((\widehat {P}_{d})\) if and only if \(F(x) \in {\mathcal {C}}^{r-2}_{m}\). So \((\widehat {P}_{d})\) provides an inner approximation, i.e., \({\mathcal {F}}(\widehat {P}_{d}) \subset {\mathcal {F}}(P)\) and \(v(\widehat {P}_{d}) \leq v(P)\). Similarly to Lemma 4.2 we obtain.

Lemma 5.1

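Let \((\mathit{CQ}_{P})\) be satisfied for \(x_{0} \in {\mathcal {F}}(P)\). Then with the solutions \(\bar {x}_{d}\) of \((P_{d})\) (with discretization \(Z_{d} = Z^{0}_{d}\)) the following holds for all \(d = \frac{\sqrt{2}}{r}, r \in \mathbb{N}\), d small enough:

$$ \widehat {x}_{d}^{*} := \bar {x}_{d} + \tau d\, (x_{0} - \bar {x}_{d}) \in {\mathcal {F}}(\widehat {P}_{d}) $$

and

$$ 0 \leq v(P_{d}) - v(\widehat {P}_{d}) \leq \tau\, [c^{T}(\bar {x}_{d} - x_{0})] \cdot d $$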

if \(\tau \geq \frac{ \| \operatorname{diag}(F(\bar {x}_{d}))\| }{ {\sqrt{2}} \sigma_{0} - d \| \operatorname{diag}(F(x_{0}))\|} \) and 0<τd<1.

Proof

We use the relation \(F(\widehat {x}_{d}^{*}) = (1- \tau d) F(\bar {x}_{d}) + \tau d F(x_{0}) \) and proceed as in the proof of Lemma 4.2. By Lemma 4.1, using the relation ∥z∥≤1 for \(z \in Z_{d}^{0}\) and \(z^{T} F(\bar {x}_{d})z \geq 0\) for \(z \in Z_{d} = Z_{d}^{0}\), we obtain for any \(z \in Z^{0}_{d}\):

for any d>0 (small enough) if τ is as given above. This shows the first relation. The inequality for the maximum values follows again easily using that \(\widehat {x}^{*}_{d}\) is feasible for (\(\widehat {P}_{d}\)) :

□

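According to the analysis above, under the assumption that the sequence \(\bar {x}_{d}\), d→0, is bounded (cf. Theorem 4.1), we have established the following error bounds (the last bound holds for \(Z^{0}_{d} = \frac{1}{r} I^{r}_{m}\) with \(d = \sqrt{2}/r, r \in \mathbb{N}\)):

$$ 0 \leq v(P_{d}) - v(P) \leq {\mathcal{O}}(d^{2}), \qquad 0 \leq v(P) - v(\widetilde {P}_{d}) \leq {\mathcal{O}}(d^{2}), \qquad 0 \leq v(P) - v(\widehat {P}_{d}) \leq {\mathcal{O}}(d). $$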

The next example shows that the bound \(\mathcal{O} (d)\) for \((\widehat {P}_{d})\) is sharp.

Example 5.1

We consider the program

The maximizer of (P) is \(\bar {x}= 0\) with \(v(P) = \bar {x}= 0\). The corresponding unique active index is \(\bar {z}= (\frac{1}{2}, \frac{1}{2})^{T}\). For odd r=2l+1 and \(d = \sqrt{2}/r\), the discretization \(Z^{0}_{d}\) of \(Z = \varDelta _{2} = \{ z \in \mathbb{R}^{2}_{+} : z_{1} + z_{2} = 1 \}\) is given by

It is not difficult to see that the optimal solutions of \((P_{d}), (\widehat {P}_{d})\) are given by the solutions \(\bar {x}_{d}, \widehat {x}_{d}\) of the equations

respectively. After some calculations we obtain \(v(P_{d}) = \bar {x}_{d} = \frac{1}{2} \frac{1}{l(l+1)} = \mathcal{O } (d^{2})\) and

Let us further compare the inner approximations \((\widetilde {P}_{d})\) and \((\widehat {P}_{d})\). It is not difficult to show that the number of points in the discretization \(Z_{d}^{0}\) for \(d=\frac{\sqrt{2}}{(r+2)}\) (approximation by \(\mathcal{C}_{m}^{r}\) see (16)) are given by \(N:={m+ r - 1 \choose r}\) (cf. [4]). To obtain a corresponding inner approximation \((\widetilde {P}_{d}) \) one could think of the so-called Delaunay triangulation (by simplices) of the point set \(Z^{0}_{d}\). The number of edges in such a triangulation is “much smaller” than N 2 (edge from each point to each other, instead of edges only to “neighboring points”). So (for fixed m) the same order of approximation \(O(\frac{1}{r^{2}})\) (w.r.t. r) would require “much less” than \(N^{2} = {m+ r - 1 \choose r}^{2}\) constraints in \((\widetilde {P}_{d})\) and \({m+ r^{2} - 1 \choose r^{2}}\) constraints in \((\widehat {P}_{d})\). This can be seen to be in favor of the scheme \((\widetilde {P}_{d})\).
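The size comparison can be made tangible for m=3, where the neighbor pairs of the uniform grid are exactly the edges of its triangulation into small equilateral triangles. The following plain-Python sketch, with hypothetical function names and the illustrative choice r=6, enumerates the index grid \(I^{r}_{m}\), counts its points N and counts the pairs of grid points at distance \(\sqrt{2}/r\). For m≥4 the edge set of a triangulation may differ from this neighbor count, so the sketch is restricted to m=3.

```python
import math
from itertools import combinations, product

def index_grid(m, r):
    """Return the grid I^r_m of nonnegative integer vectors of length m summing to r."""
    return [v for v in product(range(r + 1), repeat=m) if sum(v) == r]

def neighbor_pairs(grid):
    """Return unordered pairs (v, w) with w = v + e_i - e_j, i.e. squared distance 2."""
    return [(v, w) for v, w in combinations(grid, 2)
            if sum((a - b) ** 2 for a, b in zip(v, w)) == 2]

m, r = 3, 6
grid = index_grid(m, r)
N = len(grid)                     # number of discretization points
edges = neighbor_pairs(grid)      # pairs at distance sqrt(2)/r after scaling by 1/r
all_pairs = N * (N - 1) // 2      # every point joined to every other point
mesh = math.sqrt(2.0) / r
```

For r=6 there are 28 grid points and 63 neighbor pairs, compared with 378 unordered pairs overall, which illustrates why restricting to neighboring points keeps the number of edge constraints moderate.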

Interested in an inner approximation, one could also avoid both inner approximations \((\widetilde {P}_{d}), (\widehat {P}_{d})\) and only make use of (P d ). Indeed, the a posteriori error bound of Lemma 4.2 allows us to construct a feasible point \(\overline{x}^{*}_{d} = \overline{x}_{d} + O(d^{2}) \) from the “outer approximation” \(\overline{x}_{d}\), if a strictly feasible point x 0 is available.

Note however that with regard to the “exploding number” of constraints in the above methods, a practical algorithm should avoid most of the constraints. This means that in practice, the pure discretization methods have to be modified to a so-called exchange method where (as in [5]) during the computation only those grid points in Z d are kept in the discretization which still play a role as candidates for the active points \(z_{j} \in Z(\bar {x})\) of a solution \(\bar {x}\) of (P) (see also [10]). We emphasize that for such exchange methods the order bounds obtained above remain valid.
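The different convergence orders of the outer scheme and of the inner scheme based on \({\mathcal {C}}^{r}_{m}\) can be reproduced numerically. The sketch below is plain Python under stated assumptions: it uses the hypothetical one-variable program max x subject to `B - x*A` copositive with `B = [[1, -1], [-1, 1]]` and `A = [[0, 1], [1, 0]]`, whose optimal value is 0. This choice reproduces the outer value \(\frac{1}{2}\frac{1}{l(l+1)}\) quoted in Example 5.1, but whether it coincides with the program of that example is an assumption. The constraint with parameter α=1/r is the one that characterizes membership of F(x) in \({\mathcal {C}}^{r-2}_{m}\).

```python
import math

B = [[1.0, -1.0], [-1.0, 1.0]]   # hypothetical data, F(x) = B - x*A, v(P) = 0
A = [[0.0, 1.0], [1.0, 0.0]]

def quad(M, z):
    """Return z^T M z."""
    return sum(z[i] * M[i][j] * z[j] for i in range(2) for j in range(2))

def diag_dot(M, z):
    """Return z^T diag(M)."""
    return sum(z[i] * M[i][i] for i in range(2))

def grid_value(r, alpha):
    """Maximize x s.t. z^T F(x) z - alpha * z^T diag(F(x)) >= 0 on {(i/r, 1 - i/r)}.

    alpha = 0 gives the outer discretization, alpha = 1/r the inner scheme.
    The ratio test below assumes nonnegative coefficients of x.
    """
    best = math.inf
    for i in range(r + 1):
        z = [i / r, 1.0 - i / r]
        a = quad(A, z) - alpha * diag_dot(A, z)
        b = quad(B, z) - alpha * diag_dot(B, z)
        if a > 0:
            best = min(best, b / a)
        elif a < 0:
            raise ValueError("this sketch assumes nonnegative coefficients")
        elif b < 0:
            raise ValueError("discretized problem is infeasible")
    return best

rows = []
for l in (5, 50, 500):
    r = 2 * l + 1                          # odd r, so the active index is not a grid point
    d = math.sqrt(2.0) / r
    outer = grid_value(r, 0.0)             # error v(P_d) - v(P), expected of order d^2
    inner = grid_value(r, 1.0 / r)         # error v(P) - v(P_hat_d) = -inner, of order d
    rows.append((r, outer / d ** 2, -inner / d))
```

The scaled errors `outer / d**2` and `-inner / d` both approach positive constants as r grows, so in this instance the outer error is of exact order d² while the inner error cannot be better than order d.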

6 Order of Convergence for the Maximizers

In this section, we briefly discuss error bounds for \(\|\bar {x}- \bar {x}_{d}\|, \|\bar {x}- \widetilde {x}_{d}\|, \|\bar {x}- \widehat {x}_{d}\|\) for the maximizers of \((P_{d}), (\widetilde {P}_{d}), (\widehat {P}_{d})\), respectively. These bounds are based on the concept of the order of a maximizer. A feasible point \(\bar {x}\in {\mathcal {F}}(P)\) is a maximizer of (P) of order p>0, iff with some γ>0,ε>0,

$$ c^{T}\bar {x} - c^{T}x \geq \gamma\, \|x - \bar {x}\|^{p} \quad \text{for all } x \in {\mathcal {F}}(P) \text{ with } \|x - \bar {x}\| < \varepsilon \tag{18} $$

holds (and such an inequality does not hold for a smaller \(\overline{p} < p\)). Note that, if \(\bar {x}\) is a maximizer of order p>0, then, in particular, \({\mathcal {S}}(P) = \{ \bar {x}\}\) is nonempty and compact. So, by Theorem 3.1 the condition \((\mathit{CQ}_{D})\) is satisfied and we can apply Theorem 4.1.

Corollary 6.1

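Let \((\mathit{CQ}_{P})\) be satisfied and let \(\bar {x}\) be a maximizer of (P) of order p≥1. Then for the maximizers \(\bar {x}_{d}, \widetilde {x}_{d},\widehat {x}_{d}\) of \((P_{d}), (\widetilde {P}_{d}), (\widehat {P}_{d})\), respectively, we have:

$$ \|\bar {x}_{d} - \bar {x}\| \leq {\mathcal{O}}(d^{2/p}), \qquad \|\widetilde {x}_{d} - \bar {x}\| \leq {\mathcal{O}}(d^{2/p}), \qquad \|\widehat {x}_{d} - \bar {x}\| \leq {\mathcal{O}}(d^{1/p}). $$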

Proof

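Recall from Lemma 4.2(a) that \(\bar {x}_{d}^{*} := \bar {x}_{d} + \rho d^{2} (x_{0} - \bar {x}_{d}) \in {\mathcal {F}}(P)\) for ρ large enough. Using (18) and \(c^{T} (\bar {x}- \bar {x}_{d}) \leq 0\) we get (\(\bar {x}_{d}^{*}\) is feasible for (P))

$$ \gamma\, \|\bar {x} - \bar {x}_{d}^{*}\|^{p} \leq c^{T}(\bar {x} - \bar {x}_{d}^{*}) = c^{T}(\bar {x} - \bar {x}_{d}) + \rho d^{2}\, c^{T}(\bar {x}_{d} - x_{0}) \leq \rho d^{2}\, c^{T}(\bar {x}_{d} - x_{0}) = {\mathcal{O}}(d^{2}), $$

or \(\|\bar {x}- \bar {x}^{*}_{d} \| \leq \mathcal{O} (d^{2/p}) \). We thus find using 1≤p,

$$ \|\bar {x} - \bar {x}_{d}\| \leq \|\bar {x} - \bar {x}_{d}^{*}\| + \|\bar {x}_{d}^{*} - \bar {x}_{d}\| \leq {\mathcal{O}}(d^{2/p}) + {\mathcal{O}}(d^{2}) = {\mathcal{O}}(d^{2/p}). $$

The other bounds are proven in the same way. For \(\widehat {x}_{d} \), e.g., we obtain using Lemma 5.1,

$$ \gamma\, \|\bar {x} - \widehat {x}_{d}\|^{p} \leq c^{T}\bar {x} - c^{T}\widehat {x}_{d} = v(P) - v(\widehat {P}_{d}) \leq v(P_{d}) - v(\widehat {P}_{d}) \leq {\mathcal{O}}(d). $$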

□

According to this corollary, the smaller the order p of the maximizer \(\bar {x}\), the faster the convergence. The following examples show that, for copositive programs (P), (unique) maximizers of orders 1, 2 and of arbitrarily large order can occur.

Example 6.1

Obviously, in Example 5.1 the maximizer \(\bar {x}=0\) is of order p=1. Considering the copositive program

we see that x is feasible if and only if −x 1≥0,−x 2≥0 and \(-x_{1}-x_{2}^{2}\ge 0\) hold, or

The maximum value is x 1=0 implying x 2=0. So \(\bar {x}=(0,0)^{T}\) is the unique maximizer. For the feasible points \(x=(-x_{2}^{2},x_{2})^{T},x_{2}<0\) (|x 2| small) we find with ∥x∥∞:=max{|x 1|,|x 2|}:

and \(\bar {x}\) is a maximizer of order 2. Now, we take the program

In view of the block structure of F(x), a vector \(x\in \mathbb{R}^{3}\) is feasible iff:

Thus \(\bar {x}=(0,0,0)^{T}\) is the (unique) maximizer and with feasible vectors \(x=(-x_{3}^{4},-x_{3}^{2},x_{3})^{T}\), x 3<0 (|x 3| small) we find

showing that \(\bar {x}\) is maximizer of order 4. Similarly, we can construct copositive programs with maximizer of arbitrarily large order.
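The growth rates used in these examples can be read off numerically from the feasible curves given in the text. The sketch below is plain Python and rests on an assumption: the objective of both programs is taken to be \(c^{T}x = x_{1}\), as suggested by the statement that the maximum value is attained at \(x_{1}=0\). It estimates the exponent p in \(c^{T}\bar {x} - c^{T}x \approx \gamma \|x-\bar {x}\|_{\infty}^{p}\) along each curve from a log-log slope; the function and variable names are hypothetical.

```python
import math

def inf_norm(x):
    """Return the maximum norm of x."""
    return max(abs(xi) for xi in x)

def estimated_order(curve, c, t_coarse=1e-1, t_fine=1e-2):
    """Slope of log(objective gap) against log(distance) along a curve ending at x_bar = 0."""
    def gap(t):
        # c^T x_bar - c^T x with x_bar = 0
        return -sum(ci * xi for ci, xi in zip(c, curve(t)))
    def dist(t):
        return inf_norm(curve(t))
    return math.log(gap(t_coarse) / gap(t_fine)) / math.log(dist(t_coarse) / dist(t_fine))

def curve_2(t):
    """Feasible points (-x2^2, x2) with x2 = -t < 0 from the first program."""
    return (-t ** 2, -t)

def curve_4(t):
    """Feasible points (-x3^4, -x3^2, x3) with x3 = -t < 0 from the second program."""
    return (-t ** 4, -t ** 2, -t)

p2 = estimated_order(curve_2, (1.0, 0.0))
p4 = estimated_order(curve_4, (1.0, 0.0, 0.0))
```

The slopes come out as 2 and 4. Along these particular curves the objective gap therefore shrinks like the second and fourth power of the distance, which rules out any smaller order for the respective maximizer.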

Remark 6.1

In [1, Sect. 3], approximation results have been established for the values \(v^{*} = \min_{z \in \varDelta _{m}} z^{T} A z\) with \(A \in {\mathcal {S}}_{m}\). We briefly show that these bounds appear in our result above as special instances. Obviously v ∗ is the value of

with dual

Obviously, (P) satisfies (CQ P ) with some x 0 (small enough) and also (D) has strictly feasible matrices Y 0 (with any \(Y \in \operatorname{int} {\mathcal {C}}_{m}^{*}\) take Y 0=Y/〈Y,I〉). Let \(\bar {x}\) be the solution of (P) and consider the approximations (P d ), \((\widetilde {P}_{d})\) defined by the grids \(Z_{d}^{0}\) (\(d = \sqrt{2}/r\)) with corresponding values \(v_{d}, \widetilde {v}_{d}\) and solutions \(\bar {x}_{d}, \widetilde {x}_{d}\). It is easy to see that these solutions must be unique, satisfy \(x_{0} \leq \widetilde {x}_{d} \leq \bar {x}\leq \bar {x}_{d}\) and are monotonic, i.e., \(\widetilde {x}_{d} \uparrow \bar {x}, \bar {x}_{d} \downarrow \bar {x}\) for d→0. Then by Lemma 4.2(a) we obtain the bound

and Lemma 5.1 yields

The latter gives (up to a constant factor) the bound in [1], and the first bound yields an \(\mathcal{O}(d^{2})\) error instead of a rate \(\mathcal{O}(d)\) in [1].
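To illustrate the kind of grid approximation discussed in this remark, the following Python sketch evaluates \(z^{T} A z\) on a uniform grid of the simplex. It assumes that the grid consists of all points of \(\varDelta _{m}\) whose coordinates are integer multiples of \(1/r\); whether this coincides exactly with \(Z_{d}^{0}\) is an assumption. The function names `simplex_grid`, `quad_form` and `grid_min` are hypothetical.

```python
from fractions import Fraction
from itertools import product


def simplex_grid(m, r):
    """Yield all z in the unit simplex of R^m with entries in {0, 1/r, ..., 1}."""
    for head in product(range(r + 1), repeat=m - 1):
        if sum(head) <= r:
            counts = head + (r - sum(head),)  # last entry makes the sum equal r
            yield tuple(Fraction(k, r) for k in counts)


def quad_form(A, z):
    """Return z^T A z for a square matrix A given as nested lists."""
    m = len(z)
    return sum(A[i][j] * z[i] * z[j] for i in range(m) for j in range(m))


def grid_min(A, r):
    """Minimum of z^T A z over the grid; an upper bound for v* since grid is in the simplex."""
    return min(quad_form(A, z) for z in simplex_grid(len(A), r))


A = [[1, 0], [0, 1]]  # here v* = 1/2, attained at z = (1/2, 1/2)
for r in (2, 3, 4, 5):
    print(r, grid_min(A, r))
```

Because every grid point lies in \(\varDelta _{m}\), the grid minimum can never fall below \(v^{*}\). Exact rational arithmetic is used so that the comparison between different values of \(r\) is not affected by rounding.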

7 Set-Copositive Programming

Instead of studying copositivity with respect to \(\mathbb{R}^{m}_{+}\), it is also possible to consider copositivity with respect to an arbitrary set \(Z \subset \mathbb{R}^{m}\), meaning that one requires \(z^{T} A z\ge 0\) for all \(z\in Z\). This concept was studied in detail in [15]. It plays an important role in modeling quadratically constrained quadratic problems, cf. [16].

We can easily extend the analysis above, and consider programs with a feasibility condition

where \(\alpha \in \mathbb{R}\) and a compact set \(Z \subset \mathbb{R}^{m}\) are given. Here again, \(\operatorname{diag}{(A)}\) denotes the vector with components \(a_{ii}\), and later, for \(z \in \mathbb{R}^{m}\), \(\operatorname{Diag} (z)\) will denote the diagonal matrix with diagonal elements \(z_{i}\). So we consider

The cases \(Z=\varDelta _{m}\), \(\alpha=0\) and \(Z = \varDelta _{m}\), \(\alpha = \frac{1}{r}\) have been discussed in Sect. 5. Here again, the SIP-form of (P) will lead us to the dual program (D) and to the dual cone \({\mathcal {C}}^{*}_{m}(Z,\alpha)\) as follows. In SIP-form (P) reads:

with the vector \(a(z)\) having components \(a_{i}(z)= z^{T} A_{i} z - \alpha z^{T}\operatorname{diag}{(A_{i})}\) and \(b(z) = z^{T} B z - \alpha z^{T} \operatorname{diag}{(B)}\). The dual of \((P_{\mathrm{SIP}})\) is (with finite sums \(\sum_{j}\), see Sect. 2):

To convert this SIP-dual to the conic dual of (P) we make use of the relation \(z^{T} \operatorname{diag}{(B)} = \langle B, \operatorname{Diag}{(z)} \rangle\) and find

So \((D_{\mathrm{SIP}})\) is equivalent to the program

where

It is easily shown that this set is the dual of \({\mathcal {C}}_{m}(Z, \alpha)\). So, under the assumption that Z is compact, all results in the preceding sections can be extended to this more general problem (P).
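The conversion from the SIP-dual to the conic dual rests on the identity \(z^{T} B z - \alpha z^{T} \operatorname{diag}{(B)} = \langle B, zz^{T} - \alpha \operatorname{Diag}{(z)} \rangle\), which follows from \(z^{T} B z = \langle B, zz^{T}\rangle\) together with the relation \(z^{T} \operatorname{diag}{(B)} = \langle B, \operatorname{Diag}{(z)} \rangle\) used above. A minimal Python sketch that checks this identity numerically is given below; the function names are hypothetical, and reading the matrices \(zz^{T} - \alpha \operatorname{Diag}{(z)}\), \(z \in Z\), as the generators of the dual cone is an assumption made here for illustration.

```python
def b_of_z(B, z, alpha):
    """Return b(z) = z^T B z - alpha * z^T diag(B)."""
    m = len(z)
    quad = sum(B[i][j] * z[i] * z[j] for i in range(m) for j in range(m))
    lin = sum(z[i] * B[i][i] for i in range(m))
    return quad - alpha * lin


def inner_with_generator(B, z, alpha):
    """Return <B, z z^T - alpha * Diag(z)> using the trace inner product."""
    m = len(z)
    # Assumed generator matrix: z z^T with alpha * z_i subtracted on the diagonal.
    G = [[z[i] * z[j] - (alpha * z[i] if i == j else 0.0) for j in range(m)]
         for i in range(m)]
    return sum(B[i][j] * G[i][j] for i in range(m) for j in range(m))


B = [[2.0, -1.0], [-1.0, 3.0]]
z = (0.25, 0.75)
alpha = 0.5
print(b_of_z(B, z, alpha), inner_with_generator(B, z, alpha))
```

Both functions return the same number for any symmetric \(B\), any \(z\) and any \(\alpha\), which is what allows the finite sums over \(z_{j}\) in the SIP-dual to be collected into a single matrix variable.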

8 Conclusions

Copositive programs have been treated as special cases of linear semi-infinite problems (LSIP). We have applied known results from LSIP to cone programming and re-obtained known results but also gained new insight.

We have interpreted different approximation schemes for solving copositive programs as discretization schemes. The behavior of the approximation error for the optimal values in dependence on the discretization mesh-size \(d\) has been analyzed. The concept of order of maximizers allows us to analyze the behavior of the error for the maximizers in the approximation schemes. It has also been shown that maximizers of arbitrarily large order may appear in copositive programming.

References

[1] Bomze, I.M., De Klerk, E.: Solving standard quadratic optimization problems via linear, semidefinite and copositive programming. J. Glob. Optim. 24(2), 163–185 (2002)

[2] de Klerk, E., Pasechnik, D.V.: Approximation of the stability number of a graph via copositive programming. SIAM J. Optim. 12(4), 875–892 (2002)

[3] Burer, S.: On the copositive representation of binary and continuous nonconvex quadratic programs. Math. Program. 120(2), 479–495 (2009)

[4] Yıldırım, E.A.: On the accuracy of uniform polyhedral approximations of the copositive cone. Optim. Methods Softw. 27(1), 155–173 (2012)

[5] Bundfuss, S., Dür, M.: An adaptive linear approximation algorithm for copositive programs. SIAM J. Optim. 20(1), 30–53 (2009)

[6] Berman, A., Shaked-Monderer, N.: Completely Positive Matrices. World Scientific, Singapore (2003)

[7] Dickinson, P.J.: An improved characterisation of the interior of the completely positive cone. Electron. J. Linear Algebra 20, 723–729 (2010)

[8] Dür, M., Still, G.: Interior points of the completely positive cone. Electron. J. Linear Algebra 17, 48–53 (2008)

[9] Goberna, M.A., López, M.A.: Linear Semi-Infinite Optimization. Wiley, Chichester (1998)

[10] López, M., Still, G.: Semi-infinite programming (invited review). Eur. J. Oper. Res. 180(2), 491–518 (2007)

[11] Faigle, U., Kern, W., Still, G.: Algorithmic Principles of Mathematical Programming. Springer, Berlin (2002)

[12] Hettich, R., Kortanek, K.O.: Semi-infinite programming: theory, methods, and applications. SIAM Rev. 35(3), 380–429 (1993)

[13] Bomze, I.M., Schachinger, W., Uchida, G.: Think co(mpletely)positive! Matrix properties, examples and a clustered bibliography on copositive optimization. J. Glob. Optim. 52(3), 423–445 (2012)

[14] Still, G.: Discretization in semi-infinite programming: the rate of convergence. Math. Program. 91(1), 53–69 (2001)

[15] Eichfelder, G., Jahn, J.: Set-semidefinite optimization. J. Convex Anal. 15(4), 767–801 (2008)

[16] Burer, S., Dong, H.: Representing quadratically constrained quadratic programs as generalized copositive programs. Oper. Res. Lett. 40(3), 203–206 (2012)

Acknowledgements

The authors would like to thank the anonymous referees for many valuable comments and suggestions.

This work was done while Mirjam Dür was working at Johann Bernoulli Institute of Mathematics and Computer Science, University of Groningen, The Netherlands. She was supported by the Netherlands Organization for Scientific Research (NWO) through Vici Grant No. 639.033.907.

Additional information

Communicated by Johannes Jahn.

Cite this article

Ahmed, F., Dür, M. & Still, G. Copositive Programming via Semi-Infinite Optimization. J Optim Theory Appl 159, 322–340 (2013). https://doi.org/10.1007/s10957-013-0344-2

DOI: https://doi.org/10.1007/s10957-013-0344-2