Abstract

We introduce two hybridizable discontinuous Galerkin (HDG) methods for numerically solving the two-dimensional Monge–Ampère equation. The first HDG method is devised to solve the nonlinear elliptic Monge–Ampère equation by using Newton’s method. The second HDG method is devised to solve a sequence of the Poisson equation until convergence to a fixed-point solution of the Monge–Ampère equation is reached. Numerical examples are presented to demonstrate the convergence and accuracy of the HDG methods. Furthermore, the HDG methods are applied to r-adaptive mesh generation by redistributing a given scalar density function via the optimal transport theory. This r-adaptivity methodology leads to the Monge–Ampère equation with a nonlinear Neumann boundary condition arising from the optimal transport of the density function to conform the resulting high-order mesh to the boundary. Hence, we extend the HDG methods to treat the nonlinear Neumann boundary condition. Numerical experiments are presented to illustrate the generation of r-adaptive high-order meshes on planar and curved domains.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The Monge–Ampère equation has its root in optimal transport theory and arises from many areas in science and engineering such as astrophysics, differential geometry, geostrophic fluid dynamics, image processing, mesh generation, optimal transportation, statistical inference, and stochastic control; see [1] and references therein. The equation belongs to a class of fully nonlinear second-order elliptic partial differential equations (PDEs). Due to the wide range of above-mentioned applications, the Monge–Ampère equation has attracted significant attention from mathematicians and scientists [2,3,4,5,6,7,8,9,10,11,12]. Significant progress has been made in the development of numerical methods for solving the Monge–Ampère equation [1, 4, 5, 7, 9, 13,14,15,16,17,18,19,20,21,22,23,24,25]. A number of finite element methods have been proposed for the Monge–Ampère equation. In [7], Dean and Glowinski presented an augmented Lagrange multiplier method and a least squares method for the Monge–Ampère equation by treating the nonlinear equations as a constraint and using finite elements. Böhmer [14] introduced a projection method using \(C^1\) finite element functions for a class of fully nonlinear second order elliptic PDEs and analyzed the method using consistency and stability arguments. In [15], Brenner et al. proposed \(C^0\) finite element methods and discontinuous Galerkin (DG) methods for the Monge–Ampère equation, which were extended to the three dimensional Monge–Ampère equation [16]. In [4], Benamou et al. proposed a fixed-point method that only requires the solution of a sequence of Poisson equations for the two-dimensional Monge–Ampère equation. In [25], a linearization-then-descretization approach consists in applying Newton’s method to a nonlinear elliptic PDE to produce a sequence of linear nonvariational elliptic PDEs that can be dealt with using nonvariational finite element method. In [20], Feng and Lewis developed mixed interior penalty DG methods for fully nonlinear second order elliptic and parabolic equations. Recently, a finite element/operator-splitting method is introduced for the Monge–Ampère equation [24, 26] by using an equivalent divergence formulation of the Monge–Ampère equation through the cofactor matrix of the Hessian of the solution.

In this paper, two hybridizable discontinuous Galerkin (HDG) methods are considered for the numerical solution of the Monge–Ampère equation in two dimensions. The first HDG method is devised to solve the Monge–Ampère equation by using Newton’s method. The second HDG method is devised to solve a sequence of the Poisson equation until convergence to a fixed-point solution of the Monge–Ampère equation is reached. Numerical examples are presented to compare the convergence and accuracy of the HDG methods. It is found out that while the two methods yield similar orders of accuracy for the numerical solution, the Newton-HDG method requires considerably less number of linear solves than the fixed-point HDG method. However, an advantage of the fixed-point HDG method is that the discretization of the Poisson equation results in linear systems that can be solved more efficiently than those resulting from the Newton-HDG method.

The HDG methods have some unique features which distinguish themselves from other finite element methods for the Monge–Ampère equation. First, the global degrees of freedom are those of the approximate trace of the scalar variable defined on element faces. This translates to computational efficiency for the solution of nonlinear and linear systems arising from the HDG discretization of the Monge–Ampère equation. Second, the approximate gradient and Hessian converge with the same order as the approximate scalar variable. For most other finite element methods, the convergence rates of the approximate gradient and Hessian are lower than that of the approximate scalar variable.

There has been considerable interest in r-adaptive mesh generation by the optimal transport theory via solving the Monge–Ampère equation [27,28,29,30,31,32,33,34,35]. In r-adaptivity, mesh points are neither created nor destroyed, data structures do not need to be modified in-place, and complicated load-balancing is not necessary [36]. Furthermore, the r-adaptivity approach based on the Monge–Ampère equation has the ability to avoid mesh entanglement and sharp changes in mesh resolution [28, 29]. This r-adaptivity approach has its root from the optimal transport theory since it seeks to minimize a deformation functional subject to equidistributing a given scalar monitor function which controls the local density of mesh points. If the functional is defined as the \(L_2\) norm of the grid deformation, the optimal transport theory results in a mesh mapping as the gradient of the solution of the Monge–Ampère equation [27, 31]. To determine the mesh mapping we need to impose a boundary condition that mesh mapping conforms to the boundary of the physical domain. This boundary condition can be characterized as a Hamilton–Jacobi equation on the boundary [5] or a nonlinear Neumann boundary condition [30]. We extend the HDG methods to the Monge–Ampère equation with nonlinear boundary conditions. The methods are used to generate r-adaptive high-order meshes based on an equidistribution of a density function via the optimal transport theory.

The paper is organized as follows. In Sect. 2, we introduce HDG methods for the Monge–Ampère equation and present numerical results to demonstrate their performance. In Sect. 3, we extend these HDG methods to r-adaptivity based on the optimal transport theory and present numerical experiments to illustrate the generation of r-adaptive high-order meshes. Finally, in Sect. 4, we make a number of concluding remarks on the results as well as future work.

2 The Hybridizable Discontinuous Galerkin Methods

HDG methods were first introduced in [37] for elliptic problems and subsequently extended to a wide variety of PDEs: linear convection-diffusion problems [38, 39], nonlinear convection-diffusion problems [40,41,42], Stokes problems [43,44,45,46], incompressible flows equations [47,48,49,50,51,52,53], compressible flows [54,55,56,57,58,59,60,61], Maxwell’s equations [62,63,64], linear elasticity [65,66,67,68,69], and nonlinear elasticity [70,71,72,73]. To the best of our knowledge, however, HDG methods have not been considered for solving the Monge–Ampère equation prior to this work. In this section, we describe HDG methods for numerically solving the Monge–Ampère equation. The proposed HDG methods simultaneously compute approximations to the scalar variable, the gradient variables, and Hessian variables. The HDG methods are computationally efficient owing to a hybridization procedure that eliminates the degrees of freedom of those approximate variables to obtain global systems for the degrees of freedom of an approximate trace defined on the element faces.

2.1 Governing Equations and Approximation Spaces

We consider the Monge–Ampère equation with a Dirichlet boundary condition

Here \(\Omega \in {\mathbb {R}}^2\) is the physical domain with Lipschitz boundary \(\partial \Omega \), the source term f is a strictly positive function, and g is a smooth function. Note that \(D^2 u\) is the Hessian matrix of the exact solution u, and \(\det (D^2 u) = u_{xx} u_{yy} - u_{xy}^2\) is its determinant. The solution u must be convex in order for the equation to be elliptic. Without the convexity constraint, the equation does not have a unique solution. In two dimensions [4], the Monge–Ampère Eq. (1) can be rewritten as

We introduce \(\varvec{q} = \nabla u\) and \(\varvec{H} = \nabla \varvec{q}\), and rewrite the above equation as a first-order system of equations

where \(s(\varvec{H}, f) = \sqrt{H_{11}^2 + H_{22}^2 + H_{12}^2 + H_{21}^2 + 2 f}\). While we consider Dirichlet boundary condition in this section, we will treat Neumann boundary condition in the next section.

We denote by \({\mathcal {T}}_h\) a collection of disjoint regular elements K that partition \(\Omega \) and set \(\partial {\mathcal {T}}_h:= \{\partial K:\;K\in {\mathcal {T}}_h\}\). For an element K of the collection \({\mathcal {T}}_h\), \(e = \partial K \cap \partial \Omega \) is the boundary face if the \((d-1)\)-Lebesgue measure of e is nonzero. For two elements \(K^+\) and \(K^-\) of the collection \({\mathcal {T}}_h\), \(e = \partial K^+ \cap \partial K^-\) is the interior face between \(K^+\) and \(K^-\) if the \((d-1)\)-Lebesgue measure of e is nonzero. Let \({\mathcal {E}}_h^i\) and \({\mathcal {E}}_h^{\partial }\) denote the set of interior and boundary faces, respectively. We denote by \({\mathcal {E}}_h\) the union of \({\mathcal {E}}_h^{i}\) and \({\mathcal {E}}_h^{\partial }\). Let \(\varvec{n}^+\) and \(\varvec{n}^-\) be the outward unit normal of \(\partial K^+\) and \(\partial K^-\), respectively. Let \({\mathcal {P}}^p(D)\) denote the set of polynomials of degree at most p on a domain D and let \(L^2(D)\) be the space of square integrable functions on D. We introduce discontinuous finite element spaces

Note that \(M_h^p\) consists of functions which are continuous inside the faces (or edges) \(e \in {\mathcal {E}}_h\) and discontinuous at their borders.

For functions u and v in \(L^2(D)\), we denote \((u,v)_D = \int _{D} u v\) if D is a domain in \({\mathbb {R}}^d\) and \(\left\langle u,v\right\rangle _D = \int _{D} u v\) if D is a domain in \({\mathbb {R}}^{d-1}\). The inner produces associated with the above approximation spaces are defined as follows

We are ready to describe the HDG methods for the Monge–Ampère problem (3).

2.2 The Newton-HDG Formulation

To numerically solve (3) with the HDG method, we find \((\varvec{H}_h, \varvec{q}_h,u_h,\widehat{u}_h) \in \varvec{W}_{h}^p \times \varvec{V}_{h}^p \times U_{h}^p \times M_h^p\) such that

for all \((\varvec{G}, \varvec{v}, w, \mu ) \in \varvec{W}_h^p \times \varvec{V}_h^p \times U_h^p \times M_h^p\), where

Here \(\tau \) is the stabilization parameter which, in general, can be a function of \(\varvec{H}_h, \varvec{q}_h, u_h\) and \(\widehat{u}_h\). The choice of the stabilization \(\tau \) plays a crucial role in both the accuracy and stability of the resulting method. We shall study the effects of the stabilization on the convergence and stability of the numerical solutions for one of the examples presented later.

Newton’s method is used to solve the nonlinear system (4). The procedure evaluates successive approximations \((\varvec{H}_h^l, \varvec{q}_h^l,u_h^l,\widehat{u}_h^l)\) starting from an initial guess \((\varvec{H}_h^0, \varvec{q}_h^0,u_h^0,\widehat{u}_h^0)\). For each Newton step l, the system of Eq. (4) is linearized with respect to the Newton increments \(\left( \delta \varvec{H}_h^l, \delta \varvec{q}_h^l, \delta u_h^l, \delta \widehat{u}_h^l \right) \in \varvec{W}_{h}^p \times \varvec{V}_{h}^p \times U_{h}^p \times M_h^p\) that satisfy

for all \((\varvec{G}, \varvec{v}, w, \mu ) \in \varvec{W}_h^p \times \varvec{V}_h^p \times U_h^p \times M_h^p\), the right-hand side residuals are given by

Here \(\partial s_{\varvec{H}}(\varvec{H}, f)\) denotes the partial derivatives of \(s(\varvec{H}, f)\) with respect to \(\varvec{H}\). After solving (6), the numerical approximations are then updated

where the coefficient \(\alpha \) is determined by a line-search algorithm in order to optimally decrease the residual. This process is repeated until the residual norm is smaller than a given tolerance, typically \(10^{-8}\).

At each step of the Newton method, the linearization (6) gives the following matrix system to be solved

where \(\delta U^l\) and \(\delta \widehat{U}^l\) are the vectors of degrees of freedom of \((\delta \varvec{H}_h^l, \delta \varvec{q}_h^l, \delta u_h^l)\) and \(\delta \widehat{u}_h^l\), respectively. The system (9) is first solved for the traces only \(\delta \widehat{U}^l\) as

where \({\mathbb {K}}^l\) is the Schur complement of the block \({\mathbb {A}}^l\) and \(R^l\) is the reduced residual

The reduced system (10) involves fewer degrees of freedom than the full system (9). Moreover due to the discontinuous nature of the approximate solution, the matrix \({\mathbb {A}}^l\) and its inverse are block diagonal, and can be computed elementwise. Once \(\delta \widehat{U}^l\) is known, the other unknowns \(\delta U^l\) are then retrieved element-wise. Therefore, the full system (9) is never explicitly built, and the reduced matrix \({\mathbb {K}}^l\) is built directly in an elementwise fashion, thus reducing the memory storage.

2.3 The Fixed Point-HDG Method

In addition to using Newton’s method, we employ the fixed-point method to solve the nonlinear system (4). We note that the nonlinear source term s in (4) depends on \(\varvec{H}_h\). Hence, starting from an initial guess \(\varvec{H}^0_h\) we repeatedly find \((\varvec{q}_h^l,u_h^l,\widehat{u}_h^l) \in \varvec{V}_{h}^p \times U_{h}^p \times M_h^p\) such that

for all \((\varvec{v}, w, \mu ) \in \varvec{V}_h^p \times U_h^p \times M_h^p\), and then compute \(\varvec{H}_h^l\in \varvec{W}_{h}^p\) such that

until \(\Vert \varvec{H}^l_h - \varvec{H}^{l-1}_h\Vert \) is less than a specified tolerance, typically \(10^{-6}\).

We note that the weak formulation (12) is nothing but the HDG method for the Poisson equation \(-\Delta u = -s\) in \(\Omega \) with \(u = g\) on \(\partial \Omega \). Applying the solution strategy described earlier to the system (12), we obtain the following global system

for the degrees of freedom of \(\widehat{u}_h^l\). As the global matrix \({\mathbb {G}}\) is unchanged, it can be computed once prior to carrying out the fixed-point algorithm. However, the right hand side vector \(F^l\) has to be computed at every fixed-point iteration because the source term depends on the previous iterate. Once \( \widehat{U}^l\) is obtained by solving the linear system (14), the degrees of freedom of both \(\varvec{q}_h^l\) and \(u_h^l\) are then retrieved element-wise. Finally, the degrees of freedom of \(\varvec{H}^l_h\) are also computed by solving (13) element-wise.

We see that the fixed-point HDG method for the Monge–Ampère equation means to solving the Poisson problem with a sequence of right-hand side vectors. Hence, the fixed-point HDG method is much easier to implement the Newton-HDG method. Furthermore, its global matrix is computed only once, whereas that of the Newton-HDG method has to be computed for each Newton step. However, the Newton-HDG method can converge considerably faster than the fixed-point HDG method.

2.4 Numerical Examples

In this section we provide examples to demonstrate the HDG methods described above. In the first example we compare the convergence and accuracy of the methods for several polynomial degrees. In the second example we focus on a problem with a nearly singular solution. These examples demonstrate the relative advantages and disadvantages of the HDG methods. For the first problem, the Newton-HDG method has robust convergence in the nonlinear iteration, while the fixed-point HDG method requires more iterations. The second example illustrates the influence of regularity on the performance of the methods. For these examples, we choose \({\mathcal {T}}_h\) as a uniform triangulation of \(\Omega \) with mesh size \(h = 1/n\) and use \(u^0 = (x^2+y^2)/2\) as the initial guess.

Example 1

We consider the Monge–Ampère equation in \(\Omega = (0,1)^2\) with \(f = (1 + x^2 +y^2) e^{x^2 + y^2}\) and \(g = e^{0.5 (x^2 + y^2)}\), which yields the exact solution \(u = e^{0.5 (x^2 + y^2)}\). We use triangular and quadrilateral meshes with uniform mesh size \(h= 1/n\) for \(n = 4, 8, 16, 32\), 64, and polynomial degrees of \(p = 1, 2\), 3. We consider three different values for the stabilization parameter \(\tau = 1, \tau = 1/h\), and \(\tau = h\).

We report the \(L^2\) errors and orders of convergence of the numerical solutions on the triangular meshes in Table 1 and the quadrilateral meshes in Table 2 for \(\tau = 1\). We see that both the Newton-HDG method and the fixed-point HDG method have the same errors and convergence rates. These results are expected because the two methods should converge to the same numerical solution. We observe interestingly that the convergence rates of \(u_h\), \(\varvec{q}_h\), and \(\varvec{H}_h\) are \(O(h^p)\) on triangular and quadrilateral meshes. The convergence rate of \(O(h^p)\) for the computed Hessian \(\varvec{H}_h\) is a good news for the HDG methods since the Hessian H are the second-order partial derivatives of the scalar variable u. However, the convergence rate of \(O(h^p)\) for both \(u_h\) and \(\varvec{q}_h\) are suboptimal. The suboptimal convergence of \(u_h\) and \(\varvec{q}_h\) for the Monge–Ampère equation can be attributed to the fact that the source term s depends on the Hessian \(\varvec{H}\). As the computed Hessian \(\varvec{H}_h\) converges with order \(O(h^p)\), the \(L^2\) projection of the source term \(s(\varvec{H}_h, f)\) also converges with order \(O(h^p)\). In contrast, for linear second-order elliptic problems with smooth data and regular geometries, the \(L^2\) projection of the source term converges with order \(O(h^{p+1})\), the HDG method can yield optimal convergence rate of \(O(h^{p+1})\) for both \(u_h\) and \(\varvec{q}_h\).

We show the \(L^2\) errors and orders of convergence of the numerical solutions on the triangular meshes in Table 3 and the quadrilateral meshes in Table 4 for \(\tau = 1/h\). We observe that the approximate Hessian \(\varvec{H}_h\) for \(\tau = 1/h\) converges with order \(p-1\), which is one order less than that for \(\tau = 1\). The approximate scalar \(u_h\) and gradient \(\varvec{q}_h\) converge with order \(p-1\) for \(p=1,3\) and with order p for \(p=2,4\). Hence, it appears that \(u_h\) and \(\varvec{q}_h\) have the convergence rate of \(O(h^{p-1})\) for odd polynomial degrees and \(O(h^{p})\) for even polynomial degrees when the stabilization parameter is chosen as \(\tau = 1/h\).

We show the \(L^2\) errors and orders of convergence of the numerical solutions on the triangular meshes in Table 5 and the quadrilateral meshes in Table 6 for \(\tau = h\). We observe that the approximate Hessian \(\varvec{H}_h\) converges with order p on triangular meshes, but with order \(p-1\) on quadrilateral meshes. The approximate scalar \(u_h\) converges with order p on triangular meshes, but with order \(p-1\) for \(p=1,3\) on quadrilateral meshes. The approximate gradient \(\varvec{q}_h\) converges with order \(p+1\) for \(p=1,3\) and p for \(p=2,4\) on triangular meshes, but with order \(p-1\) for \(p=1,3\) and p for \(p=2,4\) on quadrilateral meshes.

Table 7 summarizes the convergence rates of the numerical solutions. We see that the convergence rates depend on the stabilization parameter \(\tau \), the type of meshes, and the polynomial degree p. For \(\tau = 1\), the convergence rates of all the approximate variables are \(O(h^p)\) for all polynomial degrees considered on both types of meshes. However, for the other two choices of the stabilization parameter, the convergence rates of the approximate variables are no longer the same. Therefore, we shall use \(\tau = 1\) in the other examples.

Lastly, in Table 8, we show the number of iterations required to reach convergence for \(\tau =1\). As expected, the Newton-HDG method requires considerably less iterations (about six times less) than the fixed-point HDG method. Furthermore, the number of iterations appears consistent for all values of n and p on both triangular and quadrilateral meshes.

Example 2

We consider the Monge–Ampère equation in \(\Omega = (0,1)^2\) with \(f = R^2/(R^2 - x^2 - y^2)^2\) and \(g = - \sqrt{R^2 - x^2 - y^2}\), which yields the exact solution \(u = - \sqrt{R^2 - x^2 - y^2}\) for \(R > \sqrt{2}\). Note that the regularity of the exact solution decreases as R decreases toward \(\sqrt{2}\). Indeed, the gradient and Hessian of the exact solution are singular at the corner (1, 1) for \(R = \sqrt{2}\). In this example, we would like to see how the HDG methods perform as the regularity of the solution decreases. Hence, we will report numerical results for \(R = 2, R = \sqrt{2} + 0.1,\) and \(R = \sqrt{2} + 0.01\). We consider triangular meshes with size \(h = 1/n\), and stabilization parameter \(\tau = 1\).

We report the \(L^2\) errors and orders of convergence of the computed solutions in Table 9 for \(R=2\), Table 10 for \(R=\sqrt{2}+0.1\), and Table 11 for \(R=\sqrt{2}+0.01\). The Newton-HDG method and the fixed-point HDG method have very similar errors and convergence rates. We observe again that \(u_h\), \(\varvec{q}_h\), and \(\varvec{H}_h\) converge with order \(O(h^p)\), except for \(p=3\) and \(R=\sqrt{2}+0.1\) where both \(u_h\) and \(\varvec{q}_h\) appear to converge like \(O(h^{p+1})\). As R decreases, the \(L^2\) errors tend to increase because both \(\varvec{q}\) and \(\varvec{H}\) become less smooth. Hence, we need to increase the mesh resolution in order to observe the expected convergence rates for \(R = \sqrt{2}+0.01\). Table 12 lists the number of iterations required to reach convergence. The Newton-HDG method requires considerably less iterations than the fixed-point HDG method. The number of iterations for the Newton-HDG method remains consistent, whereas that for the fixed-point HDG method tends to increase as R decreases.

3 Optimal Transport for r-adaptive Mesh Generation

In this section, we review optimal transport theory and describe how it can be applied to r-adaptive mesh generation. This r-adaptive mesh generation approach results in the Monge–Ampère equation with nonlinear Neumann boundary condition, which is solved by extending the HDG methods described in the previous section. We present numerical experiments to demonstrate the performance of the HDG methods for r-adaptive mesh generation.

3.1 Optimal Transport Theory

The optimal transport (OT) problem is described as follows. Suppose we are given two probability densities: \(\rho (\varvec{x})\) supported on \(\Omega \in {\mathbb {R}}^d\) and \(\rho '(\varvec{x}')\) supported on \(\Omega ' \in {\mathbb {R}}^d\). The source density \(\rho (\varvec{x})\) may be discontinuous and even vanish. The target density \(\rho '(\varvec{x}')\) must be strictly positive and Lipschitz continuous. The OT problem is to find a map \(\varvec{\phi }: \Omega \rightarrow \Omega '\) such that it minimizes the following functional

where

is the set of mappings which map the source density \(\rho (\varvec{x})\) onto the target density \(\rho '(\varvec{x}')\).

In [2], Brenier gave the proof of the existence and uniqueness of the solution of the OT problem. Furthermore, the optimal map \(\varvec{\phi }\) can be written as the gradient of a unique (up to a constant) convex potential u, so that \(\varvec{\phi }(\varvec{x}) = \nabla u(\varvec{x})\), \(\Delta u(\varvec{x}) > 0\). Substituting \(\varvec{\phi }(\varvec{x}) = \nabla u(\varvec{x})\) into (16) results in the Monge–Ampère equation

along with the restriction that u is convex. The equation lacks standard boundary conditions. However, it is geometrically constrained by the fact that the gradient map takes \(\partial \Omega \) to \(\partial \Omega '\):

This constraint is referred to as the second boundary value problem for the Monge–Ampère equation. In [5], the constraint (18) is replaced with a Hamilton–Jacobi equation on the boundary

If the boundary \(\partial \Omega '\) can be expressed by \(\partial \Omega ' = \{\varvec{x}' \in \Omega ': g(\varvec{x}') = 0\}\) then the Hamilton–Jacobi equation reduces to the following Neumann boundary condition

This boundary condition simplifies to a linear Neumann boundary condition when \(g(\cdot )\) is a linear function, that is, when the boundary \(\partial \Omega '\) is flat. For certain problems where densities are periodic, it is natural and convenient to use periodic boundary conditions instead.

3.2 Adaptive Mesh Redistribution

One approach to mesh adaptation is the equidistribution principle that equidistributes the target density function \(\rho '\) so that the source density \(\rho \) is uniform on \(\Omega \) [27, 31]. The equidistribution principle leads to a constant source density \(\rho (\varvec{x}) = \theta \), where the constant \(\theta \) is given by \(\theta = \int _{\Omega '} \rho '(\varvec{x}') d \varvec{x}' / \int _{\Omega } d \varvec{x}\). Using the optimal transport theory, the optimal mesh is sought by solving the Monge–Ampère equation with the Neumann boundary condition:

where \(f(\nabla u(\varvec{x})) = \theta /\rho '(\nabla u(\varvec{x}))\). The gradient of u gives us the desired mesh.

In two dimensions, we can rewrite the above equation as a first-order system of equations

where \(s(\varvec{H},\varvec{q}) = \sqrt{H_{11}^2 + H_{22}^2 + H_{12}^2 + H_{21}^2 + 2 f(\varvec{q})}\). The system (22) differs from (3) in the source term and the boundary condition. In order to solve (22), we will need to extend the HDG methods described in the previous section.

3.3 HDG Formulation

The HDG discretization of the system (22) is to find \((\varvec{H}_h, \varvec{q}_h,u_h,\widehat{u}_h) \in \varvec{W}_{h}^p \times \varvec{V}_{h}^p \times U_{h}^p \times M_h^p\) such that

for all \((\varvec{G}, \varvec{v}, w, \mu ) \in \varvec{W}_h^p \times \varvec{V}_h^p \times U_h^p \times M_h^p\), where

The approximate gradient \(\varvec{q}_h\) represents a mesh that adapts to the target density function \(\rho '\). Since \(\varvec{q}_h\) is a discontinuous field, we need to postprocess it to obtain a conformal continuous mesh. In particular, we simply average the duplicate degrees of freedom of a DG scalar field to obtain a continuous scalar field of the same polynomial degree. This postprocessing is efficient and easy to implement. We are going to describe the Newton method and the fixed-point method to solve the HDG formulation.

3.4 Newton Method

For each Newton step l, we compute \(\left( \delta \varvec{H}_h^l, \delta \varvec{q}_h^l, \delta u_h^l, \delta \widehat{u}_h^l \right) \in \varvec{W}_{h}^p \times \varvec{V}_{h}^p \times U_{h}^p \times M_h^p\) by solving the following linear system

for all \((\varvec{G}, \varvec{v}, w, \mu ) \in \varvec{W}_h^p \times \varvec{V}_h^p \times U_h^p \times M_h^p\), the right-hand side residuals are given by

Here \(\partial s_{\varvec{H}}(\varvec{H}, \varvec{q})\) and \(\partial s_{\varvec{q}}(\varvec{H}, \varvec{q})\) denote the partial derivatives of \(s(\varvec{H}, \varvec{q})\) with respect to \(\varvec{H}\) and \(\varvec{q}\), respectively. And \(\partial g_{\varvec{q}}(\varvec{q})\) denotes the partial derivatives of \(g(\varvec{q})\) with respect to \(\varvec{q}\).

At each step of the Newton method, the linearization (25) gives the following matrix system to be solved

where \(\delta U^l\) and \(\delta \widehat{U}^l\) are the vectors of degrees of freedom of \((\delta \varvec{H}_h^l, \delta \varvec{q}_h^l, \delta u_h^l)\) and \(\delta \widehat{u}_h^l\), respectively. The system (27) is first solved for the traces only \(\delta \widehat{U}^l\):

where

Once \(\delta \widehat{U}^l\) is known, the other unknowns \(\delta U^l\) are then retrieved element-wise.

3.5 Fixed-Point Method

To devise the fixed-point method for solving (22), we describe how to deal with the boundary condition \(g(\varvec{q}) = 0\). For any given \(\varvec{q}^{\ell -1}\), we linearize it around \(\varvec{q}^{\ell -1}\) to obtain

Starting from an initial guess \((\varvec{H}^0_h, \varvec{q}_h^0, u_h^0)\) we find \((\varvec{q}_h^l,u_h^l,\widehat{u}_h^l) \in \varvec{V}_{h}^p \times U_{h}^p \times M_h^p\) such that

for all \((\varvec{v}, w, \mu ) \in \varvec{V}_h^p \times U_h^p \times M_h^p\), and then compute \(\varvec{H}_h^l\in \varvec{W}_{h}^p\) such that

Note here that \(b(\varvec{q}_h^{l-1}) = g(\varvec{q}_h^{l-1}) - \partial g_{\varvec{q}}(\varvec{q}_h^{l-1}) \cdot \varvec{q}_h^{l-1}\), and that the numerical flux \(\widehat{\varvec{q}}_h^l\) is defined by (24).

At each step of the fixed-point HDG method, the weak formulation (31) yields the matrix system similar to (27). The global matrix for the degrees of freedom of \(\widehat{u}_h^l\) is changed at each step because of the linearization of the nonlinear boundary condition \(g(\varvec{q}) = 0\). In this case, the fixed-point HDG method is no longer competitive to the Newton-HDG method. We note however that if \(g(\cdot )\) is a linear function, then the global matrix will be unchanged at each fixed-point step. In this case, the global matrix can be formed prior to performing the fixed-point iteration. In any case, the Newton-HDG method is more efficient than the fixed-point HDG method since the former requires considerably less number of iterations than the latter.

3.6 Numerical Experiments

We give several examples of high-order meshes generated using the HDG methods for analytical density functions on both planar and curved domains. We compare the convergence of the HDG methods for these examples. We also demonstrate that our methods can generate smooth high-order meshes even at very high mesh resolutions.

Ring meshes on a square domain. We wish to generate meshes on a square domain \(\Omega ' = (-0.5, 0.5)^2\) with the following target density function:

where \(\varvec{\alpha }= (\alpha _1, \alpha _2, \alpha _3)\) determines the density function. This density function was introduced in [29]. We consider three instances of the density function (33) corresponding to \(\varvec{\alpha }_1 = (5, 200, 0.25)\), \(\varvec{\alpha }_2 = (10, 200, 0.25)\), and \(\varvec{\alpha }_3 = (20, 200, 0.25)\), as shown in Fig. 1.

Three instances of the density function (33)

First, a background mesh on which the optimal transport meshes are generated is a uniform grid of \(50 \times 50 \times 2\) triangles on the square domain \(\Omega = (-0.5, 0.5)^2\). Here we use polynomial degree \(p=3\) to represent the numerical solution. Figure 2 depicts the three high-order meshes generated for these density instances. We observe that as \(\alpha _1\) increases from 5, 10, to 20, it results in more elements concentrating into the ring.

To demonstrate that our methods can generate smooth high-order meshes even at very high mesh resolutions, we consider a background mesh as a uniform grid of \(100 \times 100\) quadrilaterals with polynomial degree \(p=3\). Figure 3 depicts the three high-order meshes generated on this background mesh, while Fig. 4 shows the close-up view near the ring of the first and third meshes. Despite there are many elements concentrating into the ring, the meshes are smooth and non-tangled.

Three high-order meshes are generated for the density functions shown in Fig. 1 using a uniform background mesh of \(50 \times 50 \times 2\) triangles with \(p=3\)

Three high-order meshes are generated for the density functions shown in Fig. 1 using a uniform background mesh of \(100 \times 100\) quadrilaterals with \(p=3\)

Bell meshes on a square domain. We consider three new instances of the density function (33) corresponding to \(\varvec{\alpha }_4 = (10, 200, 0)\), \(\varvec{\alpha }_5 = (20, 200, 0)\), and \(\varvec{\alpha }_6 = (40, 200, 0)\). Figure 5 depicts the three high-order meshes that are generated for these instances on a uniform mesh of \(60 \times 60 \times 2\) triangles with \(p=3\), while Fig. 6 shows the meshes on a uniform mesh of \(60 \times 60\) quadrilaterals. We see that as \(\alpha _1\) increases from 10, 20, to 40, it results in more elements concentrating into the origin (0, 0).

Three high-order meshes are generated for three instances of the density function (33) using a uniform background mesh of \(60 \times 60 \times 2\) triangles with \(p=3\)

Three high-order meshes are generated for three instances of the density function (33) using a uniform background mesh of \(60 \times 60\) quadrilaterals with \(p=3\)

Shock-aligned meshes on a cylindrical domain. High speed flows past a unit circular cylinder is a popular test case in computational fluid dynamics [74,75,76,77,78,79,80]. For high Mach numbers, a strong bow shock forms in front of the cylinder. Therefore, it is important to generate high-quality meshes to align the bow shock. The geometry is described by a half unit cylinder \(x^2 + y^2 = 1\) and a half elliptical boundary \(x^2/2^2 + y^2/4^2 = 1\). To represent a bow shock, the following target density function is considered

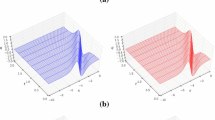

where \(\varvec{\beta }= (\beta _1, \beta _2, \beta _3)\) determines the density function. We consider four instances of the density function (34) corresponding to \(\varvec{\beta }_1 = (5, 5, 3)\), \(\varvec{\beta }_2 = (5, 15, 3)\), \(\varvec{\beta }_3 = (15, 5, 3)\), and \(\varvec{\beta }_4 = (15, 15, 3)\), as shown in Fig. 7.

The background mesh on which the optimal transport meshes are generated is a quadrilateral grid of \(40 \times 60\) elements with polynomial degree \(p=4\). Figure 8 depicts the four high-order meshes generated for these density instances shown in Fig. 7, while Fig. 9 shows the close-up view near the shock region of the last two meshes. We see that increasing the amplitude of the density function in the shock region results in more elements concentrating into the shock region. Furthermore, widening the density function increases the thickness of the shock region. We emphasize that the generated meshes are high-order, smooth, non-tangled, and conforming to the curved boundary of the physical domain.

High-order quadrilateral meshes correspond to the four density instances shown in Fig. 7

4 Conclusion

We have presented two hybridizable discontinuous Galerkin methods for numerically solving the Monge–Ampère equation. The first HDG method is based on the Newton method, whereas the second HDG method is based on the fixed-point method. Numerical results were presented to demonstrate that the convergence and accuracy of the HDG methods. The Newton-HDG method is more efficient since it requires less iterations than the fixed-point HDG method. The numerical results showed that the HDG methods yield the convergence rate of \(O(h^p)\) for the approximate scalar variable \(u_h\), the approximate gradient \(\varvec{q}_h\), and the approximate Hessian \(\varvec{H}_h\). The convergence rate of \(O(h^p)\) for the approximate gradient and Hessian is an attractive feature of the HDG methods. Furthermore, the HDG methods were extended to generate r-adaptive high-order meshes based on equidistribution of a given scalar density function via the optimal transport theory. Several numerical experiments were presented to illustrate the generation of smooth high-order meshes on planar and curved domains.

We would like to construct a stability analysis of the proposed HDG method, that would allow us to properly define the stabilization function. Choosing the optimal stabilization function is still a theoretical issue to be investigated for nonlinear PDEs [73]. It is also important to analyze the HDG methods to understand the convergence rates observed in this paper. Furthermore, we would like to extend our methodology to generate r-adaptive meshes for flow problems in computational fluid dynamics. Therefore, three-dimensional mesh generation based on the optimal transport theory is an important topic to be addressed in future work.

Data Availability

The datasets generated in this study are available from the corresponding author on reasonable request.

References

Feng, X., Glowinski, R., Neilan, M.: Recent developments in numerical methods for fully nonlinear second order partial differential equations. SIAM Rev. 55(2), 205–267 (2013). https://doi.org/10.1137/110825960

Brenier, Y.: Polar factorization and monotone rearrangement of vector-valued functions. Commun. Pure Appl. Math. 44(4), 375–417 (1991). https://doi.org/10.1002/cpa.3160440402

Benamou, J.D., Brenier, Y.: A computational fluid mechanics solution to the Monge-Kantorovich mass transfer problem. Numer. Math. 84(3), 375–393 (2000). https://doi.org/10.1007/s002110050002

Benamou, J.D., Froese, B.D., Oberman, A.M.: Two numerical methods for the elliptic Monge–Ampère equation. ESAIM Math. Modell. Numer. Anal. 44(4), 737–758 (2010). https://doi.org/10.1051/m2an/2010017

Benamou, J.D., Froese, B.D., Oberman, A.M.: Numerical solution of the optimal transportation problem using the Monge–Ampère equation. J. Comput. Phys. 260, 107–126 (2014). https://doi.org/10.1016/j.jcp.2013.12.015

Caffarelli, L.A.: Interior \(W^2, p\) estimates for solutions of the Monge–Ampere equation. Ann. Math. 131(1), 135 (1990). https://doi.org/10.2307/1971510

Dean, E.J., Glowinski, R.: Numerical methods for fully nonlinear elliptic equations of the Monge–Ampère type. Comput. Methods Appl. Mech. Eng. 195(13–16), 1344–1386 (2006). https://doi.org/10.1016/j.cma.2005.05.023

Frisch, U., Matarrese, S., Mohayaee, R.: A reconstruction of the initial conditions of the universe by optimal mass transportation. Nature 417(6886), 260–262 (2002). https://doi.org/10.1038/417260a. arXiv:astro-ph/0109483

Froese, B.D., Oberman, A.M.: Fast finite difference solvers for singular solutions of the elliptic Monge–Ampère equation. J. Comput. Phys. 230(3), 818–834 (2011). https://doi.org/10.1016/j.jcp.2010.10.020

Oliker, V.: Mathematical aspects of design of beam shaping surfaces in geometrical optics. In: Trends in nonlinear analysis, pp. 193–224. Springer, Berlin (2003). https://doi.org/10.1007/978-3-662-05281-54

Prins, C.R., Ten Thije Boonkkamp, J.H., Van Roosmalen, J., Ijzerman, W.L., Tukker, T.W.: A Monge–Ampère-solver for free-form reflector design. SIAM J. Sci. Comput. 36(3), B640–B660 (2014). https://doi.org/10.1137/130938876

Trudinger, N.S., Wang, X.-J.: The Monge–Ampere equation and its geometric applications. Handb. Geom. Anal. I, 467–524 (2008)

Awanou, G.: Standard finite elements for the numerical resolution of the elliptic Monge–Ampère equation: classical solutions. IMA J. Numer. Anal. 35(3), 1150–1166 (2015). https://doi.org/10.1093/imanum/dru028

Böhmer, K.: On finite element methods for fully nonlinear elliptic equations of second order. SIAM J. Numer. Anal. 46(3), 1212–1249 (2008). https://doi.org/10.1137/040621740

Brenner, S., Gudi, T., Neilan, M., Sung, L.-Y.: C0 penalty methods for the fully nonlinear Monge–Ampère equation. Math. Comput. 80(276), 1979–1995 (2011). https://doi.org/10.1090/s0025-5718-2011-02487-7

Brenner, S.C., Neilan, M.: Finite element approximations of the three dimensional Monge–Ampère equation. ESAIM Math. Modell. Numer. Anal. 46(5), 979–1001 (2012). https://doi.org/10.1051/m2an/2011067

Caboussat, A., Glowinski, R., Sorensen, D.C.: A least-squares method for the numerical solution of the Dirichlet problem for the elliptic Monge–Ampère equation in dimension two. ESAIM Control Optim. Calc. Var. 19(3), 780–810 (2013). https://doi.org/10.1051/cocv/2012033

Feng, X., Neilan, M.: Finite element approximations of general fully nonlinear second order elliptic partial differential equations based on the vanishing moment method. Comput. Math. Appl. 68(12), 2182–2204 (2014). https://doi.org/10.1016/j.camwa.2014.07.023

Feng, X., Neilan, M.: Mixed finite element methods for the fully nonlinear Monge-Ampère equation based on the vanishing moment method. SIAM J. Numer. Anal. 47(2), 1226–1250 (2009). https://doi.org/10.1137/070710378. arXiv:0712.1241

Feng, X., Lewis, T.: Mixed interior penalty discontinuous Galerkin methods for fully nonlinear second order elliptic and parabolic equations in high dimensions. Numer. Methods Partial Differ. Equ. 30(5), 1538–1557 (2014). https://doi.org/10.1002/num.21856

Feng, X., Jensen, M.: Convergent semi-Lagrangian methods for the Monge–Ampère equation on unstructured grids. SIAM J. Numer. Anal. 55(2), 691–712 (2017). https://doi.org/10.1137/16M1061709

Feng, X., Lewis, T.: Nonstandard local discontinuous Galerkin methods for fully nonlinear second order elliptic and parabolic equations in high dimensions. J. Sci. Comput. 77(3), 1534–1565 (2018). https://doi.org/10.1007/s10915-018-0765-z. arXiv:1801.05877

Froese, B.D.: A numerical method for the elliptic Monge–Ampère equation with transport boundary conditions. SIAM J. Sci. Comput. 34(3), A1432–A1459 (2012). https://doi.org/10.1137/110822372. arXiv:1101.4981

Liu, H., Glowinski, R., Leung, S., Qian, J.: A finite element/operator-splitting method for the numerical solution of the three dimensional Monge–Ampère equation. J. Sci. Comput. 81(3), 2271–2302 (2019). https://doi.org/10.1007/s10915-019-01080-4

Lakkis, O., Pryer, T.: A finite element method for nonlinear elliptic problems. SIAM J. Sci. Comput. 35(4), A2025–A2045 (2013). https://doi.org/10.1137/120887655

Glowinski, R., Liu, H., Leung, S., Qian, J.: A finite element/operator-splitting method for the numerical solution of the two dimensional elliptic Monge–Ampère equation. J. Sci. Comput. 79(1), 1–47 (2019). https://doi.org/10.1007/s10915-018-0839-y

Delzanno, G.L., Chacón, L., Finn, J.M., Chung, Y., Lapenta, G.: An optimal robust equidistribution method for two-dimensional grid adaptation based on Monge–Kantorovich optimization. J. Comput. Phys. 227(23), 9841–9864 (2008). https://doi.org/10.1016/j.jcp.2008.07.020

Budd, C.J., Williams, J.F.: Moving mesh generation using the parabolic Monge–Ampère equation. SIAM J. Sci. Comput. 31(5), 3438–3465 (2009). https://doi.org/10.1137/080716773

Budd, C.J., Russell, R.D., Walsh, E.: The geometry of r-adaptive meshes generated using optimal transport methods. J. Comput. Phys. 282, 113–137 (2015). https://doi.org/10.1016/j.jcp.2014.11.007. arXiv:1409.5361

Browne, P.A., Budd, C.J., Piccolo, C., Cullen, M.: Fast three dimensional r-adaptive mesh redistribution. J. Comput. Phys. 275, 174–196 (2014). https://doi.org/10.1016/j.jcp.2014.06.009

Chacón, L., Delzanno, G.L., Finn, J.M.: Robust, multidimensional mesh-motion based on Monge-Kantorovich equidistribution. J. Comput. Phys. 230(1), 87–103 (2011). https://doi.org/10.1016/j.jcp.2010.09.013

Weller, H., Browne, P., Budd, C., Cullen, M.: Mesh adaptation on the sphere using optimal transport and the numerical solution of a Monge–Ampère type equation. J. Comput. Phys. 308, 102–123 (2016). https://doi.org/10.1016/j.jcp.2015.12.018. arXiv:1512.02935

McRae, A.T., Cotter, C.J., Budd, C.J.: Optimal-transport-based mesh adaptivity on the plane and sphere using finite elements. SIAM J. Sci. Comput. 40(2), A1121–A1148 (2018). https://doi.org/10.1137/16M1109515. arXiv:1612.08077

Sulman, M., Williams, J.F., Russell, R.D.: Optimal mass transport for higher dimensional adaptive grid generation. J. Comput. Phys. 230(9), 3302–3330 (2011). https://doi.org/10.1016/j.jcp.2011.01.025

Sulman, M.H., Nguyen, T.B., Haynes, R.D., Huang, W.: Domain decomposition parabolic Monge–Ampère approach for fast generation of adaptive moving meshes. Comput. Math. Appl. 84, 97–111 (2021). https://doi.org/10.1016/j.camwa.2020.12.007

Aparicio-Estrems, G., Gargallo-Peiró, A., Roca, X.: Combining high-order metric interpolation and geometry implicitization for curved r-adaption. CAD Comput. Aided Des. 157, 103478 (2023). https://doi.org/10.1016/j.cad.2023.103478

Cockburn, B., Gopalakrishnan, J., Lazarov, R.: Unified hybridization of discontinuous Galerkin, mixed and continuous Galerkin methods for second order elliptic problems. SIAM J. Numer. Anal. 47, 1319–1365 (2009)

Cockburn, B., Dong, B., Guzmán, J., Restelli, M., Sacco, R.: A hybridizable discontinuous Galerkin method for steady-state convection-diffusion-reaction problems. SIAM J. Sci. Comput. 31(5), 3827–3846 (2009)

Nguyen, N.C., Peraire, J., Cockburn, B.: An implicit high-order hybridizable discontinuous Galerkin method for linear convection diffusion equations. J. Comput. Phys. 228(9), 3232–3254 (2009). https://doi.org/10.1016/j.jcp.2009.01.030

Cockburn, B., Mustapha, K.: A hybridizable discontinuous Galerkin method for fractional diffusion problems. Numer. Math. 130(2), 293–314 (2015). https://doi.org/10.1007/s00211-014-0661-x. arXiv:1409.7383

Nguyen, N.C., Peraire, J., Cockburn, B.: An implicit high-order hybridizable discontinuous Galerkin method for nonlinear convection diffusion equations. J. Comput. Phys. 228(23), 8841–8855 (2009). https://doi.org/10.1016/j.jcp.2009.08.030

Ueckermann, M.P., Lermusiaux, P.F.: High-order schemes for 2D unsteady biogeochemical ocean models. Ocean Dyn. 60(6), 1415–1445 (2010). https://doi.org/10.1007/s10236-010-0351-x

Cockburn, B., Gopalakrishnan, J.: The derivation of hybridizable discontinuous Galerkin methods for Stokes flow. SIAM J. Numer. Anal. 47(2), 1092–1125 (2009). https://doi.org/10.1137/080726653

Cockburn, B., Gopalakrishnan, J., Nguyen, N.C., Peraire, J., Sayas, F.J.: Analysis of an HDG method for Stokes flow. Math. Comp. 80, 723–760 (2011)

Cockburn, B., Nguyen, N.C., Peraire, J.: A comparison of HDG methods for Stokes flow. J. Sci. Comput. 45(1–3), 215–237 (2010). https://doi.org/10.1007/s10915-010-9359-0

Nguyen, N.C., Peraire, J., Cockburn, B.: A hybridizable discontinuous Galerkin method for Stokes flow. Comput. Methods Appl. Mech. Eng. 199(9–12), 582–597 (2010). https://doi.org/10.1016/j.cma.2009.10.007

Ahnert, T., Bärwolff, G.: Numerical comparison of hybridized discontinuous Galerkin and finite volume methods for incompressible flow. Int. J. Numer. Meth. Fluids 76(5), 267–281 (2014). https://doi.org/10.1002/fld.3938

Nguyen, N.C., Peraire, J., Cockburn, B.: An implicit high-order hybridizable discontinuous Galerkin method for the incompressible Navier-Stokes equations. J. Comput. Phys. 230(4), 1147–1170 (2011). https://doi.org/10.1016/j.jcp.2010.10.032

Nguyen, N. C., Peraire, J., Cockburn, B.: Hybridizable discontinuous Galerkin methods. In: Proceedings of the international conference on spectral and high order methods, pp. 63–84. Springer, Berlin (2009)

Nguyen, N. C., Peraire, J., Cockburn, B.: A hybridizable discontinuous Galerkin method for the incompressible Navier-Stokes equations. In: Proceedings of the 48th AIAA Aerospace Sciences Meeting and Exhibit, pp. AIAA 2010–362. Orlando, Florida (2010)

Rhebergen, S., Cockburn, B.: A space-time hybridizable discontinuous Galerkin method for incompressible flows on deforming domains. J. Comput. Phys. 231(11), 4185–4204 (2012). https://doi.org/10.1016/j.jcp.2012.02.011

Rhebergen, S., Wells, G.N.: A hybridizable discontinuous Galerkin method for the Navier-Stokes equations with pointwise divergence-free velocity field. J. Sci. Comput. 76(3), 1484–1501 (2018). https://doi.org/10.1007/s10915-018-0671-4. arXiv:1704.07569

Ueckermann, M.P., Lermusiaux, P.F.: Hybridizable discontinuous Galerkin projection methods for Navier-Stokes and Boussinesq equations. J. Comput. Phys. 306, 390–421 (2016). https://doi.org/10.1016/j.jcp.2015.11.028

Ciucă, C., Fernandez, P., Christophe, A., Nguyen, N.C., Peraire, J.: Implicit hybridized discontinuous Galerkin methods for compressible magnetohydrodynamics. J. Comput. Phys. X 5, 100042 (2020). https://doi.org/10.1016/j.jcpx.2019.100042

Fernandez, P., Nguyen, N.C., Peraire, J.: The hybridized discontinuous Galerkin method for Implicit Large-Eddy simulation of transitional turbulent flows. J. Comput. Phys. 336, 308–329 (2017). https://doi.org/10.1016/j.jcp.2017.02.015

Franciolini, M., Fidkowski, K.J., Crivellini, A.: Efficient discontinuous Galerkin implementations and preconditioners for implicit unsteady compressible flow simulations. Comput. Fluids 203, 104542 (2020). https://doi.org/10.1016/j.compfluid.2020.104542. arXiv:1812.04789

Moro, D., Nguyeny, N. C., Peraire, J.: Navier-stokes solution using Hybridizable discontinuous Galerkin methods. In: 20th AIAA computational fluid dynamics conference 2011, American Institute of Aeronautics and Astronautics, Honolulu, Hawaii, pp. AIAA–2011–3407 (2011). https://doi.org/10.2514/6.2011-3407. http://arc.aiaa.org/doi/abs/10.2514/6.2011-3407

Nguyen, N.C., Peraire, J.: Hybridizable discontinuous Galerkin methods for partial differential equations in continuum mechanics. J. Comput. Phys. 231(18), 5955–5988 (2012). https://doi.org/10.1016/j.jcp.2012.02.033

Nguyen, N.C., Peraire, J., Cockburn, B.: A class of embedded discontinuous Galerkin methods for computational fluid dynamics. J. Comput. Phys. 302, 674–692 (2015). https://doi.org/10.1016/j.jcp.2015.09.024

Vila-Pérez, J., Giacomini, M., Sevilla, R., Huerta, A.: Hybridisable discontinuous Galerkin formulation of compressible flows. Arch. Comput. Methods Eng. 28(2), 753–784 (2021). https://doi.org/10.1007/s11831-020-09508-z

Vila-Pérez, J., Van Heyningen, R.L., Nguyen, N.-C., Peraire, J.: Exasim: generating discontinuous Galerkin codes for numerical solutions of partial differential equations on graphics processors. SoftwareX 20, 101212 (2022). https://doi.org/10.1016/j.softx.2022.101212

Nguyen, N.C., Peraire, J., Cockburn, B.: Hybridizable discontinuous Galerkin methods for the time-harmonic Maxwell’s equations. J. Comput. Phys. 230(19), 7151–7175 (2011). https://doi.org/10.1016/j.jcp.2011.05.018

Sánchez, M.A., Du, S., Cockburn, B., Nguyen, N.-C., Peraire, J.: Symplectic Hamiltonian finite element methods for electromagnetics. Comput. Methods Appl. Mech. Eng. 396, 114969 (2022). https://doi.org/10.1016/j.cma.2022.114969

Li, L., Lanteri, S., Perrussel, R.: A hybridizable discontinuous Galerkin method combined to a Schwarz algorithm for the solution of 3D time-harmonic Maxwell’s equations. J. Comput. Phys. 256, 563–581 (2014). https://doi.org/10.1016/j.jcp.2013.09.003

Soon, S.-C., Cockburn, B., Stolarski, H.K.: A hybridizable discontinuous Galerkin method for linear elasticity. Int. J. Numer. Meth. Eng. 80(8), 1058–1092 (2009)

Cockburn, B., Shi, K.: Superconvergent HDG methods for linear elasticity with weakly symmetric stresses. IMA J. Numer. Anal. 33(3), 747–770 (2013). https://doi.org/10.1093/imanum/drs020

Fu, G., Cockburn, B., Stolarski, H.: Analysis of an HDG method for linear elasticity. Int. J. Numer. Meth. Eng. 102(3–4), 551–575 (2015)

Qiu, W., Shen, J., Shi, K.: An HDG method for linear elasticity with strong symmetric stresses. Math. Comput. 87(309), 69–93 (2018)

Sánchez, M.A., Cockburn, B., Nguyen, N.C., Peraire, J.: Symplectic Hamiltonian finite element methods for linear elastodynamics. Comput. Methods Appl. Mech. Eng. 381, 113843 (2021). https://doi.org/10.1016/j.cma.2021.113843

Cockburn, B., Shen, J.: An algorithm for stabilizing hybridizable discontinuous Galerkin methods for nonlinear elasticity. Results Appl. Math. 1, 100001 (2019). https://doi.org/10.1016/j.rinam.2019.01.001

Fernandez, P., Christophe, A., Terrana, S., Nguyen, N.C., Peraire, J.: Hybridized discontinuous Galerkin methods for wave propagation. J. Sci. Comput. 77(3), 1566–1604 (2018). https://doi.org/10.1007/s10915-018-0811-x

Kabaria, H., Lew, A., Cockburn, B.: A hybridizable discontinuous Galerkin formulation for non-linear elasticity. Comput. Methods Appl. Mech. Eng. 283, 303–329 (2015)

Terrana, S., Nguyen, N.C., Bonet, J., Peraire, J.: A hybridizable discontinuous Galerkin method for both thin and 3D nonlinear elastic structures. Comput. Methods Appl. Mech. Eng. 352, 561–585 (2019). https://doi.org/10.1016/J.CMA.2019.04.029

Bai, Y., Fidkowski, K.J.: Continuous artificial-viscosity shock capturing for hybrid discontinuous Galerkin on adapted meshes. AIAA J. 60(10), 5678–5691 (2022). https://doi.org/10.2514/1.J061783

Barter, G.E., Darmofal, D.L.: Shock capturing with PDE-based artificial viscosity for DGFEM: Part I. Formulation. J. Comput. Phys. 229(5), 1810–1827 (2010). https://doi.org/10.1016/j.jcp.2009.11.010

Ching, E.J., Lv, Y., Gnoffo, P., Barnhardt, M., Ihme, M.: Shock capturing for discontinuous Galerkin methods with application to predicting heat transfer in hypersonic flows. J. Comput. Phys. 376, 54–75 (2019). https://doi.org/10.1016/j.jcp.2018.09.016

Nguyen, N. C., Perairey, J.: An adaptive shock-capturing HDG method for compressible flows. In: 20th AIAA computational fluid dynamics conference 2011, American Institute of Aeronautics and Astronautics, Reston, Virigina, 2011, pp. AIAA 2011–3060. https://doi.org/10.2514/6.2011-3060. http://arc.aiaa.org/doi/abs/10.2514/6.2011-3060

Moro, D., Nguyen, N.C., Peraire, J.: Dilation-based shock capturing for high-order methods. Int. J. Numer. Meth. Fluids 82(7), 398–416 (2016). https://doi.org/10.1002/fld.4223

Persson, P. O., Peraire, J.: Sub-cell shock capturing for discontinuous Galerkin methods. In: Collection of Technical Papers-44th AIAA Aerospace Sciences Meeting, Vol. 2, pp. 1408–1420. Reno, Neveda (2006). https://doi.org/10.2514/6.2006-112

Persson, P. O.: Shock capturing for high-order discontinuous Galerkin simulation of transient flow problems. In: 21st AIAA computational fluid dynamics conference, p. 3061. San Diego, CA (2013). https://doi.org/10.2514/6.2013-3061

Funding

This work was supported by the United States Department of Energy under contract DE-NA0003965, the National Science Foundation under grant number NSF-PHY-2028125, the Air Force Office of Scientific Research under Grant No. FA9550-22-1-0356, and the MIT Portugal program under the seed grant number 6950138.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Nguyen, N.C., Peraire, J. Hybridizable Discontinuous Galerkin Methods for the Two-Dimensional Monge–Ampère Equation. J Sci Comput 100, 44 (2024). https://doi.org/10.1007/s10915-024-02604-3

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10915-024-02604-3