Abstract

We present the recent development of hybridizable and embedded discontinuous Galerkin (DG) methods for wave propagation problems in fluids, solids, and electromagnetism. In each of these areas, we describe the methods, discuss their main features, display numerical results to illustrate their performance, and conclude with bibliography notes. The main ingredients in devising these DG methods are (1) a local Galerkin projection of the underlying partial differential equations at the element level onto spaces of polynomials of degree k to parametrize the numerical solution in terms of the numerical trace; (2) a judicious choice of the numerical flux to provide stability and consistency; and (3) a global jump condition that enforces the continuity of the numerical flux to obtain a global system in terms of the numerical trace. These DG methods are termed hybridized DG methods, because they are amenable to hybridization (static condensation) and hence to more efficient implementations. They share many common advantages of DG methods and possess some unique features that make them well-suited to wave propagation problems.

1 Introduction

Discontinuous Galerkin (DG) methods possess many attractive properties for wave propagation problems. In particular, they are locally conservative, high-order accurate, amenable to complex geometries and unstructured meshes, low dissipative and dispersive, highly parallelizable, and more stable than continuous Galerkin (CG) methods for convection-dominated problems. As a result, DG methods have been widely used in conjunction with explicit time-marching schemes to simulate wave phenomena. Explicit time-integration schemes, however, often become impractical due to the severe time-step size restriction, an issue that is overcome by implicit time-marching schemes. When they are paired with implicit time-marching schemes, DG methods yield a much larger system of equations than CG methods due to the duplication of degrees of freedom along the element faces. The high computational cost and memory footprint make implicit DG methods considerably more expensive than CG methods for a wide variety of applications.

The hybridizable DG (HDG) methods were introduced in [25] in the framework of steady-state diffusion as part of the effort of devising efficient implicit DG methods for solving elliptic partial differential equations (PDEs). Indeed, the HDG methods guarantee that only the degrees of freedom of the approximation of the scalar variable on the interelement boundaries are globally coupled, and that the approximate gradient attains optimal order of convergence for elliptic problems [17, 27, 28]. The development of the HDG methods was subsequently extended to a variety of other PDEs: diffusion problems [10, 65], convection-diffusion problems [18, 85, 86, 111], incompressible flow [26, 29, 34, 87, 88], compressible flows [52, 76, 84, 95, 104], continuum mechanics [7, 84, 95, 108], time-dependent acoustic and elastic wave propagation [33, 89], the Helmholtz equation [47, 58, 61], the time-harmonic Maxwell’s equations [72, 90] with the hydrodynamic model [113], and the time-dependent Maxwell’s equations [15]. Since the HDG methods inherit many attractive features of DG methods and offer additional advantages in terms of reduced globally coupled degrees of freedom and enhanced accuracy, they have been widely used in conjunction with implicit time-marching schemes to solve time-dependent problems.

Another appealing feature of the HDG methods is that a superconvergent approximation can be computed through a local (and thus inexpensive and highly parallelizable) post-processing step. The superconvergence property cannot be taken for granted since only some combinations of discontinuous finite element spaces and stabilization functions can ensure that property [31, 32]. Recently, the theory of M-decompositions has provided a simple sufficient condition for the superconvergence. By comparing the dimensions of the space of the approximate trace with the dimensions of the traces of the local volumetric approximations, the M-decompositions provide some guidelines to enrich the gradient space such that the superconvergence is ensured. After being presented for diffusion [19, 20, 23], the M-decompositions tool has been successfully applied to devise superconvergent HDG methods for Stokes flows [22] and linear elasticity [21].

In the setting of wave propagation problems, the HDG methods compare with other finite element methods favorably because they achieve optimal orders of convergence for both the scalar and gradient unknowns and display superconvergence properties [33, 47, 61, 89]. Recently, explicit HDG methods [109] have been introduced for numerically solving the acoustic wave equation. The explicit HDG methods have the same computational cost as other explicit DG methods and provide optimal convergence rates for all the approximate variables. Furthermore, they display a superconvergence property in agreement with the theoretical results obtained in [33]. In spite of the optimal convergence properties, the HDG methods presented in [89, 109] might not be suitable for long-time computations, due to their energy-dissipative characteristics. The dissipative characteristics of HDG for convection-diffusion systems are investigated in [55]. Indeed, it has been observed that dissipative numerical schemes suffer a loss of accuracy for long-time computations, despite their optimal error estimates. Symplectic Hamiltonian HDG methods introduced in [103] are capable of preserving the Hamiltonian structure of the wave equation, while displaying superconvergence properties. Symplectic HDG methods conserve energy and compare favorably with dissipative HDG methods for long-time simulations.

Further extension of the HDG method leads to the introduction of the embedded DG (EDG) method [63, 96] and the interior EDG (IEDG) method [50, 54, 91]. In this paper, we refer to these DG methods as hybridized DG methods, because they are all amenable to hybridization (static condensation) and hence to more efficient implementations. The essential ingredients of hybridized DG methods are (1) a local Galerkin projection of the underlying PDEs at the element level onto spaces of polynomials of degree k to parametrize the numerical solution in terms of the numerical trace; (2) a judicious choice of the numerical flux to provide stability and consistency; and (3) a global jump condition that enforces the continuity of the numerical flux to arrive at a global weak formulation in terms of the numerical trace. The only difference among them lies in the definition of the approximation space for the numerical trace. In particular, the numerical trace space of the EDG method is a subset of that of the IEDG method, which in turn is a subset of that of the HDG method. While the EDG method and the IEDG method do not have superconvergence properties like the HDG method, they yield a smaller system of equations than the HDG method. Indeed, the EDG method has the same degrees of freedom and sparsity pattern as the static condensation of the CG method. Since the degrees of freedom of the numerical trace on the domain boundary can be eliminated in the IEDG method, IEDG has even fewer globally coupled unknowns than the EDG method. Thus, the IEDG method is more computationally efficient than both the EDG method and the HDG method.

The remainder of the paper is organized as follows. In Sect. 2, we introduce preliminary concepts and the notation used throughout the paper. In Sect. 3, we describe hybridized DG methods for solving the incompressible and compressible Navier–Stokes equations, and present numerical results to demonstrate their performance for a range of flow regimes and wave phenomena. In Sect. 4, we focus on HDG methods for linear and nonlinear elastodynamics, and show some convergence results for a thin structure. In Sect. 5, we introduce HDG methods for time-dependent Maxwell’s equations with the divergence-free constraint, and present results to verify the convergence and accuracy order. We conclude the paper with our perspectives on future research in Sect. 6.

2 Preliminaries

2.1 Finite Element Mesh

Let \(T > 0\) be a final time and let \(\Omega \subset \mathbb {R}^d\) be an open, connected and bounded physical domain with Lipschitz boundary \(\partial \Omega \). We denote by \(\mathcal {T}_h\) a collection of disjoint, regular, p-th degree curved elements K that partition \(\Omega \), and set \(\partial \mathcal {T}_h := \{ \partial K : K \in \mathcal {T}_h \} \) to be the collection of the boundaries of the elements in \(\mathcal {T}_h\). For an element K of the collection \(\mathcal {T}_h\), \(F= \partial K \cap \partial \Omega \) is a boundary face if its \(d-1\) Lebesgue measure is nonzero. For two elements \(K^+\) and \(K^-\) of \(\mathcal {T}_h\), \(F=\partial K^{+} \cap \partial K^{-}\) is the interior face between \(K^+\) and \(K^-\) if its \(d-1\) Lebesgue measure is nonzero. We denote by \(\mathcal {E}_h^I\) and \(\mathcal {E}_h^B\) the set of interior and boundary faces, respectively, and we define \(\mathcal {E}_h := \mathcal {E}_h^I \cup \mathcal {E}_h^B\) as the union of interior and boundary faces. Note that, by definition, \(\partial \mathcal {T}_h\) and \(\mathcal {E}_h\) are different. More precisely, an interior face is counted twice in \(\partial \mathcal {T}_h\) but only once in \(\mathcal {E}_h\), whereas a boundary face is counted once both in \(\partial \mathcal {T}_h\) and \(\mathcal {E}_h\).

2.2 Finite Element Spaces

Let \(\mathcal {P}_k(D)\) denote the space of polynomials of degree at most k on a domain \(D \subset \mathbb {R}^n\), let \(L^2(D)\) be the space of Lebesgue square-integrable functions on D, and \(\mathcal {C}^0(D)\) the space of continuous functions on D. Also, let \(\varvec{\psi }^p_K\) denote the p-th degree parametric mapping from the reference element \(K_{ref}\) to an element \(K \in \mathcal {T}_h\) in the physical domain, and \(\varvec{\phi }^p_F\) be the p-th degree parametric mapping from the reference face \(F_{ref}\) to a face \(F \in \mathcal {E}_h\) in the physical domain. We then introduce the following discontinuous finite element spaces in \(\mathcal {T}_h\),

and on the mesh skeleton \(\mathcal {E}_{h}\),

where m is an integer whose particular value depends on the PDE. Note that \(\varvec{\widehat{\mathcal {M}}}_{h}^k\) consists of functions which are discontinuous at the boundaries of the faces, whereas \(\varvec{\widetilde{\mathcal {M}}}_{h}^k\) consists of functions that are continuous at the boundaries of the faces. We also denote by \(\varvec{\mathcal {M}}_{h}^k\) a traced finite element space that satisfies \(\varvec{\widetilde{\mathcal {M}}}_{h}^k \subseteq \varvec{\mathcal {M}}_{h}^k \subseteq \varvec{\widehat{\mathcal {M}}}_{h}^k\). In particular, we define

where \(\mathcal {E}^\mathrm{E}_h\) is a subset of \(\mathcal {E}_h\). Note that \(\varvec{\mathcal {M}}_{h}^k\) consists of functions which are continuous on \(\mathcal {E}^\mathrm{E}_h\) and discontinuous on \(\mathcal {E}^\mathrm{H}_h := \mathcal {E}_h \backslash \mathcal {E}^\mathrm{E}_h\). Furthermore, if \(\mathcal {E}^\mathrm{E}_h = \emptyset \) then \(\varvec{\mathcal {M}}_{h}^k = \varvec{\widehat{\mathcal {M}}}_{h}^k\), and if \(\mathcal {E}^\mathrm{E}_h = \mathcal {E}_h\) then \(\varvec{\mathcal {M}}_{h}^k = \varvec{\widetilde{\mathcal {M}}}_{h}^k\).
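For concreteness, a minimal sketch of how such spaces may be written is given here. It assumes that each space consists of mapped polynomials of degree k on the reference element \(K_{ref}\) or the reference face \(F_{ref}\); the symbol \(\mathcal {W}_h^k\) for the scalar space is borrowed from its use in Sect. 3.1.2, and the exact form is an assumption rather than a quotation:

\[
\begin{aligned}
\varvec{\mathcal {Q}}_h^k &= \big \{ \varvec{r} \in [L^2(\mathcal {T}_h)]^{m \times d} \ : \ \varvec{r}|_K \circ \varvec{\psi }^p_K \in [\mathcal {P}_k(K_{ref})]^{m \times d} \ \ \forall K \in \mathcal {T}_h \big \} , \\
\varvec{\mathcal {V}}_h^k &= \big \{ \varvec{w} \in [L^2(\mathcal {T}_h)]^{m} \ : \ \varvec{w}|_K \circ \varvec{\psi }^p_K \in [\mathcal {P}_k(K_{ref})]^{m} \ \ \forall K \in \mathcal {T}_h \big \} , \\
\mathcal {W}_h^k &= \big \{ q \in L^2(\mathcal {T}_h) \ : \ q|_K \circ \varvec{\psi }^p_K \in \mathcal {P}_k(K_{ref}) \ \ \forall K \in \mathcal {T}_h \big \} , \\
\varvec{\widehat{\mathcal {M}}}_{h}^k &= \big \{ \varvec{\mu } \in [L^2(\mathcal {E}_h)]^{m} \ : \ \varvec{\mu }|_F \circ \varvec{\phi }^p_F \in [\mathcal {P}_k(F_{ref})]^{m} \ \ \forall F \in \mathcal {E}_h \big \} , \\
\varvec{\widetilde{\mathcal {M}}}_{h}^k &= \big \{ \varvec{\mu } \in [\mathcal {C}^0(\mathcal {E}_h)]^{m} \ : \ \varvec{\mu }|_F \circ \varvec{\phi }^p_F \in [\mathcal {P}_k(F_{ref})]^{m} \ \ \forall F \in \mathcal {E}_h \big \} , \\
\varvec{\mathcal {M}}_{h}^k &= \big \{ \varvec{\mu } \in \varvec{\widehat{\mathcal {M}}}_{h}^k \ : \ \varvec{\mu }|_{\mathcal {E}^\mathrm{E}_h} \in [\mathcal {C}^0(\mathcal {E}^\mathrm{E}_h)]^{m} \big \} .
\end{aligned}
\]

Written this way, the inclusions \(\varvec{\widetilde{\mathcal {M}}}_{h}^k \subseteq \varvec{\mathcal {M}}_{h}^k \subseteq \varvec{\widehat{\mathcal {M}}}_{h}^k\) are immediate, because each space only adds a continuity requirement on a larger part of the skeleton.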

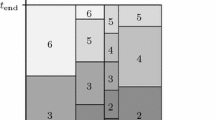

Due to the discontinuous nature of the approximation spaces in (1), only the degrees of freedom of the approximate trace of the solution on the mesh skeleton \(\mathcal {E}_h\), approximated by functions in \(\varvec{\mathcal {M}}_h^k\), are globally coupled in hybridized DG methods [85, 91]. Hence, different choices of \(\mathcal {E}^\mathrm{E}_h\) lead to different schemes within the hybridized DG family. We briefly discuss three important choices of \(\mathcal {E}^\mathrm{E}_h\). The first one is \(\mathcal {E}^\mathrm{E}_h = \emptyset \) and implies \(\varvec{\mathcal {M}}_{h}^k = \widehat{\varvec{\mathcal {M}}}_{h}^k\). This choice corresponds to the hybridizable discontinuous Galerkin (HDG) method [25]. The second choice is \(\mathcal {E}^\mathrm{E}_h = \mathcal {E}_h\) which implies \(\varvec{\mathcal {M}}_{h}^k = \widetilde{\varvec{\mathcal {M}}}_{h}^k\) and thus enforces the continuity of the approximate trace on all faces. This choice corresponds to the embedded discontinuous Galerkin (EDG) method introduced in [63, 96]. Since \(\widetilde{\varvec{\mathcal {M}}}_{h}^k \subset \widehat{\varvec{\mathcal {M}}}_{h}^k\), the EDG method has fewer globally coupled degrees of freedom than the HDG method. The third choice of the approximation space \(\varvec{\mathcal {M}}_{h}^k\) is obtained by setting \(\mathcal {E}^\mathrm{E}_h = \mathcal {E}^\mathrm{I}_h\), which implies \(\widetilde{\varvec{\mathcal {M}}}_{h}^k \subset \varvec{\mathcal {M}}_{h}^k \subset \widehat{\varvec{\mathcal {M}}}_{h}^k\), where the inclusions are strict. The resulting approximation space consists of functions which are discontinuous over the union of the boundary faces \(\mathcal {E}^{B}_h\) and continuous over the union of the interior faces \(\mathcal {E}^\mathrm{I}_h\). The resulting method has a characteristic of the HDG method on the boundary faces and a characteristic of the EDG method on interior faces.
Because the approximate trace is taken to be continuous only on the interior faces, we shall name this method interior embedded DG (IEDG) method [50, 54, 91] to distinguish it from the EDG method for which the trace is continuous on all faces. We note that the IEDG method enjoys advantages of both the HDG and the EDG methods. First, IEDG inherits the reduced number of global degrees of freedom of EDG. In fact, thanks to the use of face-by-face local polynomial spaces on \(\mathcal {E}_h^B\) in the IEDG method, the degrees of freedom of the approximate trace on \(\mathcal {E}_h^B\) can be locally eliminated to yield a global matrix system involving only the degrees of freedom of the numerical trace on the interior faces. As a result, the globally coupled unknowns of the IEDG method are even fewer than those of the EDG method, and IEDG is more efficient than both EDG and HDG. Second, the IEDG scheme enforces the boundary conditions as strongly as the HDG method, thus retaining the boundary condition robustness of HDG. These features make the IEDG method an excellent alternative to the HDG and EDG methods. For additional details on the efficiency and robustness of HDG, EDG and IEDG, the interested reader is referred to [91].
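The ordering of the three methods by number of globally coupled unknowns can be illustrated with a small counting exercise. The sketch below is not taken from the references: it assumes a uniform n-by-n quadrilateral mesh in two dimensions, a nodal Lagrange basis of degree \(k \ge 1\) on each face, and m components per node, and the function name `trace_dof_counts` is hypothetical.

```python
def trace_dof_counts(n, k, m=1):
    """Count globally coupled trace unknowns on a uniform n-by-n quadrilateral mesh.

    Illustrative assumptions: nodal Lagrange basis of degree k >= 1 on each
    face (k + 1 nodes per face, k - 1 of them strictly inside the face) and
    m solution components per node.
    """
    def vid(i, j):
        # Global index of the vertex (i, j) on the (n + 1) x (n + 1) vertex grid.
        return i * (n + 1) + j

    interior, boundary = [], []
    for line in range(n + 1):              # grid line index, either direction
        target = boundary if line in (0, n) else interior
        for s in range(n):                 # segment index along the grid line
            target.append((vid(line, s), vid(line, s + 1)))  # first coordinate fixed
            target.append((vid(s, line), vid(s + 1, line)))  # second coordinate fixed

    faces = interior + boundary
    all_vertices = {v for f in faces for v in f}
    interior_face_vertices = {v for f in interior for v in f}

    # HDG: every face carries its own k + 1 nodes (discontinuous at face ends).
    hdg = m * (k + 1) * len(faces)
    # EDG: vertices are shared, plus k - 1 nodes inside every face.
    edg = m * (len(all_vertices) + (k - 1) * len(faces))
    # IEDG: boundary-face unknowns are eliminated locally; only nodes lying
    # on interior faces remain globally coupled.
    iedg = m * (len(interior_face_vertices) + (k - 1) * len(interior))
    return {"HDG": hdg, "EDG": edg, "IEDG": iedg}


counts = trace_dof_counts(n=2, k=1)
print(counts)  # {'HDG': 24, 'EDG': 9, 'IEDG': 5}
```

Under these assumptions the counts satisfy IEDG < EDG < HDG, in line with the discussion above; the actual savings on unstructured or three-dimensional meshes depend on the mesh connectivity.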

It remains to define inner products associated with our finite element spaces. For functions a and b in \(L^2(D)\), we denote \((a,b)_D = \int _{D} a b\) if D is a domain in \(\mathbb {R}^d\) and \(\left\langle a,b\right\rangle _D = \int _{D} a b\) if D is a domain in \(\mathbb {R}^{d-1}\). Likewise, for functions \(\varvec{a}\) and \(\varvec{b}\) in \([L^2(D)]^m\), we denote \((\varvec{a},\varvec{b})_D = \int _{D} \varvec{a} \cdot \varvec{b}\) if D is a domain in \(\mathbb {R}^d\) and \(\left\langle \varvec{a},\varvec{b}\right\rangle _D = \int _{D} \varvec{a} \cdot \varvec{b}\) if D is a domain in \(\mathbb {R}^{d-1}\). For functions \(\varvec{A}\) and \(\varvec{B}\) in \([L^2(D)]^{m \times d}\), we denote \((\varvec{A},\varvec{B})_D = \int _{D} {\mathrm {tr}}(\varvec{A}^T \varvec{B})\) if D is a domain in \(\mathbb {R}^d\) and \(\left\langle \varvec{A},\varvec{B}\right\rangle _D = \int _{D} {\mathrm {tr}}(\varvec{A}^T \varvec{B})\) if D is a domain in \(\mathbb {R}^{d-1}\), where \({\mathrm {tr}} \, ( \cdot ) \) is the trace operator of a square matrix. We finally introduce the following element inner products

and face inner products

These notations and definitions are necessary for the remainder of the paper.

2.3 Time-Marching Methods

We describe time-marching methods to integrate in time the following index-1 differential-algebraic equation (DAE) system:

with initial condition \(\varvec{u}(t = 0) = \varvec{u}_0\) and where \(\varvec{M}\) is a matrix. The above DAE system will arise from the hybridized DG discretization of time-dependent PDEs in fluids, solids, and electromagnetism. In this context, \(\varvec{M}\) is the so-called mass matrix.

2.3.1 Linear Multistep Methods

We denote by \(\varvec{u}^n\) an approximation for the function \(\varvec{u}(t)\) at discrete time \(t^n = n \, \Delta t\), where \(\Delta t\) is the time step and n is an integer. Linear multistep (LM) methods use information from the previous s steps, \(\{\varvec{u}^{n+i}\}_{i=0}^{s-1}\), to calculate the solution at the next step \(\varvec{u}^{n+s}\). When we apply a general LM method to the differential part (3a) and treat the algebraic part (3b) implicitly, we arrive at the following algebraic system:

The coefficient vectors \(\varvec{a} = (a_0,a_1,\ldots ,a_s)\) and \(\varvec{b} = (b_0,b_1,\ldots ,b_s)\) determine the method. If \(b_s=0\), the method is called explicit; otherwise, it is called implicit. Note we need to solve the system of equations (4b) regardless of whether the LM method is explicit or implicit. For this reason and due to their superior stability properties, implicit methods are usually preferred over explicit methods for the temporal integration of DAE systems arising from the spatial hybridized DG discretization of time-dependent PDEs.

Backward difference formula (BDF) schemes are the most popular implicit LM methods. For a BDF scheme with s steps, the system (4) becomes
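As an illustration of how a BDF step couples the differential and algebraic parts, the following sketch advances a small linear index-1 DAE. The semi-explicit form \(\varvec{M} \dot{\varvec{u}} = \varvec{A} \varvec{u} + \varvec{B} \varvec{v}\), \(\varvec{0} = \varvec{C} \varvec{u} + \varvec{D} \varvec{v}\) is an assumption made for this example, as are the names `BDF_COEFFS` and `bdf_step`; only the standard BDF1 and BDF2 coefficients are used.

```python
import numpy as np

# BDF coefficients alpha_0..alpha_s, normalized so that
#   sum_i alpha_i * M u^{n+i} = dt * f(u^{n+s}, v^{n+s})
BDF_COEFFS = {1: np.array([-1.0, 1.0]),
              2: np.array([0.5, -2.0, 1.5])}


def bdf_step(M, A, B, C, D, history, dt):
    """Advance the linear DAE  M u' = A u + B v,  0 = C u + D v  by one BDF step.

    `history` holds the s most recent u vectors (oldest first); its length
    selects the BDF order. D is assumed invertible, so the DAE has index 1.
    """
    alpha = BDF_COEFFS[len(history)]
    nu, nv = M.shape[0], D.shape[0]
    # Known contribution of the previous steps to the differential equation.
    rhs_u = -sum(a * (M @ u) for a, u in zip(alpha[:-1], history))
    # Differential and algebraic equations are solved together, implicitly.
    lhs = np.block([[alpha[-1] * M - dt * A, -dt * B],
                    [C, D]])
    sol = np.linalg.solve(lhs, np.concatenate([rhs_u, np.zeros(nv)]))
    return sol[:nu], sol[nu:]


# Scalar test problem: u' = -u + v, 0 = u + v, i.e. u' = -2u with u(0) = 1.
M = np.array([[1.0]]); A = np.array([[-1.0]]); B = np.array([[1.0]])
C = np.array([[1.0]]); D = np.array([[1.0]])

dt = 0.01
u0 = np.array([1.0])
u1, _ = bdf_step(M, A, B, C, D, [u0], dt)        # BDF1 start-up step
hist = [u0, u1]
for _ in range(99):                               # BDF2 up to t = 1
    u_new, v_new = bdf_step(M, A, B, C, D, hist, dt)
    hist = [hist[1], u_new]

error = abs(hist[-1][0] - np.exp(-2.0))
```

The algebraic constraint is enforced at the new time level in every step, which is why a linear solve is required even when the differential part is treated with an explicit formula.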

2.3.2 Implicit Runge-Kutta Methods

The coefficients of an s-stage Runge-Kutta (RK) method, \(a_{ij}, \, b_i, \, c_i , \ 1 \le i,j \le s\), are usually arranged in the form of a Butcher tableau:

For the family of implicit RK (IRK) methods, the RK matrix \(a_{ij}\) must be invertible. Let \(d_{ij}\) denote the inverse of \(a_{ij}\), and let \(\varvec{u}^{n,i}\) be the approximation of \(\varvec{u}(t)\) at discrete times \(t^{n,i} = t^n + c_i \Delta t , \ 1 \le i \le s\). The s-stage IRK method for the DAE system (3) can be sketched as follows. First, we solve the following 2s coupled systems of equations

for \((\varvec{u}^{n,i}, \varvec{v}^{n,i})\). Then we compute \(\varvec{u}^{n+1}\) from

where \(e_j = \sum _{i=1}^s b_i d_{ij}\). Finally, we solve the following system of equations for \(\varvec{v}^{n+1}\):

Note it is possible to advance the system (7a)–(7b) in time without solving (7c). Hence, we only need to solve (7c) at the particular time steps that we need \(\varvec{v}^{n+1}\) for post-processing purposes.

If the RK matrix \(a_{ij}\) is lower-triangular, the method is called a diagonally implicit RK (DIRK) scheme [2]. In the case of a DIRK method, the matrix \(d_{ij}\) is also lower-triangular, so the stages of the system (7) can be solved one at a time: each stage depends only on the stages already computed and can be viewed as a BDF step of the form (5).
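The quantities \(d_{ij}\) and \(e_j = \sum _{i=1}^s b_i d_{ij}\) are easy to compute for a concrete tableau. The sketch below uses the well-known two-stage, L-stable SDIRK scheme with \(\gamma = 1 - 1/\sqrt{2}\); this tableau is chosen here purely as an example and is not necessarily the one used in the references.

```python
import numpy as np

# Two-stage SDIRK tableau (illustrative choice), gamma = 1 - 1/sqrt(2).
gamma = 1.0 - 1.0 / np.sqrt(2.0)
a = np.array([[gamma, 0.0],
              [1.0 - gamma, gamma]])   # lower-triangular RK matrix
b = np.array([1.0 - gamma, gamma])     # weights (equal to the last row of a)
c = a.sum(axis=1)                      # abscissae c_i = sum_j a_ij

d = np.linalg.inv(a)                   # d_ij, the inverse of the RK matrix
e = b @ d                              # e_j = sum_i b_i d_ij

print(np.allclose(d, np.tril(d)))      # d inherits the lower-triangular structure
print(e)
```

Because the weights coincide with the last row of the RK matrix in this tableau, \(e\) equals the last unit vector up to round-off, so only the final stage enters through the coefficients \(e_j\).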

2.4 Parallel Iterative Solvers

We briefly describe the parallel Newton–Krylov–Schwarz method used to solve the (possibly nonlinear) system of algebraic equations that arises from the temporal discretization of the DAE system (3) discussed in the previous section. A detailed description of the iterative solver can be found in [50, 54].

2.4.1 Nonlinear Solver

To simplify the notation, we shall drop the superscripts that denote the time steps. At any given time step, the nonlinear system of equations (5) reads as

where \(\varvec{h}\) and \(\varvec{g}\) are the discrete nonlinear residuals associated with (5a) and (5b), respectively. We solve this nonlinear system using Newton’s method. In particular, the linearization of (8) around a given state vector \((\bar{\varvec{u}}, \bar{\varvec{v}})\) yields the following linear system:

Here the matrices \(\varvec{A}\), \(\varvec{B}\), \(\varvec{C}\), and \(\varvec{D}\) have entries \(A_{ij} = \frac{\partial h_i(\bar{\varvec{u}}, \bar{\varvec{v}})}{\partial u_j}\), \(B_{ij} = \frac{\partial h_i(\bar{\varvec{u}}, \bar{\varvec{v}})}{\partial v_j}\), \(C_{ij} = \frac{\partial g_i(\bar{\varvec{u}}, \bar{\varvec{v}})}{\partial u_j}\), \(D_{ij} = \frac{\partial g_i(\bar{\varvec{u}}, \bar{\varvec{v}})}{\partial v_j}\), respectively. Since the matrix \(\varvec{A}\) has block-diagonal structure due to the discontinuous nature of the approximation spaces defined in Sect. 2.2, \(\delta \varvec{u}\) can be readily eliminated to obtain a reduced system in terms of \(\delta \varvec{v}\) only

where \(\varvec{K}= \varvec{D} - \varvec{C} \ \varvec{A}^{-1} \varvec{B}\) and \(\varvec{r} = \varvec{g} - \varvec{C} \ \varvec{A}^{-1} \varvec{h}\). This is the global system to be solved at every Newton iteration.
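The elimination of \(\delta \varvec{u}\) can be sketched in a few lines. The example below assumes the sign convention \([\varvec{A} \ \varvec{B}; \varvec{C} \ \varvec{D}] \, [\delta \varvec{u}; \delta \varvec{v}] = -[\varvec{h}; \varvec{g}]\) for the Newton system, so that the reduced system reads \(\varvec{K} \, \delta \varvec{v} = -\varvec{r}\); the function name `condensed_solve` and the random test matrices are hypothetical.

```python
import numpy as np


def condensed_solve(A_blocks, B, C, D, h, g):
    """Solve [[A, B], [C, D]] [du, dv] = -[h, g] with A block-diagonal.

    A is never assembled or inverted globally: each element block is solved
    independently, which is what makes the elimination cheap and parallel.
    """
    sizes = [blk.shape[0] for blk in A_blocks]
    offsets = np.concatenate([[0], np.cumsum(sizes)])
    AinvB = np.empty_like(B)
    Ainvh = np.empty_like(h)
    for blk, lo, hi in zip(A_blocks, offsets[:-1], offsets[1:]):
        AinvB[lo:hi] = np.linalg.solve(blk, B[lo:hi])
        Ainvh[lo:hi] = np.linalg.solve(blk, h[lo:hi])
    K = D - C @ AinvB                  # Schur complement K = D - C A^{-1} B
    r = g - C @ Ainvh                  # reduced residual r = g - C A^{-1} h
    dv = np.linalg.solve(K, -r)        # global solve for the trace update
    du = -(Ainvh + AinvB @ dv)         # element-wise recovery of du
    return du, dv


# Small synthetic example: 4 elements with 3 local unknowns each, 5 trace unknowns.
rng = np.random.default_rng(0)
n_elem, nb, nv = 4, 3, 5
nu = n_elem * nb
A_blocks = [4.0 * np.eye(nb) + rng.random((nb, nb)) for _ in range(n_elem)]
B = 0.1 * rng.random((nu, nv))
C = 0.1 * rng.random((nv, nu))
D = 4.0 * np.eye(nv) + 0.1 * rng.random((nv, nv))
h = rng.random(nu)
g = rng.random(nv)

du, dv = condensed_solve(A_blocks, B, C, D, h, g)

# Reference: assemble and solve the full system directly.
A_full = np.zeros((nu, nu))
for e_idx, blk in enumerate(A_blocks):
    A_full[e_idx * nb:(e_idx + 1) * nb, e_idx * nb:(e_idx + 1) * nb] = blk
full = np.block([[A_full, B], [C, D]])
ref = np.linalg.solve(full, -np.concatenate([h, g]))
```

The dense solve for \(\delta \varvec{v}\) stands in for the preconditioned Krylov solver described in Sect. 2.4.2.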

To accelerate the convergence of Newton’s iterations we compute an initial guess as a solution of a nonlinear least squares problem in which we seek to minimize the norm of the residuals over a subspace. The subspace consists of solutions already computed from the previous time steps. The Levenberg–Marquardt algorithm is used to solve the nonlinear least squares problem. Further details can be found in [50, 54].

2.4.2 Linear Solver

The linear system (10) is solved in parallel using the restarted GMRES method [101] with iterative classical Gram-Schmidt (ICGS) orthogonalization. In order to accelerate convergence, a left preconditioner \(\varvec{P}^{-1}\) is used and the linear system (10) is replaced by

A restricted additive Schwarz (RAS) [6] method with \(\delta \)-level overlap is used as parallel preconditioner. This approach relies on a decomposition of the unknowns in \(\delta {\varvec{v}}\) among parallel workers, which is performed as described in [50]. The RAS preconditioner is defined as

where \( \varvec{K}_{i} = \varvec{R}_{i}^{\delta } \ \varvec{K} \ (\varvec{R}_{i}^{\delta })^T\) is the subdomain problem, \(\varvec{R}_{i}^{\beta }\) is the restriction operator onto the subspace associated with the nodes in the \(\beta \)-level overlap subdomain number i, and N denotes the number of subdomains. In our experience, \(\delta = 1\) provides the best balance between communication cost and number of GMRES iterations for almost all problems. In practice, we replace \(\varvec{K}_{i}^{-1}\) by the inverse of the block incomplete LU factorization with zero fill-in, BILU(0), of \(\varvec{K}_{i}\), that is, \(\varvec{K}_{i}^{-1} \approx \varvec{\widetilde{U}}_{i}^{-1} \ \varvec{\widetilde{L}}_{i}^{-1}\). The BILU(0) factorization in each subdomain is performed in conjunction with a Minimum Discarded Fill (MDF) ordering algorithm [50].
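The action of a RAS preconditioner can be sketched as follows. The example assumes the standard RAS form \(\varvec{P}^{-1} = \sum _{i=1}^N (\varvec{R}_i^0)^T \, \varvec{K}_i^{-1} \, \varvec{R}_i^{\delta }\), uses exact subdomain solves in place of BILU(0), and replaces GMRES with a plain preconditioned Richardson iteration on a one-dimensional Laplacian; all of these are simplifications made for illustration, and `ras_apply` is a hypothetical name.

```python
import numpy as np


def ras_apply(K, r, owned, overlapped):
    """Apply z = P^{-1} r with P^{-1} = sum_i (R_i^0)^T K_i^{-1} R_i^delta.

    owned[i]      : indices assigned to subdomain i (disjoint partition, R_i^0)
    overlapped[i] : owned indices plus the overlap layer (R_i^delta)
    """
    z = np.zeros_like(r)
    for own, ovl in zip(owned, overlapped):
        K_i = K[np.ix_(ovl, ovl)]             # K_i = R_i^delta K (R_i^delta)^T
        w = np.linalg.solve(K_i, r[ovl])      # exact solve (BILU(0) in practice)
        keep = np.isin(ovl, own)              # discard values in the overlap
        z[ovl[keep]] = w[keep]
    return z


# 1D Laplacian with two subdomains and one level of overlap (delta = 1).
n = 20
K = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
b = np.ones(n)
owned = [np.arange(0, 10), np.arange(10, 20)]
overlapped = [np.arange(0, 11), np.arange(9, 20)]

x = np.zeros(n)
for _ in range(200):                          # preconditioned Richardson iteration
    x += ras_apply(K, b - K @ x, owned, overlapped)

residual_norm = np.linalg.norm(b - K @ x)
```

Each subdomain solve reads data from its overlap region but writes only to the unknowns it owns, so no communication is needed when the local corrections are combined; this is the feature that distinguishes RAS from the classical additive Schwarz method.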

3 Wave Propagation in Fluids

In this section, we focus on hybridized DG methods for the incompressible and compressible Navier–Stokes equations. We describe the numerical treatment of shock waves using physics-based shock detection and artificial viscosity, and present numerical results to demonstrate the performance of the methods. The section ends with bibliography notes.

3.1 Incompressible Navier–Stokes Equations

3.1.1 Governing Equations

The unsteady incompressible Navier–Stokes equations for a Newtonian fluid with Dirichlet boundary conditions are given by

where \(\nu \) denotes the kinematic viscosity of the fluid, p the pressure, \(\varvec{v} = (v_1, \dots , v_d)\) the velocity vector, \(\varvec{v}_0\) the initial velocity field, which satisfies the divergence-free condition \(\nabla \cdot \varvec{v}_0 = 0\) for all \(x \in \Omega \), and \(\varvec{g}\) the Dirichlet data, which satisfies the compatibility condition \(\int _{\partial \Omega } \varvec{g} \cdot \varvec{n} = 0\) for all \(t \in (0,T)\). The treatment of other boundary conditions is discussed in Sect. 3.1.3.

3.1.2 Formulation

HDG methods are the only type of hybridized DG method that has been applied to incompressible flows. The HDG method for the unsteady incompressible Navier–Stokes equations (13), as originally proposed in [82, 88], reads as follows: Find \(\big ( \varvec{q}_h(t) , \varvec{v}_h(t) , p_h(t) , \widehat{\varvec{v}}_h(t) \big ) \in \varvec{\mathcal {Q}}_h^k \times \varvec{\mathcal {V}}_h^k \times \mathcal {W}_h^k \times \widehat{\varvec{\mathcal {M}}}_h^k\) such that

for all \((\varvec{r},\varvec{w},q,\varvec{\mu }) \in \varvec{\mathcal {Q}}_h^k \times \varvec{\mathcal {V}}_h^k \times \mathcal {W}_h^k \times \widehat{\varvec{\mathcal {M}}}_h^k\) and all \(t \in (0,T)\), and

for all \(\varvec{w} \in \varvec{\mathcal {V}}_h^k\). The integer m in the definition of the spaces \(\varvec{\mathcal {Q}}_h^k\), \(\varvec{\mathcal {V}}_h^k\) and \(\widehat{\varvec{\mathcal {M}}}_h^k\) in Equations (1)−(2) is \(m=d\) for the incompressible Navier–Stokes equations. Finally, the numerical flux \(\widehat{\varvec{f}}_h\) is defined as

where \(\varvec{n}\) is the unit normal vector pointing outwards from the elements, \(\varvec{I}_d \in \mathbb {R}^{d \times d}\) is the identity matrix, and \(\varvec{S} \in \mathbb {R}^{d \times d}\) is the so-called stabilization matrix which may depend on \(\varvec{v}_h\) and \(\widehat{\varvec{v}}_h\). The stabilization matrix is usually given by \(\varvec{S} = \varvec{S}^{\mathcal {I}} + \varvec{S}^{\mathcal {V}}\), where \(\varvec{S}^{\mathcal {I}}\) stabilizes the inviscid (convective) operator and \(\varvec{S}^{\mathcal {V}}\) stabilizes the viscous (diffusive) operator. These stabilization matrices are typically chosen as \(\varvec{S}^{\mathcal {I}} = \tau _i \, \varvec{I}_d\) and \(\varvec{S}^{\mathcal {V}} = \tau _v \, \varvec{I}_d\), where \(\tau _i\) is the inviscid stabilization parameter and \(\tau _v\) is the viscous stabilization parameter. Common choices for the former include local \(\tau _i = |\widehat{\varvec{v}}_h \cdot \varvec{n}|\) and global \(\tau _i = \sup _{\partial \mathcal {T}_h} |\widehat{\varvec{v}}_h\cdot \varvec{n}|\) Lax-Friedrichs type approaches, whereas the latter is typically defined as \(\tau _v = \nu / \ell \) for some characteristic length scale \(\ell \) [82, 88].
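The local choice of the stabilization matrix described above amounts to a one-line computation at each quadrature point. The following sketch assembles \(\varvec{S} = (\tau _i + \tau _v) \, \varvec{I}_d\) with the local Lax-Friedrichs value \(\tau _i = |\widehat{\varvec{v}}_h \cdot \varvec{n}|\) and \(\tau _v = \nu / \ell \); the function name and the sample values are hypothetical.

```python
import numpy as np


def stabilization_matrix(v_hat, n, nu, ell):
    """Return S = (tau_i + tau_v) I_d at a single point on a face.

    v_hat : approximate velocity trace at the point (length d)
    n     : unit outward normal (length d)
    nu    : kinematic viscosity
    ell   : characteristic length scale (user-chosen)
    """
    v_hat = np.asarray(v_hat, dtype=float)
    n = np.asarray(n, dtype=float)
    tau_i = abs(v_hat @ n)            # local Lax-Friedrichs inviscid parameter
    tau_v = nu / ell                  # viscous parameter
    return (tau_i + tau_v) * np.eye(v_hat.size)


S = stabilization_matrix(v_hat=[3.0, 4.0], n=[1.0, 0.0], nu=0.1, ell=2.0)
```

For the global variant, `tau_i` would instead be the supremum of \(|\widehat{\varvec{v}}_h \cdot \varvec{n}|\) over all element boundaries, computed once per nonlinear iteration.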

3.1.3 Boundary Conditions

We discuss the numerical treatment of other boundary conditions. In particular, we consider boundary conditions of the form

where \(\varvec{B}\) is a linear boundary operator, and \(\partial \Omega _D\) and \(\partial \Omega _N\) are such that \(\partial \Omega _D \cup \partial \Omega _N = \partial \Omega \) and \(\partial \Omega _D \cap \partial \Omega _N = \emptyset \). In order to incorporate these boundary conditions into the HDG discretization, it suffices to replace Eq. (14d) by

where \(\widehat{\varvec{b}}_h\) is a discretized version of \(\varvec{B} \cdot \varvec{n}\). Some examples of \(\varvec{B}\) and the corresponding \(\widehat{\varvec{b}}_h\) are given in Table 1. Note that \((\varvec{q} - \varvec{q}^T) \cdot \varvec{n} = \varvec{\omega } \times \varvec{n}\), where \(\varvec{\omega }\) denotes the vorticity vector, and thus the third and fourth rows in Table 1 correspond to boundary conditions on the vorticity. Other linear boundary conditions can be treated in a similar manner.

3.1.4 Implementation and Local Post-processing

The implementation is discussed in [88]. In short, two different strategies for the Newton–Raphson linearization are proposed in [82, 88]. In the first strategy, the linearized system is hybridized to obtain a reduced linear system involving the degrees of freedom of the approximate velocity and average pressure. The reduced linear system has the structure of a saddle-point problem. In the second strategy, the augmented Lagrangian method developed for the Stokes equations [29, 87] is used to solve the linearized system. Within each iteration of the augmented Lagrangian method, a linear system involving the degrees of freedom of the approximate velocity only is solved.

The post-processing procedure proposed in [26, 88] can be used to obtain an exactly divergence-free, \(\varvec{H}({\mathrm{div}})\)-conforming approximate velocity \(\varvec{v}_h^*\). This post-processing procedure is local (i.e. it is performed at the element level) and thus adds very little to the overall computational cost. Numerical results presented in [88] show that the approximate pressure, velocity and velocity gradient converge with the optimal order \(k+1\) for diffusion-dominated problems with smooth solutions. In such cases, the post-processed velocity \(\varvec{v}_h^*\) converges with the order \(k+2\) for \(k \ge 1\).

3.2 Compressible Navier–Stokes Equations

3.2.1 Governing Equations

The unsteady compressible Navier–Stokes equations read as

Here, \(\varvec{u} = (\rho , \rho v_{j}, \rho E), \ j=1,\ldots ,d\) is the m-dimensional (\(m = d+2\)) vector of conserved quantities (i.e. density, momentum and total energy), \(\varvec{u}_0\) is an initial condition, \(\varvec{B}\) is a boundary operator, and \(\varvec{F}(\varvec{u},\varvec{q})\) are the Navier–Stokes fluxes of dimension \(m \times d\), given by the inviscid and viscous terms as

where p denotes the thermodynamic pressure, \(\tau _{ij}\) the viscous stress tensor, \(f_j\) the heat flux, and \(\delta _{ij}\) is the Kronecker delta. For a calorically perfect gas in thermodynamic equilibrium, \(p = (\gamma - 1) \, \big ( \rho E - \rho \, \left| \varvec{v}\right| ^2 / 2 \big )\), where \(\gamma = c_p / c_v > 1\) is the ratio of specific heats and in particular \(\gamma \approx 1.4\) for air. Here, \(c_p\) and \(c_v\) are the specific heats at constant pressure and volume, respectively. For a Newtonian fluid obeying Fourier’s law of heat conduction, the viscous stress tensor and heat flux are given by

where T denotes temperature, \(\mu \) the dynamic (shear) viscosity, \(\beta \) the bulk viscosity, \(\kappa = c_p \, \mu / Pr\) the thermal conductivity, and Pr the Prandtl number. In particular, \(Pr \approx 0.71\) for air, and additionally \(\beta = 0\) under Stokes’ hypothesis.
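The two closure relations stated above, the perfect-gas pressure and the thermal conductivity, translate directly into code. The sketch below assumes the conserved vector is stored as \((\rho , \rho v_1, \ldots , \rho v_d, \rho E)\); the function names and the sample state are hypothetical.

```python
import numpy as np


def pressure(u, gamma=1.4):
    """Pressure of a calorically perfect gas from conserved variables.

    u = (rho, rho*v_1, ..., rho*v_d, rho*E)
    p = (gamma - 1) * (rho*E - rho*|v|^2 / 2)
    """
    u = np.asarray(u, dtype=float)
    rho, mom, rhoE = u[0], u[1:-1], u[-1]
    kinetic = 0.5 * (mom @ mom) / rho     # rho*|v|^2/2 written with momentum
    return (gamma - 1.0) * (rhoE - kinetic)


def thermal_conductivity(mu, cp, Pr=0.71):
    """kappa = c_p * mu / Pr, with Pr = 0.71 as the default value for air."""
    return cp * mu / Pr


# Sample 2D state: rho = 1, v = (1, 0), rho*E = 3.
p = pressure([1.0, 1.0, 0.0, 3.0])
```

For the sample state, the kinetic energy density is 0.5, so the pressure is \(0.4 \times 2.5 = 1\) up to round-off.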

3.2.2 Formulation

The hybridized DG discretization of the unsteady compressible Navier–Stokes equations (17) reads as follows: Find \(\big ( \varvec{q}_h(t),\varvec{u}_h(t), \widehat{\varvec{u}}_h(t) \big ) \in \varvec{\mathcal {Q}}_h^k \times \varvec{\mathcal {V}}_h^k \times \varvec{\mathcal {M}}_h^k\) such that

for all \((\varvec{r},\varvec{w},\varvec{\mu }) \in \varvec{\mathcal {Q}}_h^k \times \varvec{\mathcal {V}}_h^k \times \varvec{\mathcal {M}}_h^k\) and all \(t \in (0,T)\), and

for all \(\varvec{w} \in \varvec{\mathcal {V}}^k_h\). The integer m in the definition of the spaces \(\varvec{\mathcal {Q}}_h^k\), \(\varvec{\mathcal {V}}_h^k\) and \(\varvec{\mathcal {M}}_h^k\) in Equations (1)−(2) is \(m=d+2\) for the compressible Navier–Stokes equations. Finally, the numerical flux \(\widehat{\varvec{f}}_h\) is defined as

\(\widehat{\varvec{b}}_h\) is a boundary flux and its precise definition depends on the type of boundary condition as discussed in Sect. 3.2.3. As in the incompressible case, the stabilization matrix \(\varvec{S} \in \mathbb {R}^{m \times m}\) is usually given by the contribution of inviscid and viscous stabilization terms \(\varvec{S} = \varvec{S}^{\mathcal {I}} + \varvec{S}^{\mathcal {V}}\). Several choices for the stabilization of the inviscid fluxes have been proposed in [49, 50, 95], including local

and global

approaches. Here, \(\varvec{A}_n = [\partial \varvec{F}^{\mathcal {I}} /\partial \varvec{u}] \cdot \varvec{n}\) is the Jacobian matrix of the inviscid flux normal to the element face, \(\lambda _{max}\) denotes the maximum-magnitude eigenvalue of \(\varvec{A}_n\), \(| \, \cdot \, |\) is the generalized absolute value operator, and \(\varvec{I}_m\) is the \(m \times m\) identity matrix. In order to improve stability, smooth surrogates for the operators \(( \ \cdot \ + | \cdot |) / 2\) and \(| \cdot |\) above are presented in [49]. The following stabilization matrices for the viscous fluxes have been proposed in [39, 56, 85, 96]:

where \(\ell \) is either a viscous length scale \(\ell _v\) [39, 56], a global length scale \(\ell _g\) [85, 96] or a characteristic element size h. For low Reynolds number flows (in the case \(\ell \in \{ \ell _v , \ell _g \}\)) or for low cell Péclet numbers (in the case \(\ell = h\)), the viscous stabilization plays an important role in the accuracy and stability of the method. Otherwise, it plays a secondary role and is usually dropped.

We note that, for well-resolved simulations, the choice of the stabilization matrix becomes less critical as the polynomial order k increases since the inter-element jumps and numerical dissipation are of order \(O(h^{k+1})\) and \(O(h^{2(k+1)})\) [52, 114], respectively, and thus vanish rapidly with increasing k. This may not be the case in under-resolved simulations. A comparison of stabilization matrices for under-resolved turbulent flow simulations is presented in [49]. The relationship between \(\varvec{S}^{\mathcal {I}}\) and the resulting Riemann solver is also discussed in [48, 49].
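The generalized absolute value \(| \, \cdot \, |\) and the splitting \(( \ \cdot \ \pm | \cdot |) / 2\) that appear in the inviscid stabilization can be illustrated with a short sketch. It assumes that the normal Jacobian is diagonalizable with real eigenvalues, as is the case for a hyperbolic system, and evaluates \(|\varvec{A}_n| = \varvec{R} \, |\varvec{\Lambda }| \, \varvec{R}^{-1}\) from the eigendecomposition. The function names and the \(2 \times 2\) test matrix are hypothetical and are not taken from the cited references; the smooth surrogates of [49] are not reproduced here.

```python
import numpy as np

def generalized_abs(A):
    """Return |A| = R |Lambda| R^{-1} for a diagonalizable matrix with real eigenvalues."""
    eigvals, R = np.linalg.eig(A)
    if np.max(np.abs(np.imag(eigvals))) > 1e-12:
        raise ValueError("eigenvalues must be real (hyperbolic system assumed)")
    eigvals = np.real(eigvals)
    R = np.real(R)
    return R @ np.diag(np.abs(eigvals)) @ np.linalg.inv(R)

def split_jacobian(A):
    """Return (A^+, A^-) = ((A + |A|)/2, (A - |A|)/2)."""
    abs_A = generalized_abs(A)
    return 0.5 * (A + abs_A), 0.5 * (A - abs_A)

# Hypothetical 2x2 "normal Jacobian" with eigenvalues +2 and -2 (illustration only).
A_n = np.array([[0.0, 1.0],
                [4.0, 0.0]])

abs_A = generalized_abs(A_n)
A_plus, A_minus = split_jacobian(A_n)

# Maximum-magnitude eigenvalue, as used in a global (Lax-Friedrichs-type) choice.
lambda_max = np.max(np.abs(np.linalg.eigvals(A_n)))
```

By construction, \(\varvec{A}_n^+ + \varvec{A}_n^- = \varvec{A}_n\) and \(\varvec{A}_n^+ - \varvec{A}_n^- = |\varvec{A}_n|\), so the two parts carry the outgoing and incoming characteristic information, respectively.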

3.2.3 Boundary Conditions

The definition of the boundary flux \(\widehat{\varvec{b}}_h\) depends on the type of boundary condition. For example, at the inflow and outflow sections of the domain, we define the boundary flux \(\widehat{\varvec{b}}_h\) as

where \(\varvec{A}^\pm _n = (\varvec{A}_n \pm | \varvec{A}_n |)/2\) and \(\varvec{u}_{\partial \Omega }\) is a boundary state. At a solid surface with a no-slip condition, we extrapolate density and impose zero velocity as follows

The definition of the last component of \(\widehat{\varvec{b}}_h\) depends on the type of thermal boundary condition. For isothermal walls, for example, we prescribe the temperature \(T_w\) as

where the approximate trace of the temperature \(\widehat{T}_h(\widehat{\varvec{u}}_h)\) is computed from \(\widehat{\varvec{u}}_h\). For adiabatic walls, we impose zero heat flux as

Other boundary conditions can be treated in a similar manner.

3.2.4 Shock Capturing Method

For flows involving shocks, we augment the hybridized DG discretization with the physics-based shock capturing method presented in [53]. In short, this shock capturing method increases selected fluid viscosities to stabilize and resolve sharp features, such as shock waves and strong thermal and shear gradients, over the smallest distance allowed by the grid resolution. In particular, the bulk viscosity, thermal conductivity and shear viscosity are given by the contribution of the physical \((\beta _f, \kappa _f, \mu _f)\) and artificial \((\beta ^*, \kappa ^*, \mu ^*)\) values, that is,

Shock waves, thermal gradients, and shear layers are stabilized by increasing the bulk viscosity, thermal conductivity, and shear viscosity, respectively. Contact discontinuities are stabilized through one or several of these mechanisms, depending on their particular structure. The thermal conductivity is also augmented in hypersonic shock waves through the term \(\kappa _1^*\). The artificial viscosities are devised such that the cell Péclet number is of order 1, and in particular are given by

where \(c^*\) is the speed of sound at the critical temperature \(T^*\), \(\Phi _{ \{ \beta , \kappa , \mu \} } \big [ \cdot \big ]\) are smoothing operators (not discussed here), \(\widehat{s}_{ \{ \beta , \kappa , \mu \} }\) are the bulk viscosity, thermal conductivity and shear viscosity sensors (not discussed here), \(Pr_{\beta }^*\) is an artificial Prandtl number relating \(\beta ^*\) and \(\kappa _1^*\), \(k_{ \{ \beta , \kappa , \mu \} }\) are positive constants of order 1, and

are the element size in the direction of the density gradient, the temperature gradient and the smallest element size among all possible directions, respectively. In Eq. (29), \(\varvec{M}_h\) denotes the metric tensor of the mesh, \(h_{ref}\) the reference element size used in the construction of \(\varvec{M}_h\), and \(\epsilon _{h} \sim \epsilon _{m}^2\) is a constant of order machine epsilon squared. The interested reader is referred to [53] for additional details on the shock capturing method.

3.3 Numerical Examples

We present numerical results for several wave phenomena encountered in fluid mechanics, including acoustic waves, shock waves, and the unstable waves responsible for transition to turbulence in a laminar boundary layer. The stabilization matrix is set to \(\varvec{S} = \lambda _{max} (\widehat{\varvec{u}}_h) \ \varvec{I}_m\). All results are presented in non-dimensional form. \(Pr_f = c_p \, \mu _f / \kappa _f = 0.71\), \(\beta _f = 0\) and \(\gamma = 1.4\) are assumed in all the test problems.
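As an illustration of the stabilization \(\varvec{S} = \lambda _{max} (\widehat{\varvec{u}}_h) \ \varvec{I}_m\), the following minimal sketch evaluates it for a single trace state. It assumes an ideal gas with \(\gamma = 1.4\), conservative variables ordered as \((\rho , \rho \varvec{v}, \rho E)\) so that \(m = d+2\), and the standard result that the maximum-magnitude eigenvalue of the inviscid normal Jacobian is \(|\varvec{v} \cdot \varvec{n}| + c\), with c the speed of sound. The function name and the sample state are hypothetical.

```python
import numpy as np

GAMMA = 1.4  # ratio of specific heats assumed in the test problems

def lax_friedrichs_stabilization(u_hat, n, gamma=GAMMA):
    """Return S = lambda_max(u_hat) * I_m for conservative variables (rho, rho*v, rho*E)."""
    u_hat = np.asarray(u_hat, dtype=float)
    n = np.asarray(n, dtype=float)
    d = n.size
    rho = u_hat[0]
    v = u_hat[1:1 + d] / rho
    rho_E = u_hat[1 + d]
    # Ideal-gas pressure and speed of sound.
    p = (gamma - 1.0) * (rho_E - 0.5 * rho * np.dot(v, v))
    c = np.sqrt(gamma * p / rho)
    lambda_max = abs(np.dot(v, n)) + c
    return lambda_max * np.eye(d + 2)

# Hypothetical 2D state: unit density, unit speed of sound, normal Mach number 1.5.
rho, p = 1.0, 1.0 / GAMMA
v = np.array([1.5, 0.0])
rho_E = p / (GAMMA - 1.0) + 0.5 * rho * np.dot(v, v)
S = lax_friedrichs_stabilization([rho, rho * v[0], rho * v[1], rho_E], n=[1.0, 0.0])
```

For this sample state, \(\lambda _{max} = 1.5 + 1 = 2.5\), so \(\varvec{S}\) is 2.5 times the \(4 \times 4\) identity.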

3.3.1 Inviscid Interaction Between a Strong Vortex and a Shock Wave

We consider the two-dimensional inviscid interaction between a strong vortex and a shock wave. The problem domain is \(\Omega = (0,2 L) \times (0,L)\) and a stationary normal shock wave is located at \(x = L / 2\). A counter-clockwise rotating vortex is initially located upstream of the shock and advected downstream by the inflow velocity with Mach number \(M_{\infty } = 1.5\). Sixth-order IEDG and third-order DIRK(3,3) schemes are used for the spatial and temporal discretization, respectively. The details of the problem and numerical discretization are presented in [51]. Figure 1 shows the density and pressure fields at the times \(t_1 = 0.35 \, \gamma ^{1/2} L \, |\varvec{v}_{\infty }|^{-1}\) and \(t_2 = 1.05 \, \gamma ^{1/2} L \, |\varvec{v}_{\infty }|^{-1}\). When the shock wave and the vortex meet, the former is distorted and the latter splits into two separate vortical structures. Strong acoustic waves are then generated from the moving vortex and propagate on the downstream side of the shock. The Mach number fields, together with zooms around the shock wave and the details of the computational mesh, are shown in Fig. 2. The shock is non-oscillatory and resolved within one element. The shock capturing method does not affect the propagation of the acoustic waves in the sense that it does not introduce artificial dissipation or dispersion [51].

Fig. 1 Non-dimensional density \(\rho / \rho _{\infty }\) (left) and pressure \(p / (\rho _{\infty } |\varvec{v}_{\infty }|^2)\) (right) fields of the strong-vortex/shock-wave interaction problem at the times \(t_1\) (top) and \(t_2\) (bottom). After the shock wave and the vortex meet, strong acoustic waves are generated and propagate on the downstream side of the shock

3.3.2 Transitional Flow Over the NACA 65-(18)10 Compressor Cascade

We examine the ability of hybridized DG methods to resolve the wave propagation phenomena responsible for natural transition to turbulence in a boundary layer. To this end, we present implicit large-eddy simulation (ILES) results of the three-dimensional NACA 65-(18)10 compressor cascade in design conditions at inlet Reynolds number \(Re_1 = 250,000\) and Mach number \(M_1 = 0.081\). Third-order IEDG and DIRK(3,3) schemes are used for the discretization. The details of the flow conditions and the numerical setup, as well as the methodology and nomenclature for the boundary layer analysis below, are presented in [50].

Due to the lack of bypass and forced transition mechanisms and the quasi-2D nature of this flow, natural transition occurs through two-dimensional unstable modes. The two-dimensional nature of transition is illustrated in Fig. 3 through the much larger amplitude of the streamwise instabilities compared to the cross-flow instabilities. In particular, Tollmien-Schlichting (TS) waves form before the boundary layer separates, and Kelvin-Helmholtz (KH) instabilities are ultimately responsible for transition after separation. The former are shown in Fig. 4 (left) at different boundary layer (BL) locations prior to separation. More specifically, the left plot in Fig. 4 shows the superposition of (1) TS waves and (2) the pressure waves generated in the turbulent boundary layer of the blade at hand and the neighboring blades. The latter effect is responsible for the nonzero fluctuating velocity outside the boundary layer. The growth of TS waves along the BL is exponential, as shown on the right of Fig. 4 and predicted by linear stability theory. It is worth noting the small magnitude of the instabilities compared to the freestream velocity. This shows why very low numerical dissipation is required for transition prediction. Similarly, very low numerical dispersion is needed to properly resolve all the frequencies present in the transition process. After separation, TS waves turn into KH instabilities, as illustrated in Fig. 5, which lead to very rapid vortex growth and are ultimately responsible for natural transition in the separated shear layer.
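Exponential growth of an instability amplitude along the boundary layer can be checked by fitting a straight line to the logarithm of the amplitude. The sketch below is only an illustration of this post-processing step: the streamwise coordinates, the amplitudes, and the growth rate are hypothetical synthetic values, not data from the cascade simulation, and the function name is an assumption.

```python
import numpy as np

def fit_exponential_growth(s, amplitude):
    """Fit amplitude ~ A0 * exp(sigma * s) by least squares on log(amplitude).

    Returns the growth rate sigma and the prefactor A0.
    """
    slope, intercept = np.polyfit(s, np.log(amplitude), 1)
    return slope, np.exp(intercept)

# Hypothetical samples: amplitudes five orders of magnitude below a unit
# freestream velocity, growing exponentially along the streamwise coordinate s.
s = np.linspace(0.2, 0.5, 7)
amplitude = 1.0e-5 * np.exp(12.0 * s)

sigma, A0 = fit_exponential_growth(s, amplitude)
```

A nearly constant slope of the log-amplitude over the fitted range is the signature of the linear (exponential) growth regime; departures from it indicate nonlinear saturation or contamination by other disturbances.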

3.3.3 Transitional Flow Over the Eppler 387 Wing

We investigate the grid requirements to predict natural transition to turbulence by ILES. In particular, we present grid convergence studies for the transition location of the flow over the three-dimensional Eppler 387 wing at Reynolds numbers of 100,000, 300,000 and 460,000. The Mach number is \(M_{\infty } = 0.1\) and the angle of attack is \(\alpha = 4.0^{\circ }\). Fifth-order HDG and third-order DIRK(3,3) schemes are used for the discretization. Three meshes and non-dimensional time-steps are considered, which correspond to uniform refinement in space and time. The details of these meshes are summarized in Table 2. The interested reader is referred to [50, 54] for additional details on the computational setup.

The negative spanwise- and time-averaged pressure coefficients at Reynolds numbers 100,000, 300,000, and 460,000 are shown in Figs. 6, 7 and 8, respectively. The simulation results converge to the experimental data [75] as the mesh is refined. In particular, the error in the transition location is below 0.01c, 0.005c, and 0.01c at Reynolds numbers 100,000, 300,000, and 460,000, respectively, even with mesh No. 1. The effective resolution of this mesh is equivalent to a cell-centered finite volume discretization with 691,200 elements. These grid requirements are much smaller than those typically needed with low-order schemes.

The numerical results for the NACA 65-(18)10 cascade and the Eppler 387 wing demonstrate the advantage of high-order DG methods for simulating transitional flows, as they require far fewer elements and degrees of freedom than low-order methods to accurately predict transition. This is justified by the following observation [50]: Simulating transition is challenging mostly due to the small magnitude of the instabilities involved, rather than due to their length and time scales. A low-order scheme may damp out the small instabilities because of high numerical dissipation even when the mesh size and time-step size are sufficiently small to represent the length and time scales of the instabilities. We note, however, that high-order methods become increasingly expensive (per degree of freedom) as the order of accuracy increases. As discussed in [49, 50], the hybridized DG methods seem to yield the best trade-off between accuracy and computational cost for transitional flows when the accuracy order is between 3 and 5.

3.3.4 Transonic Flow Over the T106C Low-Pressure Turbine

We present ILES results for the three-dimensional transonic flow around the T106C low-pressure turbine (LPT) in off-design conditions [51]. The isentropic Reynolds and Mach numbers on the outflow are \(Re_{2,s} = 100,817\) and \(M_{2,s} = 0.987\), respectively, and the angle between the inflow velocity and the longitudinal direction is \(\alpha _{1} = 50.54^{\circ }\). Third-order HDG and DIRK(3,3) schemes are used for the discretization. The details of the simulation setup are presented in [51]. Figure 9 shows 2D slices of the time-averaged (left) and instantaneous (right) pressure, temperature and Mach number fields. Several unsteady shock waves that oscillate around a baseline position are present in this flow, as illustrated by the smoother shock profiles in the average fields compared to the instantaneous fields. These unsteady shocks are resolved within one element.

3.4 Bibliography Notes

The HDG method for the incompressible Euler and Navier–Stokes equations was introduced in [82, 88], and further developed in [59, 69, 81, 84, 99, 112]. An analysis of the HDG method for the steady-state incompressible Navier–Stokes equations is presented in [9]. A superconvergent HDG method for the steady-state incompressible Navier–Stokes equations is developed in [98]. A comparison of HDG and finite volume methods for incompressible flows is presented in [1]. No other schemes within the hybridized DG family, such as the EDG and the IEDG methods, have been applied to incompressible flows.

The HDG method for the compressible Euler and Navier–Stokes equations was first introduced in [95], and further investigated in [50, 76, 84, 104, 105, 115]. Additional developments of the HDG method for compressible flows include a multiscale method [93], a time-spectral method [11, 12], and a viscous-inviscid monolithic solver [78]. The Embedded Discontinuous Galerkin (EDG) and Interior Embedded Discontinuous Galerkin (IEDG) methods for the compressible Euler and Navier–Stokes equations were presented in [96] and [54, 91], respectively, and further investigated in [49, 50].

Other miscellaneous topics on hybridized DG methods for fluid flows include error estimation and adaptivity [3, 39, 56, 59, 66, 78, 79, 116, 117], entropy-stable formulations [52, 114], and shock capturing for steady [77, 83] and unsteady [51, 53] flows. The relationship between the stabilization matrix and the resulting Riemann solver is investigated in [48, 49]. Finally, parallel implementation and efficiency considerations are discussed in [50, 100].

4 Wave Propagation in Solids

4.1 Linear Elastodynamics

Several HDG formulations have been proposed in the literature for linear elastic wave propagation. Each of them has pros and cons, and they are briefly reviewed in Sect. 4.4. Here we focus only on the velocity–deformation-gradient formulation, which is close to the HDG formulation we use for nonlinear elastodynamics.

4.1.1 Governing Equations

We consider small transient adiabatic perturbations of an elastic body, which is at rest in a reference configuration \(\Omega \). The perturbations are described using a deformation mapping \(\varvec{\varphi }\) between a reference configuration \(\Omega \) and a current configuration \(\Omega _t\) of the form \(\varvec{y} = \varvec{\varphi }(\varvec{X},t)\). Here, \(\varvec{X}\) is the coordinate in the reference configuration \(\Omega \) and \(\varvec{y}\) denotes the position of material particle \(\varvec{X}\) after deformation at time t. The velocity is denoted by \(\varvec{v} = \partial _t \varvec{\varphi }\), and the density of the reference configuration is denoted by \(\rho \). Let \(\varvec{f}\) be the body force per unit reference volume. The motion of the elastic body under small perturbations is governed by the following linear elastic wave equation

where \(\varvec{\sigma }\) is the Cauchy stress tensor depending on two Lamé parameters \(\lambda \) and \(\mu \) for an isotropic body, and on the local state of deformation \(\varvec{\varphi }\). It is customary to write \(\varvec{\sigma }\) as a function of the infinitesimal strain tensor \(\varvec{\epsilon }\) under the assumption of small deformations. However, one could also directly write \(\varvec{\sigma }\) as a function of the deformation gradient \(\varvec{F}\), i.e.

Here d is the spatial dimension of the problem, \(\varvec{I}\) is the identity tensor and \(\varvec{F}\) is the deformation gradient

Now the elastic wave equation can be rewritten as

the first equation being the time derivative of (32). The boundary conditions are given as

where \(\partial \Omega _N\) is a part of the boundary \(\partial \Omega \) such that \(\partial \Omega _N \cup \partial \Omega _D = \partial \Omega \) and \(\partial \Omega _N \cap \partial \Omega _D = \emptyset \).
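As a brief worked step, the dependence of \(\varvec{\sigma }\) on \(\varvec{F}\) can be sketched from the standard isotropic Hooke's law. The following is a reconstruction under stated assumptions, not necessarily the exact form or sign convention of the numbered equations: we write \(\varvec{u} = \varvec{\varphi } - \varvec{X}\) for the displacement (a symbol introduced here only for this derivation), so that \(\varvec{F} = \nabla \varvec{\varphi } = \varvec{I} + \nabla \varvec{u}\). Then

\[
\varvec{\epsilon } = \tfrac{1}{2} \left( \nabla \varvec{u} + \nabla \varvec{u}^T \right) = \tfrac{1}{2} \left( \varvec{F} + \varvec{F}^T \right) - \varvec{I} , \qquad \mathrm {tr} (\varvec{\epsilon }) = \mathrm {tr} (\varvec{F}) - d ,
\]

\[
\varvec{\sigma } = \lambda \, \mathrm {tr} (\varvec{\epsilon }) \, \varvec{I} + 2 \mu \, \varvec{\epsilon } = \lambda \left( \mathrm {tr} (\varvec{F}) - d \right) \varvec{I} + \mu \left( \varvec{F} + \varvec{F}^T - 2 \varvec{I} \right) .
\]

Differentiating \(\varvec{F} = \nabla \varvec{\varphi }\) in time and using \(\varvec{v} = \partial _t \varvec{\varphi }\) gives \(\partial _t \varvec{F} - \nabla \varvec{v} = 0\), which, together with the momentum balance \(\rho \, \partial _t \varvec{v} - \nabla \cdot \varvec{\sigma } = \varvec{f}\), is one consistent way to write the first-order system in the unknowns \((\varvec{F}, \varvec{v})\).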

4.1.2 Formulation

The HDG method seeks an approximation \((\varvec{F}_h,\varvec{v}_h,\widehat{\varvec{v}}_h) \in \varvec{\mathcal {Q}}_h^k \times \varvec{\mathcal {V}}_h^k \times \varvec{\mathcal {M}}_h^k\) such that

for all \(\varvec{G} \in \varvec{\mathcal {Q}}_{h}^k\), \(\varvec{w} \in \varvec{\mathcal {V}}_h^k\), and \(\varvec{\mu }\in \varvec{\mathcal {M}}_h^k\), where the numerical flux \(\widehat{\varvec{\sigma }}_h\) is given by

Here we make use of the stabilization matrix \(\varvec{S}\) whose expression is discussed below. The first two Eqs. (35a)–(35b) are obtained by multiplying the elastic wave Eqs. (33a)–(33b) by test functions and integrating the resulting equations by parts. The third equation (35c) enforces the continuity of the \(L^2\) projection of the numerical flux \(\widehat{\varvec{\sigma }}_h\varvec{n}\) and imposes weakly the Dirichlet and Neumann boundary conditions. The last equation (35d) defines the numerical flux.

As a side note, the pointwise stress–strain relation (31) could be applied through an element-based \(L^2\)-projection instead. For piecewise-constant Lamé parameters (used to produce the results shown in Table 3) both approaches are equivalent and provide similar results.

4.1.3 Stabilization Matrix

A simple dimensional analysis of the expression of the numerical flux (35d) shows that the stabilization matrix should have the dimensions of an impedance. Natural candidates are the compressional elastic wave impedance, i.e. \(\rho c_p\), and the shear wave impedance, i.e. \(\rho c_s\). A simple choice for \(\varvec{S}\) would therefore be

where \(c_p = \sqrt{(\lambda +2\mu ) / \rho }\) is the compressional wave velocity, and \(c_s = \sqrt{\mu / \rho }\) is the shear wave velocity. More sophisticated parameter-free choices of \(\varvec{S}\) have been proposed in [110] to deal with impedance jumps at element boundaries and with coupling to acoustic waves. However, for linear elastic wave problems, the accuracy of the approximation is only slightly dependent on \(\varvec{S}\), and therefore a wide range of values is acceptable for \(\varvec{S}\). It appears that, most of the time, choosing either one of the two impedances provides very satisfactory results.
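The wave speeds and impedance-based stabilization above are straightforward to evaluate. The following minimal sketch assumes a stabilization of the form \(\varvec{S} = s \, \rho \, c \, \varvec{I}\), where c is either \(c_p\) or \(c_s\); the multiplier s (the argument `scale`) and the function names are hypothetical conveniences introduced here. The sample values \(\mu = 1\), \(\lambda = 1.5\), \(\rho = 1\) and \(s = 2\) are those used for the plate example of Sect. 4.3.

```python
import math

def wave_speeds(lam, mu, rho):
    """Return the compressional and shear wave speeds (c_p, c_s)."""
    c_p = math.sqrt((lam + 2.0 * mu) / rho)
    c_s = math.sqrt(mu / rho)
    return c_p, c_s

def impedance_stabilization(lam, mu, rho, kind="shear", scale=1.0, dim=3):
    """Return S = scale * rho * c * I as a nested list, with c = c_p or c_s."""
    c_p, c_s = wave_speeds(lam, mu, rho)
    if kind == "compressional":
        c = c_p
    elif kind == "shear":
        c = c_s
    else:
        raise ValueError("kind must be 'compressional' or 'shear'")
    tau = scale * rho * c
    return [[tau if i == j else 0.0 for j in range(dim)] for i in range(dim)]

c_p, c_s = wave_speeds(lam=1.5, mu=1.0, rho=1.0)
S = impedance_stabilization(lam=1.5, mu=1.0, rho=1.0, kind="shear", scale=2.0)
```

For these parameters, \(c_s = 1\) and \(c_p = \sqrt{3.5} \approx 1.87\), so the two impedances differ by less than a factor of two, which is consistent with the weak sensitivity to \(\varvec{S}\) noted for the linear case.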

4.2 Nonlinear Elastodynamics

4.2.1 Governing Equations

We now consider large time-dependent deformations of an elastic body defined by a deformation mapping \(\varvec{\varphi }\) between a reference configuration \(\Omega \) and a current configuration \(\Omega _t\) of the form \(\varvec{y} = \varvec{\varphi }(\varvec{X},t)\). Here, \(\varvec{X}\) is the coordinate in the reference configuration \(\Omega \) and \(\varvec{y}\) denotes the position of material particle \(\varvec{X}\) after deformation at time t. The velocity is denoted by \(\varvec{v} = \partial _t \varvec{\varphi }\), and the density of the reference configuration is denoted by \(\rho \). Let \(\varvec{f}\) be the body force per unit reference volume. The boundary \(\partial \Omega \) is divided into two complementary disjoint parts \(\partial \Omega _D\) and \(\partial \Omega _N\), where the prescribed deformation \(\varvec{v}_D\) and traction \(\varvec{t}_N\) are imposed, respectively. The motion of the elastic body under large deformations is governed by the following equations stated in Lagrangian form

Equation (37a) is just the time derivative of the definition of the deformation gradient \(\varvec{F}\). The conservation of linear momentum is stated in (37b), and equation (37c) relates the first Piola-Kirchhoff tensor \(\varvec{P}\) to the second one \(\mathbf {S}\). The last two equations (37d)–(37e) express the boundary conditions. The gradient \(\nabla \) and divergence \(\nabla \cdot \) operators are taken with respect to the coordinate \(\varvec{X}\) of the reference configuration. To complete the problem description, an initial configuration \(\varvec{v}(\varvec{X},t=0) = \varvec{v}_0(\varvec{X})\) and \(\varvec{F}(\varvec{X},t=0) = \varvec{F}_0(\varvec{X})\) for all \(\varvec{X} \in \Omega \) has to be prescribed.

For hyperelastic materials the first and second Piola-Kirchhoff stress tensors \(\varvec{P}\) and \(\mathbf {S}\) are derived from a scalar strain energy function \(\psi \) through

with \(\varvec{C} = \varvec{F}^T \varvec{F}\) the right Cauchy-Green deformation tensor. Hence, both \(\varvec{P}\) and \(\mathbf {S}\) are functions of the deformation gradient and material parameters. For the applications in this section, a Saint Venant-Kirchhoff (SVK) model has been considered. For this model the second Piola-Kirchhoff tensor is given by

with the Lamé parameters \((\lambda ,\mu )\) and the Lagrangian strain tensor \(\varvec{E} = \frac{1}{2} (\varvec{C} - \varvec{I})\).
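The SVK constitutive law can be evaluated pointwise as in the following minimal sketch. It assumes the standard form of the model, \(\mathbf {S} = \lambda \, \mathrm {tr} (\varvec{E}) \, \varvec{I} + 2 \mu \varvec{E}\), together with the usual relation \(\varvec{P} = \varvec{F} \mathbf {S}\) between the two Piola-Kirchhoff tensors. The function name and the sample deformation gradient (a 10% uniaxial stretch) are hypothetical.

```python
import numpy as np

def svk_stresses(F, lam, mu):
    """Return (P, S2) for a Saint Venant-Kirchhoff material.

    F   : deformation gradient (d x d array)
    S2  : second Piola-Kirchhoff stress, lam * tr(E) * I + 2 * mu * E
    P   : first Piola-Kirchhoff stress, F @ S2
    """
    F = np.asarray(F, dtype=float)
    I = np.eye(F.shape[0])
    C = F.T @ F            # right Cauchy-Green deformation tensor
    E = 0.5 * (C - I)      # Lagrangian (Green) strain tensor
    S2 = lam * np.trace(E) * I + 2.0 * mu * E
    P = F @ S2
    return P, S2

# Hypothetical uniaxial stretch of 10% in the first direction.
F = np.diag([1.1, 1.0, 1.0])
P, S2 = svk_stresses(F, lam=1.5, mu=1.0)

# The undeformed state must be stress-free.
P0, S0 = svk_stresses(np.eye(3), lam=1.5, mu=1.0)
```

Because \(\varvec{E}\) is quadratic in \(\varvec{F}\), the map \(\varvec{F} \mapsto \varvec{P}\) is cubic, which is the source of the nonlinearity handled by Newton's method in the discrete problem.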

Below we introduce an HDG method for solving the nonlinear elasticity Eqs. (37).

4.2.2 Formulation

We seek an approximation \((\varvec{F}_h, \varvec{P}_h, \varvec{v}_h, \widehat{\varvec{v}}_h) \in \varvec{\mathcal {Q}}_{h}^k \times \varvec{\mathcal {Q}}_{h}^k \times \varvec{\mathcal {V}}_h^k \times \varvec{\mathcal {M}}_h^k\) such that

for all \(\varvec{G}, \varvec{Q} \in \varvec{\mathcal {Q}}_{h}^k\), \(\varvec{w} \in \varvec{\mathcal {V}}_h^k\), and \(\varvec{\mu }\in \varvec{\mathcal {M}}_h^k\), where the numerical flux \(\widehat{\varvec{P}_h}\) is given by

Here the stabilization tensor \(\varvec{S}\) does have an important effect on both the stability and accuracy of the method, and its design will be discussed below. Let us briefly comment on the equations defining the HDG method. The first two Eqs. (40a)–(40b) are obtained by multiplying the governing Eqs. (37a)–(37b) by test functions and integrating the resulting equations by parts. The third equation (40c) is the weak version of (37c). The fourth equation (40d) enforces the continuity of the \(L^2\) projection of the numerical flux \(\widehat{\varvec{P}_h}\varvec{n}\) and imposes weakly the Dirichlet and Neumann boundary conditions. The last equation (40e) defines the numerical flux.

4.2.3 Stability

We now give some insight into the energy evolution of our HDG method. Let us consider Eq. (40a) with test function \(\varvec{G} = \varvec{P}_h\), Eq. (40b) integrated by parts with \(\varvec{w} = \varvec{v}_h\) and Eq. (40d) with \(\varvec{\mu } = \widehat{\varvec{v}}_h\). After summing these equations and some simplifications, we obtain the following energy identity

with \(\partial _t E_h\) the time derivative of the total discrete energy

where the last term is equal to \(\partial _t \psi _h\), i.e. the time derivative of the discrete elastic potential energy. It follows from (41) that, without external actions (\(\varvec{f} = 0\) and \(\varvec{t}_N= 0\)), and if \(\varvec{S}\) is positive definite, the total energy decreases due to velocity jumps at element boundaries. The proposed HDG scheme is therefore stable with the jump term playing a stabilization role.

4.2.4 Stabilization Matrix

The dimensional analysis done for \(\varvec{S}\) in the linear case still applies in the nonlinear one. However, when large deformations occur it becomes necessary to increase \(\varvec{S}\) in order to ensure the convergence of Newton’s method. Therefore, one simple choice for the stabilization matrix is to scale the linear elastic \(\varvec{S}\) from (36) with an amplification factor, i.e.

where the factor \(\alpha \) is problem-dependent. In spite of its simplicity, the above stabilization tensor works well for many nonlinear test cases, with, most of the time, \(\alpha \in [1,10]\). Contrary to the linear case, both the stability and the accuracy of our HDG scheme heavily depend on \(\varvec{S}\) and therefore the coefficient \(\alpha \) plays a crucial role. Practically, \(\alpha \) is determined after only a few trials.

We emphasize that it may not be a good idea to build \(\varvec{S}\) based on the material elasticity tensor, as proposed for elastostatics in [107, 108]. Indeed, in the linear elasticity case, this tensor is symmetric positive-definite everywhere in the domain. However, in the nonlinear elasticity case, it is generally no longer the case in regions where large deformation occurs. In this last configuration the energy identity (41) no longer holds and Newton’s method typically fails to converge.

4.3 Numerical Results

We present here a simple numerical example in order to assess the convergence of our HDG formulations for both linear and nonlinear elastodynamics. In particular, we consider a square plate of dimensions \(1 \times 1\) and of thickness 0.01 that is clamped on its four sides, i.e. with homogeneous Dirichlet boundary conditions \(\varvec{v}_D=0\). The plate vibrates such that the exact deformation mapping (illustrated in Fig. 10) is

where \(\varvec{X} = \left( X,Y,Z \right) ^T\) are the positions in the undeformed initial configuration at \(t=0\). Time-dependent body forces and tractions on the upper and lower surfaces are computed from the exact solution and imposed throughout the simulation. The Lamé parameters are \(\mu =1\) and \(\lambda =1.5\), the density is \(\rho = 1\), and the stabilization matrix is set to \(\varvec{S} = 2 \rho c_s \varvec{I}\). The DIRK(3,3) scheme is used for the temporal discretization, and the time-step size is chosen sufficiently small so that the spatial discretization errors dominate. Both linear elastic and nonlinear hyperelastic (SVK) materials have been considered.

Numerical results are compared at \(t=1\) with the exact ones for HDG-\(\mathbb {P}_{k}\) with polynomial degrees \(k\in \{1,2,3 \}\). The 3D mesh of the plate is uniformly refined in the \(\varvec{e}_x\) and \(\varvec{e}_y\) directions. All simulations make use of only one element in the thickness direction. The \(L^2\)-errors with estimated orders of convergence (e.o.c.) are reported in Table 3 for the linear case, and in Table 4 for the nonlinear one.
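The estimated order of convergence between two successive meshes is commonly computed as \(\log (e_{i-1} / e_i) / \log (h_{i-1} / h_i)\), where \(e_i\) is the \(L^2\)-error on the mesh of size \(h_i\). The sketch below assumes this standard definition; the function name and the error values are hypothetical (synthetic errors behaving as \(h^{3}\), the optimal rate for \(k=2\)) and are not the entries of Tables 3 and 4.

```python
import math

def estimated_orders(h, err):
    """Return the e.o.c. between consecutive meshes.

    h   : list of mesh sizes, from coarse to fine
    err : list of errors measured on those meshes
    """
    if len(h) != len(err):
        raise ValueError("h and err must have the same length")
    return [
        math.log(err[i - 1] / err[i]) / math.log(h[i - 1] / h[i])
        for i in range(1, len(h))
    ]

# Hypothetical data: errors decaying as 0.3 * h^3 under uniform refinement.
h = [1.0 / 4.0, 1.0 / 8.0, 1.0 / 16.0]
err = [0.3 * hi ** 3 for hi in h]

orders = estimated_orders(h, err)
```

With measured data, the computed orders typically approach the asymptotic rate only on the finer meshes, so the last entries are the most informative.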

It is worth noting that for the linear case, both the velocity and the gradient \(\varvec{F}_h\) converge with the optimal order \(k+1\). However, the analysis does not guarantee the optimal order of convergence for the gradient (see the discussion in Sect. 4.4). For the nonlinear case, the velocity still converges optimally while the convergence order for the gradient \(\varvec{F}_h\) is not clear, being almost \(k+1/2\) for linear approximations, and somewhere between \(k+1/2\) and \(k+1\) for \(k\in \{ 2,3 \}\). We emphasize that this last result depends on the choice of the stabilization matrix, and better convergence rates have been obtained when \(\varvec{S}\) is allowed to vary between simulations. However, an automatic optimal design of \(\varvec{S}\) for nonlinear elastic problems is still an open issue, and it is likely that \(\varvec{S}\) should be adaptive, as explained in [44, 45].

4.4 Bibliography Notes

As explained in the introduction, two attractive features of the HDG methods are the optimal convergence of the approximate gradient and the superconvergence property. Namely, when a polynomial degree \(k\ge 1\) is used to build the approximate primal solution and the approximate gradient, both of them may converge with the optimal order \(k+1\), and the post-processed primal solution may then converge with the higher order \(k+2\). Although the superconvergence property has been observed for numerous PDEs (see [84] among many others), it is not always guaranteed. This is especially true for linear elasticity since the symmetric nature of the infinitesimal strain and Cauchy stress tensors adds an extra difficulty. This difficulty has motivated the development of several HDG approaches, which are briefly reviewed here.

The first HDG method for linear elastostatics was introduced in [107, 108], and makes use of a displacement-strain-stress formulation. Optimal convergence of the gradient and superconvergence were then numerically observed. However, the analysis [57] of the same method demonstrated that although the displacement converges with order \(k+1\), the symmetric part of the gradient converges with only \(k+1/2\), and the antisymmetric part of the gradient with k. Moreover, numerical experiments illustrated this suboptimal convergence. Thus no superconvergence property is ensured with this method, although it may sometimes be observed. The strain-velocity formulation in [110] extends the previous method to the elastic wave equations, with an emphasis on the proper design of \(\varvec{S}\) for heterogeneous media, following a methodology similar to [5]. Numerical results for the post-processed solution in [110] confirmed the elastostatics results. The HDG formulation (35) presented in this paper, as well as the stress–velocity formulation presented in the frequency domain in [4], are both variations of the initial HDG method [108] (see [57]). Recently, the theory of M-decompositions has been used to modify that original HDG method such that it becomes superconvergent on 2D meshes by enriching the local gradient spaces (see [21]).

Based on the study of superconvergent HDG methods for diffusion [31], the method proposed in [36] makes use of approximate weakly symmetric stresses. The superconvergence is then ensured, but at the cost of the extra computation of the approximate rotation tensor, and by enriching the gradient space with matrix bubble functions, which depend on the shape of the elements. However, it is difficult to extend this method to nonlinear elasticity since it involves the explicit inversion of the constitutive relation.

An alternative superconvergent HDG method was proposed for elastostatics in [13, 97]. In [64], a 3D time-harmonic elastodynamics version of this method was presented, with an analysis and some numerical experiments. These methods achieve an optimal \(k+1\) convergence for the gradient, and ensure the superconvergence property at the cost of an extra polynomial degree \(k+1\) for the approximate displacement. However, this additional computational cost is small since the standard degree k is still used for the approximate trace, so that the size of the global system is not increased.

Finally, a last family of HDG methods for linear elasticity has been presented in [84, 89]. Its elastodynamic version is based on a displacement gradient-velocity-pressure formulation, making use of the relation

in order to mimic the HDG formulation for the Stokes flow. Therefore it inherits all the superconvergence properties of HDG for the Stokes equations (see [26, 30, 35]). This method does not require any enrichment of the gradient space, and it allows for the treatment of nearly-incompressible elastic materials. However, this formulation has some drawbacks. The identity (45) holds only for homogeneous \(\mu \), and when normal stresses are applied as a boundary condition, the superconvergence may be lost (see [88]).

Recently, a new Hybrid High Order (HHO) method was designed for linear elasticity in [41]. Contrary to HDG, the HHO methods are based on a primal formulation, i.e. the gradient is not considered as a separate variable. But like HDG, HHO methods make use of a static condensation procedure to solve a global system on the approximate traces, which are typically polynomials of degree k, making the computational cost of both methods similar. Moreover, a locally reconstructed displacement field superconverges with a guaranteed \(k+2\) order of convergence. Interestingly, in [37], the HHO method was recast into the HDG framework to study the hidden links between the two approaches.

The HDG literature for nonlinear elasticity is less abundant. A first HDG method was proposed in [107] and later recast as a minimization of a nonlinear functional in [67]. Optimal convergence of the deformation and its gradient was numerically observed. The extension of this method to nonlinear elastodynamics is provided in this paper in Sect. 4.2.

In [84] a nonlinear elastodynamic HDG scheme was proposed using a deformation gradient-velocity-pressure formulation. As for its linear counterpart, this formulation is attractive since it allows for the treatment of nearly-incompressible materials. The method presented in this paper is close, but it does not consider the pressure as a separate variable. Interestingly, both formulations seem to provide suboptimal convergence of the approximate gradient for \(k=1,2\) while it is optimal for \(k=3\).

Finally, an original Green strain-displacement-velocity formulation was proposed in [106] for the purpose of solving fluid-structure interaction problems. Observed convergence rates were \(k+1\) for the approximate displacements and velocities, but only k for the approximate strains.

5 Electromagnetic Wave Propagation

In this section, the HDG methods are extended to the generalized Maxwell’s equations. The solution of Maxwell’s equations presents one major difference with respect to the systems presented previously. Because of the presence of the vector operator \({{\mathrm{\mathbf{curl}}}}\), the electromagnetic field is determined from its tangential component. As a consequence, the HDG methods are redefined in terms of tangential components. In addition, in the low-frequency regime, Gauss’s law needs to be enforced numerically. If charge conservation is not satisfied at the discrete level, numerical errors and instabilities are introduced. Many techniques have been developed to impose the charge conservation condition on the system [43, 70]. Among them, the generalized Lagrange multiplier (GLM) method [40, 80] enforces the divergence condition by solving a modified system in which the constraint is imposed through a Lagrange multiplier. The nature of the correction allows control over the propagation and dissipation of divergence errors. This approach preserves the conservation form of the generalized Maxwell’s equations at the minimal cost of introducing one additional scalar variable into the system.

5.1 Governing Equations

5.1.1 Generalized Maxwell’s Equations

The generalized Maxwell’s equations are given by

where \(\varvec{e}\) is the electric field, \(\varvec{h}\) the magnetic field, and \(\varvec{j}\) the current density. In addition, \(\varepsilon \), \(\mu \) and \(\rho \) denote the permittivity, permeability and the electric charge density, respectively. We assume boundary conditions of the form

where \(\varvec{n}\) denotes the unit outward normal to \(\partial \Omega \), and \((\varvec{e}^{\text {inc}},\varvec{h}^{\text {inc}})\) is the incident field. Finally, the system is supplemented with the initial conditions

where \(\varvec{e}_0\) and \(\varvec{h}_0\) are the initial electric and magnetic fields.
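For orientation, time-domain Maxwell’s equations in the symbols defined above are commonly written as sketched below. The sign conventions and the ordering (Ampère’s law, Faraday’s law, electric Gauss law, magnetic Gauss law) are assumptions, chosen so that the third relation is the divergence constraint on \(\varvec{e}\); the boundary and initial conditions are not part of this sketch.

```latex
\begin{aligned}
\varepsilon \, \frac{\partial \varvec{e}}{\partial t} - \mathbf{curl} \, \varvec{h} &= - \varvec{j}, \\
\mu \, \frac{\partial \varvec{h}}{\partial t} + \mathbf{curl} \, \varvec{e} &= 0, \\
\nabla \cdot (\varepsilon \, \varvec{e}) &= \rho, \\
\nabla \cdot (\mu \, \varvec{h}) &= 0 .
\end{aligned}
```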

5.1.2 Generalized Lagrange Multipliers

In order to avoid instabilities and unphysical solutions related to the electric field \(\varvec{e}\), we need to impose (46c) on the electromagnetic model. The generalized Lagrange multiplier (GLM) method has been successfully applied to Maxwell’s equations [40, 80]. The principle of the method is to introduce a new (non-physical) scalar field \(\phi \) into the system (46a)–(46d) through the differential operator \(D(\phi )\) as follows

In addition to (47), the following homogeneous Dirichlet condition is imposed

In order to preserve hyperbolicity of the new system, the operator \(D(\phi )\) is defined as follows

where \(\alpha _1\in \mathbb {R}^+\) and \(\alpha _2\in \mathbb {R}^+\) are dimensionless coefficients that control the amount of artificial coupling between (49a)–(49b) and (49c). The resulting system is referred to as the generalized Lagrange multiplier formulation of Maxwell’s equations (GLM-Maxwell).

5.2 Formulation

To define the HDG method for the GLM-Maxwell equations we introduce the following space:

Note that this space consists of vector-valued functions whose normal component, \(\varvec{\mu }^n := \varvec{n} \, (\varvec{\mu }\cdot \varvec{n})\), vanishes on every face F of \(\mathcal {E}_h\). In other words, we have \(\varvec{\mu }= \varvec{\mu }^t := \varvec{n} \times \varvec{\mu }\times \varvec{n}\) for all \(\varvec{\mu }\in \varvec{{\mathcal {S}}}_{h}^k\).
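The normal/tangential splitting used above can be checked numerically with a few lines of NumPy. This is a minimal sketch: the function names `normal_part` and `tangential_part` and the sample vectors are illustrative assumptions, not part of the method's definition.

```python
import numpy as np

def normal_part(mu, n):
    """Normal component n (mu . n), with n normalized to unit length."""
    n = n / np.linalg.norm(n)
    return n * np.dot(mu, n)

def tangential_part(mu, n):
    """Tangential component n x mu x n, with n normalized to unit length."""
    n = n / np.linalg.norm(n)
    return np.cross(n, np.cross(mu, n))

# Illustrative data: a face whose normal points along z.
n = np.array([0.0, 0.0, 2.0])
mu = np.array([1.0, -2.0, 3.0])

mu_n = normal_part(mu, n)      # [0, 0, 3]
mu_t = tangential_part(mu, n)  # [1, -2, 0]
```

The two parts add up to the original vector, and the tangential part has no component along the normal, which is the property required of the functions in the trace space.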

Multiplying the GLM-Maxwell equations by appropriate test functions and using the fact \(\phi = 0\), the HDG discretization reads as follows: Find the approximate solution \(\left( \varvec{e}_h,\varvec{h}_h,\phi _h,\widehat{\varvec{e}}^{t}_h \right) \in \varvec{\mathcal {V}}_h^k \times \varvec{\mathcal {V}}_h^k \times {{\mathcal {W}}}_h^k \times {\varvec{\mathcal {S}}}_h^k\) such that the following equations are satisfied on each element K:

Note that \(\widehat{\varvec{h}}^{t}_h\) and \(\widehat{\varvec{e}}^{t}_h\) denote the approximate trace of \({\varvec{h}}^{t} := \varvec{n} \times \varvec{h} \times \varvec{n}\) and \({\varvec{e}}^{t} := \varvec{n} \times \varvec{e} \times \varvec{n}\) on the element boundaries, respectively. Next, we define \(\widehat{\varvec{h}}^{t}_h\) as follows

where \(\tau \) is a local stabilization parameter, and enforce the conservativity condition and the boundary condition as follows

The test functions are taken as \(\left( \varvec{v},\varvec{w},\psi ,\varvec{\mu }\right) \in \varvec{\mathcal {V}}_h^k \times \varvec{\mathcal {V}}_h^k \times {{\mathcal {W}}}_h^k \times {\varvec{\mathcal {S}}}_h^k\). The term \(\frac{1}{\alpha _3^2}\left<\phi _h,\psi \right>_{K} \) is added in (55) to provide additional stabilization of the divergence-free constraint.

According to the discussion on time-marching techniques in Sect. 2.3, the semi-discrete HDG formulation can be written as the DAE system (3) with \(\varvec{u}\) being the vector of degrees of freedom of \(\left( \varvec{e}_h,\varvec{h}_h, \phi _h\right) \) and \(\varvec{v}\) being the vector of degrees of freedom of \(\widehat{\varvec{e}}_h^t\). Note also that when constructing the global linear system for the degrees of freedom of \(\widehat{\varvec{e}}_h^t\), we locally eliminate the degrees of freedom of \(\phi _h\) by substituting it from (55) into (53). Therefore, the introduction of the Lagrange multiplier \(\phi _h\) does not affect the computational complexity of the HDG method. In other words, the computational complexity of the proposed HDG method is the same as that of the HDG methods presented in [15, 72, 73, 90]. Unlike the proposed HDG method, those HDG methods do not discretize the divergence-free constraint.
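The local elimination described above is an instance of static condensation. The sketch below illustrates the idea on a generic block system in which element-local unknowns are eliminated to leave a global system for the trace unknowns only. All matrices are random placeholders and the sizes are arbitrary assumptions; they are not the actual HDG operators of (53)–(57).

```python
import numpy as np

rng = np.random.default_rng(0)
n_loc, n_elem, n_trace = 4, 3, 5  # assumed sizes, for illustration only

# Element-local blocks: A_e u_e + B_e lam = f_e on each element,
# coupled through the global equation sum_e C_e u_e + D lam = g.
A = [4.0 * np.eye(n_loc) + 0.1 * rng.standard_normal((n_loc, n_loc))
     for _ in range(n_elem)]
B = [rng.standard_normal((n_loc, n_trace)) for _ in range(n_elem)]
C = [rng.standard_normal((n_trace, n_loc)) for _ in range(n_elem)]
f = [rng.standard_normal(n_loc) for _ in range(n_elem)]
D = 20.0 * np.eye(n_trace)
g = rng.standard_normal(n_trace)

# Condense: each element contributes a Schur complement to the trace system.
S = D.copy()
r = g.copy()
for Ae, Be, Ce, fe in zip(A, B, C, f):
    S -= Ce @ np.linalg.solve(Ae, Be)
    r -= Ce @ np.linalg.solve(Ae, fe)

# Global solve involves the trace unknowns only.
lam = np.linalg.solve(S, r)

# Recover the local unknowns element by element.
u = [np.linalg.solve(Ae, fe - Be @ lam) for Ae, Be, fe in zip(A, B, f)]
```

The global solve has size `n_trace` regardless of how many local unknowns each element carries, which is why adding a locally eliminated variable leaves the size of the global system unchanged.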

5.3 Stability and Consistency

We can show that the local problem (53)–(55) is well-defined. Indeed, inserting (56) into (53) and summing up the three equations (53)–(55) yields

Integrating this equation from time \(t_1=0\) to \(t_2 = t\) and choosing \(\left( \varvec{v},\varvec{w},\psi \right) = \left( \varvec{e}_h,\varvec{h}_h,\phi _h\right) \) as test functions, we obtain

This identity implies the local problem has a unique solution.

In a similar manner, the following energy identity holds for the semi-discrete HDG formulation:

This energy identity shows the existence and uniqueness of the numerical solution. In addition, the discrete energy

decays in time whenever \(\varvec{j} = 0\), \(\rho = 0\), and \(\varvec{e}^{\text {inc}} = 0\). Hence, the HDG method is well-defined and stable. Finally, it is easy to show that the exact solution also satisfies the HDG formulation (53)–(57). Therefore, the HDG method is consistent.

5.4 Numerical Results

In order to demonstrate the convergence and accuracy of the HDG method, a three-dimensional problem with no electric charge density (i.e. \(\rho =0\)) is considered. This problem involves the propagation of a standing wave in a cubic cavity \(\Omega = (0,1)\times (0,1)\times (0,1)\) with perfect electrical conductor (PEC) boundaries up to a final time \(T=1\). The permittivity is \(\varepsilon _r = 2\), the permeability \(\mu _r = 1\), and the current density is neglected, i.e. \(\varvec{j}= 0\). The exact solution of the problem is given by

where the angular frequency (or pulsation) is \(\omega = 1\). The GLM coefficients are set to \(\alpha _1 = \alpha _2 = \alpha _3 = 1\), and the stabilization parameter to \(\tau = 2\). The DIRK(3,3) scheme is used for the temporal discretization, and the time-step size is chosen sufficiently small so that the spatial discretization errors dominate.
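As an illustration of a three-stage, third-order DIRK scheme, the sketch below assumes that DIRK(3,3) refers to the L-stable method of Alexander listed in the References; this identification is an assumption. The test problem (a harmonic oscillator) and the helper names `dirk_step` and `integrate` are likewise illustrative and unrelated to the HDG system itself.

```python
import numpy as np

# Butcher tableau of a 3-stage, 3rd-order, stiffly accurate DIRK scheme.
gamma = 0.435866521508459  # root of x^3 - 3x^2 + 3x/2 - 1/6 = 0
A = np.array([
    [gamma, 0.0, 0.0],
    [(1.0 - gamma) / 2.0, gamma, 0.0],
    [-(6.0 * gamma**2 - 16.0 * gamma + 1.0) / 4.0,
     (6.0 * gamma**2 - 20.0 * gamma + 5.0) / 4.0,
     gamma],
])
b = A[-1].copy()   # stiffly accurate: weights equal the last row of A
c = A.sum(axis=1)  # stage times

def dirk_step(M, y, dt):
    """Advance the linear system y' = M y by one DIRK step of size dt."""
    identity = np.eye(len(y))
    k = []
    for i in range(3):
        # Each stage needs one linear solve with (I - dt * a_ii * M).
        stage_state = y + dt * sum(A[i, j] * k[j] for j in range(i))
        k.append(np.linalg.solve(identity - dt * A[i, i] * M, M @ stage_state))
    return y + dt * sum(b[i] * k[i] for i in range(3))

def integrate(M, y0, t_final, n_steps):
    """Integrate y' = M y from 0 to t_final with n_steps equal steps."""
    y = np.array(y0, dtype=float)
    for _ in range(n_steps):
        y = dirk_step(M, y, t_final / n_steps)
    return y

# Harmonic oscillator: y1' = y2, y2' = -y1, exact solution (cos t, -sin t).
M = np.array([[0.0, 1.0], [-1.0, 0.0]])
exact = np.array([np.cos(1.0), -np.sin(1.0)])
err_coarse = np.linalg.norm(integrate(M, [1.0, 0.0], 1.0, 10) - exact)
err_fine = np.linalg.norm(integrate(M, [1.0, 0.0], 1.0, 20) - exact)
```

For a third-order scheme, halving the time step should reduce the error by a factor close to 8.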

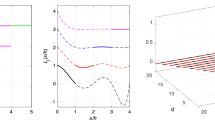

Tables 5, 6 and 7 present the numerical errors and estimated orders of convergence (e.o.c.) for HDG-\(\mathbb {P}_{k}\) with polynomial degrees \(k = 1\), 2 and 3, respectively. The convergence rates and errors for \(\phi _h\) are shown in Table 8. We observe that the convergence rates are optimal for all the variables. Finally, we compare the time evolution of the \(L^2\)-error norm of \(\nabla \cdot \varvec{e}_h\) for the uncorrected Maxwell’s equations and the GLM-Maxwell system. In particular, Fig. 11 shows the time evolution for various polynomial orders on an \(8 \times 8 \times 8\) mesh. We observe that the errors with the GLM-Maxwell model are smaller than those with the uncorrected Maxwell’s equations. Therefore, the numerical treatment of the divergence-free constraint using the GLM-Maxwell model enhances accuracy and long-time stability.
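An estimated order of convergence is typically obtained from the errors on two successive meshes as \(\log (e_{i-1}/e_i) / \log (h_{i-1}/h_i)\). The sketch below shows this computation; the function name `estimated_orders` is illustrative, and the error values are synthetic numbers generated from an assumed \(h^{3}\) decay, not data from the tables.

```python
import math

def estimated_orders(h, err):
    """Estimated orders of convergence between successive mesh sizes."""
    return [
        math.log(err[i - 1] / err[i]) / math.log(h[i - 1] / h[i])
        for i in range(1, len(h))
    ]

# Synthetic errors behaving like C * h^3 (assumed, for illustration only).
h = [1 / 2, 1 / 4, 1 / 8, 1 / 16]
err = [0.7 * hi**3 for hi in h]

orders = estimated_orders(h, err)
```

With these synthetic inputs every entry of `orders` equals 3 up to rounding, matching the assumed decay rate.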

5.5 Bibliography Notes

Discretization of Maxwell’s equations in the high-frequency regime using HDG methods has been carried out in both the frequency and time domains [14, 15, 46, 71, 72, 73, 74, 90, 113, 119]. The first HDG method for the time-harmonic Maxwell’s equations was proposed in [90] for two-dimensional problems. The extension of the method to three dimensions was presented in [72, 73]. HDG was employed for full 3D modeling of the resonant transmission of THz waves through annular gaps in the field of nanoplasmonics [94, 118]. An HDG method for computing nonlocal electromagnetic effects in three-dimensional metallic nanostructures has been recently introduced in [113].

6 Perspectives

In spite of considerable effort towards making DG methods more robust and computationally efficient, there are still open problems demanding advances on several research fronts. We end this paper with perspectives on ongoing extensions and new developments of hybridized DG methods for wave propagation problems. While HDG has been applied to a wide variety of wave propagation problems, EDG and IEDG have only been applied to compressible flows. Due to their significantly lower computational cost, the application of EDG and IEDG methods to solid mechanics, incompressible flows, and electromagnetism is encouraged.

Hybridized DG methods use polynomial spaces to approximate the solution on elements and faces. A possible extension is the enrichment of the approximation spaces with non-polynomial functions in order to capture discontinuities, singularities, and boundary layers. The hybridized DG framework may lend itself to this task because the enrichment can be done at the element level thanks to the discontinuous nature of the approximation spaces. Indeed, an HDG method using exponential kernels for high-frequency wave propagation is proposed in [92], and an extended HDG method with Heaviside enrichment for heat bimaterial problems is developed in [62].

In this paper, we have exclusively focused on implicit hybridized DG methods. It is highly desirable to develop hybridized DG methods that can be coupled with explicit time discretization for time-dependent problems. They should be computationally competitive with other explicit DG methods, while retaining some important advantages such as the superconvergence property. As a step in this direction, explicit HDG methods have been devised for the acoustic wave equation [109]. While extension of the explicit HDG methods to elastodynamics and electromagnetics is quite straightforward, it is not trivial to develop efficient explicit HDG methods for fluid dynamics. Another area of interest is to devise hybridized DG methods coupled with implicit-explicit (IMEX) time-marching schemes. This has recently been pursued for acoustic wave problems [68].

Also, the time-marching schemes for hybridized DG methods discussed herein are dissipative in the sense that the discrete energy decays in time for problems in which the exact energy is invariant in time. For many wave propagation problems, it is crucial to equip numerical methods with desirable conservation properties, such as energy and momentum conservation, for long-time simulations. There has been recent work on symplectic HDG methods for acoustic waves [24, 103]. It will be interesting to develop symplectic HDG methods for shallow water waves, elastic waves, and electromagnetic waves.

Finally, we point out other work on the development of HDG methods for wave propagation problems. The first HDG method for the Helmholtz equation was introduced in [61]. In [47], a wide family of discontinuous Galerkin methods, which included the HDG methods, was proven to be stable regardless of the wave number. The methods used piecewise linear approximations. In [38], an analysis of the HDG methods for the Helmholtz equation was carried out, which shows that the method is stable for any wave number, mesh and polynomial degree, and which recovers the orders of convergence and superconvergence obtained previously in [61]. In [60], the HDG method for eigenvalue problems was developed and analyzed. HDG methods for the Oseen equations were developed and analyzed in [8]. A systematic way of defining HDG methods for Friedrichs’ systems has been developed in [5]. An explicit HDG method for the Serre-Green-Naghdi wave model is devised in [102]. The first HDG method for solving Korteweg-de Vries (KdV) type equations is developed and analyzed in [42]. An HDG method for coupled fluid-structure interaction problems is presented in [106]. Hybridized DG methods for ideal and resistive MHD problems have recently been developed in [16].

Notes

Strictly speaking, the finite element mesh can only partition the problem domain if \(\partial \Omega \) is piecewise p-th degree polynomial. For simplicity of exposition, and without loss of generality, we assume hereinafter that \(\mathcal {T}_h\) actually partitions \(\Omega \).

Note the amplification factor \(N_1\) in the y-axis is a logarithmic quantity.

Note the amplitude of the instabilities in Fig. 3 is non-dimensionalized with respect to the freestream velocity.

References

Ahnert, T., Bärwolff, G.: Numerical comparison of hybridized discontinuous Galerkin and finite volume methods for incompressible flow. Int. J. Numer. Methods Fluids 76(5), 267–281 (2014)

Alexander, R.: Diagonally implicit Runge–Kutta methods for stiff ODEs. SIAM J. Numer. Anal. 14, 1006–1021 (1977)

Balan, A., Woopen, M., May, G.: Adjoint-based hp-adaptation for a class of high-order hybridized finite element schemes for compressible flows. In: 21st AIAA Computational Fluid Dynamics Conference (2013)

Bonnasse-Gahot, M., Calandra, H., Diaz, J., Lanteri, S.: Hybridizable discontinuous Galerkin method for the 2-D frequency-domain elastic wave equations. Geophys. J. Int. 213(1), 637–659 (2018)