Abstract

We propose an indefinite proximal subgradient-based algorithm (IPSB) for solving nonsmooth composite optimization problems. IPSB generalizes Nesterov’s dual algorithm by adding an indefinite proximal term to the subproblems, which can make the subproblems easier to solve and the algorithm more efficient when the proximal operator is chosen judiciously. Under mild assumptions, we establish sublinear convergence of IPSB to a region of the optimal value. We also report numerical results demonstrating the efficiency of IPSB in comparison with the classical dual averaging-type algorithms.


1 Introduction

Consider the nonsmooth composite convex optimization problem

$$\begin{aligned} \min _{x \in Q}\ F(x):=f(x)+h(x), \end{aligned}$$(1.1)

where \(Q \subseteq \mathbb {R}^n\) is a simple closed convex set, \(f,h:\mathbb {R}^n \rightarrow {\mathbb {R} \cup \{+\infty \}}\) are convex (not necessarily smooth) and \(F:\mathbb {R}^n \rightarrow \mathbb {R} \cup \{+\infty \}\) is nonsmooth. Moreover, h is assumed to be the summation of a quadratic convex and a convex function (SQCC). Problem (1.1) has received much attention due to its broad applications in several different areas such as signal processing, system identification, machine learning and image processing; see, for instance, [6, 7, 10] and references therein.

Among the numerical algorithms for solving nonsmooth optimization problems (1.1) such as splitting algorithms [9], cutting plane methods [21], ellipsoid methods [11], bundle methods [17], gradient sampling methods [4] and smoothing methods [19], subgradient methods [25] are fundamental, which have been extensively studied due to their applicability to a wide variety of problems and low requirement on memory [3, 8, 22, 23]. The iteration complexity for applying a subgradient method to solve the general nonsmooth convex minimization problem is \(O({1}/{\epsilon ^2})\), i.e., after \(O({1}/{\epsilon ^2})\) iterations, the difference between the objective function value and the optimum is about \(\epsilon \); see [21]. For problems equipped with additional structure, various approaches are proposed such as smoothing schemes [19], fast iterative shrinkage-thresholding algorithm [1], bundle method [17], to improve the iteration complexity to \(O({1}/{\epsilon })\).

Note that for nonsmooth optimization problems, the subgradient usually does not vanish at the solution point, and as a consequence, the stepsize in a subgradient-based method has to approach zero. Such a vanishing stepsize slows down the convergence of the subgradient method [20]. To deal with this undesirable phenomenon, Nesterov proposed a dual averaging (DA) scheme [20]. Each iteration of the DA scheme takes the form

where \(\lambda _k\), \(\forall k \ge 0\) are stepsizes, \( \{\beta _{k}\}_{k=0}^{\infty } \) is a positive nondecreasing sequence and \( r(\cdot ) \) is an auxiliary strongly convex function. Following the DA scheme, Xiao [26] proposed a regularized dual averaging (RDA) scheme, which generates the iterate by minimizing a problem that involves all the past subgradients of f and the whole function h,

where \(x_0\) is the minimizer of h over Q. Setting the auxiliary function \(r(\cdot )\) to \(\frac{1}{2}\Vert \cdot -x_0\Vert ^2 \) in the RDA scheme (1.3) yields the so-called proximal subgradient-based (PSB) method

The regularization function \(r(\cdot )\) is crucial in RDA and PSB, where it plays a role similar to that of the proximal term in the classical proximal point algorithm (PPA) [5, 18, 24]. On the one hand, it ensures the existence and uniqueness of the solution of the subproblems, and makes the subproblems stable. On the other hand, it also influences the efficiency of the algorithms. Recently, much attention has been paid to relaxing the strong convexity requirement on the proximal term in PPA [13] and related algorithms such as the augmented Lagrangian method [12] and the alternating direction method of multipliers [14, 16], and this strategy has been very successful in numerical experiments. In this paper, we relax \(r(\cdot )\) in (1.3) to an indefinite one, yielding the following dynamic regularized dual averaging (DRDA) scheme

where \((k+1)h(\cdot )+ {\beta _{k+1}}r_k(\cdot )\) is strongly convex for each k. Note that under this requirement, even if the function \(h(\cdot )\) is convex, \(r_k(\cdot )\) could be carefully chosen to be nonconvex. Specifically, we introduce an appropriate indefinite term in (1.4) and then propose the indefinite proximal subgradient-based (IPSB) algorithm. A convergence rate is established under mild assumptions. We conduct numerical experiments on the regularized least squares problem and elastic net regression. The numerical results demonstrate the efficiency of IPSB in comparison with the existing algorithms SDA and PSB.

The rest of this paper is organized as follows. In the following subsection, we introduce some notations and preliminaries. Section 2 reviews the simple dual averaging algorithm, the proximal subgradient-based algorithm and gives our new extensions. Section 3 presents the convergence analysis. Numerical experiments are performed in Sect. 4. We make conclusions in Sect. 5.

1.1 Notations and preliminaries

In this subsection, we present some definitions and preliminary results that will be used in our analysis later. Let Q be a closed convex set in \(\mathbb {R}^n\). We use \(\langle s, x \rangle \) and \(s^Tx\) to denote the inner product of s and x, two real vectors with the same dimension. Let \(\mathbb {S}^{n}\) denote the set of symmetric matrices of order n, and I denote the identity matrix whose dimension is clear from the context. The Euclidean norm defined by \(\sqrt{\langle \cdot ,\cdot \rangle }\) is denoted by \(\Vert \cdot \Vert \). Let [m] denote the set \(\{1,2,\ldots ,m\}\). The ball with center x and radius r reads as

$$\begin{aligned} B_r(x)=\{y \in \mathbb {R}^n:\ \Vert y-x\Vert \le r\}. \end{aligned}$$

The subdifferential of a convex function f at point \(x\in \mathrm {dom} f\) is given by

$$\begin{aligned} \partial f(x)=\{g \in \mathbb {R}^n:\ f(y) \ge f(x)+\langle g, y-x \rangle ,\ \forall y \in \mathbb {R}^n\}, \end{aligned}$$

and any element in \(\partial f(x)\) is called a subgradient of f at x, where \(\mathrm {dom} f\) is the domain of f, i.e., the set of \(x\in \mathbb {R}^n\) such that f(x) is finite.

A function \(f:\mathbb {R}^n \rightarrow \mathbb {R}\cup \{+\infty \}\) is called strongly convex if there exists a constant \(\kappa > 0\) such that

where the constant \(\kappa \) is called the strong convexity parameter.

For \(M \in \mathbb {R}^{n \times n}\), we use the notation \(\Vert x\Vert _M^2\) to denote \(x^TMx\) even if M is not positive semidefinite. Denote by \(\,\mathrm{tr }\,(M)\) the trace of the matrix M.

Definition 1.1

(SQCC) A function \(h:\mathbb {R}^n \rightarrow {\mathbb {R} \cup \{\infty \}}\) is called the summation of quadratic convex and convex functions (SQCC) if there exist a (nonlinear) quadratic convex function \(q:\mathbb {R}^n \rightarrow {\mathbb {R} \cup \{\infty \}}\) and a convex function \({\tilde{h}}:\mathbb {R}^n \rightarrow {\mathbb {R} \cup \{\infty \}}\) such that

$$\begin{aligned} h(x)=q(x)+{\tilde{h}}(x). \end{aligned}$$

Since h is SQCC, there exists a non-zero positive semidefinite matrix \(\Sigma _h \in \mathbb {S}^{n}\) such that for all x, \(y \in \mathbb {R}^n\),

or equivalently,

2 A new proximal subgradient algorithm

In the first subsection, we briefly review two existing algorithms SDA and PSB. Then in the second subsection, we describe the indefinite proximal subgradient-based (IPSB) algorithm.

2.1 SDA and PSB

We start from the classical subgradient algorithm [3] for solving problem (1.1)

where \(P_Q\) denotes the projection onto Q, \(d_k\) is either a subgradient \(D_k \in \partial F(x_k)\) or the normalized subgradient \({ D_k}/{\Vert D_k\Vert } \), and the sequence of the stepsizes \(\{\lambda _k\}_{k=0}^{\infty }\) satisfies the divergent-series rule:

In order to avoid taking decreasing stepsizes (i.e., \(\lambda _k \rightarrow 0\)) as in the classical subgradient algorithm, Nesterov [20] proposed the SDA algorithm,

where \(\{\beta _{ k+1}\}_{k=0}^{\infty }\) is a positive nondecreasing sequence and \(x_0\) denotes the initial point. There are two simple strategies for choosing \(\{\lambda _{i}\}_{i=0}^{\infty }\): either \(\lambda _i \equiv 1\) or \(\lambda _i=1/\Vert d_i\Vert \). SDA can solve the general nonsmooth convex optimization problem and has been proved to be optimal from the viewpoint of worst-case black-box lower complexity bounds [20]. By considering problems with additive structure as in (1.1), Xiao [26] proposed the RDA scheme. A specific algorithm under the RDA scheme is PSB, which reads as follows

where \(g_i \in \partial f(x_i)\), \(\forall i\ge 0\), the stepsize \(\lambda _i\equiv 1\), \(\{\beta _{k+1}\}_{k=0}^{\infty }\) is nondecreasing, and \(x_0 \in \mathrm{argmin}\,_{x \in Q} h(x)\). The above iteration (2.3) reduces to (2.2) when \(h \equiv 0\).
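To make the SDA iteration concrete, the following is a minimal Python sketch rather than the authors' implementation. It assumes \(Q=\mathbb {R}^n\), \(\lambda _i \equiv 1\), and the update \(x_{k+1}=\arg \min _x \{\langle s_{k+1}, x-x_0\rangle +\frac{\beta _{k+1}}{2}\Vert x-x_0\Vert ^2\}\), which then has the closed form \(x_{k+1}=x_0-s_{k+1}/\beta _{k+1}\). The recurrence used for \(\beta _k\) is inferred from the proof of Lemma 2.1, and the names `beta_tilde` and `sda` are hypothetical.

```python
import numpy as np

def beta_tilde(num, lam_hat=1.0):
    """Return [b_1, ..., b_num] with b_1 = lam_hat and b_{k+1} = b_k + 1/b_k (assumed recurrence)."""
    betas = [lam_hat]
    for _ in range(num - 1):
        betas.append(betas[-1] + 1.0 / betas[-1])
    return np.array(betas)

def sda(subgrad, x0, num_iters, gamma=1.0, lam_hat=1.0):
    """Simple dual averaging on Q = R^n with unit stepsizes; returns all iterates x_0, ..., x_K."""
    x0 = np.asarray(x0, dtype=float)
    betas = gamma * beta_tilde(num_iters, lam_hat)
    s = np.zeros_like(x0)            # running sum of subgradients
    x = x0.copy()
    iterates = [x.copy()]
    for k in range(num_iters):
        s = s + subgrad(x)           # s_{k+1} = s_k + g_k
        x = x0 - s / betas[k]        # closed-form minimizer; betas[k] plays the role of beta_{k+1}
        iterates.append(x.copy())
    return np.array(iterates)
```

For example, with \(f(x)=\Vert x-a\Vert _1\) one can pass `lambda x: np.sign(x - a)` as the subgradient oracle and average the returned iterates; no stepsize has to be driven to zero.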

2.2 Algorithm IPSB

Motivated by indefinite approaches, we extend RDA to the following dynamic regularized dual averaging (DRDA) scheme

We only assume that the sum \((k+1)h(x)+{\beta _{k+1}}r_k(x)\) is strongly convex. A simple choice of \(r_k(x)\) is

where \(G_{k+1}=I-(k+1)\Sigma _h/\beta _{k+1}\). Algorithm 1 describes the algorithm in detail.

In Algorithm 1, the choice of the indefinite matrix \(G_k\) in step 3 guarantees the strong convexity of the subproblem minimized in step 4. In some specially structured problems, the introduction of \(G_k\) can make the subproblem in step 4 much easier to solve.
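The effect of the indefinite matrix can be checked numerically on a toy instance. The sketch below assumes a purely quadratic \(h(x)=\frac{1}{2}x^T\Sigma _h x\) (an illustrative choice, not one of the paper's test problems) and uses \(G_k=I-k\Sigma _h/\beta _k\); the function name `indefinite_G` is hypothetical. It shows that \(G_k\) can have negative eigenvalues while the Hessian of \(kh(x)+\frac{\beta _k}{2}\Vert x-x_0\Vert ^2_{G_k}\) is exactly \(\beta _k I\).

```python
import numpy as np

def indefinite_G(k, beta, Sigma_h):
    """G_k = I - k * Sigma_h / beta_k; may be indefinite when k * Sigma_h / beta_k exceeds I."""
    n = Sigma_h.shape[0]
    return np.eye(n) - (k / beta) * Sigma_h

# Toy data: Sigma_h is positive semidefinite but singular.
Sigma_h = np.diag([2.0, 0.0])
k, beta = 5, 4.0
G = indefinite_G(k, beta, Sigma_h)

# Hessian of k*h(x) + (beta/2)*||x - x0||_G^2 for h(x) = 0.5 * x^T Sigma_h x.
hessian = k * Sigma_h + beta * G

min_eig_G = np.linalg.eigvalsh(G).min()   # negative here, so G is indefinite
```

In this instance the curvature removed by \(G_k\) is exactly the curvature supplied by \(kh\), which is why the subproblem stays strongly convex.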

Remark 2.1

Note that as the iterations progress, the influence of the initial point \(x_0\) should vanish. In other words, the auxiliary quadratic term should be as small as possible. By comparing the auxiliary functions in the k-th step of the algorithms PSB and IPSB, we obtain

which indicates that the indefinite term can reduce the impact of \(x_0\) on the k-th subproblem as k increases.

Remark 2.2

The following choice of the sequence \(\{{\tilde{\beta }}_{k+1}\}_{k=0}^{\infty }\) initialized in Algorithm 1 is due to Nesterov [20]:

where \( {\hat{\lambda }}>0\) is an initial parameter.

For the sequence \(\{{\tilde{\beta }}_{k+1}\}_{k=0}^{\infty }\), we have the following estimate, which corrects the estimate in [20, Lemma 3].

Lemma 2.1

Based on (2.5), we have

Proof

According to (2.5), we can obtain \({\tilde{\beta }}_1 = {\hat{\lambda }}\) and \({\tilde{\beta }}_{k}^2={\tilde{\beta }}_{k-1}^2+{{\tilde{\beta }}_{k-1}^{-2}}+2\). Consequently,

which implies the left-hand side of estimation (2.6). Conversely, we can derive that

From

we have

Finally, the right-hand side of the estimation (2.6) follows from substituting (2.8) into (2.7). \(\square \)
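The growth of the sequence can also be inspected numerically. The sketch below assumes the recurrence \({\tilde{\beta }}_1={\hat{\lambda }}\), \({\tilde{\beta }}_{k+1}={\tilde{\beta }}_k+1/{\tilde{\beta }}_k\), which is our reading of (2.5) based on the squared identity used at the start of the proof. It checks that identity and the elementary consequence \({\tilde{\beta }}_{k+1}^2 \ge {\tilde{\beta }}_1^2+2k\) obtained by dropping the term \({\tilde{\beta }}_{k}^{-2}\) and telescoping; it does not reproduce the exact constants of (2.6). The name `beta_tilde` is hypothetical.

```python
import numpy as np

def beta_tilde(num, lam_hat=1.0):
    """Return [b_1, ..., b_num] with b_1 = lam_hat and b_{k+1} = b_k + 1/b_k (assumed form of (2.5))."""
    betas = [lam_hat]
    for _ in range(num - 1):
        betas.append(betas[-1] + 1.0 / betas[-1])
    return np.array(betas)

betas = beta_tilde(1000, lam_hat=1.0)

# Squared identity: b_k^2 = b_{k-1}^2 + b_{k-1}^{-2} + 2.
lhs = betas[1:] ** 2
rhs = betas[:-1] ** 2 + betas[:-1] ** (-2.0) + 2.0

# Telescoped lower bound: b_{k+1}^2 >= b_1^2 + 2k for k = 0, 1, ...
lower = betas[0] ** 2 + 2.0 * np.arange(len(betas))
```

The lower bound shows that \({\tilde{\beta }}_k\) grows at least like \(\sqrt{2k}\), which is the growth that balances the terms in the complexity estimate.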

3 Convergence analysis

Similar to Nesterov’s analysis [20], the convergence of the algorithm IPSB is established. First let us define two auxiliary functions as follows

where \({\mathcal {F}}_D=\{x \in Q: \frac{1}{2}\Vert x-x_0\Vert ^2 \le D\}\), \(D > 0\) and \( G_k=I-{k\Sigma _h}/{\beta _{ k }},\ \forall k \ge 1. \) Let \(x_0 \in \mathop {\arg \min }_{x \in Q} h(x)\). Since \(s_0=0\), we have

Notice that (3.3) is a concave maximization problem and then it has a unique optimal solution in the closed convex set Q. According to Danskin’s theorem [2, Proposition B.25], we obtain that both \(V _ { 1 } ( -s_{0} ) \) and \(\nabla V_1(-s_0)\) are well defined. Let

In the following, the first lemma studies the relation between \(U _ { k } ( s )\) and \(V _ {k}(s)\), and the second lemma studies the smoothness of function \(V _ {k}(s)\).

Lemma 3.1

For any \(s \in \mathbb {R}^n\) and \(k \in \mathbb {N}\), we have

Proof

According to the definitions (3.1), (3.2) and \({\mathcal {F}}_D=\{x \in Q: \frac{1}{2}\Vert x-x_0\Vert ^2 \le D\}\), we have

where the first inequality corresponds to the partial Lagrangian relaxation, and the last inequality holds as \({\Sigma _h} \succeq 0\). \(\square \)

Lemma 3.2

The well-defined function \(V _ {k}(s)\) is convex and continuously differentiable. Then we have

where \(x_k(s)\) is the maximizer of the problem (3.2) that defines \(V_{k}(s)\). In addition, \(\nabla V_{k} (s)\) is \({1}/{\beta _k}\)-Lipschitz continuous, i.e.,

Proof

Since the objective function of problem (3.2) is \(\beta _k\)-strongly concave with respect to x, problem (3.2) has a unique maximizer \(x_k(s)\). Then (3.6) follows from Danskin’s theorem [2, Proposition B.25].

For any \(l(x)\in \partial h(x)\), \( s_1, s_2 \in \mathbb {R}^n \), according to the first-order optimality conditions, we have

Adding these two inequalities together, we can get

where the last inequality follows from \(G_k=I-\frac{k}{\beta _{ k }}\Sigma _h\). Thus, we have

which is equivalent to

\(\square \)
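As a sanity check of Lemma 3.2, consider a simple special case that is our own illustration and not part of the original analysis: \(Q=\mathbb {R}^n\), \(h(x)=\frac{1}{2}\Vert x\Vert ^2_{\Sigma _h}\) and \(x_0=0\), which is a minimizer of h. Using \(V_k(s)=\max _{x\in Q}\{\langle s, x-x_0\rangle -kh(x)-\frac{\beta _k}{2}\Vert x-x_0\Vert ^2_{G_k}\}\) and \(G_k=I-k\Sigma _h/\beta _k\), the quadratic terms in \(\Sigma _h\) cancel:

$$\begin{aligned} V_k(s)&=\max _{x \in \mathbb {R}^n}\left\{ \langle s, x \rangle -\frac{k}{2}\Vert x\Vert ^2_{\Sigma _h}-\frac{\beta _k}{2}\Vert x\Vert ^2+\frac{k}{2}\Vert x\Vert ^2_{\Sigma _h}\right\} \\&=\max _{x \in \mathbb {R}^n}\left\{ \langle s, x \rangle -\frac{\beta _k}{2}\Vert x\Vert ^2\right\} =\frac{1}{2\beta _k}\Vert s\Vert ^2,\\ x_k(s)&=\frac{s}{\beta _k},\qquad \nabla V_k(s)=\frac{s}{\beta _k}=x_k(s)-x_0,\\ \Vert \nabla V_k(s_1)-\nabla V_k(s_2)\Vert&=\frac{1}{\beta _k}\Vert s_1-s_2\Vert . \end{aligned}$$

In this case the gradient formula and the \({1}/{\beta _k}\)-Lipschitz bound hold with equality, regardless of whether \(G_k\) is positive semidefinite.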

Let \(F^{*}_{D}=\min _{x \in {\mathcal {F}}_D} F(x)\). According to the convexity of the objective function, we have

Consequently, we define the gap function as

It follows from the inequality (3.5) that

Remark 3.1

For any fixed k, there exists a constant P that satisfies \(\max \nolimits _{i\in [k]} \frac{1}{2}\Vert x_i-x_0\Vert ^2\le P\). Thus we have

where \(\lambda _{max}\) is the maximum eigenvalue of \(\Sigma _h\).

Now we present the upper bounds as follows.

Theorem 3.1

Let the sequences \({\{x_i\}}^{k}_{i=0} \subset Q\) and \({\{g_i\}}^{k}_{i=0} \subset \mathbb {R}^n\) be generated by Algorithm 1. Let the sequence \(\{{\beta }_i\}_{i=0}^{k}\) satisfy \(\beta _{ k }=\gamma {\tilde{\beta }}_k\), where \(\{{\tilde{\beta }}_i\}_{i=1}^{k}\) is defined in (2.5), \({\tilde{\beta }}_{ 0 }={\tilde{\beta }}_{1}\) and \(\gamma >0\). Then

1. For any \(k\in \mathbb {N}\), we have

$$\begin{aligned} \delta _k \le \Delta _k \le \beta _{k}D + T + \frac{1}{2}\sum _{i=0}^{k-1}\frac{1}{\beta _i} \Vert g_i\Vert ^2 + \lambda _{max}(k-1)P. \end{aligned}$$(3.11)

2. Assume that

(1) the sequence \(\{g_k\}_{k \ge 0}\) is bounded, which means that

$$\begin{aligned} \exists L >0\ \text {such that}\ \Vert g_k\Vert \le L,\ \forall k \ge 0, \end{aligned}$$(3.12)

(2) there exists a solution \(x^*\) satisfying

$$\begin{aligned} \langle g,x-x^* \rangle \ge 0,~ g \in \partial f(x),\ \forall x \in Q. \end{aligned}$$(3.13)

Then it holds that

3. Let \(x^*\) be an interior solution, i.e., there exist \(r, D >0\) satisfying \(B_r(x^*) \subseteq {\mathcal {F}}_D\). Assume there is a \(\Gamma _h >0\) such that

$$\begin{aligned} \max _{\begin{array}{c} z \in \partial h(y) \\ y \in B_r(x^*) \end{array}} \Vert z\Vert \le \Gamma _h. \end{aligned}$$

Then we have

where \( \bar{{s}}_{k+1}=\frac{1}{k+1}\sum _{i=0}^{k}g_i \).

Proof

1. According to the definitions of \(V_k(s)\) and \(G_k\), for any integer \(k\ge 1\), we have

$$\begin{aligned} V_{k-1}(-s_k)&=\max _{x \in Q}\left\{ \langle -s_k, x-x_0 \rangle -(k-1)h(x)-\frac{\beta _{k-1}}{2}\Vert x-x_0\Vert ^2_{G_{k-1}}\right\} \\&\ge {\langle -s_k, x_k-x_0 \rangle -(k-1)h(x_k)-\frac{\beta _{k-1}}{2}\Vert x_k-x_0\Vert ^2_{G_{k-1}}}\\&= V_{k}(-s_k)+h(x_k)+\frac{\beta _k }{2}\Vert x_k-x_0\Vert ^2_{G_k}-\frac{\beta _{k-1} }{2}\Vert x_k-x_0\Vert ^2_{G_{k-1}}\\&\ge V_k(-s_k)+h(x_k)+\frac{\beta _{ k }-\beta _{k-1} }{2}\Vert x_k-x_0\Vert ^2-\frac{1}{2}\Vert x_k-x_0\Vert ^2_{\Sigma _h}\\&\ge V_k(-s_k)+h(x_k)-\frac{1}{2}\Vert x_k-x_0\Vert ^2_{\Sigma _h}. \end{aligned}$$

According to Lemma 3.2, we have

$$\begin{aligned} V_k(s+\sigma ) \le V_k(s) + \langle \sigma , \nabla V_k(s) \rangle +\frac{1}{2\beta _k}\Vert \sigma \Vert ^2,~\forall s, \sigma \in \mathbb {R}^n. \end{aligned}$$(3.16)

Substituting \(s_k\) into (3.16) yields that

$$\begin{aligned}&V_k(-s_k)+h(x_k) - \frac{1}{2}\Vert x_k-x_0\Vert ^2_{\Sigma _h}\\&\quad \le V_{k-1}(-s_k) = V_{k-1} (-s_{k-1}-g_{k-1}) \\&\quad \le V_{k-1}(-s_{k-1}) + \langle -g_{k-1}, \nabla V_{k-1}(-s_{k-1}) \rangle +\frac{1}{2\beta _{k-1}}\Vert g_{k-1}\Vert ^2\\&\quad = V_{k-1}(-s_{k-1}) + \langle -g_{k-1}, x_{k-1}-x_0 \rangle +\frac{1}{2\beta _{k-1}}\Vert g_{k-1}\Vert ^2,~ \forall k \ge 1, \end{aligned}$$

which further implies that

$$\begin{aligned}&\langle g_{k- 1}, x_{k-1}-x_0 \rangle + h(x_k)\\&\quad \le V_{k-1}(-s_{k-1}) - V_k(-s_k)+\frac{1}{2\beta _{k-1}}\Vert g_{k-1}\Vert ^2 + \frac{1}{2}\Vert x_k-x_0\Vert ^2_{\Sigma _h}, ~ \forall k \ge 1. \end{aligned}$$

By summing the above inequality from 1 to k, we obtain

$$\begin{aligned}&\sum \limits _{i=1}^{k} [\langle g_i, x_i-x_0 \rangle + h(x_{i+1})]\\&\quad \le V_{1} (-s_1)- V_{k+1} (-s_{k+1})+ \frac{1}{2}\sum \limits _{i=1}^{k} \left[ \frac{1}{\beta _i} \Vert g_i\Vert ^2+\Vert x_{i+1}-x_0\Vert ^2_{\Sigma _h}\right] , \end{aligned}$$

which is equivalent to

$$\begin{aligned}&\sum _{i=0}^{k} [\langle g_i, x_i-x_0 \rangle + h(x_{i})] + V_{k+1} (-s_{k+1}) \le V_{1} (-s_1) + h(x_0)+ h(x_1) - h(x_{k+1})\nonumber \\&\quad +\frac{1}{2}\sum _{i=1}^{k} \left[ \frac{1}{\beta _i} \Vert g_i\Vert ^2+\Vert x_{i+1}-x_0\Vert ^2_{\Sigma _h}\right] . \end{aligned}$$(3.17)

By combining with (3.16) and \(s_1=s_0+g_0\), we have

$$\begin{aligned} V_1(-s_1)&=V_1(-s_0-g_0) \le V_1(-s_0) +\langle -g_0, \nabla V_1(-s_0)\rangle + \frac{1}{2\beta _{1}}\Vert g_0\Vert ^2\\&= T - h(x_1)+ \frac{1}{2\beta _{0}} \Vert g_0\Vert ^2, \end{aligned}$$

where the equality follows from (3.4) and \(\beta _{ 0 }=\beta _{1}\). By noting that \(x_0 = \arg \min _{ x \in Q } h(x)\), we can obtain that

$$\begin{aligned} h(x_0) \le h(x_{k+1}). \end{aligned}$$

Finally, combining (3.9), (3.17) and the above inequalities, we conclude that

$$\begin{aligned} \Delta _{k+1} \le \beta _{k+1}D + T + \frac{1}{2}\sum _{i=0}^{k}\frac{1}{\beta _i} \Vert g_i\Vert ^2 + \frac{1}{2}\sum _{i=1}^{k}\Vert x_{i+1}- x_0\Vert ^2_{\Sigma _h}. \end{aligned}$$

2. Notice that \(x_k = \mathop {\arg \min }_{ x \in Q } \left\langle s_{k} , x - x _ { 0 } \right\rangle +kh ( x )+ \frac{\beta _{k}}{2} \Vert x-x_0\Vert ^2_{G_k} \). By the convexity of the objective function, we have

$$\begin{aligned} \left\langle s_k + k l_k+\beta _{ k } G_k (x_k-x_0), x-x_k \right\rangle \ge 0,\ \forall x \in Q. \end{aligned}$$(3.18)

Notice that \(G_k=I-{k\Sigma _h}/{\beta _{ k }}\). Then we can define the following \(\beta _{ k }\)-strongly convex function

$$\begin{aligned} \phi _k(x):=kh(x)+\frac{\beta _{ k }}{2}\Vert x-x_0\Vert ^2_{G_k},\ k \in \mathbb {N}, \end{aligned}$$

which implies that

$$\begin{aligned} \phi _k(x) \ge \phi _k(x_k) + \left\langle kl_k+\beta _{ k } G_k (x_k-x_0), x-x_k \right\rangle +\frac{\beta _{ k }}{2}\Vert x_k-x\Vert ^2. \end{aligned}$$(3.19)

Moving \(\phi _k(x_k)\) from the right-hand side of the inequality (3.19) to the left-hand side, we get

$$\begin{aligned}&\left\langle kl_k+\beta _{ k } G_k (x_k-x_0), x-x_k \right\rangle +\frac{\beta _{k }}{2}\Vert x_k-x\Vert ^2\\&\le k[h(x)-h(x_k)] + \frac{\beta _{ k }}{2}\Vert x-x_0\Vert ^2_{G_k} -\frac{\beta _{ k }}{2}\Vert x_k-x_0\Vert ^2_{G_k}. \end{aligned}$$

Combining with (3.18) yields that

$$\begin{aligned} \frac{\beta _{ k }}{2}\Vert x_k-x\Vert ^2 \le&~ kh(x)-kh(x_k)+ \frac{\beta _{ k }}{2}\Vert x-x_0\Vert ^2 _{G_k} - \frac{\beta _{ k }}{2}\Vert x_k-x_0\Vert ^2_{G_k} \nonumber \\&+ \left\langle kl_k+\beta _{ k }G_k(x_k-x_0), x_k -x \right\rangle \nonumber \\ \le&~ kh(x)-kh(x_k)+\frac{\beta _{ k }}{2}\Vert x-x_0\Vert ^2_ {G_k}- \frac{\beta _{ k }}{2}\Vert x_k-x_0\Vert ^2_{G_k} - \left\langle {s_k}, x_k -x \right\rangle \nonumber \\ =&V_k(s_k) + kh(x) + \frac{\beta _{ k }}{2} \Vert x-x_0\Vert ^2_{G_k} + \left\langle {s_k}, x-x_0 \right\rangle \nonumber \\ =&~ V_k(s_k)+\sum _{i=0}^{k-1}\left\langle g_{i}, x_i-x_0 \right\rangle + \sum _{i=0}^{k-1}h(x_i) \nonumber \\&+ \frac{\beta _{ k }}{2} \Vert x-x_0\Vert ^2_{G_k} + \sum _{i=0}^{k-1}\left\langle g_{i}, x-x_i \right\rangle + kh(x) -\sum _{i=0}^{k-1}h(x_i). \end{aligned}$$(3.20)

Furthermore, we notice that (3.17) can be rewritten in the following form

$$\begin{aligned} V_{k+1} (-s_{k+1})+\sum _{i=0}^{k} [\langle g_i, x_i-x_0 \rangle + h(x_{i})] \le T + \frac{1}{2}\sum _{i=0}^{k}\frac{1}{\beta _i} \Vert g_i\Vert ^2 + \frac{1}{2}\sum _{i=1}^{k}\Vert x_{i+1}- x_0\Vert ^2_{\Sigma _h}. \end{aligned}$$(3.21)

By substituting (3.20) into (3.21), we can get

$$\begin{aligned} \frac{\beta _{ k}}{2}\Vert x_k-x\Vert ^2 \le T&+\frac{1}{2}\sum \limits _{i=0}^{k-1} \frac{1}{\beta _i} \Vert g_i\Vert ^2 + \frac{1}{2}\sum \limits _{i=1}^{k-1}\Vert x_{i+1}-x_0\Vert ^2_{\Sigma _h}\nonumber \\&+ \frac{\beta _{ k }}{2} \Vert x-x_0\Vert ^2_{G_k} + \left\{ \sum \limits _{i=0}^{k-1} {[f(x)+h(x)]-[f(x_i)+h(x_i)]}\right\} . \end{aligned}$$

Finally, we set \(x=x^*:=\mathop {\arg \min }_{x \in {\mathcal {F}}_D} f(x)+h(x)\). Then it holds that

$$\begin{aligned} \frac{\beta _{k }}{2}\Vert x_k-x^*\Vert ^2 \le T + \frac{1}{2}\sum _{i=0}^{k-1} \frac{1}{\beta _i} \Vert g_i\Vert ^2 +\frac{1}{2}\sum _{i=1}^{k-1}\Vert x_{i+1}-x_0\Vert ^2_{\Sigma _h} +\frac{\beta _{ k }}{2} \Vert x^*- x_0\Vert ^2_{G_k}. \end{aligned}$$

According to the conditions (2.5) and (3.12), we obtain the inequality (3.14).

3. Based on (3.8), we can obtain

$$\begin{aligned}&\delta _{k+1} = \sum _{i=0}^{k} [\langle g_i, x_i-x^* \rangle + h(x_i)]+ \max _{x \in {\mathcal {F}}_D } \{ \left\langle {s}_{k+1} , x^* - x \right\rangle - (k+1)h ( x ) \}\\&\quad = \sum _{i=0}^{k} [\langle g_i, x_i-x^* \rangle + h(x_i)-h ( x^* )]+ \max _{x \in {\mathcal {F}}_D } \{ \left\langle {s}_{k+1} , x^* - x \right\rangle + (k+1)h ( x^* ) \\&\qquad - (k+1)h ( x ) \}\\&\quad \ge \sum _{i=0}^{k}\{f(x_i)+h(x_i)-f(x^*)-h ( x^* )\} + \max _{x \in {\mathcal {F}}_D } \{ \left\langle {s}_{k+1} , x^* - x \right\rangle + (k+1)h ( x^* ) \\&\qquad - (k+1)h ( x ) \}\\&\quad \ge \max _{x \in B_r(x^*) } \{ \left\langle {s}_{k+1} , x^* - x \right\rangle + (k+1)h ( x^* ) - (k+1)h ( x ) \}. \end{aligned}$$

Let

$$\begin{aligned} {\bar{x}} =\arg \max _{x \in B_r(x^*) } \left\langle {s}_{k+1} , x^* - x \right\rangle . \end{aligned}$$

Then we have \(\Vert x^*-{\bar{x}}\Vert =r\) and

$$\begin{aligned} \left\langle {s}_{k+1} , x^* - {\bar{x}} \right\rangle = \Vert {s}_{k+1}\Vert \Vert x^* - {\bar{x}} \Vert = r\Vert {s}_{k+1}\Vert . \end{aligned}$$

Thus, it holds that

$$\begin{aligned} \delta _{k+1}&\ge \max _{x \in B_r(x^*) } \{ \left\langle {s}_{k+1} , x^* - x \right\rangle + (k+1)h ( x^* ) - (k+1)h ( x ) \}\\&\ge \left\langle {s}_{k+1} , x^* - {\bar{x}} \right\rangle + (k+1){h(x^*)-(k+1)h({\bar{x}})} \\&\ge r\Vert {s}_{k+1}\Vert + (k+1){ \left\langle l({\bar{x}}), x^*-{\bar{x}} \right\rangle } \\&\ge r\Vert {s}_{k+1}\Vert - (k+1){ \Vert l({\bar{x}})\Vert \Vert x^*-{\bar{x}}\Vert }\\&=r\Vert {s}_{k+1}\Vert - (k+1) r \Vert l({\bar{x}})\Vert , \end{aligned}$$

which implies

$$\begin{aligned} \frac{1}{k+1}\Vert {s}_{k+1}\Vert&\le \frac{1}{r(k+1)}\delta _{k+1}+ \Vert l({\bar{x}})\Vert \le \frac{1}{r(k+1)}\delta _{k+1}+ \Gamma _h. \end{aligned}$$

As a main result, we can now estimate the upper bound on the complexity of IPSB in the following.

Theorem 3.2

Assume there exists a constant \(L>0\) such that \(\Vert g_k\Vert \le L\), \(\forall k \ge 0\). Denote by \(\{x_i\}_{i=0}^{k}\) the sequence generated by Algorithm 1. Let \( F_D^*= \min _ { x \in {\mathcal {F}}_D } F(x)\). Then we have

Proof

By combining (2.5) with the inequalities (3.7) and (3.11), we have

which finishes the proof of the inequality (3.22). \(\square \)

Remark 3.2

According to Lemma 2.1, we know that the sequence \(\{{\tilde{\beta }}_{k}\}_{k=0}^{\infty }\) can be used for balancing the terms appearing in the right-hand side of inequality (3.11). It follows from Theorem 3.2 that IPSB converges to the region of the optimal value with rate \(O({1}/{\sqrt{k}} )\).

4 Numerical experiments

In this section, we perform numerical experiments to compare the algorithms IPSB, SDA and PSB on two kinds of test problems. All experiments were implemented in MATLAB 2018b and run on a laptop with a dual-core (\(1.6+1.8\) GHz) processor and 8 GB RAM.

4.1 Regularized least squares problem

In this subsection, we test the regularized least squares problem

where \(A \in \mathbb {R}^{n_1\times n_2}\), \(b\in \mathbb {R}^{n_1}\) and \(f_i(x),i\in [m]\) are all positive and strongly convex. Notice that (4.1) is a special case of (1.1) with \(f(x)=\Vert Ax-b\Vert _2\) and \(h(x)={\bar{\rho }} \max _{i\in [m]} {f_i(x)}\).
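Both terms of this problem admit simple subgradient oracles. The following Python sketch is illustrative only: it assumes quadratic pieces of the form \(f_i(x)=\Vert x-c_i\Vert _B^2\) with a symmetric positive semidefinite B (the form used in the first test), and the function names `subgrad_f` and `subgrad_h` are hypothetical.

```python
import numpy as np

def subgrad_f(A, b, x):
    """Return a subgradient of f(x) = ||Ax - b||_2."""
    r = A @ x - b
    nrm = np.linalg.norm(r)
    if nrm == 0.0:
        # At a zero residual, 0 belongs to the subdifferential A^T {u : ||u|| <= 1}.
        return np.zeros(A.shape[1])
    return A.T @ r / nrm

def subgrad_h(B, centers, x, rho=1.0):
    """Return a subgradient of h(x) = rho * max_i ||x - c_i||_B^2 (assumed quadratic pieces).

    The gradient of any piece attaining the maximum is a valid subgradient.
    B is assumed symmetric positive semidefinite.
    """
    vals = [(x - c) @ B @ (x - c) for c in centers]
    i = int(np.argmax(vals))
    return 2.0 * rho * (B @ (x - centers[i]))
```

Each oracle costs one or two matrix-vector products, so the per-iteration cost of a dual averaging-type method on this problem is dominated by how the subproblem itself is solved.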

In our first test, we set \(m=2\), \(f_1(x)=\Vert x\Vert _{B}^2\) and \(f_2(x)=\Vert x-c\Vert _{B}^2\), where \(B \in \mathbb {R}^{n_2\times n_2}\) and \(c \in \mathbb {R}^{n_2}\). We set \(B\ne 0\) to be positive semidefinite but singular so that the function h is SQCC. In fact, we have \(\Sigma _h=2B\). Applying Algorithm 1 to solve (4.1) reduces to

where \(g_k \in \partial f(x_k)\) and

The three different algorithms in comparison for solving (4.1) are explicitly reformulated as

where the expressions for \({\bar{\rho }}_1\), \({\bar{\rho }}_2\) and \({\bar{G}}\) are as follows

In addition, \(l_k \in \partial h(x_k)\) and

In our experiments, we choose \({\bar{\rho }} =1\) and \(n_1 \times n_2 \in \{ 400 \times 900, 800 \times 2000, 1500 \times 3000\}\). In Algorithm 1, we set \(\gamma =20\) and \({\hat{\lambda }}=10^{-3}\), and we terminate when either \(|F( {{\hat{x}}}^{k})-F({\hat{x}}^{k-1})| \le 10^{-3}\) or the number of iterations reaches 300. Starting from a fixed seed, we take \(x^* = (10, \ldots ,10)\in \mathbb {R}^{n_2}\), independently generate the entries of \(c \in \mathbb {R}^{n_2} \) from the normal distribution \({\mathcal {N}}(0,0.25)\), and then generate each element of A from \({\mathcal {N}}(0,20^2)\). We set \(b\in \mathbb {R}^{n_1}\) as follows

The matrix B is constructed by randomly generating eigenvalues and eigenvectors. The first ten eigenvalues of B are random positive numbers and the rest are zero. We construct the eigenvectors by randomly generating an orthogonal matrix with uniformly distributed random elements. When \(n_1=400\), \(n_2=900\), the MATLAB code to generate the above data is as follows. The other cases are similar.

where x_op corresponds to the variable \(x^*\).

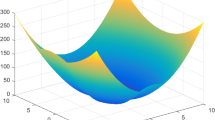

We plot \(\log (\log F({{{\hat{x}}}}_k))\) versus the iteration number k and the CPU runtime in Figs. 1 and 2, respectively.

As shown in Fig. 1, IPSB attains a better function value than SDA throughout the iteration process. Zooming in on the details of the figures shows that the value generated by IPSB is decreasing rather than constant.

PSB runs much slower than IPSB because of the heavy computation cost of matrix inversion. It is shown in Fig. 2 that IPSB is much more efficient than PSB.

4.2 Elastic net regression

The elastic net is a regularized regression model [27] by linearly combining LASSO and ridge regression. It is formulated as

where p is the number of samples, n is the number of features, \(y\in \mathbb {R}^p\) is the response vector, \(X \in \mathbb {R}^{p \times n}\) is the design matrix, and \(\eta _1, \eta _2 > 0\) are regularization parameters. It corresponds to setting \(f(\omega )=\eta _1\Vert \omega \Vert _1\) and \(h(\omega )=\Vert y-X\omega \Vert _2^2+\eta _2\Vert \omega \Vert ^2_2\) in (1.1). The iteration schemes of three different algorithms in comparison for solving (4.2) are reformulated as

where the initial point \({\omega _{0}}\) is given by \((X^TX+\eta _2 I)^{-1}X^Ty = \arg \min _{\omega \in \mathbb {R}^{n}} h(\omega )\), \(sgn(\cdot )\) is the sign function, and the sequence \(\{\beta _{ k }\}_{k\ge 0}\) takes the form (2.5). We list in Table 1 the computational complexity of each iteration. It shows that in each iteration PSB has the highest computational cost when n is much larger than k, and SDA has the highest cost when k is much larger than p and n.
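The initial point and the structure of the IPSB subproblem for the elastic net can be illustrated with a short Python sketch. It is a reading of the scheme under stated assumptions, not the authors' code: we take \(Q=\mathbb {R}^n\), \(\Sigma _h=2(X^TX+\eta _2 I)\) (the Hessian of h) and \(G_k=I-k\Sigma _h/\beta _k\). Since h is quadratic and \(\nabla h(\omega _0)=0\), the subproblem \(\min _\omega \langle s_k,\omega -\omega _0\rangle +kh(\omega )+\frac{\beta _k}{2}\Vert \omega -\omega _0\Vert ^2_{G_k}\) then reduces to \(\omega _k=\omega _0-s_k/\beta _k\). All function names are hypothetical.

```python
import numpy as np

def h_grad(X, y, eta2, w):
    """Gradient of h(w) = ||y - Xw||_2^2 + eta2 * ||w||_2^2."""
    return 2.0 * X.T @ (X @ w - y) + 2.0 * eta2 * w

def initial_point(X, y, eta2):
    """w_0 = (X^T X + eta2 I)^{-1} X^T y, the unconstrained minimizer of h."""
    n = X.shape[1]
    return np.linalg.solve(X.T @ X + eta2 * np.eye(n), X.T @ y)

def ipsb_step(w0, s, beta):
    """Closed-form subproblem solution under the assumptions stated above."""
    return w0 - s / beta

def subproblem_grad(X, y, eta2, w0, s, k, beta, w):
    """Gradient of <s, w - w0> + k*h(w) + (beta/2)*||w - w0||_G^2 with G = I - k*Sigma_h/beta."""
    n = X.shape[1]
    Sigma_h = 2.0 * (X.T @ X + eta2 * np.eye(n))
    G = np.eye(n) - (k / beta) * Sigma_h
    return s + k * h_grad(X, y, eta2, w) + beta * (G @ (w - w0))
```

Under these assumptions only the computation of \(\omega _0\) requires a linear solve; each later step is a vector update, which would be consistent with the lower per-iteration cost reported for IPSB.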

We set the termination criterion as

where \({\bar{f}}\) is an approximation of the optimal value obtained by running 500 iterations of SDA in advance, \({\bar{\omega }}= \sum _{i=1}^t \omega _i/n\) and t is the actual number of iterations until termination.

We conduct the experiments with the following synthetic data and real data, respectively.

\( \textit{Synthetic data:} \) Starting from a fixed seed, we independently and randomly generate \(X_{ij} \sim {\mathcal {N}}(0, 0.01)\), \(\omega ^*_j \sim {\mathcal {N}}(0,1)\), \(\epsilon _i \sim {\mathcal {N}}(0,0.04)\), and then set \(y_i=\sum _{j=1}^{n} X_{ij} \omega ^*_j + \epsilon _i\), \(i\in [p]\), \(j\in [n]\). We choose \(p\times n \in \{300 \times 1000, 500 \times 2000, 700 \times 3000, 1000 \times 5000\}\). The hyperparameters used for the synthetic data are set as

When \(p=300\), \(n=1000\), the MATLAB code to generate the data is as follows. The others are similar.

where x_op and ep correspond to the variables \(\omega ^*\) and \(\epsilon \) respectively.

MNIST data [15]: There are 70,000 samples from the images of 10 digits in the MNIST data set, each with a \(28 \times 28\) gray-scale pixel-map, for a total of 784 features. We take the digits 8 and 9. Thus we have \(p=13783\) and \(n=784\). Moreover, let \(y\in \{+1,-1\}^p\) be the binary label. The hyperparameters used for MNIST data are as follows

Tables 2 and 3 present the experimental results for synthetic data and MNIST data, respectively. In both tables, we report the number of iterations (Iter.), the running time in seconds and the accuracy (Accu.) defined as \(1-|f({\bar{\omega }})-{\bar{f}}|/{{\bar{f}}}\).
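For reference, the accuracy measure can be written as a one-line Python helper. This is only a sketch; the name `accuracy` is hypothetical, and it assumes the reference value \({\bar{f}}\) is positive, as is the case for a nontrivial elastic net objective.

```python
def accuracy(f_val, f_ref):
    """Accu. = 1 - |f_val - f_ref| / f_ref, where f_ref approximates the optimal value."""
    if f_ref <= 0:
        raise ValueError("f_ref must be positive for the relative measure to make sense")
    return 1.0 - abs(f_val - f_ref) / f_ref
```

A value close to 1 means that the objective at the averaged iterate is close to the reference value in relative terms.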

On the synthetic data, SDA takes the largest number of iterations among the three, IPSB runs in less CPU time than the other two algorithms, and PSB is the least efficient one. On the MNIST data, the three algorithms take almost the same number of iterations, and IPSB takes the least CPU time.

5 Conclusions

Nesterov’s dual averaging scheme avoids the decreasing stepsizes required by subgradient methods for nonsmooth convex minimization problems. It was then extended to problems with an additional regularization term, yielding the regularized dual averaging (RDA) scheme.

In this paper, we propose the dynamic regularized dual averaging scheme by relaxing the positive definite regularization term in RDA, which can not only reduce the impact of the initial point on the subproblems in later iterations but also make the new subproblem in each iteration easy to solve. Under this new scheme, we propose the indefinite proximal subgradient-based (IPSB) algorithm. We show that IPSB converges to a region of the optimal value at the rate \(O({1}/{\sqrt{k}})\), where k is the number of iterations. Numerical experiments on the regularized least squares problem and elastic net regression show that IPSB is more efficient than the existing algorithms SDA and PSB. Future work includes more real applications of IPSB and further improvement of IPSB by, for example, relaxing the condition on the initial point.

Data availability statements

The authors confirm that all data generated or analysed during this study are included in the paper.

References

Beck, A., Teboulle, M.: A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2(1), 183–202 (2009)

Bertsekas, D.P.: Nonlinear Programming. Taylor & Francis, Milton Park (1997)

Boyd, S., Xiao, L., Mutapcic, A.: Subgradient methods. Lecture Notes of EE392o, Stanford University, Autumn Quarter, 2004:2004–2005 (2003)

Burke, J.V., Curtis, F.E., Lewis, A.S., Overton, M.L., Simões, L.E.: Gradient sampling methods for nonsmooth optimization. In: Numerical Nonsmooth Optimization, pp. 201–225. Springer (2020)

Cai, X.-J., Guo, K., Jiang, F., Wang, K., Wu, Z.-M., Han, D.-R.: The developments of proximal point algorithms. J. Oper. Res. Soc. China 1–43 (2022)

Combettes, P.L., Pesquet, J.C.: Proximal splitting methods in signal processing. In: Fixed-Point Algorithms for Inverse Problems in Science and Engineering, pp. 185–212. Springer (2011)

Combettes, P.L., Wajs, V.R.: Signal recovery by proximal forward–backward splitting. Multiscale Model. Simul. 4(4), 1168–1200 (2005)

Duchi, J., Hazan, E., Singer, Y.: Adaptive subgradient methods for online learning and stochastic optimization. J. Mach. Learn. Res. 12(7), 2021–2059 (2011)

Eckstein, J., Bertsekas, D.P.: On the Douglas–Rachford splitting method and the proximal point algorithm for maximal monotone operators. Math. Program. 55(1), 293–318 (1992)

Geman, S., Geman, D.: Stochastic relaxation, Gibbs distributions, and the Bayesian restoration of images. IEEE Trans. Pattern Anal. Mach. Intell. 6(6), 721–741 (1984)

Grötschel, M., Lovász, L., Schrijver, A.: The ellipsoid method. In: Geometric Algorithms and Combinatorial Optimization, pp. 64–101. Springer (1993)

He, B., Ma, F., Yuan, X.: Optimal proximal augmented Lagrangian method and its application to full Jacobian splitting for multi-block separable convex minimization problems. IMA J. Numer. Anal. 40(2), 1188–1216 (2020)

Jiang, F., Cai, X., Han, D.: The indefinite proximal point algorithms for maximal monotone operators. Optimization 70(8), 1759–1790 (2021)

Jiang, F., Wu, Z., Cai, X.: Generalized ADMM with optimal indefinite proximal term for linearly constrained convex optimization. J. Ind. Manag. Optim. 16(2), 835–856 (2020)

LeCun, Y., Cortes, C., Burges, C.J.C.: The MNIST database of handwritten digits. http://yann.lecun.com/exdb/mnist/ (2017)

Li, M., Sun, D., Toh, K.C.: A majorized ADMM with indefinite proximal terms for linearly constrained convex composite optimization. SIAM J. Optim. 26(2), 922–950 (2016)

Mäkelä, M.: Survey of bundle methods for nonsmooth optimization. Optim. Methods Softw. 17(1), 1–29 (2002)

Martinet, B.: Regularization d’inequations variationelles par approximations successives. Revue Francaise d’Informatique et de Recherche Opérationelle 4, 154–159 (1970)

Nesterov, Y.: Smooth minimization of nonsmooth functions. Math. Program. 103(1), 127–152 (2005)

Nesterov, Y.: Primal-dual subgradient methods for convex problems. Math. Program. 120(1), 221–259 (2009)

Nesterov, Y.: Introductory Lectures on Convex Optimization: A Basic Course, vol. 87. Springer, Berlin (2013)

Ram, S.S., Nedić, A., Veeravalli, V.V.: Incremental stochastic subgradient algorithms for convex optimization. SIAM J. Optim. 20(2), 691–717 (2009)

Ram, S.S., Nedić, A., Veeravalli, V.V.: Distributed stochastic subgradient projection algorithms for convex optimization. J. Optim. Theory Appl. 147(3), 516–545 (2010)

Rockafellar, R.T.: Monotone operators and the proximal point algorithm. SIAM J. Control Optim. 14(5), 877–898 (1976)

Shor, N.Z.: Minimization Methods for Non-differentiable Functions. Springer Series in Computational Mathematics, Springer, Berlin (1985)

Xiao, L.: Dual averaging methods for regularized stochastic learning and online optimization. J. Mach. Learn. Res. 11(10), 2543–2596 (2010)

Zou, H., Hastie, T.: Regularization and variable selection via the elastic net. J. R. Stat. Soc. Ser. B 67, 301–320 (2005)

Acknowledgements

The authors thank the editor and the referees for the valuable comments/suggestions, which help us improve the paper greatly. The research of the second author was partially supported by NSFC with Nos. 12131004 and 12126603; and the research of the third author was partially supported by NSFC with No. 12171021 and by Beijing NSF with No. Z180005.


Liu, R., Han, D. & Xia, Y. An indefinite proximal subgradient-based algorithm for nonsmooth composite optimization. J Glob Optim 87, 533–550 (2023). https://doi.org/10.1007/s10898-022-01173-9
