Abstract

In this paper, by using the quaternion algebra, the conventional complex-type moments (CTMs) for gray-scale images are generalized to color images as quaternion-type moments (QTMs) in a holistic manner. We first provide a general formula of QTMs, from which we derive a set of quaternion-valued QTM invariants (QTMIs) to image rotation, scale and translation transformations by eliminating the influence of the transformation parameters. An efficient computation algorithm is also proposed to reduce the computational complexity. The performance of the proposed QTMs and QTMIs is evaluated in several application frameworks: color image reconstruction, face recognition and image registration. We show that they achieve better performance than CTMs and CTM invariants (CTMIs). We also discuss, with the help of experiments, the influence of the choice of the unit pure quaternion; \((i-j-k)/\sqrt{3}\) appears to be an optimal choice.

1 Introduction

Nowadays, with the development of inexpensive digital cameras, almost all acquired images are chromatic. At the same time, a color image has the potential to convey more information than a monochrome or a binary image. The red, green and blue (RGB) values associated with each pixel, as well as its brightness, hue, and saturation, can be successfully employed in many computer vision tasks such as object recognition, tracking, segmentation and registration [1]. The conventional approach to color images consists in processing each channel separately with gray-level techniques and then combining the individual outputs. As a consequence, this approach fails to capture the inherent correlation between color channels [1] and the entirety of the three channels [2]. The main issue is therefore to handle the three values of each pixel in a holistic manner.

In the past two decades, quaternions have been increasingly used in color image processing to represent color images by encoding the three channels into the imaginary parts of quaternion numbers [1, 3–16]. The main advantage of this quaternion representation is that a color image can be treated holistically as a vector field [1, 9]. The quaternion algebra has been exploited in digital color image processing by Sangwine [3] and Pei and Cheng [4]. Since then, many classical tools used for gray-scale images have been successfully extended to color image processing using the quaternion algebra. They include the Fourier transform [3, 9, 17, 18], neural networks [19, 20], principal component analysis [8, 14], the wavelet transform [21, 22], independent component analysis [23, 24], singular value decomposition [6, 7], the Fourier–Mellin transform [25], the polar harmonic transform [26], and moments [12, 13, 15]. Recently, the Clifford algebra (also known as geometric algebra), which is a generalization of the quaternion algebra, has been reported in the literature as a way to process higher-dimensional signals such as 3D color images. The Clifford Fourier transform [27–29], Clifford Fourier–Mellin moments [30], Clifford neural networks [31], Clifford support vector machines [32], Clifford wavelets [33] and geometric cross correlation [34] are examples of such tools. A detailed overview of the related works based on Clifford algebra can be found in [35]. In this paper, we focus on the use of the quaternion algebra to extend the conventional moments to color image processing.

Moments are scalar quantities used to characterize a function and to capture its significant features [36, 37]. They have been extensively considered for pattern recognition [15, 38], scene matching [12, 15, 39], object classification [15, 40], image registration [13], image reconstruction [41], watermarking [42], and so on, owing to their image description and invariance properties. More details about moments can be found in [36] and [37]. However, moments are mainly used to deal with binary or gray-scale images. For color images, most of the published works are based on the conventional approach mentioned before. Recently, the use of quaternion-based moment functions for color images has been investigated [12, 13, 15]. We introduced the notion of the quaternion Zernike moments (QZMs) [12] and derived a set of quaternion-valued QZM invariants (QZMIs) to image rotation, scale and translation (RST) transformations [15]. In parallel, Guo et al. proposed the quaternion Fourier–Mellin moments (QFMMs) and also derived a set of invariants with respect to RST transformations [13]. However, their rotation invariance was achieved by taking the modulus of the quaternion moments which leads to the loss of the phase information and only provides one real-valued invariant.

Compared with [15], our main motivation here is therefore (i) to extend the conventional complex-type moments (CTMs) to color images as quaternion-type moments (QTMs) in a holistic way and to derive a set of quaternion-type moment invariants (QTMIs) to RST transformations; (ii) to propose an efficient algorithm to compute QTMs in order to reduce their computational complexity; (iii) to carry out experiments considering more application frameworks, including color face recognition and color image registration, in order to demonstrate the efficiency of QTMs and QTMIs in terms of image representation capability and robustness to noise and blurring; and (iv) to consider a general unit pure quaternion and discuss its choice. Regarding the first point, the CTMs we extend in the sequel include the commonly-used rotational moments (ROTMs), radial moments (RADMs), Fourier–Mellin moments (FMMs), orthogonal Fourier–Mellin moments (OFMMs), Zernike moments (ZMs), and pseudo-Zernike moments (PZMs). They all have been applied to solve a number of computer vision problems with the advantage that their modulus is invariant to image rotation.

This paper is organized as follows. In Sect. 2, we first recall some basic features of quaternions and of the quaternion color representation, and then present the general formulas of CTMs. Section 3, the theoretical part of this paper, provides a general definition of QTMs, the derivation of the moment invariants with respect to RST transformations and an efficient implementation of QTMs. Experimental results evaluating the performance of the proposed methods are given in Sect. 4. An overall discussion follows in Sect. 5, and Sect. 6 concludes the paper.

2 Some Preliminaries

2.1 Quaternion Number and Quaternion Color Representation

Quaternions, introduced by the mathematician Hamilton [43] in 1843, are generalizations of complex numbers. A quaternion has one real part and three imaginary parts given by

\[ q=a+b{\varvec{i}}+c{\varvec{j}}+d{\varvec{k}} \tag{1} \]

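where \(a, b, c, d \in \mathbf{R}\), and \({\varvec{i}}\), \({\varvec{j}}\), \({\varvec{k}}\) are three imaginary units obeying the following rules

\[ {\varvec{i}}^{2}={\varvec{j}}^{2}={\varvec{k}}^{2}=-1,\quad {\varvec{i}}{\varvec{j}}=-{\varvec{j}}{\varvec{i}}={\varvec{k}},\quad {\varvec{j}}{\varvec{k}}=-{\varvec{k}}{\varvec{j}}={\varvec{i}},\quad {\varvec{k}}{\varvec{i}}=-{\varvec{i}}{\varvec{k}}={\varvec{j}} \tag{2} \]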

If the real part \(a = 0\), \(q\) is called a pure quaternion.

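The conjugate and modulus of a quaternion are respectively defined by

\[ q^{*}=a-b{\varvec{i}}-c{\varvec{j}}-d{\varvec{k}} \tag{3} \]

\[ \left\| q \right\| =\sqrt{a^{2}+b^{2}+c^{2}+d^{2}} \tag{4} \]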

Let \(f(x, y)\) be an RGB image function with the quaternion representation. Each pixel can be represented as a pure quaternion

\[ f(x,y)=f_{R}(x,y){\varvec{i}}+f_{G}(x,y){\varvec{j}}+f_{B}(x,y){\varvec{k}} \tag{5} \]

where \(f_{R}(x, y)\), \(f_{G}(x, y)\) and \(f_{B}(x, y)\) are respectively the red, green and blue components of the pixel.
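The quaternion operations recalled in this subsection can be illustrated with a minimal NumPy sketch. It is only an illustration: the array layout `[a, b, c, d]` and the helper names `qmul`, `qconj`, `qnorm` and `rgb_to_quaternion` are assumptions made here and are not part of the original work.

```python
import numpy as np

def qmul(p, q):
    """Hamilton product of two quaternions stored as [a, b, c, d]."""
    a1, b1, c1, d1 = p
    a2, b2, c2, d2 = q
    return np.array([
        a1 * a2 - b1 * b2 - c1 * c2 - d1 * d2,  # real part
        a1 * b2 + b1 * a2 + c1 * d2 - d1 * c2,  # i part
        a1 * c2 - b1 * d2 + c1 * a2 + d1 * b2,  # j part
        a1 * d2 + b1 * c2 - c1 * b2 + d1 * a2,  # k part
    ], dtype=float)

def qconj(q):
    """Conjugate: negate the three imaginary parts."""
    return np.array([q[0], -q[1], -q[2], -q[3]], dtype=float)

def qnorm(q):
    """Modulus: Euclidean norm of the four components."""
    return float(np.sqrt(np.sum(np.asarray(q, dtype=float) ** 2)))

def rgb_to_quaternion(rgb):
    """Map an (H, W, 3) RGB array to (H, W, 4) pure quaternions (real part 0)."""
    rgb = np.asarray(rgb, dtype=float)
    out = np.zeros(rgb.shape[:-1] + (4,))
    out[..., 1:] = rgb
    return out

I = np.array([0.0, 1.0, 0.0, 0.0])
J = np.array([0.0, 0.0, 1.0, 0.0])
K = np.array([0.0, 0.0, 0.0, 1.0])

# Non-commutativity: ij = k whereas ji = -k.
print(qmul(I, J), qmul(J, I))
```

The two printed products differ in sign, which is why right-side and left-side moments have to be distinguished later on.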

2.2 Complex-Type Moments

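The general formula of the conventional CTM of order \(n\) with repetition \(m\) of a gray-scale image \(g(r, \theta )\) is defined as

\[ \varPhi _{n,m} (g)=\mathop {\int \int }\limits _{\Omega } {\phi _{n,m} (r)g(r,\theta )e^{-jm\theta }rdrd\theta } \tag{6} \]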

where \(\phi _{n,m} (r)\) is the real-valued radial polynomial of the different CTMs shown in Table 1, and \(\Omega \) is the image definition domain: it corresponds to \([0, \infty )\times [0, 2\uppi ]\) for the three non-orthogonal moments (i.e. ROTMs, RADMs, and FMMs), and to the unit disk \([0, 1]\times [0, 2\uppi ]\) for the orthogonal ones (i.e. OFMMs, ZMs, and PZMs).

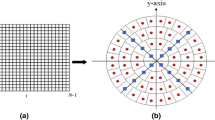

For a digital image of size \(N \times N\), (6) can be written in the discrete form as

where \((r_{x,y}, \theta _{x,y})\) is the image pixel coordinate representation in polar form corresponding to the Cartesian coordinate \((x, y)\). The mapping transformation to the interior of the unit circle is given by [44]

with \(c_1 ={\sqrt{2}}/{(N-1)},c_2 =-1/{\sqrt{2}}\).
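As a hedged illustration of this mapping, the sketch below assumes that it takes the common form \(r_{x,y}=\sqrt{(c_1 x+c_2)^{2}+(c_1 y+c_2)^{2}}\), \(\theta _{x,y}=\arctan \left( (c_1 y+c_2)/(c_1 x+c_2)\right) \) with the constants given above. The function name `polar_grid` and the use of `arctan2` wrapped to \([0, 2\uppi ]\) are choices made here, not details taken from [44].

```python
import numpy as np

def polar_grid(N):
    """Polar coordinates of an N x N pixel grid mapped inside the unit circle.

    Assumed mapping: u = c1 * x + c2, v = c1 * y + c2, with
    c1 = sqrt(2) / (N - 1) and c2 = -1 / sqrt(2).
    """
    c1 = np.sqrt(2.0) / (N - 1)
    c2 = -1.0 / np.sqrt(2.0)
    coords = c1 * np.arange(N) + c2          # values in [-1/sqrt(2), 1/sqrt(2)]
    u, v = np.meshgrid(coords, coords, indexing="ij")
    r = np.hypot(u, v)                       # radius, at most 1 (reached at corners)
    theta = np.mod(np.arctan2(v, u), 2.0 * np.pi)
    return r, theta

r, theta = polar_grid(8)
print(r.max())
```

With these constants the four image corners land on the unit circle, so the whole image lies inside the disk.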

3 Quaternion-Type Moments and Their RST Invariants

In this section, we first define the general formula of QTMs for color images and then construct a set of quaternion-valued RST invariants that preserve the phase information, which is important in some practical applications. Finally, we propose an efficient algorithm for computing QTMs.

3.1 Quaternion-Type Moments

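According to the general definition of gray-scale image CTMs shown in (6), and to quaternion algebra, the general formula of the right-side QTM of a color image \(f(r, \theta )\) of order \(n\) with repetition \(m\) is introduced as

\[ \varPhi _{n,m}^R (f)=\mathop {\int \int }\limits _{\Omega } {\phi _{n,m} (r)f(r,\theta )e^{-\mu m\theta }rdrd\theta } \tag{9} \]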

where \(\phi _{n,m} (r)\) is the real-valued radial polynomial of QTMs shown in Table 1, \(\Omega \) is the image definition domain (like for CTMs), \(\varvec{\mu }\) is a unit pure quaternion. Basically, \(\varvec{\mu }\) can be defined as a linear combination of i, j, and k such as: \(\varvec{\mu } = \alpha {{\varvec{i}}} + \beta {{\varvec{j}}} + \gamma {{\varvec{k}}}, \alpha , \beta , \gamma \in \mathbf{R},{\vert }{\vert }\varvec{\mu }{\vert }{\vert } = 1\).

Because the three non-orthogonal quaternion-type moments differ only in the exponent \(k\) of \(\phi _{n,m} (r)=r^{k}\) (the quaternion rotational moment of order \(n\) with repetition \(m\) is equivalent to the quaternion radial moment of order \((n+1)\) with repetition \(m\), and to the quaternion Fourier–Mellin moment of order \((n+2)\) with repetition \(m\)), we only consider in the following the quaternion rotational moments and the three quaternion orthogonal moments. These four types of QTMs are abbreviated hereafter as QROTMs, QOFMMs, QZMs and QPZMs, respectively.

If the radial polynomials are orthogonal, the image \(f(r, \theta )\) can be reconstructed through the following inverse transform:

However, in practice, one has to truncate the infinite series in (10) at a finite number \(M\) [45]. The truncated expansion approximates \(f(r, \theta )\) as

This approximation mainly induces three kinds of errors [46]: the truncation error because a finite number of moments are used; the geometric error due to the fact that moment computation is not conducted on the complete unit disk; the numerical error that mostly results from the approximation of continuous moments in (9) by their discrete forms considering a discrete digital image \(f(x, y)\) of \(N \times N\) pixels, i.e. approximating the double integration by a double summation as

where \((r_{x,y}, \theta _{x,y})\) is defined in (8).

It can be seen from (2) that the multiplication of quaternions is not commutative. By shifting the exponential part \(e^{-\mu m\theta }\) in (9) to the left side of \(f(r, \theta )\), we can also define the left-side QTM of order \(n\) with repetition \(m\) as

and the corresponding inverse transform

The right-side QTMs are in general not equal to the left-side ones. However, using some properties of quaternions, the relationship between them can be deduced as follows

3.2 Translation Invariants

The common centroid \((x_{c}, y_{c})\) of all three channels introduced by Suk and Flusser in [47] is defined as

where \(m_{0,0}(f_{R})\), \(m_{1,0}(f_{R})\) and \(m_{0,1}(f_{R})\) are respectively the zero-order and first-order geometric moments for the \(R\) channel, \(m_{0,0}\left( f_{G}\right) \), \(m_{1,0}\left( f_{G}\right) \) and \(m_{0,1}\left( f_{G}\right) \) for the \(G\) channel, and \(m_{0,0}(f_{B})\), \(m_{1,0}(f_{B})\) and \(m_{0,1}(f_{B})\) for the \(B\) channel. With the origin of the coordinate system located at \((x_{c}, y_{c})\), the central QTMs (translation invariants) can then be obtained as follows

where \((\bar{{r}},\bar{{\theta }})\) is the image pixel coordinate representation in polar form with the mapping transformation (8) after locating the origin at \((x_{c}, y_{c})\).
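A minimal sketch of the common centroid is given below. It assumes the usual form in which the first-order geometric moments of the three channels are summed and divided by the summed zero-order moments, i.e. \(x_c = (m_{1,0}(f_R)+m_{1,0}(f_G)+m_{1,0}(f_B))/m_{0,0}\) with \(m_{0,0}=m_{0,0}(f_R)+m_{0,0}(f_G)+m_{0,0}(f_B)\), and similarly for \(y_c\). The function name `common_centroid` and the convention that \(x\) indexes rows are assumptions made for illustration.

```python
import numpy as np

def common_centroid(rgb):
    """Common centroid (x_c, y_c) of an (H, W, 3) color image.

    Assumed definition: channel-summed first-order geometric moments divided
    by the channel-summed zero-order moment. x indexes rows, y indexes columns.
    """
    rgb = np.asarray(rgb, dtype=float)
    height, width, _ = rgb.shape
    x = np.arange(height)[:, None]
    y = np.arange(width)[None, :]
    total = rgb.sum(axis=2)          # f_R + f_G + f_B at each pixel
    m00 = total.sum()
    if m00 == 0:
        raise ValueError("image is entirely zero; centroid is undefined")
    x_c = (x * total).sum() / m00
    y_c = (y * total).sum() / m00
    return x_c, y_c

image = np.zeros((5, 7, 3))
image[2, 3] = [1.0, 2.0, 0.0]        # a single non-zero pixel
print(common_centroid(image))
```

Shifting the pixel grid by \((x_c, y_c)\) before applying the polar mapping then gives the coordinates \((\bar{r}, \bar{\theta })\) used by the central moments.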

3.3 Rotation Invariants

Let \({f}'\) be the rotated version of \(f\), i.e., \({f}'(r,\theta )=f(r,\theta -\alpha )\), where \(\alpha \) denotes the rotation angle, then we have

Equation (18) shows that the modulus of \(\varPhi _{n,m}^R \) is invariant to rotation, which is the conventional magnitude-based method adopted in [12, 13] and [30] for achieving the rotation invariance. However, such a process loses the phase information which may be useful in some applications [48, 49]. Moreover, \(\left\| {\varPhi _{n,m}^R } \right\| \) provides only one real-valued invariant. To overcome these shortcomings, we provide here a new way to construct a set of quaternion-valued rotation invariants.

For the left-side QTMs, proceeding as in (18) gives

Theorem 1

Let

then \(\xi _{n,k}^m (f)\) is invariant to image rotation.

Proof

Let \({f}'\) be the rotated image of \(f\) with rotation angle \(\alpha \), using (18) and (19), we have

The proof has been completed. \(\square \)

The rotation invariants constructed by taking the modulus of \(\varPhi _{n,m}^R \) (i.e., \(k=n\), for which \(\xi _{n,n}^m (f)=-\left\| {\varPhi _{n,m}^R (f)} \right\| ^{2}\)) are just a special case of Theorem 1. Note that each invariant \(\xi _{n,k}^m (f)\) is a quaternion number, which provides four real-valued invariants (one real part and three imaginary parts), except for \(\xi _{n,n}^m (f)\), which is real.
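The cancellation behind Theorem 1 can be checked numerically. The sketch below rests on two assumptions: that a rotation by \(\alpha \) multiplies a right-side moment on the right by \(e^{-\mu m\alpha }\), which follows from substituting \(\theta \rightarrow \theta +\alpha \) in (9), and that the invariant has the form \(-\varPhi _{n,m}^R (\varPhi _{k,m}^R )^{*}\), which agrees with the special case \(k=n\) quoted above. Random quaternions stand in for actual moments, and all names are hypothetical.

```python
import numpy as np

def qmul(p, q):
    """Hamilton product of two quaternions stored as [a, b, c, d]."""
    a1, b1, c1, d1 = p
    a2, b2, c2, d2 = q
    return np.array([
        a1 * a2 - b1 * b2 - c1 * c2 - d1 * d2,
        a1 * b2 + b1 * a2 + c1 * d2 - d1 * c2,
        a1 * c2 - b1 * d2 + c1 * a2 + d1 * b2,
        a1 * d2 + b1 * c2 - c1 * b2 + d1 * a2,
    ], dtype=float)

def qconj(q):
    return np.array([q[0], -q[1], -q[2], -q[3]], dtype=float)

def qexp(mu, angle):
    """exp(mu * angle) = cos(angle) + mu * sin(angle) for a unit pure quaternion mu."""
    return np.cos(angle) * np.array([1.0, 0.0, 0.0, 0.0]) + np.sin(angle) * mu

rng = np.random.default_rng(0)
mu = np.array([0.0, 1.0, -1.0, -1.0]) / np.sqrt(3.0)   # (i - j - k) / sqrt(3)
phi_n = rng.normal(size=4)    # stand-in for the moment of order n, repetition m
phi_k = rng.normal(size=4)    # stand-in for the moment of order k, repetition m
m, alpha = 3, 0.7

rotation = qexp(mu, -m * alpha)            # assumed effect of rotating the image
phi_n_rot = qmul(phi_n, rotation)
phi_k_rot = qmul(phi_k, rotation)

xi = -qmul(phi_n, qconj(phi_k))
xi_rot = -qmul(phi_n_rot, qconj(phi_k_rot))
print(np.allclose(xi, xi_rot))
```

The rotation factor and its conjugate meet in the middle of the product and cancel because the factor has unit modulus, so all four components of the product are preserved, not only its modulus.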

3.4 Scaling Invariants and Combined RST Invariants

It is easy to rewrite (9) as

where \(\psi _{p,m}^R (f)\) is the QROTMs of order \(p\) with repetition \(m\) defined as \(\psi _{p,m}^R (f)=\mathop {\int \int }\limits _{\Omega } {r^{p}f(r,\theta )e^{-\mu m\theta }rdrd\theta }\), and the coefficients \(c_{l,k}^m\) are given by

From (22), \(\psi _{n,m}^R (f)\) can also be expressed as a series of QTMs

where \(D_l^m =(d_{i,j}^m)\), with \(0 \le j \le i \le l\), is the inverse matrix of \(C_l^m =(c_{i,j}^m)\). Both \(C_l^m\) and \(D_l^m \) are lower triangular matrices of size \((l+1) \times (l+1)\) and the elements of \(C_l^m\) are defined by (23). The elements of \(D_l^m \) are given by [50]

Let \({f}''\) and \(f\) be two images having the same content but differing by a scale factor \(\lambda \), that is, \({f}''(r,\theta )=f(r/\lambda ,\theta )\). Using (22) and (26), the QTMs of the transformed image can be obtained as

where \(l\) is defined in (24), \(p\) in (25), and

Theorem 2

Let

with \(\varGamma _f =\sqrt{\left\| {\varPhi _{0,0}^R (f)} \right\| }\). Then, \(L_{n,m}^R (f)\) is invariant to scaling.

The proof is given in Appendix A.
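The scaling behavior that this normalization relies on can be checked on the radial part alone. The change of variable \(r=\lambda r'\) in the definition of \(\psi _{p,m}^R \) over \([0,\infty )\) gives \(\psi _{p,m}^R ({f}'')=\lambda ^{p+2}\psi _{p,m}^R (f)\), so the zero-order moment scales by \(\lambda ^{2}\) and \(\varGamma _f \) by \(\lambda \). The sketch below verifies this numerically for a hypothetical Gaussian radial profile; it does not reproduce the full construction of Theorem 2, and the names and integration settings are assumptions.

```python
import numpy as np

def radial_moment(profile, p, r_max=20.0, n=200_000):
    """Midpoint-rule approximation of the integral of r**p * profile(r) * r over [0, r_max]."""
    dr = r_max / n
    r = (np.arange(n) + 0.5) * dr
    return float(np.sum(r ** p * profile(r) * r) * dr)

def profile(r):
    """Hypothetical radial profile that decays fast enough to truncate at r_max."""
    return np.exp(-r ** 2)

lam = 1.7

def scaled_profile(r):
    """Same content scaled by lam: f''(r) = f(r / lam)."""
    return profile(r / lam)

# Ratio of scaled to original moments; expected to be close to lam ** (p + 2).
ratios = [radial_moment(scaled_profile, p) / radial_moment(profile, p)
          for p in range(4)]

def normalized(prof, p):
    """Moment divided by Gamma ** (p + 2), with Gamma = sqrt(zero-order moment)."""
    gamma = np.sqrt(radial_moment(prof, 0))
    return radial_moment(prof, p) / gamma ** (p + 2)

for p, ratio in enumerate(ratios):
    print(p, ratio, lam ** (p + 2))
```

Dividing by \(\varGamma ^{p+2}\) therefore removes the factor \(\lambda ^{p+2}\), which `normalized` confirms for both profiles.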

Corollary 1

Let \({f}'\) be the rotated version of \(f\) with rotation angle \(\alpha \). It holds for any non-negative integers \(n\) and \(m\) that

The proof of Corollary 1 is very similar to (18) and it is thus omitted.

Based on Corollary 1, we have

Corollary 2

Let

then \(\varphi _{n,k}^m (f)\) is invariant to both image rotation and scaling.

The proof of Corollary 2 is very similar to that of Theorem 1 and it is also omitted.

Combining (17) with (32), the RST invariants of QTMs (QTMIs) can be obtained as follows.

Corollary 3

Let

where \(\bar{{L}}_{n,m}^R (f)\) is the scaling invariant defined in (30) using the central QTMs \(\bar{{\varPhi }}_{q,m}^R (f)\) instead of \(\varPhi _{q,m}^R (f)\). Then \(\bar{{\varphi }}_{n,k}^m (f)\) is invariant to image RST transformations for any non-negative integers \(n\), \(k\), and integer \(m\).

The four types of QTMIs based on (33) are abbreviated as QROTMIs, QOFMMIs, QZMIs and QPZMIs. It is worth mentioning that each invariant \(\bar{{\varphi }}_{n,k}^m (f)\) includes four invariants (one real part and three imaginary parts) except \(\bar{{\varphi }}_{n,n}^m (f)\), which has only one. Indeed, if \(n =k\) then \(\bar{{\varphi }}_{n,k}^m (f)=\left\| {\bar{{L}}_{n,m}^R (f)} \right\| ^{2}\) is a real number. Moreover, it can be shown that the set of QTMIs based on orthogonal moments, such as QOFMMIs, QZMIs and QPZMIs, is a complete set. The proof is similar to that given in [51] for the conventional OFMM invariants and is thus omitted here.

The QTMIs defined by (33) are evaluated and compared in Sect. 4 in various application frameworks.

3.5 Efficient Implementation of QTMs

It is well known that the computational load of quaternion moments is high and that efficient computation is a major concern. This issue was initially addressed for QZMs in our previous paper [15], but without an analysis of the computational complexity. The same approach is adopted and summarized here. It makes use of the relationships between QTMs and CTMs, which allow us to exploit fast algorithms previously developed for computing CTMs [52–54]. Moreover, since \(1/(N-1)^{2}\), \(\phi _{n,m} (r_{x,y} )\) and \(e^{-\mu m\theta _{x,y} }\) are independent of the image content, their product can be precomputed and stored; its cost is therefore not counted in the following analysis.

Let us analyze the computational complexity of the direct computation of QTMs defined in (12). Since \(f(r, \theta )\) is a pure quaternion number and the product of \(1/(N-1)^{2}\), \(\phi _{n,m} (r_{x,y})\) and \(e^{-\mu m\theta _{x,y}}\) is a quaternion number, their multiplication requires 12 real number multiplications and 8 additions. Thus, the total amount of real number multiplications and additions involved is respectively \((12N^{2}+1)\) and \((9N^{2}-1)\) for the direct algorithm.

A faster solution we propose makes use of the relationships between QTMs and CTMs, which can be derived in a similar way as for QZMs [15]. Due to space limitation, the detailed derivation is omitted. For \(\varvec{\mu } = \alpha {{\varvec{i}}} + \beta {{\varvec{j}}} + \gamma {{\varvec{k}}}\), we have

where

Here \(\varPhi _{n,m} (f_R )\), \(\varPhi _{n,m} (f_G )\) and \(\varPhi _{n,m} (f_B )\) are respectively the discrete forms of the CTMs defined in (7) for the red, green and blue channels, Re(\(x\)) represents the real part of a conventional complex number \(x\), and Im(\(x\)) its imaginary part, that is, \(\hbox {Re}(a+b{{\varvec{i}}}) = a\), \(\hbox {Im}(a+b{{\varvec{i}}}) = b\).

As for the direct algorithm, without considering the computation of \(1/(N-1)^{2}\), \(\phi _{n,m} (r_{x,y} )\) and \(e^{-jm\theta _{x,y} }\), the CTM defined in (7) needs \(2N^{2}\) real number multiplications and \((N^{2}-1)\) additions. It can be seen from (34) and (35) that the computation of a QTM requires the computation of three CTMs plus 9 real number multiplications and 8 additions. Therefore, the computational complexity of the proposed efficient algorithm is \((6N^{2}+9)\) real number multiplications and \((3N^{2}+5)\) additions, i.e. about half the multiplications and one third of the additions of the direct algorithm.
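The relation between a QTM and the three channel CTMs can be re-derived by expanding \(e^{-\mu m\theta }=\cos m\theta -\mu \sin m\theta \) in the discrete sum. The sketch below does this and compares the result with a direct quaternion summation. It is an illustration under stated assumptions: the weights `w` stand for the precomputed product of the normalization factor and \(\phi _{n,m} (r_{x,y})\), the pixel data are random, and the function names are hypothetical. The derived combination may differ in presentation from (34) and (35), although it also uses 9 multiplications and 8 additions.

```python
import numpy as np

def qmul(p, q):
    """Hamilton product of two quaternions stored as [a, b, c, d]."""
    a1, b1, c1, d1 = p
    a2, b2, c2, d2 = q
    return np.array([
        a1 * a2 - b1 * b2 - c1 * c2 - d1 * d2,
        a1 * b2 + b1 * a2 + c1 * d2 - d1 * c2,
        a1 * c2 - b1 * d2 + c1 * a2 + d1 * b2,
        a1 * d2 + b1 * c2 - c1 * b2 + d1 * a2,
    ], dtype=float)

def qtm_direct(w, theta, m, rgb, mu):
    """Right-side QTM as a plain sum of w * f * exp(-mu * m * theta) over pixels."""
    total = np.zeros(4)
    for wi, ti, (red, green, blue) in zip(w, theta, rgb):
        f = np.array([0.0, red, green, blue])            # pure quaternion pixel
        e = np.array([np.cos(m * ti), 0.0, 0.0, 0.0]) - np.sin(m * ti) * mu
        total += wi * qmul(f, e)
    return total

def ctm(w, theta, m, channel):
    """Complex-type moment of one channel: sum of w * g * exp(-1j * m * theta)."""
    return np.sum(w * channel * np.exp(-1j * m * theta))

def qtm_from_ctms(w, theta, m, rgb, mu):
    """Combine three CTMs into the QTM: 9 multiplications and 8 additions."""
    c_r, c_g, c_b = (ctm(w, theta, m, rgb[:, c]) for c in range(3))
    _, al, be, ga = mu
    a, b, c = c_r.imag, c_g.imag, c_b.imag
    return np.array([
        -(al * a + be * b + ga * c),
        c_r.real + (b * ga - c * be),
        c_g.real + (c * al - a * ga),
        c_b.real + (a * be - b * al),
    ])

rng = np.random.default_rng(1)
pixels = 50
w = rng.random(pixels)
theta = rng.uniform(0.0, 2.0 * np.pi, pixels)
rgb = rng.random((pixels, 3))
mu = np.array([0.0, 1.0, -1.0, -1.0]) / np.sqrt(3.0)

print(np.allclose(qtm_direct(w, theta, 2, rgb, mu),
                  qtm_from_ctms(w, theta, 2, rgb, mu)))
```

Because the per-pixel work is confined to the three CTMs, any fast CTM algorithm can be substituted for `ctm` without changing the final combination step.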

Note that the computation of QTMs via CTMs not only reduces the number of arithmetic operations by half, but also allows us to further improve the computational efficiency by using the fast algorithms previously developed for CTMs. Among many efficient algorithms developed for CTMs, one can adopt for example: the recursive algorithm with improved numerical stability proposed in [52] for orthogonal Fourier–Mellin moments (OFMMs), the recursive algorithm of [53] for Zernike moments (ZMs) based on an improved polar tiling scheme or the \(q\)-recursive algorithm of [54] for pseudo-Zernike moments (PZMs).

In order to demonstrate the gain of our approach in terms of complexity, let us consider another solution that can be adopted and which consists in using the decomposition method proposed by Ell and Sangwine in [55] for 2D hypercomplex Fourier transforms. To exploit their algorithm for computing QTMs, we first need to represent the quaternion-valued image function \(f(x, y)\) shown in (5) in a symplectic form by

where \(\tilde{\varvec{\mu }}\) is a unit pure quaternion satisfying \(\tilde{\varvec{\mu }}\bot {\varvec{\mu }}\), and \(c_{1}(x, y)\) and \(c_{2}(x, y)\) are respectively called the simplex and perplex parts of \(f(x, y)\), with \(c_{1}(x, y)\parallel c_{2}(x, y)\parallel {\varvec{\mu }}\). They can be obtained as follows [55]

Then, substituting (36) into (12), we have

where

Equation (38) means that a quaternion-type moment can be decomposed into a pair of moments \(C_{1}(f)\) and \(C_{2}(f)\) that are isomorphic to the CTMs. For example, if \(\mu \) is chosen as the luminance axis, i.e. \(\mu =({\varvec{i}}+{\varvec{j}}+{\varvec{k}})/\sqrt{3}\), then \(C_{1}(f)\) and \(C_{2}(f)\) are associated with the luminance. Equation (38) also provides an algorithm for computing QTMs through CTMs: the calculation of \(C_{1}(f)\) or of \(C_{2}(f)\) needs \(4N^{2}+1\) real number multiplications and \(3N^{2}-1\) additions. Thus, the computational complexity of this decomposition-based algorithm is \(8N^{2}+2\) real number multiplications and \(6N^{2}-2\) additions, to which should be added the cost of converting \(f\) into its symplectic form \(c_{1}\) and \(c_{2}\) as shown in (36). In any case, the resulting complexity of this solution is still greater than that of our proposal.
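The operation counts quoted in this subsection can be tabulated for a given image size. The following sketch simply evaluates the three pairs of formulas stated in the text; the function name `op_counts` and the dictionary keys are hypothetical, and the symplectic figures exclude the cost of the change of representation, as in the text.

```python
def op_counts(N):
    """(multiplications, additions) per moment for an N x N image, as stated in the text."""
    n2 = N * N
    return {
        "direct": (12 * n2 + 1, 9 * n2 - 1),
        "via_ctms": (6 * n2 + 9, 3 * n2 + 5),
        "symplectic": (8 * n2 + 2, 6 * n2 - 2),   # excludes the symplectic conversion
    }

counts = op_counts(128)
for name, (mults, adds) in counts.items():
    print(f"{name:>10}: {mults} multiplications, {adds} additions")
```

For \(N=128\), the CTM-based route needs 98313 multiplications and 49157 additions, against 196609 and 147455 for the direct algorithm and 131074 and 98302 for the symplectic decomposition.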

4 Experimental Results

In this section, in order to show the performance of the approach proposed above, three distinct application frameworks are considered. The first one dealing with image reconstruction is briefly presented for orthogonal QTMs. We then test QTMIs’ invariance to rotation and scaling and their robustness to noise before evaluating their performance within the two other applications, a color face recognition problem and a color image registration issue, aiming at highlighting that QTMIs are effective invariant features due to their robustness and RST invariant properties.

Because an infinite number of unit pure quaternions \(\varvec{\mu } (\varvec{\mu } = \alpha {{\varvec{i}}}+ \beta {{\varvec{j}}}+ \gamma {{\varvec{k}}}, \alpha , \beta , \gamma \in \mathbf{R}, {\vert }{\vert }\varvec{\mu }{\vert }{\vert } = 1)\) can be used to build QTMs, the following experiments consider 94 randomly generated values \(\varvec{\mu }_{t}\), \(t = 1, 2, {\ldots }, 94\) (with \( \alpha , \beta , \gamma \) uniformly distributed in [0, 1]), as well as 6 common values from the literature: \(\varvec{\mu }_{95} = {{\varvec{i}}}\) [56], \(\varvec{\mu }_{96} = {{\varvec{j}}}\) [57], \(\varvec{\mu }_{97} = ({\varvec{i}}+{\varvec{k}})/\sqrt{2}\) [13], \(\varvec{\mu }_{98} = (-2{\varvec{j}}+8{\varvec{k}})/\sqrt{68}\) [58], \(\varvec{\mu }_{99 }=({\varvec{i}}+{\varvec{j}}+{\varvec{k}})/\sqrt{3}\) [1, 6, 8–12, 15, 16] and \(\varvec{\mu }_{100} = ({\varvec{i}}-{\varvec{j}}-{\varvec{k}})/\sqrt{3}\) [59].
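A test set of this kind could be generated as sketched below. The text only states that \(\alpha \), \(\beta \), \(\gamma \) are drawn uniformly in [0, 1]; normalizing each draw to unit modulus afterwards is an assumption made here to satisfy \({\vert }{\vert }\varvec{\mu }{\vert }{\vert } = 1\), and the seed and function name are arbitrary.

```python
import numpy as np

def random_unit_pure_quaternions(count, seed=0):
    """Rows (alpha, beta, gamma) drawn uniformly in [0, 1], then scaled to unit norm."""
    rng = np.random.default_rng(seed)
    abc = rng.uniform(0.0, 1.0, size=(count, 3))
    abc /= np.linalg.norm(abc, axis=1, keepdims=True)   # assumed normalization step
    return abc

# The six values taken from the literature, before normalization.
literature = np.array([
    [1.0, 0.0, 0.0],     # mu_95  = i
    [0.0, 1.0, 0.0],     # mu_96  = j
    [1.0, 0.0, 1.0],     # mu_97  = (i + k) / sqrt(2)
    [0.0, -2.0, 8.0],    # mu_98  = (-2j + 8k) / sqrt(68)
    [1.0, 1.0, 1.0],     # mu_99  = (i + j + k) / sqrt(3)
    [1.0, -1.0, -1.0],   # mu_100 = (i - j - k) / sqrt(3)
])
literature /= np.linalg.norm(literature, axis=1, keepdims=True)

mu_set = np.vstack([random_unit_pure_quaternions(94), literature])
print(mu_set.shape)
```

Note that uniform draws in [0, 1] only cover the positive octant of the unit sphere, so values with negative components such as \(\varvec{\mu }_{98}\) and \(\varvec{\mu }_{100}\) enter the set only through the literature entries.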

4.1 Color Image Reconstruction

The well-known color Lena image of size 128 \(\times \) 128 shown in Fig. 1 has been used. It was reconstructed using (11). Notice that the reconstructed result is independent of the choice of the unit pure quaternion \(\varvec{\mu }\), since all the terms including \(\varvec{\mu }\) in (11) are eliminated by the corresponding conjugate terms in the forward transform (9). This was also verified experimentally by Li for the quaternion polar harmonic transform with 100 randomly generated \(\varvec{\mu }\) [26]. The results for different orthogonal QTMs and various maximum orders \(M\) are shown in Fig. 2. It can be seen qualitatively that the reconstructed images are close to the original image for a maximum order \(M\) equal to or greater than 100.

Let \(f(x, y)\) be the original image and \(\hat{{f}}(x,y)\) the reconstructed image with the quaternion color representation. The following normalized mean square error (NMSE) \(\varepsilon ^{2}\) is used to measure the difference between \(f(x, y)\) and \(\hat{{f}}(x,y)\) [45]
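As a minimal sketch, assuming the NMSE takes the usual form of the summed squared modulus of the difference divided by the summed squared modulus of the original image, the error can be computed directly on the RGB arrays, since the squared modulus of a pure quaternion is the sum of its squared channel values. The function name `nmse` is hypothetical.

```python
import numpy as np

def nmse(original, reconstructed):
    """Assumed NMSE: sum ||f - f_hat||^2 / sum ||f||^2 over all pixels.

    Both inputs are (H, W, 3) arrays; for pure quaternion pixels the squared
    modulus is the sum of the squared R, G and B values.
    """
    f = np.asarray(original, dtype=float)
    f_hat = np.asarray(reconstructed, dtype=float)
    return float(np.sum((f - f_hat) ** 2) / np.sum(f ** 2))

rng = np.random.default_rng(0)
image = rng.uniform(0.0, 255.0, size=(16, 16, 3))
print(nmse(image, 0.9 * image))
```

A reconstruction that is uniformly 10 % too dark therefore gives an NMSE of about 0.01, and a perfect reconstruction gives 0.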

The values of NMSE obtained with different orthogonal QTMs and various maximum orders \(M\) are provided in Fig. 3. These results confirm the comment made on Fig. 2: (1) all the values of NMSE are small; they first decrease, reach a minimum and then increase for all three types of QTMs. Such behavior has also been observed by Liao and Pawlak in [45] for the conventional Zernike moments. It is mainly explained by the errors described in Sect. 3.1: the truncation error decreases as \(M\) increases, while both the numerical and the geometric errors increase with the number of moments used for reconstruction; (2) when \(M\) is smaller than 50, the NMSE for QOFMMs is smaller than for QPZMs and QZMs. However, the opposite is observed for higher values of \(M\).

4.2 Test of Invariance to Rotation, Scaling and Robustness to Noise

For the experiments presented in this subsection, a set of fourteen images (see Fig. 4) of \(128 \times 128\) pixels has been chosen from the public Columbia database [60]. All original images are enlarged to \(204 \times 204\) pixels by zero-padding so that the entire transformed image is contained and rotation and translation do not change the image size. From this test set, different datasets have been derived for the following purposes: (1) to compare the invariance against rotation, images were rotated by angles from \(0^{\circ }\) to \(90^{\circ }\) in steps of \(5^{\circ }\), using bilinear interpolation when required; (2) to test the invariance against scaling, images were scaled by factors in the range 20 % to 200 % in steps of 10 %; (3) to evaluate the robustness to noise, images were corrupted by Gaussian noise with standard deviations varying from 0 to 9 and by salt-and-pepper noise with a density in the range 0 to 4.5 %, respectively.
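The degradation parameters and the zero-padding described above can be sketched as follows. The rotation and scaling grids follow directly from the text. The noise grids assume evenly spaced levels (steps of 0.5 for the Gaussian standard deviation and 0.25 % for the salt-and-pepper density), an assumption chosen so that each degradation has the 19 levels mentioned in Sect. 4.2(c). Symmetric padding of 38 pixels per side is also an assumption.

```python
import numpy as np

# Parameter grids for the four degradations (19 levels each).
angles_deg = np.arange(0, 91, 5)                     # 0, 5, ..., 90 degrees
scale_factors = np.arange(20, 201, 10) / 100.0       # 0.2, 0.3, ..., 2.0
gaussian_std = np.linspace(0.0, 9.0, 19)             # assumed step of 0.5
salt_pepper_density = np.linspace(0.0, 4.5, 19) / 100.0   # assumed step of 0.25 %

def zero_pad(image, target=204):
    """Center an (H, W, 3) image in a target x target canvas of zeros."""
    height, width, _ = image.shape
    top = (target - height) // 2
    left = (target - width) // 2
    return np.pad(image, ((top, target - height - top),
                          (left, target - width - left),
                          (0, 0)))

padded = zero_pad(np.ones((128, 128, 3)))
print(padded.shape, len(angles_deg), len(scale_factors))
```

Each grid has 19 entries, which matches the number of intensity levels used when averaging the relative errors.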

Let \(I(f) = \left\{ I_{1}, I_{2}, {\ldots }, I_{p}\right\} \) be a moment invariant vector, where \(I_{t}= a_{t }+b_{t} {{\varvec{i}}}+ c_{t}{{\varvec{j}}}+ d_{t}{{\varvec{k}}}\), \(t = 1, 2, {\ldots }, p\), are the QTMIs defined in (33) and \(p\) is the number of QTMIs used. In order to evaluate the invariance of QTMIs, we define the relative error between the two quaternion vectors \(I(f)\) and \(I(g)\) corresponding to an image \(f\) and its transformed version \(g\) as

where \({\vert }{\vert }.{\vert }{\vert }_{2}\) is the quaternion vector distance defined in [14] as follows

The four different QTMIs we constructed and the QFMM invariants (QFMMIs) derived by Guo et al. [13] of order up to 5 were considered. Notice that when \(n\) and \(m\) are fixed in (33), it is preferable to use a lower order moment with a small value of \(k\) for the second term \(\bar{{L}}_{k,m}^R (f)\), because lower order moments are less sensitive to image noise than higher order ones [61] (see the upcoming Sect. 4.2(b)). For this reason, \(\bar{{L}}_{0,m}^R (f)\), with \(k\) = 0 in (33), is chosen for QROTMIs and QOFMMIs while \(\bar{{L}}_{m,m}^R (f)\), with \(k=m\), is retained for QZMIs and QPZMIs since the order \(k\) should not be smaller than the repetition \(m\). To summarize, according to (33), the set of invariants \(\left\{ {\bar{{\varphi }}_{n,0}^m (f)\left| {0\le n,m\le M} \right. } \right\} \) is used for QROTMIs and QOFMMIs, while \(\big \{ \bar{{\varphi }}_{n,m}^m (f) \big | 0\le m\le M,m\le n\le M, n-m\hbox { being even} \big \}\) is used for QZMIs and \(\big \{ \bar{{\varphi }}_{n,m}^m (f)\big | 0\le m\le M, m\le n\le M \big \}\) for QPZMIs, where \(M\) is the maximum order used. Notice also that, in order to achieve the scaling invariance, the invariants in these sets with order 0 and repetition 0 are not considered.

(a) Performance comparison with different choices of \(\varvec{\mu }\)

In order to compare the performance of the 100 different values of \(\varvec{\mu }\) mentioned above, we considered one image from our original image dataset (see Fig. 4a) and four degraded versions of it: one rotated by an angle of \(30^{\circ }\), one scaled by a factor of 40 %, one corrupted by Gaussian noise of standard deviation 3, and one corrupted by salt-and-pepper noise of density 2 %. Then, five types of QTMIs based on our \(\varvec{\mu }\) test set were computed on these four degraded images and on Fig. 4a. Herein, the maximum order \(M\) was set to 5 for all QTMIs. As a measure of performance, we considered the relative error \(E(f, g)\) between the QTMIs of a degraded image \(g\) and those of its original version \(f\). The relative errors obtained are shown in Fig. 5, where the 100 test values of \(\varvec{\mu }\) are plotted along the abscissa. The standard deviation of the relative error over our \(\varvec{\mu }\) test set has also been computed as follows

where \(E_{k}(f, g)\) is the relative error between the QTMIs of \(f\) and \(g\) for the \(k^{th}\) value of our \(\varvec{\mu }\) test set and \(\bar{{E}}\) is the average of the relative errors \(E_{k}(f, g)\). Average errors and standard deviations are provided in Table 2. It can be observed from Fig. 5 and Table 2 that: (1) all five types of QTMIs achieve good invariance to rotation and scaling; (2) the three orthogonal moment invariants (QPZMIs, QZMIs and QOFMMIs) still perform very well when the images are corrupted by noise, while the two non-orthogonal ones, QROTMIs and QFMMIs, appear to be sensitive to such noise, especially QFMMIs. This conclusion is in accordance with the results reported in [61] for CTMs; (3) the performances obtained with different \(\varvec{\mu }\) are close. Since the experiments here are only simulations, we will return to this discussion in Sect. 4.3a, where experiments have been conducted in real environments. Nevertheless, although the difference in performance is not very pronounced, we can still find that \(\varvec{\mu }_{100}\), i.e. \(({\varvec{i}}-{\varvec{j}}-{\varvec{k}})/\sqrt{3}\), appears as the overall optimal value of \(\varvec{\mu }\) among our test set. This is the reason why \(\varvec{\mu }_{100}\) is considered for each type of QTMIs in the following Sects. 4.2b and 4.2c.

(b) Robustness to noise when considering lower order moments to construct rotation invariants

This experiment was carried out to evaluate the robustness to noise of the rotation invariants in (20) and (33) when they are based on lower order moments instead of higher order ones. Regarding the RST invariants defined in (33), we compared them when the subscript \(k\) is set to \(0, 1, {\ldots }, 5\) for QROTMIs and QOFMMIs, to \(m\), \(m+2\), \(m+4\) for QZMIs, and to \(m\), \(m+1, {\ldots }, m+5\) for QPZMIs, respectively. Notice that for QZMIs and QPZMIs, when the value of \(k\) would be greater than 5, it is fixed to 5, which is the maximum order \(M\) considered in this experiment.

The results given here have been obtained using images from our datasets corrupted by Gaussian noise of standard deviation 3 and by salt-and-pepper noise of density 2 %, together with the corresponding original images. Table 3 provides the average relative error of our four types of QTMIs. Whatever the type of QTMIs, these results demonstrate that QTMIs based on lower order moments with a small value of \(k\) indeed yield a smaller relative error than those based on higher order moments.

(c) Comparison of the performance of different types of QTMIs

The four image datasets were used in this experiment to evaluate the performance of our four types of QTMIs and to compare them with the QFMMIs of Guo et al. [13]. Figure 6 depicts the average relative errors \(\bar{{E}}_k \), \(k = 1, 2, {\ldots }, K\), where \(k\) corresponds to the \(k^{th}\) intensity level of the image degradation; herein \(K\) equals 19, as each kind of image degradation has been parameterized with 19 distinct values (for example, the Gaussian noise is added with 19 different standard deviation values). It can be observed that: (1) all the errors caused by rotation and scaling degradations are small, especially for the three orthogonal moment invariants (i.e. QPZMIs, QZMIs and QOFMMIs); (2) the three orthogonal moment invariants are more robust to noise than the two non-orthogonal ones, QROTMIs and QFMMIs; (3) in general, the errors increase with the intensity level of the image degradation; (4) QPZMIs perform best among the five types of invariants compared.

We have also calculated the maximal value of the standard deviation (MSTD) of the relative error \(E_{k}(f_{t}\), \(g_{t})\), \(t = 1, 2, {\ldots }, N\), \(k = 1, 2, {\ldots }\), \(K\), as follows

where \(N\) and \(K\) are the number of original images and the number of intensity levels of a degradation, respectively. They are respectively equal to 14 and 19 in this experiment. The MSTD values of different QTMIs under different degradations are given in Table 4. The small MSTD values in this table demonstrate that the constructed QTMIs have a stable behavior.
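The displayed definition of the MSTD is not reproduced in this text, so the following is only a minimal sketch of the quantity as it is described in words: a standard deviation of the relative errors over the \(N\) images at each degradation level, followed by a maximum over the \(K\) levels. The function name `mstd`, the nested-list layout of `errors`, and the use of the population standard deviation (division by \(N\)) are assumptions made for illustration.

```python
from statistics import pstdev


def mstd(errors):
    """Largest per-level standard deviation of the relative errors.

    errors[k][t] holds the relative error E_k(f_t, g_t) for degradation
    level k and image t (hypothetical layout, chosen for this sketch).
    """
    # Assumption: population standard deviation (divide by N). The exact
    # normalisation used in the paper is not visible in this text.
    return max(pstdev(level_errors) for level_errors in errors)


# Made-up toy data: K = 3 degradation levels, N = 4 images.
toy_errors = [
    [0.010, 0.012, 0.011, 0.009],
    [0.020, 0.025, 0.018, 0.022],
    [0.040, 0.030, 0.050, 0.045],
]
print(mstd(toy_errors))
```

A small returned value means that, even at the least favourable degradation level, the relative error hardly varies from one image to another.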

4.3 Color Face Recognition

The objective of this test is to evaluate the performance of QTMIs in a practical application. We combined the proposed descriptors with a quaternion back-propagation neural network (QBPNN), which was introduced independently by Arena et al. [19] and Nitta [20] in the mid-1990s to deal with 3D or 4D input data. The basic block diagram is shown in Fig. 7. First, color faces in the training set are represented using the quaternion representation with (5). After that, the QTMI features are extracted from these color faces. Then, the extracted features are fed into the three QBPNN layers. For the test set, we also compute the QTMI features from the color face images after quaternion representation and calculate the output with the trained weights and threshold values. Finally, the minimum quaternion vector distance [14] is used for decision. A similar method was first presented in one of our previous works [62] for the real-valued QZMIs derived in [12], without considering the important phase information. In this paper, however, the algorithms based on the quaternion-valued QTMIs with phase information are evaluated and compared with the algorithm based on the quaternion bidirectional principal component analysis (QBDPCA), which offers the best performance among quaternion-based principal component analysis methods according to [14].

Three color face databases (faces95, faces96 and grimace) provided by the University of Essex [63] were chosen. Their properties are summarized in Table 5. A few examples are shown in Fig. 8. We used 600 face samples of 30 individuals from the faces95 database and the faces96 database, and 360 samples of the 18 individuals included in the grimace database. For each individual, 10 random samples are retained for training and the remaining 10 for recognition.

By following the procedure described in Sect. 3 for color images, we can also derive invariants with respect to RST transformations for each single channel using different CTMs. The invariants of the three channels are then combined into a whole set of face features. This is the conventional approach mentioned in Sect. 1. For the four different types of CTMs, the features extracted by this approach are denoted hereafter by ROTMIs, OFMMIs, ZMIs and PZMIs, respectively. Note that the non-zero real and imaginary parts of CTMIs are both used as features, which makes the feature vector almost twice as large. Then, the real-valued CTMI features were fed into the conventional back-propagation neural network (BPNN), while the quaternion-valued QTMIs were trained by QBPNN.

We also compared the proposed algorithm with the other quaternion-based algorithm based on QFMMIs [13] features and BPNN.

(a) Comparison of the performance of different \(\varvec{\mu }\)

Because the comparison was made in a simulation experiment in Sect. 4.2a, we propose herein to compare the performance of different \(\varvec{\mu }\) in a real environment so as to find the optimal value of \(\varvec{\mu }\).

In this experiment, different types of QTMIs based on our 100 test values of \(\varvec{\mu }\) were used as features for face recognition on the faces95 database. Again, the maximum order of all QTMIs was set to 5. In order to clearly show the recognition rates achieved with the five types of QTMIs, two figures are given: QFMMIs, QOFMMIs and QPZMIs are shown in Fig. 9a, while QROTMIs and QZMIs are shown in Fig. 9b. The results show that \(\varvec{\mu }_{100}\) (i.e. \(({\varvec{i}}-{\varvec{j}}-{\varvec{k}})/\sqrt{3}\)) has the best overall performance among the test set of \(\varvec{\mu }\), though it is not the best one for each type of QTMIs. The average recognition rate of the five types of QTMIs based on \(\varvec{\mu }_{100}\) is about 92 %. This is consistent with the conclusion drawn in Sect. 4.2a. Moreover, for each type of QTMIs: \(\varvec{\mu }_{32}\) (i.e. \(0.9610{{\varvec{i}}}+0.1080{{\varvec{j}}}+0.2545{{\varvec{k}}}\)) and \(\varvec{\mu }_{100}\) are two relatively optimal choices for QFMMIs; \(\varvec{\mu }_{8}\) (i.e. \(0.1236{{\varvec{i}}}+0.8553{{\varvec{j}}}+0.5032{{\varvec{k}}}\)) and \(\varvec{\mu }_{97}\) (i.e. \(({\varvec{i}}+{\varvec{k}})/\sqrt{2}\)) are the best choices for QROTMIs; \(\varvec{\mu }_{43}\) (i.e. \(0.3322{{\varvec{i}}}+0.7646{{\varvec{j}}}+0.5523{{\varvec{k}}}\)) is the best one for QOFMMIs; \(\varvec{\mu }_{19}\) (i.e. \(0.5502{{\varvec{i}}}+0.4888{{\varvec{j}}}+0.6770{{\varvec{k}}}\)), \(\varvec{\mu }_{99}\) (i.e. \(({\varvec{i}}+{\varvec{j}}+{\varvec{k}})/\sqrt{3}\)) and \(\varvec{\mu }_{100}\) are the best ones for QZMIs; and \(\varvec{\mu }_{26}\) (i.e. \(0.1383{{\varvec{i}}}+0.8536{{\varvec{j}}}+0.5023{{\varvec{k}}}\)) is the best one for QPZMIs. As a consequence, the choice of \(\varvec{\mu }\) may become critical in real applications. Based on this observation, in the following Sect. 4.3b and c, each type of QTMIs is tested with its optimal \(\varvec{\mu }\). Note, however, that only one optimal \(\varvec{\mu }\) was used for QFMMIs, QROTMIs and QZMIs: we chose \(\varvec{\mu }_{100}\), \(\varvec{\mu }_{8}\) and \(\varvec{\mu }_{99}\) for QFMMIs, QROTMIs and QZMIs, respectively.
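As a small sanity check on the candidates quoted above, the sketch below verifies that a given \(\varvec{\mu }\) is a unit pure quaternion, i.e. that it has zero real part and unit norm, which is equivalent to \(\varvec{\mu }^{2}=-1\). The helper names `qmul` and `is_unit_pure` and the tuple layout `(w, x, y, z)` are illustrative assumptions, and the tolerance only accounts for the four-decimal rounding of the printed coefficients.

```python
import math


def qmul(p, q):
    """Hamilton product of two quaternions stored as (w, x, y, z)."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return (
        pw * qw - px * qx - py * qy - pz * qz,
        pw * qx + px * qw + py * qz - pz * qy,
        pw * qy - px * qz + py * qw + pz * qx,
        pw * qz + px * qy - py * qx + pz * qw,
    )


def is_unit_pure(mu, tol=1e-3):
    """True if mu has (numerically) zero real part and unit norm."""
    w, x, y, z = mu
    return abs(w) < tol and abs(math.sqrt(x * x + y * y + z * z) - 1.0) < tol


s = 1.0 / math.sqrt(3.0)
mu_100 = (0.0, s, -s, -s)               # (i - j - k) / sqrt(3)
mu_32 = (0.0, 0.9610, 0.1080, 0.2545)   # rounded coefficients from the text

print(is_unit_pure(mu_100), is_unit_pure(mu_32))
print(qmul(mu_100, mu_100))  # real part close to -1, vector part close to 0
```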

(b) Verification of the phase information importance

In object and scene recognition, phase information plays a more important role than magnitude information [47, 48]. As a consequence, to achieve rotation invariance, we decided to use the method based on Theorem 1 instead of the conventional magnitude-based method, which directly considers the modulus of QTMs [12, 13, 30]. In order to evaluate our method and compare it with this more conventional strategy, we have constructed a new set of QTMIs based on the magnitude-based method. These new QTMIs are denoted as NQROTMIs, NQOFMMIs, NQZMIs, and NQPZMIs, respectively. We did not consider QFMMIs because they were originally constructed with this magnitude-based method in [13]. The new QTMI features with order up to 5 were trained by BPNN, which is possible since the new QTMIs are real-valued. Table 6 presents the recognition results for the faces95 database using our QTMIs and these new QTMIs. The results demonstrate that the proposed method is better than the magnitude-based method. This again confirms the discrimination power of the phase information in pattern recognition.

(c) Performance comparison of different types of QTMIs and CTMIs

In order to choose the relatively optimal moment invariant features, we compared the recognition rates of different types of QTMIs and CTMIs up to various maximum orders \(M = 3, 5, {\ldots }, 15, 17\). Different values of \(M\) lead to different feature sets, which are: \(\big \{ \bar{{\varphi }}_{n,0}^m (f)\big | 0\le n, m\le M \big \}\) for QROTMIs and QOFMMIs, \(\big \{ \bar{{\varphi }}_{n,m}^m (f)\big | 0\le m\le M,m\le n\le M \big \}\) for QPZMIs, and \(\big \{ \bar{{\varphi }}_{n,m}^m (f)\big | 0\le m\le M,m\le n\le M,n-m\hbox { being even} \big \}\) for QZMIs. Here, \(\bar{{\varphi }}_{n,m}^m (f)\) is an RST invariant as defined by (33). The recognition rates for the faces95 database with various values of \(M\) are shown in Table 7. It can be seen that: (1) an optimal order exists for every set of moment invariant features, though these optimal orders are not equal. In this experiment, the optimal orders for ROTMIs, QROTMIs, OFMMIs, QOFMMIs, ZMIs, QZMIs, PZMIs, QPZMIs and QFMMIs are 9, 7, 9, 9, 11, 9, 7, 5 and 5, respectively; (2) for all descriptors, the rate first increases, reaches a maximum value and then decreases. This behaviour has already been pointed out in the above color image reconstruction experiments and also for object classification, as shown in [64].
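To make the three index sets above concrete, the following sketch enumerates the \((n, m)\) pairs they contain for a given maximum order \(M\). The function name `feature_indices` and the string labels are hypothetical, and the counts refer to index pairs only, not to the number of real-valued components eventually fed to a network.

```python
def feature_indices(kind, M):
    """List the (n, m) index pairs of the feature set for maximum order M."""
    if kind in ("QROTMI", "QOFMMI"):
        # 0 <= n, m <= M
        return [(n, m) for n in range(M + 1) for m in range(M + 1)]
    if kind == "QPZMI":
        # 0 <= m <= M, m <= n <= M
        return [(n, m) for m in range(M + 1) for n in range(m, M + 1)]
    if kind == "QZMI":
        # as for QPZMI, with the extra condition that n - m is even
        return [(n, m) for m in range(M + 1) for n in range(m, M + 1)
                if (n - m) % 2 == 0]
    raise ValueError(f"unknown invariant type: {kind}")


for kind in ("QROTMI", "QPZMI", "QZMI"):
    print(kind, len(feature_indices(kind, 5)))
```

For \(M = 5\) this gives 36, 21 and 12 index pairs, respectively, which shows how quickly the feature sets grow with \(M\) and why the sets differ in size across moment types at the same order.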

We also extracted all nine types of moment invariant features up to their corresponding optimal order from the other two databases used for recognition, while for the algorithm using QBDPCA, the dimensionality of the principal subspace was chosen such that the cumulative energy is greater than 99 %. The recognition rates of the different algorithms are shown in Table 8. It can be seen from this table that: (1) whatever the database and the type of moments, the proposed quaternion-based algorithms using QTMIs and QBPNN perform better than the conventional algorithms using CTMIs and BPNN; (2) the algorithms based on orthogonal moment invariants are superior to those based on non-orthogonal moment invariants, regardless of whether the conventional algorithms or the quaternion-based algorithms are used. This conclusion is in accordance with the results reported in [61] in terms of noise robustness, information redundancy and capability for image representation; (3) among the three algorithms using orthogonal QTMIs, the algorithm using QPZMIs performs better than the other two. The same conclusion can be drawn for the three conventional algorithms using orthogonal CTMIs. This is consistent with the conclusion in Sect. 4.2; (4) the recognition rates of almost all algorithms for grimace (mainly with expression variation) are higher than those obtained for faces95 and faces96 (which mainly suffer from illumination and background variations). This result can be explained by two facts: (i) the grimace database is preprocessed through a face location procedure, which eliminates the background influence; (ii) the proposed QTMIs being invariant to geometric transformations, they are robust to expression change.
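The 99 % cumulative-energy rule mentioned for QBDPCA can be illustrated with a generic sketch. It assumes that a list of real, non-negative eigenvalues is already available; how QBDPCA obtains them is not shown here, and the function name `subspace_dim` is hypothetical.

```python
def subspace_dim(eigenvalues, energy=0.99):
    """Smallest number of leading eigenvalues whose share of the total
    energy is strictly greater than `energy`."""
    values = sorted(eigenvalues, reverse=True)
    total = sum(values)
    running = 0.0
    for dim, value in enumerate(values, start=1):
        running += value
        if running / total > energy:
            return dim
    # Only reached if no prefix exceeds the threshold (e.g. energy >= 1).
    return len(values)


# Made-up spectrum: the first four eigenvalues carry 99.5 % of the energy.
print(subspace_dim([50.0, 30.0, 15.0, 4.5, 0.5]))
```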

4.4 Color Image Registration

Image registration is a fundamental preprocessing step for image fusion and other image processing tasks. In this experiment, color image registration was carried out to further illustrate the efficiency of QTMIs. We use the automatic registration method proposed by Wang et al. [65]. The main difference is that QTMIs were used for feature point (FP) matching in this experiment. The main steps of the registration are as follows:

(1) Extract FPs from both the reference image and the template image using the Harris-Laplace detector;

(2) Extract the QTMI features of optimal order from the neighborhood of each FP;

(3) Match FPs using the minimum Euclidean distance;

(4) Improve the matching results using the random sample consensus (RANSAC) algorithm;

(5) Estimate the similarity transformation from the coordinates of the matched FPs by the least-squares method;

(6) Transform the template image with the estimated transformation to match the reference image.
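Steps (3) and (5) of the list above can be sketched as follows. This is an illustration under stated assumptions, not the implementation of [65]: the quaternion-valued features are assumed to have been flattened into real tuples, 2D points are stored as complex numbers so that a similarity transformation reads \(q \approx a\,p + b\) with complex \(a\) (scale and rotation) and \(b\) (translation), and the RANSAC refinement of step (4) is omitted. The names `match_nearest` and `fit_similarity` are hypothetical.

```python
import math


def match_nearest(template_feats, reference_feats):
    """Step (3): pair each template feature vector with the index of the
    closest reference feature vector in the Euclidean sense."""
    matches = []
    for i, feat in enumerate(template_feats):
        j = min(range(len(reference_feats)),
                key=lambda r: math.dist(feat, reference_feats[r]))
        matches.append((i, j))
    return matches


def fit_similarity(src, dst):
    """Step (5): least-squares fit of dst ~ a * src + b, with the matched
    point coordinates given as complex numbers x + 1j * y."""
    n = len(src)
    src_mean = sum(src) / n
    dst_mean = sum(dst) / n
    num = sum((q - dst_mean) * (p - src_mean).conjugate()
              for p, q in zip(src, dst))
    den = sum(abs(p - src_mean) ** 2 for p in src)
    a = num / den
    b = dst_mean - a * src_mean
    return a, b


# Toy check: rotate by 90 degrees, scale by 2, translate by (3, 4).
src_pts = [0j, 1 + 0j, 1j, 1 + 1j]
dst_pts = [2j * p + (3 + 4j) for p in src_pts]
a, b = fit_similarity(src_pts, dst_pts)
print(abs(a), math.degrees(math.atan2(a.imag, a.real)), b)
```

In this representation the estimated scale is `abs(a)` and the rotation angle is the argument of `a`, so the transformation of step (6) is simply the inverse map \(p = (q - b)/a\) applied to the template coordinates.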

Two pictures were taken with a digital camera at different focus depths and from different positions obtained by rotating the camera. One (Fig. 10a) is taken as the reference image. The other (Fig. 10b) serves as the template image. In order to evaluate the robustness to blurring of the different invariant features, the template image was taken slightly out of focus. Then, the above registration steps are carried out. To quantify the registration accuracy, we compute the intensity root mean square error (RMSE) over the overlapped areas \(\Omega \) of the reference image RI(\(x, y\)) and the registered template image TI(\(x, y\)), which is defined by [66]

where \(N_{\Omega }\) is the size of overlapped areas \(\Omega \).
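The displayed RMSE formula is not reproduced in this text. The sketch below therefore uses the standard root mean square error restricted to the overlapped area, which is an assumption: images are taken as nested lists of scalar intensities, `mask` marks the pixels belonging to \(\Omega \), and the way the three color channels are combined into one intensity is not specified here. The function name `overlap_rmse` is hypothetical.

```python
import math


def overlap_rmse(ref, reg, mask):
    """RMSE between a reference image and a registered template image,
    computed only over pixels where mask is true (the overlapped area)."""
    squared_sum = 0.0
    count = 0  # plays the role of N_Omega
    for ref_row, reg_row, mask_row in zip(ref, reg, mask):
        for r, t, inside in zip(ref_row, reg_row, mask_row):
            if inside:
                squared_sum += (r - t) ** 2
                count += 1
    if count == 0:
        raise ValueError("the overlapped area is empty")
    return math.sqrt(squared_sum / count)


# Made-up 2 x 2 example with three overlapping pixels.
ref_img = [[10, 20], [30, 40]]
reg_img = [[12, 20], [30, 44]]
overlap = [[True, False], [True, True]]
print(overlap_rmse(ref_img, reg_img, overlap))
```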

All nine types of moment invariant features with the optimal order used in the former test are evaluated in this test. We found that if the template image is taken in focus, the two images can be registered correctly with all nine types of moment invariants. This demonstrates the efficiency of the proposed algorithm. However, this is not the case for the out-of-focus template image. The RMSE values for the algorithms using different moment invariants are provided in Table 9. For visual comparison, we also give the mosaicked images MI(\(x, y\)) in Fig. 10c–h, obtained by fusing the registered template image TI(\(x, y\)) into the reference image RI(\(x, y\)) with the weight 0.5, i.e. MI(\(x, y\)) = 0.5*RI(\(x, y\)) + 0.5*TI(\(x, y\)). It can be observed that: (1) QPZMIs perform best among the nine types of moment invariants. The reference image and the transformed template image are completely overlapped. The RMSE value is also the smallest, though it is not very small due to the blurring of the template image. The three algorithms using the non-orthogonal ROTMIs, QROTMIs and QFMMIs completely fail to register the two images. This is in accordance with their performance in the previous face recognition test; (2) the three algorithms using PZMIs, ZMIs and OFMMIs register the two images with some error. There are some artifacts in their overlapped images, especially at the top of the pavilion; (3) the algorithms using quaternion-valued invariants (QPZMIs, QZMIs and QOFMMIs) also outperform the ones using real-valued invariants (PZMIs, ZMIs, OFMMIs and ROTMIs).

5 Discussion

The proposed approach has been shown to be relevant on the four examples we have selected. The reconstruction problem addressed here shows that there exists some “optimal order” minimizing the error (Fig. 3). This “optimal order” varies with the type of moments and presumably with the image contents. Therefore, although the slope of the curves is low beyond this order value, the order must be adapted to the data set under consideration. A theoretical analysis would be of interest to understand this behavior more objectively, but it is not straightforward.

The QZMIs presented here have been compared in [15] to ZMIs. This comparison has shown that the recognition of objects submitted to RST transformations was largely in favor of QZMIs in noise-free situations as well as with additive noise (Gaussian and salt-and-pepper). For the other types of moment invariants, similar conclusions have been drawn from color face recognition (Tables 7, 8) and color image registration (Table 9). Moreover, the performance of each type of QTMIs depends on the unit pure quaternion. However, it is difficult to find an optimal unit pure quaternion \(\varvec{\mu }\) experimentally. The experiments conducted with 100 different values of \(\varvec{\mu }\) have shown that \(({\varvec{i}}-{\varvec{j}}-{\varvec{k}})/\sqrt{3}\) is the best overall choice but not the best one for each type of QTMIs. Certainly, one can directly define real-valued invariants under the constraint of the unit pure quaternion choice with the help of the magnitude-based method of Mennesson et al. [30], at the cost of losing the important phase information. This has been verified again in Sect. 4.3b. Therefore, the only disadvantage of the quaternion-based QTMI descriptors concerns the increased computational load. Reducing this load was the main purpose of the efficient algorithm designed in this paper, which may be of critical importance in particular applications like face recognition, object matching and tracking. The computation time required to extract the QTMIs up to order 5 in the face recognition problem is about 0.1225 s for QOFMMIs, 0.1572 s for QZMIs, and 0.1717 s for QPZMIs, implemented in Matlab R2006b on a PC with a Dual Core 2.33 GHz CPU and 2 GB RAM. These times can meet the needs of a particular application. In addition, the computation will be faster if implemented in C/C++.

Of course, the final result in any pattern recognition problem depends not only on the extracted features but also on the data set used for learning (exhaustiveness, size, etc.) and on the classification method. The neural network approach used here can be replaced by others like PCA, ICA, and SVM, for which quaternion versions have been reported [8, 14, 23, 24, 32]. For the three selected face databases, there also exists an optimal order of each moment invariant feature for face recognition. The same is true for the color image registration problem. The final registration result depends on the selected FPs, the extracted features, the matching method, etc.

6 Conclusions

In this paper, the general formula of QTMs has been introduced to extend commonly-used CTMs defined for gray-scale images to color images. Based on these QTMs, a set of invariants to RST transformations has been constructed. In addition, an efficient algorithm for computing QTMs was proposed. The advantages of the proposed QTMs and QTMIs over the existing descriptors are as follows: (1) the quaternion color representation, which processes a color image in a holistic manner, is used in the definition of QTMs; (2) the constructed QTMIs are quaternion-valued invariants instead of real-valued invariants; thus, they retain the important phase information and provide more real-valued invariants. Experimental results on real images corrupted by additive noise, color face recognition and color image registration demonstrate that the proposed descriptors, and especially the QPZMIs, are more effective than the existing methods. The experiments conducted show that the performance varies with the unit pure quaternion and that the proposed method for constructing the rotation invariants is superior to the conventional magnitude-based method. The unit pure quaternion \(({\varvec{i}}-{\varvec{j}}-{\varvec{k}})/\sqrt{3}\) appears to be a relatively optimal choice but not the best one for each type of QTMIs. As future work, we expect to generalize CTMs to process higher dimensional signals with the help of Clifford algebra as Clifford-type moments.

References

Subakan, O.N., Vemuri, B.C.: A quaternion framework for color image smoothing and segmentation. Int. J. Comput. Vis. 91(3), 233–250 (2011)

Koschan, A., Abidi, M.: Digital Color Image Processing. Wiley, Hoboken (2008)

Sangwine, S.J.: Fourier transforms of colour images using quaternion, or hypercomplex, numbers. Electron. Lett. 32(1), 1979–1980 (1996)

Pei, S.C., Cheng, C.M.: A novel block truncation coding of color images by using quaternion-moment-preserving principle. In: Proc. IEEE Int. Symp. Circuits and Systems, vol. 2, pp. 684–687 (1996).

Pei, S.C., Cheng, C.M.: Color image processing by using binary quaternion-moment preserving thresholding technique. IEEE Trans. Image Process. 8(5), 22–35 (1999)

Bihan, N.L., Sangwine, S.J.: Color image decomposition using quaternion singular value decomposition. In: Proc. 2003 Int. Conf. Visual Information Engineering (VIE 2003), pp. 113–116 (2003)

Pei, S.C., Cheng, C.M.: Quaternion matrix singular value decomposition and its applications for color image processing. In: Proc. 2003 Int. Conf. Image Processing (ICIP 2003), vol. 1, pp. 805–808 (2003)

Bihan, N.L., Sangwine, S.J.: Quaternion principal component analysis of color images. In: Proc. 2003 10th IEEE Int. Conf. Image Processing (ICIP 2003), vol. 1, pp. 809–812 (2003).

Ell, T.A., Sangwine, S.J.: Hypercomplex fourier transforms of color images. IEEE Trans. Image Process. 16(1), 22–35 (2007)

Alexiadis, D.S., Sergiadis, G.D.: Estimation of motions in color image sequences using hypercomplex fourier transforms. IEEE Trans. Image Process. 18(1), 168–187 (2009)

Assefa, D., Mansinha, L., Tiampo, K.F., Rasmussen, H., Abdella, K.: Local quaternion fourier transform and color image texture analysis. Signal Process. 90(6), 1825–1835 (2010)

Chen, B.J., Shu, H.Z., Zhang, H., Chen, G., Luo, L.M.: Color image analysis by quaternion Zernike moments. In: Proc. 2010 20th Int. Conf. Pattern Recognition (ICPR 2010), pp. 625–628 (2010)

Guo, L.Q., Zhu, M.: Quaternion fourier-mellin moments for color image. Pattern Recognit. 44(2), 187–195 (2011)

Sun, Y.F., Chen, S.Y., Yin, B.C.: Color face recognition based on quaternion matrix representation. Pattern Recognit. Lett. 32(4), 597–605 (2011)

Chen, B.J., Shu, H.Z., Zhang, H., Chen, G., Toumoulin, C., Dillenseger, J.L., Luo, L.M.: Quaternion zernike moments and their invariants for color image analysis and object recognition. Signal Process. 92(2), 308–318 (2012)

Rizo-Rodríguez, D., Méndez-Vázquez, H., García-Reyes, E.: Illumination invariant face recognition using quaternion-based correlation filters. J. Math. Imaging Vis. 45(2), 164–175 (2013)

Ell, T.A.: Hypercomplex spectral transforms. Ph.D. dissertation, Minnesota Univ., Minneapolis, USA (1992)

Bülow, T.: Hypercomplex spectral signal representations for image processing and analysis. Ph.D. dissertation, University of Kiel, Kiel, Germany (1999)

Arena, P., Fortuna, L., Occhipinti, L.: Neural networks for quaternion valued function approximation. In: Proc. IEEE Int. Symp. Circuits and Systems, vol. 6, pp. 307–310 (1994)

Nitta, T.: A quaternary version of the back-propagation algorithm. In: Proc. IEEE Int. Conf. Neural Networks (ICNN’95), vol. 5, 2753–2756 (1995)

Chan, W.L., Choi, H., Baraniuk, G.: Directional hypercomplex wavelets for multidimensional signal analysis and processing. In: Proc. IEEE Int. Conf. Acoustics, Speech and Signal Processing (ICASSP 2004), pp. 996–999 (2004)

Bayro-Corrochano, E.: The theory and use of the quaternion wavelet transform. J. Math. Imaging Vis. 24(1), 19–35 (2006)

Bihan, N.L., Buchholz, S.: Quaternionic independent component analysis using hypercomplex nonlinearities. In: Proc. IMA 7th Conf. Mathematics in Signal Processing, pp. 1–4 (2006)

Via, J., Palomar, D.P., Vielva, L.: Quaternion ica from second-order statistics. IEEE Trans. Signal Process. 59(4), 1586–1600 (2011)

Hitzer, E.: Quaternionic Fourier-Mellin Transform. arXiv preprint arXiv:1306.1669 (2013)

Li, Y.N.: Quaternion polar harmonic transforms for color images. IEEE Signal Process. Lett. 20(8), 803–806 (2013)

Batard, T., Berthier, M., Saint-Jean, C.: Clifford-Fourier transform for color image processing. In: Geometric Algebra Computing, pp. 135–162. Springer, London (2010)

Mennesson, J., Saint-Jean, C., Mascarilla, L.: Color object recognition based on a Clifford Fourier transform. Guide to Geometric Algebra in Practice, pp. 175–191. Springer, London (2011)

Hitzer, E., Sangwine, S.J. (eds.): Quaternion and Clifford Fourier transforms and wavelets. In: Trends in Mathematics (TIM), Birkhauser, Basel (2013)

Mennesson, J., Saint-Jean, C., Mascarilla, L.: Color fourier-mellin descriptors for image recognition. Pattern Recogn. Lett. 40(4), 27–35 (2014)

Pearson, J.K., Bisset, D.L.: Neural networks in the clifford domain. In: Proc. IEEE World Congress on Neural Networks, vol. 3, 1465–1469 (1994)

Bayro-Corrochano, E.J., Arana-Daniel, N.: Clifford support vector machines for classification, regression, and recurrence. IEEE Trans. Neural Netw. 21(11), 1731–1746 (2010)

Bahri, M., Hitzer, E.: Clifford algebra Cl\(_{3, 0}\)-valued wavelet transformation, Clifford wavelet uncertainty inequality and Clifford Gabor wavelets. Int. J. Wavelets Multiresolution Inf. Process. 5(6), 997–1019 (2007)

Bujack, R., Scheuermann, G., Hitzer, E.: Detection of outer rotations on 3D-Vector fields with iterative geometric correlation and its efficiency. Adv. Appl. Clifford Alg. (2013). doi:10.1007/s00006-013-0411-7

Hitzer, E., Nitta, T., Kuroe, Y.: Applications of clifford’s geometric algebra. Adv. Appl. Clifford Alg. 23(2), 377–404 (2013)

Flusser, J., Zitova, B., Suk, T.: Moments and moment invariants in pattern recognition. Wiley, Chichester (2009)

Shu, H.Z., Luo, L.M., Coatrieux, J.L.: Moment-based approaches in image part 1: basic features. IEEE Eng. Med. Biol. Mag. 26(5), 70–74 (2007)

Khotanzad, A., Hong, Y.H.: Invariant image recognition by zernike moments. IEEE Trans. Pattern Anal. Machine Intell. 12(5), 489–497 (1990)

Lin, Y.H., Chen, C.H.: Template matching using the parametric template vector with translation, rotation and scale invariance. Pattern Recognit. 41(7), 2413–2421 (2008)

Yang, C.Y., Chou, J.J.: Classification of rotifers with machine vision by shape moment invariants. Aquac. Eng. 24(1), 33–57 (2000)

Dai, X.B., Shu, H.Z., Luo, L.M., Han, G.N., Coatrieux, J.L.: Reconstruction of tomographic images from limited range projections using discrete radon transform and tchebichef moments. Pattern Recognit. 43(3), 1152–1164 (2010)

Zhang, H., Shu, H.Z., Coatrieux, G., Zhu, J., Wu, Q.M.J., Zhang, Y., Zhu, H.Q., Luo, L.M.: Affine legendre moment invariants for image watermarking robust to geometric distortions. IEEE Trans. Image Process. 20(8), 2189–2199 (2011)

Hamilton, W.R.: Elements of Quaternions. Longmans Green, London (1866)

Chong, C.W., Raveendran, P., Mukundan, R.: Translation invariants of zernike moments. Pattern Recognit. 36(8), 1765–1773 (2003)

Liao, S.X., Pawlak, M.: On image analysis by moments. IEEE Trans. Pattern Anal. Machine Intell. 18(3), 254–266 (1996)

Liao, S.X., Pawlak, M.: On the accuracy of zernike moments for image analysis. IEEE Trans. Pattern Anal. Machine Intell. 20(12), 1358–1364 (1998)

Suk, T., Flusser, J.: Affine moment invariants of color images. In: Proc. CAIP 2009, vol. LNCS5702, pp. 334–341 (2009)

Shams, L., Malsburg, C.V.D.: The role of complex cells in object recognition. Vis. Res. 42(22), 2547–2554 (2002)

Shi, G., Shanechi, M.M., Aarabi, P.: On the importance of phase in human speech recognition. IEEE Trans. Audio Speech Lang. Process 14(5), 1867–1874 (2006)

Shu, H.Z., Luo, L.M., Han, G.N., Coatrieux, J.L.: A general method to derive the relationship between two sets of zernike coefficients corresponding to different aperture sizes. J. Opt. Soc. Am. A 23(8), 1960–1966 (2006)

Zhang, H., Shu, H.Z., Haigron, P., Li, B.S., Luo, L.M.: Construction of a complete set of orthogonal fourier-mellin moment invariants for pattern recognition applications. Image Vis. Comput. 28(1), 38–44 (2010)

Walia, E., Singh, C., Goyal, A.: On the fast computation of orthogonal fourier-mellin moments with improved numerical stability. J. Real-Time Image Process. 7(4), 247–256 (2012)

Liu, C., Huang, X.H., Wang, M.: Fast computation of zernike moments in polar coordinates. IET Image Process. 6(7), 996–1004 (2012)

Singh, C., Walia, E., Pooja, S., Upneja, R.: Analysis of algorithms for fast computation of pseudo Zernike moments and their numerical stability. Digital Signal Process. 22(6), 1031–1043 (2012)

Ell, T., Sangwine, S.J.: Decomposition of 2D hypercomplex Fourier transforms into pairs of complex Fourier transforms. In: Proc. Eusipco., vol. 2 (2000)

Qi, Q., Hu, Y.M., Zhang, C.Y.: A new color pattern recognition algorithm based on quaternion correlation. In: Proc. IEEE Int. Conf. Networking, Sensing and Control (CNSC 2008), pp. 462–466 (2008)

Zuo, W., Wang, W.J.: Color watermarking algorithm based on modified qfft and dct. In: Proc. 2010 Int. Conf. Optoelectronics and Image Processing (ICOIP), vol. 1, pp. 328–331 (2010)

Bas, P., Nihan, N.L., Chassery, J.M.: Color image watermarking using quaternion fourier transform. In: Proc. IEEE Int. Conf. Acoustics, Speech and Signal Processing (ICASSP 2003), vol. III, pp. 521–524 (2003)

Moxey, C.E., Sangwine, S.J., Ell, T.A.: Hypercomplex correlation techniques for vector images. IEEE Trans. Signal Process. 51(7), 1941–1953 (2003)

Nene, S.A., Nayar, S.K., Murase, H.: Columbia Object Image Library (COIL-100), Tech. Rep. CUCS-006-96 (1996)

Teh, C.H., Chin, R.T.: On image analysis by the method of moments. IEEE Trans. Pattern Anal. Machine Intell. 10(4), 496–513 (1988)

Chen, B.J., Shu, H.Z., Chen, G., Ge, J., Luo, L.M.: Color face recognition based on quaternion zernike moment invariants and quaternion bp neural network. Appl. Mech. Mater. 446–447, 1034–1039 (2014)

Spacek, L.: (2008, June 20). Description of the Collection of Facial Images [Online]. Available: http://cswww.essex.ac.uk/mv/allfaces/index.html

Chen, B.J., Shu, H.Z., Zhang, H., Coatrieux, G., Luo, L.M., Coatrieux, J.L.: Combined invariants to similarity transformation and to blur using orthogonal zernike moments. IEEE Trans. Image Process. 20(2), 345–360 (2011)

Wang, W.X., Luo, D.J., Li, W.S.: Algorithm for automatic image registration on harris-laplace features. J. Appl. Remote Sens. 3(033554), 1–13 (2009)

Zhang, Z., Blum, R.S.: A hybrid image registration technique for a digital camera image fusion application. Inf. Fusion 2(2), 135–149 (2001)

Acknowledgments

This work was supported by the National Basic Research Program of China under Grant 2011CB707904, the NSFC under Grants 61271312, 61232016, 61103141, 61173141, 61105007 and 61272421, the Ministry of Education of China under Grant 20110092110023, the Natural Science Foundation of the Jiangsu Higher Education Institutions of China under Grant 13KJB520015, and a Project Funded by the Priority Academic Program Development of Jiangsu Higher Education Institutions.

Appendix A

Proof of Theorem 2

Equation (28) can be written in a matrix form as

where (\(h\)+1) is the size of the vector, and

Applying (30) to the transformed image \({f}''\), it can also be written in a matrix form as

Based on the definition of \(\varGamma _{f}\) it can be easily verified that

Substituting (46) and (48) into (47), and using the identity \(D_h^m C_h^m =I\), where \(I\) is the identity matrix, we obtain

The proof has been completed. \(\square \)

Cite this article

Chen, B., Shu, H., Coatrieux, G. et al. Color Image Analysis by Quaternion-Type Moments. J Math Imaging Vis 51, 124–144 (2015). https://doi.org/10.1007/s10851-014-0511-6

DOI: https://doi.org/10.1007/s10851-014-0511-6