Abstract

When Lagrangian stochastic models for turbulent dispersion are applied to complex atmospheric flows, some type of ad hoc intervention is almost always necessary to eliminate unphysical behaviour in the numerical solution. Here we discuss numerical strategies for solving the non-linear Langevin-based particle velocity evolution equation that eliminate such unphysical behaviour in both Reynolds-averaged and large-eddy simulation applications. Extremely large or ‘rogue’ particle velocities arise when the numerical integration scheme becomes unstable. Such instabilities can be eliminated by using a sufficiently small integration timestep or, where the required timestep is unrealistically small, by using an unconditionally stable implicit integration scheme. When the generalized anisotropic turbulence model is used, it is critical that the input velocity covariance tensor be realizable; otherwise, unphysical behaviour can become problematic regardless of the integration scheme or the size of the timestep. A method is presented to ensure realizability and thus eliminate such behaviour. It was also found that the numerical accuracy of the integration scheme determined the degree to which the second law of thermodynamics, or ‘well-mixed condition’, was satisfied. Perhaps more importantly, it also determined the degree to which modelled Eulerian particle velocity statistics matched the specified Eulerian distributions (which is the ultimate goal of the numerical solution). It is recommended that future models be verified not only by checking the well-mixed condition but, perhaps more importantly, by checking that the computed Eulerian statistics match the Eulerian statistics specified as inputs.

1 Introduction

Langevin-based Lagrangian stochastic models have proven to be a practical method for describing the dispersion of particulates in many classes of turbulent flows. In flows with inhomogeneous velocity statistics, early workers found that isotropic Langevin-based models failed, as particles tended to accumulate in regions of low turbulent stress. Early heuristic work to remedy this problem began in the atmospheric boundary-layer community and involved adding a mean bias velocity to the Langevin equation, which worked well in cases of weakly inhomogeneous flow (Wilson et al. 1981; Legg and Raupach 1982). The problem was later formalized by enforcing the requirement that the particle plume should satisfy the “well-mixed condition” (WMC; Thomson 1984) or equivalently the “thermodynamic constraint” (Sawford 1986). In either case, it was noted that an initially uniformly distributed particle plume should not un-mix itself in the absence of sources or sinks, or more precisely, entropy cannot decrease (i.e., the second law of thermodynamics). This is a crucial requirement for any model: a model in which entropy decreases in time does not tend toward a uniform equilibrium state in the absence of sources or sinks, and is therefore generally not useful.

A rigorous theoretical solution to the “un-mixing” problem was devised concurrently by Thomson (1987) and Pope (1987), whose remedies were based on the notion that the Lagrangian dispersion models should satisfy their corresponding macroscopic Eulerian conservation equations, which clearly satisfy the second law of thermodynamics. Thomson (1987) used the Fokker–Planck equation to determine the proper coefficients in the Langevin equation, while Pope (1987) used the Navier–Stokes equations. The present paper primarily focuses on the Thomson approach because it is the most common in atmospheric applications (Lin et al. 2013), although similar problems are likely to arise when the Pope approach is used depending on the chosen form of the model.

Despite the fact that the Thomson model should satisfy the second law of thermodynamics in theory, authors have begun to report unphysical model behaviour, particularly in cases with complex inhomogeneity. This has been revealed by the failure of computed particle plumes to satisfy the WMC in practice (Lin 2013), or by the presence of unrealistically large or ‘rogue’ particle velocities (Yee and Wilson 2007; Postma et al. 2012; Wilson 2013; Bailey et al. 2014; Postma 2015). Although the Thomson model has been in use for several decades, only recently have authors begun explicitly acknowledging the unphysical behaviour in the numerical solutions of the model equations.

Several methods have been suggested to deal with such unphysical behaviour. The simplest is ad hoc intervention, in which velocities are artificially limited to some predetermined range. When a particle becomes rogue (according to some predefined criterion), the particle velocity is either artificially reset to some value, or the particle trajectory is restarted from the beginning. This method removes rogue velocities but does not, in general, satisfy the second law of thermodynamics. Numerous discussions between the author and colleagues using these types of models have revealed that some form of ad hoc intervention is ubiquitous, although rarely acknowledged in the literature. In some situations, a relatively infrequent occurrence of rogue trajectories or some ad hoc correction may be tolerable, e.g., when only mean concentrations are desired. In other instances, however, they may contaminate results to an unacceptable level (e.g., Postma et al. 2012; Wilson 2013).

More advanced integration schemes have been suggested that reduce (although not eliminate) rogue velocities. Yee and Wilson (2007) formulated a semi-analytical integration scheme, whereby the integration is divided into analytical and numerical sub-steps. Unfortunately, certain conditions must be met in order to allow for the analytical sub-step, often requiring ad hoc intervention anyway when the conditions are not met. Bailey et al. (2014) divided the integration into implicit and explicit sub-steps, which reduced but did not eliminate rogue trajectories. Lin (2013) presented a method that treats the turbulence field as stepwise homogeneous, which eliminates sharp local gradients that can cause numerical difficulties such as rogue trajectories. However, this method may be difficult to apply in cases of complex geometry and could require high grid resolution to resolve large gradients.

Authors have reported that reducing the timestep used in the numerical integration of the Langevin equation could reduce the frequency of rogue trajectories (e.g., Postma et al. 2012). However, the timestep would probably have to be unfeasibly small to eliminate all rogue trajectories in complex flows. Following this principle, Postma (2015) developed an adaptive timestep scheme that reduced the timestep based on the local turbulent time scales of the flow. This methodology still did not completely eliminate rogue trajectories, and it would also be quite complex to apply in general three-dimensional flows.

The goal of the present study was to uncover the root cause of reported unphysical behaviour in certain Lagrangian stochastic dispersion models. A remedy for the problem was ultimately desired, which involved formulating a stable numerical scheme that satisfies the thermodynamic constraint and matches the Eulerian velocity statistics specified as inputs. The source code and input data for all examples presented herein are provided in the associated online material.

2 Reynolds-Averaged Lagrangian Stochastic Models

In a Lagrangian framework, one can calculate the time evolution of particle position given information about the velocity field as

\[ \frac{\text{d}x_{\mathrm{p},i}}{\text{d}t}=U_i+u_i \tag{1} \]

where \(x_{\mathrm{p},i}\) is particle position in Cartesian direction \(i=(x,\,y,\,z)\), t is time, and \(U_i\) and \(u_i\) are respectively the mean and fluctuating particle velocity components.

Often in turbulent flows, only the ensemble-averaged component of \(\text {d}x_{\mathrm{p},i}/\text {d}t\) is known, and the fluctuating component must be modelled. Thus, there has been large interest in developing practical models for the Lagrangian particle velocity fluctuations that can be driven by easily measured or estimated turbulence quantities such as the local turbulent kinetic energy. The unresolved Lagrangian velocity is commonly modelled using an analogy to Langevin (1908), who developed models for Brownian motion. The stochastic Langevin equation can be written in modern form as

where \(\text {d}W\) is an increment in a Wiener process with zero mean and variance \(\text {d}t\), and a and b are coefficients to be determined. This particular form assumes that the particle velocity is Markovian and Gaussian.

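For homogeneous, isotropic turbulence, these coefficients can be determined independently such that \(u_i\) has the proper short- and long-time behaviour, which is made possible by the Markovian assumption. The b coefficient is commonly specified such that the short-time behaviour of \(u_i\) is consistent with Kolmogorov’s second similarity hypothesis, which gives

\[ \left\langle \left[ u_i(t+\varDelta t)-u_i(t)\right] ^2\right\rangle =C_0\overline{\varepsilon }\,\varDelta t\quad \Rightarrow \quad b=\left( C_0\overline{\varepsilon }\right) ^{1/2} \tag{3} \]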

where \(\langle \cdot \rangle \) is an ensemble average, \(C_0\) is a ‘universal’ constant (Rodean 1991; Du 1997), and \(\overline{\varepsilon }\) is the mean dissipation rate of turbulent kinetic energy. The a coefficient is the inverse of the particle integral time scale \(\tau _\mathrm{L}\), which is commonly assumed to be (cf. Rodean 1996)

\[ \tau _\mathrm{L}=\frac{1}{a}=\frac{2\sigma ^2}{C_0\overline{\varepsilon }} \tag{4} \]

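where \(\sigma ^2\) is the velocity variance. These choices for a and b give the familiar form of the Langevin equation applied to homogeneous and isotropic turbulence

\[ \text{d}u_i=-\frac{C_0\overline{\varepsilon }}{2\sigma ^2}\,u_i\,\text{d}t+\left( C_0\overline{\varepsilon }\right) ^{1/2}\text{d}W_i \tag{5} \]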

The first term on the right-hand side of Eq. 5 represents local correlation (with time scale \(\tau _\mathrm{L}\)), and uniquely determines the correlation time scale of \(u_i\); the second term corresponds to (uncorrelated) motions on the order of the Kolmogorov scale (time scale \(\tau _\eta \)).

2.1 Application to Flows with One-Dimensional Inhomogeneity

For inhomogeneous applications such as the atmospheric boundary layer, Eq. 5 is no longer an appropriate model for \(u_i\), for several reasons. First, mean spatial gradients in the turbulence statistics cause Eq. 5 to produce non-uniform mean particle fluxes even in the absence of gradients in particle concentration. This implies that models based on Eq. 5 will violate the second law of thermodynamics, since a uniformly distributed particle plume subject to a non-uniform mean flux will “un-mix” itself, i.e., its entropy will decrease over time. Second, the presence of heterogeneity implies that \(2\sigma ^2/C_0\overline{\varepsilon }\) is no longer the proper correlation time scale.

To obtain a consistent model for \(u_i\), we first assume a form of the (Eulerian) probability distribution of \(u_i\). If \(u_i\) is assumed to be Gaussian and isotropic, the Eulerian velocity probability distribution at any instant is given by

\[ P_\mathrm{E}(\mathbf{u})=\frac{1}{\left( 2\pi \sigma ^2\right) ^{3/2}}\exp \left( -\frac{u_ju_j}{2\sigma ^2}\right) \tag{6} \]

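This can be substituted into the Fokker–Planck equation, along with the previous assumption that \(b^2=C_0\overline{\varepsilon }\), to yield a consistent Langevin equation for \(u_i\) (cf. Thomson 1987; Rodean 1996)

\[ \text{d}u_i=\underbrace{-\frac{C_0\overline{\varepsilon }}{2\sigma ^2}\,u_i\,\text{d}t}_{\text{I}}+\underbrace{\frac{1}{2}\left( \frac{\partial \sigma ^2}{\partial x_i}+\frac{u_i}{\sigma ^2}\frac{\text{d}\sigma ^2}{\text{d}t}\right) \text{d}t}_{\text{II}}+\underbrace{\left( C_0\overline{\varepsilon }\right) ^{1/2}\text{d}W_i}_{\text{III}} \tag{7} \]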

where \(\text {d}\sigma ^2/\text {d}t=\partial \sigma ^2/\partial t + \left( U_j+u_j\right) \left( \partial \sigma ^2/\partial x_j\right) \). The traditional interpretation of the model terms (e.g., Rodean 1996) is that term \(\text {I}\) is the “fading memory” of the particle’s earlier velocity, term \(\text {II}\) is a “drift correction” that accounts for flow heterogeneity, and term \(\text {III}\) accounts for random pressure fluctuations with very short time scales. Determining the Langevin coefficients in this way leads to a Lagrangian dispersion model that theoretically adheres to the second law of thermodynamics, which is that an initially uniformly distributed (well-mixed) plume of particles cannot un-mix itself in the absence of sources or sinks (i.e., entropy cannot decrease).

2.2 Alternative Interpretation of Terms

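A new grouping and interpretation of terms is proposed, which will aid in later discussion. Equation 7 can be equivalently written as

\[ \text{d}u_i=\underbrace{-\frac{C_0\overline{\varepsilon }}{2\sigma ^2}\,u_i\,\text{d}t}_{\text{I}}+\underbrace{\frac{1}{2\sigma ^2}\frac{\text{d}\sigma ^2}{\text{d}t}\,u_i\,\text{d}t}_{\text{II}}+\underbrace{\frac{1}{2}\frac{\partial \sigma ^2}{\partial x_i}\,\text{d}t}_{\text{III}}+\underbrace{\left( C_0\overline{\varepsilon }\right) ^{1/2}\text{d}W_i}_{\text{IV}} \tag{8} \]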

where term I can be interpreted as deceleration due to energy dissipation by viscosity. Since \(C_0 \overline{\varepsilon }/2\sigma ^2\) is always positive, this term always acts to damp the particle velocity and relax it toward the mean exponentially in time. Term II is an energy production/destruction term; if the sign of \(\text {d} \sigma ^2/\text {d}t\) is positive (negative), correlated energy is added to (removed from) the particle velocity. Term III (along with term II) enforces the inherent requirement that the mean of the velocity fluctuations must be zero. Finally, term IV is a random forcing term corresponding to turbulent diffusion. As was the case in Eq. 5, the roles of terms I and IV are to add energy (term IV) and remove energy (term I) at rates consistent with the prescribed values of \(\sigma ^2\) and \(\overline{\varepsilon }\).

Heterogeneity in \(\sigma ^2\) causes term I to induce a mean diffusive particle flux. This is because in regions of small \(\sigma ^2\), term I removes energy at a more rapid rate. As a result, particles decelerate in these regions on average, causing a build-up of particles (a convergence). To counteract this mean flux, terms II and III collectively give an ensemble mean acceleration of \(\partial \sigma ^2/\partial x_i\). Term III clearly represents exactly half of this acceleration, and the average acceleration given by term II is also \(\frac{1}{2}\partial \sigma ^2/\partial x_i\). To see this, consider a steady, one-dimensional example where \(\text {d}\sigma ^2/\text {d}t=u\left( \partial \sigma ^2/\partial x\right) \), so that term II becomes \(\left( u^2/2\sigma ^2\right) \left( \partial \sigma ^2/\partial x\right) \text {d}t\). When the average is taken, \(\overline{u^2}\) cancels with \(\sigma ^{-2}\) to give an average acceleration of \(\frac{1}{2}\partial \sigma ^2/\partial x\). The other role of term II is to ensure that the ensemble \(u_i\) has the correct local variance by increasing or decreasing particle energy as particles traverse a gradient in \(\sigma ^2\).
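The averaging argument for term II can be checked numerically. The following Python sketch is illustrative only: it assumes the steady, one-dimensional case with zero mean velocity described above, and the local values `sigma2` and `dsigma2_dx` (and the function name) are hypothetical. It samples u from a Gaussian with variance \(\sigma^2\) and averages \((u/2\sigma^2)\,u\,\partial\sigma^2/\partial x\), which should approach \(\frac{1}{2}\partial\sigma^2/\partial x\).

```python
import random

def mean_term_II(sigma2, dsigma2_dx, n_samples=200_000, seed=1):
    """Monte Carlo estimate of the ensemble mean of term II per unit time.

    Assumes steady 1-D flow with U = 0, so d(sigma^2)/dt = u * dsigma2_dx
    along the particle path and term II = (u / (2 sigma^2)) * d(sigma^2)/dt.
    """
    rng = random.Random(seed)
    sigma = sigma2 ** 0.5
    total = 0.0
    for _ in range(n_samples):
        u = rng.gauss(0.0, sigma)            # u ~ N(0, sigma^2)
        dsigma2_dt = u * dsigma2_dx          # Lagrangian rate of change of sigma^2
        total += u * dsigma2_dt / (2.0 * sigma2)
    return total / n_samples

# Hypothetical local values, for illustration only
estimate = mean_term_II(sigma2=1.5, dsigma2_dx=0.8)
print(estimate, 0.5 * 0.8)  # the Monte Carlo estimate should be close to 0.4
```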

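Terms I and II represent correlated particle accelerations, whereas terms III and IV are uncorrelated. Terms I and II can be re-written in the form

\[ -\frac{C_0\overline{\varepsilon }}{2\sigma ^2}\,u_i\,\text{d}t+\frac{1}{2\sigma ^2}\frac{\text{d}\sigma ^2}{\text{d}t}\,u_i\,\text{d}t=-\frac{u_i}{\tau }\,\text{d}t \tag{9} \]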

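with the correlation time scale being

\[ \tau =\left( \frac{C_0\overline{\varepsilon }}{2\sigma ^2}-\frac{1}{2\sigma ^2}\frac{\text{d}\sigma ^2}{\text{d}t}\right) ^{-1} \tag{10} \]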

Although this is the correlation time scale, it could equivalently be viewed as the time scale associated with energy production and dissipation. Loosely speaking, \(C_0\overline{\varepsilon }/2\sigma ^2\) can be interpreted as the local contribution to \(1/\tau \) due to dissipation by viscosity, and \(\dfrac{1}{2\sigma ^2}\dfrac{\text {d} \sigma ^2}{\text {d} t}\) as the contribution corresponding to changes in correlation due to gradients in the velocity variance along the particle path. Note that at any instant \(\tau \) may be negative, which indicates that the particle has gained more energy due to the gradient in \(\sigma ^2\) than viscosity can dissipate. However, the integral time scale \(\tau _\mathrm{L}\) will always be positive for any bounded or periodic flow, because any energy gained where \(\text {d}\sigma ^2/\text {d}t>0\) will be removed in a corresponding region of \(\text {d}\sigma ^2/\text {d}t<0\) (or the particle could remain indefinitely in a region of \(\text {d}\sigma ^2/\text {d}t=0\), in which case no energy is added or removed).
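The correlation time scale can be evaluated directly from its two contributions, \(1/\tau = \left(C_0\overline{\varepsilon} - \text{d}\sigma^2/\text{d}t\right)/2\sigma^2\). The Python function below is a minimal sketch (the function and argument names are hypothetical); it assumes the rate of change of \(\sigma^2\) along the particle path is supplied, and shows how a sufficiently large positive \(\text{d}\sigma^2/\text{d}t\) makes \(\tau\) negative.

```python
import math

def correlation_time_scale(C0, eps, sigma2, dsigma2_dt):
    """Correlation time scale tau from dissipation and variance-gradient terms.

    1/tau = C0*eps/(2*sigma2) - dsigma2_dt/(2*sigma2)
    Returns math.inf when the two contributions cancel exactly.
    """
    inv_tau = (C0 * eps - dsigma2_dt) / (2.0 * sigma2)
    if inv_tau == 0.0:
        return math.inf
    return 1.0 / inv_tau

# Hypothetical values: C0 = 4, eps = 1, sigma^2 = 2
print(correlation_time_scale(4.0, 1.0, 2.0, 0.0))  # homogeneous limit 2*sigma2/(C0*eps) = 1.0
print(correlation_time_scale(4.0, 1.0, 2.0, 6.0))  # gradient outpaces dissipation: -2.0
```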

2.3 Numerical Integration

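Equation 5 can be discretized into time increments of \(\varDelta t\) to numerically calculate the evolution of \(u_i\). Using a simple explicit forward Euler scheme, this can be written for homogeneous isotropic turbulence as

\[ u_i^{n+1}=u_i^n-\left( \frac{C_0\overline{\varepsilon }}{2\sigma ^2}\right) ^n u_i^n\,\varDelta t+\left[ \left( C_0\overline{\varepsilon }\right) ^n\right] ^{1/2}\varDelta W_i^n \tag{11} \]

where superscripts n and \(n+1\) correspond to evaluations at times t and \(t+\varDelta t\), respectively, and \(\varDelta W_i^n\) is a Gaussian random increment with zero mean and variance \(\varDelta t\). Since \(C_0\), \(\overline{\varepsilon }\), and \(\sigma ^2\) are all positive (and consequently the integral time scale is always positive), this scheme is numerically stable (in the absolute sense) when \(C_0\overline{\varepsilon }\varDelta t < 4\sigma ^2\) (Leveque 2007), i.e., when the amplification factor \(1-C_0\overline{\varepsilon }\varDelta t/2\sigma ^2\) of the deterministic part has magnitude less than one.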
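As a minimal sketch of the homogeneous forward Euler update and its stability limit (Python; the function names and parameter values are hypothetical), the code below also computes the long-time variance that the discrete scheme actually produces. That variance follows from the standard result for a first-order autoregressive recursion, and it differs from \(\sigma^2\) by an amount that shrinks with \(\varDelta t\).

```python
import math
import random

def euler_step_homogeneous(u, C0, eps, sigma2, dt, rng):
    """One explicit forward Euler step of the homogeneous isotropic Langevin model."""
    dW = rng.gauss(0.0, math.sqrt(dt))  # Wiener increment: zero mean, variance dt
    return u - (C0 * eps / (2.0 * sigma2)) * u * dt + math.sqrt(C0 * eps) * dW

def amplification_homogeneous(C0, eps, sigma2, dt):
    """Amplification factor g of the deterministic part: u^{n+1} = g u^n + noise."""
    return 1.0 - C0 * eps * dt / (2.0 * sigma2)

def stationary_variance_euler(C0, eps, sigma2, dt):
    """Long-time variance produced by the discrete scheme (requires |g| < 1).

    For the AR(1) recursion u^{n+1} = g u^n + sqrt(C0 eps) dW, the stationary
    variance is C0 eps dt / (1 - g^2), which tends to sigma2 as dt -> 0.
    """
    g = amplification_homogeneous(C0, eps, sigma2, dt)
    if abs(g) >= 1.0:
        raise ValueError("scheme is not absolutely stable for this dt")
    return C0 * eps * dt / (1.0 - g * g)

# Hypothetical values: C0 = 4, eps = 1, sigma^2 = 1
for dt in (0.1, 0.01, 1.5):
    g = amplification_homogeneous(4.0, 1.0, 1.0, dt)
    print(dt, g, abs(g) < 1.0)
print(stationary_variance_euler(4.0, 1.0, 1.0, 0.1))   # about 1.11, not 1.0
print(stationary_variance_euler(4.0, 1.0, 1.0, 0.01))  # about 1.01
```

The last two lines suggest that even a stable explicit scheme returns an Eulerian variance that is too large by roughly \(C_0\overline{\varepsilon}\varDelta t/4\sigma^2\) in relative terms, which is one way numerical accuracy enters the computed Eulerian statistics.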

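Equation 11 can be modified to include the effects of heterogeneity in \(\sigma ^2\) to give the discrete version of Eq. 8 as

\[ u_i^{n+1}=u_i^n-\left( \frac{C_0\overline{\varepsilon }}{2\sigma ^2}\right) ^n u_i^n\,\varDelta t+\left( \frac{\varDelta \sigma ^2}{2\sigma ^2}\right) ^n u_i^n+\frac{1}{2}\left( \frac{\partial \sigma ^2}{\partial x_i}\right) ^n\varDelta t+\left[ \left( C_0\overline{\varepsilon }\right) ^n\right] ^{1/2}\varDelta W_i^n \tag{12} \]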

where \(\left( \varDelta \sigma ^2\right) ^n\) is approximated as \(\left( \sigma ^2\right) ^{n}-\left( \sigma ^2\right) ^{n-1}\). It should be noted that spatial derivatives have not been discretized at this point, as they are assumed to be an ‘input’ value.

As was previously discussed, the addition of heterogeneity results in the additional mean flux term, as well as the production/destruction term. By applying stability analysis (Leveque 2007), it is found that Eq. 12 is unstable when the correlation time scale is negative (i.e., \(C_0\overline{\varepsilon }<\varDelta \sigma ^2/\varDelta t\)), or when the correlation time scale is positive and

\[ \frac{\varDelta t}{\tau }=\frac{C_0\overline{\varepsilon }\,\varDelta t-\varDelta \sigma ^2}{2\sigma ^2}>2 \tag{13} \]

Here, the term ‘unstable’ refers to stability in the absolute sense, which means that an error present at time t is amplified by time \(t+\varDelta t\). Hence the numerical error in the particle velocity does not necessarily become unsuitably large over a single timestep, but over enough consecutive timesteps in which the integration is ‘unstable’, it can grow to overwhelm the calculation. In this case, the numerical error adds more energy than dissipation due to viscosity plus numerical dissipation can remove, which is analogous to the above discussion in which \(\tau _\mathrm{L}<0\). This is problematic because, when a threshold value is chosen to screen for rogue trajectories, particles can continually add erroneous energy to the calculation without exceeding the threshold.
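The mechanism described above can be illustrated with the amplification factor of the explicit update, \(g = 1 - \varDelta t/\tau\). The Python sketch below is hypothetical in its names and values, and it simplifies by treating the change \(\varDelta\sigma^2\) over one step as a given number, whereas along a real trajectory it depends on the particle velocity itself. With \(|g|\) only slightly above one, an error grows slowly, so it can accumulate for many steps before any rogue-velocity threshold is reached.

```python
def explicit_amplification(C0, eps, sigma2, dsigma2, dt):
    """Amplification factor of the u^n-dependent part of the explicit scheme.

    g = 1 - (C0*eps*dt - dsigma2) / (2*sigma2) = 1 - dt/tau,
    with dsigma2 the change in sigma^2 along the path over one step.
    """
    return 1.0 - (C0 * eps * dt - dsigma2) / (2.0 * sigma2)

def error_growth(g, n_steps):
    """Factor by which an initial velocity error is multiplied after n_steps."""
    return abs(g) ** n_steps

# Hypothetical values: the variance gradient slightly outpaces dissipation (tau < 0)
g = explicit_amplification(C0=4.0, eps=1.0, sigma2=1.0, dsigma2=0.42, dt=0.1)
print(g)                     # slightly greater than 1
print(error_growth(g, 10))   # modest growth over 10 steps
print(error_growth(g, 500))  # large growth if the condition persists
```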

2.4 A Numerically Stable Integration Scheme

A common strategy for dealing with stiff differential equations is to use a numerical integration scheme with a large region of absolute stability (Hairer and Wanner 1996; Leveque 2007). Implicit schemes generally have much larger regions of absolute stability than explicit schemes such as the forward Euler scheme. In fully implicit schemes, terms are evaluated at the end of the discrete time increment rather than at the beginning. The complex nature of the problem at hand means that formulating an implicit scheme is not straightforward for several reasons, each to be addressed in this section.

If we begin by considering the integration of Eq. 8 using a fully implicit numerical scheme, a problem seemingly arises. In order to evaluate coefficients such as \(\overline{\varepsilon }\), \(\sigma ^2\), etc. at time \(t+\varDelta t\), we must find not only the unknown velocity at this time, but also the unknown particle position. This means that an iterative solution would be required for \(u_i(t+\varDelta t)\), which is usually quite costly. One way of dealing with this is to ‘lag’ the coefficients, i.e., to evaluate the coefficients at time t but the velocity at \(t+\varDelta t\). This may result in a slight loss of accuracy, but this loss is usually found not to be significant (Leveque 2007). If the coefficients are lagged, Eq. 8 can be written using a fully implicit (backward Euler) scheme as

\[ u_i^{n+1}=u_i^n-\left( \frac{C_0\overline{\varepsilon }}{2\sigma ^2}\right) ^n u_i^{n+1}\,\varDelta t+\left( \frac{\varDelta \sigma ^2}{2\sigma ^2}\right) ^n u_i^{n+1}+\frac{1}{2}\left( \frac{\partial \sigma ^2}{\partial x_i}\right) ^n\varDelta t+\left[ \left( C_0\overline{\varepsilon }\right) ^n\right] ^{1/2}\varDelta W_i^n \tag{14} \]

where \(\left( \varDelta \sigma ^2\right) ^n\) is approximated as \(\left( \sigma ^2\right) ^{n}-\left( \sigma ^2\right) ^{n-1}\). Note that all coefficients are evaluated at time n, and that \(u_i\) is evaluated at time \(n+1\). It is then straightforward to obtain an algebraic solution for \(u_i^{n+1}\).
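Because the coefficients are lagged and \(\varDelta\sigma^2\) is taken along the trajectory, the backward Euler update can be solved for \(u_i^{n+1}\) in closed form by moving every term proportional to \(u_i^{n+1}\) to the left-hand side. The Python sketch below assumes that form of the implicit scheme (damping and production terms implicit, mean-flux and random terms at step n); the function and argument names are hypothetical, and the random increment is passed in so the update itself is deterministic.

```python
import math
import random

def implicit_step(u_n, C0, eps, sigma2, sigma2_prev, dsigma2_dx, dt, dW):
    """Backward Euler step (lagged coefficients) for one velocity component.

    All coefficients are evaluated at step n; the damping and production terms
    multiply the unknown u^{n+1}. The change in sigma^2 is taken along the
    particle path (dsigma2 = sigma2 - sigma2_prev), which keeps the update
    linear in u^{n+1}.
    """
    dsigma2 = sigma2 - sigma2_prev
    rhs = u_n + 0.5 * dsigma2_dx * dt + math.sqrt(C0 * eps) * dW
    denom = 1.0 + (C0 * eps * dt - dsigma2) / (2.0 * sigma2)  # = 1 + dt/tau
    return rhs / denom

rng = random.Random(42)
dt = 0.1
dW = rng.gauss(0.0, math.sqrt(dt))  # Wiener increment, variance dt
# Hypothetical local values at steps n and n-1 along the trajectory
u_next = implicit_step(u_n=0.7, C0=4.0, eps=1.0, sigma2=1.2, sigma2_prev=1.1,
                       dsigma2_dx=0.3, dt=dt, dW=dW)
print(u_next)
```

The denominator equals \(1+\varDelta t/\tau\), so it stays above one whenever \(\tau>0\); it could only vanish if \(\varDelta t/\tau=-1\).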

The scheme is stable for all \(\tau _\mathrm{L}>0\). As was discussed in the previous section, \(\tau _\mathrm{L}\) will never be negative due to the particle dynamics alone, and the use of an implicit scheme means that numerical error will also not make \(\tau _\mathrm{L}\) negative. Therefore, the implicit scheme should be unconditionally stable (which will be demonstrated in the following sections).
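The stability claim can be seen by comparing the factors that multiply the previous velocity in the two schemes, written in terms of \(\varDelta t/\tau\). The Python snippet below is a small illustration under that simplification (noise and mean-flux terms omitted; the function name is hypothetical).

```python
def amplification_factors(dt_over_tau):
    """Amplification of the u-proportional part for a given dt/tau.

    Explicit (forward Euler):  u^{n+1} = (1 - dt/tau) u^n
    Implicit (backward Euler): u^{n+1} = u^n / (1 + dt/tau)
    """
    explicit = 1.0 - dt_over_tau
    implicit = 1.0 / (1.0 + dt_over_tau)
    return explicit, implicit

for r in (0.1, 1.0, 2.5, 10.0, 100.0):
    g_exp, g_imp = amplification_factors(r)
    print(f"dt/tau = {r:6.1f}   |g_explicit| = {abs(g_exp):7.3f}   |g_implicit| = {abs(g_imp):.3f}")
```

For every positive \(\varDelta t/\tau\) the implicit factor stays below one in magnitude, while the explicit factor exceeds one once \(\varDelta t/\tau>2\).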

One important additional item to note about Eq. 14 is that the total derivative of \(\sigma ^2\) is discretized directly as \(\left( \varDelta \sigma ^2 / \varDelta t\right) ^n=\left[ \left( \sigma ^2\right) ^{n}-\left( \sigma ^2\right) ^{n-1}\right] /\varDelta t\) rather than \(\left( \partial \sigma ^2/\partial t \right) ^n+ u_j^{n+1}\left( \partial \sigma ^2 /\partial x_j\right) ^n\). In other words, the total derivative is calculated along particle trajectories (Lagrangian) rather than using the Eulerian definition at a fixed grid point. Using the Eulerian approach would make Eq. 14 non-linear in \(u_i^{n+1}\), thus eliminating the possibility of an explicit algebraic solution for \(u_i^{n+1}\). For consistency, the same Lagrangian approach was used for the explicit method (Eq. 12), although the Eulerian approach was also tested to ensure that it did not significantly change results.

2.4.1 Generalization to Three-Dimensional, Heterogeneous, Anisotropic Turbulence

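This analysis can be easily generalized to cases of anisotropic turbulence. We can proceed by assuming a form for the Eulerian velocity probability distribution function in terms of the macroscopic velocity covariances

\[ P_\mathrm{E}(\mathbf{u})=\frac{1}{\left( 2\pi \right) ^{3/2}\left( \mathrm {det}\,\varvec{\mathsf {R}}\right) ^{1/2}}\exp \left( -\frac{1}{2}\mathbf {u}^\mathrm {T}\varvec{\mathsf {R}}^{-1}\mathbf {u}\right) \tag{15} \]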

where \(\varvec{\mathsf {R}}=R_{ij}\) is the Reynolds stress (or velocity covariance) tensor, \(\varvec{\mathsf {R}}^{-1}\) is its inverse, and \(\mathbf {u}^\mathrm {T}\) is the transpose of \(\mathbf {u}\). It is clear that \(\varvec{\mathsf {R}}\) must be positive semi-definite; otherwise the argument of the exponential function can be positive and \(P_\mathrm{E}\rightarrow \infty \) as \(\big | \mathbf {u} \big |\rightarrow \infty \). It is known that a true Reynolds stress tensor is positive semi-definite by definition (Du Vachat 1977; Schumann 1977), but modelled stress tensors do not necessarily satisfy this condition. \(\varvec{\mathsf {R}}\) must also be non-singular, since this formulation for \(P_\mathrm{E}\) involves a division by \(\mathrm {det}\,\varvec{\mathsf {R}}\). When both conditions are satisfied (i.e., \(\varvec{\mathsf {R}}\) is positive definite), the tensor is termed ‘realizable’. A new method for ensuring realizability is suggested in Sect. 5.3 for cases where the modelled Reynolds stress tensor is not necessarily realizable because it is not a true covariance tensor.
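A realizability check on an input tensor can be sketched with Sylvester's criterion. This is not the method of Sect. 5.3, only a Python test of whether a given symmetric \(3\times 3\) tensor is positive definite, which covers both conditions above; the function names and example tensors are hypothetical.

```python
def det3(M):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (M[0][0] * (M[1][1] * M[2][2] - M[1][2] * M[2][1])
            - M[0][1] * (M[1][0] * M[2][2] - M[1][2] * M[2][0])
            + M[0][2] * (M[1][0] * M[2][1] - M[1][1] * M[2][0]))

def is_realizable(R, sym_tol=1e-12):
    """True if the 3x3 tensor R is symmetric and positive definite.

    Sylvester's criterion: a symmetric matrix is positive definite exactly when
    all leading principal minors are positive. Positive definiteness covers both
    requirements: positive semi-definite and non-singular.
    """
    for i in range(3):
        for j in range(i + 1, 3):
            if abs(R[i][j] - R[j][i]) > sym_tol:
                return False
    m1 = R[0][0]
    m2 = R[0][0] * R[1][1] - R[0][1] * R[1][0]
    m3 = det3(R)
    return m1 > 0.0 and m2 > 0.0 and m3 > 0.0

# Hypothetical tensors for illustration
R_ok = [[2.0, 0.0, -0.5], [0.0, 1.5, 0.0], [-0.5, 0.0, 1.0]]
R_bad = [[1.0, 2.0, 0.0], [2.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # |R_12| too large
print(is_realizable(R_ok), is_realizable(R_bad))
```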

Thomson (1987) substituted Eq. 15 along with the previous expression for b into the Fokker–Planck equation to solve for the coefficient a. Although the solution is not unique, Thomson’s ‘simplest’ model for dispersion in Gaussian, inhomogeneous, and anisotropic turbulence was given as

We can obtain an implicit scheme in a manner analogous to the isotropic turbulence case

where \(\left( \varDelta R_{i\ell }\right) ^n = \left[ \left( R_{i\ell }\right) ^n-\left( R_{i\ell }\right) ^{n-1}\right] \). The resulting scheme is unconditionally stable provided that \(R_{ij}\) is realizable.

Equation 17 is a \(3\times 3\) matrix system of equations in terms of \(u_i\), which can be easily inverted analytically. Clearly the system of equations given by Eq. 17 must be non-singular to allow for inversion. However, since velocity increments are generally small compared to the velocity itself, the author has never found any instances where singularity was a problem, as the determinant is generally of order unity.
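The analytical inversion mentioned above can be done with Cramer's rule. The coefficient matrix of Eq. 17 is not reproduced here, so the Python sketch below uses a hypothetical near-identity matrix, consistent with the observation that the determinant is generally of order unity; the function names are also hypothetical, and a residual check confirms the solution.

```python
def det3(M):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (M[0][0] * (M[1][1] * M[2][2] - M[1][2] * M[2][1])
            - M[0][1] * (M[1][0] * M[2][2] - M[1][2] * M[2][0])
            + M[0][2] * (M[1][0] * M[2][1] - M[1][1] * M[2][0]))

def solve3(A, b, det_tol=1e-12):
    """Solve the 3x3 linear system A x = b analytically using Cramer's rule."""
    d = det3(A)
    if abs(d) < det_tol:
        raise ValueError("system is (nearly) singular")
    x = []
    for k in range(3):
        # Matrix A with column k replaced by the right-hand side b
        Ak = [[b[i] if j == k else A[i][j] for j in range(3)] for i in range(3)]
        x.append(det3(Ak) / d)
    return x

# Hypothetical near-identity coefficient matrix (determinant of order unity)
A = [[1.20, 0.05, 0.00],
     [0.05, 1.10, 0.02],
     [0.00, 0.02, 1.30]]
b = [1.0, 0.0, -1.0]
u_next = solve3(A, b)
residual = [sum(A[i][j] * u_next[j] for j in range(3)) - b[i] for i in range(3)]
print(u_next, residual)
```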

2.5 Some Notes on Increasing Numerical Accuracy

If higher numerical accuracy is desired, one could use a numerical integration scheme with a higher order of accuracy. Higher-order schemes are available (Kloeden and Platen 1992), but we are limited by the fact that we either have to lag the coefficients, or else we end up with a costly iterative solution.

Although many approaches are available to increase numerical accuracy, the present work controls the accuracy by varying \(\varDelta t\); \(\varDelta t\) could also be adjusted automatically using a standard adaptive timestepping approach (Press et al. 2007), although this is not explored here. Such methods generally proceed as follows: a step of size \(\varDelta t^n\) is taken at some time t and the error of the step is estimated. If that error is less than some predefined tolerance, the timestep is increased for the next step. If the estimated error is greater than the tolerance, the step is rejected and retried with a smaller step size until the error is below the tolerance. Some complications arise when dealing with stochastic differential equations; these are described in, e.g., Mauthner (1998) and Lamba (2003).
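The accept/reject loop described above can be sketched with step doubling. This Python example is illustrative only: it controls the step for the deterministic drift alone and omits the stochastic term, because rejecting a stochastic step requires the Brownian increments to be handled consistently (the complication treated in the works cited above). The function name, tolerance, and growth and shrink factors are hypothetical choices, and the more accurate two-half-step result is the one returned.

```python
def adaptive_drift_step(u, t, dt, drift, tol, dt_min=1e-8, grow=1.5, shrink=0.5):
    """Take one accepted step of du/dt = drift(u, t) with step-size control.

    The local error is estimated by step doubling: one forward Euler step of
    size dt is compared with two steps of size dt/2. Rejected steps are retried
    with a smaller dt; after an accepted step a larger dt is proposed.
    Returns (u_new, t_new, dt_next).
    """
    while True:
        full = u + drift(u, t) * dt
        half = u + drift(u, t) * (0.5 * dt)
        two_halves = half + drift(half, t + 0.5 * dt) * (0.5 * dt)
        err = abs(two_halves - full)
        if err <= tol or dt <= dt_min:
            return two_halves, t + dt, grow * dt  # accept
        dt *= shrink                              # reject and retry

# Example: exponential relaxation du/dt = -u / tau with a hypothetical tau = 1
u1, t1, dt_next = adaptive_drift_step(1.0, 0.0, 1.0, lambda u, t: -u / 1.0, tol=1e-3)
print(u1, t1, dt_next)
```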

3 Sinusoidal Test Case

3.1 Test Case Set-Up

To analyze the performance of the proposed methodology in Reynolds-averaged applications, a simple isotropic turbulence field was formulated to facilitate straightforward analysis. The required Eulerian statistics of the velocity field were specified as

such that \(0\le x \le L\), where \(L=2\pi \). A graphical depiction of \(\sigma ^2\) and \(\overline{\varepsilon }\) is given in Fig. 1. The equation for \(\overline{\varepsilon }\) stems from the scaling argument that \(\varepsilon \sim k^{3/2}/\ell \) and \(k \sim \sigma ^2\), where k is the turbulent kinetic energy and \(\ell \) is a characteristic length scale for energetic eddies. However, the choices for \(\sigma ^2\) and \(\overline{\varepsilon }\) are arbitrary, as these are model inputs. The goal of the model is to produce particle velocities whose statistics are consistent with the specified inputs, whether they be physical or non-physical. A sinusoid was chosen because it is periodic and has regular intervals of heterogeneity; furthermore, the input functions and their derivatives can be evaluated exactly. All units in this section are arbitrary; each component of the mean velocity \(U_i\) was set to zero for these tests.

In the test simulations, 100,000 particles were released from random points uniformly distributed over the interval \(\left( 0,\,2\pi \right) \). Particle trajectories followed a periodic condition at the flow boundaries x / L = 0 and 1; they were tracked over a time period of \(T=10\). \(C_0\) was taken to be equal to 4.0, and unless otherwise noted \(\varDelta t = 0.1\). The particle velocity was initialized by drawing a Gaussian random number with mean zero and variance \(\sigma ^2(x_0)\), where \(x_0\) is the particle’s initial position.
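The particle initialization and periodic condition can be sketched as follows. The variance profile here is a hypothetical placeholder, not the specified test-case input, the function names are hypothetical, and the particle count is reduced for speed.

```python
import math
import random

L = 2.0 * math.pi  # domain length, 0 <= x <= L

def sigma2_placeholder(x):
    """HYPOTHETICAL velocity-variance profile; a stand-in for the specified input."""
    return 1.0 + 0.5 * math.sin(x)

def initialize_particles(n, sigma2, rng):
    """Uniformly distributed positions; velocities drawn from N(0, sigma2(x0))."""
    xs = [rng.uniform(0.0, L) for _ in range(n)]
    us = [rng.gauss(0.0, math.sqrt(sigma2(x))) for x in xs]
    return xs, us

def wrap_periodic(x):
    """Periodic condition at x/L = 0 and 1: map x back into the domain."""
    return x % L

rng = random.Random(2024)
xs, us = initialize_particles(1000, sigma2_placeholder, rng)  # the study used 100,000
print(min(xs), max(xs), wrap_periodic(L + 0.5), wrap_periodic(-0.1))
```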

The ‘mixedness’ of the particle plume was quantified using the entropy ‘S’, which, following the common approach used in information theory, is defined as

where \(P(x_i)\) is the probability that a particle resides in the ith discrete subinterval of x (\(i=1,2,\ldots N_\mathrm{bins}\)). When calculated in this way, the entropy of the perfectly mixed particle distribution is \(S=0\). The entropy of the simulated particle plume is expected to be negative, but the goal is to achieve an entropy as close to zero as possible.
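The entropy definition itself is not reproduced above. The Python sketch below assumes one form with the stated properties, \(S=-\sum_i P(x_i)\ln\left[N_\mathrm{bins}P(x_i)\right]\), which is minus the Kullback–Leibler divergence of the binned distribution from the uniform one; it is zero only for a perfectly mixed plume and negative otherwise, but it may differ from the form used in this study. The function name is hypothetical.

```python
import math

def plume_entropy(positions, L, n_bins):
    """Entropy of the binned particle positions relative to a uniform plume.

    ASSUMED form: S = -sum_i P_i * ln(n_bins * P_i). This gives S = 0 for a
    perfectly mixed plume and S < 0 otherwise; it may differ from Eq. 19.
    """
    counts = [0] * n_bins
    for x in positions:
        k = min(int(n_bins * x / L), n_bins - 1)  # bin index; guards x == L
        counts[k] += 1
    n = len(positions)
    S = 0.0
    for c in counts:
        if c > 0:  # empty bins contribute 0 (limit of P ln P as P -> 0)
            P = c / n
            S -= P * math.log(n_bins * P)
    return S

L = 2.0 * math.pi
mixed = [(k + 0.5) * L / 10 for k in range(10)]  # one particle per bin
clumped = [0.1] * 10                             # all particles in one bin
print(plume_entropy(mixed, L, 10), plume_entropy(clumped, L, 10))
```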

If a particle became ‘rogue’, it was discarded and not included in the analysis. For practical purposes, a particle is considered rogue when the absolute value of its velocity exceeds \(10\,\mathrm {max}(\sigma )\). Previous work commonly uses a lower threshold, closer to \(6\,\sigma \) (e.g., Wilson 2013; Postma 2015). Given the number of particle trajectory updates and the assumed velocity distribution, it is not impossible to find a stable particle with a velocity near \(6\,\mathrm {max}(\sigma )\): the probability of a Gaussian velocity exceeding six standard deviations in magnitude is around 1 in \(5\times 10^8\), and the simulations that follow have up to \(10^9\) particle updates. However, it would be exceedingly unlikely to find a stable particle with a velocity greater than \(10\,\mathrm {max}(\sigma )\). Experience has shown that if a particle is unstable, its velocity quickly exceeds \(10\,\mathrm {max}(\sigma )\) or even \(100\,\mathrm {max}(\sigma )\), which clearly distinguishes it from a stable particle whose velocity has become large simply because it lies in the tails of the probability distribution. Thus, it is preferable to choose a large threshold to define rogue trajectories. With this type of thresholding methodology, the cumulative number of rogue trajectories over an entire simulation is also expected to increase with the length of the simulated time period.
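The tail probabilities quoted above can be checked with the complementary error function, since \(P(|u|>k\sigma)=\mathrm{erfc}(k/\sqrt{2})\) for a zero-mean Gaussian velocity. The short Python check below assumes the local \(\sigma\) equals \(\mathrm{max}(\sigma)\), which is the worst case; the function name is hypothetical.

```python
import math

def prob_abs_exceeds(k):
    """P(|u| > k * sigma) for a zero-mean Gaussian velocity with std sigma."""
    return math.erfc(k / math.sqrt(2.0))

for k in (6, 10):
    p = prob_abs_exceeds(k)
    print(f"|u| > {k} sigma: probability {p:.2e}, about 1 in {1.0 / p:.1e}")
```

Multiplying the \(6\sigma\) probability (about \(2\times 10^{-9}\)) by \(10^9\) updates gives an expected count of order one, whereas the \(10\sigma\) probability is many orders of magnitude smaller.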

3.2 Particle Position Probability Density Functions

Figure 2a gives the probability density function (p.d.f.) of particle position at the end of the simulation, with u calculated according to Eq. 11 (which assumes homogeneity). For comparison, the ‘well-mixed’ particle distribution is shown by the vertical dashed line. The figure illustrates the well-known result that this methodology violates the second law of thermodynamics, and causes particles to accumulate in regions of low velocity variance (see Fig. 1). Using the known region of stability introduced earlier \(\varDelta t < \mathrm {min}\left( 4\sigma ^2/C_0\overline{\varepsilon }\right) \), the timestep should be less than about 0.7 to ensure stability. Thus, as shown in the figure, \(\varDelta t=0.1\) gives no rogue trajectories. Although not shown, it was verified that at around \(\varDelta t \approx 0.7\), rogue trajectories began appearing as expected.

Using the inhomogeneous model for u with an explicit forward Euler integration scheme (Eq. 12) actually degraded the results (Fig. 2b). First, the entropy decreases substantially relative to that of the homogeneous model; second, 55 % of particles became rogue according to the definition given above. Note that this value of 55 % increases continually in time until eventually all particles become rogue. Whether there are only a few rogue trajectories or thousands, the solution is still unstable over some region, and all particles are likely to become rogue eventually if the simulation runs long enough.

If the same inhomogeneous model for u is used but instead with an implicit backward Euler integration scheme (Eq. 14), an improvement in results can be observed (Fig. 2c). The entropy increases slightly over the homogeneous model (i.e., entropy is closer to zero), and there are no rogue trajectories as expected.

Fig. 2 P.d.f. of particle position for the sinusoidal test case: a homogeneous model for u (Eq. 11) integrated using an explicit forward Euler scheme (\(\varDelta t = 0.1\)); b inhomogeneous model for u (Eq. 12) integrated using an explicit forward Euler scheme (\(\varDelta t = 0.1\)); c inhomogeneous model for u (Eq. 14) integrated using an implicit backward Euler scheme (\(\varDelta t = 0.1\)). R is the fraction of particles that were ‘rogue’, or \(\big | u \big | > 10\,\mathrm {max}(\sigma )\), and S is the entropy of the plume.

3.3 Effect of the Timestep on Stability and ‘Mixedness’

Figure 3 shows the effect of varying the timestep for the ‘sinusoidal’ test case using the explicit inhomogeneous model for u (Eq. 12, Fig. 3a–d) and the implicit inhomogeneous model (Eq. 14, Fig. 3e–h). It should be noted that for the homogeneous model (Eq. 11), reducing the chosen timestep had little effect, and therefore no further results are shown for that case.

As the timestep is decreased when using the explicit forward Euler scheme, the frequency of rogue trajectories decreases towards zero. This is an expected result, since decreasing the timestep means that the scheme will tend toward its region of absolute stability.

Fig. 3 P.d.f. of particle position for the sinusoidal test case using the inhomogeneous model for u (Eq. 8). Columns correspond to varying timestep. Rows correspond to varying integration scheme, with panels a–d using the explicit forward Euler scheme (Eq. 12), and panels e–h using the implicit backward Euler scheme (Eq. 14). R is the fraction of particles that were ‘rogue’, or \(\big | u \big | > 10\,\mathrm {max}(\sigma )\), and S is the entropy of the plume.

It is difficult to use Eq. 13 to calculate the required timestep for stability since \(\varDelta \sigma ^2\), or equivalently \(u\left( \partial \sigma ^2/\partial x\right) \varDelta t\), is not readily calculated. If we estimate that \(\mathrm {max}\big | u\big | \sim 6\,\mathrm {max}\left( \sigma \right) \), we can estimate that the explicit scheme is stable when

Substituting values shows that the forward Euler scheme is stable for this test case when \(\varDelta t \lesssim 0.03\), which agrees with Fig. 3. Note that this is only a rough estimate and should not be considered exact. This equation can also be used to estimate the region(s) of the flow where particles are most likely to become unstable. Figure 4 shows the distribution of the locations of rogue trajectories (i.e., the particle’s location when its velocity first exceeded the threshold of \(10\,\mathrm {max}\left( \sigma \right) \)), as well as the region of absolute instability as estimated from Eq. 20. Not surprisingly, rogue trajectories were most likely to occur in the region where the model equations were unstable.
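The idea behind this estimate can be sketched numerically by scanning the domain for points where the explicit amplification factor could exceed one in magnitude for some \(|u|\le 6\,\mathrm{max}(\sigma)\), approximating \(\varDelta\sigma^2\approx u\left(\partial\sigma^2/\partial x\right)\varDelta t\). This Python sketch is rough and need not coincide with Eq. 20; the function names and the profiles are hypothetical placeholders (\(\overline{\varepsilon}\propto\sigma^3\) loosely follows the scaling argument given for the test case), not the specified inputs.

```python
import math

def explicit_unstable_at(C0, eps, sigma2, dsigma2_dx, dt, u_max):
    """Rough test of absolute instability of the explicit scheme at one location.

    Approximates the change in sigma^2 over a step as u * dsigma2_dx * dt, giving
    g(u) = 1 - dt * (C0*eps - u*dsigma2_dx) / (2*sigma2). Because g is linear in u,
    checking u = -u_max and u = +u_max covers every |u| <= u_max.
    """
    for u in (-u_max, u_max):
        g = 1.0 - dt * (C0 * eps - u * dsigma2_dx) / (2.0 * sigma2)
        if abs(g) > 1.0:
            return True
    return False

# HYPOTHETICAL input profiles (placeholders, not the specified test-case inputs)
def sigma2(x):
    return 1.0 + 0.5 * math.sin(x)

def dsigma2_dx(x):
    return 0.5 * math.cos(x)

def eps(x):
    return sigma2(x) ** 1.5  # loosely follows eps ~ k^(3/2)/l with l = 1

C0 = 4.0
xs = [2.0 * math.pi * (k + 0.5) / 200 for k in range(200)]
u_max = 6.0 * max(math.sqrt(sigma2(x)) for x in xs)  # |u| ~ 6 max(sigma)

for dt in (0.01, 0.1, 0.5):
    flagged = [x for x in xs
               if explicit_unstable_at(C0, eps(x), sigma2(x), dsigma2_dx(x), dt, u_max)]
    print(f"dt = {dt}: fraction of domain flagged = {len(flagged) / len(xs):.2f}")
```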

Probability distribution P(x / L) of the location where particles became ‘rogue’, or first exceeded the velocity threshold of \(10\,\sigma (x)\) for two timestep choices. The shaded area shows the region of instability for \(\varDelta t = 0.05\), as approximated by Eq. 20

For both the explicit and implicit integration schemes, the entropy S tends toward zero, i.e., toward a well-mixed state, as the timestep is decreased. However, the implicit scheme appears to approach a well-mixed state more rapidly than the explicit scheme. For moderately small timesteps (e.g., \(\varDelta t\) = 0.1 and 0.05), the entropy is substantially lower when the implicit scheme is used. This result is important for cases where an extremely small timestep cannot be used and compromises must be made. In such cases, an implicit scheme appears preferable, as it provides unconditional stability and better adherence to the well-mixed condition.

When the timestep was increased to extreme values, the plume eventually began tending back toward a well-mixed state. In this regime, velocity increments were very large and an additional diffusive effect appeared, which mixed out the particle plume (see Fig. 3a,e). This happens when the particle timestep is on the order of the integral time scale. As will be further illustrated in Sect. 3.4, the WMC is seemingly satisfied because particle timesteps are so large that the particle’s variance is decoupled from the local Eulerian variance, and thus the local gradient in the variance does not act to un-mix particles. This is an important result, as it indicates that the WMC alone is not a sufficient indicator of the performance of the numerical scheme. The next section presents a more thorough examination.

3.4 Eulerian Profiles of Particle Velocity

The direct purpose of the above models is not necessarily to satisfy the well-mixed condition or the second law of thermodynamics (i.e., this is not the governing equation being solved); satisfying these consistency conditions is simply a byproduct. Rather, the purpose is to produce an ensemble of Lagrangian particles that has the velocity p.d.f. prescribed by Eq. 6, i.e., Gaussian with zero mean and local variance \(\sigma ^2(x_i)\). It will be shown that if the computed Eulerian particle velocity p.d.f. has zero mean and local variance \(\sigma ^2(x_i)\), the well-mixed condition is satisfied automatically.

To better assess the numerical procedure, it is instructive to calculate Eulerian statistics of the Lagrangian particle velocities and compare them with the ‘exact’ values. Fortunately, the exact values of the Eulerian mean velocity and variance are always known, since they were specified as inputs. The ensemble mean particle velocity should be zero at every point, and the ensemble mean particle acceleration (i.e., the mean particle velocity increment divided by \(\varDelta t\)) should be equal to \(\partial \sigma ^2(x)/\partial x\). The ensemble particle velocity variance should be equal to \(\sigma ^2(x)\), and the variance of the particle velocity increments should be equal to \(C_0 \overline{\varepsilon }(x) \varDelta t\).
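The expected mean acceleration can be checked with a short calculation. It assumes (the model equations are not reproduced in this section) that the one-dimensional inhomogeneous drift takes Thomson's stationary form \(a = -C_0\overline{\varepsilon }u/(2\sigma ^2) + \frac{1}{2}(\partial \sigma ^2/\partial x)(1+u^2/\sigma ^2)\). Averaging over a Gaussian Eulerian p.d.f. with zero mean and variance \(\sigma ^2\) gives

\[
\langle a \rangle _\mathrm{E} = -\frac{C_0\overline{\varepsilon }}{2\sigma ^2}\langle u \rangle _\mathrm{E} + \frac{1}{2}\frac{\partial \sigma ^2}{\partial x}\left( 1+\frac{\langle u^2 \rangle _\mathrm{E}}{\sigma ^2}\right) = 0 + \frac{1}{2}\frac{\partial \sigma ^2}{\partial x}\left( 1+1\right) = \frac{\partial \sigma ^2}{\partial x}.
\]

The stochastic term \((C_0\overline{\varepsilon })^{1/2}\,\mathrm{d}W\) is independent of the current velocity and has variance \(C_0\overline{\varepsilon }\varDelta t\), while the drift contributes only at second order, so

\[
\mathrm{Var}_\mathrm{E}\left( \varDelta u\right) = C_0\overline{\varepsilon }\,\varDelta t + O\left( \varDelta t^2\right).
\]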

Figures 5 and 6 compare calculated Eulerian profiles of mean particle velocity, mean particle velocity increments, particle velocity variance, and variance of the particle velocity increments. These profiles are determined by establishing a set of discrete spatial bins, and calculating the mean/variance of all particles residing in a given bin. This Eulerian averaging operator is denoted as \(\langle \cdot \rangle _\mathrm{E}\).
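The Eulerian operator \(\langle \cdot \rangle _\mathrm{E}\) can be sketched as a simple binning of particles by position. This is an illustrative implementation, not the authors' code; the function name and the handling of sparsely populated bins (returned as NaN) are assumptions.

```python
import numpy as np


def eulerian_bin_stats(x: np.ndarray, q: np.ndarray, edges: np.ndarray):
    """Eulerian mean and variance of a particle quantity q over spatial bins.

    x: particle positions; q: the particle quantity (e.g. u or du/dt);
    edges: monotonically increasing bin edges. Particles outside
    [edges[0], edges[-1]) are ignored; bins with fewer than two particles
    return NaN.
    """
    idx = np.digitize(x, edges) - 1          # bin index of each particle
    n_bins = len(edges) - 1
    mean = np.full(n_bins, np.nan)
    var = np.full(n_bins, np.nan)
    for i in range(n_bins):
        qi = q[idx == i]
        if qi.size >= 2:
            mean[i] = qi.mean()
            var[i] = qi.var()                # population variance within the bin
    return mean, var
```

Applying it with q set to u, and to \(\varDelta u/\varDelta t\), gives profiles to compare against \(\sigma ^2(x)\) and \(\partial \sigma ^2/\partial x\) evaluated at the bin centres.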

Simulated Eulerian profiles for the sinusoidal test case using the explicit scheme: a average particle velocity, b particle velocity variance, c average particle acceleration, d variance of particle velocity increments. Profiles are formed by calculating an average or variance over all particles residing in the ith discrete spatial bin. The solid blue line denotes results when the homogeneous model (Eq. 11) was used with \(\varDelta t = 0.01\). Note that \(\varDelta t = 4.0\) is not shown because so many particles were rogue that meaningful profiles could not be obtained

Same as Fig. 5 except that the implicit integration scheme was used

3.4.1 Homogeneous Model

When the model lacks correction terms for heterogeneity (Eq. 11), the mean particle velocity correctly remains zero everywhere (Fig. 5a), but the particles do not assume the correct velocity variance profile (Fig. 5b). This is because the mean acceleration required for the particles to assume the correct velocity variance is not properly applied (Fig. 5c). In other words, a mean acceleration is required for particles to assume a heterogeneous \(\sigma ^2(x)\) profile. Since Eq. 11 has zero mean acceleration, un-mixing occurs regardless of the timestep when \(\sigma ^2\) is heterogeneous (Fig. 2a).

3.4.2 Inhomogeneous Model, Implicit Scheme

When the effects of instability are removed from the inhomogeneous model by using an implicit scheme, numerical accuracy dictates the degree to which un-mixing occurs. Numerical errors lead to particle ensembles that deviate from the ‘exact’ Eulerian statistics specified as inputs (Fig. 6). Errors induce a non-zero Eulerian mean particle velocity (Fig. 6a) and prevent the particles from assuming the correct variance distribution (Fig. 6b). When the timestep is too large, the model fails to correctly represent the mean acceleration that corrects for the effects of heterogeneity (Fig. 6c) and the variance of the velocity increments (Fig. 6d). When the timestep is extremely large, the model correctly predicts a zero ensemble mean particle velocity everywhere, but predicts an incorrect, uniform velocity variance profile. This uniform variance profile explains why no un-mixing is observed in Fig. 3e.

The mean velocity induced by numerical errors (Fig. 6a) appears to be consistent with the un-mixing patterns shown in Fig. 3e–h. The change in sign of \(\langle u \rangle _\mathrm{E}\) at \(x/L\approx 0.75\) causes a convergence, while the change in sign at \(x/L\approx 0.25\) causes a divergence. However, Fig. 3e–h shows that the convergence at \(x/L\approx 0.75\) is much larger than the divergence at \(x/L\approx 0.25\). This is because the velocity variance (or standard deviation) is much smaller at \(x/L\approx 0.75\), so the induced mean velocity has a much larger effect there (i.e., \(\langle u \rangle _\mathrm{E}/\sigma \) is much larger at \(x/L\approx 0.75\)).

3.4.3 Inhomogeneous Model, Explicit Scheme

The explicit scheme showed similar behaviour in the induced mean velocity and acceleration profiles as the timestep was varied (Fig. 5a, c). The primary difference from the implicit scheme was that, when the timestep was moderately large, the explicit scheme added too much energy (Fig. 5b, d). An exceptionally large spike in particle energy can be found near \(x/L\approx 0.75\), which corresponds to the most probable location of instability (Fig. 4). Thus, instabilities appear to add erroneous energy to the particles.

Interestingly, the un-mixing patterns for the explicit scheme (Fig. 3a–d) are opposite to those of the implicit scheme: a strong divergence, rather than a convergence, is found at \(x/L\approx 0.75\). This is probably related to the presence of instabilities. As shown above, instabilities add erroneous energy, which is strongest at \(x/L\approx 0.75\). This energy is liable to create a diffusive effect that causes particles to vacate this region, resulting in a divergence. However, this is difficult to demonstrate directly, as the effects of instability and numerical inaccuracy cannot be readily separated for the explicit scheme.

3.5 Effect of Numerical Interpolation and Differentiation

In the above methodology, coefficient evaluations and spatial derivatives were exact, since explicit equations were available for \(\sigma ^2\) and \(\overline{\varepsilon }\). However, in most real situations, only discrete ‘gridded’ data are available. This means that to evaluate, e.g., \(\sigma ^2(x_i)\), a numerical interpolation scheme must be used. Additionally, spatial derivatives such as \(\partial \sigma ^2/\partial x_i\) must be estimated numerically. Since the above section found that the numerical accuracy of integrating the differential equation for \(u_i\) affected the degree to which the well-mixed condition was satisfied, it is reasonable to expect that the accuracy of the interpolation and spatial differentiation schemes may also play a role.

The previous simulations were repeated using a discrete grid of 20 points, with linear interpolation used for function evaluations between grid points. Spatial derivatives were calculated at each grid point using a centred finite difference scheme and then linearly interpolated between grid points (both operations are second-order accurate). With this approach, the results in Figs. 2 and 3 did not change significantly. As long as the Eulerian grid was fine enough to adequately resolve mean spatial gradients, numerical errors in the differencing and interpolation schemes had a minimal overall effect on the ability of model outputs to match the specified input fields. In the following section, cases are noted where very large gradients in \(\sigma ^2(x)\) made the gradients difficult to estimate numerically, and some simple solutions are suggested.
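A sketch of this gridded approach on a periodic domain: centred differences at the nodes, followed by linear interpolation (with wrap-around) of both the field and its derivative to the particle positions. The 20-point grid follows the text, but the sinusoidal profile in the example is a hypothetical stand-in for the test-case input, and the function names are illustrative; `np.interp` with its `period` argument handles the periodic wrap.

```python
import numpy as np


def periodic_gradient(f: np.ndarray, dx: float) -> np.ndarray:
    """Second-order centred difference on a uniform periodic grid."""
    return (np.roll(f, -1) - np.roll(f, 1)) / (2.0 * dx)


def interp_periodic(xq, x_grid: np.ndarray, f_grid: np.ndarray, period: float):
    """Linear interpolation of gridded values to particle positions (periodic domain)."""
    return np.interp(xq, x_grid, f_grid, period=period)


if __name__ == "__main__":
    L = 1.0
    n = 20                                              # 20 grid points, as in the text
    x_grid = np.arange(n) * L / n
    # Hypothetical sinusoidal variance profile, for illustration only
    sigma2 = 1.0 + 0.5 * np.sin(2.0 * np.pi * x_grid / L)
    dsigma2_dx = periodic_gradient(sigma2, L / n)
    xp = np.array([0.03, 0.51, 0.98])                   # example particle positions
    print(interp_periodic(xp, x_grid, sigma2, L))
    print(interp_periodic(xp, x_grid, dsigma2_dx, L))
```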

4 Channel Flow Test Case

4.1 Test Case Set-Up

The simplicity of the above sinusoidal test case provided a convenient means for testing the given numerical schemes. However, such a case is clearly unphysical. To demonstrate that the above analysis still holds in a more realistic case, a channel flow was considered. The channel-flow direct numerical simulation (DNS) data of Kim et al. (1987) and Mansour et al. (1988) were used to drive the dispersion simulations. Horizontally averaged profiles were calculated from the DNS dataset in order to set up a one-dimensional inhomogeneous flow that could be used to test the Reynolds-averaged models. In this section, only the isotropic model is considered, with testing of the anisotropic model left for the next section.

Vertical profiles of the normalized mean velocity U, the velocity variance \(\sigma ^2\), and the turbulent dissipation rate \(\overline{\varepsilon }\) are given in Fig. 7; these profiles were defined on 50 uniform grid points. Dimensional values are normalized by appropriate combinations of the channel half-height \(\delta \) and the friction velocity \(u_\tau \). The simulations were set up such that particle position and velocity evolved in time in all three Cartesian coordinate directions, although only transport in the wall-normal direction is examined. The mean velocity was equal to U in the streamwise (x) direction and zero in the other directions. The Eulerian velocity variance was equal to \(\sigma ^2\) in all three Cartesian directions (isotropic), where \(\sigma ^2=\frac{2}{3}k\) and k is the turbulent kinetic energy. A total of \(10^5\) particles were released uniformly at \(t=0\) and simulated for a period of \(T=\delta \,u_\tau ^{-1}\), with \(C_0\) set equal to 4.0.

4.1.1 Boundaries

The zero-flux boundaries raise two issues that need consideration. The first relates to how the boundaries should affect particle motion. Because the particles are simply massless fluid parcels, in reality they generally do not impact the wall: as a fluid particle approaches the wall, its velocity is damped to zero by viscosity. Thus particle velocities should approach zero at the lower wall, and the vertical velocity component should approach zero at the upper boundary. The models used herein do not include a viscous sublayer model, so particles do not necessarily obey these constraints. The strategy used was to ensure that all variables go to zero at the lowest grid point, and that all gradients and vertical fluxes go to zero at the highest grid point. With this treatment, particles tended to follow the zero-flux boundary conditions on their own. Particles that still crossed boundaries did so because of numerical inaccuracies, and in general fewer wall crossings were observed as the numerical solution converged. To ensure the boundary conditions were always enforced regardless of numerical errors, perfect reflection was used.
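Perfect reflection at the two zero-flux boundaries can be implemented as below. This is a minimal sketch assuming positions normalized by \(\delta \), with the wall at \(z=0\) and the upper boundary at \(z=1\) (these defaults, and the function name, are assumptions), and assuming a particle never overshoots a boundary by more than the domain height in a single step.

```python
import numpy as np


def reflect(z: np.ndarray, w: np.ndarray, z_bottom: float = 0.0, z_top: float = 1.0):
    """Perfect reflection at zero-flux boundaries.

    A particle that crosses a boundary during a step is mirrored back into the
    domain and its wall-normal velocity changes sign. Returns new arrays.
    """
    z = z.copy()
    w = w.copy()
    below = z < z_bottom
    z[below] = 2.0 * z_bottom - z[below]    # mirror about the lower wall
    w[below] = -w[below]
    above = z > z_top
    z[above] = 2.0 * z_top - z[above]       # mirror about the upper boundary
    w[above] = -w[above]
    return z, w
```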

Another boundary-related issue concerns the calculation of vertical gradients. In the previous test case, a central differencing scheme was used throughout, which was possible because the boundaries were periodic. Near zero-flux boundaries, a forward (backward) scheme must be used at the lower (upper) boundary. Switching schemes near the walls degraded the model’s ability to satisfy the well-mixed condition in those regions, causing un-mixing near the boundaries regardless of how small a timestep was used. Two remedies were found that eliminated this. One was to linearly interpolate the gridded data onto a finer grid so that near-boundary gradients were well resolved. Another was to use the scheme required at the lower wall (i.e., a forward scheme) throughout the domain. This still requires a change of scheme at the upper boundary, but as long as gradients are small there, it did not seem to create a problem. In what follows, a second-order forward differencing scheme was used for all nodes except the top two, where a second-order backward scheme was used. Furthermore, 50 vertical grid points were chosen so that there was at least one grid point between the lower wall and the point where \(\sigma ^2\) begins to rapidly decrease.
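The differencing strategy described above (second-order forward differences everywhere except the top two nodes, which use second-order backward differences) can be written as follows for a uniform vertical grid. This is an illustrative sketch with a hypothetical function name; both stencils are exact for quadratic profiles, which provides a quick check.

```python
import numpy as np


def vertical_gradient(f: np.ndarray, dz: float) -> np.ndarray:
    """Second-order forward differences at all nodes except the top two,
    where second-order backward differences are used."""
    n = f.size
    if n < 4:
        raise ValueError("need at least 4 nodes for these stencils")
    g = np.empty(n)
    i = np.arange(n - 2)                          # nodes 0 .. n-3: forward stencil
    g[i] = (-3.0 * f[i] + 4.0 * f[i + 1] - f[i + 2]) / (2.0 * dz)
    for j in (n - 2, n - 1):                      # top two nodes: backward stencil
        g[j] = (3.0 * f[j] - 4.0 * f[j - 1] + f[j - 2]) / (2.0 * dz)
    return g
```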

4.2 Particle Position p.d.f.s

First, the model’s ability to satisfy the WMC or thermodynamic constraint was assessed. Figure 8 gives the probability distribution of vertical particle position at the end of the simulation. The results showed behaviour similar to that of the previous ‘sinusoidal’ test case. Using the forward Euler scheme resulted in a substantial number of rogue trajectories, which decreased as the timestep was decreased. Rogue trajectories appeared less frequent than in the sinusoidal case, but this is likely related to the simulation duration: over a longer simulation, rogue trajectories would continue to accumulate.

For moderately small timesteps (\(\varDelta t=0.01\,\delta \,u_\tau ^{-1}\)), the backward Euler scheme gave a better-mixed plume than the forward Euler scheme, in addition to ensuring stability. For very small timesteps (\(\varDelta t=10^{-4}\,\delta \,u_\tau ^{-1}\)), both schemes gave a very well-mixed plume and zero rogue trajectories. Using Eq. 20, a timestep of \(\varDelta t \lesssim 0.004\,\delta \,u_\tau ^{-1}\) is estimated to be required for stability. The simulations confirmed that rogue trajectories started appearing at roughly \(\varDelta t > 10^{-3}\,\delta \,u_\tau ^{-1}\). Notably, if a small amount of un-mixing is tolerable, the implicit scheme allowed \(\varDelta t\) to be increased by one or even two orders of magnitude while remaining stable.

Probability density functions of particle position for the channel-flow test case using the inhomogeneous model for \(u_i\) (Eq. 8). Columns correspond to varying timestep (values given at the top of columns). Rows correspond to varying integration scheme, with panels a–c using the explicit forward Euler scheme (Eq. 12), and panels d–f using the implicit backward Euler scheme (Eq. 14). R is the fraction of particles that are ‘rogue’, or \(\big | u_i \big | > 10\,\mathrm {max}(\sigma )\)

4.3 Eulerian Profiles of Particle Velocity

Figures 9 and 10 depict the ability of the models to match Eulerian statistics given as inputs for several different timestep choices. As expected, refining the timestep leads to a convergence of the solution toward the specified Eulerian statistics. Using too large a timestep induces a mean vertical velocity that tends to un-mix particle plumes. Instabilities resulting from the forward Euler integration scheme tended to add far too much energy to the particles, resulting in overprediction of the variance of the vertical velocity and velocity increments. In general, numerical errors in the implicit backward Euler scheme tended to underpredict variances. For intermediate timesteps (e.g., \(\varDelta t = 0.01\,\delta \,u_\tau ^{-1}\)), the implicit scheme was superior to the explicit scheme at predicting Eulerian profiles, which is likely due to errors associated with instabilities when the explicit scheme was used.

Eulerian particle velocity statistics for the channel-flow test case when an explicit forward Euler integration scheme was used. Calculated profiles are compared to exact profiles for a average particle vertical velocity, b particle vertical velocity variance, c average vertical particle acceleration, d variance of particle vertical velocity increments. Profiles are formed by calculating an average or variance over all particles residing in the ith discrete spatial bin

Eulerian particle velocity statistics for the channel-flow test case when an implicit backward Euler integration scheme was used. Calculated profiles are compared to exact profiles for a average particle vertical velocity, b particle vertical velocity variance, c average vertical particle acceleration, d variance of particle vertical velocity increments. Profiles are calculated by performing an average or variance over all particles residing in the ith discrete spatial bin

5 Large-Eddy Simulation and Anisotropic Models

5.1 Model Formulation

The above analysis was performed in the context of modelling the fluctuating velocity resulting from Reynolds decomposition. A natural generalization can be made to extend the analysis to large-eddy simulation (LES) models. This analysis is also relevant to Reynolds-averaged models, as the close similarity of the LES and Reynolds-averaged formulations implies that the LES models can be used to illustrate the feasibility of the methods in general anisotropic flow scenarios.

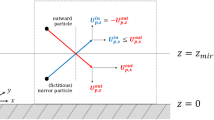

In LES, turbulent motions with length scales larger than the numerical grid scale \(\varDelta \) are resolved directly by the filtered Navier–Stokes equations, while smaller scales are modelled. Using this approach, the evolution of a particle’s position with time can be written as

\[
\frac{\mathrm{d}x_{\mathrm{p},i}}{\mathrm{d}t} = \tilde{u}_i + u_{\mathrm{s},i},
\]

where \(\tilde{u}_i\) is the resolved particle velocity, which is available from the LES solution assuming the particle velocity is equal to the Eulerian fluid velocity at the point \(x_{\mathrm{p},i}\). \(u_{\mathrm{s},i}\) is the unresolved (subfilter-scale) particle velocity, which must be modelled. This approach has a considerable advantage over the traditional Reynolds-averaged approach described in Sect. 2 in that \(u_{\mathrm{s},i}\) presumably contains only the small ‘universal’ scales of motion, which are more likely to follow standard gradient-diffusion theory.

LES Lagrangian dispersion models have generally evolved in parallel with Reynolds-averaged models. The underlying assumption in essentially all LES models is that the modelled velocity fluctuations about the ensemble mean can be applied to fluctuations about the filtered velocity (with a few minor modifications). In theory, this assumption seems reasonable, as the ensemble averaging operator is simply a filter over all scales.

It is assumed that the subfilter-scale velocity has a Gaussian distribution of a form analogous to Eq. 15, but with the Reynolds stress tensor \(R_{ij}\) replaced by the subfilter-scale stress tensor \(\tau _{ij}\). As in Eq. 15, \(\tau _{ij}\) must be positive semi-definite and non-singular (realizable). A method to ensure realizability of the stress tensor is presented in Sect. 5.3.

The assumed form for \(P_\mathrm{E}\) can be substituted into the Fokker–Planck equation in the same way as in the Reynolds-averaged case, which yields an equation identical to Eq. 16 except that \(u_i\) is replaced by \(u_{\mathrm{s},i}\), \(R_{ij}\) is replaced by \(\tau _{ij}\), and \(\varepsilon \) is an instantaneous, local value. On average, \(\varepsilon \) represents approximately the same quantity as in Eq. 16: if \(\varDelta \) lies in the inertial subrange (a critical assumption for most LES models), dissipation is still unresolved in LES, so the rate at which turbulence is removed by dissipation should on average be the same in the Reynolds-averaged and LES approaches. However, the precise value of \(\varepsilon \) that is chosen is not critical for the present discussion, as our goal is simply to match the prescribed distribution of \(\varepsilon \), whatever it may be.

5.2 Eulerian LES Momentum Solution

A large-eddy simulation was performed for a channel flow to drive the 3D anisotropic model. The LES model is described in full detail in Stoll and Porté-Agel (2006), and only essential details are summarized here. As introduced above, LES resolves turbulent motions with length scales larger than the characteristic grid scale \(\varDelta \), which for hexahedral grid cells of size \(\varDelta _x \times \varDelta _y \times \varDelta _z\) can be given by \(\left( \varDelta _x \varDelta _y \varDelta _z\right) ^{1/3}\). In essence, LES generalizes the Reynolds-averaged approach, which corresponds to the limit in which all turbulent motions lie below the filter scale. In both cases, the effects of the subfilter scales must be modelled.

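The deviatoric component of the subfilter-scale stress tensor was modelled using the Smagorinsky approach

\[
\tau _{ij} = \frac{2}{3}k_\mathrm{s}\,\delta _{ij} - 2\left( C_\mathrm{s}\varDelta \right) ^2 \big|\tilde{S}\big|\,\tilde{S}_{ij},
\]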

where \(k_\mathrm{s}\) is the subfilter-scale turbulent kinetic energy, \(\tilde{S}_{ij}=\frac{1}{2}\left( \frac{\partial \tilde{u}_i}{\partial x_j}+\frac{\partial \tilde{u}_j}{\partial x_i}\right) \) is the resolved strain rate tensor, and \(|\tilde{S}|=\left( 2\tilde{S}_{ij}\tilde{S}_{ij}\right) ^{1/2}\). \(C_\mathrm{s}\) is the Smagorinsky coefficient, which is scale-dependent and calculated dynamically along fluid particle trajectories following Stoll and Porté-Agel (2006). Test filtering for the scale-dependent scheme is performed at scales of \(2\varDelta \) and \(4\varDelta \).

The subfilter-scale dissipation rate (needed by the dispersion model) was calculated following the recommendation of Meneveau and O’Neil (1994), who suggested the scaling \(\varepsilon \sim k_\mathrm{s} \big|\tilde{S}\big|\). An initial guess for \(k_\mathrm{s}\) was calculated using the model of Mason and Callen (1986), \(k_\mathrm{s}=\left( \varDelta C_\mathrm{s} \big|\tilde{S}\big|\right) ^2/0.3\), which is equivalent to the frequently used model of Yoshizawa (1986) to within a constant.
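The subfilter-scale inputs to the dispersion model can be evaluated pointwise from the resolved velocity gradient as sketched below. The grid scale in the example uses the \(2\pi \delta \times 2\pi \delta \times \delta \) domain and \(32^3\) cells of Sect. 5.4; the Smagorinsky coefficient value (which the text computes dynamically), the shear used in the example, the prefactor `c_eps` in \(\varepsilon \sim k_\mathrm{s}|\tilde{S}|\), the sign convention of the total stress tensor, and the function name are assumptions for illustration, not values from the text.

```python
import numpy as np


def sgs_quantities(grad_u: np.ndarray, c_s: float, delta: float, c_eps: float = 1.0):
    """Strain rate, subfilter TKE, dissipation estimate and stress at one point.

    grad_u[i, j] = d u_i / d x_j (resolved velocity gradient).
    k_s uses the Mason and Callen (1986) form quoted in the text.
    c_eps is a hypothetical O(1) prefactor: only the scaling eps ~ k_s |S| is given.
    """
    s = 0.5 * (grad_u + grad_u.T)                  # resolved strain rate tensor S_ij
    s_mag = np.sqrt(2.0 * np.sum(s * s))           # |S| = (2 S_ij S_ij)^(1/2)
    k_s = (delta * c_s * s_mag) ** 2 / 0.3         # initial guess for k_s
    eps = c_eps * k_s * s_mag                      # dissipation scaling
    # Assumed total stress: (2/3) k_s delta_ij - 2 (C_s Delta)^2 |S| S_ij
    tau = (2.0 / 3.0) * k_s * np.eye(3) - 2.0 * (c_s * delta) ** 2 * s_mag * s
    return s, s_mag, k_s, eps, tau


if __name__ == "__main__":
    dx = dy = 2.0 * np.pi / 32                     # 2*pi*delta x 2*pi*delta x delta, 32^3 cells
    dz = 1.0 / 32
    delta = (dx * dy * dz) ** (1.0 / 3.0)          # grid scale Delta
    grad_u = np.zeros((3, 3))
    grad_u[0, 2] = 5.0                             # hypothetical shear du/dz
    s, s_mag, k_s, eps, tau = sgs_quantities(grad_u, c_s=0.1, delta=delta)
    print(delta, s_mag, k_s, eps)
```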

5.3 Ensuring a Realizable Stress Tensor

The anisotropic Lagrangian particle dispersion model requires specification of the total (i.e., deviatoric plus normal components) stress tensor. Many turbulence models compute the deviatoric and normal components separately, which means there is no guarantee that the total stress tensor is realizable. In order for \(\tau _{ij}\) (or \(R_{ij}\)) to be realizable, the normal stresses must be large enough that the three invariants of the tensor are positive (Sagaut 2002), i.e.,

\[
I_1 = \tau _{ii} > 0, \qquad I_2 = \frac{1}{2}\left( \tau _{ii}\tau _{jj} - \tau _{ij}\tau _{ij}\right) > 0, \qquad I_3 = \det \left( \tau _{ij}\right) > 0.
\]

In practice, the invariants must be larger than some small positive threshold \(I_\epsilon \) in order to avoid marginal realizability. \(I_\epsilon \) could not be made arbitrarily small; for this test case, \(I_\epsilon =10^{-5}\) was sufficient to eliminate all rogue trajectories. Increasing this value by one or two orders of magnitude had no noticeable impact on results. Decreasing it by one or two orders of magnitude resulted in very infrequent rogue trajectories (fewer than 10 out of \(10^{5}\) total trajectories), which did not seem to affect results. The required value of \(I_\epsilon \) may depend on the flow and on model details.

The following methodology was used to find a \(k_\mathrm{s}\) that ensured a realizable stress tensor. At every Eulerian grid point, \(k_\mathrm{s}\) was first estimated as \(k_\mathrm{s}=\left( \varDelta C_\mathrm{s} \big|\tilde{S}\big|\right) ^2/0.3\), and the resulting \(\tau _{ij}\) was checked to ensure that its three invariants were greater than \(I_\epsilon \). If not, \(k_\mathrm{s}\) was incrementally increased by 5 % until all invariants were above the threshold. This is not the most efficient algorithm; if greater computational efficiency is desired, a faster converging method such as bisection or the Newton–Raphson method could be used. Even when \(\tau _{ij}\) was realizable at every Eulerian grid node, the tensor interpolated to a particle’s position could fail to be realizable, particularly near the wall. Thus, a similar check (and, if needed, correction) was performed on the interpolated \(\tau _{ij}\). The additional computational expense of these checks and corrections was not substantial, as a correction was most commonly needed only for particles between the lowest computational grid node and the wall.
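The 5 % increment procedure can be sketched as follows, writing the total tensor as \(\frac{2}{3}k_\mathrm{s}\delta _{ij}\) plus a trace-free deviatoric part (an assumed decomposition, consistent with \(k_\mathrm{s}\) being the subfilter-scale turbulent kinetic energy). For a symmetric tensor, positive \(I_1\), \(I_2\) and \(I_3\) is equivalent to all three eigenvalues being positive. The function names and the iteration cap are hypothetical, and \(I_\epsilon \) is taken in the same normalized units as in the text.

```python
import numpy as np


def invariants(tau: np.ndarray):
    """Principal invariants I1, I2, I3 of a symmetric 3x3 tensor."""
    i1 = np.trace(tau)
    i2 = 0.5 * (i1 ** 2 - np.sum(tau * tau))       # (tau_ii tau_jj - tau_ij tau_ij) / 2
    i3 = np.linalg.det(tau)
    return i1, i2, i3


def make_realizable(k_s: float, tau_dev: np.ndarray, i_eps: float = 1e-5,
                    growth: float = 1.05, max_iter: int = 10_000):
    """Increase k_s by 5 % per step until tau = (2/3) k_s I + tau_dev has all
    three invariants above i_eps. Returns the adjusted k_s and tau."""
    if k_s <= 0.0:
        # Multiplicative increments cannot move k_s away from zero
        raise ValueError("k_s must be positive")
    for _ in range(max_iter):
        tau = (2.0 / 3.0) * k_s * np.eye(3) + tau_dev
        if min(invariants(tau)) > i_eps:
            return k_s, tau
        k_s *= growth
    raise RuntimeError("realizability not reached within max_iter")
```

The same routine can be applied a second time to the tensor interpolated to each particle position, mirroring the two-stage check described above.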

5.4 Test Case Set-Up

A large-eddy simulation of a very high Reynolds number channel flow was performed; the test case was essentially the same as in Porté-Agel et al. (2000). The flow was bounded in the vertical direction by a lower no-slip, rough wall, and an upper zero-stress rigid lid at \(z=\delta \). The lower wall had a characteristic roughness length of \(z_0=10^{-4}\,\delta \), the lateral boundaries were periodic, and the flow was driven by a spatially constant horizontal pressure gradient of \(F_x= 3.125\times 10^{-3} \,u_\tau ^2\). The domain of size \(2\pi \delta \times 2\pi \delta \times \delta \) was discretized into \(32\times 32\times 32\) uniform hexahedral cells, where \(\delta \) is the channel half-height. This grid resolution is quite coarse for this flow and was intentionally chosen to emphasize the effects of the unresolved scales.

For simplicity, the Lagrangian dispersion simulations were driven by a single instantaneous realization of the LES. This kept the input data small enough to be easily distributed with the code provided in the supplementary material. Such a case is physically equivalent to a flow that is highly heterogeneous in space but steady in time, similar to a Reynolds-averaged flow with highly complex geometry. From the point of view of a particle, the difference between heterogeneity in space and unsteadiness in time is not likely to be significant, as both enter the total derivative term in the same way.

In the dispersion simulations, \(10^5\) particles were instantaneously released from a uniform source and tracked over a period of \(T=\delta \,u_\tau ^{-1}\). Spatial derivatives of Eulerian fields were calculated using a second-order central finite differencing scheme in the horizontal. In the vertical, a second-order forward scheme was used except at the top two nodes, where a backward scheme was used. A trajectory was considered rogue when \(\big | u_{\mathrm{s},i} \big | > 10\,\mathrm {max}(\frac{2}{3}k_\mathrm{s})^{1/2}\) for any component of \(u_{\mathrm{s},i}\).

5.5 Input Profiles

For reference, various LES flow profiles are given in Fig. 11, which gives a sense of the importance of the subfilter-scale model. On average, about 90 % of the turbulent kinetic energy was resolved by the numerical grid. At maximum, the subfilter-scale turbulent kinetic energy accounted for about 25 % of the total turbulent kinetic energy. Note that all variables were forced to zero at the wall for reasons discussed in the previous test case. This appears especially abrupt for this test case given that the numerical grid is quite coarse. As the LES grid is refined, this assumption will improve.

Probability density functions of particle position for the LES test case with varying timestep (values given in figure). a–c shows results for the explicit forward Euler scheme without ensuring that \(\tau _{ij}\) is realizable, d–f shows results for the explicit forward Euler scheme while ensuring that \(\tau _{ij}\) is realizable, and g–i shows results for the implicit backward Euler scheme (with realizable \(\tau _{ij}\)). R is the fraction of particles that were ‘rogue’, or \(\big | u_{\mathrm{s},i} \big | > 10\,\mathrm {max}(\frac{2}{3}k_\mathrm{s})^{1/2}\), and S is the entropy of the plume

5.6 Particle Position p.d.f.s and Eulerian Profiles

Figure 12 shows p.d.f.s of vertical particle position using various schemes. When an explicit forward Euler scheme was used and no efforts were made to ensure a realizable \(\tau _{ij}\), rogue trajectories became significant (Fig. 12a–c). By the end of this simulation, roughly 20 % of particles were rogue regardless of timestep. Surprisingly, the number of rogue trajectories increased with decreasing timestep.

Ensuring that \(\tau _{ij}\) was realizable significantly decreased the number of rogue trajectories (Fig. 12d–f). However, even for a very small timestep (\(\varDelta t = 10^{-4}\,\delta \,u_\tau ^{-1}\)), the explicit integration scheme still produced over 100 rogue trajectories. It is likely that the timestep would have to be extremely small to eliminate all rogue trajectories. As discussed previously, even a small number of rogue trajectories can be problematic. If the simulation is allowed to run long enough, there may be a point where enough rogue particles accumulate that results are noticeably affected.

As expected, the implicit backward Euler scheme produced no rogue trajectories, provided that \(\tau _{ij}\) is realizable. The timestep did not have a significant effect on the implicit model’s ability to satisfy the second law of thermodynamics. This is likely related to the fact that the resolved velocity (which clearly satisfies the thermodynamic constraint) accounts for the majority of the total velocity. When a relatively large timestep was used with the explicit numerical scheme (\(\varDelta t = 10^{-2}\,\delta \,u_\tau ^{-1}\)), some un-mixing occurred. The presence of rogue trajectories could have had some influence on this un-mixing, since the implicit scheme with an equivalent timestep showed almost no un-mixing.

Figure 13 shows Eulerian profiles of particle velocity statistics near the wall when the implicit integration scheme was used. Well away from the wall, the model was able to match the specified Eulerian profiles regardless of timestep, because gradients are very small in this region. As with the previous test cases, using too large a timestep meant that Eulerian particle velocity statistics were underpredicted in regions of large gradients (i.e., near the wall) relative to the exact profiles specified as inputs. As the timestep was reduced, particle velocity statistics converged to the exact values. Although not shown, if an isotropic model that neglected the off-diagonal components of \(\tau _{ij}\) were used, the model would be able to match profiles of \(\langle k_\mathrm{s}^2 \rangle \) and \(\langle \left( \text {d}W_\mathrm{s}\right) ^2\rangle _\mathrm{E}/\varDelta t\). However, all cross-correlations would clearly be zero in that case.

Eulerian particle velocity profiles for the LES test case with an implicit integration scheme: a sub-filter scale turbulent kinetic energy, b covariance between \(u_\mathrm{s}\) and \(w_\mathrm{s}\), and c variance of vertical velocity increments. Profiles are formed by calculating an average or variance over all particles residing in the ith discrete spatial bin

6 Summary

This study explored aspects of the numerical solution of Lagrangian stochastic model equations. Isotropic, Reynolds-averaged models were examined using a simple sinusoidal turbulence field, as well as using channel flow data. The generalized three-dimensional and anisotropic model formulations were examined using large-eddy simulations, although the results are directly applicable to Reynolds-averaged models as well.

It was found that so-called rogue trajectories result from numerical instability of the temporal integration scheme. Because the velocity evolution equations are stiff, very small timesteps are required to maintain stability when an explicit scheme is used. A natural remedy is to use an implicit numerical scheme. Formulating a fully implicit scheme is complicated by the coupling of the velocity evolution equation with the position evolution equation, and by the non-linearity of the velocity evolution equation. In addition, for three-dimensional, anisotropic turbulence, the three components of the velocity evolution equation are non-linearly coupled. To address these problems, the equations were linearized by rewriting the total derivative term using the Lagrangian definition, and coefficients were ‘lagged’ to avoid a costly iterative scheme. The resulting implicit scheme was shown to be unconditionally stable. For the anisotropic model, it was critical that the velocity covariance tensor be realizable; otherwise rogue trajectories frequently occurred regardless of the size of the timestep. Realizability was enforced by ensuring that the turbulent kinetic energy was large enough for all three tensor invariants to exceed a specified threshold.
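
One way to implement the realizability fix described above is sketched below, assuming \(\tau _{ij}\) is stored as a symmetric 3\(\times \)3 nested list. For a symmetric tensor, all three principal invariants being positive is equivalent to all eigenvalues being positive, i.e. to realizability. The increment rule (add an isotropic amount to the diagonal, which raises the kinetic energy while leaving the off-diagonal stresses unchanged), the threshold, and the function names are illustrative assumptions rather than the paper's exact procedure.

```python
def invariants(t):
    """Principal invariants (I1, I2, I3) of a symmetric 3x3 tensor given as nested lists."""
    (a, d, e), (_, b, f), (_, _, c) = t  # only the upper triangle is read
    i1 = a + b + c
    i2 = a * b + b * c + a * c - d * d - e * e - f * f
    i3 = a * b * c + 2.0 * d * e * f - a * f * f - b * e * e - c * d * d  # determinant
    return i1, i2, i3


def make_realizable(t, threshold=1e-6, growth=0.05, max_iter=10000):
    """Return a copy of t whose three invariants all exceed `threshold`.

    Hypothetical rule: repeatedly add delta to each diagonal entry (shifting every
    eigenvalue by delta and raising k = I1/2 by 1.5*delta); off-diagonals are untouched.
    """
    t = [list(row) for row in t]
    for _ in range(max_iter):
        i1, i2, i3 = invariants(t)
        if min(i1, i2, i3) > threshold:
            return t
        delta = growth * max(i1 / 3.0, threshold)  # increment scaled by the current k
        for k in range(3):
            t[k][k] += delta
    raise RuntimeError("tensor could not be made realizable within max_iter steps")


# A non-realizable input: the (1,2) covariance exceeds sqrt(tau_11 * tau_22).
tau = [[1.0, 0.9, 0.0], [0.9, 0.5, 0.0], [0.0, 0.0, 0.4]]
fixed = make_realizable(tau)
```

Because each increment shifts all eigenvalues by the same positive amount, the loop terminates once the smallest eigenvalue is positive and large enough for the invariants to clear the threshold; an already realizable tensor is returned unchanged.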

In addition to stability, the degree to which statistics of the numerical solution matched the specified inputs was examined. Fortunately, the exact statistics of the solution are always known, since they are simply the model inputs. The fundamental task of the numerical solution is to provide an ensemble of particles whose Eulerian velocity statistics match those that were originally specified. As expected, the size of the chosen timestep determined the degree to which computed particle statistics matched the specified statistics. Besides failing to match the specified statistics, too large a timestep also induced a mean particle flux, violating the well-mixed condition. With an extremely large timestep, the well-mixed condition could nonetheless be satisfied even though the Eulerian particle statistics were incorrect. Thus, it is recommended that numerical solutions be verified by comparing computed Eulerian velocity statistics with those specified as inputs.
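
As a toy illustration of why timestep size controls how well the specified statistics are reproduced, assume a homogeneous one-dimensional Langevin equation \(\text {d}u = -(u/T_L)\,\text {d}t + (2\sigma ^2/T_L)^{1/2}\,\text {d}W\), whose exact stationary variance is \(\sigma ^2\) (\(T_L\) and \(\sigma \) are illustrative symbols, not the paper's notation). With \(r = \varDelta t/T_L\) and \(\xi ^n\) a standard normal variate independent of \(u^n\), equating \(\langle u^2\rangle \) before and after one step gives the stationary variance of each discrete scheme:

\[
\begin{aligned}
\text{explicit:}\quad u^{n+1} &= (1-r)\,u^n + \sqrt{2\sigma^2 r}\,\xi^n
&&\Rightarrow\quad \langle u^2\rangle = (1-r)^2\langle u^2\rangle + 2\sigma^2 r
&&\Rightarrow\quad \frac{\langle u^2\rangle}{\sigma^2} = \frac{2}{2-r},\quad 0<r<2,\\
\text{implicit:}\quad (1+r)\,u^{n+1} &= u^n + \sqrt{2\sigma^2 r}\,\xi^n
&&\Rightarrow\quad (1+r)^2\langle u^2\rangle = \langle u^2\rangle + 2\sigma^2 r
&&\Rightarrow\quad \frac{\langle u^2\rangle}{\sigma^2} = \frac{2}{2+r},\quad r>0.
\end{aligned}
\]

Both ratios tend to 1 as \(r \to 0\), with an error of order \(r\). The explicit scheme over-predicts the variance and diverges as \(r \to 2\), its stability limit, whereas the implicit scheme stays bounded for any \(r\) but under-predicts the variance (e.g. \(r = 1\) gives \(2/3\) of the exact value). In this toy the bias depends only on \(\varDelta t\) relative to the local Lagrangian time scale; it is not a reproduction of the inhomogeneous cases analysed in the paper.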

No scenario was found in which an explicit scheme was preferable to an implicit scheme. When the timestep required for stability of the explicit scheme is infeasibly small, the implicit scheme is preferable because it can provide reasonable results with a much larger timestep. For the anisotropic model, the implicit formulation requires inverting a 3\(\times \)3 matrix, which adds a small cost. However, this cost seems minor compared with the assurance of an unconditionally stable scheme. Furthermore, for moderate timesteps, the implicit scheme showed better overall performance than the explicit scheme. Thus, it is recommended to always use the implicit scheme.
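
To show where the 3\(\times \)3 inversion arises, consider a Thomson (1987)-type Gaussian model whose damping term is \(-\tfrac{1}{2}C_0\varepsilon \,\lambda _{ij}u_j\), with \(\lambda _{ij}\) the inverse of \(\tau _{ij}\) and \(u_j\) the velocity fluctuation. Assuming, for illustration, that only this term is evaluated at the new time level and all other terms are lagged into a right-hand side \(b_i\), each particle step requires solving \((\delta _{ij} + \tfrac{1}{2}C_0\varepsilon \varDelta t\,\lambda _{ij})\,u_j^{n+1} = b_i\). This is a simplified form, not the paper's full linearized scheme, and the function names are hypothetical. Because a realizable \(\tau _{ij}\) is symmetric positive-definite, so is \(\lambda _{ij}\), and the system matrix is then positive-definite and invertible for every \(\varDelta t \ge 0\).

```python
def det3(m):
    """Determinant of a 3x3 matrix stored as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def inverse3(m):
    """Inverse of a 3x3 matrix: adjugate (transposed cofactors) divided by the determinant."""
    d = det3(m)
    if d == 0.0:
        raise ZeroDivisionError("matrix is singular")
    # With cyclic indices, cofactor C[r][c] = m[r+1][c+1]*m[r+2][c+2] - m[r+1][c+2]*m[r+2][c+1],
    # and inverse[i][j] = C[j][i] / det.
    return [[(m[(j + 1) % 3][(i + 1) % 3] * m[(j + 2) % 3][(i + 2) % 3]
              - m[(j + 1) % 3][(i + 2) % 3] * m[(j + 2) % 3][(i + 1) % 3]) / d
             for j in range(3)]
            for i in range(3)]


def implicit_damping_update(rhs, tau, c0, eps, dt):
    """Solve (I + 0.5*c0*eps*dt*inv(tau)) u_new = rhs for u_new.

    `rhs` is assumed to hold u^n plus all lagged (explicit) drift terms and the random
    increment; only the damping term -(c0*eps/2) inv(tau) u is taken at the new time level.
    """
    lam = inverse3(tau)  # lambda_ij; requires a realizable (positive-definite) tau
    coef = 0.5 * c0 * eps * dt
    m = [[(1.0 if i == j else 0.0) + coef * lam[i][j] for j in range(3)] for i in range(3)]
    m_inv = inverse3(m)
    return [sum(m_inv[i][j] * rhs[j] for j in range(3)) for i in range(3)]
```

In the isotropic case \(\tau _{ij} = \sigma ^2\delta _{ij}\), the update reduces to dividing each component of the right-hand side by \(1 + C_0\varepsilon \varDelta t/(2\sigma ^2)\), the scalar backward Euler factor.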

Although only Gaussian models were examined, future work should explore the use of implicit numerical schemes for cases of skewed turbulence (e.g., Luhar and Britter 1989; Weil 1990). Skewed models present significant challenges, as the model equations themselves are not algebraically explicit. As such, formulation of an implicit numerical scheme will almost certainly involve an iterative approach. This will create a noticeable increase in computational cost, which may or may not be acceptable given the severity of rogue trajectories. Regardless, many of the results presented herein will likely still apply, such as the importance of numerical accuracy in satisfying the well-mixed condition and matching prescribed Eulerian velocity p.d.f.s.

References

Bailey BN, Stoll R, Pardyjak ER, Mahaffee WF (2014) Effect of canopy architecture on vertical transport of massless particles. Atmos Environ 95:480–489

Du S (1997) Universality of the Lagrangian velocity structure function constant (\({\cal C}_0\)) across different kinds of turbulence. Boundary-Layer Meteorol 83:207–219

Du Vachat R (1977) Realizability inequalities in turbulent flows. Phys Fluids 20:551–556

Hairer E, Wanner G (1996) Solving ordinary differential equations II: stiff and differential-algebraic problems, 2nd edn. Springer, Berlin, 614 pp

Kim J, Moin P, Moser R (1987) Turbulence statistics in fully developed channel flow at low Reynolds number. J Fluid Mech 177:133–166

Kloeden PE, Platen E (1992) Higher-order implicit strong numerical schemes for stochastic differential equations. J Stat Phys 66:283–314

Lamba H (2003) An adaptive timestepping algorithm for stochastic differential equations. J Comput Appl Math 161:417–430

Langevin P (1908) Sur la théorie du mouvement brownien. C R Acad Sci (Paris) 146:530–533

Legg BJ, Raupach MR (1982) Markov-chain simulation of particle dispersion in inhomogeneous flows: the mean drift velocity induced by a gradient in Eulerian velocity variance. Boundary-Layer Meteorol 24:3–13

Leveque RJ (2007) Finite difference methods for ordinary and partial differential equations: steady-state and time-dependent problems. Society for Industrial and Applied Mathematics, Philadelphia, PA, 357 pp

Lin J, Brunner D, Gerbig C, Stohl A, Luhar A, Webley P (eds) (2013) Lagrangian modeling of the atmosphere. American Geophysical Union, Washington, DC, 349 pp

Lin JC (2013) How can we satisfy the well-mixed criterion in highly inhomogeneous flows? A practical approach. In: Lin J, Brunner D, Gerbig C, Stohl A, Luhar A, Webley P (eds) Lagrangian modeling of the atmosphere. American Geophysical Union, Washington, DC, pp 59–69

Luhar AK, Britter RE (1989) A random walk model for dispersion in inhomogeneous turbulence in a convective boundary layer. Atmos Environ 23:1911–1924

Mansour NN, Kim J, Moin P (1988) Reynolds-stress and dissipation-rate budgets in a turbulent channel flow. J Fluid Mech 194:15–44

Mason PJ, Callen NS (1986) On the magnitude of the subgrid-scale eddy coefficient in large-eddy simulations of turbulent channel flow. J Fluid Mech 162:439–462

Mauthner S (1998) Step size control in the numerical solution of stochastic differential equations. J Comput Appl Math 100:93–109

Meneveau C, O’Neil J (1994) Scaling laws of the dissipation rate of turbulent subgrid-scale kinetic energy. Phys Rev E 49:2866–2874

Pope SB (1987) Consistency conditions for random-walk models of turbulent dispersion. Phys Fluids 30:2374–2379

Porté-Agel F, Meneveau C, Parlange MB (2000) A scale-dependent dynamic model for large-eddy simulations: application to a neutral atmospheric boundary layer. J Fluid Mech 415:261–284

Postma JV (2015) Timestep buffering to preserve the well-mixed condition in Lagrangian stochastic simulations. Boundary-Layer Meteorol 156:15–36

Postma JV, Yee E, Wilson JD (2012) First-order inconsistencies caused by rogue trajectories. Boundary-Layer Meteorol 144:431–439

Press WH, Teukolsky SA, Vetterling WT, Flannery BP (2007) Numerical recipes: the art of scientific computing. Cambridge University Press, Cambridge, U.K., 1256 pp

Rodean HC (1991) The universal constant for the Lagrangian structure function. Phys Fluids A 3:1479–1480

Rodean HC (1996) Stochastic Lagrangian models of turbulent diffusion. American Meteorological Society, Boston, MA, 84 pp

Sagaut P (2002) Large eddy simulation for incompressible flows: an introduction, 3rd edn. Springer, Berlin, 585 pp

Sawford BL (1986) Generalized random forcing in random-walk turbulent dispersion models. Phys Fluids 29:3582

Schumann U (1977) Realizability of Reynolds-stress turbulence models. Phys Fluids 20:721–725

Stoll R, Porté-Agel F (2006) Dynamic subgrid-scale models for momentum and scalar fluxes in large-eddy simulations of neutrally stratified atmospheric boundary layers over heterogeneous terrain. Water Resour Res 42:W01409

Thomson DJ (1984) Random walk modelling of diffusion in inhomogeneous turbulence. Q J R Meteorol Soc 110:1107–1120

Thomson DJ (1987) Criteria for the selection of stochastic models of particle trajectories in turbulent flows. J Fluid Mech 180:529–556

Weil JC (1990) A diagnosis of the asymmetry in top-down and bottom-up diffusion using a Lagrangian stochastic model. J Atmos Sci 47:501–515

Wilson JD (2013) “Rogue velocities” in a Lagrangian stochastic model for idealized inhomogeneous turbulence. In: Lin J, Brunner D, Gerbig C, Stohl A, Luhar A, Webley P (eds) Lagrangian modeling of the atmosphere. American Geophysical Union, Washington, DC, pp 53–57

Wilson JD, Thurtell GW, Kidd GE (1981) Numerical simulation of particle trajectories in inhomogeneous turbulence, II: systems with variable turbulent velocity scale. Boundary-Layer Meteorol 21:423–441

Yee E, Wilson JD (2007) Instability in Lagrangian stochastic trajectory models, and a method for its cure. Boundary-Layer Meteorol 122:243–261

Yoshizawa A (1986) Statistical theory for compressible turbulent shear flows, with the application to subgrid modeling. Phys Fluids 29:2152

Acknowledgments

The author wishes to acknowledge fruitful discussions with Drs. Rob Stoll and Eric Pardyjak in formulating the ideas presented in this work. This research was supported by U.S. National Science Foundation Grants IDR CBET-PDM 113458 and AGS 1255662, and United States Department of Agriculture (USDA) Project 5358-22000-039-00D. The use, trade, firm, or corporation names in this publication are for information and convenience of the reader. Such use does not constitute an endorsement or approval by the USDA or the Agricultural Research Service of any product or service to the exclusion of others that may be suitable.

Cite this article

Bailey, B.N. Numerical Considerations for Lagrangian Stochastic Dispersion Models: Eliminating Rogue Trajectories, and the Importance of Numerical Accuracy. Boundary-Layer Meteorol 162, 43–70 (2017). https://doi.org/10.1007/s10546-016-0181-6
