Abstract

We apply the modulated Fourier expansion to a class of second order differential equations consisting of an oscillatory linear part and a nonoscillatory nonlinear part, where the total energy of the system may be unbounded as the oscillation frequency grows. We comment on the differences between this model problem and the classical energy bounded oscillatory equations. Based on the expansion, we propose multiscale time integrators for two cases: when the nonlinearity is a polynomial, and when the frequencies in the linear part are integer multiples of a single generic frequency. The proposed schemes are explicit and efficient. We show, both theoretically and numerically, that the schemes converge with a uniform second order rate for all frequencies. Comparisons with popular exponential integrators from the literature are also presented.

1 Introduction

A large amount of work in the literature has been devoted to studying the following second order oscillatory differential equations, which arise in various areas of Hamiltonian dynamics [19, 21, 23, 24, 36, 38, 39, 41, 42, 46, 49]

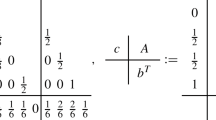

where \(\mathbf {x}=\mathbf {x}(t)\in \mathbb {R}^d\) and \(A=\varOmega ^2\in \mathbb {R}^{d\times d}\) is a positive semi-definite symmetric matrix of arbitrarily large norm. In a Hamiltonian system, \(\mathbf {x}\) represents the positions of the particles and \({\dot{\mathbf {x}}}\) the corresponding velocities. By diagonalizing the matrix, one can consider

where \(0<\varepsilon \le 1\) is inversely proportional to the spectral radius of \(\varOmega \) and \(\varLambda \) is a diagonal matrix with nonnegative entries independent of \(\varepsilon \). The function \(\mathbf {g}(\cdot ):\mathbb {R}^d\rightarrow \mathbb {R}^d\) is a possible nonlinearity whose Lipschitz constant is bounded independently of \(\varepsilon \) [41, 42]. In a Hamiltonian system, it usually represents the internal force generated by a potential function \(V(\cdot )\) [42, 44], i.e. \(\nabla V(\mathbf {x})=\mathbf {g}(\mathbf {x})\), and consequently the total energy E(t) of the system is conserved:

When large frequencies are involved in the Hamiltonian system, which corresponds to the parameter \(0<\varepsilon \ll 1\), the solution of the ODEs (1.1) becomes highly oscillatory and propagates waves of wavelength \(O(\varepsilon )\). Because of these high oscillations, designing numerical integrators for (1.1) that are uniformly accurate in the limit regime is the central challenge in studying the system efficiently. In the literature [36, 38, 39, 41, 42, 46, 49], the ODEs (1.1) are usually supplemented with initial values \(\mathbf {x}(0)\) and \(\dot{\mathbf {x}}(0)\) that keep the reduced energy

uniformly bounded as \(\varepsilon \rightarrow 0\). For simplicity, assume here that \(\varLambda \) is non-singular. Then the boundedness of the reduced energy implies

Thus, the initial value problem for (1.1) is posed as

with given data \(\phi _0,\phi _1\in \mathbb {R}^d\) independent of \(\varepsilon \). Energy bounded data of this type usually arise in classical Hamiltonian systems. In particular, we remark that in molecular dynamics the nonlinearity \(\mathbf {g}(\cdot )\) is often generated by nonbonded potentials [44]. The two most typical nonbonded potentials are the Coulomb potential \(V_C\) for \(n_0\) particles in \(m_0\) dimensions \((d={n_0}m_0)\) [44],

where \(\mathbf {q}_l\in \mathbb {R}^{m_0}\) and \(\mathbf {x}=(\mathbf {q}_1,\ldots ,\mathbf {q}_{n_0})^\mathrm {T}\), and the Lennard-Jones potential \(V_{LJ}\) [42]

In these two cases, to ensure that the nonlinearity \(\mathbf {g}(\mathbf {x})\) in (1.3) has a Lipschitz constant essentially independent of \(\varepsilon \), one has to modify the practical potential \(V(\mathbf {x})\) used in (1.2) for generating \(\mathbf {g}(\mathbf {x})\) to

instead of taking \(V=V_C\) or \(V=V_{LJ}\) directly. Note that it is the initial data in (1.3) that make the position small in the highly oscillatory regime: the higher the oscillation frequency, the smaller the amplitude. Problem (1.3) with this type of initial data has been studied extensively over the past decades by Sanz-Serna, Lubich and others. Exponential (wave) integrators (EIs), also known as trigonometric integrators, with different kinds of filters [25, 32, 36,37,38,39,40, 42] have been proposed and shown to offer uniform second order accuracy in the absolute position error and uniform first order accuracy in the velocity error for \(0<\varepsilon \le 1\). The long time energy preservation of the EIs has been analyzed in [19, 21, 22, 41] by means of a powerful tool known as the modulated Fourier expansion. In addition, Engquist and others developed a general methodology known as the heterogeneous multiscale method (HMM) [2, 26, 50,51,52] for problems with widely different scales, and the method has been applied to highly oscillatory ODEs [2, 26, 50]. Unlike numerical methods such as EIs, which seek complete knowledge of the problem at every scale, the HMM only solves an averaged effective model with a macro-solver and predicts local information of the original model with a micro-solver. The modulated Fourier expansion has been used to analyze the HMM in [47].

Recently, another type of initial data, which comes from certain limit regimes of quantum physics [3,4,5,6, 14], has been considered for these ODEs:

where, in order to distinguish the scales, we switch the notation from \(\mathbf {x}\) and \(\mathbf {g}\) to \(\mathbf {y}\) and \(\mathbf {f}\), respectively. This type of initial data makes the reduced energy \({\widetilde{E}}(t)=\dot{\mathbf {y}}^\mathrm {T}\dot{\mathbf {y}}+\frac{1}{\varepsilon ^2}\mathbf {y}^\mathrm {T}\varLambda ^2\mathbf {y}\) tend to infinity as \(\varepsilon \rightarrow 0\). Where no confusion arises, we refer to problem (1.7) as the energy unbounded type and to (1.3) as the energy bounded type. Compared to the energy bounded case, the energy unbounded problem corresponds to larger positions, that is \(\mathbf {y}(t)=O(1)\) for \(0<\varepsilon \ll 1\), which leads to much wider oscillations in the solution. Thus, we also refer to the initial data in (1.7) as large initial data. The two types of problems are connected by a scaling. Indeed, by scaling the variables as

we can rewrite the energy bounded problem (1.3) in an energy unbounded form

or rewrite the energy unbounded problem (1.7) in an energy bounded form

Thus, to consider the energy bounded problem (1.3) is to consider (1.7) with the nonlinearity

and conversely to consider the energy unbounded problem (1.7) is to consider (1.3) with the nonlinearity

Comparing (1.3) with (1.10), or (1.7) with (1.9), we see that under the scaling the essential difference between the two types of initial data lies in the behavior of the function \(\mathbf {g}(\cdot )\) (or \(\mathbf {f}\)). For a linear function, i.e. \(\mathbf {g}(\mathbf {x})=M \mathbf {x}\) with \(M\in \mathbb {R}^{d\times d}\), the energy bounded problem (1.9) (or (1.10)) is clearly equivalent to the unbounded case (1.7) (or (1.3)). For nonlinear \(\mathbf {g}(\cdot )\), however, they can be quite different. For \(\mathbf {g}=\nabla V\) in (1.3) generated by the modified nonbonded potential V in (1.6), the scaled \(\mathbf {g}_\varepsilon (\mathbf {y})\) in (1.9) is in fact generated by the original nonbonded potentials (1.4) or (1.5). In practice, another major class of nonlinearities \(\mathbf {g}(\cdot )\) consists of power functions, i.e. each component \(g_j(\mathbf {x})\,(j=1,\ldots ,d)\) of \(\mathbf {g}\) is a pure power function (or a sum of such functions) of \(x_1,\ldots ,x_d\) of order \(p\ge 2\). These power nonlinearities occur widely in scalar field theory in quantum physics [3,4,5] and in classical dynamical systems such as the Fermi–Pasta–Ulam (FPU) problem [42, 43]. Such functions make the nonlinearity in (1.9) very small. For example, if \(g_j(\mathbf {x})\) is a cubic power function, then \(\mathbf {g}_\varepsilon (\mathbf {y})=O(\varepsilon ^2)\) as \(0<\varepsilon \ll 1\), and the higher the order of the polynomial, the smaller the nonlinearity. Thus, the energy bounded problem (1.3) with a power nonlinearity is merely a small perturbation of the harmonic oscillator when \(\varepsilon \) is small, whereas for the energy unbounded problem (1.7) with a power nonlinearity, where \(\mathbf {f}(\mathbf {y})=O(1)\) as \(0<\varepsilon \ll 1\), the nonlinearity is no longer a small perturbation. 
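To see where these orders come from, assume (consistently with the initial data of (1.3) and (1.7)) that the scaling (1.8) is \(\mathbf {x}=\varepsilon \mathbf {y}\), so that \(\mathbf {x}(0)=\varepsilon \phi _0,\ \dot{\mathbf {x}}(0)=\phi _1\) become \(\mathbf {y}(0)=\phi _0,\ \dot{\mathbf {y}}(0)=\phi _1/\varepsilon \). Substituting into (1.3) and dividing by \(\varepsilon \) gives the scaled nonlinearity, and the homogeneity of a pure power of order p then fixes its size. This is a derivation sketch under that assumption:

```latex
\[
\mathbf{x}=\varepsilon\mathbf{y}:\qquad
\ddot{\mathbf{y}}+\frac{1}{\varepsilon^{2}}\varLambda^{2}\mathbf{y}
+\mathbf{g}_{\varepsilon}(\mathbf{y})=\mathbf{0},
\qquad
\mathbf{g}_{\varepsilon}(\mathbf{y}):=\frac{1}{\varepsilon}\,\mathbf{g}(\varepsilon\mathbf{y}),
\]
\[
g_j(\lambda\mathbf{x})=\lambda^{p}g_j(\mathbf{x})\ \ \forall\lambda\in\mathbb{R}
\quad\Longrightarrow\quad
\mathbf{g}_{\varepsilon}(\mathbf{y})=\varepsilon^{p-1}\,\mathbf{g}(\mathbf{y})=O(\varepsilon^{p-1}).
\]
```

For p = 1, i.e. \(\mathbf {g}(\mathbf {x})=M\mathbf {x}\), this gives \(\mathbf {g}_\varepsilon (\mathbf {y})=M\mathbf {y}\), which is the equivalence of the linear case noted above. For a cubic nonlinearity (p = 3) it gives the \(O(\varepsilon ^2)\) size.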
In this sense, considering the energy unbounded problem (1.7) amounts to considering a much stronger nonlinearity in the second order oscillatory equations, so that stronger resonance effects can appear in the solution on shorter time scales. We give an example to illustrate the different oscillatory behaviors of the solutions of the two types of problems. We choose in (1.3) and (1.7): \(d=2,\mathbf {x}=(x_1,x_2)^\mathrm {T},\mathbf {y}=(y_1,y_2)^\mathrm {T},\phi _0=(1,2)^\mathrm {T},\phi _1=(1,3)^\mathrm {T},\varLambda =\mathrm {diag}(\pi ,\pi )\) and \(\mathbf {g}(\mathbf {x})=(x_1x_2^2,x_1^2x_2)^\mathrm {T}, \mathbf {f}(\mathbf {y})=(y_1y_2^2,y_1^2y_2)^\mathrm {T}\). The solutions for different \(\varepsilon \) are shown in Fig. 1. Figure 2 shows the error between the solution of (1.3) or (1.7) and the corresponding harmonic oscillator obtained by removing the nonlinearity:

Writing \(\dot{\mathbf {y}} = \varepsilon ^{-1} \mathbf {u}\), (1.7) reads \(\dot{\mathbf {u}} = -\varepsilon ^{-1} \varLambda ^2\mathbf {y}- \varepsilon \mathbf {f}(\mathbf {y})\), and one can see that the nonlinearity is a higher order perturbation of the linear part. Formally, as \(\varepsilon \rightarrow 0\), \(\mathbf {x}(t)\) and \(\mathbf {y}(t)\) converge to \(\mathbf {x}_{ho}(t)\) and \(\mathbf {y}_{ho}(t)\), respectively, for fixed \(t>0\) (this will become clearer later). Figure 2 indicates that the convergence rates in \(\varepsilon \) of the two types of problems are quite different. Another way to analyze (1.7), for example for a polynomial nonlinearity of degree \(p+1\), is to define \(\mathbf {u}=(\varepsilon ^{1/p}\mathbf {y}+i\varepsilon ^{(p+1)/p}\varLambda ^{-1}\dot{\mathbf {y}})/\sqrt{2},\) after which the problem becomes

This corresponds to a nonlinear Schrödinger-type equation with small initial data, which has been studied intensively by means of the modulated Fourier expansion [20, 27, 28, 34, 35].
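For a nonlinearity that is homogeneous of degree \(p+1\), i.e. \(\mathbf {f}(\lambda \mathbf {y})=\lambda ^{p+1}\mathbf {f}(\mathbf {y})\) (an assumption made here for illustration), the transformed equation can be checked directly. Differentiate \(\mathbf {u}\), use (1.7) for \(\ddot{\mathbf {y}}\), and substitute \(\mathbf {y}=(\mathbf {u}+\overline{\mathbf {u}})/(\sqrt{2}\,\varepsilon ^{1/p})\). The derivation sketch below shows one form the result takes:

```latex
\[
\dot{\mathbf{u}}=-\frac{i}{\varepsilon}\varLambda\mathbf{u}
-\frac{i}{\sqrt{2}}\,\varepsilon^{(p+1)/p}\varLambda^{-1}\mathbf{f}(\mathbf{y}),
\qquad
\mathbf{f}(\mathbf{y})=\varepsilon^{-(p+1)/p}\,\mathbf{f}\Big(\frac{\mathbf{u}+\overline{\mathbf{u}}}{\sqrt{2}}\Big),
\]
\[
\Longrightarrow\quad
i\varepsilon\,\dot{\mathbf{u}}=\varLambda\mathbf{u}
+\frac{\varepsilon}{\sqrt{2}}\,\varLambda^{-1}\mathbf{f}\Big(\frac{\mathbf{u}+\overline{\mathbf{u}}}{\sqrt{2}}\Big),
\qquad
\mathbf{u}(0)=\frac{\varepsilon^{1/p}}{\sqrt{2}}\big(\phi_0+i\varLambda^{-1}\phi_1\big)=O(\varepsilon^{1/p}).
\]
```

The initial data are therefore small for \(0<\varepsilon \ll 1\), which is the small-data regime referred to above.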

Fig. 2 First row: errors \(|\mathbf {x}(t)-\mathbf {x}_{ho}(t)|/\varepsilon \) and \(|\mathbf {y}(t)-\mathbf {y}_{ho}(t)|\) between the solutions of (1.3) and (1.7) and the corresponding harmonic oscillators for different \(\varepsilon \). Second row: growth of the maximum errors \(\displaystyle e_x(t):=\sup \nolimits _{0\le s\le t}\{|\mathbf {x}(s)-\mathbf {x}_{ho}(s)|/\varepsilon \}\) and \(\displaystyle e_y(t):=\sup \nolimits _{0\le s\le t}\{|\mathbf {y}(s)-\mathbf {y}_{ho}(s)|\}\)
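The experiment behind Figs. 1 and 2 can be reproduced qualitatively with a brute-force reference solver. The sketch below is our own illustration, not the authors' code. It integrates the energy unbounded problem (1.7) with the data of the example above using classical RK4, with a step h much smaller than the period \(2\varepsilon \), and compares the result with the harmonic oscillator obtained by dropping \(\mathbf {f}\). The solver, step size and function names are assumptions. Since \(\mathbf {f}=\nabla V\) with \(V(\mathbf {y})=y_1^2y_2^2/2\), the conserved total energy gives a simple accuracy check.

```python
import numpy as np

# Data of the two-dimensional example: Lambda = diag(pi, pi),
# f(y) = (y1*y2^2, y1^2*y2), phi0 = (1, 2), phi1 = (1, 3).
LAM = np.array([np.pi, np.pi])
PHI0 = np.array([1.0, 2.0])
PHI1 = np.array([1.0, 3.0])


def f(y):
    """Cubic nonlinearity f = grad V with V(y) = y1^2 * y2^2 / 2."""
    return np.array([y[0] * y[1] ** 2, y[0] ** 2 * y[1]])


def rhs(u, eps, nonlinear=True):
    """First-order form of y'' + Lambda^2 y / eps^2 + f(y) = 0 with u = (y, ydot)."""
    y, v = u[:2], u[2:]
    acc = -(LAM**2) * y / eps**2
    if nonlinear:
        acc = acc - f(y)
    return np.concatenate([v, acc])


def rk4(eps, T, h, nonlinear=True):
    """Classical RK4 from the energy unbounded data y(0) = phi0, ydot(0) = phi1/eps."""
    u = np.concatenate([PHI0, PHI1 / eps])
    for _ in range(int(round(T / h))):
        k1 = rhs(u, eps, nonlinear)
        k2 = rhs(u + 0.5 * h * k1, eps, nonlinear)
        k3 = rhs(u + 0.5 * h * k2, eps, nonlinear)
        k4 = rhs(u + h * k3, eps, nonlinear)
        u = u + h / 6.0 * (k1 + 2.0 * k2 + 2.0 * k3 + k4)
    return u


def y_ho(t, eps):
    """Exact harmonic-oscillator solution (f removed) with the same initial data."""
    return np.cos(t * LAM / eps) * PHI0 + np.sin(t * LAM / eps) * PHI1 / LAM


def energy(u, eps):
    """Total energy |ydot|^2/2 + |Lambda y|^2/(2 eps^2) + V(y), conserved along (1.7)."""
    y, v = u[:2], u[2:]
    return 0.5 * v @ v + 0.5 * np.sum((LAM * y) ** 2) / eps**2 + 0.5 * (y[0] * y[1]) ** 2
```

For example, `np.linalg.norm(rk4(eps, 1.0, 1e-4)[:2] - y_ho(1.0, eps))` evaluates the quantity \(|\mathbf {y}(t)-\mathbf {y}_{ho}(t)|\) of the first row of Fig. 2 at t = 1. Repeating this for several \(\varepsilon \) shows how the deviation from the harmonic oscillator depends on \(\varepsilon \).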

Another essential difference between the energy bounded and unbounded problems is computational. Suppose a numerical integrator is applied to (1.3) and (1.9), or to (1.7) and (1.10), with numerical solutions \(\mathbf {x}^n\) and \(\mathbf {y}^n\) approximating \(\mathbf {x}(t_n)\) and \(\mathbf {y}(t_n)\), respectively, on the time grid \(t_n=n\tau \), \(n\in \mathbb {N}\), with time step \(\tau >0\). Then the absolute position error for the energy unbounded problem

can be regarded as the relative error for the energy bounded case. Although uniformly accurate second order integrators have been developed for the energy bounded problem (1.3), as mentioned above, their approximations may be meaningless when \(\tau ^2\gg \varepsilon \), since the solution itself satisfies \(\mathbf {x}(t)=O(\varepsilon )\); the uniform convergence is lost after scaling by \(\varepsilon ^{-1}\). Thus, for uniform convergence it is more meaningful to measure the relative error in the energy bounded case or, equivalently, to consider the energy unbounded problem. The relations between the corresponding quantities of the two problems under the scaling (1.8) are listed in Table 1. On the numerical side, investigations of the energy unbounded ODEs (1.7) in the literature are so far limited. Because of the strong oscillations, existing results for numerical methods in the energy bounded case give no indication of their performance in the energy unbounded case. Popular multiscale integrators in the literature, such as the stroboscopic averaging method (SAM) [8,9,10] and the multi-revolution composition method (MRCM) [12, 13], can be applied in the limit regime. Generalizations of SAM and MRCM, known as envelope-following methods, are discussed in [45] and the references therein. Other methods applicable to (1.7) include the flow averaging integrators (FLAVORS) [48] and the HMM with the Poincaré map technique [1]. There are two approaches to designing uniformly accurate (UA) schemes for (1.7) for all \(0<\varepsilon \le 1\). One is the two-scale formulation [14], which augments the problem with an additional degree of freedom. The other is the multiscale time integrator, which is based on an asymptotic expansion of the solution [3, 5,6,7].

In this paper, we propose and study multiscale time integrators (MTIs) for the energy unbounded model problem (1.7) in the highly oscillatory regime. The basic tool is the modulated Fourier expansion, a well-known fundamental framework for solving and analyzing highly oscillatory differential equations [19, 21,22,23,24, 41, 42, 47]. We apply the expansion to the solution at every time step and decompose the equations according to amplitude and frequency in \(\varepsilon \), which differs from the usual way of applying the modulated Fourier expansion. We not only determine the leading order terms of the expansion, but also solve the equation for the remainder with suitable numerical integrators. This strategy has been used before in [3, 5,6,7]. Unlike that previous work, however, for the model (1.7) we can identify the leading order term exactly and approximate the oscillatory remainder with uniform accuracy, which is essential for reaching uniform second order accuracy in the end. In contrast, for the problems studied in [3, 5,6,7], only uniform first order accuracy is achieved. When the nonlinearity in (1.7) is polynomial, we use exponential integrators to compute the remainder. For general nonlinearities, under the assumption that the diagonal entries of \(\varLambda \) are multiples of a generic constant \(\alpha >0\), we apply a stroboscopic-type numerical integrator [8,9,10, 29, 30]. The proposed MTIs are explicit and easy to implement, and they converge uniformly with second order in the absolute position error for (1.1) with the initial data in (1.7). Consequently, they also converge uniformly with second order in the relative position error for the energy bounded model (1.3). 
We remark that for general nonlinearities with multiple irrational frequencies, designing and analyzing uniformly accurate integrators in the highly oscillatory regime is very challenging. We refer to [11] for recent progress that makes use of Diophantine approximation from number theory.

The rest of the paper is organized as follows. In Sect. 2, we propose the MTIs based on the multiscale decomposition. In Sect. 3, we give the main convergence theorem and its proof. Numerical results and comparisons are given in Sect. 4, followed by conclusions in Sect. 5. Throughout this paper, we write \(A\lesssim B\) for two scalars A and B if there exists a generic constant \(C>0\), independent of the time step \(\tau \) (or n) and \(\varepsilon \), such that \(|A|\le CB\). The absolute value \(|\mathbf {y}|\) of a vector denotes the standard Euclidean norm.

2 Numerical schemes

Assume \(0<\varepsilon \le 1\) and consider the energy unbounded model problem (1.7), which we restate here for the reader’s convenience.

To present the core of our method without extra technicalities, we first assume that \(\varLambda \) is positive definite; the case where \(\varLambda \) has zero entries is discussed later.

Take \(\tau =\Delta t>0\) and denote \(t_n=n\tau \) for \(n=0,1,\ldots \). With

for \(t=t_n+s\), we take the following ansatz for the solution, known as the modulated Fourier expansion:

where \(\mathbf {r}^n(s)=(r_1^n(s),\ldots ,r_d^n(s))^\mathrm {T}\in \mathbb {R}^d\) and \(\mathbf {z}^n=(z_1^n,\ldots ,z_d^n)^\mathrm {T}\in \mathbb {C}^d\) is independent of time. Here and hereafter, the exponential of a matrix A is interpreted as \(\mathrm {e}^A:=\sum \nolimits _{n=0}^\infty \frac{A^n}{n!}\). Plugging the ansatz into (1.7), we get

Setting \(s=0\) in (2.2) and in its derivative with respect to s, we find

We choose \(\mathbf {r}^n(0)={\dot{\mathbf {r}}}^n(0)=0\) to keep the remainder \(\mathbf {r}^n(s)\) as small as possible; then, by matching the \(O(\frac{1}{\varepsilon })\) and O(1) terms in the second equation, we get

Solving the above equations, we get

Applying the variation-of-constant formula to (2.3), we get

Differentiating the above equation with respect to s, we get

Setting \(s=\tau \), we then get

In the modulated expansion (2.2), we have thus found the leading order term \(\mathbf {z}^n=O(1)\) exactly. The unknowns are now the next order terms, collected in the remainder \(\mathbf {r}^n(s)\), which satisfies (2.3). From (2.5), we clearly have \(\mathbf {r}^n(s)=O(\varepsilon ),\,{\dot{\mathbf {r}}}^n(s)=O(1)\). Thus, although the remainder equation (2.3) resembles the original problem (1.7), the oscillation amplitude is reduced. Based on (2.5), we design numerical integrators aimed at uniform convergence. We consider two cases: when the nonlinearity is of polynomial type, and when the entries of \(\varLambda \) are multiples of a single frequency while the nonlinearity is general.
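For reference, the relations summarized above can be written out explicitly from (1.7) and the ansatz (2.2) with \(\mathbf {r}^n(0)={\dot{\mathbf {r}}}^n(0)=\mathbf {0}\). The derivation sketch below uses the shorthand \(\mathbf {F}^n\), which is introduced here. It also gives the one-line reason for the sizes O(ε) and O(1): the kernels \(\sin \) and \(\cos \) of the diagonal matrix are bounded by 1.

```latex
\[
\mathbf{y}(t_n)=\mathbf{z}^n+\overline{\mathbf{z}}^n,\quad
\dot{\mathbf{y}}(t_n)=\frac{i}{\varepsilon}\varLambda\big(\mathbf{z}^n-\overline{\mathbf{z}}^n\big)
\;\Longrightarrow\;
\mathbf{z}^n=\frac{1}{2}\Big(\mathbf{y}(t_n)-i\varepsilon\varLambda^{-1}\dot{\mathbf{y}}(t_n)\Big),
\]
\[
\ddot{\mathbf{r}}^n(s)+\frac{1}{\varepsilon^{2}}\varLambda^{2}\mathbf{r}^n(s)+\mathbf{F}^n(s)=\mathbf{0},\qquad
\mathbf{F}^n(s):=\mathbf{f}\Big(\mathrm{e}^{\frac{is}{\varepsilon}\varLambda}\mathbf{z}^n
+\mathrm{e}^{-\frac{is}{\varepsilon}\varLambda}\overline{\mathbf{z}}^n+\mathbf{r}^n(s)\Big),
\]
\[
\mathbf{r}^n(s)=-\varepsilon\varLambda^{-1}\!\int_0^s\sin\Big(\frac{(s-\theta)\varLambda}{\varepsilon}\Big)\mathbf{F}^n(\theta)\,d\theta,\qquad
\dot{\mathbf{r}}^n(s)=-\int_0^s\cos\Big(\frac{(s-\theta)\varLambda}{\varepsilon}\Big)\mathbf{F}^n(\theta)\,d\theta,
\]
\[
|\mathbf{r}^n(s)|\le\varepsilon\,|\varLambda^{-1}|\,s\sup_{0\le\theta\le s}|\mathbf{F}^n(\theta)|,\qquad
|\dot{\mathbf{r}}^n(s)|\le s\sup_{0\le\theta\le s}|\mathbf{F}^n(\theta)|.
\]
```

Since \(\mathbf {f}\) is smooth and the solution stays O(1), \(\mathbf {F}^n=O(1)\) on \([0,\tau ]\), which yields \(\mathbf {r}^n=O(\varepsilon )\) and \({\dot{\mathbf {r}}}^n=O(1)\).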

Once approximations of \(\mathbf {r}^n(\tau )\) and \({\dot{\mathbf {r}}}^n(\tau )\) are obtained, we use the ansatz to recover the approximations \(\mathbf {y}^{n+1}\) and \({\dot{\mathbf {y}}}^{n+1}\), i.e.

At each time step, the algorithm thus follows a decomposition-solution-reconstruction flow [3, 5, 6]. We refer to the numerical integrators proposed below, which are based on the multiscale decomposition (2.2), as multiscale time integrators (MTIs).
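The decomposition and reconstruction stages can be sketched as follows. This sketch assumes \(\varLambda =\mathrm {diag}(\lambda _1,\ldots ,\lambda _d)\) with \(\lambda _j>0\), stored as a vector. The closed form of \(\mathbf {z}^n\) is the one obtained from (2.2) with \(\mathbf {r}^n(0)={\dot{\mathbf {r}}}^n(0)=0\), and the function names are illustrative only. The middle "solution" stage, which approximates \(\mathbf {r}^n(\tau )\) and \({\dot{\mathbf {r}}}^n(\tau )\), is what distinguishes the schemes of Sects. 2.1 and 2.2.

```python
import numpy as np


def decompose(y, ydot, lam, eps):
    """Leading-order coefficient z^n = (y^n - i*eps*Lambda^{-1} ydot^n) / 2 of ansatz (2.2).

    `lam` holds the positive diagonal entries of Lambda.
    """
    return 0.5 * (y - 1j * eps * ydot / lam)


def reconstruct(z, r, rdot, lam, eps, s):
    """Evaluate y(t_n + s) and ydot(t_n + s) from z^n and the remainder (r, rdot)."""
    phase = np.exp(1j * s * lam / eps)
    y = 2.0 * np.real(phase * z) + r                    # e^{isL/eps} z + conj(.) = 2 Re(.)
    ydot = 2.0 * np.real(1j * lam / eps * phase * z) + rdot
    return y, ydot
```

With a zero remainder, `reconstruct` reproduces the harmonic-oscillator flow exactly, which is a convenient sanity check for an implementation.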

Remark 2.1

The above multiscale decomposition also reveals the averaged dynamics of problem (1.7). Indeed, one can view the expansion (2.2) as

Hence, with \(\mathbf {r}(t)=O(\varepsilon )\) from (2.5), we see that formally, for fixed \(t>0\) and \(\varepsilon \rightarrow 0\), \(\mathbf {y}(t)\) approaches \(\mathrm {e}^{\frac{i t}{\varepsilon }\varLambda }\mathbf {z}^0+\mathrm {e}^{-\frac{i t}{\varepsilon }\varLambda }\overline{\mathbf {z}}^0\), which solves the linear model (1.11). Finally, using the setup of example (4.2), we show in Fig. 3 the dynamics of \(\mathbf {r}(t)\), which solves

2.1 MTIs for polynomial case

We begin with the case where the nonlinearity \(\mathbf {f}\) is of polynomial type. Then the dependence of \(\mathbf {f}(\mathrm {e}^{\frac{i\theta }{\varepsilon }\varLambda }\mathbf {z}^n+\mathrm {e}^{-\frac{i\theta }{\varepsilon }\varLambda }\overline{\mathbf {z}}^n+\mathbf {r}^n)\) on the fast variable \(\frac{\theta }{\varepsilon }\) can be made explicit, in particular for the leading order part \(\mathbf {f}(\mathrm {e}^{\frac{i\theta }{\varepsilon }\varLambda }\mathbf {z}^n+\mathrm {e}^{-\frac{i\theta }{\varepsilon }\varLambda }\overline{\mathbf {z}}^n)\), for

with \(\omega _1,\ldots ,\omega _d>0\) independent of \(\varepsilon \).

We denote for \(j=1,\ldots ,d\),

and we further find

where \(\displaystyle L_{m_j}=\sum \nolimits _{l=1}^dn_{l}^{(m_j)}\omega _l,\ n_{l}^{(m_j)}\in \mathbb {N}\) and \(g_{m_j}\) is a pure power function of \(\mathbf {z}^n\) and \(\overline{\mathbf {z}}^n\), i.e.

with \(p_l^{(m_j)},q_l^{(m_j)}\in \mathbb {N},\ \lambda _{m_j}\in \mathbb {R}\). Then the integral in (2.5) becomes

where

can be computed exactly. For the last integral in (2.9), which depends on the unknown \(\mathbf {r}^n(\theta )\), we note from (2.13) that

and therefore we simply approximate it by the standard single-step trapezoidal rule. Noting (2.4) and \(h_j(\mathbf {z}^n,\mathbf {0},0)=0\), and combining everything in (2.5), we obtain for each \(j=1,\ldots ,d,\)

Similarly, we have

with

The MTI for polynomial nonlinearities then reads as follows.

For \(n\ge 0\), let \(\mathbf {y}^n\) and \({\dot{\mathbf {y}}}^n\) be the approximations of \(\mathbf {y}(t_n)\) and \({\dot{\mathbf {y}}}(t_n)\), let \(\mathbf {r}^{n+1}=(r_1^{n+1},\ldots ,r_d^{n+1})^\mathrm {T}\) and \({\dot{\mathbf {r}}}^{n+1}=(\dot{r}_1^{n+1},\ldots ,\dot{r}_d^{n+1})^\mathrm {T}\) be the approximations of \(\mathbf {r}^{n}(\tau )\) and \({\dot{\mathbf {r}}}^{n}(\tau )\), and let \(Z^n\) be the approximation of \(\mathbf {z}^n\). Choosing \(\mathbf {y}^0=\mathbf {y}(0)= \phi _0\) and \({\dot{\mathbf {y}}}^0={\dot{\mathbf {y}}}(0)=\frac{\phi _1}{\varepsilon }\), for \(n\ge 0\) the approximations \(\mathbf {y}^{n+1}\) and \({\dot{\mathbf {y}}}^{n+1}\) are updated as

where
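In the polynomial case, the leading order part is expanded as a finite sum of terms \(g_{m_j}\mathrm {e}^{iL_{m_j}\theta /\varepsilon }\). The integrals of these terms against the sine and cosine kernels of (2.5) are then elementary, which is why coefficients such as \(C_{m_j}\) and \(\dot{C}_{m_j}\) can be computed once and for all. The sketch below assumes that the coefficients have the form \(\int _0^\tau \sin ((\tau -\theta )\omega _j/\varepsilon )\mathrm {e}^{iL\theta /\varepsilon }d\theta \) and its cosine analogue. The paper's normalization may differ, and `phi1` and `osc_coeffs` are hypothetical names. Writing the sine and cosine as exponentials reduces everything to \(\int _0^\tau \mathrm {e}^{ia\theta /\varepsilon }d\theta =\tau \varphi _1(ia\tau /\varepsilon )\) with \(\varphi _1(x)=(\mathrm {e}^x-1)/x\). A Taylor branch avoids cancellation near the resonant case \(L=\pm \omega _j\).

```python
import cmath


def phi1(x):
    """phi_1(x) = (e^x - 1)/x, with a Taylor branch near the removable singularity x = 0."""
    if abs(x) < 1e-3:
        return 1 + x / 2 + x**2 / 6 + x**3 / 24 + x**4 / 120
    return (cmath.exp(x) - 1) / x


def osc_coeffs(tau, omega, L, eps):
    """Closed forms of
        C_sin = int_0^tau sin((tau - theta) * omega / eps) * exp(i L theta / eps) d theta,
        C_cos = int_0^tau cos((tau - theta) * omega / eps) * exp(i L theta / eps) d theta,
    valid for every real L (including L = +-omega) and every eps > 0.
    """
    ep = cmath.exp(1j * tau * omega / eps)
    em = cmath.exp(-1j * tau * omega / eps)
    a = tau * phi1(1j * (L - omega) * tau / eps)   # int_0^tau exp(i (L - omega) theta / eps)
    b = tau * phi1(1j * (L + omega) * tau / eps)   # int_0^tau exp(i (L + omega) theta / eps)
    return (ep * a - em * b) / 2j, (ep * a + em * b) / 2
```

Because these formulas are exact for every \(\varepsilon \), no resolution of the fast oscillation is needed, and the cost per coefficient is independent of \(\varepsilon \).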

2.2 MTIs for general nonlinearity case

Next we consider general nonlinearities, under the assumption that the diagonal entries of \(\varLambda \) are integer multiples of a single frequency \(\alpha >0\), i.e.

We also introduce the function,

for \(n\ge 0\) and \(0\le \theta \le \tau \), and we rewrite (2.3) as

For the second integral term on the right hand side of (2.14a) or (2.14b), we apply the single-step trapezoidal rule as before. For the first integral, we change variables to \(s=\frac{\alpha \theta }{\varepsilon }\) and write \(\frac{\alpha \tau }{2\pi \varepsilon }=m+\gamma \) with \(m\in \mathbb {N},\, 0\le \gamma <1\); then

By periodicity, and denoting \(\mathbf {g}\left( \mathbf {z}^n,s\right) :=\mathbf {f}\left( \mathrm {e}^{i s D}\mathbf {z}^n+\mathrm {e}^{-i s D}\overline{\mathbf {z}}^n\right) \), we get

Similarly,

The integrands in (2.15) and (2.16) are now smooth functions of s without large frequencies. Although explicit formulas for (2.15) and (2.16) are not available for general \(\mathbf {f}\), once \(\mathbf {z}^n\) is known they can be approximated very accurately, with error bounds independent of \(\varepsilon \). Since the first integral in (2.15) or (2.16) is over a complete period, we apply the composite trapezoidal rule, which is spectrally accurate for smooth periodic integrands:

with \(s_j=\frac{2\pi j}{M}\) and \(M\in \mathbb {N}\) chosen large enough that the quadrature error is negligible. For the second integral in (2.15) or (2.16), we map the integration interval to \([-1,1]\) and use Gauss-Legendre quadrature, which is also spectrally accurate:

where \(x_j\) \((j=1,\ldots ,M)\) is the j-th root of the Legendre polynomial \(P_M(x)\) and \(\nu _j\) is the associated weight. Again, M is chosen large enough that all these approximations are accurate to the desired tolerance. The integrands in (2.17) and (2.18) contain no large frequencies and are integrated over O(1) intervals, so the quadrature errors are bounded uniformly with respect to \(\varepsilon \). Hence, M can be chosen independently of \(\varepsilon \). Moreover, if the nonlinearity \(\mathbf {f}\) is smooth, the spectral accuracy of both quadratures means that a moderate M already gives high accuracy.
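The two quadratures can be sketched as follows; the helper names are hypothetical. As a check of spectral accuracy, we take the smooth \(2\pi \)-periodic function \(\mathrm {e}^{\cos s}\). Its integral over a full period is \(2\pi I_0(1)\), and by symmetry its integral over \([0,\pi ]\) is \(\pi I_0(1)\), where \(I_0\) is the modified Bessel function of the first kind. Here \(I_0(1)\) is computed from its power series \(\sum _k (1/4)^k/(k!)^2\).

```python
import math
import numpy as np


def periodic_trapezoid(g, M):
    """Composite trapezoidal rule over one full period [0, 2*pi] with nodes s_j = 2*pi*j/M.

    For a smooth 2*pi-periodic integrand the error decays faster than any power of 1/M.
    """
    s = 2.0 * np.pi * np.arange(M) / M
    return 2.0 * np.pi / M * np.sum(g(s))


def gauss_legendre(g, a, b, M):
    """M-point Gauss-Legendre rule on [a, b], mapped from the reference interval [-1, 1]."""
    x, w = np.polynomial.legendre.leggauss(M)   # roots of P_M and the associated weights
    s = 0.5 * (b - a) * (x + 1.0) + a
    return 0.5 * (b - a) * np.sum(w * g(s))


def g(s):
    """Smooth 2*pi-periodic test integrand exp(cos s)."""
    return np.exp(np.cos(s))


# Exact values 2*pi*I0(1) and pi*I0(1), with I0(1) from its power series.
I0_1 = sum(0.25**k / math.factorial(k) ** 2 for k in range(30))
for M in (4, 8, 16):
    print(M, abs(periodic_trapezoid(g, M) - 2 * np.pi * I0_1),
          abs(gauss_legendre(g, 0.0, np.pi, M) - np.pi * I0_1))
```

The printed errors drop very rapidly with M. This is the behavior that lets M in (2.17) and (2.18) stay moderate and independent of \(\varepsilon \) for smooth \(\mathbf {f}\).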

The MTI in this case then reads as follows. For \(n\ge 0\), let \(\mathbf {y}^n\) and \({\dot{\mathbf {y}}}^n\) be the approximations of \(\mathbf {y}(t_n)\) and \({\dot{\mathbf {y}}}(t_n)\), let \(\mathbf {r}^{n+1}\) and \({\dot{\mathbf {r}}}^{n+1}\) be the approximations of \(\mathbf {r}^{n}(\tau )\) and \({\dot{\mathbf {r}}}^{n}(\tau )\), and let \(Z^n\) be the approximation of \(\mathbf {z}^n\). Choosing \(\mathbf {y}^0=\mathbf {y}(0)= \phi _0\) and \({\dot{\mathbf {y}}}^0={\dot{\mathbf {y}}}(0)=\frac{\phi _1}{\varepsilon }\), for \(n\ge 0\) the approximations \(\mathbf {y}^{n+1}\) and \({\dot{\mathbf {y}}}^{n+1}\) are updated as in (2.10)–(2.11a), but with

where \(\mathbf {I}_1,\mathbf {I}_2,\mathbf {J}_1,\mathbf {J}_2\) are defined in (2.17) and (2.18).
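Putting the pieces together, one time step of the general-case MTI can be sketched as below. This is our reading of (2.10) with (2.19), written from the ansatz (2.2), the variation-of-constant formula and the quadratures (2.17)–(2.18); it is not the authors' code. The leading-order integrals are evaluated on the fast variable \(s=\alpha \theta /\varepsilon \) as m full periods (composite trapezoid) plus a fraction \(\gamma \) of a period (Gauss-Legendre). The remainder-dependent integrals use the single-step trapezoidal rule. Its contribution to \(\mathbf {r}^{n+1}\) vanishes, because the sine kernel is zero at \(\theta =\tau \) and the integrand difference is zero at \(\theta =0\). The sketch assumes \(\varLambda =\alpha D\) with D a vector of positive integers and a smooth \(\mathbf {f}\) acting on real d-vectors; the name `mti_step_general` is hypothetical.

```python
import numpy as np


def mti_step_general(y, ydot, f, alpha, D, eps, tau, M=32):
    """One MTI step for y'' + Lambda^2 y / eps^2 + f(y) = 0 with Lambda = alpha * D.

    y, ydot : real arrays of shape (d,), approximations at t_n
    f       : smooth map R^d -> R^d
    D       : positive integers, shape (d,)
    Returns approximations of y(t_n + tau) and ydot(t_n + tau).
    """
    D = np.asarray(D, dtype=float)
    lam = alpha * D
    z = 0.5 * (y - 1j * eps * ydot / lam)       # leading-order coefficient z^n
    c = tau * lam / eps                          # phase tau * Lambda / eps

    def kernels_times_f(s):
        """sin/cos(tau*Lambda/eps - s*D) * f(e^{isD} z + c.c.) at fast times s (one row each)."""
        lead = 2.0 * np.real(np.exp(1j * np.outer(s, D)) * z)
        F = np.array([f(v) for v in lead])
        arg = c - np.outer(s, D)
        return np.sin(arg) * F, np.cos(arg) * F

    # alpha * tau / (2 * pi * eps) = m + gamma with m an integer and 0 <= gamma < 1.
    q = alpha * tau / (2.0 * np.pi * eps)
    m = np.floor(q)
    gamma = q - m

    # One full period [0, 2*pi]: composite trapezoidal rule.
    Ss, Sc = kernels_times_f(2.0 * np.pi * np.arange(M) / M)
    full_s = 2.0 * np.pi / M * Ss.sum(axis=0)
    full_c = 2.0 * np.pi / M * Sc.sum(axis=0)

    # Leftover fraction [0, 2*pi*gamma]: M-point Gauss-Legendre rule.
    x, w = np.polynomial.legendre.leggauss(M)
    half = np.pi * gamma                         # half-length of [0, 2*pi*gamma]
    Ps, Pc = kernels_times_f(half * (x + 1.0))
    part_s, part_c = half * (w @ Ps), half * (w @ Pc)

    # Back to theta (d theta = eps/alpha ds), using periodicity: m periods + fraction.
    I_sin = eps / alpha * (m * full_s + part_s)
    I_cos = eps / alpha * (m * full_c + part_c)

    lead_tau = 2.0 * np.real(np.exp(1j * c) * z)                   # leading part at s = tau
    dlead_tau = 2.0 * np.real(1j * lam / eps * np.exp(1j * c) * z)
    r_new = -eps / lam * I_sin                                    # trapezoid part is zero
    rdot_new = -I_cos - 0.5 * tau * (f(lead_tau + r_new) - f(lead_tau))
    return lead_tau + r_new, dlead_tau + rdot_new
```

With \(\mathbf {f}=0\) this step reproduces the harmonic flow exactly. For a constant \(\mathbf {f}\) it is exact as well, since the trapezoidal correction vanishes. For smooth nonlinear \(\mathbf {f}\), halving \(\tau \) should reduce the error roughly by a factor of four if the uniform second order rate of Theorem 3.1 is observed.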

The proposed MTI (2.10) with (2.11) or (2.19) is fully explicit and easy to implement in practice. We note that the ansatz (2.2) is a modulated Fourier expansion of the solution \(\mathbf {y}(t_n+s)\) only for \(s\in [0,\tau ]\). Since the solution is re-expanded at every grid point, this differs from the standard use of the modulated Fourier expansion in the study of oscillatory equations [19, 21, 22, 41].

When the nonlinearity \(\mathbf {f}\) in this case is a polynomial, the MTI (2.19) gives in practice the same results as the MTI (2.11) derived for the polynomial case in Sect. 2.1. This is because both schemes evaluate the leading order oscillatory part of the solution exactly or very accurately, and approximate the remainder in the same way. In terms of computational cost, however, the MTI (2.19) is more expensive than the MTI (2.11): the former computes the integrals \(I_j\) and \(J_j\,(j=1,2)\) by quadrature at every time level, whereas in the MTI (2.11) the integral coefficients \(C_{m_j}\) and \(\dot{C}_{m_j}\) are computed once and for all. Thus, when both (2.19) and (2.11) are applicable, we recommend the MTI (2.11) for the polynomial case.

The schemes proposed so far constitute the main algorithm for solving (1.7). The rest of this section discusses two practical situations that complement the main algorithm.

2.3 Two scales in the linear part

This subsection is a short note on the case

where the matrix A in the linear oscillatory part has two different scales, i.e.

Here \(\varLambda \in \mathbb {R}^{d_1\times d_1}\) is as before, with all positive entries bounded away from zero as \(\varepsilon \rightarrow 0\), and \(M\in \mathbb {R}^{d_2\times d_2}\, (d_1+d_2=d)\) is a non-negative matrix independent of \(\varepsilon .\) In this situation, the highly oscillatory part and the non-oscillatory part in (2.20) are separated. Denoting the solution by \(\mathbf {y}=(\mathbf {y}_O,\mathbf {y}_N)^\mathrm {T}\) with \(\mathbf {y}_O\in \mathbb {R}^{d_1}\) and \(\mathbf {y}_N\in \mathbb {R}^{d_2}\), and writing \(\mathbf {f}=(\mathbf {f}_O,\mathbf {f}_N)^\mathrm {T}\) with \(\mathbf {f}_O\in \mathbb {R}^{d_1}\) and \(\mathbf {f}_N\in \mathbb {R}^{d_2}\), (2.20) becomes

One can then apply the expansion (2.2) to the oscillatory part \(\mathbf {y}_O(t_n+s)\) and carry out the same decomposition as before. Whenever an approximation of the non-oscillatory part \(\mathbf {y}_N(t_n+s)\) is needed, any quadrature can be applied since this part is smooth, for example a Gautschi-type quadrature,

which is second order accurate. For the polynomial case discussed in Sect. 2.1, it is then straightforward to derive an MTI with uniform convergence. For the general case of Sect. 2.2, one can use

to simplify the evaluation of the integrals of the oscillatory but periodic part.

2.4 With source terms

The MTIs of Sects. 2.1 and 2.2 can be extended to second order oscillatory ODEs with a source term. The problem reads

where the source term \(\mathbf {s}(t)\) is given as

with smooth functions \(\mathbf {a}_l(t)\in \mathbb {R}^d\) and \(\upsilon _l\in \mathbb {R}\). The source term \(\mathbf {s}(t)\) represents an external force applied to the system, and the form (2.23) typically arises in the study of electronic circuits [15,16,17,18].

Decomposing (2.22) with the same ansatz (2.2) as before, one ends up with

with initial data (2.4). The variation-of-constant formulas then contain two additional integrals involving the source term, i.e.

In real applications, the \(\mathbf {a}_l(t)\) are usually simple analytic functions such as polynomials and trigonometric functions [16, 17], for which the integrals (2.25) can be evaluated exactly or approximated to any desired accuracy. Hence the source contributions \(\mathbf {S}^n=(S_1^n,\ldots ,S_d^n)\) and \({\dot{\mathbf {S}}}^n=(\dot{S}_1^n,\ldots ,\dot{S}_d^n)\) can always be computed accurately and uniformly for \(0<\varepsilon \le 1\), and the remaining approximations in the MTIs are carried out as before.

3 Convergence results

In this section, we give convergence results for the proposed MTIs applied to (1.7) in the two cases. To obtain rigorous error estimates, we assume that the exact solution \(\mathbf {y}(t)\) of (1.7) satisfies the following assumptions

for \(0<T<T^*\), with \(T^*\) the maximal existence time. Denoting

and the error functions as

then we have the following error estimates for the MTIs.

Theorem 3.1

(Error bounds of MTIs) For the MTIs, i.e. (2.10) with (2.11) or (2.19), under assumption (3.1) and with \(\varLambda \) nonsingular, there exists a constant \(\tau _0>0\), independent of \(\varepsilon \) and n, such that for any \(0<\varepsilon \le 1\),

We prove the theorem only for the MTI (2.10) with (2.19) applied to (1.7) under (2.12); the other case is similar. We first establish some lemmas.

Lemma 3.1

Let \(\mathbf {y}^n\) and \({\dot{\mathbf {y}}}^n\) be the numerical solutions of the MTI (2.10) with (2.19). Then

Proof

As remarked before, we assume that the quadratures for \(I_j(Z^n),\,J_j(Z^n)\,(j=1,2)\) in (2.19) are accurate enough to recover

Then plugging (2.11a) into (2.10), we get

Then, using (2.19) and (3.6), we directly obtain assertion (3.1). \(\square \)

Introducing the local truncation errors based on (3.1) as

then we have

Lemma 3.2

For the local truncation errors \(\xi ^n\) and \(\dot{\xi }^n\), we have the estimates

Proof

Applying the variation-of-constant formula directly to (1.7) for \(t=t_n+s\), we have

Using the expansion (2.2) and noticing (2.13), we find

Since the leading order oscillatory part is evaluated exactly, the truncation error is caused only by the single-step trapezoidal rule, i.e.

We note that

then, using the error formula of the trapezoidal rule, we get

or, using (3.9) directly,

Similarly, for \(\dot{\xi }^n\) we obtain

and the proof is completed. \(\square \)
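For the reader's convenience, we list the two classical error representations of the single-step trapezoidal rule for a smooth scalar function h on \([0,\tau ]\); we assume these are the formulas referred to above, and for vector-valued integrands they apply componentwise:

```latex
\[
\int_0^{\tau}h(\theta)\,d\theta-\frac{\tau}{2}\big(h(0)+h(\tau)\big)
=-\frac{\tau^{3}}{12}\,h''(\zeta)\qquad\text{for some }\zeta\in(0,\tau),
\]
\[
\int_0^{\tau}h(\theta)\,d\theta-\frac{\tau}{2}\big(h(0)+h(\tau)\big)
=-\int_0^{\tau}\Big(\theta-\frac{\tau}{2}\Big)h'(\theta)\,d\theta,
\qquad\text{hence}\qquad
\Big|\int_0^{\tau}h\,d\theta-\frac{\tau}{2}\big(h(0)+h(\tau)\big)\Big|\le\frac{\tau^{2}}{4}\max_{[0,\tau]}|h'|.
\]
```

Which bound is sharper depends on the size of \(\tau \) relative to \(\varepsilon \), since each derivative of the kernels \(\sin ((\tau -\theta )\varLambda /\varepsilon )\) and \(\cos ((\tau -\theta )\varLambda /\varepsilon )\) brings a factor \(\varLambda /\varepsilon \).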

Next, we introduce the nonlinear errors

then we have

Lemma 3.3

For the nonlinear errors \(\eta ^n\) and \(\dot{\eta }^n\), under (3.4b), which will be proven by induction later, we have the estimates

Proof

First, under assumptions (3.1) and (3.4b), we easily obtain

Similarly, together with the triangle inequality, we get

Noting (2.3) and (3.1), we have

Plugging the above estimate back to (3.11), we complete the proof. \(\square \)

Combining the above lemmas, we now prove the main theorem by the energy method. The argument is carried out within a mathematical induction framework that guarantees the boundedness of the numerical solutions [3,4,5].

Proof of Theorem 3.1

For \(n=0\), the choice of the initial data in the scheme gives

so (3.4a) and (3.4b) hold trivially.

Now assume that (3.4a) and (3.4b) hold for all \(0\le n\le m\) for some \(m\le \frac{T}{\tau }-1\). We need to show that they remain valid for \(n=m+1\). Subtracting (3.7) from (3.1), we get

Multiplying both sides of (3.12a) on the left by \(\varLambda \) and multiplying (3.12b) by \(\varepsilon \), then taking the inner product of each equation with itself and applying the Hölder inequality, we get

Adding (3.13b) to (3.13a) and using the definition

we get

Summing (3.14) over \(n=0,\ldots ,m\), we get

Noting that \(\mathscr {E}({\dot{\mathbf {e}}}^{0},\mathbf {e}^{0})=0\) and using (3.2) and (3.3), we get

Then the discrete Gronwall inequality gives

which implies that (3.4a) holds for \(n=m+1\). Furthermore, by the triangle inequality,

when \(0<\tau \le \tau _0\) for some \(\tau _0>0\) independent of \(\varepsilon \) and n. Thus (3.4b) also holds for \(n=m+1\), and the proof is complete. \(\square \)
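The induction closes through the discrete Gronwall inequality. The sketch below checks one standard explicit form: if \(a_0\le B\) and \(a_{m+1}\le B+C\tau \sum _{n=0}^{m}a_n\), then \(a_m\le B(1+C\tau )^m\le Be^{CT}\) for \(m\tau \le T\). Reading \(a_n\) as the error energy \(\mathscr {E}\), B as the accumulated local-error contribution and C as a Lipschitz-type constant is only a loose analogy. The constants in the demo are hypothetical and are not the constants of the proof.

```python
import math


def worst_case_sequence(B, C, tau, N):
    """Largest sequence allowed by a_0 <= B and
    a_{m+1} <= B + C*tau*sum_{n=0}^{m} a_n, taken with equality."""
    a = [B]
    running_sum = B
    for _ in range(N):
        a.append(B + C * tau * running_sum)
        running_sum += a[-1]
    return a


def gronwall_bound(B, C, T):
    """Discrete Gronwall bound: a_m <= B*(1 + C*tau)**m <= B*exp(C*m*tau) <= B*exp(C*T)."""
    return B * math.exp(C * T)


B, C, T, N = 1e-4, 3.0, 1.0, 100   # hypothetical constants
a = worst_case_sequence(B, C, T / N, N)
print(a[-1], gronwall_bound(B, C, T))
```

With equality the sequence is exactly \(a_m=B(1+C\tau )^m\), so the exponential bound holds uniformly in the step count. This is why the estimate does not deteriorate as \(\tau \rightarrow 0\) with T fixed.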

Remark 3.2

We remark that Theorem 3.1 and its proof rely on the assumption that the nonlinearity \(\mathbf {f}\) is smooth in \(\mathbb {R}^d\). Hence the error estimate (3.4a) gives no information on the accuracy of the MTIs for rational-type nonlinearities generated by unbounded potentials. We study this case numerically in Sect. 4.

4 Numerical results

In this section, we present numerical results of the proposed MTIs for both the polynomial case and the general smooth case, as well as for a rational-type nonlinearity. As benchmarks for comparison, we consider the EIs with the three most popular filters suggested in [36].

The general scheme of the EIs with filters is widely presented in the literature [33, 36,37,38,39, 42]. The three most popular groups of filters, which offer the best numerical performance for solving (1.1) with energy-bounded initial data, are suggested in [36] as

The filter (4.1a) was proposed by García-Archilla et al. [31] (abbreviated GA), (4.1b) by Hochbruck and Lubich [39] (HL), and (4.1c) by Grimm and Hochbruck [36] (GH).
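The filter formulas (4.1a)-(4.1c) are not reproduced in this text. The sketch below illustrates what an "EI with filters" computes on a scalar model \(\ddot{x}+\omega ^2x=g(x)\); that sign convention is an assumption. It implements the two-step filtered trigonometric scheme \(x_{n+1}-2\cos (h\omega )x_n+x_{n-1}=h^2\psi (h\omega )\,g(\phi (h\omega )x_n)\), started from its one-step form. The default filters are the pair \(\psi (\xi )=\mathrm{sinc}^2(\xi )\), \(\phi (\xi )=\mathrm{sinc}(\xi )\) commonly attributed to García-Archilla et al. [31]. We assume, without checking against (4.1a), that this is the GA filter meant here. The function and parameter names are hypothetical, and other filters can be passed via `psi` and `phi`.

```python
import math


def sinc(xi):
    """sinc(xi) = sin(xi)/xi, with sinc(0) = 1."""
    return 1.0 if xi == 0.0 else math.sin(xi) / xi


def filtered_ei(g, omega, x0, v0, h, N,
                psi=lambda xi: sinc(xi) ** 2,   # assumed GA filter psi = sinc^2
                phi=sinc):                       # assumed GA filter phi = sinc
    """Two-step filtered trigonometric integrator for x'' + omega**2 x = g(x):
    x_{n+1} - 2 cos(h omega) x_n + x_{n-1} = h**2 psi(h omega) g(phi(h omega) x_n).
    Returns the positions [x_0, ..., x_N] (N >= 1)."""
    xi = h * omega
    c, p, f = math.cos(xi), psi(xi), phi(xi)
    # Starting step from the one-step form; h*sinc(h*omega) equals sin(h*omega)/omega.
    xs = [x0, c * x0 + h * sinc(xi) * v0 + 0.5 * h * h * p * g(f * x0)]
    for _ in range(N - 1):
        xs.append(2.0 * c * xs[-1] - xs[-2] + h * h * p * g(f * xs[-1]))
    return xs
```

For \(g\equiv 0\) the recurrence reproduces \(\cos (\omega t_n)\) exactly, because \(\cos ((n+1)\xi )+\cos ((n-1)\xi )=2\cos \xi \cos (n\xi )\). For \(\omega =0\) and constant g, it reproduces the exact quadratic.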

Firstly, we test the MTI (2.11) for the polynomial nonlinearity case. We choose

and

in model (1.7). Since the exact solution of this problem is not available, the 'exact' solution \(\mathbf {y}(T)\) is computed numerically by the MTI (2.11) with a very small time step \(\tau = 10^{-5}\). The position error \(\mathbf {\mathrm {e}}^N=\mathbf {y}(T)-\mathbf {y}^N\), with \(N=\frac{T}{\tau }\), is measured in the standard Euclidean norm. The errors of the MTI and the three chosen EIs at \(T=2\) for different time steps \(\tau \) and values of \(\varepsilon \) are shown in Table 2.
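The measurement protocol above can be sketched as follows. It computes a reference solution with a much smaller step, measures the Euclidean position error at \(N=T/\tau \), and reads the rate from successive halvings of \(\tau \). To stay self-contained, the sketch uses the classical Störmer-Verlet method on a hypothetical non-stiff two-dimensional test problem as a stand-in integrator. It is not the MTI (2.11) or any of the EIs, and the force is not the one chosen in (1.7).

```python
import math


def force(y):
    """Hypothetical smooth test force f(y) = -grad V with
    V(y) = y1**2/2 + 2*y2**2 + (y1*y2)**2/2 (not the paper's model)."""
    y1, y2 = y
    return [-y1 - y1 * y2 ** 2, -4.0 * y2 - y1 ** 2 * y2]


def verlet(y0, v0, T, tau):
    """Stormer-Verlet (velocity form) for y'' = force(y); returns y(T)."""
    y, v = list(y0), list(v0)
    a = force(y)
    for _ in range(round(T / tau)):
        v_half = [vi + 0.5 * tau * ai for vi, ai in zip(v, a)]
        y = [yi + tau * vi for yi, vi in zip(y, v_half)]
        a = force(y)
        v = [vi + 0.5 * tau * ai for vi, ai in zip(v_half, a)]
    return y


def observed_orders(errors, ratio=2.0):
    """Rates log(e_k / e_{k+1}) / log(ratio) for steps tau_k = tau_0 / ratio**k."""
    return [math.log(e0 / e1) / math.log(ratio) for e0, e1 in zip(errors, errors[1:])]


T = 2.0
y0, v0 = (1.0, 0.5), (0.0, 0.0)
y_ref = verlet(y0, v0, T, 1e-4)   # 'exact' solution from a very small step
taus = [0.1, 0.05, 0.025, 0.0125]
errors = [math.dist(y_ref, verlet(y0, v0, T, t)) for t in taus]  # Euclidean norm
orders = observed_orders(errors)
print(orders)  # close to 2 for this second-order stand-in method
```

The reference step must be small enough that its own error is negligible next to the coarsest error being measured. The same consideration motivates the choice \(\tau =10^{-5}\) above.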

Secondly, we test the MTI (2.19) for the general smooth nonlinearity case under condition (2.12). We choose

and

in problem (1.7). The position errors of the MTIs and EIs at \(T=2\) for different \(\tau \) and \(\varepsilon \) are shown in Table 3. We take \(M=10\) in (2.17) and (2.18), which suffices to reach machine accuracy.
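Equations (2.17) and (2.18) are not restated here. Suppose, as an assumption, that they are M-node equispaced quadratures of integrands that are periodic in a fast phase variable. That is plausible under condition (2.12), where all frequencies are integer multiples of one frequency, but this sketch does not verify it. Under that assumption, the sketch below illustrates why a modest M can reach machine accuracy: for an analytic periodic integrand, the equispaced rule converges geometrically in M. The integrand \(\exp (\cos \theta )\) is a hypothetical example. How large M must be depends on the integrand; for this one, M=10 gives an error on the order of \(10^{-9}\), while M=20 reaches rounding level.

```python
import math


def periodic_rule(F, M):
    """M-node equispaced (trapezoidal) rule for the integral of a
    2*pi-periodic function F over [0, 2*pi]."""
    return 2.0 * math.pi / M * sum(F(2.0 * math.pi * j / M) for j in range(M))


def bessel_i0(x, terms=30):
    """Modified Bessel function I_0 via its power series sum (x/2)**(2k) / (k!)**2."""
    return sum((x / 2.0) ** (2 * k) / math.factorial(k) ** 2 for k in range(terms))


# Hypothetical analytic periodic integrand exp(cos(theta)); exact integral is 2*pi*I_0(1).
F = lambda th: math.exp(math.cos(th))
exact = 2.0 * math.pi * bessel_i0(1.0)
for M in (4, 6, 8, 10, 20):
    print(M, abs(periodic_rule(F, M) - exact))
```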

Thirdly, we test the MTIs for solving (1.7) with a rational-type nonlinearity. We choose

and the nonlinearity generated by the Coulomb potential \(V_C\) (1.4), i.e.

with

in (1.7). Again, \(M=10\) is used in (2.17) and (2.18) to reach machine accuracy. The position errors of the different numerical methods at \(T=2\) are shown in Table 4.

From Tables 2, 3 and 4, Figs. 4, 5 and 6, and additional results not shown here for brevity, we draw the following observations:

1. For fixed \(\tau \), as \(\varepsilon \) decreases, the errors of the MTIs remain uniformly bounded in all cases (cf. Tables 2, 3 and 4), while the errors of the EIs clearly increase in the sine-nonlinearity test and the rational-nonlinearity test (cf. Fig. 6).

2. For the smooth nonlinearities, the MTIs converge at second order in \(\tau \) as \(\tau \) decreases in the regime \(\tau \lesssim \varepsilon \) (cf. Tables 2, 3; Fig. 4). They converge at second order in \(\varepsilon \) as \(\varepsilon \) decreases in the regime \(\varepsilon \lesssim \tau \) (cf. Tables 2, 3; Fig. 5). Thus, the theoretical error estimates are optimal.

3. The MTIs also perform very well for the rational nonlinearity in terms of both stability and accuracy (cf. Table 4). In all cases, the MTIs give much smaller position errors than the EIs for the same time step.

Next, we present a numerical example with a zero-mode frequency in the linear part and a source term of standard forced Van der Pol oscillator type [16] with frequency \(\upsilon >0\):

which we solve by the MTIs with the techniques described in Sects. 2.3 and 2.4.

The errors at \(T=1\) for two values of \(\upsilon \) and different \(\tau \) and \(\varepsilon \) are shown in Fig. 7. The results show that the MTI with the techniques of Sects. 2.3 and 2.4 works well in this case, with performance similar to the previous examples. Once again, they clearly illustrate that the convergence is uniform in \(\varepsilon \).

Finally, we use an example to show the long-time behaviour of the proposed MTI for solving (1.7). We take in (1.7)

Then (1.7) conserves the energy H(t), i.e.

We solve (1.7) by the MTI (2.11) and compute the numerical energy \(H^n=(\dot{\mathbf {y}}^n)^T\dot{\mathbf {y}}^n+\frac{1}{\varepsilon ^2}(\mathbf {y}^n)^T\varLambda \mathbf {y}^n+(y_1^ny_2^n)^2\) from the numerical solution. Fig. 8 shows the relative energy error \(|H^n-H(0)|/|H(0)|\) up to \(t=100\) for \(\varepsilon =0.1\) and \(\varepsilon =0.01\) with several step sizes \(\tau \).
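The energy diagnostic can be computed directly from the definition of \(H^n\) above. The following is a minimal sketch. It assumes that the diagonal of \(\varLambda \) is stored as a list `lam` and that a trajectory is given as lists of position and velocity vectors. The helper names `energy` and `relative_energy_errors` are hypothetical, and no integrator is included.

```python
def energy(y, ydot, lam, eps):
    """H = ydot^T ydot + eps**-2 * y^T Lambda y + (y1*y2)**2 with Lambda = diag(lam)."""
    kinetic = sum(v * v for v in ydot)
    linear = sum(l * yi * yi for l, yi in zip(lam, y)) / eps ** 2
    coupling = (y[0] * y[1]) ** 2
    return kinetic + linear + coupling


def relative_energy_errors(ys, ydots, lam, eps):
    """|H^n - H(0)| / |H(0)| along a trajectory; ys[0], ydots[0] are the initial data."""
    H0 = energy(ys[0], ydots[0], lam, eps)
    return [abs(energy(y, yd, lam, eps) - H0) / abs(H0) for y, yd in zip(ys, ydots)]


# Hypothetical values: kinetic 25 + linear 17/0.25 = 68 + coupling 4
print(energy((1.0, 2.0), (3.0, 4.0), (1.0, 4.0), 0.5))
```

Passing the numerical trajectory \((\mathbf {y}^n,\dot{\mathbf {y}}^n)\) of any integrator to `relative_energy_errors` yields the quantity plotted against \(t_n\).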

5 Conclusion

In this paper, we proposed multiscale time integrators (MTIs) for oscillatory second order ODEs with large initial data, which lead to unbounded energy in the limit regime. We commented on the relation between such energy-unbounded initial data and the widely used energy-bounded initial data. The MTIs are based on a modulated Fourier expansion of the solution at every time step. Detailed numerical schemes were derived for two cases: a polynomial nonlinearity, and a general nonlinearity when the frequencies in the linear oscillatory part are integer multiples of a single frequency. The schemes are fully explicit and easy to implement. We established rigorous error estimates for the MTIs applied to oscillatory ODEs with smooth nonlinearity, showing a uniform second order convergence rate as the oscillation frequency increases. Extensive numerical experiments confirmed the analysis and compared the MTIs with popular EIs. We also explored the MTIs numerically for ODEs with rational-type nonlinearity.

References

Ariel, G., Engquist, B., Kim, S., Lee, Y., Tsai, R.: A multiscale method for highly oscillatory dynamical systems using a Poincaré map type technique. J. Sci. Comput. 54, 247–268 (2013)

Ariel, G., Engquist, B., Tsai, R.: A multiscale method for highly oscillatory ordinary differential equations with resonance. Math. Comput. 78, 929–956 (2009)

Bao, W., Cai, Y., Zhao, X.: A uniformly accurate multiscale time integrator pseudospectral method for the Klein-Gordon equation in the nonrelativistic limit regime. SIAM J. Numer. Anal. 52, 2488–2511 (2014)

Bao, W., Dong, X., Zhao, X.: An exponential wave integrator pseudospectral method for the Klein-Gordon-Zakharov system. SIAM J. Sci. Comput. 35, A2903–A2927 (2013)

Bao, W., Dong, X., Zhao, X.: Uniformly correct multiscale time integrators for highly oscillatory second order differential equations. J. Math. Study 47, 111–150 (2014)

Bao, W., Zhao, X.: A uniformly accurate (UA) multiscale time integrator Fourier pseudospectral method for the Klein-Gordon-Schrödinger equations in the nonrelativistic limit regime. Numer. Math. to appear (2016). doi:10.1007/s00211-016-0818-x

Bao, W., Zhao, X.: A uniformly accurate multiscale time integrator pseudospectral method for the Klein-Gordon-Zakharov system in the high-plasma-frequency limit regime. J. Comput. Phys. 327, 270–293 (2016)

Calvo, M.P., Chartier, Ph., Murua, A., Sanz-Serna, J.M.: A stroboscopic numerical method for highly oscillatory problems. Numer. Anal. Multiscale Comput., Lecture Notes in Computational Science and Engineering 82, 71–85 (2012)

Calvo, M.P., Chartier, Ph., Murua, A., Sanz-Serna, J.M.: Numerical experiments with the stroboscopic method. Appl. Numer. Math. 61, 1077–1095 (2011)

Castella, F., Chartier, Ph., Méhats, F., Murua, A.: Stroboscopic averaging for the nonlinear Schrödinger equation. Found. Comput. Math. 15, 519–559 (2015)

Chartier, Ph., Lemou, M., Méhats, F.: Highly-oscillatory evolution equations with multiple frequencies: averaging and numerics, preprint, hal-01281950 (2016)

Chartier, Ph., Méhats, F., Thalhammer, M., Zhang, Y.: Convergence analysis of multi-revolution composition time-splitting pseudo-spectral methods for highly oscillatory differential equations of Schrödinger type, preprint (2016)

Chartier, Ph., Makazaga, J., Murua, A., Vilmart, G.: Multi-revolution composition methods for highly oscillatory differential equations. Numer. Math. 128, 167–192 (2014)

Chartier, Ph., Crouseilles, N., Lemou, M., Méhats, F.: Uniformly accurate numerical schemes for highly oscillatory Klein-Gordon and nonlinear Schrödinger equations. Numer. Math. 129, 211–250 (2015)

Condon, M., Deaño, A., Iserles, A.: On highly oscillatory problems arising in electronic engineering. ESAIM Math. Model. Numer. Anal. 43, 785–804 (2009)

Condon, M., Deaño, A., Iserles, A.: On second order differential equations with highly oscillatory forcing terms. Proc. R. Soc. A 466, 1809–1828 (2010)

Condon, M., Deaño, A., Gao, J., Iserles, A.: Asymptotic solvers for ordinary differential equations with multiple frequencies, University of Cambridge DAMTP Tech. Rep., NA2011/11 (2011)

Condon, M., Iserles, A., Nørsett, S.P.: Differential equations with general highly oscillatory forcing terms. Proc. R. Soc. A 470 (2013). doi:10.1098/rspa.2013.0490

Cohen, D.: Conservation properties of numerical integrators for highly oscillatory Hamiltonian systems. IMA J. Numer. Anal. 26, 34–59 (2005)

Cohen, D., Gauckler, L.: One-stage exponential integrators for nonlinear Schrödinger equations over long times. BIT 52, 877–903 (2012)

Cohen, D., Hairer, E., Lubich, Ch.: Numerical energy conservation for multi-frequency oscillatory differential equations. BIT Numer. Math. 45, 287–305 (2005)

Cohen, D., Hairer, E., Lubich, Ch.: Conservation of energy, momentum and actions in numerical discretizations of non-linear wave equations. Numer. Math. 110, 113–143 (2008)

Cohen, D., Hairer, E., Lubich, Ch.: Modulated Fourier expansions of highly oscillatory differential equations. Found. Comput. Math. 3, 327–345 (2003)

Cohen, D.: Analysis and numerical treatment of highly oscillatory differential equations, Ph.D. thesis, Université de Genève (2004)

Deuflhard, P.: A study of extrapolation methods based on multistep schemes without parasitic solutions. ZAMP 30, 177–189 (1979)

Engquist, B., Tsai, Y.H.: Heterogeneous multiscale methods for stiff ordinary differential equations. Math. Comput. 74, 1707–1742 (2005)

Faou, E., Gauckler, L., Lubich, C.: Plane wave stability of the split-step Fourier method for the nonlinear Schrödinger equation. Forum Math. Sigma 2, e5 (2014)

Faou, E., Gauckler, L., Lubich, C.: Sobolev stability of plane wave solutions to the cubic nonlinear Schrödinger equation on a torus. Comm. Partial Differ. Equ. 38, 1123–1140 (2013)

Frénod, E., Hirstoaga, S., Sonnendrücker, E.: An exponential integrator for a highly oscillatory Vlasov equation. DCDS-S 8, 169–183 (2015)

Frénod, E., Hirstoaga, S., Lutz, M., Sonnendrücker, E.: Long time behaviour of an exponential integrator for a Vlasov-Poisson system with strong magnetic field. Commun. Comput. Phys. 16, 440–466 (2014)

Garcia-Archilla, B., Sanz-Serna, J.M., Skeel, R.D.: Long-time-step methods for oscillatory differential equations. SIAM J. Sci. Comput. 20, 930–963 (1998)

Gautschi, W.: Numerical integration of ordinary differential equations based on trigonometric polynomials. Numer. Math. 3, 381–397 (1961)

Gauckler, L.: Error analysis of trigonometric integrators for semilinear wave equations. SIAM J. Numer. Anal. 53, 1082–1106 (2015)

Gauckler, L., Lubich, C.: Nonlinear Schrödinger equations and their spectral semi-discretizations over long times. Found. Comput. Math. 10, 141–169 (2010)

Gauckler, L., Lubich, C.: Splitting integrators for nonlinear Schrödinger equations over long times. Found. Comput. Math. 10, 275–302 (2010)

Grimm, V., Hochbruck, M.: Error analysis of exponential integrators for oscillatory second-order differential equations. J. Phys. A Math. Gen. 39, 5495–5507 (2006)

Grimm, V.: On error bounds for the Gautschi-type exponential integrator applied to oscillatory second-order differential equations. Numer. Math. 100, 71–89 (2005)

Grimm, V.: A note on the Gautschi-type method for oscillatory second-order differential equations. Numer. Math. 102, 61–66 (2005)

Hochbruck, M., Lubich, Ch.: A Gautschi-type method for oscillatory second-order differential equations. Numer. Math. 83, 403–426 (1999)

Hochbruck, M., Ostermann, A.: Exponential integrators. Acta Numer. 19, 209–286 (2010)

Hairer, E., Lubich, Ch.: Long-time energy conservation of numerical methods for oscillatory differential equations. SIAM J. Numer. Anal. 38, 414–441 (2000)

Hairer, E., Lubich, Ch., Wanner, G.: Geometric Numerical Integration: Structure-Preserving Algorithms for Ordinary Differential Equations. Springer, Berlin (2006)

Hairer, E., Lubich, Ch.: On the energy distribution in Fermi–Pasta–Ulam lattices. Arch. Ration. Mech. Anal. 205, 993–1029 (2012)

Leimkuhler, B., Reich, S.: Simulating Hamiltonian Dynamics. Cambridge University Press, Cambridge (2004)

Petzold, L.R., Jay, L.O., Yen, J.: Numerical solution of highly oscillatory ordinary differential equations. Acta Numer. 6, 437–483 (1997)

Sanz-Serna, J.M.: Mollified impulse methods for highly oscillatory differential equations. SIAM J. Numer. Anal. 46, 1040–1059 (2008)

Sanz-Serna, J.M.: Modulated Fourier expansions and heterogeneous multiscale methods. IMA J. Numer. Anal. 29, 595–605 (2009)

Tao, M., Owhadi, H., Marsden, J.E.: Non-intrusive and structure preserving multiscale integration of stiff ODEs, SDEs and Hamiltonian systems with hidden slow dynamics via flow averaging. Multiscale Model. Simul. 8, 1269–1324 (2010)

Wang, B., Iserles, A., Wu, X.: Arbitrary-order trigonometric Fourier collocation methods for multi-frequency oscillatory systems. Found. Comput. Math. 16, 151–181 (2016)

E, W.: Analysis of the heterogeneous multiscale method for ordinary differential equations. Commun. Math. Sci. 1, 423–436 (2003)

E, W., Engquist, B.: The heterogeneous multiscale methods. Commun. Math. Sci. 1, 87–132 (2003)

E, W., Engquist, B., Li, X., Ren, W., Vanden-Eijnden, E.: The heterogeneous multiscale method: a review. Commun. Comput. Phys. 2, 367–450 (2007)

Acknowledgements

This work is supported partially by the French ANR project MOONRISE ANR-14-CE23-0007-01 and partially by the Singapore A*STAR SERC PSF-Grant 1321202067. Part of the work was done while the author was visiting the Institute for Mathematical Sciences at the National University of Singapore in 2015. The author would like to thank Prof. Weizhu Bao for stimulating discussions and the referees for their valuable suggestions, which greatly improved the paper.


Additional information

Communicated by David Cohen.


About this article

Cite this article

Zhao, X. Uniformly accurate multiscale time integrators for second order oscillatory differential equations with large initial data. Bit Numer Math 57, 649–683 (2017). https://doi.org/10.1007/s10543-017-0646-0


DOI: https://doi.org/10.1007/s10543-017-0646-0

Keywords

- Multiscale time integrator

- Oscillatory equations

- Large data

- Unbounded energy

- Error estimate

- Uniform accuracy

- Exponential integrator