Abstract

Semi-supervised feature selection plays a crucial role in semi-supervised classification tasks by identifying the most informative and relevant features while discarding irrelevant or redundant features. Many semi-supervised feature selection approaches take advantage of pairwise constraints. However, these methods either encounter obstacles when attempting to automatically determine the appropriate number of features or cannot make full use of the given pairwise constraints. Thus, we propose a constrained feature weighting (CFW) approach for semi-supervised feature selection. CFW has two goals: maximizing the modified hypothesis margin related to cannot-link constraints and minimizing the must-link preserving regularization related to must-link constraints. The former makes the selected features strongly discriminative, and the latter makes similar samples with selected features more similar in the weighted feature space. In addition, L1-norm regularization is incorporated in the objective function of CFW to automatically determine the number of features. Extensive experiments are conducted on real-world datasets, and experimental results demonstrate the superior effectiveness of CFW compared to that of the existing popular supervised and semi-supervised feature selection methods.


1 Introduction

For decades, semi-supervised learning has yielded promising results in situations where obtaining labeled data is an expensive or time-consuming process. As a pre-processing method in the semi-supervised learning domain, semi-supervised feature selection aims at identifying a subset of relevant and informative features from a large set of input features [1, 2]. By leveraging both labeled and unlabeled data, semi-supervised feature selection methods can enhance the performance of models by incorporating the underlying data structure information furnished by the unlabeled data. Presently, feature selection has some practical applications, such as cancer classification [3, 4], text classification [5, 6], and image classification [7].

Researchers have proposed numerous semi-supervised feature selection methods. From the perspective of the input semi-supervised information, two types of learning frameworks are available for these methods: label-guided and constraint-guided frameworks. A label-guided framework provides a labeled dataset containing a few labeled samples and many unlabeled samples, while a constraint-guided framework addresses a constrained dataset containing some pairwise constraints and numerous unlabeled samples. Note that a labeled dataset can be transformed into a constrained dataset in the sense of neighborhoods, but not vice versa. Thus, the methods utilizing these two learning frameworks intersect. We do not discuss the possible transformations between these two types of frameworks here; we simply describe methods that operate under these two paradigms.

Under the label-guided framework, semi-supervised algorithms are mainly constructed from supervised methods, unsupervised methods, or combinations of both [3, 8, 9, 10, 11, 12]. Some examples are given as follows. The neighborhood discrimination index (NDI) method is supervised [13], and the Laplacian score (LS) is unsupervised [14]. On the basis of the NDI and LS, Pang and Zhang proposed a semi-supervised neighborhood discrimination index (SSNDI) [3] and a recursive feature retention (RFR) method [8] for semi-supervised feature selection. The maximum-relevance and minimum-redundancy (MRMR) criterion is supervised [15], while the Pearson correlation coefficient (PCC) is unsupervised [12]. Based on the MRMR criterion and the PCC, a relevance, redundancy and Pearson criterion (RRPC) was proposed [12]. A multi-class semi-supervised LIR (MSLIR) method was proposed based on a supervised method called logistic I-Relief (LIR) [11]. This approach assigns pseudo labels to unlabeled data [9]. Tang and Zhang developed a local preserving LIR (LPLIR) method by incorporating a manifold regularization term into LIR [10]. A locality sensitive discriminant feature (LSDF) algorithm was presented based on the Fisher criterion and two adjacent graphs. This method aims to maximize the margin between samples that belong to different classes and discover the geometric structure of data using both labeled and unlabeled data [16]. It is interesting to note that the LSDF algorithm can be easily converted into a constraint-guided framework [17].

During the data annotation process, we may not know anything about the labels of samples without a priori knowledge, but we may judge whether two samples are similar or not. Constraints are binary annotations that indicate whether two samples are similar (must-link constraints) or not similar (cannot-link constraints) [18, 19]. In the constraint-guided framework, algorithms can utilize constraints and unlabeled information by constructing graphs. Classic supervised constraint scores (CSs) were proposed to evaluate the relevance of features using Laplacian matrices constructed according to pairwise constraints [20]. On the basis of CSs, many graph-based semi-supervised constraint scores have been proposed. Benabdeslem and Hindawi [21] introduced a new semi-supervised method based on CSs, called constrained Laplacian score (CLS). CLS uses the given must-link and cannot-link constraints to construct adjacent graphs and then corresponding Laplacian matrices. Salmi et al. [17] proposed a new constraint score based on a similarity matrix, called the similarity-based constraint score (SCS). The SCS can be implemented in the selected feature subspace, and it constructs similarity graphs to evaluate the relevance of feature subsets. Samah et al. [22] developed a basic Relief with side constraints (Relief-SC) algorithm that adopts only cannot-link constraints to solve a simple convex problem in a closed form. Chen et al. [23] took advantage of CSs and Relief-SC and then explored a new semi-supervised method called an iterative constraint score based on hypothesis margin (HM-ICS). This method not only considers the relevance between features but also calculates the hypothesis margin of a single feature.

Under the constraint-guided framework, the above semi-supervised methods cannot automatically determine the optimal number of features, which is the main issue faced by these methods. In addition, Relief-SC is unable to fully utilize pairwise constraints, which causes it to miss the information provided by must-link constraints, and it is sensitive to the given cannot-link constraints because of a lack of adequate neighborhood information.

To overcome the drawbacks of Relief-SC, this study proposes a novel semi-supervised feature selection approach, called constrained feature weighting (CFW). By utilizing the hypothesis margin idea derived from Relief-SC, CFW modifies the definition of the hypothesis margin calculated from cannot-link constraints to enrich the available neighborhood information. By adjusting the hypothesis margin in a logarithmic manner and introducing an L1-norm regularization term, the optimization process of CFW tends to produce a sparse solution, effectively selecting only the most informative features with non-zero weights and leading to a more compact and interpretable model. Moreover, CFW designs a must-link preserving regularization that contains the information provided by must-link constraints, which can be used to make similar samples with selected features more similar in the weighted feature space. The main contributions of this study are as follows.

- We redefine the process of calculating the hypothesis margin to reduce its sensitivity to cannot-link constraints. The modified hypothesis margin is derived from multiple nearest neighbors of the cannot-link constraints, whose probabilities are also considered to ensure the robustness of the selection process.

- We design a must-link preserving regularization that contains information provided by must-link constraints. Our goal is to minimize this regularization to maintain the desired relationships between the input samples. In this case, similar (or must-link) samples are more similar in the weighted feature space.

- We propose CFW based on the modified hypothesis margin concept and the must-link preserving regularization. CFW makes full use of pairwise constraints and the given unlabeled data, where the modified hypothesis margin depends on the cannot-link constraints and unlabeled samples, and the must-link preserving regularization considers the information contained in the must-link constraints. In addition, CFW can automatically determine the optimal number of features by incorporating the L1-norm regularization term into its objective function.

The remainder of this paper is organized as follows. Section 2 provides a brief explanation of the pairwise constraints and algorithms related to the hypothesis margin. In Section 3, we describe our proposed method in detail. Furthermore, experimental results are presented in Section 4. Finally, Section 5 concludes this paper.

2 Related work

In this section, we briefly review some constraint-guided methods: CS, LSDF, CLS, Relief-SC and HM-ICS. Under a constraint-guided semi-supervised learning framework, assume that we have two sets of pairwise constraints, \(\mathcal {M}\) and \(\mathcal {C}\), and a set \(X=\{\textbf{x}_1,\cdots ,\textbf{x}_n\}\) of unlabeled samples, where \(\textbf{x}_i=[x_{i1},\cdots , x_{id}]^T\in \mathbb {R}^{d}\) is the i-th unlabeled sample, d is the number of features, n is the number of samples, \(\mathcal {M}\) is the set of must-link constraints, and \(\mathcal {C}\) is the set of cannot-link constraints.

Let \(F=\{f_1, \cdots , f_d\}\) be the set of feature indexes, and \(\textbf{F}=[\textbf{f}_1, \cdots , \textbf{f}_d]\in \mathbb {R}^{n\times d}\) be the feature matrix, where \(\textbf{f}_r=[x_{1r},\cdots , x_{nr}]^T\in \mathbb {R}^{n}\) is the r-th feature vector.

2.1 CS

Algorithms under the constraint-guided framework are often designed according to the basic CS method. The original CS method is supervised and computes a score for each feature according to both \(\mathcal {M}\) and \(\mathcal {C}\) [20]. For a feature \(f_r\in F\), the score function is as follows:

CS can be expressed in matrix form using similarity matrices \(\textbf{W}^{\mathcal {M}}\) and \(\textbf{W}^{\mathcal {C}}\), which can be constructed by constraint sets \(\mathcal {M}\) and \(\mathcal {C}\), respectively. That is

and

where \(W_{i j}^{\mathcal {M}}\) and \(W_{i j}^{\mathcal {C}}\) are the (i, j)-th entries of \(\textbf{W}^\mathcal {M}\) and \(\textbf{W}^\mathcal {C}\), respectively.

Then CS can be expressed as

According to CS, some semi-supervised CS methods, such as LSDF [16] and CLS [21], have been proposed.
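
As a rough illustration of the constraint score discussed in this subsection, the sketch below assumes the commonly cited ratio form, in which the squared differences of a feature over must-link pairs are divided by those over cannot-link pairs, so that a smaller score indicates a better feature. The function name `constraint_scores` and the toy data are hypothetical and serve only to show the idea.

```python
def constraint_scores(X, must_link, cannot_link):
    """Score each feature by must-link scatter / cannot-link scatter.

    X is a list of samples (each a list of d feature values); the
    constraint sets are lists of index pairs (i, j). The ratio form is
    an assumption about the score function, not a quote of it.
    """
    d = len(X[0])
    scores = []
    for r in range(d):
        ml = sum((X[i][r] - X[j][r]) ** 2 for i, j in must_link)
        cl = sum((X[i][r] - X[j][r]) ** 2 for i, j in cannot_link)
        scores.append(ml / cl if cl > 0 else float("inf"))
    return scores


# Toy data: feature 0 separates the two groups, feature 1 does not.
X = [[0.0, 0.3], [0.1, 0.9], [1.0, 0.2], [0.9, 0.8]]
must_link = [(0, 1), (2, 3)]
cannot_link = [(0, 2), (1, 3)]
scores = constraint_scores(X, must_link, cannot_link)
```

On this toy input, feature 0 receives a much smaller score than feature 1, which matches the intuition that it keeps must-link pairs close and cannot-link pairs apart.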

2.2 LSDF

Zhao et al. [16] proposed LSDF, where \(\textbf{W}^{\mathcal {M}}\) is substituted with \(\textbf{W}^{KNN}\). This approach constructs the similarity matrix \(\textbf{W}^{KNN}\) using both must-link constraints and unlabeled data samples. That is

where \(\gamma \) serves as a constant, \(KNN(\textbf{x}_j)\) denotes the set of k nearest neighbors of the sample \(\textbf{x}_j\), and \(X_U \subset X\) is the set of unlabeled samples.

The feature score formula for LSDF is as follows:

where \(\textbf{L}^{KNN}=\textbf{D}^{KNN}-\textbf{W}^{KNN}\), \(\textbf{L}^{KNN}\) is the unnormalized constrained Laplacian matrix of \(\textbf{W}^{KNN}\), and \(\textbf{D}^{KNN}\) is the diagonal matrix that is calculated from \(\textbf{W}^{KNN}\).

2.3 CLS

Benabdeslem et al. [21] proposed a CLS method that modifies the similarity matrix (6), ensuring that the k nearest neighbors or must-link constraints are close to each other. The similarity matrix \(\textbf{W}^{C L S}\) of CLS is formulated as follows:

where the similarity value \(w_{ij}\) is computed by the heat kernel function and has the form

\(\delta \left( \textbf{x}_i, \textbf{x}_j\right) \) is the Euclidean distance between two samples \(\textbf{x}_i\) and \(\textbf{x}_j\), and \(\sigma \) is a scaling parameter.
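
For readers unfamiliar with heat-kernel similarities, a minimal sketch follows. The exact normalization of the exponent is an assumption here (the form \(\exp(-\delta^2/\sigma)\) is used, while other works use \(2\sigma^2\) in the denominator), and the function name `heat_kernel` is hypothetical.

```python
import math


def heat_kernel(x_i, x_j, sigma=1.0):
    """Heat-kernel similarity exp(-delta^2 / sigma), delta = Euclidean distance.

    The scaling of the exponent is an assumed choice; sigma plays the
    role of the scaling parameter.
    """
    delta_sq = sum((a - b) ** 2 for a, b in zip(x_i, x_j))
    return math.exp(-delta_sq / sigma)


same = heat_kernel([0.2, 0.4], [0.2, 0.4])   # identical samples
near = heat_kernel([0.0, 0.0], [0.1, 0.0])   # close samples
far = heat_kernel([0.0, 0.0], [1.0, 1.0])    # distant samples
```

The similarity equals 1 for identical samples and decays toward 0 as the distance grows, with \(\sigma\) controlling how quickly it decays.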

CLS is defined in terms of Laplacian matrices as follows:

where \(\textbf{L}^{CLS}=\textbf{D}^{CLS}-\textbf{W}^{CLS}\), \(\textbf{L}^{CLS}\) represents the unnormalized constrained Laplacian matrix of \(\textbf{W}^{CLS}\), and \(\textbf{D}^{CLS}\) is the diagonal matrix derived from \(\textbf{W}^{CLS}\).

2.4 Relief-SC

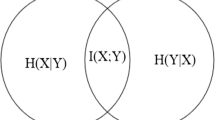

The hypothesis margin concept is derived from Relief-based methods [9,10,11, 24]. The hypothesis margin \(\varvec{\rho }(\textbf{x}_i)\) of a sample \(\textbf{x}_i\) is the difference between the distance from \(\textbf{x}_i\) to its nearest miss (or the nearest sample to \(\textbf{x}_i\) in the opposite class) and the distance from \(\textbf{x}_i\) to its nearest hit (or the nearest sample in the same class as that of \(\textbf{x}_i\)). That is,

where \(\textbf{H}(\textbf{x}_i)\) is the nearest hit of \(\textbf{x}_i\), and \(\textbf{M}(\textbf{x}_i)\) is the nearest miss of \(\textbf{x}_i\). Note that (11) is defined based on label information. Thus, Relief-based methods are mostly supervised [11, 24], and the corresponding semi-supervised approaches are under the label-guided framework [9, 10].
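
The label-based hypothesis margin can be sketched as follows. This is a scalar version that assumes the L1 distance; the helper names `l1_distance` and `hypothesis_margin` and the toy data are hypothetical.

```python
def l1_distance(a, b):
    """Sum of absolute coordinate differences (assumed distance)."""
    return sum(abs(u - v) for u, v in zip(a, b))


def hypothesis_margin(X, y, i):
    """Distance from x_i to its nearest miss minus distance to its nearest hit."""
    n = len(X)
    hits = [l1_distance(X[i], X[s]) for s in range(n) if s != i and y[s] == y[i]]
    misses = [l1_distance(X[i], X[s]) for s in range(n) if y[s] != y[i]]
    return min(misses) - min(hits)


X = [[0.0, 0.0], [0.1, 0.0], [1.0, 1.0], [0.9, 1.0]]
y = [0, 0, 1, 1]
margin = hypothesis_margin(X, y, 0)
```

A positive margin means that the sample lies closer to its own class than to the opposite class, which is the situation Relief-based methods try to reinforce through feature weighting.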

Under the constraint-guided learning framework, Samah et al. [22] proposed Relief-SC using only cannot-link constraints \(\mathcal {C}\). In this case, the hypothesis margin is re-designed for a cannot-link constraint and denoted by \(\varvec{\rho }\left( \textbf{x}_i, \textbf{x}_j\right) \) with pairwise constraint \(\left( \textbf{x}_i, \textbf{x}_j\right) \in \mathcal {C}\). Then, the hypothesis margin vector of \(\left( \textbf{x}_i, \textbf{x}_j\right) \in \mathcal {C}\) has the form shown below:

In (12), the nearest miss of \(\textbf{x}_i\) is given by \(\textbf{H}(\textbf{x}_j)\), which is also the nearest hit of \(\textbf{x}_j\). The objective function of Relief-SC can be described as follows:

where \(\Vert \cdot \Vert _2\) is the L2-norm of a vector, and \(\textbf{w}\) is the feature weighting vector that reveals the impact of each feature on enlarging the margin. In fact, \(\textbf{w}\) can also be considered feature scores. The higher a weight value is, the more discriminative the corresponding feature is.

2.5 HM-ICS

The above methods all give scores to features according to some criteria related to pairwise constraints. However, these methods ignore the correlation between features.

Based on (2) and (12), Chen et al. [23] proposed the HM-ICS algorithm for semi-supervised feature selection. Let R be the current selected feature subset. For any feature \(f_r\) in the candidate feature subset \(\bar{R} \subset F\), HM-ICS calculates the score of \(R\cup \left\{ f_{r}\right\} \), which is defined as follows:

where \(ICS\left( R\cup \{ f_{r}\}\right) \) is the iterative form of the CS for the subset \(R \cup \left\{ f_{r}\right\} \), \(\lambda \in [0,1]\) is a regularization parameter, \(\gamma >0\) is a constant parameter, and \(w_{r}\) is the weight of \(f_{r}\), which is calculated by a modified Relief-SC algorithm. In each iteration, HM-ICS selects the feature \(f_{r}\) with the smallest score \(J\left( R\cup \{ f_{r}\}\right) \), R is updated by adding this feature, and \(\bar{R}\) is updated by deleting it.

The iterative constraint score \(ICS\left( R\cup \{ f_{r}\}\right) \) in HM-ICS has the following form:

The modified Relief-SC in HM-ICS simplifies the hypothesis margin concept and defines a new margin formula as follows [23]:

It can be seen that the difference between (16) and (12) is that the modified Relief-SC strategy replaces \(\textbf{H}\left( \textbf{x}_{j}\right) \) with \(\textbf{x}_j\) for simplicity.

3 Proposed method

This section discusses the proposed CFW algorithm in detail. We first provide a new way to calculate the hypothesis margin with cannot-link constraints and then design a must-link preserving regularization that can maintain the underlying relationships between samples. Next, we describe the objective function of CFW and its solution. Finally, we present the algorithm description of CFW. We use the notations mentioned before and assume that \(\left( X, F, \mathcal {M},\mathcal {C}\right) \) is a semi-supervised information system, where X is a set of unlabeled samples, F is a set of feature indexes, and \(\mathcal {M}\) and \(\mathcal {C}\) are sets of must-link and cannot-link constraints, respectively.

3.1 Modified hypothesis margin

For the first time, Relief-SC provides a way to calculate the hypothesis margin based on cannot-link constraints [22], as shown in (12). However, Relief-SC is sensitive to the given cannot-link constraints. To remedy this drawback, we redefine the hypothesis margin calculation process with cannot-link constraints.

The sensitivity issue is caused by the lack of adequate neighborhood information. Relief-SC calculates the hypothesis margin with only one nearest hit for each of two dissimilar samples in a cannot-link constraint. Thus, it is possible to enrich the neighborhood information by finding more near hits. Considering a cannot-link constraint \(\left( \textbf{x}_i, \textbf{x}_j\right) \in \mathcal {C}\), we define its hypothesis margin as follows:

where \(NH\left( \textbf{x}_i \right) \) represents the set containing the k nearest neighbors of sample \(\textbf{x}_i\) under the weighted feature space, and \(P\left( \textbf{x}_s = H\left( \textbf{x}_i \right) |\textbf{w} \right) \) denotes the probability that \(\textbf{x}_s\) is the nearest hit of \(\textbf{x}_i\) under the weighted feature space. One possible way to calculate the probability is

where \(\left\| \textbf{x}_i-\textbf{x}_s \right\| _{\textbf{w}} = \sum _{r=1}^d w_r \left| x_{ir}-x_{sr} \right| \) refers to the weighted distance between \(\textbf{x}_i\) and \(\textbf{x}_s\) under the weighted feature space, \(w_r\) is the weight of the r-th feature, and \(\sigma >0\) is a preset parameter.

Equation (17) improves the process of calculating the hypothesis margin (12) from two perspectives. First, (17) considers not only the k nearest neighbors of \(\textbf{x}_i\) and \(\textbf{x}_j\) separately but also their probabilities of being the nearest hit, which enriches the neighborhood information and alleviates the sensitivity of (12) to constraints. Second, the hypothesis margin in (17) is calculated in the weighted feature space, which adaptively adjusts the margin with feature weights during the iteration process and then finds discriminative features.
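
To make the two ingredients of the modified margin concrete, the sketch below computes near-hit probabilities over the k nearest neighbors and a per-feature margin for one cannot-link pair. Two points are assumptions rather than statements of the method: the probability is taken proportional to \(\exp(-\Vert \textbf{x}_i-\textbf{x}_s\Vert _{\textbf{w}}/\sigma )\), and the margin is read as the expected difference to the near hits of \(\textbf{x}_j\) minus the expected difference to the near hits of \(\textbf{x}_i\). All function names are hypothetical.

```python
import math


def weighted_l1(a, b, w):
    """Weighted distance sum_r w_r * |a_r - b_r|."""
    return sum(wr * abs(u - v) for wr, u, v in zip(w, a, b))


def near_hit_probs(X, i, w, k=2, sigma=1.0):
    """Return {s: P(x_s is the nearest hit of x_i | w)} over the k nearest
    neighbors of x_i. The exponential kernel is an assumed choice."""
    others = sorted((s for s in range(len(X)) if s != i),
                    key=lambda s: weighted_l1(X[i], X[s], w))[:k]
    kernel = {s: math.exp(-weighted_l1(X[i], X[s], w) / sigma) for s in others}
    total = sum(kernel.values())
    return {s: v / total for s, v in kernel.items()}


def modified_margin(X, i, j, w, k=2, sigma=1.0):
    """Per-feature margin of the cannot-link pair (x_i, x_j): expected
    |x_i - near hit of x_j| minus expected |x_i - near hit of x_i|."""
    miss = near_hit_probs(X, j, w, k, sigma)
    hit = near_hit_probs(X, i, w, k, sigma)
    return [sum(p * abs(X[i][r] - X[s][r]) for s, p in miss.items())
            - sum(p * abs(X[i][r] - X[s][r]) for s, p in hit.items())
            for r in range(len(X[i]))]


X = [[0.0, 0.5], [0.1, 0.4], [0.05, 0.6], [1.0, 0.5], [0.9, 0.6], [0.95, 0.4]]
w = [1.0, 1.0]
probs = near_hit_probs(X, 0, w)
rho = modified_margin(X, 0, 3, w)  # (x_0, x_3) is treated as a cannot-link pair
```

In this toy example, the margin is large on feature 0, which separates the two groups, and close to zero on feature 1, which does not.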

3.2 Must-link preserving regularization

As mentioned before, the hypothesis margin uses only the information contained in the cannot-link constraints. To incorporate the information provided by must-link constraints, we define a must-link preserving regularization that can maintain the data structure of the must-link constraints and make similar samples more similar. The must-link preserving regularization in the weighted feature space is defined as follows:

where the feature weight \(\textbf{w} \ge 0\), and \(\odot \) is the element-wise multiplication operator.

In accordance with (19), the must-link preserving regularization \(J_{R}\left( \textbf{w}\right) \) describes the scatter of the similar samples provided by the must-link constraints in the weighted feature space induced by \(\textbf{w}\). Minimizing \(J_{R}\left( \textbf{w} \right) \) means that similar samples should be as close as possible in the weighted feature space. In other words, the must-link structure in the original space is maintained in the weighted feature space, as a smaller distance signifies a stronger similarity between the corresponding samples.

Now, we express the must-link preserving regularization in matrix form. First, we need to construct a similarity graph \(S^{\mathcal {M}}\) according to the must-link constraints \(\mathcal {M}\) by taking samples in the set X as vertices. In this graph, if \(\left( \textbf{x}_i,\textbf{x}_j\right) \in \mathcal {M}\), then an edge exists between them. Thus, the similarity matrix \(\textbf{S}^{\mathcal {M}}\) is represented as follows:

where \(S_{i j}^{\mathcal {M}}\) is the (i, j)-th entry of \(\textbf{S}^\mathcal {M}\). For simplicity, let \(\textbf{m}_i\) be the weighted image of \(\textbf{x}_i\) in the weighted feature space; that is, \(\textbf{m}_i = \textbf{w} \odot \textbf{x}_i\), \(i=1,\cdots ,n\). By substituting \(\textbf{m}_i,~i=1,\cdots ,n\) and \(S^{\mathcal {M}}\) into (19), we obtain

where \(trace(\cdot )\) denotes the sum of the diagonal elements of a matrix, \(\textbf{M} = [\textbf{m}_1, \cdots , \textbf{m}_n]\in \mathbb {R}^{d \times n}\), \(D_{ii}^{\mathcal {M}}=\sum _{j=1}^{n} S_{ij}^{\mathcal {M}}\) denotes the i-th diagonal element of \(\textbf{D}^\mathcal {M}\), and the Laplacian matrix \(\textbf{L}^{\mathcal {M}}\) is defined as

\[\textbf{L}^{\mathcal {M}} = \textbf{D}^{\mathcal {M}} - \textbf{S}^{\mathcal {M}}. \tag{22}\]

Furthermore, \(\textbf{M}^T = \textbf{F} \textbf{W}\) because \(\textbf{m}_i = \textbf{w} \odot \textbf{x}_i\), where \(\textbf{F}\in \mathbb {R}^{n\times d}\) is the feature matrix, and \(\textbf{W} = diag(\textbf{w})\) is a diagonal matrix. Thus, \(J_{R}\left( \textbf{w} \right) \) can be rewritten as
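
The agreement between the pairwise form and the Laplacian form of the regularization can be checked numerically. The sketch below assumes unit edge weights in the must-link graph and counts each must-link pair once, so constant factors may differ from those in the formal definition. The function names are hypothetical.

```python
def must_link_regularizer(X, must_link, w):
    """Sum of squared distances between must-link pairs after weighting."""
    return sum(sum((wr * (a - b)) ** 2 for wr, a, b in zip(w, X[i], X[j]))
               for i, j in must_link)


def laplacian(n, must_link):
    """Unnormalized Laplacian L = D - S of the must-link graph."""
    S = [[0.0] * n for _ in range(n)]
    for i, j in must_link:
        S[i][j] = S[j][i] = 1.0
    return [[(sum(S[i]) if i == j else 0.0) - S[i][j] for j in range(n)]
            for i in range(n)]


def q_diagonal(X, L):
    """Diagonal of Q = F^T L F, i.e. q_r = f_r^T L f_r for each feature r."""
    n, d = len(X), len(X[0])
    return [sum(X[i][r] * L[i][j] * X[j][r] for i in range(n) for j in range(n))
            for r in range(d)]


X = [[0.0, 0.3], [0.1, 0.9], [1.0, 0.2], [0.9, 0.8]]
must_link = [(0, 1), (2, 3)]
w = [0.5, 2.0]
direct = must_link_regularizer(X, must_link, w)
q = q_diagonal(X, laplacian(len(X), must_link))
via_q = sum(wr ** 2 * qr for wr, qr in zip(w, q))
```

Both routes give the same value, and only the diagonal of \(\textbf{F}^T \textbf{L}^{\mathcal {M}} \textbf{F}\) is needed because \(\textbf{W}\) is diagonal.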

3.3 Optimization problem and solution

On the basis of the modified hypothesis margin (17) and the must-link preserving regularization (23), we construct the optimization problem for CFW. That is,

where \(\textbf{z}\) is the margin vector calculated by the cannot-link constraints, \(\Vert \textbf{w}\Vert _{1}\) is the L1-norm of \(\textbf{w}\), \(\textbf{Q}=\textbf{F}^T \textbf{L}^{\mathcal {M}} \textbf{F} \in \mathbb {R}^{d \times d}\), the parameter \(\lambda _1 \ge 0\) controls the sparsity level in the weight vector \(\textbf{w}\), and the parameter \(\lambda _2 \ge 0\) controls the must-link information of the samples. Here, the margin vector \(\textbf{z}\) is expressed by

where \(\varvec{\rho }\left( \textbf{x}_i, \textbf{x}_j\right) \) is defined in (17).

The objective function of (24) consists of three terms. The first term in (24) is minimized to maximize the modified hypothesis margin calculated from the cannot-link constraints. In addition, the exponential and logarithmic functions are used to adjust the range of \(\textbf{w}^T\textbf{z}\) and encourage the model to produce accurate predictions. The second term, \(\Vert \textbf{w}\Vert _{1}\), is the L1-norm regularization term, which encourages sparsity in the weight vector \(\textbf{w}\) and can automatically select features. A larger \(\lambda _{1}\) value leads to stronger sparsity enforcement. The third term in (24) focuses on preserving a structure similar to that provided by the must-link constraints in the weighted feature space.

To solve CFW, we first prove that the optimization problem (24) is convex; thus it must have a globally optimal solution. Then, we demonstrate how to use the gradient descent method to obtain the final solution.

Theorem 1

Given the feature matrix \(\textbf{F}\in \mathbb {R}^{n \times d}\) and the Laplacian matrix \(\textbf{L}^{\mathcal {M}}\), the must-link preserving regularization (19) is a convex function with respect to \(\textbf{w}\), where \(\textbf{w} \ge 0\).

Theorem 2

Given the feature matrix \(\textbf{F}\in \mathbb {R}^{n \times d}\) and the Laplacian matrix \(\textbf{L}^{\mathcal {M}}\), the optimization problem (24) is a convex problem with respect to \(\textbf{w}\), where \(\textbf{w} \ge 0\).

Corollary 1

Given the feature matrix \(\textbf{F}\in \mathbb {R}^{n \times d}\) and the Laplacian matrix \(\textbf{L}^{\mathcal {M}}\), the optimization problem (24) has a global solution with respect to \(\textbf{w}\).

The proofs of Theorems 1 and 2 are given in Appendices A and B, respectively. Theorem 1 indicates that when the margin vector \(\textbf{z}\) is fixed, \(J_{R}\left( \textbf{w} \right) \) is a convex function with respect to \(\textbf{w}\). Theorem 2 implies that the problem in (24) is a convex problem. For convex problems, it is known that any locally optimal point is also globally optimal. Thus, Corollary 1 holds true, and its proof can be omitted. Naturally, the convex problem could be solved by applying the gradient descent method.

For the purpose of applying the gradient descent method, when the margin vector \(\textbf{z}\) is fixed, we need to find the first partial derivative of \(J(\textbf{w})\) with respect to \(\textbf{w}\), which can be expressed as follows:

where \(\textbf{q}=\left[ Q_{11},\cdots ,Q_{dd}\right] ^T\in \mathbb {R}^{d}\) is a vector composed of the diagonal elements of \(\textbf{Q}\). After obtaining \(\nabla J(\textbf{w})\), we can iteratively update the weight vector \(\textbf{w}\). Let t be the iteration variable. Then, we have

where \(0 < \eta \le 1\) is the learning rate. Because of the constraint \(\textbf{w} \ge 0\), \(\textbf{w}\) is updated according to the following rule in each iteration:
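
A minimal sketch of the update with a fixed margin vector is given below. It rests on several assumptions: the objective is taken to be \(\log (1+\exp (-\textbf{w}^T\textbf{z})) + \lambda _1\Vert \textbf{w}\Vert _1 + \lambda _2\sum _r Q_{rr}w_r^2\), the non-negativity rule is implemented by clipping negative weights to zero, and the values of \(\textbf{z}\) and \(\textbf{q}\) are invented for illustration. The function names are hypothetical.

```python
import math


def objective(w, z, q, lam1, lam2):
    """Assumed objective: logistic margin loss + L1 term + must-link term."""
    wz = sum(a * b for a, b in zip(w, z))
    return (math.log1p(math.exp(-wz)) + lam1 * sum(abs(a) for a in w)
            + lam2 * sum(qr * a * a for qr, a in zip(q, w)))


def gradient(w, z, q, lam1, lam2):
    """Gradient of the assumed objective on the region w >= 0."""
    wz = sum(a * b for a, b in zip(w, z))
    s = 1.0 / (1.0 + math.exp(wz))  # derivative factor of the logistic term
    return [-s * zr + lam1 + 2.0 * lam2 * qr * wr
            for wr, zr, qr in zip(w, z, q)]


def projected_step(w, z, q, lam1, lam2, eta):
    """One gradient step followed by clipping negative weights to zero."""
    g = gradient(w, z, q, lam1, lam2)
    return [max(wr - eta * gr, 0.0) for wr, gr in zip(w, g)]


z = [2.0, -0.5, 0.1]     # fixed margin vector (hypothetical values)
q = [0.02, 0.72, 0.30]   # diagonal of Q (hypothetical values)
w = [1.0, 1.0, 1.0]      # all-ones initialization
history = [objective(w, z, q, 0.1, 0.1)]
for _ in range(200):
    w = projected_step(w, z, q, 0.1, 0.1, eta=0.05)
    history.append(objective(w, z, q, 0.1, 0.1))
```

With these toy values, the objective does not increase from one iteration to the next, and the weight of the feature with a negative margin is driven to exactly zero, which illustrates how the L1 term and the clipping step together produce sparse weights.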

3.4 Algorithm description

CFW implements semi-supervised feature selection under the constraint-guided learning framework. CFW maximizes the hypothesis margin to magnify the discriminative features using cannot-link constraints and minimizes the must-link preserving regularization to strengthen the local structure of the similar samples.

A detailed description of the proposed algorithm is given in Algorithm 1. In step 1, CFW starts by initializing the feature weight vector \(\textbf{w}(0)=[1,\cdots ,1]^{T}\in \mathbb {R}^{d}\) and the margin vector \(\textbf{z}(0)=[0, \cdots , 0]^{T}\in \mathbb {R}^d\), where d is the number of features. Step 2 constructs a Laplacian matrix \(\textbf{L}^\mathcal {M}\) using (22). Steps 3–7 iteratively update the weight vector \(\textbf{w}\) based on the calculated margin vector until one of the preset convergence conditions is satisfied. The final weight vector \(\textbf{w}\) is returned in step 8.

Subsequently, we analyze the computational complexity of CFW. The computational complexity of constructing the Laplacian matrix \(\textbf{L}^\mathcal {M}\) by (22) is \(O\left( n^2d\right) \), where n is the number of samples, and d is the number of features. Step 4 computes the margin vector \(\textbf{z}(t)\) via (25), which has a computational complexity level of \(O\left( \left| \mathcal {C}\right| nd\right) \), where \(\left| \mathcal {C}\right| \) is the number of cannot-link constraints. Step 5 updates \(\textbf{w}\left( t\right) \) and has a computational complexity level of \(O\left( nd\right) \). In each iteration, CFW has a computational complexity level of \(O\left( \left| \mathcal {C}\right| nd\right) \). Then the total computational complexity of CFW is \(O\left( T\left| \mathcal {C}\right| nd + n^2d\right) \).

Lastly, we delve into the properties of CFW. According to Theorem 2 and Corollary 1, the problem formulated in (24) is convex with respect to \(\textbf{w}\) and ensures a globally optimal solution. CFW is guaranteed to converge if \(\textbf{z}(t)\) remains fixed and the gradient descent method is applied to solve (24). However, \(\textbf{z}(t)\) evolves during the iteration process. The subsequent theorem explores how changes in \(\textbf{z}(t)\) influence the solution to (24).

Theorem 3

Given the learning procedure of CFW in Algorithm 1, the following inequalities

hold true, where \(J(\textbf{w}(t) \mid \textbf{z}(t))\) represents the objective function \(J(\textbf{w}(t))\) when \(\textbf{z}(t)\) is fixed, \(t\ge 0\).

The proof of Theorem 3 is provided in Appendix C. Theorem 3 demonstrates that \(J(\textbf{w}(t))\) represents a better solution than \(J(\textbf{w}(t-1))\) when \(\textbf{z}(t)\) is fixed, which could be attributable to the gradient descent update rule. In essence, regardless of changes in \(\textbf{z}(t)\), the solution derived in the current iteration is better than the one from the previous iteration.

4 Experiments

To validate the feasibility and effectiveness of CFW, we perform extensive experiments on nine public datasets. The information of these datasets is listed in Table 1, including the number of samples (\(\#\) Sample), the number of features (\(\#\) Feature), and the number of classes (\(\#\) Class) in each dataset. The features contained in all datasets are normalized to the interval of \(\left[ 0,1\right] \).
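
The normalization step can be sketched as follows, assuming per-feature min-max scaling, since the exact scheme is not specified here. The function name `min_max_normalize` is hypothetical.

```python
def min_max_normalize(X):
    """Rescale every feature (column) of X to [0, 1].

    Constant features are mapped to 0 to avoid division by zero.
    """
    cols = list(zip(*X))
    lo = [min(c) for c in cols]
    hi = [max(c) for c in cols]
    return [[(v - l) / (h - l) if h > l else 0.0
             for v, l, h in zip(x, lo, hi)]
            for x in X]


X = [[2.0, 10.0, 5.0], [4.0, 30.0, 5.0], [3.0, 20.0, 5.0]]
X_norm = min_max_normalize(X)
```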

All experiments were carried out in PyCharm 2020 and run in a hardware environment with an Intel Core i9 CPU at 2.50 GHz and 32 GB of RAM.

4.1 Analysis of CFW

We analyze our proposed CFW method according to its convergence, sparsity and discriminant ability, parameter sensitivity, and number of constraints. Each of the nine datasets [25,26,27,28,29,30,31,32,33] in Table 1 is randomly divided into a training set with 2/3 of the total samples and a test set with the remaining samples. In addition, we randomly select samples from the training set to construct a certain number of pairwise constraints, where one half of the constraints are must-link constraints, and the other half are cannot-link constraints.
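
One way to build such constraint sets from labeled training samples is sketched below. The sampling scheme (uniform random pairs, with duplicates rejected) is an assumption, and the function name `sample_constraints` is hypothetical.

```python
import random


def sample_constraints(labels, n_pairs, seed=0):
    """Draw n_pairs/2 must-link and n_pairs/2 cannot-link index pairs
    by comparing the labels of randomly chosen training samples."""
    rng = random.Random(seed)
    must_link, cannot_link = set(), set()
    half = n_pairs // 2
    while len(must_link) < half or len(cannot_link) < half:
        i, j = sorted(rng.sample(range(len(labels)), 2))
        target = must_link if labels[i] == labels[j] else cannot_link
        if len(target) < half:
            target.add((i, j))
    return sorted(must_link), sorted(cannot_link)


labels = [0] * 10 + [1] * 10   # hypothetical training labels
M, C = sample_constraints(labels, n_pairs=20)
```

After the constraints are built, the labels themselves are no longer needed by a constraint-guided method, since only the pairs in the two sets are used.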

4.1.1 Convergence

In experiments, we select 100 pairwise constraints, including 50 must-link constraints and 50 cannot-link constraints. Let \(\eta =0.01\) in (27), \(k=5\) in (17), which follows the setting used in [16], and the regularization parameters \(\lambda _1=1\) and \(\lambda _2=1\). The convergence of CFW can be validated by observing the variation exhibited by the objective function with respect to the iteration variable. Thus, we consider only the maximum number of iterations as the stop condition of CFW and set \(T=200\).

Figure 1 shows the trend curves yielded by the objective function vs. the number of iterations on the nine datasets. From Fig. 1, we can see that the objective function arrives at its minimum value after a certain number of iterations, which indicates that CFW is convergent. Generally, CFW converges within 50 iterations on most datasets, such as CNS (Fig. 1b) and Glioma (Fig. 1d). Notably, CFW can converge faster or slower, depending on the utilized dataset. For example, CFW converges quickly on the Colon dataset (Fig. 1c) and slowly on the CNAE-9 dataset (Fig. 1a). In short, CFW is convergent.

Based on the experiments conducted above, we find that CFW converges within 100 iterations on all datasets, with many requiring fewer than 50 iterations. To provide a comprehensive overview, we set the number of iterations to 100 and summarize the running times required by the CFW algorithm on these datasets in Table 2.

Table 2 shows that CFW is efficient, with execution times under one minute on five datasets. For instance, a running time of only 25 seconds is required on the CNS dataset. The maximum recorded running time for CFW is 321 seconds on the CNAE-9 dataset. As analyzed in Section 3.4, the computational complexity of CFW depends only on the number of samples n and the number of features d when T and \(\mathcal {C}\) are given. Thus, CFW takes more time on datasets with large numbers of samples and features, which is supported by the running times in Table 2.

4.1.2 Sparsity and discriminant capability

The experimental settings employed here are the same as those utilized in Section 4.1.1 except for the stopping conditions of CFW. Let \(T=100\) and \(\theta =0.001\) in step 3 of Algorithm 1. Because CFW is developed based on Relief-SC, we compare the two methods in terms of their sparsity and discriminant capabilities.

First, we observe the sparsity of CFW by plotting its feature weights on nine datasets, as shown in Fig. 2. Although both methods exhibit certain degrees of sparsity, CFW is much sparser than Relief-SC. We count the numbers of non-zero weights produced by both CFW and Relief-SC for each of these nine datasets and summarize them in Table 3, which further implies the good sparsity of CFW. For example, 2000 features are contained in the Colon dataset. CFW obtains 214 non-zero weights, while Relief-SC generates 1979 non-zero weights. Note that Relief-SC cannot always obtain sparse weights for some datasets, such as ORL. At the same time, we list the accuracies achieved by the nearest neighbor (NN) classifier with the features selected by both methods in Table 3. The findings indicate that CFW has better classification performance and can select more discriminative features than Relief-SC.

To further validate the discriminant capability of the features, we use the idea behind the Fisher criterion. Namely, good features minimize the must-link scatter and maximize the cannot-link scatter. Let D(X) be the ratio of the must-link scatter to the cannot-link scatter with respect to the set X, which can be defined as follows:

Generally, the smaller D(X) is, the stronger the discriminant ability the features in the set X have. Let \(X_w\) be the weighted feature space, i.e., \(\textbf{x}_w=\textbf{w} \odot \textbf{x}\), where \(\textbf{x}_w\in X_w\) and \(\textbf{x}\in X\). Thus, we hope that \(D(X_w)\) is much smaller than D(X). In other words, it is better to make \(D(X)/D(X_w)\) large.
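As a rough illustration of this criterion, the sketch below assumes that each scatter is the sum of squared Euclidean distances over the corresponding constraint pairs; this is one common Fisher-style form, and the exact normalization used for D(X) is an assumption here. The function names and toy data are hypothetical.

```python
from typing import List, Sequence, Tuple

Pair = Tuple[int, int]


def scatter(X: Sequence[Sequence[float]], pairs: Sequence[Pair]) -> float:
    """Sum of squared Euclidean distances over the given index pairs."""
    return sum(
        sum((a - b) ** 2 for a, b in zip(X[i], X[j]))
        for i, j in pairs
    )


def scatter_ratio(X, must_link: Sequence[Pair], cannot_link: Sequence[Pair]) -> float:
    """Assumed form of D(X): must-link scatter divided by cannot-link scatter."""
    return scatter(X, must_link) / scatter(X, cannot_link)


def apply_weights(X, w: Sequence[float]) -> List[List[float]]:
    """Map each sample into the weighted feature space (element-wise product)."""
    return [[w_r * x_r for w_r, x_r in zip(w, row)] for row in X]


# Toy data: feature 0 separates the two groups, feature 1 is noise.
X = [[0.0, 1.0], [0.1, 5.0], [1.0, 1.2], [1.1, 4.8]]
must_link = [(0, 1), (2, 3)]
cannot_link = [(0, 2), (1, 3)]
w = [1.0, 0.0]  # hypothetical sparse weight vector that drops the noisy feature

d_x = scatter_ratio(X, must_link, cannot_link)
d_xw = scatter_ratio(apply_weights(X, w), must_link, cannot_link)
print(d_x, d_xw, d_x / d_xw)
```

In this toy case, zeroing the noisy feature shrinks the must-link scatter far more than the cannot-link scatter, so the ratio \(D(X)/D(X_w)\) is well above 1.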

Table 4 lists values of D(X), \(D(X_w)\) and \(D(X)/D(X_w)\) obtained by CFW and Relief-SC. As can be seen from Table 4, the \(D(X_w)\) values obtained by CFW are much smaller than the D(X) values for all datasets, while the \(D(X_w)\) values obtained by Relief-SC are not always smaller than the D(X) values of all datasets. The \(D(X)/D(X_w)\) values obtained by CFW are all greater than 1, which indicates that CFW selects highly discriminant feature subsets. However, the \(D(X)/D(X_w)\) values obtained by Relief-SC are near 1 and even less than 1, which suggests that Relief-SC does not significantly improve the discriminant ability of the selected feature subset.

4.1.3 Parameter sensitivity

Here, we investigate the sensitivity of the parameters \(\lambda _1\) and \(\lambda _2\) in CFW and keep the other experimental settings unchanged. The value range for these two parameters is set to \(\{0.01, 0.1, 1, 10, 100\}\).

The parameter analysis results are given in Fig. 3. As evident from this figure, the regularization parameters have different effects on the classification performance achieved on different datasets. For example, the CNAE-9 dataset (Fig. 3a) is significantly influenced by the parameters \(\lambda _1\) and \(\lambda _2\), exhibiting substantial variations. On some datasets (Colon, Glioma, Normal, and Prostate-GE), the accuracy of CFW fluctuates with the parameters. Conversely, the remaining datasets display relatively minimal fluctuations.

Furthermore, Fig. 3(c), (d), (e), (f), and (i) illustrate that the proposed algorithm achieves the highest classification accuracy when \(\lambda _1=1\) and \(\lambda _2=1\). Thus, we set \(\lambda _1=1\) and \(\lambda _2=1\) in the following experiments.

4.1.4 Number of constraints

Now, we analyze the impact of the number of pairwise constraints on the classification accuracy of CFW and keep the other experimental settings the same as before. The number of total pairwise constraints varies within the set \(\{4, 20, 40, \cdots , 180, 200\}\), where the number of must-link constraints is the same as the number of cannot-link constraints.

Figure 4 plots curves showing the accuracy vs. number of constraints obtained by CFW on each of the nine datasets. Notably, the classification accuracy fluctuates with the number of constraints. At the beginning, the classification accuracy varies significantly as the number of pairwise constraints increases, which is consistent with our expectation that more constraints induce better performance. However, once the number of pairwise constraints exceeds a certain value (80 in these experiments), the classification performance displays only minor fluctuations, which means that adding further constraints does not improve the performance of the model. The main reason for this finding is the limitation imposed by supervised information. In the experiments, pairwise constraints are constructed from a limited training set that provides limited supervised information. The small observed classification performance fluctuations may be due to the randomness of constructing constraints.

4.2 Comparison under constraint-guided learning framework

4.2.1 Experimental setting

A 3-fold cross-validation method [34] is employed on the nine datasets. Specifically, each dataset is randomly divided into three subsets, where two subsets are used for training and the third subset is employed for testing. Thus, 3 trials are implemented in the 3-fold cross-validation process. The average results of ten 3-fold cross-validation experiments, 30 trials in total, are reported. In each trial, we construct 50 cannot-link and 50 must-link constraints from the training set.
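One simple way to build such constraint sets from labeled training samples can be sketched as follows. The sampling scheme (uniform, without replacement, over all same-label and different-label pairs) is an assumption made for illustration, and the function name `sample_constraints` is hypothetical.

```python
import random
from itertools import combinations
from typing import List, Sequence, Tuple

Pair = Tuple[int, int]


def sample_constraints(
    labels: Sequence[int], n_must: int, n_cannot: int, seed: int = 0
) -> Tuple[List[Pair], List[Pair]]:
    """Draw must-link and cannot-link pairs uniformly at random from labels."""
    rng = random.Random(seed)
    must: List[Pair] = []
    cannot: List[Pair] = []
    # Enumerate every unordered pair once and sort it by label agreement.
    for i, j in combinations(range(len(labels)), 2):
        (must if labels[i] == labels[j] else cannot).append((i, j))
    # random.sample raises ValueError if more pairs are requested than exist.
    return rng.sample(must, n_must), rng.sample(cannot, n_cannot)


labels = [0, 0, 0, 1, 1, 1]  # toy labels for six training samples
must_link, cannot_link = sample_constraints(labels, n_must=2, n_cannot=2, seed=0)
print(must_link, cannot_link)
```

Changing `seed` between trials mimics the randomness of constraint construction across repeated runs.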

Six CS-based feature selection methods are compared with CFW, including CS [20], LSDF [16], CLS [21], Relief-SC [22], SCS [17], and HM-ICS [23]. In addition, a part of CFW is related to Relief-SC, and this component is called CFW-SC. In detail, the objective function of CFW-SC contains the first two terms in (24) and can also be solved by the gradient descent method. Here, CFW-SC is included in our list of comparison methods.

The parameter settings of the compared supervised and semi-supervised feature selection algorithms are all derived from their corresponding references. Note that the supervised learning algorithms, e.g., CS, handle only pairwise constraints. The semi-supervised learning algorithms utilize not only the constraints but also the set of unlabeled training samples. In both CFW and CFW-SC, we set \(k=5\), \(\eta =0.01\), \(\theta =0.001\), \(T=100\), and \(\lambda _1=1\). Additionally, let \(\lambda _2=1\) for CFW. Because the compared methods cannot determine the optimal number of features, we assume that the number of optimal features varies within the set \(\{20,40,\cdots ,200\}\).

4.2.2 Experimental results

Figure 5 presents comparisons among the results produced by the different feature selection algorithms on the nine datasets under the constraint-guided learning framework. We first analyze the experimental results as a whole. Fig. 5 indicates that CFW generally achieves better classification performance than the other compared methods. The curves depicted in Fig. 5(b), (d), (f), and (g) clearly indicate that CFW consistently surpasses the other methods for all 10 feature numbers on the CNS, Glioma, Novartis, and ORL datasets. On the CNAE-9, Colon, and Normal datasets, CFW achieves the highest classification accuracies, but it is not superior for all 10 feature numbers. Next, we analyze CFW, CFW-SC, and Relief-SC. As a variant of Relief-SC, CFW-SC achieves higher accuracies than Relief-SC on most datasets, as demonstrated in Fig. 5(b), (c), (d), (e), (g), and (i). By incorporating the must-link constraints into CFW-SC, CFW can obtain more supervised information and then achieve better performance.

According to Fig. 5, we summarize the highest average accuracies and the corresponding standard deviations produced by the compared methods in Table 5, where the bold values represent the best results among the compared methods, and the numbers in brackets represent the optimal number of features. It can be seen that CFW-SC performs much better than Relief-SC on all datasets except CNAE-9 and Novartis, which validates the efficiency of CFW-SC in terms of improving Relief-SC by enriching the neighborhood information. Additionally, CFW is superior to CFW-SC on all datasets, which suggests that it is necessary to introduce the supervised information provided by must-link constraints. As evident from the results presented in Table 5, CFW consistently achieves the best performance across all datasets. For example, on the CNAE-9 dataset, CFW achieves a 1.92% higher accuracy rate than that of HM-ICS (the second best method) and a 2.58% improvement over Relief-SC (the third best method). These findings demonstrate the superiority of CFW with respect to selecting discriminative features in comparison with other methods.

The superiority of CFW can be ascribed to two key factors. First, the innovative hypothesis margin calculation formula developed for CFW enhances the discriminative power of the selected features. Second, the incorporation of the must-link preserving regularization term ensures that the chosen features effectively preserve the information embedded within must-link constraints.

4.3 Comparison under label-guided learning framework

4.3.1 Experimental setup

Under the label-guided learning framework, we can construct pairwise constraints from the given labeled samples. Thus, we compare our CFW and CFW-SC approaches with some feature selection methods under a label-guided learning framework. The compared methods are described as follows.

- LPLIR [16]. A local preserving logistic I-Relief (LPLIR) algorithm is a semi-supervised feature selection method that aims to maximize the expected margin of the given labeled data and retain the local structural information of all data.

- S2LFS [7]. A semi-supervised local feature selection (S2LFS) method selects different discriminative feature subsets to represent samples from different classes.

- ASLCGLFS [35]. A semi-supervised feature selection via adaptive structure learning and constrained graph learning (ASLCGLFS) algorithm introduces adaptive structure learning and graph learning to select features.

- SFS-AGGL [36]. A semi-supervised feature selection method based on an adaptive graph with global and local information (SFS-AGGL) effectively leverages the structural distribution information from labeled data to derive label information for unlabeled samples.

As before, 3-fold cross-validation experiments are repeated ten times. We report the average results obtained across the 30 trials. In each trial, 40% of the training samples are treated as labeled data, and the remaining samples are taken as unlabeled data. For both CFW and CFW-SC, we use the labeled data to construct the must-link constraint set \(\mathcal {M}\) and the cannot-link constraint set \(\mathcal {C}\) separately. The number of selected features is also set within the range of [20, 200] with an interval of 20.

4.3.2 Outcome of experiments

We compare the methods described above under the label-guided learning framework. The curves demonstrating the accuracy vs. the number of features are shown in Fig. 6. From Fig. 6, we can see that CFW generally outperforms the other methods.

Table 6 presents a summary of Fig. 6, where the highest average accuracy of each method and the corresponding standard deviation are listed, the bold values are the best results obtained among the compared methods, and the numbers in parentheses represent the optimal feature numbers. Table 6 indicates that CFW is superior to the other methods on eight out of the nine datasets. For example, CFW achieves the highest accuracy of 77.34% on the Colon dataset, while LPLIR yields the second best accuracy of 76.38%. Only on the ORL dataset does CFW fail to achieve the best result.

CFW mostly stands out when compared to these label-guided methods because it makes comprehensive use of both must-link and cannot-link constraints. By employing constraints, CFW captures the constraint structure of the training data, enabling the identification of features that not only effectively differentiate between classes but also respect the intrinsic relationships indicated by the constraints.

4.4 Statistical tests

To conduct a thorough comparison, we perform the Friedman test [34] and the corresponding Bonferroni-Dunn test [37] on the experimental results described above. The Bonferroni-Dunn test results indicate significant differences between CFW and the other algorithms. The critical difference between any two methods is defined as follows:

\[CD_{\alpha }=q_{\alpha }\sqrt{\frac{l(l+1)}{6N}},\]

where \(\alpha \) corresponds to the preset threshold value, l denotes the number of methods, N represents the number of datasets, and \(q_{\alpha }\) is the critical value.

In this study, we set \(\alpha =0.1\) by following the guidelines outlined in Refs. [38, 39]. Therefore, the statistical tests are conducted at a confidence level of 90\(\%\). Referring to Ref. [37], we obtain a critical value of \(q_{\alpha }=2.241\). In the comparison experiments conducted under the constraint-guided learning framework, \(l=8\) and \(N=9\). Consequently, the critical difference is \(CD_{0.1}=2.59\). If the difference between two algorithms is greater than 2.59, then there is a significant distinction between them. In the comparison experiments conducted under the label-guided framework, \(l=6\) and \(N=9\). Then, the corresponding critical difference is \(CD_{0.1}=1.89\).
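The critical difference for the constraint-guided setting can be reproduced with a few lines of code. The sketch assumes the standard Bonferroni-Dunn expression, \(q_{\alpha }\sqrt{l(l+1)/(6N)}\); the function name is hypothetical.

```python
import math


def critical_difference(q_alpha: float, n_methods: int, n_datasets: int) -> float:
    """Bonferroni-Dunn critical difference (standard form, assumed here)."""
    return q_alpha * math.sqrt(n_methods * (n_methods + 1) / (6.0 * n_datasets))


# Constraint-guided framework: l = 8 methods, N = 9 datasets, q_alpha = 2.241.
cd = critical_difference(2.241, 8, 9)
print(round(cd, 2))
```

A method is then judged significantly different from CFW when the gap between their average ranks exceeds this value.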

Table 7 displays the rank differences obtained through the Friedman test with the Bonferroni-Dunn test when comparing CFW with the other methods. All rank differences, which are represented by the values contained in the second row of Table 7, are found to be greater than the critical difference threshold of 2.59, which suggests that CFW is significantly superior to the seven compared methods. Similarly, the rank differences presented in the fourth row of Table 7 also imply that CFW performs significantly better than the other five methods. In short, CFW has excellent performance regardless of the employed learning framework.

5 Conclusions

This paper focuses on the task of semi-supervised feature selection under the constraint-guided learning framework and proposes a novel method called CFW. The proposed CFW integrates the hypothesis margin concept and the constraint information provided by pairwise constraints. CFW first allocates probabilities to neighbor samples, thereby modifying the calculation formula of the hypothesis margin. The modified hypothesis margin term aims to identify features with significant discriminant capabilities. Subsequently, the L1 regularization term is incorporated into the model to guarantee the sparsity of CFW, thereby achieving the purpose of automatic feature selection. Moreover, CFW includes a must-link preserving regularization term, which is aimed at selecting features that maintain must-link information.

A comprehensive series of experiments demonstrates the effectiveness of CFW. First, we assess the convergence, sparsity and discriminant ability, parameter sensitivity, and number of constraints of CFW. The experimental results show that CFW can converge quickly while exhibiting good sparsity and discriminant ability. Subsequently, CFW is compared with various supervised and semi-supervised methods on nine high-dimensional datasets under two learning frameworks. The findings show that CFW achieves good classification performance when using an NN as the classifier. Finally, to statistically compare the performance of CFW with that of other algorithms, the Friedman test is performed on the experimental results. The statistical test results suggest that CFW significantly outperforms the other compared algorithms.

However, our method is not without its limitations. The determination of the parameters, \(\lambda _{1}\) and \(\lambda _{2}\), requires careful tuning. Although we provide guidelines for the parameter settings based on our experiments, the optimal settings may vary across different datasets and application scenarios. Though CFW exhibits robust performance across a variety of datasets, scaling this method to extremely large datasets is a challenge. The computational complexity of CFW significantly increases for datasets with vast numbers of samples and features. Future efforts will be dedicated to overcoming these challenges, with the aim of improving the scalability of CFW to efficiently accommodate larger datasets.

Data availability and material

Data is openly available in public repositories. http://archive.ics.uci.edu/ml/index.php; https://cam-orl.co.uk/facedatabase.html.

References

Sheikhpour R, Sarram MA, Gharaghani S, Chahooki MAZ (2017) A survey on semi-supervised feature selection methods. Pattern Recogn 64:141–158

Bouchlaghem Y, Akhiat Y, Amjad S (2022) Feature selection: A review and comparative study. E3S Web of Conferences 351:01046

Pang Q, Zhang L (2020) Semi-supervised neighborhood discrimination index for feature selection. Knowl-Based Syst 204:106224

Chen H, Chen H, Li W, Li T, Luo C, Wan J (2022) Robust dual-graph regularized and minimum redundancy based on self-representation for semi-supervised feature selection. Neurocomputing 490:104–123

Jin L, Zhang L, Zhao L (2023) Max-difference maximization criterion: A feature selection method for text categorization. Front Comp Sci 17(1):171337

Jin L, Zhang L, Zhao L (2023) Feature selection based on absolute deviation factor for text classification. Inform Process Manag 60(3):103251

Li Z, Tang J (2021) Semi-supervised local feature selection for data classification. Inf Sci 64(9):192108

Pang Q, Zhang L (2021) A recursive feature retention method for semi-supervised feature selection. Int J Mach Learn Cybern 12(9):2639–2657

Tang B, Zhang L (2019) Multi-class semi-supervised logistic I-Relief feature selection based on nearest neighbor. In: Advances in knowledge discovery and data mining. pp 281–292

Tang B, Zhang L (2020) Local preserving logistic I-Relief for semi-supervised feature selection. Neurocomputing 399:48–64

Sun Y, Todorovic S, Goodison S (2009) Local-learning-based feature selection for high-dimensional data analysis. IEEE Trans Pattern Anal Mach Intell 32(9):1610–1626

Xu J, Tang B, He H, Man H (2016) Semi-supervised feature selection based on relevance and redundancy criteria. IEEE Trans Neural Netw Learn Syst 28(9):1974–1984

Wang C, Hu Q, Wang X, Chen D, Qian Y, Dong Z (2017) Feature selection based on neighborhood discrimination index. IEEE Trans Neural Netw Learn Syst 29(7):2986–2999

He X, Cai D, Niyogi P (2005) Laplacian score for feature selection. Adv Neural Inf Process Syst 18:507–514

Peng H, Long F, Ding C (2005) Feature selection based on mutual information criteria of max-dependency, max-relevance, and min-redundancy. IEEE Trans Pattern Anal Mach Intell 27(8):1226–1238

Zhao J, Lu K, He X (2008) Locality sensitive semi-supervised feature selection. Neurocomputing 71(10–12):1842–1849

Salmi A, Hammouche K, Macaire L (2020) Similarity-based constraint score for feature selection. Knowl-Based Syst 209:106429

Kalakech M, Biela P, Macaire L, Hamad D (2011) Constraint scores for semi-supervised feature selection: A comparative study. Pattern Recogn Lett 32(5):656–665

Hindawi M, Allab K, Benabdeslem K (2011) Constraint selection-based semi-supervised feature selection. In: 2011 IEEE 11th international conference on data mining. pp 1080–1085

Zhang D, Chen S, Zhou Z-H (2008) Constraint score: A new filter method for feature selection with pairwise constraints. Pattern Recogn 41(5):1440–1451

Benabdeslem K, Hindawi M (2011) Constrained Laplacian score for semi-supervised feature selection. In: Joint European conference on machine learning and knowledge discovery in databases. pp 204–218

Hijazi S, Kalakech M, Hamad D, Kalakech A (2018) Feature selection approach based on hypothesis-margin and pairwise constraints. In: 2018 IEEE Middle East and North Africa Communications Conference, pp 1–6

Chen X, Zhang L, Zhao L (2023) Iterative constraint score based on hypothesis margin for semi-supervised feature selection. Knowl-Based Syst 271:110577

Sun Y (2007) Iterative Relief for feature weighting: Algorithms, theories, and applications. IEEE Trans Pattern Anal Mach Intell 29(6):1035–1051

Asuncion A, Newman D (2013) UCI machine learning repository. University of California, Irvine, School of Information and Computer Sciences. http://archive.ics.uci.edu/ml

Pomeroy S, Tamayo P, Gaasenbeek M, Sturla L, Angelo M, McLaughlin M, Kim J, Goumnerova L, Black P, Lau C (2002) Gene expression-based classification and outcome prediction of central nervous system embryonal tumors. Nature 415(24):436–442

Alon U, Barkai N, Notterman DA, Gish K, Ybarra S, Mack D, Levine AJ (1999) Broad patterns of gene expression revealed by clustering analysis of tumor and normal colon tissues probed by oligonucleotide arrays. Proc Natl Acad Sci 96(12):6745–6750

Zhao Z, Zhang K-N, Wang Q, Li G, Zeng F, Zhang Y, Wu F, Chai R, Wang Z, Zhang C (2021) Chinese glioma genome atlas (CGGA): a comprehensive resource with functional genomic data from chinese glioma patients. Genom Proteom Bioinf 19(1):1–12

Ramaswamy S, Tamayo P, Rifkin R, Mukherjee S, Yeang C-H, Angelo M, Ladd C, Reich M, Latulippe E, Mesirov JP (2001) Multiclass cancer diagnosis using tumor gene expression signatures. Proc Natl Acad Sci 98(26):15149–15154

Su AI, Cooke MP, Ching KA, Hakak Y, Walker JR, Wiltshire T, Orth AP, Vega RG, Sapinoso LM, Moqrich A (2002) Large-scale analysis of the human and mouse transcriptomes. Proc Natl Acad Sci 99(7):4465–4470

Gross R (2005) Face databases. In: Handbook of face recognition. Springer, Pittsburgh, USA pp 301–327

Singh D, Febbo PG, Ross K, Jackson DG, Manola J, Ladd C, Tamayo P, Renshaw AA, D’Amico AV, Richie JP (2002) Gene expression correlates of clinical prostate cancer behavior. Cancer Cell 1(2):203–209

Yeoh E-J, Ross ME, Shurtleff SA, Williams WK, Patel D, Mahfouz R, Behm FG, Raimondi SC, Relling MV, Patel A (2002) Classification, subtype discovery, and prediction of outcome in pediatric acute lymphoblastic leukemia by gene expression profiling. Cancer Cell 1(2):133–143

Friedman M (1937) The use of ranks to avoid the assumption of normality implicit in the analysis of variance. J Am Stat Assoc 32(200):675–701

Lai J, Chen H, Li W, Li T, Wan J (2022) Semi-supervised feature selection via adaptive structure learning and constrained graph learning. Knowl-Based Syst 251:109243

Yi Y, Zhang H, Zhang N, Zhou W, Huang X, Xie G, Zheng C (2024) SFS-AGGL: Semi-supervised feature selection integrating adaptive graph with global and local information. Information 15(1):57

Dunn OJ (1961) Multiple comparisons among means. J Am Stat Assoc 56(293):52–64

Chen H, Tiňo P, Yao X (2009) Predictive ensemble pruning by expectation propagation. IEEE Trans Knowl Data Eng 21(7):999–1013

Huang X, Zhang L, Wang B, Li F, Zhang Z (2018) Feature clustering-based support vector machine recursive feature elimination for gene selection. Appl Intell 48(3):594–607

Funding

This work was supported in part by the Natural Science Foundation of the Jiangsu Higher Education Institutions of China under Grant No. 19KJA550002, by the Six Talent Peak Project of Jiangsu Province of China under Grant No. XYDXX-054, and by the Priority Academic Program Development of Jiangsu Higher Education Institutions.

Author information

Contributions

Xinyi Chen: Conceptualization, Methodology, Software, Validation, Formal analysis, Writing - original draft; Li Zhang: Writing - review & editing, Supervision, Project administration; Lei Zhao: Supervision, Project administration; Xiaofang Zhang: Supervision, Project administration.

Ethics declarations

Conflicts of interest

We declare that there have been no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Ethics approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Consent to participate

All authors agreed to participate.

Consent for publication

All authors have consented to publication.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Proof of Theorem 1

It is well known that a twice-differentiable function defined on an open convex set is convex if and only if its Hessian matrix is positive semi-definite everywhere on that set. Therefore, to prove the convexity of the function \(J_{R}\left( \textbf{w} \right) \) in (19), we must demonstrate that its Hessian matrix is positive semi-definite.

Because the Laplacian matrix \(\textbf{L}^{\mathcal {M}}\) is symmetric and positive semi-definite, \(\textbf{Q}=\textbf{F}^T \textbf{L}^{\mathcal {M}} \textbf{F}\) is also symmetric and positive semi-definite. Therefore, we can express the must-link preserving regularization as \(J_{R}\left( \textbf{w} \right) =\text {trace} \left( 2\textbf{M}^T \textbf{Q} \textbf{M} \right) \), as shown in (21).

Then, the first partial derivative of \(J_{R}\left( \textbf{w} \right) \) with respect to \(w_r\) can be calculated as follows:

\[\frac{\partial J_{R}\left( \textbf{w} \right) }{\partial w_r}=4Q_{rr}w_r,\]

where \(Q_{rr}\) is the element in the r-th row and r-th column of \(\textbf{Q}\). The second partial derivative of \(J_{R}\left( \textbf{w} \right) \) with respect to \(w_s\) can be expressed as

\[\frac{\partial ^2 J_{R}\left( \textbf{w} \right) }{\partial w_r \partial w_s}=\begin{cases} 4Q_{rr}, & r=s, \\ 0, & r\ne s. \end{cases}\]

Therefore, the Hessian matrix \(\textbf{H}\) of \(J_{R}\left( \textbf{w}\right) \) is a diagonal matrix, where the diagonal elements are \(H_{rr}=4Q_{rr}\).

Since \(\textbf{Q}\) is positive semi-definite, we have that \(Q_{rr} \ge 0\). As a result, the Hessian matrix \(\textbf{H}\) of \(J_{R}\left( \textbf{w}\right) \) is positive semi-definite. In other words, \(J_{R}\left( \textbf{w} \right) \) is a convex function of \(\textbf{w}\) when \(\textbf{w} \ge 0 \). This concludes the proof.
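The two steps used in this argument can be written out explicitly. Here \(\textbf{e}_r\) denotes the r-th standard basis vector and \(\textbf{v}\) an arbitrary vector; both symbols are introduced only for this illustration.

```latex
Q_{rr} = \textbf{e}_r^{T} \textbf{Q}\, \textbf{e}_r \ge 0,
\qquad
\textbf{v}^{T} \textbf{H}\, \textbf{v} = \sum_{r} 4\, Q_{rr}\, v_r^{2} \ge 0 .
```

The first relation is the definition of positive semi-definiteness applied to \(\textbf{e}_r\); the second uses only the fact that \(\textbf{H}\) is diagonal with entries \(4Q_{rr}\).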

Appendix B: Proof of Theorem 2

Let \(J_{1}\left( \textbf{w}\right) =\log \left( 1+\exp \left( -\textbf{w}^{T} {\textbf{z}}\right) \right) \) and \(J_{2}\left( \textbf{w}\right) =\lambda _{1}\Vert \textbf{w}\Vert _{1}\), then the objective function (24) can be rewritten as:

\[J\left( \textbf{w}\right) =J_{1}\left( \textbf{w}\right) +J_{2}\left( \textbf{w}\right) +\lambda _{2}J_{R}\left( \textbf{w} \right) .\]

Because a non-negative weighted sum of convex functions is convex, \(J\left( \textbf{w}\right) \) is a convex function if \(J_{1}\left( \textbf{w}\right) \), \(J_{2}\left( \textbf{w}\right) \), and \(J_{R}\left( \textbf{w} \right) \) are convex functions. Theorem 1 states that \(J_{R}\left( \textbf{w} \right) \) is a convex function. Now, we need to prove that the other two functions are also convex. Following the approach used to prove Theorem 1, we simply need to demonstrate that the Hessian matrices of both \(J_{1}\left( \textbf{w}\right) \) and \(J_{2}\left( \textbf{w}\right) \) are positive semi-definite.

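We start by calculating the first and second partial derivatives of \(J_{1}\left( \textbf{w}\right) \) with respect to \(\textbf{w}\), as shown below

\[\frac{\partial J_{1}\left( \textbf{w}\right) }{\partial \textbf{w}}=-\frac{\exp \left( -\textbf{w}^{T} \textbf{z}\right) }{1+\exp \left( -\textbf{w}^{T} \textbf{z}\right) }\,\textbf{z}\]

and

\[\frac{\partial ^2 J_{1}\left( \textbf{w}\right) }{\partial \textbf{w}\,\partial \textbf{w}^{T}}=\frac{\exp \left( -\textbf{w}^{T} \textbf{z}\right) }{\left( 1+\exp \left( -\textbf{w}^{T} \textbf{z}\right) \right) ^{2}}\,\textbf{z}\textbf{z}^{T}. \tag{B5}\]

For brevity, let \(c=\sqrt{\frac{\exp \left( -\textbf{w}^{T} \textbf{z}\right) }{\left( 1+\exp \left( -\textbf{w}^{T} {\textbf{z}}\right) \right) ^{2}}}\). Substituting c into (B5), we have

\[\textbf{H}_1=\left( c\,\textbf{z}\right) \left( c\,\textbf{z}\right) ^{T}, \tag{B6}\]

where \(\textbf{H}_1\) is the Hessian matrix of \(J_{1}(\textbf{w})\). Because \(\textbf{H}_1\) is the outer product of the column vector \(c{\textbf{z}} \) and its own transpose \(\left( c {\textbf{z}}\right) ^{T}\), it is a matrix of rank at most 1 with at most one non-zero eigenvalue. This eigenvalue is \(c^2\left\| \textbf{z}\right\| ^2\), which is non-negative and strictly positive whenever \(\textbf{z}\ne \textbf{0}\). Hence, the Hessian matrix in (B6) is positive semi-definite, and \(J_{1}\left( \textbf{w}\right) \) is a convex function.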
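Positive semi-definiteness of this outer product can also be checked directly from the quadratic form, without computing eigenvalues. Here \(\textbf{v}\) is an arbitrary vector introduced only for this illustration.

```latex
\textbf{v}^{T} \textbf{H}_1 \textbf{v}
  = \textbf{v}^{T} \left( c\,\textbf{z} \right) \left( c\,\textbf{z} \right)^{T} \textbf{v}
  = c^{2} \left( \textbf{z}^{T} \textbf{v} \right)^{2} \ge 0 .
```

The bound holds for every \(\textbf{v}\), which is exactly the definition of a positive semi-definite matrix.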

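As for \(J_{2}\left( \textbf{w}\right) \), note that \(\Vert \textbf{w}\Vert _{1}=\sum _{r} w_r\) when \(\textbf{w} \ge 0\), as in Theorem 1. We then have

\[\frac{\partial J_{2}\left( \textbf{w}\right) }{\partial w_r}=\lambda _{1}\]

and

\[\frac{\partial ^2 J_{2}\left( \textbf{w}\right) }{\partial w_r \partial w_s}=0.\]

Thus, the Hessian matrix of \(J_{2}\left( \textbf{w} \right) \) is a matrix with all zeros, which means that the Hessian matrix of \( J_{2}\left( \textbf{w} \right) \) is positive semi-definite. Hence, \( J_{2}\left( \textbf{w} \right) \) is also a convex function.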

In summary, \(J_{1}\left( \textbf{w} \right) \), \(J_{2}\left( \textbf{w} \right) \) and \(J_{R}\left( \textbf{w} \right) \) are convex functions. Thus, \(J\left( \textbf{w} \right) \) is a convex function. This completes the proof.

Appendix C: Proof of Theorem 3

Note that \(\textbf{z}(t)\) is updated by \(\textbf{w}(t-1)\) in the t-th iteration. When \(\textbf{z}(t)\) and \(\textbf{w}(t-1)\) are given, \(\textbf{w}(t)\) is updated using the gradient descent scheme in (27) and the truncation rule in (28). Consequently, the objective function achieves its minimum \(J(\textbf{w}(t) \mid \textbf{z}(t))\) for fixed \(\textbf{z}(t)\). In other words,

\[J(\textbf{w}(t) \mid \textbf{z}(t)) \le J(\textbf{w}(t-1) \mid \textbf{z}(t)),\]

which completes the proof of Theorem 3.
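The effect of one update for a fixed margin vector can be illustrated numerically. The sketch below is not the authors' implementation: it assumes a plain gradient step followed by clipping negative weights to zero as stand-ins for (27) and (28), and it assumes the regularizer takes the diagonal form \(2\sum _r Q_{rr}w_r^2\), which is consistent with the Hessian entries \(4Q_{rr}\) from Appendix A. All names and numbers are hypothetical.

```python
import math
from typing import List, Sequence


def objective(w: Sequence[float], z: Sequence[float], q_diag: Sequence[float],
              lam1: float, lam2: float) -> float:
    """Assumed objective: log(1+exp(-w.z)) + lam1*||w||_1 + lam2*2*sum(Q_rr*w_r^2)."""
    margin = sum(w_r * z_r for w_r, z_r in zip(w, z))
    j1 = math.log1p(math.exp(-margin))
    j2 = lam1 * sum(abs(w_r) for w_r in w)
    j_r = 2.0 * sum(q_r * w_r * w_r for q_r, w_r in zip(q_diag, w))
    return j1 + j2 + lam2 * j_r


def update(w: Sequence[float], z: Sequence[float], q_diag: Sequence[float],
           lam1: float, lam2: float, eta: float) -> List[float]:
    """One gradient step for w >= 0, then truncation of negative weights to zero."""
    margin = sum(w_r * z_r for w_r, z_r in zip(w, z))
    s = 1.0 / (1.0 + math.exp(margin))  # equals exp(-m) / (1 + exp(-m))
    grad = [-s * z_r + lam1 + 4.0 * lam2 * q_r * w_r
            for z_r, q_r, w_r in zip(z, q_diag, w)]
    return [max(0.0, w_r - eta * g) for w_r, g in zip(w, grad)]


# Toy values chosen only for illustration.
z = [1.0, -0.5, 2.0]          # fixed margin vector z(t)
q_diag = [0.5, 1.0, 0.2]      # diagonal entries Q_rr (non-negative)
w_old = [0.5, 0.5, 0.5]       # w(t-1)
w_new = update(w_old, z, q_diag, lam1=1.0, lam2=1.0, eta=0.01)

j_old = objective(w_old, z, q_diag, 1.0, 1.0)
j_new = objective(w_new, z, q_diag, 1.0, 1.0)
print(j_old, j_new)
```

With a sufficiently small step size, as in this toy setting, the objective value after the update does not exceed the value before it, which is the behavior Theorem 3 describes.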

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Chen, X., Zhang, L., Zhao, L. et al. Constrained feature weighting for semi-supervised learning. Appl Intell 54, 9987–10006 (2024). https://doi.org/10.1007/s10489-024-05691-9

DOI: https://doi.org/10.1007/s10489-024-05691-9