Abstract

Nearest neighbor search is a core process in many data mining algorithms. Finding reliable closest matches of a test instance is still a challenging task as the effectiveness of many general-purpose distance measures such as \(\ell _p\)-norm decreases as the number of dimensions increases. Their performances vary significantly in different data distributions. This is mainly because they compute the distance between two instances solely based on their geometric positions in the feature space, and data distribution has no influence on the distance measure. This paper presents a simple data-dependent general-purpose dissimilarity measure called ‘\(m_p\)-dissimilarity’. Rather than relying on geometric distance, it measures the dissimilarity between two instances as a probability mass in a region that encloses the two instances in every dimension. It deems two instances in a sparse region to be more similar than two instances of equal inter-point geometric distance in a dense region. Our empirical results in k-NN classification and content-based multimedia information retrieval tasks show that the proposed \(m_p\)-dissimilarity measure produces better task-specific performance than existing widely used general-purpose distance measures such as \(\ell _p\)-norm and cosine distance across a wide range of moderate- to high-dimensional data sets with continuous only, discrete only, and mixed attributes.

1 Introduction

In order to make a prediction for a test instance, many data mining algorithms search for its k closest matches or nearest neighbors (k-NNs) in the given training set and make a prediction based on the k-NNs. They use a (dis)similarity or distance measure to find k-NNs. However, finding reliable k-NNs becomes a challenging task as the number of dimensions increases. In high-dimensional space, data distribution becomes sparse which makes the concept of distance meaningless, i.e., all pairs of points are almost equidistant for a wide range of data distributions and distance measures [1, 6, 12].

Let \(D=\{\mathbf{x}^{(1)}, \mathbf{x}^{(2)},\cdots , \mathbf{x}^{(N)}\}\) be a collection of N data instances in an M-dimensional space \({{\mathcal {X}}}\). Each instance \(\mathbf{x}\) is represented as an M-dimensional vector \(\langle x_{1},x_{2},\cdots ,x_{M}\rangle \). Let \(d:{{\mathcal {X}}}\times {{\mathcal {X}}} \rightarrow {{\mathcal {R}}}\) (where \({{\mathcal {R}}}\) is a real domain) be a measure of dissimilarity between two vectors in \({{\mathcal {X}}}\). The most common approach of measuring dissimilarity of two data instances \(\mathbf{x}\) and \(\mathbf{y}\) is based on a geometric model where \({{\mathcal {X}}}\) is assumed to be a metric space (which has nice mathematical properties) and \(d(\mathbf{x}, \mathbf{y})\) is estimated as their geometric distance in the space. We use distance measures to refer to dissimilarity measures which are metric.

Minkowski distance (aka \(\ell _p\)-norm) [10] is a widely used distance measure. It estimates the dissimilarity of \(\mathbf{x}\) and \(\mathbf{y}\) by combining their distances in each dimension. Euclidean distance (\(\ell _2\)-norm) is a popular choice of distance function as it intuitively corresponds to the distance defined in the real three-dimensional world. In bag-of-words vector representation of documents, cosine distance (aka angular distance) has been shown to produce more reliable k-NNs than \(\ell _2\)-norm [26]. Cosine distance is proportional to the Euclidean distance of the length normalized vectors (i.e., the vectors are rescaled to unit length).

The performance of general-purpose distance measures such as \(\ell _p\)-norm and cosine distance depends on the data distribution: A distance measure that performs well in one distribution may perform poorly in others. This has been suspected to be due to the fact that these distance measures compute the dissimilarity between two instances solely based on their geometric positions in the vector space, and data distribution (positions of other vectors) is not taken into consideration.

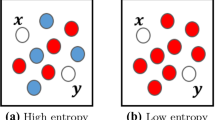

Many psychologists have expressed concerns about the geometric model of dissimilarity measure [17, 31], arguing that the judged dissimilarity between two objects is influenced by the context of measurements and other objects in proximity. Krumhansl [17] has suggested a distance density model of dissimilarity measure, arguing that two objects in a relatively dense region would be less similar than two objects of equal distance but located in a less dense region. For example, two Chinese individuals will be judged as more similar when compared in Europe (where there are fewer Chinese and more Caucasian people) than in China (where there are many Chinese people).

In order to understand the influence of data distribution in judged dissimilarity, let us consider an example of a data set with distributions in dimensions i and j as shown in Table 1. In this example, \(\mathbf{x}^{(1)}\) and \(\mathbf{x}^{(2)}\) have the same values in dimensions i and j. Their value in dimension i is significantly different from the rest of the instances, but their value is a common value in dimension j (9 out of 10 instances have the same value). In a geometric distance measure such as \(\ell _p\), because \(x^{(1)}_i - x^{(2)}_i = x^{(1)}_j - x^{(2)}_j = 0\), the differences in dimensions i and j have the same contribution in \(d(\mathbf{x}^{(1)}, \mathbf{x}^{(2)})\). The main concern raised by psychologists is that having the same value in dimension j (where probability of the value is high) does not provide the same amount of information about the (dis)similarity between \(\mathbf{x}^{(1)}\) and \(\mathbf{x}^{(2)}\) as having the same value in dimension i (where the probability of the value is small). This scenario where many instances have the same value in many dimensions can be very common in high-dimensional spaces as data often lies in a low-dimensional subspace. For example, in bag-of-words vector representation, many entries in document vectors are zero as each document has only a small number of terms from the dictionary.

In this paper, we propose a simple data-dependent general-purpose dissimilarity measure called ‘\(m_p\)-dissimilarity’ in which dissimilarity between two instances is estimated based on data distribution in each dimension. Rather than using the spatial distance in each dimension, \(m_p\)-dissimilarity evaluates the dissimilarity between two instances in terms of the probability data mass in a region covering the two instances in each dimension. The final dissimilarity between the two instances is estimated by combining the dissimilarity in every dimension as in \(\ell _p\)-norm. The intuition behind the proposed dissimilarity measure is that two instances are likely to be dissimilar if there are many instances in-between and around them in many dimensions. Under the proposed data-dependent dissimilarity measure, two instances in a dense region of the distribution are more dissimilar than two instances in a sparse region, even if the two pairs have the same geometric distance, as prescribed by the distance density model of psychologists [17].

Our empirical evaluation in k-NN classification and content-based multimedia information retrieval tasks shows that the proposed \(m_p\)-dissimilarity measure produces better task-specific performance than existing widely used general-purpose distance measures such as \(\ell _p\)-norm and cosine distance across a wide range of moderate- to high-dimensional data sets with continuous only, discrete only, and mixed attributes.

The rest of the paper is organized as follows. Previous work related to this paper is discussed in Sect. 2. The proposed \(m_p\)-dissimilarity is presented in Sect. 3, followed by empirical results in Sect. 4. The relationship of \(m_p\)-dissimilarity with \(\ell _p\)-norm after rank transformation of data is discussed in Sect. 5, followed by the related discussion in Sect. 6. Finally, conclusions and future work are presented in the last section. From now on, we refer to \(m_p\)-dissimilarity and \(\ell _p\)-norm by \(m_p\) and \(\ell _p\), respectively.

2 Related work

In this section, we review some widely used techniques to measure dissimilarity between instances in domains with continuous only, discrete only, and mixed attributes.

2.1 Dissimilarity measures in continuous domain

In continuous domain where each dimension is numeric, i.e., \(\forall _i\) \(x_i\in {{\mathcal {R}}}\), the dissimilarity between two M-dimensional vectors \(\mathbf{x}\) and \(\mathbf{y}\) is primarily based on their positions in the vector space. Minkowski distance of order \(p>0\) (also known as \(\ell _p\)-norm distance) is defined as follows:

\[\ell _p(\mathbf{x}, \mathbf{y}) = \left( \sum _{i=1}^{M} abs(x_i-y_i)^p\right) ^{\frac{1}{p}} \qquad (1)\]

where \(abs(\cdot )\) is an absolute value.

Euclidean distance (\(p=2\)) is a popular choice of distance function as it intuitively corresponds to the distance defined in the real three-dimensional world.
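As an illustration, the Minkowski distance can be sketched in a few lines of Python. The function name `minkowski` is hypothetical, and the code assumes the usual form of the measure (sum of p-th powers of absolute per-dimension differences, followed by the 1/p-th root).

```python
def minkowski(x, y, p=2.0):
    """l_p distance between two equal-length numeric sequences, for p > 0."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same number of dimensions")
    if p <= 0:
        raise ValueError("p must be positive")
    # Combine the absolute per-dimension differences with a power sum.
    return sum(abs(a - b) ** p for a, b in zip(x, y)) ** (1.0 / p)
```

With \(p=2\) this gives the Euclidean distance and with \(p=1\) the Manhattan distance; for \(p<1\) (such as the setting \(p=0.5\) used later in the paper) the triangle inequality no longer holds.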

As distance in each dimension has equal influence, \(\ell _p\) is very sensitive to the units and scales of measurement. Min–max normalization (\(x_i'=\frac{x_i-\hbox {min}_i}{\hbox {max}_i-\hbox {min}_i}\), where \(\mathrm{min}_i\) and \(\hbox {max}_i\) are the minimum and maximum values in dimension i, respectively) is commonly used to rescale feature values to the unit range ([0,1]). Even though min–max normalization takes care of scale differences between dimensions, it does not take care of differences in variance across dimensions. A unit distance in a dimension with low variance may not be the same as that in a dimension with high variance. In order to ensure equal variance in each dimension, standard deviation normalization (\(x_i''=\frac{x_i}{\sigma _i}\), where \(\sigma _i\) is the standard deviation of values of instances in dimension i) is used in the literature. We refer to \(\ell _p\) applied to standard deviation normalized vectors as standardized \(\ell _p\) (s-\(\ell _p\)), i.e., s-\(\ell _p(\mathbf{x},\mathbf{y}) = \ell _p(\mathbf{x''},\mathbf{y''})\). Standardized \(\ell _p\) with \(p=2\) (s-\(\ell _2\)) is the simplest variant of Mahalanobis distance [10], where the covariance matrix is a diagonal matrix of the variance of values in each dimension.
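The two normalizations can be sketched per dimension as follows. The function names are hypothetical, the use of the population standard deviation is an assumption (the text does not say which estimator is used), and the handling of constant attributes is likewise an assumption made only to avoid division by zero.

```python
import statistics


def min_max_normalize(column):
    """Rescale one dimension to [0, 1] via (x - min) / (max - min)."""
    lo, hi = min(column), max(column)
    if hi == lo:
        # Assumption: a constant attribute carries no information, map it to 0.
        return [0.0 for _ in column]
    return [(v - lo) / (hi - lo) for v in column]


def std_normalize(column):
    """Divide one dimension by its standard deviation (x / sigma)."""
    sigma = statistics.pstdev(column)  # assumption: population estimator
    if sigma == 0:
        return [float(v) for v in column]  # assumption: leave constants as-is
    return [v / sigma for v in column]
```

After `std_normalize`, every non-constant dimension has unit variance, so a unit difference means the same number of standard deviations in each dimension; s-\(\ell _p\) is then \(\ell _p\) applied to the rescaled columns.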

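The Mahalanobis distance [10, 22] is defined as follows:

\[d_{Mah}(\mathbf{x}, \mathbf{y}) = \sqrt{(\mathbf{x}-\mathbf{y})^{T}\,\Sigma ^{-1}\,(\mathbf{x}-\mathbf{y})} \qquad (2)\]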

where \(\Sigma \in {{\mathcal {R}}}^{M\times M}\) is the covariance matrix of D.

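Rather than using the inverse of the sample covariance matrix, the metric learning literature focuses on learning a generalized Mahalanobis distance [5, 18, 32, 33] from D, defined as follows:

\[d_{genMah}(\mathbf{x}, \mathbf{y}) = \sqrt{(\mathbf{x}-\mathbf{y})^{T}\,\Omega \,(\mathbf{x}-\mathbf{y})} \qquad (3)\]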

where \(\Omega \in {{\mathcal {R}}}^{M\times M}\) is a positive semi-definite matrix.

Since \(\Omega \) is positive semi-definite, it can be factorized as \(\Omega = \Lambda ^{T}\Lambda \) where \(\Lambda \in {\mathcal R}^{\omega \times M} \) and \(\omega \) is a positive integer, and \(d_{genMah}(\mathbf{x}, \mathbf{y})\) can be written as: \(d_{genMah}(\mathbf{x}, \mathbf{y}) = \Vert \Lambda \mathbf{x}-\Lambda \mathbf{y}\Vert _2\) [5, 18, 32]. The generalized Mahalanobis distance is thus the Euclidean distance of vectors transformed by matrix \(\Lambda \). The goal of metric learning is to learn a transformation matrix \(\Lambda \) to improve the task-specific performance of the Euclidean distance, subject to some optimality constraints, e.g., similar instances become closer to each other (similarity constraints) and dissimilar instances are separated further apart from each other (dissimilarity constraints). Learning the best \(\Lambda \) requires intensive optimization, which is expensive in high-dimensional and/or large data sets. Furthermore, \(\Lambda \) is optimized specifically for the given task, and it may not be good for other tasks using the same data set. It is not a general-purpose measure like \(\ell _p\).
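The equivalence between the quadratic form and the Euclidean distance after transformation can be checked numerically. The sketch below is plain Python with hypothetical helper names; it assumes the generalized Mahalanobis distance is the square root of \((\mathbf{x}-\mathbf{y})^{T}\Omega (\mathbf{x}-\mathbf{y})\), which is the form implied by the factorization \(\Omega = \Lambda ^{T}\Lambda \).

```python
import math


def mat_vec(A, v):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(a * b for a, b in zip(row, v)) for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def mat_mul(A, B):
    Bt = transpose(B)
    return [[sum(a * b for a, b in zip(row, col)) for col in Bt] for row in A]


def gen_mahalanobis(x, y, omega):
    """sqrt((x - y)^T Omega (x - y)) for a positive semi-definite Omega."""
    diff = [a - b for a, b in zip(x, y)]
    return math.sqrt(sum(d * w for d, w in zip(diff, mat_vec(omega, diff))))


def transformed_euclidean(x, y, lam):
    """Euclidean distance between Lambda x and Lambda y."""
    tx, ty = mat_vec(lam, x), mat_vec(lam, y)
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(tx, ty)))
```

For any real matrix `lam`, building `omega = mat_mul(transpose(lam), lam)` and evaluating both functions on the same pair of vectors gives the same value up to floating-point error, which is why metric learning can be viewed as learning a linear transformation of the data.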

In many high-dimensional problems, data have the same value (0 or any other constant) in many dimensions. This leads to sparseness in data distribution. For example, only a small proportion of terms in a dictionary appear in each document of a corpus. Many entries of a term vector representing a document are zero. Euclidean distance is not a good choice of distance measure in such problems. The direction of vectors is more important than their lengths. The angular distance measure (aka cosine distance) [10] is a more sensible choice to measure dissimilarity between two documents. The cosine distance between two vectors \(\mathbf{x}\) and \(\mathbf{y}\) is defined as follows [10]:

\[d_{cos}(\mathbf{x}, \mathbf{y}) = 1 - \frac{\mathbf{x}^{T}\mathbf{y}}{\Vert \mathbf{x}\Vert _2\,\Vert \mathbf{y}\Vert _2} \qquad (4)\]

Cosine distance is proportional to Euclidean distance when the vectors are length normalized to be of unit length, which is referred to as cosine normalization in the literature. Different term weighting schemes are used to adjust the positions of the document vectors in the space based on the importance of their terms in order to improve the task-specific performance of the cosine distance [19, 25]. Cosine distance with term frequency–inverse document frequency (TF-IDF)-based term weighting [25] has been shown to perform well in many text-mining problems such as text categorization, text clustering, and text retrieval tasks.
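The relationship between the two measures can be made explicit with a short derivation. It assumes that cosine distance is taken as one minus the cosine similarity; under that assumption the proportionality holds for the squared Euclidean distance of the unit-length vectors, which is sufficient for both measures to rank neighbors identically.

```latex
\begin{aligned}
\hat{\mathbf{x}} &= \frac{\mathbf{x}}{\Vert \mathbf{x}\Vert _2}, \qquad
\hat{\mathbf{y}} = \frac{\mathbf{y}}{\Vert \mathbf{y}\Vert _2}, \\
\Vert \hat{\mathbf{x}}-\hat{\mathbf{y}}\Vert _2^2
 &= \Vert \hat{\mathbf{x}}\Vert _2^2 + \Vert \hat{\mathbf{y}}\Vert _2^2 - 2\,\hat{\mathbf{x}}^{T}\hat{\mathbf{y}}
  = 2\left( 1-\frac{\mathbf{x}^{T}\mathbf{y}}{\Vert \mathbf{x}\Vert _2\,\Vert \mathbf{y}\Vert _2}\right)
  = 2\,d_{cos}(\mathbf{x},\mathbf{y}).
\end{aligned}
```

Since \(\Vert \hat{\mathbf{x}}-\hat{\mathbf{y}}\Vert _2 = \sqrt{2\,d_{cos}(\mathbf{x},\mathbf{y})}\) is strictly increasing in \(d_{cos}\), the k-NNs found with either measure are the same up to ties.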

In both metric learning and term weighting, the focus is to transform data so that task-specific performance of the Euclidean or cosine distance is maximized in the given data set. Some aspects of the data distribution are taken into consideration in the transformation in metric learning and in term weighting, but the resulting measure is still restricted to be a metric in the transformed space, i.e., dissimilarity is still computed solely based on geometrical positions in the transformed space.

2.2 Dissimilarity measures in discrete domain

In discrete domain, each attribute is a categorical attribute, i.e., \(\forall _i\) \(x_{i}\) \(\in \) \(\{v_{i,1}\),\(\cdots ,\) \(v_{i,u_i}\}\) where \(v_{i,j}\) is a label out of \(u_i\) possible labels for \(x_i\). A discrete attribute can be ordinal (where there is an ordering of discrete labels \(v_{i,1}< v_{i,2}< \cdots < v_{i,u_i}\)) or nominal (where there is no ordering of discrete labels).

In order to measure similarity between two labels \(x_i\) and \(y_i\) for a discrete attribute i, \(s(x_i,y_i)\), the simplest overlap approach assigns maximum similarity of 1 if \(x_i=y_i\) and minimum similarity of 0 if \(x_i \ne y_i\) [7, 29]. Other approaches such as occurrence frequency (OF) and inverse occurrence frequency (IOF) [7] estimate \(s(x_i,y_i)\) based on the frequencies of \(x_i\) and \(y_i\) in D if \(x_i \ne y_i\) and assign maximum similarity of 1 if \(x_i=y_i\) regardless of the frequency. The definition of \(s(x_i,y_i)\) based on overlap, OF, and IOF [7] is provided in Table 2.

Lin [20] defined similarity using information theory and suggested a probabilistic measure of similarity in ordinal discrete domain. The similarity between two ordinal labels \(x_i\) and \(y_i\) is defined as follows:

where \(P(x_i)\) is the probability of \(x_i\) and it is estimated from D as \({\hat{P}}(x_i) = \frac{f(x_i)+1}{N+u_i}\) where \(f(x_i)\) is the occurrence frequency of label \(x_i\) in D.
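The smoothed estimate \({\hat{P}}(x_i) = \frac{f(x_i)+1}{N+u_i}\) can be sketched as below. The function name and the `all_labels` argument (the \(u_i\) possible labels of the attribute, including any that do not occur in D) are hypothetical.

```python
from collections import Counter


def label_probabilities(labels, all_labels):
    """Estimate P(v) = (f(v) + 1) / (N + u) for every possible label v."""
    counts = Counter(labels)  # f(v): occurrence frequency in the data
    n = len(labels)           # N: number of instances
    u = len(all_labels)       # u: number of possible labels
    return {v: (counts[v] + 1) / (n + u) for v in all_labels}
```

Adding one to every frequency keeps the estimate strictly positive for labels that never occur in D, so the logarithms used by information-theoretic measures remain finite, and the estimates still sum to one.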

Boriah et al. [7] used Lin’s information theoretic definition of similarity in nominal discrete domain as follows:

In multivariate discrete domain, dissimilarity\(^{1}\) between two instances \(\mathbf{x}\) and \(\mathbf{y}\) using Lin’s measure can be estimated as follows [7]:

Boriah et al. [7] have shown that \(d_{lin}\) performs better than \(d_{of}\) and \(d_{iof}\) in discrete domains. Even though measures such as \(s_{of}\), \(s_{iof}\) and \(s_{lin}\) assign similarity between \(x_i\) and \(y_i\) in each dimension based on the distribution of labels if \(x_i\ne y_i\), they assign the maximum similarity of 1 in the case of \(x_i = y_i\) regardless of the distribution of the label.

2.3 Dissimilarity measures in mixed domain

Many real-world applications have both continuous and discrete attributes resulting in mixed domain. In order to measure (dis)similarity between two instances in such a domain, the most commonly used \(\ell _p\)-norm uses the overlap approach to measure dissimilarity between two labels \(x_i\) and \(y_i\) of a discrete attribute i as \(x_i-y_i = 0\) if \(x_i=y_i\); and 1 otherwise.

Other approaches include converting attributes into continuous only or discrete only and using (dis)similarity measures designed for continuous or discrete domain. A continuous attribute can be converted into a discrete attribute through discretization [13]. A discrete attribute with u discrete labels can be converted into u continuous attributes as follows: Each discrete label is converted into a binary attribute where 0 represents the absence of the label and 1 represents the presence, and all converted u binary attributes are treated as continuous attributes [13].
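The conversion of a discrete attribute into u binary attributes can be sketched as follows; the function names and the `label_sets` argument (one list of possible labels per attribute) are hypothetical.

```python
def one_hot(label, labels):
    """Encode one discrete label as len(labels) binary (0/1) attributes."""
    return [1.0 if label == v else 0.0 for v in labels]


def encode_instance(instance, label_sets):
    """Concatenate the binary encodings of all discrete attributes."""
    encoded = []
    for value, labels in zip(instance, label_sets):
        encoded.extend(one_hot(value, labels))
    return encoded
```

Every encoded instance contains exactly one 1 per original attribute, so an instance with M discrete attributes always has exactly M ones.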

3 Data-dependent dissimilarity measure

In order to measure dissimilarity between \(\mathbf{x}\) and \(\mathbf{y}\), instead of using \(abs(x_i-y_i)\) in Eq. 1, we propose to consider the relative positions of \(\mathbf{x}\) and \(\mathbf{y}\) with respect to the rest of the data distribution in each dimension. The dissimilarity between \(\mathbf{x}\) and \(\mathbf{y}\) in dimension i can be estimated as the probability data mass in region \(R_i(\mathbf{x}, \mathbf{y})\) that encloses \(\mathbf{x}\) and \(\mathbf{y}\). If there are many instances in \(R_i(\mathbf{x}, \mathbf{y})\), \(\mathbf{x}\) and \(\mathbf{y}\) are likely to be dissimilar in dimension i. Using the same power mean formulation as in \(\ell _p\)-norm, the data-dependent dissimilarity measure based on probability mass is defined as:

where \(|R_i(\mathbf{x}, \mathbf{y})|\) is the data mass in region \(R_i(\mathbf{x}, \mathbf{y}) = [\min (x_i,y_i) - \delta , \max (x_i,y_i) + \delta ]\) (i.e., \(|R_i(\mathbf{x}, \mathbf{y})| = |\{z_i:\min (x_i,y_i)-\delta \le z_i \le \max (x_i,y_i)+\delta \}|\)), \(\delta \ge 0\), \(p>0\) and N is the total number of instances in D. An example of \(R_i(\mathbf{x}, \mathbf{y})\) is shown in Fig. 1.

Fig. 2 Contour plots of dissimilarity of points in the space with reference to the centre (0.5, 0.5), based on \(m_p\) (with \(\delta \) for each dimension i set to \(\frac{\sigma _i}{2}\), where \(\sigma _i\) is the standard deviation of values of instances in dimension i) in three data distributions (uniform: left column, normal: middle column, and bimodal: right column). The darker the color, the lower the dissimilarity. (a) \(p=2.0\); (b) \(p=0.5\)

The region is extended by small \(\delta > 0\) beyond \(x_i\) and \(y_i\) to consider the density distribution around them along with the distribution in-between them. The role of parameter p is similar to that in \(\ell _p\), i.e., p controls the influence of the dissimilarity in each dimension.
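A direct, unoptimized sketch of the measure is given below. The function names are hypothetical. The per-dimension term \(|R_i(\mathbf{x}, \mathbf{y})|/N\) and the region \([\min (x_i,y_i)-\delta , \max (x_i,y_i)+\delta ]\) follow the text; the \(\frac{1}{M}\) factor inside the power mean is an assumption, chosen so that the values lie in \([\frac{1}{N}, 1]\). It is a constant for a given data set and therefore does not change the ranking of neighbors.

```python
def region_mass(column, a, b, delta=0.0):
    """Number of values z with min(a, b) - delta <= z <= max(a, b) + delta."""
    lo, hi = min(a, b) - delta, max(a, b) + delta
    return sum(1 for z in column if lo <= z <= hi)


def mp_dissimilarity(x, y, data, p=2.0, deltas=None):
    """Sketch of m_p: power mean of per-dimension probability masses.

    data   : list of N instances, each a sequence of M numeric values
    deltas : per-dimension delta >= 0 (defaults to 0 in every dimension)
    """
    n, m = len(data), len(x)
    if deltas is None:
        deltas = [0.0] * m
    total = 0.0
    for i in range(m):
        column = [row[i] for row in data]
        total += (region_mass(column, x[i], y[i], deltas[i]) / n) ** p
    # Assumption: normalize by M so that the result stays within [1/N, 1].
    return (total / m) ** (1.0 / p)
```

For the one-dimensional data set {0.0, 0.1, 0.15, 0.2, 0.6, 0.8} with \(\delta =0\), the pairs (0.0, 0.2) and (0.6, 0.8) have the same geometric distance, but the first region contains four of the six instances and the second only two, so the pair in the dense region is judged more dissimilar.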

We call the proposed dissimilarity measure \(m_p(\mathbf{x}, \mathbf{y})\) ‘\(m_p\)-dissimilarity’. This measure captures the essence of the distance density model proposed by psychologists [17], which prescribes that two instances in a sparse region are more similar than two instances in a dense region. Although \(m_p\) employs the same power mean formulation as \(\ell _p\), the core calculation is based on probability mass rather than distance. The proposed \(m_p\)-dissimilarity has a probabilistic interpretation which is provided in “Appendix 1”.

The dissimilarity between a pair of instances using Eq. 8 depends on the distribution of data. Fig. 2 shows the contour plots of \(m_p\)-dissimilarity between the point (0.5,0.5) and any other point in the feature space in three different data distributions (uniform, normal and bimodal) for \(p=2.0\) and \(p=0.5\). In contrast, \(\ell _p\) or \(d_{cos}\) would produce the same contour in all three distributions. Under uniform distribution and infinite samples, \(m_p\) will yield the same result as \(\ell _p\) because the data mass in \(R_i(\mathbf{x}, \mathbf{y})\) will be proportional to \(abs(x_i-y_i)\). This is depicted in the two contour plots in the first column in Fig. 2 where they exhibit similar contour plots to those of \(\ell _2\) and \(\ell _{0.5}\).

3.1 Time complexity and efficient approximation

In continuous domains, estimating \(m_p(\mathbf{x}, \mathbf{y})\) using Eq. 8 is expensive, especially when either \(\mathbf{x}\) or \(\mathbf{y}\) is an unseen instance, as it requires a range search in each dimension to estimate \(|R_i(\mathbf{x}, \mathbf{y})|\). A one-dimensional range search can be done in \(O(\log N)\) time using binary search trees, resulting in a time complexity of \(O(M\log N)\) to measure the dissimilarity of a pair of instances, compared with O(M) for \(\ell _p\). This is expensive in large data sets.
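The logarithmic-time range count can be illustrated with a sorted array and binary search, used here in place of the binary search tree mentioned above (same \(O(\log N)\) query cost; the function names are hypothetical).

```python
from bisect import bisect_left, bisect_right


def build_index(column):
    """Sort the values of one dimension once, in O(N log N) time."""
    return sorted(column)


def region_mass_sorted(sorted_column, a, b, delta=0.0):
    """Count values in [min(a, b) - delta, max(a, b) + delta] in O(log N)."""
    lo, hi = min(a, b) - delta, max(a, b) + delta
    # Index past the last value <= hi, minus index of the first value >= lo.
    return bisect_right(sorted_column, hi) - bisect_left(sorted_column, lo)
```

One such index per dimension is needed, and each dissimilarity computation performs M of these queries, which is the source of the \(O(M\log N)\) cost.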

Alternatively, \(|R_i(\mathbf{x}, \mathbf{y})|\) can be approximated efficiently by using a histogram, i.e., divide the range of real values in each dimension i into b bins (\(h_{i1}, h_{i2},\cdots ,h_{ib}\)). The number of instances in each bin can be computed in a preprocessing step. When two instances \(\mathbf{x}\) and \(\mathbf{y}\) are given for dissimilarity measurement, \(R_i(\mathbf{x}, \mathbf{y})\) can be computed by using the bins in-between \(\mathbf{x}\) and \(\mathbf{y}\) as shown in Fig. 3. Even though the approximation using bins does not extend the range exactly by \(\delta \) beyond \(x_i\) and \(y_i\), the bins (where \(x_i\) and \(y_i\) fall into) provide a reasonable approximation of the distribution around \(x_i\) and \(y_i\).

If \(h_{il}\) and \(h_{io}\) are the two bins in which \(\min (x_i, y_i)\) and \(\max (x_i, y_i)\) fall, respectively, then \(|R_i(\mathbf{x}, \mathbf{y})|\) can be estimated as follows:

Note that the binning can be done in two ways: (i) equal width: each bin is of the same size (bins in dense regions have more data mass than those in sparse regions); (ii) equal frequency: each bin has approximately the same number of instances (bins are narrower in dense regions than in sparse regions). The former is sensitive to outliers. If a single instance has a value significantly different from the others, it may impair the discrimination between the other instances, as they may all fall in the same bin while many bins in the middle are left empty. Hence, in this paper we used the latter approach, where each bin has approximately the same number of instances, with \(b=100\) using the WEKA implementation\(^{2}\) [13]. Note that bins in a dimension can have different data mass if many instances have the same value in that dimension, making it impossible to split them into b bins of equal data mass.

The preprocessing requires a total of \(O(NMb+Mb^2)\) time and \(O(Mb^2)\) space. It builds the histogram and the pairwise dissimilarity matrix of bins in each dimension. A histogram of b bins is built for each dimension, and the number of instances falling in each bin can be calculated in O(NMb) time. The dissimilarity matrix for \(|R_i(\cdot ,\cdot )|\) can be precomputed for each pair of bins in each dimension in \(O(Mb^2)\) time and stored in \(O(Mb^2)\) space. After preprocessing, the dissimilarity between two instances in each dimension can be obtained by a table look-up in O(1) time, resulting in O(M) time to measure the dissimilarity between a pair of instances, equivalent to that of \(\ell _p\) and \(d_{cos}\).
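The histogram-based approximation for one dimension can be sketched as follows. This is not the WEKA discretization used in the paper: the cut-point rule in `equal_frequency_cuts` is a simple assumption, and all function names are hypothetical. The estimate of \(|R_i(\mathbf{x}, \mathbf{y})|\) is taken to be the total count of all bins from the bin containing \(\min (x_i, y_i)\) to the bin containing \(\max (x_i, y_i)\), inclusive.

```python
from bisect import bisect_right


def equal_frequency_cuts(column, b):
    """Cut points for at most b roughly equal-frequency bins.

    Duplicate cut points collapse, so heavily repeated values yield fewer
    bins with unequal counts (assumption: a simple quantile rule).
    """
    s = sorted(column)
    n = len(s)
    cuts = {s[(k * n) // b] for k in range(1, b)}
    return sorted(c for c in cuts if c > s[0])


def bin_counts(column, cuts):
    """Number of instances in each bin; bin j holds cuts[j-1] <= v < cuts[j]."""
    counts = [0] * (len(cuts) + 1)
    for v in column:
        counts[bisect_right(cuts, v)] += 1
    return counts


def build_mass_table(counts):
    """table[l][o] = total count of bins l..o (symmetric), built in O(b^2)."""
    b = len(counts)
    table = [[0] * b for _ in range(b)]
    for l in range(b):
        running = 0
        for o in range(l, b):
            running += counts[o]
            table[l][o] = table[o][l] = running
    return table


def approx_region_mass(cuts, table, a, b):
    """Approximate |R_i| by the mass of the bins spanning a and b."""
    return table[bisect_right(cuts, a)][bisect_right(cuts, b)]
```

In this sketch the bin of a raw value is located by binary search over the cut points; if the bin index of every instance is stored during preprocessing, each per-dimension dissimilarity reduces to a single table access. The self-mass of a value is the count of its own bin, so it varies across bins whenever duplicates prevent equal-sized bins.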

3.2 Handling discrete attributes

For ordinal discrete attributes, \(|R_i(\mathbf{x}, \mathbf{y})|\) can be estimated as follows:

where \(f(z_i)\) is the frequency of discrete label \(z_i\) in D.

Unlike \(d_{lin}\) that assigns dissimilarity in an ordinal attribute i based on the frequencies of labels if \(x_i\ne y_i\) and assigns minimal dissimilarity of 0 regardless of the distribution of labels if \(x_i=y_i\), \(m_p\) assigns dissimilarity based on the frequency of the label even in the case of \(x_i=y_i\).

For nominal discrete attributes, \(|R_i(\mathbf{x}, \mathbf{y})|\) can be estimated as follows:

It is interesting to note the difference between \(m_p\) and the existing dissimilarity measures for nominal domains such as \(d_{lin}\), \(d_{of}\) and \(d_{iof}\) [7]. For a nominal attribute i, they use the frequency of labels if two instances have different labels (\(x_i\ne y_i\)), and assign the maximal similarity of 1 (or minimal dissimilarity of 0) if \(x_i=y_i\). In contrast, \(m_p\) takes the opposite approach: it uses the frequency of the label if \(x_i=y_i\) and assigns the maximal dissimilarity of 1 otherwise. In the case of \(x_i=y_i\), existing measures assign the maximal similarity of 1 without considering the distribution of the label. It might be the case that all the other instances have the same label, in which case the attribute provides no discrimination between instances.

The frequency of each discrete label can be computed in a preprocessing step which requires O(NM) time and O(Mu) (where u is the average number of discrete labels per dimension) space.
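The two discrete cases can be sketched as below, with hypothetical function names. The nominal rule follows the description above (label frequency when the labels match, the full data mass N otherwise). The ordinal rule is an assumption by analogy with the continuous region: it sums the frequencies of all labels lying between the two labels, inclusive, in the attribute's ordering.

```python
from collections import Counter


def label_frequencies(column):
    """f(z): occurrence frequency of each label in one dimension of D."""
    return Counter(column)


def ordinal_mass(freq, order, a, b):
    """Sum of f(z) over labels z from min(a, b) to max(a, b) in `order`."""
    i, j = sorted((order.index(a), order.index(b)))
    return sum(freq[v] for v in order[i:j + 1])


def nominal_mass(freq, n, a, b):
    """f(a) if the labels match; otherwise the whole data mass n."""
    return freq[a] if a == b else n
```

Dividing either mass by N gives the per-dimension term used in \(m_p\): two instances sharing a rare label receive a small dissimilarity, whereas two instances sharing a label that almost every instance has receive a dissimilarity close to 1.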

3.3 Dissimilarity measure in bag-of-words vector representation

In the case of bag-of-words (bow) [26] vector representations, each component of a vector represents frequency of a feature (term in documents or a visual descriptor in images). Given any two vectors \(\mathbf{x}\) and \(\mathbf{y}\), many features have zero frequency i.e., \(x_i=y_i=0\) for many dimensions, because a document contains only a small proportion of words in the dictionary. Since the absence of a feature in both the instances does not provide any information about the (dis)similarity of \(\mathbf{x}\) and \(\mathbf{y}\), those features should be ignored. Hence, in the bow vector representation, \(m_p\)-dissimilarity of \(\mathbf{x}\) and \(\mathbf{y}\) is estimated using only those features that occur in either of \(\mathbf{x}\) or \(\mathbf{y}\) as follows:

where \(F_\mathbf{x}\) is the set of features that occur in \(\mathbf{x}\) (i.e., \(F_\mathbf{x}=\{i:x_i>0\}\)), and \(|F_{\mathbf{x},\mathbf{y}}|=|F_\mathbf{x}\cup F_\mathbf{y}|\) is the normalization term employed to account for different numbers of features used for measuring dissimilarity of any two instances.

It is important to ignore those features which have zero frequency in both instances (\(x_i=y_i=0\)); otherwise, \(m_p\) would assign large dissimilarity with respect to those features, as many instances in the data set will have 0 values. This is not an issue for \(\ell _p\) because it assigns 0 dissimilarity when \(x_i=y_i=0\).
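A sketch of the bag-of-words variant is given below. The function name is hypothetical; the code assumes \(\delta =0\), an exact range count rather than the histogram approximation, and a power mean normalized by \(|F_{\mathbf{x},\mathbf{y}}|\) as the normalization term described above. Returning 0 when neither vector has any feature is also an assumption.

```python
def mp_bow(x, y, data, p=2.0):
    """Sketch of m_p restricted to features occurring in x or y."""
    n = len(data)
    # F_{x,y}: indices of features with non-zero frequency in x or in y.
    features = [i for i in range(len(x)) if x[i] > 0 or y[i] > 0]
    if not features:
        return 0.0  # assumption: two empty vectors are treated as identical
    total = 0.0
    for i in features:
        lo, hi = min(x[i], y[i]), max(x[i], y[i])
        mass = sum(1 for row in data if lo <= row[i] <= hi)
        total += (mass / n) ** p
    # Normalize by the number of features actually compared.
    return (total / len(features)) ** (1.0 / p)
```

Features absent from both vectors never enter the sum, so the large mass of zero entries in those dimensions cannot inflate the dissimilarity.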

3.4 Distinguishing properties of \(m_p\)

3.4.1 Data-dependent self-dissimilarity

The distinguishing characteristic of \(m_p\) against the geometry-based (\(\ell _p\) and \(d_{cos}\)) and probabilistic (\(d_{lin}\)) dissimilarity measures discussed in Sect. 2 is the self-dissimilarity. The self-dissimilarity of \(m_p\) is not zero, and it ranges from the minimum of \(\frac{1}{N}\) to the maximum of 1, depending on the data distribution in each dimension. In contrast, \(\ell _p(\mathbf{x}, \mathbf{x})=d_{cos}(\mathbf{x},\mathbf{x})=d_{lin}(\mathbf{x}, \mathbf{x})=0\) irrespective of the data distribution. Because of the data-dependent self-dissimilarity, \(m_p\) is non-metric.

The approximation of \(|R_i(\mathbf{x}, \mathbf{y})|\) using equal-frequency bins will yield a nonzero constant self-dissimilarity for \(m_p\) only if each bin has the same number of instances in each dimension. This is often not possible because many instances have duplicate values, and this occurs in many dimensions. This is a common characteristic of many high-dimensional data sets because data often lie in a low-dimensional subspace. As a result, bins often have different numbers of instances, resulting in data-dependent self-dissimilarity. The advantage of data-dependent self-dissimilarity of \(m_p\) over data-independent self-dissimilarity of existing measures is discussed in Sect. 5.

In discrete domains, unlike \(d_{lin}\), \(d_{of}\) and \(d_{iof}\) based measures that use the probabilities of categorical labels only in the case of different labels, \(m_p\) uses the probability of the label even in the case of matching labels—data-dependent self-dissimilarity in action.

3.4.2 \(m_p\) is equivalent to \(\ell _p\) only under uniform distribution

Under uniform distribution and infinite data, \(m_p\) is equivalent to \(\ell _p\) as the data mass in the range is proportional to its length. This is the only condition under which \(m_p\)—a data-dependent measure—is equivalent to \(\ell _p\)—a geometric model-based measure.

3.4.3 Robust to scale, units of measurement and outliers

As \(m_p\) is based on counts and does not use the feature values in the dissimilarity measure directly, it is robust to scale and units of measurements in continuous domains. It does not require preprocessing of data to address the scaling issue (min–max normalization) or difference in variance across different dimensions (standard deviation normalization). In many real-world applications, different properties of data may have been represented or measured in different scales (e.g., income is represented in dollars and age in normal integer scale: One unit difference is not the same in these two attributes). This can be the case in high-dimensional problems where different properties are measured by different sensors. Also for the same reason (i.e., based on the count and not the actual feature values), \(m_p\) is less sensitive to outliers. In the case of \(\ell _p\), outliers can have an adverse impact as they might change variance significantly.

4 Empirical evaluation

This section presents the results of experiments conducted to demonstrate that simply by replacing the geometric distance with the probability mass in each dimension, \(m_p\) produces better task-specific performances than \(\ell _p\) and \(d_{cos}\) across a wide range of data sets. We have evaluated the performance of \(m_p\) against the general-purpose dissimilarity measures of Minkowski distance (\(\ell _p\)), Minkowski distance after standard deviation normalization (s-\(\ell _p\)), Cosine distance (\(d_{cos}\)) and Lin’s probabilistic measure (\(d_{lin}\)) in k-nearest neighbor (k-NN) classification and content-based multimedia information retrieval (CBMIR) tasks. We used two settings of \(p\in \{0.5, 2.0\}\) in \(\ell _p\), s-\(\ell _p\) and \(m_p\) resulting in eight measures: \(d_{cos}, d_{lin}, \ell _{0.5}, \ell _2, s\)-\(\ell _{0.5}, s\)-\(\ell _2, m_{0.5}\) and \(m_2\). All dissimilarity measures and algorithms were implemented in Java using the WEKA platform [13].

We used moderately high to high-dimensional (\(M\ge 20\)) data sets from different application areas such as text, image, music, character and digit recognition, medicine, biology and games. In text collections, documents were represented by TF-IDF [25] weighted ‘bag-of-words’ [26] vectors. Feature values in each dimension in all other non-text data sets were normalized to be in the unit range. For \(d_{lin}\), continuous attributes were converted into ordinal attributes using discretization as in the case of \(m_p\).

The properties of the data sets are provided in Table 3. NG20, R52, R8 and Webkb were from [8]\(^{3}\); Ohscal, Wap, New3s and Fbis were from [14]\(^{4}\); Caltech256 (SIFT bag-of-words features) was from [30]\(^{5}\); Corel and Gtzan were from [34]; HBA was from [2]; and the remaining data sets were from UCI [4]\(^{6}\) and WEKA [13]\(^{7}\).

We discuss the experimental setups and results in k-NN classification and content-based multimedia information retrieval (CBMIR) tasks in the next two subsections.

4.1 k-NN classification

In the k-NN classification context, in order to predict a class label for a test instance \(\mathbf{x}\), its k nearest neighbors were searched in the training set using all eight dissimilarity measures and the most frequent label in k-NNs was predicted as the class label for the test instance. All classification experiments were conducted using a tenfold cross-validation: 10 train-and-test trials using 90% of the given data set for training and 10% for testing. We set k to a commonly used value of 5 (i.e., \(k=5\)). The average classification accuracy (%) over a tenfold cross-validation was reported. The accuracies of two algorithms were considered to be significantly different if their confidence intervals (based on two standard errors over the tenfold cross-validation) did not overlap. The average classification accuracies over the tenfold cross-validation of the eight dissimilarity measures in all data sets are provided in Table 4.
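The prediction rule and the significance criterion described above can be sketched as follows. The function names are hypothetical; `dissimilarity` stands for any of the eight measures, ties between class labels are broken in favor of the label met first among the nearest neighbors (an assumption), and the standard error is computed from the sample standard deviation of the per-fold accuracies (also an assumption).

```python
import math
from collections import Counter


def knn_predict(test_x, train_X, train_y, dissimilarity, k=5):
    """Predict the most frequent label among the k nearest training instances."""
    ranked = sorted(range(len(train_X)),
                    key=lambda j: dissimilarity(test_x, train_X[j]))
    votes = Counter(train_y[j] for j in ranked[:k])
    return votes.most_common(1)[0][0]


def two_se_interval(fold_accuracies):
    """Mean accuracy plus/minus two standard errors over the folds."""
    n = len(fold_accuracies)
    mean = sum(fold_accuracies) / n
    sd = math.sqrt(sum((a - mean) ** 2 for a in fold_accuracies) / (n - 1))
    se = sd / math.sqrt(n)
    return mean - 2 * se, mean + 2 * se


def significantly_different(acc_a, acc_b):
    """True if the two-standard-error intervals do not overlap."""
    lo_a, hi_a = two_se_interval(acc_a)
    lo_b, hi_b = two_se_interval(acc_b)
    return hi_a < lo_b or hi_b < lo_a
```

Because the dissimilarity is passed in as a function, the same loop serves geometric and data-dependent measures alike; only the neighbor ranking changes.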

Out of 30 data sets, \(m_{0.5}\) and \(m_2\) produced the best result or equivalent to the best result in 23 and 16 data sets, respectively. Either \(m_{0.5}\) or \(m_2\) produced significantly better classification accuracy than any other contenders in the New3s, Ohscal, Wap, R52, NG20, R8, Webkb, Caltech, Corel, Connect-4 and Hypothyroid data sets. The summarized results in the last two rows in Table 4 show that both \(m_{0.5}\) and \(m_2\) produced consistently top or near top results across different data sets. \(m_{0.5}\) and \(m_2\) have average ranking of 1.97 and 2.37, respectively; whereas the average rank of the closest contender \(d_{cos}\) is 3.30. Table 5 provides the summarized result in terms of the win:loss:draw counts of \(m_{0.5}\) and \(m_2\) against the other six contenders using confidence interval based on the two standard errors in the tenfold cross-validation (standard errors are provided in Table 10 in “Appendix 3”). It shows that both \(m_{0.5}\) and \(m_2\) had significantly more wins than losses against all other contenders.

Note that in data sets with nominal attributes only (e.g., Connect-4, Chess, and Splice), \(d_{cos}\), \(\ell _p\) and s-\(\ell _p\) produced exactly the same results because they are effectively the same measure. Because of the one-of-all transformation, all the vectors have the same length of \(\sqrt{M}\) (as each instance has exactly M 1s), in which case \(d_{cos}\) is proportional to the square of \(\ell _2\) and therefore ranks neighbors in the same order. Since the difference in each dimension is either 0 or 1, the parameter p has no effect on that ranking.
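The relationship between \(d_{cos}\) and \(\ell _2\) on one-of-all encoded data can be checked numerically. The sketch below assumes that \(d_{cos}\) is defined as one minus the cosine similarity; the attribute domain sizes and the two instances are hypothetical. With exactly M ones per vector, the squared \(\ell _2\) distance equals \(2M\) times the cosine distance, so both measures rank neighbors identically.

```python
import math

def one_hot(instance, domain_sizes):
    """Encode nominal values (0-based ints) as one concatenated 0/1 vector."""
    vec = []
    for value, size in zip(instance, domain_sizes):
        vec.extend(1 if j == value else 0 for j in range(size))
    return vec

def l2(u, v):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def cosine_distance(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)

domain_sizes = [3, 3, 2, 4]          # hypothetical nominal attributes, M = 4
x = one_hot([0, 2, 1, 3], domain_sizes)
y = one_hot([0, 1, 1, 0], domain_sizes)
M = len(domain_sizes)

# With exactly M ones per vector: ||x - y||^2 = 2 * M * d_cos(x, y).
print(l2(x, y) ** 2, 2 * M * cosine_distance(x, y))
```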

All eight measures had run times of the same order of magnitude. For example, predicting class labels for instances in one fold of train-and-test in NG20 took 21,458 seconds for \(m_2\) and 26,484 seconds for \(m_{0.5}\) in comparison to 16,296 (\(d_{cos}\)), 28,168 (\(\ell _{0.5}\)), 24,380 (\(\ell _2\)), 29,210 (s-\(\ell _{0.5}\)), 25,944 (s-\(\ell _2\)) and 20,515 (\(d_{lin}\)) seconds. In Corel, \(m_2\) and \(m_{0.5}\) took 37 and 47 seconds whereas \(d_{cos}\) took 22 seconds, followed by 32 (\(\ell _2\)), 45 (\(\ell _{0.5}\)), 47 (s-\(\ell _2\)), 59 (s-\(\ell _{0.5}\)) and 90 (\(d_{lin}\)) seconds.

4.2 Content-based multimedia information retrieval (CBMIR)

Given a query instance \(\mathbf{q}\) for a retrieval task, all the instances in a data set were ranked in ascending order of their dissimilarity to \(\mathbf{q}\) based on a dissimilarity measure, and the first k instances were presented as the relevant instances to \(\mathbf{q}\). For performance evaluation, an instance was considered to be relevant to \(\mathbf{q}\) if it has the same category label as \(\mathbf{q}\). A good information retrieval system returns relevant instances at the top. Hence, the precision in the top 10 (P@10) retrieved results was used as the performance measure. The same process was repeated using each instance in a data set as a query, with the rest of the instances being ranked. The average P@10 of N queries was reported. For the information retrieval task, we used 10 data sets with 10 or more classes from multimedia (text, music and image) applications: New3s, Ohscal, Wap, R52, NG20, Fbis, Caltech, Gtzan, Hba and Corel. The average P@10 of \(d_{cos}, \ell _{0.5}, \ell _2, s\)-\(\ell _{0.5}, s\)-\(\ell _2, d_{lin}, m_{0.5}\) and \(m_2\) are provided in Table 6.
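The evaluation protocol above can be sketched as a leave-one-out loop. This is an illustrative sketch only: the function names, the toy data and the stand-in Manhattan dissimilarity are hypothetical, and any measure with the same signature could be substituted.

```python
def precision_at_k(query_index, data, labels, dissimilarity, k=10):
    """Fraction of the k instances closest to the query that share its label."""
    others = [i for i in range(len(data)) if i != query_index]
    # Rank the remaining instances in ascending order of dissimilarity.
    others.sort(key=lambda i: dissimilarity(data[query_index], data[i]))
    top = others[:k]
    return sum(labels[i] == labels[query_index] for i in top) / k

def average_precision_at_k(data, labels, dissimilarity, k=10):
    """Average P@k, using every instance in turn as the query."""
    n = len(data)
    return sum(precision_at_k(q, data, labels, dissimilarity, k) for q in range(n)) / n

def manhattan(u, v):
    # Stand-in dissimilarity; any measure with this signature can be plugged in.
    return sum(abs(a - b) for a, b in zip(u, v))

# Toy data: two well-separated classes of three points each.
data = [[0.0, 0.1], [0.1, 0.0], [0.05, 0.05], [1.0, 0.9], [0.9, 1.0], [0.95, 0.95]]
labels = ["a", "a", "a", "b", "b", "b"]
print(average_precision_at_k(data, labels, manhattan, k=2))
```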

Table 7 presents the summarized result in terms of the win:loss:draw counts of \(m_{0.5}\) and \(m_2\) using confidence interval based on the two standard errors over N queries (standard errors are provided in Table 11 in “Appendix 3”). It shows that both \(m_{0.5}\) and \(m_2\) produced significantly better retrieval results than the other six contenders in many data sets: \(m_{0.5}\) had only 1 loss and between 7 and 10 wins; \(m_2\) had at least 7 wins and at most 3 losses. The detailed result in Table 6 shows that, out of 10 data sets used, \(m_{0.5}\) and \(m_2\) produced the best result or equivalent to the best result in 9 and 6 data sets, respectively. They have average rankings of 1.20 and 2.70, respectively, whereas the closest contender \(d_{cos}\) has an average ranking of 3.20.

5 Relation to \(\ell _p\) with rank transformation

At first glance, it appears that \(m_p\) (Eq. 8 with \(\delta =0\)) is equivalent to \(\ell _p\) with rank transformation [9] in continuous domains because they both measure dissimilarity based on the number of instances in-between the two instances under measurement. In rank transformation [9], instances in each dimension are ranked in ascending order with the smallest value having rank 1, the second smallest value having rank 2, and so on. The values of instances are then replaced by their ranks. If there are \(n<N\) instances which have the same value and the value has rank r, then all instances are assigned the same rank r; and the next available rank is \(r+n\) (i.e., the minimum rank is assigned in the case of a tie) (Footnote 8).

The distance between two instances in each dimension after the rank transformation as discussed above can be defined as: \(abs(rank(x_i)-rank(y_i)) = |\{z_i:\min (x_i,y_i)\le z_i < \max (x_i,y_i)\}|\). In \(m_p\) (with \(\delta =0\)) using the implementation based on the range search, \(|R_i(x_i,y_i)| = |\{z_i:\min (x_i,y_i)\le z_i \le \max (x_i,y_i)\}|\) (Footnote 9).

These two formulations are equivalent only if all values in dimension i are distinct, i.e., \(|R_i(x_i,y_i)|=abs(rank(x_i)-rank(y_i))+1\). They are different when there are duplicate values; and the degree of difference is proportional to the number of duplicates.
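The difference between the two counts can be seen on a small one-dimensional example. The sketch below is illustrative only: the helper names and the toy values are hypothetical, ties receive the minimum rank as described above, and both quantities are computed by binary search on the sorted values of one dimension.

```python
from bisect import bisect_left, bisect_right

def min_rank(sorted_values, v):
    """Rank of v with ties given the minimum rank (smallest value has rank 1)."""
    return bisect_left(sorted_values, v) + 1

def rank_difference(sorted_values, x, y):
    return abs(min_rank(sorted_values, x) - min_rank(sorted_values, y))

def range_count(sorted_values, x, y):
    """|R_i(x, y)|: number of values z with min(x, y) <= z <= max(x, y)."""
    lo, hi = min(x, y), max(x, y)
    return bisect_right(sorted_values, hi) - bisect_left(sorted_values, lo)

distinct = sorted([1, 2, 3, 4, 5])
duplicated = sorted([1, 2, 2, 2, 3, 5, 5])

print(rank_difference(distinct, 2, 4), range_count(distinct, 2, 4))      # 2 3
print(rank_difference(duplicated, 2, 5), range_count(duplicated, 2, 5))  # 4 6
print(rank_difference(duplicated, 2, 2), range_count(duplicated, 2, 2))  # 0 3
```

With distinct values the range count is the rank difference plus one; with duplicates the gap grows, and the self-comparison of a duplicated value gives 0 for the rank difference but its frequency for the range count.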

It is interesting to compare the self-dissimilarity of \(x_i\) when there are duplicates of \(x_i\): \(abs(rank(x_i)-rank(x_i)) = 0\) versus \(|R_i(x_i,x_i)| = f(x_i)\), where \(f(x_i)\) is the frequency of \(x_i\). Even though the rank difference between \(x_i\) and \(y_i\) is density (data) dependent when \(x_i\ne y_i\) (i.e., the rank difference between \(x_i\) and \(y_i\) is larger in a denser region than in a sparse region even if the geometric distance is the same), it is zero irrespective of the distribution when \(x_i=y_i\). In the extreme case where all the instances have the same value in dimension i, the self-dissimilarity is 1 (maximum) in the case of \(m_p\), whereas the self-dissimilarity of \(\ell _p\) after rank transformation is 0 (minimum). Often in high-dimensional real-world problems, many instances can have the same value in many dimensions, e.g., many documents in a collection can have the same occurrence frequency of a term; or different individuals can have the same age, etc.

We have compared the performances of \(m_p\) and \(\ell _p\) with rank transformation (\(\ell _p^{rank}\)) in the k-NN classification task using data sets with continuous attributes only (as rank transformation is applicable only in continuous domains). Both \(\ell _p^{rank}\) and \(m_p\) (since the efficient approximation of \(R_i(\cdot ,\cdot )\) as discussed in Sect. 3.1 is not used) have high time complexities. Estimating \(|R_i(\cdot ,\cdot )|\) in \(m_p\) and computing the rank of an unseen value of a test instance in \(\ell _p^{rank}\) in each dimension requires \(O(\log N)\) time using binary search, resulting in a total time complexity of \(O(M\log N)\) for measuring the dissimilarity of a pair of instances, which is very expensive in large data sets. We managed to complete a tenfold cross-validation of k-NN classification within 24 h in only ten relatively small data sets: Hba, Gtzan, Arcene, Mfeat, Madelon, Satellite, Fbis, Wap, Webkb, and R8.

In order to provide an idea about the number of duplicate values per dimension in a data set, the factor of distinct values averaged over all dimensions, i.e., \(\alpha \), is calculated as:

\[\alpha = \frac{1}{M}\sum _{i=1}^{M}\frac{w_i}{N}\]

where \(w_i\) is the number of distinct values in dimension i. \(\alpha =1\) indicates that the data set has unique values in all dimensions (no duplicate at all) and \(\alpha =\frac{1}{N}\) indicates that all instances have the same value in each and every dimension.
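A direct computation of \(\alpha \) can be sketched as follows, assuming the form \(\alpha = \frac{1}{M}\sum _{i=1}^{M} w_i/N\), which is the form implied by the two boundary cases stated above. The function name and the toy data sets are hypothetical.

```python
def distinct_value_factor(data):
    """alpha: fraction of distinct values per dimension, averaged over dimensions."""
    n = len(data)          # number of instances N
    m = len(data[0])       # number of dimensions M
    return sum(len({row[i] for row in data}) / n for i in range(m)) / m

all_unique = [[1, 10], [2, 20], [3, 30], [4, 40]]
all_same = [[7, 7], [7, 7], [7, 7], [7, 7]]
print(distinct_value_factor(all_unique))  # 1.0
print(distinct_value_factor(all_same))    # 0.25, i.e. 1/N
```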

The average accuracies of 5-NN classification over a tenfold cross-validation using \(\ell _{2}^{rank}\), \(\ell _{0.5}^{rank}\), \(m_{2}\) and \(m_{0.5}\) are provided in Table 8. Based on the two-standard-error confidence interval test, \(\ell _{2}^{rank}\) & \(m_{2}\) and \(\ell _{0.5}^{rank}\) & \(m_{0.5}\) produced similar results in Hba, Gtzan, Arcene, Mfeat, Madelon and Satellite; but both \(m_{2}\) and \(m_{0.5}\) produced significantly better accuracies than \(\ell _{2}^{rank}\) and \(\ell _{0.5}^{rank}\) in Fbis, Wap, Webkb, and R8. These results show that \(m_p\) performs better than \(\ell _p^{rank}\) in the case where many instances have the same values (i.e., there are only a very few distinct values) in many dimensions.

In order to further demonstrate this difference, we conducted experiments with the Hba and Gtzan data sets (having large \(\alpha \)) by increasing the number of duplicate values in many dimensions. The range of values in dimension i was divided into 10 equal-width bins represented by bin id \(1,2,\cdots ,10\) and an instance’s value was replaced by the id of the bin into which the instance falls, resulting in many duplicate values in dimension i. In order to control the number of dimensions with duplicate values, we introduced a parameter a that determines the proportion of attributes to be converted into bins, i.e., \(a=0\) indicates that no attribute was converted into bins (i.e., values in all attributes were left as they were and no duplicate values were introduced) and \(a=1.0\) indicates that all attributes were converted into bins (i.e., many instances have duplicate values in all dimensions). The k-NN (\(k=5\)) classification accuracies of \(\ell _{2}^{rank}, \ell _{0.5}^{rank}, m_{2}\) and \(m_{0.5}\) in the Hba and Gtzan data sets with \(a=0, 0.1, 0.2, 0.5, 0.75\) and 1.0 are shown in Fig. 4 and the corresponding \(\alpha \) values are provided in Table 9.
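The binning procedure can be sketched as below. This is a hedged illustration: the text does not state how the attributes to be binned were chosen, so the sketch assumes a random choice with a fixed seed, and the function names and toy data are hypothetical.

```python
import random

def equal_width_bin(values, n_bins=10):
    """Replace each value by the id (1..n_bins) of its equal-width bin."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [1] * len(values)
    width = (hi - lo) / n_bins
    # The maximum value is clamped into the last bin.
    return [min(int((v - lo) / width) + 1, n_bins) for v in values]

def bin_fraction_of_attributes(data, a, n_bins=10, seed=0):
    """Bin a proportion `a` of the attributes; leave the others unchanged."""
    m = len(data[0])
    rng = random.Random(seed)
    # Assumption: the attributes to convert are picked uniformly at random.
    chosen = set(rng.sample(range(m), round(a * m)))
    columns = []
    for i in range(m):
        column = [row[i] for row in data]
        columns.append(equal_width_bin(column, n_bins) if i in chosen else column)
    return [list(row) for row in zip(*columns)]

data = [[0.1, 5.0, 3.3, 7.0], [0.4, 6.0, 1.1, 9.0], [0.9, 8.0, 2.2, 8.0]]
print(bin_fraction_of_attributes(data, a=0.5))
```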

Figure 4 shows that there is a significant difference between the classification accuracies of \(m_{2}\) and \(m_{0.5}\) compared with those of \(\ell _{2}^{rank}\) and \(\ell _{0.5}^{rank}\) for \(a\ge 0.75\) in both the Hba and Gtzan data sets. This indicates that \(m_{p}\) can provide more reliable nearest neighbors than \(\ell _{p}^{rank}\) if many instances have duplicate values in many dimensions. This superior performance of \(m_p\) over \(\ell _{p}^{rank}\) is primarily due to the data-dependent self-dissimilarity.

Furthermore, the rank transformation is possible in continuous domains only. In contrast, \(m_p\) can be applied not only to both continuous and discrete domains, but also seamlessly to domains with mixed attribute types.

6 Discussion

In a high-dimensional space, the most widely used Euclidean distance (\(\ell _2\)-norm) becomes ineffective. Many researchers have argued that it is due to the ‘concentration’ effect of \(\ell _p\), i.e., pairwise distances become almost equal or similar and the contrast between the nearest and farthest instances diminishes [1, 6, 12]. Let \(dmax(\mathbf{x},d)\) and \(dmin(\mathbf{x},d)\) be the dissimilarity of \(\mathbf{x}\) to its farthest and nearest neighbors in D using dissimilarity measure d, respectively. For a given instance, the distance between the nearest and farthest instances does not increase as fast as the distance to the nearest instance for many distributions [6], i.e., the ‘relative contrast’ \(\left( \frac{dmax(\mathbf{x},{\ell _p})-dmin(\mathbf{x},{\ell _p})}{dmin(\mathbf{x},{\ell _p})}\right) \) vanishes as the number of dimensions increases.
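The vanishing relative contrast can be observed on synthetic data. The sketch below is an illustration under stated assumptions: it uses \(\ell _2\) only, uniformly distributed random points, a small sample of 100 instances and a fixed seed; the function names are hypothetical.

```python
import math
import random

def l2(u, v):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def relative_contrast(x, others, dissimilarity):
    """(dmax - dmin) / dmin of x with respect to the other instances."""
    d = [dissimilarity(x, y) for y in others]
    return (max(d) - min(d)) / min(d)

def mean_relative_contrast(n, m, seed=0):
    """Average relative contrast of l2 on n uniform random points in [0, 1]^m."""
    rng = random.Random(seed)
    data = [[rng.random() for _ in range(m)] for _ in range(n)]
    total = 0.0
    for i, x in enumerate(data):
        others = data[:i] + data[i + 1:]
        total += relative_contrast(x, others, l2)
    return total / n

low_dim = mean_relative_contrast(100, 10)
high_dim = mean_relative_contrast(100, 200)
print(low_dim, high_dim)
```

Under these assumptions the average relative contrast at \(M=200\) should come out well below the value at \(M=10\).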

In our investigation, we observed that \(m_p\) is more concentrated than \(\ell _p\) and \(d_{cos}\), i.e., the relative contrast of \(m_p\) is smaller than that of \(\ell _p\) and \(d_{cos}\). Despite having a higher concentration effect, \(m_p\) provides more reliable nearest neighbors than \(\ell _p\) and \(d_{cos}\) in many data sets, particularly in high-dimensional problems (see the experimental results in Sects. 4.1 and 4.2). This indicates that the negative impact of the concentration phenomenon in practice may not be as severe as it is thought theoretically in the literature. This finding is consistent with that suggested by François et al. [12]. The detailed empirical result of the phenomenon of concentration of \(m_2\), \(\ell _2\) and \(d_{cos}\) is provided in the “Concentration” section of “Appendix 2”.

Another issue of distance measures in high-dimensional spaces discussed in the literature is “hubness” [24]. Let \(N_k(\mathbf{y})\) be the set of k nearest neighbors of \(\mathbf{y}\); and k-occurrences of \(\mathbf{x}\), \(O_k(\mathbf{x}) = |\{\mathbf{y}: \mathbf{x}\in N_k(\mathbf{y})\}|\), be the number of other instances in the given data set where \(\mathbf{x}\) is one of their k nearest neighbors. As the number of dimensions increases, the distribution of \(O_k(\mathbf{x})\) becomes considerably skewed (i.e., there are many instances with zero or small \(O_k\) and only a few instances have large \(O_k\)) for many widely used distance measures [24]. The instances with large \(O_k(\cdot )\) are considered as “hubs,” i.e., the popular nearest neighbors. Hubness becomes prominent in high-dimensional space, and it affects the performance of k-NN based algorithms. For example, if \(\mathbf{x}\) is a hub, it appears in the k-NN sets of many test instances and contributes in the prediction decisions, but it may not be relevant to make predictions for all of those test instances.
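The k-occurrences count \(O_k(\mathbf{x})\) defined above can be computed by brute force as sketched below. The function names and the one-dimensional toy data are hypothetical, and the quadratic loop is meant for illustration rather than for large data sets.

```python
def k_occurrences(data, dissimilarity, k):
    """O_k(x) for every instance: how often x is among another instance's k-NN."""
    counts = [0] * len(data)
    for j, y in enumerate(data):
        others = [i for i in range(len(data)) if i != j]
        others.sort(key=lambda i: dissimilarity(y, data[i]))
        for i in others[:k]:
            counts[i] += 1
    return counts

def abs_diff(u, v):
    return abs(u[0] - v[0])

# One-dimensional toy data: the two edge points are nobody's nearest neighbor.
data = [[0.0], [4.0], [5.0], [6.5], [10.0]]
occurrences = k_occurrences(data, abs_diff, k=1)
print(occurrences)
```

The counts always sum to \(N \times k\), so a skewed \(O_k\) distribution means that a few instances absorb most of the nearest-neighbor slots.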

We observed that the hubness phenomenon in \(m_p\) is not as severe as in the case of \(\ell _p\) and \(d_{cos}\) when the number of dimensions is increased, particularly in non-uniform distributions. This may contribute to the superior performance of \(m_p\) over \(\ell _p\) and \(d_{cos}\). The detailed empirical result of the phenomenon of hubness of \(m_2\), \(\ell _2\) and \(d_{cos}\) is provided in the “Hubness” section of “Appendix 2”.

In order to circumvent the high-dimensionality issue, dimensionality reduction [11] techniques are used before using distance measures. In continuous domains, Principal Component Analysis (PCA) [16] is commonly used to project data into a lower-dimensional space defined by principal components with high variance. The principal components are computed by the eigendecomposition of the covariance or correlation matrix, which is computationally expensive in the case of large M and N. It relies on the variance of data in each dimension, which may not be enough to capture the characteristics of the local data distribution. As it selects the dimensions with high variance, we may lose differences between instances in the dimensions with low variance.

In a nutshell, the main purpose of PCA is dimensionality reduction that enables an application to high-dimensional data sets; and it usually does not improve predictive accuracy. This is exactly what we observed in the 5-NN classification task. For example, 5-NN classification accuracies of \(d_{cos}\) and \(\ell _2\) were increased in Corel and Hba but that of \(\ell _{0.5}\) was decreased in both data sets. Similarly, the classification accuracies of all three measures decreased significantly in Mnist and R52. In general, \(m_{2}\) and \(m_{0.5}\) in the original space (without dimensionality reduction) produced better and consistent results across different data sets. The detailed results of this comparison are provided in Table 12 in “Appendix 4”.

Note that PCA changes the distribution of data to maximize the variance (which is defined by inter-point distances). Thus, it does not make sense to apply PCA when using \(m_p\).

Different data-dependent distance metric adaptation techniques are discussed in the literature to improve task-specific performance of distance measures in a given data set. Weighted Minkowski distance [10] assigns weight to the distance in each dimension based on the observed data. Note that standardized Euclidean distance (s-\(\ell _2\)) is a simple weighted Euclidean distance where the distance in each dimension is weighted by the inverse of data variance in that dimension. Assigning weights more intelligently requires some learning or optimization. In transductive learning, Lundell and Ventura [21] corrected the Euclidean distance between two instances based on meta-clustering which itself relies on pairwise Euclidean distances and can be computationally expensive in large and high-dimensional problems.
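The description of s-\(\ell _2\) as a variance-weighted Euclidean distance can be sketched as follows. The sketch assumes the population variance of each dimension is used (the text does not say whether the sample or population variance is meant); the function names and the toy data are hypothetical. It also shows that this weighting makes the distance insensitive to the unit of a dimension.

```python
import math
import statistics

def column_variances(data):
    """Population variance of every dimension (an assumption of this sketch)."""
    return [statistics.pvariance(column) for column in zip(*data)]

def standardized_l2(u, v, variances):
    """Euclidean distance with each squared difference divided by the variance."""
    return math.sqrt(sum((a - b) ** 2 / var for a, b, var in zip(u, v, variances)))

data = [[1.0, 10.0], [2.0, 30.0], [4.0, 20.0], [3.0, 50.0]]
rescaled = [[row[0], row[1] * 1000.0] for row in data]   # change units of dim 2

d_original = standardized_l2(data[0], data[1], column_variances(data))
d_rescaled = standardized_l2(rescaled[0], rescaled[1], column_variances(rescaled))
print(d_original, d_rescaled)
```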

Metric learning [32, 33] projects data from the original space to a new low-dimensional space that best suits the Euclidean distance to solve the task at hand. Rather than projecting data into a low-dimensional space by ignoring dimensions with small eigenvalues, regularized matrix relevance learning [28] uses a regularization scheme which inhibits decays in the eigen profile. Both of these techniques require intensive learning which is computationally expensive in large and/or high-dimensional data sets. They optimize the distance metric specifically for the given task, which may not be good for other tasks using the same data set. They are not general-purpose measures like \(m_p\), \(\ell _p\) or \(d_{cos}\).

All the adaptive metric learning techniques discussed in the literature attempt to adjust the inter-point distances in the space based on the data distribution that satisfies some optimality constraints. Because the transformed space is still embedded in the Euclidean space, the self-similarity is still constant regardless of the data distribution, and they still rely on a geometric model and metric assumptions. Even though metric-based measures have nice mathematical properties, their assumptions might be inappropriate to model some problems. Recently, Schleif and Tino [27] discussed issues of metric-based proximity learning and provided a comprehensive review of non-metric proximity learning.

In this paper, we focus on general-purpose distance or dissimilarity measures which require no learning. We have evaluated the performance of the proposed data-dependent general-purpose dissimilarity measure \(m_p\) against the geometric general-purpose distance measures \(\ell _p\) and \(d_{cos}\). In the future, it would be interesting to investigate how learning can be applied to data-dependent dissimilarity measures such as \(m_p\) to produce non-metric learning and then compare non-metric learning with metric learning.

Because of the implementation of \(m_p\) using bins, one can see some similarity with Locality Sensitive Hashing (LSH) [15]. However, the aims of binning are different in the two cases. In LSH, bins are used to quickly find a small set of candidate nearest neighbors of a test instance, within which the k-NNs are searched using the Euclidean distance. In contrast, in \(m_p\), the probability data mass in bins is used directly as a measure of dissimilarity. It is an open question whether LSH can be used to generate a candidate set quickly for \(m_p\). LSH has nice theoretical bounds for the Euclidean distance, but it is not clear if similar bounds can be derived for \(m_p\).

7 Conclusions and future work

In this paper, we proposed a new dissimilarity measure called “\(m_p\)-dissimilarity”. It estimates the dissimilarity between two instances in each dimension as a probability data mass in the region enclosing the two instances. The final dissimilarity between the two instances is estimated by combining all single-dimensional dissimilarities as in the case of \(\ell _p\). The fundamental difference between the formulations of \(m_p\) and \(\ell _p\) is the replacement of the geometric distance with the probability mass in each dimension.

Our empirical evaluations in k-NN classification and content-based multimedia information retrieval tasks show that \(m_p\) provides better closest matches than those provided by \(\ell _p\) and cosine distance in high-dimensional spaces. Its performance is more consistent across different data sets. By simply replacing the geometric distance in each dimension with the probability mass, k-NN using \(m_p\) significantly improves the performance of k-NN using \(\ell _p\) in many high-dimensional data sets.

In contrast to the commonly used distance measures, \(m_p\) is not using the values of instances in each dimension in the measure directly. Because it is based on data mass, it is insensitive to units and scale of measurement and the difference in variance of values of instances between dimensions. Thus, it does not require any preprocessing such as min–max normalization to rescale values in the same range, or standard deviation normalization to ensure unit variance across all dimensions, or TF-IDF weighting to adjust the importance of a term in a document.

Even though \(\ell _p\) can be made data dependent through rank transformation, it is applicable only in the case where all instances have distinct values (or a few duplicates only) in each dimension. However, the data-dependent characteristic of \(m_p\) is applicable both with and without many instances having duplicate values in many dimensions. Many instances having duplicate values in many dimensions is a common characteristic of high-dimensional data sets where data lies in a low-dimensional subspace. In such high-dimensional data sets, \(m_p\) produces better task-specific performance than \(\ell _p\) with the rank transformation.

Future work includes investigating learning for \(m_p\) and comparing the resulting non-metric learning with metric learning; examining the effectiveness of \(m_p\) in other data mining tasks such as clustering, anomaly detection, vector quantization and SVM kernel learning; and developing indexing schemes for \(m_p\) to speed up the nearest neighbor search in the case of large N.

Notes

We used dissimilarity so that it is consistent with other distance or dissimilarity measures.

We used sufficiently large b in order to discriminate instances well.

Available with WEKA software http://www.cs.waikato.ac.nz/ml/weka/.

Another approach to assigning ranks in the case of a tie is to assign the average rank, i.e., \(\frac{r+(r+1)+\cdots +(r+n-1)}{n}\).

We used the implementation based on the range search and not the approximation using binning in order to have similar formulation as \(\ell _p\) with rank transformation.

References

Aggarwal CC, Hinneburg A, Keim DA (2001) On the surprising behavior of distance metrics in high dimensional space. In: Proceedings of the 8th international conference on database theory. Springer, Berlin, pp. 420–434

Ariyaratne HB, Zhang D (2012) A novel automatic hierachical approach to music genre classification. In: Proceedings of the 2012 IEEE international conference on multimedia and expo workshops, IEEE Computer Society, Washington DC, USA, pp. 564–569

Aryal S, Ting KM, Haffari G, Washio T (2014) Mp-dissimilarity: a data dependent dissimilarity measure. In: Proceedings of the IEEE international conference on data mining. IEEE, pp. 707–712

Bache K, Lichman M (2013) UCI machine learning repository, http://archive.ics.uci.edu/ml. University of California, Irvine, School of Information and Computer Sciences

Bellet A, Habrard A, Sebban M (2013) A survey on metric learning for feature vectors and structured data, Technical Report, arXiv:1306.6709

Beyer KS, Goldstein J, Ramakrishnan R, Shaft U (1999) When is “nearest neighbor” meaningful? In: Proceedings of the 7th international conference on database theory. Springer, London, pp. 217–235

Boriah S, Chandola V, Kumar V (2008) Similarity measures for categorical data: a comparative evaluation. In: Proceedings of the eighth SIAM international conference on data mining, pp. 243–254

Cardoso-Cachopo A (2007) Improving methods for single-label text categorization, PhD thesis, Instituto Superior Tecnico, Technical University of Lisbon, Lisbon, Portugal

Conover WJ, Iman RL (1981) Rank transformations as a bridge between parametric and nonparametric statistics. Am Stat 35(3):124–129

Deza MM, Deza E (2009) Encyclopedia of distances. Springer, Berlin

Fodor I (2002) A survey of dimension reduction techniques. Technical Report UCRL-ID-148494, Lawrence Livermore National Laboratory

François D, Wertz V, Verleysen M (2007) The concentration of fractional distances. IEEE Trans Knowl Data Eng 19(7):873–886

Hall M, Frank E, Holmes G, Pfahringer B, Reutemann P, Witten IH (2009) The weka data mining software: an update. SIGKDD Explor Newsl 11(1):10–18

Han E-H, Karypis G (2000) Centroid-based document classification: Analysis and experimental results. In: Proceedings of the 4th European conference on principles of data mining and knowledge discovery. Springer, London, pp. 424–431

Indyk P, Motwani R (1998) Approximate nearest neighbors: towards removing the curse of dimensionality. In: Proceedings of the thirtieth annual ACM symposium on theory of computing, STOC ’98, ACM, New York, pp. 604–613

Jolliffe I (2005) Principal component analysis. Wiley Online Library, Hoboken

Krumhansl CL (1978) Concerning the applicability of geometric models to similarity data: the interrelationship between similarity and spatial density. Psychol Rev 85(5):445–463

Kulis B (2013) Metric learning: a survey. Found Trends Mach Learn 5(4):287–364

Lan M, Tan CL, Su J, Lu Y (2009) Supervised and traditional term weighting methods for automatic text categorization. IEEE Trans Pattern Anal Mach Intell 31(4):721–735

Lin D (1998) An information-theoretic definition of similarity. In: Proceedings of the fifteenth international conference on machine learning. Morgan Kaufmann Publishers Inc., San Francisco, pp. 296–304

Lundell J, Ventura D (2007) A data-dependent distance measure for transductive instance-based learning. In: Proceedings of the IEEE international conference on systems, man and cybernetics, pp. 2825–2830

Mahalanobis PC (1936) On the generalized distance in statistics. Proc Natl Inst Sci India 2:49–55

Minka TP (2003) The ‘summation hack’ as an outlier model, http://research.microsoft.com/en-us/um/people/minka/papers/minka-summation.pdf. Microsoft Research

Radovanović M, Nanopoulos A, Ivanović M (2010) Hubs in space: popular nearest neighbors in high-dimensional data. J Mach Learn Res 11:2487–2531

Salton G, Buckley C (1988) Term-weighting approaches in automatic text retrieval. Inf Process Manage 24(5):513–523

Salton G, McGill MJ (1986) Introduction to modern information retrieval. McGraw-Hill Inc, New York

Schleif F-M, Tino P (2015) Indefinite proximity learning: a review. Neural Comput 27(10):2039–2096

Schneider P, Bunte K, Stiekema H, Hammer B, Villmann T, Biehl M (2010) Regularization in matrix relevance learning. IEEE Trans Neural Netw 21(5):831–840

Tanimoto TT (1958) Mathematical theory of classification and prediction, International Business Machines Corp

Tuytelaars T, Lampert C, Blaschko MB, Buntine W (2010) Unsupervised object discovery: a comparison. Int J Comput Vision 88(2):284–302

Tversky A (1977) Features of similarity. Psychol Rev 84(4):327–352

Wang F, Sun J (2015) Survey on distance metric learning and dimensionality reduction in data mining. Data Min Knowl Disc 29(2):534–564

Yang L (2006) Distance metric learning: a comprehensive survey, Technical report, Michigan State University

Zhou G-T, Ting KM, Liu FT, Yin Y (2012) Relevance feature mapping for content-based multimedia information retrieval. Pattern Recogn 45(4):1707–1720

Acknowledgements

The preliminary version of this paper is published in Proceedings of the IEEE International conference on data mining (ICDM) 2014 [3]. We would like to thank anonymous reviewers for their useful comments. Kai Ming Ting is partially supported by the Air Force Office of Scientific Research (AFOSR), Asian Office of Aerospace Research and Development (AOARD) under Award Number FA2386-13-1-4043. Takashi Washio is partially supported by the AFOSR AOARD Award Number 15IOA008-154005 and JSPS KAKENHI Grant Number 2524003.

Appendices

Appendix 1: Probabilistic interpretation of \(m_p\)

The formulation of \(m_p(\mathbf{x}, \mathbf{y})\) (Eq. 8) has a probabilistic interpretation. The simplest form of data-dependent dissimilarity measure is to define an M-dimensional region \(R(\mathbf{x}, \mathbf{y})\) that encloses \(\mathbf{x}\) and \(\mathbf{y}\), and to estimate the probability of a randomly selected point \(\mathbf{t}\) from the distribution of data, \(\phi (\mathbf{x})\), falling in \(R(\mathbf{x}, \mathbf{y})\), i.e., \(P(\mathbf{t} \in R(\mathbf{x},\mathbf{y})|\phi (\mathbf{x}))\). Let \(R(\mathbf{x}, \mathbf{y})\) have length \(R_i(\mathbf{x}, \mathbf{y})\) in dimension i. Assuming that the dimensions are independent, \(P(\mathbf{t} \in R(\mathbf{x},\mathbf{y})|\phi (\mathbf{x}))\) can be approximated as:

\[P(\mathbf{t} \in R(\mathbf{x},\mathbf{y})|\phi (\mathbf{x})) \approx \prod _{i=1}^{M} P(t_i\in R_i(\mathbf{x},\mathbf{y})|\phi _i(\mathbf{x})) \tag{14}\]

where \(P(t_i\in R_i(\mathbf{x},\mathbf{y})|\phi _i(\mathbf{x}))\) is the probability of \(t_i\) falling in \(R_i(\mathbf{x},\mathbf{y})\) for dimension i.

The approximation in Eq. 14 is sensitive to outliers. An approximation which is tolerant to outliers can be obtained by replacing the product with a summation [23]. The sum-based approximation relates to the probability of \(\mathbf{t}\) in Eq. 14 under the following outlier model. Consider a data generation process in which, in order to sample \(t_i\), a coin with probability \((1-\epsilon )\) of turning up heads is flipped. If the coin turns up heads, \(t_i\) is drawn from the distribution of data in dimension i, \(\phi _i(\mathbf{x})\), where the probability of sampling \(t_i\) is \(P_i(t_i|\phi _i(\mathbf{x}))\); otherwise, it is sampled from the uniform distribution with probability 1 / A, where A is a constant.

Lemma 1

[23] Under the data generation process described above, the probability of a data point \(P'(\cdot )\) can be approximated as

\[P'(\mathbf{t}) \approx C_1 + C_2 \sum _{i=1}^{M} P_i(t_i|\phi _i(\mathbf{x}))\]

where \(C_1\) and \(C_2\) are constants.

Proof

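Under the outlier model, the probability of generating the value of the i’th dimension \(t_i\) is

\[P'(t_i) = (1-\epsilon )\,P_i(t_i|\phi _i(\mathbf{x})) + \frac{\epsilon }{A}\]

We assume that each dimension is generated independently, hence

\[P'(\mathbf{t}) = \prod _{i=1}^{M}\left[ (1-\epsilon )\,P_i(t_i|\phi _i(\mathbf{x})) + \frac{\epsilon }{A}\right] = \left( \frac{\epsilon }{A}\right) ^{M} + \left( \frac{\epsilon }{A}\right) ^{M-1}(1-\epsilon )\sum _{i=1}^{M} P_i(t_i|\phi _i(\mathbf{x})) + \cdots \]

In the extreme case where the probability of generating \(t_i\) from the uniform distribution (i.e., the outlier component) is high, i.e., \(\epsilon \) is close to 1, only the first two terms matter. Setting \(C_1 := (\epsilon /A)^M\) and \(C_2 := (\epsilon /A)^{M-1} (1-\epsilon )\), the lemma follows. \(\square \)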
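The quality of the two-term approximation can be checked numerically. The sketch below assumes the outlier model described above, in which each dimension contributes \((1-\epsilon )P_i(t_i|\phi _i(\mathbf{x})) + \epsilon /A\) to the product, and uses the constants \(C_1\) and \(C_2\) from the proof. The function names, the per-dimension probabilities and the settings \(M=5\), \(\epsilon =0.99\), \(A=1\) are hypothetical.

```python
import math
import random

def exact_probability(p_values, eps, a):
    """Product over dimensions of (1 - eps) * P_i + eps / A."""
    return math.prod((1 - eps) * p + eps / a for p in p_values)

def two_term_approximation(p_values, eps, a):
    """C_1 + C_2 * sum_i P_i with the constants defined in the proof."""
    m = len(p_values)
    c1 = (eps / a) ** m
    c2 = (eps / a) ** (m - 1) * (1 - eps)
    return c1 + c2 * sum(p_values)

rng = random.Random(0)
p_values = [rng.random() for _ in range(5)]   # hypothetical P_i(t_i | phi_i)
exact = exact_probability(p_values, eps=0.99, a=1.0)
approx = two_term_approximation(p_values, eps=0.99, a=1.0)
relative_error = abs(exact - approx) / exact
print(exact, approx, relative_error)
```

With \(\epsilon \) close to 1 the dropped terms are of order \((1-\epsilon )^2\) and higher, so the relative error stays small in this setting.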

In addition to the above approximation given by Minka [23], we propose that the chance of \(t_i\) being drawn from the outlier model can be further reduced by sampling from \(\phi _i(\mathbf{x})^p\), \(p>1\), when the coin turns up heads in the above-mentioned data generation process. The probability of sampling \(t_i\) from \(\phi _i(\mathbf{x})^p\) is \(\frac{P(t_i|\phi _i(\mathbf{x}))^p}{Z_{i,p}}\), where \(P(\cdot )^p\) is the probability of a random event occurring in p successive trials and \(Z_{i,p}\) is the normalization constant that ensures the total probability sums to 1 in the \(i^{th}\) dimension.

Lemma 2

Under the data generation process of sampling from exponential distribution described above, the probability of a data point \(P''(\cdot )\) can be approximated as

\[P''(\mathbf{t}) \approx C_1 + C_2 \sum _{i=1}^{M} \frac{P_i(t_i|\phi _i(\mathbf{x}))^p}{Z_{i,p}}\]

where \(C_1\), \(C_2\), and \(\{Z_{i,p}\}_{i=1}^M\) are constants.

Proof

This follows from Lemma 1 by drawing \(t_i\) from \(\phi _i(\mathbf{x})^p\), \(p>1\), when the coin turns up heads in the data generation process. \(\square \)

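As a result of Lemma 2 (by considering the outlier tolerant model), \(P(\mathbf{t} \in R(\mathbf{x},\mathbf{y}))\) can be approximated as:

\[P(\mathbf{t} \in R(\mathbf{x},\mathbf{y})) \approx C_1 + C_2 \sum _{i=1}^{M} \frac{P_i(t_i \in R_i(\mathbf{x},\mathbf{y}))^p}{Z_{i,p}} \tag{16}\]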

Note that \(P(\mathbf{t} \in R(\mathbf{x},\mathbf{y}))\) is a data-dependent dissimilarity measure for \(\mathbf{x}\) and \(\mathbf{y}\). All the constants on the RHS of Eq. 16 are independent of \(\mathbf{x}\) and \(\mathbf{y}\) and they are just the scaling factors of the dissimilarity measure. In particular, in order to find the nearest neighbor of \(\mathbf{x}\) among a collection of data instances, the only important term in the measure is \(\sum _{i=1}^M P_i(t_i \in R_i(\mathbf{x},\mathbf{y}))^p\). The constants can be ignored as they do not change the ranking of data points. Hence, by ignoring the constants in Eq. 16, \(m_p(\mathbf{x}, \mathbf{y})\) can be expressed as its rescaled version as follows:

\[m_p(\mathbf{x}, \mathbf{y}) = \left( \frac{1}{M}\sum _{i=1}^{M} P_i(t_i \in R_i(\mathbf{x},\mathbf{y}))^p\right) ^{\frac{1}{p}} \tag{17}\]

where the outer power of \(\frac{1}{p}\) is just a rescaling factor and \(\frac{1}{M}\) is a constant.

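In practice, \(P_i\left( t_i\in R_i(\mathbf{x}, \mathbf{y})\right) \) can be estimated from D as:

\[ P_i\left( t_i\in R_i(\mathbf{x}, \mathbf{y})\right) = \frac{|R_i(\mathbf{x}, \mathbf{y})|}{N} \tag{18} \]

where \(|R_i(\mathbf{x}, \mathbf{y})|\) is the number of instances in D that fall in \(R_i(\mathbf{x}, \mathbf{y})\) and N is the total number of instances in D.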

Hence, Eqs. 17 and 18 lead to \(m_p\) defined in Eq. 8.
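A minimal sketch of how this estimate could be computed is given below. It assumes that \(P_i\) is estimated as the fraction of the N instances in D that fall in \(R_i(\mathbf{x}, \mathbf{y})\), and that \(R_i(\mathbf{x}, \mathbf{y})\) is the closed interval between \(x_i\) and \(y_i\); the exact region definition used in the paper may differ (for example, by a margin). The function name `mp_dissimilarity` and the toy data are hypothetical.

```python
def mp_dissimilarity(x, y, data, p=2.0):
    """m_p(x, y) = ((1/M) * sum_i (|R_i(x, y)| / N) ** p) ** (1/p).

    Assumption: R_i(x, y) is the closed interval [min(x_i, y_i), max(x_i, y_i)],
    and |R_i(x, y)| counts the instances of `data` whose i-th value lies in it.
    """
    n = len(data)
    m = len(x)
    total = 0.0
    for i in range(m):
        lo, hi = min(x[i], y[i]), max(x[i], y[i])
        count = sum(1 for row in data if lo <= row[i] <= hi)
        total += (count / n) ** p
    return (total / m) ** (1.0 / p)

# Toy 1-D data: dense around 0, sparse near 10 (illustrative only).
D = [[0.0], [0.1], [0.2], [0.3], [0.4], [0.5], [9.0], [10.0]]

dense_pair = mp_dissimilarity([0.0], [0.5], D)    # 6 of 8 points in the region
sparse_pair = mp_dissimilarity([9.0], [10.0], D)  # 2 of 8 points in the region
print(dense_pair, sparse_pair)
```

In this toy example the pair in the sparse region is geometrically farther apart (1.0 versus 0.5) yet receives the smaller dissimilarity, which is the data-dependent behavior the measure is designed to have.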

Appendix 2: Analysis of concentration and hubness

To examine the concentration and hubness of the three dissimilarity measures \(m_2\), \(\ell _2\) and \(d_{cos}\) in different data distributions as the number of dimensions increases, we used synthetic data sets with uniform (each dimension is uniformly distributed in [0,1]) and normal (each dimension is normally distributed with zero mean and unit variance) distributions with \(M=10\) and \(M=200\). Feature vectors were normalized to be in unit range in each dimension.

1.1 Concentration

The relative contrast between the nearest and farthest neighbor is computed for all \(N=1000\) instances in each data set using \(m_2\), \(\ell _2\) and \(d_{cos}\). The relative contrast of each instance in the uniform and normal distributions with \(M=10\) and \(M=200\) is shown in Fig. 5.

The relative contrast of all three measures decreased substantially (note that the y-axes have different scales in Fig. 5) when the number of dimensions was increased from \(M=10\) to \(M=200\) in both distributions. Notably, \(m_2\) has the lowest relative contrast in both distributions with \(M=10\) and \(M=200\), and \(d_{cos}\) has the highest relative contrast in all cases. The relative contrasts of \(\ell _2\) and \(m_2\) are almost the same except in the case of normal (\(M=200\)), where the relative contrast of \(\ell _2\) is slightly higher than that of \(m_2\) for many instances.

This suggests that \(m_2\) is more concentrated than \(\ell _2\) and \(d_{cos}\). Even in real-world data sets, we observed that \(m_2\) is more concentrated than \(\ell _2\) and \(d_{cos}\).
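The per-instance computation can be sketched as follows. The sketch assumes the common definition of relative contrast, \((d_{max} - d_{min})/d_{min}\), taken over the distances from an instance to all other instances; the definition used in the main text may differ in detail. Only \(\ell _2\) on uniform data is shown, and the sample size `n` is smaller than the \(N=1000\) used above to keep the pure-Python loops fast.

```python
import math
import random

def l2(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))

def relative_contrast(data, dist=l2):
    """Per-instance (d_max - d_min) / d_min over all other instances (assumed definition)."""
    contrasts = []
    for i, x in enumerate(data):
        d = [dist(x, y) for j, y in enumerate(data) if j != i]
        d_min, d_max = min(d), max(d)
        contrasts.append((d_max - d_min) / d_min)
    return contrasts

def uniform_data(n, m, rng):
    """n instances with m dimensions, each uniformly distributed in [0, 1)."""
    return [[rng.random() for _ in range(m)] for _ in range(n)]

rng = random.Random(0)
n = 100  # illustrative sample size, not the paper's setting
low = relative_contrast(uniform_data(n, 10, rng))
high = relative_contrast(uniform_data(n, 200, rng))
mean_low = sum(low) / n
mean_high = sum(high) / n
print(f"M=10: {mean_low:.3f}  M=200: {mean_high:.3f}")
```

Passing a different callable as `dist` (for example, an implementation of \(m_2\) or \(d_{cos}\)) would allow the same comparison across measures.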

1.2 Hubness

To examine the hubness phenomenon, the 5-occurrences of each instance \(\mathbf{x}\in D\) is computed, i.e., \(O_5(\mathbf{x}) = |\{\mathbf{y}: \mathbf{x}\in N_5(\mathbf{y})\}|\), where \(N_5(\mathbf{y})\) is the set of 5-NN of \(\mathbf{y}\). The \(O_5\) distribution is then plotted for each measure (\(m_2\), \(\ell _2\) and \(d_{cos}\)) in all four synthetic data sets, as shown in Fig. 6.
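The definition of \(O_5\) translates directly into a counting loop. The sketch below is a brute-force version that assumes an instance is not counted among its own nearest neighbors and uses Euclidean distance by default; the helper name `k_occurrences` and the synthetic data are illustrative only.

```python
import math
import random
from collections import Counter

def k_occurrences(data, k=5, dist=None):
    """O_k(x): number of instances y (y != x) that have x among their k nearest neighbors."""
    if dist is None:
        dist = math.dist  # Euclidean distance (Python 3.8+)
    counts = Counter()
    for j, y in enumerate(data):
        # Distances from y to every other instance, ties broken by index.
        others = sorted((dist(y, x), i) for i, x in enumerate(data) if i != j)
        for _, i in others[:k]:
            counts[i] += 1
    return [counts[i] for i in range(len(data))]

rng = random.Random(0)
data = [[rng.gauss(0.0, 1.0) for _ in range(50)] for _ in range(200)]
o5 = k_occurrences(data, k=5)
print("instances with O_5 = 0:", sum(1 for c in o5 if c == 0))
print("largest O_5:", max(o5))
```

The two printed quantities correspond to the statistics used to describe skewness in this section: the number of instances that never appear in any 5-NN set and the occurrence count of the most popular neighbor.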

The \(O_5\) distributions of all three measures became skewed when the number of dimensions was increased from \(M=10\) to \(M=200\) in both distributions. The \(O_5\) distributions of \(m_2\) in the uniform and normal distributions are similar for both \(M=10\) and \(M=200\), whereas those of \(\ell _2\) and \(d_{cos}\) are more skewed in the normal distribution than in the uniform distribution for both \(M=10\) and \(M=200\). The \(O_5\) distributions of \(m_2\) and \(\ell _2\) in the uniform distribution are also similar for both \(M=10\) and \(M=200\), because \(m_2\) is proportional to \(\ell _2\) under a uniform distribution (also reflected in Fig. 2a). In the case of the normal distribution with \(M=200\), the \(O_5\) distribution of \(m_2\) is less skewed than those of \(\ell _2\) and \(d_{cos}\). There are 361 and 348 (out of 1000) instances with \(O_5=0\) (i.e., instances that do not occur in the 5-NN set of any other instance) in the case of \(\ell _2\) and \(d_{cos}\), respectively, whereas there are only 161 instances with \(O_5=0\) in the case of \(m_2\). Similarly, the most popular nearest neighbors using \(\ell _2\) and \(d_{cos}\) have \(O_5=146\) and 152, respectively, whereas the most popular nearest neighbor using \(m_2\) has \(O_5=69\).

We observed similar behavior in many real-world data sets as well, where the \(O_5\) distribution of \(m_2\) is less skewed than those of \(\ell _2\) and \(d_{cos}\).

Appendix 3: Standard error

Table 10 shows the standard error of classification accuracies (in %) of k-NN classification (\(k=5\)) over a tenfold cross-validation (average classification accuracy is presented in Table 4 in Sect. 4.1).

Table 11 shows the standard error of precision at top 10 retrieved results (P@10) over N queries in content-based multimedia information retrieval (average P@10 is presented in Table 6 in Sect. 4.2).
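For reference, the standard error of a mean over n values can be sketched as below. It assumes the usual estimator, the sample standard deviation (with an n − 1 denominator) divided by \(\sqrt{n}\); whether the tables use exactly this estimator is an assumption. The per-fold accuracies are hypothetical.

```python
import math
import statistics

def standard_error(values):
    """Sample standard deviation (n - 1 denominator) divided by sqrt(n)."""
    n = len(values)
    return statistics.stdev(values) / math.sqrt(n)

# Hypothetical per-fold accuracies (in %) from a tenfold cross-validation.
fold_acc = [81.0, 79.5, 83.0, 80.0, 82.5, 78.0, 84.0, 80.5, 81.5, 79.0]
print(f"mean = {statistics.mean(fold_acc):.2f}, SE = {standard_error(fold_acc):.2f}")
```

The same function applies to the retrieval setting by passing the per-query P@10 values instead of per-fold accuracies.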

Appendix 4: Comparison with geometric distance measures after dimensionality reduction

Table 12 provides the average 5-NN classification accuracies over a tenfold cross-validation of \(d_{cos}, \ell _{0.5}\) and \(\ell _2\) before and after dimensionality reduction through PCA, along with those of \(m_{0.5}\) and \(m_2\) in the original space, in 16 out of 22 data sets with continuous only attributes. With PCA, the number of dimensions was reduced by projecting the data onto the lower-dimensional space defined by the principal components capturing 95% of the variance in the data. The principal components were computed by the eigendecomposition of the correlation matrix of the training data to ensure that the projection is robust to scale differences in the original dimensions. Note that PCA did not complete within 24 h in the remaining six data sets with \(M>5000\): New3s (26,832), Ohscal (11,465), Arcene (10,000), Wap (8460), R52 (7369) and NG20 (5489).
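The PCA step described above can be sketched with NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names `fit_pca_correlation` and `transform`, the handling of constant columns, and the synthetic data are all hypothetical.

```python
import numpy as np

def fit_pca_correlation(X_train, var_kept=0.95):
    """Fit PCA on the correlation matrix of X_train; keep components covering var_kept."""
    mean = X_train.mean(axis=0)
    std = X_train.std(axis=0, ddof=1)
    std[std == 0] = 1.0  # assumption: leave constant columns unscaled to avoid division by zero
    Z = (X_train - mean) / std
    corr = (Z.T @ Z) / (X_train.shape[0] - 1)  # correlation matrix of the training data
    eigvals, eigvecs = np.linalg.eigh(corr)    # symmetric input, eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1]          # reorder by decreasing explained variance
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    ratio = np.cumsum(eigvals) / eigvals.sum()
    n_comp = min(int(np.searchsorted(ratio, var_kept)) + 1, len(eigvals))
    return mean, std, eigvecs[:, :n_comp]

def transform(X, mean, std, components):
    """Standardize X with the training statistics and project onto the kept components."""
    return ((X - mean) / std) @ components

# Synthetic data with three underlying factors plus small noise (illustrative only).
rng = np.random.default_rng(0)
latent = rng.normal(size=(300, 3))
X = latent @ rng.normal(size=(3, 20)) + 0.05 * rng.normal(size=(300, 20))

mean, std, comps = fit_pca_correlation(X[:200])       # fit on the training split only
X_test_proj = transform(X[200:], mean, std, comps)    # reuse training statistics on test data
print(X.shape[1], "->", comps.shape[1], "dimensions")
```

Working from the correlation matrix is equivalent to standardizing each dimension before PCA, which is what makes the projection insensitive to scale differences between the original dimensions.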

Cite this article

Aryal, S., Ting, K.M., Washio, T. et al. Data-dependent dissimilarity measure: an effective alternative to geometric distance measures. Knowl Inf Syst 53, 479–506 (2017). https://doi.org/10.1007/s10115-017-1046-0
