Abstract

Twin support vector machine (TWSVM) is proved to be better than support vector machine (SVM) in most cases, since it only deals with two smaller quadratic programming problems, which leads to high computational efficiency. It is proposed to solve a single-task learning problem, just like many other machine learning algorithms. However, a learning task may have relationships with other tasks in many practical problems. Training those tasks independently may neglect the underlying information among all tasks, while such information may be useful to improve the overall performance. Inspired by the multi-task learning theory, we propose two novel multi-task \(\nu\)-TWSVMs. Both models inherit the merits of multi-task learning and \(\nu\)-TWSVM. Meanwhile, they overcome the shortcomings of other multi-task SVMs and multi-task TWSVMs. Experimental results on three benchmark datasets and two popular image datasets also clearly demonstrate the effectiveness of our methods.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

As a milestone in the development of support vector machine (SVM) [1], twin support vector machines (TWSVMs) attract much attention during recent years. It is first introduced in [2]. After a decade of research, there are many variants appeared, such as least squares twin support vector machine (LS-TWSVM) [3], twin bounded support vector machine (TBSVM) [4], robust twin support vector machine (robust-TWSVM) [5] and improved twin support vector machine (ITWSVM) [6]. A classical variant of TWSVM is \(\nu\)-TWSVM [7]. It is motivated by the classical \(\nu\)-SVM [8] and is proved to be more effective and efficient than \(\nu\)-SVM. Experiments on both synthetic and real datasets also demonstrate the effectiveness and efficiency of TWSVMs when compared to SVMs [9, 10]. TWSVM has also been applied into many machine learning areas, such as multi-view learning [11], domain adaptation [12] and clustering (TWSVC) [13]. Based on the PAC-Bayes theory, the generalization ability of TWSVM is analyzed [14]. Some novel safe screening rules are also proposed to speed up TWSVM without performance degradation [15, 16]. More advances of TWSVM can be found in recent survey [17, 18].

We should note that most machine learning algorithms belong to single-task learning, such as support vector machine, linear discriminant analysis, decision tree and so on. Many variants of TWSVM also belong to single-task learning. Actually, we usually train multiple tasks independently. In other words, one task is trained at one time. However, researchers point out that we may neglect the shared information among these tasks, which may be useful to improve the overall performance of these learning algorithms. The multi-task learning theory is thus proposed and has been studied extensively during the past two decades [19, 20]. It aims at improving the overall performance of several related tasks. Compared to single-task learning, it suggests that related tasks may share underlying knowledge, which should be learned jointly so as to take full advantages of the underlying information behind all tasks. Empirical works have demonstrated the effectiveness of multi-task learning and have also pointed out the mechanism of this machine learning paradigm [21].

A multi-task learning problem may be composed of several single-label learning problems regardless of how these tasks are related. One prerequisite is that all the samples in these tasks share the same feature space, which is also termed as homogeneous multi-task learning [22]. A special case of multi-task learning is multi-label learning, which studies the problem where each sample is associated with a set of labels simultaneously. The relation between these two machine learning paradigms has been clarified in [23]. Suppose the prediction of each label is a task, the multi-label learning problem can be transformed into a multi-task learning problem. By modeling the correlation of all tasks, the relations among multiple labels can be captured as well.

The research on multi-task learning in the early days can be found in [21, 24]. It mainly focused on neural network-based multi-task learning methods and also discussed k-nearest neighbors (k-NN) and decision tree-based multi-task learning algorithms. The generalization bound of multi-task learning was also discussed in early research [25]. At present, many multi-task learning methods appeared, such as Bayesian multi-task learning [26] and multi-task Gaussian process [27]. Recent survey on multi-task learning categorizes these methods into several types, including multi-task feature learning, multi-task relation learning, low-rank approach, dirty approach-based methods, task-clustering methods and other methods [22]. More surveys on multi-task learning can be found in [23, 28].

Recent success in multi-task support vector machines is interesting as well. The first practice is regularized multi-task learning (RMTL) [29,30,31], which suggests all tasks share a common separating hyperplane and belongs to mean-regularized multi-task learning. It has been used in human action recognition [32]. A generalized sequential minimal optimization (GSMO) is proposed for SVM \(+\) MTL [33]. Some other multi-task SVMs are also proposed recently, including multi-task least square support vector machine (MTLS-SVM) [34], multi-task proximal support vector machine (MTPSVM) [35] and multi-task asymmetric least squares support vector machine (MT-aLS-SVM) [36], all of which are based on a certain single-task learning method. Some other variants, such as multi-task infinite latent support vector machines (MT-iLSVM) [37], multi-task multi-class support vector machine (MTMCSVM) [38] and multi-view multi-task support vector machine (MVMTSVM) [39], are also inspiring. Based on SVM, an online multi-task learning algorithm is proposed for semantic concept detection in video [40]. A multi-task ranking SVM is proposed for image co-segmentation [41]. A least squares support vector machine for semi-supervised multi-task learning is also proposed recently [42].

In contrast to the extensive research on multi-task SVMs, few attention is focused on multi-task TWSVMs. Recent works on multi-task TWSVMs have directed multi-task twin support vector machine (DMTSVM) [43], multi-task centroid twin support vector machine (MTCTSVM) [44] and multi-task least squares twin support vector machine (MTLS-TWSVM) [45]. Compared to their single-task learning counterparts, these models show better generalization performance. They suppose all tasks share two mean hyperplanes, one for the positive and the other for the negative. Inspired by the multi-task learning, we propose two novel multi-task \(\nu\)-twin support vector machines (MT-\(\nu\)-TWSVMs) to take full advantage of the regularized multi-task learning and \(\nu\)-TWSVM. Both models inherit the merits of \(\nu\)-TWSVM and multi-task learning and overcome the shortage of TWSVM, DMTSVM and MTCTSVM. Thus, our model can perform better than DMTSVM and MTCTSVM. The main contributions of our paper are as follows:

-

1.

We propose two novel multi-task \(\nu\)-twin support vector machines based on different assumptions. They are natural extensions of \(\nu\)-TWSVM in multi-task setting.

-

2.

Both models inherit the merits of \(\nu\)-TWSVM. The fraction of support vectors is thus easier to control in our models than other multi-task SVMs and TWSVMs.

-

3.

The task relation is easier to control in our models and more flexible than other methods.

-

4.

Our models achieve better performance than other multi-task SVMs and TWSVMs.

The remainder of this paper is organized as follows. After a brief review of \(\nu\)-TWSVM and DMTSVM in Sect. 2, we give a detailed derivation of the proposed MT-\(\nu\)-TWSVMs in Sects. 3 and 4. Analysis of algorithms is shown in Sect. 5. The numerical experimental results are shown in Sect. 6. Finally, we show the conclusions and future work in Sect. 7.

2 Related work

Here we introduce \(\nu\)-twin support vector machine (\(\nu\)-TWSVM) and the primal multi-task twin support vector machine (DMTSVM). It would be better to clarify the original inspiration of these methods, since they lay a solid foundation for our proposed methods.

2.1 \(\nu\)-Twin support vector machine

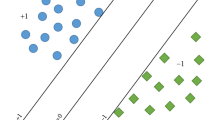

For a standard TWSVM model, it aims at finding two nonparallel hyperplanes rather than one hyperplane in SVM. It is also proved to be faster than SVM for four times on training large datasets. The \(\nu\)-TWSVM is similar to TWSVM. Suppose \(X_p\) represents all the positive samples, and \(X_n\) stands for the negative. For simplicity, denote \(A=[X_p\,e]\), \(B=[X_n\,e]\), \(u=[w_1, b_1]^\top\) and \(v=[w_2, b_2]^\top\), and this model generates two nonparallel hyperplanes by solving the following problems,

and

where \(\nu _1\) and \(\nu _2\) are positive parameters. \(l_{+}\) and \(l_{-}\) denote the numbers of positive samples and negative samples, respectively. Both \(e_{1}\) and \(e_{2}\) are vectors of ones of appropriate dimensions. Then, a new point \(x \in R^n\) is assigned to class \(i (i=+1,-1)\) by

This model is modeled after the \(\nu\)-SVM. It can adjust the fraction of support vectors and is proved to be more efficient and effective than traditional SVMs and TWSVMs. However, just like many other single-task learning models, it is not designed to deal with the commonality and individuality of multiple tasks.

2.2 Multi-task twin support vector machine

This model introduces TWSVM into multi-task learning setting, is modeled after the RMTL and also is called direct multi-task twin support vector machine (DMTSVM) [43], unlike multi-task support vector machines, which supposes all tasks share two mean hyperplanes. Suppose the positive (negative) samples in the tth task are represented by \(X_{pt}\)(\(X_{nt}\)). Meanwhile, \(X_p\) represents the positive samples, while \(X_n\) stands for the negative. Now, we let

where \(e_t\) and e are one vectors of appropriate dimensions.

Suppose there are two mean hyperplanes \(u=[w_1, b_1]^\top\) and \(v=[w_2, b_2]^\top\) shared by all tasks, the two hyperplanes in the tth task are \((u+u_t)=[w_{1t},b_{1t}]^\top\) and \((v+v_t)=[w_{2t},b_{2t}]^\top\), respectively. The bias between the hyperplanes in the tth task and the common hyperplanes u and v is captured by \(u_t\) and \(v_t\). Then, the primal problem of DMTSVM is illustrated as follows:

and

where \(t \in \{1,2,\ldots ,T\}\), \(e_{1t}\) and \(e_{2t}\) are one vectors. \(c_1\) and \(c_2\) are nonnegative trade-off parameters. The relationships of all tasks can be adjusted by parameters \(\rho _t\) and \(\lambda _t\). Both \(p_t\) and \(q_t\) are slack variables. Then all tasks can be modeled unrelated when \(\rho _t\rightarrow 0\) and \(\lambda _t\rightarrow 0\) simultaneously. On the contrary, these models will be learned the same when \(\rho _t\rightarrow \infty\) and \(\lambda _t\rightarrow \infty\). Finally, the label of a new point x in the tth task can be determined by

3 Multi-task \(\nu\)-twin support vector machine I

3.1 Linear case

In this section, based on the regularized multi-task learning and the \(\nu\)-TWSVM, we propose a primal multi-task learning problems as follows:

and

where \(t \in \{1,2,\ldots ,T\}\), \(l_+\) and \(l_-\) are the numbers of positive and negative samples, separately. Note that, \(w_{rt}(r\in\{+1,-1\})\) are the weight vectors of the hyperplanes for each task. Here, \(u_0(v_0)\) and \(u_t(v_t)\) indicate the commonality and personality of each task, separately. The difference between all tasks is controlled by \(\mu\). However, we take ideas from \(\nu\)-TWSVM. Its merits may be different from the DMTSVM and MTCTSVM. Two additional variables \(\rho _\pm\) in (7) and (8) need to be optimized. Before analyzing the effect of \(\nu\), we take the dual problem of (7) and (8). The Lagrangian function of problem (7) is given by

where \(\alpha _t\), \(\beta _t\) and \(\eta\) are the Lagrangian multipliers. The Karush–Kuhn–Tucker (KKT) conditions are given below

where \(\alpha =[\alpha _1^\top ,\alpha _2^\top ,\ldots ,\alpha _T^\top ]^\top\) and \(p=[p_1^\top ,p_2^\top ,\ldots ,p_T^\top ]^\top\). Then, we have

Then, substituting \(u_0\), \(u_t\) into function (9)

and using below equations

the dual problem of (7) can be simplified as

Similarly, we introduce below equations

The dual problem of (8) can be written as

Finally, the label of a new sample x in the tth task can be determined by

3.2 Nonlinear case

Since linear classifier may not be appropriate for linear nonseparable cases, the kernel trick can be used in such case. Now, we introduce the kernel function \(K(\cdot )\) and define

where X represents training samples from all tasks, i.e., \(X=[A_1^\top ,B_1^\top ,A_2^\top ,B_2^\top ,\ldots ,A_T^\top ,B_T^\top ]^\top\). By substituting A and B in (7) and (8) with E and F, respectively, we can obtain the kernel version of this model. The primal problems of the nonlinear model are

and

Then the corresponding decision function of the tth task is

4 Multi-task \(\nu\)-twin support vector machine II

Although it is easy to understand MT-\(\nu\)-TWSVM I, this model may have some disadvantages. Because the range of parameters \(\mu _1\) (\(\mu _2\)) is \((0,+\,\infty )\), it is hard for us to adjust the relationship among multiple tasks. Thus we propose another multi-task \(\nu\)-TWSVM to address this problem in this section.

4.1 Linear case

Suppose the hyperplane of the tth task can be expressed as a linear convex combination of the common vectors \(u_0(v_0)\) and the task specific vectors \(u_t(v_t)\), we propose another problem as follows:

and

where \(t \in \{1,2,\ldots ,T\}\).

Similar to DMTSVM, the differences between all tasks are controlled by parameters \(\mu _1\) and \(\mu _2\). But it is different from DMTSVM, that is, the task relation is captured by a linear convex combination of the common hyperplane \(u_0\)(\(v_0\)) and a specific vector \(u_t\)(\(v_t\)) for the positive (negative). If we set \(\mu _1=0\) and \(\mu _2=0\), it means that \(u_0\) and \(v_0\) have no effect on the tth task. Then T completely different tasks will be learned, and the hyperplanes of the tth task will be far away from the common hyperplanes. When \(\mu _1=1\) and \(\mu _2=1\), our model reduces to an enlarged ν-TWSVM, and it means all tasks have the same two hyperplanes. Therefore, the difference among all tasks can be easily captured by two parameters \(\mu _1\) and \(\mu _2\). It is more flexible than DMTSVM and MTCTSVM.

However, both models are based on \(\nu\)-TWSVM. Their merits may be different from the DMTSVM and MTCTSVM. Two additional variables \(\rho _\pm\) in (21) and (22) need to be optimized. Before analyzing the effect of \(\nu\), we take the dual problem of (21). The Lagrangian function of problem (21) is given by

Taking the partial derivatives of Lagrangian function (23) with respect to (\(w_0\), \(w_t\), \(\rho _+\), p), we obtain the following KKT conditions:

where \(\alpha =[\alpha _1^\top ,\alpha _2^\top ,\ldots ,\alpha _T^\top ]^\top\).

Then, we have the following equalities with respect to primal problem variables (\(u_0\), \(u_t\))

Then, we substitute \(u_0\) and \(u_t\) into function (23)

The dual problem of (21) can be simplified as

Similarly, the dual problem of (22) can be written as

Finally, the label of a new sample x in the tth task can be determined by

4.2 Nonlinear case

A linear classifier may not be suitable for training samples that are linear inseparable. The kernel trick can be used to deal with such problems. Similarly, we introduce the kernel function \(K(\cdot )\) and define

where X represents training samples from all tasks, i.e., \(X=[A_1^\top ,B_1^\top ,A_2^\top ,B_2^\top ,\ldots ,A_T^\top ,B_T^\top ]^\top\). The \(K(\cdot )\) is a kernel function. The primal problems of the nonlinear case are given as

and

Then the decision function of the tth task is

5 Analysis of algorithms

5.1 Equivalent form of model

The dual problems of MT-\(\nu\)-TWSVM are similar to that of \(\nu\)-TWSVM. The difference lies in the Hessian matrix. Besides, these models share similar features with \(\nu\)-TWSVM. Similar to \(\nu\)-SVM and \(\nu\)-TWSVM, to compute \(\rho _\pm\), we select samples \(x_i\) (or \(x_j\)) with \(0< \alpha _i < \frac{1}{l_-}\) (or \(0< \gamma_j < \frac{1}{l_+}\)) from all tasks, which means that \(p_t=0\) (or \(q_t=0\)) and \(w_1^\top x_j+b_+=-\rho _+\)(or \(w_2^\top x_i+b_2=\rho _-\)). According to the KKT conditions, the \(\rho _\pm\) can be calculated by

where \(N_{tn}\) and \(N_{tp}\) represent the number of negative and positive samples satisfying above constraints in the tth task.

Here we show an equivalent form of QPP (14). However, the optimal value of parameter \(\rho _+\) (\(\rho _-\)) is actually larger than zero. According to previous conclusions, we have the following Proposition 1.

Proposition 1

QPP (14) can be transformed into the following QPP.

The difference between (14) and (34) lies in the second constraint. The second inequality constraint\(e_2^\top \alpha \ge \nu _1\)can be transformed into an equality constraint\(e_2^\top \alpha = \nu _1\).

Proof

According to the KKT conditions \(\eta \rho _+=0\) and the assumption \(\rho _+ > 0\), we have that \(\eta =0\). Then we obtain the equality constraint \(e_2^\top \alpha = \nu _1\) by substituting \(\eta\) into (10). Thus we prove Proposition 1.

Similar to \(\nu\)-TWSVM, dual problems (14) and (15) of MT-\(\nu\) TWSVM I can be seen as minimizing the generalized Mahalanobis norm. This norm is defined as \(\Vert u\Vert _{GM}=\sqrt{u^\top Su}\). Here, we set \(S=Q+\frac{T}{\mu _1}P\), and problem (14) can be written as a standard generalized Mahalanobis norm minimizing problem as follows,

where \(\alpha _m=\frac{e_2}{\nu_1l_-}\). Further analysis of this property can be found in [7], and the only difference lies in the Hessian matrix. Similar conclusions can be obtained for QPP (15) as well. The MT-\(\nu\)-TWSVM II also has these features. \(\square\)

5.2 Property of parameter \(\nu\)

As in \(\nu\)-TWSVM, parameter \(\nu\) in our multi-task \(\nu\)-TWSVMs also has these properties. They are discussed in the following propositions.

Proposition 2

Suppose we run both MT-\(\nu\)-TWSVM I and II withnsamples on dataset\(\mathcal {D}\), obtaining the result that\(\rho _\pm \ge 0\). Then

-

1.

\(\nu _2\) (or\(\nu _1\)) is an upper bound on the fraction of positive (or negative) margin errors of the common task.

-

2.

\(\nu _2\) (or\(\nu _1\)) is a lower bound on the fraction of positive (or negative) support vectors of the common task.

Proof

The proof of Proposition 2 is similar to that of Proposition 5 in [8]. These results can be extended to the nonlinear case by introducing the kernel function. \(\square\)

5.3 Complexity analysis

We analyze the training time complexity of our proposed algorithms. Clearly, both models need solving two smaller quadratic programming problems. It is the same as training original single-task learning \(\nu\)-TWSVM on all the samples in these tasks. Although one may notice that there is \(2T+2\) times matrix inversion in a training process, we note that it can be better optimized by carefully organizing the training procedure of the grid process. It will not affect the overall time complexity theoretically. Therefore, the training time complexity of our proposed algorithms is the same as that of \(\nu\)-TWSVM. Suppose the number of training samples in all the tasks is l, the time complexity of our algorithms is also \(O(\frac{l^3}{4})\).

According to the analysis, we know that training such a multi-task learning model needs additional computation when compared to training a unify model on all the samples. But in one aspect, the personality and commonality can be modeled to improve the overall performance. In other aspect, the training tasks could help each other in a multi-task learning scenario. This is what a single-task learning method cannot achieve practically.

6 Numerical experiments

In this section, we present experimental results on both single-task learning methods and multi-task learning algorithms. The single-task learning algorithms are consisted of SVM, PSVM, LSSVM, TWSVM, LSTWSVM and \(\nu\)-TWSVM, while the multi-task learning methods are MTPSVM, MTLS-SVM, MTL-aLS-SVM, DMTSVM, MCTSVM and our proposed MT-\(\nu\)-TWSVM I and II. The numerical experiments are first conducted on three benchmark datasets. To further evaluate these methods, we have also made comparisons on popular Caltech 101 and 256 datasets.

For each algorithm, all parameters, such as \(\lambda\), \(\gamma\) and \(\rho\), are turned by grid-search strategy. Without specification, all parameters are selected from set \(\{2^i|i=-3,-2,\ldots ,8\}\). The parameter p in MTL-aLS-SVM is selected from set \(\{0.82, 0.86,0.90,0.95\}\). The parameter \(\nu\) in \(\nu\)-TWSVM and MT-\(\nu\)-TWSVMs is selected from set \(\{0.1,0.2,\ldots ,1.0\}\). The parameter \(\mu\) in MT-\(\nu\)-TWSVM II is selected from set \(\{0,0.1,\ldots ,0.9,1\}\). Then, we use fivefold cross-validation to obtain average performance. Finally, all experiments are conducted in MATLAB R2018b on Windows 8.1 running on a PC with system configuration of Intel(R) Core(TM) i3-6100 CPU (3.90 GHz) with 12.00 GB of RAM.

We note one special operation we had done to handle multi-task learning problems when conducting simulations. Since training a group of unrelated tasks may have negative impact on the performance of our proposed multi-task learning models, all the training tasks should be conceptually positive related. In our work, the training tasks satisfy such requirement to a certain extent. Thus it can better utilize the generalization ability of our proposed multi-task learning methods.

6.1 Benchmark datasets

In this subsection, we conduct experiments on three datasets. The general information is show in (Table 1). The details of these datasets are as follows.

Monk This dataset comes from a first international comparison of learning algorithms and contains three Monk’s problems corresponding to three tasks [46]. The domains of all tasks are the same. Thus, these tasks can be seen as related. We select different number of samples to test these methods.

Emotions This is a multi-label dataset in Mulan library [47] and is used to recognize different emotions. There are six kinds of labels for all samples. Each sample may have more than one label (or emotion). Suppose the recognition tasks of different emotions share similar features and can be seen as related tasks. We cast it into a multi-task classification problem, and each task is to recognize one type of emotion. We select 100 to 200 samples from this dataset to evaluate these multi-task learning algorithms.

Flags This is a multi-label dataset in Mulan library [47] as well. Each sample may have seven labels. Since the recognition task of each label can be seen as related. Thus, we also consider it as a multi-task learning problem. Then we select different number of samples from this dataset to compare the performance of these multi-task learning methods.

Finally, we use Gaussian kernel function on Monk dataset only. But considering the feature mapping of the Gaussian kernel, the data could be more linear separable in high-dimensional space, causing the classification performance of each model cannot be easily distinguished on limited testing samples. Therefore, a polynomial kernel function is applied in our experiments, i.e.,

In our experiments, we set the kernel parameter \(c=1\) and \(d=2\). By the kernel trick, the input data are mapped into a high-dimensional feature space. In the feature space, a linear classifier is implemented which corresponds to a nonlinear separating surface in the input space.

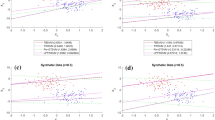

Figures 1 and 2 show the performance comparison on Monk dataset with RBF kernel function. We can learn from them that our algorithms clearly outperform these single-task learning algorithms. The performance of each algorithm increases when increasing the size of each task. With the task size increases, the performance gap between single-task learning methods and our methods decreases. It can be explained as follows. Since our models train all tasks simultaneously, it can take advantage of the underlying information among all tasks when there are few samples in each task. The performance of single-task learning algorithms also becomes better when increasing the number of samples. Thus our multi-task learning methods are suitable for training small tasks. Meanwhile, the performance of our models in Fig. 2 is clearly better than other multi-task learning algorithms when there are few samples in each task. With the increase in task size, the performance of each algorithm also increases. In addition, we note that the average training time of these four multi-task TWSVMs is almost the same, while the training time of MTPSVM and MTLS-SVM is lower than the other algorithms.

In the following, the performance comparison on Monk dataset with polynomial kernel function is shown in Figs. 3 and 4. We point out that our algorithms also outperform other single tasks at varying task size. In addition, since our methods train all tasks simultaneously, the training time is surely larger than those single-task learning algorithms. But we also note that the training time of SVM, TWSVM and \(\nu\)-TWSVM is close to our methods when there are few training samples in each task, since these algorithms need to solve one or two quadratic programming problems. In addition, our algorithms also perform better than other multi-task learning methods at varying task size in terms of the mean accuracy. Meanwhile, the average training time of the last four algorithms is almost the same. The training time of PSVM, LSSVM and their multi-task leaning extensions MTPSVM and MTLS-SVM is the lowest among all algorithms. Finally, our algorithms perform better than these single-task learning and multi-task learning methods on Monk dataset in our experimental results in terms of the mean accuracy.

The experimental results on Flags dataset between multi-task learning algorithms with polynomial kernel function are illustrated in Fig. 5. Our algorithms perform better than other multi-task learning algorithms when the number of samples in each task is larger than 100. Since there are 7 tasks in this dataset, we cannot suppose these tasks are really correlated. The performance of these multi-task learning algorithms may not be so well. But our algorithms still perform better than these three multi-task SVMs in terms of mean accuracy. In addition, the training time of MPTSVM and MTLS-SVM is clearly lower than other algorithms. However these two algorithms just solve a larger linear equation problem, while our algorithms need to solve two smaller quadratic programming problems and several small matrix inversions. The computational costs of our methods are naturally high.

The comparison between multi-task learning algorithms on Emotions dataset with polynomial kernel function is shown in Fig. 6. In this group of experiments, our algorithms perform better than other similar methods in terms of the mean accuracy. The MTPSVM and MTLS-SVM are faster than other methods. The average training time of the last five algorithms is almost the same.

6.2 Image datasets

To further evaluate the effectiveness of MT-\(\nu\)-TWSVMs, we conduct experiments on two image datasets. The images are selected from the Caltech 101 [48, 49] and the Caltech 256 datasets [50], which have been widely used in computer vision researches. There are 102 categories in Caltech 101 dataset, and each category has more than 50 samples. Each image has about \(300\times 200\) pixels [48]. We select about 50 samples from each category in our experiments. Caltech 256 dataset has 256 categories of images in total, such as mammals, birds, insects and flowers. There is a clutter category in this dataset, which can be seen as negative samples. The number of images in each category ranges from 80 to 827. We select no more than 80 samples from each category. Then, we manually cluster these images into 15 main categories according to the hierarchy of category, each category contains three to ten classes of images. Some images are shown in Fig. 7. We note that the images in one column have similar features. But each row belongs to different subclass. Therefore, the recognition tasks of different subclasses belonging to the same category can be regarded as a group of related tasks. Then, we train these tasks simultaneously to evaluate these multi-task learning methods.

As a classical image feature extractor, scale invariant feature transform (SIFT) algorithm [51] is widely used in many computer vision researches before [52,53,54]. Until few years ago, hand crafted features such as SIFT represented the state of the art for visual content analysis. In particular, SIFT is widely regarded as the gold standard in the context of local feature extraction [55]. In this paper, a fast and dense version of SIFT, called dense-SIFTFootnote 1, is used in accompany with Bag of Visual Words (BoVW) method to obtain the vector representation of the images. It is a fast algorithm for the calculation of a large number of SIFT descriptors of densely sampled features of the same scale and orientation.Footnote 2 It not only runs faster than original SIFT feature extractor, but also can generate more feature descriptors. Thus it can provide more information of an image. It is especially important in building the feature vector of an image with BoVW method. The feature vector of a preprocessed image is 1000 dimensions in our experiments. Afterward, the dimensions of those feature vectors are reduced with PCA to capture \(97\%\) of the variance. Thus, to reduce the training complexity, the task is to recognize those samples in each subclass. Finally, considering the high dimensional of samples, all experiments on these two datasets are conducted with a polynomial kernel function as described in previous experiments.

Figures 8 and 9 illustrate our experimental results on the Caltech 101 and Caltech 256 datasets. We find that our methods perform better than MTPSVM, MTLS-SVM and MTL-aLS-SVM on four categories in Caltech 101 dataset. However, from the training time, multi-task TWSVMs are almost the same. But our methods outperform the DMTSVM and MTCTSVM. In comparison, the MTL-aLS-SVM performs badly on these two metrics in most cases. In addition, our algorithms perform better than other two multi-task TWSVMs on seven categories in Caltech 256 dataset. Our algorithms also perform better than MTLS-SVM on six categories. We notice that the training time of the last five algorithms is almost the same in this group of experiments. However, they all need to solve one or two quadratic programming problems instead of one larger linear equation. The MTPSVM and MTLS-SVM perform well in terms of the average training time. The reason has been clarified in the previous section. Although the feature vector has been reduced to a low dimension, the dimensions are still high when compared to the number of all sample in most cases. We point out that the number of features is about 300–600 dimensions in these two groups of experiments. In contrast to previous results on benchmark datasets, the ability of our algorithms in dealing with such case may not be so well.

After showing our experimental results, we then have an overview of the accuracy levels other researchers reported when they used Caltech 101 and 256 datasets in Table 2. As we can see, the SVM is applied with a specific feature extractor to evaluate the performance in these researches. We note that recently proposed ResFeats-152 \(+\) PCA-SVM achieves the best accuracy on both datasets. It uses deep neural network as an image feature extractor and then feeds the preprocessed feature vectors into the SVM. In contrast, the other methods are manually designed feature extractors. The Pyramid SIFT is a feature extractor based on SIFT. In a word, the main difference of above researches on Caltech datasets lies in the feature extraction method. Compared to previous results, the accuracy level of our methods is comparable to the other method on Caltech 101 dataset. Meanwhile, the accuracy level of our methods is better than the above methods in most cases. The comparison shows the effectiveness of our methods on Caltech datasets.

Finally, to verify our hypothesis that recognizing images belongs to different subclasses but in a common category can be trained simultaneously, Fig. 10 shows the trend of mean accuracy with respect to the parameters \(\mu\) and \(\nu\) around the best parameters when the kernel parameters are fixed. The raw data come from the result of MT-\(\nu\)-TWSVM II on Caltech 256 image dataset with a RBF kernel function. According to this figure, we can directly know whether the performance is largely affected by the choice of \(\mu\) or \(\nu\). This figure indicates that the performance has strong correlation with the value of \(\mu\) rather than \(\nu\). Our model achieves the highest accuracy at a larger value of \(\mu\). According to the previous analysis of our model, it means all tasks share two mean hyperplanes and have high correlation. Thus, we should choose a larger parameter \(\mu\) in the range of [0, 1]. This result is in consistent with our hypothesis. It means the tasks selected from Caltech 256 dataset are related and should be learned jointly rather than separately. However, this is not the case on all the datasets. But we can find the relationships of all tasks according to this figure. Therefore, it provides a better way to choose the best parameters.

7 Conclusion and future work

In this paper, we propose two novel multi-task classifiers, termed as MT-\(\nu\)-TWSVM I and II, which are natural extension of \(\nu\)-TWSVM in multi-task learning. Both models inherit the merits of \(\nu\)-TWSVM and multi-task learning. Our analysis shows that both models share similar properties with \(\nu\)-TWSVM. The main difference lies in the two Hessian matrices, which model the personality and commonality of all tasks. Unlike original \(\nu\)-TWSVM, it is the fraction of support vectors of the common task that can be bounded by parameter \(\nu\). It overcomes the shortage of DMTSVM and MTCTSVM. The multi-task relationship can be modeled from completely irrelevant to fully relevant in the second model. Therefore, it is more flexible. Experimental results on three benchmark datasets and two image datasets demonstrate the effectiveness and efficiency of our algorithms. Meanwhile, the accuracy levels other researchers obtained on these two image datasets are also discussed. This comparison also clearly confirms that our proposed methods are powerful and consistently outperform the other image classification algorithms.

Finally, our future work will focus on speeding up the training process of multi-task SVM and TWSVMs on large datasets.

References

Burges CJ (1998) A tutorial on support vector machines for pattern recognition. Data Min Knowl Discov 2(2):121–167

Jayadeva, Khemchandani R, Chandra S (2007) Twin support vector machines for pattern classification. IEEE Trans Pattern Anal Mach Intell 29(5):905–910

Kumar MA, Gopal M (2009) Least squares twin support vector machines for pattern classification. Expert Syst Appl 36(4):7535–7543

Shao YH, Zhang CH, Wang XB, Deng NY (2011) Improvements on twin support vector machines. IEEE Trans Neural Netw 22(6):962–968

Qi Z, Tian Y, Shi Y (2013) Robust twin support vector machine for pattern classification. Pattern Recogn 46(1):305–316

Tian Y, Ju X, Qi Z, Shi Y (2014) Improved twin support vector machine. Sci China Math 57(2):417–432

Peng X (2010) A \(\nu\)-twin support vector machine (\(\nu\)-TSVM) classifier and its geometric algorithms. Inf Sci 180(20):3863–3875

Schlkopf B, Smola AJ, Williamson RC, Bartlett PL (2000) New support vector algorithms. Neural Comput 12(5):1207–1245

Xu Y, Yang Z, Pan X (2017) A novel twin support-vector machine with pinball loss. IEEE Trans Neural Netw 28(2):359–370

Xu Y, Li X, Pan X, Yang Z (2018) Asymmetric \(\nu\)-twin support vector regression. Neural Comput Appl 30(12):3799–3814

Xie X (2018) Regularized multi-view least squares twin support vector machines. Appl Intell 48(9):3108–3115

Xie X, Sun S, Chen H, Qian J (2018) Domain adaptation with twin support vector machines. Neural Process Lett 48:1213–1226

Wang Z, Shao YH, Bai L, Deng NY (2015) Twin support vector machine for clustering. IEEE Trans Neural Netw 26(10):2583–2588

Xie X (2017) Pac-bayes bounds for twin support vector machines. Neurocomputing 234(19):137–143

Pan X, Yang Z, Xu Y, Wang L (2018) Safe screening rules for accelerating twin support vector machine classification. IEEE Trans Neural Netw 29(5):1876–1887

Wang H, Xu Y (2018) Scaling up twin support vector regression with safe screening rule. Inf Sci 465:174–190

Ding S, Zhang N, Zhang X, Wu F (2017) Twin support vector machine: theory, algorithm and applications. Neural Comput Appl 28(11):3119–3130

Ding S, Zhao X, Zhang J, Zhang X, Xue Y (2019) A review on multi-class TWSVM. Artif Intell Rev 52(2):775–801

Qi K, Liu W, Yang C, Guan Q, Wu H (2017) Multi-task joint sparse and low-rank representation for the scene classification of high-resolution remote sensing image. Remote Sens 9(1):10

Jeong JY, Jun CH (2018) Variable selection and task grouping for multi-task learning. In: Proceedings of the 24th ACM SIGKDD international conference on knowledge discovery and data mining, pp 1589–1598

Caruana R (1998) Multitask learning. In: Learning to learn, pp 95–133

Zhang Y, Yang Q (2017) A survey on multi-task learning. arXiv preprint arXiv:1707.08114

Thung KH, Wee CY (2018) A brief review on multi-task learning. Multimed Tools Appl 77(22):29705–29725

Caruana R (1993) Multitask learning: a knowledge-based source of inductive bias. In: Proceedings of the tenth international conference on machine learning (ICML), pp 41–48

Baxter J (2000) A model of inductive bias learning. J Artif Intell Res 12(1):149–198

Bakker B, Heskes T (2003) Task clustering and gating for bayesian multitask learning. J Mach Learn Res 4:83–99

Yu K, Tresp V, Schwaighofer A (2005) Learning Gaussian processes from multiple tasks. In: Proceedings of the 22nd international conference on machine learning (ICML), pp 1012–1019

Zhang Y, Yang Q (2018) An overview of multi-task learning. Natl Sci Rev 5(1):30–43

Evgeniou T, Pontil M (2004) Regularized multi-task learning. In: Proceedings of the tenth ACM SIGKDD international conference on knowledge discovery and data mining, pp 109–117

Jebara T (2004) Multi-task feature and kernel selection for SVMs. In: Proceedings of the 21st international conference on machine learning (ICML), p 55

Micchelli CA, Pontil M (2004) Kernels for multi-task learning. In: Advances in neural information processing systems (NIPS), pp 921–928

Liu A, Xu N, Su Y, Lin H, Hao T, Yang Z (2015) Single/multi-view human action recognition via regularized multi-task learning. Neurocomputing 151:544–553

Cai F, Cherkassky VS (2012) Generalized SMO algorithm for SVM-based multitask learning. IEEE Trans Neural Netw 23(6):997–1003

Xu S, An X, Qiao X, Zhu L (2014) Multi-task least-squares support vector machines. Multimed Tools Appl 71(2):699–715

Li Y, Tian X, Song M, Tao D (2015) Multi-task proximal support vector machine. Pattern Recogn 48(10):3249–3257

Lu L, Lin Q, Pei H, Zhong P (2018) The ALS-SVM based multi-task learning classifiers. Appl Intell 48(8):2393–2407

Zhu J, Chen N, Xing EP (2011) Infinite latent SVM for classification and multi-task learning. In: Advances in neural information processing systems (NIPS), vol 24, pp 1620–1628

Ji Y, Sun S, Lu Y (2012) Multitask multiclass privileged information support vector machines. In: Proceedings of the 21st international conference on pattern recognition (ICPR), pp 2323–2326

Zhang J, He Y, Tang J (2018) Multi-view multi-task support vector machine. In: International conference on computational science (ICCS), pp 419–428

Markatopoulou F, Mezaris V, Patras I (2016) Online multi-task learning for semantic concept detection in video. In: IEEE international conference on image processing (ICIP), pp 186–190

Liang X, Zhu L, Huang D (2017) Multi-task ranking SVM for image cosegmentation. Neurocomputing 247:126–136

Jia X, Wang S, Yang Y (2018) Least-squares support vector machine for semi-supervised multi-tasking. In: IEEE 16th international conference on software engineering research, management and applications (SERA), pp 79–86

Xie X, Sun S (2012) Multitask twin support vector machines. In: Proceedings of the 19th international conference on neural information processing (ICONIP), pp 341–348

Xie X, Sun S (2015) Multitask centroid twin support vector machines. Neurocomputing 149:1085–1091

Mei B, Xu Y (2019) Multi-task least squares twin support vector machine for classification. Neurocomputing 338:26–33

Dua D, Graff C (2019) UCI machine learning repository. University of California, School of Information and Computer Science, Irvine. http://archive.ics.uci.edu/ml

Tsoumakas G, Spyromitros-Xioufis E, Vilcek J, Vlahavas I (2011) Mulan: a Java library for multi-label learning. J Mach Learn Res 12:2411–2414

Li F, Fergus R, Perona P (2004) Learning generative visual models from few training examples: an incremental bayesian approach tested on 101 object categories. In: 2004 Conference on computer vision and pattern recognition workshop, pp 178–178

Li F, Fergus R, Perona P (2006) One-shot learning of object categories. IEEE Trans Pattern Anal Mach Intell 28(4):594–611

Griffin G, Holub AD, Perona P. The Caltech 256. Caltech Technical Report

Lowe D (2004) Distinctive image features from scale-invariant keypoints. Int J Comput Vis 60(2):91–110

Li F, Fergus P (2005) A Bayesian hierarchical model for learning natural scene categories. In: IEEE computer society conference on computer vision and pattern recognition (CVPR), vol 2, pp 524–531

Ehab S, Qasaimeh M (2017) Recent advances in features extraction and description algorithms: a comprehensive survey. In: IEEE international conference on industrial technology (ICIT), pp 1059–63

Zheng L, Yang Y, Tian Q (2018) SIFT meets CNN: a decade survey of instance retrieval. IEEE Trans Pattern Anal Mach Intell 40(5):1224–1244

Baroffio L, Redondi A, Tagliasacchi M, Tubaro S (2016) A survey on compact features for visual content analysis. APSIPA Trans Signal Inf Process 5:e13

Seidenari L, Serra G, Bagdanov AD, Bimbo AD (2014) Local pyramidal descriptors for image recognition. IEEE Trans Pattern Anal Mach Intell 36(5):1033–1040

Satpathy A, Jiang X, Eng H-L (2014) LBP-based edge-texture features for object recognition. IEEE Trans Image Process 23(5):1953–1964

Kim J, Tahboub K, Delp EJ (2017) Spatial pyramid alignment for sparse coding based object classification. In: 2017 IEEE international conference on image processing (ICIP), pp 1950–1954

Mahmood A, Bennamoun M, An S, Sohel FA (2017) Resfeats: residual network based features for image classification. In 2017 IEEE international conference on image processing (ICIP), pp 1597–1601

Pan Y, Xia Y, Song Y, Cai W (2018) Locality constrained encoding of frequency and spatial information for image classification. Multimed Tools Appl 77(19):24891–24907

Acknowledgements

The authors gratefully acknowledge the helpful comments and suggestions of the reviewers, which have improved the presentation. This work was supported in part by the National Natural Science Foundation of China (No. 11671010), Beijing Natural Science Foundation (No. 4172035) and Chinese People’s Liberation Army General Hospital (No. 2017MBD-002).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of Interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Mei, B., Xu, Y. Multi-task \(\nu\)-twin support vector machines. Neural Comput & Applic 32, 11329–11342 (2020). https://doi.org/10.1007/s00521-019-04628-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00521-019-04628-5