Abstract

Twin support vector machines (TWSVMs) have been shown to be effective classifiers for a range of pattern classification tasks. However, the TWSVM formulation suffers from a range of shortcomings: (i) TWSVM uses hinge loss function which renders it sensitive to dataset outliers (noise sensitivity). (ii) It requires a matrix inversion calculation in the Wolfe-dual formulation which is intractable for datasets with large numbers of features/samples. (iii) TWSVM minimizes the empirical risk instead of the structural risk in its formulation with the consequent risk of overfitting. This paper proposes a novel large scale pinball twin support vector machines (LPTWSVM) to address these shortcomings. The proposed LPTWSVM model firstly utilizes the pinball loss function to achieve a high level of noise insensitivity, especially in relation to data with substantial feature noise. Secondly, and most significantly, the proposed LPTWSVM formulation eliminates the need to calculate inverse matrices in the dual problem (which apart from being very computationally demanding may not be possible due to matrix singularity). Further, LPTWSVM does not employ kernel-generated surfaces for the non-linear case, instead using the kernel trick directly; this ensures that the proposed LPTWSVM is a fully modular kernel approach in contrast to the original TWSVM. Lastly, structural risk is explicitly minimized in LPTWSVM with consequent improvement in classification accuracy (we explicitly analyze the properties of classification accuracy and noise insensitivity of the proposed LPTWSVM). Experiments on benchmark datasets show that the proposed LPTWSVM model may be effectively deployed on large datasets and that it exhibits similar or better performance on most datasets in comparison to relevant baseline methods.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Support vector machines (SVMs), introduced by Vapnik and co-workers (Cortes & Vapnik, (1995; Vapnik, 1999), are a class of highly effective machine learning models for pattern classification. SVMs are based on statistical learning theory (Trafalis & Ince, 2000; Vapnik, 1998, 2013; González-Castano et al., 2004; Fung & Mangasarian, 2005) and have been applied extensively in relation to binary classification problems. The traditional SVM model works by margin maximisation; deriving two unique parallel supporting hyperplanes such that the distance between the samples of two classes is maximized. The fact that relatively few training objects are required for this support gives SVMs their characteristic robustness. Further, casting the problem in dual form enables explicit kernelization, greatly extending the method’s utility. The versatility of SVMs has enabled their widespread adoption in various fields such as financial time-series forecasting (Cao & Tay, 2003), computational biology (Borgwardt, 2011; Noble, 2004), face recognition (Déniz et al. 2003), cancer recognition (Valentini et al., 2004), and EEG signal classification (Richhariya & Tanveer, 2018). To address the issue of parameter tuning, an automated procedure (Chapelle et al., 2002) for selecting kernel parameters was introduced in Chapelle et al. (2002) as exhaustive search may become intractable. However, in the era of big data, with significantly increasing number of features, many traditional SVM models fail to perform satisfactorily on reference datasets (Van Gestel et al., 2004; Fernández-Delgado et al., 2014).

To address this, SVMs have recently been enhanced by the development of several non-parallel hyperplane-based classifiers; e.g. the generalized eigen-value proximal SVM (GEPSVM) proposed by Mangasarian and Wild (2006), and the twin support vector machines, proposed by Jayadeva et al. (2007). TWSVM, in particular, improves SVM classification accuracy through the calculation of two non-parallel hyperplanes, each of which aims to be as close as possible to its corresponding class while being as far away as possible from the other. TWSVM, hence, modifies its hyperplanes so as to better accommodate the different distributions of the two classes, i.e., by changing the parameters of the two sub-problems TWSVM solves. These sub-problems (TWSVM solves two smaller quadratic programming problems (QPPs) unlike the original SVM which solves a single QPP) also endow TWSVM with additional computational efficiency, being approximately 4 times faster than the original support vector machine. As a result of these advantages, TWSVMs have been widely studied (Chen et al., 2011; Kumar & Gopal, 2008, 2009; Peng, 2010; Qi et al., 2013; Tanveer et al., 2016a; Richhariya & Tanveer, 2020).

Despite the merits of TWSVM, it still suffers from noise sensitivity and instability under resampling as a consequence of hinge loss function. To address these limitations within a standard SVM context, Huang et al. (2014) introduced a novel pinball loss function (\(L_{\tau }(u)\)) within the SVM formulation. The pinball loss function uses a quantile metric to measure margin distances in order to reduce noise sensitivity and increase stability under re-sampling. However, introduction of the pinball loss function leads to a loss of sparsity, a key component of SVM classification performance. To handle this drawback, a \(\epsilon\)-insensitive zone pinball loss (\(L_{\tau }^{\epsilon }(u)\)) SVM (Huang et al., 2014) is introduced to reduce the effect of noise while obtaining sparse solutions.

Several modifications to the TWSVM methodology have been proposed in order to reduce time complexity and improve overall performance (Kumar & Gopal, 2008; Gao et al., 2011; Kumar et al., 2010; Sharma et al., 2021; Tanveer, 2015; Tanveer et al., 2016b; Tian & Ping, 2014; Xu & Wang, 2014; Yan et al., 2019). Attempts have also been made to handle multiclass classification problems (Cheong et al., 2004; Madzarov et al., 2009; Shao et al., 2013). These TWSVM based formulations, however, are based on the hinge-loss function which, as indicated, suffers from noise sensitivity and re-sampling instability on large datasets. Also, the pin-SVM (Huang et al., 2014) solves a single large QPP further reducing its applicability to large-scale datasets. To resolve these issues (noise, resampling instability and high computational complexity), a pinball loss TWSVM was proposed in Tanveer et al. (2019a). However, introduction of pinball loss function within the TWSVM leads to a loss of sparsity; in order to gain the benefits of noise insensitivity, resampling stability, and sparsity, the sparse pinball twin support vector machine (SPTWSVM) was introduced in Tanveer et al. (2019b), Wang et al. (2020) and Singla et al. (2020) in which a \(\epsilon -\)insensitive zone pinball loss function is introduced in the standard TWSVM. For more details, interested readers can refer to comprehensive review on TWSVM (Tanveer et al., 2021).

However, all of the discussed formulations remain inapplicable to large scale problems due to the requirement of computationally expensive or intractable matrix inversion. Hence, extension of the above problems to large scale problems is still an open challenge.

Motivated by the existing twin bounded SVMs (TBSVM) (Shao et al., 2011) and Pin-general twin SVMs (Pin-GTSVM) (Tanveer et al., 2019a), we here propose a novel large scale pinball twin SVM (LPTWSVM). In particular, the merits of the proposed LPTWSVM formulation are as follows:

-

LPTWSVM, by virtue of changes directly introduced to the primal form of TBSVM, can be feasibly applied to real-world large-scale datasets. This is done by the elimination of the matrix inverse calculation in the dual problem of our model, which can be intractable for large scale datasets or even impossible for singular matrices.

-

LPTWSVM explicitly minimizes the structural risk in its formulation in accordance with statistical learning theory, and consequently matches or improves generalization/classification accuracy compared to baseline methods.

-

LPTWSVM obviates the requirement for the computation of the matrix inverse that exists in the TBSVM and Pin-GTSVM formulations, and the consequent risk of intractability.

-

LPTWSVM does not implicate kernel-generated surfaces in its methodology in contrast to the majority of twin support vector machine formulations, and is thus free to incorporate the kernel trick directly into its dual problem.

-

LPTWSVM achieves outlier-insensitivity by virtue of the introduction of pinball loss into the modified TBSVM problem.

A broad paper outline is given as follows: Sect. 2 gives the background of the previous work, Sect. 3 outlines the proposed model. Section 4 covers the theoretical properties of our model in detail. Experimental results are given in Sect. 5 and conclusions are provided in Sect. 6.

2 Background

The formulations of Pin-SVM, TBSVM and Pin-GTSVM are given briefly in this section. For further details, readers are referred to Jayadeva et al. (2007), Huang et al. (2014), Shao et al. (2011) and Tanveer et al. (2019a). We also have a notation subsection before these formulations.

Consider a binary dataset \(\mathbf {z} = \{x_{i}, y_{i}\}^{m}_{i = 1}\) where \(x_{i} \in \mathbb {R}^{n}\) and \(y_{i} \in \{-1, 1\}\). Let the number of samples in class \(+1\) and \(-1\) be \(m_1\) and \(m_2\), respectively. Let A represent the positive class (\(+1\)) samples and B represent the negative class (\(-1\)) samples.

2.1 Notations used

Table 1 list the various abbreviations and symbols used throughout the paper here.

2.2 Pinball support vector machines

Huang et al. (2014) first formulated the noise insensitive pinball loss function based SVM classifier. The optimization problem of the pinball SVM is:

where \(\mathbf{w} \in \mathbb {R}^{n} ~~\text{and}~~ \phi(x) \) is the weight vector and Hilbert space transformation of \(x,~~b \in \mathbb {R}\) is bias and \(L_{\tau }\) is the pinball loss function. The optimal separating hyperplane is given as \(\mathcal {H}: \mathbf{w} ^{T}\phi(x) + b = 0\); a test data sample \(x\in \mathbb {R^n}\) is hence assigned to the respective class of \(+1\) or \(-1\) based on the sign of \(\mathbf{w} ^{T}x + b\) i.e. if the sign is positive it is classified as positive class sample otherwise it is negative class sample. In pinball loss SVM, correctly classified samples are additionally penalized via the loss function with the intention of reducing noise sensitivity:

Here, \(\tau \in [0, 1]\) is a penalisation parameter that controls the magnitude of negative loss values. Equation (2) is a generalized \(\ell _{1}\) loss with both vectors close to the decision boundary as well as vectors further away from it contributing to the weight vector \(\mathbf{w}\). In particular, quantile distance is maximized by the Pin-SVM model.

Incorporating the Pinball loss function in Eq. (1), the QPP is given as follows:

where \(\mathbf{w} \in \mathbb {R}^{n}\) is the weight vector, \(b \in \mathbb {R}\) is bias, \(\xi =(\xi _1, \xi _2, \dots , \xi _m)^T\) is a slack variable and \(C>0\) is a penalty parameter.

Both Pin-SVM and SVM solve a QPP which is used to find an optimal hyperplane. However, SVM used hinge loss function and Pin-SVM used pinball loss function. For Eq. (3), the constraints are:

When \(\tau \ne 0\), Eq. (5) can be recast as:

when \(\tau\) tends to zero, (6) degenerates into \(\xi _i \ge 0\). The optimization problem of nonlinear SVM is expressed as follows:

where \(\mathbf{w} \in \mathbb {R}^{n}\) is the weight vector, \(b \in \mathbb {R}\) is bias and \(L_{{\textit{hinge}}}\) is the hinge loss function. We can get the following inequalities after substituting the hinge loss in (7):

After comparing Eqs. (5) and (8), one can conclude that the pinball loss gives an additional penalty to the correctly classified data points. Although Pin-SVM is developed from the original SVM, SVM can be regarded as a special case of Pin-SVM.

2.3 Twin bounded support vector machines (TBSVM)

In an attempt to improve the TWSVM model, Shao et al. (2011) proposed the twin bounded support vector machines (TBSVM) in which they directly introduced the structural risk minimization principle into the TWSVM problem and eliminate the need to ensure a well-conditioned matrix (so as to calculate its inverse) involved in the dual formulation of TWSVM. The structural risk minimization principle is implemented via the introduction of a regularization term in the objective function of original TWSVM. An added benefit of introducing this regularization term is that it eliminates the need to derive the dual of the problem without additional assumptions unlike TWSVM. Thus, TBSVM stands as a significant improvement over TWSVM. The formulation of TBSVM is given as:

and

Here, \(\mathbf{w} ^{(1)}, \mathbf{w} ^{(2)} \in \mathbb {R}^{n}\) are the weight vectors with \(b^{(1)}, b^{(2)} \in \mathbb {R}\) the corresponding biases for QPPs (9) and (10) respectively; \(e_1\) and \(e_2\) are vectors of ones of appropriate dimensions and \(\xi\) is a slack variable. The term \(\frac{1}{2}c_{3}(||\mathbf{w} ^{(1)}||^{2} + b^{(1)2})\) in (9) introduces the structural risk minimization principle since the term corresponds to the distance between the proximal hyperplane, \(\mathbf{w} ^{(1)T}x + b^{(1)} = 0\), and the bounding hyperplane, \(\mathbf{w} ^{(1)T}x + b^{(1)} = -1\). A similar analysis holds for (10).

We only consider the dual problem of (9) since a similar approach can be followed for the others. The Lagrangian of (9) is given as,

where \(\alpha \in \mathbb {R}^{m_{2}}\), \(\beta \in \mathbb {R}^{m_{2}}\) are Lagrangian multipliers corresponding to the different constraints. After applying the necessary and sufficient K.K.T. conditions, the QPP (9) in dual form is given as:

where \(H = \begin{bmatrix}{} A&e_{1} \end{bmatrix},\; G = \begin{bmatrix}{} B&e_{2} \end{bmatrix},~\delta \in \mathbb {R}^{m_{2}}\), I is the identity matrix of size \(m_{1} \times m_{1}\). As is evident, \((H^{T}H + c_{3}I)^{-1}\) is naturally nonsingular and, hence, invertible without making any extra assumptions unlike TWSVM’s dual problems. However, despite the differences in formulation, the decision function of TBSVM is similar to that of TWSVM.

2.4 General twin support vector machine with pinball loss function (Pin-GTSVM)

To remove the limitations of hinge loss based support vector machines, in particular, sensitivity to noise and resampling, Tanveer et al. (2019a) formulated general twin SVMs using the pinball loss function, demonstrating that their Pin-GTSVM model successfully reduces sensitivity to feature noise and exhibits stability under re-sampling while retaining the same computational complexity as that of the TWSVM with hinge loss. The formulation of the linear Pin-GTSVM is given as follows:

and

where \(\mathbf{w} ^{(1)}, \mathbf{w} ^{(2)} \in \mathbb {R}^{n}\) are the weight vectors with \(b^{(1)}, b^{(2)} \in \mathbb {R}\) the corresponding biases, \(c_1, c_2\) are positive penalty parameters, \(e_1, e_2\) are vectors of ones of appropriate dimensions and \(\xi _1, \xi _2\) are the slack variables.

Similarly, the formulation of the non-linear Pin-GTSVM is given as follows:

and

where \(c_1, c_2\) are positive penalty parameters, \(e_1, e_2\) are the vector of ones with appropriate dimensions and \(\xi _1, \xi _2\) are the slack variables, \(D=[A;B]\); \(u^{(1)},u^{(2)} \in \mathbb {R}^n\) and \(K(\cdot )\) is a kernel function.

To solve the QPPs (13−16), we derive their dual form. We consider QPP (15) for this purpose. The dual of QPP (15) can be written as:

Likewise the dual of QPP (16) is given as :

where \(P= \begin{bmatrix} K(A, D^T)&e_1 \end{bmatrix}\) and \(Q=\begin{bmatrix} K(B, D^T)&e_2\end{bmatrix}\). \(\alpha , \beta , \gamma\) and \(\sigma\) are Lagrangian multipliers.

Once we solve the QPP’s (17) and (18), the optimal hyperplanes are given as:

and

3 Proposed large scale pinball twin support vector machines (LPTWSVM)

In order to render our model suitable for large scale datasets, we aim to re-formulate the problem so as to embody the merits of both TBSVM and Pin-GTSVM while eliminating the requirement for calculating large matrix inverses. This will involve the introduction of a regularization term (as in TBSVM) along with the addition of an equality constraint. Furthermore, since pinball loss is already present in the primal problem of Pin-GTSVM, LPTWSVM is insensitive to noise and is thus more stable with respect to resampling. The LPTWSVM also allows for the kernel trick to be incorporated directly into the dual problem without having to accommodate kernel-generated surfaces. Lastly, LPTWSVM, unlike Pin-GTSVM, directly embodies a structural risk minimization principle, potentially allowing LPTWSVM to obtain better classification accuracy. LPTWSVM thus stands as a significant improvement over both TBSVM and Pin-GTSVM.

3.1 Linear LPTWSVM

We reformulate the optimization problem of TBSVM (9) by incorporating the pinball loss function. The reformulated optimization problem is given as:

and

Here \(\mathbf{w} ^{(1)}, \mathbf{w} ^{(2)} \in \mathbb {R}^{n}\) are the weight vectors with \(b^{(1)}, b^{(2)} \in \mathbb {R}\) the corresponding biases, \(c_{1}\), \(c_{2}\), \(c_{3}\), \(c_{4}>\) 0, \(\eta _{1} \in \mathbb {R}^{m_{1}}\), \(\eta _{2} \in \mathbb {R}^{m_{2}}\), \(\xi\) is a slack vector and \(e_{1}\) and \(e_{2}\) are vectors of ones with \(m_{1}\) and \(m_{2}\) elements respectively. The third term in both problems is the error minimization term that arises according to whether or not the samples satisfy the constraints. Here, pinball loss (2) gives penalty to both correctly as well as incorrectly classified samples.

Compared to Pin-GTSVM problem, the proposed LPTWSVM model [QPPs (21) and (22)] introduced the regularization terms \(\frac{1}{2}c_{3}(||\mathbf{w} ^{(1)}||^{2} + b^{(1)2})\) and \(\frac{1}{2}c_{4}(||\mathbf{w} ^{(2)}||^{2} + b^{(2)2})\) and added an extra equality constraint in both primal problems. The addition of the regularization terms also introduces structural risk minimization since they correspond to the distance between the proximal hyperplane, \(\mathbf{w} ^{(1)T}x+b^{(1)}=0\), and the bounding hyperplane, \(\mathbf{w} ^{(1)T}x+b^{(1)}=-1\) (both planes correspond to the first problem). The objective functions of both problems (21) and (22) minimize the distance of the data sample of one class from its corresponding hyperplane while remaining as far as possible from the hyperplane belonging to the samples of the other class. The modified Lagrangian in the proposed LPTWSVM is such that the previous large matrix inverse calculation is bypassed; it is immediately evident that eliminating these calculations will lead to significant efficiency improvements with respect to large scale datasets.

To solve problems (21) and (22), we thus consider their dual formulations. The dual of (21) and its Lagrangian function can be written as (a similar approach can be followed for others):

here \(\alpha \in \mathbb {R}^{m_{2}}\), \(\alpha \ge 0\), \(\gamma \in \mathbb {R}^{m_{2}}\), \(\gamma \ge 0\) and \(\mu \in \mathbb {R}^{m_{1}}, \mu \ge 0\) represent the Lagrangian multipliers. Applying the K.K.T conditions on (23), we have:

Using the K.K.T. conditions (24)–(27) and (28)–(30) and substituting \(\alpha - \gamma = \lambda\), the Lagrangian of (21) is given as:

Here, E is a matrix of all ones of size \((m_{1} + m_{2}) \times (m_{1} + m_{2})\). The first constraint can also be written as \(\lambda + \gamma (1 + \frac{1}{\tau }) = c_{1}e_{2}\), using \(\alpha = \gamma + \lambda\). Since \(\gamma \ge 0\), (31) can be equivalently written as:

Likewise, the dual formulation of (22) is given as:

Once solutions of (32) and (33) are derived so as to obtain the vectors \(\begin{bmatrix} \mu&\lambda&\alpha \end{bmatrix}\) and \(\begin{bmatrix} \theta&\phi&\omega \end{bmatrix}\), the hyperplanes corresponding to each class can be written as:

The decision function for assigning the test data sample \(x\in \mathbb {R}^{n}\) to a particular class is then similar to decision function used by Pin-GTSVM problem.

3.2 Non-linear LPTWSVM

In contrast to the non-linear Pin-GTSVM case, we do not need to consider kernel generated surfaces for LPTWSVM and can thus directly introduce arbitrary kernel functions in the linear case of LPTWSVM. Hence, we introduce an explicit kernel function \(K(x, y) = \phi (x)^{T}\phi (y)\) into the linear case, which enacts the Hilbert space transformation \(\mathbf{x} = \phi (x)\), \(\mathbf{x} \in \mathbb {H}\). In a similar fashion to (21) and (22), we now consider the following primal problems in the Hilbert space \(\mathbb {H}\):

and

Here, all constants and notations have similar meaning as in the linear case. We derive the dual problem of (35) and (36):

and

All variables, constants and notations are again similar to those from the linear case. Once we solve the QPPs (37) and (38), a new test-data sample \(x \in \mathbb {R}^{n}\) is assigned to a given class based on its distance from the corresponding hyperplanes in a manner similar to the linear case.

4 Theoretical properties

We will now examine the properties of the proposed large-scale pinball twin support vector machine (LPTWSVM) in more detail.

4.1 Noise insensitivity

For simplicity, we will discuss the noise sensistivity with respect to the linear LPTWSVM problem (21). However, a similar analysis is also applicable to both the non-linear case of the first LPTWSVM problem and also the second LPTWSVM problem.

The generalized sign function, sgn\(_{\tau }(x)\), of the Pinball loss function is given as:

Henceforth, for typographic simplicity, let \(\mathbf{w}\) and b represent \(\mathbf{w} ^{(1)}\) and b(1) respectively, such that problem (21) can be equivalently written:

where \(L_{\tau }(e_{2} + (B\mathbf{w} + e_{2}b))\) is the Pinball loss function. Differentiating (40) with respect to \(\mathbf{w}\), we have:

where 0 is a zero vector of appropriate dimensions and \(x_{i}^{-} \in B\).

The index set for B is partitioned in three parts as follows:

where \(i = 1,\ldots ,m_{2}\). With the above notations and the existence of \(\theta _{i} \in [-\tau , 1]\) Eq. (41) can be rewritten as:

The above condition shows that when \(\mathbf{w} , b, c_{1},\) and \(c_{3}\) are fixed, \(\tau\) controls the number of samples of each set \(S_{0}^\mathbf{w , b}\), \(S_{1}^\mathbf{w , b}\) and \(S_{2}^\mathbf{w , b}\). For small values of \(\tau\), the number of samples in \(S_{2}^\mathbf{w , b}\) is high with fewer number of samples in other sets, and hence the result is an intrinsic sensitivity to feature noise. On the other hand, for larger values of \(\tau\), set-allocation is more evenly distributed, with data samples allocated to all of the three sets and hence the result is less sensitivity to feature noise.

Proposition 1

If the QPPs (32) or (37) have a solution then the following inequalities must hold:

where \(p_{0}\) is the number of samples in \(S_{0}^\mathbf{w , b}\).

Proof

Let \(x_{i_{0}}^{-} \in S_{0}^\mathbf{w , b}\), (1 \(\le i_{0} \le m_{2}\)) be an arbitrary sample. From the KKT condition (29), \(\gamma _{i_{0}}\) = 0. From the KKT condition (26), we then obtain \(\alpha _{i_{0}} = c_{1}\) and, subsequently, \(\lambda _{i_{0}} = \alpha _{i_{0}} - \gamma _{i_{0}} = c_{1}\). Also, from the KKT condition (25), we have

Now, since \(\alpha _{i} \ge 0\) and \(\gamma _{i} \ge 0\), we have \(-\tau c_{1} \le \lambda _{i} \le c_{1}\). Therefore,

which gives us \(\frac{-(c_{3}b + e_{1}^{T}\mu )}{c_{1}m_{2}} \le 1\) and \(p_{0}(1 + \tau ) \le \frac{-(c_{3}b + e_{1}^{T}\mu ) + \tau c_{1}m_{2}}{c_{1}}\). The second condition gives us

hence proving our proposition. \(\square\)

One can see that an upper bound on the number of samples in \(S_{0}^\mathbf{w , b}\) is placed by the above proposition; for small values of \(\tau\), \(p_{0}\) gets smaller, hence leading to feature noise sensitivity as smaller numbers of data samples are distributed in sets other than \(S_{2}^\mathbf{w , b}\). Hence, classification results that are affected significantly by feature noise around the decision boundary can be adjusted for by varying \(\tau\) accordingly. A similar analysis holds for the other LPTWSVM problems.

5 Numerical experiments

A relative performance analysis of TBSVM, TWSVM, Pin-GTSVM and the proposed LPTWSVM model is given in this section. The experimental evaluation of the models is performed on MATLAB R2017b with a Windows 10 machine and an Intel Xeon(R) Processor (\(2.30 \times 2\) GHz) and 128 GB RAM. We perform the experiments on 20 benchmark UCI datasets (Dheeru & Taniskidou, 2017) and one synthetic dataset. Grid selection is used to select optimal parameters over the following parametric ranges: \(c_1=[10^{-7}, 10^{-6},\dots , 10^{6}, 10^{7}]\), \(c_2=[10^{-7}, 10^{-6},\dots , 10^{6}, 10^{7}]\), \(c_3=[10^{-7}, 10^{-6},\dots , 10^{6}, 10^{7}]\), \(c_4=[10^{-7}, 10^{-6},\dots , 10^{6}, 10^{7}]\), \(\tau =[0.01, 0.2, 0.5, 1.0]\).

To avoid computational complexity, we use \(c_1=c_2\) and \(c_3=c_4\) for the TBSVM and LPTWSVM models. We use a 70 : 30 ratio for training and testing, with 10-fold cross validation for choosing optimal parameters. We employ a Gaussian kernel \(K(x,y)=exp^{-||x-y||^2/\gamma ^2}\) with parameter values of \(\gamma =[10^{-7}, 10^{-6},\dots , 10^{6}, 10^{7}]\). Details of each dataset are given in Tables 4 and 5. The number of samples, features and classes in each dataset are given as (number of samples \(\times\) number of features \(\times\) number of classes). For example, the UCI blood dataset containing 748 samples with feature length 4 and number of classes 2 is given as \((748\times 4\times 2)\). Table 6 contains 5 publicly available real world classification datasets (Zhang et al., 2019) including two biomedical datasets i.e., Carcinom and Lung, two human face datasets i.e., ORL and Yale and one object recognition datasets i.e., COIL20.

-

Biomedical datasets: The Carcinom dataset consists of 174 samples, with each sample represented by a 9182 dimensional feature vector with 11 categories. The Lung dataset consists of 203 instances with 634 dimensional vectors.

-

Face image datasets: The ORL face image dataset consists of 400 images and 40 categories, with each image of the size \(32 \times 32\). The Yale face database consists of 165 face images belonging to 15 different people with each image of the size \(32 \times 32\).

-

Object recognition datasets: COIL20 dataset consists of 20 categories with 1440 sample size, each sample of 1024 dimensions.

For the above real world application datasets, we repeated the 4-fold cross validation 5 times and report the average accuracy and the standard deviation in Table 6. We used one-vs-all strategy in multiclass datasets for all the classification models.

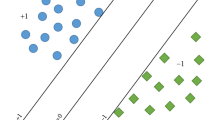

Figures showing noise insensitivity properties of the proposed LPTWSVM model compared to the baseline methods TBSVM, TWSVM and Pin-GTSVM. In the figures, we have \(r = 0\) (noise free), \(r = 0.2\), \(r = 0.3\) and \(r = 0.5\). Here, r is defined as \(r = \frac{\text {total number of noise samples}}{\text {total number of samples in the synthetic dataset}}\). The legend below each figure is used to match the separating hyperplanes with the corresponding models. The values in the bracket after each model name are the slopes of the lower and upper hyperplanes of that model, respectively

5.1 Synthetic dataset

One of the main properties of LPTWSVM is that it is insensitive to noisy samples in and around the decision boundary which can perturb the hyperplanes and, eventually, the performance of the model. We analyse LPTWSVM’s robustness to noise in Fig. 1, where we take synthetic data samples from two Gaussian distributions (the number of samples in both the classes are kept equal to 100). The two distributions are: \(x_{i}, i \in \{ i:y_{i} = 1 \} \sim \mathcal {N}(\mu _{1}, \sum _{1})\) and \(x_{i}, i \in \{ i:y_{i} = -1 \} \sim \mathcal {N}(\mu _{2}, \sum _{2})\) where \(\mu _{1} = [0.5, -3]^{T}, \mu _{2} = [-0.5, 3]^{T}\) and \(\sum _{1} = \sum _{2} = \begin{bmatrix}{} 0.2 &{}0 \\ 0 &{}3 \end{bmatrix}\). These samples constitute our synthetic dataset and the above Gaussian distributions can be separated by the Bayes classifier whose separating hyperplane is \(f_{c}(x) = 2.5x(1) - x(2)\). The data samples are now contaminated with noise where the noisy samples are also Gaussian distributed: \(x_{{\textit{noise}}} \sim \mathcal {N}(\mu _{{\textit{noise}}}, \sum _{{\textit{noise}}})\) where \(\mu _{{\textit{noise}}} = [0, 0]^{T}\) and \(\sum _{{\textit{noise}}} = \begin{bmatrix}{} 1 &{}-0.8 \\ -0.8 &{}1 \end{bmatrix}\). The total number of noisy samples is calculated by the value of r, which is defined as \(r = \frac{\text {total number of noise samples}}{\text {total number of samples in the synthetic dataset}}\). These noisy samples are then assigned to either of class \(+1\) or class \(-1\) with equal probability. Despite the noise introduced to the synthetic dataset affecting labels in and around the decision boundaries, the Bayes classifier remains unchanged. In the results mentioned in Fig. 1, \(\tau = 0.01\) for Pin-GTSVM and LPTWSVM and all penalty parameters (that is, the c constants) for the four models TBSVM, TWSVM, Pin-GTSVM and LPTWSVM are equal to 1. In the figures, it can be observed that as the amount of noise is increased from \(r = 0\), \(r = 0.2\), \(r = 0.3\) to \(r = 0.5\), the separating hyperplanes of Pin-GTSVM are affected a lot by the feature noise and their slope values deviate a lot, going from positive to negative values. TBSVM and TWSVM also decrease and then ultimately increase their slope values by big absolute values as noise is increased. On the other hand, the absolute change in the slopes of the hyperplanes of our model LPTWSVM is generally by the smallest amounts out of the four models as noise levels are progressively increased. This comparative robustness in the absolute change of slope values of our model implies the noise insensitivity of LPTWSVM.

5.2 Performance scaling evaluation on NDC datasets

Experiments conducted on progressively large-scale binary classification datasets are set out in Table 2. Here, the NDC Data Generator (Musicant, 1998) is used to assess the computational efficiency of the tested models with respect to linearly-increasing training set sizes (methodological parameters are fixed to be identical across all classifiers: \(c_1=1, c_2=1, \gamma =1 \;\text{ and }\;\tau =0.5\)).

Table 3 shows the execution time results of the non-linear TBSVM, TWSVM, Pin-GTSVM and LPTWSVM. From the table, one can see that the existing models (TBSVM, TWSVM and Pin-GTSVM) rapidly become infeasible—in fact exceeded memory constraints—as the dataset size exceeds 70k. However, the proposed LPTWSVM model still functions, illustrating the efficacy of the proposed approach.

5.3 Results comparison and discussion

We analyze the relative performance of the proposed LPTWSVM, TBSVM, TWSVM and Pin-GTSVM models. The experimental results corresponding to the linear and Gaussian kernels are given in Tables 4 and 5, respectively. From Table 4, representing the linear kernel, it may be seen that the proposed LPTWSVM and TWSVM models achieve the best performance in 5 datasets while Pin-GTSVM and TBSVM emerge as overall winners in datasets 1 and 2, respectively. The average prediction accuracy of the proposed LPTWSVM is 82.74%; better than other baseline models. Also, it may be seen that the proposed LPTWSVM achieved lowest average rank among the baseline models.

The Gaussian kernel results presented in Table 5 show that the average prediction accuracy of the proposed LPTWSVM is 85%; again better than the other baseline models. It can be seen from Table 5 that the proposed LPTWSVM approach emerged as overall winner in 5 datasets; a greater number than Pin-GTSVM, TWSVM and TBSVM, which achieved best performance for 4, 3 and 2 datasets, respectively.

Table 6 gives the performance of the classification models on the datasets from different with large features. One can see that the performance of the proposed LPTWSVM model on COIL20, Yale, Lung and Carcinom is better as compared to the baseline models. In COIL20 dataset, the proposed LPTWSVM model achieved 98.73% accuracy which is better than the baseline models. Also, in Yale face dataset the performance of the proposed model is better compared to baseline models. In biological datasets i.e., Lung and Carcinom, the performance of the proposed LPTWSVM is better compared to the baseline models.

5.4 Statistical analysis

We carry out an analysis of the statistical significance of the performance values obtained for the different models via Friedman testing. In Friedman testing, models are ranked for each dataset separately with the best performing model being allocated the lowest rank. The results of this test for the models for linear kernel is given in Table 4 and the Gaussian kernel results are given in Table 5.

5.4.1 Linear results

Under the null hypothesis, the Friedman statistics are distributed according to \(\chi ^2_F\) with \((k-1)\) degrees of freedom as follows (Demšar, 2006):

where \(R_j=\frac{1}{N}\sum _jr_i^j\) and \(r_{{i}}^j\) denotes the rank of the \(j^{th}\) algorithm on the \(i^{th}\) dataset out of k algorithms and N datasets with \((k-1)\) and \((k-1)(N-1)\) degrees of freedom. Here, we are comparing the four algorithms on 16 datasets i.e. \(k=4\) and \(N=16\). Also, the average ranks of TBSVM, TWSVM, Pin-GTSVM and the proposed LPTWSVM are 2.59375, 2.5, 2.875 and 2.03125, respectively. Therefore,

For \(k=4, N=16\), the critical values of F(3, 45) for \(\alpha =0.05\) is 2.815. Thus, Friedman test fails to detect the significant difference among the models. However, one can see that the proposed LPTWSVM model achieved better average accuracy compared to the existing models. Also, the average rank of the proposed LPTWSVM model is better compared to baseline models.

5.4.2 Non-linear results

Under the null hypothesis, the Friedman statistics are distributed according to \(\chi ^2_F\) with \((k-1)\) degrees of freedom as follows (Demšar, 2006):

Similar to linear case, here also \(k=4\) and \(N=16\). The average ranks of the TBSVM, TWSVM, Pin-GTSVM and the proposed LPTWSVM with Gaussian kernel are 2.65625, 2.59375, 2.3125 and 2.4375, respectively. Therefore,

For \(k=4, N=16\), the critical values of F(3, 45) with \(\alpha =0.05\) is 2.815. Thus, Friedman test fails to detect the significant difference among the models. However, one can see that the proposed LPTWSVM model achieved better average accuracy compared to the existing models. Also, the average rank of the proposed LPTWSVM model is better compared to TBSVM and TWSVM models.

6 Conclusions

In this paper, we have proposed a novel classification model, LPTWSVM. In contrast to the TWSVM, the proposed model is tunably-insensitive to feature noise while exhibiting greater stability under resampling. Furthermore, a structural risk minimization principle is directly implemented within the proposed LPTWSVM model to ensure better generalization. Numerical experiments conducted on standard benchmark datasets with respect to both linear as well as non-linear implementations show the validity of the proposed LPTWSVM approach, for which the classification performance is similar or better than the baseline methods. We further performed experiments on progressively-increased NDC dataset sizes to demonstrate the effectiveness of the proposed LPTWSVM model on large-scale datasets. Finally, we note that in the regularised LPTWSVM, additional parameters need to be tuned via cross-validation; future work will focus on the appropriate mechanisms for automatic selection of these parameters.

Availability of data and material

The datasets are the benchmark datasets available online (Data Source available in manuscript.)

Code availability

The codes will be available at https://github.com/mtanveer1.

References

Borgwardt, K. M. (2011). Kernel methods in bioinformatics. In Handbook of statistical bioinformatics (pp. 317–334). Springer.

Cao, L.-J., & Tay, F. E. H. (2003). Support vector machine with adaptive parameters in financial time series forecasting. IEEE Transactions on Neural Networks, 14(6), 1506–1518.

Chapelle, O., Vapnik, V., Bousquet, O., & Mukherjee, S. (2002). Choosing multiple parameters for support vector machines. Machine Learning, 46(1–3), 131–159.

Chen, X., Yang, J., Ye, Q., & Liang, J. (2011). Recursive projection twin support vector machine via within-class variance minimization. Pattern Recognition, 44(10–11), 2643–2655.

Cheong, S., Oh, S. H., & Lee, S.-Y. (2004). Support vector machines with binary tree architecture for multi-class classification. Neural Information Processing Letters and Reviews, 2(3), 47–51.

Cortes, C., & Vapnik, V. (1995). Support-vector networks. Machine Learning, 20(3), 273–297.

Demšar, J. (2006). Statistical comparisons of classifiers over multiple data sets. Journal of Machine Learning Research, 7, 1–30.

Déniz, O., Castrillon, M., & Hernández, M. (2003). Face recognition using independent component analysis and support vector machines. Pattern Recognition Letters, 24(13), 2153–2157.

Dheeru, D. & Karra Taniskidou, E. (2017). UCI machine learning repository [Online]. Available: http://archive.ics.uci.edu/ml

Fernández-Delgado, M., Cernadas, E., Barro, S., & Amorim, D. (2014). Do we need hundreds of classifiers to solve real world classification problems? The Journal of Machine Learning Research, 15(1), 3133–3181.

Fung, G. M., & Mangasarian, O. L. (2005). Multicategory proximal support vector machine classifiers. Machine Learning, 59(1–2), 77–97.

Gao, S., Ye, Q., & Ye, N. (2011). 1-Norm least squares twin support vector machines. Neurocomputing, 74(17), 3590–3597.

González-Castano, F. J., García-Palomares, U. M., & Meyer, R. R. (2004). Projection support vector machine generators. Machine Learning, 54(1), 33–44.

Huang, X., Shi, L., & Suykens, J. A. (2014). Support vector machine classifier with pinball loss. IEEE Transactions on Pattern Analysis and Machine Intelligence, 36(5), 984–997.

Jayadeva, Khemchandani, R. & Chandra, S. (2007). Twin support vector machines for pattern classification. IEEE Transactions on Pattern Analysis and Machine Intelligence, 29(5), 905–910.

Kumar, M. A., & Gopal, M. (2008). Application of smoothing technique on twin support vector machines. Pattern Recognition Letters, 29(13), 1842–1848.

Kumar, M. A., & Gopal, M. (2009). Least squares twin support vector machines for pattern classification. Expert Systems with Applications, 36(4), 7535–7543.

Kumar, M. A., Khemchandani, R., Gopal, M., & Chandra, S. (2010). Knowledge based least squares twin support vector machines. Information Sciences, 180(23), 4606–4618.

Madzarov, G., Gjorgjevikj, D., & Chorbev, I. (2009). A multi-class SVM classifier utilizing binary decision tree. Informatica, 33(2)

Mangasarian, O. L., & Wild, E. W. (2006). Multisurface proximal support vector machine classification via generalized eigenvalues. IEEE Transactions on Pattern Analysis and Machine Intelligence, 28(1), 69–74.

Musicant, D. (1998). Normally distributed clustered datasets, Computer Sciences Department, University of Wisconsin, Madison. http://www.cs.wisc.edu/dmi/svm/ndc

Noble, W. S. (2004). Support vector machine applications in computational biology. Kernel Methods in Computational Biology, 71, 92.

Peng, X. (2010). TSVR: An efficient twin support vector machine for regression. Neural Networks, 23(3), 365–372.

Qi, Z., Tian, Y., & Shi, Y. (2013). Robust twin support vector machine for pattern classification. Pattern Recognition, 46(1), 305–316.

Richhariya, B., & Tanveer, M. (2018). EEG signal classification using universum support vector machine. Expert Systems with Applications, 106, 169–182.

Richhariya, B., & Tanveer, M. (2020). A reduced universum twin support vector machine for class imbalance learning. Pattern Recognition, 102, 107150.

Shao, Y.-H., Chen, W.-J., Huang, W.-B., Yang, Z.-M., & Deng, N.-Y. (2013). The best separating decision tree twin support vector machine for multi-class classification. Procedia Computer Science, 17, 1032–1038.

Shao, Y.-H., Zhang, C.-H., Wang, X.-B., & Deng, N.-Y. (2011). Improvements on twin support vector machines. IEEE Transactions on Neural Networks, 22(6), 962–968.

Sharma, S., Rastogi, R., & Chandra, S. (2021). Large-scale twin parametric support vector machine using pinball loss function. IEEE Transactions on Systems, Man, and Cybernetics: Systems, 51(2), 987–1003.

Singla, M., Ghosh, D., Shukla, K. & Pedrycz, W. (2020). Robust twin support vector regression based on rescaled hinge loss. Pattern Recognition, 107395

Tanveer, M. (2015). Robust and sparse linear programming twin support vector machines. Cognitive Computation, 7(1), 137–149.

Tanveer, M., Khan, M. A., & Ho, S.-S. (2016a). Robust energy-based least squares twin support vector machines. Applied Intelligence, 45(1), 174–186.

Tanveer, M., Mangal, M., Ahmad, I., & Shao, Y.-H. (2016b). One norm linear programming support vector regression. Neurocomputing, 173, 1508–1518.

Tanveer, M., Rajani, T., Rastogi, R., & Shao, Y. (2021). Comprehensive review on twin support vector machines. arXiv preprint arXiv:2105.00336

Tanveer, M., Sharma, A., & Suganthan, P. N. (2019a). General twin support vector machine with pinball loss function. Information Sciences, 494, 311–327.

Tanveer, M., Tiwari, A., Choudhary, R., & Jalan, S. (2019b). Sparse pinball twin support vector machines. Applied Soft Computing, 78, 164–175.

Tian, Y., & Ping, Y. (2014). Large-scale linear nonparallel support vector machine solver. Neural Networks, 50, 166–174.

Trafalis, T. B. & Ince, H. (2000). Support vector machine for regression and applications to financial forecasting. In Proceedings of the IEEE-INNS-ENNS international joint conference on neural networks, 2000. IJCNN 2000 (Vol. 6. pp. 348–353). IEEE.

Valentini, G., Muselli, M., & Ruffino, F. (2004). Cancer recognition with bagged ensembles of support vector machines. Neurocomputing, 56, 461–466.

Van Gestel, T., Suykens, J. A., Baesens, B., Viaene, S., Vanthienen, J., Dedene, G., De Moor, B., & Vandewalle, J. (2004). Benchmarking least squares support vector machine classifiers. Machine Learning, 54(1), 5–32.

Vapnik, V. (1998). Statistical learning theory. 1998 (Vol. 3). Wiley.

Vapnik, V. N. (1999). An overview of statistical learning theory. IEEE Transactions on Neural Networks, 10(5), 988–999.

Vapnik, V. (2013). The nature of statistical learning theory. Springer.

Wang, H., Xu, Y., & Zhou, Z. (2020). Twin-parametric margin support vector machine with truncated pinball loss. Neural Computing and Applications, 1–18.

Xu, Y., & Wang, L. (2014). K-nearest neighbor-based weighted twin support vector regression. Applied Intelligence, 41(1), 299–309.

Yan, H., Ye, Q.-L., & Yu, D.-J. (2019). Efficient and robust twsvm classification via a minimum l1-norm distance metric criterion. Machine Learning, 1–26.

Zhang, Y., Wu, J., Cai, Z., Du, B., & Philip, S. Y. (2019). An unsupervised parameter learning model for RVFL neural network. Neural Networks, 112, 85–97.

Acknowledgements

This work was supported by Science and Engineering Research Board (SERB) as Early Career Research Award grant no. ECR/2017/000053 and Council of Scientific & Industrial Research (CSIR), New Delhi, INDIA under Extra Mural Research (EMR) Scheme Grant No. 22(0751)/17/EMR-II. We gratefully acknowledge the Indian Institute of Technology Indore for providing facilities and support.

Author information

Authors and Affiliations

Contributions

M. Tanveer: Conceptualization, Methodology, Formal analysis, Investigation, Resources, Writing—review & editing, Supervision, Funding acquisition. A. Tiwari: Conceptualization, Methodology, Validation, Resources. R. Choudhary: Conceptualization, Methodology, Validation, Resources, Writing—original draft, Writing—review & editing, Visualization. M.A. Ganaie: Conceptualization, Methodology, Validation, Resources, Writing—original draft, Writing—review & editing, Visualization.

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethics approval

The study is original and has not been submitted to any other journal/conference.

Additional information

Editors: João Gama, Alípio Jorge, Salvador García.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Tanveer, M., Tiwari, A., Choudhary, R. et al. Large-scale pinball twin support vector machines. Mach Learn 111, 3525–3548 (2022). https://doi.org/10.1007/s10994-021-06061-z

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10994-021-06061-z