Abstract

This paper is concerned with the stabilization of bidirectional associative memory (BAM) neural networks with time-varying delays in the leakage terms via sampled-data control. An input delay approach is applied to transform the sampled-data system into a continuous-time system with time-varying delays. Based on Lyapunov stability theory, some stability criteria are obtained. These conditions are expressed in terms of linear matrix inequalities (LMIs) and can be solved by standard numerical software. Finally, a numerical example is given to demonstrate the effectiveness of the proposed results.

1 Introduction

Time delays are unavoidable in many practical systems, such as biological systems, automatic control systems and artificial neural networks [1–4]. The existence of time delays may lead to oscillation, divergence or instability of dynamical systems [2, 3]. Various types of time-delay systems have been investigated, and many significant results have been reported [4–10].

Recently, the stability of systems with leakage delays has become a topic of considerable interest. Research on the leakage delay (or forgetting delay), which appears in the negative feedback term of a system, can be traced back to 1992. In [11], it was observed that the leakage delay has a great impact on the dynamical behavior of the system. Since then, many researchers have paid much attention to systems with leakage delay, and some interesting results have been derived. For example, Gopalsamy [12] considered a population model with leakage delay and found that the leakage delay can destabilize the system. In [13], BAM neural networks with constant leakage delays were investigated based on Lyapunov–Krasovskii functionals and properties of M-matrices. Inspired by [13], a class of BAM fuzzy cellular neural networks (FCNNs) with leakage delays was considered in [14], where global asymptotic stability was studied by the free-weighting matrix method. Furthermore, Liu [15] discussed the global exponential stability of BAM neural networks with time-varying leakage delays, which extended and improved the main results in [13, 14]. In addition, Lakshmanan et al. [16] considered the stability of BAM neural networks with leakage delays and probabilistic time-varying delays via a Lyapunov–Krasovskii functional and a stochastic analysis approach. Li et al. [17] investigated the existence, uniqueness and stability of recurrent neural networks with leakage delay under impulsive perturbations and showed that the impact of leakage delay cannot be ignored. In particular, Li et al. [17] gave the following example to illustrate this phenomenon.

Remark 1

Consider the following system with leakage delays.

where

and \(f=[f_1,\,f_2]^{T},\,{f_{i}}(y)=\frac{y^2}{1+y^2},\ \ \tau _1(t)=0.01{\sin ^2}t,\,\tau _2(t)=0.01{\cos ^2}t\).

If there is no leakage delay, the system is stable. However, with leakage delays \(\rho _1=\rho _2 =0.2\), the system becomes unstable (see Figs. 1, 2).
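The mechanism behind Remark 1 can also be seen in a much simpler setting. The sketch below integrates the hypothetical scalar equation \(\dot x(t)=-a\,x(t-\rho)\) (not system (1), whose matrices are not reproduced here) with forward Euler steps and a constant initial history. For this scalar equation with \(a>0\) it is classical that the zero solution is asymptotically stable exactly when \(a\rho<\pi/2\). With the illustrative value \(a=10\), the cases \(\rho=0\) and \(\rho=0.1\) decay, whereas \(\rho=0.2\) gives \(a\rho=2>\pi/2\) and growing oscillations. The function name, step size and horizon are assumptions chosen only for this illustration.

```python
def simulate_leakage(a, rho, t_end=10.0, dt=1e-3, x0=1.0):
    """Forward-Euler sketch of the hypothetical scalar leakage-delay equation
        x'(t) = -a * x(t - rho),  with history x(theta) = x0 for theta <= 0.
    Returns the states on the grid t = 0, dt, 2*dt, ..., t_end."""
    n_steps = int(round(t_end / dt))
    lag = int(round(rho / dt))  # leakage delay expressed in grid steps
    x = [x0]
    for step in range(n_steps):
        idx = step - lag
        # Before t = rho the delayed argument falls into the initial history.
        x_delayed = x[idx] if idx >= 0 else x0
        x.append(x[step] - dt * a * x_delayed)
    return x


if __name__ == "__main__":
    for rho in (0.0, 0.1, 0.2):
        tail = simulate_leakage(a=10.0, rho=rho)[-1000:]  # last second of the run
        print(f"rho = {rho}: max |x| on [9, 10] = {max(abs(v) for v in tail):.3e}")
```

The delay is rounded to the nearest grid point, so `dt` should divide `rho` for the delayed index to be exact.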

The phenomenon above shows that a large leakage delay can cause oscillations. Stability is an important property of a system in applications [18, 19], so it is important to adopt control strategies that stabilize unstable systems. Various control approaches have been used for this purpose, such as feedback control [20, 21], intermittent control [22, 23], impulsive control [24–27], fuzzy logic control [28] and sampled-data control [29]. Sampled-data control acts on a continuous-time system using state samples taken at discrete time instants. It drastically reduces the amount of transmitted information and increases the efficiency of bandwidth usage [30]. Compared with continuous control, sampled-data control is more efficient, secure and useful [31], so it has found many applications. For example, Wu et al. [32] investigated the stability of neural networks by using sampled-data control with an optimal guaranteed cost. The synchronization problem of several networks was studied via sampled-data control [33, 34]. In addition, the performance of some nonlinear sampled-data controllers was analyzed in [35] in terms of the quantitative trade-off between robustness and sampling bandwidth.

Motivated by the above discussion, the main purpose of this paper is to investigate the stabilization of BAM neural networks with time-varying leakage delays via sampled-data control. To the best of our knowledge, there have been no results so far on the stabilization of BAM neural networks with time-varying leakage delays. By using the input delay approach, the BAM neural network with leakage delays under sampled-data control is transformed into a continuous-time system. Then, by using Lyapunov stability theory, stability criteria are derived in terms of LMIs, which can be solved with the LMI toolbox. The paper is organized as follows. In the next section, the problem is formulated and some basic preliminaries and assumptions are given. In Sect. 3, an appropriate sampled-data controller is designed to stabilize BAM neural networks with time-varying leakage delays. In Sect. 4, an illustrative example is given to show the effectiveness of our results. Some conclusions are drawn in Sect. 5.

2 Preliminaries

Consider the following BAM neural network with time-varying leakage delays:

where \(\mu _{i}(t)\) and \(\nu _j(t)\) are the state variables of the neurons at time \(t\). The positive constants \(a_{i}\) and \(c_j\) denote the time scales of the respective layers of the network. \( b_{ij}^{(1)}, b_{ij}^{(2)}, d_{ji}^{(1)}, d_{ji}^{(2)}\) are the connection weights of the network. \(I_{i}\) and \(J_j\) denote the external inputs. \(\sigma (t)\) and \(\rho (t)\) are the leakage delays; \(\tau _1(t)\) and \(\tau _2(t)\) are time-varying delays; \(\tilde{g}_j(\cdot ),\ \tilde{f}_{i}(\cdot )\) are the neuron activation functions.

Let \((\mu ^{*},\nu ^{*})^{T}\) be an equilibrium point of Eq. (2). Then, \((\mu ^{*},\nu ^{*})^{T}\) satisfies the following equations:

Shifting the equilibrium point \((\mu ^{*},\nu ^{*})^{T}\) to the origin by \(x_{i}(t)=\mu _{i}(t)-\mu _{i}^{*},\ \ y_{j}(t)=\nu _{j}(t)-\nu _{j}^{*}\), system (2) can be rewritten in the following compact matrix form:

where \(A=\hbox {diag}[a_1,a_2,\ldots ,a_n]>0,\ \ C=\hbox {diag}[c_1,c_2,\ldots ,c_n]>0\), \(B_k=(b_{ij}^{(k)})_{n\times n}\), \(D_k=(d_{ij}^{(k)})_{n\times n},\,k=1,2\), \(g(y(t))=\tilde{g}(y(t)+\nu ^{*})-\tilde{g}(\nu ^{*})\), and \(f(x(t))=\tilde{f}(x(t)+\mu ^{*})-\tilde{f}(\mu ^{*})\).

Remark 2

In [17], the impact of leakage delay on the stability of systems was discussed: a larger leakage delay can lead to instability. However, how to counteract this harmful effect was not investigated. Next, we study the stabilization of system (4) via sampled-data control.

Let \( \mu (t)=K x(t_k),\ \ \nu (t)=M y(t_k),\) where \(K,M \in {\mathbb {R}}^{n\times n}\) are the sampled-data feedback gain matrices to be designed. Here \(t_k\) denotes the \(k\)th sampling instant, with \(0=t_0<t_1<\cdots <t_k<\cdots \) and \(\lim \nolimits _{k\rightarrow +\infty }t_k=+\infty \). Moreover, there exists a positive constant \(\tau _3\) such that \(t_{k+1}-t_k\le \tau _3\) for all \(k\in {\mathbb {N}}\).

Under the sampled-data control, system (4) can be modeled as:

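Let \(\tau _3(t)=t-t_k\) for \(t\in [t_k,\ t_{k+1})\). Then \(0\le \tau _3(t)<t_{k+1}-t_k\le \tau _3\), and \(x(t_k)=x(t-\tau _3(t))\), \(y(t_k)=y(t-\tau _3(t))\). Using this input delay method, we have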

The initial conditions of model (6) are given as:

where \(s_1=\max \{\sigma _2, \tau _2, \tau _3 \},\ \ s_2=\max \{\rho _2, \tau _1, \tau _3 \}\).

Remark 3

Because of the discrete terms \( \mu (t)=K x(t_k)\) and \(\nu (t)=M y(t_k)\), it is difficult to analyze the stabilization of system (5) directly. Using the input delay approach proposed by Fridman [36], system (5) is converted into the continuous-time system (6), whose stabilization can then be analyzed.
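The input delay reformulation can be made concrete with a small sketch (the function `input_delay` and the sampling instants used below are hypothetical). For any time \(t\), it finds the latest sampling instant \(t_k\le t\) and returns the sawtooth delay \(\tau_3(t)=t-t_k\), so that the held state \(x(t_k)\) equals \(x(t-\tau_3(t))\). Sampling need not be uniform; the only property the analysis relies on is that consecutive gaps stay below the bound \(\tau_3\).

```python
import bisect


def input_delay(t, sample_times):
    """Return (tau3, k) with tau3 = t - t_k, where t_k = sample_times[k] is the
    latest sampling instant not exceeding t (sample_times must be increasing).
    The held input K x(t_k) can then be written as K x(t - tau3)."""
    k = bisect.bisect_right(sample_times, t) - 1
    if k < 0:
        raise ValueError("t lies before the first sampling instant t_0")
    return t - sample_times[k], k


if __name__ == "__main__":
    ts = [0.0, 0.02, 0.05, 0.06, 0.10]   # illustrative, non-uniform instants
    for t in (0.0, 0.01, 0.03, 0.05, 0.08):
        tau3, k = input_delay(t, ts)
        print(f"t = {t:.2f}: t_k = {ts[k]:.2f}, tau3(t) = {tau3:.3f}")
```

The delay resets to zero at every sampling instant and grows with slope one in between, which is why the transformed system (6) contains a time-varying, piecewise-linear delay.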

In order to investigate the stabilization of system (6) and compute the sampled-data feedback gain matrices \(K, M \), we need the following assumptions and lemmas.

Assumption 1

The leakage delays \(\sigma (t),\ \rho (t)\) and the time-varying delays \(\tau _1(t),\ \tau _2(t) \) are continuously differentiable functions satisfying \( 0\le \sigma _1\le \sigma (t)<\sigma _2,\ \ 0\le \rho _1\le \rho (t)<\rho _2,\ \dot{\sigma }(t)<\sigma ,\ \dot{\rho }(t)<\rho ,\ 0<\tau _1(t)<\tau _1,\ \dot{\tau }_1(t)<\tau _{11}<1,\ 0<\tau _2(t)<\tau _2,\ \dot{\tau }_2(t)<\tau _{22}<1,\) where \(\sigma _1,\sigma _2,\rho _1,\rho _2,\sigma ,\rho ,\tau _1,\tau _2,\tau _{11}\) and \(\tau _{22} \) are constants.

Remark 4

It is worth pointing out that only upper bounds of the leakage delays were considered in [37–39]. In this paper, both the upper and the lower bounds of the leakage delays are taken into account. Therefore, our results are more general than those in [37–39].

Assumption 2

There exist positive constants \(\mu _{i}, \nu _{i}\), such that

for all \(x_{i},\,\tilde{x}_{i} \in {\mathbb {R}}\), \(x_{i}\ne \tilde{x}_{i},\,i=1,2,\ldots ,n\).

Lemma 1

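[40] Given any real positive definite symmetric matrix \(M\) and a vector function \(\omega (\cdot ): [a,b]\rightarrow {\mathbb {R}}^{n}\) such that the integrations concerned are well defined, then
\[\left(\int _a^b\omega (s)\mathrm{d}s\right)^{T}M\left(\int _a^b\omega (s)\mathrm{d}s\right)\le (b-a)\int _a^b\omega ^{T}(s)M\omega (s)\mathrm{d}s.\]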

Lemma 2

(Barbalat’s lemma) [41] If \(w(t)\) is uniformly continuous and \(\int _{t_{0}}^{t}w(s)\mathrm{d}s\) has a finite limit as \(t\rightarrow +\infty \), then \(\lim \nolimits _{t\rightarrow +\infty }w(t)=0\).
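Lemma 1 is Gu's Jensen-type integral inequality [40], and it is the tool that turns integral terms of the Lyapunov–Krasovskii functional into quadratic forms. A quick NumPy sanity check of its direction is sketched below; the helper `jensen_gap` and the test function \(\omega\) are hypothetical. With a left Riemann sum on a uniform grid, the discrete gap is itself a Cauchy–Schwarz inequality, so it is nonnegative up to round-off, and it vanishes when \(\omega\) is constant.

```python
import numpy as np


def jensen_gap(M, omega, a, b, n=2000):
    """Return (b - a) * int_a^b w^T M w ds - (int_a^b w ds)^T M (int_a^b w ds)
    approximated by a left Riemann sum on n uniform cells.
    For symmetric M > 0 this is >= 0 (Jensen's / Gu's integral inequality)."""
    h = (b - a) / n
    s = a + h * np.arange(n)                          # left endpoints of the cells
    W = np.array([omega(si) for si in s])             # shape (n, dim)
    integral_w = h * W.sum(axis=0)                    # int_a^b w(s) ds
    integral_quad = h * np.einsum("ki,ij,kj->", W, M, W)  # int_a^b w^T M w ds
    return (b - a) * integral_quad - integral_w @ M @ integral_w


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    G = rng.standard_normal((3, 3))
    M = G @ G.T + np.eye(3)                           # a random positive definite matrix
    omega = lambda s: np.array([np.sin(3 * s), np.cos(s), s ** 2])
    print("gap on [-0.5, 0]:", jensen_gap(M, omega, -0.5, 0.0))
```

The interval \([-0.5, 0]\) mimics a delay window \([t-\tau, t]\); any interval works.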

3 Main results

In this section, the stability of system (6) is investigated and sufficient conditions ensuring its stability are derived. By solving the resulting LMIs, a suitable sampled-data controller is obtained to stabilize BAM neural networks with time-varying leakage delays.

Theorem 1

Let Assumptions 1 and 2 hold; then, the trivial solution of system (6) is globally asymptotically stable if there exist positive-definite symmetric matrices \(P,\ Q,\) \(P_{i}(i=1,2, 3),\ Q_{i}(i=1,2, 3),\ R_{i}(i=1,\ldots , 6),\ T_{i}(i=1,\ldots , 8),\ S_{i}(i=1,\ldots , 6)\), positive-definite diagonal matrices \(X_{i}(i=1,\ldots , 4)\) and arbitrary matrices \(X,Y\) such that the following LMIs hold:

where

Moreover, the desired controller gain matrices are given by \(K=R_5^{-1}X,\ \ M=R_6^{-1}Y\).
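Once an LMI solver returns feasible decision variables, the gains follow from \(K=R_5^{-1}X\) and \(M=R_6^{-1}Y\). A minimal NumPy sketch of this last step is given below, assuming the solver output is already available as arrays; the function name `controller_gains` and the test values are hypothetical. It checks that \(R_5\) and \(R_6\) are symmetric positive definite, as the theorem requires (which also guarantees invertibility), and solves \(R_5K=X\) rather than forming an explicit inverse, which is the numerically preferred route.

```python
import numpy as np


def controller_gains(R5, X, R6, Y):
    """Recover K = R5^{-1} X and M = R6^{-1} Y from LMI decision variables."""
    for name, R in (("R5", R5), ("R6", R6)):
        if not np.allclose(R, R.T):
            raise ValueError(f"{name} must be symmetric")
        # Cholesky succeeds only for positive definite matrices; otherwise it
        # raises numpy.linalg.LinAlgError, signalling an infeasible solution.
        np.linalg.cholesky(R)
    K = np.linalg.solve(R5, X)  # solves R5 @ K = X
    M = np.linalg.solve(R6, Y)  # solves R6 @ M = Y
    return K, M


if __name__ == "__main__":
    R5 = np.diag([2.0, 4.0])
    X = np.array([[2.0, 4.0], [8.0, -4.0]])
    R6 = np.array([[3.0, 1.0], [1.0, 2.0]])
    Y = np.array([[1.5, -1.0], [0.5, 3.0]])
    K, M = controller_gains(R5, X, R6, Y)
    print("K =\n", K, "\nM =\n", M)
```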

Proof

Consider the following general Lyapunov–Krasovskii functional:

where

Calculating the derivative of \(V(t)\) along the solutions of system (6) gives

In addition,

From Assumption 2, we have

So,

where \(\Pi _1^\star =-\Pi _1>0,\ \Pi _2^\star =-\Pi _2>0\) are defined in (7) and (8) and

From (19), one has

Moreover,

where \(\Phi =\max \{\sup \limits _{\theta \in [-s_1,0]}\parallel \phi (\theta )\parallel ,\sup \limits _{\theta \in [-s_1,0]} \parallel \dot{\phi }(\theta )\parallel \}\) and \(\Psi =\max \{\sup \limits _{\theta \in [-s_2,0]}\parallel \psi (\theta )\parallel ,\sup \limits _{\theta \in [-s_2,0]} \parallel \dot{\psi }(\theta )\parallel \}\).

On the other hand, by the definition of \(V(t)\), we get

Then, combining (21) and (22), we obtain

which shows that the solution of (6) is uniformly bounded on \([0,\infty )\). Next, we prove that \((\parallel x(t)\parallel ,\parallel y(t)\parallel )\rightarrow (0,0)\) as \(t\rightarrow \infty \). On the one hand, the boundedness of \(\parallel \dot{x}(t)\parallel \) and \( \parallel \dot{y}(t)\parallel \) can be deduced from (6) and (23). On the other hand, from (19), we have \(\dot{V}(t)\le -\xi _1^{T}(t)\Pi _1^\star \xi _1(t)-\xi _2^{T}(t)\Pi _2^\star \xi _2(t)\le -\lambda _{\mathrm{min}}(\Pi _1^\star )x^{T}(t) x(t)-\lambda _{\mathrm{min}}(\Pi _2^\star )y^{T}(t) y(t).\) Integrating this inequality from \(0\) to \(t\) and using \(V(t)\ge 0\) yields \(\lambda _{\mathrm{min}}(\Pi _1^\star )\int _0^t x^{T}(s)x(s)\mathrm{d}s+\lambda _{\mathrm{min}}(\Pi _2^\star )\int _0^t y^{T}(s)y(s)\mathrm{d}s\le V(0)\). Hence the nondecreasing functions \(\int _0^t x^{T}(s)x(s)\mathrm{d}s\) and \(\int _0^t y^{T}(s)y(s)\mathrm{d}s\) are bounded and have finite limits as \(t\rightarrow +\infty \). Moreover, since \(x(t)\), \(\dot{x}(t)\), \(y(t)\) and \(\dot{y}(t)\) are bounded, \(x^{T}(t)x(t)\) and \(y^{T}(t)y(t)\) are Lipschitz continuous and therefore uniformly continuous. By Lemma 2, \((\parallel x(t)\parallel ,\parallel y(t)\parallel )\rightarrow (0,0)\). That is, the equilibrium point of system (6) is globally asymptotically stable, which implies that the designed sampled-data controller can stabilize the unstable BAM neural networks with leakage delays. \(\square \)
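The final step of the proof combines the Rayleigh-quotient bound \(\xi^{T}\Pi^\star\xi\ge\lambda_{\min}(\Pi^\star)\,\xi^{T}\xi\) with the fact that \(x(t)\) is one block of \(\xi_1(t)\), so that \(\xi_1^{T}\xi_1\ge x^{T}x\). The exact ordering of \(\xi_1(t)\) is not reproduced in this section, so the sketch below simply assumes that \(x\) is the leading block; `lambda_min` and `rayleigh_bound_holds` are hypothetical helper names. Checking \(\lambda_{\min}(\Pi^\star)>0\) is also a direct numerical way to confirm that a solver's output satisfies \(\Pi<0\).

```python
import numpy as np


def lambda_min(Pi_star):
    """Smallest eigenvalue of the symmetric matrix Pi_star (= -Pi).
    Raises ValueError if Pi_star is not positive definite, i.e. if Pi < 0 fails."""
    sym = 0.5 * (Pi_star + Pi_star.T)   # remove round-off asymmetry
    lam = np.linalg.eigvalsh(sym)[0]    # eigvalsh returns eigenvalues in ascending order
    if lam <= 0:
        raise ValueError("Pi_star is not positive definite")
    return lam


def rayleigh_bound_holds(Pi_star, xi, n, tol=1e-9):
    """Check xi^T Pi_star xi >= lambda_min(Pi_star) * x^T x, where x = xi[:n]
    is ASSUMED to be the leading block of the augmented vector xi."""
    x = xi[:n]
    return xi @ Pi_star @ xi >= lambda_min(Pi_star) * (x @ x) - tol


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    G = rng.standard_normal((6, 6))
    Pi_star = G @ G.T + 0.1 * np.eye(6)  # stand-in for a solver's -Pi_1
    print("lambda_min =", lambda_min(Pi_star))
    print("bound holds:", rayleigh_bound_holds(Pi_star, rng.standard_normal(6), n=2))
```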

In particular, if the leakage delays are constant, that is, \(\sigma (t)=\sigma \) and \(\rho (t)=\rho \), then system (6) becomes

Similar to the proof of Theorem 1, we have the following corollary.

Corollary 1

Let Assumptions 1 and 2 hold; then, the trivial solution of system (24) is globally asymptotically stable if there exist positive-definite symmetric matrices \(P,\ Q,\) \(P_1,\ Q_1,\ R_{i}(i=1,\ldots , 6),\ T_{i}(i=1,\ldots , 8),\ S_{i}(i=1,2)\), positive-definite diagonal matrices \(X_{i}(i=1,\ldots , 4)\) and any matrices \(X,Y\) such that the following LMIs hold:

where

Moreover, the desired controller gain matrices are given by \(K=R_5^{-1}X,\ \ M=R_6^{-1}Y\).

Remark 5

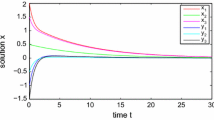

From Fig. 2, we can see that system (1) is unstable when \(\rho _1=\rho _2=0.2\). Taking the sampling instants \(t_k=0.01k,\ k=0,1,2,\ldots \) and solving LMIs (25), we obtain the gain matrices of the designed sampled-data controller as follows:

By Corollary 1, system (1) is asymptotically stable under the given sampled-data control. Figure 3 shows the trajectories of state variables.

Remark 6

In fact, the leakage delay has a significant effect on the dynamic behavior of systems: a large leakage delay can cause instability, as shown in [17]. In previous papers [13–17], the authors only considered the stability of BAM neural networks with leakage delays, using Lyapunov–Krasovskii functionals, free-weighting matrices and related techniques. Different from those articles, this paper mainly investigates the stabilization of BAM neural networks with leakage delays, with sampled-data controllers designed by solving LMIs. Thus, when leakage delays lead to instability, the system can be stabilized by sampled-data control.
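The idea of Remark 6 can be illustrated on a hypothetical scalar leakage-delay equation \(\dot x(t)=-a\,x(t-\rho)+u(t)\) with zero-order-hold feedback \(u(t)=-k\,x(t_k)\) and uniform sampling \(t_k=kh\). This is an assumption-laden toy model, not the BAM network or the LMI design of Theorem 1 and Corollary 1: the gain \(k=12\) is hand-picked. With continuous feedback, any \(k>a\) excludes characteristic roots with \(\mathrm{Re}\,\lambda\ge 0\), because then \(|\lambda+k|\ge k>a\ge|a\,e^{-\rho\lambda}|\); the sketch assumes that a short sampling period \(h=0.01\) preserves this. With \(a=10\) and \(\rho=0.2\), the open loop oscillates with growing amplitude, while the sampled-data loop decays.

```python
def simulate_sampled(a, rho, gain, h, t_end=10.0, dt=1e-3, x0=1.0):
    """Forward-Euler sketch of the hypothetical scalar system
        x'(t) = -a * x(t - rho) + u(t),  u(t) = -gain * x(t_k) for t in [t_k, t_{k+1}),
    with uniform sampling t_k = k*h and constant history x(theta) = x0, theta <= 0."""
    n_steps = int(round(t_end / dt))
    lag = int(round(rho / dt))   # leakage delay in grid steps
    per = int(round(h / dt))     # grid steps per sampling period
    x = [x0]
    x_held = x0                  # zero-order hold: last sampled state x(t_k)
    for step in range(n_steps):
        if step % per == 0:      # step*dt is a sampling instant t_k
            x_held = x[step]
        idx = step - lag
        x_delayed = x[idx] if idx >= 0 else x0
        u = -gain * x_held       # control is constant between sampling instants
        x.append(x[step] + dt * (-a * x_delayed + u))
    return x


if __name__ == "__main__":
    for gain in (0.0, 12.0):
        tail = simulate_sampled(a=10.0, rho=0.2, gain=gain, h=0.01)[-1000:]
        print(f"gain = {gain}: max |x| on [9, 10] = {max(abs(v) for v in tail):.3e}")
```

Setting `gain=0.0` recovers the uncontrolled leakage-delay system, which makes the before and after comparison direct.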

4 Numerical examples

In this section, a simulation example is given to show the feasibility and effectiveness of the theoretical results.

Example 1

Consider the following BAM neural network with leakage delays:

where

The neuron activation functions are \(g_{i}(y)=\tanh (y_{i}),\ \ f_j(x)=\tanh (x_j)\). The time-varying leakage delays are \(\sigma (t)=0.5+0.01 \sin t,\ \ \rho (t)=0.4+0.01 \cos t\), and the time-varying delays are chosen as \(\tau _1(t)=0.1 \sin ^2 t,\ \ \tau _2(t)=0.1 \cos ^2 t.\)

It is easy to see that \(\sigma _1=0.49,\ \sigma _2=0.51,\ \ \rho _1=0.39,\ \ \rho _2=0.41,\ \ \sigma = \rho =0.01\) and \(\tau _{11}=\tau _{22}=0.2<1\). Moreover, \(\mu _{i}^+=1,\ \ \nu _{i}^+=1,\ \ U=\hbox {diag}[1,1,1], \ \ V=\hbox {diag}[1,1,1].\) The simulation results show that system (26) without control is unstable (Fig. 4).

Fig. 4 The state trajectories of system (26)
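The constants listed for Example 1 can be cross-checked by dense sampling over one period, as in the sketch below (the helpers `delay_bounds` and `tanh_max_slope` are hypothetical). For each delay it reports the minimum and maximum, which are the lower and upper bounds required by Assumption 1, together with the maximum of its derivative; for \(\tau_1(t)\) and \(\tau_2(t)\) this maximum is 0.1, below the value 0.2 used for \(\tau_{11}\) and \(\tau_{22}\), so \(\dot\tau_1(t)<\tau_{11}<1\) holds. Since the inequality of Assumption 2 is not reproduced here, the tanh check assumes it bounds difference quotients of the activations, for which the largest slope of tanh (equal to 1, attained at the origin) is the relevant constant.

```python
import math


def delay_bounds(delay, d_delay, period=2 * math.pi, n=100_000):
    """Estimate (min, max) of a periodic delay function and the max of its
    derivative by dense sampling over one period [0, period]."""
    ts = [period * i / n for i in range(n + 1)]
    values = [delay(t) for t in ts]
    return min(values), max(values), max(d_delay(t) for t in ts)


def tanh_max_slope(span=5.0, n=10_000):
    """Max of d/du tanh(u) = 1 - tanh(u)**2 on a grid over [-span, span].
    By the mean value theorem it bounds every (tanh(u) - tanh(v)) / (u - v)."""
    grid = (-span + 2 * span * i / n for i in range(n + 1))
    return max(1.0 - math.tanh(u) ** 2 for u in grid)


if __name__ == "__main__":
    cases = {
        "sigma(t)": (lambda t: 0.5 + 0.01 * math.sin(t), lambda t: 0.01 * math.cos(t)),
        "rho(t)": (lambda t: 0.4 + 0.01 * math.cos(t), lambda t: -0.01 * math.sin(t)),
        "tau_1(t)": (lambda t: 0.1 * math.sin(t) ** 2, lambda t: 0.1 * math.sin(2 * t)),
        "tau_2(t)": (lambda t: 0.1 * math.cos(t) ** 2, lambda t: -0.1 * math.sin(2 * t)),
    }
    for name, (f, df) in cases.items():
        lo, hi, dmax = delay_bounds(f, df)
        print(f"{name}: min = {lo:.4f}, max = {hi:.4f}, max derivative = {dmax:.4f}")
    print(f"max slope of tanh = {tanh_max_slope():.4f}")
```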

In addition, we investigate the stabilization of system (26) by designing a suitable sampled-data controller. Taking the sampling instants \(t_k=0.02k,\ k=0,1,2,\ldots \), so that the sampling period is \(\tau _3=0.02\), and solving LMIs (7) and (8), we obtain:

The gain matrices of the designed controller can be obtained as follows:

Due to space limitations, the other solution matrices \(\left( T_{i}(i=1,\ldots , 8),\ \ S_{i}(i=1,\ldots , 6),\ \ X_{i}(i=1,\ldots , 4)\right) \) are not listed. The feasibility of LMIs (7) and (8) shows that all conditions in Theorem 1 are satisfied. Hence, by Theorem 1, system (26) is globally asymptotically stable under the given sampled-data control. Figure 5 shows the trajectories of the state variables.

Fig. 5 The state trajectories of system (26) with sampled-data control

5 Conclusion

In this paper, a new sampled-data control strategy and the corresponding stability analysis have been developed to stabilize BAM neural networks with leakage delays. We first analyzed the instability caused by leakage delays. Afterward, we employed a sampled-data control strategy to stabilize the unstable systems. LMI conditions were derived to calculate the gain matrices of the designed sampled-data controller. Finally, a numerical example was given to show the effectiveness of our theoretical results. In future work, we will consider the complexity of such control strategies, as well as biological networks with leakage delays.

References

Wang WQ, Zhong SM, Nguang SK, Liu F (2013) Novel delay-dependent stability criterion for uncertain genetic regulatory networks with interval time-varying delays. Neurocomputing 121:170–178

Arunkumar A, Sakthivel R, Mathiyalagan K, Marshal Anthoni S (2014) Robust state estimation for discrete-time BAM neural networks with time-varying delay. Neurocomputing 131:171–178

Huang S, Xiang Z (2013) Robust \(L_\infty \) reliable control for uncertain switched nonlinear systems with time delay under asynchronous switching. Appl Math Comput 222:658–670

Syed AM, Balasubramaniam P (2009) Robust stability of uncertain fuzzy Cohen–Grossberg BAM neural networks with time-varying delays. Expert Syst Appl 36:10583–10588

Balasubramaniam P, Vidhya C (2010) Global asymptotic stability of stochastic BAM neural networks with distributed delays and reaction–diffusion terms. J Comput Appl Math 234:3458–3466

Kwon OM, Park JH, Lee SM, Cha EJ (2012) New results on exponential passivity of neural networks with time-varying delays. Nonlinear Anal Real World Appl 13:1593–1599

Sakthivel R, Arunkumar A, Mathiyalagan K, Marshal Anthoni S (2011) Robust passivity analysis of fuzzy Cohen–Grossberg BAM neural networks with time-varying delays. Appl Math Comput 218:3799–3809

Li H, Gao H, Shi P (2010) New passivity analysis for neural networks with discrete and distributed delays. IEEE Trans Neural Netw 21:1842–1847

Li XD, Fu XL (2013) Effect of leakage time-varying delay on stability of nonlinear differential systems. J Frankl Inst 350:1335–1344

Xu Y (2014) New results on almost periodic solutions for CNNs with time-varying leakage delays. Neural Comput Appl 25:1293–1302

Kosko B (1992) Neural networks and fuzzy systems. Prentice Hall, New Delhi

Gopalsamy K (1992) Stability and oscillations in delay differential equations of population dynamics. Kluwer, Dordrecht

Gopalsamy K (2007) Leakage delays in BAM. J Math Anal Appl 325:1117–1132

Balasubramaniam P, Kalpana M, Rakkiyappan R (2011) Global asymptotic stability of BAM fuzzy cellular neural networks with time delay in the leakage term, discrete and unbounded distributed delays. Math Comput Model 53:839–853

Liu B (2013) Global exponential stability for BAM neural networks with time-varying delays in the leakage terms. Nonlinear Anal Real World Appl 14:559–566

Lakshmanan S, Park JH, Lee TH, Jung HY, Rakkiyappan R (2013) Stability criteria for BAM neural networks with leakage delays and probabilistic time-varying delays. Appl Math Comput 219:9408–9423

Li XD, Fu XL, Balasubramaniam P, Rakkiyappan R (2010) Existence, uniqueness and stability analysis of recurrent neural networks with time delay in the leakage term under impulsive perturbations. Nonlinear Anal Real World Appl 11:4092–4108

Sakthivel R, Raja R, Marshal Anthoni S (2010) Asymptotic stability of delayed stochastic genetic regulatory networks with impulses. Phys Scr 7:055009

Sakthivel R, Samidurai R, Marshal Anthoni S (2010) New exponential stability criteria for stochastic BAM neural networks with impulses. Phys Scr 82:045802

Calise A, Hovakimyan N, Idam M (2001) Adaptive output feedback control of nonlinear systems using neural networks. Automatica 37:1201–1211

Behrouz E, Reza T, Matthew F (2014) A dynamic feedback control strategy for control loops with time-varying delay. Int J Control 87:887–897

Cai S, Hao J, He Q, Liu Z (2011) Exponential synchronization of complex delayed dynamical networks via pinning periodically intermittent control. Phys Lett A 375:1965–1971

Liu Y, Jiang HJ (2012) Exponential stability of genetic regulatory networks with mixed delays by periodically intermittent control. Neural Comput Appl 21:1263–1269

Stamova I, Stamov G (2011) Impulsive control on global asymptotic stability for a class of impulsive bidirectional associative memory neural networks with distributed delays. Math Comput Model 53:824–831

Zhu Q, Cao J (2012) Stability analysis of Markovian jump stochastic BAM neural networks with impulse control and mixed time delays. IEEE Trans Neural Netw Learn Syst 23:467–479

Li XD, Song SJ (2013) Impulsive control for existence, uniqueness, and global stability of periodic solutions of recurrent neural networks with discrete and continuously distributed delays. IEEE Trans Neural Netw Learn Syst 24:868–877

Li XD, Rakkiyappan R (2013) Impulsive controller design for exponential synchronization of chaotic neural networks with mixed delays. Commun Nonlinear Sci Numer Simul 18:1515–1523

Jagannathan S, Vandegrift M, Lewis FL (2000) Adaptive fuzzy logic control of discrete-time dynamical systems. Automatica 36:229–241

Zhang WT, Liu Y (2014) Distributed consensus for sampled-data control multi-agent systems with missing control inputs. Appl Math Comput 240:348–357

Lu J, Hill DJ (2008) Global asymptotical synchronization of chaotic Lure systems using sampled data: a linear matrix inequality approach. IEEE Trans Circuits Syst Part II 55:586–590

Fridman E (2010) A refined input delay approach to sampled-data control. Automatica 46:421–427

Wu ZG, Shi P, Su H, Chu J (2014) Exponential stabilization for sampled-data neural-network-based control systems. IEEE Trans Neural Netw Learn Syst 99:2180–2190

Lee TH, Wu ZG, Park JH (2012) Synchronization of a complex dynamical networks with coupling time-varying delays via sampled-data control. Appl Math Comput 219:1354–1366

Gan Q, Liang Y (2012) Synchronization of chaotic neural networks with time delay in the leakage term and parametric uncertainties based on sampled-data control. J Frankl Inst 349:1955–1971

Chen ZY, Fujioka H (2014) Performance analysis of nonlinear sampled-data emulated controllers. IEEE Trans Autom Control 59:2778–2783

Fridman E (1992) Using models with aftereffect in the problem of design of optimal digital control. Autom Remote Control 53:1523–1528

Zhu Q, Huang C, Yang X (2011) Exponential stability for stochastic jumping BAM neural networks with time-varying and distributed delays. Nonlinear Anal Hybrid Syst 5:52–77

Zhu Q, Cao J (2010) Robust exponential stability of Markovian jump impulsive stochastic Cohen–Grossberg neural networks with mixed time delays. IEEE Trans Neural Netw 21:1314–1325

Zhu Q, Cao J (2010) Stability analysis for stochastic neural networks of neutral type with both Markovian jump parameters and mixed time delays. Neurocomputing 73:2671–2680

Gu K (2000) An integral inequality in the stability problem of time-delay systems. In: Proceedings of the 39th IEEE conference on decision and control, Sydney, Australia, pp 2805–2810

Slotine J, Li W (1991) Applied nonlinear control. Prentice-Hall, Englewood Cliffs

Acknowledgments

This work was jointly supported by the National Natural Science Foundation of China under Grant 11226116, the Foundation of Key Laboratory of Advanced Process Control for Light Industry (Jiangnan University) Ministry of Education, P.R. China, and the Fundamental Research Funds for the Central Universities (JUSRP51317B, JUDCF13042).

Cite this article

Li, L., Yang, Y. & Lin, G. The stabilization of BAM neural networks with time-varying delays in the leakage terms via sampled-data control. Neural Comput & Applic 27, 447–457 (2016). https://doi.org/10.1007/s00521-015-1865-4
