Abstract

In this paper, the global asymptotic stability in the mean square of a class of high-order bidirectional associative memory (BAM) neural networks with time-varying delays and fixed moments of impulsive effect is studied. The proof makes use of Lyapunov–Krasovskii functionals, and the conditions are expressed in terms of linear matrix inequalities. A controller is derived to robustly stabilize this network. Two illustrative examples are given at the end of the paper to show the effectiveness of our results.


1 Introduction

The stability of dynamical neural networks with time delays, which have been used in many applications such as optimization, control and image processing, has received much attention recently (see, e.g., [2, 3, 6, 17–22, 25–27, 43, 44]). For example, the stability of delayed Hopfield neural networks [2, 3, 5, 31, 33] and Cohen–Grossberg neural networks [20, 42, 45] has been investigated. In particular, BAM neural networks were first proposed by Kosko [29, 30], and since then, the BAM neural system has been extensively studied [9, 11, 13, 27, 28, 34, 35, 37, 39, 41, 46]. As a class of dynamic systems, BAM neural networks usually take either first-order or high-order forms described in continuous or discrete time. The low-order BAM systems have been widely studied (e.g., [34, 35]) because of their potential for signal and image processing and pattern recognition. More recently, higher-order BAM systems [12, 27, 28] have been shown to display nice properties due to the special structure of their connection weights. The existence of a globally asymptotically stable equilibrium state has been studied under a variety of assumptions on the activation functions. Generally, the activation functions have been assumed to be continuously differentiable, monotonic and bounded [2, 5, 10, 13, 15, 18, 20, 36, 37, 39, 40]. In some applications, however, one is required to use unbounded and non-monotonic activation functions [27, 41, 46]. It has been shown by Crespi [16] and Morita [32] that the capacity of an associative memory network can be significantly improved if the sigmoidal functions are replaced by non-monotonic activation functions. On the other hand, the state of electronic networks is often subject to impulsive effects [7, 22].

To the best of our knowledge, except for [27, 28], which treat higher-order BAM neural networks by using differential inequalities with delay and impulse, the stability analysis of impulsive high-order BAM dynamical systems with time-varying delays has seldom been investigated and remains important and challenging. In this paper, we study another class of high-order BAM dynamical neural networks with impulses by using Lyapunov–Krasovskii functionals, linear matrix inequalities (LMIs) and differential inequalities. The paper is organized as follows. In Sect. 2, the problem formulation and preliminaries are given. In Sect. 3, several sufficient criteria are established for the equilibrium of the system to be asymptotically stable in the mean square. In Sect. 4, two examples are given to demonstrate the effectiveness of our results.

2 Preliminaries and Lemmas

In this paper, we will consider the following impulsive neural networks

or, equivalently,

where \(t\ge 0;\ i=1,2,\ldots ,n;\ j=1,2,\ldots ,m;~\triangle x_i(t)=x_i(t)-x_i(t^-),~\triangle y_j(t)=y_j(t)-y_j(t^-),~ \triangle x(t)=x(t)-x(t^-)=(\triangle x_1(t),\ldots ,\triangle x_n(t)),~\triangle y(t)=y(t)-y(t^-)=(\triangle y_1(t),\ldots ,\triangle y_m(t)),~0\le t_0<\cdots<t_k<\cdots ,\lim \limits _{k\rightarrow \infty }t_k=\infty \); \(x_i(t), y_j(t)\) denote the potentials of cells i and j at time t; \(x(t)=(x_1(t), x_2(t),\ldots , x_n(t))^T\in R^n,y(t)=(y_1(t), y_2(t),\ldots , y_m(t))^T\in R^m\); \(f(y(t)) = (f_1(y_1(t)),\ldots , f_m(y_m(t)))^T\in R^m\) and \(g(x(t)) = (g_1(x_1(t)), g_2(x_2(t)),\ldots , g_n(x_n(t)))^T\in R^n\) denote the activation functions of the neurons at time t; \(u(t)=(u_1(t), \ldots , u_{m_1}(t))^T\in R^{m_1},\ \widetilde{u}(t)=(\widetilde{u}_1(t), \widetilde{u}_2(t),\ldots , \widetilde{u}_{m_2}(t))^T\in R^{m_2}\) are continuous control inputs; I and \(\widetilde{I}\) denote the identity matrices of size n and m, respectively. \(J(t) = (J_1(t), \ldots , J_{n}(t))^T\in R^{n},\) \(\widetilde{J}(t) = (\widetilde{J}_1(t), \widetilde{J}_2(t),\ldots , \widetilde{J}_{m}(t))^T \in R^{m}\) are the impulsive control inputs at time t; \(\Upsilon _f(s) = \mathrm{diag}( f(y(t-s)), f (y(t-s)), \ldots , f (y(t-s)))_{n\times n},\Upsilon _g(s) = \mathrm{diag}(g(x(t-s)), g(x(t-s)), \ldots , g(x(t-s)))_{m\times m};\) \(D = \mathrm{diag}(d_1, \ldots , d_n)> 0,\widetilde{D} = \mathrm{diag}(\widetilde{d_1}, \ldots , \widetilde{d_m}) > 0\) are positive diagonal matrices, and \(d_i\), \(\widetilde{d_j}\) represent the rates of isolation of cells i and j from the other states and inputs, respectively. This means that cells i and j reset their potential to the other state during the isolation.
\(A = (a_{ij})_{n\times m}, B = (b_{ij})_{n\times m},~R = (r_{ij})_{n\times m_1},~M = (m_{ij})_{n\times n},~E = (e_{ij}(t))_{n\times n},~\widetilde{A} = (\widetilde{a_{ji}})_{m\times n}, ~\widetilde{B} = (\widetilde{b_{ji}})_{m\times n},~\widetilde{R} = (\widetilde{r}_{ji})_{m\times m_2},~ \widetilde{M} = (\widetilde{m}_{ji})_{m\times m},~\widetilde{E} = (\widetilde{e_{ji}}(t))_{m\times m}\) are the feedback matrix and the delayed feedback matrix, respectively. \(\Gamma _1 = [C^T_1, C^T_2, \ldots , C^T_n]^T,C_i = (c_{ijl})_{m\times m}; \Gamma _2 = [\widetilde{C}^T_1, \widetilde{C}^T_2, \ldots , \widetilde{C}^T_m]^T,\widetilde{C}_j = (\widetilde{c}_{jil})_{n\times n}\). \(E(t), \widetilde{E}(t)\) are matrix functions with time-varying uncertainties, that is, \(E(t)=E+\Delta E,\widetilde{E}(t)=\widetilde{E}+\Delta \widetilde{E}\), where \(E,\widetilde{E}\) are known real constant matrices, \(\Delta E,\Delta \widetilde{E}\) are unknown matrices representing time-varying parameter uncertainties. We assume that the uncertainties are norm-bounded and can be described as

where \(H,\widetilde{H},D_1, \widetilde{D}_1 \) are known real constant matrices with appropriate dimensions, and the uncertain matrices \(F(t),\widetilde{F}(t)\), which may be time varying, are unknown and satisfy \(F^T(t)F(t)\le I,\widetilde{F}^T(t)\widetilde{F}(t)\le \widetilde{I}\) for any given t. It is assumed that the elements of \(F(t)\) and \(\widetilde{F}(t)\) are Lebesgue measurable. When \(F(t) = 0,\widetilde{F}(t)=0,\) system (2) is referred to as the nominal impulsive neural system. The time delays \(\tau (t), \sigma (t)\) are continuous functions that correspond to the finite speed of axonal signal transmission and satisfy \(0\le \tau (t)\le \tau , 0 \le \sigma (t) \le \sigma \) and \(0<\sigma '(t)\le \sigma _1<1,0<\tau '(t)\le \tau _1<1.\)
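As an illustration of the norm-bounded uncertainty model, the following Python sketch assumes the commonly used structure \(\Delta E = HF(t)D_1\) (an assumption, since only the side conditions on \(H, D_1, F\) are stated above). The numerical matrices are hypothetical and are not the data of this paper. The sketch draws one admissible \(F\) with \(F^TF\le I\) and evaluates the induced bound \(\Vert \Delta E\Vert _2\le \Vert H\Vert _2\Vert D_1\Vert _2\).

```python
import numpy as np

def sample_contraction(rows, cols, rng):
    """Return a random matrix F with F^T F <= I (spectral norm at most 1)."""
    F = rng.standard_normal((rows, cols))
    # Rescale only if the largest singular value exceeds 1.
    return F / max(1.0, np.linalg.norm(F, 2))

rng = np.random.default_rng(0)

# Hypothetical scaling matrices (illustrative values only).
H = np.array([[0.2, 0.0], [0.1, 0.3]])
D1 = np.array([[0.5, 0.1], [0.0, 0.4]])

F = sample_contraction(2, 2, rng)
delta_E = H @ F @ D1                      # assumed structure of the uncertainty
bound = np.linalg.norm(H, 2) * np.linalg.norm(D1, 2)

print("||Delta E||_2 =", np.linalg.norm(delta_E, 2), "<=", bound)
```

Any admissible \(F(t)\) yields a perturbation inside the same ball, which is why the stability conditions can be stated in terms of \(H\) and \(D_1\) alone.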

The initial conditions associated with (1) or (2) are of the form

in which \(\phi _i (t), \varphi _j (t)\ (i = 1, 2, \ldots , n;\ j = 1, 2, \ldots , m)\) are continuous functions. The notation used in this paper is fairly standard. For a symmetric matrix M, \(M>\ (\ge ,<,\le )~0\) means that M is positive definite (positive semidefinite, negative definite, negative semidefinite), respectively. For \(x\in R^n,\) denote \(\Vert x\Vert =\sqrt{x^Tx}\) and \(|x| =\sum _{i=1}^n|x_i|.\)

Remark 1

BAM is a type of recurrent neural network. Given a pattern, it can return another pattern, potentially of a different size. It is similar to the Hopfield network [2, 3, 5, 31, 33] in that both are forms of associative memory; Hopfield networks, however, return patterns of the same size. Competitive neural networks [1, 4], by contrast, can model the dynamics of cortical cognitive maps with unsupervised synaptic modifications.

Throughout this paper, the activation functions \(f(\cdot ), g(\cdot )\) and the impulsive control inputs \(J(\cdot ), \widetilde{J}(\cdot )\) are assumed to possess the following properties:

- (H1) There exist matrices \(K\in R^{m\times m}, U\in R^{n\times n}\) such that, for all \(y,z\in R^m;\ x,s\in R^n\),

  $$\begin{aligned} |f(y)-f(z)|\le |K(y-z)|;\ \ |g(x)-g(s)|\le |U(x-s)|. \end{aligned}$$

- (H2) \(f(0)= g(0)=J(0)= \widetilde{J}(0)=0.\)

- (H3) There exist positive numbers \(O_{j}, \widetilde{O}_{i}\) such that

  $$\begin{aligned} |f_j(x)|\le O_{j},\ |g_i(x)|\le \widetilde{O}_{i} \end{aligned}$$

  for all \(x\in R\ (i = 1, 2, \ldots , n;\ j = 1, 2, \ldots ,m).\)

Remark 2

Under assumption (H2), the equilibrium point of system (2) is the trivial solution of system (2). When the equilibrium point \((x^*,y^*)\) of system (2) arising in an engineering setting is not the trivial solution, one can shift it to (0, 0) by the transformation \(u=x-x^*,v=y-y^*\). Then, with \(\widetilde{f}(v)=f(v+y^*)-f(y^*),\widetilde{g}(u)=g(u+x^*)-g(x^*)\), the conditions on the activation functions in assumption (H2) are always satisfied (see [19, 32]). The conditions \(J(0)=0,~\widetilde{J}(0) = 0\) mean that the impulsive control inputs are not active at time 0.
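The shift described in Remark 2 can be sketched in a few lines of Python. The activation `f` and the equilibrium component `y_star` below are hypothetical choices made only for illustration. The shifted function vanishes at the origin and inherits the Lipschitz constant of the original one.

```python
import math

def shift_activation(f, y_star):
    """Return f_tilde(v) = f(v + y_star) - f(y_star), which vanishes at v = 0."""
    f_at_star = f(y_star)
    return lambda v: f(v + y_star) - f_at_star

# Hypothetical scalar activation with f(0) != 0 and Lipschitz constant 1.
def f(y):
    return math.tanh(y) + 0.2

y_star = 0.7  # hypothetical equilibrium component
f_tilde = shift_activation(f, y_star)

print(f(0.0))        # nonzero: (H2) fails for f itself
print(f_tilde(0.0))  # zero: (H2) holds after the shift
```

Because the shift only translates the argument and subtracts a constant, any bound of the form \(|f(y)-f(z)|\le k|y-z|\) carries over unchanged to \(\widetilde{f}\).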

Let \(x(t; \phi ),\ y(t; \varphi )\) denote the state trajectory of neural network (1) or (2) from the initial data \(x(s) = \phi (s)\in PC([t_0-\sigma , t_0]; R^n),\ y(s) = \varphi (s)\in PC([t_0-\tau , t_0]; R^m)\), respectively, where \(PC([t_0-r, t_0]; R^p)\) denotes the set of piecewise right-continuous functions \(\phi : [t_0-r,t_0]\rightarrow R^p\) with the norm defined by \(\Vert \phi \Vert _r=\sup _{t_0-r\le \theta \le t_0}\Vert \phi (\theta )\Vert .\) It can easily be seen that system (2) admits a trivial solution \(x(t; 0) = 0,\ y(t; 0) = 0\) corresponding to the initial data \(\phi = 0,\ \varphi =0\).

Definition 1

([46]) For system (1) or (2) and every \(\xi _1\in PC([t_0-\sigma , t_0]; R^n)\) and \(\xi _2\in PC([t_0-\tau , t_0]; R^m)\), the trivial solution (equilibrium point) is robustly, globally, asymptotically stable in the mean square if the following holds:

Lemma 1

For any vectors \(a,b\in R^n,\) the inequality

holds for \(\forall \ \rho >0.\)
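A short derivation, assuming that Lemma 1 is the usual scalar-weighted bound on a cross term (this standard form is an assumption about the intended statement): expanding a nonnegative square gives

$$\begin{aligned} 0\le \left\| \sqrt{\rho }\,a-\tfrac{1}{\sqrt{\rho }}\,b\right\| ^2=\rho \,a^Ta-2a^Tb+\rho ^{-1}b^Tb \quad \Longrightarrow \quad 2a^Tb\le \rho \,a^Ta+\rho ^{-1}b^Tb,\qquad \rho >0. \end{aligned}$$

Equality holds exactly when \(b=\rho a\), so the free scalar \(\rho \) can be tuned to tighten the bound in a given direction.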

Lemma 2

For any vectors \(a,b\in R^n,\) the inequality

holds for any matrices \(X>0.\)
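A numerical sanity check, assuming that Lemma 2 is the standard matrix-weighted bound \(2a^Tb\le a^TXa+b^TX^{-1}b\) for \(X>0\) (an assumed form). The random test data are arbitrary.

```python
import numpy as np

def cross_term_gap(a, b, X):
    """Return a^T X a + b^T X^{-1} b - 2 a^T b, which is >= 0 whenever X > 0."""
    return a @ X @ a + b @ np.linalg.solve(X, b) - 2.0 * (a @ b)

rng = np.random.default_rng(1)
G = rng.standard_normal((3, 3))
X = G @ G.T + 3.0 * np.eye(3)  # symmetric positive definite by construction

# Evaluate the gap on many random vector pairs.
gaps = [
    cross_term_gap(rng.standard_normal(3), rng.standard_normal(3), X)
    for _ in range(1000)
]
print("smallest gap over 1000 samples:", min(gaps))
```

The gap equals \((X^{1/2}a-X^{-1/2}b)^T(X^{1/2}a-X^{-1/2}b)\), so it vanishes only for \(b=Xa\).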

Lemma 3

([9]) Given constant matrices \(\Sigma _1, \Sigma _2, \Sigma _3\) where \(\Sigma _1 = \Sigma _1^T\) and \(0<\Sigma _2 = \Sigma ^T_2\), then

if and only if

Lemma 4

([38]) Let A, D, E, F and P be real matrices of appropriate dimensions with \(P >0\) and F satisfying \(F^TF\le I.\) Then, for any scalar \(\varepsilon >0\) satisfying \(P^{-1}-\varepsilon ^{-1}DD^T >0,\) we have

3 Impulsively Exponential Stability

Now, we shall present and prove our main results. Our results complement and improve some of the known results found in the literature.

- \(\widetilde{\Lambda }_1=-Q\widetilde{D}-\widetilde{D}^TQ+Q_1+W_1+W_1^T\),
- \(\widetilde{\Lambda }_2=-(1-\tau _1)Q_1-W_2-W_2^T+\rho _{Z_2}K^TK,\ \widetilde{\Lambda }_3=-\sigma P_2+\epsilon _1\sigma \lambda _{2}I\),
- \(\Lambda _1=-PD-D^TP+N_1+N_1^T+P_1\),
- \(\Lambda _2=-(1-\sigma _1)P_1-N_2-N_2^T+\rho _{Z_1}U^TU,\ \Lambda _3=-\tau Q_2+\epsilon _2\tau \lambda _{1}I\),

where \(\lambda _1=\rho _{\Gamma ^T_1\Gamma _1},\lambda _2=\rho _{\Gamma ^T_2\Gamma _2}.\)
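A sketch of how \(\lambda _1\) could be evaluated, assuming that \(\rho _M\) denotes the largest eigenvalue of the symmetric matrix M (an assumption about the notation) and using hypothetical second-order weight blocks \(C_i\).

```python
import numpy as np

def largest_eig_gram(blocks):
    """Stack C_1, ..., C_n vertically into Gamma and return lambda_max(Gamma^T Gamma)."""
    gamma = np.vstack(blocks)
    # eigvalsh returns eigenvalues in ascending order for symmetric input.
    return float(np.linalg.eigvalsh(gamma.T @ gamma)[-1])

# Hypothetical blocks C_1, C_2 (m = 2, n = 2), for illustration only.
C1 = np.array([[0.1, 0.0], [0.2, 0.1]])
C2 = np.array([[0.0, 0.3], [0.1, 0.0]])

lam1 = largest_eig_gram([C1, C2])
print("lambda_1 =", lam1)
```

Since \(\Gamma _1^T\Gamma _1=\sum _i C_i^TC_i\), the same number is obtained without forming the stacked matrix, and it equals the squared spectral norm of \(\Gamma _1\).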

Theorem 1

Consider system (2) with the impulsive control inputs \(J(\cdot )=0,\ \widetilde{J}(\cdot )=0\). Under assumptions (H1)–(H3), the equilibrium point of system (2) is robustly, globally, asymptotically stable in the mean square if there exist some scalars \(\epsilon _i>0(i=1,2,3,4),\rho _X> 0 (X=Q_2,P_2,X_1,X_2,Z_1,Z_2)\) and matrices \(N_i(i=1,2,3),W_i(i=1,2,3),X_1>0,X_2>0,Z_1>0,Z_2>0,P>0,Q>0,P_1>0,Q_1>0,P_2>0,Q_2>0\) such that

hold.

Proof

Let

(I) We consider the case of \(t\ne t_k.\) Calculating the derivative of V(t) along the solutions of (2), we obtain

From Lemmas 1, 2 and 4, we have

Since \(\Upsilon ^T_g\Upsilon _g = \Vert g(x(t - s))\Vert ^2 I\) and \(\Vert g(x(t - s))\Vert ^2\le \sum _{j=1}^mO_j=\alpha ,\) it follows that

Since \(\Upsilon ^T_f\Upsilon _f = \Vert f(y(t - s))\Vert ^2 I\) and \(\Vert f(y(t - s))\Vert ^2\le \sum _{i=1}^n\widetilde{O}_i=\widetilde{\alpha },\) it follows that

Noting that \(x(t)-x(t-\sigma (t))-\int _{t-\sigma (t)}^t\dot{x}(s)\hbox {d}s=0,\ y(t)-y(t-\tau (t))-\int _{t-\tau (t)}^t\dot{y}(s)\hbox {d}s=0,\) then, there exist matrices \(N_1,N_2,N_3,W_1,W_2,W_3\) such that

Moreover,

Thus,

where \(\xi _1=[y^T(t)\ y^T(t-\tau (t))\ (\int _{t-\tau (t)}^t\dot{y}(s)\hbox {d}s)^T\ g^T(x(s))]^T,\xi _2=[x^T(t)\ x^T(t-\sigma (t))\ (\int _{t-\sigma (t)}^t\dot{x}(s)\hbox {d}s)^T\ f^T(y(s))]^T\), and

By Lemma 3, it is obvious from (5) and (6) that \(\Pi _1<0,\Pi _2<0\). There must exist scalars \(\eta _1>0,\eta _2>0\) such that

So

(II) We consider the case of \(t = t_k\). By (4), we have

Combining (7)–(10) with the Schur complement (Lemma 3) yields

then, \(V(t_k)-V(t_k^-)\le 0\), that is,

This and (I) imply that the equilibrium point of system (2) is robustly, globally, asymptotically stable in the mean square. The proof is complete.
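The Schur complement step used in the proof can be checked numerically. The sketch below assumes the standard form of Lemma 3, namely \(\Sigma _1+\Sigma _3^T\Sigma _2^{-1}\Sigma _3<0\) if and only if the block matrix with rows \([\Sigma _1\ \ \Sigma _3^T]\) and \([\Sigma _3\ \ -\Sigma _2]\) is negative definite. The matrices are hypothetical test data, not the LMIs of Theorem 1.

```python
import numpy as np

def is_negative_definite(M, tol=1e-10):
    """Check M < 0 through the largest eigenvalue of its symmetric part."""
    return bool(np.linalg.eigvalsh((M + M.T) / 2.0)[-1] < -tol)

def schur_forms(S1, S2, S3):
    """Return (S1 + S3^T S2^{-1} S3, [[S1, S3^T], [S3, -S2]])."""
    condensed = S1 + S3.T @ np.linalg.solve(S2, S3)
    block = np.block([[S1, S3.T], [S3, -S2]])
    return condensed, block

# Hypothetical data with S1 symmetric and S2 symmetric positive definite.
S1 = np.array([[-2.0, 0.3], [0.3, -1.5]])
S2 = np.array([[1.0, 0.2], [0.2, 2.0]])
S3 = np.array([[0.5, 0.1], [0.0, 0.4]])

condensed, block = schur_forms(S1, S2, S3)
print(is_negative_definite(condensed), is_negative_definite(block))
```

The block form is the one that is linear in the unknown matrices, which is why the stability conditions are stated as LMIs rather than in the condensed form.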

We now design a state feedback memory control law of the form

to stabilize system (2), where \(K_c,K_{c1}\in R^{m_1\times n},\ \widetilde{K}_c,\widetilde{K}_{c1}\in R^{m_2\times m}\), \(K_d\in R^{n\times n},\ \widetilde{K}_d\in R^{m\times m}\) are constant gains to be designed.

Substituting (13) into (2) and applying Theorem 1, it is easy to obtain the next theorem.

Theorem 2

Consider system (2). Under assumptions (H1)–(H3), if there exist scalars \(\epsilon _i>0(i=1,2,3,4),\rho _X> 0 (X=Q_2,P_2,X_1,X_2,Z_1,Z_2)\) and matrices \(N_i(i=1,2,3),W_i(i=1,2,3),Y_c,Y_{c1},Y_d,\widetilde{Y}_c,\widetilde{Y}_{c1},\widetilde{Y}_d,X_1>0,X_2>0,Z_1>0,Z_2>0,P>0,Q>0,P_1>0,Q_1>0,P_2>0,Q_2>0\) such that (4), (9), (10) and

hold, then, for any bounded time delays \(\tau (t),\sigma (t)\), the control law (13) with \(K_c=Y_{c}P^{-1},\widetilde{K}_c=\widetilde{Y}_{c}Q^{-1},K_{c1}=Y_{c1}P^{-1}, \widetilde{K}_{c1}=\widetilde{Y}_{c1}Q^{-1},K_d=Y_{d}P^{-1} \) and \(\widetilde{K}_d=\widetilde{Y}_{d}Q^{-1}\) robustly stabilizes system (1) or (2) in the mean square for any impulsive time sequence \(\{t_k\}\), where

Remark 3

Some stability analyses of higher-order BAM neural networks with delays are presented in [12, 27, 28, 37]. However, simple and efficient conditions for the design and robustness analysis of such controllers were missing. The present paper fills this gap by introducing simple LMIs for robust stability analysis of BAM systems with multiple delays and by showing that these LMIs are always feasible for small enough delays. Moreover, all of the criteria in this paper are easy to verify.
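Once an LMI solver has returned the matrices in Theorem 2, the gains follow from formulas such as \(K_c=Y_cP^{-1}\). A minimal sketch of this recovery step is given below. The values of `P` and `Y_c` are hypothetical stand-ins for a solver output, and the helper name `recover_gain` is introduced here only for illustration.

```python
import numpy as np

def recover_gain(Y, P):
    """Return K = Y P^{-1} by solving K P = Y instead of forming the inverse."""
    # K P = Y  is equivalent to  P^T K^T = Y^T.
    return np.linalg.solve(P.T, Y.T).T

# Hypothetical solver output for n = 2 states and m_1 = 1 control input.
P = np.array([[2.0, 0.5], [0.5, 1.0]])   # P > 0
Y_c = np.array([[-1.0, 0.4]])            # Y_c in R^{m_1 x n}

K_c = recover_gain(Y_c, P)
print("K_c =", K_c)
```

The same helper applies to \(K_{c1}\) and \(K_d\) with P, and to \(\widetilde{K}_c, \widetilde{K}_{c1}, \widetilde{K}_d\) with Q in place of P.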

Remark 4

In this paper, the analysis relies on Lyapunov–Krasovskii functionals, linear matrix inequalities and differential inequalities. In [31, 33], the authors mainly used the differential inequality with delay and impulse, coincidence degree theory as well as a priori estimates and Lyapunov functionals, respectively. Moreover, the methods derived in this paper can be used to analyze, design and control some other high-order artificial neural networks.

Remark 5

In this paper, the essential difficulties are how to choose suitable Lyapunov–Krasovskii functionals and how to design a state feedback memory control law. The first arises because of the time-varying delay functions \(\tau (t), \sigma (t)\); the second arises because delay feedback impulsive control is a new topic in impulsive stabilization. They were resolved by adding the four terms

to the Lyapunov–Krasovskii functionals and by designing a linear delay feedback impulsive control law (13) (since f and g are globally Lipschitz continuous), respectively.

Remark 6

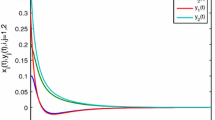

When the system runs with impulses, our results show that impulses can contribute to the stability of delay differential systems even when these systems are unstable without impulses, as shown in Figs. 2 and 3.
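The stabilizing role of impulses can be illustrated on a deliberately simple toy problem. The sketch below is not system (2): it is a hypothetical scalar equation \(x'=ax\) without delay, with jumps \(x(t_k)=c\,x(t_k^-)\) at \(t_k=kT\), chosen because its solution is known in closed form. The state after k impulses is \((c\,e^{aT})^k x_0\), so it decays exactly when \(|c|e^{aT}<1\).

```python
import math

def impulsive_scalar_states(a, c, period, x0, n_impulses):
    """States just after each impulse for x' = a*x with x(t_k) = c*x(t_k^-), t_k = k*period."""
    states = [x0]
    for _ in range(n_impulses):
        flowed = states[-1] * math.exp(a * period)  # exact flow between impulses
        states.append(c * flowed)                   # impulsive reset
    return states

# Unstable continuous dynamics (a > 0), with and without contracting impulses.
with_impulses = impulsive_scalar_states(a=0.5, c=0.5, period=1.0, x0=1.0, n_impulses=20)
without_impulses = impulsive_scalar_states(a=0.5, c=1.0, period=1.0, x0=1.0, n_impulses=20)

print("with impulses   :", with_impulses[-1])
print("without impulses:", without_impulses[-1])
```

With these toy values \(c\,e^{aT}\approx 0.82<1\), so the impulsive trajectory decays although the continuous dynamics alone grow without bound.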

4 Numerical Examples

Example 1

Consider system (2) with:

Then,

By solving LMIs (3)–(9) for \(\epsilon _i>0(i=1,2,3,4),\rho _X> 0 (X=Q_2,P_2,X_1,X_2,Z_1,Z_2)\) and matrices \(X_1>0,X_2>0,Z_1>0,Z_2>0,P>0,Q>0,P_1>0,Q_1>0,P_2>0,Q_2>0\), we obtain

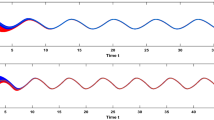

which implies, by Theorem 1, that the above delayed stochastic high-order neural network is robustly, globally, asymptotically stable in the mean square with the impulsive control inputs \(J(\cdot )=0,\ \widetilde{J}(\cdot )=0.\) The time trajectories of Example 1 with initial conditions

and \( \ f_1(x)=\tanh (0.9x),\ f_2(x)=\tanh (0.78x),\ f_3(x)=\tanh (0.96x);\ g_1(x)=\tanh (0.83x),\ g_2(x)=\tanh (0.88x),\ g_3(x)=\tanh (0.93x)\) are shown in Fig. 1.
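The activation functions of Example 1 can be checked against assumptions (H1)–(H3) numerically. The sketch below assumes one admissible choice of constants: the diagonal matrices \(K=\mathrm{diag}(0.9, 0.78, 0.96)\) and \(U=\mathrm{diag}(0.83, 0.88, 0.93)\) built from the slopes, and the bounds \(O_j=\widetilde{O}_i=1\). These constants are an assumption made for illustration; the values actually used by the authors are not shown here.

```python
import math

F_SLOPES = (0.9, 0.78, 0.96)   # f_j(x) = tanh(k_j * x), from Example 1
G_SLOPES = (0.83, 0.88, 0.93)  # g_i(x) = tanh(u_i * x), from Example 1

def max_difference_quotient(slope, grid):
    """Largest |tanh(slope*a) - tanh(slope*b)| / |a - b| over distinct grid points."""
    return max(
        abs(math.tanh(slope * a) - math.tanh(slope * b)) / abs(a - b)
        for a in grid
        for b in grid
        if a != b
    )

grid = [i / 10.0 for i in range(-50, 51)]
quotients = {k: max_difference_quotient(k, grid) for k in F_SLOPES + G_SLOPES}

for slope, q in quotients.items():
    print(f"slope {slope}: largest sampled quotient {q:.4f}")
```

Each sampled quotient stays below the corresponding slope, consistent with the mean value theorem, since the derivative of \(\tanh (kx)\) is \(k\,\mathrm{sech}^2(kx)\le k\).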

Fig. 1 State of the system in Example 1

Example 2

Consider impulsive neural networks (2) with:

Then,

By solving LMIs (3)–(9) for \(\epsilon _i>0(i=1,2,3,4),\rho _X> 0 (X=Q_2,P_2,X_1,X_2,Z_1,Z_2)\) and matrices \(X_1>0,X_2>0,Z_1>0,Z_2>0,P>0,Q>0,P_1>0,Q_1>0,P_2>0,Q_2>0\), we obtain

which implies, by Theorem 2, that the above neural network is robustly, globally, asymptotically stable in the mean square by designing a state feedback memory control law and an impulsive feedback control law of the form

The time trajectories of Example 2 with initial conditions

and \( \ f_i(x)=\tanh (x) (i=1,2,3);\ g_j(x)=\tanh (x) (j=1,2,3)\) are shown in Figs. 2 [without impulsive controller (18)] and 3 [with impulsive controller (18)].

Fig. 2 State of the system in Example 2 without impulsive control

5 Conclusion and Future Works

In this article, we have investigated a class of high-order bidirectional associative memory neural networks with time-varying delays and impulses, established new criteria for global asymptotic stability in the mean square and designed a state feedback memory controller to robustly stabilize the network. Owing to the development of fractional-order calculus and its applications [23, 24], some results on fractional-order neural networks have been obtained [8, 14]. To the best of our knowledge, high-order fractional-order BAM neural networks have not been investigated so far. The corresponding results will appear in the near future.

References

A. Arbi, A. Alsaedi, J. Cao, Delta-differentiable weighted pseudo-almost automorphicity on time-space scales for a novel class of high-order competitive neural networks with WPAA coefficients and mixed delays. Neural Process. Lett. (2017). https://doi.org/10.1007/s11063-017-9645-z

A. Arbi, C. Aouiti, F. Chèrif, A. Touati, A.M. Alimi, Stability analysis of delayed Hopfield neural networks with impulses via inequality techniques. Neurocomputing 158, 281–294 (2015)

A. Arbi, C. Aouiti, F. Chèrif, A. Touati, A.M. Alimi, Stability analysis for delayed high-order type of Hopfield neural networks with impulses. Neurocomputing 165, 312–329 (2015)

A. Arbi, J. Cao, Pseudo-almost periodic solution on time-space scales for a novel class of competitive neutral-type neural networks with mixed time-varying delays and leakage delays. Neural Process. Lett. 46, 719–745 (2017)

A. Arbi, F. Chèrif, C. Aouiti, A. Touati, Dynamics of new class of Hopfield neural networks with time-varying and distributed delays. Acta Math. Sci. 36(3), 891–912 (2016)

S. Arik, Global robust stability of delayed neural networks. IEEE Trans. Circuits Syst. I 50, 156–160 (2003)

D.D. Bainov, P.S. Simeonov, Systems with Impulse Effect: Stability, Theory and Applications (Ellis Horwood, Chichester, 1989)

A. Boroomand, M. Menhaj, Fractional-order Hopfield neural networks. Lect. Notes Comput. Sci. 5506, 883–890 (2009)

S. Boyd, L. El Ghaoui, E. Feron, V. Balakrishnan, Linear Matrix Inequalities in System and Control Theory (SIAM, Philadelphia, 1994)

J. Cao, M. Dong, Exponential stability of delayed bidirectional associative memory networks. Appl. Math. Comput. 135, 105–112 (2003)

J. Cao, Q. Jiang, An analysis of periodic solutions of bi-directional associative memory networks with time-varying delays. Phys. Lett. A 330, 203–213 (2004)

J. Cao, J. Liang, J. Lam, Exponential stability of high-order bidirectional associative memory neural networks with time delays. Physica D 199, 425–436 (2004)

J. Cao, L. Wang, Exponential stability and periodic oscillatory solution in BAM networks with delays. IEEE Trans. Neural Netw. 13, 457–463 (2002)

V. Çelik, Y. Demir, Chaotic fractional order delayed cellular neural network, in New Trends in Nanotechnology and Fractional Calculus Applications, ed. by D. Baleanu, Z. Guvenc, J. Machado (Springer, Dordrecht, 2010), pp. 313–320

A. Chen, L. Huang, J. Cao, Existence and stability of almost periodic solution for BAM neural networks with delays. Appl. Math. Comput. 137, 177–193 (2003)

B. Crespi, Storage capacity of non-monotonic neurons. Neural Netw. 12, 1377–1389 (1999)

Y. Guo, Mean square global asymptotic stability of stochastic recurrent neural networks with distributed delays. Appl. Math. Comput. 215, 791–795 (2009)

Y. Guo, Mean square exponential stability of stochastic delay cellular neural networks. Electron. J. Qual. Theory Differ. Equ. 34, 1–10 (2013)

Y. Guo, Global asymptotic stability analysis for integro-differential systems modeling neural networks with delays. Z. Angew. Math. Phys. 61, 971–978 (2010)

Y. Guo, Global stability analysis for a class of Cohen–Grossberg neural network models. Bull. Korean Math. Soc. 49(6), 1193–1198 (2012)

Y. Guo, Exponential stability analysis of traveling waves solutions for nonlinear delayed cellular neural networks. Dyn. Syst. 32(4), 490–503 (2017)

Y. Guo, Globally robust stability analysis for stochastic Cohen–Grossberg neural networks with impulse and time-varying delays. Ukr. Math. J. 69(8), 1049–1060 (2017)

Y. Guo, Solvability for a nonlinear fractional differential equation. Bull. Aust. Math. Soc. 80, 125–138 (2009)

Y. Guo, Solvability of boundary value problems for a nonlinear fractional differential equations. Ukr. Math. J. 62(9), 1211–1219 (2010)

Y. Guo, S.-T. Liu, Global exponential stability analysis for a class of neural networks with time delays. Int. J. Robust Nonlinear Control 22, 1484–1494 (2012)

Y. Guo, M. Xue, Periodic solutions and exponential stability of a class of neural networks with time-varying delays. Discrete Dyn. Nat. Soc. 2009(415786), 1–14 (2009)

D.W.C. Ho, J. Liang, J. Lam, Global exponential stability of impulsive high-order BAM neural networks with time-varying delays. Neural Netw. 19, 1581–1590 (2006)

H.-F. Huo, W.-T. Li, S. Tang, Dynamics of high-order BAM neural networks with and without impulses. Appl. Math. Comput. 215, 2120–2133 (2009)

B. Kosko, Neural Networks and Fuzzy Systems: A Dynamical Systems Approach to Machine Intelligence (Prentice-Hall, Englewood Cliffs, 1992), pp. 38–108

B. Kosko, Adaptive bi-directional associative memories. Appl. Opt. 26(23), 4947–4960 (1987)

L. Liu, Q. Zhu, Almost sure exponential stability of numerical solutions to stochastic delay Hopfield neural networks. Appl. Math. Comput. 266, 698–712 (2015)

M. Morita, Associative memory with nonmonotone dynamics. Neural Netw. 6, 115–126 (1993)

H. Qiao, J. Peng, Z.B. Xu, Nonlinear measures: a new approach to exponential stability analysis for Hopfield-type neural networks. IEEE Trans. Neural Netw. 12(2), 360–370 (2001)

S. Senan, S. Arik, New results for global robust stability of bidirectional associative memory neural networks with multiple time delays. Chaos Solitons Fractals 41(4), 2106–2114 (2009)

S. Senan, S. Arik, D. Liu, New robust stability results for bidirectional associative memory neural networks with multiple time delays. Appl. Math. Comput. 218(23), 11472–11482 (2012)

B. Shen, Z.D. Wang, H. Qiao, Event-triggered state estimation for discrete-time multidelayed neural networks with stochastic parameters and incomplete measurements. IEEE Trans. Neural Netw. Learn. Syst. 28(5), 1152–1163 (2017)

P.K. Simpson, Higher-ordered and intraconnected bidirectional associative memories. IEEE Trans. Syst. Man Cybern. 20, 637–653 (1990)

Y. Wang, L. Xie, C.E. de Souza, Robust control of a class of uncertain nonlinear systems. Syst. Control Lett. 19, 139–149 (1992)

Y. Zhang, Y.R. Yang, Global stability analysis of bi-directional associative memory neural networks with time delay. Int. J. Circuit Theory Appl. 29, 185–196 (2001)

H.Y. Zhao, Global stability of bi-directional associative memory neural networks with distributed delays. Phys. Lett. A 297, 182–190 (2002)

Q. Zhu, J. Cao, Stability analysis of Markovian jump stochastic BAM neural networks with impulse control and mixed time delays. IEEE Trans. Neural Netw. Learn. Syst. 23(3), 467–479 (2012)

Q. Zhu, J. Cao, Exponential input-to-state stability of stochastic Cohen–Grossberg neural networks with mixed delays. Neurocomputing 131, 157–163 (2014)

Q. Zhu, J. Cao, Exponential stability of stochastic neural networks with both Markovian jump parameters and mixed time delays. IEEE Trans. Syst. Man Cybern. Part B (Cybern.) 41(2), 341–353 (2011)

Q. Zhu, J. Cao, T. Hayat, F. Alsaadi, Robust stability of Markovian jump stochastic neural networks with time delays in the leakage terms. Neural Process. Lett. 41(1), 1–27 (2015)

Q. Zhu, J. Cao, R. Rakkiyappan, Exponential input-to-state stability of stochastic Cohen–Grossberg neural networks with mixed delays. Nonlinear Dyn. 79(2), 1085–1098 (2015)

Q. Zhu, C. Huang, X. Yang, Exponential stability for stochastic jumping BAM neural networks with time-varying and distributed delays. Nonlinear Anal. Hybrid Syst. 5, 52–77 (2011)

Acknowledgements

The authors would like to thank the Associate Editor and the anonymous Reviewers for their constructive comments and suggestions to improve the quality of the paper. This work was supported financially by the Natural Science Foundation of Shandong Province under Grant No. ZR2017MA045; the Open Research Project of the State Key Laboratory of Industrial Control Technology, Zhejiang University, China, under Grant No. ICT170289.


Cite this article

Guo, Y., Xin, L. Asymptotic and Robust Mean Square Stability Analysis of Impulsive High-Order BAM Neural Networks with Time-Varying Delays. Circuits Syst Signal Process 37, 2805–2823 (2018). https://doi.org/10.1007/s00034-017-0706-3


DOI: https://doi.org/10.1007/s00034-017-0706-3

Keywords

- Impulsive bidirectional neural networks

- Lyapunov functionals

- Linear matrix inequality

- Globally robust stability