Abstract

The fuzzy C-means (FCM) clustering method has been widely used in image segmentation, which plays an important role in a variety of image processing and computer vision applications. However, the performance of FCM relies heavily on the initial cluster centers, which are difficult to determine. To solve this problem, this paper proposes a new hybrid method for image segmentation, which first randomly generates a population of initial clustering solutions and then uses an evolutionary algorithm to search for better clustering solutions; at each iteration, the FCM method is applied to each initial clustering solution to produce its segmentation result. Among a set of popular evolutionary algorithms, we find that the biogeography-based optimization (BBO) metaheuristic exhibits good performance on the considered problem. Besides the basic BBO, we also propose a set of improved BBO versions combined with FCM for image segmentation. Computational experiments on a set of test images show that the proposed method has a significant advantage over the basic FCM algorithm and over hybrid algorithms that combine FCM with other evolutionary algorithms such as artificial bee colony and particle swarm optimization.

1 Introduction

Image segmentation is a process that partitions a raw image into a set of non-overlapping regions, each of which has different characteristics, so that objects of interest can be extracted. Traditional image segmentation techniques include edge detection, region extraction, and histogram (threshold) methods (Han and Wang 2002; Lin and Tian 2002; Lin et al. 2005; Sharon et al. 2000). In recent years, image segmentation methods combined with newer computational models, such as wavelet analysis, clustering analysis, artificial neural networks, and evolutionary algorithms, have attracted much attention (Bhandarkar and Zhang 1999; Choi and Baraniuk 2001; Coleman and Andrews 1979; Kuntimad and Ranganath 1999).

Essentially, image segmentation classifies and regroups image pixels according to their characteristics, so it is not surprising that clustering methods have been widely used for it. The K-means algorithm is one of the most classical clustering algorithms; its basic idea is to divide the data points into k clusters and then repeatedly reassign points among the clusters to improve them. This method is fast and easy to implement, but it has difficulty finding appropriate clusters when the shape of the data distribution is complex.

The fuzzy C-means (FCM) clustering algorithm (Bezdek 1981) is based on the K-means algorithm, and its process can be divided into two stages: (1) dividing the given set of n sample points into c initial clusters and calculating the membership degree of each point with respect to each cluster; (2) repeatedly updating the cluster centers to improve intra-class compactness and inter-class difference until all the cluster centers converge. FCM has been widely used because it brings the fuzzy-set concept of membership degree to image segmentation, which avoids the setting of thresholds and intervention in the clustering (Liu et al. 2016). However, FCM has some shortcomings: the number of clusters and their initial centers can be very hard to determine, and the iterative convergence process can be very time-consuming and prone to falling into local optima (Gong et al. 2013; Zhu 2009). Therefore, some researchers have recently combined FCM with evolutionary algorithms, which handle multiple clustering solutions simultaneously, to improve clustering speed and accuracy (Hruschka et al. 2004; Li 2013).

This paper proposes a new hybrid method for image segmentation that combines FCM with an evolutionary algorithm named biogeography-based optimization (BBO; Simon 2008). The key idea is to regard an initial matrix of cluster centers as a solution and to use BBO to evolve a population of such solutions, where each solution is evaluated by running FCM from it to obtain the clustering result. In our hybrid method, we use not only the basic BBO but also a set of its improved versions in combination with FCM. Computational experiments show that the hybrid evolutionary method has a significant advantage over the basic FCM method (e.g., the minimum-distance separation for partition of our method is over 2000 times that of FCM on the test image), and that BBO outperforms a number of other popular evolutionary algorithms in our hybrid framework (in particular, the cohesion within clusters of BBO–FCM is much higher than that of the other algorithms).

The rest of this paper is organized as follows: Sect. 2 reviews related work, Sect. 3 introduces the basic FCM, Sect. 4 describes the hybrid BBO–FCM for image segmentation, Sect. 5 presents the computational experiments, and Sect. 6 concludes.

2 Related work

In general, conventional segmentation methods only perform well on small-size problem instances, but their performance deteriorates rapidly as the instance size increases. Therefore, a number of studies have been devoted to evolutionary optimization algorithms or their hybridization with conventional methods for complex segmentation problems. In this section, we review related work on metaheuristics for image segmentation and related problems.

Ant colony optimization (ACO; Dorigo et al. 1996) is a metaheuristic inspired by the behavior of ants for computationally difficult optimization problems. Wang et al. (2005) proposed an approach that uses the active contour model to convert the image segmentation problem into a path searching problem and then uses ACO to search for the best path in a constrained region. Simulation results show that this method performs better than the genetic algorithm (GA). Melkemi et al. (2006) proposed a distributed image segmentation algorithm structured as a multiagent system composed of a coordinator agent and a set of segmentation agents. For an initial image, each segmentation agent employs the iterated conditional method to obtain a sub-optimal segmented image, and the coordinator agent uses the crossover and mutation operators of GA to diversify the initial image along with the extremal local search process. Experiments show that this competitive and cooperative process leads to a good segmentation.

Artificial bee colony (ABC; Karaboga 2005) is another popular metaheuristic, which mimics a colony of bees cooperating to find good solutions to optimization problems. Hancer et al. (2012) proposed an ABC-based algorithm to find a mapping of images to clusters in order to support high-level description of image content for image understanding. Ozturk et al. (2014) proposed an ABC-based color quantization method for reducing the number of colors in a digital image without causing significant information loss, and their results show that the ABC algorithm is better than other quantization methods including K-means and FCM. Xue et al. (2017) equipped ABC with a self-adaptive mechanism based on the global best candidate, and their results showed that the improved algorithm can better solve complex optimization problems, including a real K-means clustering problem.

Particle swarm optimization (PSO; Eberhart and Kennedy 1995) is a stochastic algorithm inspired by the behavior of bird flocks searching for food, and it has also proved effective and efficient in solving a wide range of optimization problems in image applications. Omran et al. (2006) proposed a dynamic clustering approach based on PSO (DCPSO), which can automatically determine the most appropriate number of clusters for segmenting an image. The algorithm consists of two main steps. In the first step, it partitions the image data into a relatively large number of clusters, selects the best number of clusters using binary PSO, and then refines the centers of the chosen clusters with the K-means algorithm. In the second step, it segments the image according to the cluster centers. The approach is suitable for both synthetic and natural images, and its advantage is that users do not need to specify the number of clusters in advance. Li and Li (2008) proposed another image segmentation approach based on fuzzy entropy and PSO, where PSO is used to select the optimal fuzzy parameter combination and the fuzzy threshold. Compared with exhaustive search, the algorithm takes less search time to reach the same optimal solution and shows better robustness. Lee et al. (2012) proposed a modified PSO for determining thresholds in color image segmentation, where each particle adjusts its flying speed based on its own flying experience and its neighbors' experience. In order to reduce the computational time of image segmentation, Gao et al. (2013) presented a PSO algorithm with an intermediate disturbance searching strategy (IDPSO). Unlike the basic PSO, IDPSO inserts a disturbance factor that depends on the current local optimum into the displacement updating process, and thus not only enhances the global search ability but also increases the convergence speed. The approach can also be applied to medical image segmentation. Li et al. (2015) proposed a dynamic-context cooperative quantum-behaved PSO algorithm, the key point of which is to incorporate a dynamic mechanism for updating each particle while it cooperates with other particles. Recently, there have also been studies on multiobjective PSO algorithms for clustering and classification problems using multiple criteria (Armano and Farmani 2016; Zheng et al. 2014c).

BBO has also been used for some difficult optimization problems in image processing. Wang and Wu (2016) adopted BBO to combine features in salient object detection to facilitate the delineation of pathological structures and other regions for further image segmentation. The method first extracts and normalizes the feature maps of the target image and then uses BBO to determine an optimal weight vector that combines the feature maps into one saliency map. Although BBO may take considerable computational time, the proposed algorithm distinguishes the foreground objects from the background more clearly, and its segmentation effect is much better than that of other conventional segmentation methods. For detecting tumors from normal brains in MRI scanning, Zhang et al. (2015) proposed a hybrid method that uses BBO and PSO to optimize a feed-forward neural network for detection. Yang et al. (2016) proposed a similar hybrid wavelet-energy and BBO method for automated classification of brain images, where BBO is used to optimize the weights of the SVM classifier. BBO-based algorithms have also been used in medical applications such as CT scan image segmentation (Chatterjee et al. 2012) and atrial fibrillation diagnosis (Smiley and Simon 2016).

3 Image segmentation and fuzzy C-means clustering algorithm

3.1 Image segmentation

Image segmentation partitions an image into a set of non-overlapping regions, each of which has different characteristics, so that objects of interest can be extracted. In this paper, we focus on pixel-based image segmentation, which is done based on the features of the pixels of the image and the distances between them. Color images are first converted into grayscale images and then segmented.

Formally, given a homogeneity predicate H, a segmentation of an image defined by a set R of pixels is to divide R into N connected, non-empty subsets \(R_{1}, R_{2},\ldots , R_{N}\) that satisfy the following conditions (Pal and Pal 1993):

- \(\bigcup _{1\le i\le N}R_{i}=R\);
- \(R_{i}\bigcap R_{j}=\emptyset \), \(\forall i\ne j\);
- \(H(R_{i})=1\), \(i=1,2,\dots ,N\);
- \(H(R_{i}\bigcup R_{j})=0\), \(\forall \) adjacent i and j.

3.2 Traditional K-means clustering

Given a set of n samples \(X=\{x_{1},x_{2},\ldots ,x_{n}\}\) in a D-dimensional Euclidean space, K-means clustering divides the set into c subsets so as to minimize the following objective function:

$$\begin{aligned} J=\sum _{i=1}^{c}\sum _{k=1}^{n}u_{ik}\left\| x_{k}-v_{i} \right\| ^{2} \end{aligned}$$(1)

where \(v_{i}\) is the center of the ith class, \(u_{ik}=1\) denotes that the kth sample point belongs to the ith class and \(u_{ik}=0\) otherwise, and \(\Vert x_{k}-v_{i}\Vert \) denotes the Euclidean distance between the two points \(x_{k}\) and \(v_{i}\) (denoted as \(d_{ik}\) for short).

The K-means algorithm first randomly selects c clustering centers and then continuously updates the centers as follows:
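As an illustrative sketch of this update (not the implementation used in the paper), the following code assumes the standard K-means rule: each sample is assigned to its nearest center, and each center is then moved to the mean of its assigned samples. Scalar gray levels stand in for the samples, and the function names `kmeans_step` and `kmeans_objective` are hypothetical.

```python
def kmeans_step(samples, centers):
    """One K-means iteration on scalar samples: assign, then recompute centers."""
    # Hard assignment: u_ik = 1 for the nearest center i, 0 otherwise.
    labels = [min(range(len(centers)), key=lambda i: abs(x - centers[i]))
              for x in samples]
    new_centers = []
    for i, old in enumerate(centers):
        members = [x for x, lab in zip(samples, labels) if lab == i]
        # Assumption: keep the old center if a cluster received no samples.
        new_centers.append(sum(members) / len(members) if members else old)
    return labels, new_centers


def kmeans_objective(samples, centers, labels):
    """Sum of squared distances between each sample and its assigned center."""
    return sum((x - centers[lab]) ** 2 for x, lab in zip(samples, labels))


gray = [10.0, 12.0, 11.0, 200.0, 205.0, 198.0]
labels, centers = kmeans_step(gray, [0.0, 255.0])
print(labels, centers, kmeans_objective(gray, centers, labels))
# prints: [0, 0, 0, 1, 1, 1] [11.0, 201.0] 28.0
```

Repeating `kmeans_step` until the centers stop moving gives the usual K-means loop.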

3.3 Fuzzy C-means algorithm

The FCM algorithm improves on the traditional K-means in that the value \(u_{ik}\), i.e., the membership of point \(x_{k}\) in the cluster centered at \(v_i\), is a real value in the range [0,1] instead of a binary 0 or 1. The objective function is modified by using a fuzzy exponent m (larger than 1 and typically set to 2) as follows:

$$\begin{aligned} J_{m}=\sum _{i=1}^{c}\sum _{k=1}^{n}(u_{ik})^{m}\left\| x_{k}-v_{i} \right\| ^{2} \end{aligned}$$(4)

In FCM, the cluster centers are iteratively updated as follows:
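A minimal sketch of one way to carry out this iteration is given below. It assumes the standard FCM updates, i.e., memberships \(u_{ik}=1/\sum _{j}(d_{ik}/d_{jk})^{2/(m-1)}\) and centers equal to the \((u_{ik})^{m}\)-weighted means of the samples. Scalar gray levels are used as samples, and the names `fcm_memberships`, `fcm_centers`, and `fcm_objective` as well as the small constant `eps` are assumptions made for illustration.

```python
def fcm_memberships(samples, centers, m=2.0, eps=1e-12):
    """Membership u[i][k] of sample k in cluster i (each column sums to 1)."""
    power = 2.0 / (m - 1.0)
    u = [[0.0] * len(samples) for _ in centers]
    for k, x in enumerate(samples):
        # eps guards against division by zero when a sample sits on a center.
        dists = [abs(x - v) + eps for v in centers]
        for i, d_ik in enumerate(dists):
            u[i][k] = 1.0 / sum((d_ik / d_jk) ** power for d_jk in dists)
    return u


def fcm_centers(samples, u, m=2.0):
    """Centers as membership-weighted means of the samples."""
    centers = []
    for row in u:
        weights = [u_ik ** m for u_ik in row]
        centers.append(sum(w * x for w, x in zip(weights, samples)) / sum(weights))
    return centers


def fcm_objective(samples, centers, u, m=2.0):
    """Fuzzy within-cluster sum of squared distances."""
    return sum((u[i][k] ** m) * (x - v) ** 2
               for i, v in enumerate(centers)
               for k, x in enumerate(samples))


gray = [10.0, 12.0, 11.0, 200.0, 205.0, 198.0]
centers = [0.0, 255.0]
for _ in range(50):  # fixed iteration budget; a tolerance test could stop earlier
    u = fcm_memberships(gray, centers)
    centers = fcm_centers(gray, u)
print([round(v, 1) for v in centers])
```

On this toy input the two centers settle near the dark and the bright group of gray levels, which is the behavior the update rule is meant to produce.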

Interested readers can refer to Nayak (2015) for a comprehensive review of FCM clustering and its improved variants.

4 The proposed hybrid BBO–FCM algorithm

It is known that the performance of FCM relies heavily on the initial cluster centers, which are difficult to determine. To tackle this difficulty, we propose a new hybrid method for image segmentation, which first randomly generates a population of initial clustering solutions and then uses the BBO metaheuristic to search for better clustering solutions, where each solution is evaluated by running FCM from it to obtain the clustering result, i.e., the value of the objective function (4).

4.1 The basic BBO–FCM algorithm

BBO (Simon 2008, 2013) is a metaheuristic optimization algorithm inspired by the migration mechanism of species. In biogeography, a habitat that is very suitable for living has a high habitat suitability index (HSI); on the contrary, habitats supporting fewer species have a low HSI. In BBO, each solution to the optimization problem is analogous to a habitat, the fitness of the solution is analogous to its HSI, and the solution components are analogous to a set of suitability index variables (SIVs). The core of BBO is the equilibrium theory of biogeography, which indicates that high HSI solutions have high emigration rates and low HSI solutions have high immigration rates. For example, Fig. 1 shows a simple linear migration model. When the species number of a habitat is 0, its emigration rate \(\mu \) is 0 and its immigration rate \(\lambda \) is the maximum; when the species number of a habitat is \(S_{\max }\), its immigration rate \(\lambda \) is 0 and its emigration rate \(\mu \) is the maximum; when the species number of a habitat is \(S_{0}\), its immigration rate \(\lambda \) and emigration rate \(\mu \) are equal, which indicates that the habitat has reached a dynamic balance.

Supposing that the solutions are sorted in ascending order of their fitness values, the immigration rate \(\lambda _{i}\) and emigration rate \(\mu _{i}\) of the ith habitat can be calculated as:

where I and E are, respectively, the maximum possible immigration rate and emigration rate, which are typically both set to 1.
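As a hedged illustration of the linear model, the sketch below assumes the common rank-based form \(\lambda _{i}=I(1-i/n)\) and \(\mu _{i}=E\cdot i/n\) for habitats ranked \(i=1,\ldots ,n\) in ascending order of fitness. The function name `migration_rates` is hypothetical.

```python
def migration_rates(n, I=1.0, E=1.0):
    """Linear rates for ranks i = 1..n, where rank n is the fittest habitat."""
    lam = [I * (1.0 - i / n) for i in range(1, n + 1)]  # immigration rates
    mu = [E * (i / n) for i in range(1, n + 1)]         # emigration rates
    return lam, mu


lam, mu = migration_rates(4)
print(lam)  # prints: [0.75, 0.5, 0.25, 0.0]
print(mu)   # prints: [0.25, 0.5, 0.75, 1.0]
```

Under this assumption the fittest habitat never immigrates and is the most likely source of emigration, which matches the behavior described for the equilibrium model.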

When a solution achieves a dynamic balance, its habitat probability P(i) is calculated as:

where \(v_{i}\) is defined as:

Here \(i'\) is an integer greater than \(n/2\).

The habitat probability is used in the mutation operator. Habitats with very many or very few species are more easily influenced by external factors, which may easily lead to mutations. It is beneficial for habitats with low HSI to improve their HSI by mutation. The mutation rate is inversely related to the habitat probability, and the mutation rate \(\pi _i\) of the ith habitat is computed as:

where \(\pi _{\max }\) is the maximum mutation rate that is usually a constant value and \(P_{\max }\) is the maximum habitat probability of the population.
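The inverse relation can be sketched as follows, assuming the usual BBO form \(\pi _{i}=\pi _{\max }(1-P(i)/P_{\max })\). The habitat probabilities are taken as given inputs here, and the function name `mutation_rates` is hypothetical.

```python
def mutation_rates(habitat_probs, pi_max=0.005):
    """Mutation rate per habitat; the most probable habitat gets rate 0."""
    p_max = max(habitat_probs)
    return [pi_max * (1.0 - p / p_max) for p in habitat_probs]


# Illustrative probabilities only: mid-ranked habitats are the most probable.
rates = mutation_rates([0.1, 0.2, 0.4, 0.2, 0.1])
print(rates)
```

Habitats at either end of the ranking receive the largest mutation rates, which never exceed `pi_max`.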

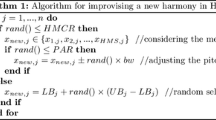

The main disadvantage of FCM is that its performance highly depends on the quality of the initial cluster centers. Here we propose a hybrid BBO–FCM algorithm to combine the BBO’s advantage with FCM for image segmentation. In our algorithm, an initial matrix of cluster centers is regarded as a solution (habitat) of BBO. The algorithm starts by initializing a population of solutions and then uses BBO to evolve the solutions (i.e., search for the optimal initial matrix), where the fitness of each solution is evaluated by employing FCM to obtain the clustering result according to the initial matrix. Algorithm 1 presents the framework of the BBO–FCM algorithm (where \(\textit{rand}()\) is the function for producing a random number uniformly distributed in [0,1]), and Fig. 2 presents the algorithm flowchart.
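The fitness evaluation at the heart of this framework can be sketched as follows. This is a simplified illustration under stated assumptions, not the paper's Algorithm 1: pixels are scalar gray levels, a habitat is a list of initial cluster centers, and fitness is the FCM objective reached from those centers (lower is better). The function name `fcm_fitness`, the toy pixel list, and the random seed are all hypothetical.

```python
import random


def fcm_fitness(initial_centers, pixels, m=2.0, max_iter=50, tol=1e-5):
    """Run FCM from `initial_centers`; return (objective, final centers)."""
    centers = list(initial_centers)
    power = 2.0 / (m - 1.0)
    for _ in range(max_iter):
        # Membership update for every (cluster, pixel) pair.
        u = []
        for v in centers:
            row = []
            for x in pixels:
                d_ik = abs(x - v) + 1e-12
                row.append(1.0 / sum((d_ik / (abs(x - w) + 1e-12)) ** power
                                     for w in centers))
            u.append(row)
        # Center update: membership-weighted mean of the pixels.
        new_centers = [
            sum((u_ik ** m) * x for u_ik, x in zip(row, pixels))
            / sum(u_ik ** m for u_ik in row)
            for row in u
        ]
        shift = max(abs(a - b) for a, b in zip(centers, new_centers))
        centers = new_centers
        if shift < tol:
            break
    # Objective from the last memberships and the final centers.
    objective = sum((u_ik ** m) * (x - v) ** 2
                    for row, v in zip(u, centers)
                    for u_ik, x in zip(row, pixels))
    return objective, centers


rng = random.Random(0)
pixels = [10.0, 12.0, 11.0, 200.0, 205.0, 198.0]
# A population of 10 habitats, each holding 2 random initial centers.
population = [[rng.uniform(0, 255) for _ in range(2)] for _ in range(10)]
best = min(population, key=lambda h: fcm_fitness(h, pixels)[0])
print(best, fcm_fitness(best, pixels))
```

In a full run, the evolutionary operators would modify the habitats between such evaluations, and the best habitat found would supply the initial centers for the final segmentation.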

This framework is easy to extend by replacing the basic BBO with its variants. Next we present some improved variants of BBO–FCM for image segmentation.

4.2 The Blended BBO–FCM algorithm

The migration operation of the basic BBO is clonal-based, i.e., it has a probability of \(\lambda _i\) of replacing a cluster center of a solution \(H_{i}\) with the corresponding cluster center of another solution \(H_{j}\) selected with the probability proportional to \(\mu _j\). In other words, the operation never generates a new position of cluster center, and thus, it may limit the diversity of the solutions.
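A small sketch of this clone-based migration is shown below. It assumes that a habitat is a list of cluster centers, that the emigrating habitat is chosen by roulette-wheel selection weighted by \(\mu _j\), and that a habitat may select itself (the text does not say whether this is excluded). The function name `clonal_migration` is hypothetical.

```python
import random


def clonal_migration(population, lam, mu, rng=random):
    """Return a migrated copy of `population` (a list of lists of centers)."""
    migrated = [list(h) for h in population]
    for i, habitat in enumerate(population):
        for d in range(len(habitat)):
            if rng.random() < lam[i]:
                # Roulette-wheel choice of the emigrating habitat, weighted by mu.
                j = rng.choices(range(len(population)), weights=mu, k=1)[0]
                migrated[i][d] = population[j][d]  # copy the center unchanged
    return migrated


pop = [[10.0, 100.0], [20.0, 150.0], [30.0, 200.0]]
out = clonal_migration(pop, lam=[1.0, 0.5, 0.0], mu=[0.0, 0.5, 1.0],
                       rng=random.Random(0))
print(out)
```

Every center in the result already existed at the same position in some habitat of the original population, which illustrates why this operator alone cannot create new center positions.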

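Blended biogeography-based optimization (B-BBO; Ma and Simon 2010) replaces the original migration operation with a blended migration, which borrows from the crossover operation in GA to combine the features of the two parent solutions. For the dth cluster center \(H_{i}(d)\) of the immigrating solution, the blended migration is defined as follows:

$$\begin{aligned} H_{i}(d)=\alpha H_{i}(d)+(1-\alpha )H_{j}(d) \end{aligned}$$(12)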

where \(\alpha \) is a constant or random number within the range of [0,1]. In this way, the immigrating solution can not only obtain the features from an emigrating solution \(H_{j}\) but also retain its own features.
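The effect of blending can be illustrated with a one-line sketch, assuming that each migrated component becomes the convex combination \(\alpha H_{i}(d)+(1-\alpha )H_{j}(d)\) of the immigrating and emigrating components. The function name `blended_component` is hypothetical.

```python
def blended_component(h_i_d, h_j_d, alpha=0.5):
    """Blend one cluster center of the immigrant with that of the emigrant."""
    return alpha * h_i_d + (1.0 - alpha) * h_j_d


# The immigrant keeps 25% of its own center and takes 75% from the emigrant.
print(blended_component(40.0, 120.0, alpha=0.25))  # prints: 100.0
```

With \(\alpha =0\) this reduces to the clone-based migration of the basic BBO, and with \(\alpha =1\) the immigrating solution is left unchanged.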

The procedure of the blended BBO–FCM (B-BBO–FCM) is similar to Algorithm 1 except that Line 14 is replaced by Eq. (12).

4.3 The DE/BBO–FCM algorithm

Differential evolution (DE; Storn and Price 1997) is a fast and robust evolutionary algorithm for global optimization. Unlike GA, DE generates a mutant solution \(V_i\) for each solution \(H_i\) in the population by adding the weighted difference between two randomly selected vectors to a third one:

where \(r_1\), \(r_2\), and \(r_3\) are random indices in \(\{1,2,\ldots ,n\}\) and F is a positive scale coefficient.

A trial vector \(U_i\) is then generated by using the crossover operator which mixes the components of the mutant vector and the original one, where each component of \(U_i\) is determined as follows:

where \(C_r\) is the crossover probability in the range (0, 1) and r(i) is a random integer within [1, n] for each i.

In the last step of each iteration, the selection operator chooses the better of \(U_i\) and \(H_i\) for the next generation:
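The three DE operators can be sketched as below. This is a minimal illustration under stated assumptions: the classic DE/rand/1 mutation with binomial crossover, the three random indices drawn distinct from each other and from i (so the population needs at least four solutions), and lower fitness taken as better because the FCM objective is minimized. The function names `de_trial` and `de_select` and the default values of `F` and `Cr` are hypothetical.

```python
import random


def de_trial(population, i, F=0.5, Cr=0.9, rng=random):
    """Build the trial vector U_i from the mutant V_i and the current H_i."""
    n, dim = len(population), len(population[i])
    r1, r2, r3 = rng.sample([k for k in range(n) if k != i], 3)
    # Mutation: add the weighted difference of two vectors to a third one.
    mutant = [population[r1][d] + F * (population[r2][d] - population[r3][d])
              for d in range(dim)]
    forced = rng.randrange(dim)  # at least one component comes from the mutant
    # Crossover: mix mutant and original components.
    return [mutant[d] if (rng.random() < Cr or d == forced) else population[i][d]
            for d in range(dim)]


def de_select(current, trial, fitness):
    """Keep the better of the two (assumption: lower fitness is better)."""
    return trial if fitness(trial) <= fitness(current) else current


pop = [[0.0, 0.0], [1.0, 2.0], [3.0, 1.0], [5.0, 5.0], [2.0, 4.0]]
sphere = lambda h: sum(x * x for x in h)  # stand-in for the FCM-based fitness
trial = de_trial(pop, 0, rng=random.Random(1))
print(trial, de_select(pop[0], trial, sphere))
```

In the hybrid with FCM, `sphere` would be replaced by a function that runs FCM from the candidate cluster centers and returns the resulting objective value.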

DE/BBO (Gong et al. 2010) is a hybrid algorithm that combines DE mutation and BBO migration, i.e., each component of a solution has a probability of \(C_r\) of being modified by DE mutation and a probability of \((1-C_r)\) of being modified by BBO migration. Algorithm 2 presents the procedure of the DE/BBO–FCM algorithm for image segmentation.

4.4 The localized BBO–FCM algorithm

The basic BBO uses a global topology that enables any two solutions in the population to communicate with each other: if a solution is selected for immigration, all the remaining solutions have a chance of being the emigrating habitat. Such a global topology often causes most solutions to be strongly attracted by the current best solution and thus easily leads to premature convergence. To address this issue, Zheng et al. (2014a) proposed localized BBO, where each solution is connected only to its neighbors, which form only a portion of the population, and migration can occur only between neighboring habitats, so as to improve the solution diversity and suppress premature convergence.

The hybrid localized BBO–FCM (denoted by L-BBO–FCM) algorithm employs a local random topology in which the probability of any two solutions being connected is \(K/(n-1)\), where K is a control parameter in the range \([2,n-1]\). Algorithm 3 presents the procedure for setting the local random topology (where \(\textit{Link}\) is the \(n\times n\) adjacency matrix), and Algorithm 4 presents the L-BBO–FCM algorithm for image segmentation. Note that in Lines 26–28 of Algorithm 4, if no new best solution is found for \(l_N\) iterations (where \(l_N\) is a control parameter), the local random topology is reset.
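One way to build such a topology is sketched below, assuming that each unordered pair of habitats is linked independently with probability \(K/(n-1)\) and that links are symmetric. This is an illustration only, not a reproduction of Algorithm 3; in particular, it does not repair habitats that end up without any neighbor. The function name `random_topology` is hypothetical.

```python
import random


def random_topology(n, K, rng=random):
    """Symmetric n x n adjacency matrix with link probability K / (n - 1)."""
    link = [[False] * n for _ in range(n)]
    p = K / (n - 1)
    for i in range(n):
        for j in range(i + 1, n):
            if rng.random() < p:
                link[i][j] = link[j][i] = True
    return link


link = random_topology(6, 2, random.Random(0))
neighbors_of_0 = [j for j in range(6) if link[0][j]]
print(neighbors_of_0)
```

Each habitat then has K neighbors on average, and setting \(K=n-1\) recovers the global topology of the basic BBO.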

We can also apply the local topology to other variants of BBO–FCM, such as localized DE/BBO–FCM (denoted by L-DE/BBO–FCM).

4.5 The EBO–FCM algorithm

Ecogeography-based optimization (EBO; Zheng et al. 2014b) is a recent improved variant of BBO, which introduces two new migration operators, called global migration and local migration, to balance the exploration and exploitation of the algorithm. It is also based on the local random topology. For each solution \(H_{i}\) to be immigrated, the global migration selects a neighbor \(H_{\textit{nb}}\) and a non-neighbor \(H_{\textit{far}}\) and then performs the migration operation as follows:

where \(\alpha \) is a random number in the range from 0 to 1.

And the local migration operates as follows:

EBO introduces a new parameter \(\eta \), named the immaturity index, for determining whether to perform global migration or local migration: Each migration operation has a probability of \(\eta \) of being a global migration and a probability of \((1-\eta )\) of being a local migration. The value of \(\eta \) is dynamically adjusted as follows:

where t is the current iteration number and \(t_{\max }\) is the maximum iteration number, and \(\eta _{\max }\) and \(\eta _{\min }\) are the upper limit and lower limit of \(\eta \), respectively.
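As a hedged illustration of the schedule, the sketch below assumes that \(\eta \) decreases linearly from \(\eta _{\max }\) at the first iteration to \(\eta _{\min }\) at the last, so that global migration dominates early and local migration dominates late. The linear form and the function name `immaturity_index` are assumptions; the default limits are the values used in the experimental setup.

```python
def immaturity_index(t, t_max, eta_max=0.7, eta_min=0.4):
    """Linearly decreasing immaturity index (assumed form)."""
    return eta_max - (t / t_max) * (eta_max - eta_min)


for t in (0, 25, 50):
    print(t, round(immaturity_index(t, 50), 3))
```

At each migration, a uniform random number below the current value of \(\eta \) would trigger a global migration, and a local migration otherwise.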

Algorithm 5 presents the procedure of the EBO–FCM algorithm for image segmentation.

5 Computational experiments

5.1 Experimental setup

We have implemented six versions of BBO–FCM, i.e., the basic BBO–FCM, B-BBO–FCM, DE/BBO–FCM, L-BBO–FCM, L-DE/BBO–FCM, and EBO–FCM, and tested their image segmentation performance in comparison with the basic FCM and three other hybrid algorithms that combine FCM with metaheuristics, namely AFSA–FCM, ABC–FCM, and PSO–FCM (Hruschka et al. 2004; Li 2013). For FCM, we set the maximum number of iterations to 50, the fuzzy exponent m to 2, and the number of cluster centers to 4 for each image. The algorithm terminates when the difference between two successive solutions is less than \(\zeta =10^{-5}\) or when the maximum number of iterations is reached. The parameters of AFSA–FCM, ABC–FCM and PSO–FCM are set as suggested in the literature (Hruschka et al. 2004; Li 2013). For BBO–FCM, we set the population size \(n=10\), the maximum mutation rate \(\pi _{\max }=0.005\), the maximum number of non-improving iterations \(l_N=6\) (for the localized versions), and \(\eta _{\max }=0.7\) and \(\eta _{\min }=0.4\) (for EBO). The algorithms are tested on a set of eight images shown in Fig. 3.

Fig. 4 Segmentation results on the Lena image (reproduced with permission from Li 2013): a Lena, b FCM, c BBO–FCM, d AFSA–FCM, e ABC–FCM, and f PSO–FCM

5.2 Evaluation criteria

We use the following five metrics from Balasko et al. (2005) and Bezdek (1981) to evaluate the performance of the algorithms:

(1) Subarea coefficient (SC), which measures the ratio of the sum of compactness and separation of the clusters:

$$\begin{aligned} \hbox {SC}=\sum \limits _{i=1}^{c}\frac{\sum _{k=1}^{n}(u_{ik})^{m}\left\| x_{k}-v_{i} \right\| ^{2}}{n_{i}\sum _{j=1}^{c}\left\| v_{j}-v_{i} \right\| ^{2}} \end{aligned}$$(19)

where \(n_{i}\) is the number of samples that belong to cluster i.

(2) Xie and Beni's index (XB), which measures the ratio of the total variation within clusters and the separation of clusters:

$$\begin{aligned} \hbox {XB}=\frac{\sum _{i=1}^{c}\sum _{k=1}^{n}(u_{ik})^{m}\left\| x_{k}-v_{i} \right\| ^{2}}{n\min \limits _{1\le i\le c,1\le k\le n}\left\| x_{k}-v_{i} \right\| ^{2}} \end{aligned}$$(20)

(3) Classification entropy (CE), which measures the fuzziness of the cluster partition:

$$\begin{aligned} \hbox {CE}=-\frac{1}{n}\sum _{i=1}^{c}\sum _{k=1}^{n}u_{ik}\log u_{ik} \end{aligned}$$(21)

(4) Separation index (S), which uses a minimum-distance separation for partition validity:

$$\begin{aligned} S=\frac{\sum _{i=1}^{c}\sum _{k=1}^{n}(u_{ik})^{2}\left\| x_{k}-v_{i} \right\| ^{2}}{n\min \limits _{1\le i\le c,1\le k\le n}\left\| x_{k}-v_{i} \right\| ^{2}} \end{aligned}$$(22)

(5) Partition coefficient (PC), which measures the amount of overlapping between clusters:

$$\begin{aligned} \hbox {PC}=\frac{1}{n}\sum _{i=1}^{c}\sum _{k=1}^{n}(u_{ik})^{2} \end{aligned}$$(23)

According to the above five metrics, the smaller the values of SC, XB and CE, or the larger the values of S and PC, the better the clustering performance is.
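To make the two membership-only metrics concrete, the following sketch computes PC and CE directly from a membership matrix `u`, where `u[i][k]` is the membership of sample k in cluster i. It assumes the natural logarithm and the convention \(0\log 0=0\); the function names are hypothetical.

```python
import math


def partition_coefficient(u):
    """PC: mean of squared memberships; 1 for a crisp partition."""
    n = len(u[0])
    return sum(u_ik ** 2 for row in u for u_ik in row) / n


def classification_entropy(u):
    """CE: mean entropy of the memberships; 0 for a crisp partition."""
    n = len(u[0])
    # Terms with u_ik = 0 are skipped, i.e., 0 * log 0 is taken as 0.
    return -sum(u_ik * math.log(u_ik) for row in u for u_ik in row if u_ik > 0) / n


crisp = [[1.0, 0.0], [0.0, 1.0]]  # two samples, each fully in one cluster
fuzzy = [[0.5, 0.5], [0.5, 0.5]]  # two samples, each split evenly
print(partition_coefficient(crisp), partition_coefficient(fuzzy))  # prints: 1.0 0.5
print(classification_entropy(fuzzy))
```

The crisp partition attains the largest possible PC and a CE of zero, whereas the evenly split partition gives PC equal to 1/c and CE equal to log c for c = 2 clusters.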

5.3 Comparative results

Previous studies (He et al. 2009; Li et al. 2007; Li 2013; Ouadfel and Meshoul 2012) have shown that BBO–FCM can achieve much better image segmentation effects than FCM, AFSA–FCM, ABC–FCM and PSO–FCM. For these five algorithms, here we simply take their results on the first image (Lena) from Li (2013), show the segmented images in Fig. 4, and present the comparative results in terms of the five metrics in Table 1 (where the bold values in each row denote the best result among the five algorithms). As we can see, BBO–FCM obtains the best XB and CE values, the third best SC and S values, and the worst PC values. In particular, the XB and CE values of BBO–FCM are close to zero, which indicates that the cohesion within clusters is very high. As the other metaheuristics use the same random approach to produce the initial clustering solutions, the results demonstrate that BBO can evolve the solutions to much more coherent cluster centers than the other metaheuristics. In general, the overall performance of BBO–FCM is the best among the five methods.

The comparative results of these five algorithms on the remaining seven images are similar and are not presented here [see Li (2013) for more details].

Next we test the performance of the six BBO versions on the test images, show the resultant segmented images in Figs. 5, 6, 7, 8, 9, 10, 11 and 12, and present the metric values in Tables 2, 3, 4, 5, 6, 7, 8 and 9.

From the results on the first image, Lena, we can see that the image obtained by EBO–FCM is better than the others because its outline is clearer, especially in the areas of the lips and eyes. Besides, according to Table 2, the CE and PC values of EBO–FCM are slightly better than those of the other BBO versions. In terms of XB, however, L-BBO–FCM achieves the best clustering effect.

For the second image, Baboon, EBO–FCM achieves the best value only for S, where it is far greater than the others, but its values for the other four metrics are close to the best values. Thus, EBO–FCM obtains a good segmentation effect. Generally speaking, the image of B-BBO–FCM may be the best one, as it shows obvious features such as the eyes and nostrils.

From the results of the image Woman, we could see the obvious edges on the image of DE/BBO–FCM, and its XB value is much smaller than other algorithms. In terms of PC, EBO–FCM gets the best result.

On the images Camera, Milktrop and Bridge, EBO–FCM performs better than the other BBO versions, and its images have clear edges and show significant characteristics. For the last two pictures, which have blurred boundaries, the segmentation effect of EBO–FCM is not obvious enough. Hence, in general, EBO–FCM shows a preferable segmentation effect on most test images, especially those containing human faces, where EBO–FCM can distinguish eyes, hair and background clearly and provide clearer edges.

Generally speaking, EBO–FCM shows the best overall performance among the six BBO versions, because it combines global migration and local migration to balance exploration and exploitation better than the other versions, and thus achieves clustering results that better balance intra-class compactness and inter-class difference (but its computational cost is also a bit higher than that of the other versions). Therefore, in most conditions we suggest using EBO–FCM, except when the real-time requirement is very strict. DE/BBO–FCM is the most efficient, because the DE operators are typically fast and the algorithm does not employ any local topology to maintain the neighborhood structure of the solutions; thus, it is more suitable for high-performance applications.

In summary, the proposed BBO–FCM and its variants effectively segment the test images and achieve clear results, which shows that combining BBO with FCM helps to improve the accuracy and precision of image segmentation. A shortcoming of BBO–FCM is that its PC values are typically small, which indicates that the overlap between the resultant clusters is large. We find that the other BBO versions cannot significantly improve the PC values, mainly because they use inherently similar migration operators. Thus, we do not recommend using BBO–FCM for images with many overlapping objects.

6 Conclusion

In order to overcome the shortcomings of FCM clustering methods, this paper proposes a hybrid BBO–FCM that integrates BBO with FCM for image segmentation, using BBO to evolve a population of solutions to initial cluster center settings and using FCM to produce effective clustering results. Based on the framework of BBO–FCM, we have implemented five algorithms by combining FCM with different variants of BBO. Experimental results on a set of test images demonstrate that BBO–FCM can effectively improve on the performance of the basic FCM algorithm and of hybrid methods combining FCM with other well-known metaheuristics and, in particular, that the cohesion within clusters of BBO–FCM is much higher than that of the other metaheuristic algorithms. Among the five BBO–FCM variants, EBO–FCM achieves the best overall performance.

The proposed BBO–FCM and its variants can also be applied to many other clustering problems, and we are now testing the algorithms on more applications including object detection, image retrieval, and speech recognition. In particular, we are interested in improving the robustness of BBO in handling problems with much noise (Ma et al. 2015), typically by hybridization with other metaheuristics (Ma et al. 2014; Zheng et al. 2014d; Zheng 2015). We also plan to incorporate artificial neural networks (including deep learning models) to extract features (Vincent 2008; Zheng et al. 2016, 2017) for enhancing image segmentation.

References

Armano G, Farmani MR (2016) Multiobjective clustering analysis using particle swarm optimization. Expert Syst Appl 55:184–193

Balasko B, Abonyi J, Feil B (2005) Fuzzy clustering and data analysis toolbox. Department of Process Engineering, University of Veszprem, Veszprem

Bezdek JC (1981) Pattern recognition with fuzzy objective function algorithms. Plenum Press, New York

Bhandarkar SM, Zhang H (1999) Image segmentation using evolutionary computation. IEEE Trans Evol Comput 3(1):1–21

Chatterjee A, Siarry P, Nakib A, Blanc R (2012) An improved biogeography based optimization approach for segmentation of human head CT-scan images employing fuzzy entropy. Eng Appl Artif Intell 25(8):1698–1709

Choi H, Baraniuk RG (2001) Multiscale image segmentation using wavelet-domain hidden Markov models. IEEE Trans Image Process 10(9):1309–1321

Coleman GB, Andrews HC (1979) Image segmentation by clustering. Proc IEEE 5(67):773–785

Dorigo M, Maniezzo V, Colorni A (1996) Ant system: optimization by a colony of cooperating agents. IEEE Trans Syst Man Cybern 26(1):29–41

Eberhart RC, Kennedy J (1995) A new optimizer using particle swarm theory. In: Proceedings of the sixth international symposium on micro machine and human science. IEEE, pp 39–43

Gao H, Kwong S, Yang J, Cao J (2013) Particle swarm optimization based on intermediate disturbance strategy algorithm and its application in multi-threshold image segmentation. Inf Sci 250:82–112

Gong WY, Cai ZH, Ling CX (2010) DE/BBO: a hybrid differential evolution with biogeography-based optimization for global numerical optimization. Soft Comput 15:645–665

Gong M, Liang Y, Shi J, Ma W, Ma J (2013) Fuzzy c-means clustering with local information and kernel metric for image segmentation. IEEE Trans Image Process 22(2):573–584

Han SQ, Wang L (2002) Threshold method for image segmentation. Syst Eng Electr 24(6):91–94

Hancer E, Ozturk C, Karaboga D (2012) Artificial bee colony based image clustering method. In: IEEE congress on evolutionary computation, Brisbane, pp 1–5

He S, Belacel N, Hamam H, Bouslimani Y (2009) Fuzzy clustering with improved artificial fish swarm algorithm. Int Jt Conf Comput Sci Optim 2:317–321

Hruschka ER, Campello RJ, de Castro LN (2004) Evolutionary search for optimal fuzzy C-means clustering. IEEE Int Conf Fuzzy Syst 2:685–690

Karaboga D (2005) An idea based on honey bee swarm for numerical optimization. Technical report TR06, Erciyes University, Engineering Faculty, Computer Engineering Department

Kuntimad G, Ranganath HS (1999) Perfect image segmentation using pulse coupled neural networks. IEEE Trans Neural Netw 10(3):591–598

Lee CY, Leou JJ, Hsiao HH (2012) Saliency-directed color image segmentation using modified particle swarm optimization. Sig Process 92(1):1–18

Li ZZ (2013) Image segmentation technology and application based on biogeography-based optimization. Harbin Institute of Technology, Harbin

Li L, Li D (2008) Fuzzy entropy image segmentation based on particle swarm optimization. Prog Nat Sci 18(9):1167–1171

Li L, Liu X, Xu M (2007) A novel fuzzy clustering based on particle swarm optimization. In: IEEE international symposium on information technologies and applications in education, pp 88–90

Li Y, Jiao L, Shang R, Stolkin R (2015) Dynamic-context cooperative quantum-behaved particle swarm optimization based on multilevel thresholding applied to medical image segmentation. Inf Sci 294:408–422

Lin Y, Tian J (2002) Medical image segmentation methods. Pattern Recognit Artif Intell 15(2):192–204

Lin KY, Wu JH, Xu LH (2005) Color image segmentation methods. J Image Graph China 10(1):1–10

Liu L, Sun SZ, Yu H, Yue X, Zhang D (2016) A modified Fuzzy C-Means (FCM) Clustering algorithm and its application on carbonate fluid identification. J Appl Geophys 129:28–35

Ma H, Simon D (2010) Biogeography-based optimization with blended migration for constrained optimization problem. In: Proceedings of the genetic and evolutionary computation conference, pp 55–78

Ma H, Simon D, Fei M, Shu X, Chen Z (2014) Hybrid biogeography-based evolutionary algorithms. Eng Appl Artif Intell 30:213–224

Ma H, Fei M, Simon D, Chen Z (2015) Biogeography-based optimization in noisy environments. Trans Inst Meas Control 37(2):190–204

Melkemi KE, Batouche M, Foufou S (2006) A multiagent system approach for image segmentation using genetic algorithms and extremal optimization heuristics. Pattern Recogn Lett 27(11):1230–1238

Nayak J, Naik B, Behera HS (2015) Fuzzy C-means (FCM) clustering algorithm: a decade review from 2000 to 2014. In: Computational intelligence in data mining. Springer, New Delhi, vol 2, pp 133–149

Omran MG, Salman A, Engelbrecht AP (2006) Dynamic clustering using particle swarm optimization with application in image segmentation. Pattern Anal Appl 8(4):332–344

Ouadfel S, Meshoul S (2012) Handling fuzzy image clustering with a modified ABC algorithm. Int J Intell Syst Appl 4(12):65–74

Ozturk C, Hancer E, Karaboga D (2014) Color image quantization: a short review and an application with artificial bee colony algorithm. Informatica 25(3):485–503

Pal NR, Pal SK (1993) A review on image segmentation techniques. Pattern Recogn 26:1227–1248

Sharon E, Brandt A, Basri R (2000) Fast multiscale image segmentation. IEEE Conf Comput Vis Pattern Recognit 1:70–77

Simon D (2008) Biogeography-based optimization. IEEE Trans Evol Comput 12(6):702–713

Simon D (2013) Evolutionary optimization algorithms. Wiley, New York

Smiley A, Simon D (2016) Evolutionary optimization of atrial fibrillation diagnostic algorithms. Int J Swarm Intell 2(2–4):117–133

Storn R, Price K (1997) Differential evolution—a simple and efficient heuristic for global optimization over continuous spaces. J Glob Optim 11:341–359

Vincent P, Larochelle H, Bengio Y, Manzagol P (2008) Extracting and composing robust features with denoising autoencoders. In: International conference on machine learning, New York, pp 1096–1103

Wang Z, Wu X (2016) Salient object detection using biogeography-based optimization to combine features. Appl Intell 45(1):1–17

Wang XN, Feng YJ, Feng ZR (2005) Ant colony optimization for image segmentation. Int Conf Mach Learn Cybern 9:5355–5360

Xue Y, Jiang J, Zhao B, Ma T (2017) A self-adaptive artificial bee colony algorithm based on global best for global optimization. Soft Comput. https://doi.org/10.1007/s00500-017-2547-1

Yang G, Zhang Y, Yang J, Ji G, Dong Z, Wang S et al (2016) Automated classification of brain images using wavelet-energy and biogeography-based optimization. Multimed Tools Appl 75(23):15601–15617

Zheng YJ (2015) Water wave optimization: a new nature-inspired metaheuristic. Comput Oper Res 55(1):1–11

Zheng YJ, Ling HF, Wu XB, Xue JY (2014a) Localized biogeography-based optimization. Soft Comput 18(11):2323–2334

Zheng YJ, Ling HF, Xue JY (2014b) Ecogeography-based optimization: enhancing biogeography-based optimization with ecogeographic barriers and differentiation. Comput Oper Res 50(1):115–127

Zheng YJ, Ling HF, Xue JY, Chen SY (2014c) Population classification in fire evacuation: a multiobjective particle swarm optimization approach. IEEE Trans Evol Comput 18(1):70–81

Zheng YJ, Ling HF, Shi HH, Chen HS, Chen SY (2014d) Emergency railway wagon scheduling by hybrid biogeography-based optimization. Comput Oper Res 43:1–8

Zhang Y, Wang S, Dong Z, Phillip P, Ji G, Yang J (2015) Pathological brain detection in magnetic resonance imaging scanning by wavelet entropy and hybridization of biogeography-based optimization and particle swarm optimization. Prog Electromagn Res 152:41–58

Zheng YJ, Sheng WG, Sun XM, Chen SY (2016) Airline passenger profiling based on fuzzy deep machine learning. IEEE Trans Neural Netw Learn Syst. https://doi.org/10.1109/TNNLS.2016.2609437

Zheng YJ, Chen SY, Xue Y, Xue JY (2017) A Pythagorean-type fuzzy deep denoising autoencoder for industrial accident early warning. IEEE Trans Fuzzy Syst. https://doi.org/10.1109/TFUZZ.2017.2738605

Zhu WP (2009) Image segmentation based on clustering algorithms. Jiangnan University, Wuxi

Acknowledgements

This work was supported by the National Natural Science Foundation of China under Grant Nos. 61325019 and U1509207.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Informed consent

Informed consent was obtained from all individual participants included in the study.

Additional information

Communicated by V. Loia.

Cite this article

Zhang, M., Jiang, W., Zhou, X. et al. A hybrid biogeography-based optimization and fuzzy C-means algorithm for image segmentation. Soft Comput 23, 2033–2046 (2019). https://doi.org/10.1007/s00500-017-2916-9

DOI: https://doi.org/10.1007/s00500-017-2916-9