Abstract

Purpose

The purpose of this study is to investigate test-retest reliability and agreement of the quantitative contrast sensitivity function test (qCSF) in the retina clinic.

Methods

A total of 121 right eyes of 121 patients were tested and consecutively re-tested with qCSF in the retina clinic. Outcomes included area under the logarithm of contrast sensitivity function curve (AULCSF), contrast acuity, and contrast sensitivity thresholds at 1–18 cycles per degree (cpd). Test-retest means were compared with a paired t-test, variability was compared with the Brown-Forsythe test, and the intraclass correlation coefficient (ICC) and Bland-Altman plots were used to evaluate reliability and agreement.

Results

Mean test-retest differences for all qCSF metrics ranged from 0.02 to 0.05 log units, with no statistically significant differences in variability between test and retest. Standard deviations of the differences ranged from 0.08 to 0.14, and coefficients of repeatability ranged from 0.16 to 0.27 log units. ICCs exceeded 0.9 for all metrics except the threshold at 1 cpd (ICC = 0.84; all p < 0.001), and AULCSF had an ICC of 0.971.

Conclusion

qCSF-measured contrast sensitivity shows excellent test-retest repeatability and agreement in the retina clinic.

Introduction

Contrast sensitivity has recently been gaining traction as a promising visual function endpoint. Compared with visual acuity (VA), the functional endpoint traditionally used for decades, contrast sensitivity appears to correlate better with vision-related quality of life and patient-reported outcomes [1] and with structural changes in several retinal conditions [2,3,4,5,6]. Test-retest repeatability, responsiveness to time and intervention, testing efficiency, and validity against criterion tests are also crucial for the validation of a new functional endpoint.

Among the currently available contrast sensitivity tests, the quantitative contrast sensitivity function (qCSF) method appears the most promising. Thanks to its built-in active learning algorithms, the qCSF method efficiently measures the contrast sensitivity function in only 3–5 min per eye, while also personalizing testing to each patient. Further, in contrast to the traditionally used Pelli-Robson test, the qCSF test measures contrast sensitivity thresholds at multiple spatial frequencies, fulfilling the FDA requirement for the use of contrast sensitivity as a functional endpoint. It has already been used to report visual function outcomes in several ocular diseases [3,4,5, 7, 8]. At the same time, small studies suggest that qCSF contrast sensitivity shows very good test-retest reliability [9,10,11,12], whereas other contrast sensitivity tests that evaluate thresholds at various spatial frequencies have been criticized for their low test-retest reliability [8,9,10,11,12,13,14].

Recent literature points towards the validation of qCSF-measured contrast sensitivity as a functional endpoint. In terms of responsiveness to time, qCSF contrast sensitivity appears to be affected earlier in the course of neurodegenerative diseases, including age-related macular degeneration and diabetic retinopathy (DR), and to differ significantly across disease stages [4, 13, 15,16,17,18,19,20]. In terms of responsiveness to treatment, changes in qCSF contrast sensitivity appear to be larger than changes in VA following anti-vascular endothelial growth factor (anti-VEGF) injections [5, 21,22,23].

Regarding validity against criterion or construct tests such as the Pelli-Robson chart, several ongoing clinical trials directly compare qCSF results with the traditionally used Pelli-Robson test. In diabetic retinopathy alone, three ongoing trials are evaluating the validity of qCSF contrast sensitivity as a functional endpoint [24,25,26].

While ongoing studies evaluate the qCSF method in terms of responsiveness to time and intervention and validity against criterion tests, a comprehensive study of its test-retest repeatability is currently missing. Any potential visual function endpoint should be highly repeatable so that clinicians and investigators can reliably distinguish true change in visual function from test-retest variability.

Herein, we aim to investigate and report on the test-retest reliability and agreement of the qCSF method in the retina clinic, adding a piece of evidence towards the validation of qCSF contrast sensitivity as a functional endpoint.

Methods

Study design

This is a cross-sectional, observational, single-center study including patients from the retina clinic at Massachusetts Eye and Ear (MEE) who were recruited and tested from June 2021 to December 2022. The Institutional Review Boards at Massachusetts General Brigham approved the study protocol. Informed consent was waived because qCSF-measured contrast sensitivity is part of standard clinical testing at MEE and data were retrospectively reviewed. The study complied with the Health Insurance Portability and Accountability Act of 1996 and adhered to the tenets of the Declaration of Helsinki.

Subject enrollment and standard clinical testing

All retina clinic patients without previous experience of qCSF-measured contrast sensitivity testing were eligible for this test-retest study of the qCSF device. Only the right eye of each participant was tested. Exclusion criteria were best corrected visual acuity (BCVA) worse than 20/200 or inability to complete testing. All subjects underwent a comprehensive ophthalmic examination including history taking, VA measurement with Snellen charts, intraocular pressure measurement, color fundus photography, spectral-domain optical coherence tomography (OCT) imaging, slit lamp examination, and dilated fundus examination. Demographic and clinical characteristics, such as lens status, were recorded. Lens status was graded using the Lens Opacities Classification System (LOCS) III [7, 27] and then simplified for the purposes of the multivariate regression analysis into nuclear sclerosis (NS) grades based on nuclear opalescence (NO) and nuclear color (NC): a clear lens was graded as “clear”; NO1NC1 as 1+NS; NO2NC1, NO1NC2, or NO2NC2 as 2+NS; NO3NC1-3 and NO1-2NC3 as 3+NS; and the presence of NO4 and/or NC4 as 4+NS.
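Every rule in the LOCS III simplification above amounts to taking the worse of the two nuclear grades. The following is a minimal sketch, assuming NO and NC have already been recorded as integer grades with 0 standing for a clear lens (a hypothetical encoding) and that grades above 4 also fall into 4+NS; the text does not cover combinations such as NO0NC1, which this sketch maps by the same larger-grade rule. The function name is hypothetical.

```python
def simplify_lens_grade(no: int, nc: int) -> str:
    """Map LOCS III nuclear opalescence (NO) and nuclear color (NC) grades
    to the simplified nuclear sclerosis (NS) categories.

    Hypothetical encoding: integer grades, with 0 meaning a clear lens.
    Each rule in the text equals the larger of the two grades, capped at 4+NS.
    """
    if no < 0 or nc < 0:
        raise ValueError("LOCS III grades must be non-negative")
    worst = max(no, nc)
    if worst == 0:
        return "clear"
    return f"{min(worst, 4)}+NS"


# Usage: combinations named in the grading rules.
for no, nc in [(1, 1), (2, 1), (1, 2), (2, 2), (3, 1), (2, 3), (4, 2)]:
    print(f"NO{no}NC{nc} -> {simplify_lens_grade(no, nc)}")
```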

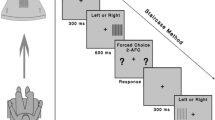

qCSF-measured contrast sensitivity test and re-test methodology

Contrast sensitivity was measured using the qCSF method on the AST platform (Adaptive Sensory Technology, San Diego, CA, USA), as previously described [13]. In brief, the qCSF method estimates the contrast sensitivity function by presenting spatially filtered optotypes that vary in both spatial frequency and contrast, enabling efficient testing of contrast sensitivity across multiple spatial frequencies in parallel [10]. Three filtered Sloan letters of the same spatial frequency and decreasing contrast are displayed simultaneously in a horizontal line on an LED screen at a viewing distance of 400 cm. The contrast of the right-most letter is chosen by the qCSF method and is usually near threshold, with the middle and left-most letters displayed at two and four times the contrast of the right-most letter, respectively [10]. The patient reads the three letters on each screen aloud to the examiner, who operates the test with a handheld tablet, recording “correct,” “incorrect,” or “no response.” The built-in adaptive Bayesian active learning algorithm uses a one-step-ahead search to identify the next stimulus (defined by spatial frequency and contrast) that maximizes the expected information gain [10], so that data collected at a single spatial frequency improve sensitivity estimates across all frequencies. This allows the device to display to each patient personalized optotypes with optimal contrast-spatial frequency combinations based on their previous responses. Using this active learning sampling, the qCSF method generates a contrast sensitivity function curve spanning spatial frequencies from 1 cycle per degree (cpd) to 18 cpd. Testing takes 3–5 min per eye [10], short enough for a contrast sensitivity test to be integrated into routine clinical practice.

To investigate test-retest variability and agreement, each participant’s right eye was tested twice consecutively on the same day.
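The one-step-ahead Bayesian search described in the qCSF methodology can be illustrated with a deliberately simplified Python sketch. It is not the AST or qCSF implementation: the full method [10] maintains a posterior over the parameters of a whole contrast sensitivity function model, whereas here the only unknown is a single log10 contrast threshold, each trial yields one correct/incorrect response rather than three letters, and the logistic psychometric function (10% guess rate, 2% lapse rate, slope of 8 per log unit) is an assumption. All names are hypothetical. On each simulated trial the sketch picks the contrast with the largest expected information gain, computed as the mutual information between the next response and the threshold, and then updates the posterior with Bayes' rule.

```python
import math
import random

# Hypothetical 1-D simplification of a Bayesian adaptive search: the only
# unknown is one log10 contrast threshold (the full qCSF uses a whole CSF model).
THRESHOLDS = [-3.0 + 0.05 * i for i in range(61)]  # candidate thresholds (log10 contrast)
STIMULI = [-3.0 + 0.05 * i for i in range(61)]     # candidate stimulus contrasts (log10)
GUESS, LAPSE, SLOPE = 0.1, 0.02, 8.0                # assumed psychometric parameters


def p_correct(stim, thr):
    """Probability of a correct report: logistic in log10 contrast with guess/lapse."""
    core = 1.0 / (1.0 + math.exp(-SLOPE * (stim - thr)))
    return GUESS + (1.0 - GUESS - LAPSE) * core


def entropy(p):
    """Binary entropy in bits."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))


def expected_gain(prior, stim):
    """Mutual information (bits) between the next response and the threshold."""
    probs = [p_correct(stim, thr) for thr in THRESHOLDS]
    p_mean = sum(w * p for w, p in zip(prior, probs))
    return entropy(p_mean) - sum(w * entropy(p) for w, p in zip(prior, probs))


def update(prior, stim, correct):
    """Bayes' rule: weight each threshold by the response likelihood, then renormalise."""
    post = [w * (p_correct(stim, thr) if correct else 1.0 - p_correct(stim, thr))
            for w, thr in zip(prior, THRESHOLDS)]
    total = sum(post)
    return [w / total for w in post]


def run(true_thr, n_trials=60, seed=1):
    """Simulate an observer and return (posterior mean threshold, posterior)."""
    rng = random.Random(seed)
    posterior = [1.0 / len(THRESHOLDS)] * len(THRESHOLDS)  # flat prior
    for _ in range(n_trials):
        # One-step-ahead search: choose the stimulus with the largest expected gain.
        stim = max(STIMULI, key=lambda s: expected_gain(posterior, s))
        correct = rng.random() < p_correct(stim, true_thr)  # simulated response
        posterior = update(posterior, stim, correct)
    mean = sum(w * thr for w, thr in zip(posterior, THRESHOLDS))
    return mean, posterior


estimate, posterior = run(true_thr=-1.5)
print(f"posterior mean threshold: {estimate:.2f} log10 contrast")
```

With a fixed seed, the posterior mean should land close to the simulated threshold of −1.5 after 60 trials. Extending the idea to a full contrast sensitivity function means replacing the threshold grid with a grid over the model's parameters, so that every response updates the estimate at all spatial frequencies at once.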

Statistical analysis and test-retest reliability and agreement outcomes

Statistical analysis was performed using R version 4.1.2 in RStudio. Population demographics and ocular characteristics were described using standard descriptive statistics. Data that were not normally distributed were reported as median with interquartile range (IQR). To evaluate test-retest repeatability, variability, and agreement of qCSF-measured contrast sensitivity, the following outcomes were assessed for each qCSF metric: (1) the means of the test and re-test measurements were compared using a paired t-test; (2) the variability of the test and re-test measurements was compared using the Brown-Forsythe test, since the data were not normally distributed; (3) the intraclass correlation coefficient (ICC) with 95% confidence intervals (CI) was used to evaluate reliability between test and re-test measurements; and (4) Bland-Altman plots with mean difference (MD), coefficients of repeatability (CoR), and 95% limits of agreement (LoA) (set at two standard deviations) were used to evaluate agreement and repeatability between test and re-test measurements [28]. Statistical significance was set at p < 0.05. Density and box plots were generated to evaluate the distribution of results in the test and re-test trials.
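Outcomes (1) and (2) can be sketched in pure Python, assuming the test and retest values for one qCSF metric are stored as two equally ordered lists. This is not the study's R code; the function names and the five-eye data are hypothetical. The paired t-test uses the retest-minus-test differences, and the Brown-Forsythe statistic is a one-way ANOVA F on absolute deviations from each session's median, treating the two sessions as two groups. The returned p-value uses a normal approximation that is only reasonable for large samples; `scipy.stats.ttest_rel` and `scipy.stats.levene(..., center="median")` (the Brown-Forsythe form of Levene's test) give exact p-values.

```python
import math
import statistics


def paired_t(test, retest):
    """Paired t-test on retest - test differences.

    Returns (t, df, p_approx). p_approx uses a normal approximation to the
    t distribution, so it is only reasonable for large samples.
    """
    diffs = [b - a for a, b in zip(test, retest)]
    n = len(diffs)
    t = statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    p_approx = 2.0 * (1.0 - statistics.NormalDist().cdf(abs(t)))
    return t, n - 1, p_approx


def brown_forsythe(*groups):
    """Brown-Forsythe statistic: one-way ANOVA F on |x - group median|.

    Returns (F, (df_between, df_within)).
    """
    z = [[abs(x - statistics.median(g)) for x in g] for g in groups]
    k = len(z)
    n_total = sum(len(g) for g in z)
    means = [statistics.mean(g) for g in z]
    grand = sum(sum(g) for g in z) / n_total
    between = sum(len(g) * (m - grand) ** 2 for g, m in zip(z, means))
    within = sum((x - m) ** 2 for g, m in zip(z, means) for x in g)
    f_stat = ((n_total - k) * between) / ((k - 1) * within)
    return f_stat, (k - 1, n_total - k)


# Made-up log contrast sensitivity values for five eyes (illustration only).
test = [1.00, 1.20, 1.10, 1.30, 0.90]
retest = [1.05, 1.22, 1.16, 1.33, 0.97]
t, df, _ = paired_t(test, retest)
f_stat, f_df = brown_forsythe(test, retest)
print(f"paired t = {t:.2f} on {df} df; Brown-Forsythe F = {f_stat:.3f} on {f_df} df")
```

Because every retest value here is slightly higher, even these small differences give a large t, which mirrors how a consistent learning effect can reach significance while variability (the Brown-Forsythe F) stays unchanged.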

Results

Demographics

Our cohort comprised 121 eyes of 121 patients. The mean age was 58 ± 18.4 years (range, 13 to 89 years). Fifty-seven percent of patients were male and 43% were female. Regarding race, 76.9% were white, 6.6% Asian, 4.1% black or African American, and 9.1% another race; for 3.3%, race was declined or unavailable. Regarding ethnicity, 84.3% were non-Hispanic and 10.7% Hispanic; for 5%, ethnicity was declined or unavailable. Lens status was clear in 25.6%, 1+NS in 28.9%, 2+NS in 14.1%, and 3+NS in 5% of eyes, and 26.5% of eyes were pseudophakic. Mean BCVA was 0.21 ± 0.29 logMAR (Snellen equivalent of mean ± SD, 20/17 to 20/63), ranging from −0.12 to 1.40 logMAR (20/15 to 20/502). Ocular diagnoses included: retinal detachment (n=21), epiretinal membrane (n=18), diabetic retinopathy (n=13), age-related macular degeneration (n=12), retinal tear or hole (n=10), lattice degeneration (n=10), central serous chorioretinopathy (n=7), diabetes mellitus without diabetic retinopathy (n=6), open-angle glaucoma (n=5), high myopia (n=4), macular hole (n=4), retinal vein occlusion (n=4), ocular hypertension (n=3), central retinal artery occlusion (n=2), history of retinopathy of prematurity (n=2), choroidal metastases (n=1), long-term hydroxychloroquine use without retinopathy (n=1), cranial nerve VII palsy (n=1), graft versus host disease (n=1), hypertensive retinopathy (n=1), and vitreomacular adhesion (n=1).
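The Snellen equivalents quoted for the cohort follow from logMAR = log10(MAR), with MAR in minutes of arc, so a logMAR value L corresponds to 20/(20 × 10^L) on a 20-ft Snellen chart. A small Python check (the function name is hypothetical) reproduces 20/17 and 20/63 for mean ± SD, and 20/15 and 20/502 for the range:

```python
def logmar_to_snellen_denominator(logmar: float, numerator: int = 20) -> int:
    """Snellen denominator for a logMAR value: numerator * 10**logMAR, rounded."""
    return round(numerator * 10 ** logmar)


mean, sd = 0.21, 0.29
for label, value in [("mean - SD", mean - sd), ("mean + SD", mean + sd),
                     ("best", -0.12), ("worst", 1.40)]:
    print(f"{label}: {value:+.2f} logMAR -> 20/{logmar_to_snellen_denominator(value)}")
```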

Descriptive statistics

Density plots of the test and re-test measurements for each qCSF metric revealed skewed distributions and a mild learning effect (Supplemental Figure 1). Similarly, box plots revealed slightly higher means for the re-test measurements, consistent with the mild learning effect, and similar test-retest variability for all qCSF metrics (Fig. 1).

Fig. 1 Box plots displaying the distribution of test and re-test measurements of the quantitative contrast sensitivity function (qCSF) test. The box represents the interquartile range (the two middle quartiles) of the sample, and the horizontal line within the box is the mean of the sample. The vertical lines extending from the box represent the range of the data. AULCSF, area under the logarithm of the contrast sensitivity function; CA, contrast acuity; CPD, cycles per degree

Test-retest reliability and variability

The differences between the test and retest means ranged from 0.02 to 0.05 log units across qCSF metrics (Table 1). Paired t-tests found these differences to be statistically significant despite their small absolute size (Table 1). The Brown-Forsythe test revealed no significant differences in variability between test and retest measurements for any qCSF metric (Table 2).

ICCs indicated strong test-retest reliability for all qCSF metrics, with all values > 0.9 except for the 1 cpd threshold (ICC = 0.838, Table 3). AULCSF had the highest ICC (ICC = 0.971, p < 0.001, Table 3).
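The study does not state which ICC form was used, so the following pure-Python sketch computes two common single-measure forms from the Shrout and Fleiss two-way ANOVA mean squares: ICC(2,1), absolute agreement, which is lowered by a systematic retest shift such as a learning effect, and ICC(3,1), consistency, which ignores such a shift. The function name and data are hypothetical; each row holds one eye's test and retest values.

```python
def icc(ratings):
    """Shrout-Fleiss ICC(2,1) and ICC(3,1) for an n-subjects x k-sessions table."""
    n, k = len(ratings), len(ratings[0])
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)      # between subjects
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)      # between sessions
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols                     # residual
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    icc2 = (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n)
    icc3 = (ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error)
    return icc2, icc3


# Hypothetical data: every retest value is exactly 0.5 above its test value.
offset = [[1.0, 1.5], [2.0, 2.5], [3.0, 3.5], [4.0, 4.5]]
icc2, icc3 = icc(offset)
print(f"ICC(2,1) = {icc2:.3f}, ICC(3,1) = {icc3:.3f}")
```

For this constant-offset table, ICC(3,1) equals 1 while ICC(2,1) is 40/43 (about 0.93), which shows why the choice of form matters when retest values are systematically higher.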

The mean difference ± standard deviation, with the coefficient of repeatability (CoR) in parentheses, for each qCSF metric was: AULCSF 0.04 ± 0.08 (0.16), CA 0.03 ± 0.09 (0.17), 1 cpd 0.03 ± 0.14 (0.27), 1.5 cpd 0.03 ± 0.12 (0.22), 3 cpd 0.04 ± 0.11 (0.21), 6 cpd 0.05 ± 0.13 (0.26), 12 cpd 0.04 ± 0.14 (0.26), and 18 cpd 0.02 ± 0.10 (0.19) (Table 3, Fig. 2). Upper and lower limits of agreement (LoA) and 95% confidence intervals are also shown in Table 3 and Fig. 2. A mild learning effect was observed in all qCSF metrics, as mean retest measurements were consistently 0.02–0.05 log units higher than the respective mean test measurements (Fig. 2). For AULCSF, the 95% LoA indicate that for 95% of patients, the difference between retest and test ranges from −0.12 to +0.21 (without correcting for the learning effect), with a mean difference of +0.04.
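The Bland-Altman quantities reported above can be computed from paired data in a few lines of Python. This sketch uses a hypothetical function name and made-up five-eye data; it defines CoR as k × SD of the retest-minus-test differences and the limits of agreement as MD ± CoR. The conventional multiplier is k = 1.96, while the Methods describe the limits as set at two standard deviations, so k is left as a parameter.

```python
import statistics


def bland_altman(test, retest, k=1.96):
    """Bland-Altman summary for paired test/retest data.

    Returns (mean difference, SD of differences, CoR = k * SD, (lower LoA, upper LoA)).
    Differences are retest minus test, so a positive mean means higher retest values.
    """
    diffs = [b - a for a, b in zip(test, retest)]
    md = statistics.mean(diffs)
    sd = statistics.stdev(diffs)
    cor = k * sd
    return md, sd, cor, (md - cor, md + cor)


# Made-up values for five eyes (illustration only, not study data).
test = [1.00, 1.20, 1.10, 1.30, 0.90]
retest = [1.05, 1.22, 1.16, 1.33, 0.97]
md, sd, cor, (lower, upper) = bland_altman(test, retest)
print(f"MD = {md:.3f}, SD = {sd:.3f}, CoR = {cor:.3f}, LoA = [{lower:.3f}, {upper:.3f}]")

# The same formula applied to the rounded AULCSF summary values reported above.
print(f"AULCSF LoA from rounded values: {0.04 - 1.96 * 0.08:.2f} to {0.04 + 1.96 * 0.08:.2f}")
```

Applied to the rounded AULCSF values (mean difference 0.04, SD 0.08), the last line gives limits of about −0.12 to +0.20; the reported +0.21 is consistent with the unrounded inputs.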

Fig. 2 Bland-Altman plots showing the difference between the re-test and test measurements of the quantitative contrast sensitivity function (qCSF) test. The solid black line represents the mean difference between the trials, and the dotted red lines represent the 95% limits of agreement for each qCSF outcome metric. AULCSF, area under the logarithm of the contrast sensitivity function; CA, contrast acuity; CPD, cycles per degree; CI, confidence interval

Discussion

In this study, we comprehensively evaluated the test-retest reliability and variability of qCSF-measured contrast sensitivity in the retina clinic. Our results showed very strong reliability (ICC values) and test-retest agreement (Bland-Altman-derived CoR and limits of agreement) for all qCSF outcome metrics, adding a piece of evidence towards validating qCSF-measured contrast sensitivity as a functional endpoint.

In particular, the Bland-Altman analysis revealed a mean difference of 0.02–0.05 log units between test and retest measurements for all qCSF metrics. Standard deviations of the differences ranged from 0.08 to 0.14, and CoR ranged from 0.16 to 0.27, without correcting for the learning effect that was evident in our cohort of qCSF-naive participants (Table 4). ICC values were very strong for all qCSF metrics (all ICC > 0.9 except the threshold at 1 cpd, where ICC = 0.84; all p < 0.001). This is in line with the finding that the variability of each qCSF metric did not differ significantly between test and retest. The means of the test and retest trials differed significantly for all qCSF metrics, yet the absolute difference between them appears clinically insignificant (≤0.05 log units). This can be explained by the power of the paired t-test to detect even small systematic shifts when the differences are tightly distributed across 121 eyes, and by the mild learning effect observed in our qCSF-naive participants. The learning effect is also apparent in the Bland-Altman plots, where mean differences are positive for all qCSF metrics and the upper limit of agreement lies farther from zero than the lower limit (Fig. 2).

Our findings are in line with previous, smaller reports of qCSF repeatability in healthy volunteers [9, 11, 12], indicating that qCSF-measured contrast sensitivity has excellent test-retest repeatability, variability, and agreement even in eyes with retinal diseases. Unsurprisingly, same-visit repeatability (as reported herein) was better than between-visit repeatability assessed at visits spaced up to 4 months apart (CoR 0.16-0.27 vs 0.21-27) [18]. Surprisingly, though, the AULCSF CoR we report for eyes with retinal diseases (CoR = 0.16) was as good as the CoR reported for the qCSF in healthy volunteers [9], matching the step-wise resolution of the Pelli-Robson chart (0.15 logCS change between consecutive letter triplets).

In Table 4, we provide a comprehensive comparison of repeatability metrics between qCSF-measured contrast sensitivity and the other currently available contrast sensitivity testing methods [29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45]. qCSF repeatability measures are equal to or better than those of the Pelli-Robson and Mars tests (Table 4), while the qCSF additionally provides contrast sensitivity thresholds at multiple spatial frequencies, as the FDA mandates. Other currently available contrast sensitivity tests that operate across multiple spatial frequencies (such as FACT, Vistech, and CSV-1000) have been criticized for their large variability and therefore limited clinical use (Table 4) [14]. As no functional endpoint other than ETDRS visual acuity is currently validated and accepted, we also provide a comparison of qCSF-measured contrast sensitivity and VA in terms of repeatability measures (Table 4).

The US Food and Drug Administration (FDA) recommends change in visual function as a primary endpoint in trials assessing novel ocular therapeutics. A change of 15 Early Treatment Diabetic Retinopathy Study (ETDRS) letters in high-contrast BCVA has consistently been used for the approval of ocular therapeutics. Yet, in most retinal conditions, VA is insensitive to early changes [1, 2, 5, 8, 15, 46, 47]. It is not uncommon for patients with very good VA to have subjective visual complaints and contrast sensitivity deficits [48,49,50]. However, BCVA is currently the only validated visual function endpoint recognized by regulators. This paucity of accepted and validated endpoints calls for the evaluation of new functional endpoints that could change the paradigm of visual function testing. qCSF-measured contrast sensitivity emerges as a promising endpoint because it is (1) measured in a time-efficient manner (2–5 min per eye) [10, 13]; (2) strongly associated with patient-reported outcomes, even more so than VA [1]; (3) sensitive to longitudinal changes and better than VA at differentiating between disease stages [4, 17]; (4) well correlated with structural biomarkers in retinal disorders, even more so than VA [3, 4, 17,18,19,20]; and (5) highly repeatable, as presented herein.

The main limitation of the study was the diversity of retinal conditions included, although this was intended to represent the population of a real-world retina clinic, where clinicians seek to measure visual function in a sensitive, time-efficient, and repeatable way. We sought to measure the test-retest repeatability and variability of the qCSF method in the “general population” of a retina clinic, irrespective of condition, in the same way visual acuity is used. Following the same logic, the inclusion of a broad range of ages and visual acuities is among the strengths of this study, and same-day consecutive testing and retesting further strengthens our results. In addition, our moderate sample size did not allow for sub-analyses of qCSF test-retest repeatability by visual acuity stratum or retinal condition; nevertheless, it provides an overall testament to the performance of the qCSF method in the retina clinic. Future work evaluating qCSF’s reliability and variability across different sites will provide even more solid evidence towards validating qCSF as a functional endpoint.

In conclusion, in this study, we present data showing very strong reliability (ICC values) and test-retest repeatability and agreement (Bland-Altman-derived CoR and limits of agreement) for all qCSF outcome metrics, adding a piece of evidence towards validating qCSF-measured contrast sensitivity as a functional endpoint.

References

Vingopoulos F, Bannerman A, Zhou P, Koch T, Wescott HE, Kim L, Vavvas D, Miller JW, Miller JB (2023) Towards the validation of quantitative contrast sensitivity as a clinical endpoint: correlations with vision-related quality of life in bilateral AMD. Br J Ophthalmol. https://doi.org/10.1136/bjo-2023-323507

Thomas M, Silverman RF, Vingopoulos F, Kasetty M, Yu G, Kim EL, Omari AA, Joltikov KA, Choi EY, Kim LA, Zacks DN, Miller JB (2021) Active learning of contrast sensitivity to assess visual function in macula-off retinal detachment. J Vitreoretin Dis 5:313–320. https://doi.org/10.1177/2474126420961957

Zeng R, Vingopoulos F, Wang M, Bannerman A, Wescott HE, Baldwin G, Katz R, Koch T, Elze T, Kim LA, Vavvas DG, Husain D, Miller JB (2023) Structure-function association between contrast sensitivity and retinal thickness (total, regional, and individual retinal layer) in patients with idiopathic epiretinal membrane. Graefes Arch Clin Exp Ophthalmol 261:631–639. https://doi.org/10.1007/s00417-022-05819-y

Vingopoulos F, Baldwin G, Katz R, Garg I, Cui Y, Moon JY, Patel NA, Wu DM, Husain D, Miller JW, Kim LA, Vavvas DG, Miller JB (2022) Structure-function associations between contrast sensitivity (CS) and vascular metrics on wide field swept source optical coherence tomography angiography (WF SS OCTA) across stages of diabetic retinopathy. Invest Ophthalmol Vis Sci 63:2206 – F0269

Silverman RF, Kasetty M, Vingopoulos F, Katz R, Cho J, Lesmes LA, Zacks DN, Kim LA, Miller JB (2020) Measuring contrast sensitivity function with active learning in retinal vein occlusion: a new endpoint of visual function. Ophthalmic Surg Lasers Imaging Retina 51:392–400. https://doi.org/10.3928/23258160-20200702-04

Wang J, Cui Y, Vingopoulos F, Kasetty M, Silverman RF, Katz R, Kim L, Miller JB (2022) Disorganisation of retinal inner layers is associated with reduced contrast sensitivity in retinal vein occlusion. Br J Ophthalmol 106:241–245. https://doi.org/10.1136/bjophthalmol-2020-317615

Vingopoulos F, Garg I, Kim EL, Thomas M, Silverman RF, Kasetty M, Hassan ZY, Yu G, Joltikov K, Choi EY, Laíns I, Kim LA, Zacks DN, Miller JB (2022) Quantitative contrast sensitivity test to assess visual function in central serous chorioretinopathy. Br J Ophthalmol 107(8):1139–1143. https://doi.org/10.1136/bjophthalmol-2021-320415

Vingopoulos F, Kasetty M, Garg I, Silverman RF, Katz R, Vasan RA, Lorch AC, Luo ZK, Miller JB (2022) Active learning to characterize the full contrast sensitivity function in cataracts. Clin Ophthalmol 16:3109–3118. https://doi.org/10.2147/opth.S367490

Chen Z, Zhuang Y, Xu Z, Chan LYL, Zhang S, Ye Q, Feng L, Lu ZL, Li J (2021) Sensitivity and stability of functional vision tests in detecting subtle changes under multiple simulated conditions. Transl Vis Sci Technol 10:7. https://doi.org/10.1167/tvst.10.7.7

Lesmes LA, Lu ZL, Baek J, Albright TD (2010) Bayesian adaptive estimation of the contrast sensitivity function: the quick CSF method. J Vis 10:17.11-21. https://doi.org/10.1167/10.3.17

Thurman SM, Davey PG, McCray KL, Paronian V, Seitz AR (2016) Predicting individual contrast sensitivity functions from acuity and letter contrast sensitivity measurements. J Vis 16:15. https://doi.org/10.1167/16.15.15

Rosenkranz SC, Kaulen B, Zimmermann HG, Bittner AK, Dorr M, Stellmann JP (2021) Validation of computer-adaptive contrast sensitivity as a tool to assess visual impairment in multiple sclerosis patients. Front Neurosci 15:591302. https://doi.org/10.3389/fnins.2021.591302

Vingopoulos F, Wai KM, Katz R, Vavvas DG, Kim LA, Miller JB (2021) Measuring the contrast sensitivity function in non-neovascular and neovascular age-related macular degeneration: the quantitative contrast sensitivity function test. J Clin Med 10:2768. https://doi.org/10.3390/jcm10132768

Richman J, Spaeth GL, Wirostko B (2013) Contrast sensitivity basics and a critique of currently available tests. J Cataract Refract Surg 39:1100–1106. https://doi.org/10.1016/j.jcrs.2013.05.001

Joltikov KA, de Castro VM, Davila JR, Anand R, Khan SM, Farbman N, Jackson GR, Johnson CA, Gardner TW (2017) Multidimensional functional and structural evaluation reveals neuroretinal impairment in early diabetic retinopathy. Invest Ophthalmol Vis Sci 58:BIO277–BIO290. https://doi.org/10.1167/iovs.17-21863

Wai KM, Vingopoulos F, Garg I, Kasetty M, Silverman RF, Katz R, Laíns I, Miller JW, Husain D, Vavvas DG, Kim LA, Miller JB (2022) Contrast sensitivity function in patients with macular disease and good visual acuity. Br J Ophthalmol 106:839–844. https://doi.org/10.1136/bjophthalmol-2020-318494

Vingopoulos F, Patel N, Katz R, Garg I, Lu ES, Lains I, Kasetty M, Silverman R, Nigalye A, Lesmes LA, Kim IK, Kim LA, Husain D, Miller JW, Vavvas DG, Miller JB (2021) Contrast sensitivity function in non-neovascular age-related macular degeneration measured with active learning. Invest Ophthalmol Vis Sci 62:328–328

Ou WC, Lesmes LA, Christie AH, Denlar RA, Csaky KG (2021) Normal- and low-luminance automated quantitative contrast sensitivity assessment in eyes with age-related macular degeneration. Am J Ophthalmol 226:148–155. https://doi.org/10.1016/j.ajo.2021.01.017

Baldwin G, Vingopoulos F, Garg I, Moon JY, Zeng R, Cui Y, Katz R, Le R, Lu ES, Sayah DN, Hassan Z, Kim LA, Elze T, Husain D, Miller JB (2023) Structure-function associations between contrast sensitivity and widefield swept-source optical coherence tomography angiography in diabetic macular edema. Graefes Arch Clin Exp Ophthalmol. https://doi.org/10.1007/s00417-023-06086-1

Baldwin G, Vingopoulos F, Zeng R, Wescott H, Bannerman A, Koch T, Wang K, Garg I, Katz R, Kim LA, Miller JB (2023) Association between contrast sensitivity and central subfield thickness in center-involving diabetic macular edema. J Vitreoretin Dis 7:232–238. https://doi.org/10.1177/24741264231165611

Preti RC, Ramirez LM, Pimentel SL, Motta AA, Machado CG, Monteiro ML, Takahashi WY (2012) Single intravitreal bevacizumab injection effects on contrast sensitivity in macular edema from branch retinal vein occlusion. Arq Bras Oftalmol 75:29–32. https://doi.org/10.1590/s0004-27492012000100006

Preti RC, Ramirez LM, Pimentel SL, Nakashima Y, Machado CG, Pelayes DE, Monteiro ML, Takahashi WY (2014) Effect of a single intravitreal bevacizumab injection on contrast sensitivity and macular thickness in eyes with macular edema from central retinal vein occlusion: a prospective, nonrandomized, three-month follow-up study. Ophthalmic Res 51:140–145. https://doi.org/10.1159/000357737

Whang K, Baldwin G, Vingopoulos F, Garg I, Wescott H, Koch T, Zeng R, Lains I, Tracy J, Miller JW, Husain D, Vavvas DG, Miller JB (2023) Changes in qCSF contrast sensitivity following anti-VEGF injection in exudative age-related macular degeneration. Invest Ophthalmol Vis Sci 64:OD71

Chew E (2023) A randomized clinical trial evaluating fenofibrate for prevention of diabetic retinopathy worsening. ClinicalTrials.gov identifier: NCT04661358. https://clinicaltrials.gov/study/NCT04661358. Accessed 19 Jun 2023

Hoffmann-La Roche (2023) A randomized, double-masked, 48-week, parallel-group, placebo-controlled, proof-of-concept study to investigate the efficacy and safety of RG7774 in patients with diabetes mellitus type 1 or type 2 with treatment-naive diabetic retinopathy. ClinicalTrials.gov identifier: NCT04265261. https://clinicaltrials.gov/study/NCT04265261. Accessed 19 Jun 2023

Greater Houston Retina Research (2023) Faricimab for retinal non-perfusion associated with non-proliferative diabetic retinopathy: the MAGIC phase 2, multi-center, open-label, randomized controlled trial. ClinicalTrials.gov identifier: NCT05681884. https://clinicaltrials.gov/study/NCT05681884. Accessed 19 Jun 2023

Chylack LT Jr, Wolfe JK, Singer DM, Leske MC, Bullimore MA, Bailey IL, Friend J, McCarthy D, Wu SY (1993) The lens opacities classification system III. The longitudinal study of cataract study group. Arch Ophthalmol 111:831–836. https://doi.org/10.1001/archopht.1993.01090060119035

Bland JM, Altman DG (1986) Statistical methods for assessing agreement between two methods of clinical measurement. Lancet 1:307–310

Arditi A, Cagenello R (1993) On the statistical reliability of letter-chart visual acuity measurements. Invest Ophthalmol Vis Sci 34:120–129

Chaikitmongkol V, Nanegrungsunk O, Patikulsila D, Ruamviboonsuk P, Bressler NM (2018) Repeatability and agreement of visual acuity using the ETDRS number chart, Landolt C chart, or ETDRS alphabet chart in eyes with or without sight-threatening diseases. JAMA Ophthalmol 136:286–290. https://doi.org/10.1001/jamaophthalmol.2017.6290

Veselý P, Synek S (2012) Repeatability and reliability of the visual acuity examination on logMAR ETDRS and Snellen chart. Cesk Slov Oftalmol 68:71–75

Patel PJ, Chen FK, Rubin GS, Tufail A (2009) Intersession repeatability of contrast sensitivity scores in age-related macular degeneration. Invest Ophthalmol Vis Sci 50:2621–2625. https://doi.org/10.1167/iovs.08-2407

Phung L, Gregori NZ, Ortiz A, Shi W, Schiffman JC (2016) Reproducibility and comparison of visual acuity obtained with Sightbook mobile application to near card and Snellen chart. Retina 36:1009–1020. https://doi.org/10.1097/iae.0000000000000818

Faria BM, Duman F, Zheng CX, Waisbourd M, Gupta L, Ali M, Zangalli C, Lu L, Wizov SS, Spaeth E, Richman J, Spaeth GL (2015) Evaluating contrast sensitivity in age-related macular degeneration using a novel computer-based test, the Spaeth/Richman contrast sensitivity test. Retina 35:1465–1473. https://doi.org/10.1097/iae.0000000000000474

Gupta L, Cvintal V, Delvadia R, Sun Y, Erdem E, Zangalli C, Lu L, Wizov SS, Richman J, Spaeth E, Spaeth GL (2017) SPARCS and Pelli-Robson contrast sensitivity testing in normal controls and patients with cataract. Eye (Lond) 31:753–761. https://doi.org/10.1038/eye.2016.319

Haymes SA, Roberts KF, Cruess AF, Nicolela MT, LeBlanc RP, Ramsey MS, Chauhan BC, Artes PH (2006) The letter contrast sensitivity test: clinical evaluation of a new design. Invest Ophthalmol Vis Sci 47:2739–2745. https://doi.org/10.1167/iovs.05-1419

Rubin GS, Bandeen-Roche K, Huang GH, Muñoz B, Schein OD, Fried LP, West SK (2001) The association of multiple visual impairments with self-reported visual disability: SEE project. Invest Ophthalmol Vis Sci 42:64–72

Haymes SA, Chen J (2004) Reliability and validity of the Melbourne edge test and high/low contrast visual acuity chart. Optom Vis Sci 81:308–316. https://doi.org/10.1097/01.opx.0000134904.21274.db

Kaiser PK (2009) Prospective evaluation of visual acuity assessment: a comparison of Snellen versus ETDRS charts in clinical practice (an AOS thesis). Trans Am Ophthalmol Soc 107:311–324

Dougherty BE, Flom RE, Bullimore MA (2005) An evaluation of the Mars letter contrast sensitivity test. Optom Vis Sci 82:970–975. https://doi.org/10.1097/01.opx.0000187844.27025.ea

Hong YT, Kim SW, Kim EK, Kim TI (2010) Contrast sensitivity measurement with 2 contrast sensitivity tests in normal eyes and eyes with cataract. J Cataract Refract Surg 36:547–552. https://doi.org/10.1016/j.jcrs.2009.10.048

Pesudovs K, Hazel CA, Doran RM, Elliott DB (2004) The usefulness of Vistech and FACT contrast sensitivity charts for cataract and refractive surgery outcomes research. Br J Ophthalmol 88:11–16. https://doi.org/10.1136/bjo.88.1.11

Reeves BC, Wood JM, Hill AR (1991) Vistech VCTS 6500 charts: within- and between-session reliability. Optom Vis Sci 68:728–737. https://doi.org/10.1097/00006324-199109000-00010

Kelly SA, Pang Y, Klemencic S (2012) Reliability of the CSV-1000 in adults and children. Optom Vis Sci 89:1172–1181. https://doi.org/10.1097/OPX.0b013e318264097b

Pomerance GN, Evans DW (1994) Test-retest reliability of the CSV-1000 contrast test and its relationship to glaucoma therapy. Invest Ophthalmol Vis Sci 35:3357–3361

Cocce KJ, Stinnett SS, Luhmann UFO, Vajzovic L, Horne A, Schuman SG, Toth CA, Cousins SW, Lad EM (2018) Visual function metrics in early and intermediate dry age-related macular degeneration for use as clinical trial endpoints. Am J Ophthalmol 189:127–138. https://doi.org/10.1016/j.ajo.2018.02.012

Sunness JS, Rubin GS, Broman A, Applegate CA, Bressler NM, Hawkins BS (2008) Low luminance visual dysfunction as a predictor of subsequent visual acuity loss from geographic atrophy in age-related macular degeneration. Ophthalmology 115:1480–1488.e2. https://doi.org/10.1016/j.ophtha.2008.03.009

Owsley C, Huisingh C, Clark ME, Jackson GR, McGwin G Jr (2016) Comparison of visual function in older eyes in the earliest stages of age-related macular degeneration to those in normal macular health. Curr Eye Res 41:266–272. https://doi.org/10.3109/02713683.2015.1011282

Owsley C, McGwin G Jr, Scilley K, Kallies K (2006) Development of a questionnaire to assess vision problems under low luminance in age-related maculopathy. Invest Ophthalmol Vis Sci 47:528–535. https://doi.org/10.1167/iovs.05-1222

Scilley K, Jackson GR, Cideciyan AV, Maguire MG, Jacobson SG, Owsley C (2002) Early age-related maculopathy and self-reported visual difficulty in daily life. Ophthalmology 109:1235–1242. https://doi.org/10.1016/s0161-6420(02)01060-6

Author information

Contributions

Concept and design: FV, NAP, DW, and JBM. Data collection: MF, PZ, AB, and ZH. Data analysis: MF, FV, and YZ. Data interpretation: MF, FV, YZ, and JBM. Drafting initial manuscript: MF and FV. Critical revision of the manuscript: MF, FV, YZ, PZ, AB, ZH, NAP, DW, and JBM.

Ethics declarations

Ethical approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the Massachusetts Eye and Ear Infirmary and with the 1964 Declaration of Helsinki and its later amendments or comparable ethical standards. The Institutional Review Board at Massachusetts General Brigham approved the study protocol (ID: 2019P001311).

Informed consent

Informed consent was obtained from all individual participants included in the study.

Conflict of interest

1. J.B.M. is a consultant for Alcon, Allergan, Carl Zeiss, Sunovion, Topcon, and Genentech.

2. D.M.W. holds a patent through Massachusetts Eye and Ear.

3. N.A.P. is a consultant for Alimera Sciences, Alcon, Allergan, and Genentech.

4. The following authors do not have any competing interests to disclose: MF, FV, YZ, PZ, AB, FR, XD, and ZH.

Supplementary information

ESM 1

(DOCX 500 KB)

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Finn, M., Vingopoulos, F., Zhao, Y. et al. Test-retest repeatability and agreement of the quantitative contrast sensitivity function test: towards the validation of a new clinical endpoint. Graefes Arch Clin Exp Ophthalmol 262, 813–822 (2024). https://doi.org/10.1007/s00417-023-06291-y

DOI: https://doi.org/10.1007/s00417-023-06291-y